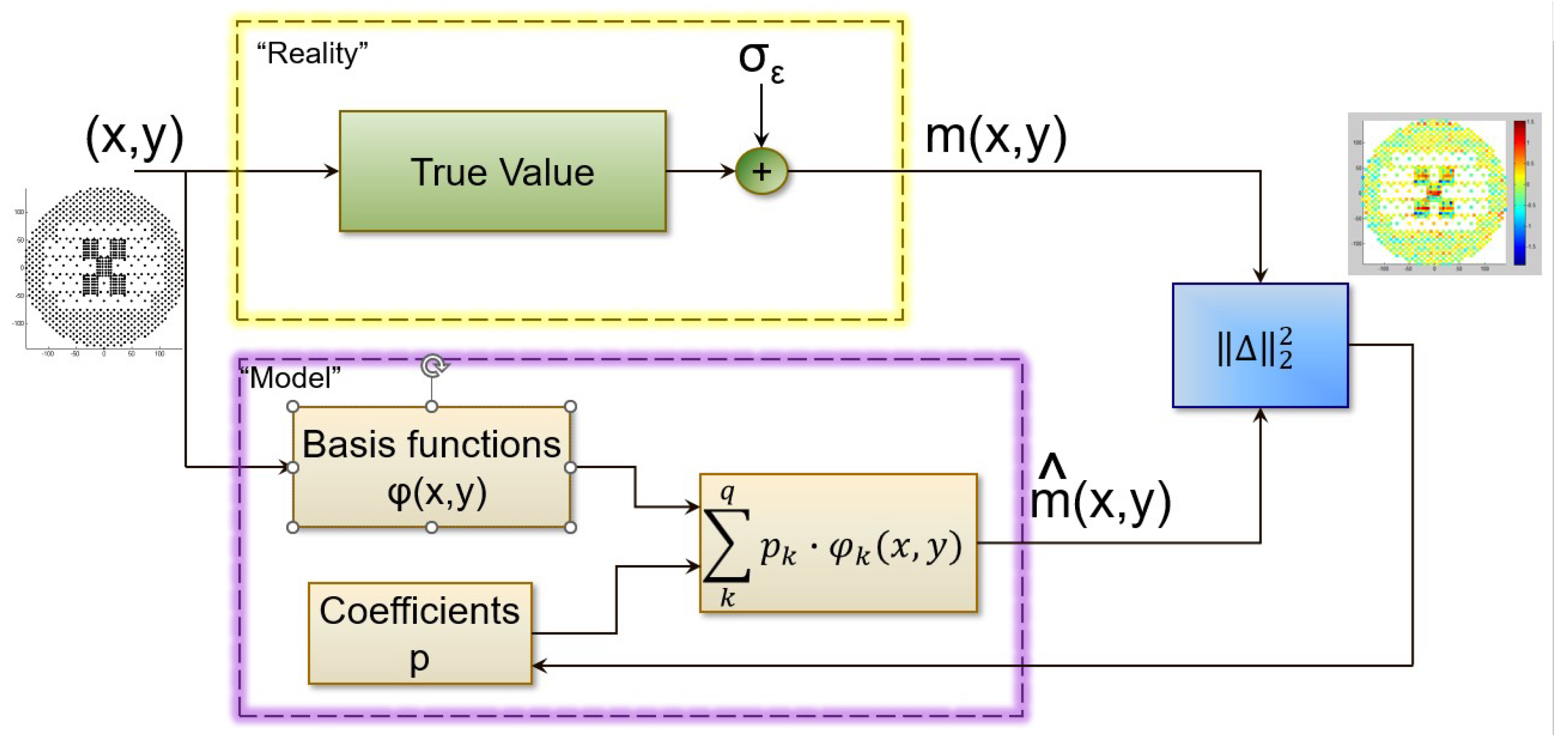

NMU optimality, however, is not our only concern: we also want to sample uniformly across the wafer. Hence, our two optimality criteria are (1) an NMU-optimal design and (2) uniform sampling.

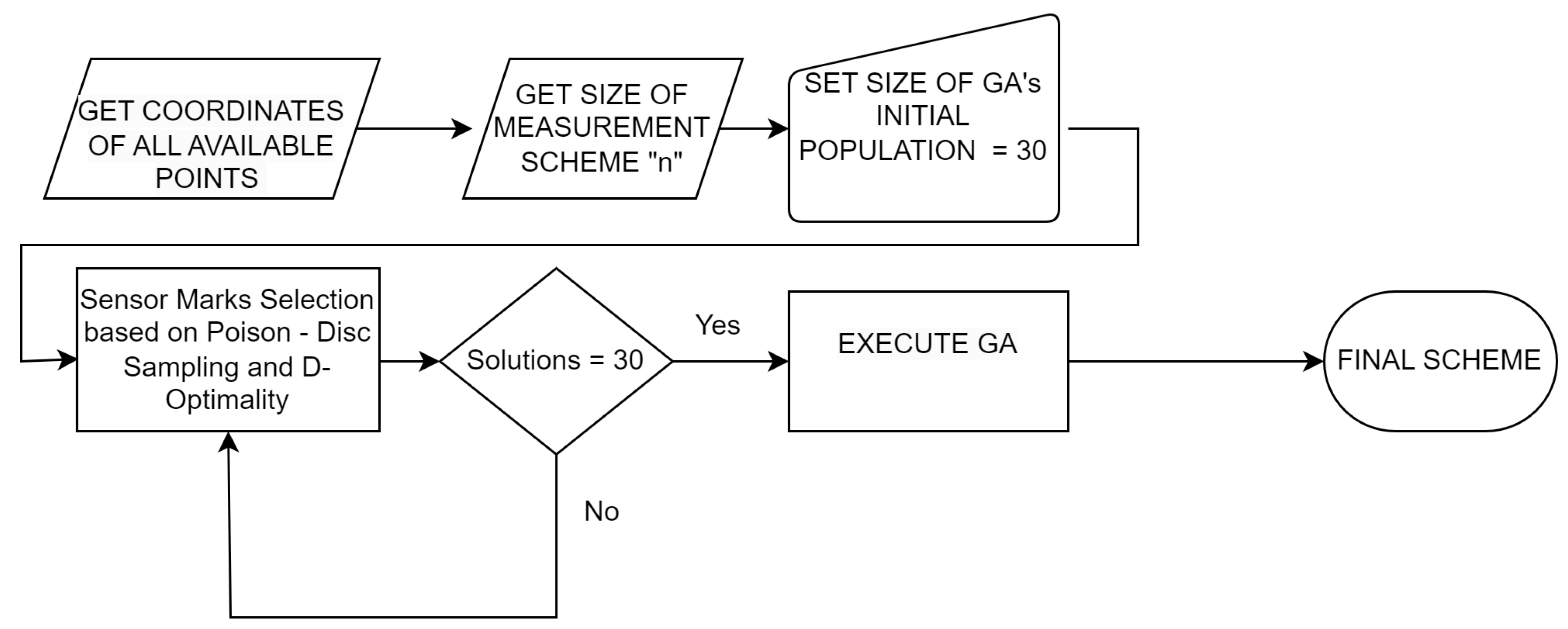

3.1. Sensor Marks Selection based on Poisson-Disc Sampling and D-Optimality

In sensor marks selection problems, the goal is to select a subset of points from a larger set of candidate points so that the selected points provide the most informative measurements for a given application. Two important criteria for selecting these points are spatial randomness and sample uniformity.

Spatial randomness refers to how evenly the selected points are distributed across the area of interest. A spatially random distribution helps ensure that the selected points are representative of the entire area rather than biased towards certain regions. This matters because biased samples can lead to inaccurate or incomplete measurements, which ultimately affect the quality and reliability of the application. Sample uniformity, on the other hand, refers to how evenly the selected points are spaced from one another. Uniform spacing helps ensure that each point contributes equally to the overall measurement and that no area is over-represented. This is particularly important when the measured quantity varies significantly across the area of interest, since a non-uniform sample may miss important features or over-represent certain regions.

In summary, both spatial randomness and sample uniformity are critical criteria for selecting sensor marks in order to ensure accurate and representative measurements. By considering these criteria, we can select a subset of points that provides the most informative measurements and improves the overall performance of the application.

In our problem, as mentioned above, an NMU-based criterion alone is not enough; we also need the design to be uniform. For this reason, we incorporate Poisson-disc sampling into the Sensor Marks Selection algorithm.

Poisson-disc sampling with nearest neighbors is a method for generating spatially random point sets on a two-dimensional surface [13,14]. This technique is particularly useful in sensor mark selection problems such as ours, where both spatial randomness and sample uniformity are essential. The goal is to generate a set of points that covers the area of interest while avoiding overlaps and producing a uniform distribution.

To ensure spatial randomness, the algorithm disqualifies the nearest neighbors of already selected points as candidates. Specifically, before each new point is chosen, the algorithm collects the nearest neighbors of all existing points and removes them from the list of candidate points. This prevents points from being too close to each other and yields a spatially random distribution. The process repeats, iteratively selecting new points until the surface is covered by a set of non-overlapping discs.

By adjusting the parameters of the algorithm, such as the number of nearest neighbors to be excluded, the user can control the spatial distribution and sample uniformity of the generated point set.
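The exclusion rule can be illustrated with a short Python sketch that uses only the standard library. It is a minimal illustration under stated assumptions rather than the authors' implementation: the 20 × 20 grid of candidate marks, the names `k_nearest` and `knn_exclusion_sample`, and the random choice among qualifying candidates are all hypothetical (Algorithm 1 instead picks the candidate that most increases D-Optimality).

```python
import math
import random

def k_nearest(points, i, k):
    """Indices of the k points closest to points[i], excluding i itself."""
    xi, yi = points[i]
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: math.hypot(points[j][0] - xi, points[j][1] - yi))
    return others[:k]

def knn_exclusion_sample(points, k, n_target, seed=0):
    """Poisson-disc-style sampling: a point qualifies only if it is not one of
    the k nearest neighbours of any point that has already been selected."""
    rng = random.Random(seed)
    active = [rng.randrange(len(points))]          # random first point
    while len(active) < n_target:
        disqualified = set(active)
        for a in active:
            disqualified.update(k_nearest(points, a, k))
        candidates = [j for j in range(len(points)) if j not in disqualified]
        if not candidates:
            break                                   # surface covered for this k
        active.append(rng.choice(candidates))       # assumption: random pick
    return active

# Hypothetical 20 x 20 grid of candidate marks on a unit square.
grid = [(x / 19, y / 19) for x in range(20) for y in range(20)]
selected = knn_exclusion_sample(grid, k=8, n_target=30)
print(len(selected))
```

A larger `k` carves out a larger exclusion disc around every selected point, which spaces the sample more evenly but lets fewer points fit on the surface.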

In summary, Poisson-disc sampling with nearest neighbors ensures both spatial randomness and sample uniformity in sensor mark selection problems. By generating a spatially random and uniform point set, it enables accurate and efficient sampling for a wide range of applications.

Algorithm 1 presents the process of Sensor Marks Selection based on Poisson-disc sampling and D-Optimality. In the first step, we get the coordinates of all N available candidate points. Then, we initialize the number of nearest neighbors to 32 and calculate the Euclidean distances between all points and their nearest neighbors. Next, we initialize an empty active points list and an empty inactive points list. We select a random point and add it to the active points list.

Algorithm 1: Sensor Marks Selection based on Poisson-Disc Sampling and D-Optimality

- 1: Get coordinates (x, y) of all candidate points N on the wafer surface
- 2: Initialize the number of nearest neighbors k ← 32
- 3: Calculate the Euclidean distances between all points and their nearest neighbors
- 4: Initialize an empty active points list
- 5: Initialize an empty inactive points list
- 6: Select a random point and add it to the active points list
- 7: while size of active points list < 221 do
- 8: Initialize a list of candidate points
- 9: Initialize an empty list of disqualified points
- 10: For each active point, add its k nearest neighbors to the disqualified points list
- 11: Remove any disqualified points from the candidate points list
- 12: if size of candidate points list = 0 then
- 13: k ← k − 4
- 14: Go to Step 10
- 15: end if
- 16: Calculate D-Optimality of the scheme for every point on the candidate points list
- 17: Add to the active points list the point that contributes most to the D-Optimality of the scheme
- 18: end while

In the main loop of the algorithm, we repeatedly add points to the active points list until we have selected 221 points. In each iteration of the loop, we first initialize a list of candidate points and a list of disqualified points. We add the nearest neighbors of all active points to the disqualified points list and remove any disqualified points from the candidate points list. If the candidate points list is empty, we decrease the number of nearest neighbors by 4 and repeat the loop.

Next, we calculate the D-Optimality of the scheme for every point on the candidate points list and add to the active points list the point that contributes most to the D-Optimality of the scheme. We use D-Optimality rather than NMU at this step because, as mentioned before, NMU cannot be used on its own: on the one hand it is not a direct criterion for experimental design, and on the other hand it is computationally expensive. We repeat this process until 221 points have been selected.
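Algorithm 1 can be sketched end to end as follows. This is a hedged reconstruction, not the authors' code: the full quadratic surface model behind the D-Optimality computation, the small ridge term that keeps det(XᵀX) defined before six points are chosen, and all function names are assumptions. Distances are recomputed on demand instead of being cached as in step 3, and after k is reduced the candidate list is rebuilt from all points that are no longer disqualified.

```python
import math
import random

def features(point):
    # Hypothetical regression model: full quadratic surface in (x, y).
    x, y = point
    return [1.0, x, y, x * x, x * y, y * y]

def log_det(m):
    """log|det(m)| via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in m]
    n, total = len(a), 0.0
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(a[r][c]))
        if a[piv][c] == 0.0:
            return float("-inf")
        a[c], a[piv] = a[piv], a[c]
        total += math.log(abs(a[c][c]))
        for r in range(c + 1, n):
            f = a[r][c] / a[c][c]
            for j in range(c, n):
                a[r][j] -= f * a[c][j]
    return total

def info_matrix(points, idx, ridge=1e-6):
    """X^T X of the selected points plus an assumed small ridge."""
    p = len(features(points[0]))
    m = [[ridge if r == c else 0.0 for c in range(p)] for r in range(p)]
    for i in idx:
        f = features(points[i])
        for r in range(p):
            for c in range(p):
                m[r][c] += f[r] * f[c]
    return m

def k_nearest(points, i, k):
    xi, yi = points[i]
    others = sorted((j for j in range(len(points)) if j != i),
                    key=lambda j: math.hypot(points[j][0] - xi, points[j][1] - yi))
    return others[:k]

def select_marks(points, n_target=221, k=32, k_step=4, seed=0):
    rng = random.Random(seed)
    active = [rng.randrange(len(points))]              # step 6: random start
    while len(active) < n_target:                       # step 7
        while True:
            disqualified = set(active)
            for a in active:                            # step 10: k nearest neighbours
                disqualified.update(k_nearest(points, a, k))
            candidates = [j for j in range(len(points)) if j not in disqualified]
            if candidates or k == 0:
                break
            k = max(0, k - k_step)                      # step 13: shrink exclusion zone
        if not candidates:
            break                                       # every point is already active
        base = info_matrix(points, active)
        p = len(base)

        def gain(j):                                    # step 16: log det with point j added
            f = features(points[j])
            return log_det([[base[r][c] + f[r] * f[c] for c in range(p)]
                            for r in range(p)])

        active.append(max(candidates, key=gain))       # step 17: greedy D-Optimal choice
    return active, k

pts = [(x / 11, y / 11) for x in range(12) for y in range(12)]
chosen, k_final = select_marks(pts, n_target=20, k=8, k_step=2)
print(len(chosen), k_final)
```

The defaults mirror the values in the text (221 points, k starting at 32, reduced by 4); the demo uses a small grid and smaller k so that it runs quickly.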

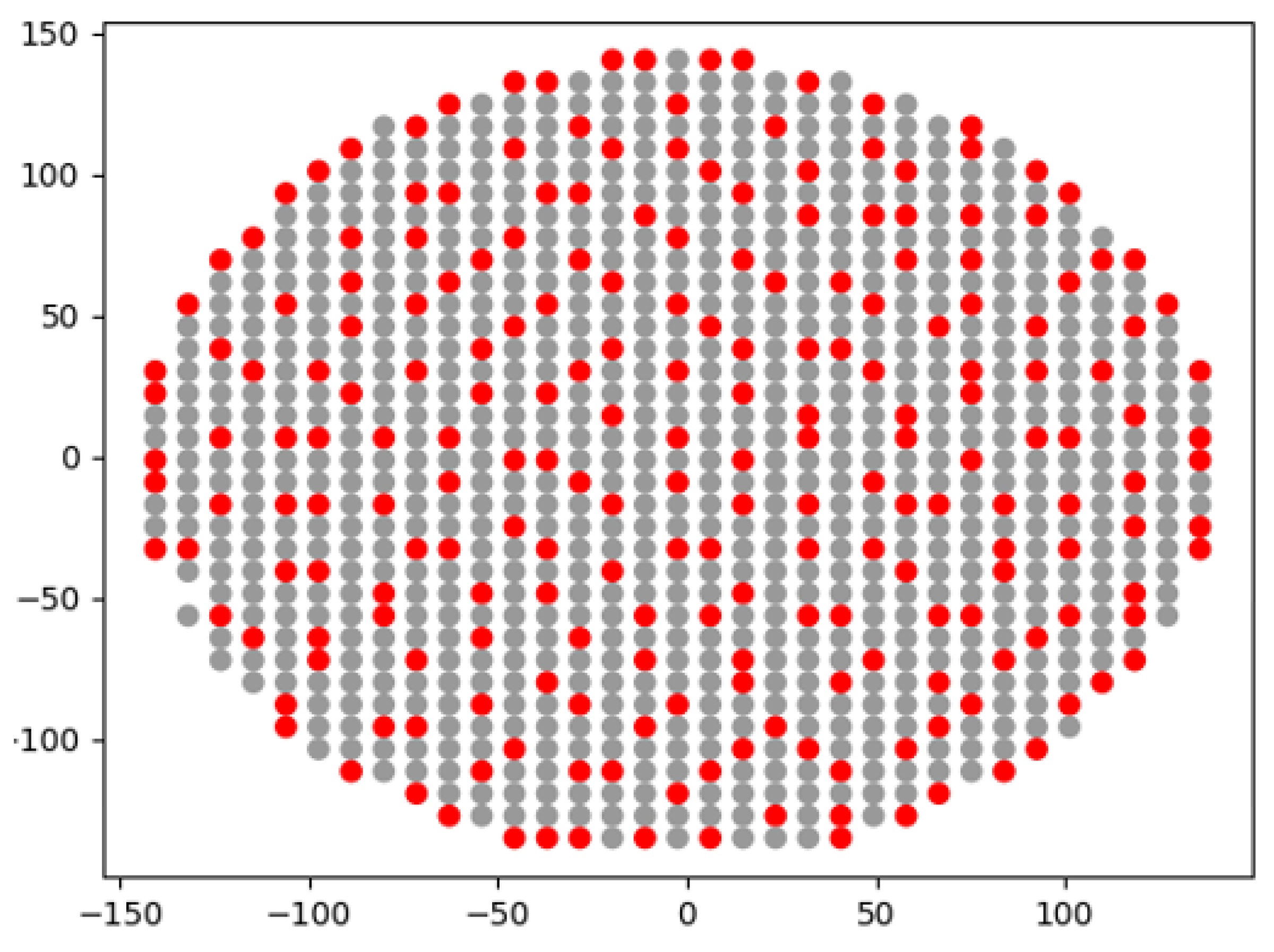

Figure 8 visualizes the progress of the above algorithm. Green points qualify as candidates at a specific iteration of the process, while blue points are already part of the scheme. The k nearest neighbors of the already selected points are disqualified, with k depending on the iteration of the algorithm: we start with k = 32 and reduce it as the process continues. Hence, the disqualified points are those inside the areas defined by the red circles. On closer inspection, the red circles in the center are larger than those at the edge of the wafer. This is because the points in the center of the wafer were selected at an earlier stage than the points at the edge. At that stage the exclusion zone defined by the k disqualified neighbors is larger (to enforce uniformity), but as the process continues a large k leaves no available points. In that case k decreases as described above, and the exclusion circle becomes smaller.
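One way to read the red circles in Figure 8 is as the distance from a selected point to its k-th nearest candidate. The hypothetical helper below, evaluated on an assumed regular grid rather than the real wafer layout, illustrates that this radius can only shrink as k is reduced from 32 in steps of 4, which matches the smaller circles drawn around the later, edge points.

```python
import math

def exclusion_radius(points, i, k):
    """Distance from points[i] to its k-th nearest neighbour (k >= 1)."""
    xi, yi = points[i]
    dists = sorted(math.hypot(x - xi, y - yi)
                   for j, (x, y) in enumerate(points) if j != i)
    return dists[k - 1]

# Hypothetical 20 x 20 grid of candidate marks; pick a point near the centre.
grid = [(x / 19, y / 19) for x in range(20) for y in range(20)]
centre = grid.index((10 / 19, 10 / 19))
for k in (32, 28, 24, 20):
    print(k, round(exclusion_radius(grid, centre, k), 3))
```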

At the end of this process we obtain a scheme of 221 selected points that is uniformly sampled, ensures spatial randomness and, most importantly, is a near D-Optimal design.

3.2. Improving Solution with Genetic Algorithms (GA)

In the previous part of our algorithm we created an initial population of, in this case, 30 solutions. However, the strategy we followed can be considered greedy, since at each step we add to the scheme the point that contributes most to the D-Optimality. A greedy algorithm makes locally optimal choices at each step in the hope of finding a global optimum, but it does not always lead to the best overall solution. When designing an algorithm for a real-world problem, it is important to strike a balance between the optimality criterion and practical considerations. Hence, in this part of the algorithm we use Genetic Algorithms to balance out the greedy approach: one of the strengths of GAs is that they can help overcome local optima in which a purely greedy approach may get stuck.

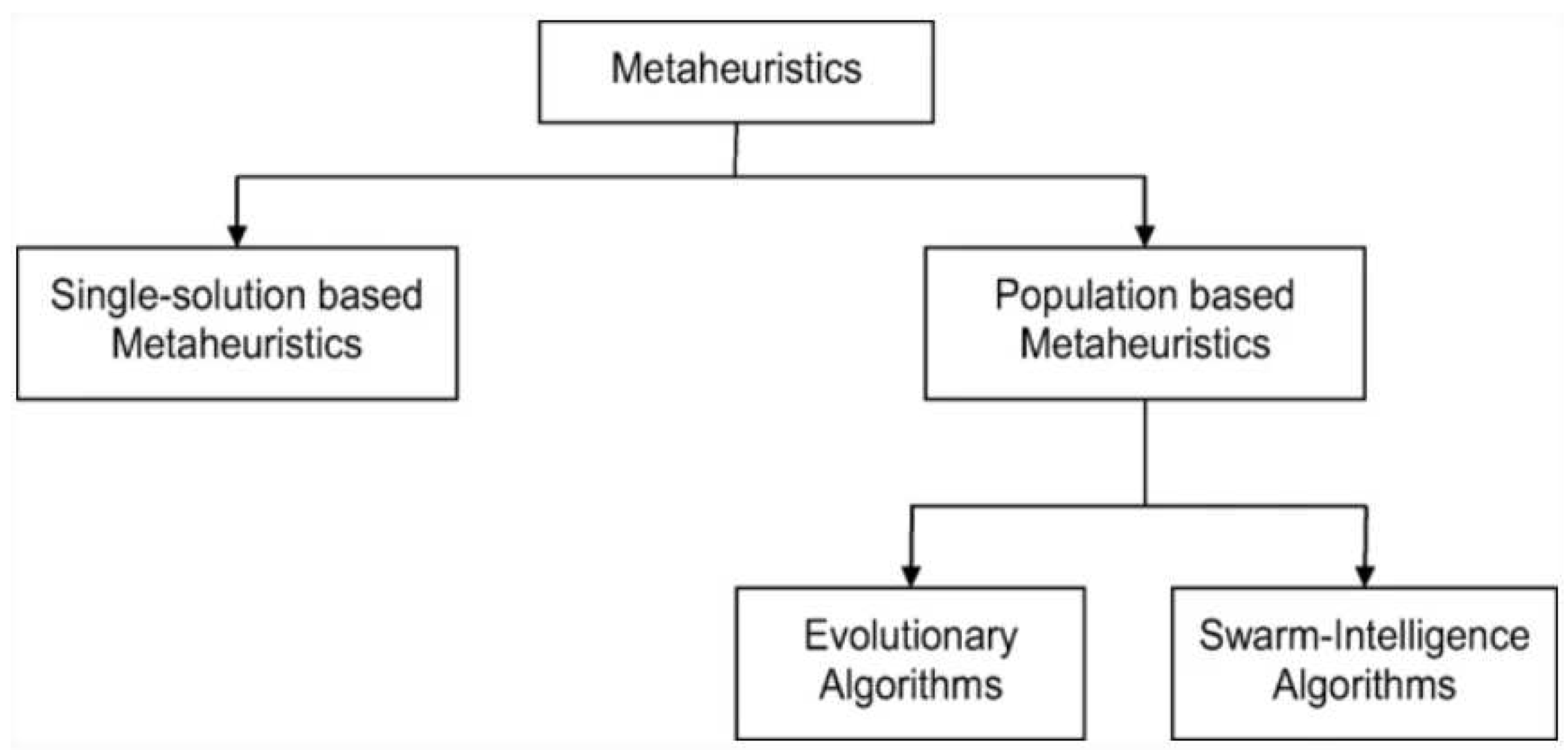

Recently, metaheuristic algorithms have been used to solve complex real-world problems from a variety of disciplines, including economics, engineering, politics, and management. These algorithms can be broadly divided into two categories: population-based metaheuristic algorithms and single-solution algorithms (Figure 9).

Evolutionary algorithms, including Genetic Algorithms, have undergone significant advancements in recent years, resulting in the creation of efficient and dependable optimization tools. These algorithms have been applied to solve numerous optimization problems, such as the economic dispatch problem [16], the optimal control problem [17], the scheduling problem [18], the energy harvesting problem [19], and others.

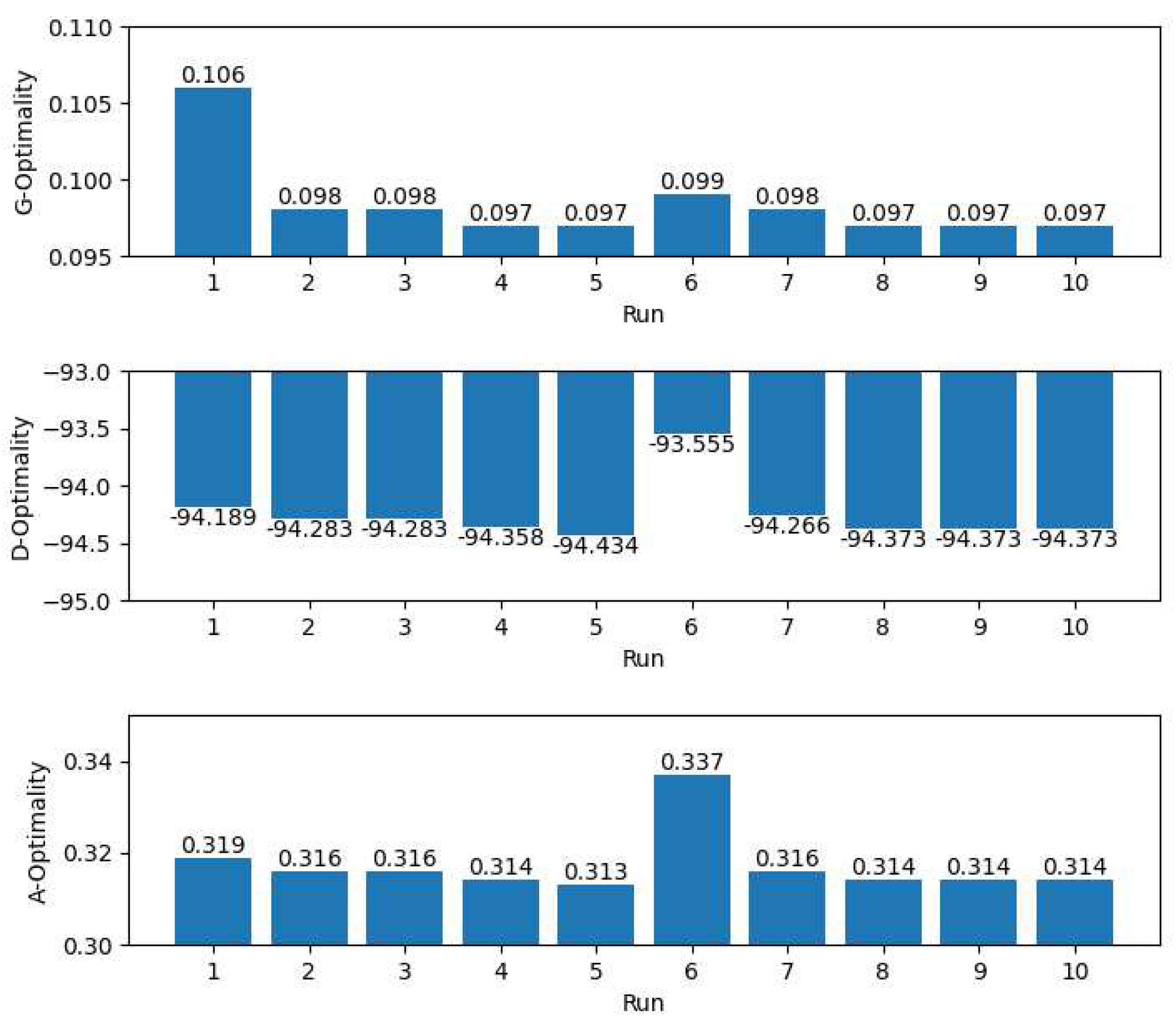

In our problem, after ensuring the uniformity of the samples, we aim to find a solution that is optimal in terms of G-, D-, and A-Optimality, which is a multi-objective optimization problem. Multi-objective optimization seeks solutions that perform best on several objectives simultaneously; since the objectives usually conflict, the result is typically a set of compromise solutions, known as Pareto optimal solutions, rather than a single optimum. In our case, we reduce the multi-objective problem to a single objective by aggregating the objectives with a weighted sum. Our use case allows this simplification because the three objectives are closely related, being different metrics of the same goal. Thus, the cost function of our Genetic Algorithm is the compound criterion of G-, D-, and A-Optimality, similar to the second step of the process.
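To make the weighted-sum scalarization concrete, the sketch below computes larger-is-better scores for the three criteria and combines them. The quadratic model, the normalization M = XᵀX/n and the conversion of each criterion into a score (det(M)^(1/p) for D, p/trace(M⁻¹) for A, and p divided by the maximum prediction variance over a region for G, the usual G-efficiency) are our assumptions; the text does not state how the criteria are scaled before being summed.

```python
def features(point):
    # Hypothetical model: full quadratic surface in (x, y), p = 6 parameters.
    x, y = point
    return [1.0, x, y, x * x, x * y, y * y]

def inverse_and_det(m):
    """Gauss-Jordan elimination on [m | I]; returns (m^-1, det(m))."""
    n = len(m)
    a = [list(row) + [1.0 if r == c else 0.0 for c in range(n)]
         for r, row in enumerate(m)]
    det = 1.0
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(a[r][c]))
        if abs(a[piv][c]) < 1e-12:
            raise ValueError("information matrix is singular")
        if piv != c:
            a[c], a[piv] = a[piv], a[c]
            det = -det
        det *= a[c][c]
        pivot_row = [v / a[c][c] for v in a[c]]
        a[c] = pivot_row
        for r in range(n):
            if r != c and a[r][c] != 0.0:
                f = a[r][c]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], pivot_row)]
    return [row[n:] for row in a], det

def criteria(design, region):
    """Larger-is-better D, A and G scores of a design (list of (x, y))."""
    n, p = len(design), len(features(design[0]))
    m = [[0.0] * p for _ in range(p)]
    for pt in design:
        f = features(pt)
        for r in range(p):
            for c in range(p):
                m[r][c] += f[r] * f[c] / n            # normalised information matrix
    inv, det = inverse_and_det(m)

    def pred_var(pt):                                 # f(x)^T M^-1 f(x)
        f = features(pt)
        return sum(f[r] * inv[r][c] * f[c] for r in range(p) for c in range(p))

    return {
        "D": det ** (1.0 / p),                        # D: maximise det(M)
        "A": p / sum(inv[i][i] for i in range(p)),    # A: minimise trace(M^-1)
        "G": p / max(pred_var(pt) for pt in region),  # G: minimise max variance
    }

def compound(scores, weights):
    """Weighted-sum scalarisation of the three objectives."""
    return sum(weights[name] * scores[name] for name in weights)

design = [(x / 2, y / 2) for x in range(3) for y in range(3)]        # 3 x 3 grid
region = [(x / 10, y / 10) for x in range(11) for y in range(11)]
scores = criteria(design, region)
weights = {"G": 0.4, "D": 0.3, "A": 0.3}
print(scores, compound(scores, weights))
```

Because the average prediction variance over the design points equals p, the G score computed this way is at most 1 whenever the region contains the design points.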

The Genetic Algorithm draws its inspiration from natural selection. It is a population-based search algorithm that applies the survival-of-the-fittest principle. Its main components are chromosome representation, selection, crossover, mutation, and fitness function computation. The process starts by initializing a population Y of n chromosomes. This population is usually created randomly, but in our proposed strategy it is created using a deterministic approach, except for the first two random points. This detail plays a significant role in the quality of the results and the robustness of the proposed algorithm. Then, the fitness of each chromosome in Y is calculated, and two parent chromosomes are selected based on their compound criterion fitness value. The single-point crossover operator is applied to the two parents with the crossover probability to produce an offspring O. The offspring O is then subjected to the uniform mutation operator with the mutation probability, and the resulting progeny is added to the new population. This process is repeated until the new population is complete. The GA steers the search through the crossover and mutation probabilities; because it modifies the encoded genes and evaluates multiple individuals in every generation, it can produce several good solutions and has a strong global search ability.

The core part of the GA is the fitness function. In our solution we propose a compound criterion of G-, D-, and A-Optimality instead of using only one type of optimality. Combining multiple types of optimality in the GA fitness function can be beneficial for several reasons. Firstly, a single type of optimality can leave the GA trapped in a local optimum, that is, a solution that appears optimal in its immediate vicinity but is not the global optimum. By considering multiple types of optimality, the GA is less likely to settle on a solution that is only locally optimal. Secondly, a compound criterion balances the different types of optimality: a solution that is highly optimized for D-Optimality may not be optimized for G-Optimality or A-Optimality, and combining them lets the GA search for a solution that performs well on all of them. Finally, a compound criterion helps the GA converge to a solution that is practical in real-world situations. For example, a solution optimized only for D-Optimality may be infeasible to implement due to other practical considerations such as cost or manufacturing constraints; by combining different types of optimality, the GA can search for a solution that is both near-optimal and feasible to implement. More specifically, our fitness function is a weighted compound of the three criteria, with a penalty for solutions of the wrong size.
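A minimal sketch of such a fitness function follows. The weights (0.4 for G-Optimality, 0.3 for D- and A-Optimality), the required 221 marks and the 100000 penalty come from the description in the next paragraph; the 0/1 chromosome encoding, the function names and the assumption that the three criterion scores are already scaled so that larger is better are hypothetical.

```python
WEIGHTS = {"G": 0.4, "D": 0.3, "A": 0.3}   # weights stated in the text
N_MARKS = 221                               # required number of selected marks
PENALTY = 100000.0                          # penalty value stated in the text

def compound_criterion(scores):
    """Weighted sum of larger-is-better G, D and A scores (scaling assumed)."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)

def fitness(chromosome, scores):
    """Fitness to maximise. `chromosome` is a hypothetical 0/1 vector over the
    candidate marks; `scores` holds its G, D and A scores computed elsewhere."""
    cost = -compound_criterion(scores)
    if sum(chromosome) != N_MARKS:
        cost += PENALTY                     # wrong number of marks: huge cost
    return -cost                            # high cost -> very low fitness

scores = {"G": 0.8, "D": 0.7, "A": 0.6}     # hypothetical criterion values
feasible = [1] * 221 + [0] * 79             # 300 hypothetical candidate marks
infeasible = [1] * 220 + [0] * 80
print(fitness(feasible, scores), fitness(infeasible, scores))
```

Single-point crossover and bit-flip mutation do not preserve the number of selected marks, so in this sketch the penalty is what keeps the population near the 221-mark constraint.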

In this scenario, all three objectives are taken into account, with slightly more weight given to G-Optimality. This is achieved by assigning a weight of 0.4 to G-Optimality and weights of 0.3 to both D-Optimality and A-Optimality in the compound criterion used to evaluate the fitness of the solutions generated by the GA. Since G-Optimality minimizes the maximum prediction variance over the design region, giving it more weight biases the optimization towards solutions with a good overall fit to the data, while still taking the other objectives into account. This can help the GA avoid getting trapped in local optima, since it can better explore the search space and find solutions that are not necessarily optimal in any one objective but are good overall. Furthermore, using multiple objectives in the fitness function can help generate more diverse and robust solutions, since it allows the GA to explore a larger space of potential solutions. This can help to avoid overfitting to the training data and improve the generalization performance of the model.

The fitness function also enforces a constraint: a solution must contain exactly 221 selected points. If a solution violates this constraint, we penalize it to discourage the GA from selecting it. Penalizing a solution means assigning it a high cost, which in turn lowers its fitness value. Specifically, the fitness function first checks whether the sum of the elements of the solution equals 221. If it does not, we add a very high value (100000) as a penalty, which makes the fitness value of the solution extremely low, so that it has a very low chance of being selected by the GA. Finally, the GA has the following settings:

- Initial population: 30 solutions, created using the Sensor Marks Selection based on Poisson-Disc Sampling and D-Optimality technique described above.
- Population size: 100, meaning that each generation contains 100 solutions.
- Number of generations: 50.
- Crossover probability: 0.6, meaning that there is a 60% chance that two parent solutions are combined to produce a new offspring in each crossover event.
- Mutation probability: 0.05, meaning that each gene in a solution has a 5% chance of being mutated during a mutation event.
- Elitism: enabled, meaning that the best solution of each generation is always carried over to the next generation.
- Objective: the fitness function is maximized, i.e., the algorithm searches for the solutions with the highest possible fitness value.
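The GA loop with these settings can be sketched as follows. This is a minimal, hypothetical illustration rather than the authors' implementation: tournament selection of size 3, the 0/1 chromosome encoding, and topping up the 30 seed solutions to a population of 100 with mutated copies are our assumptions, since the text does not specify them. A toy fitness stands in for the compound criterion.

```python
import random
from dataclasses import dataclass

@dataclass
class GASettings:
    population_size: int = 100
    generations: int = 50
    crossover_prob: float = 0.6
    mutation_prob: float = 0.05
    elitism: bool = True

def mutate(chrom, pm, rng):
    # Uniform mutation on a 0/1 chromosome: each gene flips with probability pm.
    return [1 - g if rng.random() < pm else g for g in chrom]

def run_ga(seeds, fitness, settings=None, rng=None):
    """Maximise `fitness` over 0/1 chromosomes, starting from `seeds`."""
    settings = settings or GASettings()
    rng = rng if rng is not None else random.Random(0)
    n = len(seeds[0])
    # Assumption: top the seeds up to the population size with mutated copies.
    pop = [s[:] for s in seeds]
    while len(pop) < settings.population_size:
        pop.append(mutate(rng.choice(seeds), settings.mutation_prob, rng))
    for _ in range(settings.generations):
        scores = [fitness(c) for c in pop]

        def pick():
            # Assumption: tournament selection of size 3.
            best = max(rng.sample(range(len(pop)), 3), key=lambda i: scores[i])
            return pop[best]

        new_pop = []
        if settings.elitism:                   # best solution survives unchanged
            new_pop.append(pop[max(range(len(pop)), key=lambda i: scores[i])][:])
        while len(new_pop) < settings.population_size:
            p1, p2 = pick(), pick()
            if rng.random() < settings.crossover_prob:
                cut = rng.randrange(1, n)       # single-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            new_pop.append(mutate(child, settings.mutation_prob, rng))
        pop = new_pop
    return max(pop, key=fitness)

def toy_fitness(c):
    # Hypothetical stand-in: reward marks in the first five slots and
    # penalise any chromosome that does not select exactly five marks.
    return sum(c[:5]) - (100000 if sum(c) != 5 else 0)

seeds = [[0] * 15 + [1] * 5]
best = run_ga(seeds, toy_fitness)
print(toy_fitness(best), best)
```

With elitism enabled, the best fitness in the population can never decrease from one generation to the next, so the returned solution is at least as good as the best seed.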

In our case, the Genetic Algorithm significantly improves the solution in terms of G, D, and A-Optimality, as demonstrated in the Experimental Results section.