Submitted: 29 June 2023

Posted: 30 June 2023


Abstract

Keywords:

1. Introduction

2. Types of brain tumors

| Types of tumors based on | Type | Comment |
|---|---|---|
| Nature | Benign | Less aggressive and grows slowly. |
| | Malignant | Life-threatening and rapidly expanding. |
| Origin | Primary tumor | Originates directly in the brain. |
| | Secondary tumor | Develops in another part of the body, such as the lung or breast, before migrating to the brain. |
| Grading | Grade I | Basically regular in shape and develops slowly. |
| | Grade II | Appears abnormal and grows relatively slowly. |
| | Grade III | Grows more quickly than grade II tumors. |
| | Grade IV | Reproduces at a higher rate. |
| Progression stage | Stage 0 | Malignant but does not invade neighboring cells. |
| | Stage 1 | Malignant and quickly spreading. |
| | Stage 2 | |
| | Stage 3 | |
| | Stage 4 | The malignancy invades every part of the body. |

3. Imaging Modalities

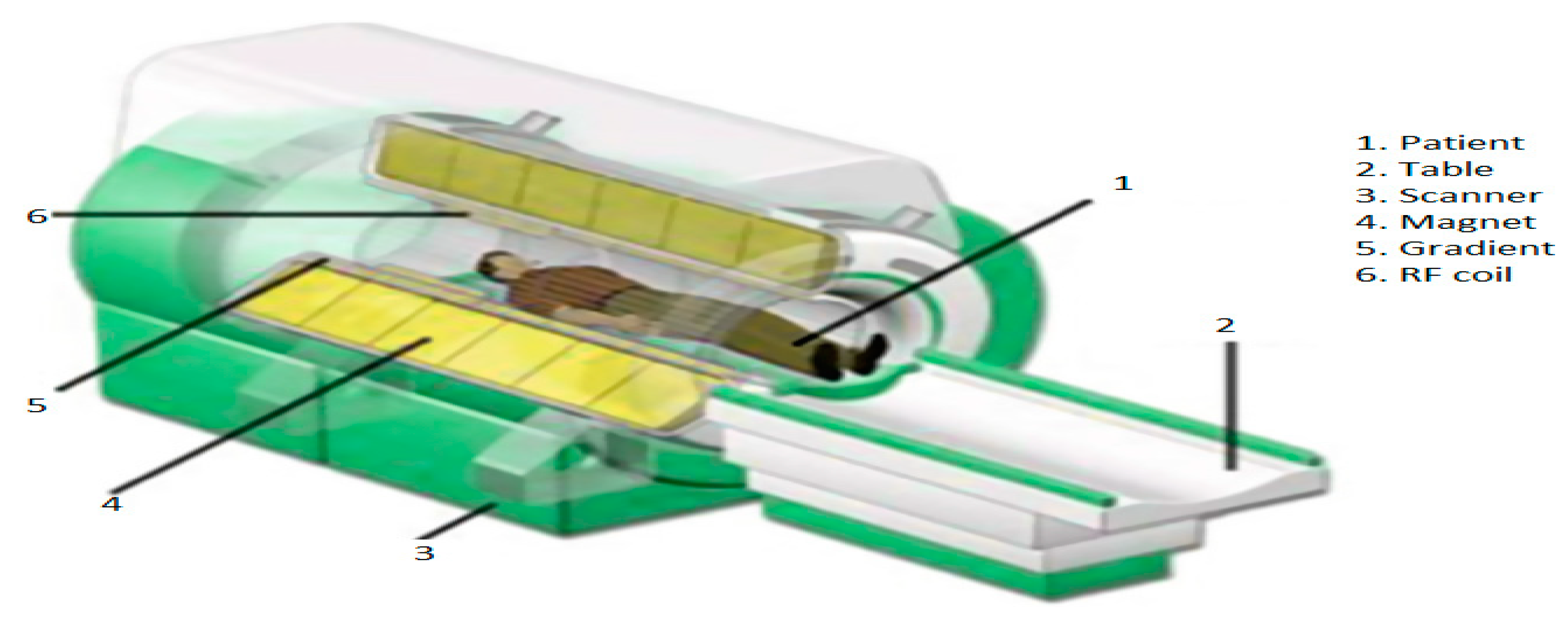

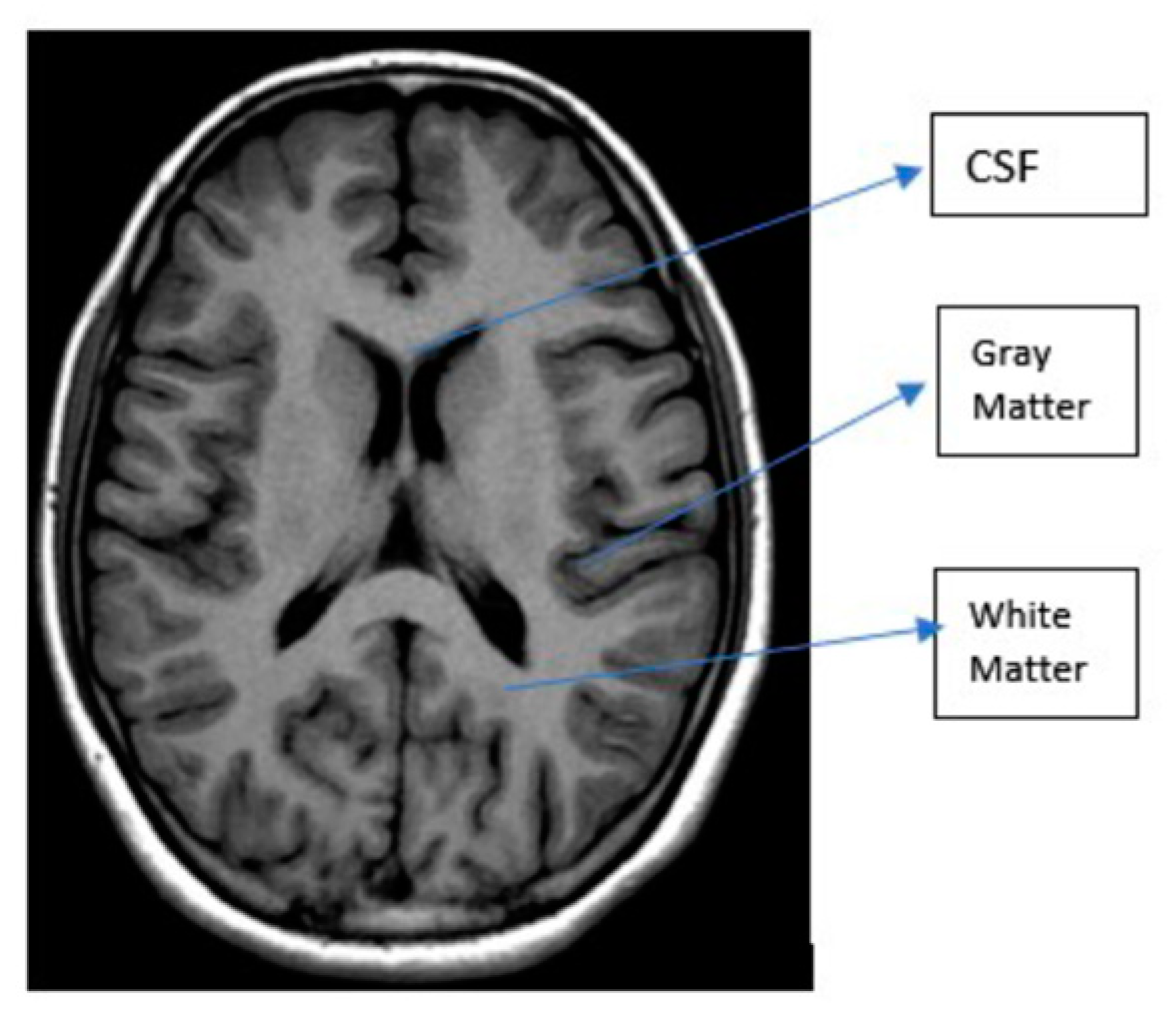

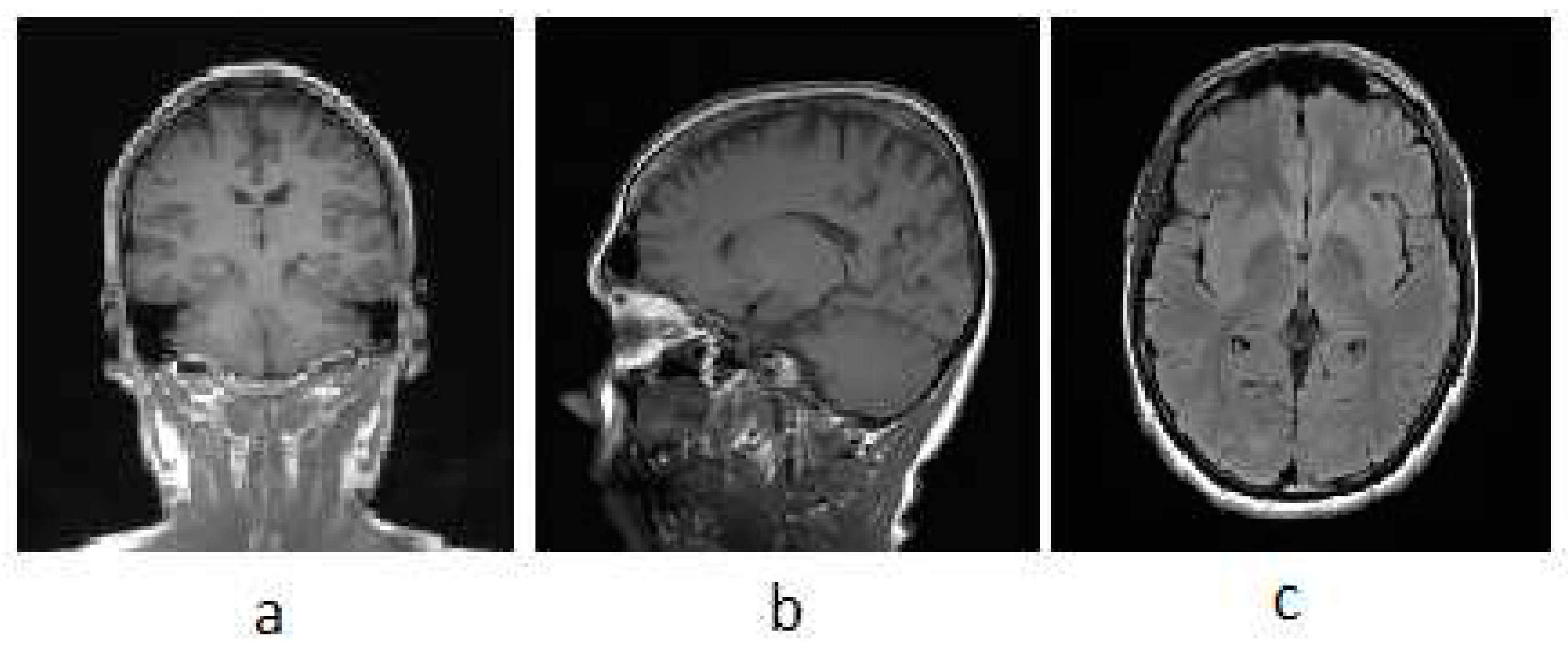

3.1. MRI

| | T1 | T2 | FLAIR |
|---|---|---|---|
| White Matter | Bright | Dark | Dark |
| Grey Matter | Grey | Dark | Dark |
| CSF | Dark | Bright | Dark |
| Tumor | Dark | Bright | Bright |

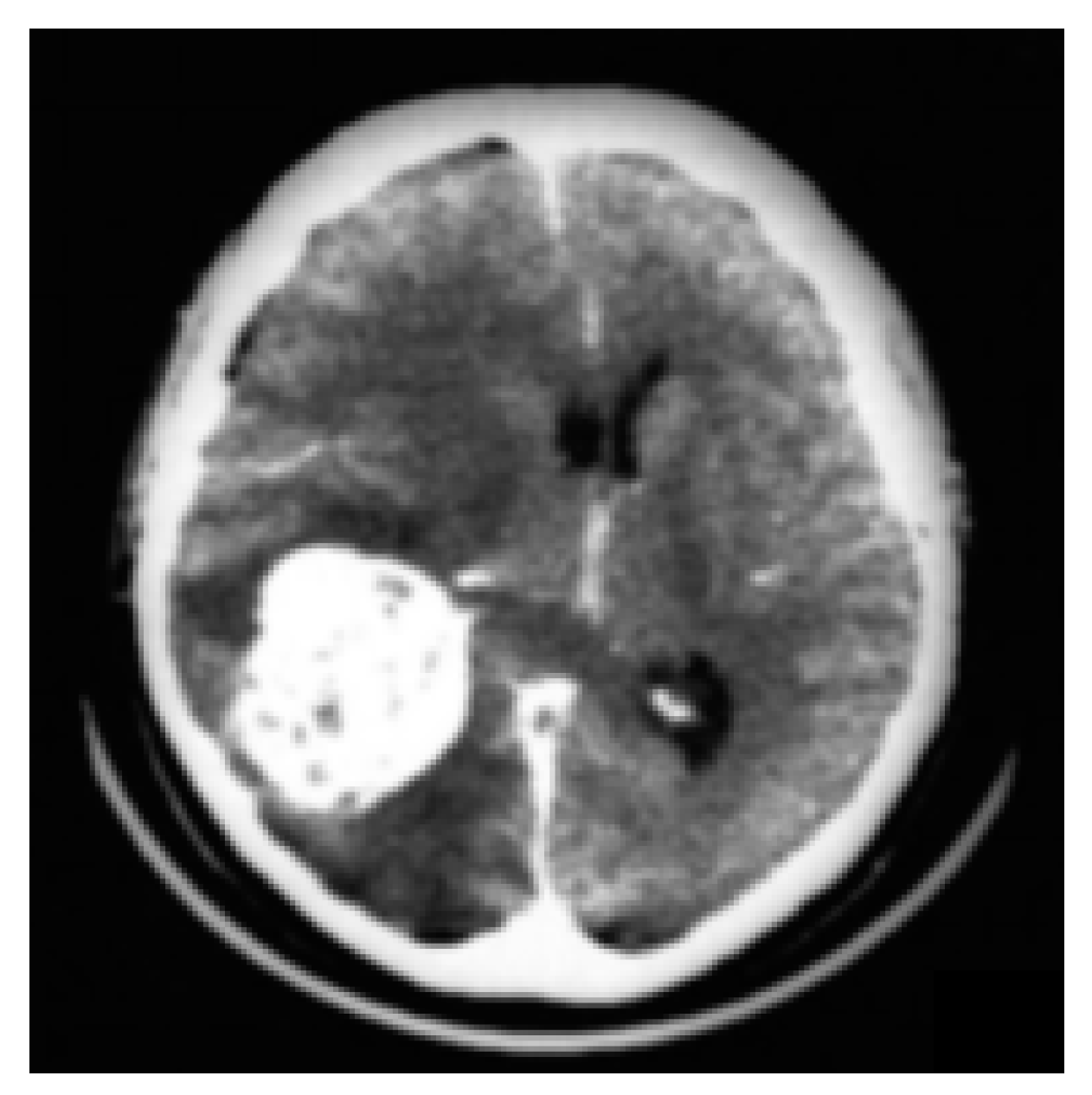

3.2. CT

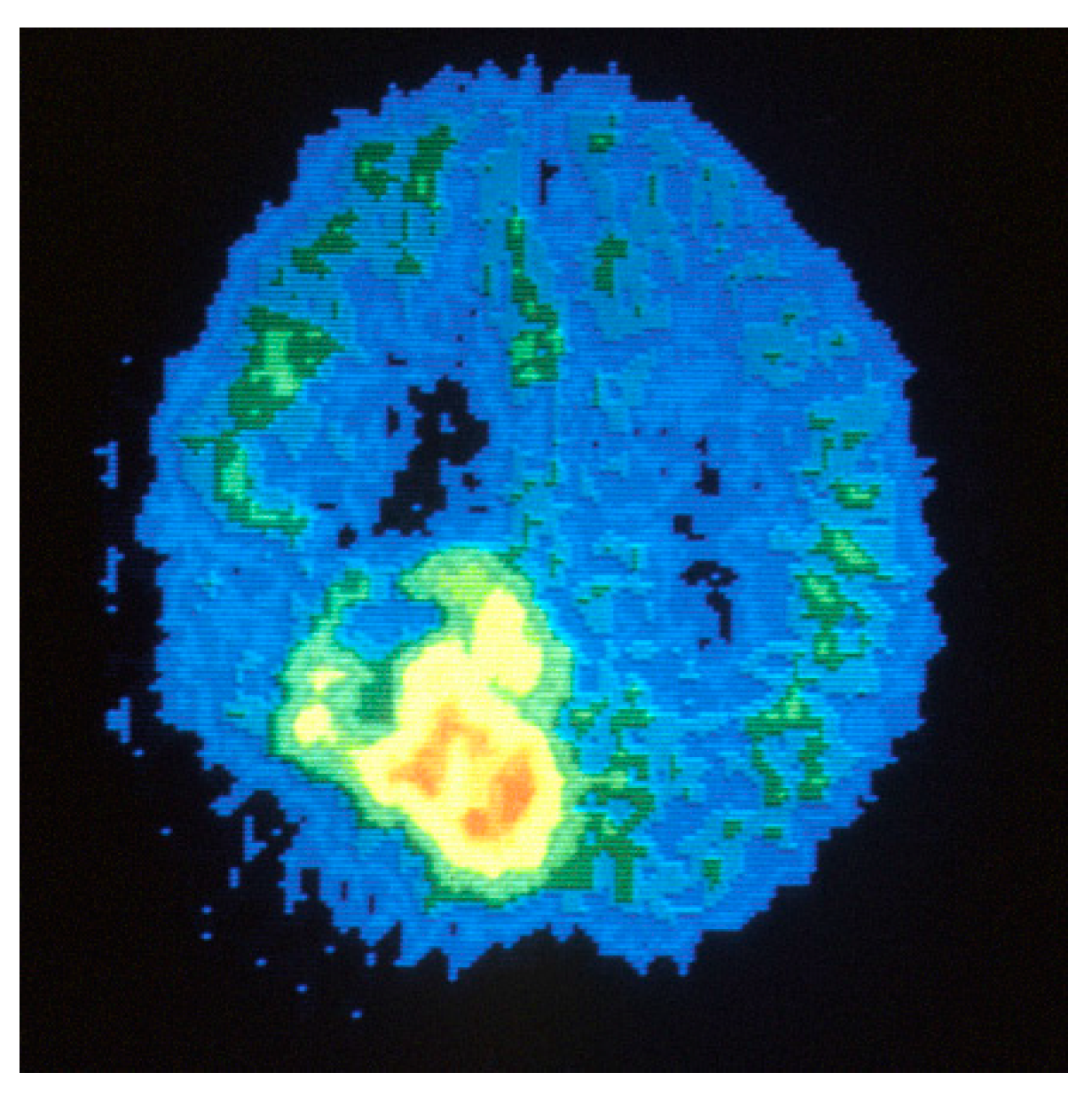

3.3. PET

3.4. SPECT

3.5. Ultrasound

4. Classification and segmentation method

4.1. Classification methods

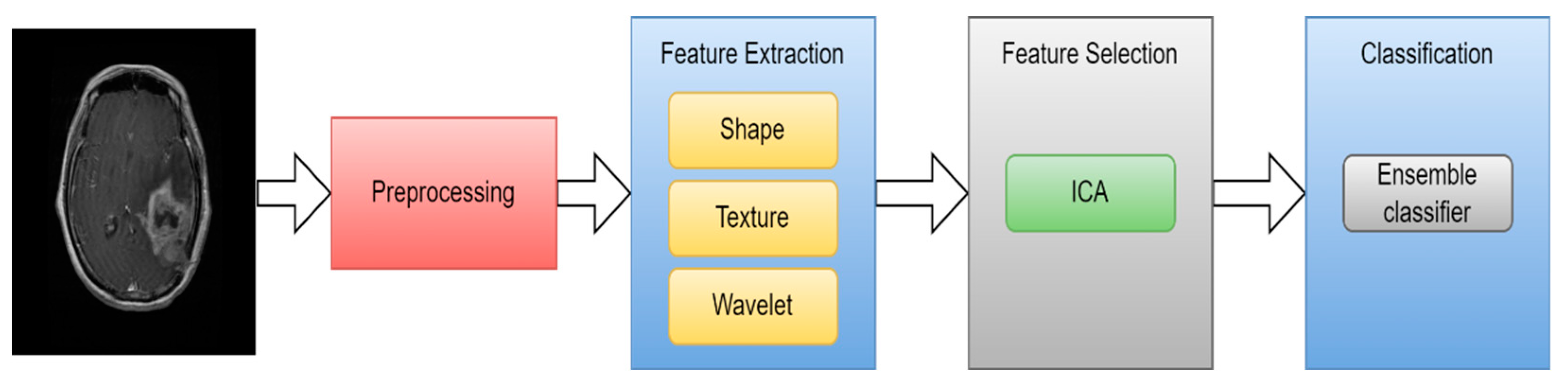

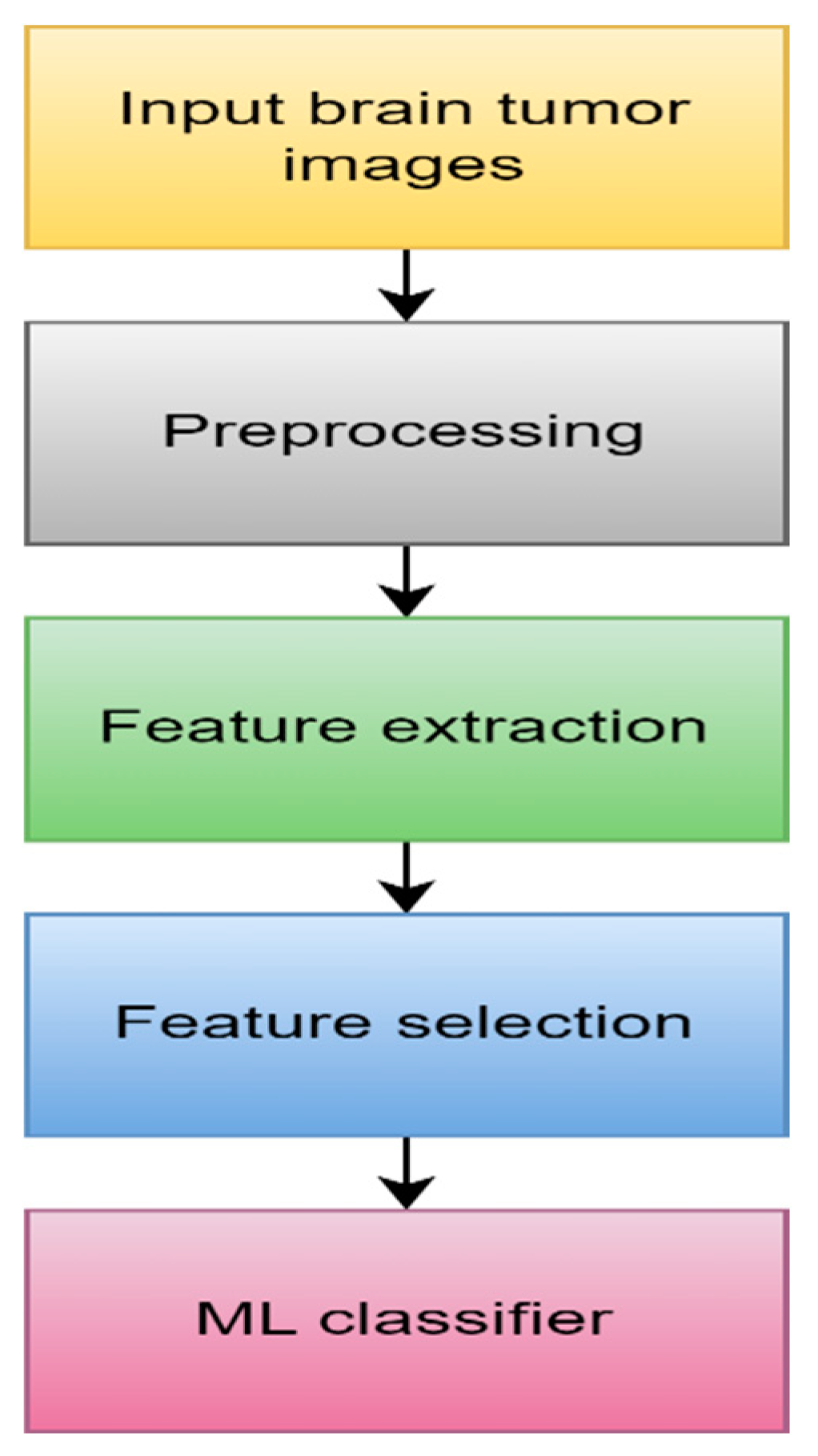

4.1.1. Machine learning

1. Data acquisition
2. Preprocessing
3. Feature extraction
4. Feature selection
5. ML algorithm
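The five steps listed above can be illustrated end to end with a small, self-contained Python sketch. Everything in it is an assumption made for illustration and is not taken from the reviewed studies: the images are synthetic 8x8 arrays rather than real scans, the features are simple intensity statistics, feature selection is a variance filter, and the classifier is a nearest-centroid rule. All function names are hypothetical.

```python
import random
import statistics
from typing import Dict, List, Tuple

Image = List[List[float]]


def acquire(n_per_class: int = 20, size: int = 8, seed: int = 0) -> Tuple[List[Image], List[int]]:
    """Step 1, data acquisition: synthetic 8-bit images, NOT real scans."""
    rng = random.Random(seed)
    images, labels = [], []
    for label in (0, 1):
        for _ in range(n_per_class):
            img = [[rng.uniform(0, 100) for _ in range(size)] for _ in range(size)]
            if label == 1:
                # Paint a bright 3x3 blob as a crude stand-in for a lesion.
                for r in range(2, 5):
                    for c in range(2, 5):
                        img[r][c] = rng.uniform(200, 255)
            images.append(img)
            labels.append(label)
    return images, labels


def preprocess(img: Image) -> Image:
    """Step 2, preprocessing: rescale 8-bit intensities to [0, 1]."""
    return [[p / 255.0 for p in row] for row in img]


def extract_features(img: Image) -> List[float]:
    """Step 3, feature extraction: mean, standard deviation, maximum, pixel count."""
    flat = [p for row in img for p in row]
    return [statistics.fmean(flat), statistics.pstdev(flat), max(flat), float(len(flat))]


def select_features(rows: List[List[float]], min_var: float = 1e-6) -> List[int]:
    """Step 4, feature selection: keep columns whose variance exceeds min_var."""
    return [j for j in range(len(rows[0]))
            if statistics.pvariance([r[j] for r in rows]) > min_var]


def fit_centroids(rows: List[List[float]], labels: List[int]) -> Dict[int, List[float]]:
    """Step 5, ML algorithm (training): one mean feature vector per class."""
    centroids = {}
    for cls in set(labels):
        members = [r for r, y in zip(rows, labels) if y == cls]
        centroids[cls] = [statistics.fmean(col) for col in zip(*members)]
    return centroids


def predict(centroids: Dict[int, List[float]], row: List[float]) -> int:
    """Step 5, ML algorithm (inference): label of the closest centroid."""
    def dist2(a: List[float], b: List[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda cls: dist2(centroids[cls], row))


def run_pipeline(seed: int = 0) -> float:
    """Run all five steps and return the accuracy on the held-out half."""
    images, labels = acquire(seed=seed)
    feats = [extract_features(preprocess(img)) for img in images]
    train_idx = list(range(0, len(feats), 2))  # even indices train
    test_idx = list(range(1, len(feats), 2))   # odd indices test
    keep = select_features([feats[i] for i in train_idx])
    train_rows = [[feats[i][j] for j in keep] for i in train_idx]
    centroids = fit_centroids(train_rows, [labels[i] for i in train_idx])
    hits = sum(predict(centroids, [feats[i][j] for j in keep]) == labels[i]
               for i in test_idx)
    return hits / len(test_idx)


if __name__ == "__main__":
    print(f"held-out accuracy: {run_pipeline():.2f}")
```

In this toy setting the pixel-count feature is constant across images, so the variance filter discards it. A real system would replace each stage with the techniques surveyed later, for example GLCM or wavelet features, PCA, and an SVM or ANN classifier.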

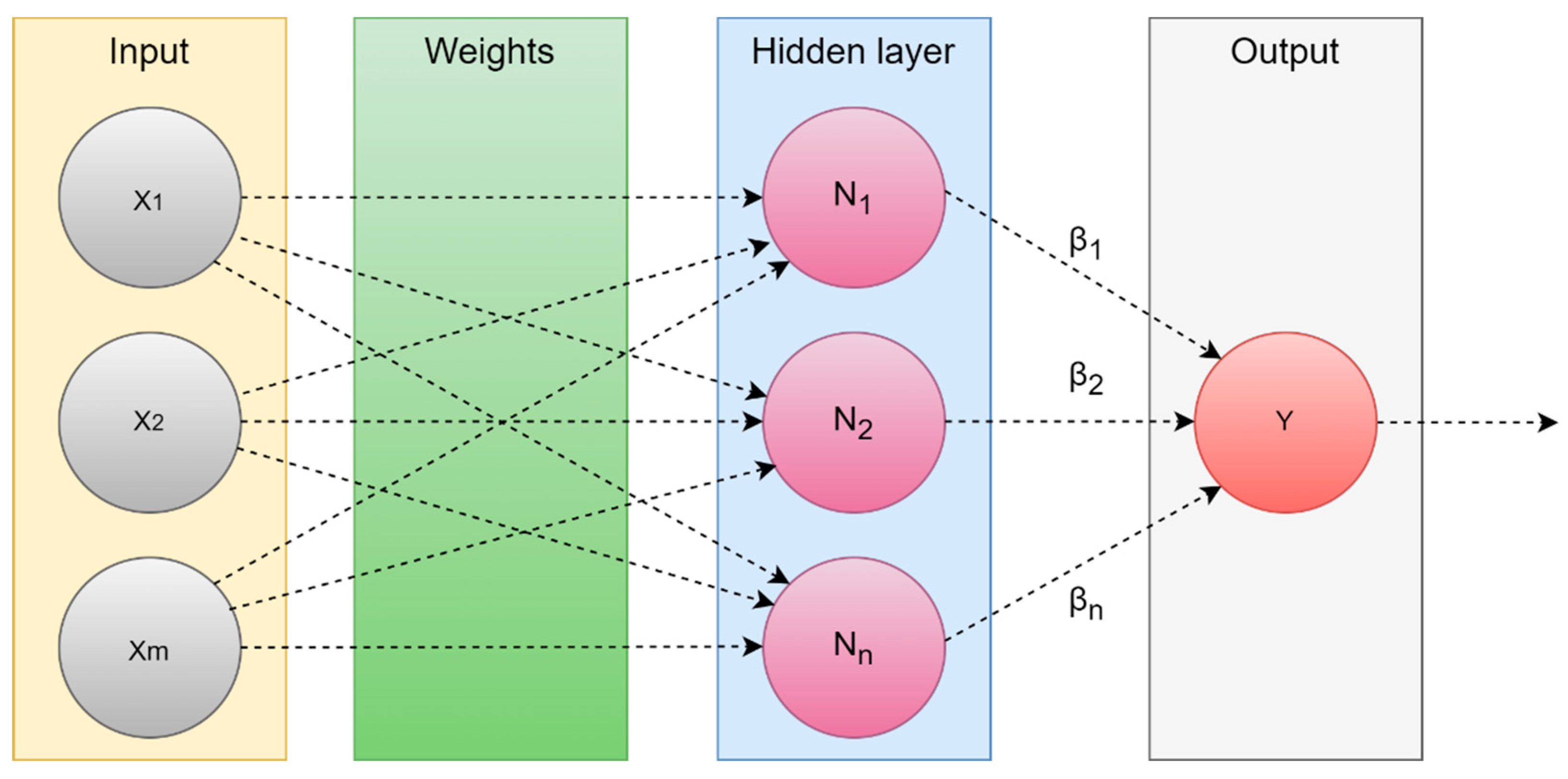

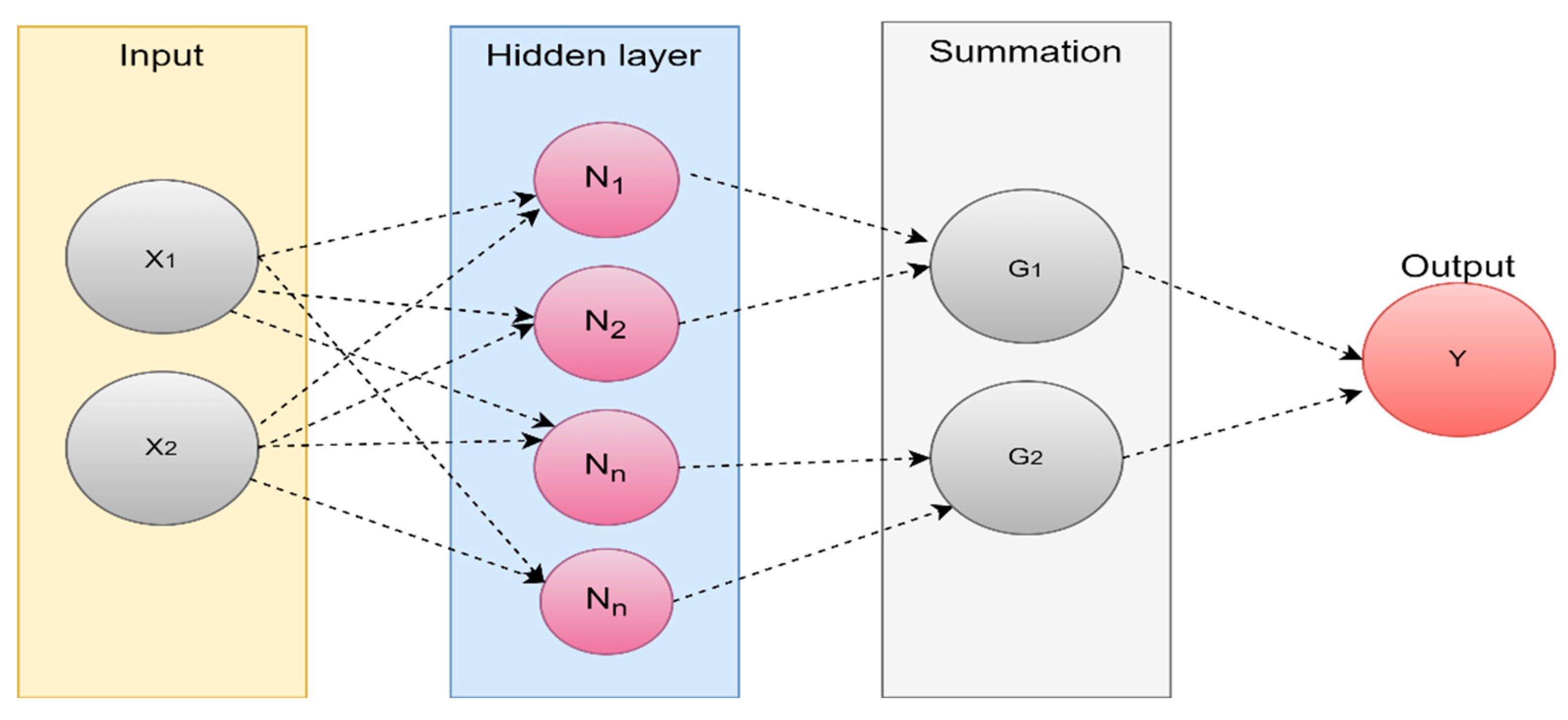

4.1.2. Extreme Learning Machine (ELM)

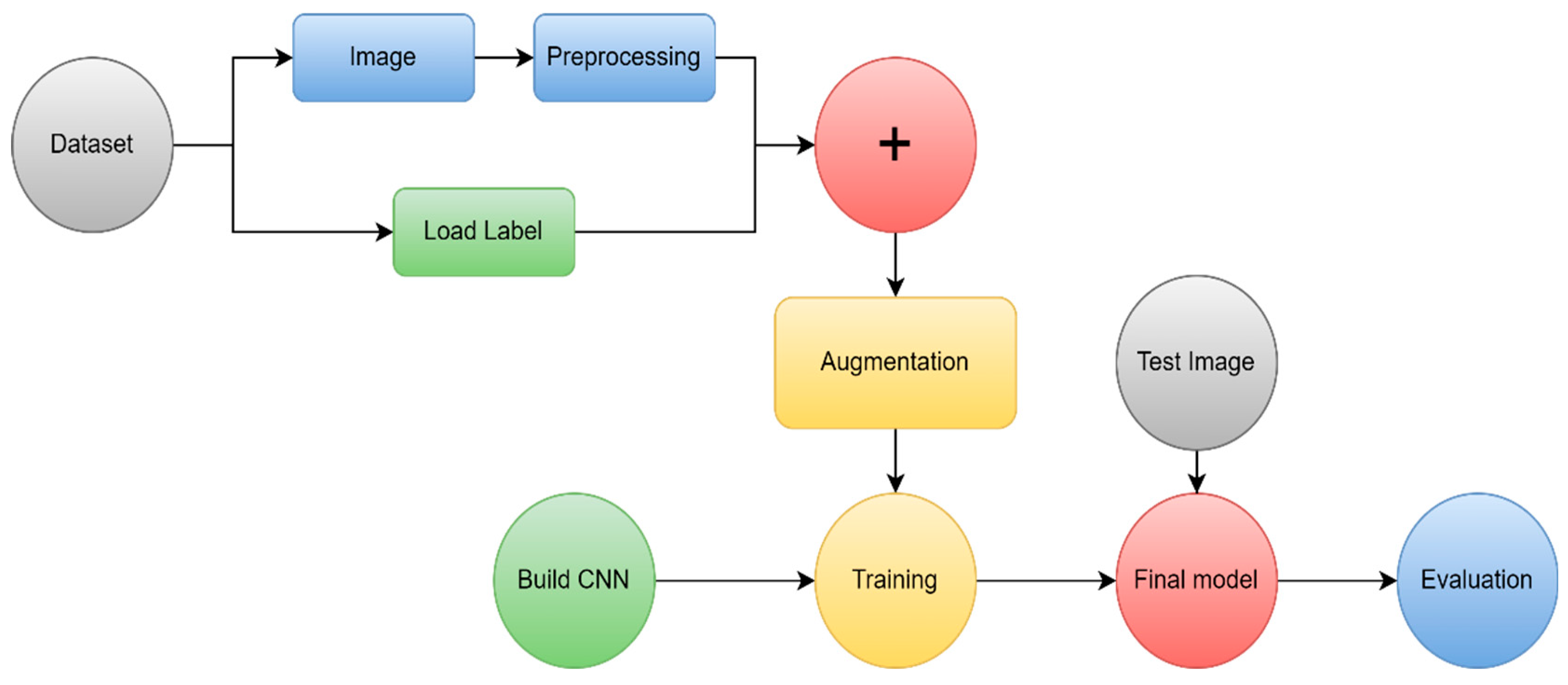

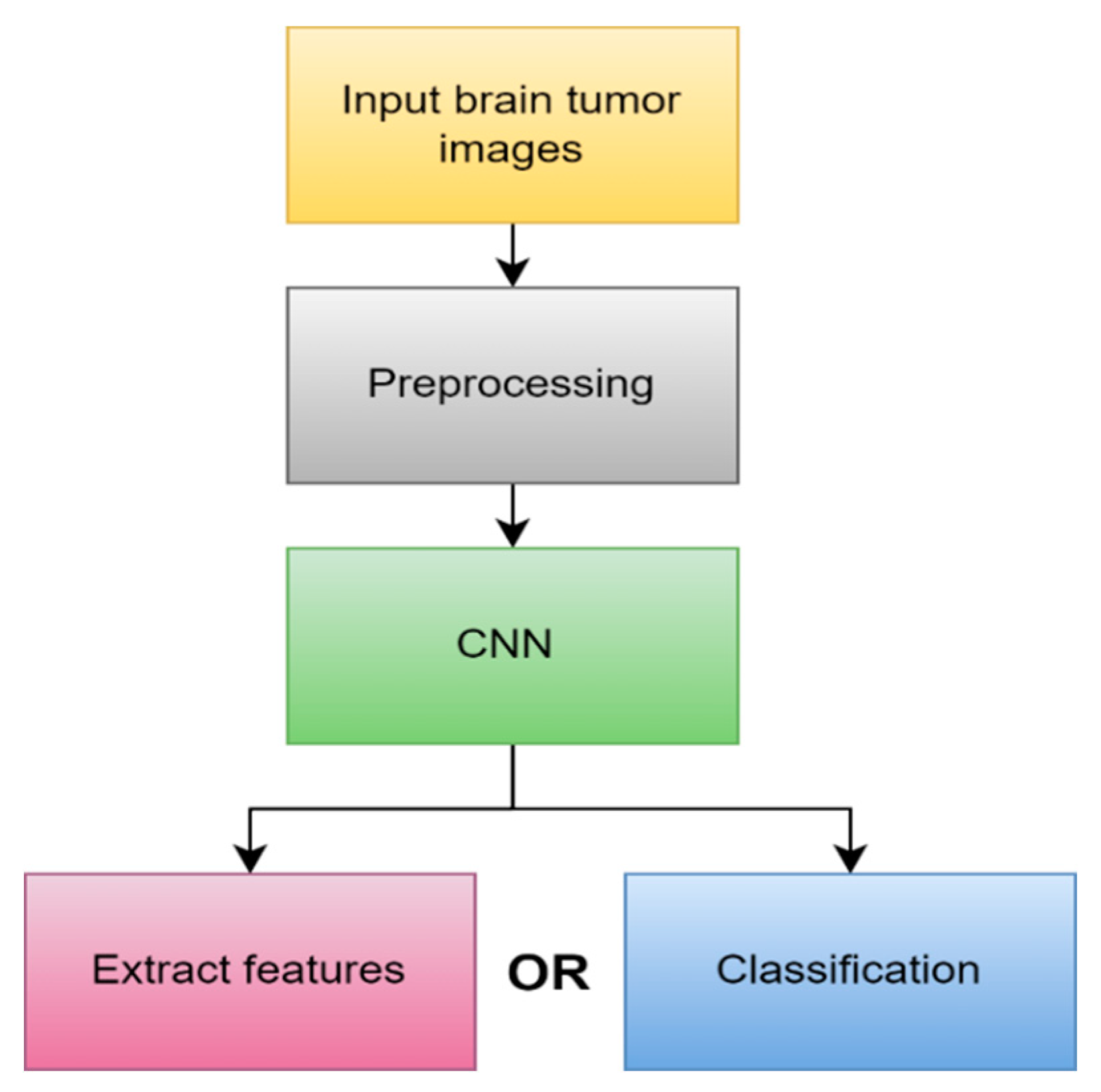

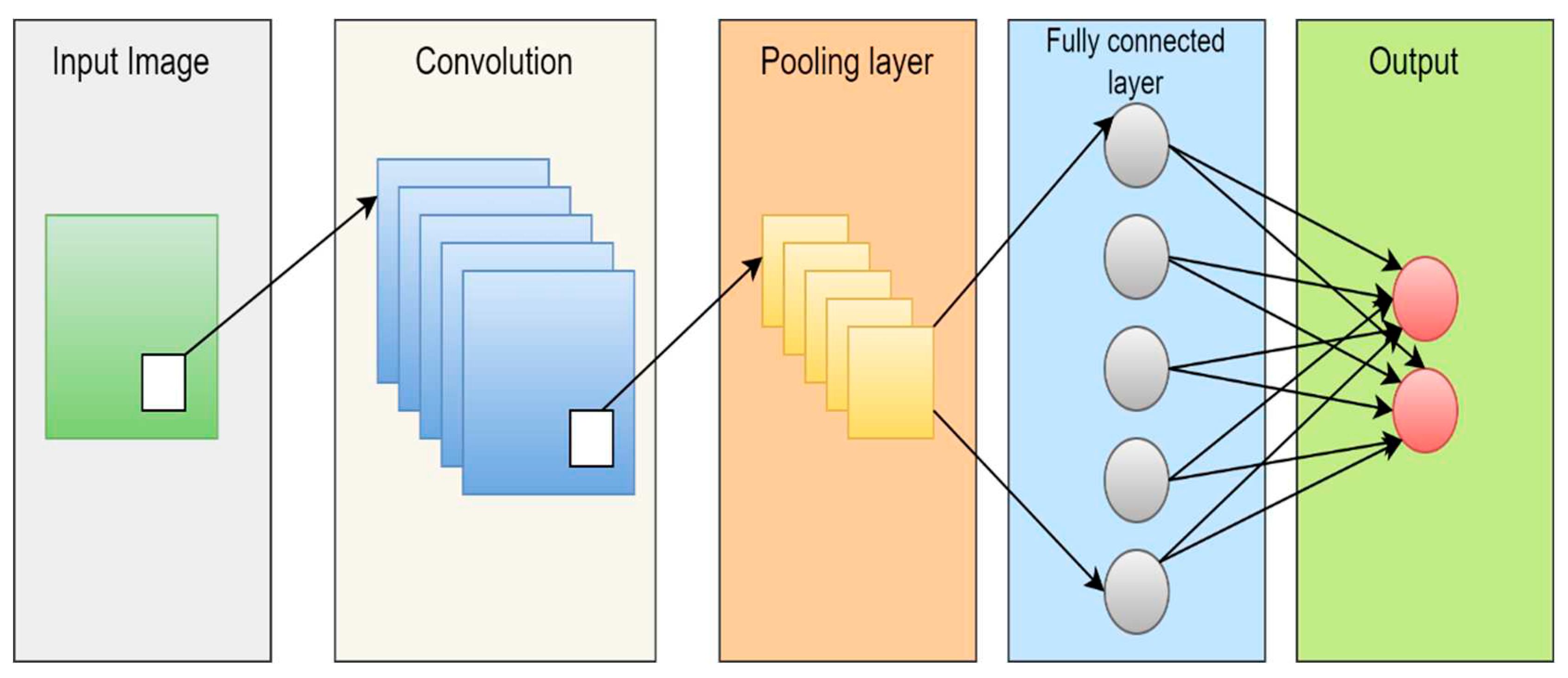

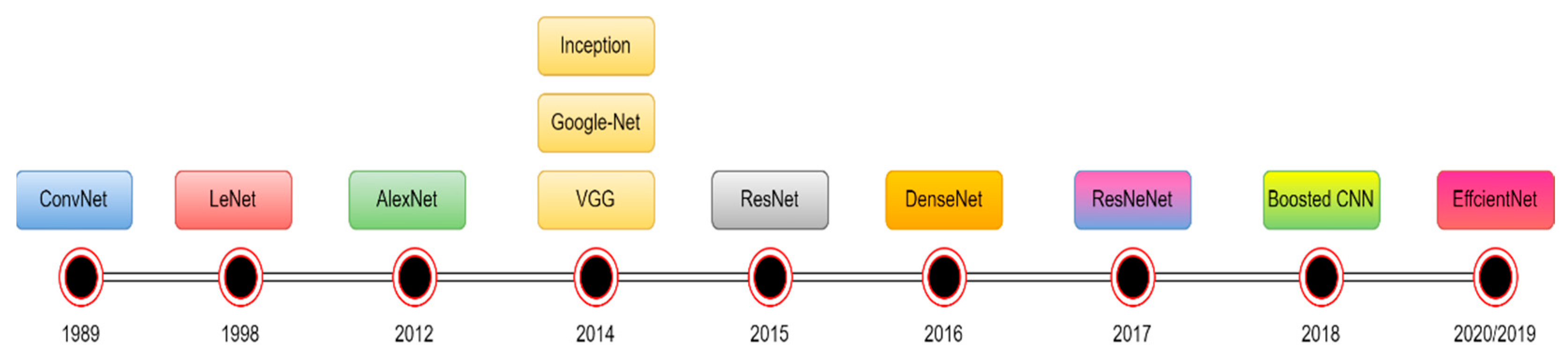

4.1.3. Deep learning (DL)

4.2. Segmentation method

4.2.1. Region-Based segmentation

4.2.2. Thresholding methods
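As an illustration of this family of methods, the sketch below implements Otsu's method, a widely used global thresholding technique that picks the gray level maximizing the between-class variance of the histogram. It is a minimal example under stated assumptions (integer 8-bit intensities, a single global threshold, a tiny hand-made image); the names `otsu_threshold` and `segment` are hypothetical and do not come from any of the reviewed works.

```python
from typing import List, Sequence


def otsu_threshold(pixels: Sequence[int], levels: int = 256) -> int:
    """Return the gray level t that maximizes between-class variance.

    Pixels with value <= t are treated as background, the rest as foreground.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(levels):
        w_bg += hist[t]            # number of background pixels so far
        sum_bg += t * hist[t]      # intensity mass of the background
        w_fg = total - w_bg
        if w_bg == 0:
            continue               # no background yet, nothing to compare
        if w_fg == 0:
            break                  # everything is background from here on
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def segment(image: List[List[int]], t: int) -> List[List[int]]:
    """Binary mask: 1 where the pixel is brighter than the threshold."""
    return [[1 if p > t else 0 for p in row] for row in image]


if __name__ == "__main__":
    # Toy image (an assumption): dark background with a bright 2x2 region.
    image = [
        [10, 20, 10, 20],
        [20, 200, 210, 10],
        [10, 210, 200, 20],
        [20, 10, 20, 10],
    ]
    t = otsu_threshold([p for row in image for p in row])
    for row in segment(image, t):
        print(row)
```

A single global threshold works only when tumor and background intensities are well separated; on real scans it is usually combined with preprocessing such as skull stripping or with local, adaptive thresholds.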

4.2.3. Watershed techniques

4.2.4. Morphological-Based Method

4.2.5. Edge-Based Method

4.2.6. Neural networks based methods

4.2.7. DL-based segmentation

4.3. Performance evaluation

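| Parameter | Equation |
|---|---|
| ACC | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| SEN | $\frac{TP}{TP + FN}$ |
| SPE | $\frac{TN}{TN + FP}$ |
| PR | $\frac{TP}{TP + FP}$ |
| F1_SCORE | $\frac{2 \cdot PR \cdot SEN}{PR + SEN}$ |
| DSC | $\frac{2\,TP}{2\,TP + FP + FN}$ |
| Jaccard | $\frac{TP}{TP + FP + FN}$ |

TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives.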
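The parameters named in the table above can all be computed from the four confusion-matrix counts. The following Python sketch uses the standard textbook definitions of accuracy, sensitivity, specificity, precision, F1 score, Dice similarity coefficient, and Jaccard index; it assumes a binary task (1 = tumor, 0 = non-tumor), and the names `Confusion`, `confusion_from_labels`, and `evaluate` are hypothetical helpers introduced here.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class Confusion:
    """True/false positive and negative counts for a binary task."""
    tp: int
    tn: int
    fp: int
    fn: int


def confusion_from_labels(pred: Sequence[int], truth: Sequence[int]) -> Confusion:
    """Count outcomes for binary labels or flattened masks (1 = tumor)."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    pairs = list(zip(pred, truth))
    return Confusion(
        tp=sum(1 for p, t in pairs if p == 1 and t == 1),
        tn=sum(1 for p, t in pairs if p == 0 and t == 0),
        fp=sum(1 for p, t in pairs if p == 1 and t == 0),
        fn=sum(1 for p, t in pairs if p == 0 and t == 1),
    )


def _ratio(num: float, den: float) -> float:
    # Return 0.0 instead of raising when a denominator is empty.
    return num / den if den else 0.0


def evaluate(c: Confusion) -> Dict[str, float]:
    """Standard definitions of the metrics listed in the table."""
    sen = _ratio(c.tp, c.tp + c.fn)   # sensitivity (recall)
    spe = _ratio(c.tn, c.tn + c.fp)   # specificity
    pr = _ratio(c.tp, c.tp + c.fp)    # precision
    return {
        "ACC": _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
        "SEN": sen,
        "SPE": spe,
        "PR": pr,
        "F1_SCORE": _ratio(2 * pr * sen, pr + sen),
        "DSC": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
        "Jaccard": _ratio(c.tp, c.tp + c.fp + c.fn),
    }


if __name__ == "__main__":
    predicted = [1, 1, 0, 0, 1, 0]
    reference = [1, 0, 0, 1, 1, 0]
    for name, value in evaluate(confusion_from_labels(predicted, reference)).items():
        print(f"{name}: {value:.3f}")
```

For segmentation, the same functions apply to flattened binary masks, where each pixel is one prediction. With these definitions the F1 score and the DSC are algebraically identical, and the Jaccard index is related to the DSC by J = DSC / (2 - DSC).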

5. Literature Review

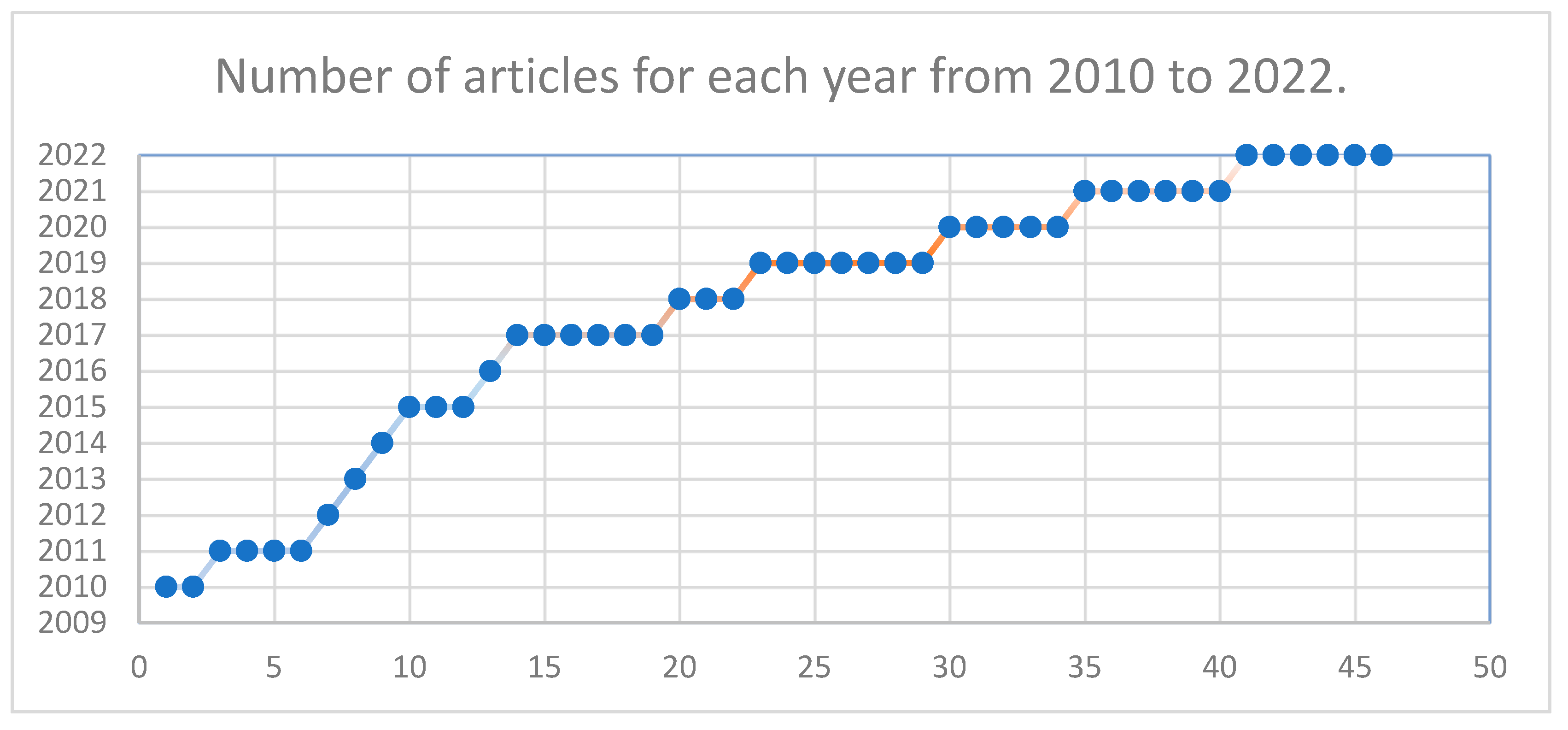

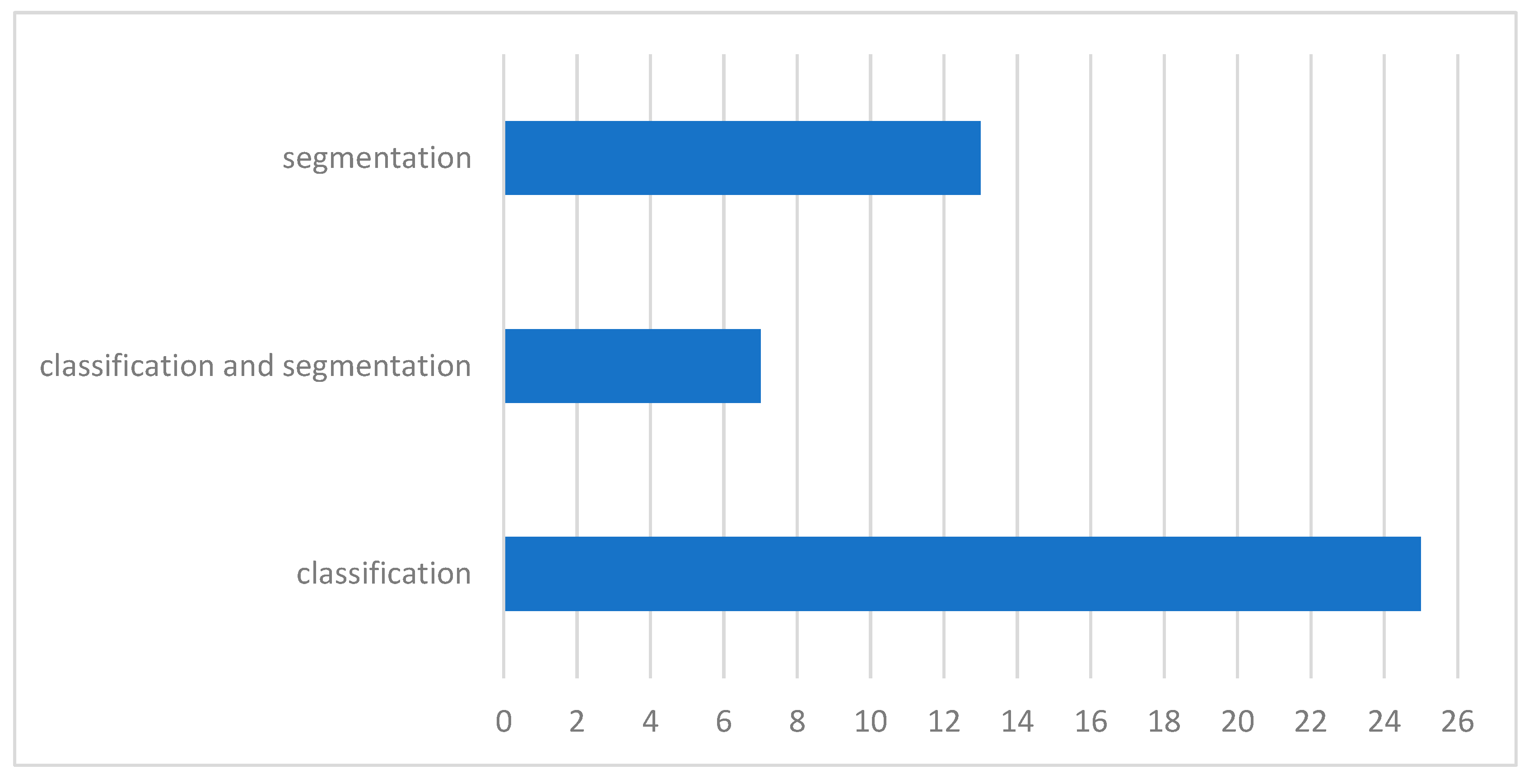

5.1. Article Selection

5.2. Publicly available datasets

5.3. Related work

5.3.1. MRI Brain tumor segmentation

| Ref | Scan | year | technique | Method | Performance Metrics | result |

|---|---|---|---|---|---|---|

| [80] | MRI | 2010 | region-based | FCM | Acc | 93.00% |

| [81] | MRI | 2011 | region-based | FCM | Jaccard | 83.19% |

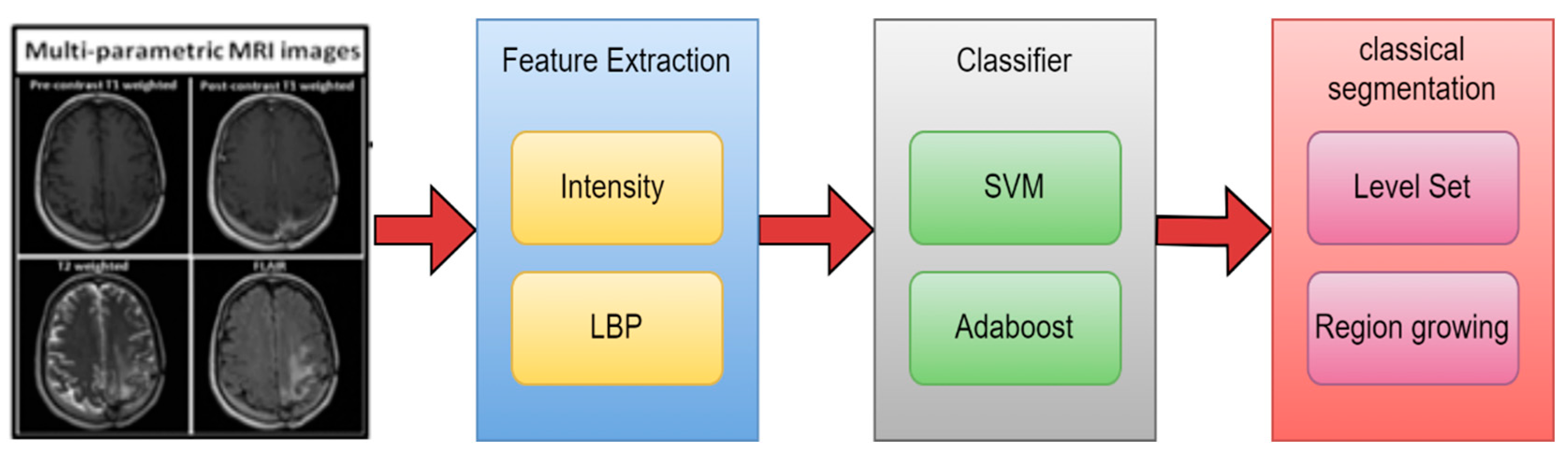

| [82] | MRI | 2012 | NN | LBP with SVM | DSC | 69.00% |

| [69] | MRI | 2016 | DL | CNN | DSC | 88.00% |

| [84] | MRI | 2017 | NN | GLCM with SVM | DSC | 86.12% |

| [39] | MRI | 2017 | NN | LBP with RF | Jaccard & DSC | 87.% & 93% |

| [85] | MRI | 2018 | region-based | FCM | Acc | 98.00% |

| [83] | MRI | 2018 | region-based | FCM and k-mean | Acc | 91.94% |

| [67] | MRI | 2019 | DL & NN | CNN with SVM | DSC | 88.00% |

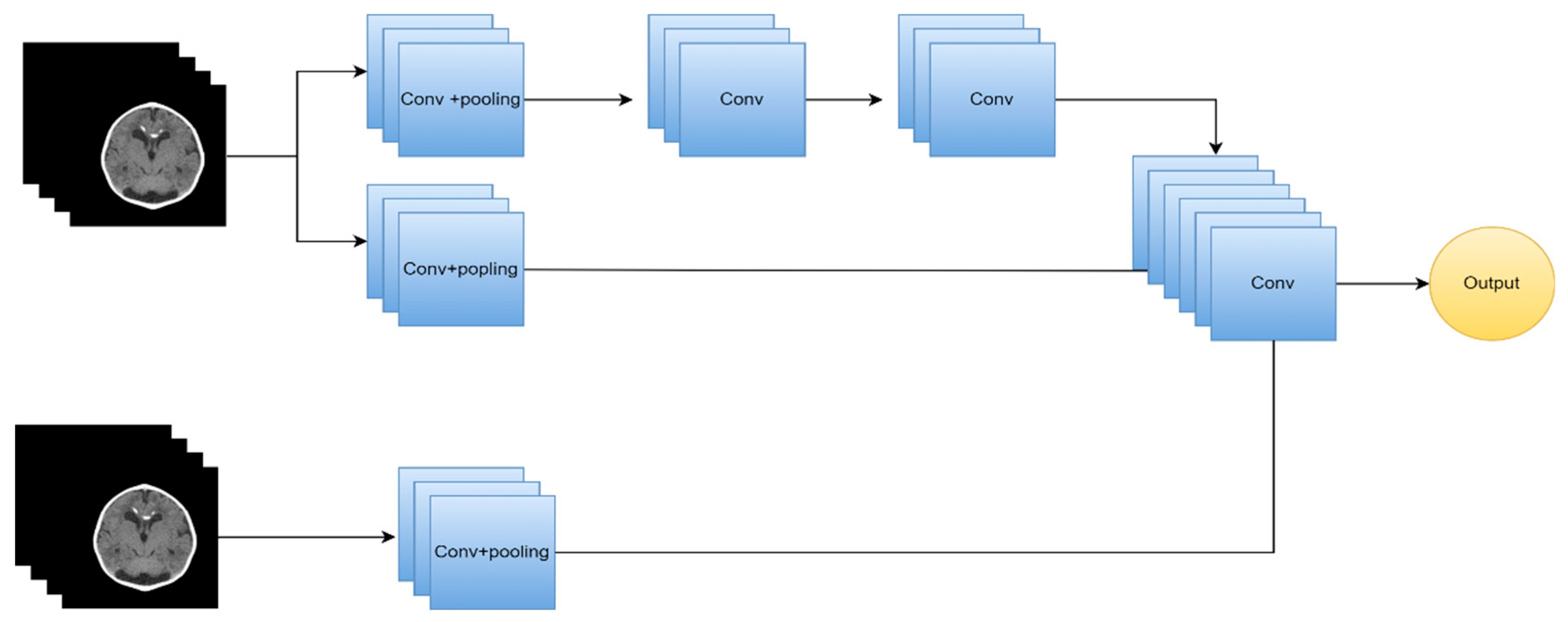

| [86] | MRI | 2019 | DL | Two-path CNN | DSC | 89.20% |

| [87] | MRI | 2019 | DL | semantic | Acc | 88.20% |

| [88] | MRI | 2021 | DL | semantic | IoU | 91.72% |

5.3.2. MRI brain tumor classification using ML

| Ref | Scan | year | feature extraction | feature selection | classification | Acc |

|---|---|---|---|---|---|---|

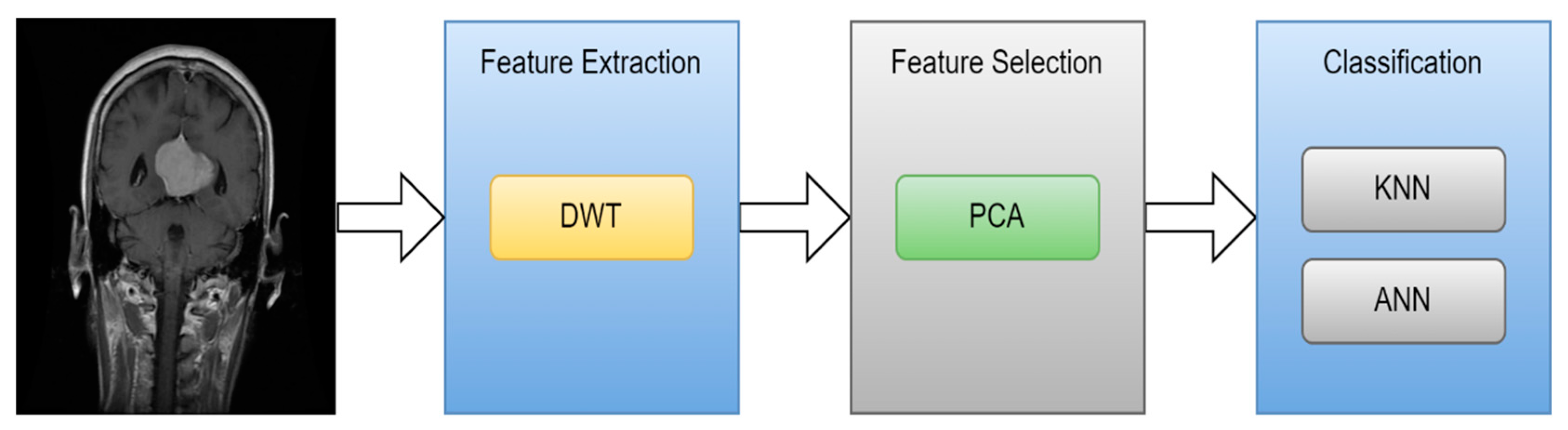

| [95] | MRI | 2010 | GLCM | PCA | ANN and KNN | 98% and 97% |

| [89] | MRI | 2011 | Wavelet | PCA | Back Propagation NN | 100.00% |

| [93] | MRI | 2013 | Intensity and texture | PCA | ANN | 85.50% |

| [94] | MRI | 2014 | GLCM | - | SVM | 93.00% |

| [36] | MRI | 2015 | Texture and shape | ICA | SVM | 99.09% |

| [90] | MRI | 2015 | Wavelet | - | SVM | 97.00% |

| [91] | MRI | 2017 | Texture and shape | - | SVM | 97.10% |

| [92] | MRI | 2017 | Intensity and texture | - | ANN | 92.43% |

5.3.3. MRI brain tumor classification using DL

| Ref | Scan | year | technique | Method | result | Performance Metrics |

|---|---|---|---|---|---|---|

| [99] | MRI | 2015 | DL | Custom-CNN | 96.00% | Acc |

| [100] | MRI | 2019 | DL | Custom-CNN | 98.70% | Acc |

| [101] | MRI | 2020 | DL | VGG-16, Inception-v3, ResNet-50 | 96% 75% 89% | Acc |

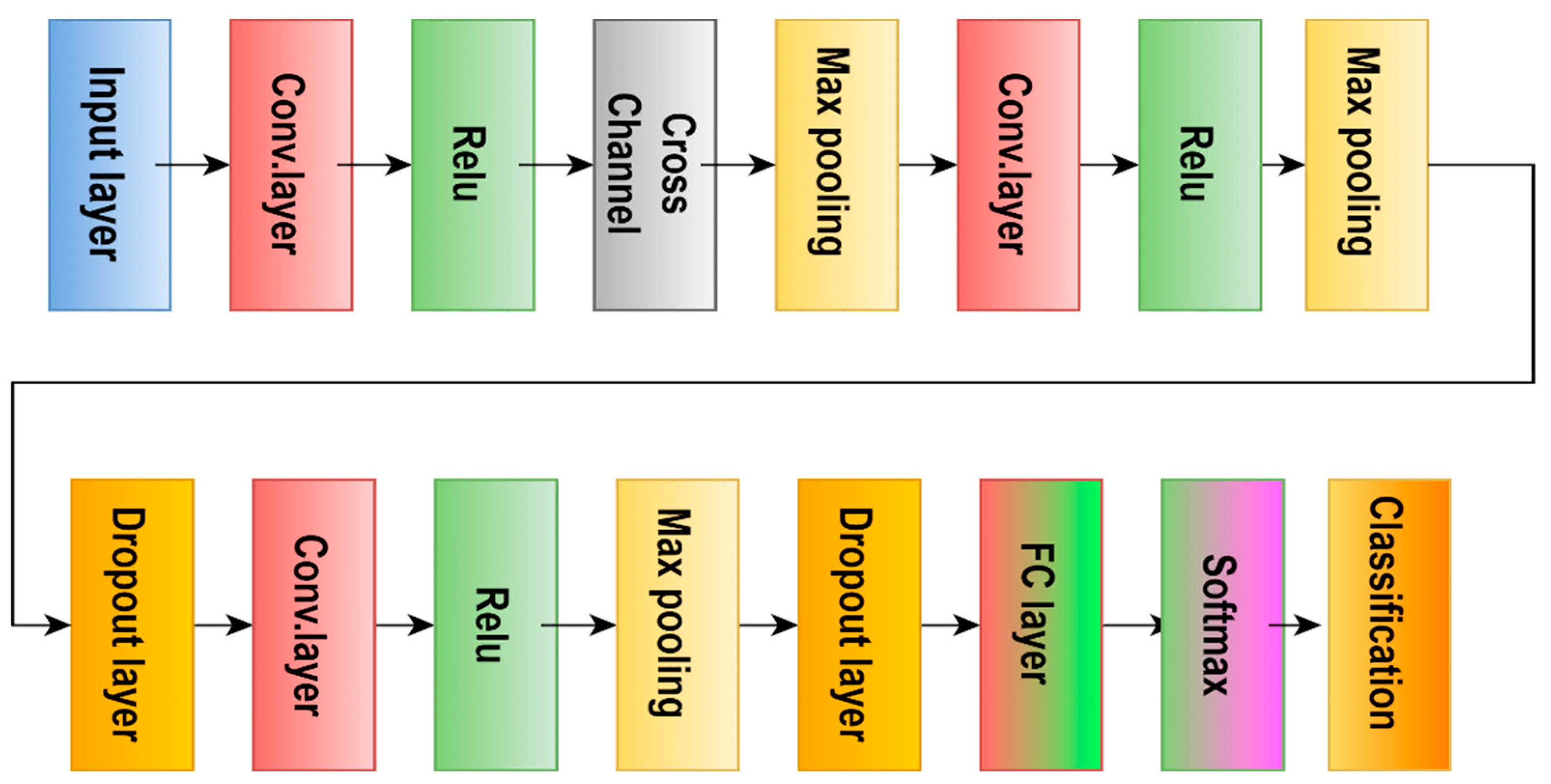

| [102] | MRI | 2021 | DL | AlexNet, GoogLeNet, SqueezeNet | 97.10% | Acc |

| [103] | MRI | 2021 | DL | Custom-CNN | 82.89% | ROC |

| [104] | MRI | 2018 | DL | AlexNet | 90.90% | test acc |

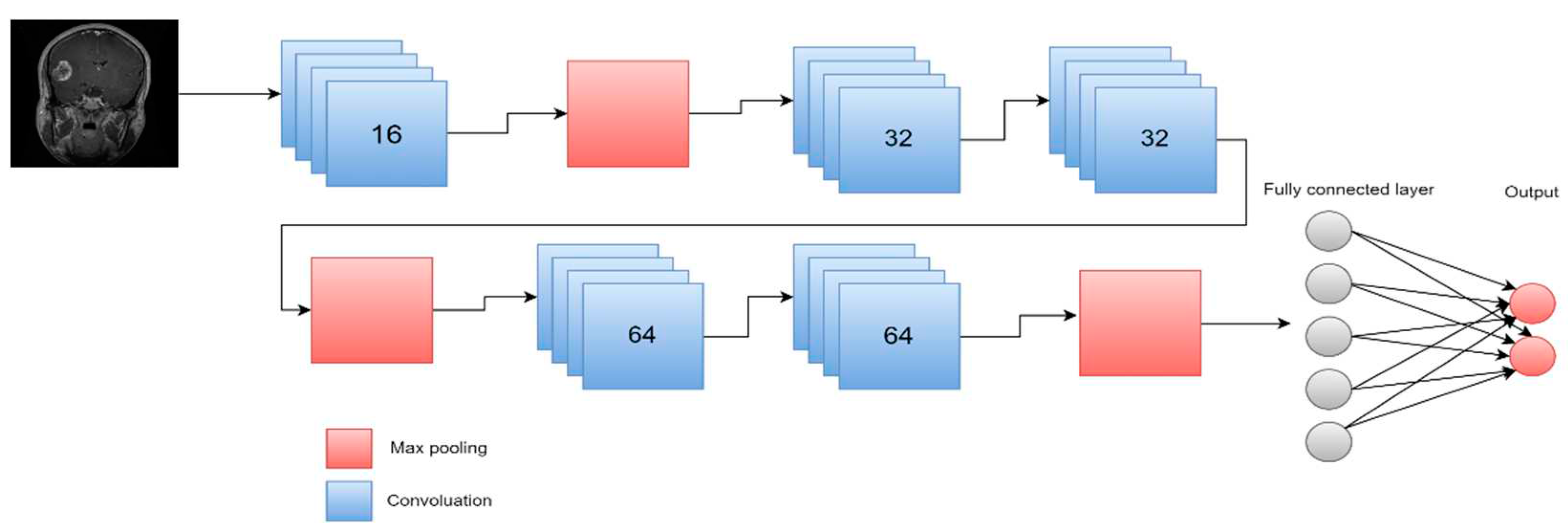

| [105] | MRI | 2021 | DL | multi CNN structure | 98.67% 98.06% 98.33% 98.06% | precision, f1 score, precision, sensitivity |

| [106] | MRI | 2022 | DL | EfficientNetB0 | 98.80% | Acc |

| [70] | MRI | 2022 | DL | ResNet18 | 88.00% | AUC |

| [107] | MRI | 2022 | DL | Custom-CNN | 98.70% | Acc |

| [108] | MRI | 2022 | DL | Custom-CNN | 95.75% | Acc |

| [109] | MRI | 2022 | DL | Gaussian-CNN | 99.80% | Acc |

| [110] | MRI | 2020 | DL | seven-layer CNN | 97.52% | Acc |

| [111] | MRI | 2021 | DL | Alexnet | 100.00% | Acc |

| [112] | MRI | 2019 | DL | VGG16 | 98.69% | Acc |

5.3.4. Hybrid techniques

| Ref | Year | Segmentation method | Feature extraction | Classifier | Accuracy |
|---|---|---|---|---|---|
| [113] | 2017 | FCM | shape and statistical | SVM and ANN | 97.44% and 97.37% |
| [117] | 2017 | FCM | DWT and PCA | CNN | 98.00% |
| [52] | 2019 | watershed | shape | KNN | 89.50% |
| [115] | 2019 | Otsu's | DWT | SVM | 99.00% |
| [116] | 2020 | thresholding and watershed | CNN | SVM | 87.4% |
| [114] | 2020 | Canny | GLCM and Gabor | ANN | 98.90% |

5.3.5. Various segmentation and classification methods employing CT images

| Ref | Year | Scan | Segmentation | Feature extraction | Feature selection | Classification | Result |
|---|---|---|---|---|---|---|---|
| [118] | 2011 | CT | NN | WCT and WST | GA | - | 97.00% |
| [119] | 2011 | CT | FCM & k-means | GLCM and WCT | GA | SVM | 98.00% |
| [120] | 2020 | CT | Semantic | - | - | GoogLeNet | 99.60% |
| [121] | 2021 | CT | - | - | - | CNN | 96.00% |
| [122] | 2022 | SPECT/MRI | - | DCT | - | SVM | 96.80% |

6. Discussion

7. Conclusions

Funding

Conflicts of Interest

References

- Watson C., Kirkcaldie M., and Paxinos G., The Brain: An Introduction to Functional Neuroanatomy. 2010. [Online]. Available: http://ci.nii.ac.jp/ncid/BB04049625.

- Jellinger K., “The Human Nervous System Structure and Function, 6th edn,” European Journal of Neurology, vol. 16, no. 7, p. e136, May 2009. [CrossRef]

- DeAngelis L. M., “Brain tumors,” New England Journal of Medicine, vol. 344, no. 2, pp. 114–123, 2001. [CrossRef]

- Louis, D.N.; Perry, A.; Wesseling, P.; Brat, D.J.; Cree, I.A.; Branger, D.F.; Hawkins, C.; Ng, H.K.; Pfister, S.M.; Reifenberger, G.; et al. The 2021 WHO classification of tumors of the central nervous system: A summary. Neuro-Oncology 2021, 23, 1231–1251. [Google Scholar] [CrossRef]

- Hayward, R.M.; Patronas, N.; Baker, E.H.; Vézina, G.; Albert, P.S.; Warren, K.E. Inter-observer variability in the measurement of diffuse intrinsic pontine gliomas. J. Neuro-Oncol. 2008, 90, 57–61. [Google Scholar] [CrossRef] [PubMed]

- Mahaley, M.S., Jr.; Mettlin, C.; Natarajan, N.; Laws, E.R., Jr.; Peace, B.B. National survey of patterns of care for brain-tumor patients. J. Neurosurg. 1989, 71, 826–836. [Google Scholar] [CrossRef] [PubMed]

- Sultan, H. H., Salem, N. M. and Al-Atabany, W. (2019) “Multi-Classification of Brain Tumor Images Using Deep Neural Network,” IEEE Access, vol. 7, pp. 69215–69225, Jan. 2019. [CrossRef]

- Johnson, Derek R., Julie B. Guerin, Caterina Giannini, Jonathan M. Morris, Lawrence J. Eckel, and Timothy J. Kaufmann. "2016 updates to the WHO brain tumor classification system: what the radiologist needs to know." Radiographics 37, No. 7 (2017): 2164-2180. [CrossRef]

- Buckner J. C., et al., “Central Nervous System Tumors,” Mayo Clinic Proceedings, vol. 82, no. 10, 2007, pp. 1271–1286. [CrossRef]

- World Health Organization: WHO, “Cancer,” www.who.int, Jul. 2019, [Online]. Available: https://www.who.int/health-topics/cancer (accessed on 10 May 2023).

- Amyot, F.; Arciniegas, D.B.; Brazaitis, M.P.; Curley, K.C.; Diaz-Arrastia, R.; Gandjbakhche, A.; Herscovitch, P.; Hindsll, S.R.; Manley, G.T.; Pacifico, A.; et al. A review of the effectiveness of neuroimaging modalities for the detection of traumatic brain injury. J. Neurotrauma 2015, 32, 1693–1721. [Google Scholar] [CrossRef] [PubMed]

- Pope, W.B. Brain metastases: Neuroimaging. Handb. Clin. Neurol. 2018, 149, 89–112. [Google Scholar] [PubMed]

- Abd-Ellah, Mahmoud Khaled, Ali Ismail Awad, Ashraf AM Khalaf, and Hesham FA Hamed. "A review on brain tumor diagnosis from MRI images: Practical implications, key achievements, and lessons learned." Magnetic resonance imaging 61 (2019): 300-318. [CrossRef]

- Ammari, S.; Pitre-Champagnat, S.; Dercle, L.; Chouzenoux, E.; Moalla, S.; Reuze, S.; Talbot, H.; Mokoyoko, T.; Hadchiti, J.; Diffetocq, S.; et al. Influence of Magnetic Field Strength on Magnetic Resonance Imaging Radiomics Features in Brain Imaging, an In Vitro and In Vivo Study. Front. Oncol. 2021, 10. [Google Scholar] [CrossRef] [PubMed]

- L. Sahoo, L. Sarangi, B. R. Dash, and H. K. Palo, “Detection and Classification of Brain Tumor Using Magnetic Resonance Images,” in Lecture notes in electrical engineering, Springer Science+Business Media, 2020. [CrossRef]

- Ammari, S.; Pitre-Champagnat, S.; Dercle, L.; Chouzenoux, E.; Moalla, S.; Reuze, S.; Talbot, H.; Mokoyoko, T.; Hadchiti, J.; Diffetocq, S.; et al. Influence of Magnetic Field Strength on Magnetic Resonance Imaging Radiomics Features in Brain Imaging, an In Vitro and In Vivo Study. Front. Oncol. 2021, 10. [Google Scholar] [CrossRef] [PubMed]

- Kaur, R. and Doegar A., ‘Localization and Classification of Brain Tumor using Machine Learning & Deep Learning Techniques’, Int. J. Innov. Technol. Explor. Eng., vol. 8, no. 9S, pp. 59–66, Aug. 2019. [CrossRef]

- “The Radiology Assistant: Multiple Sclerosis 2.0,” Dec. 01, 2021. https://radiologyassistant.nl/neuroradiology/multiple-sclerosis/diagnosis-and-differential-diagnosis-3#mri-protocol-ms-brain-protocol (accessed 22 May 2023).

- Luo, Q.; Li, Y.; Luo, L.; Diao, W. Comparisons of the accuracy of radiation diagnostic modalities in brain tumor. Medicine 2018, 97, e11256. [Google Scholar] [CrossRef] [PubMed]

- “Positron Emission Tomography (PET),” Johns Hopkins Medicine, Aug. 20, 2021. https://www.hopkinsmedicine.org/health/treatment-tests-and-therapies/positron-emission-tomography-pet (accessed 20 May 2023).

- Mayfield Brain & Spine, “SPECT scan,” 2022. https://mayfieldclinic.com/pe-spect.

- Sastry R. A. et al., “Applications of Ultrasound in the Resection of Brain Tumors,” Journal of Neuroimaging, vol. 27, no. 1, pp. 5–15, Jan. 2017. [CrossRef]

- Nasrabadi, N.M. Pattern recognition and machine learning. J. Electron. Imaging 2007, 16, 049901. [Google Scholar] [CrossRef]

- Erickson, B.J.; Korfiatis, P.; Akkus, Z.; Kline, T.L. Machine learning for medical imaging. Radiographics 2017, 37, 505–515. [Google Scholar] [CrossRef] [PubMed]

- Mohan, MR Maneesha, C. Helen Sulochana, and T. Latha. "Medical image denoising using multistage directional median filter." 2015 International Conference on Circuits, Power and Computing Technologies [ICCPCT-2015]. IEEE, 2015. [CrossRef]

- Borole, Vipin Y., Sunil S. Nimbhore, and Dr Seema S. Kawthekar. "Image processing techniques for brain tumor detection: A review." International Journal of Emerging Trends & Technology in Computer Science (IJETTCS) 4.5 (2015): 2.

- Ziedan, R. H., Mead, M. A., & Eltawel, G. S. Selecting the Appropriate Feature Extraction Techniques for Automatic Medical Images Classification. International Journal, 1. (2016).

- Amin, J., Sharif, M., Yasmin, M., & Fernandes, S. L. A distinctive approach in brain tumor detection and classification using MRI. Pattern Recognition Letters, (2020). 139, 118-127. [CrossRef]

- Islam, A., Reza, S. M., & Iftekharuddin, K. M. Multifractal texture estimation for detection and segmentation of brain tumors. IEEE transactions on biomedical engineering, 2013. 60(11), 3204-3215. [CrossRef]

- Gurbină, M., Lascu, M., & Lascu, D. Tumor detection and classification of MRI brain image using different wavelet transforms and support vector machines. In 2019 42nd International Conference on Telecommunications and Signal Processing (TSP) July, 2019. pp. 505-508. IEEE. [CrossRef]

- X. Xu et al., “Three-dimensional texture features from intensity and high-order derivative maps for the discrimination between bladder tumors and wall tissues via MRI,” International Journal of Computer Assisted Radiology and Surgery, vol. 12, no. 4, pp. 645–656, Jan. 2017. [CrossRef]

- Kaplan, K. Kaya, Y. Kuncan, M. Ertunç, H.M. Brain tumor classification using modified local binary patterns (LBP) feature extraction methods. Med. Hypotheses 2020, 139, 109696. [CrossRef] [PubMed]

- Afza, F., Khan M. S., Sharif M., and Saba T., “Microscopic skin laceration segmentation and classification: A framework of statistical normal distribution and optimal feature selection,” Microscopy Research and Technique, vol. 82, no. 9, pp. 1471–1488, Jun. 2019. [CrossRef]

- Lakshmi, A., Arivoli T., and Rajasekaran M. P., “A Novel M-ACA-Based Tumor Segmentation and DAPP Feature Extraction with PPCSO-PKC-Based MRI Classification,” Arabian Journal for Science and Engineering, vol. 43, no. 12, pp. 7095–7111, Nov. 2017. [CrossRef]

- Adair, J., Brownlee A. E. I., and Ochoa G., “Evolutionary Algorithms with Linkage Information for Feature Selection in Brain Computer Interfaces,” in Advances in intelligent systems and computing, Springer Nature, 2016, pp. 287–307. [CrossRef]

- Arakeri M. P. and Reddy G. R. M., “Computer-aided diagnosis system for tissue characterization of brain tumor on magnetic resonance images,” Signal, Image and Video Processing, vol. 9, no. 2, pp. 409–425, Feb. 2015. [CrossRef]

- Adair, J., Brownlee A. E. I., and Ochoa G., “Evolutionary Algorithms with Linkage Information for Feature Selection in Brain Computer Interfaces,” in Advances in intelligent systems and computing, Springer Nature, 2016, pp. 287–307. [CrossRef]

- Wang, S., Zhang, Y., Dong, Z., Du, S., Ji, G., Yan, J.,... & Phillips, P. Feed-forward neural network optimized by hybridization of PSO and ABC for abnormal brain detection. International Journal of Imaging Systems and Technology, 2015. 25(2), 153-164. [CrossRef]

- Abbasi, S. and Tajeripour F., Detection of brain tumor in 3D MRI images using local binary patterns and histogram orientation gradient, Neurocomputing, vol. 219, pp. 526–535, Jan. 2017. [CrossRef]

- Zöllner, F. G., Emblem, K. E., & Schad, L. R. SVM-based glioma grading: Optimization by feature reduction analysis. Zeitschrift Fur Medizinische Physik, 2012. 22(3), 205–214. [CrossRef]

- Huang, G.B., Zhu, Q.Y., Siew, C.K. Extreme learning machine: Theory and applications. Neuro-computing 2006, 70, 489–501. [CrossRef]

- Bhatele, K. R., & Bhadauria, S. S. Brain structural disorders detection and classification approaches: a review. Artificial Intelligence Review, 2019. 53(5), 3349–3401. [CrossRef]

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Networks, 2015. 61, 85–117. [CrossRef]

- Hu, A., & Razmjooy, N. (2021). Brain tumor diagnosis based on metaheuristics and deep learning. International Journal of Imaging Systems and Technology, 31(2), 657–669. [CrossRef]

- Tandel, G. M., Balestrieri, A., Jujaray, T., Khanna, N. N., Saba, L., & Suri, J. S. Multiclass magnetic resonance imaging brain tumor classification using artificial intelligence paradigm. Computers in Biology and Medicine, 2020. 122, 103804. [CrossRef]

- Sahaai, M. B. Brain tumor detection using DNN algorithm. Turkish Journal of Computer and Mathematics Education (TURCOMAT), 2021.12(11), 3338-3345.

- Hashemi, M. (2019). Enlarging smaller images before inputting into convolutional neural network: zero-padding vs. interpolation. Journal of Big Data, 6(1). [CrossRef]

- Miotto, R., Wang, F., Wang, S., Jiang, X., & Dudley, J. T. (2018). Deep learning for healthcare: review, opportunities and challenges. Briefings in Bioinformatics, 19(6), 1236–1246. [CrossRef]

- Gorach, T. Deep convolutional neural networks-a review. International Research Journal of Engineering and Technology (IRJET), 5(07), (2018). 439.

- Ogundokun, R. O., Maskeliunas, R., Misra, S., & Damaševičius, R. Improved CNN Based on Batch Normalization and Adam Optimizer. In Computational Science and Its Applications–ICCSA 2022 Workshops: Malaga, Spain, July 4–7, 2022, Proceedings, Part V (pp. 593-604). Cham: Springer International Publishing. [CrossRef]

- Ismael, S. a. A., Mohammed, A., & Hefny, H. A. An enhanced deep learning approach for brain cancer MRI images classification using residual networks. Artificial Intelligence in Medicine, (2020).102, 101779. [CrossRef]

- Ramdlon, R. H., Kusumaningtyas, E. M., & Karlita, T. Brain Tumor Classification Using MRI Images with K-Nearest Neighbor Method. (2019). [CrossRef]

- Gurusamy, R., & Subramaniam, V. A machine learning approach for MRI brain tumor classification. Computers, Materials and Continua, 53(2), (2017). 91-109. [CrossRef]

- Pohle, R., & Toennies, K. D. Segmentation of medical images using adaptive region growing. In Medical Imaging 2001: Image Processing (Vol. 4322, pp. 1337-1346). SPIE. [CrossRef]

- Dey, N., & Ashour, A. S. Computing in medical image analysis. In Soft computing based medical image analysis (pp. 3-11). Academic Press.

- Hooda, H., Verma, O. P., & Singhal, T. Brain tumor segmentation: A performance analysis using K-Means, Fuzzy C-Means and Region growing algorithm. In 2014 IEEE International Conference on advanced communications, control and computing technologies (pp. 1621-1626). IEEE. (2014, May). [CrossRef]

- Sharif, M., Tanvir, U., Munir, E. U., Khan, M. A., & Yasmin, M. Brain tumor segmentation and classification by improved binomial thresholding and multi-features selection. Journal of ambient intelligence and humanized computing, 1-20. (2018). [CrossRef]

- Shanthi, K. J., & Kumar, M. S. Skull stripping and automatic segmentation of brain MRI using seed growth and threshold techniques. In 2007 International conference on intelligent and advanced systems (pp. 422-426). (2007, November). IEEE. [CrossRef]

- Yao, J. Image Processing in Tumor Imaging, New Techniques in Oncologic Imaging. Zhang, F., & Hancock, ER Zhang.(2010). New Riemannian techniques for directional and tensorial image data. Pattern Recognition, 43(4), 1590-1606. [CrossRef]

- Singh, N. P., Dixit, S., Akshaya, A. S., & Khodanpur, B. I. Gradient Magnitude Based Watershed Segmentation for Brain Tumor Segmentation and Classification. In Advances in intelligent systems and computing (pp. 611–619). (2017). [CrossRef]

- Couprie, M., & Bertrand, G. Topological gray-scale watershed transformation. In Vision Geometry VI (Vol. 3168, pp. 136-146). (1997, October). SPIE. [CrossRef]

- Khan, M. S., Lali, M. I. U., Saba, T., Ishaq, M., Sharif, M., Saba, T., Zahoor, S., & Akram, T. Brain tumor detection and classification: A framework of marker-based watershed algorithm and multilevel priority features selection. Microscopy Research and Technique, 82(6), (2019). 909–922. [CrossRef]

- De Alencar Lotufo, R., Falcão, A. X., & De Assis Zampirolli, F. IFT-Watershed from gray-scale marker.(2003). [CrossRef]

- Dougherty, E. R. An introduction to morphological image processing. In SPIE. (1992). Optical Engineering Press.

- Kaur, D., & Kaur, Y. Various image segmentation techniques: a review. International Journal of Computer Science and Mobile Computing, (2014). 3(5), 809-814.

- Aslam, A., Khan, E., & Beg, M. S. Improved edge detection algorithm for brain tumor segmentation. Procedia Computer Science, (2015). 58, 430-437. [CrossRef]

- Cui, B., Xie, M., & Wang, C. A deep convolutional neural network learning transfer to SVM-based segmentation method for brain tumor. In 2019 IEEE 11th International Conference on Advanced Infocomm Technology (ICAIT) (2019, October). (pp. 1-5). IEEE. [CrossRef]

- Egmont-Petersen, M., de Ridder, D., & Handels, H. Image processing with neural networks—a review. Pattern recognition, (2002). 35(10), 2279-2301. [CrossRef]

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain Tumor Segmentation Using Convolutional Neural Networks in MRI Images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251. [Google Scholar] [CrossRef] [PubMed]

- Ye, N., Yu, H., Chen, Z., Teng, C., Liu, P., Liu, X., Xiong, Y., Lin, X., Li, S., & Li, X. Classification of Gliomas and Germinomas of the Basal Ganglia by Transfer Learning. Frontiers in Oncology, 12 (2022). [CrossRef]

- Biratu, E. S., Schwenker, F., Ayano, Y. M., & Debelee, T. G. A survey of brain tumor segmentation and classification algorithms. Journal of Imaging, (2021). 7(9), 179. [CrossRef]

- Wikipedia contributors. F score. Wikipedia. https://en.wikipedia.org/wiki/F-score (2023).

- Brain Tumor Segmentation (BraTS) Challenge. Available online: http://www.braintumorsegmentation.org/.

- RIDER NEURO MRI - The Cancer Imaging Archive (TCIA) Public Access - Cancer Imaging Archive Wiki. https://wiki.cancerimagingarchive.net/display/Public/RIDER+NEURO+MRI.

- Harvard Medical School Data. Available online: http://www.med.harvard.edu/AANLIB/.

- The Cancer Genome Atlas. TCGA. Available online: https://wiki.cancerimagingarchive.net/display/Public/TCGA-GBM.

- The Cancer Genome Atlas. TCGA-LGG. Available online: https://wiki.cancerimagingarchive.net/display/Public/TCGA-LGG.

- figshare. brain tumor dataset. (2017, April 2). Figshare. https://figshare.com/articles/dataset/brain_tumor_dataset/1512427/5.

- IXI Dataset – Brain Development. https://brain-development.org/ixi-dataset/.

- Gordillo, N., Montseny, E., Sobrevilla, P., A New Fuzzy Approach to Brain Tumor Segmentation, Fuzzy Systems (FUZZ), 2010 IEEE International Conference on, 18-23 July, pp. 1-8. [CrossRef]

- Rajendran and R. Dhanasekaran, A Hybrid Method Based on Fuzzy Clustering and Active Contour Using GGVF for Brain Tumor Segmentation on MRI Images, European Journal of Scientific Research, Vol. 61, No. 2, 2011, pp. 305-313.

- Kishore, K. Reddy, et al, Confidence Guided Enhancing Brain Tumor Segmentation in Multi-Parametric MRI, 9th IEEE International Symposium on Biomedical Imaging, May 2012, pp. 366-369. [CrossRef]

- Almahfud, M.A.; Setyawan, R.; Sari, C.A.; Setiadi, D.R.I.M.; Rachmawanto, E.H. An Effective MRI Brain Image Segmentation using Joint Clustering (K-Means and Fuzzy C-Means). In Proceedings of the 2018 International Seminar on Research of Information Technology and Intelligent Systems (ISRITI), Yogyakarta, Indonesia, 21-22 Nov. 2018; pp. 11–16. [Google Scholar]

- Chen, W.; Qiao, X.; Liu, B.; Qi, X.; Wang, R.; Wang, X. Automatic brain tumor segmentation based on features of separated local square. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017. [Google Scholar] [CrossRef]

- Gupta, N., Mishra S., and Khanna P., Glioma identification from brain MRI using superpixels and FCM clustering. 2018. [CrossRef]

- Razzak, M.I.; Imran, M.; Xu, G. Efficient Brain Tumor Segmentation With Multiscale Two-Pathway-Group Conventional Neural Networks. IEEE J. Biomed. Health Inform. 2019, 23, 1911–1919. [Google Scholar] [CrossRef] [PubMed]

- Myronenko, A. and Hatamizadeh A., Robust Semantic Segmentation of Brain Tumor Regions from 3D MRIs, in Springer eBooks, 2019, pp. 82–89. [CrossRef]

- Karayegen, G. and Aksahin M. F., Brain tumor prediction on MR images with semantic segmentation by using deep learning network and 3D imaging of tumor region, Biomedical Signal Processing and Control, vol. 66, p. 102458, Apr. 2021. [CrossRef]

- Zhang, Y., Dong Z., Wu L., and Wang S., “A hybrid method for MRI brain image classification,” Expert Systems With Applications, vol. 38, no. 8, pp. 10049–10053, Aug. 2011. [CrossRef]

- G. Yang et al., “Automated classification of brain images using wavelet-energy and biogeography-based optimization,” Multimedia Tools and Applications, vol. 75, no. 23, pp. 15601–15617, May 2015. [CrossRef]

- Amin, J., Sharif M., Yasmin M., and Fernandes S. L., “A distinctive approach in brain tumor detection and classification using MRI,” Pattern Recognition Letters, vol. 139, pp. 118–127, Oct. 2017. [CrossRef]

- Tiwari, P., Sachdeva J., Ahuja C. K., and Khandelwal N., “Computer Aided Diagnosis System-A Decision Support System for Clinical Diagnosis of Brain Tumours,” International Journal of Computational Intelligence Systems, vol. 10, no. 1, p. 104, Jan. 2017. [CrossRef]

- Sachdeva J., Kumar V., Gupta I. R., Khandelwal N., and Ahuja C. K., “Segmentation, Feature Extraction, and Multiclass Brain Tumor Classification,” Journal of Digital Imaging, vol. 26, no. 6, pp. 1141–1150, May 2013. [CrossRef]

- Jayachandran and R. Dhanasekaran, “Severity Analysis of Brain Tumor in MRI Images Using Modified Multi-texton Structure Descriptor and Kernel-SVM,” Arabian Journal for Science and Engineering, vol. 39, no. 10, pp. 7073–7086, Aug. 2014. [CrossRef]

- El-Dahshan, E.S.A.; Hosny, T.; Salem, A.B.M. Hybrid intelligent techniques for MRI brain images classification. Dig. Signal Process 2010, 20, 433–441. [Google Scholar] [CrossRef]

- Kang, J.; Ullah, Z.; Gwak, J. MRI-Based Brain Tumor Classification Using Ensemble of Deep Features and Machine Learning Classifiers. Sensors 2021, 21, 2222. [Google Scholar] [CrossRef] [PubMed]

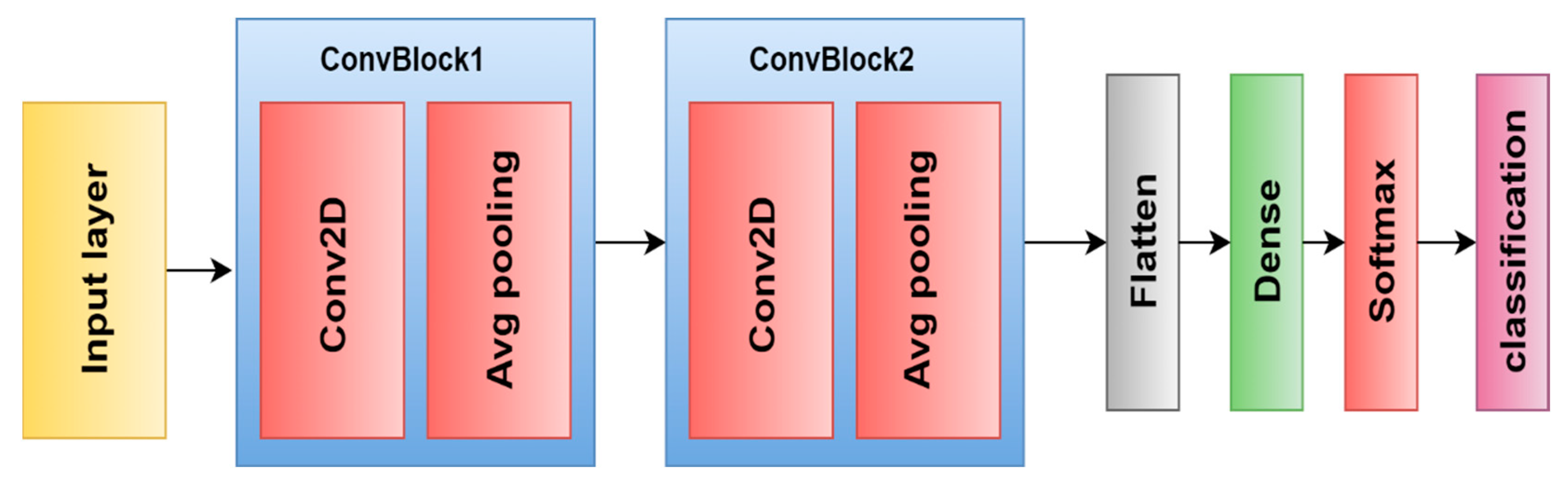

- Díaz-Pernas, F. J., Martínez-Zarzuela, M., Antón-Rodríguez, M., & González-Ortega, D. A Deep Learning Approach for Brain Tumor Classification and Segmentation Using a Multiscale Convolutional Neural Network. Healthcare, 9(2), 153. (2021). [CrossRef]

- Badža, M. M., & Barjaktarović, M. Classification of Brain Tumors from MRI Images Using a Convolutional Neural Network. Applied Sciences, 10(6), (2020). [CrossRef]

- Ertosun M. G. and Rubin D. L., “Automated grading of gliomas using deep learning in digital pathology images: A modular approach with ensemble of convolutional neural networks,” in Proc. AMIA Annu. Symp. Proc., vol. 2015, Nov. 2015, pp. 1899–1908. [PubMed]

- Sultan H. H., Salem N. M., and Al-Atabany W., “Multi-Classification of Brain Tumor Images Using Deep Neural Network,” IEEE Access, vol. 7, pp. 69215–69225, 2019. [CrossRef]

- Khan, H., Jue W., Mushtaq M., and Mushtaq M., “Brain tumor classification in MRI image using convolutional neural network,” Mathematical Biosciences and Engineering, vol. 17, no. 5, pp. 6203–6216, Jan. 2020. [CrossRef]

- Özcan, H.; Emiroğlu, B.G.; Sabuncuoğlu, H.; Özdoğan, S.; Soyer, A.; Saygı, T. A comparative study for glioma classification using deep convolutional neural networks. Math. Biosci. Eng. MBE 2021, 18, 1550–1572. [Google Scholar] [CrossRef] [PubMed]

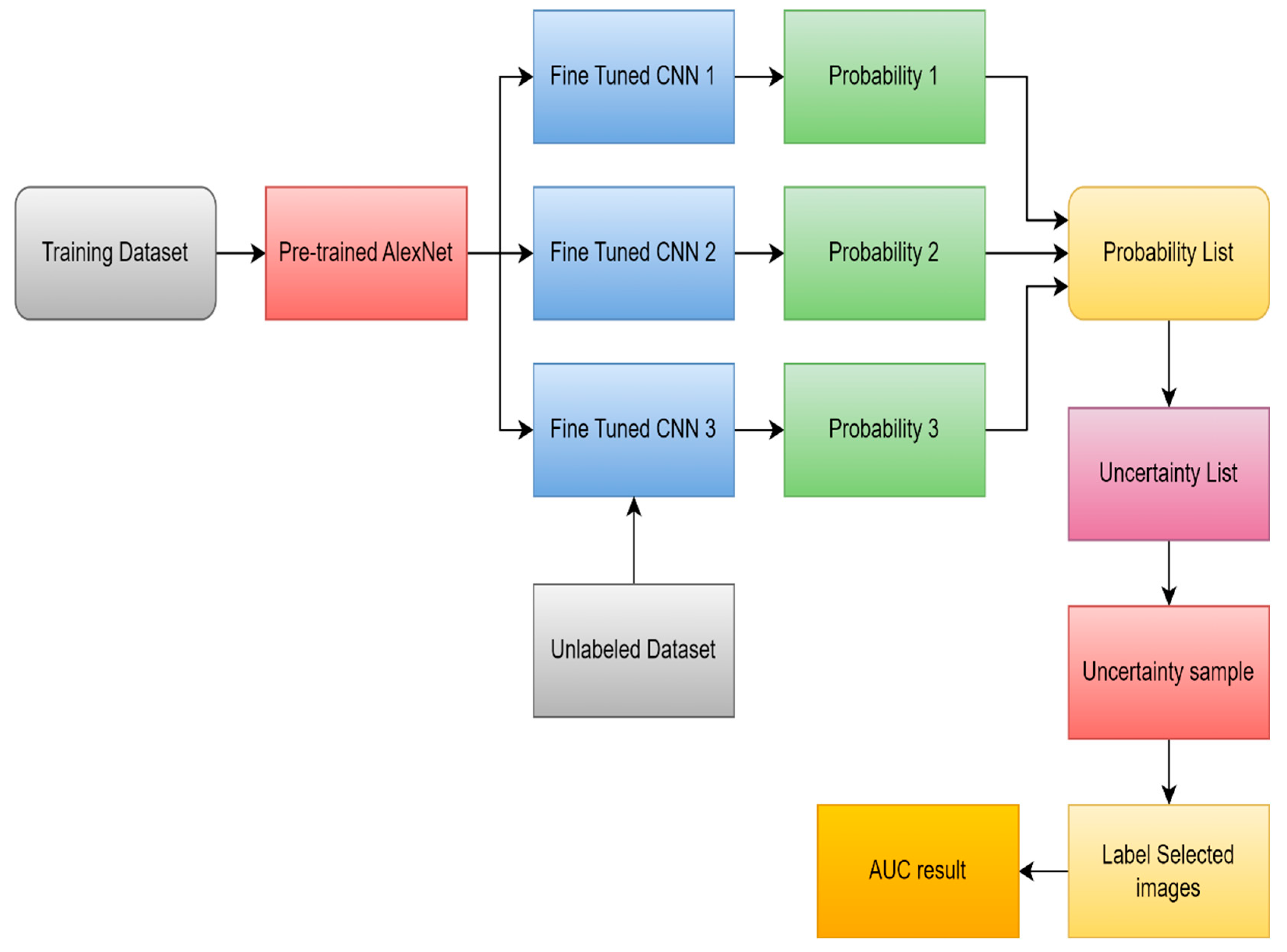

- Ruqian, H., Namdar K., Liu L., and Khalvati F., “A Transfer Learning–Based Active Learning Framework for Brain Tumor Classification,” Frontiers in Artificial Intelligence, vol. 4, May 2021. [CrossRef]

- Yang, Y., Yan, L., Zhang, X., Han, Y., Nan, H., Hu, Y., Hu, B., Yan, S., Zhang, J. Z., Cheng, D., Ge, X., Cui, G., Zhao, D., & Wang, W. Glioma Grading on Conventional MR Images: A Deep Learning Study With Transfer Learning. Frontiers in Neuroscience, 12. (2018). [CrossRef]

- Hamdaoui, H. E., Benfares, A., Boujraf, S., Chaoui, N. E. H., Alami, B., Maaroufi, M., & Qjidaa, H. High precision brain tumor classification model based on deep transfer learning and stacking concepts. Indonesian Journal of Electrical Engineering and Computer Science. 2021, 24(1), 167. [CrossRef]

- Khazaee, Z., Langarizadeh, M., & Ahmadabadi, M. R. N. Developing an Artificial Intelligence Model for Tumor Grading and Classification, Based on MRI Sequences of Human Brain Gliomas. International Journal of Cancer Management, (2022). 15(1). [CrossRef]

- Amou, M. A., Xia, K., Kamhi, S., & Mouhafid, M. A Novel MRI Diagnosis Method for Brain Tumor Classification Based on CNN and Bayesian Optimization. Healthcare, 10(3), 494. (2022). [CrossRef]

- Alanazi, M., Ali, M., Hussain, J., Zafar, A., Mohatram, M., Irfan, M., AlRuwaili, R., Alruwaili, M., Ali, N. T., & Albarrak, A. M. (2022). Brain Tumor/Mass Classification Framework Using Magnetic-Resonance-Imaging-Based Isolated and Developed Transfer Deep-Learning Model. Sensors, 22(1), 372. [CrossRef]

- Rizwan, M., Shabbir, A., Javed, A. R., Shabbr, M., Baker, T., & Al-Jumeily, D. Brain Tumor and Glioma Grade Classification Using Gaussian Convolutional Neural Network. IEEE Access, 2022. 10, 29731–29740. [CrossRef]

- Isunuri, B. V., & Kakarla, J. Three-class brain tumor classification from magnetic resonance images using separable convolution based neural network. Concurrency and Computation: Practice and Experience, 34(1). (2021). [CrossRef]

- Kaur, T., & Gandhi, T. K. Deep convolutional neural networks with transfer learning for automated brain image classification. Journal of Machine Vision and Applications, 31(3). (2020). [CrossRef]

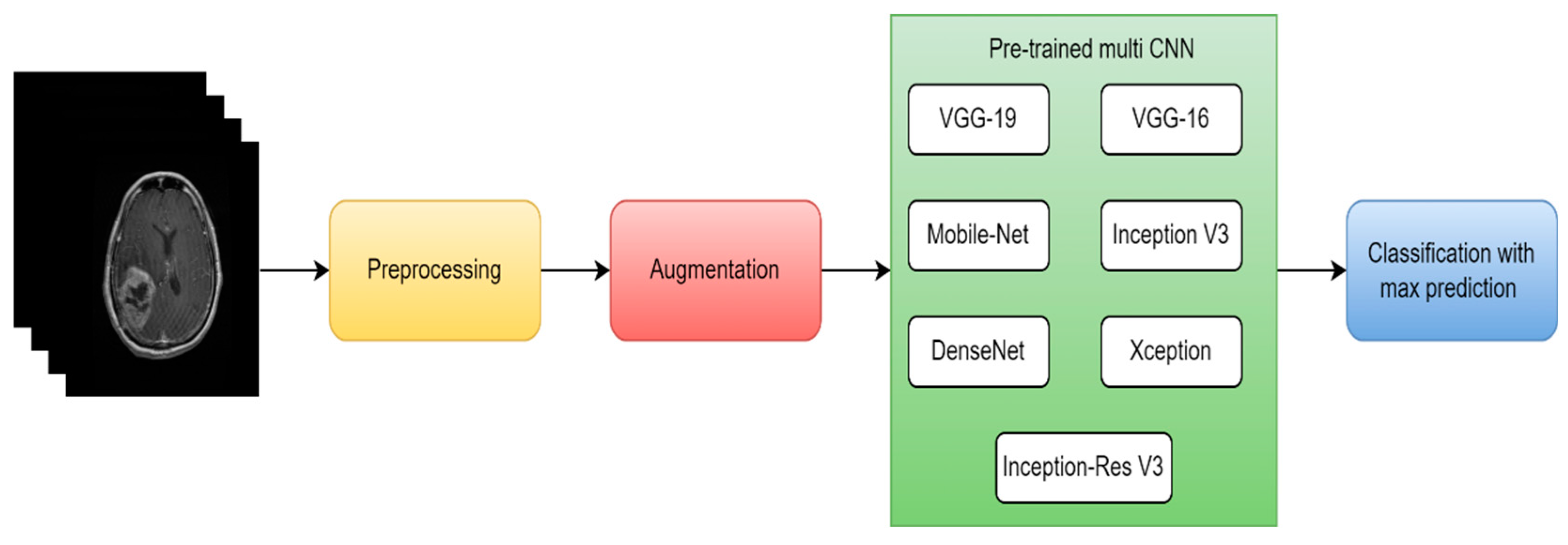

- Rehman, A., Naz, S., Razzak, M. I., Akram, F., & Imran, M. A Deep Learning-Based Framework for Automatic Brain Tumors Classification Using Transfer Learning. Circuits Systems and Signal Processing, (2019). 39(2), 757–775. [CrossRef]

- Ahmmed, R., Swakshar, A. S., Hossain, M. F., & Rafiq, M. A. Classification of tumors and it stages in brain MRI using support vector machine and artificial neural network. (2017). [CrossRef]

- Sathi, K., & Islam, S. Hybrid Feature Extraction Based Brain Tumor Classification using an Artificial Neural Network. (2020). [CrossRef]

- Gurbina, M., Lascu, M., & Lascu, D. Tumor Detection and Classification of MRI Brain Image using Different Wavelet Transforms and Support Vector Machines. (2019). [CrossRef]

- Islam, R., Imran, S., Ashikuzzaman, M., & Khan, M. A. Detection and Classification of Brain Tumor Based on Multilevel Segmentation with Convolutional Neural Network. Journal of Biomedical Science and Engineering, (2020). 13(04), 45–53. [CrossRef]

- Mohsen, H., El-Dahshan, E. A., El-Horbaty, E. M., & Salem, A. M. (2017). Classification using deep learning neural networks for brain tumors. Future Computing and Informatics Journal, 3(1), 68–71. [CrossRef]

- Padma, A., and Sukanesh R. "A wavelet based automatic segmentation of brain tumor in CT images using optimal statistical texture features." International Journal of Image Processing 5.5 (2011): 552-563.

- Padma, A., & Sukanesh, R. Automatic Classification and Segmentation of Brain Tumor in CT Images using Optimal Dominant Gray level Run length Texture Features. International Journal of Advanced Computer Science and Applications, (2011). 2(10). [CrossRef]

- Ruba, T., Tamilselvi, R., Parisabeham, M., & Aparna, N. Accurate Classification and Detection of Brain Cancer Cells in MRI and CT Images using Nano Contrast Agents. Biomedical and Pharmacology Journal, (2020). 13(03), 1227–1237. [CrossRef]

- Woźniak, M., Siłka, J., & Wieczorek, M. W. Deep neural network correlation learning mechanism for CT brain tumor detection. Neural Computing and Applications, (2021). 35(20), 14611–14626. [CrossRef]

- Nanmaran, R., et al. "Investigating the role of image fusion in brain tumor classification models based on machine learning algorithm for personalized medicine." Computational and Mathematical Methods in Medicine 2022 (2022). [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).