Submitted: 28 June 2023

Posted: 04 July 2023

Abstract

Keywords:

1. Introduction

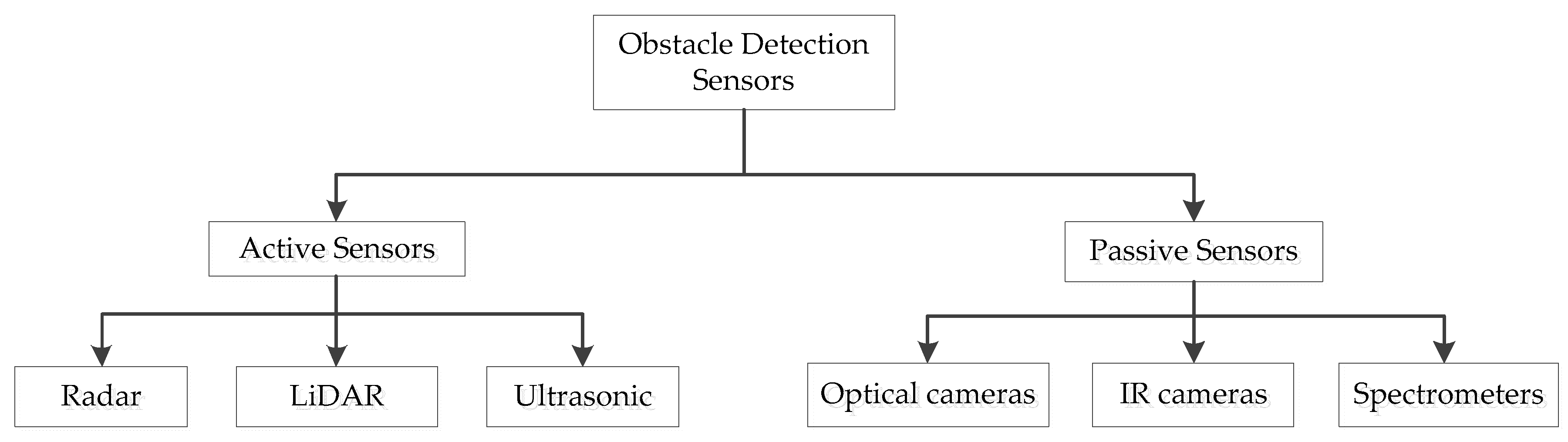

2. Obstacle Detection Sensors

2.1. Active Sensors

2.1.1. Radar

2.1.2. LiDAR
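
A scanning LiDAR reports one range per beam angle, so a basic obstacle check can convert each return to Cartesian coordinates and keep the closest one inside the vehicle's forward corridor. The sketch below is illustrative only. It assumes a planar scan supplied as (angle, range) pairs, with angles in radians measured from the direction of travel. The function `nearest_in_corridor`, the corridor half-width, and the range cutoff are hypothetical and are not part of any specific LiDAR driver.

```python
import math
from typing import Iterable, Optional, Tuple


def nearest_in_corridor(scan: Iterable[Tuple[float, float]], half_width: float,
                        max_range: float) -> Optional[Tuple[float, float]]:
    """Return the (x, y) return with the smallest forward distance inside the corridor.

    Hypothetical helper: `scan` yields (angle_rad, range_m) pairs; x points along the
    direction of travel and y to the side. Returns None if no valid return is inside.
    """
    best: Optional[Tuple[float, float]] = None
    for angle, rng in scan:
        if not (0.0 < rng <= max_range):
            continue  # drop invalid (zero/negative) or out-of-range returns
        x, y = rng * math.cos(angle), rng * math.sin(angle)
        # Keep only points ahead of the vehicle and within +/- half_width laterally
        if x > 0.0 and abs(y) <= half_width:
            if best is None or x < best[0]:
                best = (x, y)
    return best


if __name__ == "__main__":
    scan = [(0.0, 4.0), (math.radians(10), 3.0), (math.pi / 2, 2.0)]
    print(nearest_in_corridor(scan, half_width=1.0, max_range=8.0))
```

The sketch ranks candidates by forward distance rather than Euclidean range, which favours obstacles lying directly in the flight path. Either criterion is a reasonable choice for a simple alert.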

2.1.3. Ultrasonic
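
Ultrasonic rangefinders usually estimate distance from the time of flight of an emitted pulse. Because the echo travels to the obstacle and back, the one-way range is half the round-trip path. The sketch below uses a common linear approximation for the speed of sound in dry air: about 331.3 m/s at 0 °C, rising by roughly 0.606 m/s per °C. The function names and the 20 °C default are illustrative assumptions and are not taken from any particular sensor.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s); assumed linear model."""
    return 331.3 + 0.606 * temp_c


def echo_distance(round_trip_s: float, temp_c: float = 20.0) -> float:
    """Range to the reflecting obstacle (m) from a measured echo round-trip time (s).

    The pulse covers the sensor-to-obstacle distance twice, hence the division by 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_of_sound(temp_c) * round_trip_s / 2.0


if __name__ == "__main__":
    # A 5.8 ms echo at 20 °C corresponds to roughly 1 m
    print(f"{echo_distance(0.0058):.3f} m")
```

If the temperature correction is replaced by a fixed 343 m/s, the estimate shifts by roughly 0.18% for each °C of deviation from 20 °C.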

2.2. Passive Sensors

2.2.1. Optical

2.2.2. Infrared

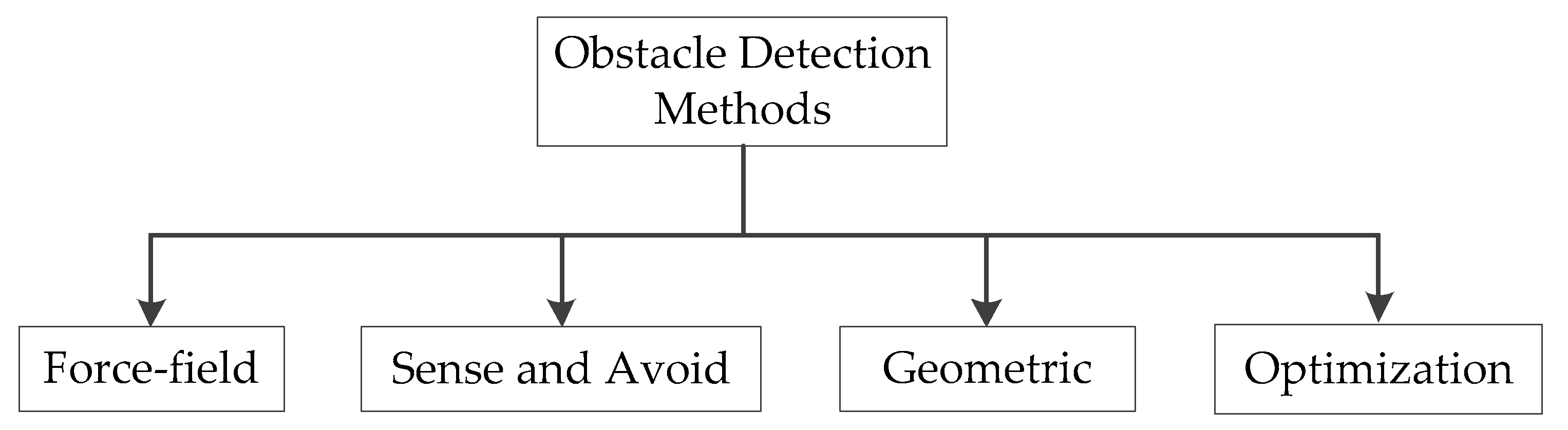

3. Obstacle Detection Methods

3.1. Force-field Method
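
Force-field (artificial potential field) methods, which Khatib introduced for real-time obstacle avoidance, treat the goal as an attractor and each obstacle as a repeller. The vehicle then follows the resulting net force. The sketch below uses one common textbook formulation rather than the specific variants reviewed here:

* a linear attractive term k_att·(goal − p);
* for any obstacle closer than an influence radius ρ0, a repulsive term of magnitude k_rep·(1/ρ − 1/ρ0)/ρ², directed away from the obstacle.

The gains, ρ0, the 2D point-mass model, and the function names are all hypothetical.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def apf_force(pos: Vec2, goal: Vec2, obstacles: List[Vec2],
              k_att: float = 1.0, k_rep: float = 1.0, rho0: float = 2.0) -> Vec2:
    """Net artificial-potential-field force on a 2D point vehicle at `pos`."""
    # Attractive force: negative gradient of 0.5 * k_att * |goal - pos|^2
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        rho = math.hypot(dx, dy)  # distance to the obstacle
        # Repulsion acts only inside the influence radius rho0
        if 0.0 < rho < rho0:
            # Negative gradient of 0.5 * k_rep * (1/rho - 1/rho0)^2
            mag = k_rep * (1.0 / rho - 1.0 / rho0) / rho**2
            fx += mag * dx / rho  # (dx, dy) / rho is the unit vector away from the obstacle
            fy += mag * dy / rho
    return fx, fy


def apf_step(pos: Vec2, goal: Vec2, obstacles: List[Vec2], dt: float = 0.1) -> Vec2:
    """Move a small step along the net force (gradient descent on the potential)."""
    fx, fy = apf_force(pos, goal, obstacles)
    return pos[0] + dt * fx, pos[1] + dt * fy


if __name__ == "__main__":
    pos, goal = (0.0, 0.0), (5.0, 0.0)
    obstacles = [(2.5, 0.3)]
    for _ in range(200):
        pos = apf_step(pos, goal, obstacles)
    print(f"final position: ({pos[0]:.2f}, {pos[1]:.2f})")
```

The classic weakness of this formulation is local minima. If an obstacle lies exactly on the line between the vehicle and the goal, attraction and repulsion can cancel and the vehicle stalls. This is one reason many enhanced potential-field variants exist.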

3.2. Sense and Avoid Method

3.3. Geometric Method
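
Geometric methods generally reason about the relative position and velocity of the UAV and an obstacle. They flag a conflict when the predicted miss distance falls below a safety radius. Collision-cone approaches express the same condition as the relative velocity pointing into a cone around the obstacle. Below is a minimal sketch of the point-of-closest-approach test. It assumes both bodies move in straight lines at constant velocity over the look-ahead horizon. The function names, safety radius, and horizon are hypothetical.

```python
import math
from typing import Sequence, Tuple


def closest_approach(p_uav: Sequence[float], v_uav: Sequence[float],
                     p_obs: Sequence[float], v_obs: Sequence[float]) -> Tuple[float, float]:
    """Return (time of closest approach, miss distance) assuming constant velocities."""
    r = [po - pu for po, pu in zip(p_obs, p_uav)]  # relative position of the obstacle
    v = [vo - vu for vo, vu in zip(v_obs, v_uav)]  # relative velocity of the obstacle
    vv = sum(c * c for c in v)
    if vv == 0.0:
        t_star = 0.0  # no relative motion: separation never changes
    else:
        # Minimise |r + v t| over t >= 0; an approach already in the past is clamped to now
        t_star = max(0.0, -sum(a * b for a, b in zip(r, v)) / vv)
    miss = [a + b * t_star for a, b in zip(r, v)]
    return t_star, math.sqrt(sum(c * c for c in miss))


def in_conflict(p_uav: Sequence[float], v_uav: Sequence[float],
                p_obs: Sequence[float], v_obs: Sequence[float],
                r_safe: float, horizon: float) -> bool:
    """Conflict if the predicted miss distance is below r_safe within the horizon."""
    t_star, d_min = closest_approach(p_uav, v_uav, p_obs, v_obs)
    return d_min < r_safe and t_star <= horizon


if __name__ == "__main__":
    # UAV flying along +x at 10 m/s; static obstacle 100 m ahead and 5 m to the side
    t, d = closest_approach((0, 0, 0), (10, 0, 0), (100, 5, 0), (0, 0, 0))
    print(f"closest approach in {t:.1f} s at {d:.1f} m")  # -> closest approach in 10.0 s at 5.0 m
    print(in_conflict((0, 0, 0), (10, 0, 0), (100, 5, 0), (0, 0, 0),
                      r_safe=10.0, horizon=30.0))  # -> True
```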

3.4. Optimization Method

4. Conclusion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zosimovych, N. Preliminary design of a VTOL unmanned aerial system for remote sensing of landscapes. Aeron Aero Open Access J. 2020, 4(2), 62–67.

- Papa, U.; Ponte, S. Preliminary Design of an Unmanned Aircraft System for Aircraft General Visual Inspection. Electronics 2018, 7, 435.

- Giernacki, W.; Gośliński, J.; Goślińska, J.; Espinoza-Fraire, T.; Rao, J. Mathematical Modeling of the Coaxial Quadrotor Dynamics for Its Attitude and Altitude Control. Energies 2021, 14(5), 1232.

- Gheorghiță, D.; Vîntu, I.; Mirea, L.; Brăescu, C. Quadcopter control system. In Proceedings of the 2015 19th International Conference on System Theory, Control and Computing (ICSTCC), 2015; pp. 421–426.

- Huang, H.-M. Autonomy levels for unmanned systems (ALFUS) framework: safety and application issues. In Proceedings of the 2007 Workshop on Performance Metrics for Intelligent Systems, 2007.

- Zhang, W.; Zelinsky, G.; Samaras, D. Real-time accurate object detection using multiple resolutions. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, 2007.

- Holovatyy, A.; Teslyuk, V.; Lobur, M. VHDL-AMS model of delta-sigma modulator for A/D converter in MEMS interface circuit. In Perspective Technologies and Methods in MEMS Design, MEMSTECH 2015: Proceedings of the 11th International Conference, 2015; pp. 55–57.

- Holovatyy, A.; Lobur, M.; Teslyuk, V. VHDL-AMS model of mechanical elements of MEMS tuning fork gyroscope for the schematic level of computer-aided design. In Perspective Technologies and Methods in MEMS Design: Proceedings of the 4th International Conference of Young Scientists, MEMSTECH 2008, 2008; pp. 138–140.

- Zhuge, C.; Cai, Y.; Tang, Z. A novel dynamic obstacle avoidance algorithm based on collision time histogram. Chinese Journal of Electronics 2017, 6(3), 522–529.

- Puchalski, R.; Giernacki, W. UAV Fault Detection Methods, State-of-the-Art. Drones 2022, 6(11), 330.

- Bondyra, A.; Kołodziejczak, M.; Kulikowski, R.; Giernacki, W. An Acoustic Fault Detection and Isolation System for Multirotor UAV. Energies 2022, 15(11), 3955.

- Chao, H.; Cao, Y.; Chen, Y. Autopilots for small fixed-wing unmanned air vehicles: A survey. In Proceedings of the 2007 International Conference on Mechatronics and Automation, 2007.

- Vijayavargiya, A.; Sharma, A.; Anirudh; Kumar, A.; Kumar, A.; Yadav, A.; Sharma, A.; Jangid, A.; Dubey, A. Unmanned aerial vehicle. Imperial J. Interdiscip. 2016, 2(5).

- Zhuge, C.; Cai, Y.; Tang, Z. A novel dynamic obstacle avoidance algorithm based on collision time histogram. Chinese Journal of Electronics 2017, 6(3), 522–529.

- Shim, D.; Chung, H.; Kim, H.J.; Sastry, S. Autonomous exploration in unknown urban environments for unmanned aerial vehicles. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, 2005.

- Mikołajczyk, T.; Mikołajewski, D.; Kłodowski, A.; Łukaszewicz, A.; Mikołajewska, E.; Paczkowski, T.; Macko, M.; Skornia, M. Energy Sources of Mobile Robot Power Systems: A Systematic Review and Comparison of Efficiency. Applied Sciences 2023, 13(13), 7547.

- Zhang, A.; Zhou, D.; Yang, M.; Yang, P. Finite-time formation control for unmanned aerial vehicle swarm system with time-delay and input saturation. IEEE Access 2019, 7, 5853–5864.

- Yasin, J.N.; Mohamed, S.A.S.; Haghbayan, M.-H.; Heikkonen, J.; Tenhunen, H.; Plosila, J. Unmanned aerial vehicles (UAVs): Collision avoidance systems and approaches. IEEE Access 2020, 8, 105139–105155.

- Mircheski, I.; Łukaszewicz, A.; Szczebiot, R. Injection process design for manufacturing of bicycle plastic bottle holder using CAx tools. Procedia Manuf. 2019, 32, 68–73.

- Kiefer, R.J.; Grimm, D.K.; Litkouhi, B.B.; Sadekar, V. Collision avoidance system. U.S. Patent 7 245 231, 2007. 428.

- Foka, A.; Trahanias, P. Real-time hierarchical POMDPs for autonomous robot navigation. Robotics and Autonomous Systems 2007, 55(7), 561–571.

- Ladd, G.; Bland, G. Non-military applications for small UAS platforms. J. Dyn. Syst. Meas. Control 2007, 129, 571–598.

- Ladd, G.; Bland, G. Non-military applications for small UAS platforms. In Proceedings of the AIAA Infotech@Aerospace Conference, 2009.

- He, L.; Bai, P.; Liang, X.; Zhang, J.; Wang, W. Feedback formation control of UAV swarm with multiple implicit leaders. Aerospace Science and Technology 2018, 72, 327–334.

- Esfahlani, S.S. Mixed reality and remote sensing application of unmanned aerial vehicle in fire and smoke detection. Journal of Industrial Information Integration 2019, 15, 42–49.

- Valavanis, K.P. Unmanned Aircraft Systems: The Current State-of-the-Art; Springer: Cham, Switzerland, 2016.

- Wargo, C.A.; Church, G.C.; Glaneueski, J.; Strout, M. Unmanned Aircraft Systems (UAS) research and future analysis. In Proceedings of the 2014 IEEE Aerospace Conference, 2014.

- Horla, D.; Giernacki, W.; Báča, T.; et al. AL-TUNE: A Family of Methods to Effectively Tune UAV Controllers in In-flight Conditions. J. Intell. Robot. Syst. 2021, 103, 5.

- Wang, X.; Yadav, V.; Balakrishnan, S.N. Cooperative UAV formation flying with obstacle/collision avoidance. IEEE Transactions on Control Systems Technology 2007, 15(4), 672–679.

- Łukaszewicz, A.; Panas, K.; Szczebiot, R. Design process of technological line to vegetables packaging using CAx tools. In Proceedings of the 17th International Scientific Conference on Engineering for Rural Development, Jelgava, Latvia, 23–25 May 2018; pp. 871–87.

- Łukaszewicz, A.; Szafran, K.; Jóźwik, J. CAx techniques used in UAV design process. In Proceedings of the 2020 IEEE 7th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Pisa, Italy, 22–24 June 2020; pp. 95–98.

- Everett, C.H.R. Survey of collision avoidance and ranging sensors for mobile robots. Robotics and Autonomous Systems 1989, 5(1), 5–67.

- Kamat, S.U.; Rasane, K. A Survey on Autonomous Navigation Techniques. Robotics and Autonomous Systems 1989, 5(1), 5–67.

- Active Sensors. ESA. Available online: https://www.esa.int/Education/7.ActiveSensors (accessed on 15 December 2022).

- What is Active Sensor? Definition. Available online: https://internetofthingsagenda.techtarget.com/defisensor (accessed on 13 March 2020).

- Blanc, C.; Aufrère, R.; Malaterre, L.; Gallice, J.; Alizon, J. Obstacle detection and tracking by millimeter wave RADAR. IFAC Proceedings Volumes 2004, 37(8), 322–327.

- Korn, B.; Edinger, C. UAS in civil airspace: Demonstrating “sense and avoid” capabilities in flight trials. In Proceedings of the 2008 IEEE/AIAA 27th Digital Avionics Systems Conference, 2008.

- Owen, M.P.; Duffy, S.M.; Edwards, M.W.M. Unmanned aircraft sense and avoid radar: Surrogate flight testing performance evaluation. In Proceedings of the 2014 IEEE Radar Conference, 2014.

- Quist, E.B.; Beard, R.W. Radar odometry on fixed-wing small unmanned aircraft. IEEE Transactions on Aerospace and Electronic Systems 2016, 52(1), 396–410.

- Kwag, Y.K.; Chung, C.H. UAV based collision avoidance radar sensor. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, 2007.

- Hugler, P.; Roos, F.; Schartel, M.; Geiger, M.; Waldschmidt, C. Radar taking off: New capabilities for UAVs. IEEE Microwave Magazine 2018, 19(7), 43–53.

- Nijsure, Y.A.; Kaddoum, G.; Khaddaj Mallat, N.; Gagnon, G.; Gagnon, F. Cognitive chaotic UWB-MIMO detect-avoid radar for autonomous UAV navigation. IEEE Transactions on Intelligent Transportation Systems 2016, 17(11), 3121–3131.

- Mohamed, S.A.S.; Haghbayan, M.-H.; Westerlund, T.; Heikkonen, J.; Tenhunen, H.; Plosila, J. A survey on odometry for autonomous navigation systems. IEEE Access 2019, 7, 97466–97486.

- Nashashibi, F.; Bargeton, A. Laser-based vehicles tracking and classification using occlusion reasoning and confidence estimation. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, 2008.

- Nüchter, A.; Lingemann, K.; Hertzberg, J.; Surmann, H. 6D SLAM-3D mapping outdoor environments. Journal of Field Robotics 2007, 24(8–9), 699–722.

- Zhang, J.; Singh, S. Visual-lidar odometry and mapping: low-drift, robust, and fast. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), 2015.

- Tahir, A.; Böling, J.; Haghbayan, M.-H.; Toivonen, H.T.; Plosila, J. Swarms of unmanned aerial vehicles: A survey. Journal of Industrial Information Integration 2019, 16, 100106.

- Armingol, J.M.; Alfonso, J.; Aliane, N.; Clavijo, M.; Campos-Cordobés, S.; de la Escalera, A.; del Ser, J.; Fernández, J.; García, F.; Jiménez, F.; López, A.M.; Mata, M.; Martín, D.; Menéndez, J.M.; Sánchez-Cubillo, J.; Vázquez, D.; Villalonga, G. Environmental perception for intelligent vehicles. Jiménez, F., Ed.; Butterworth-Heinemann: Oxford, U.K., 2018, 2, 23–101.

- Wang, C.-C.R.; Lien, J.-J.J. Automatic vehicle detection using local features: A statistical approach. IEEE Transactions on Intelligent Transportation Systems 2008, 9(1), 83–96.

- Mizumachi, M.; Kaminuma, A.; Ono, N.; Ando, S. Robust sensing of approaching vehicles relying on acoustic cues. Sensors 2014, 14(6), 9546–9561.

- Kim, J.; Hong, S.; Baek, J.; Kim, E.; Lee, H. Autonomous vehicle detection system using visible and infrared camera. In Proceedings of the 12th International Conference on Control, Automation and Systems, 2012; pp. 630–634.

- Kota, F.; Zsedrovits, T.; Nagy, Z. Sense-and-avoid system development on an FPGA. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), 2019.

- Mcfadyen, A.; Durand-Petiteville, A.; Mejias, L. Decision strategies for automated visual collision avoidance. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), 2014.

- Kim, J.; Hong, S.; Baek, J.; Kim, E.; Lee, H. Autonomous vehicle detection system using visible and infrared camera. In Proceedings of the 12th International Conference on Control, Automation and Systems, 2012; pp. 630–634.

- Saha, S.; Natraj, A.; Waharte, S. A real-time monocular vision-based frontal obstacle detection and avoidance for low cost UAVs in GPS denied environment. In Proceedings of the 2014 IEEE International Conference on Aerospace Electronics and Remote Sensing Technology, 2014.

- Mejias, L.; McNamara, S.; Lai, J.; Ford, J. Vision-based detection and tracking of aerial targets for UAV collision avoidance. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2010.

- Mohamed, S.A.S.; Haghbayan, M.-H.; Heikkonen, J.; Tenhunen, H.; Plosila, J. Towards real-time edge detection for event cameras based on lifetime and dynamic slicing. In Advances in Intelligent Systems and Computing; Springer International Publishing, 2020.

- Lee, T.-J.; Yi, D.-H.; Cho, D.-I.D. A monocular vision sensor-based obstacle detection algorithm for autonomous robots. Sensors 2016, 16(3), 311.

- Haque, A.U.; Nejadpak, A. Obstacle avoidance using stereo camera. 2017.

- Hartmann, W.; Tilch, S.; Eisenbeiss, H.; Schindler, K. Determination of the UAV position by automatic processing of thermal images. ISPRS International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 2012, 111–116.

- Nonlinear geometric and differential geometric guidance of UAVs for reactive collision avoidance. (n.d.).

- Khatib, O. Real-time obstacle avoidance for manipulators and mobile robots. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation, 1985.

- Holenstein, A.A.; Badreddin, E. Collision avoidance in a behavior based mobile robot design. In Proceedings of the IEEE International Conference on Robotics and Automation, 1991; pp. 898–903.

- Oroko, J.; Nyakoe, G. Obstacle avoidance and path planning schemes for autonomous navigation of a mobile robot: A review. In Proceedings of the Sustainable Research and Innovation Conference, 2014; pp. 314–318.

- Enhanced Potential Field Based Collision Avoidance for Unmanned Aerial Vehicles in a Dynamic Environment. (n.d.).

- Grodzki, W.; Łukaszewicz, A. Design and manufacture of unmanned aerial vehicles (UAV) wing structure using composite materials. Mater. Werkst. 2015, 46, 269–278.

- Sun, J.; Tang, J.; Lao, S. Collision avoidance for cooperative UAVs with optimized artificial potential field algorithm. IEEE Access 2017, 5, 18382–18390.

- Wolf, M.T.; Burdick, J.W. Artificial potential functions for highway driving with collision avoidance. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, 2008.

- Kim, C.Y.; Kim, Y.H.; Ra, W.-S. Modified 1D virtual force field approach to moving obstacle avoidance for autonomous ground vehicles. Journal of Electrical Engineering and Technology 2019, 14(3), 1367–1374.

- Yasin, J.N.; Mohamed, S.A.S.; Haghbayan, M.-H.; Heikkonen, J.; Tenhunen, H.; Plosila, J.M. Navigation of autonomous swarm of drones using translational coordinates. In Advances in Practical Applications of Agents, Multi-Agent Systems, and Trustworthiness; Springer International Publishing, 2020; pp. 353–362.

- Yu, X.; Zhang, Y. Sense and avoid technologies with applications to unmanned aircraft systems: Review and prospects. Progress in Aerospace Sciences 2015, 74, 152–166.

- Wang, M.; Voos, H.; Su, D. Robust online obstacle detection and tracking for collision-free navigation of multirotor UAVs in complex environments. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), 2018.

- Sharma, S.U.; Shah, D.J. A practical animal detection and collision avoidance system using computer vision technique. IEEE Access 2017, 5, 347–358.

- De Simone, M.; Rivera, Z.; Guida, D. Obstacle avoidance system for unmanned ground vehicles by using ultrasonic sensors. Machines 2018, 6(2), 18.

- Yu, Y.; Tingting, W.; Long, C.; Weiwei, Z. Stereo vision based obstacle avoidance strategy for quadcopter UAV. In Proceedings of the 2018 Chinese Control And Decision Conference (CCDC), 2018.

- Bilimoria, K. A geometric optimization approach to aircraft conflict resolution. In Proceedings of the 18th Applied Aerodynamics Conference, 2000.

- Goss, J.; Rajvanshi, R.; Subbarao, K. Aircraft conflict detection and resolution using mixed geometric and collision cone approaches. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, 2004.

- Seo, J.; Kim, Y.; Kim, S.; Tsourdos, A. Collision avoidance strategies for unmanned aerial vehicles in formation flight. IEEE Transactions on Aerospace and Electronic Systems 2017, 53(6), 2718–2734.

- Lin, Z.; Castano, L.; Mortimer, E.; Xu, H. Fast 3D collision avoidance algorithm for fixed wing UAS. Journal of Intelligent & Robotic Systems 2020, 97(3–4), 577–604.

- Ha, Bui, Hong. Nonlinear control for autonomous trajectory tracking while considering collision avoidance of UAVs based on geometric relations. Energies 2019, 12(8), 1551.

- Pérez-Carabaza, S.; Scherer, J.; Rinner, B.; López-Orozco, J.A.; Besada-Portas, E. UAV trajectory optimization for Minimum Time Search with communication constraints and collision avoidance. Engineering Applications of Artificial Intelligence 2019, 85, 357–371.

- Boivin, E.; Desbiens, A.; Gagnon, E. UAV collision avoidance using cooperative predictive control. In Proceedings of the 2008 16th Mediterranean Conference on Control and Automation, 2008.

- Biswas, S.; Anavatti, S.G.; Garratt, M.A. A particle swarm optimization based path planning method for autonomous systems in unknown terrain. In Proceedings of the 2019 IEEE International Conference on Industry 4.0, Artificial Intelligence, and Communications Technology (IAICT), 2019.

- van den Berg, J.; Wilkie, D.; Guy, S.J.; Niethammer, M.; Manocha, D. LQG-obstacles: Feedback control with collision avoidance for mobile robots with motion and sensing uncertainty. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, 2012.

- Zhu, H.; Alonso-Mora, J. Chance-constrained collision avoidance for MAVs in dynamic environments. IEEE Robotics and Automation Letters 2019, 4(2), 776–783.

| Sensor | Sensor Size | Power Required | Accuracy | Range | Weather Condition | Light Sensitivity | Cost |
|---|---|---|---|---|---|---|---|
| Radar | Large | High | High | Long | Not Affected | No | High |
| LiDAR | Small | Low | Medium | Medium | Affected | No | Medium |
| Ultrasonic | Small | Low | Low | Short | Slightly Affected | No | Low |

| Criterion | Geometric [78,79] | Geometric [80] | Geometric [84] | Sense and Avoid [72] | Sense and Avoid [74] | Force Field [69] | Force Field [65] | Optimization [85] |
|---|---|---|---|---|---|---|---|---|
| Multiple UAV Compatibility | / | / | / | / | / | / | O | / |
| 3D Compatibility | / | / | / | / | / | O | O | / |
| Communication | O | / | / | / | / | O | O | / |
| Alternate Route Generation | / | / | / | / | O | / | / | / |
| Real-time Detection | / | / | / | / | / | / | / | / |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).