Submitted: 07 July 2023

Posted: 10 July 2023


Abstract

Keywords:

1. Introduction

2. Methodology

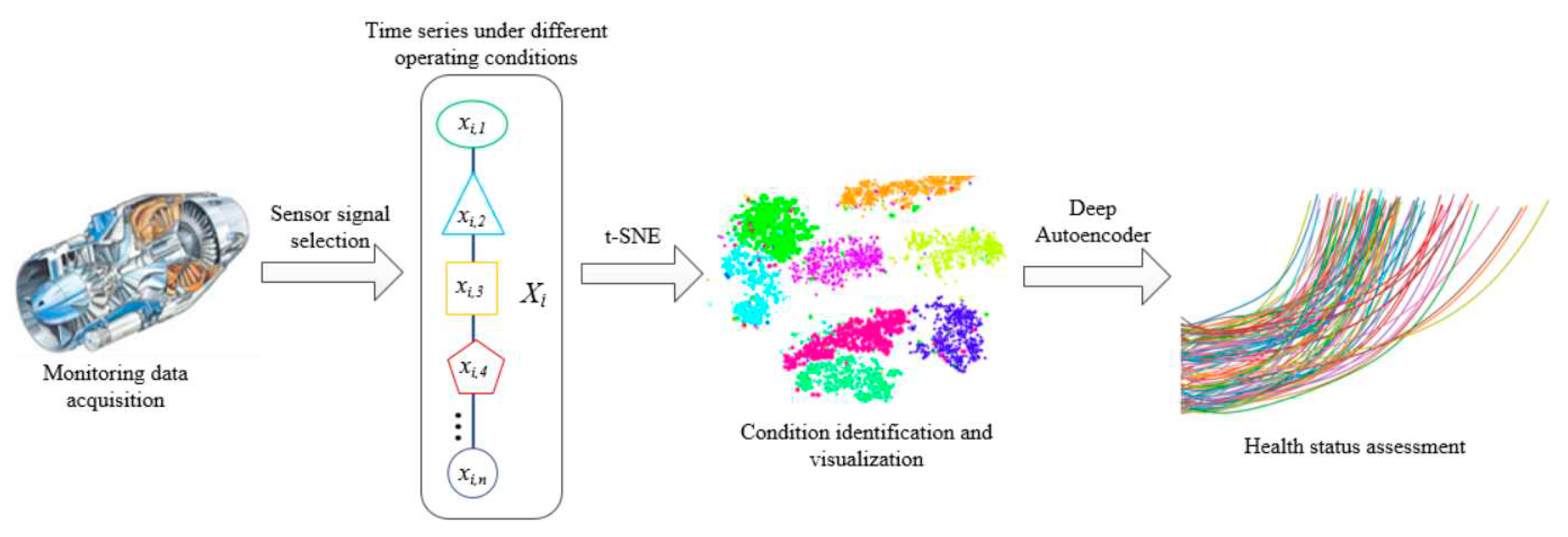

2.1. Overall framework

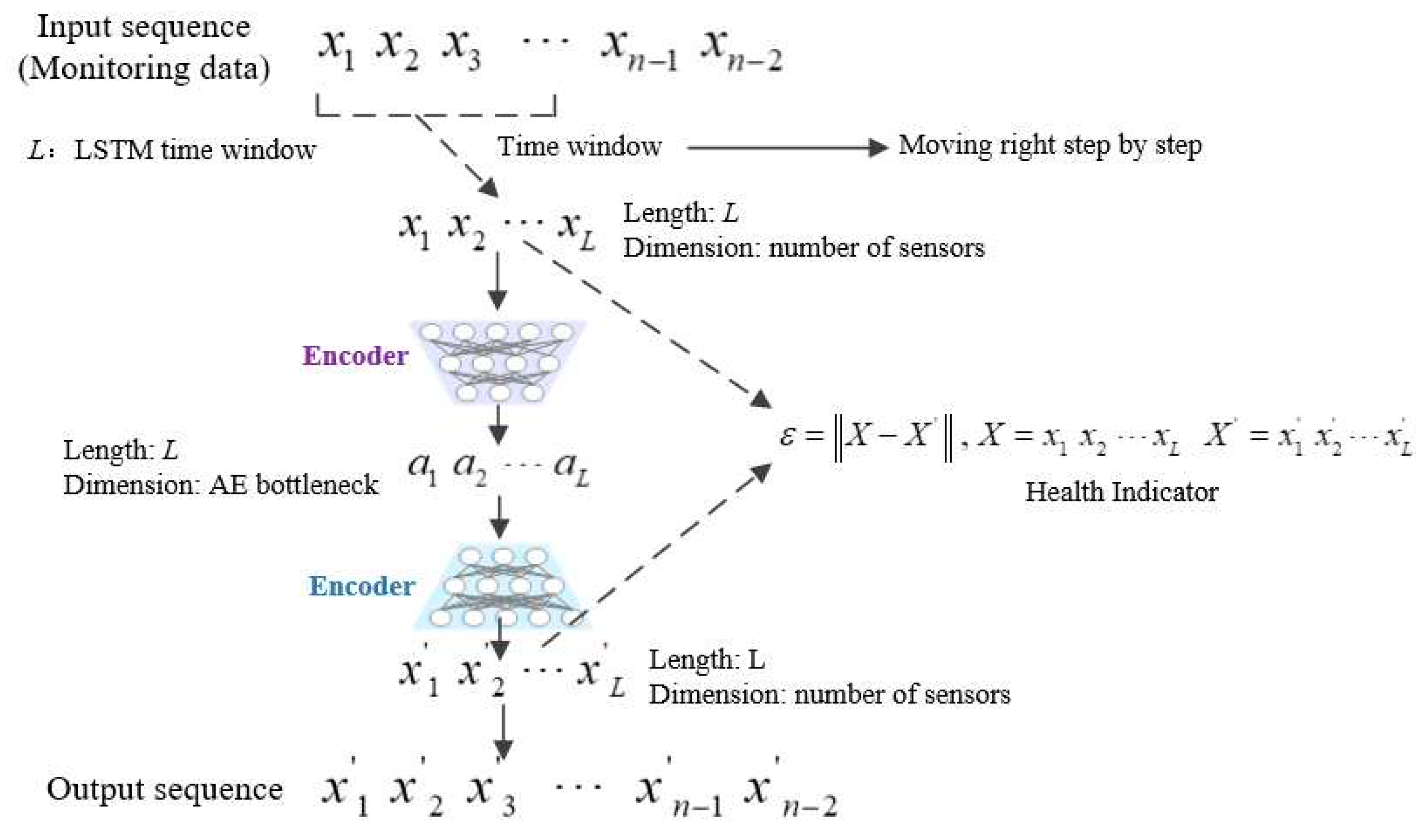

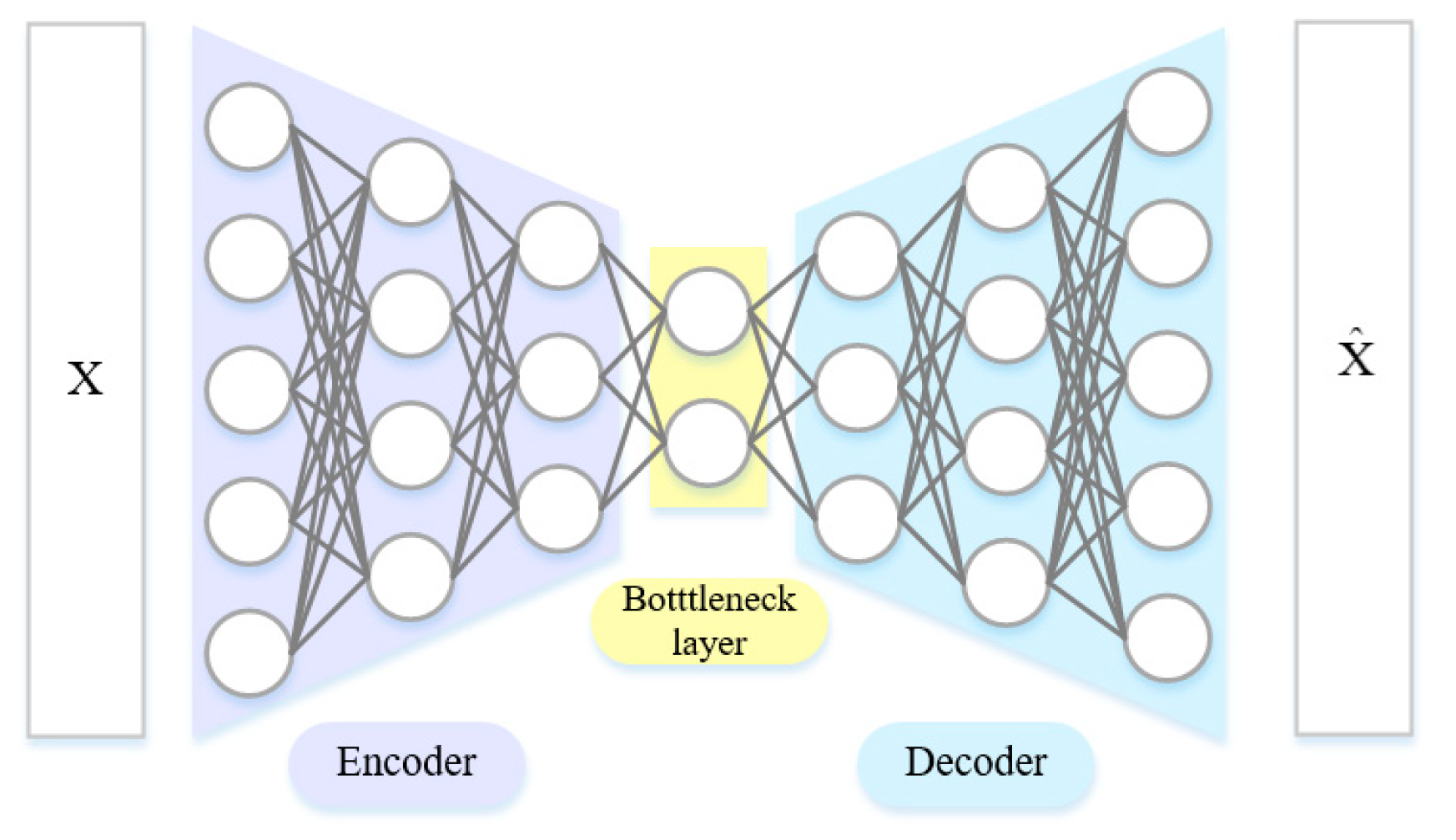

2.2. Deep autoencoder

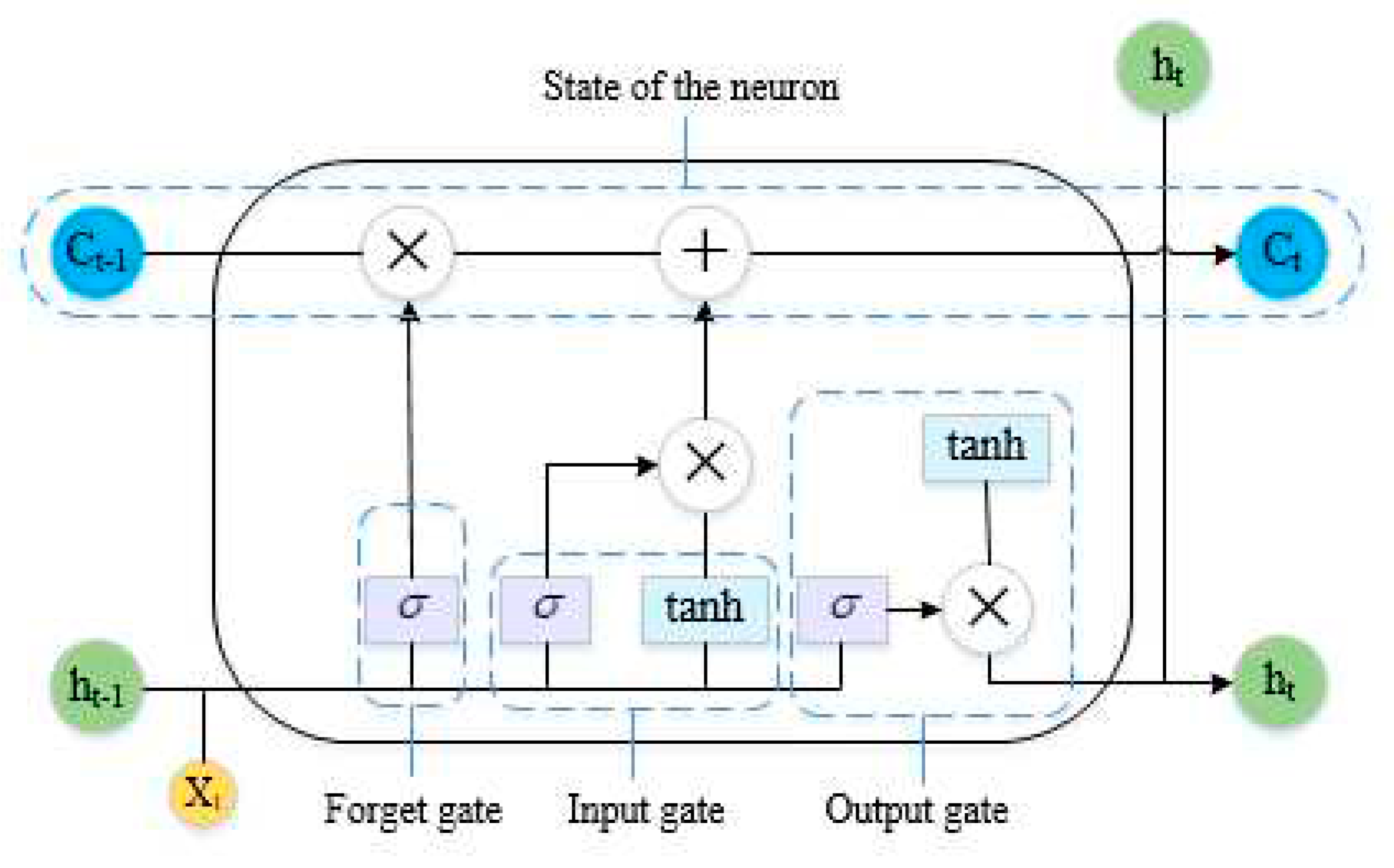

2.3. Long Short-Term Memory Neural Network

2.4. Sensor Selection based on mutual information

2.5. Operating condition identification based on t-SNE

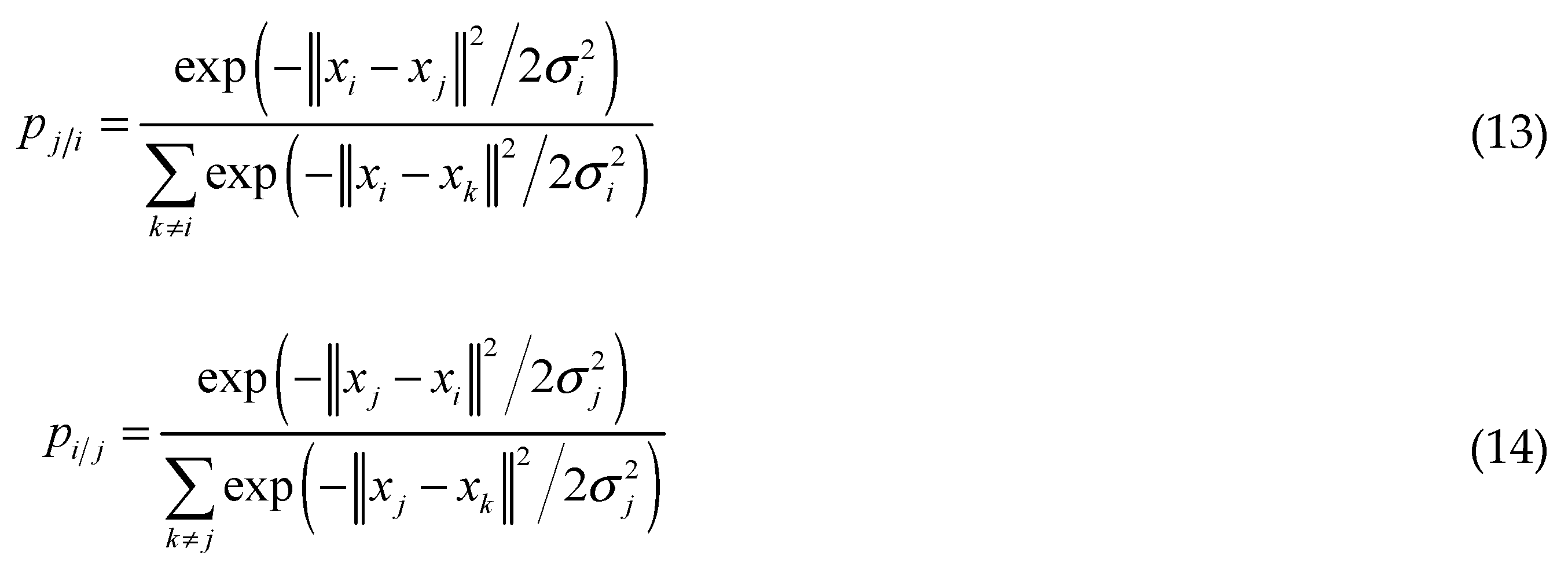

where k is the number of nearest neighbors of each point, and σi and σj are the Gaussian variances of xi and xj, respectively. The symmetric joint probability distribution of the data points in the original space is then:

where N is the size of the data. The variance σ in formulas 13 and 14 is found by a binary search over the perplexity Perp(Pi), a manually defined parameter. The perplexity acts as a smooth measure of the effective number of neighbors of each point, and it is defined as follows:

where H(Pi) is the Shannon entropy of Pi, defined as follows:

where k and l denote any two different points in the low-dimensional space, whose similarity is defined by a t-distribution with one degree of freedom. This heavy-tailed distribution makes points within the same cluster aggregate more tightly and pushes points in different clusters farther apart.

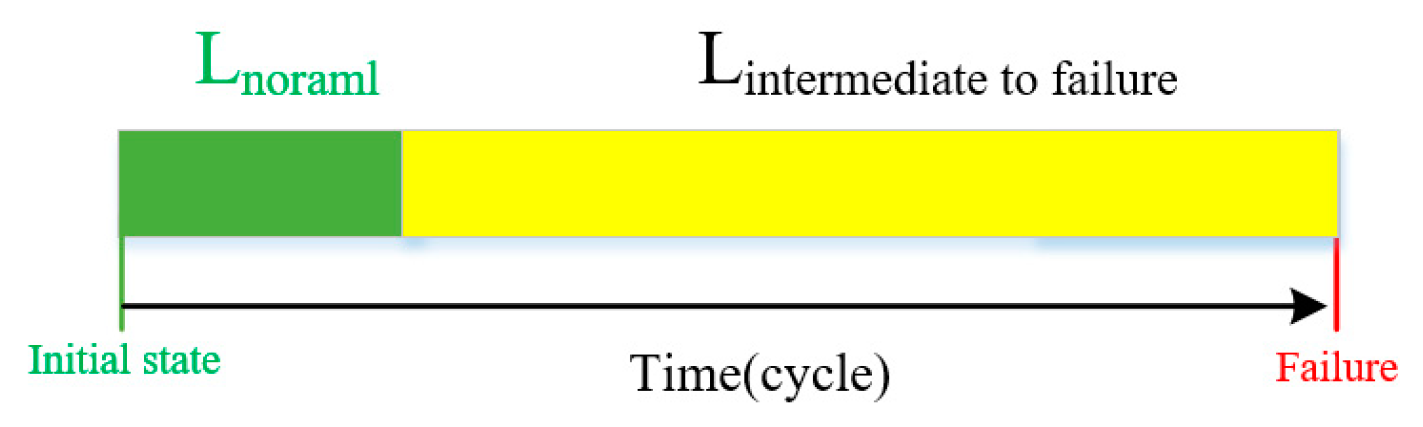
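The calibration of σi by perplexity and the two similarity distributions can be sketched in plain Python. This is a minimal illustration of the standard exact t-SNE formulation, assuming the usual Gaussian conditional probabilities p(j|i) ∝ exp(-‖xi - xj‖² / 2σi²), perplexity Perp(Pi) = 2^H(Pi) with H measured in bits, the symmetrized joint probability pij = (p(j|i) + p(i|j)) / 2N, and Student-t similarities with one degree of freedom in the embedding. It computes over all points rather than only the k nearest neighbors, and it omits the gradient-descent optimization of the embedding. All function names are illustrative and are not the authors' code; in practice a library implementation such as scikit-learn's `sklearn.manifold.TSNE` (with its `perplexity` parameter) would normally be used.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def conditional_probs(dist2_row, i, beta):
    """p(j|i) for a fixed point i, with precision beta = 1 / (2 * sigma_i**2)."""
    # Shift by the smallest off-diagonal distance so the largest weight is exp(0) = 1;
    # the shift cancels during normalization and prevents a 0/0 underflow.
    d_min = min(d for j, d in enumerate(dist2_row) if j != i)
    weights = [0.0 if j == i else math.exp(-beta * (d - d_min))
               for j, d in enumerate(dist2_row)]
    total = sum(weights)
    return [w / total for w in weights]


def entropy_bits(p):
    """Shannon entropy H(P_i) in bits."""
    return -sum(pj * math.log2(pj) for pj in p if pj > 0)


def calibrate_row(dist2_row, i, perplexity, tol=1e-5, max_iter=100):
    """Binary search on beta until 2**H(P_i) matches the target perplexity."""
    lo, hi, beta = 0.0, math.inf, 1.0
    for _ in range(max_iter):
        p = conditional_probs(dist2_row, i, beta)
        perp = 2.0 ** entropy_bits(p)
        if abs(perp - perplexity) < tol:
            break
        if perp > perplexity:   # too spread out: raise beta (shrink sigma_i)
            lo = beta
            beta = beta * 2.0 if hi == math.inf else (beta + hi) / 2.0
        else:                   # too peaked: lower beta (widen sigma_i)
            hi = beta
            beta = (beta + lo) / 2.0
    return p


def joint_p(X, perplexity):
    """Symmetric joint probabilities p_ij = (p(j|i) + p(i|j)) / (2N)."""
    n = len(X)
    dist2 = [[sq_dist(X[i], X[j]) for j in range(n)] for i in range(n)]
    cond = [calibrate_row(dist2[i], i, perplexity) for i in range(n)]
    return [[(cond[i][j] + cond[j][i]) / (2 * n) for j in range(n)] for i in range(n)]


def joint_q(Y):
    """Low-dimensional similarities q_kl from a Student-t kernel with 1 degree of freedom."""
    n = len(Y)
    kernel = [[0.0 if k == l else 1.0 / (1.0 + sq_dist(Y[k], Y[l])) for l in range(n)]
              for k in range(n)]
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]


def kl_divergence(P, Q):
    """Standard t-SNE cost KL(P || Q), summed over pairs with p_ij > 0."""
    return sum(p * math.log(p / q)
               for prow, qrow in zip(P, Q) for p, q in zip(prow, qrow) if p > 0)
```

Because each row p(·|i) sums to one, the symmetrized matrix sums to one over all N² entries, which is why the joint probability is divided by 2N. The heavy tail of `joint_q` is what lets moderately distant points in the original space be placed far apart in the embedding.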

2.6. Reconstruction error as health indicator
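The section title states that the autoencoder's reconstruction error serves as the health indicator (HI), and the hyperparameter table lists MAE as the training loss. A minimal sketch, assuming that each HI value is the MAE between an input window and its reconstruction, and that windows are cut with stride 1 using the LSTM time step as the window length (the LSTM-AE rows of the comparison table coincide with the 20-cycle rows of the time-step table). The callable `reconstruct` is a hypothetical stand-in for the trained LSTM autoencoder, and all function names are illustrative.

```python
from statistics import mean


def sliding_windows(series, time_step):
    """Overlapping windows of `time_step` consecutive cycles (stride 1, an assumption)."""
    return [series[t:t + time_step] for t in range(len(series) - time_step + 1)]


def window_mae(window, reconstruction):
    """Mean absolute error over every cycle and sensor of one window."""
    return mean(abs(x - r)
                for xs, rs in zip(window, reconstruction)
                for x, r in zip(xs, rs))


def health_indicator(series, reconstruct, time_step=20):
    """One HI value per window: the reconstruction error of the autoencoder.

    `reconstruct` is a hypothetical callable standing in for the trained
    LSTM autoencoder; it must return a window with the same shape as its input.
    """
    return [window_mae(w, reconstruct(w)) for w in sliding_windows(series, time_step)]
```

Under the usual assumption that the autoencoder has learned the engine's early-life (healthy) behavior, the reconstruction error, and therefore the HI, is expected to grow as the engine degrades; in a full pipeline each row of `series` would hold the selected, preprocessed sensor readings for one cycle.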

3. Experiments

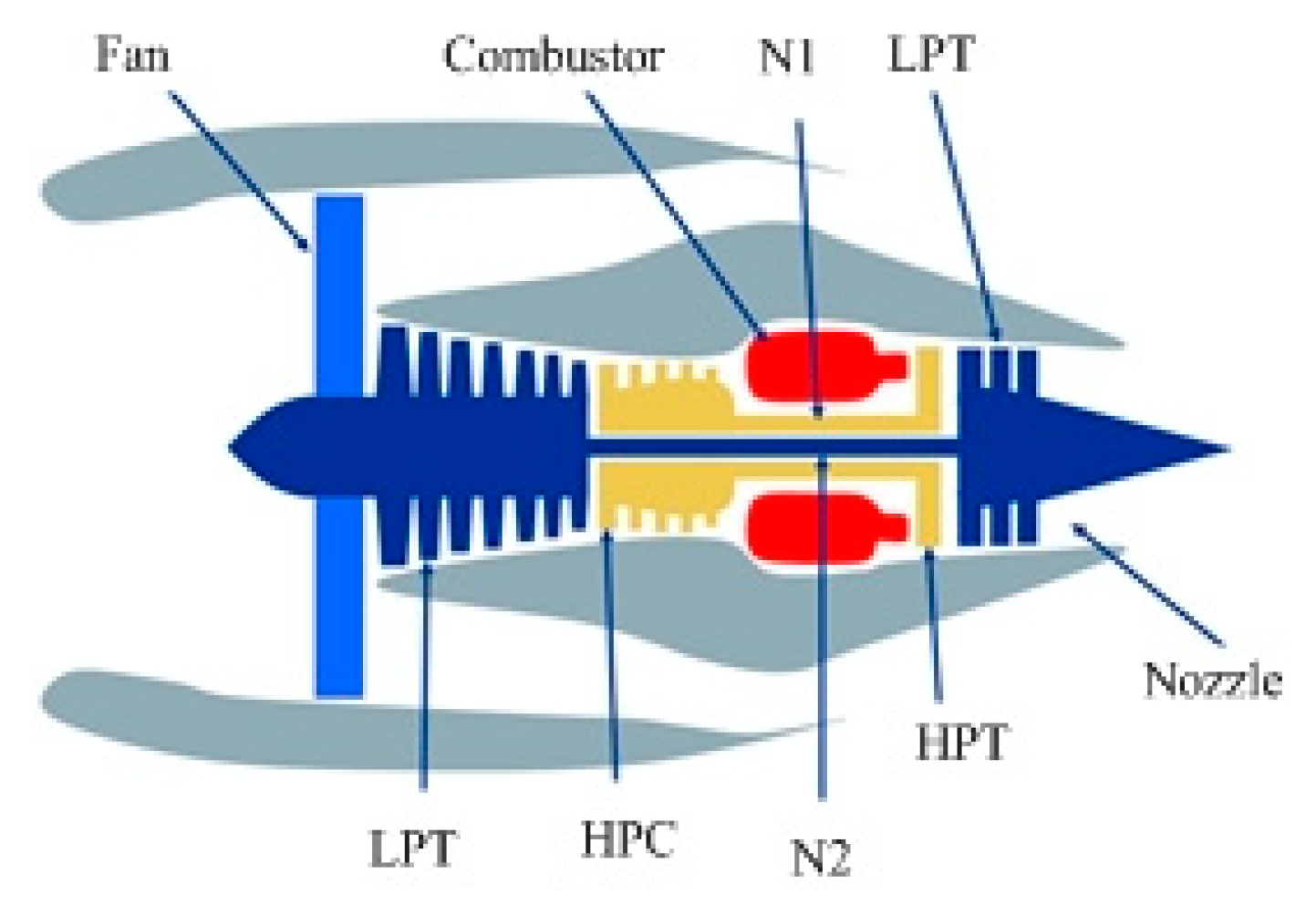

3.1. C-MAPSS Datasets

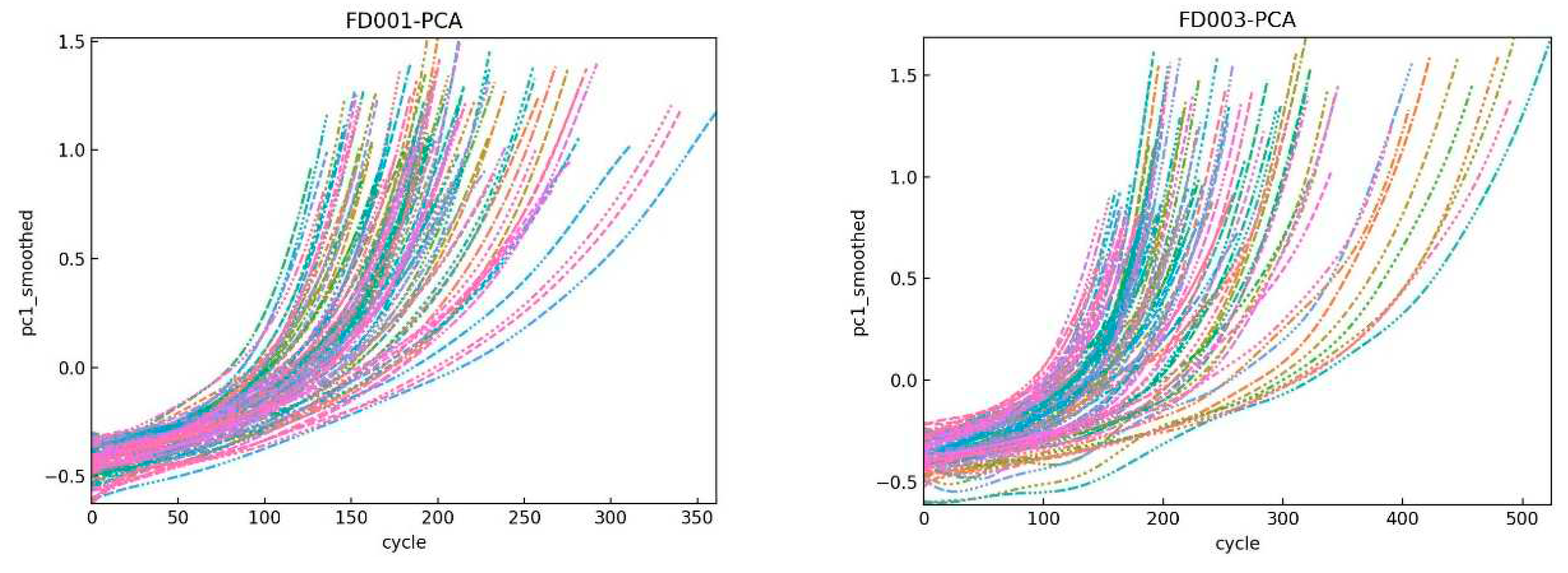

3.2. Data Preprocessing

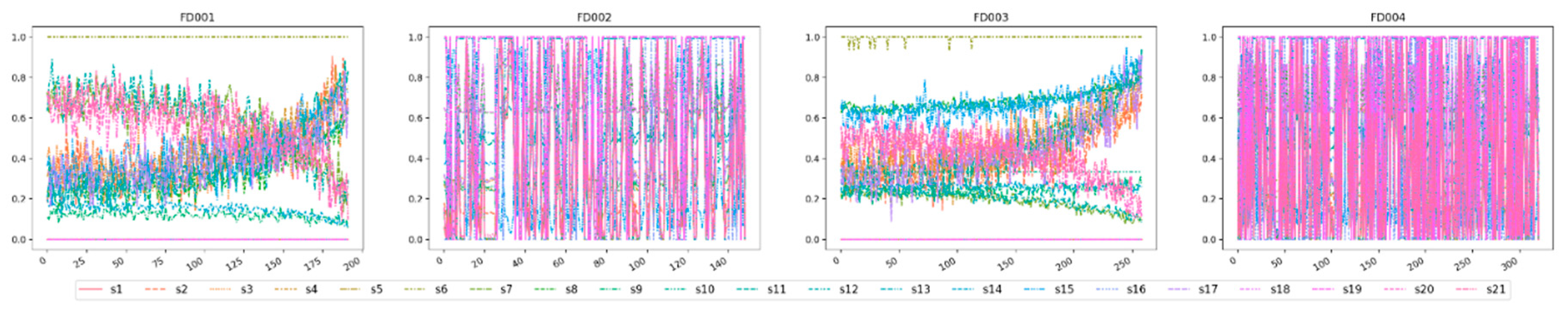

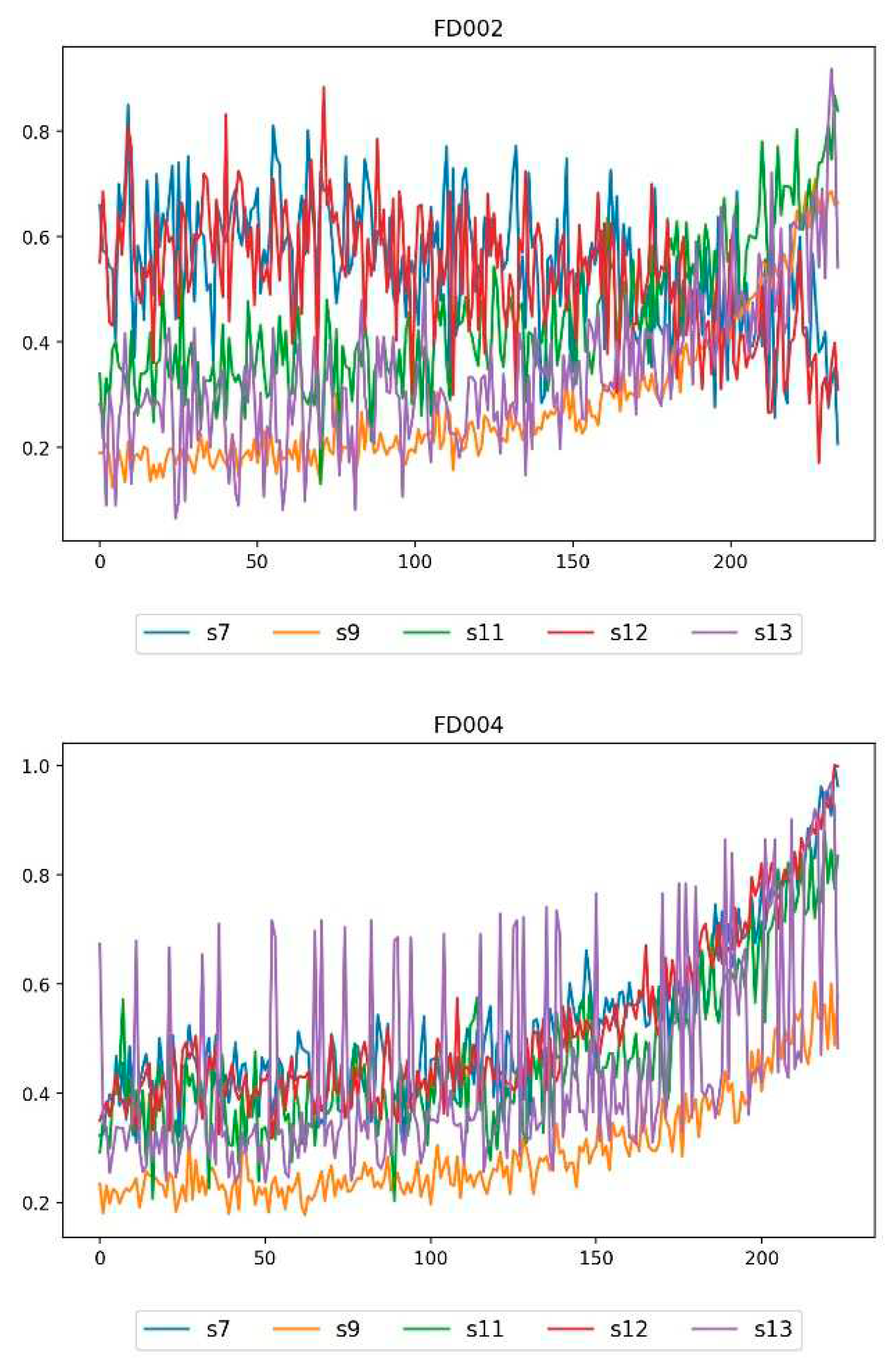

3.3. Sensor Selection

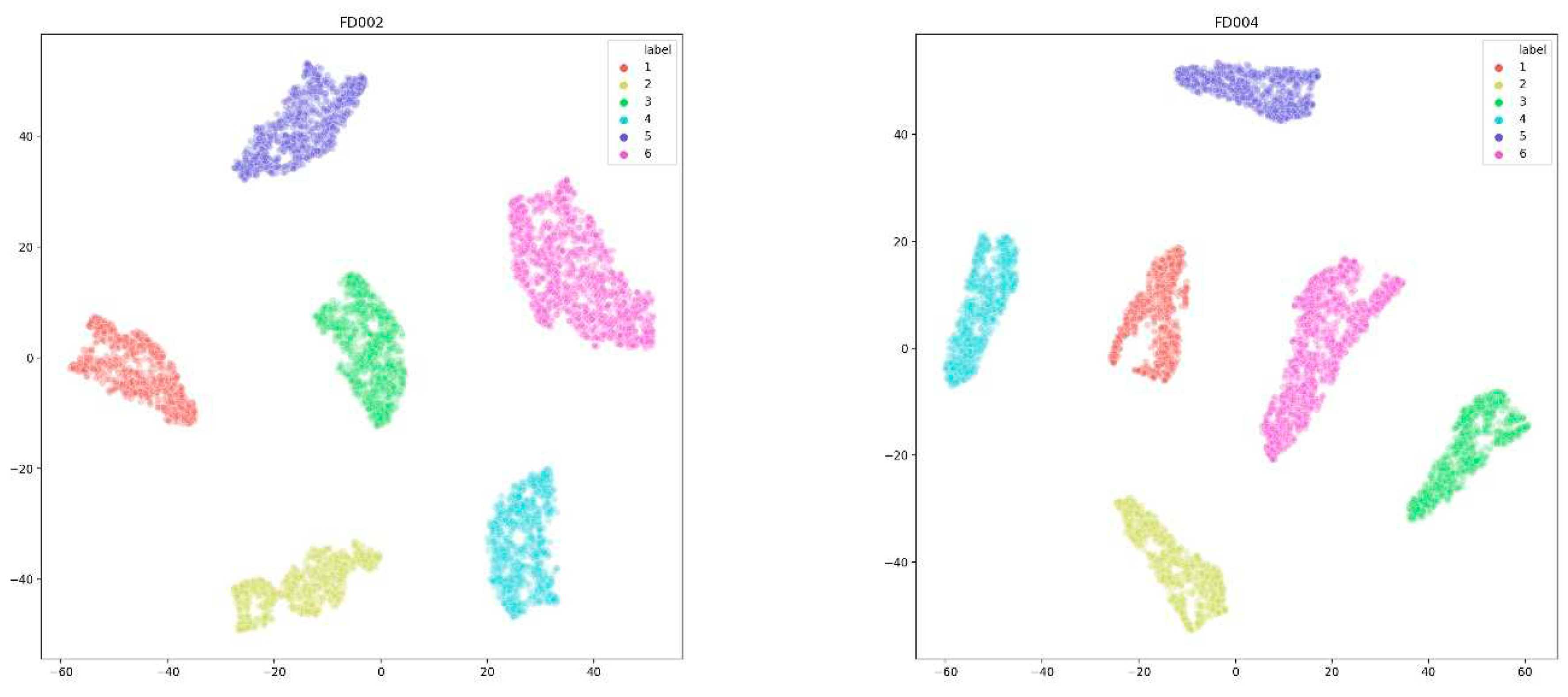

3.4. Operating Condition Identification

3.5. Performance Metrics

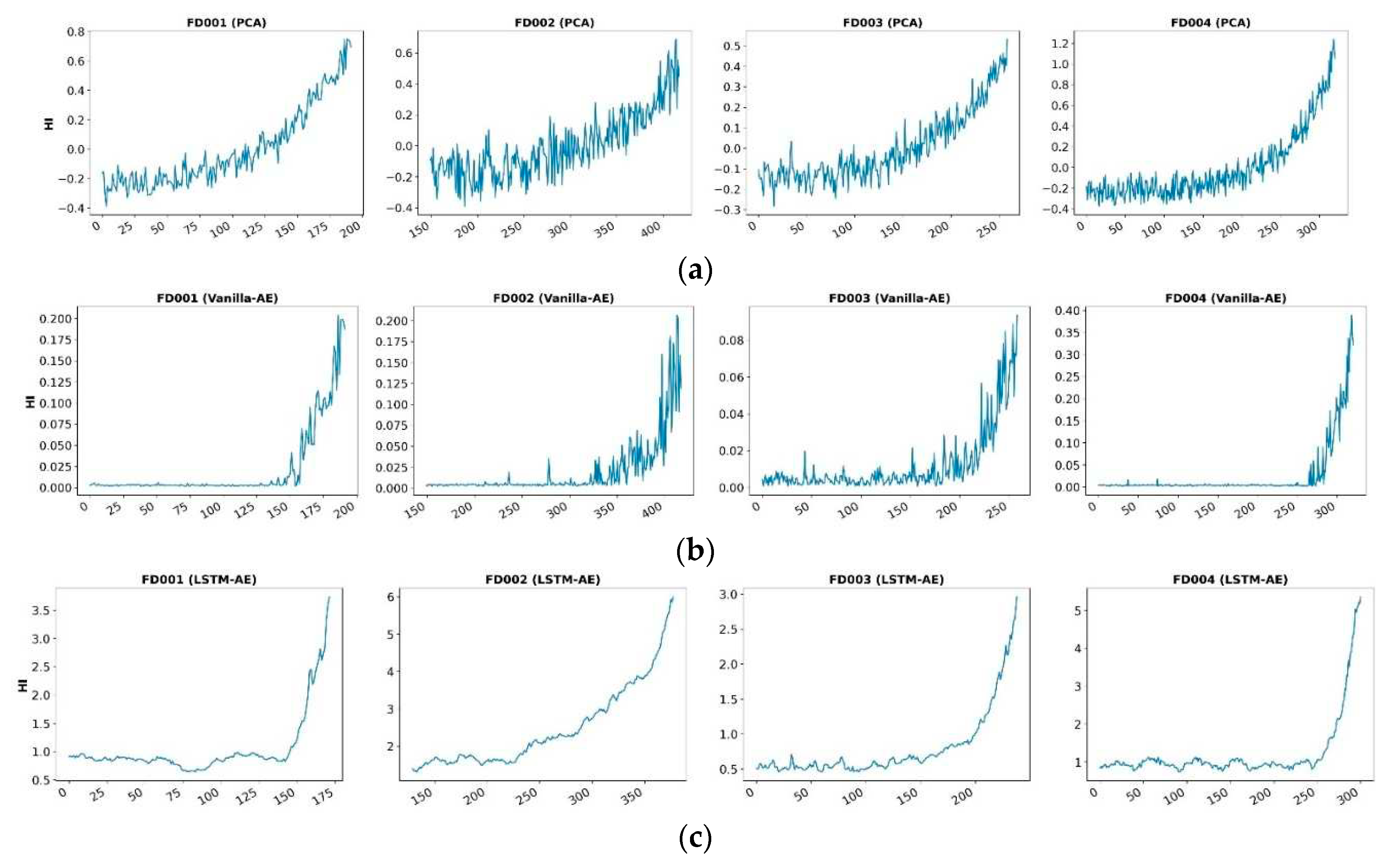

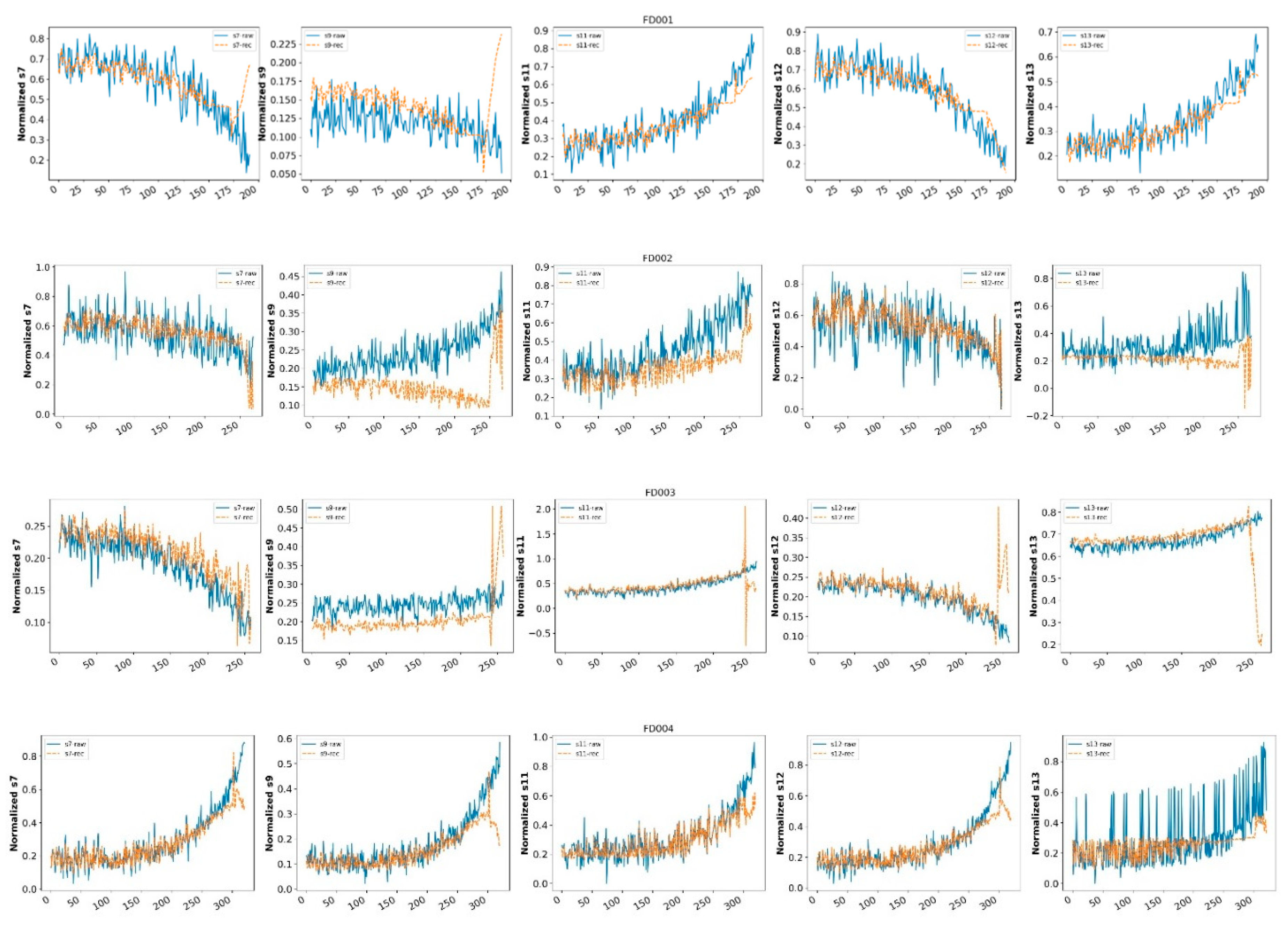

3.6. Health indicator evaluation

| Component | Layer type | Name | Number of neurons |
|---|---|---|---|
| Input layer | LSTM | Input | 5 |
| Encoder | LSTM | Hidden layer | 128 |
| Encoder | LSTM | Hidden layer | 64 |
| Encoder | LSTM | Hidden layer | 32 |
| Middle layer | LSTM | Bottleneck | 8 |
| Decoder | LSTM | Hidden layer | 32 |
| Decoder | LSTM | Hidden layer | 64 |
| Decoder | LSTM | Hidden layer | 128 |
| Output layer | Dense | Output | 5 |

| Hyperparameter | Value |
|---|---|
| Batch size | 128 |
| Learning rate | 0.001 |
| Optimizer | Adam |
| Loss | MAE (mean absolute error) |
| Epochs | 200 |
| Dropout rate | 0.5 |

3.7. The effect of LSTM time step on predictive performance

4. Conclusion


| Datasets | FD001 | FD002 | FD003 | FD004 |
|---|---|---|---|---|
| Operating condition | 1 | 6 | 1 | 6 |
| Fault mode | 1 | 1 | 2 | 2 |
| Number of engines | 100 | 260 | 100 | 249 |

| Sensor number | Value of MI |
|---|---|
| s2 | 0.570 |
| s7 | 0.697 |
| s8 | 0.597 |
| s9 | 1.000 |
| s11 | 0.951 |
| s12 | 0.705 |
| s13 | 0.682 |
| s17 | 0.485 |
| s20 | 0.506 |
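The MI values above appear to be normalized so that the most informative sensor (s9) equals 1.000, and the five sensors with the highest values (s9, s11, s12, s7, s13) are exactly the five described in the next table, matching the five neurons of the input layer. A minimal sketch of such a ranking step follows. It uses a simple equal-width histogram (plug-in) estimator of mutual information against a degradation target such as the cycle index or RUL; both the estimator and the choice of target are assumptions, not necessarily what the authors used, and the function names are illustrative.

```python
import math
from collections import Counter


def _bin(values, n_bins):
    """Map each value to an equal-width bin index in [0, n_bins - 1]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0   # constant signal: put everything in bin 0
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def mutual_information(x, y, n_bins=10):
    """Plug-in MI estimate in nats from a 2-D histogram of (x, y)."""
    bx, by = _bin(x, n_bins), _bin(y, n_bins)
    n = len(x)
    joint = Counter(zip(bx, by))
    px, py = Counter(bx), Counter(by)
    # sum over occupied cells of p(i, j) * log(p(i, j) / (p(i) * p(j)))
    return sum(c / n * math.log(c * n / (px[i] * py[j])) for (i, j), c in joint.items())


def rank_sensors(sensors, target, n_bins=10, top_k=5):
    """Normalize MI by its maximum and keep the top_k sensors (hypothetical helper)."""
    mi = {name: mutual_information(vals, target, n_bins) for name, vals in sensors.items()}
    peak = max(mi.values()) or 1.0
    normalized = {name: v / peak for name, v in mi.items()}
    selected = sorted(normalized, key=normalized.get, reverse=True)[:top_k]
    return normalized, selected
```

Histogram estimators are biased and sensitive to `n_bins`; a nearest-neighbor estimator such as scikit-learn's `mutual_info_regression` is a common, more robust alternative.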

| Sensor number | Description | Units |
|---|---|---|
| s7 | Total pressure at High-Pressure Compressor outlet | kPa |
| s9 | Physical core speed | rpm |
| s11 | Static pressure at High-Pressure Compressor outlet | kPa |
| s12 | Ratio of fuel flow to Ps30 | - |
| s13 | Corrected fan speed | rpm |

| Predictor | FD001 Mono | FD001 Tren | FD001 Prog | FD001 Fitness | FD002 Mono | FD002 Tren | FD002 Prog | FD002 Fitness |
|---|---|---|---|---|---|---|---|---|
| PCA | 0.335 | 0.892 | 0.855 | 2.082 | 0.194 | 0.0004 | 0.751 | 0.9454 |
| Vanilla-AE | 0.246 | 0.796 | 0.818 | 1.860 | 0.142 | 0.419 | 0.617 | 1.178 |
| LSTM-AE | 0.449 | 0.890 | 0.841 | 2.180 | 0.446 | 0.861 | 0.822 | 2.129 |

| Predictor | FD003 Mono | FD003 Tren | FD003 Prog | FD003 Fitness | FD004 Mono | FD004 Tren | FD004 Prog | FD004 Fitness |
|---|---|---|---|---|---|---|---|---|
| PCA | 0.330 | 0.805 | 0.701 | 1.836 | 0.169 | 0.0002 | 0.581 | 0.7502 |
| Vanilla-AE | 0.215 | 0.640 | 0.496 | 1.351 | 0.15 | 0.383 | 0.529 | 1.062 |
| LSTM-AE | 0.419 | 0.718 | 0.605 | 1.742 | 0.272 | 0.387 | 0.641 | 1.300 |
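One relationship can be checked directly against the tables: in every row, Fitness equals Mono + Tren + Prog (for LSTM-AE on FD001, 0.449 + 0.890 + 0.841 = 2.180). The sketch below computes the three criteria for a set of per-engine HI curves, assuming widely used definitions from the prognostics literature: monotonicity as the normalized difference between the numbers of increases and decreases, trendability as the absolute Pearson correlation between the HI and the cycle index, and prognosability as exp(-std of end-of-life HI values / mean start-to-end HI range). These definitions, especially the trendability variant, are assumptions and may differ from the formulas in Section 3.5; the function names are illustrative.

```python
import math
from statistics import mean, pstdev


def monotonicity(hi_runs):
    """Mean over units of |#increases - #decreases| / (n - 1); needs runs of length >= 2."""
    scores = []
    for hi in hi_runs:
        diffs = [b - a for a, b in zip(hi, hi[1:])]
        ups = sum(d > 0 for d in diffs)
        downs = sum(d < 0 for d in diffs)
        scores.append(abs(ups - downs) / len(diffs))
    return mean(scores)


def _pearson(x, y):
    """Pearson correlation; returns 0.0 when either input is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx and syy else 0.0


def trendability(hi_runs):
    """Mean over units of |corr(HI, cycle index)| (one common variant)."""
    return mean(abs(_pearson(hi, list(range(len(hi))))) for hi in hi_runs)


def prognosability(hi_runs):
    """exp(-std of end-of-life HI values / mean |start - end| HI range)."""
    end_values = [hi[-1] for hi in hi_runs]
    mean_range = mean(abs(hi[0] - hi[-1]) for hi in hi_runs)
    return math.exp(-pstdev(end_values) / mean_range) if mean_range else 0.0


def fitness(hi_runs):
    """Fitness as the plain sum of the three criteria, as in the tables."""
    return monotonicity(hi_runs) + trendability(hi_runs) + prognosability(hi_runs)
```

Each criterion lies in [0, 1], so the fitness ranges from 0 to 3; this is why the near-zero trendability of PCA on FD002 and FD004 pulls its fitness below 1.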

| Time step (cycles) | FD001 Mono | FD001 Tren | FD001 Prog | FD001 Fitness | FD002 Mono | FD002 Tren | FD002 Prog | FD002 Fitness |
|---|---|---|---|---|---|---|---|---|
| 5 | 0.298 | 0.880 | 0.852 | 2.030 | 0.184 | 0.356 | 0.537 | 1.077 |
| 10 | 0.313 | 0.801 | 0.739 | 1.853 | 0.296 | 0.776 | 0.657 | 1.729 |
| 15 | 0.406 | 0.709 | 0.795 | 1.910 | 0.358 | 0.791 | 0.648 | 1.797 |
| 20 | 0.449 | 0.890 | 0.841 | 2.180 | 0.446 | 0.861 | 0.822 | 2.129 |
| 25 | 0.448 | 0.886 | 0.817 | 2.151 | 0.358 | 0.772 | 0.729 | 1.859 |

| Time step (cycles) | FD003 Mono | FD003 Tren | FD003 Prog | FD003 Fitness | FD004 Mono | FD004 Tren | FD004 Prog | FD004 Fitness |
|---|---|---|---|---|---|---|---|---|
| 5 | 0.329 | 0.845 | 0.561 | 1.735 | 0.216 | 0.371 | 0.549 | 1.136 |
| 10 | 0.383 | 0.860 | 0.330 | 1.573 | 0.284 | 0.454 | 0.610 | 1.348 |
| 15 | 0.418 | 0.707 | 0.545 | 1.670 | 0.316 | 0.406 | 0.614 | 1.336 |
| 20 | 0.419 | 0.718 | 0.605 | 1.742 | 0.272 | 0.387 | 0.641 | 1.300 |
| 25 | 0.448 | 0.820 | 0.441 | 1.709 | 0.323 | 0.188 | 0.453 | 0.964 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).