1. Introduction

As cyber-physical systems (CPS) become increasingly integrated into our daily lives, concerns about the security and privacy of the data generated by these systems are growing. CPS are complex systems that combine physical and computational components, often with a high degree of connectivity and interaction with the internet. This complexity can make them vulnerable to cyberattacks, which can compromise the security and privacy of the data they generate. Therefore, protecting data security and privacy in CPS is essential to prevent unauthorized access, tampering, or theft of sensitive information [1, 2, 3]. According to Zion Market Research’s report, the global CPS market is expected to grow from $76.98 billion in 2022 to $177.57 billion by 2030, at a compound annual growth rate (CAGR) of 8.01% during the forecast period. The growth is attributed to advancements in Internet of Things (IoT) technology, which is driving the demand for more efficient and intelligent CPS solutions across industries such as healthcare, automotive, and industrial automation [4]. In this context, there is a pressing need to address the challenges of securing and protecting data generated by CPS, and to develop effective strategies and solutions to mitigate potential risks. One of the most common security issues in CPS relates to vulnerabilities in the communication channels between the cyber and physical components [5]. For instance, an attacker may compromise the communication channel to send false commands to the physical components, causing physical damage or disruption to the system. Another common security issue in CPS is the lack of authentication and authorization mechanisms for system components. Without proper authentication and authorization, an attacker can gain unauthorized access to the system and manipulate its components [6, 7].

One of the best-known recent security incidents relevant to CPS is the SolarWinds cyberattack that occurred in late 2020. The SolarWinds hack was a sophisticated attack on various U.S. government agencies, critical infrastructure, and private companies. It was executed as a supply chain attack through the popular SolarWinds Orion software, which is widely used for IT management. The attackers inserted malicious code into the Orion software, which was then distributed to thousands of SolarWinds customers through software updates. This code enabled the attackers to gain unauthorized access to the victims’ networks, steal confidential data, and launch additional attacks [8]. Additionally, CPS are often subject to software vulnerabilities that can be exploited by attackers to compromise the system. These vulnerabilities can arise from software bugs, design flaws, or inadequate testing procedures. An attacker can exploit them to take control of the system, steal sensitive data, or cause physical harm. The security of CPS is therefore a critical concern, and it requires a multidisciplinary approach involving cybersecurity experts, engineers, and policymakers to develop robust security mechanisms that can protect these systems from cyber threats [7].

IoT, on the other hand, is a network of physical objects that are endowed with sensors, software, and connectivity, enabling them to exchange data and communicate with each other [9]. The IoT often involves large numbers of devices that are distributed across a wide area, such as a city or a manufacturing plant. IoT devices may be used for a variety of applications, including environmental monitoring, asset tracking, and smart home automation. While CPS and IoT share some commonalities, there are some key differences between them. CPS typically have more complex computational and control systems than IoT devices, as they need to sense and respond to the physical world in real time [6, 10]. CPS may also require specialized hardware and software to meet specific performance, reliability, and safety requirements. IoT devices, on the other hand, are often simpler and less specialized than CPS, as they may not require the same level of real-time control or safety features. However, IoT devices may need to operate in a more diverse and dynamic environment than CPS, which can pose challenges for security, privacy, and interoperability [11].

Table 1 compares the requirements of CPS and IoT, since some of the security and privacy issues of CPS could extend to IoT.

There are various application areas where efforts are being made to address security and privacy issues in CPSs. In the field of Industrial Control Systems, studies have focused on addressing data theft and intrusion attacks [12, 13]. Solutions such as secure data containers and Intrusion Detection Systems (IDS) have been proposed to safeguard data integrity and monitor system behavior. The use of realistic simulation frameworks like MiniCPS has enabled the development and validation of new defensive strategies for CPSs. Weather and satellite applications [14] have also been an area of interest. Researchers have proposed frameworks like the Internet of Predictable Things (IoPT) to enhance the resilience of CPSs against cybersecurity risks. Adversarial machine learning attacks in IoT environments have been addressed through the use of machine learning-based net load forecasting algorithms and the Cyberattack Detection algorithm.

In the manufacturing domain [15], researchers have focused on cyber-physical security for electric vehicles and developed metrics to measure performance degradation caused by cyber-physical attacks. Machine learning techniques have been employed to process large amounts of data and detect various types of attacks. In the healthcare domain, ensuring the reliability and security of modeled systems against tampering with sensor data is crucial. Machine learning algorithms have been applied to detect data breaches, improve cloud security, and develop frameworks for IoT cloud deployment. The significance of addressing security breaches in Healthcare Cyber-Physical Systems (HCPS) is emphasized to prevent unauthorized access to sensitive health data and potential misdiagnosis or incorrect treatment [10, 16, 17].

Water treatment is another major application area where security attacks have occurred in CPSs. Researchers have focused on intrusion detection mechanisms [18, 19, 20], false data injection [18], DoS attacks [21], spoofing [21, 22], authentication [23], and the identification of anomalies in water systems [17, 19, 24]. Machine learning methods have been utilized for anomaly detection and vessel trajectory prediction to improve maritime surveillance [25]. The power domain has seen studies on machine learning-based detection of attacks in water and power grids [15, 20, 26, 27]. False data attacks in ML systems and physical attacks causing damage, device overheating, and power outages have been addressed. Researchers have developed security models and detection mechanisms to mitigate risks and maintain the reliability of power systems [28, 29]. In the transportation domain [11, 30, 31], researchers have explored methods to protect highly confidential information from cyberattacks and assess the risk using models such as Factor Analysis of Information Risk (FAIR) and Crime Prevention through Environmental Design (CPTED). Detection mechanisms and security models have been developed to respond to physical attacks and ensure system resilience.

The main objective of this paper is to investigate the security and privacy concerns associated with CPS. The authors conducted a thorough analysis of existing literature and made several valuable contributions, such as categorizing the various security and privacy issues in CPS, identifying different domains where CPS are used, and exploring the use of AI techniques to address such issues.

1.1. Contributions of this research

It is crucial to prioritize data protection in CPS, especially considering the integration of AI and CPS, which is expected to bring revolutionary advancements in the next decade, alongside the development of 6G communication technologies. This study makes several contributions. Firstly, it presents a comprehensive classification diagram that encompasses various security and privacy threats in CPS. Secondly, it explores the utilization of AI in addressing these security and privacy concerns. Thirdly, it provides a taxonomy of AI techniques employed for securing CPSs. These contributions collectively enhance our understanding of the security and privacy landscape in CPS and provide valuable insights for developing robust defense mechanisms. Furthermore, this research sheds light on potential security and privacy challenges that may arise in the future implementation of CPS.

1.2. Scope of the review

CPS systems use hardware devices such as sensors, actuators, microcontrollers, and robotic components that are embedded with computer systems designed to perform specific functions. However, the data generated from these devices are prone to vulnerabilities. To protect this data, researchers have incorporated AI techniques.

Table 2 provides a summary of the merits and demerits of existing surveys. The scope of this research focuses on developing taxonomies of security and privacy issues among various application domains and the AI techniques used to address these issues in CPS. This survey does not concentrate on blockchain-based security, since the primary focus is on AI techniques.

1.3. Organization and reading map

The introduction section of the paper presents the need and motivation for conducting the research, highlighting the similarities between CPS and IoT and drawing comparisons with existing surveys. The paper is structured as follows:

Section 2 outlines the research questions and methodology employed in the survey. The study results are presented in Sections 3 to 5. Section 3 covers various security issues in CPS, while Section 4 discusses how AI helps address security and privacy concerns in different application areas of CPS. Section 5 illustrates the taxonomy of AI techniques used in CPS. Section 6 delves into the research’s significance, limitations, and challenges in implementing future CPS. Finally, Section 7 provides the study’s conclusion.

2. Methodology

The overall research goal is to investigate the various security and privacy issues encountered in the literature regarding cyber-physical systems, and to identify the different application areas that utilize AI techniques to address data protection issues in CPS. The specific research questions (RQ) and their objectives are presented below.

- RQ1: What are the concerns related to security and privacy in CPS? A classification of various security and privacy concerns is presented to answer this research question. The purpose is to recognize the variety of attacks that could occur in CPS.

- RQ2: How can AI help address CPS’s security and privacy concerns? A flowchart is presented to explain how AI/ML detects security and privacy issues. The purpose is to provide an integrated approach to designing an ML-based secure CPS.

- RQ3: What AI techniques are used to address these concerns in CPS? A taxonomy of various ML techniques concerning security and privacy issues is provided to answer this question.

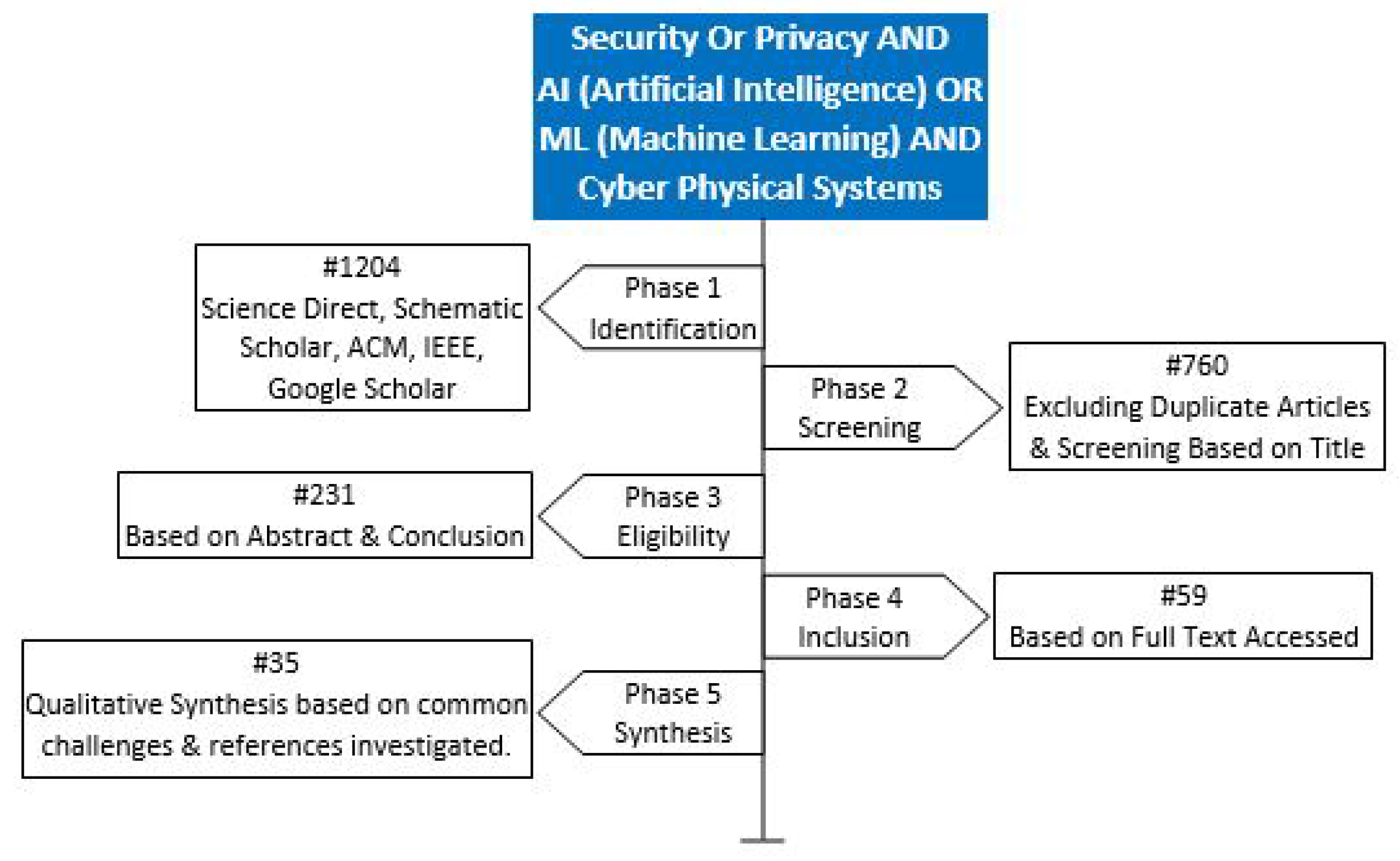

To achieve the research goal, we searched Google Scholar, Semantic Scholar, ACM Digital Library, and IEEE Xplore using the search string "(security or privacy) AND (AI OR artificial intelligence OR ML OR machine learning) AND CPS OR cyber-physical systems". The search strategy is illustrated in Figure 1. We carefully examined the search results and included the related studies in our review. Our inclusion criteria required a clear definition of specific attacks in CPS and the use of AI or ML techniques to resolve those attacks in various CPS applications. Articles that were not related to IoT or CPS, or not related to our research goals, were excluded. Studies on blockchain-based security defense mechanisms were not included because they were beyond the scope of this research; we plan to explore this area in future work.

3. Security and privacy issues in CPS

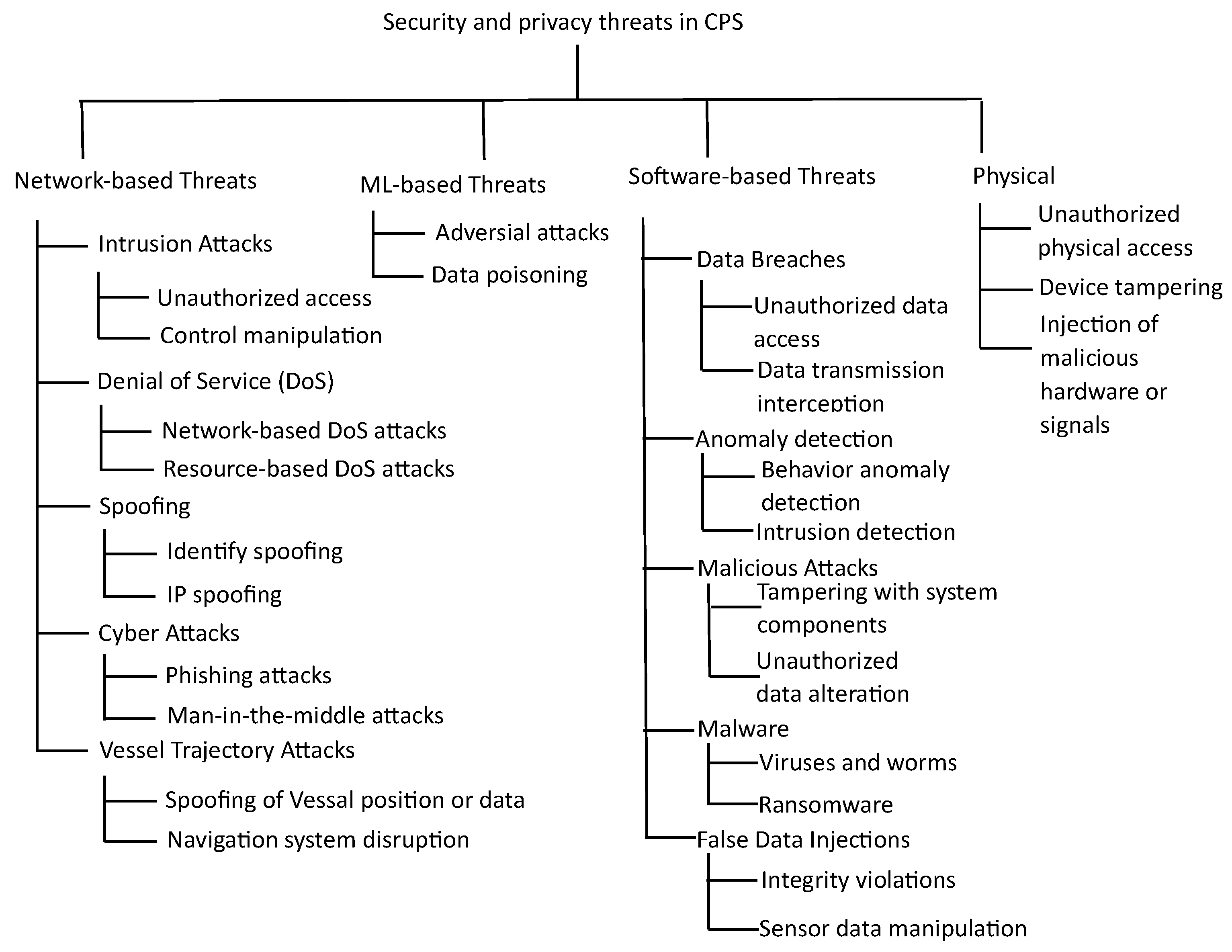

This section answers RQ1: What are the concerns related to security and privacy in CPS? To answer this research question, a classification of different security and privacy concerns is presented in Table 3. The purpose is to recognize the variety of attacks that could occur in CPS. The categorization is based on the nature and primary focus of each security and privacy issue. Network-based threats primarily target the network layer, software-based threats focus on vulnerabilities in the software components of CPS, and ML-based threats specifically exploit weaknesses in machine learning models. Table 3 also includes information on the economic loss to CPSs caused by the attacks. Figure 2 shows the further classification of the security threats in CPSs.

3.1. Network-based threats

The security issues categorized under network-based threats involve attacks that exploit vulnerabilities in the network infrastructure or communication channels. Intrusion attacks, denial of service (DoS), spoofing, cyberattacks, and vessel trajectory attacks are all examples of attacks that target the network layer. These threats aim to gain unauthorized access, disrupt services, manipulate data, or compromise the integrity and availability of the network. An Intrusion Detection System (IDS) is a tool used to identify and raise alerts about malicious activities that may compromise network security or data stored on connected computers. The system monitors the network continuously and generates alerts when suspicious activity is detected, which can be further investigated by a security analyst or incident responder to mitigate the threat.

Pordelkhaki et al. [18] explored the use of an ML-based Network Intrusion Detection System (NIDS) for an Industrial Control System (ICS) using a secure water treatment testbed. They combined network traffic data with physical process data from a pre-labelled dataset and evaluated the effectiveness of using privileged information as a supervised learning technique to enhance the detection of network intrusion attacks. They found that this approach was more effective than other ML algorithms that used network traffic data alone. The authors also discussed various other ML algorithms, including the Support Vector Machine Plus Algorithm (SVM+), Decision Tree Algorithm (DT), K-Nearest Neighbors (KNN), Logistic Regression (LR), and Convolutional Neural Networks (CNN), and evaluated their performance in detecting network intrusion attacks. They found that SVM+ outperformed the other algorithms in terms of F1-score, although the dataset used was imbalanced. Colelli et al. [13] developed a machine learning tool that detects cyberattacks in cyber-physical systems to improve their security. They evaluated the performance of three models in classifying normal and anomalous behavior in a water tank system to identify attacks and prevent hazardous conditions. The results were promising, as the machine learning approach effectively detected and prevented cyberattacks. They also explored the use of supervised machine learning with the Random Forest algorithm to enhance IDS capabilities. They found that this approach had high accuracy and detection rates for both binary and multi-class classification and outperformed other ML algorithms in terms of accuracy, detection rates, and false-positive rates.
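Because the F1-score comparison above was made on an imbalanced dataset, it may help to see why accuracy alone can mislead in that setting. The following is a minimal sketch and is not taken from the cited studies; the label vectors and the helper names `confusion` and `metrics` are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # attacks correctly flagged
    fp: int  # normal records wrongly flagged
    fn: int  # attacks missed
    tn: int  # normal records correctly passed


def confusion(y_true, y_pred):
    """Count outcomes for binary labels (1 = attack, 0 = normal)."""
    pairs = list(zip(y_true, y_pred))
    return Confusion(
        tp=sum(1 for t, p in pairs if t == 1 and p == 1),
        fp=sum(1 for t, p in pairs if t == 0 and p == 1),
        fn=sum(1 for t, p in pairs if t == 1 and p == 0),
        tn=sum(1 for t, p in pairs if t == 0 and p == 0),
    )


def metrics(c):
    """Return accuracy, precision, recall, and F1, guarding empty denominators."""
    total = c.tp + c.fp + c.fn + c.tn
    precision = c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0
    recall = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical imbalanced test set: 95 normal records followed by 5 attacks.
y_true = [0] * 95 + [1] * 5
never_alerts = [0] * 100                           # detector that never raises an alert
real_detector = [0] * 94 + [1] + [1, 1, 1, 0, 0]   # 1 false alarm, 3 of 5 attacks caught

for name, y_pred in [("never_alerts", never_alerts), ("real_detector", real_detector)]:
    print(name, metrics(confusion(y_true, y_pred)))
```

In this toy case the detector that never alerts reaches 95% accuracy with an F1 of 0, while the second detector's F1 of about 0.67 reflects that it catches only three of the five attacks.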

Denial-of-service attacks aim to make a resource unavailable by disrupting the services of a connected host, while spoofing involves pretending to be something else to gain access to a system for malicious purposes. Perrone et al. [21] conducted research on using intelligent threat detection to identify malicious activities and anomalous events in water systems that are regulated by SCADA. They compared the effectiveness of different machine learning techniques such as KNN, Naive Bayes (NB), SVM, DT, and Random Forest (RF) to classify these activities, with RF showing the most reliable performance. In the future, the security of CPSs will depend on the use of AI to automate threat identification and countermeasures through Security Orchestration, Automation, and Response (SOAR) systems, which will enhance situational awareness, emergency response, and crisis management. A cyberattack refers to any effort to gain unauthorized access to a computer, computing system, or computer network with the intention of causing harm. The objective of a cyberattack is to disable, disrupt, destroy, or control computer systems, or to modify, block, delete, manipulate, or steal the data stored within these systems. According to Jamal et al. [15], ML techniques are crucial for detecting various attacks in CPS, such as replay attacks, DoS attacks, jamming attacks, time synchronization attacks, and false data injection attacks. Their survey focuses on cyberattacks in different CPS industries, including industrial, construction, cyber manufacturing, and electric power.

Liu et al. [25] suggest that machine learning algorithms like CNN, LSTM, and hybrid models can effectively predict vessel trajectories by taking into account vessel characteristics, historical movement patterns, and environmental variables, aided by advanced sensor technologies such as AIS and GPS. The SFM-LSTM model combines LSTM with the social force model, providing an accurate and reliable approach for vessel trajectory prediction and enabling smart traffic services in marine transportation systems with the help of AI and IoT technologies. Additionally, data-driven frameworks using LSTM and GRU models have also been used for vessel trajectory prediction.

3.2. ML-based threats

The security issue categorized as an ML-based threat specifically relates to attacks that exploit vulnerabilities in machine learning models. ML-powered attacks refer to malicious activities that manipulate or deceive ML models within CPS. These threats can include adversarial attacks or techniques that tamper with training data or model outputs, compromising the accuracy, reliability, or robustness of the ML algorithms utilized.

"ML-powered attacks" refer to cyberattacks that utilize machine learning algorithms to execute malicious activities by identifying and exploiting system vulnerabilities. These types of attacks are becoming more widespread as machine learning technologies are increasingly adopted across industries. "Adversarial attacks" are one type of ML-powered attack where hackers manipulate machine learning models by injecting malicious inputs to cause unintended behavior. Organizations need a comprehensive strategy that includes monitoring, detection, and prevention methods, such as anomaly detection, model retraining, and data validation, to safeguard against ML attacks.

To address the potential vulnerabilities of machine learning in CPSs, Li et al. [26] proposed Constrained Adversarial Machine Learning (ConAML), a framework that generates adversarial examples that adhere to the intrinsic constraints of physical systems. They developed a general threat model and a best-effort search algorithm to iteratively generate such adversarial examples. The authors tested the algorithms on power grids and water treatment systems through simulations, and the results showed that ConAML was effective in generating adversarial examples that reduced the performance of ML models, even under practical constraints. Additionally, the study recommended using techniques such as adversarial detection and re-training to enhance neural networks’ resilience against ConAML attacks.
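To make the idea of an adversarial example that respects physical constraints more concrete, here is a minimal sketch. It is not the search algorithm of Li et al. [26]: it assumes a hypothetical linear detector and a single linear equality constraint (flow conservation), and takes one projected gradient step. All names, weights, and readings are illustrative assumptions.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def score(x, w, b):
    """Linear detector: a positive score means the reading is flagged as anomalous."""
    return dot(w, x) + b


def constrained_step(x, w, constraint, eps):
    """One evasion step that leaves dot(constraint, x) unchanged."""
    grad = [-wi for wi in w]  # direction that lowers the detector score fastest
    # Remove the component of the step that would violate the physical constraint.
    k = dot(grad, constraint) / dot(constraint, constraint)
    step = [g - k * c for g, c in zip(grad, constraint)]
    largest = max(abs(s) for s in step)
    if largest == 0:
        return list(x)  # no feasible direction changes the score
    # Scale so that no single sensor value is shifted by more than eps.
    return [xi + eps * s / largest for xi, s in zip(x, step)]


# Hypothetical readings: two inflows and one outflow, with inflow1 + inflow2 - outflow = 0.
x = [3.0, 2.0, 5.0]
constraint = [1.0, 1.0, -1.0]
w, b = [1.0, -0.5, 0.2], -2.5  # assumed detector weights and bias

x_adv = constrained_step(x, w, constraint, eps=0.5)
print(score(x, w, b), score(x_adv, w, b), dot(constraint, x_adv))
```

In this toy setting the original reading is flagged (positive score), while the perturbed reading is not, even though it still satisfies the conservation constraint. This is the property that makes such examples hard to rule out with simple consistency checks.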

3.3. Software-based threats

The security issues classified as software-based threats primarily focus on vulnerabilities and attacks related to software components in CPS. Data breaches, anomaly detection, malicious attacks, malware, and false data injections are all software-related concerns. These threats target the software layer of CPS and encompass breaches of sensitive data, the detection of anomalous behavior or patterns, the injection of malicious code, and the dissemination of false or manipulated data.

Data breaches occur when sensitive or confidential information is accessed, stolen, or exposed without authorization through various methods like hacking, phishing, physical theft, or human error. Such breaches can result in serious consequences, such as financial losses, reputational damage, legal liabilities, and identity theft. Attacks on cloud-based infrastructure, services, or applications are called cloud security attacks, which pose new security risks and challenges for organizations despite offering flexibility, scalability, and cost savings. To prevent such attacks, organizations need to implement robust security measures like access controls, encryption, firewalls, and intrusion detection and prevention systems, along with regular monitoring and security audits.

Bharathi and Kumar [10] have proposed a new approach for detecting attacks on Healthcare Cyber-Physical Systems (HCPS) by combining Wise Greedy Routing, agglomeration mean shift maximization clustering, and multi-heuristic cyber ant optimization-based feature extraction. The system employs an Ensemble Crossover XG Boost classifier to identify attacks and has shown promising results in terms of accuracy and reducing false positives. In addition, the authors have examined the advantages of HCPS over the current healthcare system, the negative effects of cyberattacks on IoT devices, and the current limitations of cloud-based security in this context. The authors evaluated the Ensemble Crossover XG Boost classifier in healthcare using MATLAB simulations, which demonstrated an accuracy of 99.642%, a precision of 95%, and an F1 score of 98.5%. Anomaly attacks exploit abnormal behavior or patterns in a system or network with the aim of gaining unauthorized access or causing harm. Essentially, they exploit weaknesses in a system's behavioral patterns to achieve malicious goals.

Feng and Tian [24] propose a new method called Neural System Identification and Bayesian Filtering (NSIBF) for detecting anomalies in time series data in CPSs. NSIBF uses a customized neural network to identify the system in CPSs and a Bayesian filtering algorithm to detect anomalies by monitoring the uncertainty of the system state. The authors evaluated NSIBF on synthetic and real-world datasets, including the PUMP, WADI, and SWaT datasets. They found that NSIBF outperformed existing techniques by 2.9%, 3.7%, and 7.6% in F1 score on the PUMP, WADI, and SWaT datasets, respectively. Additionally, NSIBF showed significantly better performance than NSIBF-RECON and NSIBF-PRED on all three datasets. These results demonstrate the effectiveness of NSIBF for detecting anomalies in complex CPSs with noisy sensor data, and highlight the advantage of using a neural-identified state-space model and Bayesian filtering to detect anomalies in CPS signals over time. Other researchers [37] focused on deep learning techniques to detect anomalies. The act of intentionally trying to compromise the security, integrity, or availability of a system or network is referred to as a malicious attack, and it can take various forms, such as DoS attacks and social engineering.
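The Bayesian filtering idea behind NSIBF can be illustrated with a much simpler stand-in. The sketch below swaps the neural-identified state-space model for an assumed scalar random-walk model and uses a one-dimensional Kalman filter; a reading is flagged when its normalized innovation is too large. The noise variances, threshold, and sensor trace are illustrative assumptions, not values from the cited work.

```python
import math


def detect_anomalies(readings, x0, p0=1.0, q=0.01, r=0.04, threshold=3.0):
    """Return indices whose normalized innovation exceeds `threshold`.

    Assumed model (not the neural state-space model of NSIBF):
    state x_t = x_{t-1} + process noise (variance q),
    measurement z_t = x_t + sensor noise (variance r).
    """
    x, p = x0, p0
    anomalies = []
    for t, z in enumerate(readings):
        p_pred = p + q            # predict: state uncertainty grows by q
        innovation = z - x        # measurement minus predicted state
        s = p_pred + r            # innovation variance
        if abs(innovation) / math.sqrt(s) > threshold:
            anomalies.append(t)
            p = p_pred            # skip the update so the outlier does not corrupt x
            continue
        gain = p_pred / s         # Kalman gain
        x = x + gain * innovation
        p = (1.0 - gain) * p_pred
    return anomalies


readings = [10.0, 10.1, 9.9, 10.0, 10.2, 15.0, 10.1, 9.8]  # hypothetical sensor trace
print(detect_anomalies(readings, x0=10.0))
```

With these assumed settings only the jump to 15.0 (index 5) is flagged. Skipping the update on a flagged reading is one possible design choice; it keeps a single spoofed value from dragging the state estimate along with it.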

Malware refers to software that is installed on a computer without the user’s knowledge or consent, and it carries out harmful activities like stealing passwords or money. There are several techniques for identifying malware, but the most common one is to scan the computer for malicious files or programs. Sengan et al. [27] propose a solution for detecting malware attacks in smart grids by analyzing power system information and signals. Malware can corrupt voltage data, resulting in fraudulent output, and the proposed solution uses an Artificial Feed-forward Network (AFN) with a distance metric cost function to differentiate between secured and malicious data. AFN is capable of handling complex functions and is suitable for the task of identifying malware incidents in smart grids. The solution aims to enhance the security of smart grids by detecting and preventing malware attacks. The alteration of sensor measurements by False Data Injection Attacks (FDIA) can pose a significant threat to a system’s computational capabilities and lead to cyberattacks. Detecting such attacks is crucial to maintain system integrity and security. Sengan et al. [27] highlighted the importance of detecting these attacks in the smart grid and proposed a True Data Integrity Agent-Based Model (TDI-ABM) to effectively distinguish between secured data and data generated by intruders. The TDI-ABM can mitigate the effects of FDIA and improve the security of smart grids, and it is based on Deep Learning (DL) applications with various methods and algorithms used to retrieve data from the network.

3.4. Physical threats

Although the categories above focus on cyber threats, physical attacks can also pose significant security risks to CPSs. Physical threats target the physical components of a CPS and can have serious consequences. Examples of physical attacks include physical tampering, supply chain attacks, side-channel attacks, and physical destruction. These attacks involve unauthorized manipulation of hardware, compromising the supply chain, exploiting physical information leakage, or causing physical damage to the CPS [16, 19, 21]. Putting physical security measures in place is crucial to protect the integrity and functionality of a CPS against these types of attacks.

4. How does AI help address security and privacy concerns in CPS?

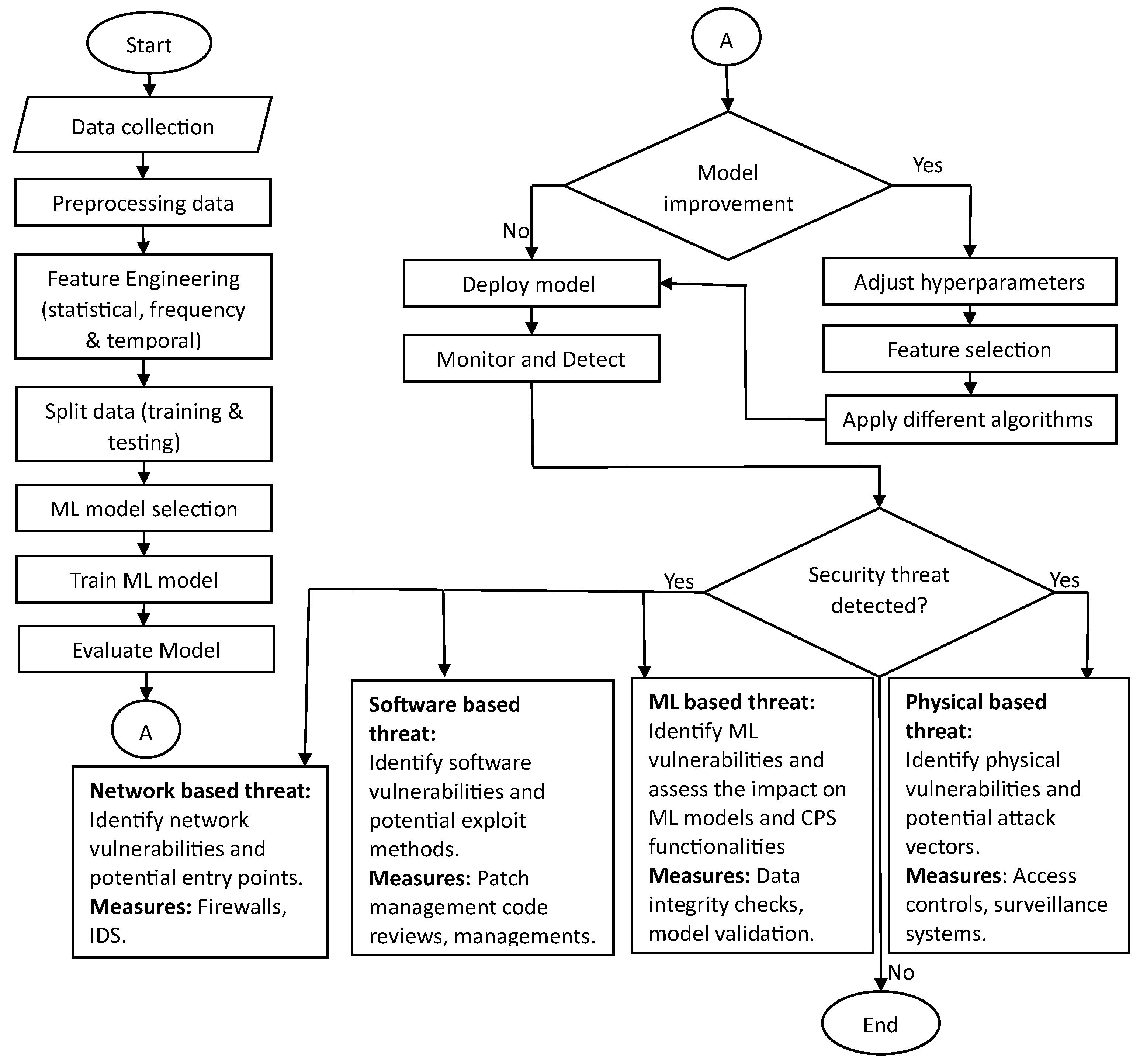

This section answers RQ2. A flowchart is presented to explain how AI/ML is used in detecting security and privacy issues. The purpose is to present an integrated approach to designing an ML-based secure CPS.

Figure 3 presents the steps for using ML algorithms in collecting, monitoring, and detecting security threats in CPSs. The first step is to collect data from diverse sources within CPSs, including sensors, controllers, and network logs. This data is then preprocessed: noise is removed, missing values are handled, and the data is transformed into a suitable format for ML algorithms. Relevant features are extracted from the preprocessed data, encompassing network traffic patterns, sensor readings, system states, and other pertinent information. The preprocessed data is split into training and testing sets, with the former used to train the selected ML model(s) and the latter to evaluate their performance. ML model selection entails choosing appropriate algorithms, such as anomaly detection, classification algorithms (e.g., decision trees, support vector machines, neural networks), ensemble methods, or sequence modeling algorithms. The selected model is trained using the training set, learning the patterns and characteristics of normal system behavior. Model performance is evaluated using the testing set, considering metrics such as accuracy, precision, recall, and F1 score. If the model’s performance is unsatisfactory, it is improved iteratively by adjusting hyperparameters, revising feature selection, or trying different algorithms. Once performance meets the set threshold values, the model is deployed in the CPS environment for real-time system monitoring. Continual monitoring and data feeding into the deployed ML model enable the detection of deviations and anomalies that signal possible security threats.
If a security threat is detected, three approaches can help identify its nature: network-based threat identification involves analyzing network logs, utilizing Network Intrusion Detection Systems (NIDS), and conducting packet inspection; software-based threat identification includes reviewing system logs, performing malware analysis, and conducting vulnerability assessments; ML-based threat identification involves analyzing the ML model output, implementing adversarial attack detection techniques, and monitoring model performance. The subsequent paragraphs explain how AI/ML algorithms are used to detect security threats in CPSs.
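The workflow described above (collect, preprocess, split, train, evaluate, gate deployment) can be sketched in a few lines. This is a simplified illustration under stated assumptions rather than the pipeline of Figure 3: the "model" is a plain z-score detector trained on normal readings only, and the data, the 3-sigma rule, and the `F1_REQUIRED` threshold are all hypothetical.

```python
import statistics


def preprocess(raw):
    """Drop records with missing readings; real pipelines would also denoise and rescale."""
    return [(value, label) for value, label in raw if value is not None]


def train(normal_values):
    """Learn normal behavior as a mean and a standard deviation."""
    return statistics.mean(normal_values), statistics.pstdev(normal_values)


def predict(model, value, z_threshold=3.0):
    """Return 1 (possible threat) when a reading deviates strongly from normal."""
    mean, std = model
    return 1 if abs(value - mean) / std > z_threshold else 0


def evaluate(model, test_set):
    """Compute precision, recall, and F1 on labelled test records."""
    tp = fp = fn = 0
    for value, label in test_set:
        guess = predict(model, value)
        if guess == 1 and label == 1:
            tp += 1
        elif guess == 1 and label == 0:
            fp += 1
        elif guess == 0 and label == 1:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# 1. Collect: hypothetical sensor readings labelled 0 (normal) or 1 (attack).
normal = [(50 + ((i * 7) % 11 - 5) * 0.4, 0) for i in range(110)]
attacks = [(v, 1) for v in (58.0, 59.0, 60.0, 61.0, 58.5, 59.5, 60.5, 57.0, 62.0, 52.5)]
raw = normal + [(None, 0), (None, 1)] + attacks

# 2. Preprocess, then 3. split into training and testing sets.
clean = preprocess(raw)
normal_clean = [rec for rec in clean if rec[1] == 0]
attack_clean = [rec for rec in clean if rec[1] == 1]
train_set = normal_clean[:80]
test_set = normal_clean[80:] + attack_clean

# 4. Train on normal behavior, 5. evaluate, 6. gate deployment on a threshold.
model = train([value for value, _ in train_set])
report = evaluate(model, test_set)
F1_REQUIRED = 0.9  # assumed acceptance threshold
deploy = report["f1"] >= F1_REQUIRED
print(report, "deploy" if deploy else "iterate on features or hyperparameters")
```

In this toy run the subtle attack reading of 52.5 stays within three standard deviations of normal and is missed, so recall is 0.9 while precision is 1.0; the resulting F1 still clears the assumed threshold. A stricter threshold would instead send the model back to the iterative improvement step.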

Network-based threat identification involves analyzing network logs and traffic patterns to detect suspicious or malicious activities. By examining network logs, one can look for unusual network behavior, unauthorized access attempts, or unusual data transfers. Network intrusion detection systems (NIDS) play a crucial role in monitoring network traffic and identifying known network-based threats. These systems can detect patterns or signatures of common network attacks, including DDoS attacks, port scanning, or suspicious network connections. Additionally, performing deep packet inspection allows for a thorough examination of network packets. By analyzing packet headers, payloads, and protocols, it becomes possible to identify indicators of network-based threats. This comprehensive analysis helps in identifying malicious activities or anomalies, contributing to an effective network security strategy.
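As a small illustration of log-based detection, the sketch below flags possible port scanning by counting how many distinct destination ports each source address contacts. It is a deliberately simple heuristic, not how any particular NIDS works; the log format, the addresses (taken from documentation-reserved ranges), and the threshold are assumptions.

```python
from collections import defaultdict


def find_port_scanners(connections, max_distinct_ports=10):
    """Return source addresses that contacted more than `max_distinct_ports` ports."""
    ports_by_source = defaultdict(set)
    for src_ip, dst_port in connections:
        ports_by_source[src_ip].add(dst_port)
    return sorted(
        ip for ip, ports in ports_by_source.items() if len(ports) > max_distinct_ports
    )


# Hypothetical log: one ordinary client and one host probing 25 consecutive ports.
log = [("192.0.2.10", 443)] * 5 + [("192.0.2.10", 80)]
log += [("198.51.100.7", port) for port in range(20, 45)]

print(find_port_scanners(log))
```

A production detector would typically also bound the count by a time window and by destination host, since a busy but legitimate client may touch many ports over a long period.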

Software-based threat identification involves various techniques to detect and address potential threats originating from software components within a CPS system. Analyzing system logs and event data is crucial in this process, as it allows for the review of activities, error messages, and unauthorized access attempts that may indicate a software-based threat. Additionally, conducting malware analysis plays a significant role in identifying potential threats. Suspicious files or programs can be analyzed using antivirus software, sandboxing techniques, or other malware analysis tools to identify any malicious code or behavior. Regular vulnerability assessments and scans are essential to identify known software vulnerabilities that attackers could exploit. By proactively identifying vulnerabilities, it becomes possible to address potential entry points for software-based threats.

ML-based threat identification focuses on detecting and addressing threats that specifically target machine learning models deployed within a CPS system. Analyzing the output and predictions of the ML model is essential in this process. By examining the model’s classifications, false positives or negatives, and instances where the model may be manipulated or attacked, it becomes possible to identify ML-based threats. Implementing techniques to detect and mitigate adversarial attacks is crucial. This can involve monitoring for model evasion attempts, analyzing input data for adversarial perturbations, or employing anomaly detection techniques specifically designed for ML-based threats. Real-time monitoring of the model’s performance is also vital. Tracking metrics such as accuracy, precision, recall, and F1 score helps identify sudden drops in performance that could indicate an ML-based attack or model degradation. By actively monitoring and analyzing the ML model’s behavior, it becomes possible to identify and mitigate ML-based threats in the CPS system.
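Monitoring for sudden drops in model performance can be sketched as a rolling-window check. The class below is an illustrative assumption, not a component described in the surveyed work: it presumes that ground-truth labels eventually become available (for example from incident reports), and the baseline, window size, and tolerated drop are hypothetical values.

```python
from collections import deque


class PerformanceMonitor:
    """Tracks rolling accuracy and signals when it falls well below a baseline."""

    def __init__(self, baseline_accuracy, window=20, max_drop=0.10):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        self.outcomes = deque(maxlen=window)  # keeps only the most recent results

    def record(self, prediction, truth):
        """Store one labelled outcome; return True if an alert should be raised."""
        self.outcomes.append(prediction == truth)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        rolling = sum(self.outcomes) / len(self.outcomes)
        return self.baseline - rolling > self.max_drop


monitor = PerformanceMonitor(baseline_accuracy=0.95)
alerts = [monitor.record(1, 1) for _ in range(20)]   # 20 correct predictions
alerts += [monitor.record(0, 1) for _ in range(5)]   # then 5 missed attacks in a row
print(alerts)
```

The alert stays silent while predictions are correct and fires only once enough recent misses accumulate in the window, which reduces the chance of reacting to a single isolated error.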

AI brings significant benefits to address security and privacy concerns in CPS. It offers capabilities for threat detection and prevention, intrusion detection and response, anomaly detection, vulnerability assessment, predictive maintenance, privacy preservation, behavior analytics, access control, and security analytics. By leveraging these AI-empowered solutions, CPSs can strengthen their security posture, detect and respond to threats in real-time, preserve privacy, and ensure the robustness and resilience of their systems.

AI plays a crucial role in addressing security and privacy concerns in CPS. AI-powered systems offer a range of capabilities to enhance CPS security. These systems aid in threat detection and prevention by analyzing network traffic, system logs, and sensor data. Machine learning algorithms continuously learn and adapt, enabling proactive identification of both known and emerging security threats. AI-based intrusion detection systems monitor network behavior in real-time, quickly responding to unauthorized access attempts, malicious activities, and intrusion incidents. Furthermore, AI enables anomaly detection, allowing the system to identify deviations from normal behavior patterns. This capability contributes to early detection and response to potential security breaches or privacy violations. Additionally, AI facilitates vulnerability assessment, automatically scanning and evaluating CPS components for potential weaknesses. By identifying vulnerabilities, security measures can be implemented to mitigate risks effectively. Predictive maintenance using AI algorithms can estimate potential failures or security vulnerabilities by analyzing sensor data and system logs. Timely identification and resolution of these issues help strengthen the overall security and privacy of the CPS.

Moreover, AI techniques are invaluable in preserving privacy within CPS environments. Differential privacy, a widely used technique [38], adds noise to data, safeguarding the privacy of individuals or sensitive information while still providing valuable insights. Behavior analytics, powered by AI, enables the detection of suspicious activities or deviations from normal patterns, and thus the identification of potential security breaches or privacy violations. Access control and authentication mechanisms are strengthened through AI, leveraging techniques such as facial recognition, voice recognition, and behavioral biometrics for secure identity verification. AI also plays a vital role in security analytics and incident response. AI-powered security analytics platforms can aggregate and analyze data from various security sources, providing actionable insights to security teams. This accelerates incident response and supports informed decision-making during security incidents. By leveraging AI, CPS environments can enhance their security and privacy safeguards, helping to mitigate risks and protect critical systems and sensitive data.
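To make the noise-adding idea behind differential privacy concrete, the sketch below releases the mean of a set of sensor readings using the Laplace mechanism. It is an illustrative example only: the function names, the clipping bounds, and the assumption that the number of readings is public are choices made here, not details taken from [38].

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng=None):
    """Release the mean of `values` with epsilon-differential privacy.

    Assumptions: each reading is clipped to [lower, upper] and the number
    of readings is treated as public, so changing one reading moves the
    mean by at most (upper - lower) / n.
    """
    rng = rng or random.Random()
    clipped = [min(max(v, lower), upper) for v in values]
    n = len(clipped)
    sensitivity = (upper - lower) / n
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical heart-rate readings from a wearable device.
readings = [72, 75, 71, 90, 68, 77]
print(private_mean(readings, lower=40, upper=180, epsilon=1.0))
```

A smaller `epsilon` gives stronger privacy but a noisier result, which is the trade-off between protection and "valuable insights" mentioned above.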

Table 4.

Security/Privacy Issues and ML Techniques in CPS.


| Type | Security/Privacy Issues | ML Techniques |
| --- | --- | --- |
| Network-based Threats [13,15,18,19,20,21,22,23,25,27,31] | Intrusion Attacks, Denial of Service (DOS)/Spoofing, Cyberattacks, Vessel Trajectory Attacks | Support Vector Machine, Decision Tree, Convolutional Neural Network, Random Forest, K-Nearest Neighbor, Linear Regression, Long short-term memory - Recurrent Neural Network, Back-Propagation Neural Network, Artificial Neural Network, Naive Bayes, K-means, Deep Learning |
| Software-based Threats [10,12,14,17,18,19,21,24,27,30] | Data Breaches, Anomaly detection, Malicious Attacks, Malware, False Data Injections | Support Vector Machine, Decision Tree, K-Nearest Neighbor, Naive Bayes, Digital Transformation, Random Forest, Deep Learning, Artificial Feed Forward, Crossover XG Boost Algorithm |
| ML-based Threats [14,26] | ML-Powered Attacks | Adversarial Machine Learning |

5. AI Techniques used to address the security and privacy issues

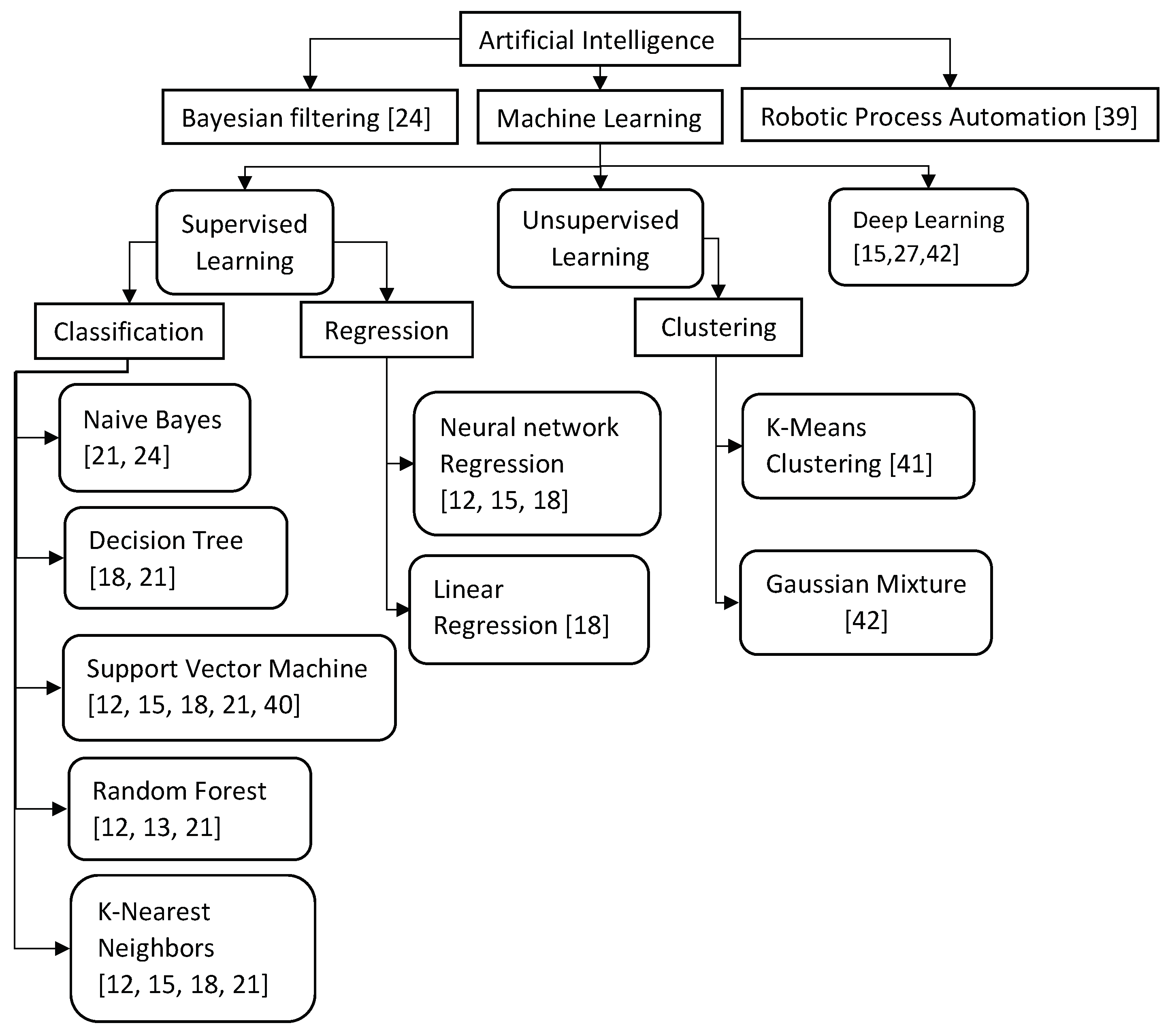

This section answers the third research question: how can AI help in addressing the security and privacy concerns in CPS? A taxonomy of AI methods is presented to determine which techniques are predominantly used and to identify gaps in how security concerns are addressed. The AI techniques used to protect data in CPS are shown in Figure 4. The shortcomings of AI are discussed in the next section.

AI is a computer science discipline that aims to enable machines to think and behave like humans using methods such as machine learning, deep learning, game theory, optimization theory, and evolutionary algorithms. Bayesian filtering [24] and robotic automation [39] are being used to protect sensitive data in CPSs. Bayesian filtering is a statistical technique that detects anomalies and cyber-attacks by comparing incoming sensor data to a model of what the data should look like. It can identify and filter out corrupted data, thus helping to improve the accuracy of the system. Robotic automation, on the other hand, can be used to create a secure and isolated environment for the data. For example, robots can physically isolate the system, such as by removing external ports, to reduce the risk of unauthorized access. Robots can also monitor and regulate access to sensitive data, ensuring that only authorized personnel have access. Combining Bayesian filtering with robotic automation can provide a robust solution for protecting data in CPSs against cyber threats.
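The idea of comparing incoming sensor data against a model of expected behavior can be sketched with a one-dimensional Kalman filter, one of the simplest Bayesian filters. This is a minimal illustration, not the method of [24]: the constant-state model, the variance values, and the three-standard-deviation threshold are all assumptions chosen for the example.

```python
def kalman_anomalies(readings, process_var=1e-3, sensor_var=0.05, threshold=3.0):
    """Return indices of readings that deviate too far from the filter's prediction.

    Assumes a slowly varying scalar state (e.g. a tank level or temperature)
    observed with Gaussian sensor noise of variance `sensor_var`.
    """
    estimate, variance = readings[0], sensor_var
    flagged = []
    for i, z in enumerate(readings[1:], start=1):
        # Predict: the state is assumed constant, so only uncertainty grows.
        prior_var = variance + process_var
        innovation = z - estimate
        innovation_std = (prior_var + sensor_var) ** 0.5
        if abs(innovation) > threshold * innovation_std:
            # Reading is inconsistent with the model: flag it and do not
            # let it corrupt the state estimate.
            flagged.append(i)
            variance = prior_var
            continue
        # Update: blend prediction and measurement using the Kalman gain.
        gain = prior_var / (prior_var + sensor_var)
        estimate += gain * innovation
        variance = (1.0 - gain) * prior_var
    return flagged


# Hypothetical temperature trace with one injected value at index 4.
trace = [20.0, 20.1, 19.9, 20.0, 35.0, 20.1, 19.95]
print(kalman_anomalies(trace))
```

Skipping the update for flagged readings is the "filter out corrupted data" step: the estimate keeps tracking the plausible signal while the suspicious value is reported separately.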

In addressing security and privacy issues in CPS, several AI techniques are employed, with supervised machine learning algorithms being the most prevalent, particularly classification algorithms [12,13,15,18,21,24,40]. Classification algorithms can be used to train models that label data as normal or anomalous. For example, they can identify abnormal network traffic, which could be indicative of a cyberattack, or detect malware that could compromise the security of the CPS. These algorithms can also protect privacy in CPS by identifying and classifying sensitive data that should not be shared with unauthorized parties. For instance, classification algorithms can be trained to recognize personal information, such as social security numbers or credit card numbers, and prevent it from being transmitted outside a secure network. Classification algorithms can therefore be a powerful tool for detecting and preventing security and privacy issues in CPS. By analyzing data and identifying patterns, they can help ensure the integrity and safety of critical infrastructure systems. Regression algorithms such as neural network regression [12] and linear regression [18] are also used to detect cyberattacks.
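As a small illustration of labeling traffic as normal or anomalous, the sketch below implements a K-Nearest Neighbor classifier, one of the techniques listed in Table 4. The two features (requests per second and fraction of failed logins), the training samples, and the function name `knn_predict` are hypothetical and serve only to show the mechanics.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training samples."""
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical labeled traffic: (requests per second, failed-login fraction).
train_x = [(10, 0.2), (12, 0.1), (11, 0.3), (95, 0.9), (100, 0.8), (90, 0.95)]
train_y = ["normal", "normal", "normal", "attack", "attack", "attack"]

print(knn_predict(train_x, train_y, (98, 0.85)))
print(knn_predict(train_x, train_y, (9, 0.15)))
```

In practice the features would usually be scaled to comparable ranges first, since an unscaled feature with large values dominates the distance computation.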

Clustering algorithms are commonly used in CPS security and privacy to identify patterns and group similar data points. Commonly used clustering algorithms in this domain include K-means clustering [41] and the Gaussian Mixture Model (GMM) [42]. K-means partitions the data points into K distinct clusters based on their similarity and is commonly used in intrusion detection systems to identify anomalous network traffic. GMM is another clustering algorithm used in unsupervised learning. It models the data as a mixture of several Gaussian distributions and estimates the parameters of each distribution to cluster the data, which helps identify anomalies in CPSs. Reinforcement learning algorithms [43,44] can be used in CPS security and privacy to develop autonomous decision-making systems that respond to changing environments and emerging threats. These algorithms learn from feedback and reward signals generated by the environment to adapt and improve their decision-making over time. For example, when a CPS is under attack, reinforcement learning algorithms can automatically adjust security measures to mitigate the effects of the attack. Reinforcement learning can also be used to develop adaptive intrusion detection and response systems that learn from past attacks and update their responses accordingly. By leveraging the flexibility and adaptability of reinforcement learning, it is possible to develop more efficient and effective security and privacy solutions for CPSs.
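The use of K-means for spotting anomalous traffic, mentioned earlier in this section, can be sketched as follows: cluster historical observations, then score a new observation by its distance to the nearest centroid. The deterministic initialization from the first K points, the sample data, and the helper names are assumptions made to keep the example short and reproducible.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain K-means on tuples; centroids start at the first k points (assumption)."""
    centroids = [points[i] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster; keep it if the cluster is empty.
        centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids


def anomaly_score(point, centroids):
    """Distance to the nearest centroid; large values suggest unusual behavior."""
    return min(math.dist(point, c) for c in centroids)


# Hypothetical two-feature traffic summaries forming two normal operating modes.
history = [(1.0, 1.0), (5.0, 5.0), (1.2, 0.9), (0.9, 1.1), (5.1, 4.9), (4.9, 5.2)]
centroids = kmeans(history, k=2)
print(anomaly_score((1.1, 1.0), centroids))
print(anomaly_score((9.0, 0.0), centroids))
```

The threshold that separates normal from anomalous scores is not fixed by the algorithm and would need to be chosen from validation data.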

Deep learning is a subfield of machine learning that is frequently applied in a supervised setting, although it is not limited to it. Unlike traditional machine learning algorithms, deep learning models use artificial neural networks composed of multiple layers to learn from data [45]. Deep learning can be used to detect and prevent cyberattacks [33]. By training deep learning models on large datasets of historical attacks and their corresponding features, such as network traffic patterns and system logs, these models can learn to recognize patterns and anomalies that may indicate an ongoing or potential attack. The multiple layers allow complex relationships and dependencies in the data to be captured, potentially leading to more accurate and robust detection. Deep learning models can also be used for anomaly detection in sensor data, helping to identify abnormal behavior that may indicate a physical attack or malfunction in the system. However, deep learning models can themselves be vulnerable to adversarial attacks.

Adversarial machine learning (AML) is a subfield of machine learning that aims to detect and defend against attacks on machine learning models. To protect the data in CPSs, AML algorithms are used to identify and mitigate threats to the system. One common type of attack is the adversarial attack, in which an attacker intentionally modifies the input data to mislead the machine learning model. One defensive approach is to introduce adversarial examples into the training data to improve the model’s robustness against such attacks. Another approach is to use AML algorithms to identify and classify potential attacks, allowing the system to take appropriate action to defend against them. AML algorithms are therefore an important tool for enhancing the security and privacy of CPSs and ensuring their resilience against evolving threats.
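To show how a small, deliberate change to the input can mislead a model, the sketch below perturbs an input to a logistic-regression detector using the fast gradient sign method (FGSM). FGSM is used here only as a well-known illustrative attack and is not named in the reviewed studies; the weights, the input, and the perturbation size `epsilon` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def fgsm_example(weights, bias, x, y, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss for label y."""
    p = predict_proba(weights, bias, x)
    # For logistic regression with log-loss, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * w for w in weights]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


# Hypothetical trained detector and a sample it classifies correctly as class 1.
weights, bias = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1

x_adv = fgsm_example(weights, bias, x, y, epsilon=0.6)
print(predict_proba(weights, bias, x))      # above 0.5: classified as class 1
print(predict_proba(weights, bias, x_adv))  # below 0.5: prediction has flipped
```

Adding pairs such as `(x_adv, y)` to the training set and retraining is one simple form of the adversarial-training defense described above.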

6. Discussion

6.1. Research Significance and Limitations

The classification of security threats is significant because it provides an organized overview of potential risks in CPS. It raises awareness, enables risk assessment, and helps in secure CPS design and development. The classification aids incident response and recovery by guiding targeted actions based on threat categories. It facilitates effective communication and collaboration among stakeholders, fostering a common understanding and knowledge sharing. Ultimately, the classification enhances the security and resilience of CPS by guiding proactive measures and promoting a secure environment.

The flowchart explains the step-by-step process of identifying the different security threats using machine learning algorithms. By exploring the role of AI in CPS security, this research aims to enhance the protection of CPS against potential threats, mitigate privacy risks, enable proactive defense mechanisms, improve incident response and recovery, and promote trust in CPS deployments. The application of AI techniques can contribute to developing advanced security strategies, privacy-preserving mechanisms, and real-time threat detection, ultimately ensuring CPS applications’ reliability, resilience, and trustworthiness.

The recommendation is to prioritize research on unsupervised, reinforcement, and deep learning techniques for CPS applications, as there is currently limited evidence in the literature. Additionally, as ML-based attacks become more prevalent, research is needed to focus on developing robust and secure AI systems. Researchers are advised to define attacks in specific terms instead of general terms. This means that they should provide a detailed description of the attack instead of using broad, vague terms to describe attacks. This research does not include the research related to the blockchain, as the focus was explicitly on using AI techniques to handle security and privacy concerns in the CPS.

6.2. Challenges and Implementation Issues

This section discusses the challenges and implementation issues related to security and privacy in CPS.

6.2.1. Shortcomings of AI

While AI has the potential to improve performance in CPS, it has several shortcomings that need to be addressed. One of the biggest challenges is the lack of transparency in the decision-making process: AI often works as a black box, and the user is not always aware of how it works. AI algorithms can be complex and difficult to understand, which makes it hard to explain why a particular decision was made. In mission-critical systems, it is essential that decisions be explainable and accountable. Additionally, AI algorithms rely on data to learn and make decisions. In CPS, data is heterogeneous, so its quality can vary and affect the reliability of AI models. AI algorithms are also vulnerable to cyber-attacks, which can compromise the safety of the CPS [26]. CPS devices generate huge volumes of data, making it challenging to scale AI algorithms accordingly. Moreover, AI algorithms require significant computational resources.

6.2.2. Federated Learning in Edge AI for CPS

Federated learning in Edge AI for CPS refers to the use of distributed machine learning techniques that allow machine learning models to be trained using data from edge devices in a decentralized and collaborative manner. CPS are systems that integrate physical and computational components, generating vast amounts of data that can be used to improve system performance and reliability [46]. However, collecting and processing this data can be challenging, especially in large-scale systems that are distributed across multiple locations. Federated learning provides a solution to this problem by allowing machine learning models to be trained using data that remains on the local devices where it was generated. In Edge AI for CPS, federated learning can be used to train machine learning models on data generated by sensors and devices located at the edge of the network. By keeping data local, federated learning can reduce the amount of data that needs to be transmitted over the network, which can be important in systems with limited bandwidth or high communication costs. By distributing the learning process across multiple edge devices, federated learning can improve the scalability of machine learning models, allowing them to adapt to changing conditions and improve system performance in real-time. Therefore, federated learning in Edge AI for CPS provides a flexible and scalable approach to machine learning that can help improve the performance and reliability of CPS while reducing communication costs and preserving user privacy. However, federated learning in a distributed environment increases the complexity and maintenance of CPS. In heterogeneous distributed CPS, variability in storage capacity, computational power, and energy consumption poses challenges for developing federated models that can effectively execute across multiple devices [5].
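The training pattern described in this subsection, where raw data stays on each device and only model parameters are shared, can be sketched with a simple weighted-averaging scheme in the style of federated averaging. The one-dimensional linear model, the learning rate, the round count, and the two hypothetical edge devices are assumptions chosen to keep the example self-contained.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one device for the model y = w*x + b."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum((w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum((w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Average client parameters, weighting each client by its number of samples."""
    total = sum(client_sizes)
    return tuple(
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    )


def train(clients, rounds=200):
    """Server loop: broadcast the global model, collect local updates, aggregate."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Only the updated parameters leave each device, never the raw data.
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


# Two hypothetical edge devices whose local data follow y = 2x + 1.
device_a = [(0.0, 1.0), (1.0, 3.0)]
device_b = [(2.0, 5.0), (3.0, 7.0), (1.0, 3.0)]
print(train([device_a, device_b]))
```

In this sketch each round transmits two numbers per device regardless of how much data the device holds, which reflects the communication saving noted above. Real deployments would also have to handle the device heterogeneity discussed at the end of the subsection.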

6.2.3. Beyond 5G technologies

Implementing CPS with beyond 5G technology is an attractive option for many application domains. However, there are several challenges associated with it. Beyond 5G network architectures rely on more distributed networks, making the system complex and requiring significant investment in network infrastructure [47,48]. Data management is another challenge, as future CPS require efficient systems to collect, process, store, analyze, and visualize data. Developing such systems to handle larger and more complex datasets is expensive. Since beyond 5G is in its early stages of development, there is no clear standardization framework for the technology, yet ensuring that different systems are compatible and interoperable is essential. Implementing CPS with beyond 5G technology will therefore require a significant investment in research, development, and infrastructure.

6.2.4. Regulatory and legal compliance

CPS applications must comply with a range of regulatory and legal requirements, including safety standards, privacy laws, and data protection regulations. Compliance with these requirements can be complex and time-consuming, and can add significant costs to the development and deployment of CPS [49]. For example, in the healthcare industry, CPS must comply with the Health Insurance Portability and Accountability Act (HIPAA), which sets strict requirements for the protection of patient data. Another example is the automotive industry, where CPS must comply with safety standards such as ISO 26262, which provides a framework for the development of safety-critical systems in vehicles. This standard requires a systematic approach to safety engineering, including hazard analysis and risk assessment, as well as extensive testing and verification. In addition to industry-specific regulations, CPS must also comply with more general legal requirements such as data protection regulations and privacy laws. For example, the General Data Protection Regulation (GDPR) in Europe sets strict rules for the collection, use, and storage of personal data, including data generated by CPS. Compliance with these regulations and standards can be challenging, as it requires a deep understanding of the legal and regulatory landscape, as well as significant investment in compliance processes and technologies [49].

7. Conclusion and Future Research

In conclusion, the classification of security threats in CPS plays a crucial role in enhancing these systems’ overall security and resilience. It provides an organized overview of potential risks, raising awareness and enabling risk assessment. This classification framework aids in designing and developing secure CPS by guiding targeted actions based on threat categories. It facilitates communication and collaboration among stakeholders, fostering a common understanding and promoting knowledge sharing. The research presented here explores the role of AI in CPS security, aiming to enhance the protection of CPS against potential threats and mitigate privacy risks. Machine learning algorithms and AI techniques make it possible to develop advanced security strategies, privacy-preserving mechanisms, and real-time threat detection. This research thereby contributes to the reliability, resilience, and trustworthiness of CPS deployments.

To further advance the field, future research should prioritize investigating unsupervised, reinforcement, and deep learning techniques for CPS applications, as limited evidence exists in the literature. With the rise of ML-based attacks, developing robust and secure AI systems to safeguard CPS is crucial. Researchers are advised to define attacks in specific terms, providing detailed descriptions rather than broad and vague terms. This approach will lead to a better understanding of attacks and enable the development of effective defense mechanisms. It is important to note that this research does not encompass the study of blockchain concerning CPS. This work focused on utilizing AI techniques to address security and privacy concerns in CPS. Further exploration of blockchain technology and its potential contributions to CPS security would be an opportunity for future investigation. Overall, this study sheds light on CPS’s current security and privacy issues and provides insights into potential solutions and areas for further research.

References

- Tahsien, S.M.; Karimipour, H.; Spachos, P. Machine learning based solutions for security of Internet of Things (IoT): A survey. Journal of Network and Computer Applications 2020, 161, 102630. [Google Scholar] [CrossRef]

- Yampolskiy, M.; Horvath, P.; Koutsoukos, X.D.; Xue, Y.; Sztipanovits, J. Systematic analysis of cyber-attacks on CPS-evaluating applicability of DFD-based approach. 2012 5th International Symposium on Resilient Control Systems. IEEE, 2012, pp. 55–62. [CrossRef]

- Singh, S.; Yadav, N.; Chuarasia, P.K. A review on cyber physical system attacks: Issues and challenges. 2020 International Conference on Communication and Signal Processing (ICCSP). IEEE, 2020, pp. 1133–1138. [CrossRef]

- Cyber-Physical Systems (CPS) Market Size & Industry Analysis. https://www.zionmarketresearch.com/report/cyber-physical-systems-market/, 2022. [Online; accessed 2-April-2023].

- Salau, B.; Rawal, A.; Rawat, D.B. Recent advances in artificial intelligence for wireless internet of things and cyber-physical systems: A comprehensive survey. IEEE Internet of Things Journal 2022. [Google Scholar] [CrossRef]

- Vlachos, V.; Stamatiou, Y.C.; Nikoletseas, S. The Privacy Flag Observatory: A Crowdsourcing Tool for Real Time Privacy Threats Evaluation. Journal of Cybersecurity and Privacy 2023, 3, 26–43. [Google Scholar] [CrossRef]

- Karale, A. The Challenges of IoT Addressing Security, Ethics, Privacy, and Laws. Internet of Things 2021, 15, 100420. [Google Scholar] [CrossRef]

- SolarWinds hack explained: Everything you need to know. https://www.techtarget.com/whatis/feature/SolarWinds-hack-explained-Everything-you-need-to-know/, 2022. [Online; accessed 2-April-2023].

- Bour, G.; Bosco, C.; Ugarelli, R.; Jaatun, M.G. Water-Tight IoT–Just Add Security. Journal of Cybersecurity and Privacy 2023, 3, 76–94. [Google Scholar] [CrossRef]

- Bharathi, V.; Vinoth Kumar, C. A real time health care cyber attack detection using ensemble classifier. Computers and Electrical Engineering 2022, 101, 108043. [CrossRef]

- Cheh, C.; Keefe, K.; Feddersen, B.; Chen, B.; Temple, W.G.; Sanders, W.H. Developing Models for Physical Attacks in Cyber-Physical Systems. Proceedings of the 2017 Workshop on Cyber-Physical Systems Security and PrivaCy; Association for Computing Machinery: New York, NY, USA, 2017; CPS ’17, p. 49–55. [CrossRef]

- Ulybyshev, D.; Yilmaz, I.; Northern, B.; Kholodilo, V.; Rogers, M. Trustworthy Data Analysis and Sensor Data Protection in Cyber-Physical Systems. Proceedings of the 2021 ACM Workshop on Secure and Trustworthy Cyber-Physical Systems; Association for Computing Machinery: New York, NY, USA, 2021; SAT-CPS ’21, p. 13–22. [CrossRef]

- Colelli, R.; Magri, F.; Panzieri, S.; Pascucci, F. Anomaly-Based Intrusion Detection System for Cyber-Physical System Security. 2021 29th Mediterranean Conference on Control and Automation (MED) 2021, pp. 428–434. [CrossRef]

- Cali, U.; Kuzlu, M.; Sharma, V.; Pipattanasomporn, M.; Catak, F.O. Internet of Predictable Things (IoPT) Framework to Increase Cyber-Physical System Resiliency. ArXiv 2021, abs/2101.07816. [CrossRef]

- Ahmed Jamal, A.; Mustafa Majid, A.A.; Konev, A.; Kosachenko, T.; Shelupanov, A. A review on security analysis of cyber physical systems using Machine learning. Materials Today: Proceedings 2021. [Google Scholar] [CrossRef]

- Khan, M.T.; Serpanos, D.; Shrobe, H.; Yousuf, M.M. Rigorous Machine Learning for Secure and Autonomous Cyber Physical Systems. 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), 2020, Vol. 1, pp. 1815–1819. [CrossRef]

- Mboweni, I.V.; Abu-Mahfouz, A.M.; Ramotsoela, D.T. A Machine Learning approach to Intrusion Detection in Water Distribution Systems – A Review. IECON 2021 – 47th Annual Conference of the IEEE Industrial Electronics Society, 2021, pp. 1–7. [CrossRef]

- Pordelkhaki, M.; Fouad, S.; Josephs, M. Intrusion Detection for Industrial Control Systems by Machine Learning using Privileged Information. 2021 IEEE International Conference on Intelligence and Security Informatics (ISI), 2021, pp. 1–6. [CrossRef]

- Abbas, W.; Laszka, A.; Vorobeychik, Y.; Koutsoukos, X. Scheduling Intrusion Detection Systems in Resource-Bounded Cyber-Physical Systems. Proceedings of the First ACM Workshop on Cyber-Physical Systems-Security and/or PrivaCy; Association for Computing Machinery: New York, NY, USA, 2015; CPS-SPC ’15, p. 55–66. [CrossRef]

- Junejo, K.N.; Goh, J. Behaviour-Based Attack Detection and Classification in Cyber Physical Systems Using Machine Learning. Proceedings of the 2nd ACM International Workshop on Cyber-Physical System Security; Association for Computing Machinery: New York, NY, USA, 2016; CPSS ’16, p. 34–43. [CrossRef]

- Perrone, P.; Flammini, F.; Setola, R. Machine Learning for Threat Recognition in Critical Cyber-Physical Systems. 2021 IEEE International Conference on Cyber Security and Resilience (CSR), 2021, pp. 298–303. [CrossRef]

- Balduzzi, M.; Pasta, A.; Wilhoit, K. A security evaluation of AIS automated identification system. Proceedings of the 30th annual computer security applications conference, 2014, pp. 436–445. [CrossRef]

- Balduzzi, M.; Pasta, A.; Wilhoit, K. A Security Evaluation of AIS Automated Identification System. Proceedings of the 30th Annual Computer Security Applications Conference; Association for Computing Machinery: New York, NY, USA, 2014; ACSAC ’14, p. 436–445. [Primary Study]. [CrossRef]

- Feng, C.; Tian, P. Time Series Anomaly Detection for Cyber-Physical Systems via Neural System Identification and Bayesian Filtering. Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining; Association for Computing Machinery: New York, NY, USA, 2021; KDD ’21, p. 2858–2867. [CrossRef]

- Liu, R.W.; Liang, M.; Nie, J.; Deng, X.; Xiong, Z.; Kang, J.; Yang, H.; Zhang, Y. Intelligent Data-Driven Vessel Trajectory Prediction in Marine Transportation Cyber-Physical System. 2021 IEEE International Conferences on Internet of Things (iThings) and IEEE Green Computing & Communications (GreenCom) and IEEE Cyber, Physical & Social Computing (CPSCom) and IEEE Smart Data (SmartData) and IEEE Congress on Cybermatics (Cybermatics) 2021, pp. 314–321. [CrossRef]

- Li, J.; Yang, Y.; Sun, J.S.; Tomsovic, K.; Qi, H. ConAML: Constrained Adversarial Machine Learning for Cyber-Physical Systems. Proceedings of the 2021 ACM Asia Conference on Computer and Communications Security; Association for Computing Machinery: New York, NY, USA, 2021; ASIA CCS ’21, p. 52–66. [CrossRef]

- Sengan, S.; V, S.; V, I.; Velayutham, P.; Ravi, L. Detection of false data cyber-attacks for the assessment of security in smart grid using deep learning. Computers & Electrical Engineering 2021, 93, 107211. [Google Scholar] [CrossRef]

- Mohammadhassani, A.; Teymouri, A.; Mehrizi-Sani, A.; Tehrani, K. Performance evaluation of an inverter-based microgrid under cyberattacks. 2020 IEEE 15th International Conference of System of Systems Engineering (SoSE). IEEE, 2020, pp. 211–216. [CrossRef]

- Ashok, A.; Wang, P.; Brown, M.; Govindarasu, M. Experimental evaluation of cyber attacks on automatic generation control using a CPS security testbed. 2015 IEEE Power & Energy Society General Meeting. IEEE, 2015, pp. 1–5. [CrossRef]

- Mo, Y.; Sinopoli, B. Integrity Attacks on Cyber-Physical Systems. Proceedings of the 1st International Conference on High Confidence Networked Systems; Association for Computing Machinery: New York, NY, USA, 2012; HiCoNS ’12, p. 47–54. [CrossRef]

- Joo, M.; Seo, J.; Oh, J.; Park, M.; Lee, K. Situational Awareness Framework for Cyber Crime Prevention Model in Cyber Physical System. 2018 Tenth International Conference on Ubiquitous and Future Networks (ICUFN) 2018, pp. 837–842. [CrossRef]

- Haque, N.I.; Shahriar, M.H.; Dastgir, M.G.; Debnath, A.; Parvez, I.; Sarwat, A.; Rahman, M.A. A Survey of Machine Learning-based Cyber-physical Attack Generation, Detection, and Mitigation in Smart-Grid. 2020 52nd North American Power Symposium (NAPS), 2021, pp. 1–6. [CrossRef]

- Zhang, J.; Pan, L.; Han, Q.L.; Chen, C.; Wen, S.; Xiang, Y. Deep Learning Based Attack Detection for Cyber-Physical System Cybersecurity: A Survey. IEEE/CAA Journal of Automatica Sinica 2022, 9, 377–391. [Google Scholar] [CrossRef]

- Hasan, Z.; Roy, N. Trending machine learning models in cyber-physical building environment: A survey. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 2021, 11. [Google Scholar] [CrossRef]

- Olowononi, F.O.; Rawat, D.B.; Liu, C. Resilient Machine Learning for Networked Cyber Physical Systems: A Survey for Machine Learning Security to Securing Machine Learning for CPS. IEEE Communications Surveys & Tutorials 2021, 23, 524–552. [Google Scholar] [CrossRef]

- Asghar, M.R.; Hu, Q.; Zeadally, S. Cybersecurity in industrial control systems: Issues, technologies, and challenges. Computer Networks 2019, 165, 106946. [Google Scholar] [CrossRef]

- Dhir, S.; Kumar, Y. Study of Machine and Deep Learning Classifications in Cyber Physical System. 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), 2020, pp. 333–338. [CrossRef]

- Hassan, M.U.; Rehmani, M.H.; Chen, J. Differential privacy techniques for cyber physical systems: a survey. IEEE Communications Surveys & Tutorials 2019, 22, 746–789. [Google Scholar]

- Beltrame, G.; Merlo, E.; Panerati, J.; Pinciroli, C. Engineering Safety in Swarm Robotics. Proceedings of the 1st International Workshop on Robotics Software Engineering; Association for Computing Machinery: New York, NY, USA, 2018; RoSE ’18, p. 36–39. [CrossRef]

- Rahman, M.; Chowdhury, M.A.; Rayamajhi, A.; Dey, K.C.; Martin, J.J. Adaptive Queue Prediction Algorithm for an Edge Centric Cyber Physical System Platform in a Connected Vehicle Environment. ArXiv 2017, abs/1712.05837. 10.48550/arXiv.1712.05837.

- Sahin, M.E.; Tawalbeh, L.; Muheidat, F. The Security Concerns On Cyber-Physical Systems And Potential Risks Analysis Using Machine Learning. Procedia Computer Science 2022, 201, 527–534. The 13th International Conference on Ambient Systems, Networks and Technologies (ANT) / The 5th International Conference on Emerging Data and Industry 4.0 (EDI40). [Google Scholar] [CrossRef]

- Padmajothi.; Iqbal, J.L.M. Review of machine learning and deep learning mechanism in cyber-physical system. International Journal of Nonlinear Analysis and Applications 2022, 13, 583–590. [CrossRef]

- Ibrahim, M.; Elhafiz, R. Security Analysis of Cyber-Physical Systems Using Reinforcement Learning. Sensors 2023, 23, 1634. [Google Scholar] [CrossRef]

- Uprety, A.; Rawat, D.B. Reinforcement learning for iot security: A comprehensive survey. IEEE Internet of Things Journal 2020, 8, 8693–8706. [Google Scholar] [CrossRef]

- Kaplan, H.; Tehrani, K.; Jamshidi, M. Fault diagnosis of smart grids based on deep learning approach. 2021 World Automation Congress (WAC). IEEE, 2021, pp. 164–169. [CrossRef]

- Olowononi, F.O.; Rawat, D.B.; Liu, C. Federated learning with differential privacy for resilient vehicular cyber physical systems. 2021 IEEE 18th Annual Consumer Communications & Networking Conference (CCNC). IEEE, 2021, pp. 1–5. [CrossRef]

- Bandi, A. A Review Towards AI Empowered 6G Communication Requirements, Applications, and Technologies in Mobile Edge Computing. 2022 6th International Conference on Computing Methodologies and Communication (ICCMC), 2022, pp. 12–17. [CrossRef]

- Bandi, A.; Yalamarthi, S. Towards Artificial Intelligence Empowered Security and Privacy Issues in 6G Communications. 2022 International Conference on Sustainable Computing and Data Communication Systems (ICSCDS), 2022, pp. 372–378. [CrossRef]

- Moongilan, D. 5G Internet of Things (IOT) near and far-fields and regulatory compliance intricacies. 2019 IEEE 5th World Forum on Internet of Things (WF-IoT). IEEE, 2019, pp. 894–898. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).