Submitted: 07 July 2023

Posted: 10 July 2023


Abstract

Keywords:

1. Introduction

1.1. Background: Definitions of interdependence

1.2. Harmonic Oscillators. A test of models

| Authors (year published) | Definition | Issues |
|---|---|---|
| Lewin (1942) (republished [4]) | Behaviour, dx/dt, occurs from the “totality of coexisting and interdependent forces in the social field that impinge on a person or group and make up the life space” (computational context; see [7]). Interdependence includes all effects that co-vary with the individual, giving an “interdependence of parts ... handled conceptually only with the mathematical concept of space and the dynamic concepts of tension and force” (p. xiii) that “holds the group together ... [making] the whole ... more than the sum of its parts” (p. 146). | A theoretical rather than a working construct. |
| Von Neumann and Morgenstern (1944) (p. 35, in [3]) | “The simplest game … is a two-person game where the sum of all payments is variable. This corresponds to a social economy with two participants and allows both for their interdependence and for variability of total utility with their behavior … exactly the case of bilateral monopoly.” | Assumed, like Spinoza [10] and Hume [11], that cognition and behavior are the same, and missed Nash’s solution that an equilibrium exists in a bounded space. |
| Nash (1950) [12] | In a two-person game, “a strategy counters another if the strategy of each player” occurs in a closed space until it reaches an “equilibrium point.” | Behavior is inferred [13]; fails when facing uncertainty or conflict [2], but may be recoverable with constraints (see [1]; also see [14]). |
| Kelley, Holmes, Kerr, Reis, Rusbult and van Lange [15] | “Three dimensions describe interdependence theory: mutual influence or dependence between two or more agents; conflict among their shared interests; and the relative power among them. Interdependence is defined based on specific decomposition of situations [e.g., with abstract representations such as the Prisoner’s Dilemma Game] to account for how mutual influence affects the outcome of their interactions to reach an outcome.” | After working with payoff matrices for decades, Kelley capitulated, complaining that situations, represented by a given (logical) matrix of outcomes, were overwhelmed by the effective matrix, which, he concluded, was based on the interaction and unknowable. For a given game’s structure, Kelley concluded that “the transformation from given to effective matrix is subjective to observers and subject to their interpretation error with no solution in hand” [16], which Jones said caused “bewildering complexities” in the laboratory (p. 33, [17]). |
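The equilibrium-point and given-versus-effective-matrix entries in the table above can be made concrete with a small sketch. The payoff numbers, the function names `pure_nash_equilibria` and `transform`, and the linear "weight on the partner's payoff" rule are all illustrative assumptions; they are not taken from Nash [12] or from Kelley and colleagues [15,16], and the transformation shown is only one hypothetical way a given matrix might become an effective one.

```python
from itertools import product

# Hypothetical Prisoner's Dilemma payoffs (row player, column player).
# Strategy 0 = cooperate, 1 = defect. The numbers are illustrative only.
GIVEN_MATRIX = {
    (0, 0): (3, 3),
    (0, 1): (0, 5),
    (1, 0): (5, 0),
    (1, 1): (1, 1),
}


def pure_nash_equilibria(payoffs, n_rows=2, n_cols=2):
    """Return strategy pairs where neither player gains by deviating alone."""
    equilibria = []
    for r, c in product(range(n_rows), range(n_cols)):
        row_payoff, col_payoff = payoffs[(r, c)]
        # The row player compares against every alternative row, column fixed.
        row_best = all(payoffs[(alt, c)][0] <= row_payoff for alt in range(n_rows))
        # The column player compares against every alternative column, row fixed.
        col_best = all(payoffs[(r, alt)][1] <= col_payoff for alt in range(n_cols))
        if row_best and col_best:
            equilibria.append((r, c))
    return equilibria


def transform(payoffs, weight_on_other):
    """Toy 'effective matrix' (an assumption): each player also values
    the partner's payoff with the given weight."""
    return {
        cell: (a + weight_on_other * b, b + weight_on_other * a)
        for cell, (a, b) in payoffs.items()
    }


if __name__ == "__main__":
    print("given matrix:", pure_nash_equilibria(GIVEN_MATRIX))
    print("effective matrix:", pure_nash_equilibria(transform(GIVEN_MATRIX, 1.0)))
```

Under these assumed numbers the given matrix has mutual defection as its only pure equilibrium, while the transformed matrix has mutual cooperation. An observer who sees only the given matrix would therefore mispredict behaviour, which is one reading of the interpretation problem described in the Issues column.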

1.3. Background: Justification for the rejection of Spinoza and Hume

OpenAI’s ChatGPT, Google’s Bard and Microsoft’s Sydney are marvels of machine learning. Roughly speaking, they take huge amounts of data, search for patterns in it and become increasingly proficient at generating statistically probable outputs – such as seemingly humanlike language and thought. These programs have been hailed as the first glimmers on the horizon of artificial general intelligence … That day may come, but its dawn is not yet breaking … [and] cannot occur if machine learning programs like ChatGPT continue to dominate the field of A.I. … The crux of machine learning is description and prediction; it does not posit any causal mechanisms or physical laws.

1.4. Background: Domain complexity of a team’s task

1.5. Background: Justification for the quantum model of interdependence

To date, most experiments have concentrated on single-particle physics and (nearly) non-interacting particles. But the deepest mysteries about quantum matter occur for systems of interacting particles, where new and poorly understood phases of matter can emerge. These systems are generally difficult to computationally simulate [45].

Another way of expressing the peculiar situation is: the best possible knowledge of a whole does not necessarily include the best possible knowledge of all its parts, even though they may be entirely separate and therefore virtually capable of being ‘best possibly known,’ i.e., of possessing, each of them, a representative of its own. The lack of knowledge is by no means due to the interaction being insufficiently known — at least not in the way that it could possibly be known more completely — it is due to the interaction itself. Attention has recently been called to the obvious but very disconcerting fact that even though we restrict the disentangling measurements to one system, the representative obtained for the other system is by no means independent of the particular choice of observations which we select for that purpose and which by the way are entirely arbitrary. It is rather discomforting that the theory should allow a system to be steered or piloted into one or the other type of state at the experimenter’s mercy in spite of his having no access to it.
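The point of the passage above, that complete knowledge of a whole need not include complete knowledge of its parts, can be checked numerically for the simplest case of two two-level systems. The sketch below is an illustration under stated assumptions: the state is a normalized pure state written as a|00> + b|01> + c|10> + d|11>, and the helper names `is_factorable` and `subsystem_entropy` are hypothetical. It relies on two standard facts: such a state is a product state exactly when ad - bc = 0, and the reduced density matrix of either part has trace 1 and determinant |ad - bc|^2.

```python
import math


def is_factorable(amplitudes, tol=1e-12):
    """True when a|00> + b|01> + c|10> + d|11> is a product of two one-part states."""
    a, b, c, d = amplitudes
    return abs(a * d - b * c) < tol


def subsystem_entropy(amplitudes):
    """Von Neumann entropy, in bits, of one part of a normalized two-part pure state."""
    a, b, c, d = amplitudes
    # The 2x2 reduced density matrix has trace 1 and determinant |ad - bc|^2,
    # so its eigenvalues follow from the quadratic formula.
    det = abs(a * d - b * c) ** 2
    disc = math.sqrt(max(0.0, 1.0 - 4.0 * det))
    eigenvalues = [(1.0 + disc) / 2.0, (1.0 - disc) / 2.0]
    return -sum(p * math.log2(p) for p in eigenvalues if p > 0.0)


if __name__ == "__main__":
    s = 1 / math.sqrt(2)
    product_state = (s, 0.0, s, 0.0)    # (|0> + |1>)/sqrt(2) combined with |0>
    entangled_state = (s, 0.0, 0.0, s)  # (|00> + |11>)/sqrt(2)
    for name, state in [("product", product_state), ("entangled", entangled_state)]:
        print(name, is_factorable(state), round(subsystem_entropy(state), 6))
```

In both cases the joint state is pure, so the whole is known as well as it can be. For the product state each part is also fully known (zero entropy), whereas for the entangled state each part alone carries one full bit of uncertainty.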

[T]he superposition of amplitudes ... is only valid if there is no way to know, even in principle, which path the particle took. It is important to realize that this does not imply that an observer actually takes note of what happens. It is sufficient to destroy the interference pattern, if the path information is accessible in principle from the experiment or even if it is dispersed in the environment and beyond any technical possibility to be recovered, but in principle still “out there.” The absence of any such information is the essential criterion for quantum interference to appear.
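The criterion in the passage above amounts to a rule about when to add amplitudes and when to add probabilities. The following sketch assumes an idealized two-path interferometer in which each path reaches the detector with amplitude of magnitude 1/2; the function name `detection_probability` and the `path_known` flag are hypothetical and stand in for whether which-path information exists anywhere, even in principle.

```python
import cmath
import math


def detection_probability(phase_difference, path_known):
    """Detection probability for two equal-magnitude paths (idealized sketch)."""
    amp1 = 0.5
    amp2 = 0.5 * cmath.exp(1j * phase_difference)
    if path_known:
        # Path information exists: add probabilities, so there is no cross term.
        return abs(amp1) ** 2 + abs(amp2) ** 2
    # Paths are indistinguishable: add amplitudes first, then square.
    return abs(amp1 + amp2) ** 2


if __name__ == "__main__":
    for phase in (0.0, math.pi / 2, math.pi):
        print(
            round(phase, 3),
            round(detection_probability(phase, path_known=False), 6),
            round(detection_probability(phase, path_known=True), 6),
        )
```

With indistinguishable paths the probability swings between 1 and 0 as the phase difference varies, which is the interference pattern. With path information present it stays at 1/2 for every phase, whether or not anyone reads that information out.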

2. Interdependence. Mathematics. Non-factorability leads to tradeoffs

2.1. Best-worst teams

2.2. Deception

2.3. Vulnerability

2.3.1. Non-factorability: The key characteristic

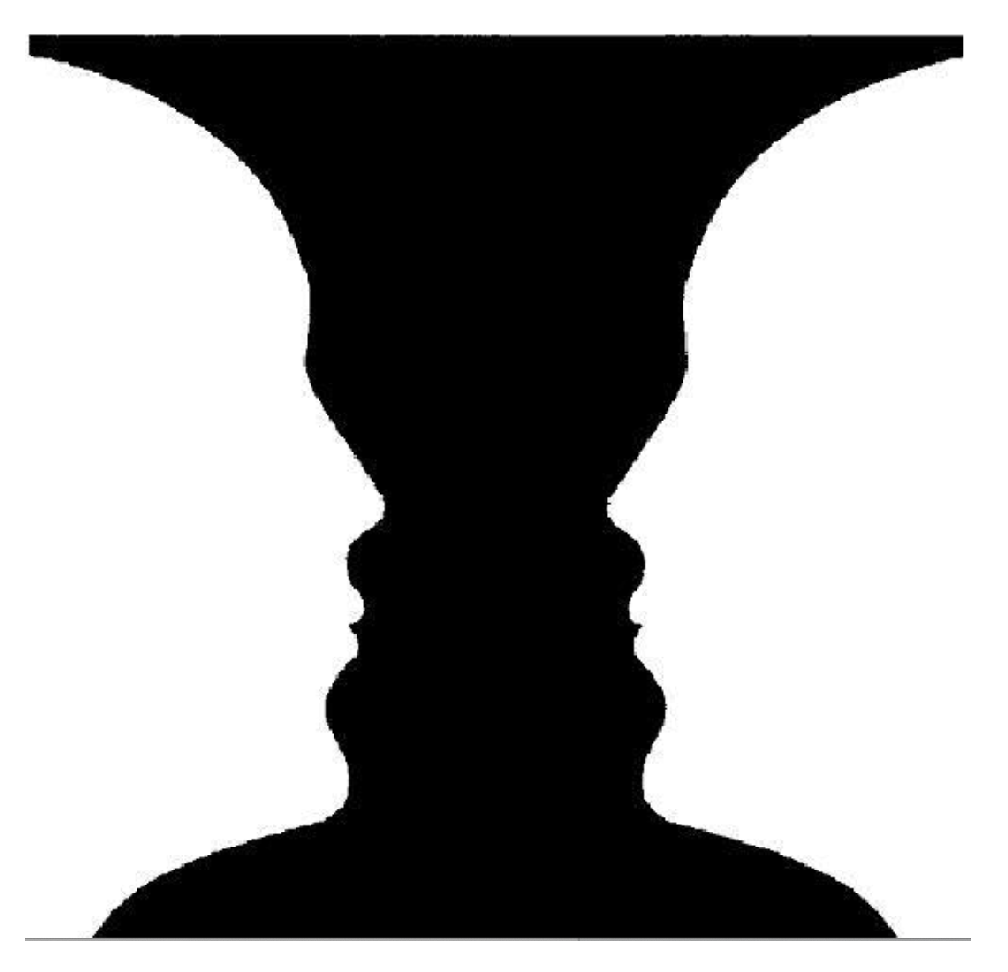

2.4. Bistable reality

2.5. Risk perception versus risk determination

2.6. Recovering rational choice, reducing complexity, and debate

2.7. Emotion

2.8. Innovation and oppression

You can’t get your game to the next level by just stealing our stuff. You’re going to have to innovate.

The press is now completely under state control and independent voices of dissent, like that of opposition leader Alexei Navalny, are quickly suppressed. Critics of the regime have been murdered both inside and outside the country.

Although China publicly denies engaging in economic espionage, Chinese officials will indirectly acknowledge behind closed doors that the theft of intellectual property from overseas is state policy.

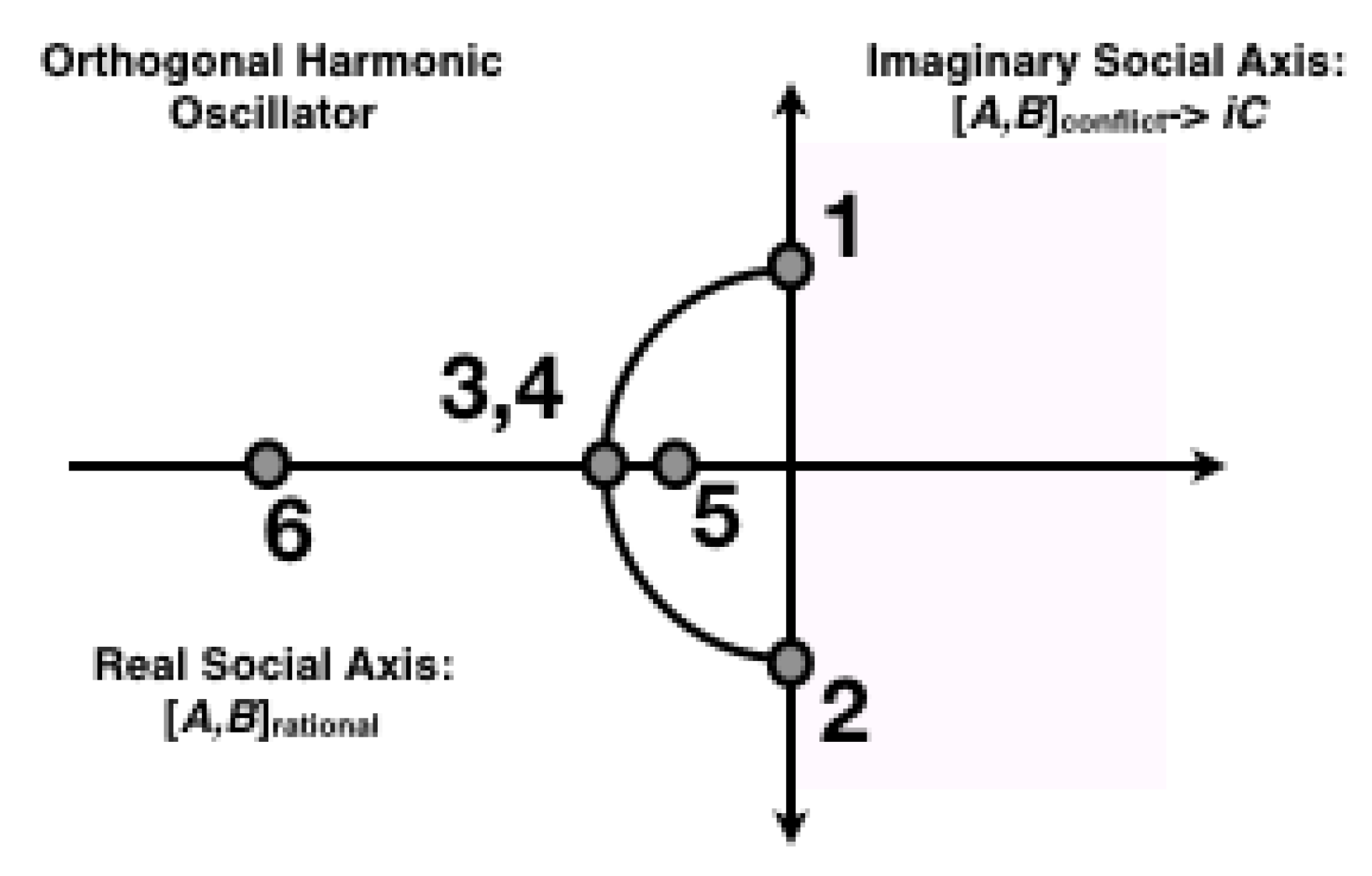

2.9. Orthogonality: Training and experience versus education

2.10. Oscillations

2.11. Decision Advantage (DA)

strategic advantage is the ability to rapidly and accurately anticipate and adapt to complex challenges … the key to intelligence-driven victories may not be the collection of objective ‘truth’ so much as the gaining of an information edge or competitive advantage over an adversary … one prerequisite for decision advantage is global awareness: the ability to develop, digest, and manipulate vast and disparate data streams about the world as it is today. Another requirement is strategic foresight: the ability to probe existing conditions and use the responses to consider alternative hypotheses and scenarios, and determine linkages and possibilities. …Secrecy, however, is only one technique that may lead to decision advantage; so may speed, relevance, or collaboration.

3. Discussion

4. Conclusions

5. Future Directions. Harmonic Oscillators

5.1. Harmonic Oscillators. A test of models

Acknowledgments

References

- Lawless, W.; Sofge, D.A.; Lofaro, D.; Mittu, R. Editorial. Interdisciplinary Approaches to the Structure and Performance of Interdependent Autonomous Human Machine Teams and Systems. Frontiers in Physics 2023.

- Mann, R. Collective decision making by rational individuals. PNAS 2018, 115, E10387–E10396.

- Von Neumann, J.; Morgenstern, O. Theory of games and economic behavior (originally published in 1944); Princeton University Press, 1953.

- Lewin, K. Field theory in social science. Selected theoretical papers; Harper and Brothers, 1951.

- Cooke, N.; Hilton, M.E. Enhancing the Effectiveness of Team Science; National Research Council, National Academies Press: Washington (DC), 2015.

- Clark, A. Supersizing the Mind: Embodiment, Action, and Cognitive Extension; Oxford University Press, 2010.

- Lawless, W.F.; Mittu, R.; Sofge, D.; Hiatt, L. Editorial (Introduction to the Special Issue), “Artificial intelligence (AI), autonomy and human-machine teams: Interdependence, context and explainable AI.” AI Magazine 2019, 40, 5–13.

- Cooke, N.; Lawless, W. Effective Human-Artificial Intelligence Teaming. In Engineering Science and Artificial Intelligence; Springer, 2021.

- Shapiro, L.; Spaulding, S. Embodied Cognition. In The Stanford Encyclopedia of Philosophy; Zalta, E.N., Ed.; 2021.

- Spinoza, B. The ethics. The Collected Writings of Spinoza, vol. 1; Princeton University Press, 1985.

- Hume, D. A Treatise of Human Nature, edited by L. A. Selby-Bigge, 2nd ed. revised by P. H. Nidditch; Clarendon Press, 1975.

- Nash, J. Equilibrium points in n-person games. PNAS 1950, 36, 48–49.

- Amadae, S. Rational choice theory. Political Science and Economics. Encyclopaedia Britannica 2017.

- Doctor, K.; Task, C.; Kejriwal, M.; colleagues. Toward Defining a Domain Complexity Measure Across Domains. AAAI 2022.

- Kelley, H.H.; Holmes, J.G.; Kerr, N.; Reis, H.; Rusbult, C.; van Lange, P. An atlas of interpersonal situations; Cambridge University Press, 2003.

- Kelley, H. Lewin, situations, and interdependence. Journal of Social Issues 1991, 47, 211–233.

- Jones, E. Major developments in five decades of social psychology. In D.T. Gilbert, S.T. Fiske and G. Lindzey, The Handbook of Social Psychology; McGraw-Hill, 1998; Volume 1, pp. 3–57.

- Nadler, S. Baruch Spinoza. In The Stanford Encyclopedia of Philosophy; Zalta, E.N., Ed.; 2022.

- Thagard, P. Rationality and science. In Handbook of rationality; Mele, A., Rawlings, P., Eds.; Oxford University Press, 2004; pp. 363–379.

- Schölkopf, B.; Locatello, F.; Bauer, S.; colleagues. Towards Causal Representation Learning. arXiv 2021.

- Bednar, R.; Peterson, S. Self-esteem: Paradoxes and innovations in clinical practice, 2nd ed.; American Psychological Association (APA), 1995.

- Baumeister, R.F.; Campbell, J.; Krueger, J.; Vohs, K. Exploding the self-esteem myth. Scientific American 2005, 292, 84–91.

- Blanton, H.; Klick, J.; Mitchell, G.; Jaccard, J.; Mellers, B.; Tetlock, P. Strong Claims and Weak Evidence: Reassessing the Predictive Validity of the IAT. Journal of Applied Psychology 2009, 94, 567–582.

- Paluck, E.; Porat, R.; Clark, C.; Green, D. Prejudice Reduction: Progress and Challenges. Annual Review of Psychology 2021, 72, 533–560.

- Leach, C. Editorial. Journal of Personality and Social Psychology: Interpersonal Relations and Group Processes 2021.

- Nosek, B. Estimating the reproducibility of psychological science. Science 2015, 349, 943.

- Perolat, J.; et al. Mastering the game of Stratego with model-free multiagent reinforcement learning. Science 2022, 378, 990–996.

- Klein, E. This Changes Everything. New York Times 2023.

- Zumbrun, J. ChatGPT Needs Some Help With Math Assignments. ‘Large language models’ supply grammatically correct answers but struggle with calculations. Wall Street Journal 2023.

- Chomsky, N. The False Promise of ChatGPT. New York Times 2023.

- Pearl, J. Reasoning with Cause and Effect. AI Magazine 2002, 23, 95–111.

- Pearl, J.; Mackenzie, D. AI Can’t Reason Why. The current data-crunching approach to machine learning misses an essential element of human intelligence. Wall Street Journal 2018.

- Lawless, W.F.; Mittu, R.; Sofge, D.; Russell, S., Eds. Autonomy and Artificial Intelligence: A threat or savior?; Springer, 2017; Chapter 1, Introduction.

- Davies, P. Does new physics lurk inside living matter? Physics Today 2021, 73.

- Berscheid, E.; Reis, H. Attraction and close relationships. In The handbook of social psychology, 4th ed.; Lawrence Erlbaum, 1998.

- Chen, B.; Huang, K.; Raghupathi, S.; colleagues. Automated discovery of fundamental variables hidden in experimental data. Nature Computational Science 2022, 2, 433–442.

- Whang, O. Can Intelligence Be Separated From the Body? Some researchers question whether A.I. can be truly intelligent without a body to interact with and learn from the physical world. New York Times 2023.

- Nasaw, D. U.S. Offers Payments to Families of Afghans Killed in August Drone Strike. State Department to support slain aid worker’s family’s effort to relocate to U.S., Pentagon says. Wall Street Journal 2021.

- Weinberg, S. The Trouble with Quantum Mechanics. The New York Review of Books 2017. Available online: http://www.nybooks.com.

- Endsley, M.; colleagues. Human-AI Teaming: State of the Art and Research Needs; National Research Council, National Academies Press: Washington (DC), 2021.

- Lawless, W. Interdependent Autonomous Human-Machine Systems: The Complementarity of Fitness, Vulnerability & Evolution. Entropy 2022, 24, 1308.

- Bohr, N. Causality and Complementarity. Philosophy of Science 1937, 4, 289–298.

- Bohr, N. Science and unity of knowledge. In Unity of knowledge; Leary, L., Ed.; Doubleday, 1955; pp. 44–62.

- Pais, A. Niels Bohr’s Times: In Physics, Philosophy, and Polity; Clarendon Press, 1991.

- Hazzard, K.; Gadway, B. Synthetic dimensions. Physics Today 2023, 62–63.

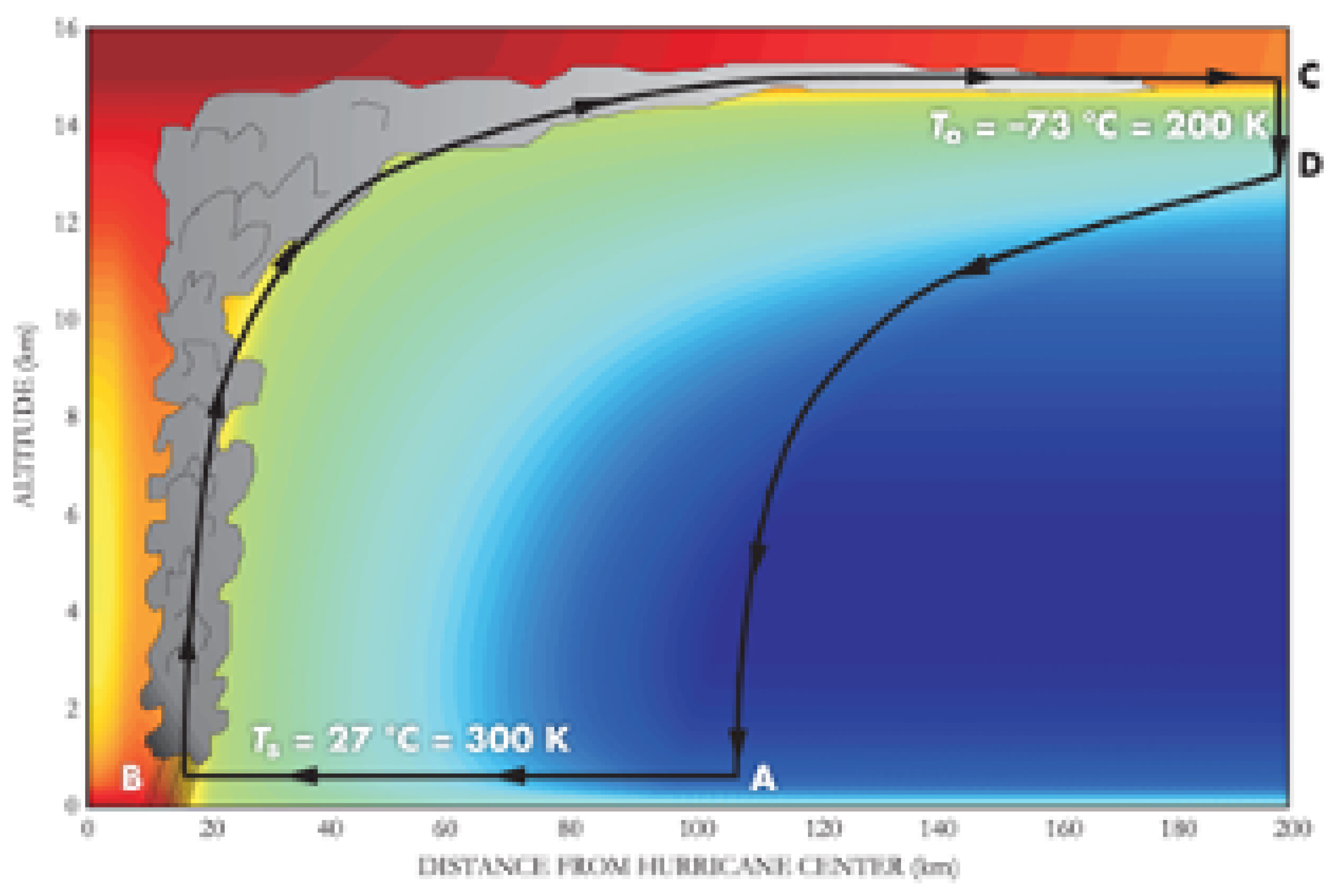

- Emanuel, K. Hurricanes: Tempests in a greenhouse. Physics Today 2006, 59, 74–75.

- Moskowitz, I. A Cost Metric for Team Efficiency. Frontiers in Physics 2022.

- Reiche, B.S. Between interdependence and autonomy: Toward a typology of work design modes in the new world of work. Human Resource Management Journal 2023, 1–17.

- Cummings, J. Team Science Successes and Challenges; NSF Workshop Fundamentals of Team Science and the Science of Team Science: Bethesda MD, 2015; June 2.

- Ponce de León, M.; Marom, A.; Engel, S.; colleagues. The primitive brain of early Homo. Science 2021, 372, 165–171.

- Sliwa, J. Toward collective animal neuroscience. Science 2022, 374, 397–398.

- Schrödinger, E. Discussion of Probability Relations Between Separated Systems. Proceedings of the Cambridge Philosophical Society 1935, 31, 555–563.

- Walden, D.; Roedler, G.; Forsberg, K.; Hamelin, R.; Shortell, T. Systems Engineering Handbook. A guide for system life cycle processes and activities (4th Edition); Volume INCOSE-TP-2003-002-04, John Wiley and Sons, 2015.

- Brillouin, L. Science and Information Theory; Academic Press, 1956.

- Hohman, Z.; Kuljian, O. Why people join groups; Oxford University Press, 2021.

- Mathieu, J.E.; Gallagher, P.; Domingo, M.; Klock, E. Embracing Complexity: Reviewing the Past Decade of Team Effectiveness Research. Annual Review of Organizational Psychology and Organizational Behavior 2019, 6, 17–46.

- Smith, A. An Inquiry into the Nature and Causes of the Wealth of Nations; University of Chicago Press, 1776/1977.

- Dimitrova, T.; Weis, A. The wave-particle duality of light. A demonstration. American Journal of Physics 2008, 76, 137–142.

- Zeilinger, A. Experiment and the foundations of quantum physics. Reviews of Modern Physics 1999, 71, 288–297.

- Cohen, L. Time-frequency analysis; Prentice Hall Signal Processing Series; 1995.

- Acebrón, J.A.; Bonilla, L.L.; Pérez Vicente, C.; Ritort, F.; Spigler, R. The Kuramoto model: a simple paradigm for synchronization phenomena. Reviews of Modern Physics 2005, 77, 137–185.

- Moreau, Q.; Adel, L.; Douglas, C.; Ranjbaran, G.; Dumas, G. A neurodynamic model of inter-brain coupling in the gamma band. Journal of Neurophysiology 2022.

- Martyushev, L. Entropy and entropy production: Old misconceptions and new breakthroughs. Entropy 2013, 15, 1152–1170.

- Von Neumann, J. Theory of self-reproducing automata; University of Illinois Press, 1966.

- Düval, S.; Hinz, T. Different Order, Different Results? The Effects of Dimension Order in Factorial Survey Experiments. Research Methods & Evaluation, Sage 2019, 32.

- Weaver, J.M.; Schofield, T.J. Mediation and moderation of divorce effects on children’s behavior problems. Journal of Family Psychology 2015, 29, 39–48.

- Christensen, C.; Alton, R.; Rising, C.; Waldeck, A. The Big Idea: The New M-and-A Playbook. Harvard Business Review 2011.

- Tzu, S. The art of war; Basic Books, 1994.

- Editors. Command economy. Encyclopedia Britannica 2017.

- Giles, L. The art of war by Sun Tzu; Special Edition Books, 2007.

- Shannon, C. A Mathematical Theory of Communication. The Bell System Technical Journal 1948, 27, 379–423 and 623–656.

- Eagleman, D. Visual illusions and neurobiology. Nature Reviews Neuroscience 2001, 2, 920–926.

- Carroll, S. The big picture. On the Origins of Life, Meaning, and the Universe Itself; Dutton (Penguin Random House), 2016.

- Wickens, C.D. Engineering psychology and human performance (second edition); Merrill, 1992.

- Cohen, J. Human Nature Sinks HIV Prevention Trial. Science 2013, 351, 1160.

- DoD. Pentagon Press Secretary John F. Kirby and Air Force Lt. Gen. Sami D. Said Hold a Press Briefing. Department of Defense 2021.

- Simon, H. Bounded rationality and organizational learning; Technical Report AIP 107; CMU: Pittsburgh, PA, 1989; Volume 9/23.

- Sen, A. The Formulation of Rational Choice. The American Economic Review 1994, 84, 385–390.

- U.S. Supreme Court. California v. Green, 399 U.S. 149; 1970.

- Ginsburg, R. American Electric Power Co., Inc. et al. v. Connecticut et al. US Supreme Court 2011, 10–174.

- Crowe, W.J., Jr. Investigation report. Formal investigation into the circumstances surrounding the downing of Iran Air Flight 655 on 3 July 1988. Chairman, Joint Chiefs of Staff 1988.

- Lawless, W. The quantum of social action and the function of emotion in decision-making. AAAI Technical Report 2001.

- Baker, G. Interview of the former Central Intelligence Agency (CIA) and National Security Agency (NSA) chief. Wall Street Journal 2015.

- Rubenstein, J. Putin Re-Stalinizes Russia. Seventy years after the dictator’s death, he casts a grim shadow over the lands he dominated. Wall Street Journal 2023.

- Bhattacharjee, Y. The Daring Ruse That Exposed China’s Campaign to Steal American Secrets. How the downfall of one intelligence agent revealed the astonishing depth of Chinese industrial espionage. New York Times 2023.

- Lawless, W.F.; Castelão, T.; Ballas, J. Virtual knowledge: Bistable reality and the solution of ill-defined problems. IEEE Systems, Man, and Cybernetics 2000, 30, 119–126.

- Lawless, W.; Akiyoshi, M.; Angjellari-Dajcic, F.; Whitton, J. Public consent for the geologic disposal of highly radioactive wastes and spent nuclear fuel. International Journal of Environmental Studies 2014, 71, 41–62.

- Suleiman, Y. A War of Words. Language and Conflict in the Middle East; Cambridge University Press, 2012.

- McConnell, J. Vision 2015. A globally networked and integrated intelligence enterprise; The Director of National Intelligence, 2015.

- James, L. Delivering Decision Advantage. Air and Space Power Journal 2012, 26.

- Hughes, J.; Maxwell, J.; Weiss, L. Reimagine decision making to improve speed and quality. Inefficient decision making wastes time, money and productivity. As leaders respond to today’s paradigm shift, companies can pursue four actions to adopt and sustain high-velocity decision making. McKinsey and Company 2020.

- Cohn, P. Be Decisive to Improve Sports Performance. Peak Performance Sports 2021.

- Khaldun, I. The Muqaddimah. An Introduction to History. Translated by F. Rosenthal and edited by N.J. Dawood. Princeton University Press, 1400.

- Schwirtz, M.; Troianovski, A.; Al-Hlou, Y.; Froliak, M.; Entous, A.; Gibbons-Neff, T. Putin’s war. A Times investigation based on interviews, intercepts, documents and secret battle plans shows how a “walk in the park” became a catastrophe for Russia. New York Times 2022.

- Conant, R.C. Laws of information which govern systems. IEEE Transactions on Systems, Man, and Cybernetics 1976, 6, 240–255.

- Kenny, D.; colleagues. Data analyses in social psychology. In D.T. Gilbert, S.T. Fiske and G. Lindzey, Handbook of Social Psychology (4th Ed.); McGraw-Hill, 1998; Volume 1, pp. 233–265.

- Aspect, A.; Clauser, J.F.; Zeilinger, A. Entanglement, Einstein, Podolsky and Rosen paradox. The Nobel Committee for Physics 2022.

- Lucas, R. Monetary neutrality. Nobel Prize Lecture 1995.

- Wootters, W.; Zurek, W. The no-cloning theorem. Physics Today 2009, 76–77.

- Marshall, S.M.; Mathis, C.; Carrick, E.; colleagues. Identifying molecules as biosignatures with assembly theory and mass spectrometry. Nature Communications 2021, 12.

- Bettex, D.A.; Prêtre, R.; Chassot, P. Is our heart a well-designed pump? The heart along animal evolution. European Heart Journal 2014, 35, 2322–2332.

- Thaler, R. Richard H. Thaler: Facts. Nobel Prize Committee 2022.

- Mueller, E. Applications of quantum mechanics. Cornell Phys 3317 2014.

- Centola, D.; Macy, M. Complex Contagions and the Weakness of Long Ties. American Journal of Sociology 2007, 113, 702–734.

- Lawless, W. The entangled nature of interdependence. Bistability, irreproducibility and uncertainty. Journal of Mathematical Psychology 2017, 78.

- Lawless, W. The physics of teams: Interdependence, measurable entropy and computational emotion. Frontiers in Physics 2017, 5.

| No. | Footnote |
|---|---|
| 1 | See NIH’s Implicit Bias Proceedings 508 at https://diversity.nih.gov/sites/coswd/files/images/NIH |
| 2 | Reproduced from [46] with the permission of the American Institute of Physics. See Figure 1 in [46] at https://doi.org/10.1063/1.2349743. |
| 3 | If one is not familiar with operators in Hilbert space, treat them as a complex-valued matrix. |
| 4 | Fittedness is the quality of being fitted; we use it to mean the bidirectional fit between a new team member and the team. |
| 5 | In 2001, with the assistance of George Kang, Naval Research Laboratory, Washington, DC. |
| 6 | The data come from https://www.wipo.int |
| 7 | |
| 8 | By the State of South Carolina’s Department of Health and Environmental Control (DHEC). |
| 9 | |
| 10 | The South Carolina Department of Health and Environmental Control (DHEC) is the government agency responsible for public health and the environment in the U.S. state of South Carolina (https://scdhec.gov/). |
| 11 | After a request to intervene by DHEC, the recommendation was drafted by the first author, who was formerly on the CAB but was not a member at that time. |
| 12 | “President Vladimir V. Putin’s war was never supposed to be like this. When the head of the C.I.A. traveled to Moscow last year to warn against invading Ukraine, he found a supremely confident Kremlin, with Mr. Putin’s national security adviser boasting that Russia’s cutting-edge armed forces were strong enough to stand up even to the Americans” [94]. |
| 13 | “Meaning of entanglement,” https://en.wikipedia.org/wiki/Quantumentanglement |
| 14 | We suspect that the inability to factor apart the independent, individual contributions of teams may play a part in assembly theory. In brief, assembly theory [100] attempts to establish that complexity is a signature of life; however, it overlooks that one of life’s well-fitted structures (characterized by fewer degrees of freedom, reduced structural entropy and non-factorability) transfers its available (free) energy to maximize the productivity of its structure’s function, helping it to survive (e.g., to be an effective structure able to pump across a wide range of activities, a heart must be efficient; p. 2326, in [101]). This was also overlooked by Von Neumann in his theory of self-reproducing automata [64]. |

| Model | Benefit | Weakness |
|---|---|---|
| LRC-like model | Established Decision Advantage | Adjustments for errors are not rational. |
| Classic Harmonic Oscillator | Can be adjusted | Assumes that cognition and behavior form a Spinoza-Hume type monad (1:1). |
| Quantum Harmonic Oscillator | Can be adjusted | Assumes beliefs and behaviors form complex interactions, orthogonal under convergence (e.g., competition), parallel in mundane situations. |
| Qutrit Harmonic Oscillator | Can be adjusted | Allows a model of the interdependence between beliefs and actions for intelligent outsiders and intelligent insider team members (e.g., a coach). |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).