Submitted: 09 July 2023

Posted: 11 July 2023


Abstract

Keywords:

1. Introduction

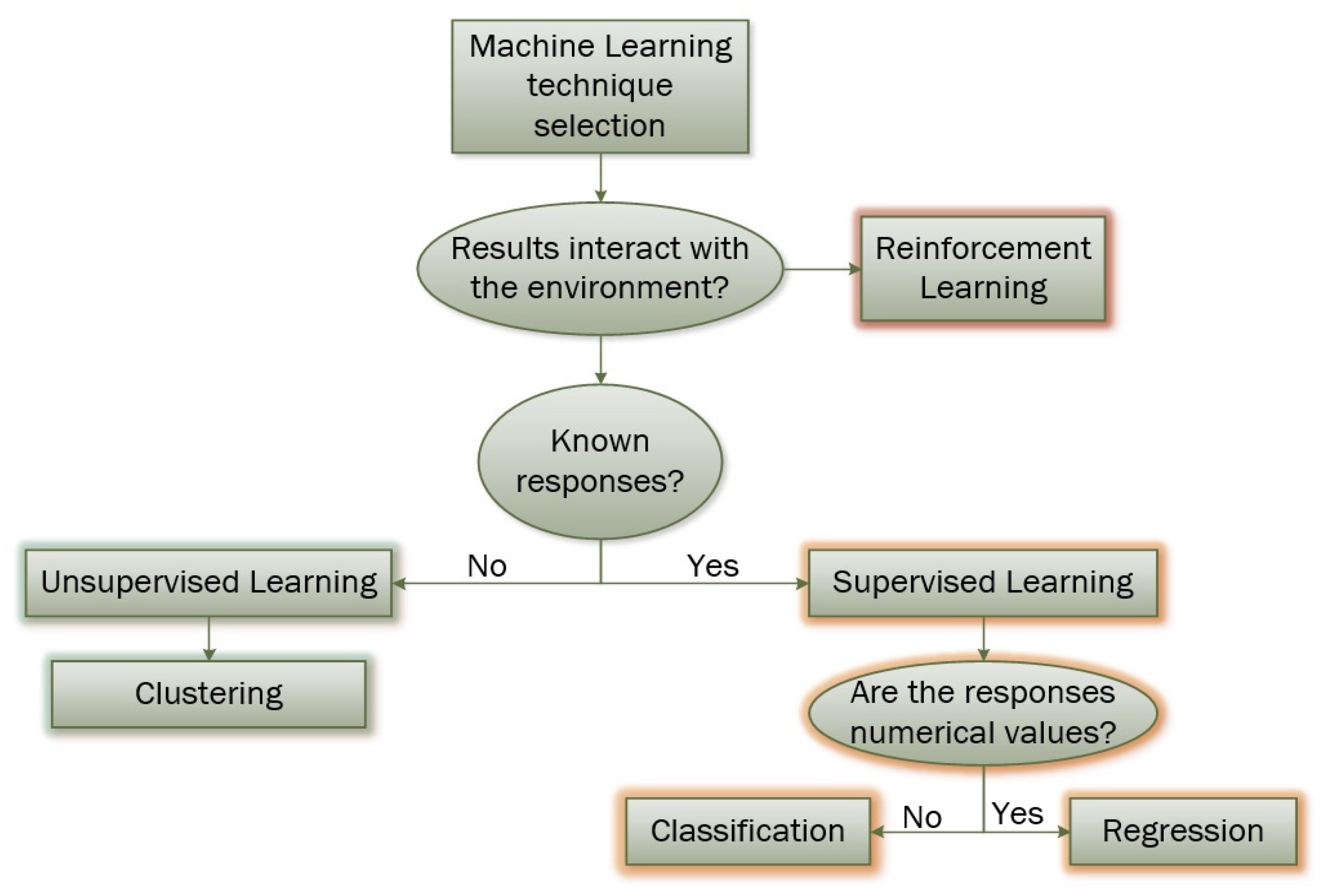

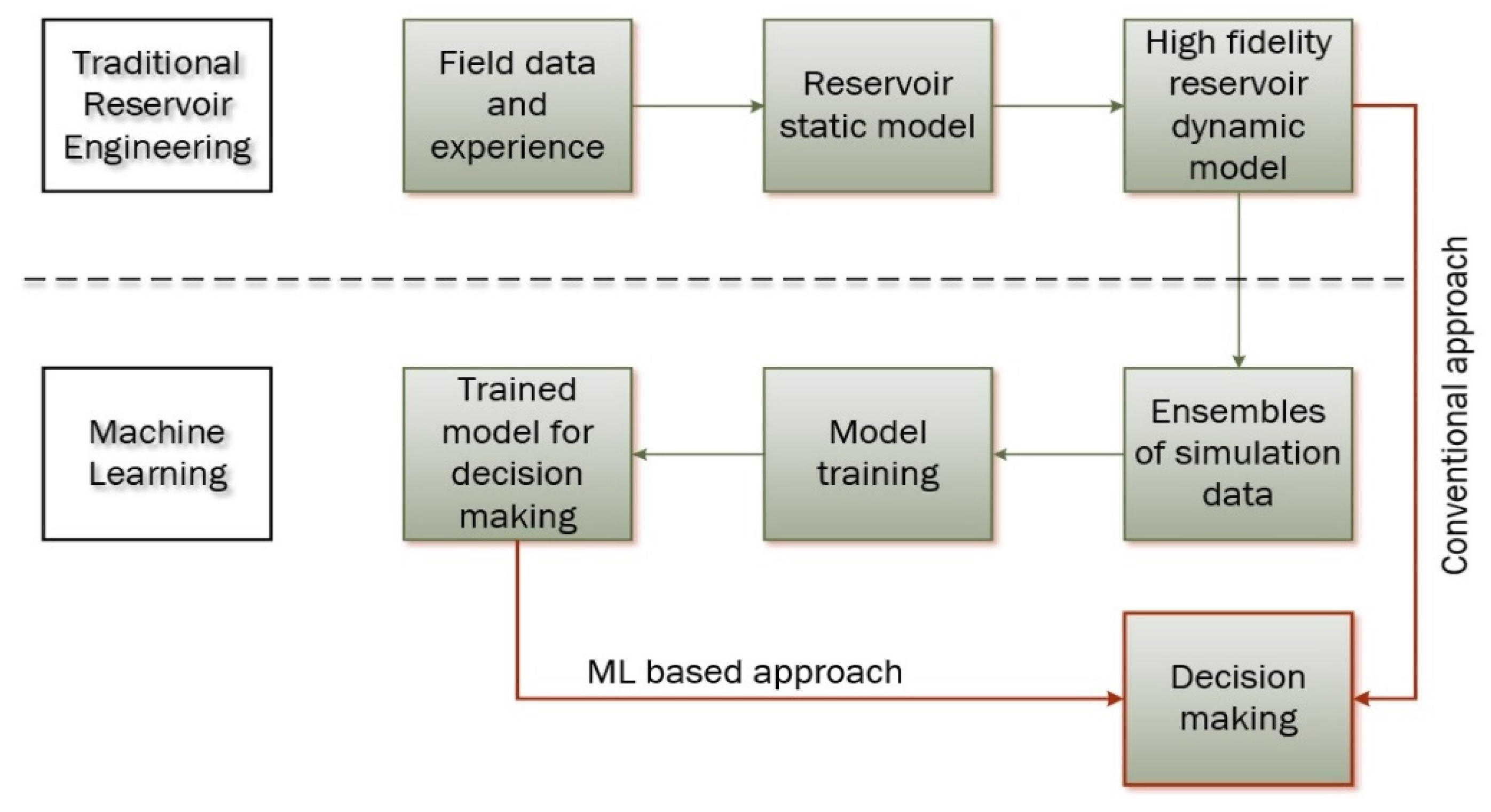

1.2. Machine Learning in Reservoir Simulation

2. Machine Learning Strategies for Individual Simulation Runs

2.1. Machine Learning Methods for Surrogate Models

2.2. Machine Learning Methods for Handling the Stability and Phase Split Problems

2.3. Machine Learning Methods for Predicting Black Oil PVT Properties

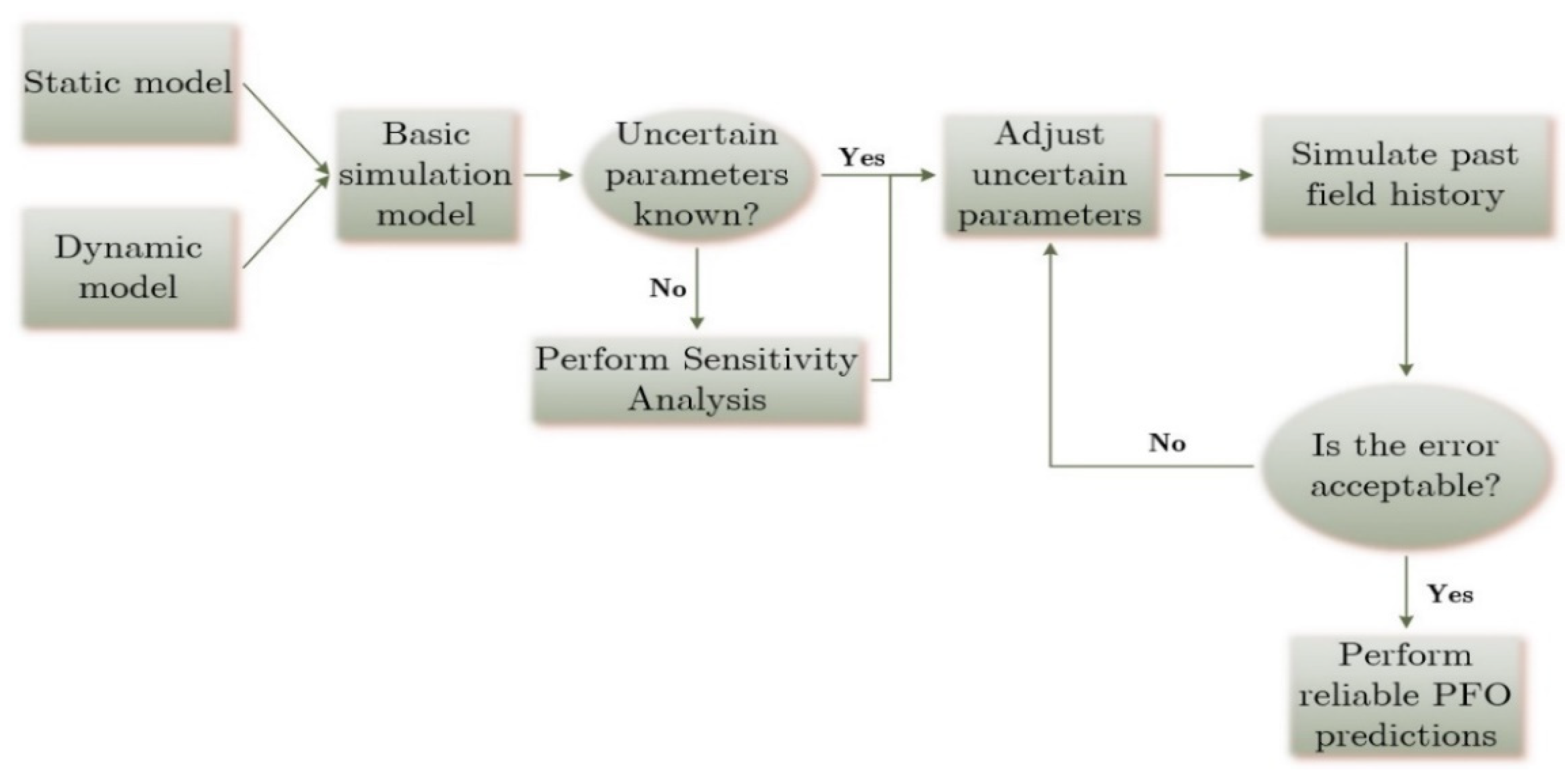

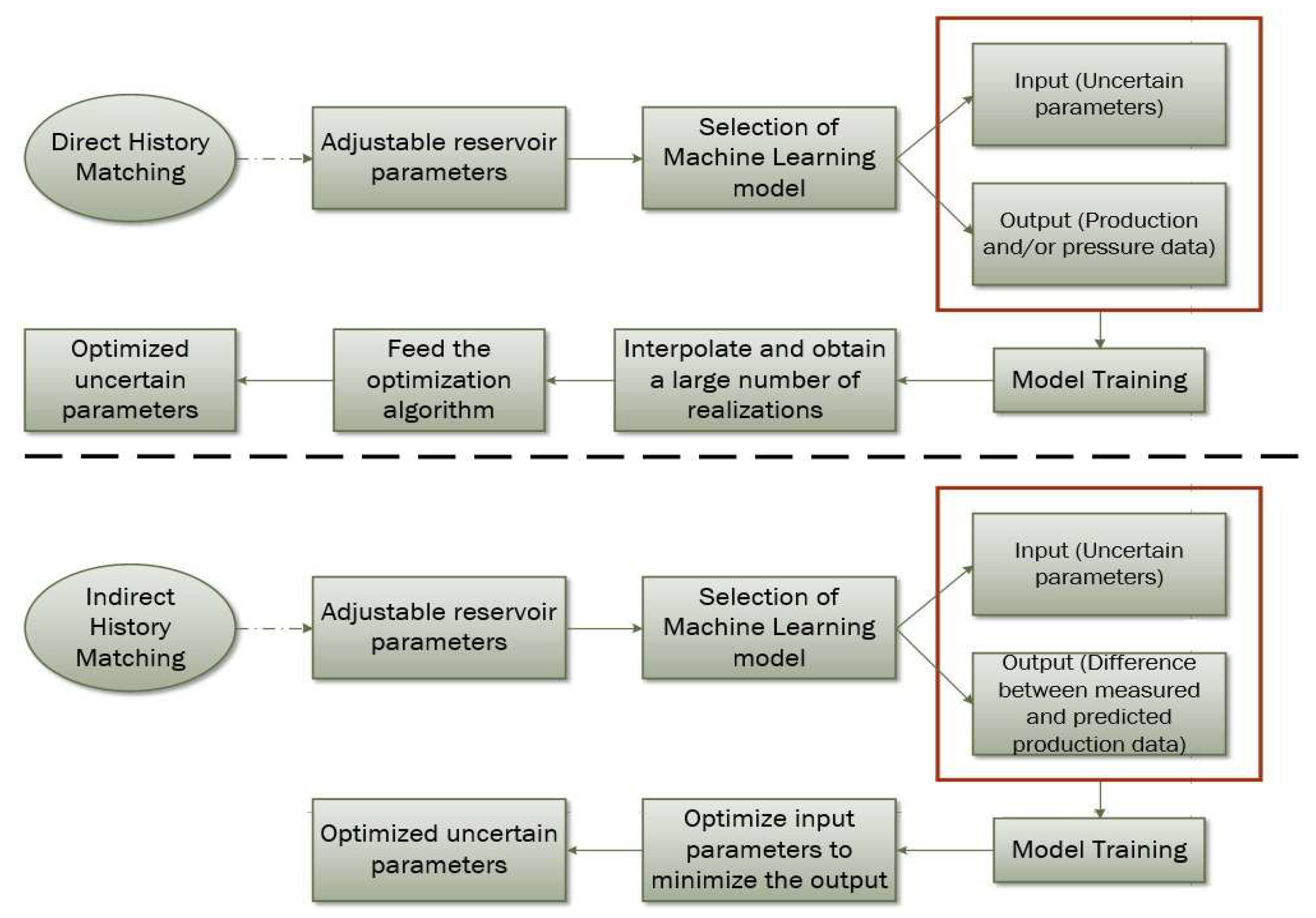

3. Machine Learning Strategies for History Matching

3.1. Machine Learning Methods for Indirect History Matching

3.2. Machine Learning Methods for Direct History Matching

3.2.1. History Matching Based on ANN Models

3.2.2. History Matching Based on Bayesian ML Models

3.2.3. History Matching Based on ML Models Other than ANNs

3.2.4. History Matching Based on Deep Learning Methods

3.2.5. History Matching ML Methods Using Dimensionality Reduction Techniques

3.2.6. History Matching Based on Reinforcement Learning Methods

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Abbreviation | Meaning |
|---|---|
| ML | Machine Learning |
| EOR | Enhanced Oil Recovery |
| EoS | Equation of State |
| HM | History Matching |
| PFO | Production Forecast and Optimization |
| OF | Objective Function |
| HPC | High-Performance Computing |
| SRM | Surrogate Reservoir Model |
| RFM | Reduced Physics Model |
| ROM | Reduced Order Model |
| AI | Artificial Intelligence |
| SL | Supervised Learning |
| UL | Unsupervised Learning |
| RL | Reinforcement Learning |
| ANN | Artificial Neural Network |
| BHP | Bottom Hole Pressure |
| Bo | Oil Formation Volume Factor |
| Bg | Gas Formation Volume Factor |
| GOR | Gas to Oil Ratio |
| RBFNN | Radial Basis Function Neural Network |
| SVM | Support Vector Machine |
| TPD | Tangent Plane Distance |
| DL | Deep Learning |
| SS | Successive Substitution |
| DT | Decision Tree |
| Z-factor | Gas Compressibility Factor |
| S-K | Standing-Katz |
| SCG | Scaled Conjugate Gradient |
| LM | Levenberg–Marquardt |
| RBP | Resilient Back Propagation |
| FIS | Fuzzy Inference System |
| ANFIS | Adaptive Neuro-Fuzzy Inference System |
| LSSVM | Least Squares Support Vector Machine |
| CSA | Coupled Simulated Annealing |
| MW | Molecular Weight |
| PSO | Particle Swarm Optimization |
| GA | Genetic Algorithm |
| KRR | Kernel Ridge Regression |
| CVD | Constant Volume Depletion |
| MLP | Multi-Layer Perceptron |
| GBM | Gradient Boost Method |
| SA | Sensitivity Analysis |
| BB | Box-Behnken |
| LH | Latin Hypercube |
| GRNN | Generalized Regression Neural Network |
| FSSC | Fuzzy Systems with Subtractive Clustering |
| MCMC | Markov Chain Monte Carlo |
| MARS | Multivariate Adaptive Regression Splines |
| SGB | Stochastic Gradient Boosting |
| RF | Random Forest |
| SOM | Self-Organizing Map |
| SVR | Support Vector Regression |
| DGN | Distributed Gauss-Newton |
| PCA | Principal Component Analysis |
| RNN | Recurrent Neural Network |
| MEDA | Multimodal Estimation Distribution Algorithm |
| CNN | Convolutional Neural Network |
| GAN | Generative Adversarial Network |
| ES | Ensemble Smoother |
| PRaD | Piecewise Reconstruction from a Dictionary |
| VAE | Variational AutoEncoder |
| CAE | Convolutional AutoEncoder |
| CDAE | Convolutional Denoising AutoEncoder |
| EnKF | Ensemble Kalman Filter |
| MDP | Markov Decision Process |
| DQN | Deep Q Network |
| DDPG | Deep Deterministic Policy Gradient |
| PPO | Proximal Policy Optimization |

References

- Alenezi, F.; Mohaghegh, S.A. Data-Driven Smart Proxy Model for a Comprehensive Reservoir Simulation. In Proceedings of the 4th Saudi International Conference on Information Technology (Big Data Analysis) (KACSTIT), Riyadh, Saudi Arabia, 6-9 November 2016; pp. 1–6. [Google Scholar]

- Ghassemzadeh, S. A Novel Approach to Reservoir Simulation Using Supervised Learning, Ph.D. dissertation, University of Adelaide, Australian School of Petroleum and Energy Resources, Faculty of Engineering, Computer & Mathematical Sciences, Australia, November 2020.

- Danesh, A. PVT and Phase Behavior of Petroleum Reservoir Fluids; Elsevier: Amsterdam, The Netherlands, 1998; ISBN 9780444821966. [Google Scholar]

- Gaganis, V.; Marinakis, D.; Samnioti, A. A soft computing method for rapid phase behavior calculations in fluid flow simulations. Journal of Petroleum Science and Engineering 2021, 205, 108796. [Google Scholar] [CrossRef]

- Voskov, D.V.; Tchelepi, H. Comparison of nonlinear formulations for two-phase multi-component EoS based simulation. Journal of Petroleum Science and Engineering 2012, 82–83, 101–111. [Google Scholar] [CrossRef]

- Wang, P.; Stenby, E.H. Compositional simulation of reservoir performance by a reduced thermodynamic model. Computers & Chemical Engineering 1994, 18, 2–75. [Google Scholar]

- Gaganis, V.; Varotsis, N. Machine Learning Methods to Speed up Compositional Reservoir Simulation. In Proceedings of the EAGE Annual Conference & Exhibition incorporating SPE Europe, SPE 154505. Copenhagen, Denmark, 4–7 June 2012. [Google Scholar]

- Jaber, A.K.; Al-Jawad, S.N.; Alhuraishawy, A.K. A review of proxy modeling applications in numerical reservoir simulation. Arabian Journal of Geosciences 2019, 12. [Google Scholar] [CrossRef]

- Aminian, K. Modeling and simulation for CBM production. In Coal Bed Methane: Theory and Applications, 2nd ed; Elsevier: Amsterdam, The Netherlands, 2020; ISBN 9780128159972. [Google Scholar]

- Shan, J. High performance cloud computing on multicore computers. PhD dissertation, New Jersey Institute of Technology, USA. 31 May 2018. [Google Scholar]

- Amini, S.; Mohaghegh, S. Application of Machine Learning and Artificial Intelligence in Proxy Modeling for Fluid Flow in Porous Media. Fluids 2019, 4. [Google Scholar] [CrossRef]

- Bahrami, P.; Moghaddam, F.S.; James, L.A. A Review of Proxy Modeling Highlighting Applications for Reservoir Engineering. Energies 2022, 15. [Google Scholar] [CrossRef]

- Sircar, A.; Yadav, K.; Rayavarapu, K.; Bist, N.; Oza, H. Application of machine learning and artificial intelligence in oil and gas industry. Petroleum Research, 2021, 6, 379–391. [Google Scholar] [CrossRef]

- Bao, A.; Gildin, E.; Zalavadia, H. Development Of Proxy Models for Reservoir Simulation by Sparsity Promoting Methods and Machine Learning Techniques. In Proceedings of the 16th European Conference on the Mathematics of Oil Recovery, Barcelona, Spain, 3-6 September 2018. [Google Scholar]

- Denney, D. Pros and cons of applying a proxy model as a substitute for full reservoir simulations. Journal of Petroleum Technology 2010, 62, 41–42. [Google Scholar] [CrossRef]

- Ibrahim, D. An overview of soft computing. In Proceeding of the 12th International Conference on Application of Fuzzy Systems and Soft Computing, ICAFS, Vienna, Austria., 29-30 August 2016. [Google Scholar]

- Bishop, C.M. Pattern Recognition and Machine Learning. Springer: New York, 650 NY, USA, 2006; ISBN-10: 0241973376.

- Nocedal, J.; Wright, S. Numerical Optimization, 2nd ed.; Mikosch, T.V., Robinson, S.M., Resnick, S.I., Eds.; Springer: New York, 650 NY, USA, 2006. [Google Scholar]

- Samnioti, A.; Anastasiadou, V.; Gaganis, V. Application of Machine Learning to Accelerate Gas Condensate Reservoir Simulation. Clean Technol. 2022, 4, 153–173. [Google Scholar] [CrossRef]

- James. G.; Witten, D., Hastie, T., Tibshirani, R. An Introduction to Statistical Learning: with Applications in R, Eds.; Springer: New York, 650 NY, USA, 2013; ISBN 978-1-4614-7139-4. [Google Scholar]

- Freeman, J.A.; Skapura, D.M. Neural Networks: Algorithms, Applications, and Programming Techniques. Addison-Wesley: Boston, Massachusetts, USA, 1991. ISBN 0201513765.

- Fausett, L. Fundamentals of Neural Network: Architectures, Algorithms, and Applications. Prentice-Hall international editions, Hoboken, 1994.

- Veelenturf, L.P.J. Analysis and Applications of Artificial Neural Networks, 1st ed; Prentice-Hall international editions, Hoboken, 1995.

- Kumar, A. A Machine Learning Application for Field Planning. In Proceedings of the Offshore Technology Conference, OTC-29224-MS. Houston, Texas, 6-9 May 2019. [Google Scholar]

- Zhang, D.; Chen, Y.; Meng, J. Synthetic well logs generation via Recurrent Neural Networks. Petroleum Exploration and Development 2018, 45, 629–639. [Google Scholar] [CrossRef]

- Castiñeira, D.; Toronyi, R.; Saleri, N. Machine Learning and Natural Language Processing for Automated Analysis of Drilling and Completion Data. In Proceedings of the SPE Kingdom of Saudi Arabia Annual Technical Symposium and Exhibition, Dammam, Saudi Arabia, 23-26 April 2018; SPE-192280-MS.

- Bhandari, J.; Abbassi, R.; Garaniya, V.; Khan, F. Risk analysis of deepwater drilling operations using Bayesian network. Journal of Loss Prevention in the Process Industries 2015, 38, 11–23. [Google Scholar] [CrossRef]

- Varotsis, N.; Gaganis, V.; Nighswander, J.; Guieze, P. A Novel Non-Iterative Method for the Prediction of the PVT Behavior of Reservoir Fluids. In Proceedings of the SPE Annual Technical Conference and Exhibition, SPE-56745-MS. Houston, Texas, 3-6 October 1999. [Google Scholar]

- Avansi, G, D. Use of Proxy Models in the Selection of Production Strategy and Economic Evaluation of Petroleum Fields. In Proceeding of the SPE Annual Technical Conference and Exhibition, New Orleans, Louisiana, 4-, SPE-129512-STU.

- Aljameel, S.S.; Alomari, D.M.; Alismail, S.; Khawaher, F.; Alkhudhair, A.A.; Aljubran, F.; Alzannan, R.M. An Anomaly Detection Model for Oil and Gas Pipelines Using Machine Learning. Computation 2022, 10, 138. [Google Scholar] [CrossRef]

- Jacobs, T. The Oil and Gas Chat Bots Are Coming. J. Pet. Technol. 2019, 71, 34–36; [Google Scholar] [CrossRef]

- Anastasiadou, V.; Samnioti, A.; Kanakaki, R.; Gaganis, V. Acid gas re-injection system design using machine learning. Clean Technol. 2022, 4, 1001–1019. [Google Scholar] [CrossRef]

- Navrátil, J.; King, A.; Rios, J. Kollias, G.; Torrado, R.; Codas, A. Accelerating Physics-Based Simulations Using End-to-End Neural Network Proxies: An Application in Oil Reservoir Modeling. Front. Big Data 2019, 2. [Google Scholar] [CrossRef] [PubMed]

- Mohaghegh, S.; Popa, A.; Ameri, S. Intelligent systems can design optimum fracturing jobs. In Proceedings of the SPE Eastern Regional Conference and Exhibition, SPE-57433-MS., 21-22 October 1999. [Google Scholar]

- Mohaghegh, S.D.; Hafez, H.; Gaskari, R.; Haajizadeh, M.; Kenawy, M. Uncertainty analysis of a giant oil field in the middle east using surrogate reservoir model. In Proceedings of the Abu Dhabi International Petroleum Exhibition and Conference; 2006. [Google Scholar]

- Mohaghegh, S.D. Quantifying uncertainties associated with reservoir simulation studies using surrogate reservoir models. In Proceedings of the SPE Annual Technical Conference and Exhibition, SPE-102492-MS. San Antonio, Texas, USA, 24-27 September 2006. [Google Scholar]

- Mohaghegh, S.D.; Modavi, A.; Hafez, H.H.; Haajizadeh, M.; Kenawy, M.; Guruswamy, S. Development of Surrogate Reservoir Models (SRM) for Fast-Track Analysis of Complex Reservoirs. In Proceedings of the Intelligent Energy Conference and Exhibition, SPE-99667-MS. Amsterdam, The Netherlands, 11-13 April 2006. [Google Scholar]

- Kalantari-Dahaghi, A.; Esmaili, S.; Mohaghegh, S.D. Fast Track Analysis of Shale Numerical Models. In Proceedings of the SPE Canadian Unconventional Resources Conference, SPE-162699-MS. Calgary, Alberta, Canada, 30 October-1 November 2012. [Google Scholar]

- Mohaghegh, S.D. Reservoir simulation and modeling based on artificial intelligence and data mining (AI&DM). Journal of Natural Gas Science and Engineering 2011, 3, 697–705. [Google Scholar]

- Alenezi, F.; Mohaghegh, S. A data-driven smart proxy model for a comprehensive reservoir simulation. In Proceedings of the 4th Saudi International Conference on Information Technology (Big Data Analysis) (KACSTIT), Riyadh, Saudi Arabia, 6-9 November 2016. [Google Scholar]

- Alenezi, F.; Mohaghegh, S. Developing a Smart Proxy for the SACROC Water-Flooding Numerical Reservoir Simulation Model. In Proceedings of the SPE Western Regional Meeting, SPE-185691-MS. Bakersfield, California, 23-27 April 2017. [Google Scholar]

- Shahkarami, A.; Mohaghegh, S.D.; Gholami, V.; Haghighat, A.; Moreno, D. Modeling pressure and saturation distribution in a CO2 storage project using a Surrogate Reservoir Model (SRM). Greenhouse Gases: Science and Technology 2014, 4, 289–315. [Google Scholar] [CrossRef]

- Shahkarami, A.; Mohaghegh, S. Applications of smart proxies for subsurface modeling. Pet. Explor. Dev. 2020, 47, 400–412. [Google Scholar] [CrossRef]

- Dahaghi, A.K.; Mohaghegh, S. Numerical simulation and multiple realizations for sensitivity study of shale gas reservoirs. In Proceedings of the SPE Production and Operations Symposium; 2011. [Google Scholar]

- Memon, P.Q.; Yong, S.P.; Pao, W.; Sean, P.J. Surrogate reservoir modeling-prediction of bottom-hole flowing pressure using radial basis neural network. In Proceedings of the Science and Information Conference (SAI); 2014. [Google Scholar]

- Amini, S.; Mohaghegh, S.D.; Gaskari, R.; Bromhal, G. Uncertainty analysis of a CO2 sequestration project using surrogate reservoir modeling technique. In Proceedings of the SPE Western Regional Meeting, SPE-153843-MS. Bakersfield, California, USA, 21-23 March 2012. [Google Scholar]

- Gaganis, V.; Varotsis, N. Non-iterative phase stability calculations for process simulation using discriminating functions. Fluid Phase Equilibria 2012, 314, 69–77. [Google Scholar] [CrossRef]

- Gaganis, V.; Varotsis, N. An integrated approach for rapid phase behavior calculations in compositional modeling. J. Petrol. Sci. Eng. 2014, 118, 74–87. [Google Scholar] [CrossRef]

- Gaganis, V. Rapid phase stability calculations in fluid flow simulation using simple discriminating functions. Comput. Chem. Eng. 2018, 108, 112–127. [Google Scholar] [CrossRef]

- Kashinath, A.; Szulczewski, L.M.; Dogru, H.A. A fast algorithm for calculating isothermal phase behavior using machine learning. Fluid Phase Equilibria 2018, 465, 73–82. [Google Scholar] [CrossRef]

- Schmitz, J.E.; Zemp, R.J.; Mendes, M.J. Artificial neural networks for the solution of the phase stability problem. Fluid Phase Equilibria 2016, 245, 83–87. [Google Scholar] [CrossRef]

- Gaganis, V. , Varotsis N. In Rapid multiphase stability calculations in process simulation. In Proceedings of the 27th European Symposium on Applied Thermodynamics, Eindhoven, Netherlands, July 2014. [Google Scholar]

- Wang, K.; Luo, J.; Yizheng, W.; Wu, K.; Li, J.; Chen, Z. Artificial neural network assisted two-phase flash calculations in isothermal and thermal compositional simulations. Fluid Phase Equilibria 2019, 486, 59–79. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, T.; Sun, S.; Gao, X. Accelerating flash calculation through deep learning methods. Journal of Computational Physics 2019, 394, 153–165. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, T.; Sun, S. Acceleration of the NVT Flash Calculation for Multicomponent Mixtures Using Deep Neural Network Models. Industrial & Engineering Chemistry Research 2019, 58, 12312–12322. [Google Scholar]

- Wang, S.; Sobecki, N.; Ding, D.; Zhu, L.; Wu, Y.S. Accelerating and stabilizing the vapor-liquid equilibrium (VLE) calculation in compositional simulation of unconventional reservoirs using deep learning-based flash calculation. Fuel 2019, 253, 209–219. [Google Scholar] [CrossRef]

- Zhang, T.; Li, Y.; Sun, S.; Bai, H. Accelerating flash calculations in unconventional reservoirs considering capillary pressure using an optimized deep learning algorithm. Journal of Petroleum Science and Engineering 2020, 195, 107886. [Google Scholar] [CrossRef]

- Zhang, T.; Li, Y.; Li, Y.; Sun, S.; Gao, X. A self-adaptive deep learning algorithm for accelerating multi-component flash calculation. Computer Methods in Applied Mechanics and Engineering 2020, 369(1), 113207. [Google Scholar] [CrossRef]

- Sheth, S.; Heidari, M.R.; Neylon, K.; Bennett, J.; McKee, F. Acceleration of thermodynamic computations in fluid flow applications. Computational Geosciences 2022, 26, 1–11. [Google Scholar] [CrossRef]

- Ahmed, T. Equations of State and PVT Analysis; Gulf Publishing Company: Houston, TX, USA, 2007; ISBN 978-1-933762-03-6. [Google Scholar]

- Moghadassi, A.R.; Parvizian, F.; Hosseini, S.M.; Fazlali, A.R. A new approach for estimation of PVT properties of pure gases based on artificial neural network model. Braz. J. Chem. Eng. 2009, 26. [Google Scholar] [CrossRef]

- Beggs, D.H.; Brill, J.P. A Study of Two-Phase Flow in Inclined Pipes. J Pet Technol 1973, 25, 607–617; [Google Scholar] [CrossRef]

- Dranchuk, P.M.; Abou-Kassem, H. Calculation of Z Factors For Natural Gases Using Equations of State. J Can Pet Technol 1975, 14, PETSOC-75-03–03. [Google Scholar] [CrossRef]

- Kamyab, M.; Sampaio, J.H.B.; Qanbari, F.; Eustes, A.W. Using artificial neural networks to estimate the z-factor for natural hydrocarbon gases. Journal of Petroleum Science and Engineering 2010, 73, 248–257. [Google Scholar] [CrossRef]

- Sanjari, E.; Lay, E.N. Estimation of natural gas compressibility factors using artificial neural network approach. Journal of Natural Gas Science and Engineering 2012, 9, 220–226. [Google Scholar] [CrossRef]

- Irene, A.I.; Sunday, I.S.; Orodu, O.D. Forecasting Gas Compressibility Factor Using Artificial Neural Network Tool for Niger-Delta Gas Reservoir. In Proceedings of the SPE Nigeria Annual International Conference and Exhibition, SPE-184382-MS. Lagos, Nigeria, 2-4 August 2016. [Google Scholar]

- Al-Anazi, B.D.; Pazuki, G.R.; Nikookar, M.; Al-Anazi, A.F. The Prediction of the Compressibility Factor of Sour and Natural Gas by an Artificial Neural Network System. Petroleum Science and Technology 2011, 29, 325–336. [Google Scholar] [CrossRef]

- Mohamadi-Baghmolaei, M.; Azin, R.; Osfouri, S.; Mohamadi-Baghmolaei, R.; Zarei, Z. Prediction of gas compressibility factor using intelligent models. Nat. Gas Ind. B 2015, 2, 283–294. [Google Scholar] [CrossRef]

- Fayazi, A.; Arabloo, M.; Mohammadi, A.H. Efficient estimation of natural gas compressibility factor using a rigorous method. J. Nat. Gas Sci. Eng. 2014, 16, 8–17. [Google Scholar] [CrossRef]

- Kamari, A.; Hemmati-Sarapardeh, A.; Mirabbasi, S.-M.; Nikookar, M.; Mohammadi, A.H. Prediction of sour gas compressibility factor using an intelligent approach. Fuel Process. Technol. 2013, 116, 209–216. [Google Scholar] [CrossRef]

- Suykens, J.A.K.; Vandewalle, J. Least Squares Support Vector Machine Classifiers. Neural Processing Letters 1999, 9, 293–300. [Google Scholar] [CrossRef]

- Chamkalani, A.; Maesoumi, A.; Sameni, A. An intelligent approach for optimal prediction of gas deviation factor using particle swarm optimization and genetic algorithm. Journal of Natural Gas Science and Engineering 2013, 14, 132–143. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the IEEE International Conference on Neural Networks, 1942-1948. 1995. [Google Scholar]

- Gaganis, V.; Homouz, D.; Maalouf, M.; Khoury, N.; Polycrhonopoulou, K. An Efficient Method to Predict Compressibility Factor of Natural Gas Streams. Energies 2019, 12, 2577. [Google Scholar] [CrossRef]

- Maalouf, M.; Homouz, D. Kernel ridge regression using truncated newton method. Knowledge-Based Systems 2014, 71, 339–344. [Google Scholar] [CrossRef]

- Samnioti, A.; Kanakaki, E.M.; Koffa, E.; Dimitrellou, I.; Tomos, C.; Kiomourtzi, P.; Gaganis, V.; Stamataki, S. Wellbore and Reservoir Thermodynamic Appraisal in Acid Gas Injection for EOR Operations. Energies 2023, 16. [Google Scholar] [CrossRef]

- Kamari, A.; Hemmati-Sarapardeh, A.; Mirabbasi, S.M.; Nikookar, M.; Mohammadi, A.H. Prediction of sour gas compressibility factor using an intelligent approach. Fuel Processing Technology 2013, 116, 209–216. [Google Scholar] [CrossRef]

- Seifi, M.; Abedi, J. An Efficient and Robust Saturation Pressure Calculation Algorithm for Petroleum Reservoir Fluids Using a Neural Network. Petroleum Science and Technology 2012, 30. [Google Scholar] [CrossRef]

- Gharbi, R.B.C.; Elsharkawy, A.M. Neural Network Model for Estimating the PVT Properties of Middle East Crude Oils. SPE Res Eval & Eng 1999, 2, 255–265; [Google Scholar]

- Al-Marhoun, M.A.; Osman, E.A. Using Artificial Neural Networks to Develop New PVT Correlations for Saudi Crude Oils. In Proceedings of the Abu Dhabi International Petroleum Exhibition and Conference, SPE-78592-MS. Abu Dhabi, United Arab Emirates, 13-16 October 2002. [Google Scholar]

- Moghadam, J.N.; Salahshoor, K.; Kharrat, R. Introducing a new method for predicting PVT properties of Iranian crude oils by applying artificial neural networks. Petroleum Science and Technology 2011, 29, 1066–1079. [Google Scholar] [CrossRef]

- Al-Marhoun, M. PVT correlations for Middle East crude oils. Journal of Petroleum Technology 1988, 40, 650–666. [Google Scholar] [CrossRef]

- Al-Marhoun, M. New correlations for formation volume factors of oil and gas mixtures. Journal of Canadian Petroleum Technology 1992, 31. [Google Scholar] [CrossRef]

- Ahmed, T.H. Reservoir engineering handbook, 4th ed.; Gulf Professional Publishing: Oxford, UK, 2010; ISBN 978-1-85617-803-7. [Google Scholar]

- Farasat, A.; Shokrollahi, A.; Arabloo, M.; Gharagheizi, F.; Mohammadi, A.H. Toward an intelligent approach for determination of saturation pressure of crude oil. Fuel Processing Technology 2013, 115, 201–214. [Google Scholar] [CrossRef]

- El-Sebakhy, E.A.; Sheltami, T.; Al-Bokhitan, S.Y.; Shaaban, Y.; Raharja, P.D.; Khaeruzzaman, Y. Support vector machines framework for predicting the PVT properties of crude-oil systems. In Proceedings of the SPE Middle East Oil and Gas Show and Conference, SPE-105698-MS. Manama, Bahrain, 11-14 March 2007. [Google Scholar]

- Akbari, M.K.; Farahani, F.J, Abdy, Y. Dewpoint Pressure Estimation of Gas Condensate Reservoirs, Using Artificial Neural Network (ANN). In Proceedings of the EUROPEC/EAGE Conference and Exhibition, SPE-107032-MS. London, UK, 11-14 June 2007.

- Nowroozi, S.; Ranjbar, M.; Hashemipour, H.; Schaffie, M. Development of a neural fuzzy system for advanced prediction of dew point pressure in gas condensate reservoirs. Fuel Processing Technology 2009, 90, 452–457. [Google Scholar] [CrossRef]

- Kaydani, H.; Hagizadeh, A.; Mohebbi, A. A Dew Point Pressure Model for Gas Condensate Reservoirs Based on an Artificial Neural Network. Petroleum Science and Technology 2013, 31. [Google Scholar] [CrossRef]

- González, A.; Barrufet, M.A.; Startzman, R. Improved neural-network model predicts dewpoint pressure of retrograde gases. J Pet Sci Eng 2003, 37, 183–194. [Google Scholar] [CrossRef]

- Majidi, S.M.; Shokrollahi, A.; Arabloo, M.; Mahdikhani-Soleymanloo, R.; Masihi, M. Evolving an accurate model based on machine learning approach for prediction of dew-point pressure in gas condensate reservoirs. Chemical Engineering Research and Design 2014, 92, 891–902. [Google Scholar] [CrossRef]

- Rabiei, A.; Sayyad, H.; Riazi, M.; Hashemi, A. Determination of dew point pressure in gas condensate reservoirs based on a hybrid neural genetic algorithm. Fluid Phase Equilibria 2015, 387, 38–49. [Google Scholar] [CrossRef]

- Ahmadi, M.A.; Ebadi, M.; Yazdanpanah, A. Robust intelligent tool for estimating dew point pressure in retrograded condensate gas reservoirs: Application of particle swarm optimization. Journal of Petroleum Science and Engineering 2014, 123, 7–19. [Google Scholar] [CrossRef]

- Ahmadi, M.A.; Ebadi, M. Evolving smart approach for determination dew point pressure through condensate gas reservoirs. Fuel 2014, 117, 1074–1084. [Google Scholar] [CrossRef]

- Arabloo, M.; Shokrollahi, A.; Gharagheizi, F.; Mohammadi, A.H. Toward a predictive model for estimating dew point pressure in gas condensate systems. Fuel Processing Technology 2013, 116, 317–324. [Google Scholar] [CrossRef]

- Ikpeka, P.; Ugwu, J.; Russell, P.; Pillai, G. Performance evaluation of machine learning algorithms in predicting dew point pressure of gas condensate reservoirs. SN Applied Sciences 2020, 2, 2124. [Google Scholar] [CrossRef]

- Zhong, Z.; Liu, S.; Kazemi, M.; Carr, T.R. Dew point pressure prediction based on mixed-kernels-function support vector machine in gas-condensate reservoir. Fuel 2018, 232, 600–609. [Google Scholar] [CrossRef]

- Alolayan, O.S.; Alomar, A.O.; Williams, J.R. Parallel Automatic History Matching Algorithm Using Reinforcement Learning. Energies 2023, 16(2), 860. [Google Scholar] [CrossRef]

- Bishop, C.M. Neural Networks for Pattern Recognition. Oxford University Press, USA. ISBN-10: 9780198538646.

- Aggarwal, C.C. Neural Networks and Deep Learning: A Textbook. Springer: Cham, Switzerland, 2018. ISBN 978-3-319-94463-0.

- Costa, L.A.N.; Maschio, C.; Schiozer, D.J. Study of te influence of training data set in artificial neural network applied to the history matching process. In Proceedings of the Rio Oil & Gas Expo and Conference, January 2010. [Google Scholar]

- Zangl, G.; Giovannoli, M.; Stundner, M. Application of Artificial intelligence in gas storage management. In Proceedings of the SPE Europec/EAGE Annual Conference and Exhibition, SPE-100133-MS. Vienna, Austria, 12-15 June 2006. [Google Scholar]

- Rodriguez, A.A.; Klie, H.; Wheeler, M.F.; Banchs, R.E. Assessing multiple resolution scales in history matching with metamodels. In Proceedings of the SPE Reservoir Simulation Symposium, SPE-105824-MS. Houston, TX, USA, 26-28 February 2007. [Google Scholar]

- Esmaili, S.; Mohaghegh, S.D. Full field reservoir modeling of shale assets using advanced data-driven analytics. 2016.

- Silva, P.C.; Maschio, C.; Schiozer, D.J. Applications of the soft computing in the automated history matching. In Proceedings of the Petroleum Society’s 7th Canadian International Petroleum Conference (57th Annual Technical Meeting), Calgary, Alberta, Canada, 13-15 June 2006. [Google Scholar]

- Silva, P.C.; Maschio, C.; Schiozer, D.J. Application of neural network and global optimization in history matching. J Can Pet Technol 2008, 47, 11. [Google Scholar] [CrossRef]

- Silva, P.C.; Maschio, C.; Schiozer, D.J. Use of Neuro-Simulation techniques as proxies to reservoir simulator: Application in production history matching. Journal of Petroleum Science and Engineering 2007, 57, 273–280. [Google Scholar] [CrossRef]

- Gottfried, B.S.; Weisman, J. Introduction to Optimization Theory, 1st ed; Prentice Hall, Englewood Cliffs, NJ, 1973; ISBN-10: 0134914724.

- Shahkarami, A.; Mohaghegh, S. D.; Gholami, V.; Haghighat, S. A. Artificial Intelligence (AI) Assisted History Matching. In Proceedings of the SPE Western North American and Rocky Mountain Joint Meeting, 17-18 April 2014, SPE-169507-MS.

- Sampaio, T.P.; Ferreira Filho, V.J.M.; de Sa Neto, A. An Application of Feed Forward Neural Network as Nonlinear Proxies for the Use During the History Matching Phase. In Proceedings of the Latin American and Caribbean Petroleum Engineering Conference, SPE-122148-MS. Cartagena de Indias, Colombia, 30-31 May 2009. [Google Scholar]

- Cullick, A.S. Improved and more-rapid history matching with a nonlinear proxy and global optimization. In Proceedings of the SPE Annual Technical Conference and Exhibition, SPE-101933-MS. San Antonio, Texas, USA, 24-27 September 2006. [Google Scholar]

- Lechner, J.P.; Zangl, G. Treating Uncertainties in Reservoir Performance Prediction with Neural Networks. In Proceedings of the SPE Europec/EAGE Annual Conference, SPE-94357-MS. Madrid, Spain, 13-16 June 2005. [Google Scholar]

- Reis, L.C. Risk analysis with history matching using experimental design or artificial neural networks. In Proceedings of the SPE Europec/EAGE Annual Conference and Exhibition, SPE-100255-MS. Vienna, Austria, 12-15 June 2006. [Google Scholar]

- Mohmad, N.I.; Mandal, D.; Amat, H.; Sabzabadi, A.; Masoudi, R. History Matching of Production Performance for Highly Faulted, Multi Layered, Clastic Oil Reservoirs using Artificial Intelligence and Data Analytics: A Novel Approach. In Proceddings of the SPE Asia Pacific Oil & Gas Conference and Exhibition, Virtual, 17-19 November 2020, SPE-202460-MS.

- Ramgulam, A.; Ertekin, T.; Flemings, P.B. Utilization of Artificial Neural Networks in the Optimization of History Matching. In Proceedings of the Latin American & Caribbean Petroleum Engineering Conference, SPE-107468-MS. Buenos Aires, Argentina, 15-18 April 2007. [Google Scholar]

- Christie, M.; Demyanov, V.; Erbas, D. Uncertainty quantification for porous media flows. Journal of Computational Physics 2006, 217, 143–158. [Google Scholar] [CrossRef]

- Maschio, C.; Schiozer, D.J. Bayesian history matching using artificial neural network and Markov Chain Monte Carlo. Journal of Petroleum Science and Engineering 2014, 123, 62–71. [Google Scholar] [CrossRef]

- Hastings, W.K. Monte Carlo sampling methods using Markov chains and their applications. Biometrika 1970, 57, 97–109. [Google Scholar] [CrossRef]

- Chai, Z. , Yan, B., Killough, J.E; Wang, Y. An efficient method for fractured shale reservoir history matching: The embedded discrete fracture multi-continuum approach. Journal of Petroleum Science and Engineering 2018, 160, 170–181. [Google Scholar] [CrossRef]

- Eltahan, E.; Ganjdanesh, R.; Yu, W.; Sepehrnoori, K.; Drozd, H.; Ambrose, R. Assisted history matching using Bayesian inference: Application to multi-well simulation of a huff-n-puff pilot test in the Permian Basin. In Proceddings of the Unconventional Resources Technology Conference, Austin, Texas, USA, 20-22 July 2020.

- Shams, M.; El-Banbi, A.; Sayyouh, H. A Comparative Study of Proxy Modeling Techniques in Assisted History Matching. In Proceedings of the SPE Kingdom of Saudi Arabia Annual Technical Symposium and Exhibition, April 2017. [Google Scholar]

- Brantson, E.T; Ju, B.; Omisore, B.O.; Wu, D.; Selase, A.E.; Liu, N. Development of machine learning predictive models for history matching tight gas carbonate reservoir production profiles. J. Geophys. Eng 2018, 15, 2235–2251. [Google Scholar] [CrossRef]

- Gao, C.C.; Gao, H.H. Evaluating early-time Eagle Ford well performance using Multivariate Adaptive Regression Splines (MARS). In Proceedings of the SPE Annual Technical Conference and Exhibition, SPE-166462-MS. New Orleans, Louisiana, USA, 30 September-2 October 2013. [Google Scholar]

- Friedman, J.H. Stochastic gradient boosting Comput. Stat. Data Anal. 2002, 38, 367–78. [Google Scholar] [CrossRef]

- Bauer, M. General regression neural network—a neural network for technical use. Master’s Thesis, University of Wisconsin, Madison, USA, 1995. [Google Scholar]

- Al-Thuwaini, J.S.; Zangl, G.; Phelps, R. Innovative Approach to Assist History Matching Using Artificial Intelligence. In Proceedings of the Intelligent Energy Conference and Exhibition, SPE-99882-MS. Amsterdam, The Netherlands, 11-13 April 2006. [Google Scholar]

- Simplilearn, AL and machine learning. What are self-organizing maps. Beginner’s guide to Kohonen map. Available online: https://www.simplilearn.com/self-organizing-kohonen-maps-article (accessed on 10 March 2023).

- Dharavath, A. Bisection Method. Available online: https://protonstalk.com/polynomials/bisection-method/ (accessed on 10 March 2023).

- Guo, Z.; Chen, C.; Gao, G.; Vink, J. Applying Support Vector Regression to Reduce the Effect of Numerical Noise and Enhance the Performance of History Matching. In Proceeding of the SPE Annual Technical Conference and Exhibition, SPE-187430-MS. San Antonio, Texas, USA, 9-11 October 2017. [Google Scholar]

- Rui Liu; Siddharth Misra. Machine Learning Assisted Recovery of Subsurface Energy: A Review. Authorea 2021.

- Ma, X.; Zhang, K.; Zhao, H.; Zhang, L.; Wang, J.; Zhang, H.; Liu, P.; Yan, X.; Yang, Y. A vector-to-sequence based multilayer recurrent network surrogate model for history matching of large-scale reservoir. Journal of Petroleum Science and Engineering 2022, 214, 110548.

- Ma, X.; Zhang, K.; Zhang, J.; Wang, Y.; Zhang, L.; Liu, P.; Yang, Y.; Wang, J. A novel hybrid recurrent convolutional network for surrogate modeling of history matching and uncertainty quantification. Journal of Petroleum Science and Engineering 2022, 210, 110109.

- MathWorks, Convolutional neural network. What Is a Convolutional Neural Network? Available online: https://www.mathworks.com/discovery/convolutional-neural-network-matlab.html (accessed on 22 March 2023).

- Brownlee, J. A Gentle Introduction to Generative Adversarial Networks (GANs). Available online: https://machinelearningmastery.com/what-are-generative-adversarial-networks-gans/ (accessed on 22 March 2023).

- Ma, X.; Zhang, K.; Wang, J.; Yao, C.; Yang, Y.; Sun, H.; Yao, J. An Efficient Spatial-Temporal Convolution Recurrent Neural Network Surrogate Model for History Matching. SPE J. 2022, 27, 1160–1175.

- Evensen, G.; Raanes, P.N.; Stordal, A.S.; Hove, J. Efficient Implementation of an Iterative Ensemble Smoother for Data Assimilation and Reservoir History Matching. Frontiers in Applied Mathematics and Statistics 2019, 5.

- Honorio, J.; Chen, C.; Gao, G.; Du, K.; Jaakkola, T. Integration of PCA with a Novel Machine Learning Method for Reparameterization and Assisted History Matching Geologically Complex Reservoirs. In Proceedings of the SPE Annual Technical Conference and Exhibition, SPE-175038-MS. Houston, TX, USA, 28-30 September 2015.

- Alguliyev, R.; Aliguliyev, R.; Imamverdiyev, Y.; Sukhostat, L. History matching of petroleum reservoirs using deep neural networks. Intelligent Systems with Applications 2022, 16, 200128.

- Kana, M. Variational Autoencoders (VAEs) for dummies — Step by step tutorial. Available online: https://towardsdatascience.com/variational-autoencoders-vaes-for-dummies-step-by-step-tutorial-69e6d1c9d8e9v (accessed on 2 April 2023).

- Canchumuni, S.A.; Emerick, A.A.; Pacheco, M.A. Integration of Ensemble Data Assimilation and Deep Learning for History Matching Facies Models. In Proceedings of the OTC Brazil, OTC-28015-MS. Rio de Janeiro, Brazil, 24-26 October 2017.

- Jo, S.; Jeong, H.; Min, B.; Park, C.; Kim, Y.; Kwon, S.; Sun, A. Efficient deep-learning-based history matching for fluvial channel reservoirs. Journal of Petroleum Science and Engineering 2022, 208, 109247.

- Liu, Y.; Sun, W.; Durlofsky, L.J. A Deep-Learning-Based Geological Parameterization for History Matching Complex Models. Mathematical Geosciences 2019, 51, 725–766.

- Jo, H.; Pan, W.; Santos, J.E.; Jung, H.; Pyrcz, M.J. Machine learning assisted history matching for a deepwater lobe system. Journal of Petroleum Science and Engineering 2021, 207, 109086.

- Sutton, R.; Barto, A. Reinforcement Learning: An Introduction, 2nd ed.; Bradford Books: Denver, CO, USA, 2018; ISBN 0262039249.

- Li, H.; Misra, S. Reinforcement learning based automated history matching for improved hydrocarbon production forecast. Applied Energy 2021, 284, 116311.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).