Submitted:

07 July 2023

Posted:

12 July 2023


Abstract

Keywords:

1. Introduction

- Unsupervised pipeline: Once hyperparameters and transformation models are optimized, the entire process becomes unsupervised.

- Mitigation of the lack of context: integrating different methods (transformer-based neural models, dictionary-based and syntactic approaches) mitigates the lack of context around keywords and supports the definition of final similarity scores.

- Scalability: Using pre-trained language models, the approach can efficiently handle even archives with hundreds of elements.

- Multilingual support: The use of pre-trained multilingual text models enables the approach to efficiently manage archives containing documents in different languages, such as English, French, Italian, and others.

- Real-world scenarios: The experiments performed for this article demonstrate the ability of the method to adapt to real data without having to adapt it to a specific context. The use of ensemble methods makes it possible to overcome any critical problems that may arise due to unfamiliar words or different languages.

1) Provide an overview of different approaches that can be adapted to identify overall similarities of 1- or n-gram tags.

2) Evaluate and compare the performance of different pre-trained language models on short texts/keywords, which by their nature are self-contained.

3) Define methods that can overcome limitations through integrated approaches, after analyzing the results of individual methods.

2. Related works

3. Materials and Methods

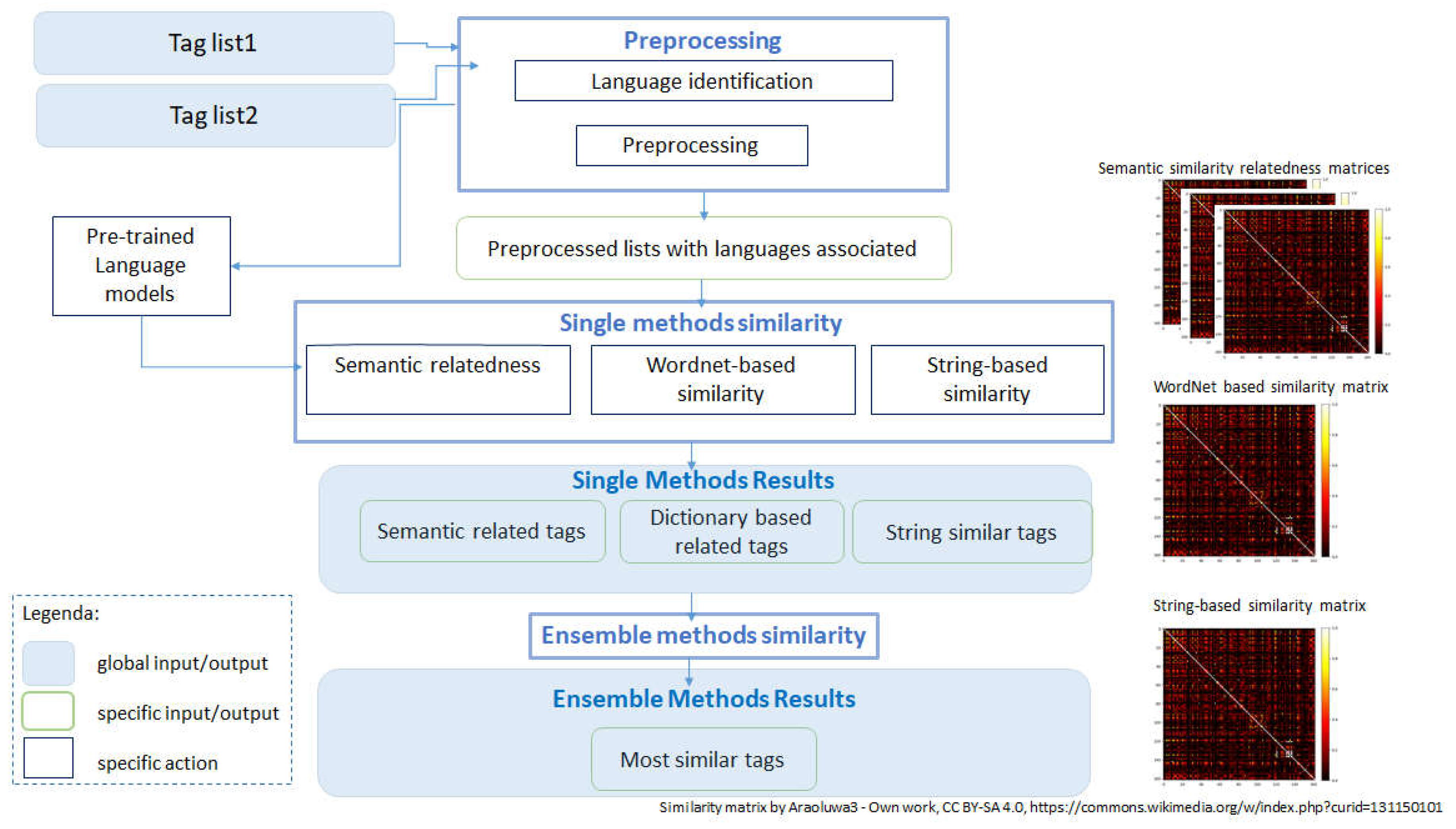

1) Language identification and preprocessing: identify the language, then clean and normalize the data by removing punctuation, converting to lowercase, and handling any specific linguistic considerations (e.g., stemming or lemmatization) to ensure consistency in word representations.

2) Single methods similarity:

   a) Identify the similarity/relatedness techniques to be used to evaluate the similarity between terms, for example word embeddings, transformers using pretrained BERT-like models, and dictionary-based and syntactic-based methods.

   b) Compute a similarity matrix for the elements in the lists, one for each similarity/relatedness method: the similarity scores between the target word and all other words in the dataset are computed using cosine similarity or other similarity measures. The similarity score quantifies the relatedness between two words based on their vector representations or other representations, according to the technique.

   c) Ranking and selection: rank the words by similarity score in descending order. A threshold can be defined, or the top N words can be selected as the most related terms, depending on the desired number of results.

3) Ensemble approach: combine the results of multiple similarity measures to derive final similarity scores.
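As a rough illustration of the similarity-matrix and ranking steps, the following minimal sketch computes cosine similarities between two lists of tags and keeps the top N candidates. The three-dimensional vectors are invented toy values, not embeddings produced by any of the models used in this work, and the helper names (`cosine`, `similarity_matrix`, `top_n`) are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def similarity_matrix(vectors_a, vectors_b):
    """One row per vector in vectors_a, one column per vector in vectors_b."""
    return [[cosine(u, v) for v in vectors_b] for u in vectors_a]


def top_n(scores, labels, n=3, threshold=None):
    """Rank labels by score (descending), optionally dropping low scores."""
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    if threshold is not None:
        ranked = [(label, score) for label, score in ranked if score >= threshold]
    return ranked[:n]


# Toy vectors (assumed values, for illustration only).
list1 = {"wedding": [0.9, 0.1, 0.0]}
list2 = {
    "marriage": [0.8, 0.2, 0.1],
    "bread": [0.0, 0.1, 0.9],
    "ceremony": [0.6, 0.5, 0.1],
}

matrix = similarity_matrix(list(list1.values()), list(list2.values()))
best = top_n(matrix[0], list(list2.keys()), n=2)
print(best)
```

Whether a threshold or a fixed N works better depends on the archive; the sketch supports both so that the two options can be compared.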

3.1. Materials

3.2. Language identification and preprocessing

3.3. Single methods similarity

- The shortest path length measure computes the length of the shortest path between two synsets in the WordNet graph, representing the minimum number of hypernym links required to connect the synsets. This measure assigns a higher similarity score to word pairs with a shorter path length, indicating a closer semantic relationship. It will be referred to as path.

- Wu-Palmer Similarity: The Wu-Palmer similarity measure utilizes the depth of the LCS (Lowest Common Subsumer - the most specific common ancestor of two synsets in WordNet's hierarchy) and the shortest path length to assess the relatedness between synsets. By considering the depth of the LCS in relation to the depths of the synsets being compared, this measure aims to capture the conceptual similarity based on the position of the common ancestor in the WordNet hierarchy. It will be referred to as wu.

- Measure based on distance: analogously to the shortest path length, this measure is also based on the minimum distance between two synsets. This measure, hand-crafted by the authors, takes into consideration that the shorter the distance, the greater the similarity. In this case, the similarity measure is calculated with an equation defined by the authors.
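The first two measures (path and wu) can be illustrated on a toy hypernym tree. The sketch below is not WordNet: the tree, the node names and the helper functions are invented for illustration. It assumes that depth is counted in nodes with the root at depth 1, that path similarity takes the common form 1 / (1 + path length), and that Wu-Palmer is 2 * depth(LCS) / (depth(a) + depth(b)). The authors' own distance-based equation is not covered by this sketch.

```python
# Toy hypernym tree: child -> parent (None marks the root). Invented data.
PARENT = {
    "entity": None,
    "event": "entity",
    "ceremony": "event",
    "wedding": "ceremony",
    "funeral": "ceremony",
    "food": "entity",
    "bread": "food",
}


def ancestors(node):
    """Chain from the node up to the root, starting with the node itself."""
    chain = []
    while node is not None:
        chain.append(node)
        node = PARENT[node]
    return chain


def depth(node):
    """Depth counted in nodes, so the root has depth 1 (an assumption)."""
    return len(ancestors(node))


def lowest_common_subsumer(a, b):
    """Most specific common ancestor of a and b in the toy tree."""
    seen = set(ancestors(a))
    for node in ancestors(b):
        if node in seen:
            return node
    raise ValueError("nodes do not share a root")


def path_length(a, b):
    """Number of hypernym links on the shortest path between a and b."""
    lcs = lowest_common_subsumer(a, b)
    return (depth(a) - depth(lcs)) + (depth(b) - depth(lcs))


def path_similarity(a, b):
    """Shorter paths give higher scores; identical nodes give 1.0."""
    return 1.0 / (1.0 + path_length(a, b))


def wu_palmer(a, b):
    """Wu-Palmer similarity from the depth of the LCS and of both nodes."""
    lcs = lowest_common_subsumer(a, b)
    return 2.0 * depth(lcs) / (depth(a) + depth(b))


print(path_similarity("wedding", "funeral"), wu_palmer("wedding", "funeral"))
print(path_similarity("wedding", "bread"), wu_palmer("wedding", "bread"))
```

In the toy tree, "wedding" and "funeral" share the specific ancestor "ceremony" and therefore score higher on both measures than "wedding" and "bread", whose only common ancestor is the root.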

3.4. Ensemble methods

- n pretrained language models: as we will see in the experimentation part, the datasets on which we tested our approach can be monolingual (English or Italian, so far), or multilingual (English, Italian or French, in the experiments). It is therefore necessary to identify the models that are best able to represent the specificity of the data under consideration.

- semantic relatedness: three different methods of calculating the representative vector must be compared.

- WordNet-based similarity: again, three different ways of calculating the similarity between words are compared.
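The pipeline outline in this article names [CLS] tokens, mean pooling and max pooling as the ways of obtaining a representative vector. The sketch below shows the three strategies on a small matrix of token vectors. The numbers are invented, the function names are hypothetical, and padding or attention masks, which a real transformer pipeline would need to handle, are deliberately ignored.

```python
def cls_pooling(token_vectors):
    """Use the vector of the first ([CLS]) token as the representation."""
    return list(token_vectors[0])


def mean_pooling(token_vectors):
    """Average each dimension over all tokens."""
    count = len(token_vectors)
    return [sum(column) / count for column in zip(*token_vectors)]


def max_pooling(token_vectors):
    """Take the maximum of each dimension over all tokens."""
    return [max(column) for column in zip(*token_vectors)]


# Toy token vectors for one tag (assumed values); row 0 plays the [CLS] role.
tokens = [
    [0.2, 0.4, -0.1],
    [0.6, 0.0, 0.3],
    [0.1, 0.8, 0.1],
]

for name, pool in [("cls", cls_pooling), ("mean", mean_pooling), ("max", max_pooling)]:
    print(name, pool(tokens))
```

Each strategy yields one vector per tag, so the same similarity computation can be run three times per model and the resulting rankings compared.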

3.5. Evaluation of the results

1) Recall: Recall measures the proportion of relevant items that are correctly identified or retrieved by a model. It focuses on the ability to find all positive instances and is calculated as the ratio of true positives to the sum of true positives and false negatives.

2) Precision: Precision measures the proportion of retrieved items that are actually relevant or correct. It focuses on the accuracy of the retrieved items and is calculated as the ratio of true positives to the sum of true positives and false positives.

3) F1 score: The F1 score combines precision and recall into a single metric. It is the harmonic mean of precision and recall and provides a balanced measure of a model's performance. The F1 score ranges from 0 to 1, with 1 being the best performance. It is calculated as 2 * (precision * recall) / (precision + recall).

4) Dice coefficient: The Dice coefficient is a metric commonly used for measuring the similarity between two sets. In the context of natural language processing, it is often employed for evaluating the similarity between predicted and reference sets, such as in entity extraction or document clustering. The Dice coefficient ranges from 0 to 1, with 1 indicating a perfect match. It is calculated as 2 * (intersection of sets) / (sum of set sizes).

5) Jaccard coefficient: The Jaccard coefficient, also known as the Jaccard similarity index, measures the similarity between two sets. It is commonly used for tasks like clustering, document similarity, or measuring the overlap between predicted and reference sets. The Jaccard coefficient ranges from 0 to 1, with 1 indicating a complete match. It is calculated as the ratio of the intersection of sets to the union of sets.
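The five measures can be computed directly from a predicted set and a reference set of tags. The sketch below is a minimal illustration with invented tags; the function name `evaluate` and the convention of returning 0.0 when a denominator is empty are assumptions.

```python
def evaluate(predicted, reference):
    """Set-based precision, recall, F1, Dice and Jaccard."""
    predicted, reference = set(predicted), set(reference)
    tp = len(predicted & reference)   # true positives
    fp = len(predicted - reference)   # false positives
    fn = len(reference - predicted)   # false negatives

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    total = len(predicted) + len(reference)
    dice = 2 * tp / total if total else 0.0
    union = len(predicted | reference)
    jaccard = tp / union if union else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "dice": dice,
        "jaccard": jaccard,
    }


# Invented example: tags proposed by a method vs. tags chosen by annotators.
scores = evaluate(
    predicted={"marriage", "ceremony", "bread"},
    reference={"marriage", "ceremony", "bride", "ritual"},
)
print(scores)
```

For plain sets the Dice coefficient coincides with the F1 score, which is why the two values agree in this example, while the Jaccard coefficient is always less than or equal to both.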

4. The Experimentation

4.1. Datasets

- QueryLab Platform

- During the search phase, the query is expanded to include all tags that surpass a predetermined similarity threshold. This expansion broadens the search scope to tags that are semantically similar to the original query, with the aim of improving the search results by considering related keywords.

- During the fruition stage, elements that contain similar keywords are suggested to enhance the user experience. Recommending elements that share similar keywords gives users relevant, related content and lets them explore information beyond their initial query.
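The query expansion step can be sketched as a simple filter over precomputed similarity scores. This is only an illustration: the function name `expand_query`, the threshold value of 0.7 and the scores are assumptions, not values taken from QueryLab.

```python
def expand_query(query_tag, similarities, threshold=0.7):
    """Return the query tag followed by every tag scoring at least threshold."""
    ranked = sorted(similarities.items(), key=lambda item: item[1], reverse=True)
    related = [tag for tag, score in ranked
               if score >= threshold and tag != query_tag]
    return [query_tag] + related


# Invented similarity scores between the query tag and candidate tags.
candidate_scores = {"marriage": 0.92, "bride": 0.75, "bread": 0.10}
expanded = expand_query("wedding", candidate_scores, threshold=0.7)
print(expanded)
```

Lowering the threshold widens the search at the cost of precision, so the value would need to be tuned on the archive at hand.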

4.2. Dataset preparation

- Language identification and preprocessing

4.3. Single Methods similarity

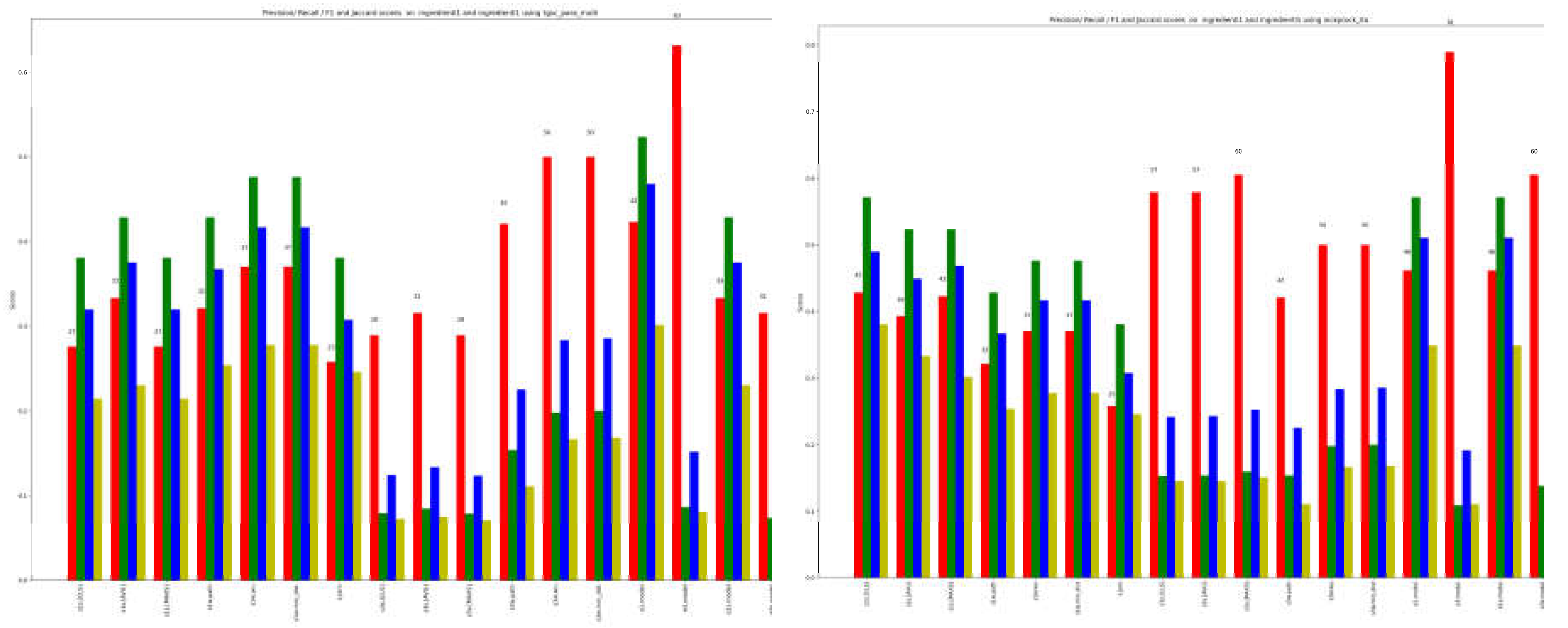

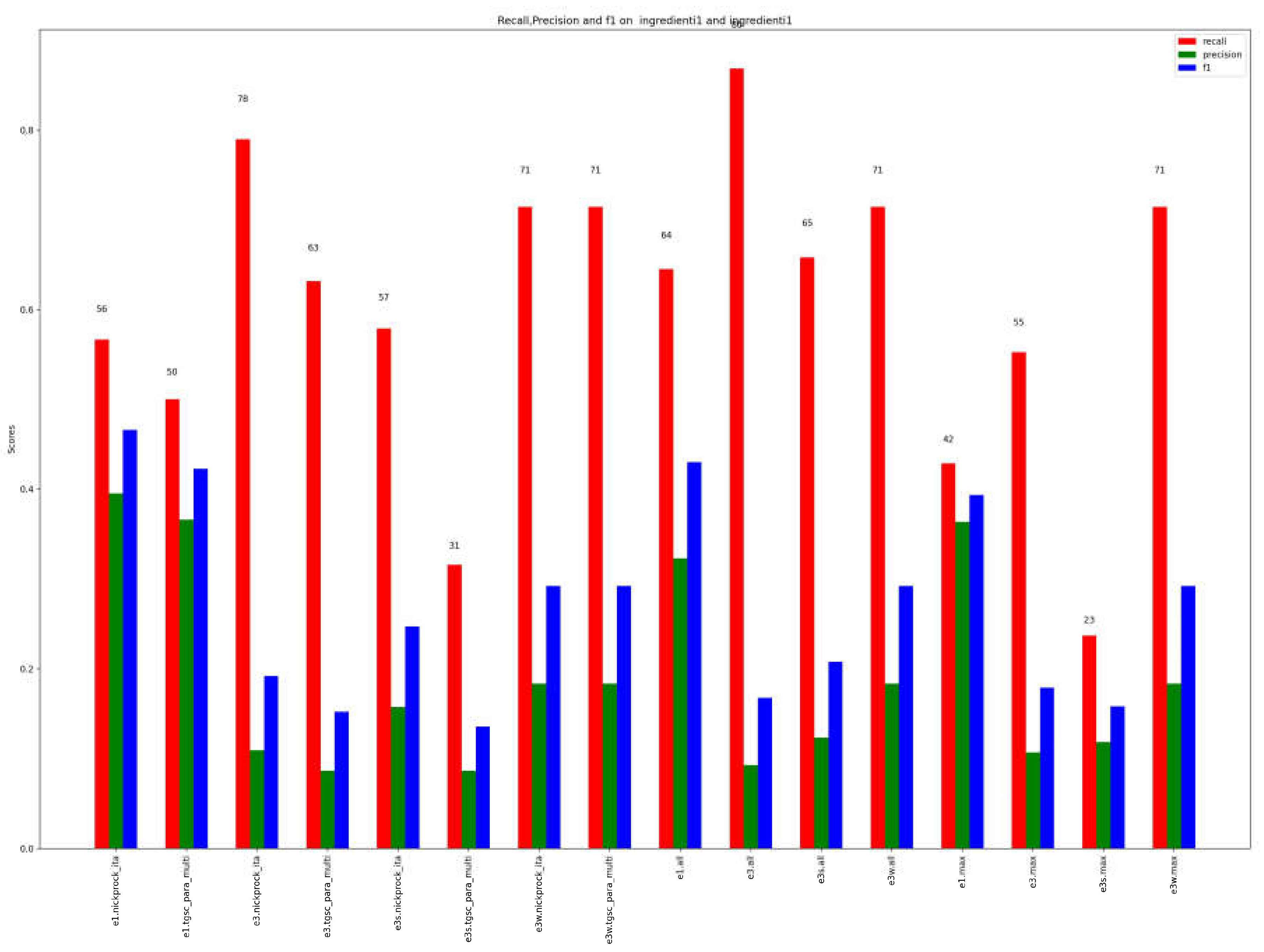

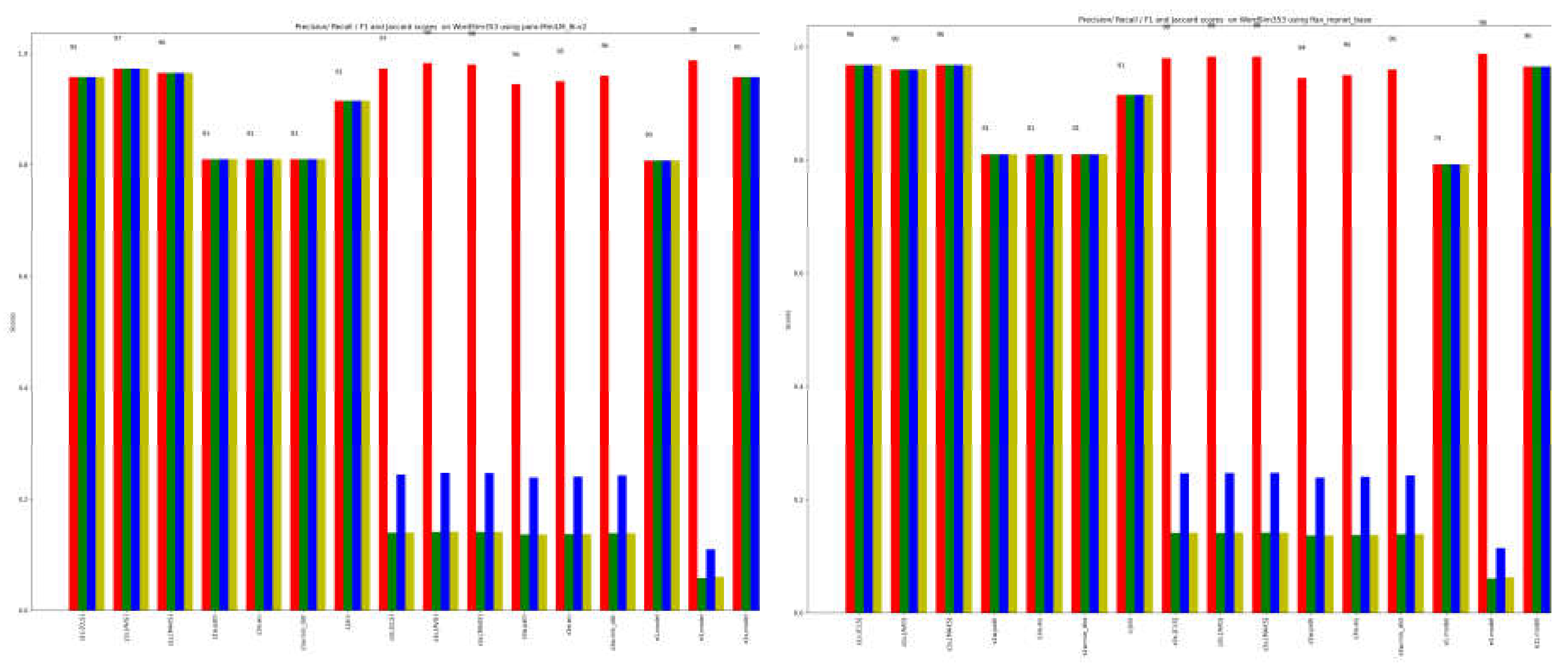

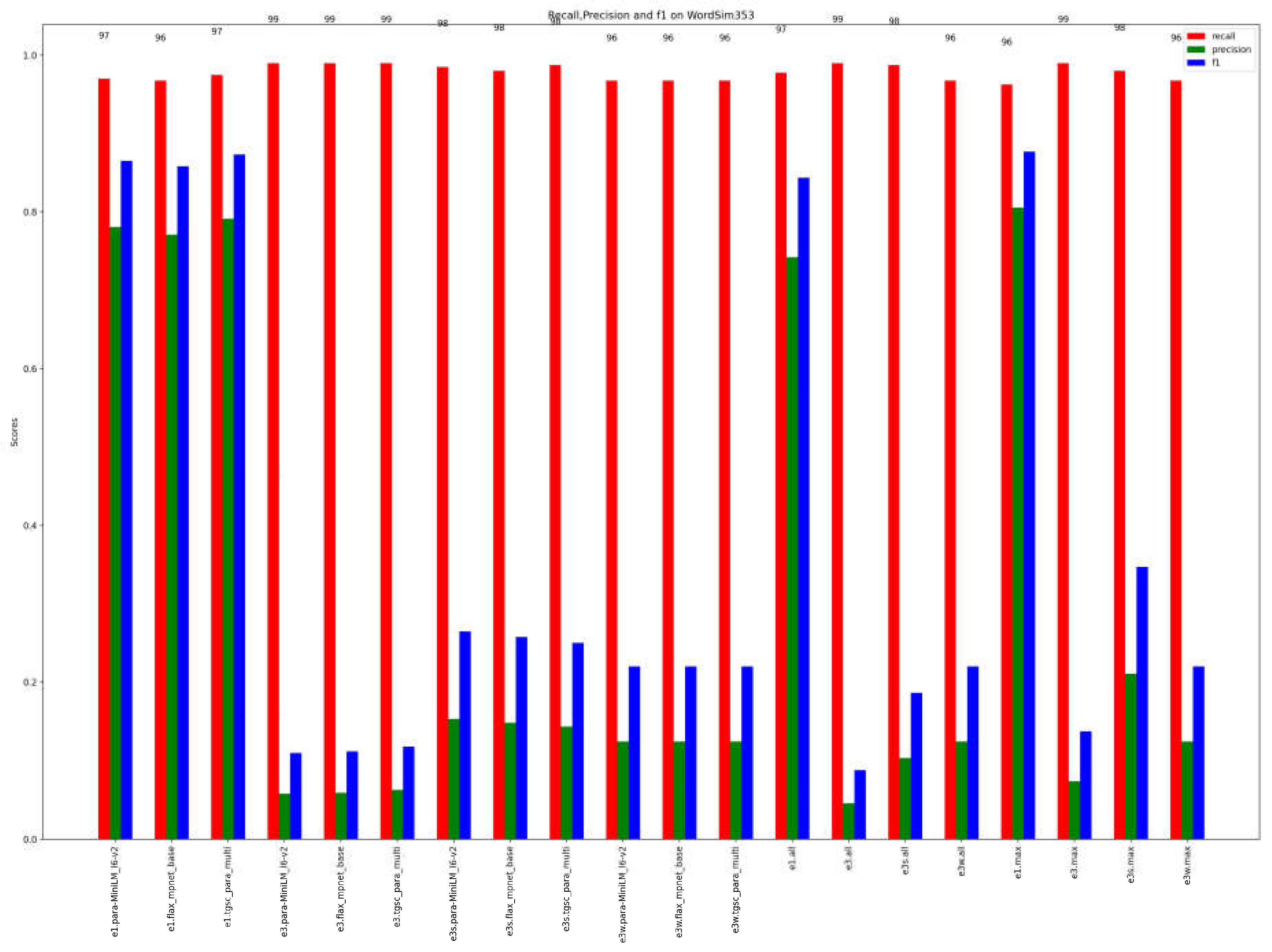

- Determining the best similarity score: Various similarity assessment methods were utilized to calculate similarity scores between tags. The evaluation aimed to identify the similarity score that best captured the semantic similarity between the given tag and other tags in the datasets.

- Identifying the single tag with the highest similarity: Based on the calculated similarity scores, the tags that exhibited the highest similarity to the given tag were identified, one for each similarity measure.

- Identifying the three most similar features: In addition to finding the single tag with the highest similarity, the evaluation process also focused on identifying the three most similar features. These features are the elements (1-gram or n-grams) that demonstrated high similarity to the given tag.

- Semantic relatedness

- Models designed for the English language: we tested three pretrained models, i.e.:

   - 'sentence-transformers/paraphrase-MiniLM-L6-v2'

   - 'flax-sentence-embeddings/all_datasets_v3_mpnet-base'

   - 'tgsc/sentence-transformers_paraphrase-multilingual-mpnet-base-v2'

- Models tailored for the Italian language:

   - 'nickprock/sentence-bert-base-italian-xxl-uncased'

   - 'tgsc/sentence-transformers_paraphrase-multilingual-mpnet-base-v2'

- Multilingual models capable of handling multiple languages:

   - 'LLukas22/paraphrase-multilingual-mpnet-base-v2-embedding-all'

   - 'tgsc/sentence-transformers_paraphrase-multilingual-mpnet-base-v2'

- WordNet based similarity

- Not all WordNet synsets have been translated into all languages. This implies that some synsets may not be available in certain languages, which can affect the matching process.

- By employing machine translation on tags, we can increase the likelihood of finding a corresponding synset. This strategy helps overcome language barriers and enhances the chances of locating relevant synsets.

- String-based similarity

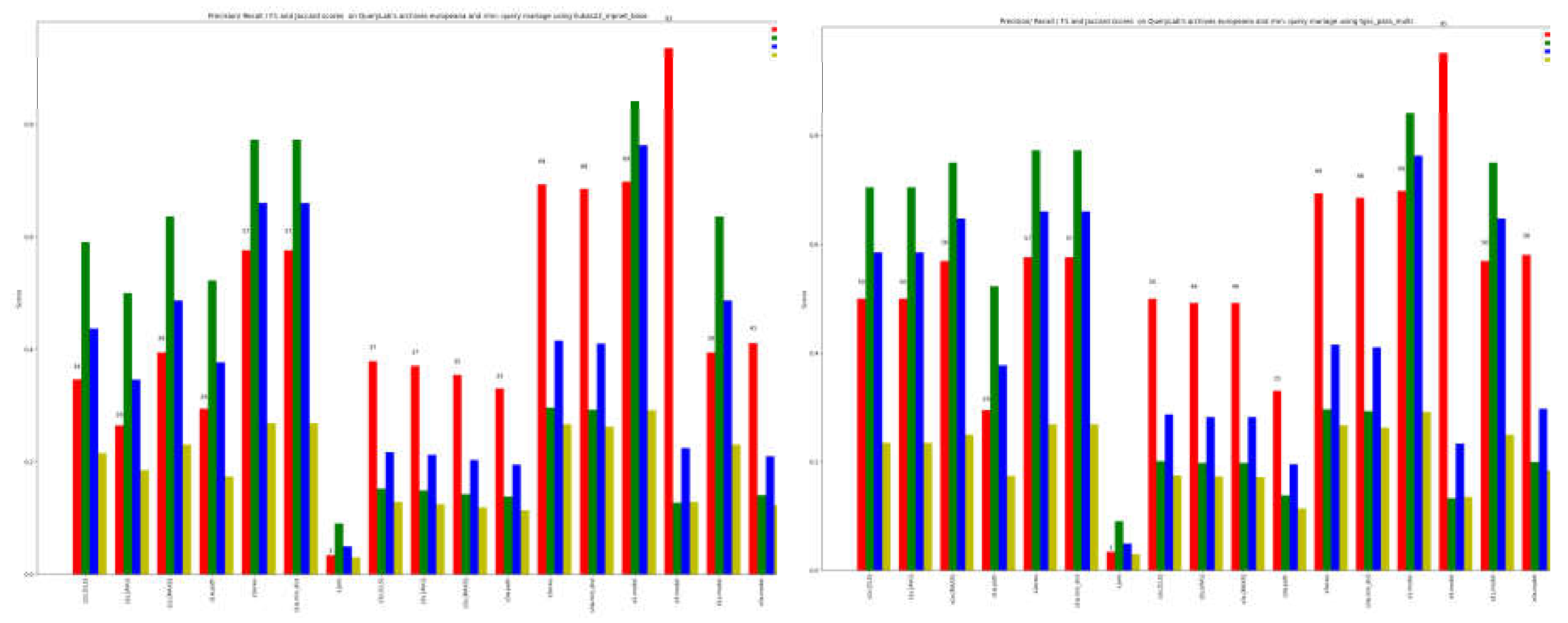

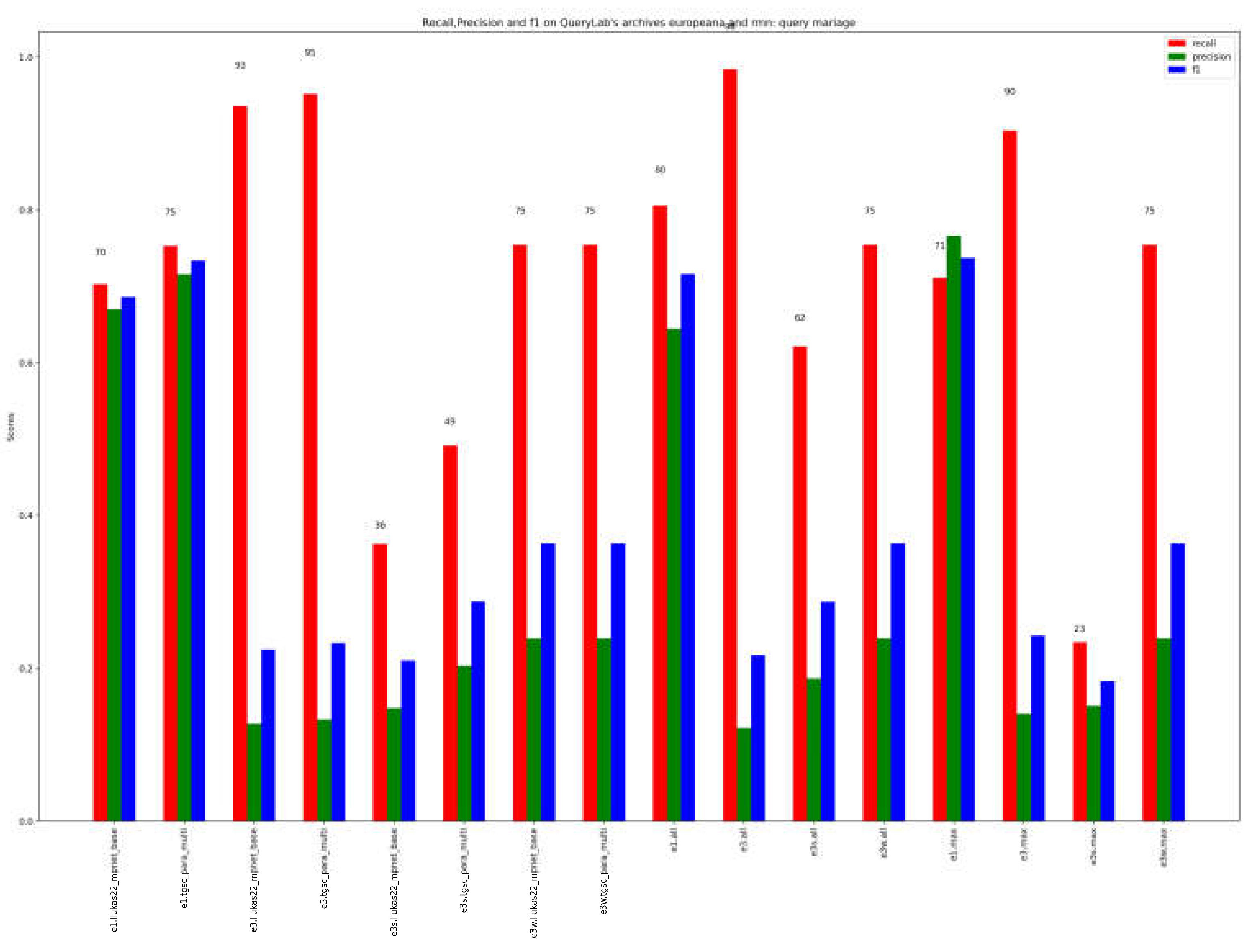

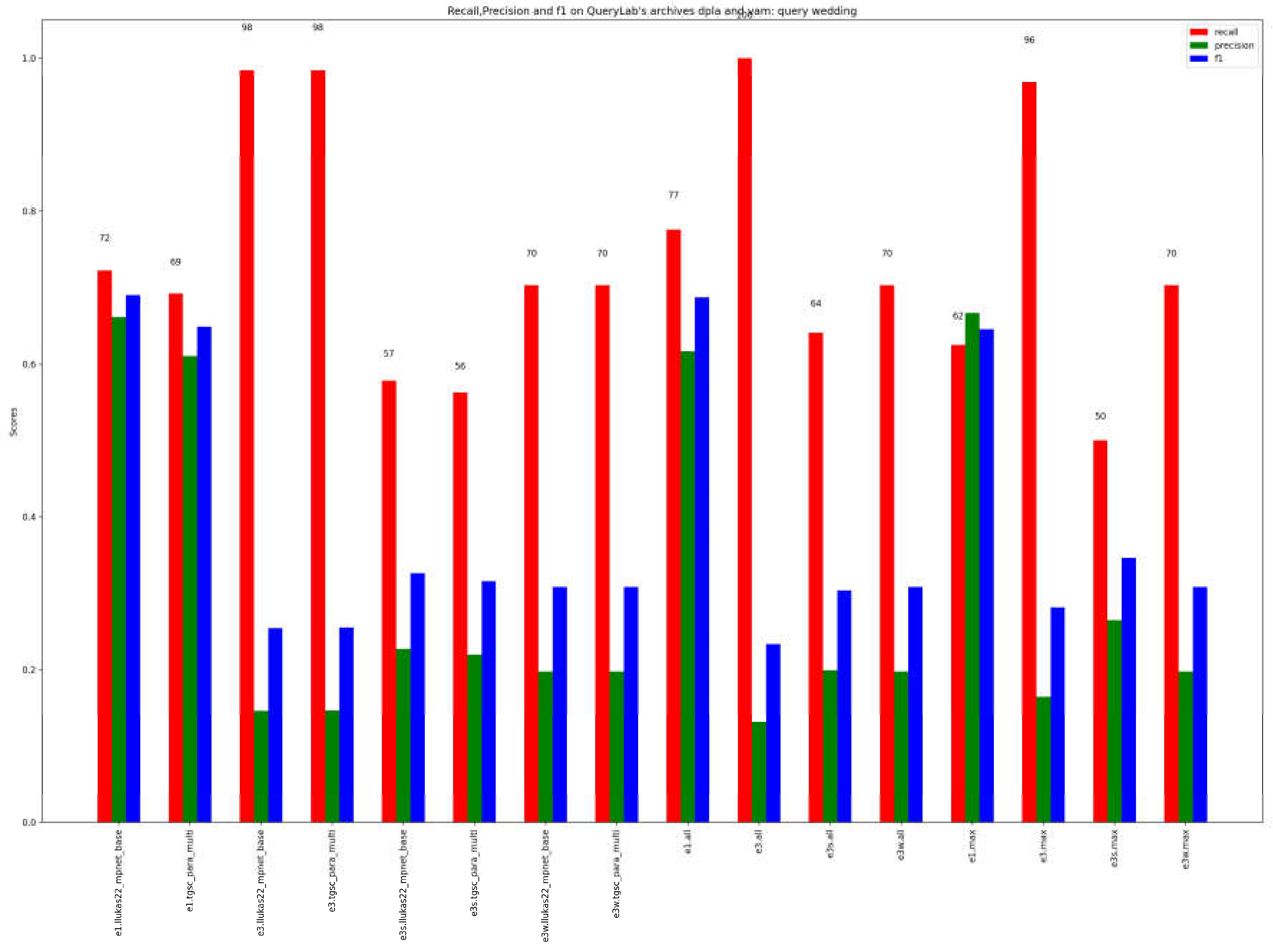

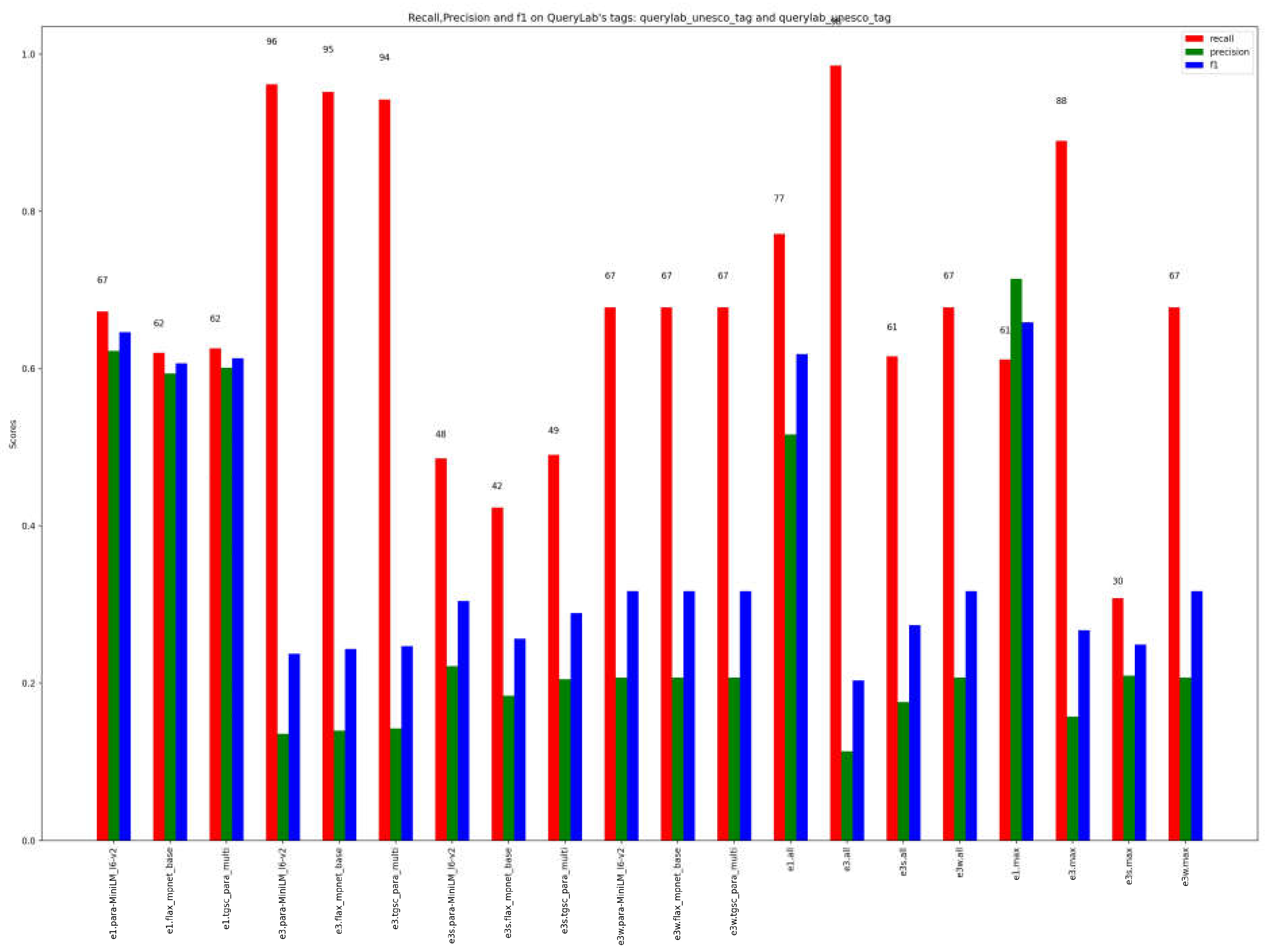
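The string-based step in the pipeline outline relies on Jaro-Winkler similarity. The following pure-Python sketch follows the standard definition of the measure, with the usual prefix scale of 0.1 and a maximum prefix length of 4; it is an illustration, not the implementation used in the experiments.

```python
def jaro(s1, s2):
    """Jaro similarity between two strings, in the range [0, 1]."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    # Characters match only if they are within this distance of each other.
    window = max(max(len1, len2) // 2 - 1, 0)
    matched1 = [False] * len1
    matched2 = [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        start = max(0, i - window)
        end = min(len2, i + window + 1)
        for j in range(start, end):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Matched characters that appear in a different order count as
    # half a transposition each.
    seq1 = [c for c, m in zip(s1, matched1) if m]
    seq2 = [c for c, m in zip(s2, matched2) if m]
    transpositions = sum(a != b for a, b in zip(seq1, seq2)) // 2
    return (matches / len1 + matches / len2
            + (matches - transpositions) / matches) / 3.0


def jaro_winkler(s1, s2, prefix_scale=0.1, max_prefix=4):
    """Jaro similarity boosted for strings sharing a common prefix."""
    base = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1, s2):
        if a != b or prefix == max_prefix:
            break
        prefix += 1
    return base + prefix * prefix_scale * (1.0 - base)


print(round(jaro_winkler("MARTHA", "MARHTA"), 4))
print(round(jaro_winkler("mariage", "marriage"), 4))
```

Because the measure looks only at characters, it can link spelling variants across languages (such as "mariage" and "marriage") but carries no semantic information, which is one reason to combine it with the other methods.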

4.4. Ensemble Methods similarity/relatedness

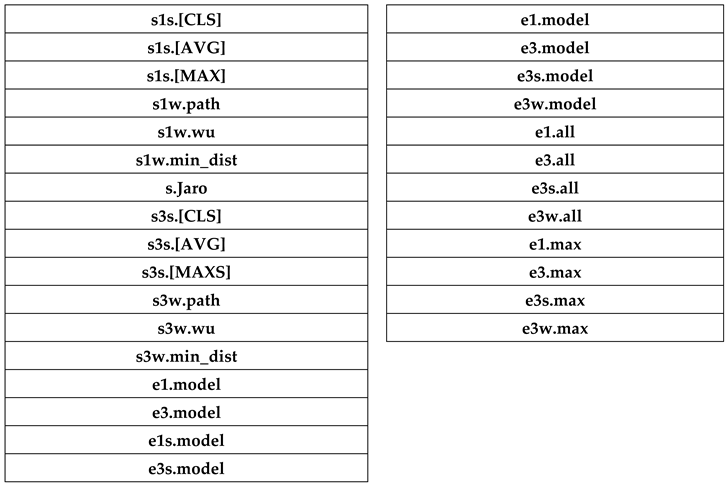

- Single Model Ensemble: the combined results for a single model are referred to as enx.modelname, where n indicates whether only the most similar term (n=1) or the three most similar terms (n=3) are considered. The 'x' in the notation can be empty (indicating no specific method), 's' for semantic methods, or 'w' for WordNet-based methods. The 'modelname' refers to the individual pretrained model used for the specific experiment.

- All Models Ensemble: the combined results (enx.all) consider all models, with the majority voting mechanism applied to all terms. The 'n' value can be 1 or 3, while 'x' can be empty, 's' for semantic methods, or 'w' for WordNet-based methods.

- Most Frequent Terms Ensemble: the combined results (enx.max) consider all models, with the majority voting mechanism applied only to the most frequent terms. The 'n' value can be 1 or 3, and 'x' can be empty, 's' for semantic methods, or 'w' for WordNet-based methods.
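A majority voting step of this kind can be sketched as follows. Each method contributes its top n candidates, and candidates are ranked by the number of methods that proposed them. The method names, the candidate lists and the alphabetical tie-break are assumptions made for the example; the weighting used in the single-model ensemble is not modeled here.

```python
from collections import Counter


def majority_vote(candidates_by_method, n=1):
    """Rank candidate tags by how many methods place them in their top n."""
    votes = Counter()
    for candidates in candidates_by_method.values():
        # A method votes at most once for each of its top-n candidates.
        votes.update(set(candidates[:n]))
    # Most votes first; ties are broken alphabetically (an assumption).
    return sorted(votes.items(), key=lambda item: (-item[1], item[0]))


# Invented ranked lists for the tag "wedding", one per similarity method.
ranked_lists = {
    "cls": ["marriage", "ceremony", "bride"],
    "mean": ["marriage", "bride", "ritual"],
    "path": ["ceremony", "marriage", "ritual"],
    "jaro": ["weeding", "bedding", "welding"],
}

print(majority_vote(ranked_lists, n=1))
print(majority_vote(ranked_lists, n=3))
```

With n=1 only the best candidate of each method votes; with n=3 a term that is consistently near the top can win even if it is rarely ranked first.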

4.5. Evaluation

- User ground truth

- Eliminate the word: If no suitable term could be found, the annotators had the option to exclude the word from the annotation process due to the unavailability of appropriate alternatives.

- Make an extremely bland association: In cases where the annotators faced significant difficulty in finding similar terms, they had the option to make a less precise or less contextually relevant association. This option aimed to capture any resemblance or connection, even if it was not an ideal match.

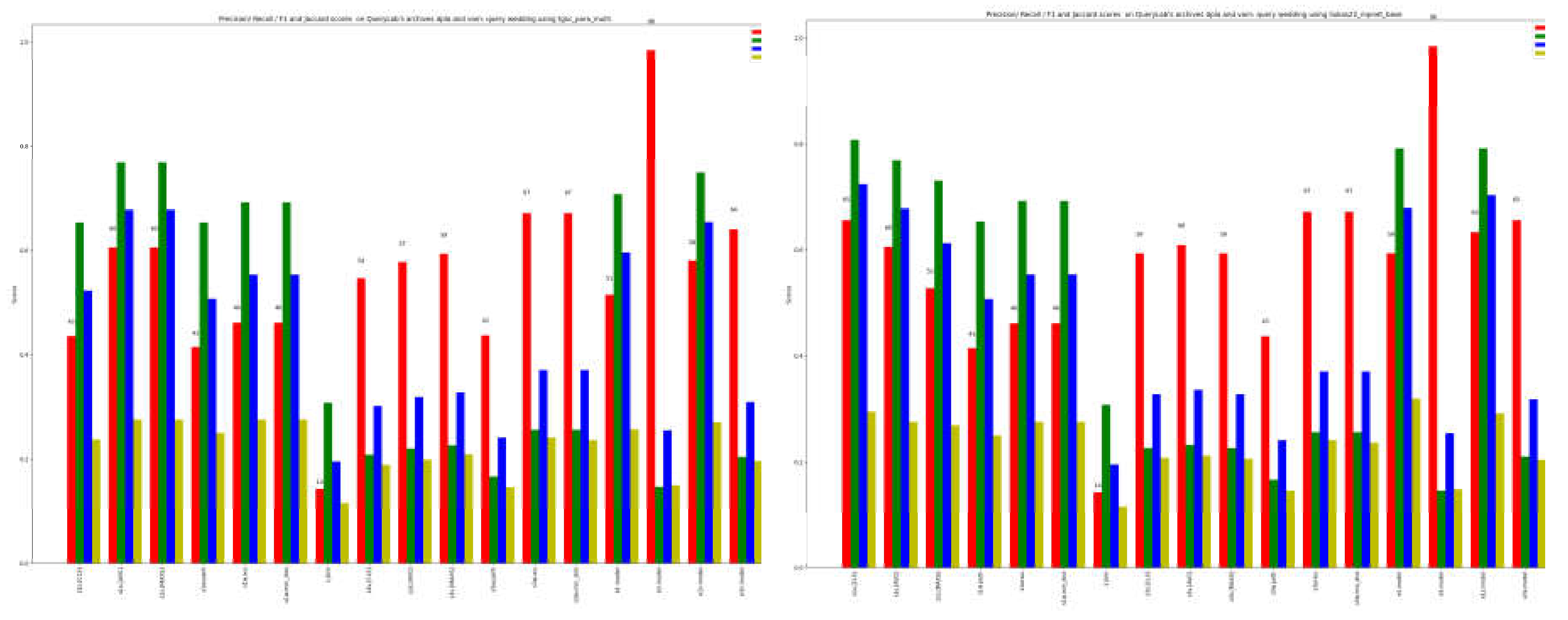

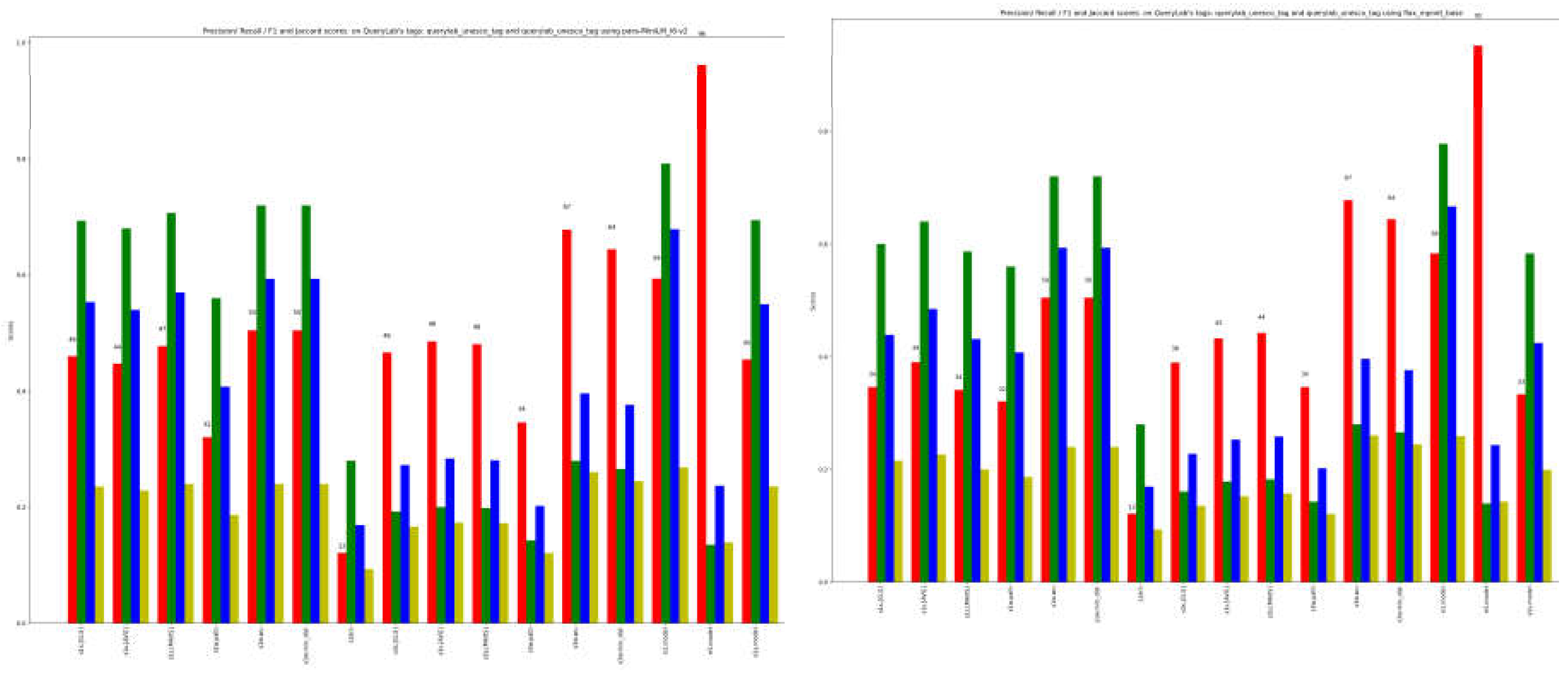

5. Results and discussion

5.1. Datasets used

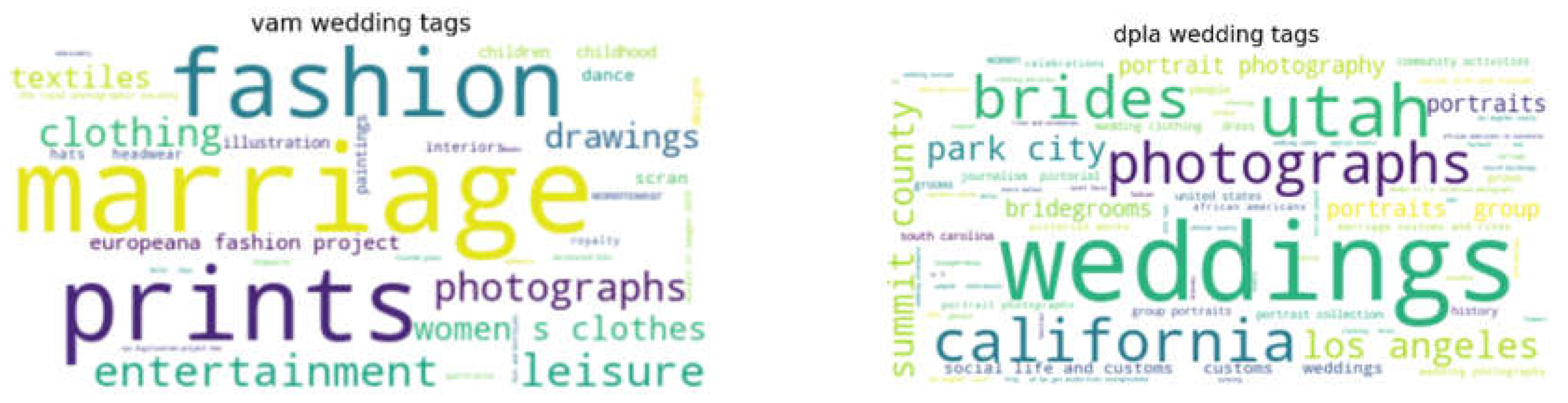

1. Keywords extracted dynamically in QueryLab. QueryLab's archives are multilingual, and almost all of them are able to respond with English terms; when tested, however, different languages coexist in, e.g., RMN or DPLA. The keywords are the results of the following queries:

   1) mariage on Europeana and RMN

   2) wedding on Victoria & Albert Museum and DPLA.

2. Intangible heritage-related tag lists extracted from QueryLab. These lists are hand-built by experienced ethnographers. The tags we compare are those defined for the Archives of Ethnographies and Social History (AESS) and its transnational version for the inventory of the intangible cultural heritage of some regions in Northern Italy, some cantons in Switzerland and some traditions in Germany, France and Austria [28], and those of UNESCO [29], imported into QueryLab. The language is the same (English), but there are extremely specialized terms, making it difficult to assess similarity. This dataset is called ICH_TAGS.

3. Tag lists referring to cooking and ingredients, in Italian, again taken from QueryLab. The interest is to handle data belonging to a specific domain, in a language other than English, for both semantic and dictionary-based similarity. The pretrained models used are those for Italian, and a comparison was made with a multilingual model. In the following, this dataset is called Cook_IT.

4. WordSim353, used as a gold standard.

5.2. Results Evaluation

6. Conclusions and future works

References

- Atoum, I., & Otoom, A. A Comprehensive Comparative Study of Word and Sentence Similarity Measures. International Journal of Computer Applications, 975, 8887.

- Gomaa, W. H., & Fahmy, A. A. (2013). A survey of text similarity approaches. International Journal of Computer Applications, 68(13), 13-18.

- Gupta, A., Kumar, A., Gautam, J., Gupta, A., Kumar, M. A., & Gautam, J. (2017). A survey on semantic similarity measures. International Journal for Innovative Research in Science & Technology, 3(12), 243-247.

- Sunilkumar, P., & Shaji, A. P. (2019, December). A survey on semantic similarity. In 2019 International Conference on Advances in Computing, Communication and Control (ICAC3) (pp. 1-8). IEEE.

- Wang, J., & Dong, Y. (2020). Measurement of text similarity: a survey. Information, 11(9), 421.

- Meng, L., Huang, R., & Gu, J. (2013). A review of semantic similarity measures in wordnet. International Journal of Hybrid Information Technology, 6(1), 1-12.

- Atoum, I., & Otoom, A. (2016). Efficient hybrid semantic text similarity using WordNet and a corpus. International Journal of Advanced Computer Science and Applications, 7(9).

- Ensor, T. M., MacMillan, M. B., Neath, I., & Surprenant, A. M. (2021). Calculating semantic relatedness of lists of nouns using WordNet path length. Behavior Research Methods, 1-9.

- Kenter, T., & De Rijke, M. (2015, October). Short text similarity with word embeddings. In Proceedings of the 24th ACM international on conference on information and knowledge management (pp. 1411-1420).

- Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2018). Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint.

- Chandrasekaran, D., & Mago, V. (2021). Evolution of semantic similarity - a survey. ACM Computing Surveys (CSUR), 54(2), 1-37.

- Zad, S., Heidari, M., Hajibabaee, P., & Malekzadeh, M. (2021, October). A survey of deep learning methods on semantic similarity and sentence modeling. In 2021 IEEE 12th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON) (pp. 0466-0472). IEEE.

- Arslan, Y., Allix, K., Veiber, L., Lothritz, C., Bissyandé, T. F., Klein, J., & Goujon, A. (2021, April). A comparison of pre-trained language models for multi-class text classification in the financial domain. In Companion Proceedings of the Web Conference 2021 (pp. 260-268).

- Li, Y., Wehbe, R. M., Ahmad, F. S., Wang, H., & Luo, Y. (2023). A comparative study of pretrained language models for long clinical text. Journal of the American Medical Informatics Association, 30(2), 340-347.

- Wang, H., Li, J., Wu, H., Hovy, E., & Sun, Y. (2022). Pre-Trained Language Models and Their Applications. Engineering.

- Guo, T. (2021). A Comprehensive Comparison of Pre-training Language Models (Version 7). TechRxiv. https://doi.org/10.36227/techrxiv.14820348.v7

- Artese, M. T., & Gagliardi, I. (2022). Integrating, Indexing and Querying the Tangible and Intangible Cultural Heritage Available Online: The QueryLab Portal. Information, 13(5), 260.

- Wolf, T., Debut, L., Sanh, V., Chaumond, J., Delangue, C., Moi, A., ... Xu, C. (2020). Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 conference on empirical methods in natural language processing: system demonstrations (pp. 38-45). Association for Computational Linguistics. doi:10.18653/v1/2020.emnlp-demos.6.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., ... & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

- Fellbaum, C. (2010). WordNet. In Theory and applications of ontology: computer applications (pp. 231-243). Dordrecht: Springer Netherlands.

- Miller, G. A. (1995). WordNet: a lexical database for English. Communications of the ACM, 38(11), 39-41.

- Hassan, B., Abdelrahman, S. E., Bahgat, R., & Farag, I. (2019). UESTS: An unsupervised ensemble semantic textual similarity method. IEEE Access, 7, 85462-85482.

- Rychalska, B., Pakulska, K., Chodorowska, K., Walczak, W., & Andruszkiewicz, P. (2016, June). Samsung Poland NLP Team at SemEval-2016 Task 1: Necessity for diversity; combining recursive autoencoders, WordNet and ensemble methods to measure semantic similarity. In Proceedings of the 10th international workshop on Semantic Evaluation (SemEval-2016) (pp. 602-608).

- Manning, C. D., Raghavan, P., & Schütze, H. (2008). Introduction to Information Retrieval. Cambridge University Press.

- Finkelstein, L., Gabrilovich, E., Matias, Y., Rivlin, E., Solan, Z., Wolfman, G., & Ruppin, E. (2001, April). Placing search in context: The concept revisited. In Proceedings of the 10th international conference on World Wide Web (pp. 406-414).

- Hill, F., Reichart, R., & Korhonen, A. (2015). Simlex-999: Evaluating semantic models with (genuine) similarity estimation. Computational Linguistics, 41(4), 665-695.

- Artese, M. T., & Gagliardi, I. (2022). Methods, Models and Tools for Improving the Quality of Textual Annotations. Modelling, 3(2), 224-242.

- Artese, M. T., & Gagliardi, I. (2017). Inventorying intangible cultural heritage on the web: a life-cycle approach. International Journal of Intangible Heritage, 12, 112-138.

- Unesco ICH. (2023, May). Retrieved from Browse the Lists of Intangible Cultural Heritage and the Register of good safeguarding practices: https://ich.unesco.org/en/lists.

1 Hugging Face models for sentence similarity: https://huggingface.co/models?pipeline_tag=sentence-similarity

2 WordSim353 and SimLex999 datasets: https://github.com/kliegr/word_similarity_relatedness_datasets

Task 1: Dataset Preparation

- Harvesting process
- Language identification
- Preprocessing (possibly strip stopwords, accents, …)
- Output: items of interest

Task 2: Single Method similarity/relatedness

- Semantic relatedness
  - Choice of transformers and pre-trained models
  - Fine tuning of pre-trained BERT-like models to obtain the vectors
  - Computation of similarity matrix using [CLS] tokens, mean and max pooling
  - Output: for each model, 3 lists of the most related tags, ordered by score value
- WordNet-based
  - For each tag1 in list1 and tag2 in list2:
    - Synsets identification for tag1 and tag2, using language and automatic translation if necessary
    - Computation of the max path_similarity and wu_p_similarity
    - Computation of the min distance and hand-crafted similarity
  - Output: 3 lists of the most related tags, ordered by score value
- String-based similarity
  - Computation of Jaro-Winkler similarity among strings
  - Output: list of the most related tags, ordered by score value

Task 3: Ensemble similarity

- Ensemble for each pretrained model
  - Weighted voting mechanism to create, for each language model, a unique list of most related tags
  - Output: list of the most related tags, ordered by score value
- Global ensemble
  - Majority voting mechanism
  - Output: lists of the most related tags, ordered by number of votes

Task 4: Evaluation

- Ground truth creation
- Computation of recall, precision, and Jaccard similarity

| Name (source) | Country | Data | Language | Description |
| --- | --- | --- | --- | --- |
| Intangible Search (local) | Italy | ICH | Italian, English, French, German | Arts and Entertainment, Oral Traditions, Rituals, Naturalistic knowledge, Technical knowledge |
| ACCUDatabank (local) | Pacific Area | ICH | English | Performing Arts |
| Sahapedia (local) | India | ICH | English (used here) and other local idioms | Knowledge Traditions, Visual and Material Arts, Performing Arts, Literature and Languages, Practices and Rituals, Histories… |
| Lombardy Digital Archive (local) | Italy | ICH, CH | Italian | Oral history, Literature, Performing Arts, Popular traditions, Work tools, Religion, Musical instruments, Family… |
| Museum Contemporary Photography (local) | Italy | CH | Italian | Architecture, Cities, Emigration, Family, Objects, Work, Landscapes, Sport… |
| Europeana [6] (web) | European countries | ICH, CH | multilingual | 1914-18 World War, Archaeology, Art, Fashion, Industrial Heritage, Manuscripts, Maps, Migration, Music, Natural History, Photography… |
| Victoria & Albert Museum (web) | UK | ICH, CH | English | Architecture, Embroidery, Costume, Paintings, Photography, Frames, Wallpaper, Jewelry, Illustration, Fashion, Manuscripts, Wedding dress, Books, Opera, Performance… |
| Cooper Hewitt (web) | USA | CH | English | Decorative Arts, Designs, Drawings, Posters, Models, Objects, Fashion, Textiles, Wallcoverings, Prints… |
| Réunion des Musée Nat – RMN (web) | France | ICH, CH | French with tags in English | Photography, Paintings, Sculptures, Literature, Dances, Modern Art, Decorative Arts, Music, Drawings, Architecture… |
| Auckland Museum (web) | New Zealand | ICH, CH | English | Natural Sciences, Human History, Archaeology, War history, Arts and Design, Manuscripts, Maps, Books, Newspapers… |
| Digital Public Library of America – DPLA (web) | USA | ICH, CH | English | American civil war, Aviation, Baseball, Civil rights movement, Immigration, Photography, Food, Women in science… |
| Harvard Art Museums – HAM (web) | USA | ICH, CH | English | art history, conservation, and conservation science; learning and supporting activities |
| CookIT | Italy | ICH | Italian | Traditional Italian Recipes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).