1. Introduction

Music information retrieval is one of the core research areas of computer vision and audition [1,2]. It provides technical support for music education, music theory, music composition, and other related fields by understanding and analyzing music content. The staff is an extremely important way of recording auditory music in image form, and it is also an important source of digital music. Semantic understanding of the optical staff aims to encode pictorial music into playable digital code. Because a multitude of scores are recorded in staff notation, this technology has had, and will continue to have, a huge impact on musical applications in the deluge of digital information [3,4].

Semantic understanding of the optical staff is closely related to optical music recognition (OMR) [5,6,7]. OMR has been an important application of machine learning since the middle of the last century, and the extent to which it can be achieved has varied with technological development and changing needs. When deep learning was at a low ebb, OMR progressed from separating and extracting symbolic primitives (lines, heads, stems, tails, beams, etc.) to recognizing notes by using the correlations between primitives and the related rules of musical notation [8,9]. With the gradual maturing of the deep learning ecosystem, studies based on deep learning have provided new ideas for OMR and raised new recognition requirements. Pacha et al. [10] proposed region-based convolutional neural networks for staff symbol detection and used the Faster R-CNN [11] model to locate and classify single-line staff symbols. Two semantic segmentation approaches to staff symbols, the U-Net [12] model applied by Hajič jr. et al. [13] and the deep watershed detector proposed by Tuggener et al. [14], fail to detect pitch and duration. Huang et al. [15] proposed an end-to-end network model for staff symbol recognition by modifying YOLOv3 [16] to detect pitch and duration separately. OMR algorithms based on sequence modeling mainly target monophonic music sheets and cannot completely understand the meaning of all symbols; for example, Van der Wel et al. [17] used a sequence-to-sequence [18] model, and Baró et al. [19] used a convolutional recurrent neural network consisting of a CNN and an LSTM [20]. In summary, deep learning algorithms have so far been able to detect and recognize the locations and classes of some symbols in staff images of low complexity (i.e., low symbol density, small span, and few varieties) and to achieve partial semantic understanding.

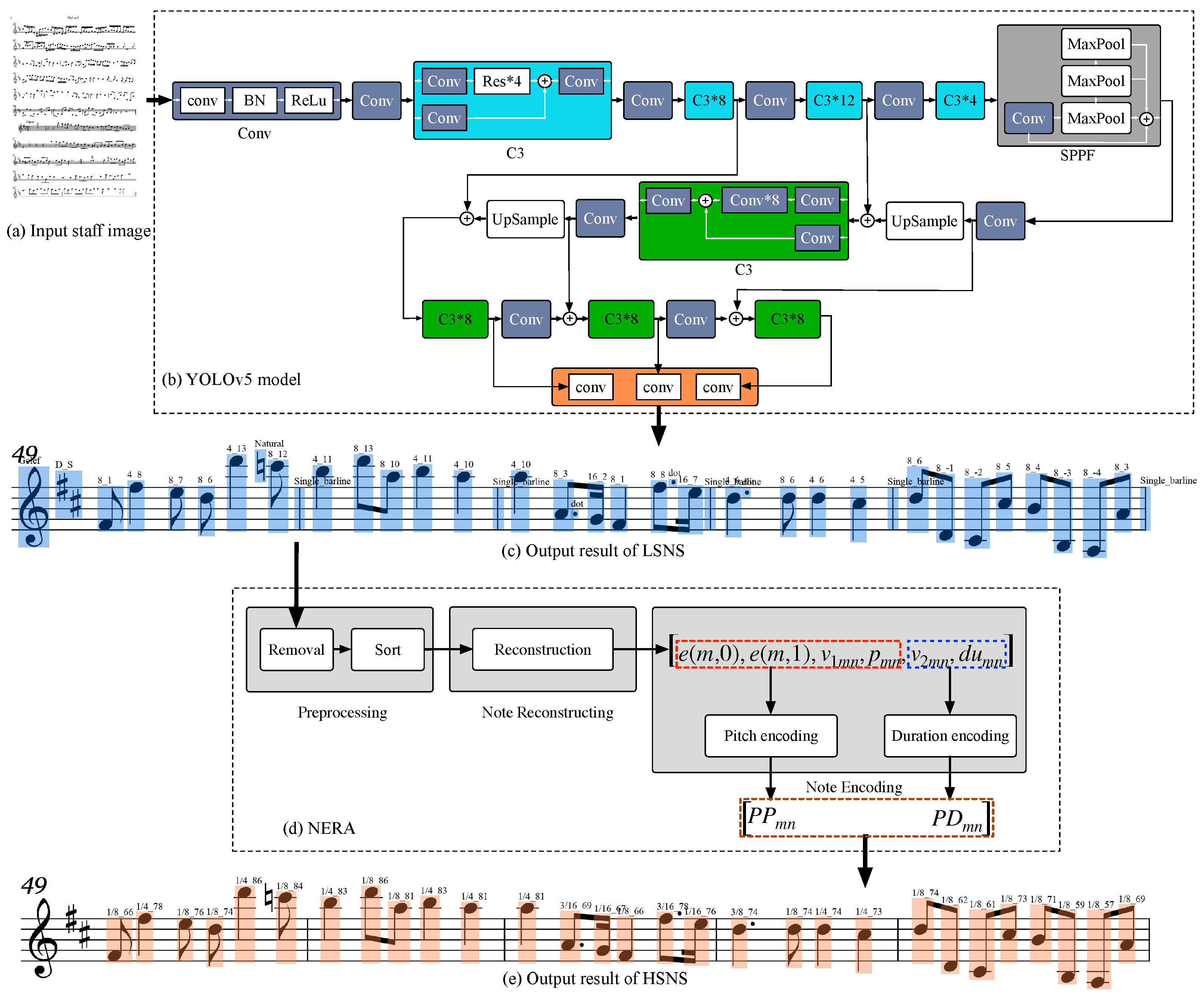

This paper aims to obtain the pitch and duration codes of the notes in a complex staff image as they are performed, so an end-to-end optical staff semantic understanding system is designed. The system consists of YOLOv5 as the Low-level Semantic Understanding Stage (LSNS) and the Note Encoding Reconstruction Algorithm (NERA) as the High-level Semantic Understanding Stage (HSNS). In the LSNS, the whole optical staff is the input of the system. The model is trained with the self-constructed SUSN dataset to output digital codes for the pitch and duration of the main notes under the natural scale, together with the other symbols that affect the pitch and duration of the main notes. The NERA takes the LSNS output as its input and applies music theory and MIDI encoding rules [21]. It resolves the semantics of the natural scale and the other symbols, together with their mutual logical, spatial, and temporal relationships, and builds the staff symbol relationship structure of the given symbols. From this structure it calculates the HSNS of the main notes and outputs their pitch-duration codes during the performance. The system thus provides an end-to-end semantic understanding encoding of optical staff symbols for notation recognition through listening, intelligent score flipping, and music information retrieval.
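As a rough illustration of the hand-off between the two stages, the sketch below orders hypothetical LSNS detections from left to right and splits note labels into their two fields. It assumes that note labels follow a `duration_position` pattern, as suggested by dataset labels such as `16_-1` and `8_5`; the `Detection` class, its fields, and both helper functions are illustrative names and are not taken from the authors' system.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detection:
    """One detected symbol (hypothetical structure for illustration)."""
    label: str   # class label, e.g. "Gclef", "Sharp", "16_-1"
    x: float     # horizontal centre of the bounding box, in pixels


def parse_note_label(label: str) -> Optional[Tuple[int, int]]:
    """Split a note label such as '16_-1' into (duration, position).

    Assumption: the part before '_' is the note-value denominator and the
    part after it is the staff position. Non-note labels return None.
    """
    head, sep, tail = label.partition("_")
    if not sep:
        return None
    try:
        return int(head), int(tail)
    except ValueError:
        # Labels such as 'D_S' contain '_' but are not notes.
        return None


def order_left_to_right(detections: List[Detection]) -> List[Detection]:
    """Return the detections sorted by horizontal position (reading order)."""
    return sorted(detections, key=lambda d: d.x)
```

A later stage could then walk the ordered list and treat every label for which `parse_note_label` returns `None` as a control symbol (clef, key signature, accidental, dot, or rest).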

4. Conclusions and Outlooks

4.1. Conclusions

This paper addresses the problem, in the field of music information retrieval, of semantically understanding the main notes of the optical staff as the pitch and duration heard during performance. The SUSN dataset is constructed using the basic properties of the staff as a starting point, and the YOLO object detection algorithm is used to achieve LSNS of the pitch and duration of the natural scale and of the other symbols (such as clefs, key signatures, and accidentals) that affect the natural scale. Experimental results of LSNS show that the precision is 0.989 and the recall is 0.972. We analyze the causes of errors and omissions in the LSNS that arise from differences in staff complexity.
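For readers less familiar with the two metrics, the following minimal sketch shows how precision and recall are conventionally computed from detection counts. The function name and the counts in the usage note are illustrative only and are not the counts behind the reported results.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Compute (precision, recall) from true positives, false positives
    (wrongly identified symbols) and false negatives (missed symbols)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall
```

With made-up counts of 90 correct detections, 10 wrong detections, and 30 missed symbols, `precision_recall(90, 10, 30)` gives a precision of 0.9 and a recall of 0.75.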

The HSNS is based on the proposed NERA, which is built on music theory. It parses the low-level semantic information according to the semantics of each symbol and the logical, spatial, and temporal relationships between symbols, constructs the staff symbol relationship structure of the given symbols, and calculates the pitch and duration of each note as played. The NERA has limitations in modeling the staff image system: it can only encode the pitch and duration of notes whose staff symbols are defined according to one version of the rules of notation. The accuracy of the HSNS depends on the accuracy of the LSNS; once a symbol is misidentified or missed, the pitch and duration of the corresponding notes are encoded incorrectly in the HSNS. In this paper, we summarize the different cases of HSNS errors caused by misidentifying or missing different staff symbols during the LSNS. The optical staff notation semantic understanding system takes staff images as input and outputs the encoding of the pitch and duration of each note when it is played.
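The kind of calculation involved can be sketched as follows, under several stated assumptions: only the treble clef is handled, staff positions are counted in diatonic steps from the bottom line (step 0 is E4), and MIDI note 60 is taken as middle C. The function names, the step convention, and the small key-signature table are hypothetical simplifications, not the NERA itself. The example reproduces the values given for G major in Figure 2 (78 for the upper F and 66 for the lower F).

```python
from typing import Optional

LETTERS = "CDEFGAB"
NATURAL_SEMITONES = [0, 2, 4, 5, 7, 9, 11]  # semitones above C for C..B

# Semitone shifts applied by a few key signatures (illustrative subset).
KEY_SIGNATURES = {
    "C": {},
    "G": {"F": 1},
    "D": {"F": 1, "C": 1},
    "F": {"B": -1},
}

# Diatonic index of E4, the bottom line of the treble staff (assumed origin).
TREBLE_BOTTOM_LINE = 4 * 7 + 2


def midi_pitch(step: int, key: str = "C", accidental: Optional[int] = None) -> int:
    """MIDI number of a note `step` diatonic steps above the bottom line.

    An explicit accidental (+1 sharp, -1 flat, 0 natural) overrides the
    key signature for that note.
    """
    octave, letter = divmod(TREBLE_BOTTOM_LINE + step, 7)
    if accidental is None:
        shift = KEY_SIGNATURES[key].get(LETTERS[letter], 0)
    else:
        shift = accidental
    return 12 * (octave + 1) + NATURAL_SEMITONES[letter] + shift


def duration_in_quarters(denominator: int, dotted: bool = False) -> float:
    """Duration in quarter notes; a dot extends the note by half its value."""
    base = 4 / denominator
    return base * 1.5 if dotted else base
```

For instance, `midi_pitch(8, "G")` and `midi_pitch(1, "G")` evaluate to 78 and 66, while `duration_in_quarters(4, dotted=True)` evaluates to 1.5.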

4.2. Outlooks

The main problems of LSNS are as follows:

The staff notation in this paper is mainly related to the pitch and duration of musical melodies. The recognition of other symbols, such as dynamics, staccatos, trills and characters related to the information of the staff is one of the future tasks to be solved;

The accurate recognition of complex natural scales such as chords is a priority;

The recognition of symbols in more complex staff images, e.g., those with larger intervals, denser symbols and more noise in the image.

For the HSNS, the following problems still need to be solved:

It is important to improve the handling of the scope of accidentals, so that it can be combined with bar lines, repetition lines, etc.;

The semantic understanding of notes is based on the LSNS, and after solving the problem of the types of symbols recognized by the model, each note can be given richer expression information;

In this paper, rests are recognized, but the information is not utilized in semantic understanding. In the future, this information and the semantic relationships of other symbols can be used to generate a complete code of the staff during performances.
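One way the accidental scope listed above could be tied to bar lines is sketched here. It encodes the common notation convention that an accidental holds for later notes at the same staff position until the next bar line. The tuple-based symbol stream and the function name `apply_accidentals` are hypothetical, and repetition lines are not handled.

```python
from typing import Dict, List, Optional, Tuple

ACCIDENTAL_SHIFTS = {"sharp": 1, "flat": -1, "natural": 0}


def apply_accidentals(symbols: List[tuple]) -> List[Tuple[int, Optional[int]]]:
    """Resolve accidentals over a left-to-right symbol stream.

    Each symbol is ("bar",), ("sharp",), ("flat",), ("natural",) or
    ("note", step). The result holds one (step, shift) pair per note, where
    shift is None when no accidental is active and the key signature applies.
    """
    active: Dict[int, int] = {}     # staff position -> shift valid in this bar
    pending: Optional[int] = None   # accidental waiting for its note
    resolved: List[Tuple[int, Optional[int]]] = []
    for symbol in symbols:
        kind = symbol[0]
        if kind == "bar":
            # A bar line cancels every accidental seen in the bar.
            active.clear()
            pending = None
        elif kind in ACCIDENTAL_SHIFTS:
            pending = ACCIDENTAL_SHIFTS[kind]
        elif kind == "note":
            step = symbol[1]
            if pending is not None:
                active[step] = pending
                pending = None
            resolved.append((step, active.get(step)))
    return resolved
```

In a stream such as sharp, note at step 1, note at step 3, note at step 1, bar line, note at step 1, the sharp is applied to both notes at step 1 in the first bar and to neither the note at step 3 nor the note after the bar line.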

The system provides an accurate semantic understanding of optical staff symbols for multimodal music artificial intelligence applications such as notation recognition through listening, intelligent score flipping and automatic performance.

Author Contributions

Conceptualization, F.L. and Y.L.; methodology, F.L. and Y.L.; software, F.L.; validation, F.L.; formal analysis, F.L. and Y.L.; data curation, F.L. and G.W.; writing—original draft preparation, F.L.; writing—review and editing, F.L., Y.L. and G.W.; visualization, F.L.; supervision, Y.L.; project administration, F.L.; All authors have read and agreed to the published version of the manuscript.

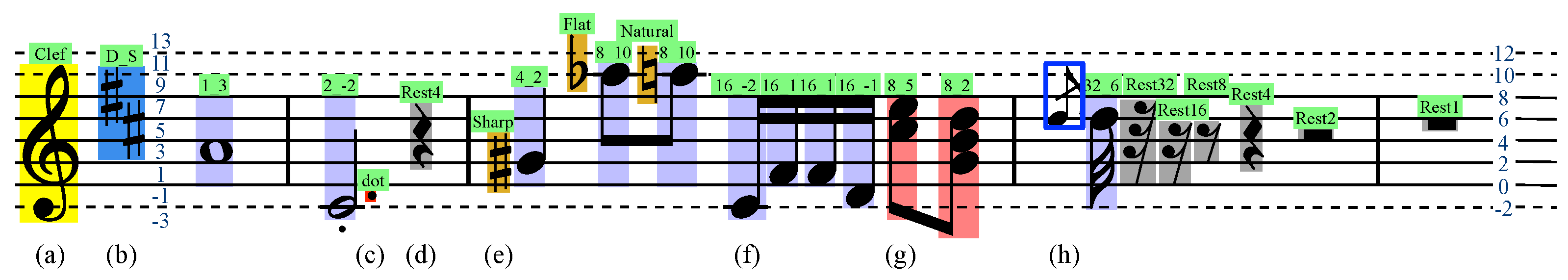

Figure 1.

Dataset labeling method. (a) Labeling of the treble clef: the yellow minimum external bounding box labeled as 'Gclef'; (b) Labeling of D major: the blue minimum external bounding box labeled as 'D_S'; (c) Labeling of the dot: the red minimum external bounding box labeled as 'dot'; (d) Labeling of the quarter rest: the gray minimum external bounding box labeled as 'Rest4'; (e) Labeling of the sharp symbol: the orange minimum external bounding box labeled as 'Sharp'; (f) Labeling of the single note 'C' in the main note: the purple bounding box from the note head (including the lower plus 1 line) to the 5th line labeled as '16_-1'; (g) Harmony in the main note: the rose minimum external bounding box, in which only the first note is labeled, annotated as '8_5'; (h) Appoggiatura: the appoggiatura is smaller than the main note in the staff.

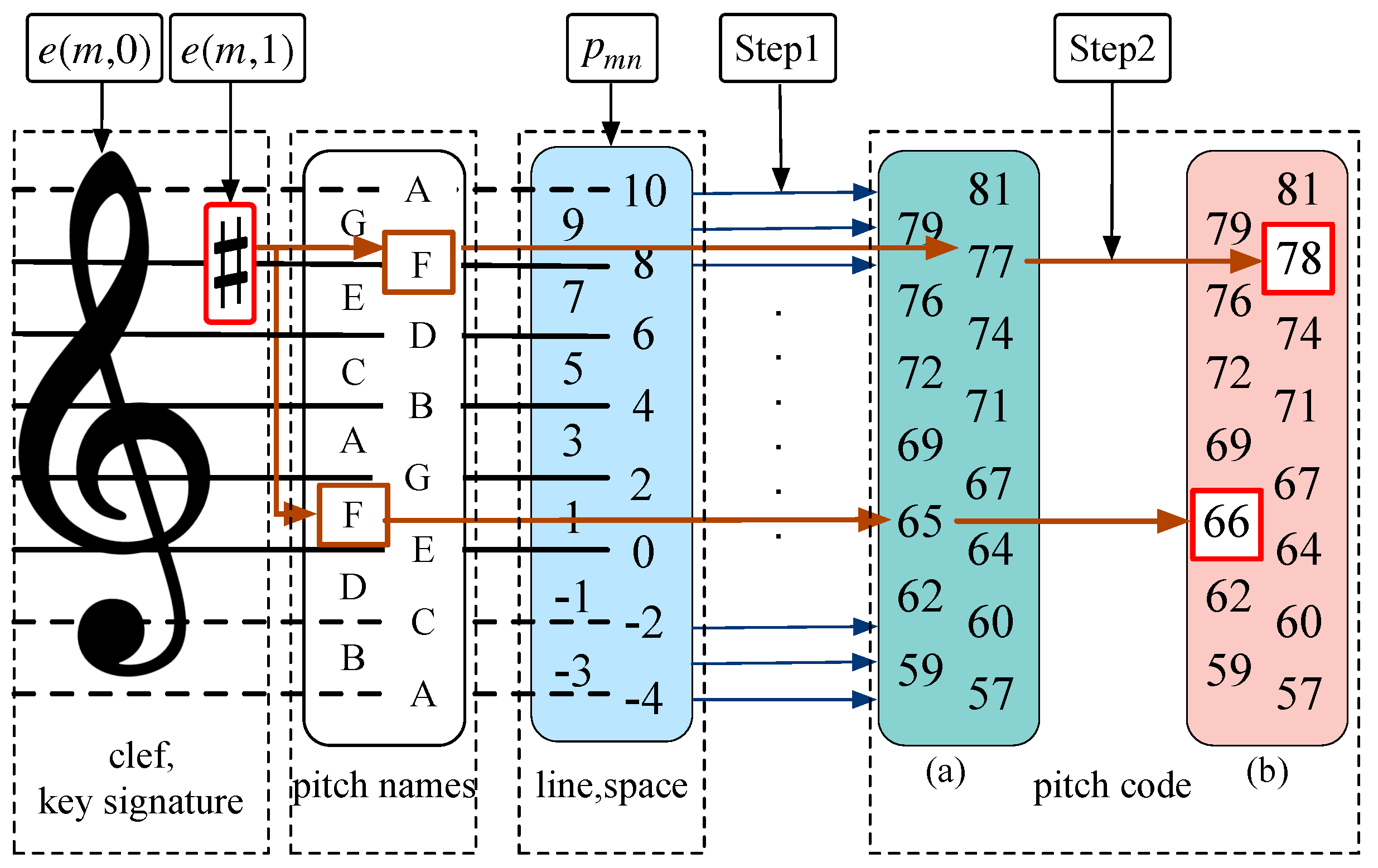

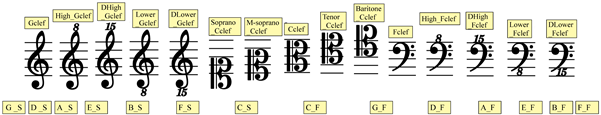

Figure 2.

The mapping between the clef, key signature and the pitch code. In the diagram, the clef is a treble clef. Step1 means the clef’s mapping and the MIDI encoding rules. After passing Step1, is converted to (a). The key signature is G major. Each note in the m-th line is raised a half tone correspondingly, i.e. the upper is 78, and the lower is 66. Then, (a) is converted to (b). The mapping relationship is shown in Step2 in the figure.

Figure 3.

Structure of the optical staff symbol semantic understanding system. The system comprises two parts: the LSNS (b) and the HSNS (d). The natural scale (c) is the response of module (b) when the optical staff image (a) is the system's excitation. (c) then acts as the excitation of module (d), which eventually outputs the performance code (e).

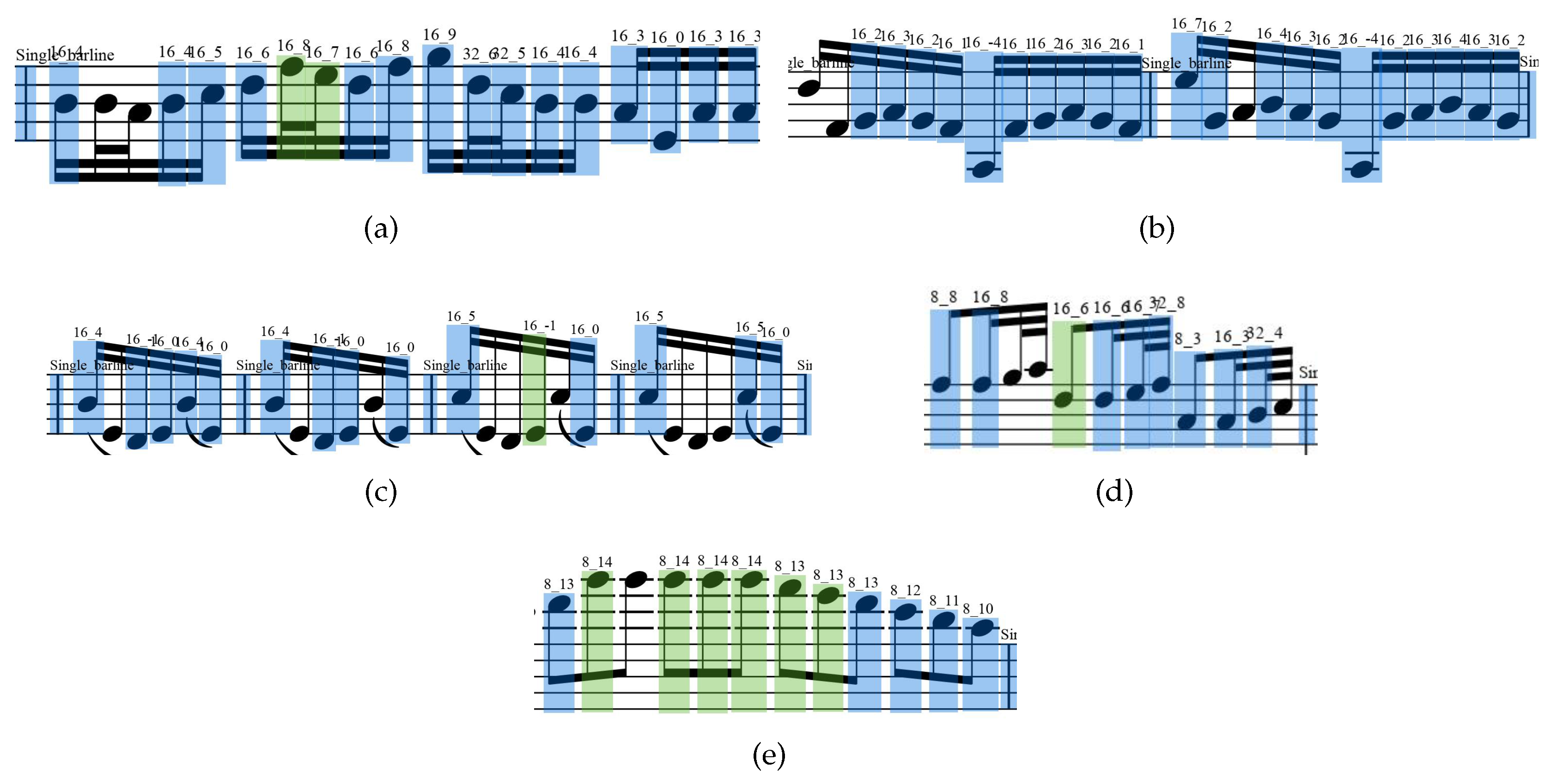

Figure 4.

Some causes of LSNS errors. The blue boxes are the correctly identified symbols, the green boxes are the incorrectly identified symbols, the characters on the boxes are the identification results, and the symbols without boxes are the missed notes.

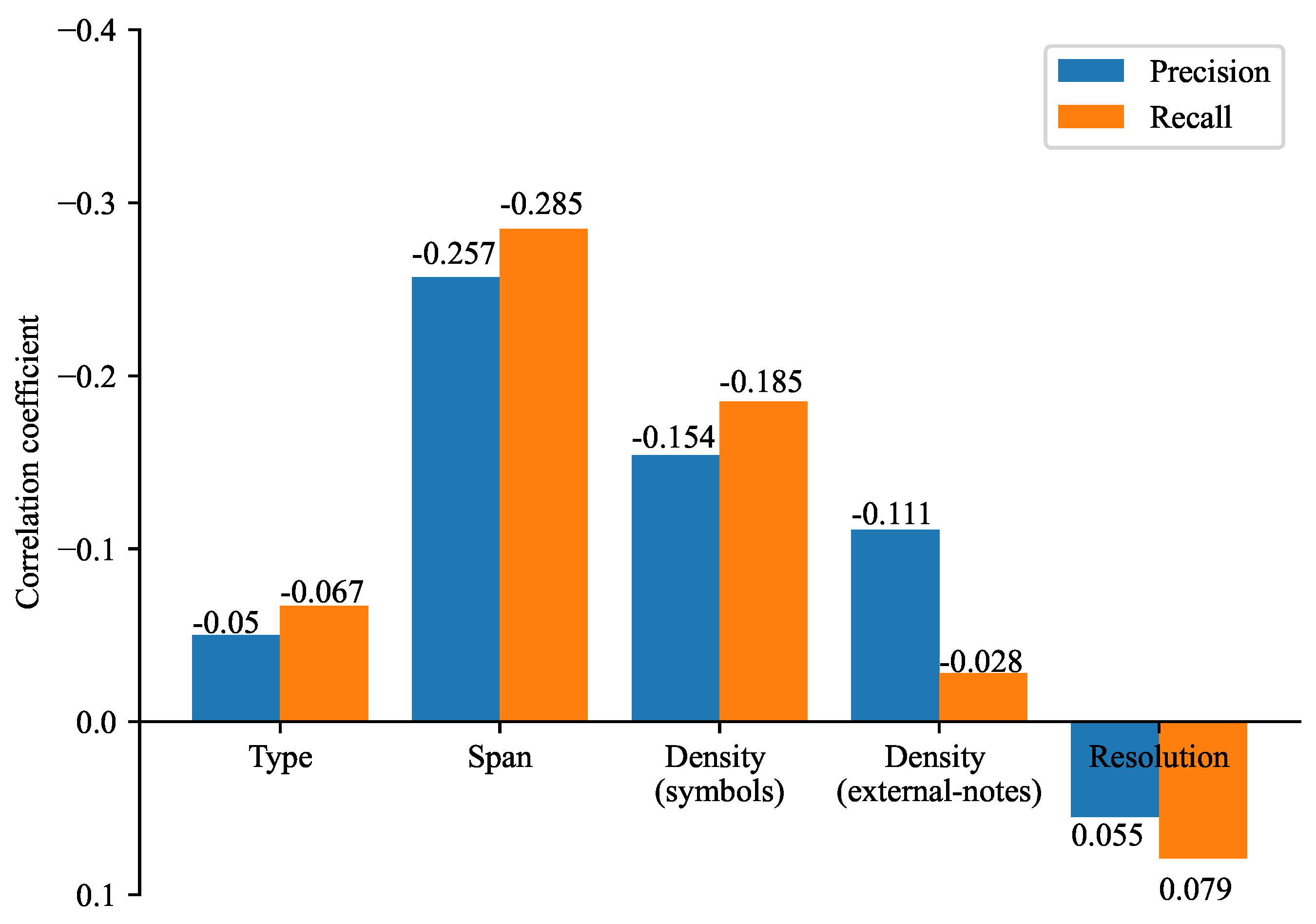

Figure 5.

Complexity variable correlation coefficient.

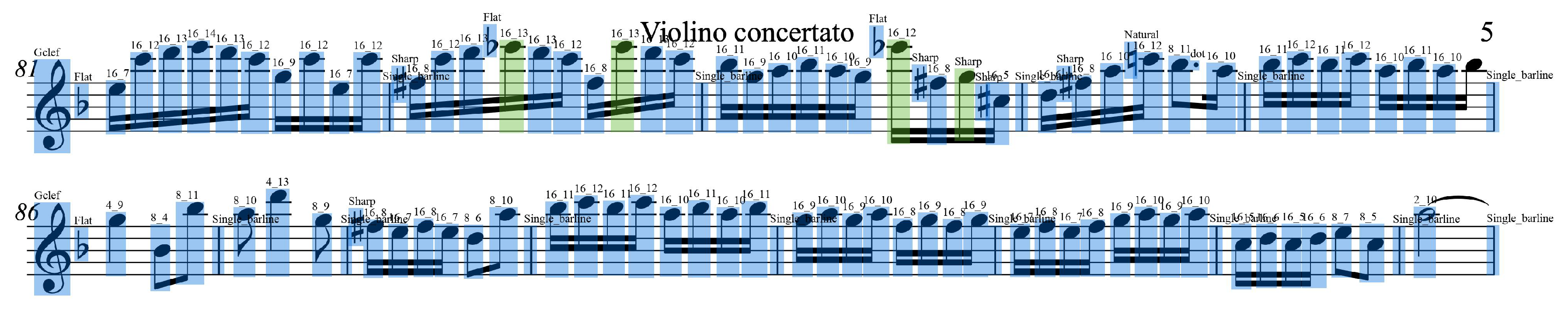

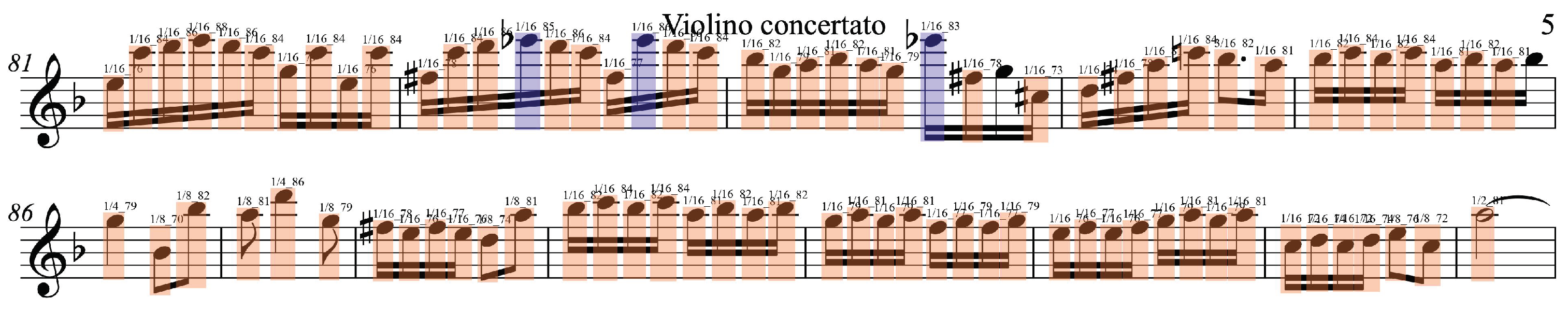

Figure 6.

For LSNS visualization, the diagram shows the staff of Oboe String Quartet in C Minor, Violino concertato (JS BACH BWV 1060), page 5, lines 1 and 2. The characters on each box indicate the semantics of the corresponding symbol. In the diagram, green boxes indicate incorrectly identified symbols and the symbols without boxes are the missed notes.

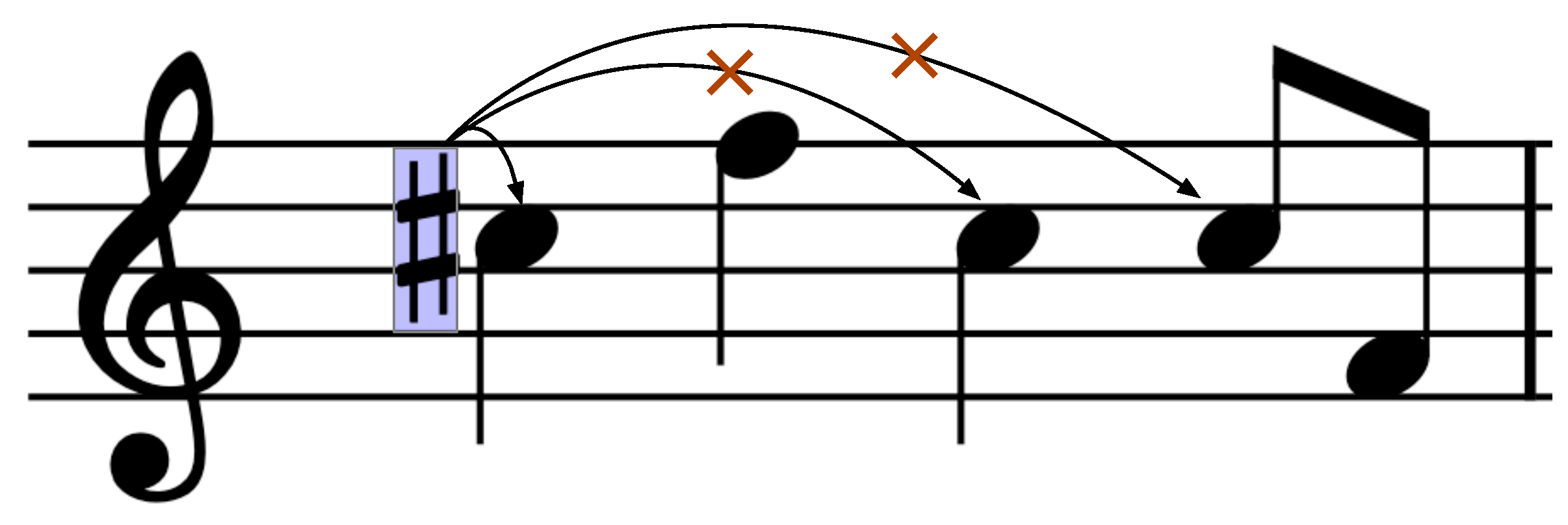

Figure 7.

Pitch-shifting notation leads to HSNS errors. The sharp should be applied to all notes of the same height in the bar, but the NERA only applies it to the first note after the sharp.

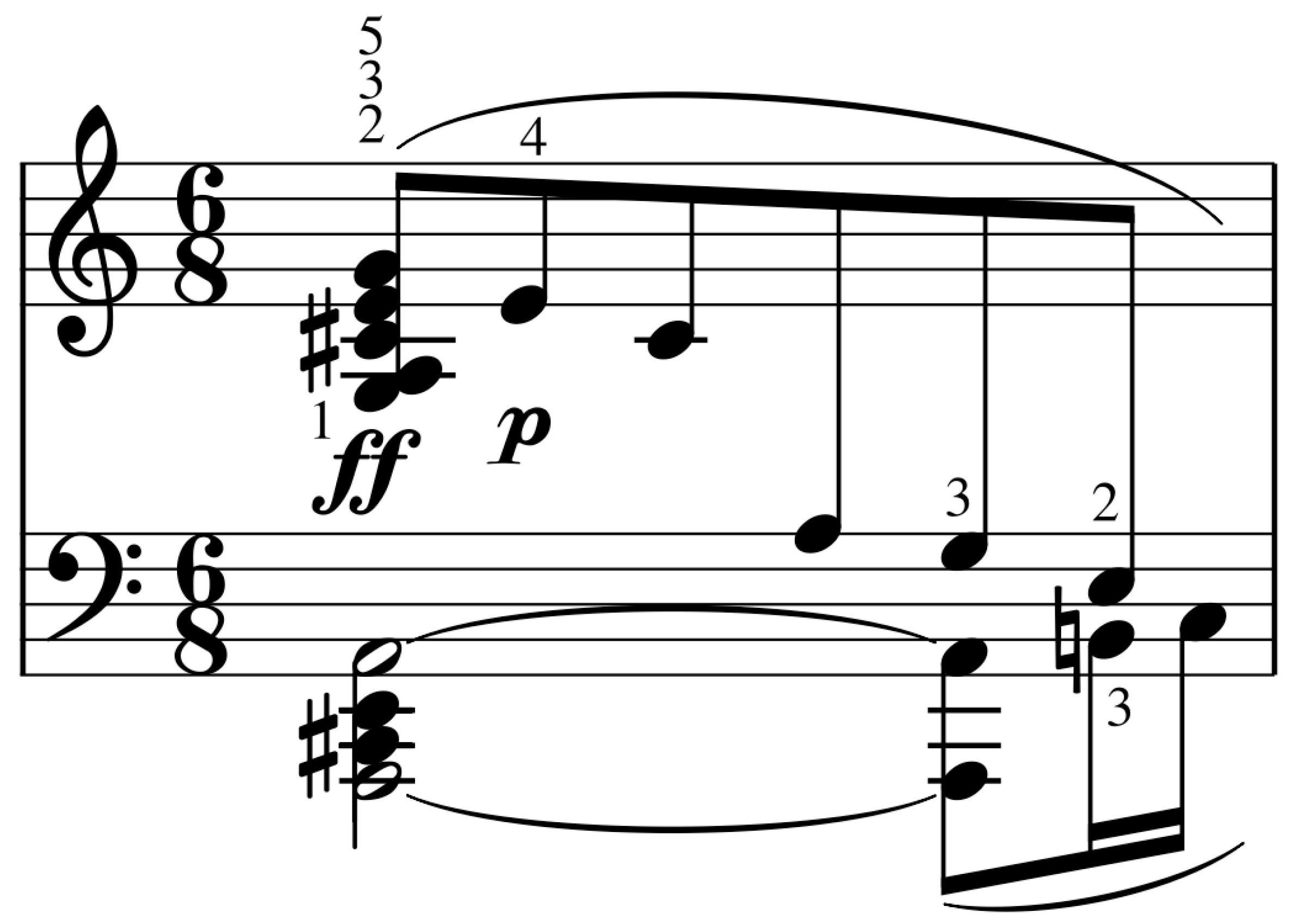

Figure 8.

This excerpt from Beethoven’s Piano Sonata illustrates some of the characteristics that distinguish musical notation from ordinary notation. The chord in the lower left contains a mixture of half- and quarter-notes of the mixed note head, yet the musical intent is that the two quarter notes in the middle of the chord are actually played as eighth notes, adding the thickness of the first beat. Reproduced with permission from Calvo.J et al., Understanding Optical Music Recognition; published by ACM COMPUTING SURVEYS, 2020.

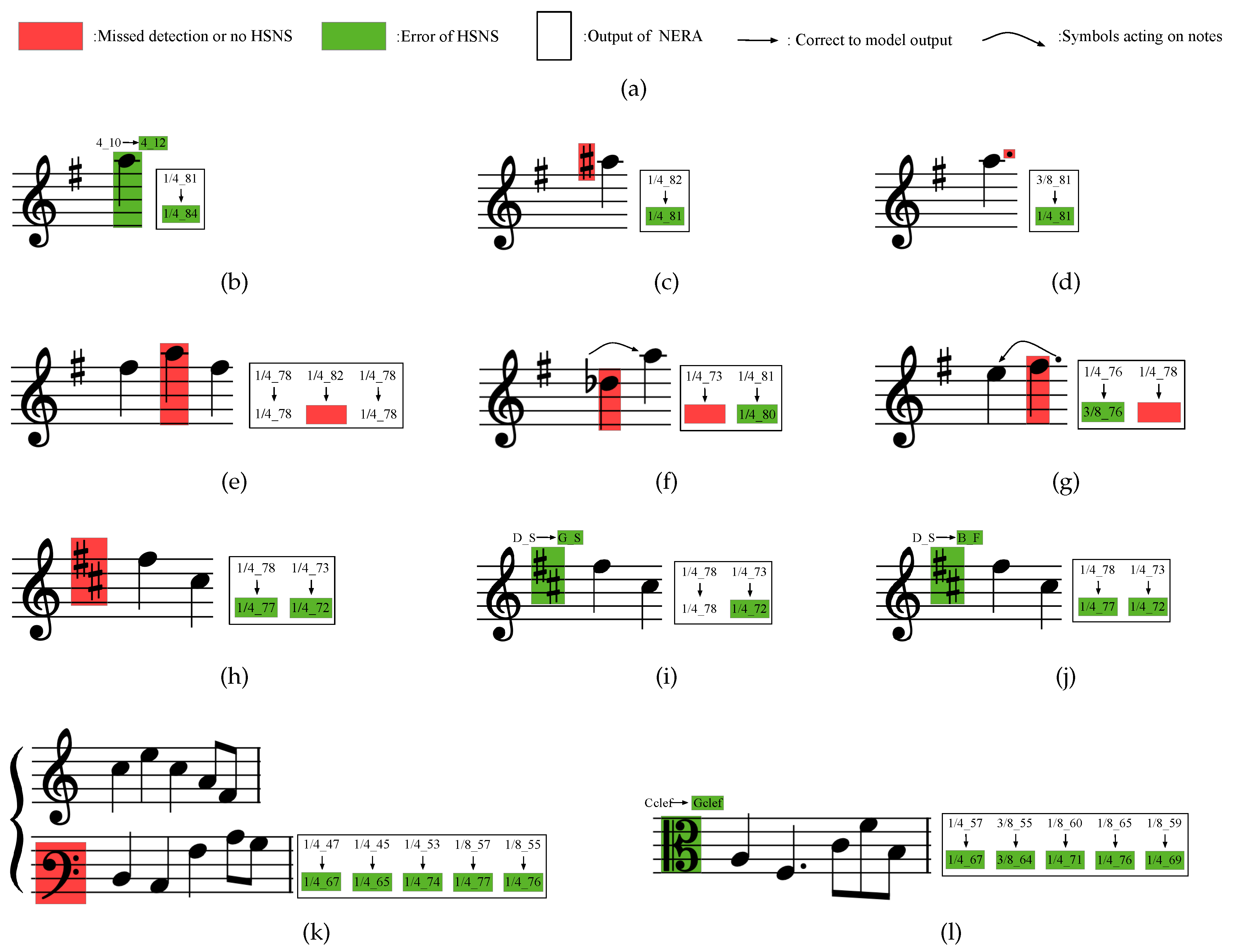

Figure 9.

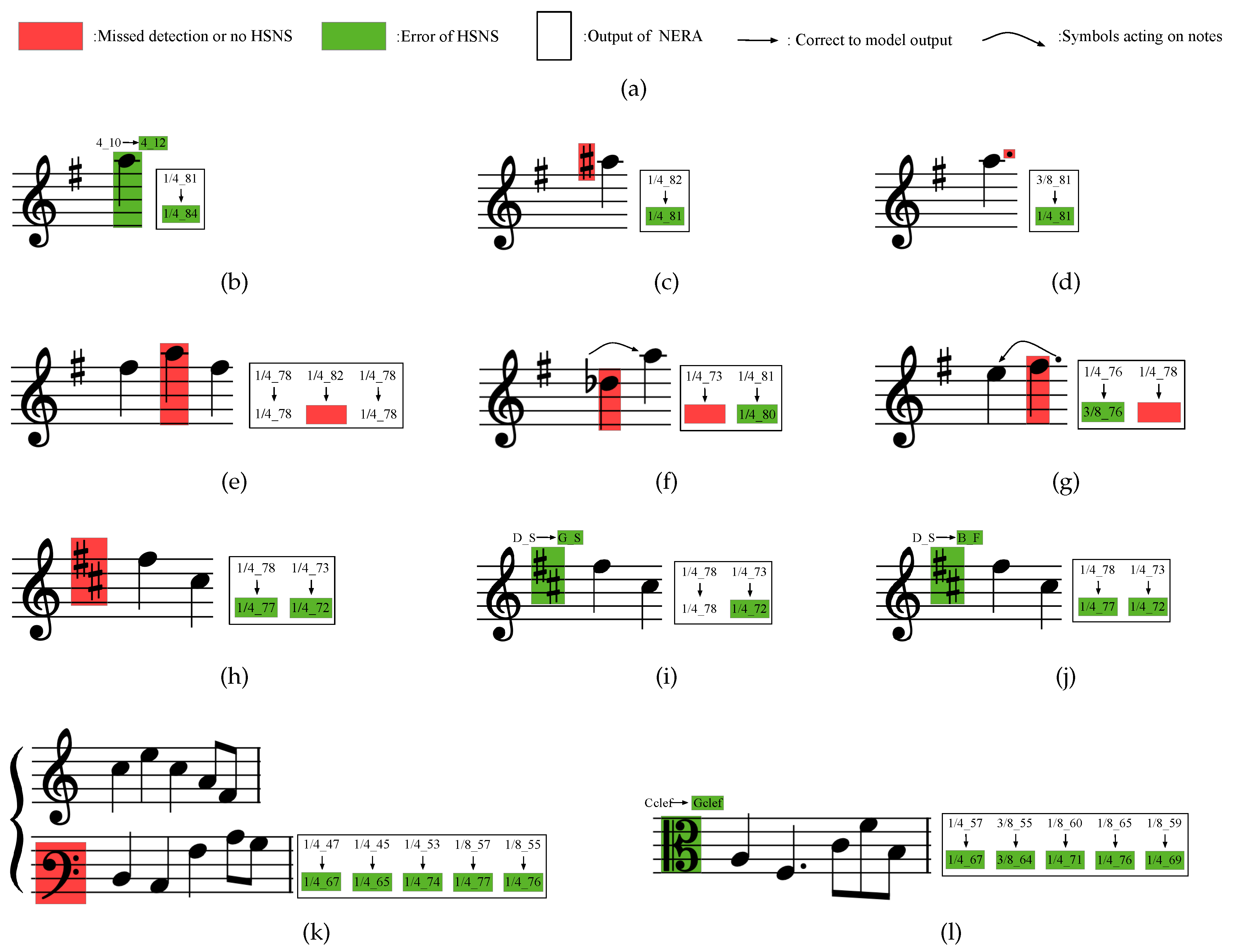

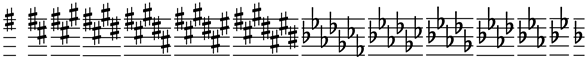

Impact on HSNS in case of symbol errors or omissions at LSNS. (a) indicates the meaning of the corresponding symbol in the figure below. (b) has a wrong note pitch identification of 12, so the HSNS has a pitch error. (c) missed a sharp, so the pitch of the note it acts on is wrong. (d) omitted the dot, so the duration of the note it acts on is wrong. (e) has a note omission which does not affect the semantics of the preceding and following notes. (f), however, has a note omission which causes the flat to act on the pitch of the next note, so the pitch of the next note is incorrect. (g) has a note omission which causes the dot to act on the duration of the preceding note, so the duration of the preceding note is incorrect. (h) has missed the key signature of D major, so the notes in the natural scale with the roll calls "Do" and "Fa" will not be raised. (i) has incorrectly identified D major as G major, and the pitches of the notes in the range of action are raised (D major acts on natural scales with the roll calls "Fa" and "Do", while G major acts on natural scales with the roll call "Fa"); when performing the HSNS, natural scales with the roll call "Fa" in this line of the staff are not subject to error, while natural scales with the roll call "Do" are. (j) has recognized D major as F major, and the mode of action is different, which causes the pitch of all the notes in the range to be incorrect. (k) missed the bass clef, so the pitch of all the notes in this line of the staff is determined by the clef of the previous line. (l) incorrectly identified the alto clef as the treble clef, so all the pitches in this line of the staff are incorrect.

Figure 10.

Visualization of the results of HSNS.

Table 1.

Images with labels of the note control symbols and rests.

Table 2.

Performance evaluation of LSNS with different complexity quintiles.

| Staff | | Complexity Variables | | | | | Evaluation | |
|---|---|---|---|---|---|---|---|---|
| Name | Page | Type | Span | Density (symbols) | Density (external-notes) | Resolution | Precision | Recall |
| Staff 1 | 2 | 16 | 19 | 484 | 78 | 1741 | 0.968 | 0.930 |
| Staff 2 | 5 | 17 | 19 | 679 | 146 | 2232 | 0.996 | 0.988 |
| Staff 3 | 3 | 13 | 19 | 319 | 95 | 1673 | 0.997 | 0.992 |
| Staff 4 | 12 | 20 | 20 | 478 | 80 | 1741 | 0.994 | 0.981 |
| Staff 5 | 7 | 19 | 24 | 530 | 145 | 200 | 0.980 | 0.958 |
| Staff 6 | 5 | 19 | 20 | 367 | 63 | 435 | 0.992 | 0.970 |
| Staff 7 | 5 | 15 | 19 | 350 | 62 | 854 | 0.996 | 0.993 |
| Staff 8 | 3 | 13 | 20 | 441 | 40 | 1536 | 0.990 | 0.969 |
| Staff 9 | 3 | 11 | 20 | 424 | 160 | 2389 | 0.986 | 0.966 |
| Staff 10 | 2 | 17 | 18 | 315 | 86 | 1780 | 0.987 | 0.976 |

Table 3.

Precision and Recall of clef and key signature.

| | Precision | Recall |
|---|---|---|
| clef | 1.000 | 0.993 |
| key signature | 0.992 | 0.990 |

Table 4.

Experimental results of HSNS.

| Staff | Ideal input | | Practical input | |
|---|---|---|---|---|
| | Error Rate | Omission Rate | Error Rate | Omission Rate |
| Staff 1 | 0.006 | 0.000 | 0.052 | 0.044 |
| Staff 2 | 0.011 | 0.000 | 0.016 | 0.010 |
| Staff 3 | 0.010 | 0.000 | 0.020 | 0.006 |
| Staff 4 | 0.019 | 0.000 | 0.027 | 0.020 |
| Staff 5 | 0.013 | 0.000 | 0.044 | 0.014 |
| Staff 6 | 0.005 | 0.000 | 0.020 | 0.008 |
| Staff 7 | 0.000 | 0.000 | 0.004 | 0.010 |
| Staff 8 | 0.020 | 0.000 | 0.055 | 0.053 |
| Staff 9 | 0.022 | 0.000 | 0.037 | 0.021 |
| Staff 10 | 0.000 | 0.000 | 0.036 | 0.019 |