1. Introduction

Odometry and mapping, also known as simultaneous localization and mapping (SLAM) [

1], is an important task for autonomous mobile systems to localize themselves and interact with surroundings in unknown environments. RGB cameras and light detection and ranging (LiDAR) are two commonly used sensors for SLAM. RGB cameras capture detailed texture information of the environments, which offers useful constraints for visual SLAM [

2,

3,

4,

5,

6]. However, they are easily affected by environmental lighting changes and lack depth information, which limits their effectiveness in 3D perception. LiDAR, as an active sensor, is less susceptible to environmental changes and provides accurate 3D information of the environment. Therefore, LiDAR-based SLAM has gained significant attention in applications where reliable perception is critical for ensuring safety and achieving high performance, such as autonomous driving and specialized robotics.

Solid-state LiDAR sensors [

7,

8] have recently made remarkable progress and gained increasing attention for SLAM applications due to their numerous advantages over traditional mechanical LiDAR. Firstly, solid-state LiDAR eliminates the need for a mechanical rotating mechanism, making it more suitable for specialized environments with high/low temperatures and vibrations. Secondly, solid-state LiDAR is generally less expensive and more lightweight than mechanical LiDAR, making it more suitable for small mobile robots. Lastly, the most significant characteristic of solid-state LiDAR is its non-repeating scanning pattern, which results in significantly higher point-cloud density with increasing scan time compared to mechanism LiDAR.

However solid-state LiDAR typically has a narrower field of view (FOV) than mechanism LiDAR, e.g. Livox HAP with FOV and HESAI FT120 with , which poses challenge for scan registration. A narrow FOV represents the robot is difficult to get sufficient information from the environment, making the SLAM system vulnerable to less featured and elongated environments. Such a disadvantage is more severe for mobile robot where the sensor installation position is restricted and installed height is commonly lower, resulting in less effective scanning information. Equipping multiple LiDARs can solve this problem, but it goes against the original intention of using more cost-effective solid-state LiDAR on mobile robots.

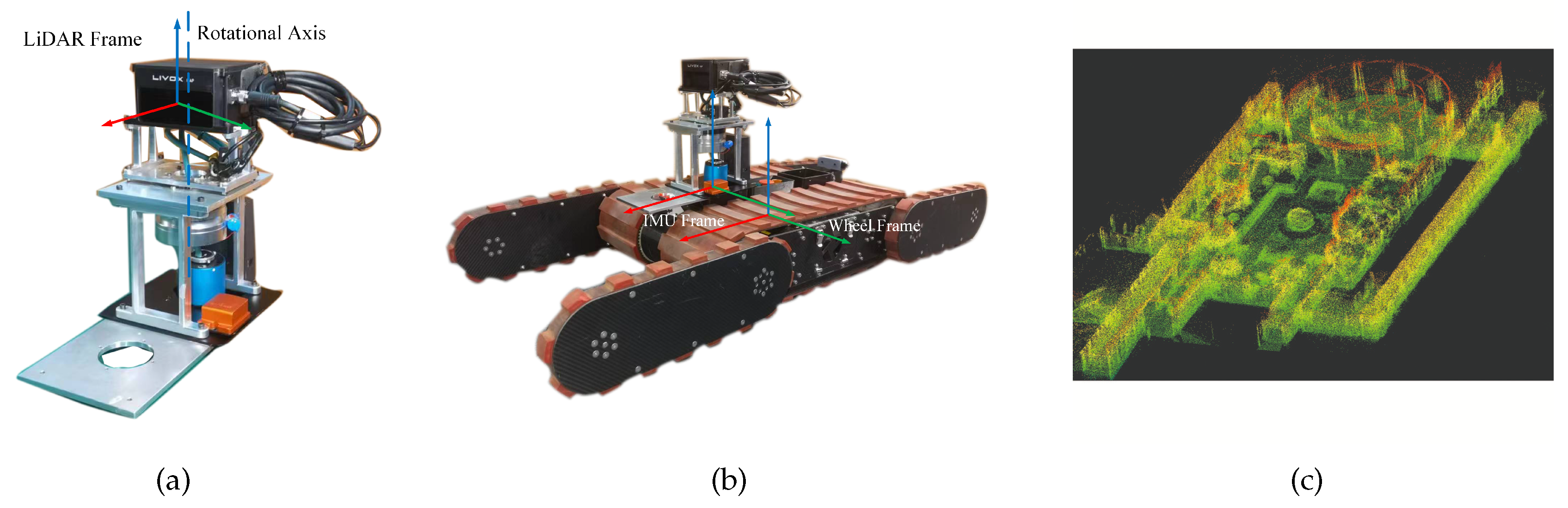

To address this challenge, we present a novel solution for solid-state LiDAR-based SLAM in mobile robot applications. Specifically, we designed and implemented an economical rotating sensory platform that effectively expands the LiDAR’s horizontal FOV to 360 degrees as shown in

Figure 1. We propose a multi-source information fusion odometry system that combines the rotation encoding measurements of the rotating platform, IMU measurements, wheel speed odometry, and LiDAR odometry in an iterative extended Kalman filter to obtain robust and accurate pose estimation. The proposed solution overcomes the limitations of solid-state LiDAR by providing a wider FOV while maintaining cost-effectiveness. The multi-source information fusion algorithm ensures accurate and reliable localization even in challenging environments where feature degradation may occur. Experimental results on outdoor, indoor and stair scenarios as

Figure 1(c) demonstrate the effectiveness and practicality of our proposed solution for mobile robot localization and mapping.

The following of this article is structured as follows: In

Section 2, past relevant works are discussed.

Section 3 provides the detailed design of rotating platform and presents the kinematic model of our experimental mobile robot. Our proposed LiDAR odometry system is detailed in

Section 4. In

Section 5, we present the experimental results and evaluation. Finally, we conclude our work and discuss directions for future research in

Section 6.

2. Related work

Many existing approaches for LiDAR SLAM have been proposed. In this work, we mainly focus on the LiDAR-only odometry and mapping(LOM), LiDAR-IMU odometry and mapping(LIOM), and LiDAR-IMU-Wheel odometry and mapping(LIWOM).

LiDAR-only odometry and mapping is based on the iterated closest points (ICP) [

9,

10,

11,

12,

13] method, which is proposed by Besl et al. [

9] for registering scans. ICP provides good results for dense 3D point-clouds, but it requires exact point matching, which may not be available in sparse LiDAR measurements. To address this issue, Segal et al. [

11] proposed a solution called Generalized-ICP, which is based on the distance between points and planes. Building upon this method, Zhang et al. [

14] added point-to-edge matching and developed LOAM, a LiDAR odometry and mapping framework, in 2014. After that, many works have been proposed for LOAM, such as LeGO-LOAM [

15], LOAM-Livox [

16] and methods using semantic information [

17,

18], deep learning networks [

19,

20,

21], or most recent neural rendering techniques [

22]. However, due to their reliance only on LiDAR measurements, such methods may perform poorly in featureless environments. One solution to this issue is to fuse the measurements of other sensors, such as IMU, GNSS, camera and LiDAR measurements [

23,

24].

Combining LiDAR data with IMU measurements is one of the popular solutions to address the LiDAR SLAM degeneration problem in featureless environments [

25]. Such methods can be divided into two groups, loosely-coupled LiDAR-inertial odometry and mapping(LIOM) and tightly-coupled LiDAR-inertial odometry. In the loosely-coupled approaches, scan registration and data fusion are separated. For example, the method by Zhen et al. [

26] first registers LiDAR scans and estimates robot poses, then fuses these estimates with IMU measurements. Another example is using IMU measurements as the initial estimate for registering LiDAR scans, as presented in IMU-aided LOAM [

14]. The Loosely-coupled approach has lower computational requirements but neglects the relationship between other states, such as velocity and pose of new LiDAR measurements. In contrast, the tightly-coupled LiDAR-inertial odometry directly combines the raw feature points of LiDAR with IMU measurements. Two main paths can be used for this approach, filter-based and optimization-based methods. For instance, Geneva et al. [

27] fuse IMU measurements and LiDAR plane feature points using graph optimization, while LIOM [

28] also uses graph optimization to fuse plane and edge features of IMU and LiDAR measurements. Gaussian Particle Filters [

29] have also been used to fuse IMU and planar 2D-LiDAR. However, as the number of feature points and dimension of the system increase, the requirements for computational power quickly grow in both graph optimization and Gaussian Particle Filter methods. Kalman filters and their variants, such as extended Kalman filters [

30,

31] and iterated Kalman filters, have demonstrated a more efficient and effective performance in real-time situations. Xu et al. [

32,

33] proposed the latest approaches, FAST-LIO and FAST-LIO2, that adopt the iterated extended Kalman filter in LIOM field. Bai et al. [

34,

35] also increased the speed of integrating the LIOM system by replacing ikd-tree with iVox.

In complex real-world environments, enhancing perception-based odometry methods by integrating the robot’s kinematics model obtained from sensors such as wheel encoders can improve the robustness of odometry and mapping results. In the field of visual SLAM, there are instances where wheel encoder messages have been incorporated into existing odometry and mapping frameworks. For example, Zhang et al. [

5] employed an iterative optimization method based on sliding windows to fuse visual, IMU, and wheel encoder measurements. Liu et al. [

5] adopted a tightly-coupled approach, integrating wheel encoder and IMU measurements during a pre-integration stage. However, the utilization of both LiDAR and wheel encoders is relatively limited. Júnior et al. [

36] proposed an approach that combines LiDAR measurements, IMU data, and wheel encoder information to establish an odometry and mapping framework specifically designed for challenging environments. Yuan et al. [

37] introduced a framework based on bundle adjustment (BA) to achieve similar functionality. Existing methods mainly focus on fixed LiDAR sensors and perform poorly when directly applied with rotating LiDAR sensors due to the special motion characteristics.

To bridge this gap, we propose a tightly-coupled method to fuse rotating LiDAR data with IMU and wheel encoder measurements to obtain an accurate odometry and dense mapping result. During the motion compensation stage for rotating solid-state LiDAR scans, we combine IMU measurements with motor encoder readings to generate undistorted point-clouds. We install this solid-state LiDAR on a rotating platform and run our approach on a track robot designed by our lab. We tested our odometry and mapping approach for rotating solid-state LiDAR in complex environments. To the best of our knowledge, our approach is the first work that combines the rotating ability of solid-state LiDARs with exclusive odometry and mapping frameworks for multiple complex environment SLAM tasks.

3. Rotating Sensory Platform and Experimental Robot

3.1. Experimental Platforms

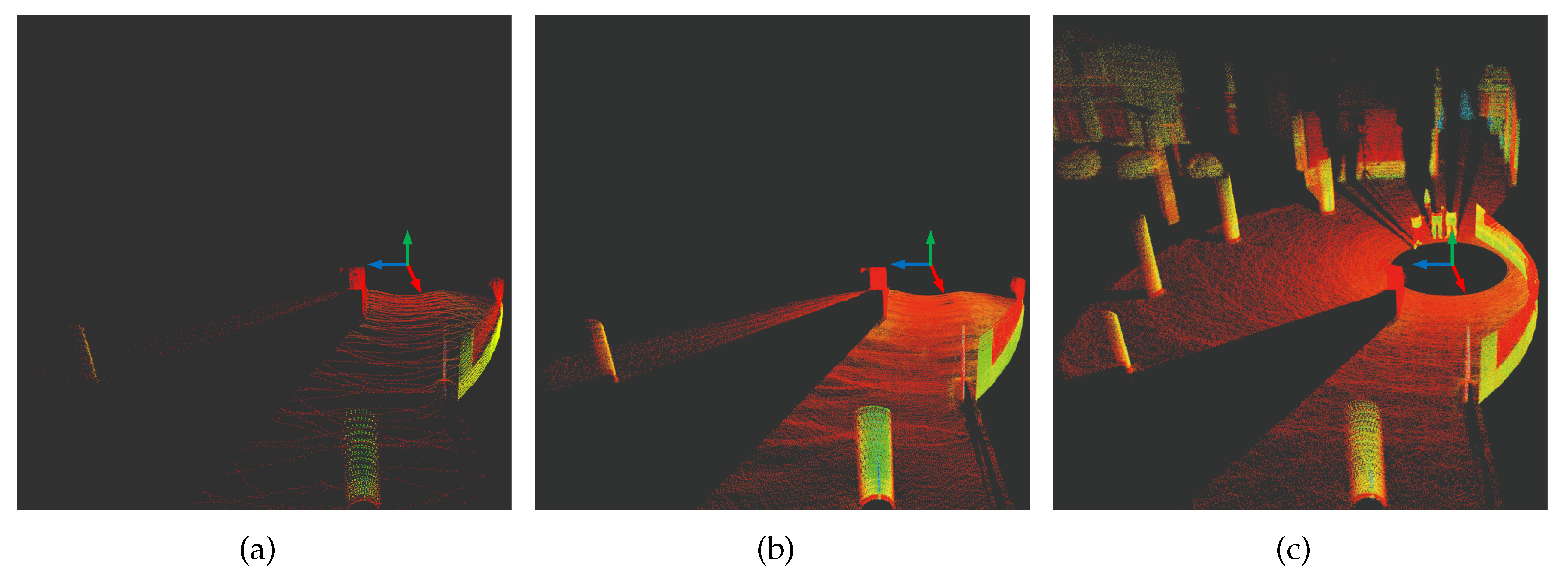

We first introduce our designed rotating sensory platform for solid-state LiDAR. As shown in

Figure 1(a), we use the Livox HAP solid-state LiDAR sensor which provides a horizontal FOV of 120° and vertical FOV of 25°. Note that, our rotating platform is not limited to this type of LiDAR, and theoretically it can work with any type of LiDAR. Our rotating platform uses a servo motor to continuously rotate around z-axis, expanding the LiDAR’s horizontal FOV to 360°. We use conductive slip rings to achieve data transmission during the rotation process. In this paper, we set the motor speed to 3000 rotations per minute (RPM) with a reduction ratio of 100, thus resulting in a speed of 30 RPM for the solid-state LiDAR. We visualize the point cloud obtained by the LiDAR sensor in a stationary state for 0.1 seconds (see in

Figure 2(a)), 2 seconds (see in

Figure 2(b)), and on our rotating platform for 2 seconds (see in

Figure 2(c)). As can be seen in

Figure 2(a) and

Figure 2(b), benefiting from the non-repeating scanning characteristic of the solid-state LiDAR, a longer scanning period resulting in higher coverage of the scene. Comparing

Figure 2(b) and

Figure 2(c), we can see that using our rotating platform the point cloud collected by the LiDAR can cover more areas of the scene.

We use our designed tracked robot for evaluating the proposed odometry and mapping experiment in complex campus environments.

Figure 1(b) shows the robot equipped with our rotating sensory platform. The size of the rotating platform is

, and when installed on the robot, the height of LiDAR’s optical center from the ground is

. The robot is equipped with four independently driven fins with strong obstacle-crossing abilities, allowing us to conduct experiments in more complex terrains, such as indoor environments with steps even multi-floor stairs. The robot also equips a wheel encoder enabling us to form its kinematic equation as:

where

and

are the velocity of the left and right of main track, and the

L is the distance of two main track centers. The final measurement of wheel encoder can be written as

and

.

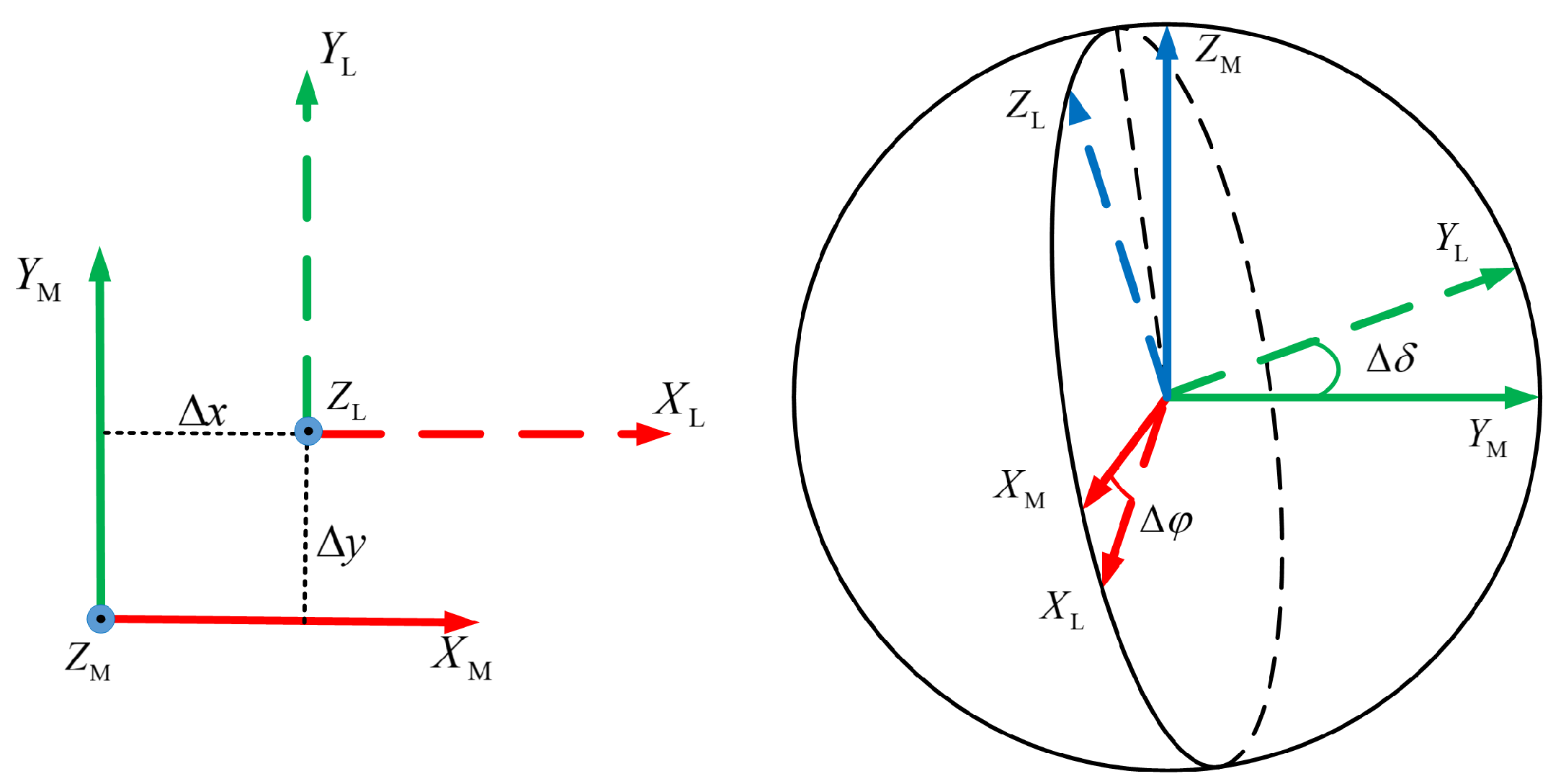

3.2. Extrinsic Calibration

In this section, we introduce the extrinsic calibration process of our sensor system. Some notations used in this paper are shown in

Table 1. The definition of the sensor coordinate systems of our experimental robot is given in

Figure 1. We also define the coordinate system of LiDAR, IMU and robot that the coordinate origin of the LiDAR coincides with the optical center, with the X-axis pointing towards the scanning direction and the coordinate system of the IMU coincides with that of the accelerometer. The coordinate origin of the robot locates at the center of gravity of the robot. For the coordinate system of rotating platform, we define the z-axis pointing upward coinciding with the rotating axis, and the x-axis and y-axis are coincided with robot’s. The center is defined at the same height as LiDAR’s optical center for convenience. Ideally, the rotating axis of the rotating platform should coincide with the z-axis of the LiDAR. However, mechanical installation issues can cause errors in both the distance from the LiDAR’s center to the rotating axis and the angle between these two axes as in

Figure 3. Therefore, we need to calibrate the rotating platform with the LiDAR sensor. We denote the extrinsic parameters between the LiDAR system and the rotating platform system as

:

where

is the translation parameters and

is the rotating parameters represented in Euler angles, i.e., the roll and pitch angle. We design an extrinsic calibration for our platform to obtain the extrinsic parameters

as follows.

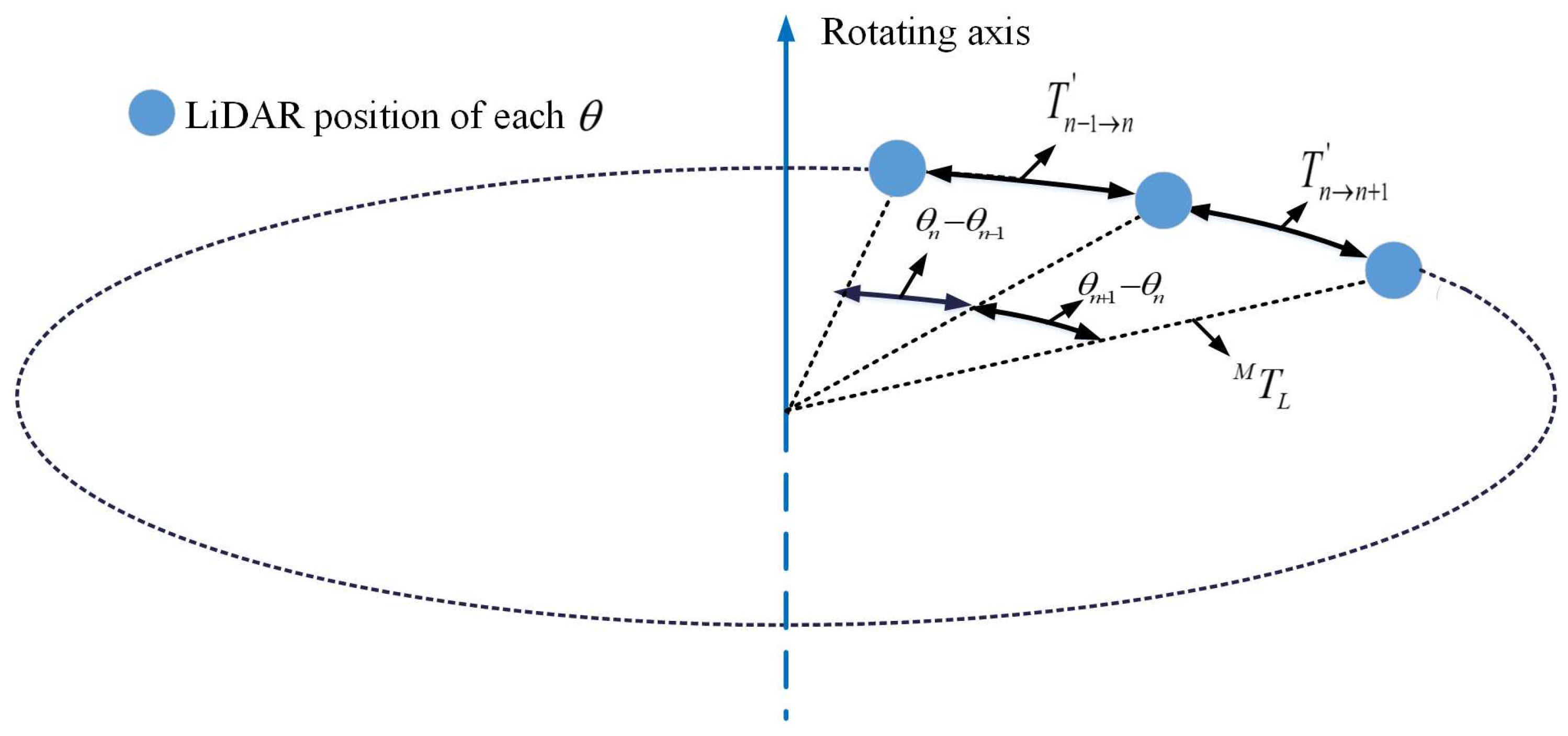

We firstly collect scans at different rotating angles

of the platform and can obtain a related transform as Equation (

5).

Each angle is related to a LiDAR pose measurement

in world frame which is defined coincided with the original rotating platform frame. For each pair of angles

, we can establish the relative pose measurement from

to

as

:

By obtain the relative pose observation

using point cloud registration, we can then establish the optimization function as:

which can be solved iteratively using Levenberg-Marquardt algorithm.

To obtain precise observation of relative pose

, we perform the extrinsic calibration in a structured environment, and obtain the ground truth dense point cloud of the environment using a Faro FocusS350 scanner

1. FocusS350 is a high precision laser scanner with a measuring range of up to 350 m and a measurement accuracy of 1 mm.

For each rotating angle we keep the platform stationary and collect all the point clouds acquired by the LiDAR within 5 s as one scan. Each scan is registered to the ground truth point cloud of the environment using ICP [

9] to solve the precise pose

in Faro frame. The relative pose observation is then obtained as

. As the registration is performed between the sparse scan and the ground truth dense point clouds, we can obtain the relative transformation between the sparse scans even without overlap to include more constraints in the optimization function Equation (9) to obtain a better calibration result.

For the extrinsic parameters between the wheel odometry and the IMU

, we use the calibration method described in [

6]. For the extrinsic parameters between the rotating platform and the IMU

, we opt a indirect way. We fix the rotating platform and adopt the LiDAR-IMU calibration method described in [

38] to calculate the transformation between LiDAR frame and IMU frame

, and then calculate the extrinsic parameters as

4. LiDAR-Inertial-Wheel Odometry and Mapping

4.1. System Overview

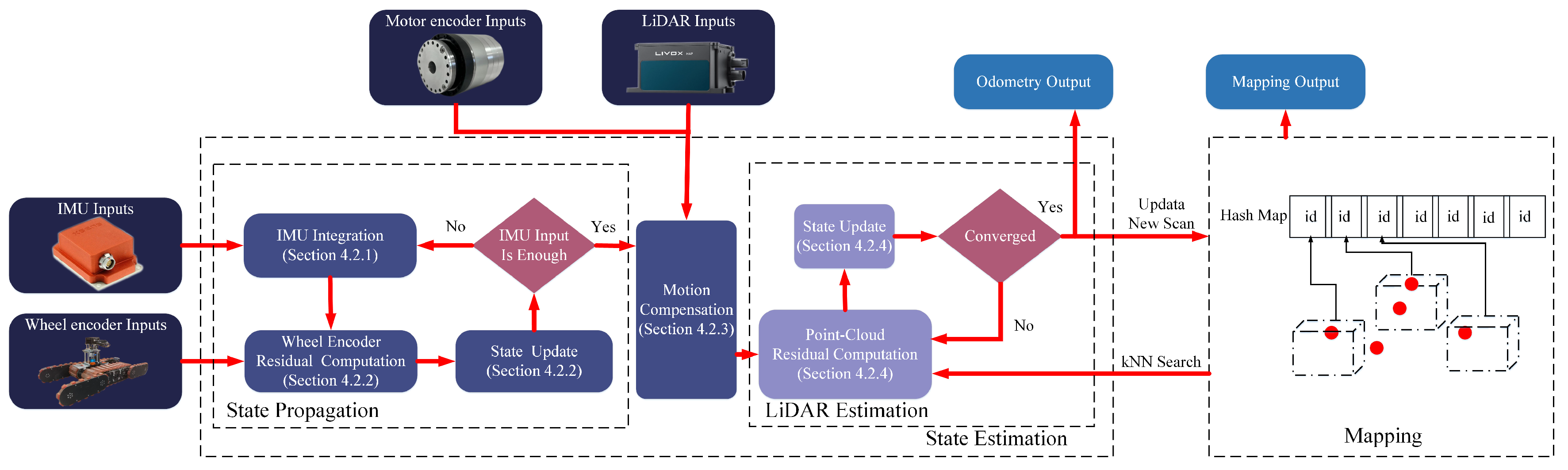

The overview of our approach is presented in

Figure 5. The measurements of IMU, motor encoder, wheel encoder and LiDAR are fed into our state estimation module for a fast state estimation. The estimated pose is then used to register the point cloud with the built global map. The updated map is finally used for registration in the next step.

4.2. State Estimation

We first model the state of our system as , where p is pose, v is velocity, is Euler angel, is bias of accelerometer, is bias of gyroscope, and g is gravity. We use the iterated Kalman filter as the optimization framework. Instead of directly updating the state in a general Kalman filter, iterated Kalman filter updates the errors of the state, i.e., , where is the nominal state and is the true state. This allows for a smoother and more stable state estimation. The iterated Kalman filter optimizes the estimation of and incorporates it into the nominal state variables to obtain the final state estimation.

4.2.1. IMU Integration

In IMU integration stage, we use IMU measurements as inputs to predict the state estimation:

where

is the state equation of

in continuous time with noise

w set to 0, and

refers to the time interval between consecutive IMU time-steps

and

.

We follow [

31] and calculate

as:

where

is the measurements of gyrometer and accelerometer of IMU, and

refers to the Gaussian noise of gyrometer and accelerometer, and bias of gyrometer and accelerometer respectively.

represents transferring 3D-vector to its skew-symmetric matrix.

is the

identity matrix and

is the transformation from world frame to IMU frame.

and

are the bias of gyrometer and accelerometer.

Using

and

we can then propagate the covariance

of state

iteratively following:

where

represents the covariance of noise

w.

4.2.2. Wheel Encoder Residual Computation and State Update

After the IMU integration, we obtain the state estimation

and the covariance matrix

at iteration

. In low velocity situation, the cumulative error of the IMU’s accelerometer can greatly affect the velocity estimation. To eliminate this error

in

and achieve a more stable velocity estimation, we build the observation model to rectify velocity estimation

in the propagated state

based on the measurements from wheel encoder:

where

is the measurement of wheel encoder calculated as in Equation (

1), and

and

is the extrinsic parameters between wheel encoder with IMU, and

is the extrinsic parameters between world frame with IMU. Based on the observation model, we can the formulate the optimization function as:

where

is the noise of wheel encoder measurements, and

is the Jacobian w.r.t velocity

v in state variables

x. Equation (

16) can be solved by Kalman filter as follow:

where Kalman gain

is solved in Equation (

17),

is Equation (18), and used to update state

in Equation (19) and covariance

in Equation (20).

The updated and will be then used for motion compensation and the next optimization of the state.

4.2.3. Motion Compensation

In order to address the distortions and deviations caused by the movement of the robot and the rotation of the motor in LiDAR scanning results, a kinematic analysis is performed. This analysis aims to compensate for the motion effects and determine the position of all points at the end of the scanning in the IMU frame system. The kinematic analysis assumes a constant velocity and utilizes the transformation information obtained from the extrinsic calibration process.

The first step in motion compensation involves establishing a motion model for each point within a single scanning. By transforming the points from the LiDAR frame to the world frame as:

The kinematic relationship between the velocities

and

can be derived as:

where

and

are the transformations from LiDAR frame to IMU frame. Assuming the environment is perfectly stationary, i.e.,

, we can further derive Equation (

22) to solve the velocity of the point in LiDAR frame as:

where

is the measurements of IMU gyrometer, and

is the reading of motor encoder and

is the velocity of robot in the real world that can be obtained by state optimization. Using the timestamp of each point

in a single scan, we can then compensate the motion distortion for each point by projecting it to the end of the scan as:

where

is the scanning time duration of a

k-th scan, and

, and

is the timestamp of a point

.

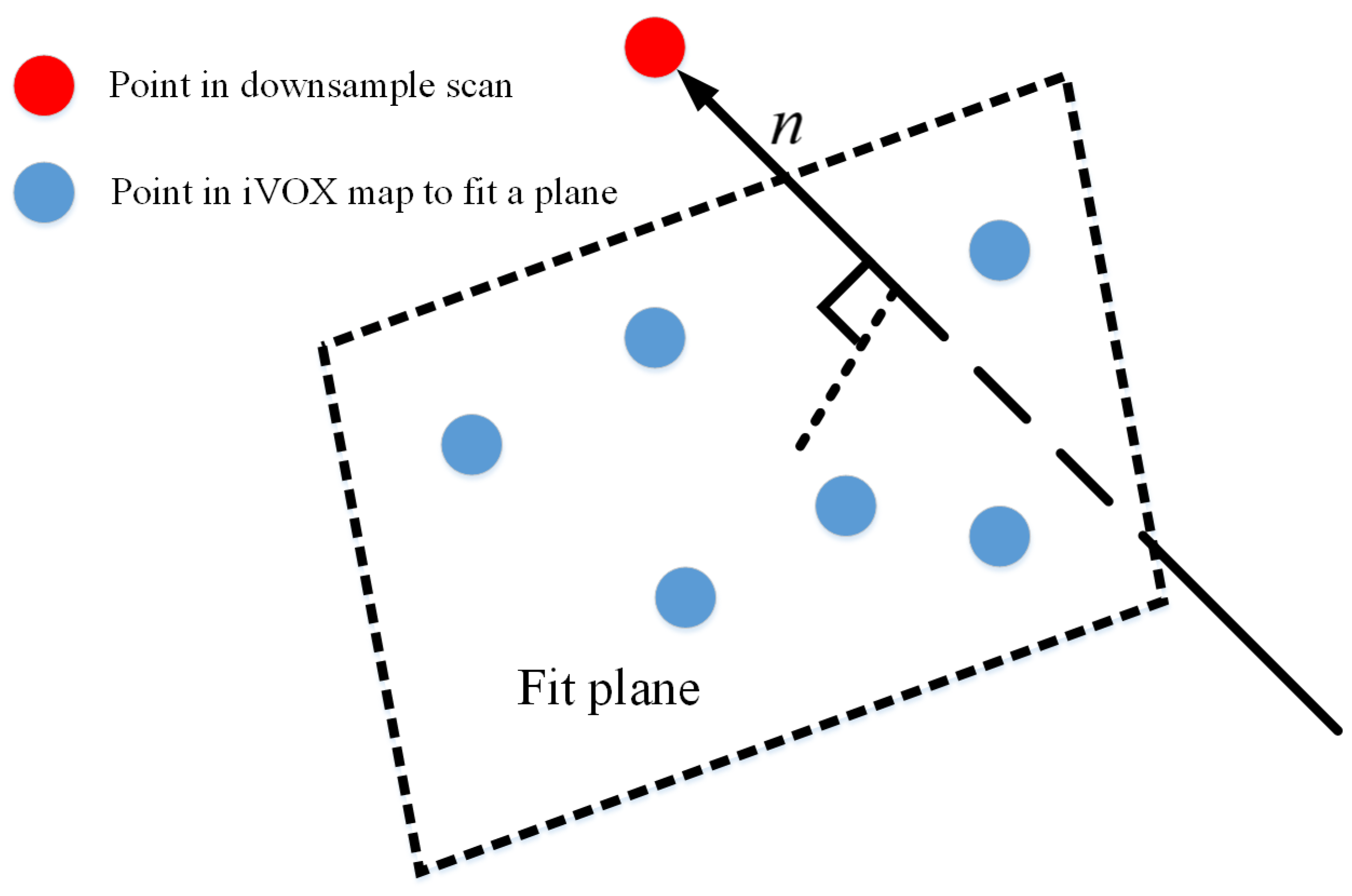

4.2.4. Point-cloud Residual Computation and State Update

After the motion compensation, we obtain the undistorted point cloud denoted as

and use it to construct the residual. Assuming the current iteration of the iterated Kalman filter is

, and the corresponding state estimation is

and the covariance matrix is

.When new scanning

is input, a downsample process will be executed that generate

. Then we transform the point cloud

into world frame as:

After downsampling and transforming, the nearest points of

in hash map are retrieved. Each set of nearest points is used to fit a plane and calculate the distance between the plane with the corresponding point in the downsampled point cloud, as shown in

Figure 6.

Specifically, for each point

, we find its nearest points set

in built map:

We then calculate the normal vector

n to fit the plane formed by

as:

where

. The residual of LiDAR measurements can be then calculated as:

We construct an optimization equation for

as:

where

is the noise of LiDAR measurements, and

is the Jacobian w.r.t position

p and posture

. Similarly, we use iterated Kalman filter to solve Equation (

30) as follows:

where Kalman gain

is solved in Equation (31),

is Equation (32), and used to update state

in Equation (33) and covariance

in Equation (34).

The overall procedure of state estimation can be summarized in Algorithm. 1

|

Algorithm 1 State Estimation Algorithm |

-

Require:

Last optimal estimation and covariance matrix , LiDAR scan , the sequence of IMU measurements , wheel encoder measurements and the measurements of motor encoder in scan . - 1:

whiledo - 2:

integrate IMU measurements to obtain predict as Equation ( 10) and as Equation ( 14). - 3:

calculate the residual by Equation ( 16). - 4:

solving Equation ( 16) measurements to calculate as Equation (18). - 5:

update by and by Equation ( 31) - 6:

- 7:

end while - 8:

distort the scan by state estimation of IMU integration and measurement of motor encoder according to Equation ( 23) and Equation ( 25). - 9:

calculate normal vector and residual for every point in by Equation ( 29). - 10:

while do - 11:

- 12:

update and by Equation (33) Equation (34) - 13:

end while -

Ensure:

and |

4.3. Mapping

In the mapping module, we adopt the iVox structure proposed in [

34] to achieve storage and management of the global map based on the hash algorithm [

39].

When a new scan is fed into the mapping module, the first step is to compute a hash-index for each point. Subsequently, we check whether a voxel in iVox shares the same hash-index as each point. If a voxel with the same hash-index is found, the corresponding point is inserted into that voxel. Conversely, if such a voxel does not exist, a new voxel is created based on the hash-index, and the point is then inserted into it. Each voxel has a maximum capacity, and once this capacity is reached, no additional points can be inserted.

Regarding the search and matching process, we primarily utilize the k-nearest neighbors search (k-NN) method to identify voxels that fulfill the specified criteria. Compared to traditional k-d tree structures, the iVox structure offers significantly faster insertion and retrieval speeds for voxels, typically ranging from 1 to 2 orders of magnitude faster. This improvement is achieved by leveraging hash algorithms.

5. Experiments

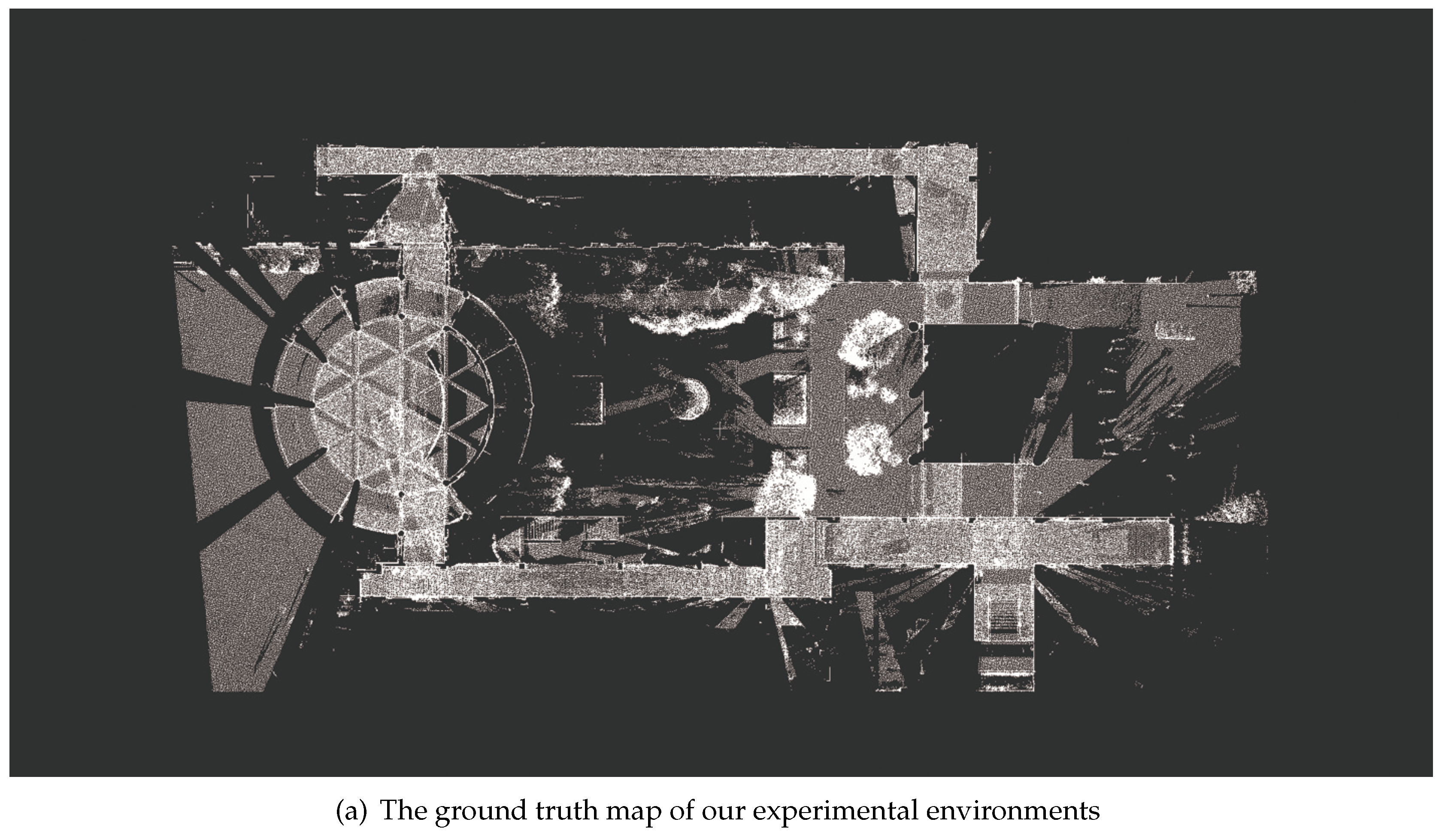

We conduct experiments to evaluate the effectiveness of our RSS-LIWOM and rotating platform under two challenging scenes, an indoor-outdoor mixed campus and an indoor stairway, as shown in

Figure 7. The campus environment presents several challenges, such as scene changes and the presence of a long, narrow, symmetric corridor with limited structural features. This corridor specifically poses a significant challenge for LiDAR odometry, which relies heavily on structural information for accurate mapping. On the other hand, the odometry and mapping in the stairway environment introduce additional complexities due to large motion vibrations and sharp turns.

We choose three current state-of-the-art approaches as our baselines, FAST-LIO2 [

33], LIO-SAM [

28], and EKF-LOAM [

36]. For a fair comparison, we use the solid-state LiDAR Livox HAP for all methods. However, EKF-LOAM was designed for mechanical LiDAR and failed to work with solid-state LiDAR. We therefore additionally employ a more expensive mechanical LiDAR Velodyne-VLP16 for EKF-LOAM, denoted as EKF-LOAM

. We also provide results of two variants of our approach to show the effectiveness of our designs, where RSS-LIWOM

represent our RSS-LIWOM without rotating platform, and RSS-LIWOM

represents RSS-LIWOM without wheel odometry. We evaluate both the odometry and mapping results of all methods and all the experiments are performed in real-time.

5.1. Evaluation on Odometry Estimation

It is hard to obtain the ground truth pose in indoor and outdoor mixed environments. Therefore, we evaluate the odometry performance using accumulated position errors. Specifically, for the stairway, we first measure the ground truth height between floors using Faro FocusS350 scanner, and then calculate the difference between the estimated climbing height of each odometry method with the ground truth height. For the campus environment, we control the robot to circumnavigate around the area and return to the star position, and then compute the difference between the final pose and the origin.

We present the odometry results in the campus scene in

Table 2. As can be seen, Our approach demonstrates superior performance in the campus scene, achieving the lowest accumulated error of 2.08 m and an error per meter of 0.01 m. Notably, our approach also achieves the smallest

z axis drift of 0.01 m. This advantage is particularly significant during the mapping stage, as precise estimation of the

z direction helps prevent layering artifacts. In comparison, EKF-LOAM struggles to function effectively with a solid-state LiDAR, while FAST-LIO2 and LIO-SAM exhibit significant odometry drift. Although EKF-LOAM

performs well with a more expensive mechanical LiDAR, our RSS-LIWOM still outperforms it. Comparing our RSS-LIWOM with its variants, RSS-LIWOM

and RSS-LIWOM

, it can be observed that the fusion with wheel odometry and our devised rotating sensory platform contributes to improve performance. For long-distance scenes, the fusion of wheel encoder has a more significant impact on performance improvement.

The results obtained in the stairway scene are presented in

Table 3. In this experiment, the robot climbs multiple floors via stairs. As observed from the results, our RSS-LIWOM outperforms all baseline methods. It is worth noting that FAST-LIO2, LIO-SAM, and EKF-LOAM all fail to produce meaningful results. Although EKF-LOAM

using a more expensive mechanical LiDAR still works, its performance is inferior to all our variants. Comparing our variants, we consistently observe that each component of our approach contributes to improved performance. Specifically, the introduction of the rotating platform leads to a significant performance enhancement, reducing the error from 0.43 m to 0.06 m. This finding suggests that a wider FOV and additional information are crucial for narrow scenes. By integrating all the modules, our approach achieves the best performance overall.

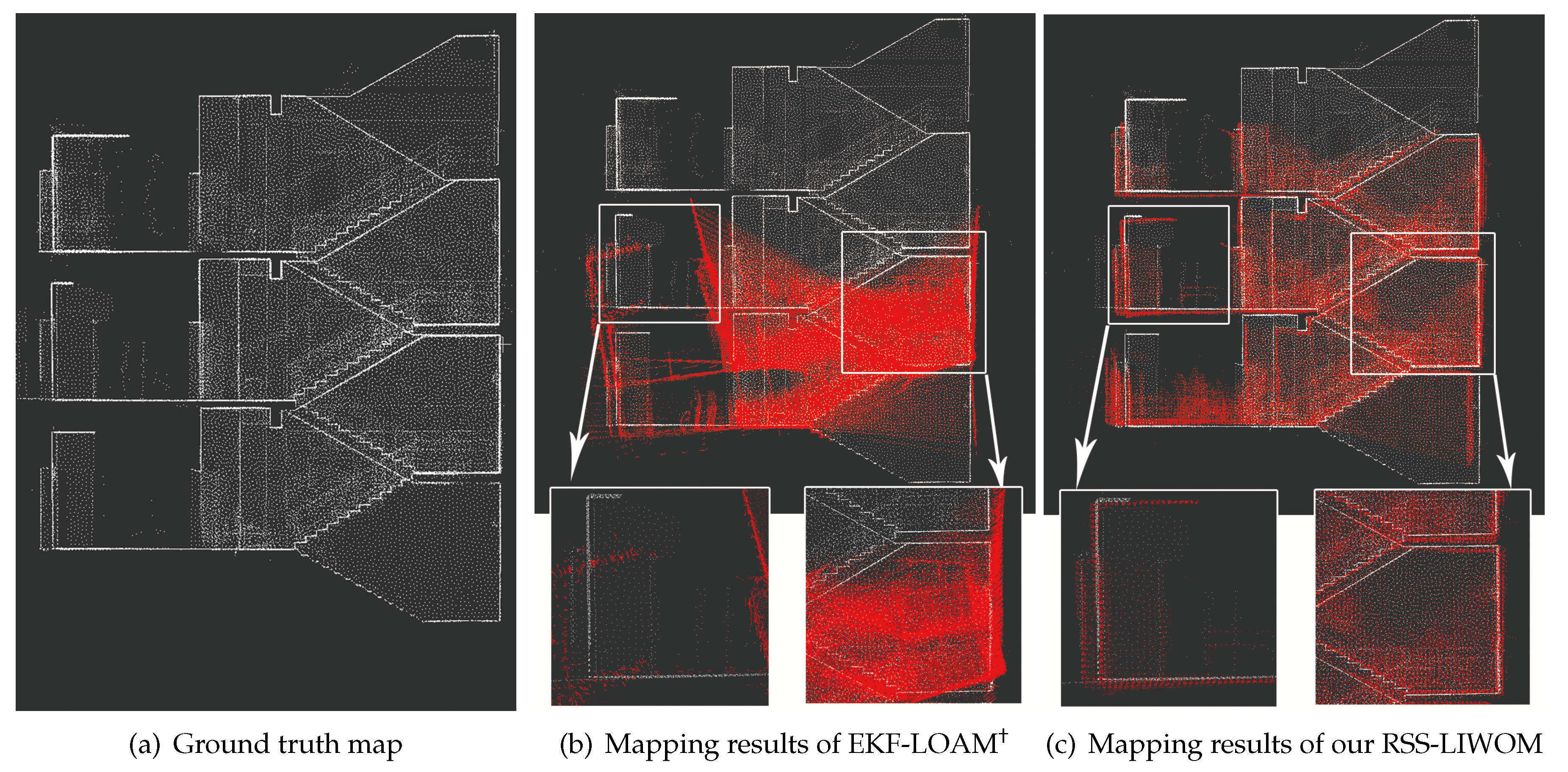

5.2. Evaluation on Mapping Quality

In this section, we evaluate the mapping quality of our RSS-LIWOM compared to the baselines with respect to the ground truth map obtained by Faro FocusS350 scanner. The real scene and corresponding ground truth map are shown in

Figure 7. We employ two metrics to quantitatively evaluate the accuracy of reconstructed maps of each methods. The first metric measures the accuracy of the reconstructed map using Chamfer distance, which computes the average distance between each point in the reconstructed map to its nearest counterpart in the ground truth map. The second metric is the mapping coverage rate, which represents the percentage of map points that are accurately reconstructed. We define accurately reconstructed map points as those whose distance to the nearest point in the ground truth map is below a threshold of 0.2 m. To ensure a fair comparison, the mapping results of all the methods are voxelized using a consistent voxel size of 0.1 m. Additionally, we provide the number of points in the mapping result for each method.

The quantitative mapping results for both scenes are presented in

Table 4. As can be seen, our RSS-LIWOM utilizing a rotating solid-state LiDAR, benefits from a larger FOV and accurate odometry estimation, resulting in the highest mapping accuracy and coverage rate in both the campus and stairway scenes. Specifically, in the campus scene, our approach achieves the lowest Chamfer Distance of 0.67 m and the highest coverage rate of 70.2 %. In the stairway scene, our approach consistently achieves a lowest Chamfer Distance of 0.37 m and a coverage rate of 70 %. In contrast, FAST-LIO2 is limited by its narrow FOV solid-state LiDAR, which leads to inaccurate odometry estimation. Consequently, FAST-LIO2 exhibits suboptimal mapping accuracy in the campus scene and fails to produce meaningful results in the stairway scene. The performance of EKF-LOAM varies significantly between the two scenes. It achieves comparable mapping accuracy to our approach in the campus scene but does not perform well in the stairway scene. EKF-LOAM also suffers from the issue of feature degeneration in narrow scenes, which contributes to its poor performance. Overall, the larger FOV and accurate odometry estimation provided by our rotating solid-state LiDAR enable our approach to outperform FAST-LIO2 and EKF-LOAM in terms of mapping accuracy and coverage rate in both scenes.

To better demonstrate the advantages of our RSS-LIWOM in mapping, we provide more qualitative visualization results of different methods compared against the ground truth maps. We first visualize the mapping result in campus scene in

Figure 8. The red dots represent the mapping results of our RSS-LIWOM and baseline methods, while the white dots represent the ground truth map points. As can be seen, our reconstructed maps align better than other methods with the ground truth maps, which reveals that benefiting from high-quality point cloud and accurate odometry estimation, RSS-LIWOM performs better and captures more environmental details than the baseline methods. FAST-LIO2 performs sub-optimally with significant deviation from the ground truth map due to feature degradation. Although EKF-LOAM has similar overall mapping performance as RSS-LIWOM, it cannot capture as many details as RSS-LIWOM. We zoom in on two typical areas in campus scene, a narrow corridor and the starting/ending points, our RSS-LIWOM overlaps better with the ground truth map than the baselines and achieves a higher coverage rate.

We also visualize the mapping results in the stairway scene. As shown in

Figure 9(c), our RSS-LIWOM performs well in the climbing stairway scene, achieving a high degree of overlap with the ground truth map and clear distinction between different floors. In contrast, the mapping accuracy of EKF-LOAM significantly decreases when climbing stairs (as shown in

Figure 9(b)). This is mainly due to the inaccurate vertical odometry estimation of EKF-LOAM.

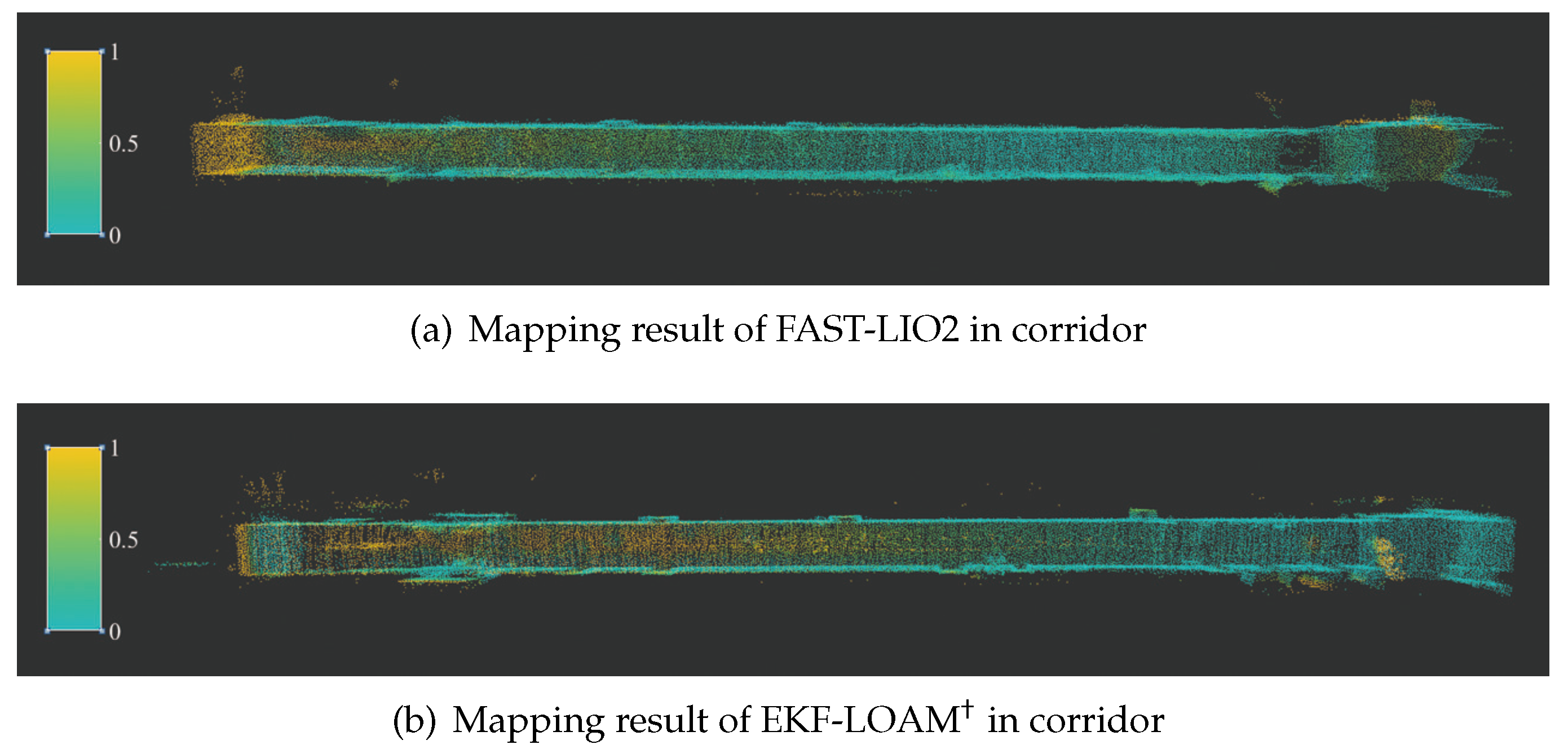

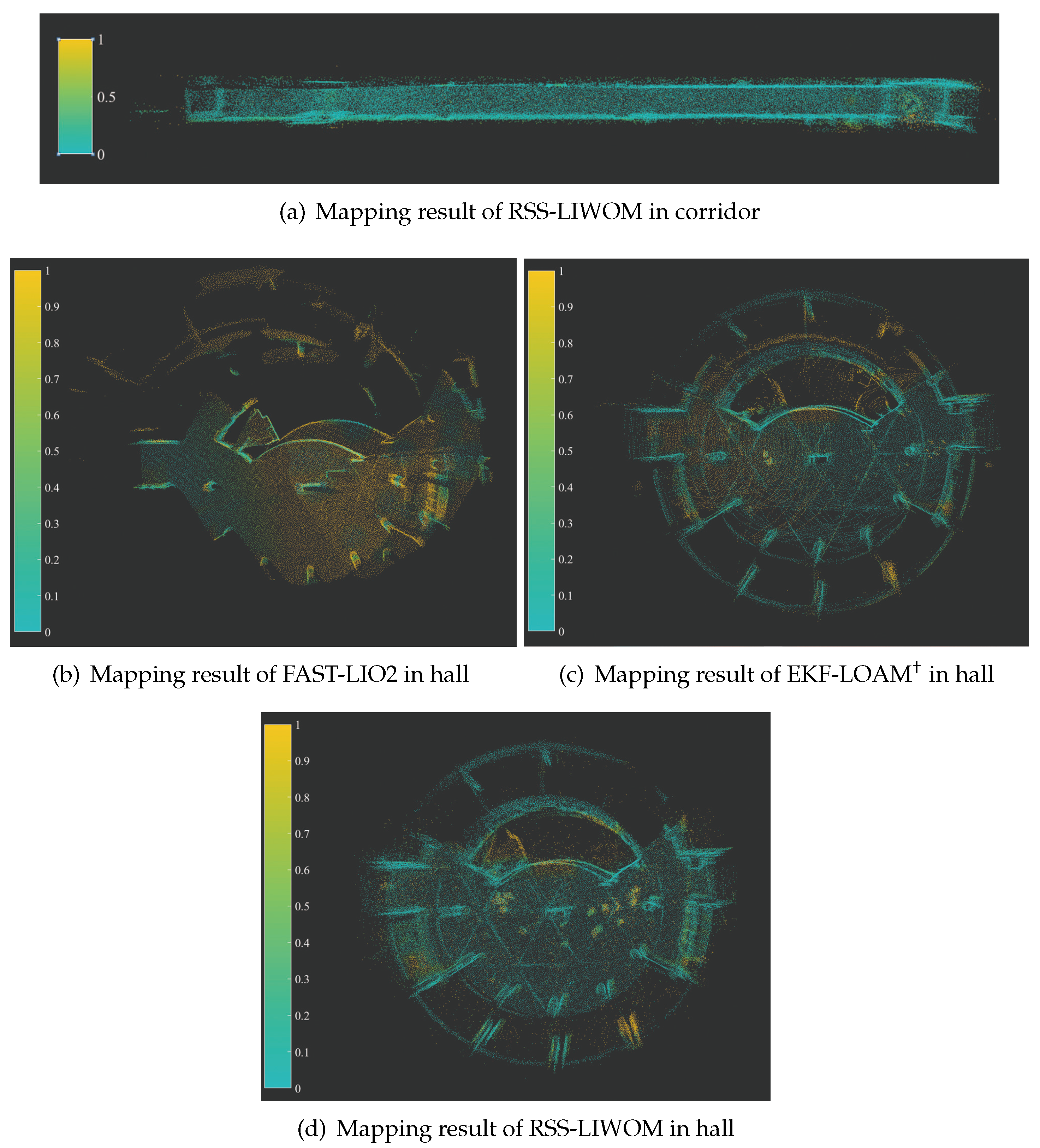

We furthermore provide qualitatively comparison of different methods in terms of heat maps shown in

Figure 10. In the figure, different colors represent the magnitude of Chamfer Distance for each point, with cyan indicating smaller distances and orange indicating larger. The visualization results provide a more intuitive demonstration of the advantages of our algorithm in terms of Chamfer Distance in corridor and hall of campus, compared with FAST-LIO2 and EKF-LOAM.

5.3. Smoothness of Velocity Estimation

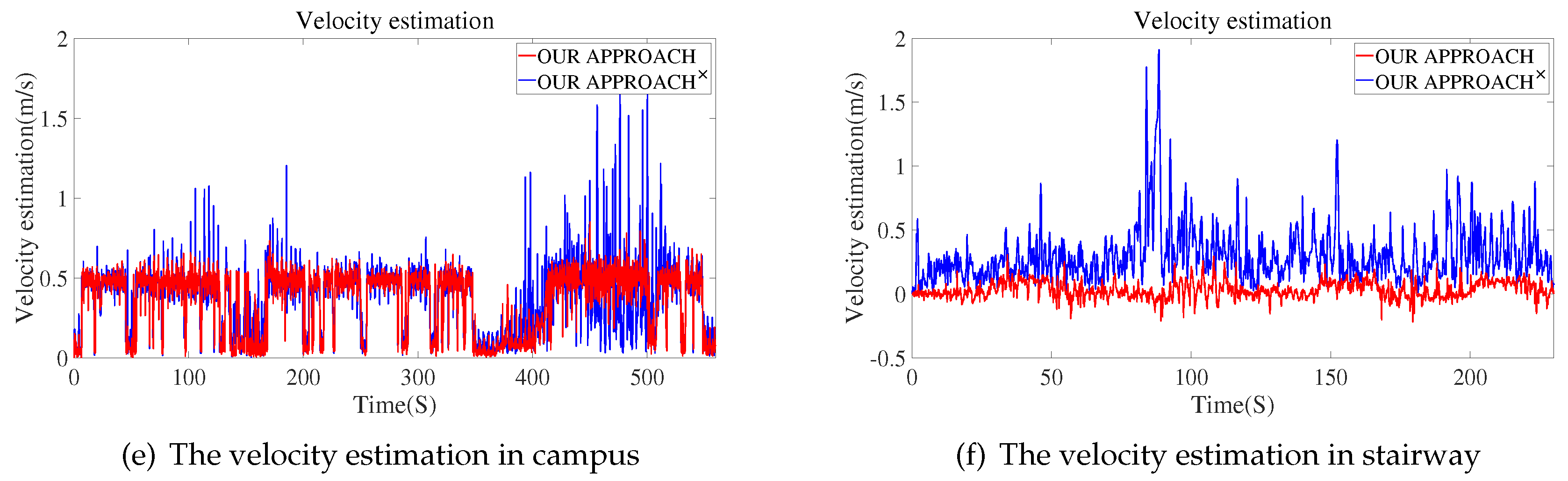

To further demonstrate the improvement of our method through the fusion wheel encoder measurements, we compare RSS-LIWOM with RSS-LIWOM in terms of the smoothness of the velocity estimation.

We evaluate the velocity estimation based on the hypothesis that the robot’s movements should be continual and smooth, rather than high-frequent oscillations. As shown in

Figure 11, our RSS-LIWOM achieves smoother velocity estimation by incorporating wheel encoder measurements, outperforming methods that do not utilize such information.

6. Discussions

In this work, we design a rotating solid-state LiDAR sensory platform and develop a LIWOM framework named RSS-LIWOM that fuses LiDAR, IMU, and wheel encoder data for odometry and mapping on ground unmanned platforms such as track robots. The rotating solid-state LiDAR has a larger scanning range and stronger ability to capture detailed information in the environment, compared to original solid-state LiDAR and mechanism LiDAR. The LIWOM framework uses wheel encoder measurements as an observation for velocity, which is not present in existing LIOM frameworks. By combining the rotating solid-state LiDAR and LIWOM framework, our RSS-LIWOM achieved a robust and accurate LIWOM system.

We conducted extensive experiments to thoroughly evaluate our approach using our own-designed track robot, which navigated through both indoor-outdoor mixed campus and stairway environments. In the campus environment, the robot followed a predetermined trajectory and returned to the starting point. We compared our approach with the baseline in terms of odometry estimation and mapping results, and our approach outperformed the baselines by exhibiting the lowest odometry drift. In the stair scene experiment, we manipulated the robot to climb stairs across multiple floors. Our approach demonstrated minimal odometry drift in estimating the vertical orientation. Additionally, we generated a highly precise 3D map using Faro FocusS350 scanner as the ground truth for evaluating mapping quality. When comparing the Chamfer Distance with the ground truth, our RSS-LIWOM achieved the best results. These experiments validate the localization and mapping capabilities of our approach on ground unmanned platforms within building scenes.

In future work, we aim to improve our approach by enhancing map management with a confidence factor for greater robustness and adaptability. We also plan to integrate trajectory planning and object recognition, enabling robots to navigate complex environments more efficiently. These advancements will make our approach more valuable for applications such as search and rescue, exploration, and transportation.

References

- Yan, L.; Dai, J.; Zhao, Y.; Chen, C. Real-Time 3D Mapping in Complex Environments Using a Spinning Actuated LiDAR System. Remote Sensing 2023. [Google Scholar] [CrossRef]

- Chen, W.; Shang, G.; Ji, A.; Zhou, C.; Wang, X.; Xu, C.; Li, Z.; Hu, K. An overview on visual slam: From tradition to semantic. Remote Sensing 2022. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: a versatile and accurate monocular SLAM system. IEEE Trans. on Robotics (TRO) 2015. [Google Scholar] [CrossRef]

- Wang, R.; Wan, W.; Wang, Y.; Di, K. A new RGB-D SLAM method with moving object detection for dynamic indoor scenes. Remote Sensing 2019. [Google Scholar] [CrossRef]

- Zhang, M.; Chen, Y.; Li, M. Vision-Aided Localization For Ground Robots. Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2019.

- Liu, J.; Gao, W.; Hu, Z. Visual-Inertial Odometry Tightly Coupled with Wheel Encoder Adopting Robust Initialization and Online Extrinsic Calibration. Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2019.

- Wang, D.; Watkins, C.; Xie, H. MEMS mirrors for LiDAR: A review. Micromachines 2020. [Google Scholar] [CrossRef] [PubMed]

- Liu, Z.; Zhang, F.; Hong, X. Low-Cost Retina-Like Robotic Lidars Based on Incommensurable Scanning. IEEE/ASME Transactions on Mechatronics 2022.

- Chen, Y.; Medioni, G. Object modeling by registration of multiple range images. Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 1991.

- Zhang, Z. Iterative point matching for registration of free-form curves and surfaces. Intl. Journal of Computer Vision (IJCV) 1994. [Google Scholar] [CrossRef]

- Segal, A.; Haehnel, D.; Thrun, S. Generalized-icp. Robotics: science and systems, 2009.

- Besl, P.; McKay, N.D. A method for registration of 3-D shapes. IEEE Transactions on Pattern Analysis and Machine Intelligence 1992. [Google Scholar] [CrossRef]

- Vizzo, I.; Guadagnino, T.; Mersch, B.; Wiesmann, L.; Behley, J.; Stachniss, C. KISS-ICP: In Defense of Point-to-Point ICP Simple, Accurate, and Robust Registration If Done the Right Way. IEEE Robotics and Automation Letters (RA-L) 2023.

- Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. Robotics: Science and Systems, 2014.

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain. Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2018.

- Lin, J.; Zhang, F. Loam livox: A fast, robust, high-precision LiDAR odometry and mapping package for LiDARs of small FoV. Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2020.

- Chen, X.; Milioto, A.; Palazzolo, E.; Giguère, P.; Behley, J.; Stachniss, C. SuMa++: Efficient LiDAR-based Semantic SLAM. Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2019.

- Chen, X.; Läbe, T.; Milioto, A.; Röhling, T.; Behley, J.; Stachniss, C. OverlapNet: A Siamese Network for Computing LiDAR Scan Similarity with Applications to Loop Closing and Localization. Autonomous Robots 2021, 46, 61–81. [Google Scholar] [CrossRef]

- Shi, C.; Chen, X.; Huang, K.; Xiao, J.; Lu, H.; Stachniss, C. Keypoint matching for point cloud registration using multiplex dynamic graph attention networks. IEEE Robotics and Automation Letters 2021, 6, 8221–8228. [Google Scholar] [CrossRef]

- Guadagnino, T.; Chen, X.; Sodano, M.; Behley, J.; Grisetti, G.; Stachniss, C. Fast Sparse LiDAR Odometry Using Self-Supervised Feature Selection on Intensity Images. IEEE Robotics and Automation Letters 2022, 7, 7597–7604. [Google Scholar] [CrossRef]

- Shi, C.; Chen, X.; Lu, H.; Deng, W.; Xiao, J.; Dai, B. RDMNet: Reliable Dense Matching Based Point Cloud Registration for Autonomous Driving. IEEE Trans. on Intelligent Transportation Systems (ITS) 2023. [Google Scholar]

- Deng, J.; Chen, X.; Xia, S.; Sun, Z.; Liu, G.; Yu, W.; Pei, L. NeRF-LOAM: Neural Implicit Representation for Large-Scale Incremental LiDAR Odometry and Mapping. arXiv preprint arXiv:2303.10709 2023. arXiv:2303.10709 2023.

- Chen, H.; Wu, W.; Zhang, S.; Wu, C.; Zhong, R. A GNSS/LiDAR/IMU Pose Estimation System Based on Collaborative Fusion of Factor Map and Filtering. Remote Sensing 2023. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, H.; Lu, H.; Xiao, J.; Qiu, Q.; Li, Y. Robust SLAM system based on monocular vision and LiDAR for robotic urban search and rescue. Proc. of the Intl. Symposium on Safety, Security and Rescue Robotics (SSRR), 2017, pp. 41–47.

- Yang, X.; Lin, X.; Yao, W.; Ma, H.; Zheng, J.; Ma, B. A Robust LiDAR SLAM Method for Underground Coal Mine Robot with Degenerated Scene Compensation. Remote Sensing 2022. [Google Scholar] [CrossRef]

- Zhen, W.; Zeng, S.; Soberer, S. Robust localization and localizability estimation with a rotating laser scanner. Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2017.

- Geneva, P.; Eckenhoff, K.; Yang, Y.; Huang, G. Lips: Lidar-inertial 3d plane slam. Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2018.

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2020.

- Bry, A.; Bachrach, A.; Roy, N. State estimation for aggressive flight in GPS-denied environments using onboard sensing. Proc. of the IEEE Intl. Conf. on Robotics & Automation (ICRA), 2012.

- Xu, C.; Zhang, H.; Gu, J. Scan Context 3D Lidar Inertial Odometry via Iterated ESKF and Incremental K-Dimensional Tree. Proc. of the IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), 2022.

- Sola, J. Quaternion kinematics for the error-state Kalman filter. arXiv preprint 2017. [Google Scholar]

- Xu, W.; Zhang, F. FAST-LIO: A Fast, Robust LiDAR-Inertial Odometry Package by Tightly-Coupled Iterated Kalman Filter. IEEE Robotics and Automation Letters (RA-L) 2021.

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. FAST-LIO2: Fast Direct LiDAR-Inertial Odometry. IEEE Trans. on Robotics (TRO) 2022. [Google Scholar] [CrossRef]

- Bai, C.; Xiao, T.; Chen, Y.; Wang, H.; Zhang, F.; Gao, X. Faster-LIO: Lightweight Tightly Coupled Lidar-Inertial Odometry Using Parallel Sparse Incremental Voxels. IEEE Robotics and Automation Letters (RA-L) 2022.

- Nießner, M.; Zollhöfer, M.; Izadi, S.; Stamminger, M. Real-time 3D reconstruction at scale using voxel hashing. ACM Transactions on Graphics 2013. 2013.

- Júnior, G.P.C.; Rezende, A.M.C.; Miranda, V.R.F.; Fernandes, R.; Azpúrua, H.; Neto, A.A.; Pessin, G.; Freitas, G.M. EKF-LOAM: An Adaptive Fusion of LiDAR SLAM With Wheel Odometry and Inertial Data for Confined Spaces With Few Geometric Features. Proc. of the IEEE Transactions on Automation Science and Engineering(T-ASE) 2022.

- Yuan, Z.; Lang, F.; Xu, T.; Yang, X. LIW-OAM: Lidar-Inertial-Wheel Odometry and Mapping. arXiv preprint 2023. [Google Scholar]

- Zhu, F.; Ren, Y.; Zhang, F. Robust real-time lidar-inertial initialization. Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2022.

- Teschner, M. Optimized Spatial Hashing for Collision Detection of Deformable Objects. VMV 2003. [Google Scholar]

Figure 1.

(a) Rotating solid-state LiDAR, (b) experimental robot, (c) mapping result of campus.

Figure 1.

(a) Rotating solid-state LiDAR, (b) experimental robot, (c) mapping result of campus.

Figure 2.

The scanning result of original solid-state LiDAR and our rotating solid-state LiDAR. (a) is one scanning of original solid-state LiDAR. (b) is the result of two seconds scanning in the same place of original solid-state LiDAR. (c) is the result of two seconds scanning obtained by our rotating solid-state LiDAR.

Figure 2.

The scanning result of original solid-state LiDAR and our rotating solid-state LiDAR. (a) is one scanning of original solid-state LiDAR. (b) is the result of two seconds scanning in the same place of original solid-state LiDAR. (c) is the result of two seconds scanning obtained by our rotating solid-state LiDAR.

Figure 3.

Assembly error between the LiDAR and motor.

Figure 3.

Assembly error between the LiDAR and motor.

Figure 4.

Diagram of calibration showing the relationship of different measurements.

Figure 4.

Diagram of calibration showing the relationship of different measurements.

Figure 5.

System overview of our approach, the system can be separated into two parts: state estimation and mapping. In state estimation, iterated Kalman filter is adopted to estimate state variables. IMU measurements are used for integration to generate state prediction. It then updates velocity state by fusing wheel encoder, transforms raw points from LiDAR frame to IMU frame and compensates for motion distortion. LiDAR scanning after motion compensation is used to compute residual to optimize the pose to obtain final odometry result. In mapping module, the new scan is then added to the map that consists of hash structure and the updated mapping result.

Figure 5.

System overview of our approach, the system can be separated into two parts: state estimation and mapping. In state estimation, iterated Kalman filter is adopted to estimate state variables. IMU measurements are used for integration to generate state prediction. It then updates velocity state by fusing wheel encoder, transforms raw points from LiDAR frame to IMU frame and compensates for motion distortion. LiDAR scanning after motion compensation is used to compute residual to optimize the pose to obtain final odometry result. In mapping module, the new scan is then added to the map that consists of hash structure and the updated mapping result.

Figure 6.

LiDAR measurements model. Red point is the point in scan, blue points are the points in map nearby red point, and the vector from plane to red point is the normal and residual.

Figure 6.

LiDAR measurements model. Red point is the point in scan, blue points are the points in map nearby red point, and the vector from plane to red point is the normal and residual.

Figure 7.

Real scene and ground truth map of our experimental environments.

Figure 7.

Real scene and ground truth map of our experimental environments.

Figure 8.

The mapping results of (a) FAST-LIO2, (b) EKF-LOAM, and (c) our RSS-LIWOM. The white point-cloud is the ground truth map points measured by Faro FocusS350 scanner and the red point-cloud is the estimated result.

Figure 8.

The mapping results of (a) FAST-LIO2, (b) EKF-LOAM, and (c) our RSS-LIWOM. The white point-cloud is the ground truth map points measured by Faro FocusS350 scanner and the red point-cloud is the estimated result.

Figure 9.

(a) The ground truth map and the mapping result of (b) EKF-LOAM and (c) our RSS-LIWOM. different approach in stair scene. White point cloud is ground truth map measured by Faro FocusS350 scanner and the red point cloud is the estimated result.

Figure 9.

(a) The ground truth map and the mapping result of (b) EKF-LOAM and (c) our RSS-LIWOM. different approach in stair scene. White point cloud is ground truth map measured by Faro FocusS350 scanner and the red point cloud is the estimated result.

Figure 10.

The visual results of chamfer distance and cover rate in typical part of campus scene. (a) is FAST-LIO2 in corridor, (b) is EKF-LOAM in corridor, (c) is RSS-LIWOM in corridor, (d) is FAST-LIO2 in hall, (e) is EKF-LOAM in hall and (f) is RSS-LIWOM in hall.

Figure 10.

The visual results of chamfer distance and cover rate in typical part of campus scene. (a) is FAST-LIO2 in corridor, (b) is EKF-LOAM in corridor, (c) is RSS-LIWOM in corridor, (d) is FAST-LIO2 in hall, (e) is EKF-LOAM in hall and (f) is RSS-LIWOM in hall.

Figure 11.

The velocity estimation of RSS-LIWOM (blue line) and RSS-LIWOM (red line ). (a) The velocity estimation in campus. (b) The velocity estimation in stairway.

Figure 11.

The velocity estimation of RSS-LIWOM (blue line) and RSS-LIWOM (red line ). (a) The velocity estimation in campus. (b) The velocity estimation in stairway.

Table 1.

Notations in our paper.

Table 1.

Notations in our paper.

| Symbols |

Meaning |

|

The timestamp of the measurements of senor A. |

|

scan and after downsampling in A frame. |

|

true state variables, nominal state variables and error state variables calculated |

| |

by measurement of senor A. |

|

T is transform from B to A that R is rotation and t is translation. |

|

The coordinate system of world, IMU, LiDAR, wheel odometry and rotating platform. |

Table 2.

Odometry evaluation on campus.

Table 2.

Odometry evaluation on campus.

| approach |

x drift(m) |

y drift(m) |

z drift(m) |

accumulated errors(m) |

error per meter(m) |

| FAST-LIO2 |

2.23 |

0.45 |

3.77 |

4.40 |

0.02 |

| LIO-SAM |

22.16 |

19.21 |

6.69 |

30.06 |

0.12 |

| EKF-LOAM |

- |

- |

- |

- |

- |

| EKF-LOAM

|

1.72 |

0.01 |

1.60 |

2.36 |

0.01 |

| RSS-LIWOM

|

2.64 |

1.11 |

1.53 |

3.25 |

0.02 |

| RSS-LIWOM

|

2.74 |

4.75 |

0.79 |

5.54 |

0.03 |

| RSS-LIWOM (Ours) |

2.07 |

0.04 |

0.01 |

2.08 |

0.01 |

Table 3.

Odometry evaluation on stairway.

Table 3.

Odometry evaluation on stairway.

| approach |

z drift(m) |

error per meter height(m) |

| FAST-LIO2 |

- |

- |

| LIO-SAM |

- |

- |

| EKF-LOAM |

- |

- |

| EKF-LOAM

|

5.14 |

0.67 |

| RSS-LIWOM

|

3.29 |

0.43 |

| RSS-LIWOM

|

1.64 |

0.21 |

| RSS-LIWOM (Ours) |

0.44 |

0.06 |

Table 4.

Comparison of mapping quality.

Table 4.

Comparison of mapping quality.

| Scene |

Method |

Chamfer Distance |

cover rate(%) |

number of points |

| ]3*Campus |

FAST-LIO2 |

1.44 |

16.2 |

4.3

|

| |

EKF-LOAM

|

0.69 |

62.7 |

2.7

|

| |

RSS-LIWOM (Ours) |

0.67 |

70.2 |

4.8

|

| ]3*Stairway |

FAST-LIO2 |

- |

- |

- |

| |

EKF-LOAM

|

1.50 |

36.2 |

3.1

|

| |

RSS-LIWOM (Ours) |

0.37 |

70.0 |

4.4

|

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).