Submitted: 17 July 2023

Posted: 18 July 2023


Abstract

Keywords:

1. Introduction

- Universality: Every emitter possesses the characteristics or features used to identify it.

- Uniqueness: No two emitters share exactly the same RF fingerprint or SEI-exploited features.

- Permanence: The RF fingerprint features are invariant to time or environmental conditions.

- Collectability: The exploited features can be quantitatively measured.

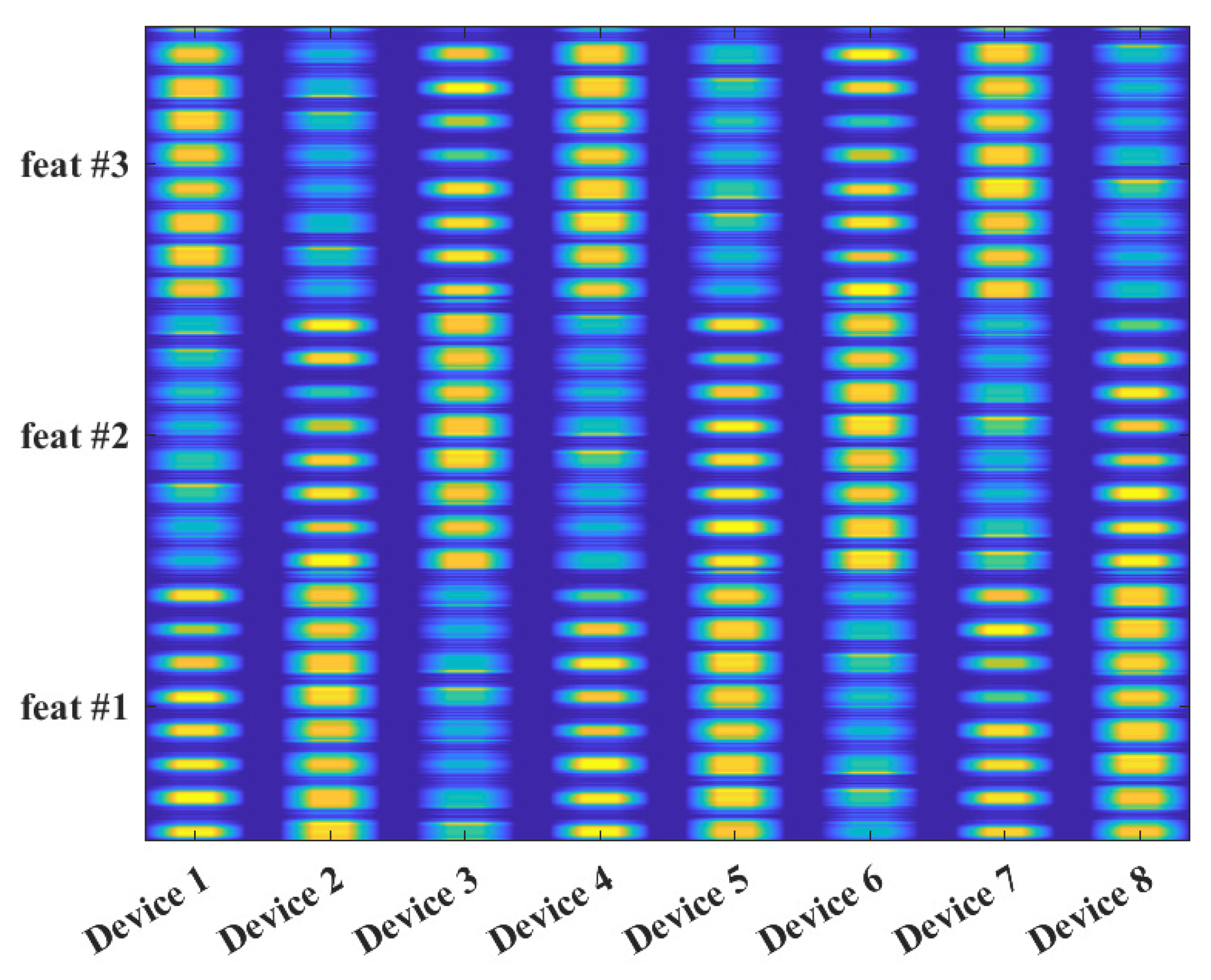

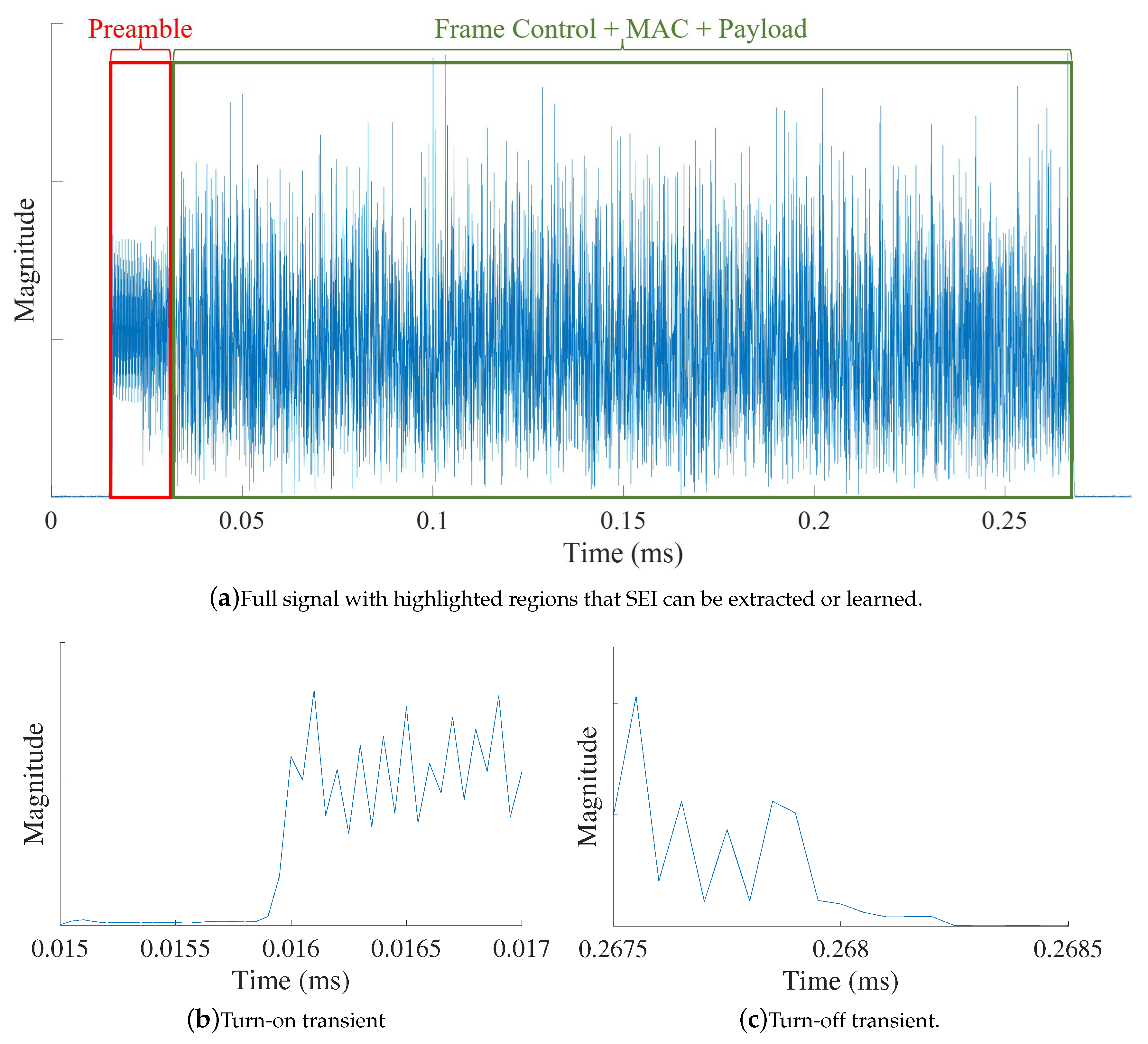

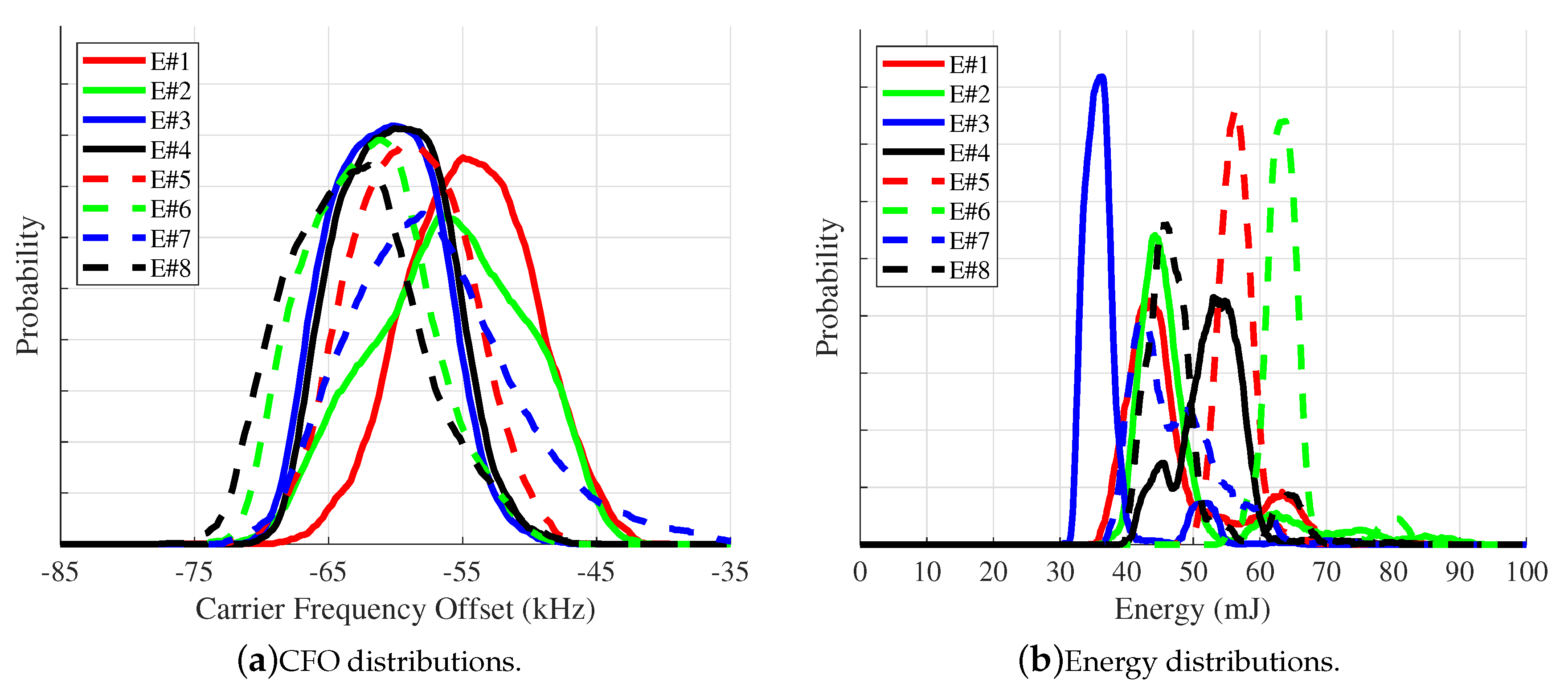

2. The Essence of Specific Emitter Identification

3. Specific Emitter Identification Operating Conditions

3.1. Operating Channel Conditions

3.2. Operating Temperature Conditions

4. Threats to Specific Emitter Identification

5. Specific Emitter Identification at Scale

5.1. Increasing Number of Emitters

5.2. Cross-Collection SEI

6. SEI Data Sets

- POWDER Signals Set: The Platform for Open Wireless Data-driven Experimental Research (POWDER) signals set is used to evaluate SEI performance in vendor-neutral hardware deployments of 5G and Open Radio Access Networks (ORANs) [158]. In the new 5G and ORAN paradigm, an emitter may transmit signals of different protocols, such as 5G, Long-Term Evolution (LTE), and Wi-Fi, at different times. The work in [158] evaluates SEI as a PHY layer authentication technique in such networks using over-the-air signals collected on the large-scale POWDER platform. The signals set includes IQ samples collected from four base stations located in different geographical areas. Each base station is implemented using an Ettus USRP X310 SDR and transmits standard-compliant IEEE 802.11a Wi-Fi, LTE, and Fifth Generation-New Radio (5G-NR) frames generated using MATLAB®'s Wireless Local Area Network (WLAN), LTE, and 5G toolboxes. A USRP B210 SDR at a fixed location collects the signals transmitted by the four base stations at a sampling frequency of 5 MHz for Wi-Fi and 7.69 MHz for LTE and 5G. For each base station, IQ samples are collected on two separate days. Each single-day collection consists of five two-second sets of IQ samples per base station and protocol. The data are stored in binary files using the Signal Metadata Format (SigMF): each SigMF recording consists of a metadata file describing the collected signals and a data file holding the collected IQ samples.

- DeepSig RadioML Signals Sets: These signals sets are used in [63,159] to evaluate the classification performance of emitter signals. The work in [63] studies the effects of symbol rate and channel impairments on RF signal classification performance by (i) simulating the effects of CFO, symbol rate, and multipath and (ii) measuring over-the-air classification performance using software emitters. The signals set used in [63] covers twenty-four digital and analog single-carrier modulation schemes: On-Off Keying (OOK), 4-ary Amplitude Shift Keying (ASK), 8-ary ASK, Binary Phase Shift Keying (BPSK), Quadrature Phase Shift Keying (QPSK), 8-ary Phase Shift Keying (PSK), 16-ary PSK, 32-ary PSK, 16-ary Amplitude and Phase Shift Keying (APSK), 32-ary APSK, 64-ary APSK, 128-ary APSK, 16QAM, 32QAM, 64QAM, 128QAM, 256QAM, Amplitude Modulation-Single Side-Band-With Carrier (AM-SSB-WC), AM-SSB with Suppressed Carrier (AM-SSB-SC), AM-Double Side-Band (DSB)-WC, AM-DSB-SC, Frequency Modulation (FM), Gaussian Minimum Shift Keying (GMSK), and Offset Quadrature Phase Shift Keying (OQPSK). The modulated symbols are shaped using a root-raised cosine pulse-shaping filter. To simulate a time-varying wireless channel, channel parameters such as the Rayleigh fading delay spread are randomly initialized before each transmission. For the over-the-air portion of the signals set, the signals are transmitted and collected using USRP B210 SDRs in an indoor channel in the 900 MHz Industrial, Scientific, and Medical (ISM) band. Each captured signal in this data set consists of 1,024 samples, and the more than two million signals are stored in the HDF5 file format.

  Another DeepSig signal set was generated by the authors of [159], who investigate the feasibility of applying machine learning in the signal processing domain. The authors use the GNU Radio platform to generate a synthetic collection of signals with varying SNR and eleven analog and digital modulation types: 8-ary PSK, AM-DSB, AM-SSB, BPSK, Continuous-Phase Frequency-Shift Keying (CPFSK), Gaussian Frequency-Shift Keying (GFSK), PAM4, 16QAM, 64QAM, QPSK, and Wide-Band FM (WBFM). As continuous data sources, the authors use acoustic voice speech for the analog modulations and Project Gutenberg's ASCII text of the works of Shakespeare for the digital modulations. The data are organized as a four-dimensional float32 array of size $N \times 1 \times 2 \times L$, where $N$ is the number of signals, the second dimension is set to one, the third dimension holds the I and Q channels, and $L$ is the number of samples in each signal. The data set is stored in cPickle format to ease loading into machine learning platforms such as Keras, Theano, and TensorFlow.

- ORACLE Signal Set: This signal set was collected by the authors of [160] to evaluate their Optimized Radio clAssification through Convolutional neuraL nEtworks (ORACLE) approach. The authors of [160] evaluate the classification (identification) performance of the proposed approach within static and dynamic channels simulated using MATLAB® toolboxes. The ORACLE data set includes signals collected from sixteen USRP X310 emitters transmitting IEEE 802.11a-compliant Wi-Fi frames. A stationary USRP B210 SDR collects all of the IEEE 802.11a Wi-Fi frames at a sampling frequency of 5 MHz and a center frequency of 2.45 GHz. More than twenty million signals are collected for each emitter. Each signal is divided into sub-sequences of 128 samples, which are stored as float64 values in binary files.

- WiSig Signal Set: This signal set was generated by the authors of [161] and includes ten million IEEE 802.11 Wi-Fi signals collected from 174 COTS Wi-Fi emitters by forty-one USRP receivers over four captures taken on four different days. The authors of [161] aim to address the degradation in SEI performance caused by channel variations that arise when different receivers are used or when signals are collected over multiple days. The Wi-Fi signals sent by the 174 Wi-Fi nodes to the Access Point (AP) are captured by forty-one USRP receivers, including B210s, X310s, and N210s. The raw WiSig data set combines four single-day captures into a 1.4 terabyte data set. The collected raw signals are preprocessed to extract the first 256 IQ samples of each Wi-Fi frame, both with and without channel equalization; the authors provide the preprocessing steps and scripts along with the data set. For convenience, the authors of [161] subdivided the data set into four smaller subsets:

- ManyTx: contains fifty signals for each of 150 emitters, collected by eighteen receivers over a four-day period.

- ManyRx: contains 200 signals for each of ten emitters, collected by thirty-two receivers over a four-day period.

- ManySig: contains 1,000 signals for each of six emitters, collected by twelve receivers over a four-day period.

- SingleDay: contains 800 signals for each of twenty-eight emitters, collected by ten receivers in a single day.

Signals in the WiSig set are detected by auto-correlation over the Short Training Symbol (STS) portion of the Wi-Fi preamble and are re-sampled to a rate of 20 MHz.
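The POWDER recordings described above follow the SigMF convention of pairing a JSON metadata file with a raw binary data file. The Python sketch below shows one way such a pair might be loaded with NumPy. It assumes the usual `.sigmf-meta`/`.sigmf-data` file extensions and a `core:datatype` entry in the metadata's `global` object, and it handles only the `cf32_le` (complex float32) and `ci16_le` (complex int16) datatypes. The helper name `load_sigmf` and the example file name are hypothetical, and the datatype actually used in the POWDER files should be checked against their metadata.

```python
import json

import numpy as np


def load_sigmf(base_path):
    """Load a SigMF recording given its base path (without extension).

    Returns (iq, meta): iq is a complex64 NumPy array, meta is the parsed JSON.
    Only the cf32_le and ci16_le datatypes are handled in this sketch.
    """
    with open(base_path + ".sigmf-meta", "r") as f:
        meta = json.load(f)
    datatype = meta["global"]["core:datatype"]
    if datatype == "cf32_le":
        # Interleaved little-endian float32 I/Q maps directly onto complex64.
        iq = np.fromfile(base_path + ".sigmf-data", dtype="<c8")
    elif datatype == "ci16_le":
        # Interleaved little-endian int16 I/Q: pair up I and Q, then convert.
        raw = np.fromfile(base_path + ".sigmf-data", dtype="<i2").astype(np.float32)
        iq = (raw[0::2] + 1j * raw[1::2]).astype(np.complex64)
    else:
        raise ValueError(f"unsupported SigMF datatype: {datatype}")
    return iq, meta


# Example (hypothetical file base name):
# iq, meta = load_sigmf("powder_bs1_wifi")
```

Because the metadata file is plain JSON, fields such as the sample rate (`core:sample_rate`) can be read from the returned dictionary alongside the samples.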
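The root-raised cosine (RRC) pulse shaping used to build the DeepSig set in [63] can be illustrated with the textbook RRC impulse response. The following NumPy sketch computes unit-energy RRC taps, handling the two points where the general expression is indeterminate, and uses them to shape random QPSK symbols. The roll-off factor, filter span, samples per symbol, and helper names are illustrative assumptions, not the parameters or code used by the DeepSig authors.

```python
import numpy as np


def rrc_taps(beta, span, sps):
    """Root-raised cosine (RRC) filter taps with unit energy.

    beta: roll-off factor, 0 < beta <= 1
    span: filter length in symbols (span * sps should be even)
    sps:  samples per symbol
    Returns span * sps + 1 taps centered on t = 0.
    """
    half = span * sps // 2
    t = np.arange(-half, half + 1) / sps  # time in units of the symbol period
    h = np.empty(t.size)
    for i, ti in enumerate(t):
        if np.isclose(ti, 0.0):
            h[i] = 1.0 + beta * (4.0 / np.pi - 1.0)
        elif np.isclose(abs(ti), 1.0 / (4.0 * beta)):
            # Limit of the general expression where its denominator vanishes.
            h[i] = (beta / np.sqrt(2.0)) * (
                (1.0 + 2.0 / np.pi) * np.sin(np.pi / (4.0 * beta))
                + (1.0 - 2.0 / np.pi) * np.cos(np.pi / (4.0 * beta))
            )
        else:
            num = (np.sin(np.pi * ti * (1.0 - beta))
                   + 4.0 * beta * ti * np.cos(np.pi * ti * (1.0 + beta)))
            den = np.pi * ti * (1.0 - (4.0 * beta * ti) ** 2)
            h[i] = num / den
    return h / np.sqrt(np.sum(h ** 2))


def shape_symbols(symbols, taps, sps):
    """Upsample symbols by inserting zeros, then filter with the RRC taps."""
    up = np.zeros(len(symbols) * sps, dtype=complex)
    up[::sps] = symbols
    return np.convolve(up, taps)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    bits = rng.integers(0, 2, size=(64, 2))
    qpsk = ((2 * bits[:, 0] - 1) + 1j * (2 * bits[:, 1] - 1)) / np.sqrt(2)
    taps = rrc_taps(beta=0.35, span=8, sps=8)
    waveform = shape_symbols(qpsk, taps, sps=8)
    print(len(taps), waveform.shape)
```

Cascading the transmit RRC filter with an identical receive (matched) filter yields a raised-cosine response, which is why the combined response has near-zero inter-symbol interference at symbol-spaced instants.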
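The STS-based detection used for WiSig exploits the structure of the IEEE 802.11a/g preamble: at 20 MHz, the short training field repeats a 16-sample symbol ten times, so the received signal correlates strongly with a copy of itself delayed by 16 samples while the preamble is present. A minimal delay-and-correlate detector in that spirit is sketched below in Python. The correlation window, detection threshold, and helper names are illustrative assumptions rather than the WiSig authors' exact processing, and the 16-sample lag assumes a 20 MHz sample rate (it would need to be scaled at other rates).

```python
import numpy as np


def sts_autocorr_metric(x, lag=16, window=48):
    """Normalized delay-and-correlate metric M(n) = |P(n)|^2 / R(n)^2.

    P(n) = sum over k < window of x[n+k] * conj(x[n+k+lag])
    R(n) = sum over k < window of |x[n+k+lag]|^2
    M(n) approaches 1 while the signal repeats with period `lag`.
    """
    prod = x[:-lag] * np.conj(x[lag:])
    energy = np.abs(x[lag:]) ** 2
    ones = np.ones(window)
    # Convolving with a window of ones gives sliding sums over `window` samples.
    p = np.convolve(prod, ones, mode="valid")
    r = np.convolve(energy, ones, mode="valid")
    return np.abs(p) ** 2 / np.maximum(r, 1e-12) ** 2


def detect_frame(x, threshold=0.8, lag=16, window=48):
    """Return the first index where the metric exceeds `threshold`, or None."""
    hits = np.flatnonzero(sts_autocorr_metric(x, lag, window) > threshold)
    return int(hits[0]) if hits.size else None


def first_samples(x, start, count=256):
    """Keep `count` samples from the detected start (WiSig keeps the first 256)."""
    return x[start:start + count]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Random 16-sample stand-in for the STS, repeated ten times as in 802.11a.
    sts = rng.normal(size=16) + 1j * rng.normal(size=16)
    noise = 0.05 * (rng.normal(size=200) + 1j * rng.normal(size=200))
    payload = rng.normal(size=200) + 1j * rng.normal(size=200)
    x = np.concatenate([noise, np.tile(sts, 10), payload])
    start = detect_frame(x)
    print("detected start:", start, "true start: 200")
    print("samples kept:", first_samples(x, start).size)
```

Normalizing by the lagged energy makes the metric insensitive to the received power level, so a single fixed threshold can be used across receivers with different gains.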

7. Considerations for SEI in IoT Deployments

7.1. SEI on Resource-Constrained Devices

7.2. Receiver-Agnostic SEI

8. Supplemental Challenges

8.1. Quantization of Deep Learning Models

- The authors of [194] present CMix-NN, a flexible open-source mixed low-precision library for low-bit quantization of weights and activations into 8-, 4-, and 2-bit integers. The proposed quantization method targets microcontroller units with a few megabytes of memory and no hardware support for floating-point operations. The library can convert the convolutional kernels of CNNs to any bit precision in the set of 8, 4, and 2 bits. The authors of [194] used CMix-NN to compress, deploy, and evaluate multiple MobileNet-family models on an STM32H7 microcontroller, where it achieved up to an 8% improvement in accuracy compared to other state-of-the-art quantization and compression solutions for microcontroller units.

- The authors of [195] present effective quantization approaches for Recurrent Neural Network (RNN) implementations, including Long Short-Term Memory (LSTM), Gated Recurrent Units (GRU), and Convolutional Long Short-Term Memory (ConvLSTM). The proposed quantization methods are intended for FPGAs and embedded devices such as low-power mobile devices. The authors of [195] evaluated their quantization approach using the IMDb and Moving MNIST data sets.

- Access Point (AP): provides traditional AP functionality as well as SEI. The AP is powered by a Raspberry Pi 4 Model B.

- Authorized Users: Each authorized user consists of a TP-Link AC1300 USB Wi-Fi adapter and a computer running Ubuntu Linux 16.0.

- Adversary: The adversary is implemented using an Ettus USRP B210 SDR powered by an NVIDIA Jetson Nano Developer Kit. The adversary actively learns the SEI features of an authorized emitter and then modifies its own signals' SEI features to match those of the selected authorized emitter before transmitting, in an attempt to hinder or defeat the SEI process co-located at the AP.
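The low-bit integer quantization discussed above for CMix-NN [194] can be illustrated with a simple symmetric, per-tensor uniform quantizer. The Python sketch below is a generic textbook scheme, not the CMix-NN or [195] algorithm: it maps floating-point weights (here a random stand-in for a convolutional kernel) to signed 8-, 4-, or 2-bit integer levels using a single scale factor and reports the reconstruction error at each bit width. Storing the integers in `int8` containers is a simplification; packing several 4- or 2-bit values into one byte, which is where the memory savings come from, is omitted.

```python
import numpy as np


def quantize_symmetric(w, bits):
    """Symmetric per-tensor uniform quantization.

    Maps w to signed integers in [-qmax, qmax], qmax = 2**(bits-1) - 1, using one
    scale factor, so that w is approximately q * scale. With bits=2 this scheme
    uses only the three levels -1, 0, and 1.
    """
    if not 2 <= bits <= 8:
        raise ValueError("bits must be between 2 and 8 for int8 storage")
    qmax = 2 ** (bits - 1) - 1
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map integer levels back to approximate float weights."""
    return q.astype(np.float32) * scale


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random stand-in for a 16-filter, 3x3 convolutional kernel.
    w = rng.normal(scale=0.1, size=(16, 3, 3)).astype(np.float32)
    for bits in (8, 4, 2):
        q, scale = quantize_symmetric(w, bits)
        mse = float(np.mean((w - dequantize(q, scale)) ** 2))
        print(f"{bits}-bit: levels in [{q.min()}, {q.max()}], MSE = {mse:.2e}")
```

The error grows sharply at 2 bits because most small weights collapse to zero, which is one reason mixed-precision schemes keep more sensitive layers at higher precision.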

8.2. Unlocking the Secrets of SEI

8.3. Availability and Format of Large Signal Data Sets

8.4. Standardization of Language

- Classification: (a.k.a. Identification) The process through which emitters are assigned to different classes or categories. Classification is the result of a one-to-many comparison between the emitter's signal, or its representation, and each of the known classes or categories using a similarity measure (e.g., distance or probability).

- Authentication: (a.k.a. Verification or Validation) The process through which an emitter's identity, typically a digital one such as a MAC address, is authenticated or verified by performing a one-to-one comparison between the emitter's signal, or its representation, and the stored model or representation associated with the identity claimed by the emitter being authenticated.
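The distinction between the two terms can be made concrete with a toy feature-space example. In the Python sketch below, each known emitter is represented by a hypothetical stored template (a mean feature vector) keyed by a made-up MAC address, Euclidean distance serves as the similarity measure, and the acceptance threshold is an arbitrary illustrative value. Practical SEI systems typically use learned models, but the one-to-many versus one-to-one structure is the same.

```python
import numpy as np


def classify(features, templates):
    """Identification (one-to-many): return the ID whose template is closest."""
    ids = list(templates)
    distances = [np.linalg.norm(features - templates[i]) for i in ids]
    return ids[int(np.argmin(distances))]


def authenticate(features, claimed_id, templates, threshold):
    """Verification (one-to-one): compare only against the claimed ID's template."""
    if claimed_id not in templates:
        return False  # unknown identity: reject
    return bool(np.linalg.norm(features - templates[claimed_id]) <= threshold)


if __name__ == "__main__":
    # Hypothetical 2-D fingerprint templates keyed by made-up MAC addresses.
    templates = {
        "aa:bb:cc:00:00:01": np.array([0.0, 0.0]),
        "aa:bb:cc:00:00:02": np.array([3.0, 4.0]),
    }
    observed = np.array([2.9, 4.2])
    print(classify(observed, templates))
    print(authenticate(observed, "aa:bb:cc:00:00:02", templates, threshold=0.5))
    print(authenticate(observed, "aa:bb:cc:00:00:01", templates, threshold=0.5))
```

Authentication never consults the other templates: an emitter claiming the first identity is rejected even though it would be identified as the second, which mirrors the one-to-one versus one-to-many distinction in the definitions.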

8.5. IoT-imposed Temperature Considerations

9. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 5G | Fifth Generation |
| 5G-NR | Fifth Generation-New Radio |
| ABL | Adaptive Broad Learning |
| ADA | Adversarial Domain Adaptation |
| ADLM | Analog Devices Active Learning Module |
| ADS-B | Automatic Dependent Surveillance-Broadcast |
| AE | AutoEncoder |
| AI | Artificial Intelligence |
| AIS | Automatic Identification System |
| AM | Amplitude Modulation |
| AP | Access Point |
| APG | Average Path Gain |
| ASCII | American Standard Code for Information Interchange |
| ASK | Amplitude Shift-Keying |
| AWG | Arbitrary Waveform Generator |
| AWGN | Additive White Gaussian Noise |
| BER | Bit-Error-Rate |
| BLE | Bluetooth Low Energy |
| BLS | Broad Learning System |
| BP | Back Propagation |
| BPSK | Binary Phase Shift-Keying |
| BS | Base Station |
| CAE | Convolutional AutoEncoder |
| CFO | Carrier Frequency Offset |
| ChaRRNets | Channel Robust Representation Networks |
| CGAN | Conditional Generative Adversarial Network |
| CNN | Convolutional Neural Network |
| ConvLSTM | Convolutional Long Short-Term Memory |
| COTS | Commercial-Off-The-Shelf |
| CPFSK | Continuous-Phase Frequency-Shift Keying |
| CPU | Central Processing Unit |
| CSI | Channel State Information |
| CuSI | Cubic-Spline Interpolation |
| CvT | Convolutions to Vision Transformers |
| C&W | Carlini & Wagner |
| DAC | Digital-to-Analog Converter |
| DCFT | Differential Constellation Trace Figure |
| DCT | Discrete Cosine Transform |
| DDoS | Distributed Denial-of-Service |
| DFT | Discrete Fourier Transform |
| DI | Differential Interval |
| DNA | Distinct, Native, Attribute |
| DNN | Deep Neural Network |
| DoLoS | Difference of the Logarithm of the Spectrum |
| DSB | Double Side-Band |
| EMD | Empirical Mode Decomposition |
| FAR | False Accept Rate |
| FGSM | Fast Gradient Sign Method |
| FLOPS | Floating Point Operations Per Second |
| FM | Frequency Modulation |
| FPGA | Field Programmable Gate Array |
| FRR | False Reject Rate |
| GAN | Generative Adversarial Network |
| GAN-RXA | Generative Adversarial Network-based Receiver Agnostic |
| GFSK | Gaussian Frequency-Shift Keying |
| GLFormer | Gated and sliding Local self-attention transFormer |
| GMSK | Gaussian Minimum Shift-Keying |
| GPU | Graphics Processing Unit |
| GRU | Gated Recurrent Unit |
| GT | Gabor Transform |
| HART | Highway Addressable Remote Transducer |
| ICMP | Internet Control Message Protocol |
| IIR | Infinite Impulse Response |
| InfoGANs | Information maximized Generative Adversarial Networks |
| IoT | Internet of Things |
| IoV | Internet of Vehicles |
| IoBT | Internet of Battlefield Things |
| IoMT | Internet of Military Things |
| IIoT | Industrial Internet of Things |
| IQ | In-phase & Quadrature |
| IQI | IQ Imbalance |
| ISM | Industrial, Scientific, and Medical |
| ISR | Intentional Structure Removal |
| ITD | Intrinsic Time-scale Decomposition |
| JCAECNN | Joint CAE and CNN |
| kNN | k-Nearest Neighbors |
| LAI | Linear Approximation Interpolation |
| LMMD | Local Maximum Mean Discrepancy |
| LO | Local Oscillator |
| LoS | Line-of-Sight |
| LSTM | Long Short-Term Memory |
| LTE | Long-Term Evolution |
| LTS | Long Training Symbol |
| MAC | Media Access Control |
| MANET | Mobile Ad hoc NETwork |
| MDA | Multiple Discriminant Analysis |
| MDA/ML | Multiple Discriminant Analysis/Maximum Likelihood |
| MIMO | Multiple Input Multiple Output |
| MLP | Multi-Layer Perceptron |
| MMSE | Minimum Mean Squared Error |
| N-M | Nelder-Mead |
| NN | Neural Network |
| OFDM | Orthogonal Frequency-Division Multiplexing |
| OOK | On-Off Keying |
| OQPSK | Offset Quadrature Phase-Shift Keying |
| ORACLE | Optimized Radio clAssification through Convolutional neuraL nEtworks |
| ORANs | Open Radio Access Networks |
| OSTBC | Orthogonal Space-Time Block Code |
| PA | Power Amplifier |
| PAM | Pulse Amplitude Modulation |
| PARADIS | Passive RAdiometric Device Identification System |
| PBA | Per Batch Accuracy |
| PCA | Principal Component Analysis |
| PGD | Projected Gradient Descent |
| PHY | Physical |
| PLA | Physical Layer Authentication |
| PLL | Phase-Locked Loop |
| PMF | Probability Mass Function |
| POWDER | Platform for Open Wireless Data-driven Experimental Research |
| PSA | Per Slice Accuracy |
| PSK | Phase Shift-Keying |
| PTA | Per-Transmission Accuracy |
| QAM | Quadrature Amplitude Modulation |
| QoS | Quality of Service |
| QPSK | Quadrature Phase Shift-Keying |
| RECAP | Radiometric signature Exploitation Countering using Adversarial machine learning-based Protocol |
| RF | Radio Frequency |
| RFF | Radio Frequency Fingerprint |
| RFFE | Radio Frequency Fingerprint Embedding |
| RF-DNA | Radio Frequency-Distinct, Native, Attributes |
| RNN | Recurrent Neural Network |
| SC | Suppressed Carrier |
| SDR | Software-Defined Radio |
| SD-RXA | Statistical Distance-based Receiver Agnostic |
| SEI | Specific Emitter Identification |
| SepBN-DANN | Separated Batch Normalization-Deep Adversarial Neural Network |
| SFEBLN | Signal Feature Embedded Broad Learning Network |
| SIB | Square Integral Bispectrum |
| SigMF | Signal Metadata Format |
| SNR | Signal-to-Noise Ratio |
| STS | Short Training Symbol |
| STBC | Space-Time Block Code |
| STFT | Short-Time Fourier Transform |
| SSB | Single Side-Band |
| SVM | Support Vector Machines |
| SWaP-C | Size, Weight, Power, and Cost |
| SYNC | Synchronization |
| TeRFF | Temperature-aware Radio Frequency Fingerprinting |
| TF | Time-Frequency |
| USRP | Universal Software Radio Peripheral |
| VCO | Voltage Controlled Oscillator |
| VMD | Variational Mode Decomposition |
| WANET | Wireless Ad hoc NETwork |
| WBFM | Wide-Band Frequency Modulation |
| WC | With Carrier |
| Wi-Fi | Wireless-Fidelity |
| WLAN | Wireless Local Area Network |
| ZSL | Zero-Shot Learning |

References

- Department of Defense (DoD), United States. DoD Policy Recommendations for the Internet of Things (IoT). 2016. Available online: https://www.hsdl.org/?view&did=799676.

- Gartner Research. Gartner Says 6.4 Billion Connected“Things” Will Be in Use in 2016, Up 30 Percent From 2015, 2015.

- Juniper Research. `Internet of Things’ Connected Devices to Triple by 2021, Reaching Over 46 Billion Units, 2016.

- Statista. Internet of Things (IoT) connected devices installed base worldwide from 2015 to 2025 (in billions). 2019. Available online: https://www.statista.com/statistics/471264/iot-number-of-connected-devices-worldwide/.

- Rawlinson, K. Hp study reveals 70 percent of internet of things devices vulnerable to attack. [Online]. 2014. Available online: https://www8.hp.com/us/en/hp-news/press-release.html?id=1744676.

- Ray, I.; Kar, D.M.; Peterson, J.; Goeringer, S. Device identity and trust in IoT-sphere forsaking cryptography. 2019 IEEE 5th International Conference on Collaboration and Internet Computing (CIC). IEEE, 2019, pp. 204–213.

- Neshenko, N.; Bou-Harb, E.; Crichigno, J.; Kaddoum, G.; Ghani, N. Demystifying IoT security: an exhaustive survey on IoT vulnerabilities and a first empirical look on internet-scale IoT exploitations. IEEE Communications Surveys & Tutorials 2019, 21, 2702–2733. [Google Scholar] [CrossRef]

- Larson, S. A smart fish tank left a casino vulnerable to hackers, 2017.

- Wright, J.; Cache, J. Hacking exposed wireless: Wireless security secrets & solutions; McGraw-Hill Education Group, 2015.

- Stanislav, M.; Beardsley, T. Hacking iot: A case study on baby monitor exposures and vulnerabilities. Rapid7 Report 2015. [Google Scholar]

- Wright, J. KillerBee: Practical ZigBee Exploitation Framework or” Wireless Hacking and the Kinetic World”, 2018.

- Shipley, P.; Gooler, R. Insteon: False security and deceptive documentation. DEF CON 2015, 23. [Google Scholar]

- Shipley, P. Tools for Insteon RF. GitHub 2015. [Google Scholar]

- Krebs, B. Mirai IoT Botnet Co-Authors Plead Guilty-Krebs on Security. Krebs on Security. 2017. [Google Scholar]

- Simon, S.; Selyukh, A. Internet Of Things’ Hacking Attack Led To Widespread Outage Of Popular Websites. National Public Radio (NPR) 2016. [Google Scholar]

- Talbot, C.M.; Temple, M.A.; Carbino, T.J.; Betances, J.A. Detecting rogue attacks on commercial wireless Insteon home automation systems. Computers & security 2018, 74, 296–307. [Google Scholar] [CrossRef]

- Sa, K.; Lang, D.; Wang, C.; Bai, Y. Specific emitter identification techniques for the internet of things. IEEE Access 2019, 8, 1644–1652. [Google Scholar] [CrossRef]

- Reising, D.; Cancelleri, J.; Loveless, T.D.; Kandah, F.; Skjellum, A. Radio identity verification-based IoT security using RF-DNA fingerprints and SVM. IEEE Internet of Things Journal 2020, 8, 8356–8371. [Google Scholar] [CrossRef]

- Langley, L.E. Specific emitter identification (SEI) and classical parameter fusion technology. Proceedings of WESCON’93. IEEE, 1993, pp. 377–381.

- Terry, I. Networked Specific Emitter Identification in Fleet Battle Experiment Juliet. [Online]. 2003. Available online: https://apps.dtic.mil/sti/citations/ADA415972.

- Defence R&D Canada - Ottawa. Interferometric Intrapulse Radar Receiver for Specific Emitter Identification and Direction-Finding. Fact Sheet REW 224 2007.

- Dudczyk, J.; Kawalec, A. Specific emitter identification based on graphical representation of the distribution of radar signal parameters. Bulletin of the Polish Academy of Sciences. Technical Sciences 2015, 63, 391–396. [Google Scholar] [CrossRef]

- Matuszewski, J. Specific emitter identification. 2008 International Radar Symposium. IEEE, 2008, pp. 1–4.

- Toonstra, J.; Kinsner, W. Transient analysis and genetic algorithms for classification. IEEE WESCANEX 95. Communications, Power, and Computing. Conference Proceedings. IEEE, 1995, Vol. 2, pp. 432–437.

- Ureten, O.; Serinken, N. Detection of radio transmitter turn-on transients. Electronics letters 1999, 35. [Google Scholar] [CrossRef]

- Ureten, O.; Serinken, N. Bayesian detection of Wi-Fi transmitter RF fingerprints. Electronics letters 2005, 41, 373–374. [Google Scholar] [CrossRef]

- Ureten, O.; Serinken, N. Wireless security through RF fingerprinting. Canadian Journal of Electrical and Computer Engineering 2007, 32, 27–33. [Google Scholar] [CrossRef]

- Jana, S.; Kasera, S.K. On fast and accurate detection of unauthorized wireless access points using clock skews. Proceedings of the 14th ACM international conference on Mobile computing and networking, 2008, pp. 104–115.

- Brik, V.; Banerjee, S.; Gruteser, M.; Oh, S. Wireless device identification with radiometric signatures. Proceedings of the 14th ACM international conference on Mobile computing and networking, 2008, pp. 116–127.

- Suski II, W.C.; Temple, M.A.; Mendenhall, M.J.; Mills, R.F. Radio frequency fingerprinting commercial communication devices to enhance electronic security. International Journal of Electronic Security and Digital Forensics 2008, 1, 301–322. [Google Scholar] [CrossRef]

- Suski, W.C., II; Temple, M.A.; Mendenhall, M.J.; Mills, R.F. Using spectral fingerprints to improve wireless network security. IEEE GLOBECOM 2008-2008 IEEE Global Telecommunications Conference. IEEE, 2008, pp. 1–5.

- Danev, B.; Capkun, S. Transient-based identification of wireless sensor nodes. 2009 International Conference on Information Processing in Sensor Networks. IEEE, 2009, pp. 25–36.

- Klein, R.; Temple, M.A.; Mendenhall, M.J.; Reising, D.R. Sensitivity analysis of burst detection and RF fingerprinting classification performance. 2009 IEEE International Conference on Communications. IEEE, 2009, pp. 1–5.

- Klein, R.W.; Temple, M.A.; Mendenhall, M.J. Application of wavelet-based RF fingerprinting to enhance wireless network security. Journal of Communications and Networks 2009, 11, 544–555. [Google Scholar] [CrossRef]

- Liu, M.W.; Doherty, J.F. Specific emitter identification using nonlinear device estimation. 2008 IEEE Sarnoff Symposium. IEEE, 2008, pp. 1–5.

- Liu, M.W.; Doherty, J.F. Nonlinearity estimation for specific emitter identification in multipath channels. IEEE Transactions on Information Forensics and Security 2011, 6, 1076–1085. [Google Scholar] [CrossRef]

- Kennedy, I.O.; Kuzminskiy, A.M. RF fingerprint detection in a wireless multipath channel. 2010 7th International Symposium on Wireless Communication Systems. IEEE, 2010, pp. 820–823.

- Reising, D.R.; Temple, M.A.; Mendenhall, M.J. Improving intra-cellular security using air monitoring with RF fingerprints. 2010 IEEE Wireless Communication and Networking Conference. IEEE, 2010, pp. 1–6.

- Reising, D.R.; Temple, M.A.; Mendenhall, M.J. Improved wireless security for GMSK-based devices using RF fingerprinting. International Journal of Electronic Security and Digital Forensics 2010, 3, 41–59. [Google Scholar] [CrossRef]

- Reising, D., D. Prentice, and M. Temple. An FPGA Implementation of Real-Time RF-DNA Fingerprinting for RFINT Applications. IEEE Military Communications Conference (MILCOM), 2011.

- Reising, D.R.; Temple, M.A.; Oxley, M.E. Gabor-based RF-DNA fingerprinting for classifying 802.16 e WiMAX mobile subscribers. 2012 International Conference on Computing, Networking and Communications (ICNC). IEEE, 2012, pp. 7–13.

- Reising, D.R.; Temple, M.A.; Jackson, J.A. Authorized and rogue device discrimination using dimensionally reduced RF-DNA fingerprints. IEEE Transactions on Information Forensics and Security 2015, 10, 1180–1192. [Google Scholar] [CrossRef]

- Williams, M.D.; Temple, M.A.; Reising, D.R. Augmenting bit-level network security using physical layer RF-DNA fingerprinting. 2010 IEEE Global Telecommunications Conference GLOBECOM 2010. IEEE, 2010, pp. 1–6.

- Takahashi, D.; Xiao, Y.; Zhang, Y.; Chatzimisios, P.; Chen, H.H. IEEE 802.11 user fingerprinting and its applications for intrusion detection. Computers & Mathematics with Applications 2010, 60, 307–318. [Google Scholar] [CrossRef]

- Tekbaş, Ö.; Üreten, O.; Serinken, N. Improvement of transmitter identification system for low SNR transients. Electronics Letters 2004, 40, 182–183. [Google Scholar] [CrossRef]

- Ellis, K.; Serinken, N. Characteristics of radio transmitter fingerprints. Radio Science 2001, 36, 585–597. [Google Scholar] [CrossRef]

- Soliman, S.S.; Hsue, S.Z. Signal classification using statistical moments. IEEE Transactions on Communications 1992, 40, 908–916. [Google Scholar] [CrossRef]

- Azzouz, E.; Nandi, A.K. Automatic modulation recognition of communication signals; Springer Science & Business Media, 2013.

- Wheeler, C.G.; Reising, D.R. Assessment of the impact of CFO on RF-DNA fingerprint classification performance. 2017 International Conference on Computing, Networking and Communications (ICNC). IEEE, 2017, pp. 110–114.

- Pan, Y., S. Yang, H. Peng, T. Li, and W. Wang. Specific Emitter Identification Based on Deep Residual Networks. Access 2019. [CrossRef]

- Fadul, M.K.; Reising, D.R.; Loveless, T.D.; Ofoli, A.R. RF-DNA fingerprint classification of OFDM signals using a Rayleigh fading channel model. 2019 IEEE Wireless Communications and Networking Conference (WCNC). IEEE, 2019, pp. 1–7.

- Fadul, M., D. Reising, T. D. Loveless, and A. Ofoli. Preprint: Using RF-DNA Fingerprints To Classify OFDM Transmitters Under Rayleigh Fading Conditions. IEEE Trans on Info Forensics & Security 2020.

- Fadul, M., D. Reising, and M. Sartipi. Identification of OFDM-based Radio sunder Rayleigh Fading using RF-DNA and Deep Learning. Access 2020, Under Review.

- Dubendorfer, C.K.; Ramsey, B.W.; Temple, M.A. An RF-DNA verification process for ZigBee networks. MILCOM 2012-2012 IEEE Military Communications Conference. IEEE, 2012, pp. 1–6.

- Baldini, G., G. Steri. A Survey of Techniques for the Identification of Mobile Phones Using the Physical Fingerprints of the Built-In Components. IEEE Communications Surveys & Tutorials 2017, 19, 1761–1789. [Google Scholar] [CrossRef]

- Baldini, G., C. Gentile, R. Giuliani and G. Steri. Comparison of Techniques for Radiometric Identification based on Deep Convolutional Neural Networks. Electronics Letters 2019, 55. [Google Scholar] [CrossRef]

- Baldini, G. and R. Giuliani. An Assessment of the Impact of Wireless Interferences on IoT Emitter Identification using Time Frequency Representations and CNN. Global IoT Summit (GIoTS), 2019. [CrossRef]

- Restuccia, F.; D’Oro, S.; Al-Shawabka, A.; Belgiovine, M.; Angioloni, L.; Ioannidis, S.; Chowdhury, K.; Melodia, T. DeepRadioID: Real-time channel-resilient optimization of deep learning-based radio fingerprinting algorithms. Proceedings of the Twentieth ACM International Symposium on Mobile Ad Hoc Networking and Computing, 2019, pp. 51–60.

- Wong, L., W. Headley, S. Andrews, R. Gerdes and A. Michaels. Clustering Learned CNN Features from Raw I/Q Data for Emitter Identification. IEEE Military Communications Conf (MILCOM), 2018. [CrossRef]

- Jafari, H., O. Omotere, D. Adesina, H. Wu, and L. Qian. IoT Devices Fingerprinting Using Deep Learning. IEEE Military Communications Conf (MILCOM), 2018. [CrossRef]

- Bassey, J., X. Li, and L. Qian. Device Authentication Codes based on RF Fingerprinting using Deep Learning. arXiv, Electrical Engineering and Systems Science, Signal Processing 2020. [Google Scholar]

- Chen, X.; Hao, X. Feature Reduction Method for Cognition and Classification of IoT Devices Based on Artificial Intelligence. Access 2019, 7, 103291–103298. [Google Scholar] [CrossRef]

- O’Shea, T., T. Roy, and T. Clancy. Over-the-Air Deep Learning Based Radio Signal Classification. IEEE J. of Selected Topics in Signal Processing 2018, 12. [Google Scholar] [CrossRef]

- Youssef, K., L. Bouchard, K. Haigh, J. Silovsky, B. Thapa, and C. Valk. Machine Learning Approach to RF Transmitter Identification. IEEE J. of Radio Frequency Identification 2018, 2. [Google Scholar] [CrossRef]

- Wilson, A.J.; Reising, D.R.; Loveless, T.D. Integration of matched filtering within the RF-DNA fingerprinting process. 2019 IEEE Global Communications Conference (GLOBECOM). IEEE, 2019, pp. 1–6.

- Hall, J.; Barbeau, M.; Kranakis, E.; others. Detection of transient in radio frequency fingerprinting using signal phase. Wireless and optical communications 2003, 9, 13. [Google Scholar]

- Hall, M. Correlation-based Feature Selection for Discrete and Numeric Class Machine Learning. Proceedings of the 17th international conference on machine learning (ICML-2000) 2000, 359–366. [Google Scholar]

- Hall, J.; Barbeau, M.; Kranakis, E. Radio frequency fingerprinting for intrusion detection in wireless networks. IEEE Transactions on Defendable and Secure Computing 2005, 12, 1–35. [Google Scholar]

- Merchant, K., S. Revay, G. Stantchev and B. Nousain. Deep Learning for RF Device Fingerprinting in Cognitive Communication Networks. IEEE J. of Selected Topics in Sig Proc 2018, 12. [Google Scholar] [CrossRef]

- Polak, A. , and D. Goeckel. RF Fingerprinting of Users Who Actively Mask Their Identities with Artificial Distortion. 2011 Conference Record of the Forty Fifth Asilomar Conference on Signals, Systems and Computers (ASILOMAR), 2011.

- Polak, A. , and D. Goeckel. Wireless Device Identification Based on RF Oscillator Imperfections. IEEE Transactions on Information Forensics and Security 2015, 10. [Google Scholar] [CrossRef]

- Polak, A., S. Dolatshahi, and D. Goeckel. Identifying Wireless Users via Transmitter Imperfections. IEEE Journal on Selected Areas in Communications 2011, 29. [Google Scholar] [CrossRef]

- Polak, A. , and D. Goeckel. Identification of Wireless Devices of Users Who Actively Fake Their RF Fingerprints With Artificial Data Distortion. IEEE Transactions on Wireless Communications 2015, 14. [Google Scholar] [CrossRef]

- Dolatshahi, S., A. Polak, and D. Goeckel. Identification of wireless users via power amplifier imperfections. 2010 Conference Record of the Forty Fourth Asilomar Conference on Signals, Systems and Computers, 2010.

- Yu, J., A. Hu, G. Li, and L. Peng. A Robust RF Fingerprinting Approach Using Multisampling Convolutional Neural Network. IEEE Internet of Things Journal 2019, 6, 6786–6799. [Google Scholar] [CrossRef]

- Guyue, L., Y. Jiabao, Y. Xing, and A. Hu. Location-Invariant Physical Layer Identification Approach for WiFi Devices. Access. [CrossRef]

- Liang, J., Z. Huang, and Z. Li. Method of Empirical Mode Decomposition in Specific Emitter Identification. Wireless Personal Communications 2017, 96, 1–15. [Google Scholar] [CrossRef]

- Smith, L., N. Smith, J. Hopkins, D. Rayborn, J. Ball, B. Tang, and M. Young. Classifying WiFi Physical Fingerprints using Complex Deep Learning. Automatic Target Recognition XXX. International Society for Optics and Photonics, 2020, Vol. 11394, p. 113940J.

- Kandah, F., J. Cancelleri, D. Reising, A. Altarawneh, and A. Skjellum. A Hardware-Software Co-design Approach to Identity, Trust, and Resilience for IoT/CPS at Scale. International Conference on Internet of Things (iThings), 2019.

- Harmer, P.K.; Temple, M.A.; Buckner, M.A.; Farquhar, E. 4G Security Using Physical Layer RF-DNA with DE-Optimized LFS Classification. J. Commun. 2011, 6, 671–681. [Google Scholar] [CrossRef]

- Harmer, P.K.; Reising, D.R.; Temple, M.A. Classifier selection for physical layer security augmentation in cognitive radio networks. 2013 IEEE International Conference on Communications (ICC). IEEE, 2013, pp. 2846–2851.

- Wang, X.; Hao, P.; Hanzo, L. Physical-layer Authentication for Wireless Security Enhancement: Current Challenges and Future Developments. IEEE Communications Magazine 2016, 54, 152–158.

- Xu, Q.; Zheng, R.; Saad, W.; Han, Z. Device fingerprinting in wireless networks: Challenges and opportunities. IEEE Communications Surveys & Tutorials 2015, 18, 94–104.

- Gerdes, R.; Daniels, T.; Mina, M.; Russell, S. Device Identification via Analog Signal Fingerprinting: A Matched Filter Approach. NDSS Symposium, 2006.

- Joo, K.; Choi, W.; Lee, D.H. Hold the door! fingerprinting your car key to prevent keyless entry car theft. arXiv preprint arXiv:2003.13251 2020.

- Riyaz, S.; Sankhe, K.; Ioannidis, S.; Chowdhury, K. Deep Learning Convolutional Neural Networks for Radio Identification. IEEE Communications Magazine 2018, 56.

- O’Shea, T.; Hoydis, J. An Introduction to Deep Learning for the Physical Layer. IEEE Transactions on Cognitive Communications and Networking 2017, 3.

- Mendis, G.; Wei, J.; Madanayake, A. Deep Learning Based Radio-Signal Identification With Hardware Design. IEEE Transactions on Aerospace and Electronic Systems 2019, 55.

- Baldini, G.; Steri, G. A survey of techniques for the identification of mobile phones using the physical fingerprints of the built-in components. IEEE Communications Surveys & Tutorials 2017, 19, 1761–1789.

- Jain, A.K.; Hong, L.; Pankanti, S.; Bolle, R. An identity-authentication system using fingerprints. Proceedings of the IEEE 1997, 85, 1365–1388.

- Bai, L.; Zhu, L.; Liu, J.; Choi, J.; Zhang, W. Physical layer authentication in wireless communication networks: A survey. Journal of Communications and Information Networks 2020, 5, 237–264.

- Clarke, R.H. A statistical theory of mobile-radio reception. Bell System Technical Journal 1968, 47, 957–1000.

- Danev, B.; Luecken, H.; Capkun, S.; El Defrawy, K. Attacks on physical-layer identification. Proceedings of the third ACM conference on Wireless network security, 2010, pp. 89–98.

- Soltanieh, N.; Norouzi, Y.; Yang, Y.; Karmakar, N.C. A Review of Radio Frequency Fingerprinting Techniques. IEEE Journal of Radio Frequency Identification 2020, 4, 222–233.

- Xie, N.; Li, Z.; Tan, H. A survey of physical-layer authentication in wireless communications. IEEE Communications Surveys & Tutorials 2020, 23, 282–310.

- Liu, Y.; Wang, J.; Li, J.; Niu, S.; Song, H. Machine learning for the detection and identification of Internet of Things devices: A survey. IEEE Internet of Things Journal 2021, 9, 298–320.

- Wang, N.; Wang, P.; Alipour-Fanid, A.; Jiao, L.; Zeng, K. Physical-layer security of 5G wireless networks for IoT: Challenges and opportunities. IEEE Internet of Things Journal 2019, 6, 8169–8181.

- Jagannath, A.; Jagannath, J.; Kumar, P.S.P.V. A comprehensive survey on radio frequency (RF) fingerprinting: Traditional approaches, deep learning, and open challenges. arXiv preprint arXiv:2201.00680 2022.

- Parmaksiz, H.; Karakuzu, C. A Review of Recent Developments on Secure Authentication Using RF Fingerprints Techniques. Sakarya University Journal of Computer and Information Sciences 2022, 5, 278–303.

- Brandes, T.S.; Kuzdeba, S.; McClelland, J.; Bomberger, N.; Radlbeck, A. RF waveform synthesis guided by deep reinforcement learning. 2020 IEEE International Workshop on Information Forensics and Security (WIFS). IEEE, 2020, pp. 1–6.

- Wang, W.; Gan, L. Radio Frequency Fingerprinting Improved by Statistical Noise Reduction. IEEE Transactions on Cognitive Communications and Networking 2022.

- Han, H.; Cui, L.; Li, W.; Huang, L.; Cai, Y.; Cai, J.; Zhang, Y. Radio Frequency Fingerprint Based Wireless Transmitter Identification Against Malicious Attacker: An Adversarial Learning Approach. 2020 International Conference on Wireless Communications and Signal Processing (WCSP). IEEE, 2020, pp. 310–315.

- Yuan, Y.; Huang, Z.; Wu, H.; Wang, X. Specific emitter identification based on Hilbert–Huang transform-based time–frequency–energy distribution features. IET Communications 2014, 8, 2404–2412.

- Guo, S.; Akhtar, S.; Mella, A. A method for radar model identification using time-domain transient signals. IEEE Transactions on Aerospace and Electronic Systems 2021, 57, 3132–3149.

- Chen, N.; Hu, A.; Fu, H. LoRa radio frequency fingerprint identification based on frequency offset characteristics and optimized LoRaWAN access technology. 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC). IEEE, 2021, Vol. 5, pp. 1801–1807.

- Gu, X.; Wu, W.; Guo, N.; He, W.; Song, A.; Yang, M.; Ling, Z.; Luo, J. TeRFF: Temperature-aware Radio Frequency Fingerprinting for Smartphones. 2022 19th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON). IEEE, 2022, pp. 127–135.

- Chen, Y.; Chen, X.; Lei, Y. Emitter Identification of Digital Modulation Transmitter Based on Nonlinearity and Modulation Distortion of Power Amplifier. Sensors 2021, 21, 4362.

- Sun, L.; Wang, X.; Huang, Z. Unintentional modulation evaluation in time domain and frequency domain. Chinese Journal of Aeronautics 2022, 35, 376–389.

- Zhang, J.; Woods, R.; Sandell, M.; Valkama, M.; Marshall, A.; Cavallaro, J. Radio Frequency Fingerprint Identification for Narrowband Systems, Modelling and Classification. IEEE Transactions on Information Forensics and Security 2021, 16, 3974–3987.

- Brik, V.; Banerjee, S.; Gruteser, M.; Oh, S. Wireless device identification with radiometric signatures. Proceedings of the 14th ACM international conference on Mobile computing and networking, 2008, pp. 116–127.

- Hou, W.; Wang, X.; Chouinard, J.Y.; Refaey, A. Physical layer authentication for mobile systems with time-varying carrier frequency offsets. IEEE Transactions on Communications 2014, 62, 1658–1667.

- Robyns, P.; Marin, E.; Lamotte, W.; Quax, P.; Singelée, D.; Preneel, B. Physical-layer fingerprinting of LoRa devices using supervised and zero-shot learning. Proceedings of the 10th ACM Conference on Security and Privacy in Wireless and Mobile Networks, 2017, pp. 58–63.

- Hao, P.; Wang, X.; Behnad, A. Relay authentication by exploiting I/Q imbalance in amplify-and-forward system. 2014 IEEE Global Communications Conference. IEEE, 2014, pp. 613–618.

- Xing, G.; Shen, M.; Liu, H. Frequency offset and I/Q imbalance compensation for direct-conversion receivers. IEEE Transactions on Wireless Communications 2005, 4, 673–680.

- Jian, T.; Rendon, B.; Ojuba, E.; Soltani, N.; Wang, Z.; Sankhe, K.; Gritsenko, A.; Dy, J.; Chowdhury, K.; Ioannidis, S. Deep Learning for RF Fingerprinting: A Massive Experimental Study. IEEE Internet of Things Magazine 2020, 3, 50–57.

- Robinson, J.; Kuzdeba, S.; Stankowicz, J.; Carmack, J. Dilated Causal Convolutional Model For RF Fingerprinting. 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), 2020, pp. 0157–0162.

- Morin, C.; Cardoso, L.S.; Hoydis, J.; Gorce, J.M.; Vial, T. Transmitter classification with supervised deep learning. Cognitive Radio-Oriented Wireless Networks: 14th EAI International Conference, CrownCom 2019, Poznan, Poland, June 11–12, 2019, Proceedings 14. Springer, 2019, pp. 73–86.

- Behura, S.; Kedia, S.; Hiremath, S.M.; Patra, S.K. WiST ID—Deep Learning-Based Large Scale Wireless Standard Technology Identification. IEEE Transactions on Cognitive Communications and Networking 2020, 6, 1365–1377.

- Wang, J.; Zhang, B.; Zhang, J.; Yang, N.; Wei, G.; Guo, D. Specific Emitter Identification Based on Deep Adversarial Domain Adaptation. 2021 4th International Conference on Information Communication and Signal Processing (ICICSP), 2021, pp. 104–109.

- Gong, J.; Xu, X.; Qin, Y.; Dong, W. A Generative Adversarial Network Based Framework for Specific Emitter Characterization and Identification. 2019 11th International Conference on Wireless Communications and Signal Processing (WCSP), 2019, pp. 1–6.

- Gong, J.; Xu, X.; Lei, Y. Unsupervised Specific Emitter Identification Method Using Radio-Frequency Fingerprint Embedded InfoGAN. IEEE Transactions on Information Forensics and Security 2020, 15, 2898–2913.

- Basha, N.; Hamdaoui, B.; Sivanesan, K.; Guizani, M. Channel-Resilient Deep-Learning-Driven Device Fingerprinting Through Multiple Data Streams. IEEE Open Journal of the Communications Society 2023.

- Xing, Y.; Hu, A.; Zhang, J.; Peng, L.; Wang, X. Design of A Channel Robust Radio Frequency Fingerprint Identification Scheme. IEEE Internet of Things Journal 2022.

- Yuan, H.; Yan, Y.; Bao, Z.; Xu, C.; Gu, J.; Wang, J. Multipath Canceled RF Fingerprinting for Wireless OFDM Devices Based on Hammerstein System Parameter Separation. IEEE Canadian Journal of Electrical and Computer Engineering 2022, 45, 401–408.

- Yang, L.; Camtepe, S.; Gao, Y.; Liu, V.; Jayalath, D. On the Use of Power Amplifier Nonlinearity Quotient to Improve Radio Frequency Fingerprint Identification in Time-Varying Channels. arXiv preprint arXiv:2302.13724 2023.

- Fadul, M.; Reising, D.; Loveless, T.D.; Ofoli, A. Nelder-mead simplex channel estimation for the RF-DNA fingerprinting of OFDM transmitters under Rayleigh fading conditions. IEEE Transactions on Information Forensics and Security 2021, 16, 2381–2396.

- Reising, D.R.; Weerasena, L.P.; Loveless, T.D.; Sartipi, M.; et al. Improving RF-DNA Fingerprinting Performance in an Indoor Multipath Environment Using Semi-Supervised Learning. arXiv preprint arXiv:2304.00648 2023.

- Brown, C.N.; Mattei, E.; Draganov, A. ChaRRNets: Channel robust representation networks for RF fingerprinting. arXiv preprint arXiv:2105.03568 2021.

- Tyler, J.; Fadul, M.; Reising, D.; Kandah, F. An Analysis of Signal Energy Impacts and Threats to Deep Learning Based SEI. IEEE International Conference on Communications (ICC) (accepted), 2022.

- Rehman, S.; Sowerby, K.; Alam, S.; Ardekani, I. Radio frequency fingerprinting and its challenges. 2014 IEEE conference on communications and network security. IEEE, 2014, pp. 496–497.

- Wang, T.; Fang, K.; Wei, W.; Tian, J.; Pan, Y.; Li, J. Microcontroller Unit Chip Temperature Fingerprint Informed Machine Learning for IIoT Intrusion Detection. IEEE Transactions on Industrial Informatics 2022, 19, 2219–2227.

- Yilmaz, Ö.; Yazici, M. The Effect of Ambient Temperature On Device Classification Based On Radio Frequency Fingerprint Recognition. Sakarya University Journal of Computer and Information Sciences 2022, 5, 233–245.

- Frerking, M. Crystal oscillator design and temperature compensation; Springer Science & Business Media, 2012.

- Peggs, C.S.; Jackson, T.S.; Tittlebaugh, A.N.; Olp, T.G.; Tyler, J.H.; Reising, D.R.; Loveless, T.D. Preamble-based RF-DNA Fingerprinting Under Varying Temperatures. 2023 12th Mediterranean Conference on Embedded Computing (MECO). IEEE, 2023, pp. 1–8.

- Shi, Y.; Davaslioglu, K.; Sagduyu, Y.E. Generative adversarial network for wireless signal spoofing. Proceedings of the ACM Workshop on Wireless Security and Machine Learning, 2019, pp. 55–60.

- Shi, Y.; Davaslioglu, K.; Sagduyu, Y.E. Generative adversarial network in the air: Deep adversarial learning for wireless signal spoofing. IEEE Transactions on Cognitive Communications and Networking 2020, 7, 294–303.

- Restuccia, F.; D’Oro, S.; Al-Shawabka, A.; Rendon, B.C.; Chowdhury, K.; Ioannidis, S.; Melodia, T. Hacking the waveform: Generalized wireless adversarial deep learning. arXiv preprint arXiv:2005.02270 2020.

- Lalouani, W.; Younis, M.; Baroudi, U. Countering radiometric signature exploitation using adversarial machine learning based protocol switching. Computer Communications 2021, 174, 109–121.

- Abanto-Leon, L.F.; Bäuml, A.; Sim, G.H.; Hollick, M.; Asadi, A. Stay connected, leave no trace: Enhancing security and privacy in wifi via obfuscating radiometric fingerprints. Proceedings of the ACM on Measurement and Analysis of Computing Systems 2020, 4, 1–31.

- Karunaratne, S.; Krijestorac, E.; Cabric, D. Penetrating RF fingerprinting-based authentication with a generative adversarial attack. ICC 2021-IEEE International Conference on Communications. IEEE, 2021, pp. 1–6.

- Nikoofard, A.; Givehchian, H.; Bhaskar, N.; Schulman, A.; Bharadia, D.; Mercier, P.P. Protecting Bluetooth User Privacy through Obfuscation of Carrier Frequency Offset. IEEE Transactions on Circuits and Systems II: Express Briefs 2022.

- Sun, L.; Ke, D.; Wang, X.; Huang, Z.; Huang, K. Robustness of Deep Learning-Based Specific Emitter Identification under Adversarial Attacks. Remote Sensing 2022, 14, 4996.

- Ke, D.; Huang, Z.; Wang, X.; Sun, L. Application of adversarial examples in communication modulation classification. 2019 International Conference on Data Mining Workshops (ICDMW). IEEE, 2019, pp. 877–882.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572 2014.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083 2017.

- Carlini, N.; Wagner, D. Towards evaluating the robustness of neural networks. 2017 IEEE symposium on security and privacy (sp). IEEE, 2017, pp. 39–57.

- Han, H.; Cui, L.; Li, W.; Huang, L.; Cai, Y.; Cai, J.; Zhang, Y. Radio Frequency Fingerprint Based Wireless Transmitter Identification Against Malicious Attacker: An Adversarial Learning Approach. 2020 International Conference on Wireless Communications and Signal Processing (WCSP). IEEE, 2020, pp. 310–315.

- Defense Advanced Research Projects Agency (DARPA). Spectrum Collaboration Challenge: Using AI to Unlock the True Potential of the RF Spectrum. https://archive.darpa.mil/sc2/, 2017.

- Defense Advanced Research Projects Agency (DARPA). Radio Frequency Machine Learning Systems. https://www.darpa.mil/program/radio-frequency-machine-learning-systems, 2019.

- Yang, Y.; Hu, A.; Yu, J.; Li, G.; Zhang, Z. Radio frequency fingerprint identification based on stream differential constellation trace figures. Physical Communication 2021, 49, 101458.

- Tyler, J.H.; Fadul, M.K.; Reising, D.R.; Liang, Y. Assessing the Presence of Intentional Waveform Structure In Preamble-based SEI. GLOBECOM 2022 - 2022 IEEE Global Communications Conference, 2022, pp. 4129–4135.

- Al-Shawabka, A.; Restuccia, F.; D’Oro, S.; Jian, T.; Costa Rendon, B.; Soltani, N.; Dy, J.; Ioannidis, S.; Chowdhury, K.; Melodia, T. Exposing the Fingerprint: Dissecting the Impact of the Wireless Channel on Radio Fingerprinting. IEEE INFOCOM 2020 - IEEE Conference on Computer Communications, 2020, pp. 646–655.

- Hamdaoui, B.; Basha, N.; Sivanesan, K. Deep Learning-Enabled Zero-Touch Device Identification: Mitigating the Impact of Channel Variability Through MIMO Diversity. IEEE Communications Magazine 2023, 61, 80–85.

- Downey, J.; Hilburn, B.; O’Shea, T.; West, N. In the Future, AIs—Not Humans—Will Design Our Wireless Signals. IEEE Spectrum Magazine 2020.

- Tyler, J.H.; Reising, D.R.; Kandah, F.I.; Kaplanoglu, E.; Fadul, M.M.K. Physical Layer-Based IoT Security: An Investigation Into Improving Preamble-Based SEI Performance When Using Multiple Waveform Collections. IEEE Access 2022, 10, 133601–133616.

- Li, H.; Gupta, K.; Wang, C.; Ghose, N.; Wang, B. RadioNet: Robust Deep-Learning Based Radio Fingerprinting. 2022 IEEE Conference on Communications and Network Security (CNS), 2022, pp. 190–198.

- Smith, L.; Smith, N.; Rayborn, D.; Tang, B.; Ball, J.E.; Young, M. Identifying unlabeled WiFi devices with zero-shot learning. Automatic Target Recognition XXX. SPIE, 2020, Vol. 11394, pp. 129–137.

- Reus-Muns, G.; Jaisinghani, D.; Sankhe, K.; Chowdhury, K.R. Trust in 5G open RANs through machine learning: RF fingerprinting on the POWDER PAWR platform. GLOBECOM 2020-2020 IEEE Global Communications Conference. IEEE, 2020, pp. 1–6.

- O’Shea, T.J.; West, N. Radio machine learning dataset generation with GNU Radio. Proceedings of the GNU Radio Conference, 2016, Vol. 1.

- Sankhe, K.; Belgiovine, M.; Zhou, F.; Riyaz, S.; Ioannidis, S.; Chowdhury, K. ORACLE: Optimized Radio clAssification through Convolutional neuraL nEtworks. IEEE INFOCOM 2019 - IEEE Conference on Computer Communications, 2019, pp. 370–378.

- Hanna, S.; Karunaratne, S.; Cabric, D. WiSig: A large-scale WiFi signal dataset for receiver and channel agnostic RF fingerprinting. IEEE Access 2022, 10, 22808–22818.

- Jian, T.; Gong, Y.; Zhan, Z.; Shi, R.; Soltani, N.; Wang, Z.; Dy, J.; Chowdhury, K.; Wang, Y.; Ioannidis, S. Radio frequency fingerprinting on the edge. IEEE Transactions on Mobile Computing 2021, 21, 4078–4093.

- Zhang, T.; Ye, S.; Zhang, K.; Tang, J.; Wen, W.; Fardad, M.; Wang, Y. A systematic DNN weight pruning framework using alternating direction method of multipliers. Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 184–199.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- Lin, D.; Wu, W. RF Fingerprint-Identification-Based Reliable Resource Allocation in an Internet of Battle Things. IEEE Internet of Things Journal 2022, 9, 21111–21120.

- Xu, Z.; Han, G.; Liu, L.; Zhu, H.; Peng, J. A Lightweight Specific Emitter Identification Model for IIoT Devices Based on Adaptive Broad Learning. IEEE Transactions on Industrial Informatics 2022, 1–10.

- Tu, Y.; Lin, Y.; Zha, H.; Zhang, J.; Wang, Y.; Gui, G.; Mao, S. Large-scale real-world radio signal recognition with deep learning. Chinese Journal of Aeronautics 2022, 35, 35–48.

- Zhang, S.; Zhang, Y.; Sun, J.; Gui, G.; Lin, Y.; Gacanin, H.; Adachi, F. A real-world radio frequency signal dataset based on LTE system and variable channels. arXiv preprint arXiv:2205.12577 2022.

- Zhang, Y.; Peng, Y.; Sun, J.; Gui, G.; Lin, Y.; Mao, S. GPU-Free Specific Emitter Identification Using Signal Feature Embedded Broad Learning. IEEE Internet of Things Journal 2023.

- Zhang, Y.; Peng, Y.; Adebisi, B.; Gui, G.; Gacanin, H.; Sari, H. Specific Emitter Identification Based on Radio Frequency Fingerprint Using Multi-Scale Network. 2022 IEEE 96th Vehicular Technology Conference (VTC2022-Fall). IEEE, 2022, pp. 1–5.

- Kuzdeba, S.; Robinson, J.; Carmack, J.; Couto, D. Systems View to Designing RF Fingerprinting for Real-World Operations. Proceedings of the 2022 ACM Workshop on Wireless Security and Machine Learning, 2022, pp. 33–38.

- Taha, M.A.; Fadul, M.K.; Tyler, J.H.; Reising, D.R.; Loveless, T.D. An assessment of entropy-based data reduction for SEI within IoT applications. MILCOM 2022-2022 IEEE Military Communications Conference (MILCOM). IEEE, 2022, pp. 385–392.

- Fadul, M.; Reising, D.; Weerasena, L. An Investigation into the Impacts of Deep Learning-based Re-sampling on Specific Emitter Identification Performance. Authorea Preprints 2022.

- Krug, S.; O’Nils, M. IoT Communication Introduced Limitations for High Sampling Rate Applications. GI/ITG KuVS Fachgespräch Sensornetze–FGSN 2018, 2018.

- Long, J.D.; Temple, M.A.; Rondeau, C.M. Discriminating WirelessHART Communication Devices Using Sub-Nyquist Stimulated Responses. Electronics 2023, 12, 1973.

- Deng, P.; Hong, S.; Qi, J.; Wang, L.; Sun, H. A Lightweight Transformer-Based Approach of Specific Emitter Identification for the Automatic Identification System. IEEE Transactions on Information Forensics and Security 2023.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Advances in Neural Information Processing Systems 2017, 30.

- Shen, G.; Zhang, J.; Marshall, A.; Woods, R.; Cavallaro, J.; Chen, L. Towards Receiver-Agnostic and Collaborative Radio Frequency Fingerprint Identification. arXiv preprint arXiv:2207.02999 2022.

- Qian, Y.; Qi, J.; Kuai, X.; Han, G.; Sun, H.; Hong, S. Specific emitter identification based on multi-level sparse representation in automatic identification system. IEEE Transactions on Information Forensics and Security 2021, 16, 2872–2884.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 10012–10022.

- Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. CvT: Introducing convolutions to vision transformers. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 22–31.

- Nguyen, D.D.N.; Sood, K.; Xiang, Y.; Gao, L.; Chi, L.; Yu, S. Towards IoT Node Authentication Mechanism in Next Generation Networks. IEEE Internet of Things Journal 2023.

- Merchant, K.; Nousain, B. Toward receiver-agnostic RF fingerprint verification. 2019 IEEE Globecom Workshops (GC Wkshps). IEEE, 2019, pp. 1–6.

- He, B.; Wang, F. Cooperative specific emitter identification via multiple distorted receivers. IEEE Transactions on Information Forensics and Security 2020, 15, 3791–3806.

- Huang, K.; Jiang, B.; Yang, J.; Wang, J.; Hu, P.; Li, Y.; Shao, K.; Zhao, D. Cross-receiver specific emitter identification based on a deep adversarial neural network with separated batch normalization. Third International Conference on Computer Science and Communication Technology (ICCSCT 2022). SPIE, 2022, Vol. 12506, pp. 1659–1663.

- Zhao, T.; Sarkar, S.; Krijestorac, E.; Cabric, D. GAN-RXA: A Practical Scalable Solution to Receiver-Agnostic Transmitter Fingerprinting. arXiv preprint arXiv:2303.14312 2023.

- Zha, X.; Li, T.; Qiu, Z.; Li, F. Cross-Receiver Radio Frequency Fingerprint Identification Based on Contrastive Learning and Subdomain Adaptation. IEEE Signal Processing Letters 2023, 30, 70–74.

- Tatarek, T.; Kronenberger, J.; Handmann, U. Functionality, Advantages and Limits of the Tesla Autopilot. 2017.

- Soori, M.; Arezoo, B.; Dastres, R. Artificial Intelligence, Machine Learning and Deep Learning in Advanced Robotics, A Review. Cognitive Robotics 2023.

- Shen, G.; Zhang, J.; Marshall, A.; Cavallaro, J.R. Towards scalable and channel-robust radio frequency fingerprint identification for LoRa. IEEE Transactions on Information Forensics and Security 2022, 17, 774–787.

- Chen, X.; He, K. Exploring simple siamese representation learning. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2021, pp. 15750–15758.

- Zhu, Y.; Zhuang, F.; Wang, J.; Ke, G.; Chen, J.; Bian, J.; Xiong, H.; He, Q. Deep subdomain adaptation network for image classification. IEEE Transactions on Neural Networks and Learning Systems 2020, 32, 1713–1722.

- Ma, H.; Qiu, H.; Gao, Y.; Zhang, Z.; Abuadbba, A.; Fu, A.; Al-Sarawi, S.; Abbott, D. Quantization backdoors to deep learning models. arXiv preprint arXiv:2108.09187 2021.

- Capotondi, A.; Rusci, M.; Fariselli, M.; Benini, L. CMix-NN: Mixed low-precision CNN library for memory-constrained edge devices. IEEE Transactions on Circuits and Systems II: Express Briefs 2020, 67, 871–875.

- Alom, M.Z.; Moody, A.T.; Maruyama, N.; Van Essen, B.C.; Taha, T.M. Effective quantization approaches for recurrent neural networks. 2018 international joint conference on neural networks (IJCNN). IEEE, 2018, pp. 1–8.

- Tyler, J.; Fadul, M.; Hilling, M.; Reising, D.; Loveless, D. Assessing Adversarial Replay and Deep Learning-Driven Attacks on Specific Emitter Identification-based Security Approaches. IEEE Open Journal of the Communications Society (Accepted) 2023.

- Kaushal, N.; Kaushal, P. Human identification and fingerprints: a review. J. Biomet. Biostat. 2011, 2, 2.

- Henke, C. A Comprehensive Guide to IoT Protocols. emnify 2022.

| Survey | This paper | [89] | [97] | [91] | [94] | [95] | [98] | [99] | [96] |
|---|---|---|---|---|---|---|---|---|---|
| Year | 2023 | 2017 | 2019 | 2020 | 2020 | 2021 | 2022 | 2022 | 2022 |
| IoT Motivated | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Handcrafted SEI | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| DL-based SEI | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Operating Conditions | ✓ | ✓ | | | | | | | |
| Threats to SEI | ✓ | ✓ | | | | | | | |
| SEI at Scale | ✓ | | | | | | | | |
| IoT Limitations | ✓ | ✓ | | | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).