Submitted: 19 July 2023

Posted: 21 July 2023


Abstract

Keywords:

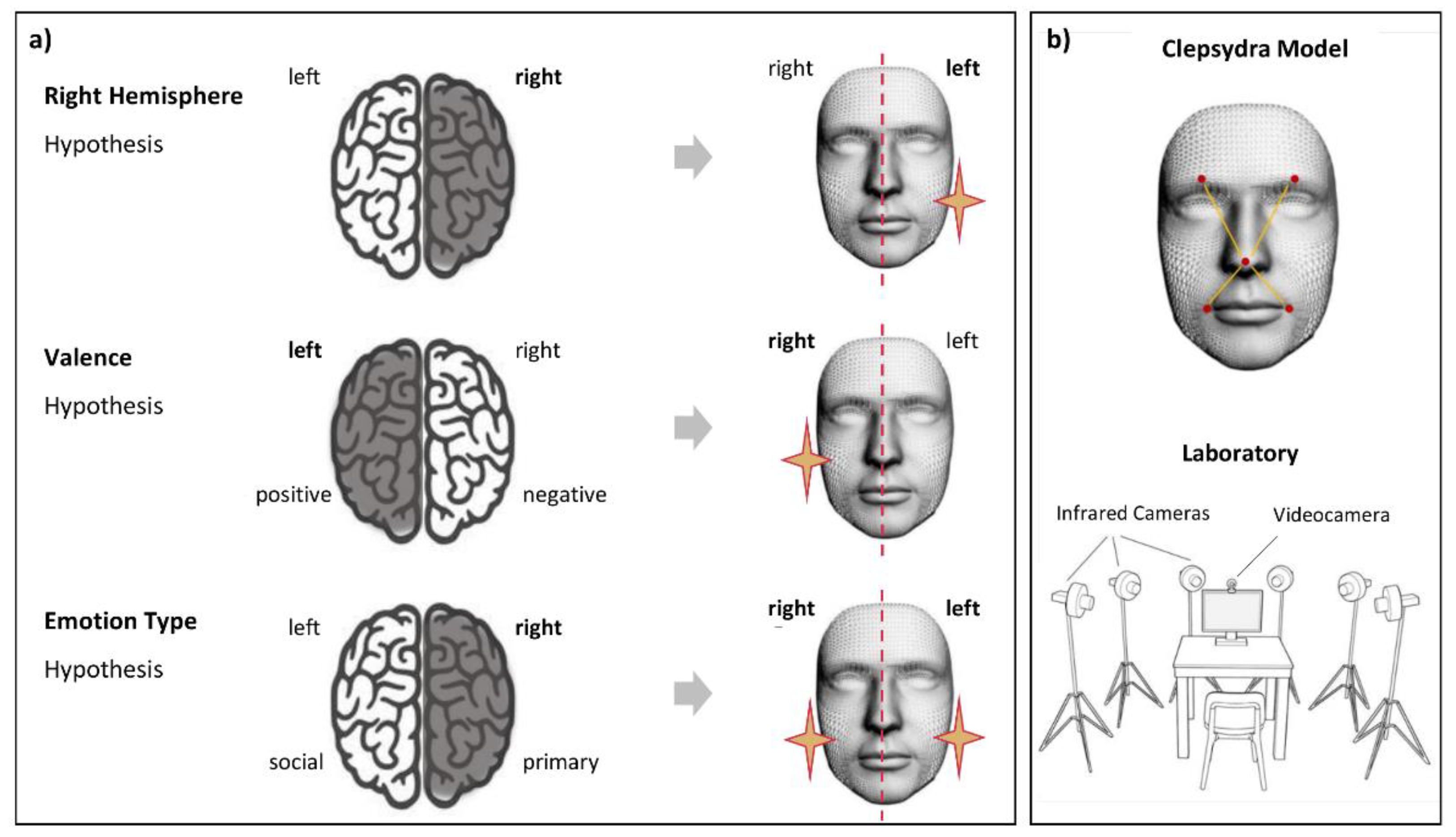

1. Introduction

2. General methods

2.1. Ethics Statement

2.2. Apparatus

2.3. Procedure

2.4. Expression extraction and FACS validation procedure

2.5. Data Acquisition

2.5.1. Kinematic 3-D tracking

2.5.2. Kinematic 3-D analysis

- Left cheilion and tip of the nose (Left-CH);

- Right cheilion and tip of the nose (Right-CH).

- Left eyebrow and tip of the nose (Left-EB);

- Right eyebrow and tip of the nose (Right-EB).

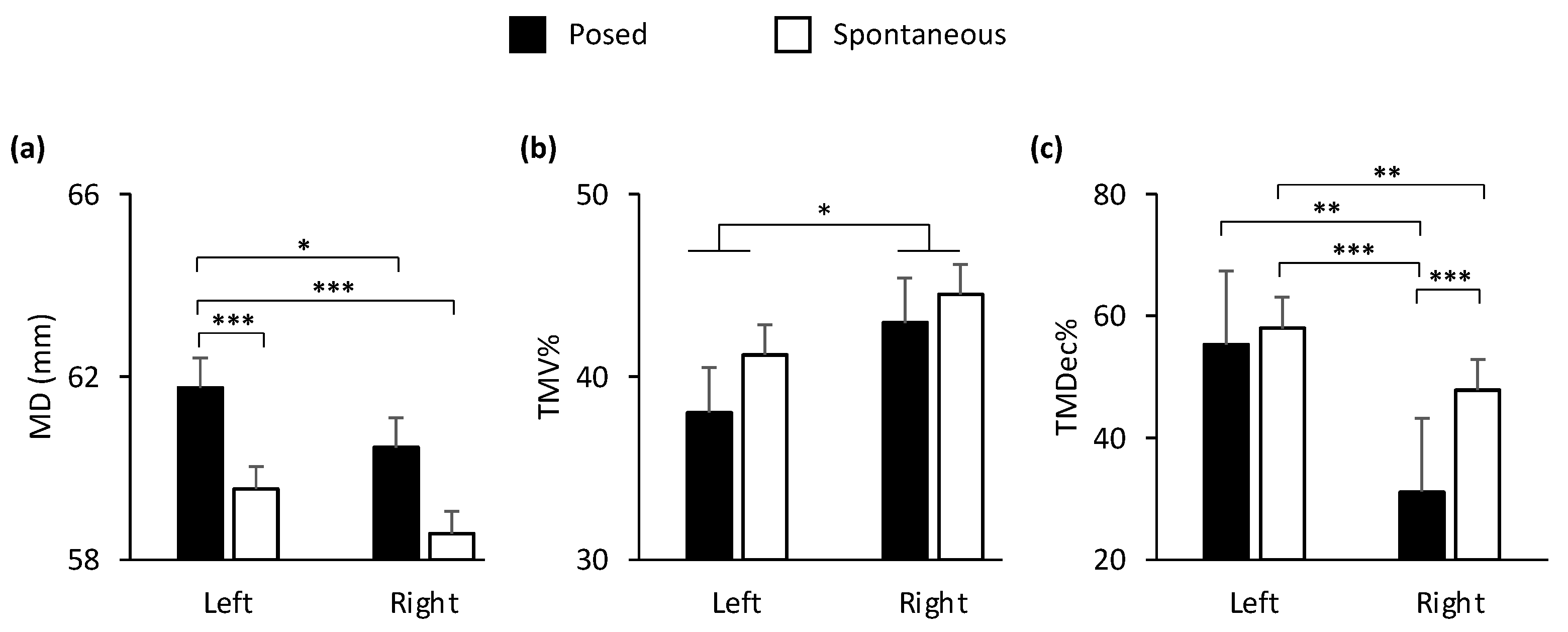

- Maximum Distance (MD, mm) was calculated as the maximum distance reached by the 3-D coordinates (x,y,z) of two markers.

- Delta Distance (DD, mm) was calculated as the difference between the maximum and the minimum distance reached by two markers, to account for functional and anatomical differences across participants.

- Maximum Velocity (MV, mm/s) was calculated as the maximum velocity reached by the 3-D coordinates (x,y,z) of each pair of markers during movement time.

- Maximum Acceleration (MA, mm/s²) was calculated as the maximum acceleration reached by the 3-D coordinates (x,y,z) of each pair of markers during movement time.

- Maximum Deceleration (MDec, mm/s²) was calculated as the maximum deceleration reached by the 3-D coordinates (x,y,z) of each pair of markers during movement time.

- Time to Maximum Distance (TMD%);

- Time to Maximum Velocity (TMV%);

- Time to Maximum Acceleration (TMA%);

- Time to Maximum Deceleration (TMDec%).
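As an illustration of the parameters listed above, the following minimal Python sketch derives them from the 3-D trajectories of one marker pair (for example, the left cheilion and the tip of the nose). It is not the authors' analysis pipeline. It assumes that the input arrays are already cropped to movement time, that velocity and acceleration are taken as time derivatives of the inter-marker distance, that deceleration is the most negative acceleration value, and that the time parameters are expressed as a percentage of movement time. The function name `kinematic_parameters` and the sampling-rate argument `fs` are hypothetical.

```python
import numpy as np


def kinematic_parameters(marker_a, marker_b, fs):
    """Spatial, velocity and timing parameters for one pair of markers.

    marker_a, marker_b: arrays of shape (n_samples, 3) with x, y, z in mm,
        assumed to span movement onset to movement offset.
    fs: sampling rate in Hz (hypothetical argument, not given in the text).
    """
    a = np.asarray(marker_a, dtype=float)
    b = np.asarray(marker_b, dtype=float)

    # Euclidean distance between the two markers at every sample (mm).
    dist = np.linalg.norm(a - b, axis=1)

    # Assumption: velocity and acceleration are derivatives of the distance.
    dt = 1.0 / fs
    vel = np.gradient(dist, dt)  # mm/s
    acc = np.gradient(vel, dt)   # mm/s^2

    n = len(dist)

    def percent_of_movement(index):
        # Sample index expressed as a percentage of movement time.
        return 100.0 * index / (n - 1)

    return {
        "MD": dist.max(),               # maximum distance
        "DD": dist.max() - dist.min(),  # delta distance
        "MV": vel.max(),                # maximum velocity
        "MA": acc.max(),                # maximum acceleration
        "MDec": acc.min(),              # maximum deceleration (negative value)
        "TMD%": percent_of_movement(dist.argmax()),
        "TMV%": percent_of_movement(vel.argmax()),
        "TMA%": percent_of_movement(acc.argmax()),
        "TMDec%": percent_of_movement(acc.argmin()),
    }
```

Calling the function once per marker pair (Left-CH, Right-CH, Left-EB, Right-EB) would give one set of parameters per side of the face and per trial, which is the shape of data a Condition by Side analysis needs.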

2.6. Statistical Approach

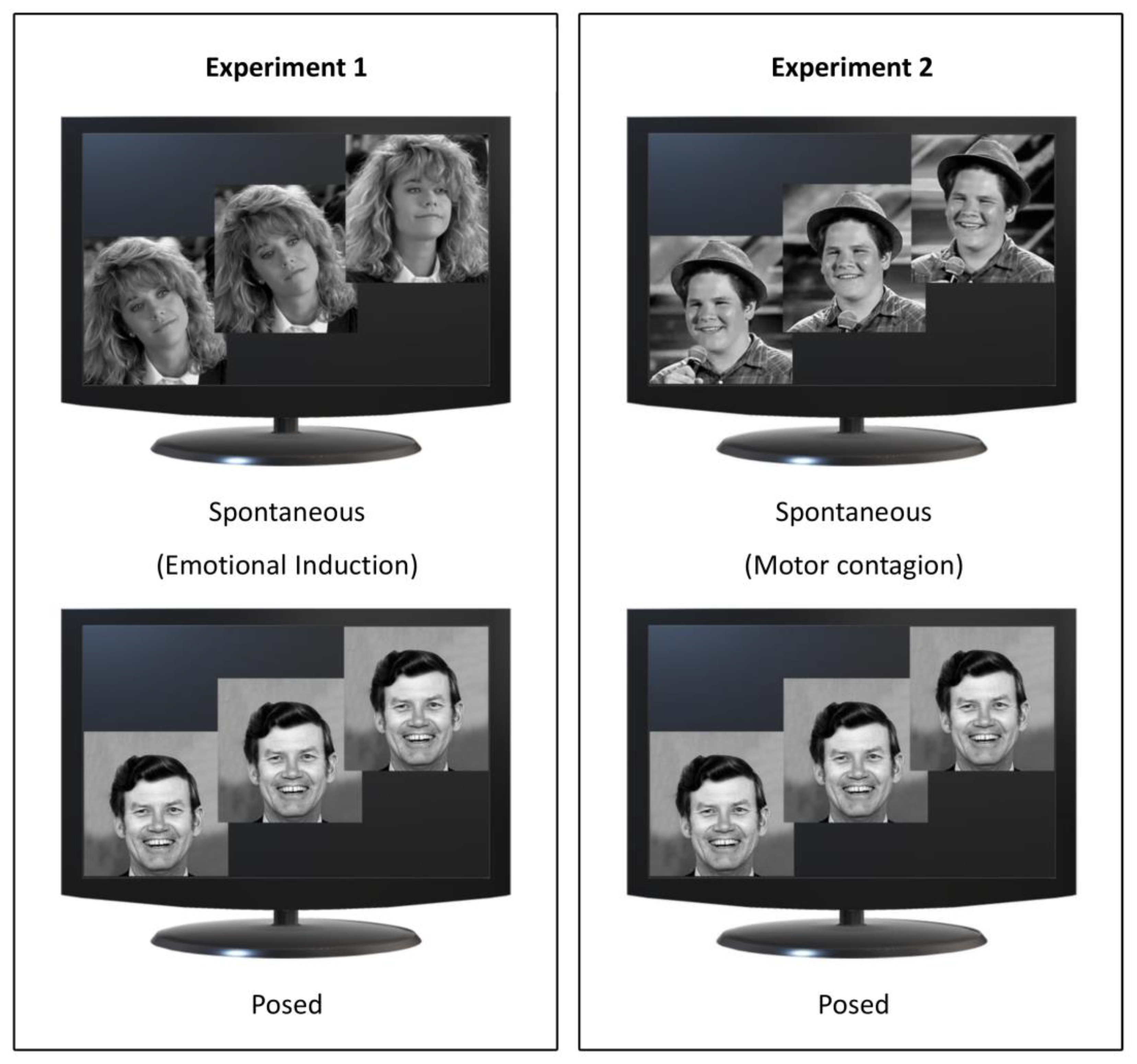

3. Experiment 1

3.1. Participants

3.2. Stimuli

3.3. Results

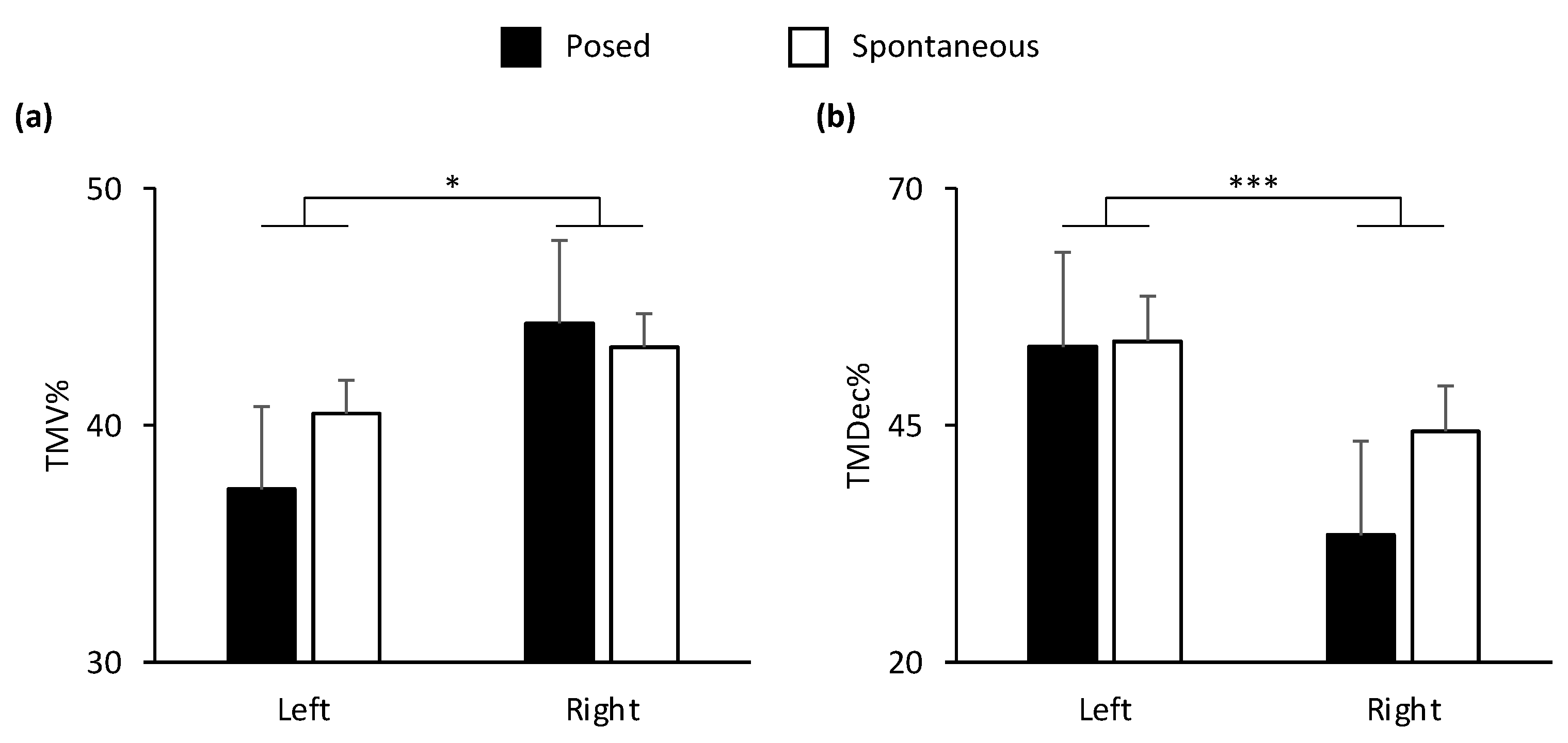

3.3.1. Repeated-measures ANOVA

4. Experiment 2

4.1. Participants

4.2. Stimuli

4.3. Results

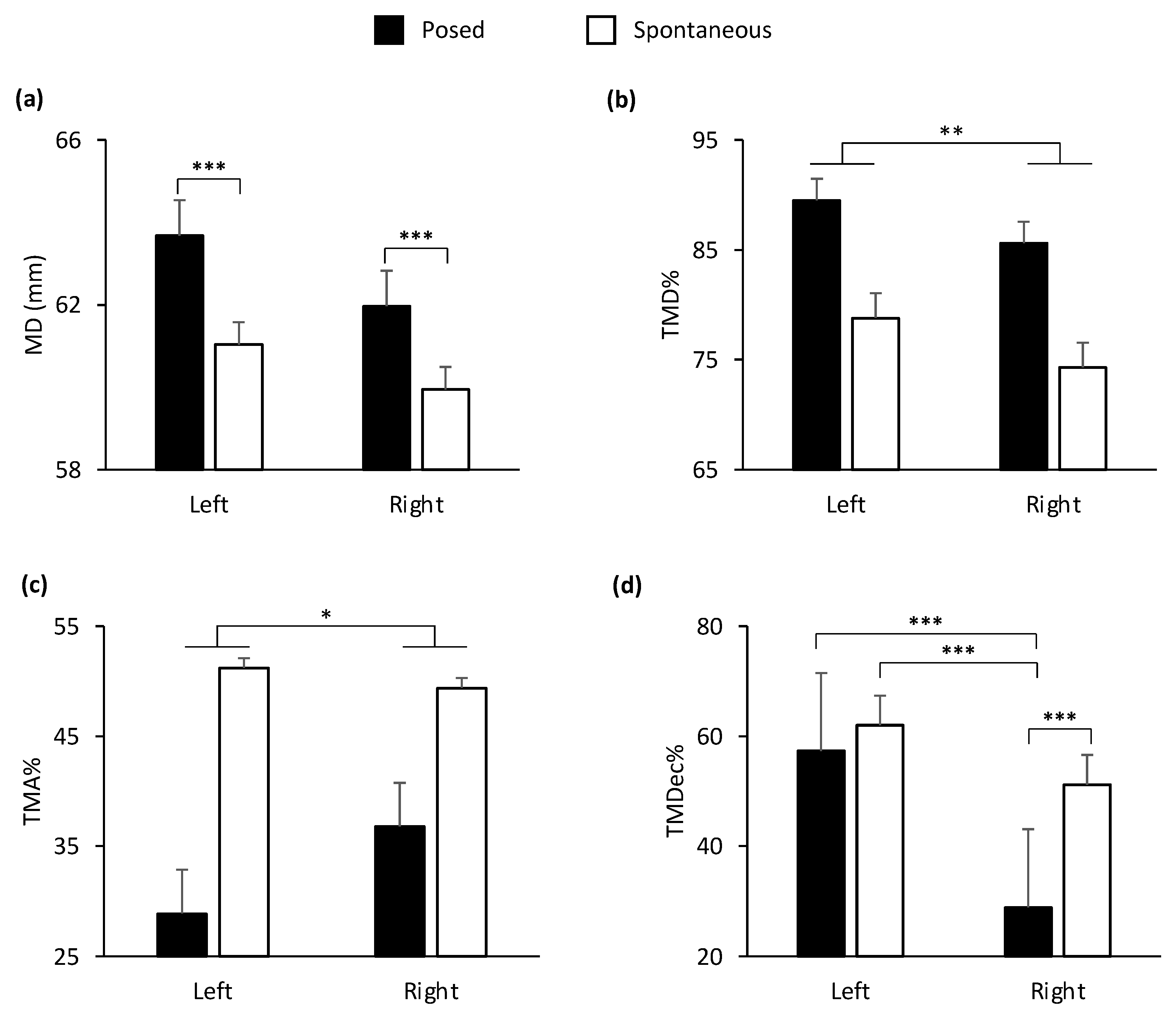

4.3.1. Repeated-measures ANOVA

5. Comparison analysis (Experiment 1 vs. 2)

5.1. Mixed ANOVA: Posed vs. Spontaneous, left vs. right, and Experiment 1 vs. 2

6. Discussion

6.1. Left vs. right

6.2. Posed vs. Spontaneous

6.3. Emotional Induction vs. Motor Contagion

6.4. Clinical applications

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Kinematic parameters | Main effect Condition | Main effect Side of the face | Interaction Condition by Side of the face |
|---|---|---|---|
| Cheilions (CH) | | | |
| MD | F(1,16) = 21.440, p < 0.001, VS-MPR = 161.690, η²p = 0.573 | F(1,16) = 3.007, p = 0.102, VS-MPR = 1.579, η²p = 0.158 | F(1,16) = 0.014, p = 0.908, VS-MPR = 1.000, η²p < 0.001 |
| DD | F(1,16) = 8.221, p = 0.011, VS-MPR = 7.325, η²p = 0.339 | F(1,16) = 1.882, p = 0.189, VS-MPR = 1.168, η²p = 0.105 | F(1,16) = 1.23, p = 0.305, VS-MPR = 1.016, η²p = 0.066 |
| MV | F(1,16) = 10.595, p = 0.005, VS-MPR = 13.958, η²p = 0.398 | F(1,16) = 0.636, p = 0.437, VS-MPR = 1.000, η²p = 0.038 | F(1,16) = 0.539, p = 0.473, VS-MPR = 1.000, η²p = 0.033 |
| MA | F(1,13) = 8.523, p = 0.012, VS-MPR = 6.952, η²p = 0.396 | F(1,13) = 0.365, p = 0.556, VS-MPR = 1.000, η²p = 0.027 | F(1,13) = 0.029, p = 0.868, VS-MPR = 1.000, η²p = 0.002 |
| MDec | F(1,13) = 6.491, p = 0.024, VS-MPR = 4.073, η²p = 0.333 | F(1,13) = 0.766, p = 0.397, VS-MPR = 1.000, η²p = 0.056 | F(1,13) = 0.192, p = 0.668, VS-MPR = 1.000, η²p = 0.015 |
| TMD% | F(1,16) = 5.670, p = 0.030, VS-MPR = 3.495, η²p = 0.262 | F(1,16) = 0.026, p = 0.873, VS-MPR = 1.000, η²p = 0.002 | F(1,16) = 1.142, p = 0.301, VS-MPR = 1.018, η²p = 0.067 |
| TMV% | F(1,16) = 0.120, p = 0.733, VS-MPR = 1.000, η²p = 0.007 | F(1,16) = 4.616, p = 0.047, VS-MPR = 2.548, η²p = 0.224 | F(1,16) = 0.530, p = 0.477, VS-MPR = 1.000, η²p = 0.032 |
| TMA% | F(1,14) = 5.670, p = 0.030, VS-MPR = 3.495, η²p = 0.262 | F(1,14) = 0.709, p = 0.414, VS-MPR = 1.000, η²p = 0.048 | F(1,14) = 0.562, p = 0.466, VS-MPR = 1.000, η²p = 0.039 |
| TMDec% | F(1,14) = 1.168, p = 0.298, VS-MPR = 1.020, η²p = 0.077 | F(1,14) = 24.37, p < 0.001, VS-MPR = 188.689, η²p = 0.632 | F(1,14) = 2.795, p = 0.117, VS-MPR = 1.467, η²p = 0.166 |
| Eyebrows (EB) | | | |
| MD | F(1,16) = 12.298, p = 0.003, VS-MPR = 21.580, η²p = 0.435 | F(1,16) = 0.518, p = 0.482, VS-MPR = 1.000, η²p = 0.031 | F(1,16) = 1.411, p = 0.252, VS-MPR = 1.059, η²p = 0.081 |
| TMV% | F(1,14) = 10.083, p = 0.007, VS-MPR = 10.912, η²p = 0.419 | F(1,14) = 0.287, p = 0.601, VS-MPR = 1.000, η²p = 0.020 | F(1,14) = 0.413, p = 0.531, VS-MPR = 1.000, η²p = 0.029 |

| Kinematic parameters | Main effect Condition | Main effect Side of the face | Interaction Condition by Side of the face |
|---|---|---|---|
| Cheilions (CH) | | | |
| MD | F(1,19) = 29.400, p < 0.001, VS-MPR = 1135.133, η²p = 0.607 | F(1,19) = 5.681, p = 0.028, VS-MPR = 3.700, η²p = 0.230 | F(1,19) = 6.452, p = 0.020, VS-MPR = 4.706, η²p = 0.253 |
| DD | F(1,19) = 21.393, p < 0.001, VS-MPR = 231.784, η²p = 0.530 | F(1,19) = 0.187, p = 0.670, VS-MPR = 1.000, η²p = 0.010 | F(1,19) = 0.080, p = 0.780, VS-MPR = 1.000, η²p = 0.004 |
| MV | F(1,19) = 29.728, p < 0.001, VS-MPR = 1205.041, η²p = 0.610 | F(1,19) = 3.451, p = 0.079, VS-MPR = 1.837, η²p = 0.154 | F(1,19) = 0.165, p = 0.689, VS-MPR = 1.000, η²p = 0.009 |
| MA | F(1,19) = 17.149, p < 0.001, VS-MPR = 88.406, η²p = 0.474 | F(1,19) = 0.102, p = 0.753, VS-MPR = 1.000, η²p = 0.005 | F(1,19) = 0.273, p = 0.608, VS-MPR = 1.000, η²p = 0.014 |
| MDec | F(1,19) = 18.450, p < 0.001, VS-MPR = 120.051, η²p = 0.493 | F(1,19) = 0.473, p = 0.500, VS-MPR = 1.000, η²p = 0.024 | F(1,19) = 0.895, p = 0.356, VS-MPR = 1.001, η²p = 0.045 |
| TMD% | F(1,19) = 26.586, p < 0.001, VS-MPR = 669.279, η²p = 0.583 | F(1,19) = 9.818, p = 0.005, VS-MPR = 12.910, η²p = 0.341 | F(1,19) = 0.036, p = 0.851, VS-MPR = 1.000, η²p = 0.002 |
| TMA% | F(1,19) = 17.956, p < 0.001, VS-MPR = 106.987, η²p = 0.486 | F(1,19) = 5.300, p = 0.033, VS-MPR = 3.282, η²p = 0.218 | F(1,19) = 4.089, p = 0.057, VS-MPR = 2.241, η²p = 0.177 |
| TMDec% | F(1,19) = 10.120, p = 0.005, VS-MPR = 14.076, η²p = 0.348 | F(1,19) = 46.466, p < 0.001, VS-MPR = 16685.144, η²p = 0.710 | F(1,19) = 9.707, p = 0.006, VS-MPR = 12.502, η²p = 0.338 |

| Kinematic parameters | Main effect Condition | Main effect Side of the face | 2-way interaction between Condition and Side of the face |
|---|---|---|---|
| Cheilions (CH) | | | |
| MD | F(1,35) = 49.138, p < 0.001, VS-MPR = 579497.156, η²p = 0.584 | F(1,35) = 8.314, p = 0.007, VS-MPR = 10.987, η²p = 0.192 | F(1,35) = 4.106, p = 0.05, VS-MPR = 2.443, η²p = 0.105 |
| DD | F(1,35) = 27.775, p < 0.001, VS-MPR = 4382.16, η²p = 0.442 | F(1,35) = 1.380, p = 0.248, VS-MPR = 1.064, η²p = 0.038 | F(1,35) = 0.487, p = 0.490, VS-MPR = 1.000, η²p = 0.014 |
| MV | F(1,35) = 36.953, p < 0.001, VS-MPR = 42283.314, η²p = 0.514 | F(1,35) = 3.246, p = 0.080, VS-MPR = 1.817, η²p = 0.085 | F(1,35) = 0.167, p = 0.685, VS-MPR = 1.000, η²p = 0.005 |
| MA | F(1,32) = 23.699, p < 0.001, VS-MPR = 1208.896, η²p = 0.425 | F(1,32) = 0.498, p = 0.485, VS-MPR = 1.000, η²p = 0.015 | F(1,32) = 0.031, p = 0.861, VS-MPR = 1.000, η²p < 0.001 |
| MDec | F(1,32) = 22.148, p < 0.001, VS-MPR = 791.644, η²p = 0.409 | F(1,32) = 0.038, p = 0.847, VS-MPR = 1.000, η²p = 0.001 | F(1,32) = 0.898, p = 0.350, VS-MPR = 1.001, η²p = 0.027 |
| TMD% | F(1,35) = 27.941, p < 0.001, VS-MPR = 4576.896, η²p = 0.444 | F(1,35) = 3.258, p = 0.080, VS-MPR = 1.825, η²p = 0.085 | F(1,35) = 1.128, p = 0.296, VS-MPR = 1.021, η²p = 0.031 |
| TMV% | F(1,35) = 1.136, p = 0.294, VS-MPR = 1.022, η²p = 0.031 | F(1,35) = 6.551, p = 0.015, VS-MPR = 5.851, η²p = 0.158 | F(1,35) = 0.200, p = 0.657, VS-MPR = 1.000, η²p = 0.006 |
| TMA% | F(1,33) = 20.198, p < 0.001, VS-MPR = 481.057, η²p = 0.380 | F(1,33) = 3.389, p = 0.075, VS-MPR = 1.900, η²p = 0.093 | F(1,33) = 3.683, p = 0.064, VS-MPR = 2.098, η²p = 0.100 |
| TMDec% | F(1,33) = 8.160, p = 0.007, VS-MPR = 10.181, η²p = 0.198 | F(1,33) = 66.159, p < 0.001, VS-MPR > 100000, η²p = 0.667 | F(1,33) = 10.947, p = 0.002, VS-MPR = 26.596, η²p = 0.249 |
| Eyebrows (EB) | | | |
| MD | F(1,35) = 6.535, p = 0.015, VS-MPR = 5.818, η²p = 0.157 | F(1,35) < 0.01, p = 0.986, VS-MPR = 1.000, η²p < 0.001 | F(1,35) = 2.596, p = 0.116, VS-MPR = 1.471, η²p = 0.069 |
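The effect-size and evidence columns in the tables above can be related to the reported F and p values with two standard formulas. The sketch below is illustrative only and is not the software used for the analysis. Partial eta squared is recovered from F and its degrees of freedom, and the Vovk-Sellke maximum p-ratio (VS-MPR) is computed as 1 / (-e · p · ln p) for p < 1/e and set to 1 otherwise. The function names are hypothetical. Because the tables report rounded p values, a VS-MPR recomputed this way can differ slightly from the tabulated one.

```python
import math


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def vs_mpr(p_value):
    """Vovk-Sellke maximum p-ratio: upper bound on the odds in favour of H1.

    Defined as 1 / (-e * p * ln(p)) for p < 1/e, and 1 otherwise.
    """
    if p_value >= 1.0 / math.e:
        return 1.0
    return 1.0 / (-math.e * p_value * math.log(p_value))


# Example using values reported for cheilion MD, first table:
# main effect of Condition, F(1,16) = 21.440.
eta = partial_eta_squared(21.440, 1, 16)
# Main effect of Side of the face, p = 0.102.
ratio = vs_mpr(0.102)
print(f"partial eta squared = {eta:.3f}, VS-MPR = {ratio:.2f}")
```

With these inputs the recomputed partial eta squared rounds to the tabulated 0.573, and the VS-MPR for p = 0.102 comes out close to the tabulated 1.579.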

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).