Because a speaker's voice is stored and transmitted through different hardware and applications, identifying speakers across channels is important. In deep learning-based speech processing, end-to-end speaker representations are promising. This chapter therefore proposes a voiceprint recognition method based on channel domain adaptation.

3.1. A voice information database of the speaker recognition system

In real life, the scale of audio data is very large, and the centralized storage method of the server is unable, to meet the demand of massive data storage and management [

14]. Therefore, a distributed storage scheme is used in the system, which mainly concentrates the disk space on the machines within the network into a virtual storage space, and even though the physical storage is distributed everywhere, it can still be used as a virtual overall space to provide storage services to the outside world [

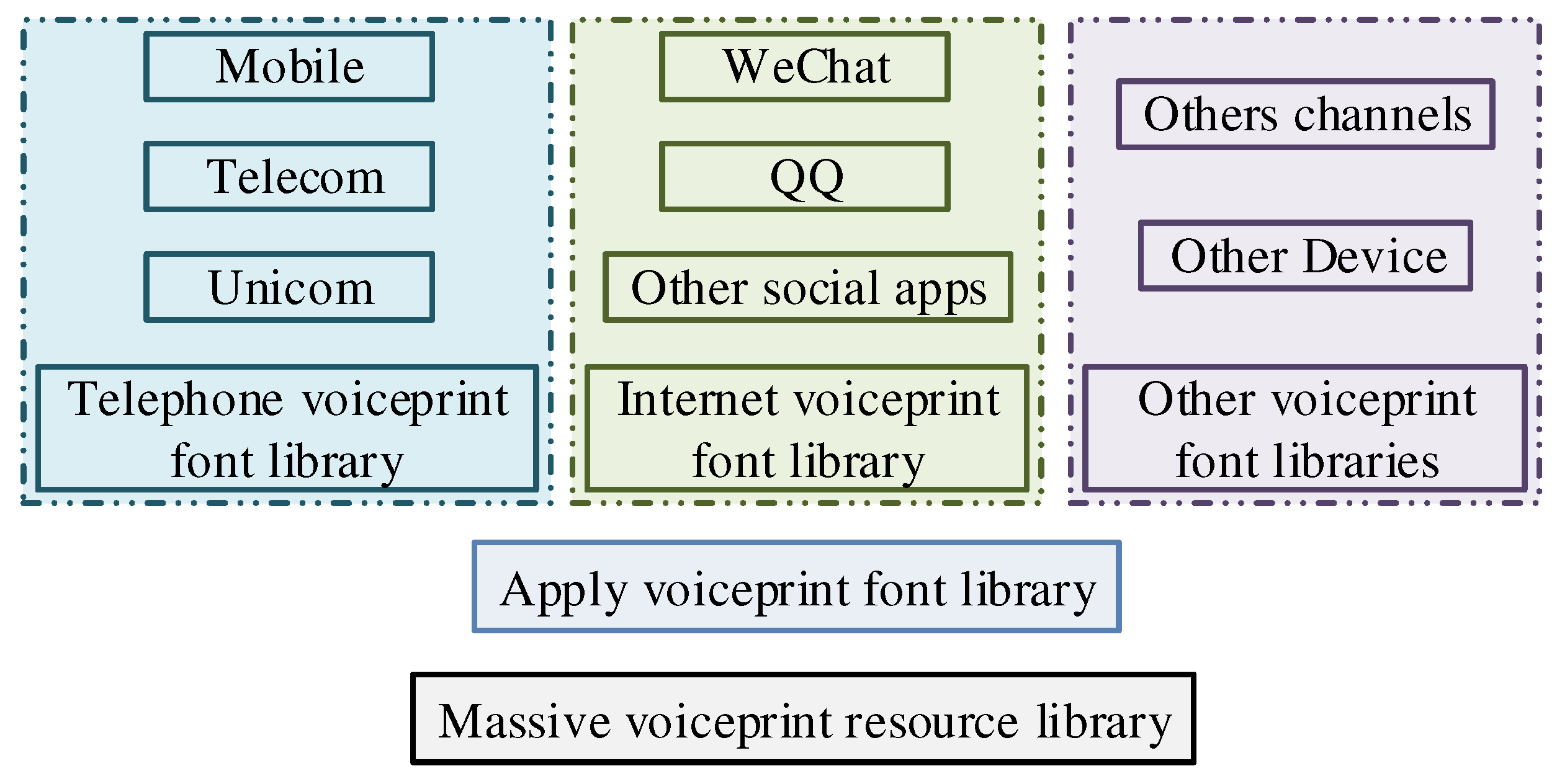

15]. The storage and management of massive audio data are shown in

Figure 1.

Figure 1 is a massive voice data resource database, which is mainly composed of telephone voice, Internet voice, and other voice, the three sub-speaker voice databases. In the face of the storage of massive speaker representations, distributed storage is the storage of externally provided interfaces for file upload, file download, file modification and file deletion [

16]. Distributed storage is to create the voice print sublibrary based on the gender, age, and other information of the speaker, which is the most concise and effective method to build the voice print word database, as shown in

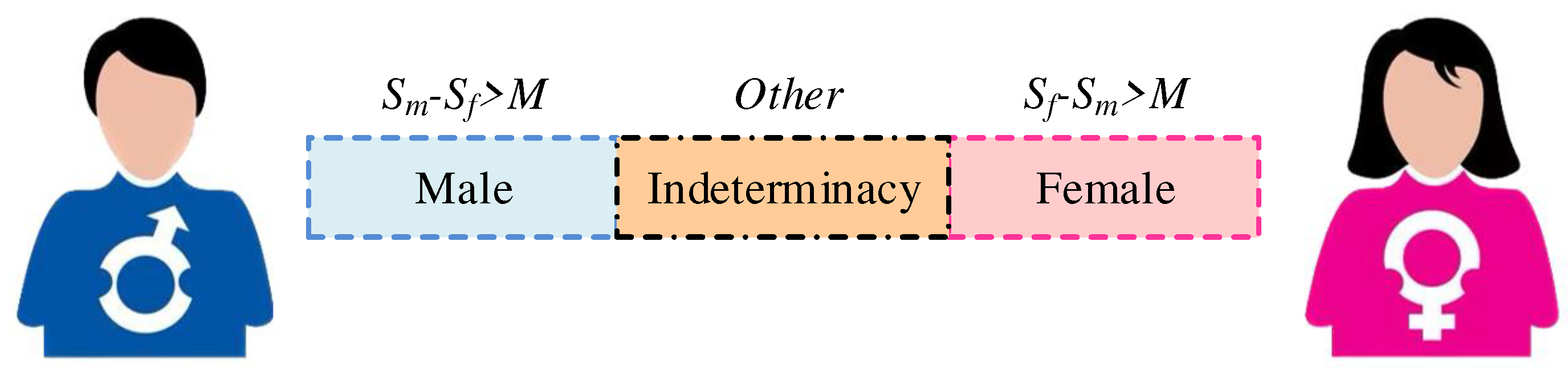

Figure 2.
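The storage interface described above (one virtual space backed by several machines, exposing upload, download, modification, and deletion) can be sketched as follows. This is a minimal in-memory illustration, not the system's implementation: the class name `VirtualVoiceStore`, the hash-based placement of files on nodes, and the use of Python dictionaries to stand in for networked disks are all assumptions made for the example.

```python
import hashlib


class VirtualVoiceStore:
    """Hypothetical sketch: several storage nodes presented as one store.

    Each node is an in-memory dict standing in for a networked disk.
    A file is placed on the node selected by hashing its key.
    """

    def __init__(self, node_names):
        self.nodes = {name: {} for name in node_names}
        self._order = sorted(self.nodes)

    def _node_for(self, key):
        # Stable hash, so the same key always maps to the same node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._order)
        return self.nodes[self._order[index]]

    def upload(self, key, data):
        node = self._node_for(key)
        if key in node:
            raise KeyError(f"{key!r} already exists; use modify()")
        node[key] = bytes(data)

    def download(self, key):
        return self._node_for(key)[key]

    def modify(self, key, data):
        node = self._node_for(key)
        if key not in node:
            raise KeyError(key)
        node[key] = bytes(data)

    def delete(self, key):
        del self._node_for(key)[key]

    def __len__(self):
        # Total number of files across all nodes.
        return sum(len(node) for node in self.nodes.values())
```

Keys such as `"telephone/spk001/utt01.wav"` could mirror the telephone, Internet, and other sub-databases of Figure 1; callers never need to know which node holds a file.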

In Figure 2, an error-tolerance mechanism is added to the library-building process. Let $S_{\mathrm{m}}$ denote the male confidence score predicted by the gender recognition model and $S_{\mathrm{f}}$ the female confidence score. When $S_{\mathrm{f}} - S_{\mathrm{m}} > \delta$, the speaker is labeled female; when $S_{\mathrm{m}} - S_{\mathrm{f}} > \delta$, the speaker is labeled male; when neither condition holds, the speaker is labeled uncertain, where $\delta$ is a preset margin. The machine automatically assigns a gender label to each speaker in the library, so that when a test utterance is retrieved, the search can go directly to the corresponding sub-library once the gender information is obtained [17]. However, gender-based identification usually still requires judging, after clustering, whether the category information is speaker-related or speaker-irrelevant. The most common application modes are text-independent speaker identification and text-dependent speaker verification systems.
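The labeling rule above can be sketched as a small function, together with a helper that groups enrolled speakers into sub-libraries. This is an illustrative sketch: the margin value `delta=0.2`, the tuple format of the speaker records, and the retrieval policy in `candidate_pool` (always also searching the uncertain sub-library, and searching everything when the test label is uncertain) are assumptions, not details given in the text.

```python
from collections import defaultdict


def gender_label(s_male, s_female, delta=0.2):
    """Return 'female', 'male', or 'uncertain' from the two confidence scores.

    delta is the margin; 0.2 is an arbitrary illustrative value.
    """
    if s_female - s_male > delta:
        return "female"
    if s_male - s_female > delta:
        return "male"
    return "uncertain"


def build_sublibraries(speakers, delta=0.2):
    """Group speaker IDs into gender sub-libraries.

    speakers: iterable of (speaker_id, s_male, s_female) tuples.
    """
    libraries = defaultdict(list)
    for speaker_id, s_m, s_f in speakers:
        libraries[gender_label(s_m, s_f, delta)].append(speaker_id)
    return dict(libraries)


def candidate_pool(libraries, test_label):
    """Speakers to compare a test utterance against (assumed policy)."""
    if test_label == "uncertain":
        # No reliable gender: search every sub-library.
        return [s for ids in libraries.values() for s in ids]
    # Search the matching sub-library plus speakers whose gender was uncertain.
    return libraries.get(test_label, []) + libraries.get("uncertain", [])
```

The "uncertain" bucket is what makes the mechanism error-tolerant: a speaker with ambiguous scores is never forced into the wrong sub-library, at the cost of a slightly larger search.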

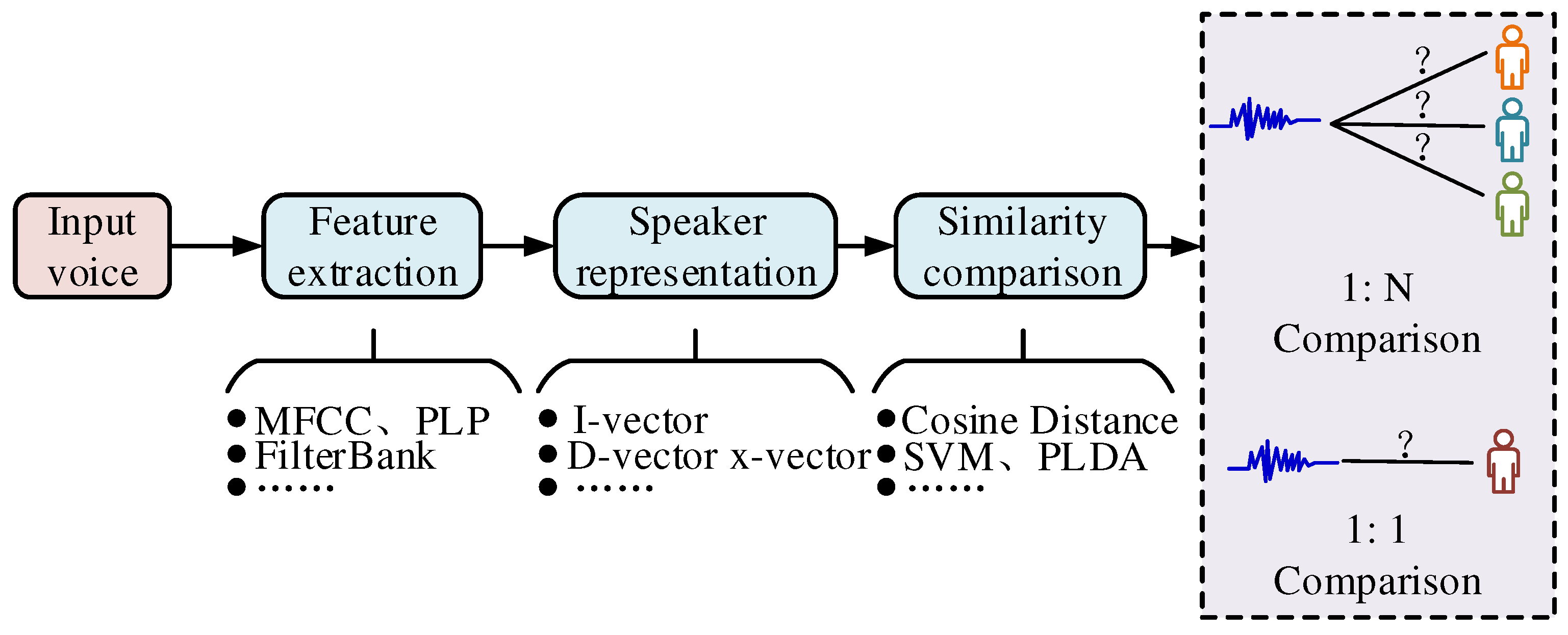

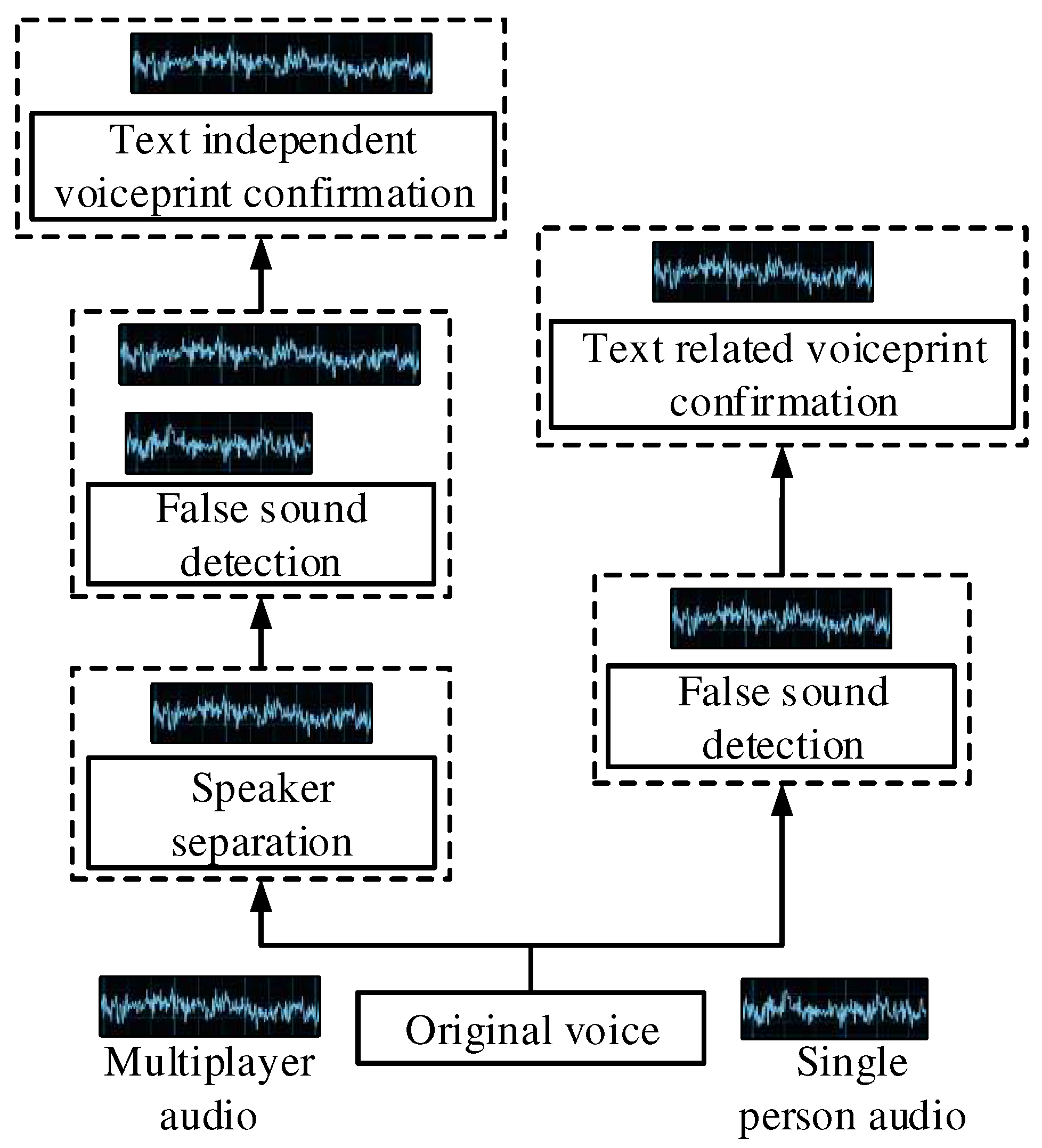

Their structure is shown in Figure 3.

Figure 3 shows a flowchart of voiceprint recognition based on multiple methods for text-independent speaker identification and text-dependent speaker verification systems. In practice, the enrollment and test datasets are often mismatched in scenario and channel, which degrades speaker recognition performance [18]. The detection cost function (DCF), given in Equation (1), is used as the evaluation metric for a speaker recognition system.

$$C_{\mathrm{DCF}} = C_{\mathrm{FR}}\, P_{\mathrm{FR}}\, P_{\mathrm{tar}} + C_{\mathrm{FA}}\, P_{\mathrm{FA}}\, P_{\mathrm{non}} \tag{1}$$

In Equation (1), $P_{\mathrm{FA}}$ is the false acceptance rate, $P_{\mathrm{FR}}$ is the false rejection rate, $C_{\mathrm{FR}}$ denotes the false rejection cost, $C_{\mathrm{FA}}$ denotes the false acceptance cost, $P_{\mathrm{non}}$ denotes the prior probability of a non-target speaker, and $P_{\mathrm{tar}}$ denotes the prior probability of the target speaker. The two costs and the two priors can be adjusted for different application scenarios. To address scenario and channel mismatch, a probabilistic linear discriminant analysis (PLDA) back end is used for channel compensation. PLDA decouples speaker information from channel information more thoroughly, and its model is given in Equation (2).
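As a worked illustration of Equation (1), the sketch below computes the DCF from the two error rates and then sweeps a decision threshold over a set of trial scores to find the minimum cost. The default costs and prior (`c_fr=10`, `c_fa=1`, `p_tar=0.01`) are illustrative values only, not parameters of this system, and the returned cost is not normalized.

```python
def detection_cost(p_fr, p_fa, c_fr=10.0, c_fa=1.0, p_tar=0.01):
    """Equation (1): C_DCF = C_FR * P_FR * P_tar + C_FA * P_FA * P_non."""
    p_non = 1.0 - p_tar
    return c_fr * p_fr * p_tar + c_fa * p_fa * p_non


def error_rates(target_scores, nontarget_scores, threshold):
    """False rejection and false acceptance rates; accept when score >= threshold."""
    p_fr = sum(s < threshold for s in target_scores) / len(target_scores)
    p_fa = sum(s >= threshold for s in nontarget_scores) / len(nontarget_scores)
    return p_fr, p_fa


def min_dcf(target_scores, nontarget_scores, **costs):
    """Smallest DCF over all thresholds taken from the observed scores."""
    candidates = sorted(set(target_scores) | set(nontarget_scores))
    candidates.append(float("inf"))  # threshold that rejects every trial
    return min(
        detection_cost(*error_rates(target_scores, nontarget_scores, t), **costs)
        for t in candidates
    )
```

Raising `c_fa` relative to `c_fr` (for example, in a security application where false acceptances are expensive) pushes the optimal threshold upward.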

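$$\mathbf{x}_{ij} = \boldsymbol{\mu} + \mathbf{F}\mathbf{h}_{i} + \mathbf{G}\mathbf{w}_{ij} + \boldsymbol{\epsilon}_{ij} \tag{2}$$

In Equation (2), $\mathbf{x}_{ij}$ denotes the speaker embedding extracted from the $j$th utterance of the $i$th speaker, $\boldsymbol{\mu}$ denotes the overall mean of the training data, $\mathbf{F}$ spans the speaker subspace, $\mathbf{G}$ spans the channel subspace, $\boldsymbol{\epsilon}_{ij}$ denotes the residual term, $\mathbf{h}_{i}$ denotes the coordinates in the speaker subspace, and $\mathbf{w}_{ij}$ denotes the coordinates in the channel subspace. PLDA scoring is performed either by extracting $\mathbf{h}_{i}$ and computing a cosine distance score or by directly computing the likelihood that the embeddings $\mathbf{x}$ were generated under each hypothesis, as defined in Equation (3).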
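Under the generative model of Equation (2), the likelihood ratio of Equation (3) has a closed form. The sketch below assumes the usual PLDA priors $\mathbf{h}_i, \mathbf{w}_{ij} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ and $\boldsymbol{\epsilon}_{ij} \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma})$ (the matrix `Sigma` is an assumed parameter). Two embeddings of the same speaker share $\mathbf{h}$, so their cross-covariance is $\mathbf{F}\mathbf{F}^{\top}$; embeddings of different speakers are independent. It is a didactic sketch, not an optimized scorer.

```python
import numpy as np


def log_gauss(x, mean, cov):
    """Log density of the multivariate Gaussian N(x; mean, cov)."""
    d = x.shape[0]
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)


def plda_llr(x1, x2, mu, F, G, Sigma):
    """Log-likelihood ratio of Equation (3) for the model in Equation (2)."""
    B = F @ F.T               # between-speaker covariance
    W = G @ G.T + Sigma       # within-speaker (channel + residual) covariance
    T = B + W                 # total covariance of a single embedding
    # H_s: the pair shares h, so the joint Gaussian has off-diagonal blocks B.
    joint_cov = np.block([[T, B], [B, T]])
    log_same = log_gauss(np.concatenate([x1, x2]),
                         np.concatenate([mu, mu]), joint_cov)
    # H_d: the two embeddings are independent draws with covariance T.
    log_diff = log_gauss(x1, mu, T) + log_gauss(x2, mu, T)
    return log_same - log_diff
```

A positive score favors the same-speaker hypothesis $H_s$; when $\mathbf{F} = \mathbf{0}$ (no speaker variability) the score is zero for every pair.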

$$\mathrm{score} = \log \frac{p(\mathbf{x}_{1}, \mathbf{x}_{2} \mid H_{s})}{p(\mathbf{x}_{1} \mid H_{d})\, p(\mathbf{x}_{2} \mid H_{d})} \tag{3}$$

In Equation (3), $\mathbf{x}_{1}$ and $\mathbf{x}_{2}$ denote the embeddings of two utterances, $H_{s}$ and $H_{d}$ denote the same-speaker and different-speaker hypotheses, $p(\mathbf{x}_{1}, \mathbf{x}_{2} \mid H_{s})$ denotes the joint likelihood of $\mathbf{x}_{1}$ and $\mathbf{x}_{2}$ under the same-speaker hypothesis, and $\mathrm{score}$ denotes the final score. Deep learning has become the main technique for speaker recognition, and the end-to-end approach has become one of the mainstream approaches in pattern recognition. The advantage of an end-to-end framework is that it optimizes the target objective directly. In speech separation, for example, the scale-invariant signal-to-noise ratio (SI-SNR) is used as the metric, as given in Equation (4).

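$$\mathbf{s}_{\mathrm{target}} = \frac{\langle \hat{\mathbf{s}}, \mathbf{s} \rangle\, \mathbf{s}}{\lVert \mathbf{s} \rVert^{2}}, \qquad \mathbf{e}_{\mathrm{noise}} = \hat{\mathbf{s}} - \mathbf{s}_{\mathrm{target}}, \qquad \mathrm{SI\text{-}SNR} = 10 \log_{10} \frac{\lVert \mathbf{s}_{\mathrm{target}} \rVert^{2}}{\lVert \mathbf{e}_{\mathrm{noise}} \rVert^{2}} \tag{4}$$

In Equation (4), $\hat{\mathbf{s}}$ and $\mathbf{s}$ are the estimated and target signals, respectively. For multiple outputs, the overall loss is usually based on the permutation invariant training (PIT) objective, as given in Equation (5).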

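$$L_{\mathrm{PIT}} = \min_{\pi \in \mathcal{P}} \frac{1}{C} \sum_{c=1}^{C} \ell\!\left(\hat{\mathbf{s}}_{c}, \mathbf{s}_{\pi(c)}\right) \tag{5}$$

In Equation (5), $C$ denotes the number of speakers, $\ell(\cdot,\cdot)$ denotes the error between the network output and the target, $\hat{\mathbf{s}}_{c}$ denotes the $c$th predicted signal, and $\mathbf{s}_{\pi(c)}$ denotes the reference signal under the permutation $\pi$ (from the set $\mathcal{P}$ of all permutations of the $C$ outputs) that minimizes the training objective. End-to-end speaker representations learned with deep neural networks are increasingly used to compensate for channel mismatch.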
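Equations (4) and (5) can be sketched together: SI-SNR scores one estimate against one reference, and PIT evaluates every assignment of outputs to references and keeps the cheapest. Two choices here are assumptions rather than details from the text: both signals are zero-meaned before computing SI-SNR (a common convention), and the per-pair error $\ell$ is taken to be the negative SI-SNR. The brute-force search over all $C!$ permutations is only practical for a few speakers.

```python
import itertools

import numpy as np


def si_snr(estimate, target, eps=1e-8):
    """Equation (4): scale-invariant SNR in dB (signals zero-meaned first)."""
    estimate = estimate - estimate.mean()
    target = target - target.mean()
    # Projection of the estimate onto the target direction.
    s_target = (estimate @ target) * target / (target @ target + eps)
    e_noise = estimate - s_target
    return 10.0 * np.log10((s_target @ s_target) / (e_noise @ e_noise + eps) + eps)


def pit_loss(estimates, targets):
    """Equation (5) with l = -SI-SNR; returns (loss, best permutation).

    estimates, targets: arrays of shape (C, T) for C speakers.
    """
    C = len(targets)
    best = None
    for perm in itertools.permutations(range(C)):
        # Output c is compared with reference perm[c].
        loss = -np.mean([si_snr(estimates[c], targets[perm[c]]) for c in range(C)])
        if best is None or loss < best[0]:
            best = (loss, perm)
    return best
```

Because SI-SNR projects the estimate onto the target, rescaling the estimate leaves the score unchanged, which is why it suits separation networks whose output gain is arbitrary.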

3.2. Voice recognition based on channel adversarial training

As deep learning becomes a major technique in the field of intelligent speech and is popularly used in speaker recognition, end-to-end speaker-based characterization has a good prospect [

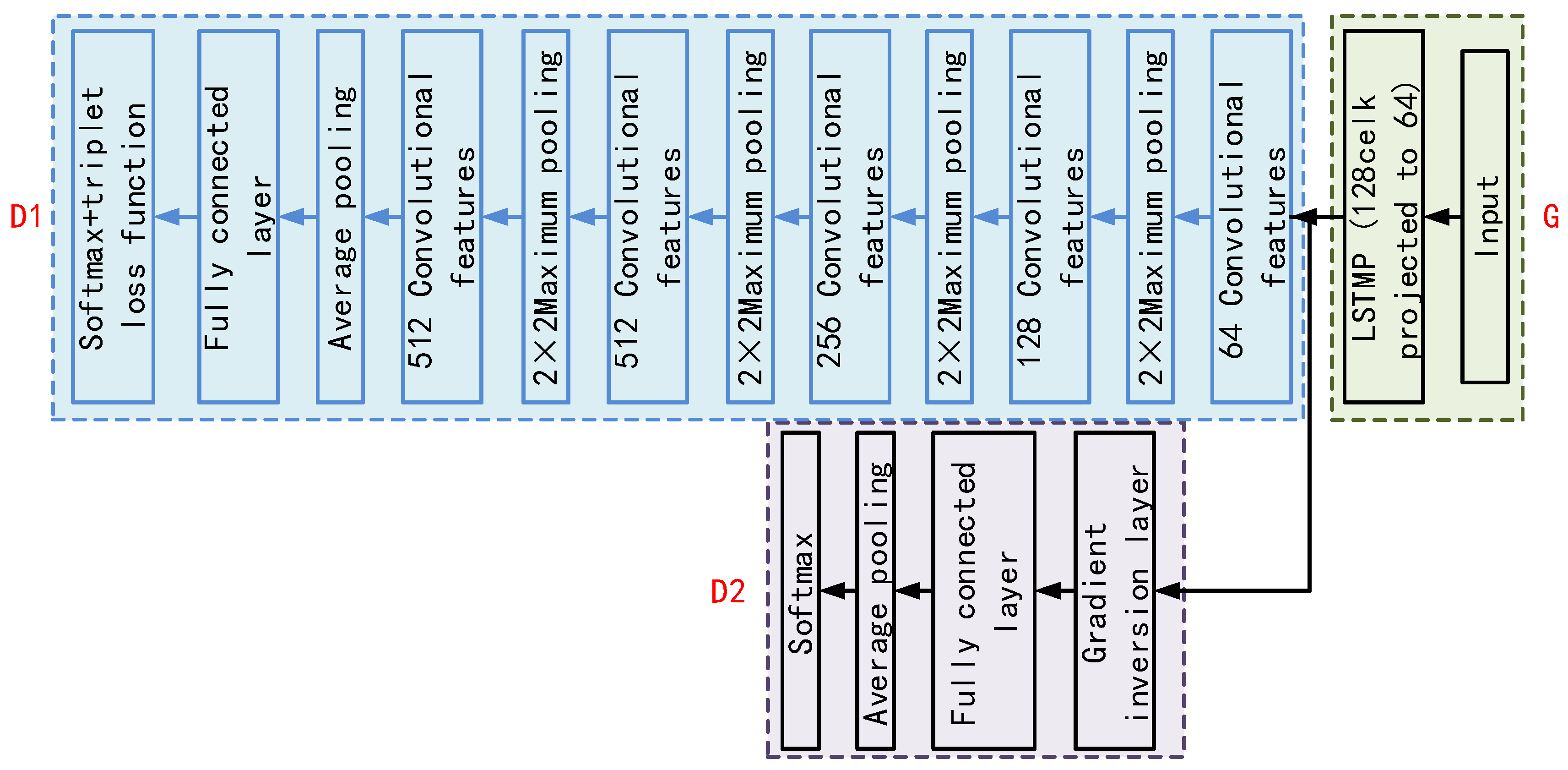

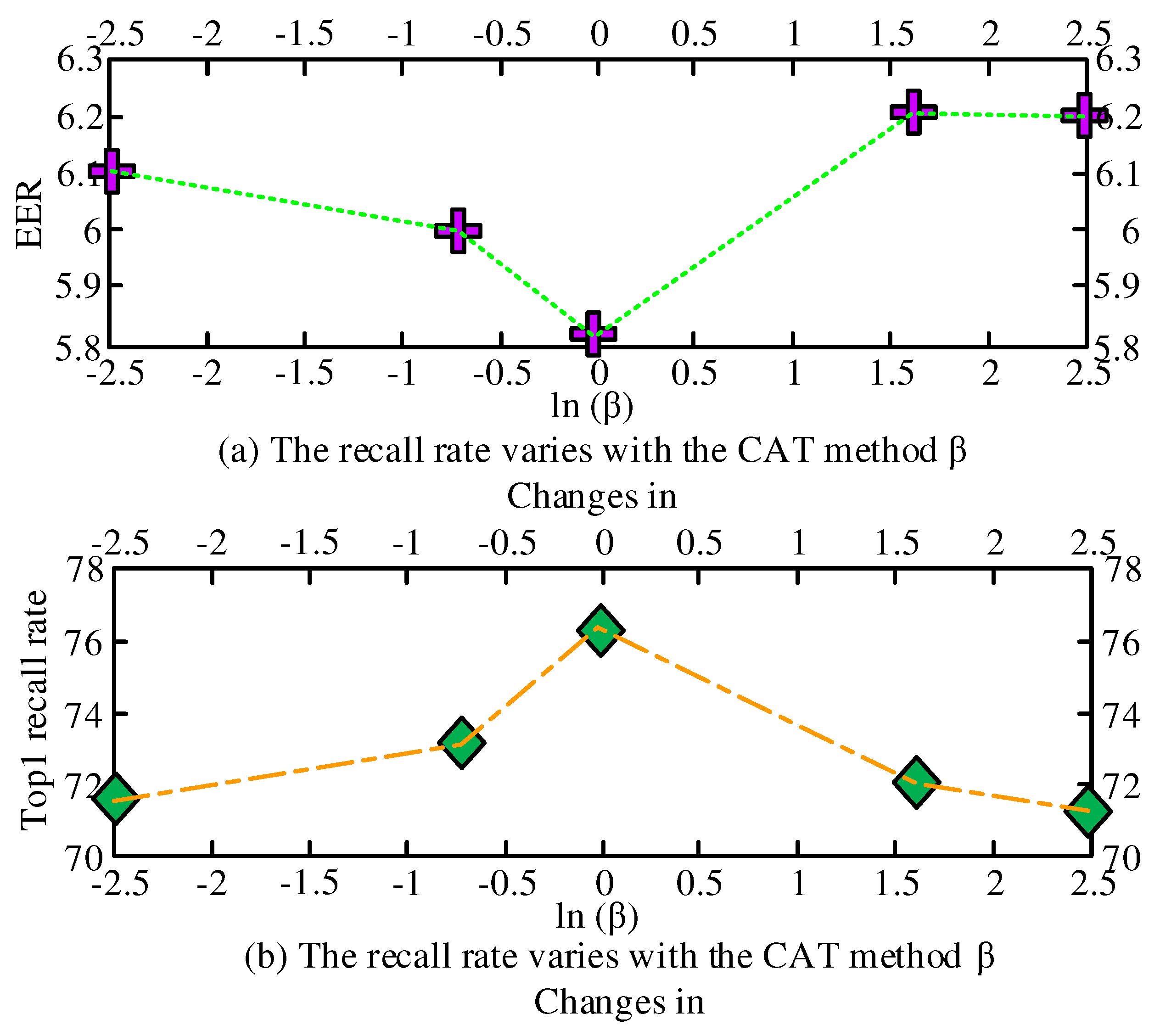

19]. However, for the training data collected from different channels, the convolutional neural network cannot directly model the speaker information between different channels. In this study, based on an unsupervised domain adaptive approach, we propose a model by channel adversarial training (CAT), which relies only on the speaker's speech data under each channel and does not require the same speaker's speech data under different channels. The model structure is shown in

Figure 4.

Figure 4 shows the structure of the speaker recognition network based on channel adversarial training, built on a baseline CNN model with five convolutional layers. The input layer concatenates features from the same person into a feature map. The overall loss of the model combines the Softmax and Triplet losses; the Softmax loss is given in Equation (6).

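$$L_{s} = -\sum_{i=1}^{N} \log \frac{e^{\mathbf{W}_{y_{i}}^{\top}\mathbf{x}_{i} + b_{y_{i}}}}{\sum_{j=1}^{M} e^{\mathbf{W}_{j}^{\top}\mathbf{x}_{i} + b_{j}}} \tag{6}$$

In Equation (6), $\mathbf{x}_{i}$ denotes the representation of the $i$th sample, which belongs to speaker $y_{i}$; $\mathbf{W}_{j}$ denotes the $j$th column of the last fully connected layer, and $b_{j}$ is the bias term. The minibatch size is $N$ and the number of speakers is $M$. The Triplet loss is defined in Equation (7).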

In Equation (7), the two samples in

are from the same speaker, while

is from a different speaker and

is the anchor sample of the triad.

denotes the cosine distance between the two input vectors, and the two loss functions are superimposed and adjusted by the weights

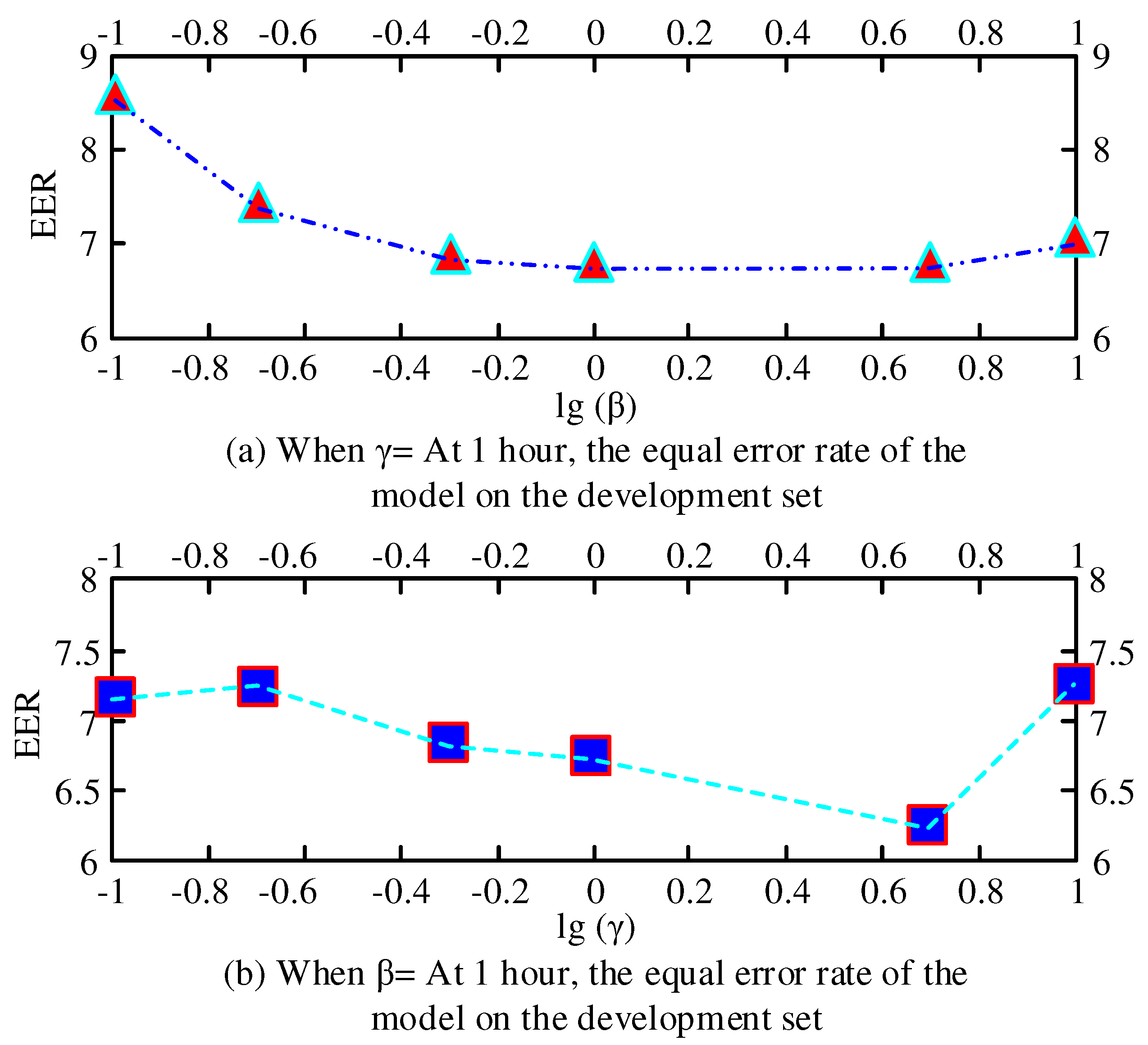

, which are calculated as shown in Equation (8).
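The combined objective of Equations (6) to (8) can be sketched in NumPy as follows. The layout of `W` (shape `(D, M)`, one column per speaker), the cosine distance $d = 1 - \cos$, and the default values `margin=0.2` and `gamma=1.0` are assumptions chosen for illustration; the text does not specify them.

```python
import numpy as np


def softmax_loss(x, labels, W, b):
    """Equation (6): cross-entropy summed over a minibatch.

    x: (N, D) embeddings; labels: (N,) speaker indices in [0, M);
    W: (D, M) last fully connected layer, column j for speaker j; b: (M,).
    """
    logits = x @ W + b
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].sum()


def cosine_distance(u, v):
    """Row-wise cosine distance 1 - cos(u, v)."""
    u = u / np.linalg.norm(u, axis=-1, keepdims=True)
    v = v / np.linalg.norm(v, axis=-1, keepdims=True)
    return 1.0 - (u * v).sum(axis=-1)


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Equation (7): hinge on d(a, p) - d(a, n) + margin, summed over triplets."""
    hinge = cosine_distance(anchor, positive) - cosine_distance(anchor, negative) + margin
    return np.maximum(0.0, hinge).sum()


def total_loss(x, labels, W, b, anchor, positive, negative, gamma=1.0, margin=0.2):
    """Equation (8): L = L_s + gamma * L_t."""
    return softmax_loss(x, labels, W, b) + gamma * triplet_loss(
        anchor, positive, negative, margin
    )
```

The Softmax term separates speakers by class, while the Triplet term directly shapes the cosine geometry that is later used for scoring; `gamma` trades the two off.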

Excessively long utterances are split into multiple short segments with non-overlapping sliding windows, and the utterance-level speaker representation is then obtained by average pooling over the segments. The model is decomposed into three neural networks, namely a feature extractor $G_{f}$, a speaker label classifier $G_{y}$, and a channel label classifier $G_{d}$, whose joint objective is given in Equation (9).
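The segmentation and pooling step described above can be sketched as follows. `embed_fn` is a hypothetical placeholder for the trained network that maps one segment to an embedding; dropping a trailing remainder shorter than one window, and embedding an utterance shorter than one window as a whole, are assumptions the text does not settle.

```python
import numpy as np


def segment_and_pool(features, window, embed_fn):
    """Utterance-level embedding from non-overlapping windows.

    features: (T, D) frame-level features; window: frames per segment.
    embed_fn: hypothetical stand-in for the trained network, mapping a
    (frames, D) segment to a fixed-size embedding.
    """
    n_segments = features.shape[0] // window
    if n_segments == 0:
        # Shorter than one window: embed the whole utterance.
        return embed_fn(features)
    # Drop the trailing remainder and split into equal, non-overlapping segments.
    segments = features[: n_segments * window].reshape(n_segments, window, -1)
    embeddings = np.stack([embed_fn(seg) for seg in segments])
    return embeddings.mean(axis=0)  # average pooling over segments
```

Non-overlapping windows keep the cost linear in utterance length, and averaging gives every segment equal weight in the final representation.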

$$E(\theta_{f}, \theta_{y}, \theta_{d}) = L_{y}(\theta_{f}, \theta_{y}) - \lambda L_{d}(\theta_{f}, \theta_{d}) \tag{9}$$

In Equation (9), $\theta_{f}$, $\theta_{y}$, and $\theta_{d}$ represent the parameters of the three networks, respectively. With a gradient reversal layer, $\theta_{f}$ is optimized to minimize the speaker prediction loss while maximizing the channel classification loss. The hyperparameter $\lambda$ balances the $L_{y}$ and $L_{d}$ losses during backpropagation, yielding representations that are channel-invariant and speaker-discriminative. The overall optimized loss is thus a combination of the $L_{y}$ and $L_{d}$ losses.
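The gradient reversal layer can be sketched without a deep learning framework: it is the identity in the forward pass and multiplies the incoming gradient by $-\lambda$ in the backward pass. The sketch assumes, purely for illustration, a linear feature extractor $\mathbf{f} = \mathbf{A}\mathbf{x}$, so the gradient that reaches $\mathbf{A}$ is $\partial E / \partial \mathbf{f} = \partial L_y / \partial \mathbf{f} - \lambda\, \partial L_d / \partial \mathbf{f}$. The channel classifier $G_d$ itself still receives the unreversed gradient of $L_d$, so it keeps learning to predict the channel.

```python
import numpy as np


class GradientReversal:
    """Identity forward; scales the gradient by -lam on the way back."""

    def __init__(self, lam):
        self.lam = lam

    def forward(self, features):
        return features

    def backward(self, grad_output):
        return -self.lam * grad_output


def feature_extractor_grad(x, grad_from_speaker, grad_from_channel, grl):
    """Gradient of E = L_y - lam * L_d for a linear extractor f = A @ x.

    grad_from_speaker: dL_y/df returned by the speaker classifier G_y.
    grad_from_channel: dL_d/df returned by the channel classifier G_d,
    which passes through the reversal layer before reaching G_f.
    """
    grad_f = grad_from_speaker + grl.backward(grad_from_channel)
    return np.outer(grad_f, x)  # dE/dA, since f = A @ x
```

A gradient descent step on $\mathbf{A}$ with this gradient lowers the speaker loss and raises the channel loss at the same time, which is the minimax behaviour described above.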

The $L_{y}$ loss is defined in Equation (10).

$$L_{y} = L_{s} + \gamma L_{t} \tag{10}$$

In Equation (10), the loss combines the Softmax and Triplet losses, weighted by $\gamma$.

The $L_{d}$ loss is defined in Equation (11).

In Equation (11), $\mathbf{x}_{i}$ denotes the representation of the $i$th sample, which belongs to speaker $y_{i}$; $\mathbf{W}_{j}$ denotes the $j$th column of the last fully connected layer of the classifier, and $b_{j}$ is the bias term. The minibatch size is $N$ and the number of speakers is $M$. The whole CAT framework is optimized with stochastic gradient descent, and the optimal parameters are obtained from the saddle-point problem in Equation (12).

In Equation (12), speaker representations with channel invariance, as well as speaker differentiation, are extracted directly from the network after training with the above parameters. This method can map two different channels within a common subspace, train processes with channel invariance, as well as obtaining speaker representations of speaker discriminability. Thus eliminating channel differences and improving the performance of speaker recognition. This framework alleviates channel mismatch, through channel antagonism training, overcomes channel divergence, and improves applicability in real scenarios. Since most of the massive voice data comes from telephone, Internet audio and video, and other APP applications, the schematic diagram of the speaker recognition system in the field of information security is shown in

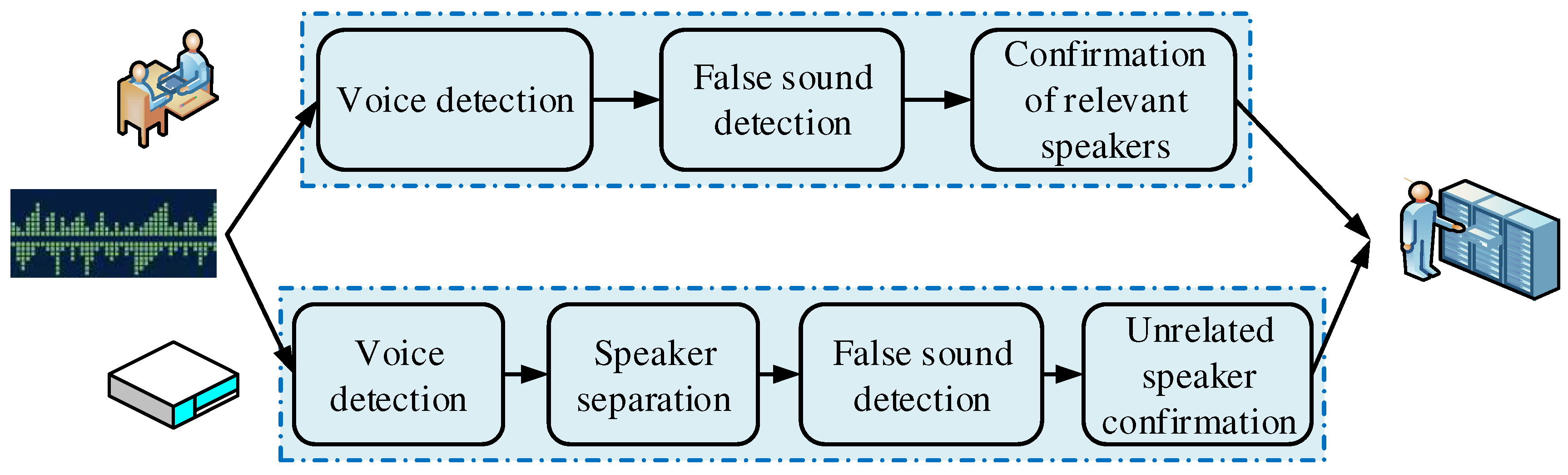

Figure 5.

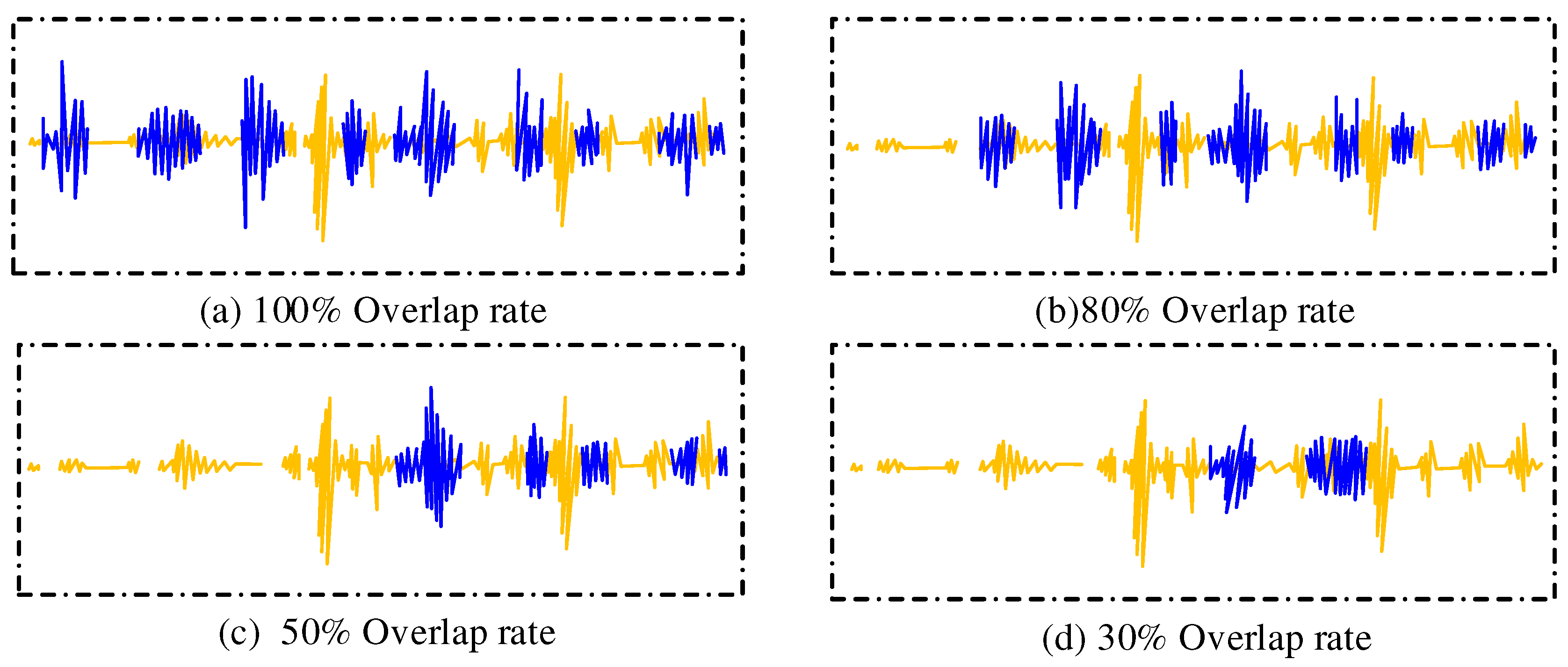

Figure 5 shows the speaker recognition system for information security. The system must be able to store speaker data and retrieve it quickly. It mainly targets speaker diarization (speaker separation), text-dependent speaker verification, and spoofed-speech detection. The diarization error rate is expressed as a percentage of the total effective speech duration and is defined in Equation (13).

$$\mathrm{DER} = E_{\mathrm{FA}} + E_{\mathrm{miss}} + E_{\mathrm{spk}} \tag{13}$$

In Equation (13), $E_{\mathrm{FA}}$ denotes the false alarm rate of voice activity detection, $E_{\mathrm{miss}}$ denotes the missed detection rate of voice activity detection, and $E_{\mathrm{spk}}$ denotes the speaker classification (confusion) error rate. In spoofed-speech detection, constant-Q cepstral coefficients (CQCC), which combine the constant-Q transform (CQT) with cepstral analysis, are commonly used as acoustic features. CQCC extraction first applies the CQT to the speech signal, as shown in Equation (14).
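Equation (13) can be illustrated with a simplified, frame-based sketch. It assumes at most one speaker per frame (overlapped speech is not handled), uses `None` for non-speech, and applies no forgiveness collar; standard scoring tools are more elaborate. Because hypothesis speaker labels are arbitrary, the confusion term uses the label mapping that minimizes errors, found here by brute force.

```python
import itertools


def frame_der(reference, hypothesis):
    """Frame-level DER in percent, following Equation (13).

    reference, hypothesis: equal-length sequences of speaker labels,
    with None marking non-speech frames. One speaker per frame assumed.
    """
    if len(reference) != len(hypothesis):
        raise ValueError("sequences must have equal length")
    total = sum(r is not None for r in reference)  # effective speech frames
    if total == 0:
        raise ValueError("reference contains no speech")
    pairs = list(zip(reference, hypothesis))
    false_alarm = sum(r is None and h is not None for r, h in pairs)
    missed = sum(r is not None and h is None for r, h in pairs)

    ref_spk = sorted({r for r in reference if r is not None})
    hyp_spk = sorted({h for h in hypothesis if h is not None})
    # Try every one-to-one mapping of hypothesis labels to reference labels
    # (None = unmapped) and keep the one with the fewest confused frames.
    options = ref_spk + [None] * len(hyp_spk)
    confusion = min(
        sum(r is not None and h is not None and mapping[h] != r for r, h in pairs)
        for mapping in (
            dict(zip(hyp_spk, perm))
            for perm in itertools.permutations(options, len(hyp_spk))
        )
    )
    return 100.0 * (false_alarm + missed + confusion) / total
```

Each of the three counts divided by `total` corresponds to one term of Equation (13), so their sum is the DER.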

The system extracts a speaker representation from each speech recording and stores and retrieves the resulting speaker information. The control layer provides task scheduling, data management, and plug-in management; the plug-in workflow is shown in Figure 6.

Plug-in management packages voice activity detection, spoofed-speech detection, speaker diarization, and text-dependent speaker recognition as separate plug-ins, so that modules meeting application-market demand can be assembled quickly [20]. In addition, the dynamic composability of the functional layers allows fast switching between application scenarios, enabling plug-in management for the application market. Plug-in management supports both fast configuration of an application scenario once it is selected and fast switching among multiple application scenarios within one system.
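The plug-in idea can be sketched as a small registry in which each capability is a named plug-in and an application scenario is an ordered list of plug-in names. The class `PluginManager`, its methods, and the convention that every plug-in takes and returns a result dictionary are hypothetical choices for illustration, not the system's actual interface.

```python
from typing import Callable, Dict, List, Optional


class PluginManager:
    """Hypothetical registry: plug-ins are named callables, and a scenario
    is an ordered list of plug-in names that can be switched at run time."""

    def __init__(self):
        self._plugins: Dict[str, Callable[[dict], dict]] = {}
        self._scenarios: Dict[str, List[str]] = {}
        self.active: Optional[str] = None

    def register(self, name: str, fn: Callable[[dict], dict]) -> None:
        self._plugins[name] = fn

    def define_scenario(self, scenario: str, plugin_names: List[str]) -> None:
        missing = [p for p in plugin_names if p not in self._plugins]
        if missing:
            raise KeyError(f"unregistered plug-ins: {missing}")
        self._scenarios[scenario] = list(plugin_names)

    def switch(self, scenario: str) -> None:
        # Fast switching: only the active pipeline name changes.
        if scenario not in self._scenarios:
            raise KeyError(scenario)
        self.active = scenario

    def run(self, audio: bytes) -> dict:
        """Pass the input through each plug-in of the active scenario in order."""
        if self.active is None:
            raise RuntimeError("no scenario selected")
        result = {"audio": audio}
        for name in self._scenarios[self.active]:
            result = self._plugins[name](result)
        return result
```

For example, an access-control scenario might chain voice activity detection, spoofed-speech detection, and verification, while a screening scenario uses only voice activity detection; switching between them does not require reloading any plug-in.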