1. Introduction

According to research on human anatomy by medical professionals, the skin is the largest and heaviest organ in the human body, covering roughly 20 square feet in average surface area and weighing about 6 pounds [1, 2]. The skin is the body's first line of defence, yet it is not entirely impenetrable. As a result, it is continually exposed to diverse environmental and genetic factors, and skin conditions affect everyone, regardless of age, skin tone, way of life, or socioeconomic status. The Skin Cancer Index 2018 [3] shows that geographic and geopolitical factors make skin cancer, which can occasionally be fatal, more likely in some places than in others. Computer-aided systems that automate the diagnosis of skin problems are therefore an active focus of research [4-8]. Methods have been developed for image acquisition, preprocessing, segmentation, feature extraction, and classification. Medical images can be taken with non-standard cameras, cell phones, and digital cameras [9, 10].

Diagnostic imaging, such as CT scans, MRIs, dermoscopy, radiography, and ultrasound, is often used in medical diagnoses. Medical images help physicians diagnose patients more effectively, accurately, and consistently by displaying the internal anatomy of the patient's body and helping to identify potential problems. Dermoscopy is most commonly used to identify skin lesions. Dermoscopic images benefit from bright lighting and a low noise level [11]. Therefore, applying deep learning techniques can speed up the development of image-assisted medical diagnostics [12, 13]. Deep learning has facilitated resolving complicated learning problems previously intractable to rule-based methods [14]. Deep learning-based algorithms have come close to matching human performance on various challenging computer vision and image classification tasks. In [15], it was demonstrated that deep learning systems matched the results of skin cancer classification performed by 21 certified dermatologists.

However, applying deep learning to medical image-assisted diagnosis still presents numerous challenges. A common issue in healthcare applications is a scarcity of adequately diverse, large training datasets. These datasets typically exhibit severe class imbalance [16], which can lead to biased models [17]. Class imbalance occurs when there are disproportionately many samples of one pathology compared to another. As such, networks frequently overfit [18] and fail to generalize to new data [19]. Although more data is available online, most of it is still unlabeled. Annotating medical data is time-consuming and expensive because it requires specialized diagnostic tools and expert clinicians, which makes annotation one of the key obstacles to building models suitable for clinical application.

This strong restriction results in two major issues: (1) restricted generalization and dataset bias and (2) precise classification. To address the first problem, the authors proposed an improved Deep Convolutional Generative Adversarial Network (DCGAN) classifier approach that creates convincing synthetic data for training skin lesion classification models with better generalization and higher classification performance [20]. Skin lesion images are processed using effective image filtering and enhancement methods to improve the model's ability to find and extract features during training. As a solution to the problem of scarce medical datasets, basic GANs and GAN-based networks that generate synthetic medical images have gained significant traction in recent years. GANs [21] attempt to mimic the distribution of actual images by making the synthetic samples look as much like real images as possible, thereby increasing the classifier's capacity to distinguish between skin lesion types. Generator-Discriminator networks are used to generate synthetic skin lesions using dermoscopic features. Several medical algorithms [22] rely heavily on these features, which are local patterns in the lesion. Synthetic images of skin lesions using multiple modalities could solve the problem and give deep learning models more and better data. To address the second issue, we used a stack of convolution layers in the discriminator network, which acts as a classifier for class prediction. Our proposed framework demonstrates that DCGAN augmentation improves performance, which is critical in high-stakes clinical decision-making, although it requires an additional trained network compared to typical augmentation procedures. This study investigated the prospect of improving Deep Neural Network diagnostic performance by incorporating DCGAN-generated data into the training data.

The main contributions of this study are as follows:

Construct and train an improved DCGAN classifier using customized synthetic augmentation techniques and fine-tune the parameters for skin lesion classification that can accurately diagnose skin lesions.

Investigate whether the synthetic images generated by a multi-layered convolutional generative network accurately reflect the distribution of the original image dataset. In contrast, a discriminator perceptron, which is also multi-layered, tries to distinguish between false and real image samples.

Evaluate the performance of the improved DCGAN Classifier compared with existing state-of-the-art classifiers for skin lesion classification.

The rest of the work is organized as follows: Section 2 describes the ISIC Standards for medical imaging; Section 3 discusses the literature study; Section 4 provides the methodology used for the model; the performed experiments and results are described and discussed in Section 5; and finally, the conclusion is presented in Section 6.

2. The Practice Standards for Medical Imaging

The adoption of artificial intelligence (AI) in medicine is increasing and has the potential to revolutionize clinical treatment and dermatology processes. However, to ensure the fairness, dependability, and safety of the final output, specific development and performance-evaluation criteria must be met when developing image-based algorithms for dermatology applications [23, 24]. In health informatics, ISO 12052:2017 addresses the exchange of digital images, and of information related to their creation and management, between medical imaging equipment and the systems that manage and transmit that data [25].

The International Skin Imaging Collaboration (ISIC) is a university-industry partnership that promotes the use of digital imaging systems to reduce melanoma mortality. Teledermatology, clinical decision support, and automated diagnosis use digital skin lesion images to teach specialists and the public about melanoma and to help diagnose it. ISIC promotes digital skin imaging standards and engages dermatologists and computer scientists to improve diagnosis. Because the lack of dermatologic imaging standards degrades skin lesion imaging, ISIC proposed standards for digital skin image quality, confidentiality, and interoperability (i.e., the capacity to transfer visual data between different clinical and technological systems). ISIC is also creating dermatological and computer science resources, including a vast and expanding open-source, public-access skin image archive. This repository provides images for research, study, and testing of diagnostic AI systems. ISIC engages stakeholders through meetings, papers, conferences, and AI Grand Challenges [26]. Classification and segmentation are the most common uses for ISIC datasets. To train their algorithms, researchers focus primarily on binary classification problems. Researchers began experimenting with multiclass classification after the release of ISIC 2018 and ISIC 2019, with the ISIC 2020 dataset as the primary resource. However, melanoma detection was the main goal of the ISIC 2020 challenge. As a result, more research on binary classification can be done. ISIC did not extend the segmentation challenge category beyond 2019, suggesting that segmentation tasks are less popular than lesion diagnosis. Segmentation masks were only available for a small subset of datasets (ISIC 2016-2018) compared to most classification tasks. Colour constancy research and generative adversarial network-based data enhancement are further applications [27] that use ISIC datasets. Variations in the metrics for image acquisition at the whole-body, regional, close-up, and dermoscopic levels can affect the quality and validity of skin images. Universal imaging standards in dermatology require the establishment of consensus norms. Clinical practice information exchange, documentation in electronic health records, harmonization of clinical trials, database building, and clinical decision assistance all benefit from standard procedures like Delphi for image standardization [28].

3. Literature Review

Variations in skin tone, illness location, and picture capture devices complicate the development of automated skin disease detection systems. Furthermore, skin issues have distinctive characteristics, making classification difficult. However, CNN has achieved remarkable success in the medical industry as in other fields, providing optimism for the future of automated medical system development [29, 30].

The generative adversarial network (GAN) is another technique that has intrigued researchers because of its ability to represent complex real-world visual input. It can also normalize unbalanced data sets [31]. However, few applications have used GAN beyond simple binary classification and data augmentation [32].

Researchers commonly use data augmentation strategies to boost the models' robustness and generalizability [29-32]. Standard augmentation techniques for enhancing image data include resizing, rotating, flipping, and shifting the original image. These traditional data augmentation techniques have become commonplace in computer vision network training [33, 34]. Unfortunately, because they make only minor adjustments to the source data, these techniques contribute little genuinely new information.
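As a minimal illustration of the standard augmentations named above, the following sketch applies flipping, rotating, and shifting to a tiny image stored as a nested list. The function names and the toy 2 × 2 image are hypothetical and chosen only for illustration; real pipelines would typically rely on an image library.

```python
def flip_horizontal(img):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in img]


def flip_vertical(img):
    """Reverse the row order (top-bottom flip)."""
    return [row[:] for row in img[::-1]]


def rotate_90_clockwise(img):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def shift_right(img, pixels, fill=0):
    """Shift every row to the right, padding the left edge with `fill`."""
    return [[fill] * pixels + row[:len(row) - pixels] for row in img]


# Hypothetical 2 x 2 grayscale image used only to show the effect.
image = [[1, 2],
         [3, 4]]

augmented = [
    flip_horizontal(image),
    flip_vertical(image),
    rotate_90_clockwise(image),
    shift_right(image, 1),
]
```

Each variant reuses the original pixel values, which is why such transformations add little new information compared with synthesizing new samples.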

Data synthesis, a more advanced data augmentation method [35], shows promise and is the subject of much interest. Among the many approaches for creating synthetic data, variational autoencoders (VAEs) [36] and generative adversarial networks (GANs) [37] stand out as the most common. The latent representation of VAEs is highly structured and continuous, making them simple to train. Although their training procedure is challenging and unreliable, GANs can produce high-quality, realistic images. Despite the success of GANs in medical imaging [38, 39], there has been little systematic investigation into the synthesis of images of skin lesions and class imbalance [40]. By investigating the issue of class imbalance across various data regimes and contrasting the effectiveness of conventional and GAN data augmentation methods, we seek to close this gap.

Academics have developed several variants of GANs to enhance their capacity to generate images. The authors of [41, 42] integrated a convolution operation into a Deep Convolutional Generative Adversarial Network (DCGAN) to improve GAN performance. Moreover, to enhance the diversity of generated images, DeLiGAN samples the noise vector that is fed into the Generator [43].

In [44], the authors combined meta-learning and CGAN to propose MetaCGAN, a new variation of GAN. MetaCGAN can transfer the knowledge gained from training on a large dataset to a new problem with a small dataset. In recent years, some researchers have created high-resolution images using convolutional and recurrent neural networks (CNNs, RNNs). However, these algorithms produce images one pixel at a time rather than in their entirety [45, 46]. The authors of [47] presented a categorical generative adversarial network based on catGAN [48] and the Wasserstein distance [49]. CatWGAN can identify input data and generate 64 × 64 pixel images. Using a dataset of 140 labelled images from the ISIC 2016 Challenge, the proposed method outperformed the denoising autoencoder and the simple hand-crafted features, with an average precision of 0.424 [50]. Using the Pseudo Cycle consistent module and the domain control module, a variant of Cycle GAN was introduced in [50] to generate CT images. The domain control module supplies supplementary domain information, while the Pseudo Cycle consistent module ensures that all created images look identical. Baur et al. [51] proposed a deeply discriminated GAN (DDGAN) to improve image resolution. The authors created realistic 256 × 256 skin lesion images and compared DCGAN, LAPGAN and DDGAN [52, 53], showing that these models can learn dataset distributions and synthesize realistic samples.

The authors of [54] also synthesized realistic, high-resolution skin lesion images. Progressive growing of GANs (PGGAN) [55] helped them synthesize images from noise at 1024 × 1024 pixels. The Visual Turing Test and Sliced Wasserstein Distance were used to evaluate DCGAN [56], LAPGAN [56], and PGGAN images. The Visual Turing Test reveals that even trained dermatologists have difficulty telling fake images from real ones. Jiang et al. [57] proposed using a Fused Attentive GAN (FA-GAN) to create and reconstruct high-resolution MR images. The authors of [57] built modules for local and global feature extraction to extract valuable traits. FA-GAN was trained on 40 sets (256 slices) of 3D MR images, with PSNR and SSIM as performance metrics. The authors of [58, 59] used pix2pixHD GAN for image generation and generated images using semantic and instance mappings. The pix2pixHD GAN is an innovative approach to using meaningful skin lesion knowledge to synthesise high-quality images and improve skin lesion classification performance; however, it requires annotated data to create images. GAN-synthesized breast ultrasound images have also been exploited to augment breast lesion classification [60].

The study in [61] presented the Cascade Ensemble Super-Resolution GAN (CESR-GAN) to rebuild high-resolution skin lesion images from low-resolution photos, together with an image-based loss function [61]. In [62], the author enhanced the image data of skin lesions using GANs. The GAN discriminator served as the final classifier and was trained to recognise seven categories of skin lesions from the ISIC 2018 challenge dataset [63, 64]. Transfer learning was utilized to improve the classification performance of the DenseNet [65] and ResNet [66] architectures for comparison with the GAN-based data augmentation model. The suggested method raised the balanced accuracy score.

The simulation of medical imaging is one of the most challenging and popular research areas across many medical specialities. Researchers use computationally intensive architectures to enhance their findings. GANs are frequently employed in a variety of medical imaging applications, and medical image synthesis, segmentation, detection, classification, and reconstruction using GANs are becoming increasingly common [67]. The management of GAN input noise in medical imaging still has room for innovation. However, image synthesis-based data augmentation has been applied to skin lesion imaging only over the last two years, and previous literature searches reveal few related studies on skin lesion classification.

The research gap identified by analyzing the available literature is summarized in Table 1. Skin lesion image generation requires further use of specific, more advanced GAN designs that can produce high-resolution representations. We have investigated DCGAN's potential on our dataset to contribute to developing a reliable skin cancer detection and classification application.

4. Methodology

This study proposes a skin lesion DCGAN Classifier model based on a GANs framework, which can produce high-quality images of skin lesions. The authors have investigated the potential of DCGAN on the ISIC 2017 dataset to create a reliable tool for detecting and classifying skin cancer.

4.1. Skin Cancer Dataset

The first stage in skin lesion classification is acquiring a high-quality dataset to train our proposed model. The ISIC datasets are widely utilized for automated skin lesion analysis due to the need for more high-quality, annotated images of skin lesions. Our DCGAN model was trained using 2000 images from the ISIC 2017 skin cancer dataset, uniformly distributed between benign and malignant cases. When using deep learning to classify skin lesions, gathering an extensive dataset representing a wide range of lesions is essential. Images are typically labelled with the type of skin lesion depicted, providing the algorithm with ground truth. The dataset contains images of varying resolutions. It is a complex task to categorize accurately due to the resolution variability and class imbalance.

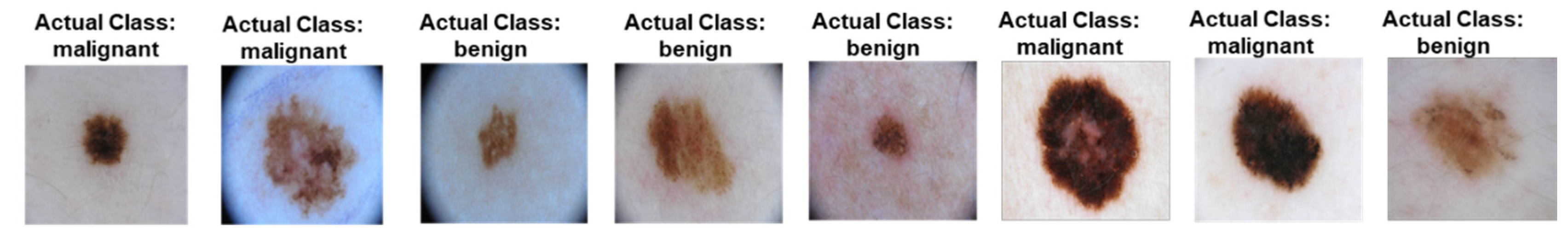

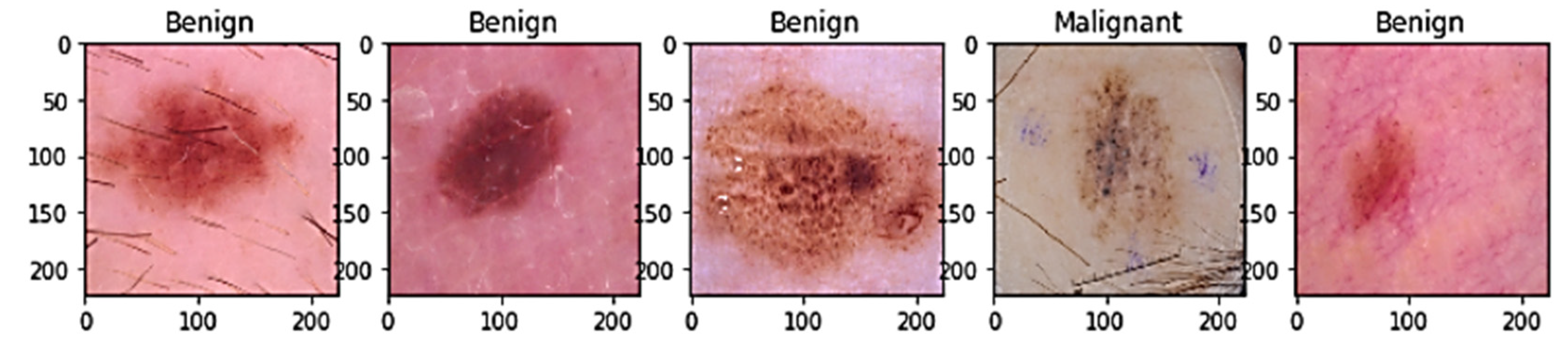

Figure 1 shows the sample representation of both benign and melanoma skin lesions. During the random selection of test images, the authors tried to maintain a consistent class distribution of the data set and ensure that each class's percentage of test images was comparable to that of the train data set. For this purpose, the classes in the data were first labelled and annotated as follows:

Image name: a unique identifier that refers to the filename of the corresponding image.

Patient id: a unique identifier assigned to each patient.

Sex: the gender of the patient or a blank field if unknown.

Approximate age: the patient's approximate age at the time the imaging was conducted.

Anatomical site: the location of the imaged site on the patient's body.

Diagnosis: detailed diagnostic information (only included in the training data).

Benign/malignant: indicates whether the imaged lesion is benign or malignant.

Target: a binarized form of the target variable.
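The class-preserving selection of test images described before the field list can be sketched as a stratified split. This is only an illustrative sketch under stated assumptions: the function name `stratified_split`, the fixed seed, and the toy label list are hypothetical, and the 30% test fraction is taken from the split ratio reported in the methodology.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.3, seed=0):
    """Return (train_idx, test_idx) with similar class ratios in both sets."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # random selection within each class
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Hypothetical toy labels using the binarized "Target" field:
# 0 = benign, 1 = malignant.
labels = [0] * 80 + [1] * 20
train_idx, test_idx = stratified_split(labels)
```

Splitting within each class keeps the share of malignant cases in the test set equal to that in the training set, which is the property the text asks for.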

4.2. Proposed Framework of the DCGAN-Based Classifier

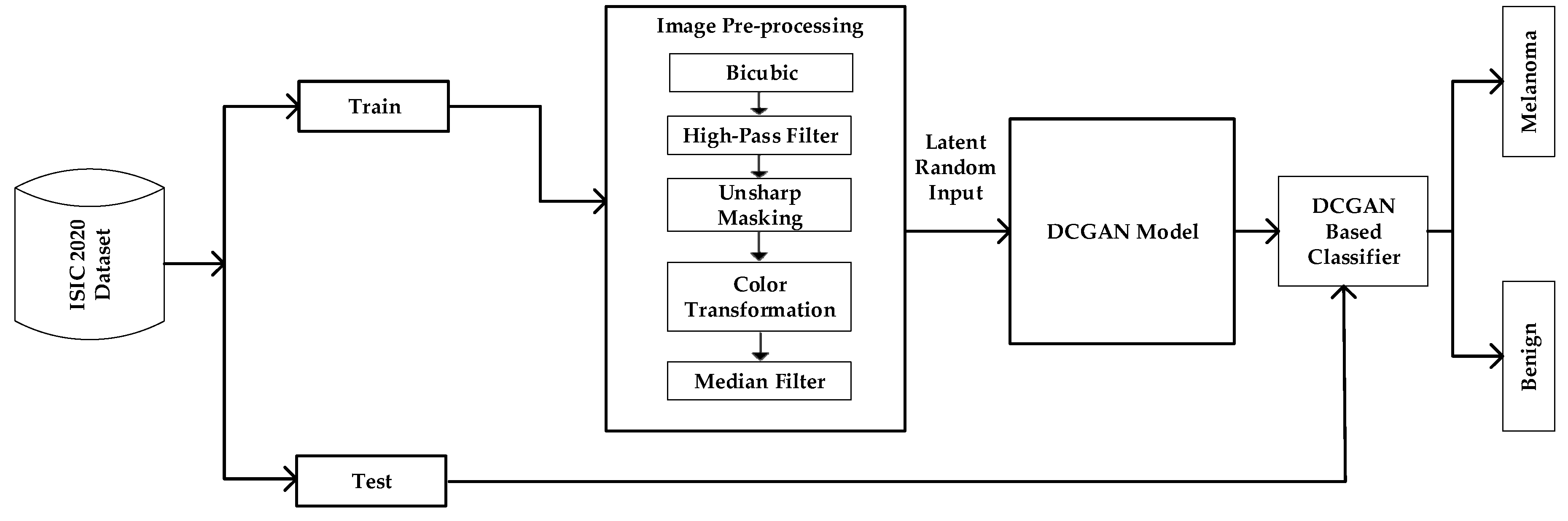

The proposed framework consists of three distinct phases, namely (1) Image Preprocessing, (2) DCGAN Modeling, and (3) Classification, as depicted in Figure 2. Each of these phases is addressed in greater detail in the following subsections. The image preprocessing methods were applied before the images were fed into the GAN model for classification.

4.3. Image Preprocessing Techniques

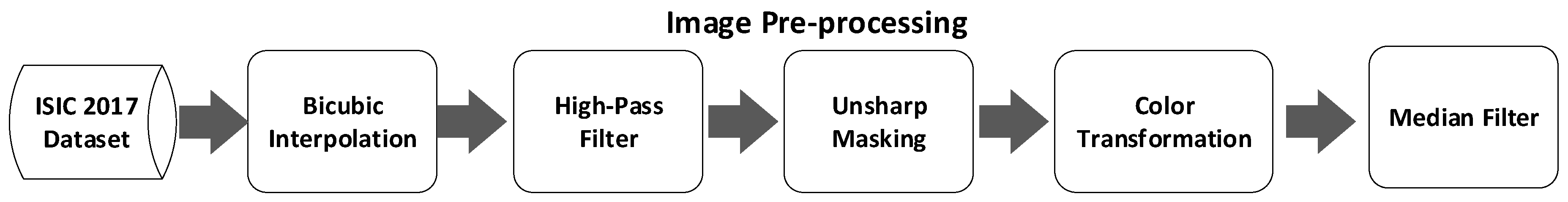

In image preprocessing, the images are transformed using various techniques to reduce noise and other potential artefacts and to put them in a format that can be used for more sophisticated processing. Before preprocessing, the dataset is divided into train (70%) and test (30%) sets. The first step is image scaling, where images are resized to a specified pixel width using the bicubic interpolation method [68-74]. Because the images in the dataset varied in size, they were scaled to a standard size to make processing faster and easier. After rescaling, histogram equalization is used to improve the image's intensity values and to modify contrast, increasing the brightness of dark images [75-79]. Next, to capture the edges and fine details of the image, the authors used a combination of two image sharpening techniques, Unsharp Masking (USM) [80, 82-83] and Gaussian High Pass Filtering (GHPF) [81, 84-85], and then applied color space transformations [81, 86-90]. Since color information is essential for skin disease detection systems, we attempted to extract the most relevant color for faster processing. Because the images used for model training came from different online dermatology sites and could differ in size and colour space, image scaling and colour space transformation were used to bring all images to the same size and colour space; all images are converted to RGB space. After analyzing the potential image restoration procedures, a median filter was applied to suppress Gaussian noise and smooth the images [91-94]. Additionally, it reduces the impact of insignificant factors like fine hairs in pictures of skin lesions.

Figure 3 shows the image preprocessing pipeline used in this study. The preprocessed images are fed to the GAN model.
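Two of the preprocessing steps, histogram equalization and median filtering, can be illustrated with a small pure-Python sketch. It is not the authors' implementation: the function names, the 3 × 3 window, the border handling, and the toy grayscale images are assumptions made for illustration, and bicubic scaling, sharpening, and colour space conversion are omitted.

```python
from statistics import median


def equalize_histogram(img, levels=256):
    """Histogram equalization for a 2D image with integer values in [0, levels)."""
    flat = [p for row in img for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1

    # Cumulative distribution of the intensity values.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in img]
    scale = (levels - 1) / (total - cdf_min)
    return [[round((cdf[p] - cdf_min) * scale) for p in row] for row in img]


def median_filter_3x3(img):
    """3 x 3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [img[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            row.append(median(window))
        out.append(row)
    return out


# Hypothetical low-contrast patch and a patch with one bright outlier pixel.
low_contrast = [[100, 110], [120, 130]]
speckled = [[50, 50, 50], [50, 255, 50], [50, 50, 50]]

stretched = equalize_histogram(low_contrast)
smoothed = median_filter_3x3(speckled)
```

Equalization spreads the narrow intensity range over the full scale, while the median filter removes the isolated outlier, which is the same mechanism that suppresses thin structures such as fine hairs.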

4.4. DCGAN Architecture

Deep learning is gaining recognition in image classification because it can extract and select more features than traditional techniques [95, 96]. However, large, labelled datasets are required to maximize deep learning's potential. Researchers have reported that dermoscopy images are often unlabeled or under-labeled, necessitating expert annotations. GANs are a recent and intriguing method for coping with unlabeled datasets [97-99]. The ability of GANs to generate realistic synthetic data has recently increased their prominence. GANs are also seen as a promising approach to the problem of accurately classifying data with low levels of inter-class variation. They are a popular type of generative model for learning or sampling complicated probability distributions of image data.

Many researchers have implemented DCGAN in various real-world applications and have obtained good results in data synthesis and classification [100]. GANs are prone to instability and can be difficult to train. Finding the ideal balance is challenging because the training involves a minimax game between the generator and discriminator networks. GANs also commonly suffer from mode collapse, which occurs when the generator fails to reflect the diversity of the target distribution. To address these issues, the authors in [101] proposed Deep Convolutional Generative Adversarial Networks (DCGAN), which impose a set of architectural constraints to stabilize GANs [117].

DCGAN is an extension of the GAN design that uses deep convolutional neural networks for both the generator and discriminator models, as well as configurations for the models and training that lead to stable training of a generator model. The DCGAN offers model constraints needed to construct high-quality generator models. This architecture enabled the rapid development of several GAN extensions and applications [99].

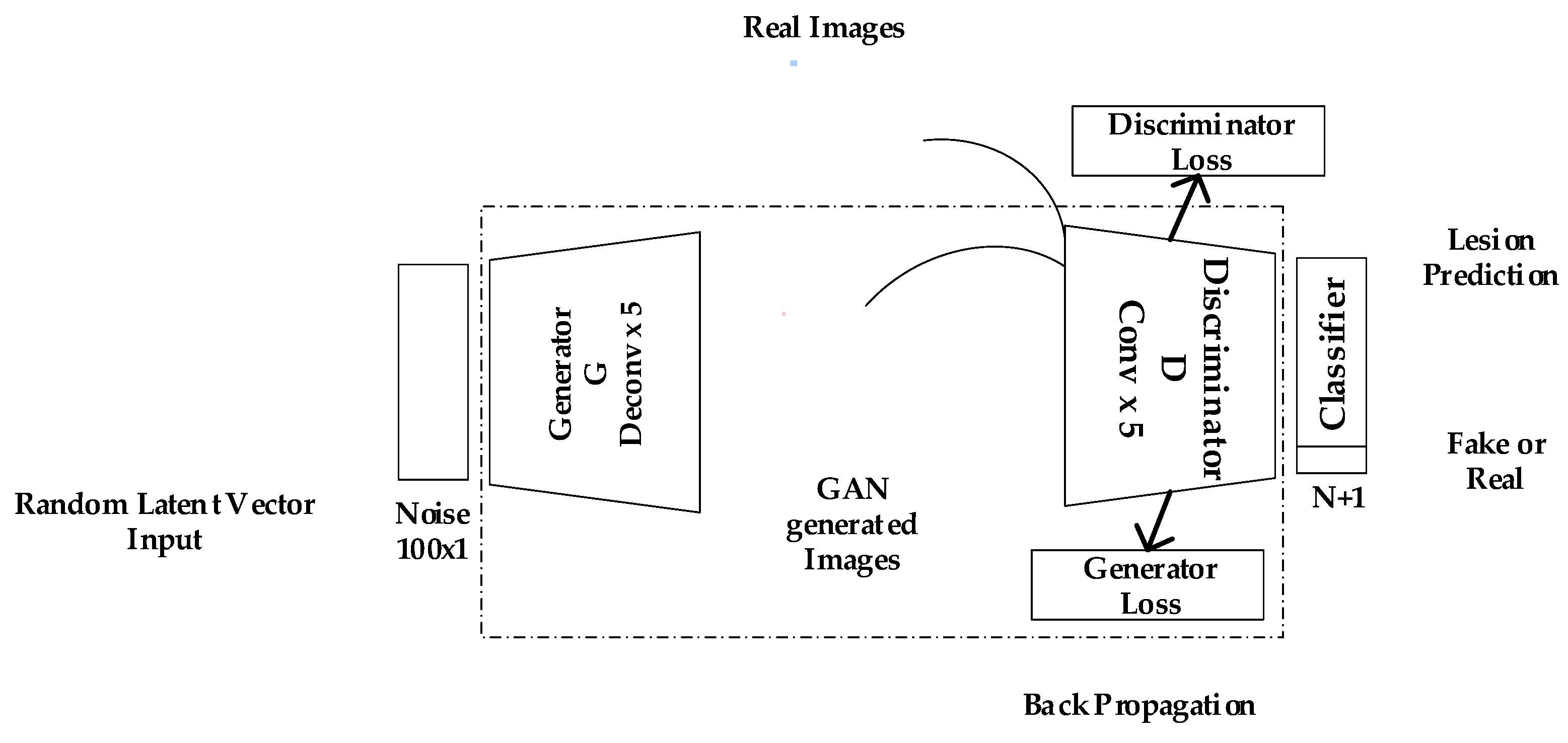

The architecture of GANs consists of two multi-layered networks, the Generator and the Discriminator, which are trained simultaneously [97]. The Generator network is tasked with creating synthetic images that closely resemble real images in the training dataset. On the other hand, the Discriminator network seeks to discriminate between real and fake images by evaluating the likelihood that a particular input sample is real or fake [98]. The two networks are trained so that the generator is encouraged to create images that the discriminator finds increasingly difficult to distinguish from real ones. Since GANs can be used to model the underlying data distribution, they form the basis of the proposed methodology. As a result, they become better at distinguishing data sets that differ only slightly. The GAN estimates the data distribution and produces synthetic data for each category, which is then used to train the discriminator. After training, the discriminator can accurately classify new samples in each category, even when similar examples exist in other classes [99].

Mathematically, to learn the generator's distribution $p_g$ over the image data $x$, define the input noise vector $z$ with a prior distribution $p_z(z)$, then represent the mapping to data space as $G(z; \theta_g)$, where the Generator $G$ is a neural network with parameters $\theta_g$. The discriminator $D(x; \theta_d)$ outputs a single scalar, where $D(x)$ is the probability that $x$ comes from the data rather than $p_g$. Discriminator $D$ is trained to maximize the likelihood of correctly labelling training examples and $G$ samples. At the same time, Generator $G$ is trained to mislead the discriminator by attempting to minimize $\log(1 - D(G(z)))$. So, $D$ and $G$ play the following two-player minimax game with the value function $V(G, D)$ [20] [97-99]:

$$\min_G \max_D V(G, D) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$

Theoretically, the solution to this minimax game is where $p_g = p_{data}$, at which point the discriminator can only make a random guess as to whether its inputs are real or fake, i.e. $D(x) = 1/2$.
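As a brief supporting derivation, the claim that the discriminator is reduced to random guessing at the solution can be made explicit. The sketch below assumes the standard GAN notation, which is not spelled out in the text here: $p_{data}$ for the data distribution, $p_g$ for the generator's distribution, and $D^{*}$ for the optimal discriminator of a fixed generator.

```latex
% For a fixed generator G, the value function is maximized pointwise by
D^{*}(x) = \frac{p_{data}(x)}{p_{data}(x) + p_g(x)}

% When the generator matches the data distribution, p_g = p_{data}, so
D^{*}(x) = \frac{1}{2}

% and the value function at this point equals
V(G, D^{*}) = \log\tfrac{1}{2} + \log\tfrac{1}{2} = -\log 4
```

An output of one half for every input means the discriminator assigns equal probability to "real" and "fake", which is the random-guess behaviour described above.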

The primary goal of DCGAN is to extend the capabilities of GAN by making use of convolutional network designs. Radford [101] achieved consistent outcomes by advocating a few critical architectural constraints for DCGAN. The authors of this study employed the basic ideas proposed by Radford [101] to classify skin lesions.

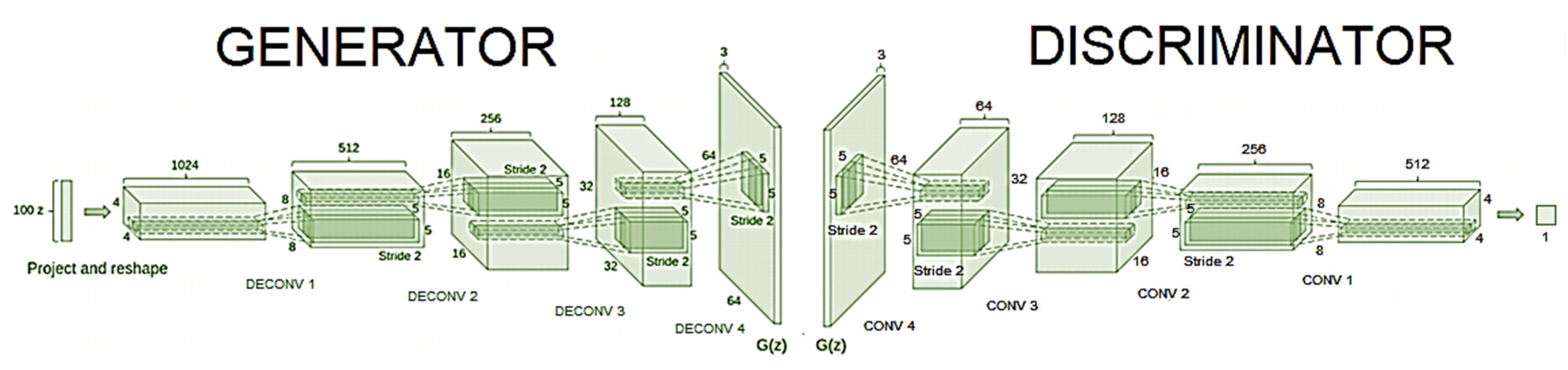

Figure 4 and Figure 5 illustrate the flow diagram and improved simulation model used for the DCGAN framework. The following modifications are made to the Generator and Discriminator networks to avoid mode collapse, model instability and convergence problems [101]:

Modifications to the Generator:

The generator uses five deconvolutional layers instead of four.

Replace deterministic spatial pooling layers, such as global average pooling, with 2 × 2 fractional-strided convolutions, which allow the generator to learn its own spatial upsampling.

Eliminate fully connected hidden layers to avoid model instability and stabilize the convergence speed.

Update the generator weights using backpropagation and an optimizer SGDM with a constant learning rate of 0.01 instead of 0.0002.

Batch normalization is used to stabilize the learning of the generator.

All generator layers use the ReLU activation, except the output layer, which employs the Tanh activation to scale the output between -1 and 1.

Modifications to Discriminator:

The discriminator uses five convolutional layers to train the networks instead of four.

Replace deterministic spatial pooling layers, such as max pooling, with 2 × 2 strided convolutions, which allow the discriminator to learn its own spatial downsampling.

Eliminate fully connected hidden layers to avoid model instability and stabilize the convergence speed.

Update the weights of the discriminator using backpropagation and an optimization step.

Batch normalization is used to stabilize the learning of the discriminator.

The LeakyReLU activation function is used for all layers in the discriminator except the output layer to allow gradients to flow backwards through the layer.

The final layer functions as a classifier and uses the SoftMax activation function for classification.
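The effect of replacing pooling with strided and fractional-strided convolutions can be checked with simple output-size arithmetic. The sketch below uses the usual size formulas for convolution and transposed convolution; the kernel size of 4 and padding of 1 are assumptions (only the stride of 2 is stated in the text), and the function names are hypothetical.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a strided convolution (downsampling)."""
    return (size + 2 * padding - kernel) // stride + 1


def transposed_conv_out(size, kernel, stride, padding):
    """Spatial output size of a fractional-strided convolution (upsampling)."""
    return (size - 1) * stride - 2 * padding + kernel


# Assumed hyperparameters: kernel 4, stride 2, padding 1.
KERNEL, STRIDE, PADDING = 4, 2, 1

# Generator side: each transposed convolution doubles the width and height.
size, generator_sizes = 4, [4]
for _ in range(4):
    size = transposed_conv_out(size, KERNEL, STRIDE, PADDING)
    generator_sizes.append(size)

# Discriminator side: each strided convolution halves the width and height.
size, discriminator_sizes = 64, [64]
for _ in range(4):
    size = conv_out(size, KERNEL, STRIDE, PADDING)
    discriminator_sizes.append(size)
```

Under these assumptions the generator grows a 4 × 4 map to 64 × 64, and the discriminator reduces a 64 × 64 image back to 4 × 4, without any fixed pooling layer.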

4.4.1. Model Training and Classification

The authors trained the proposed Improved DCGAN model on the ISIC 2017 dataset to classify cutaneous skin lesions. First, the dataset is split into train (70%) and test (30%) sets. The training set is preprocessed and augmented and then passed into the proposed DCGAN model for training. A preprocessed random latent vector is fed to the generator to generate synthetic data. The deconvolutional network of our generator is built by calling the transposedConv2dLayer function to perform weight multiplication and the Bias function to execute bias addition. Likewise, the convolutional network in the discriminator calls the convolution2dLayer function to perform weight multiplication and bias addition. A transposed convolutional layer is typically used for upsampling, that is, to generate an output feature map with a larger spatial dimension than the input feature map, thereby generating synthetic images. As shown in Figure 5, the authors built the generator to meet DCGAN framework requirements and set OUTPUT_SIZE to 64. The deconvolution stride is 2, so each layer doubles the width and height of its input (four times the area); the layer outputs are therefore 4 × 4, 8 × 8, 16 × 16, and 32 × 32, with 512, 256, 128 and 64 feature maps, respectively. The images were rescaled to the range [-1, 1] to match the tanh activation function. The model was trained with a mini-batch size of 64 using the Stochastic Gradient Descent with Momentum (SGDM) optimizer, with the learning rate set to 0.01 and the momentum set to 0.5 to stabilize the training. All weights were initialized from a zero-centred normal distribution with a standard deviation of 0.02.
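The optimizer settings above can be illustrated with a minimal sketch of one SGDM update and the weight initialization. This is not the authors' training code: the function names and the toy quadratic objective are hypothetical, the update uses one common formulation of momentum, and the standard deviation is read as 0.02 (an assumption about the intended value).

```python
import random


def sgdm_step(weights, grads, velocity, lr=0.01, momentum=0.5):
    """One SGD-with-momentum update; returns the new (weights, velocity)."""
    new_velocity = [momentum * v - lr * g for v, g in zip(velocity, grads)]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


def init_weights(n, std=0.02, seed=0):
    """Draw n weights from a zero-centred normal distribution."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, std) for _ in range(n)]


# Toy objective f(w) = 0.5 * w^2, whose gradient is simply w.
weights, velocity = [1.0], [0.0]
for _ in range(3):
    grads = weights[:]
    weights, velocity = sgdm_step(weights, grads, velocity)

initial = init_weights(5)
```

The velocity term carries part of the previous update forward, which smooths successive steps; a momentum of 0.5 damps this effect relative to the more common 0.9.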

The discriminator is a feed-forward neural network with four convolutional layers. The LeakyReLU slope is set to 0.2. In addition to discriminating between real and fake images, the discriminator also functions as a classifier. As a result, the discriminator output layer contains N + 1 (= 3) units, where N is the number of target classes. The final unit predicts the probability of an image being real or fake. The remaining N units are trained using a standard cross-entropy loss function, which is represented as [101]:

Whereas D(x) is the correct class label corresponding to the input x and is the probability of predicted class by discriminator D. Adding an extra label for the fake class allows us to train the discriminator network across two loss functions simultaneously: one for recognizing fake and genuine images and the other for classification inside actual images. The discriminator network backpropagates discriminator loss to update its weights.
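To make the N + 1 output layout concrete, the following sketch computes a softmax over three logits and the cross-entropy for a labelled real sample. It is a generic illustration, not the loss used in the paper: the unit order (benign, malignant, fake), the example logits, and the function names are all assumptions.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target_index):
    """Cross-entropy for a single sample with a one-hot target."""
    return -math.log(probs[target_index])


# Assumed unit order: index 0 = benign, 1 = malignant, 2 = extra "fake" unit.
BENIGN, MALIGNANT, FAKE = 0, 1, 2

logits = [2.0, 0.5, -1.0]      # hypothetical discriminator outputs
probs = softmax(logits)
p_real = 1.0 - probs[FAKE]     # probability that the image is real
class_loss = cross_entropy(probs, BENIGN)
```

With this layout a single forward pass yields both the real-versus-fake probability and the class probabilities, which is what allows the two loss terms to be trained together.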

The training step involves changing the network's weights based on the training data while minimizing the loss function, which measures the difference between the predicted and actual outputs. During the discriminator training phase, the generator is kept constant; likewise, the discriminator is kept constant during the generator training phase. As discriminator training seeks to determine how to differentiate between real and fake data, it learns how to identify the generator's defects. The discriminator is trained so that its output is close to 1 for real inputs and close to 0 for the generator's outputs. The generator, in turn, is trained so that the discriminator predicts 1 for each image it produces. During training, the validation data are used to assess the network's performance and to help prevent overfitting. GANs attempt to reproduce probability distributions. Therefore, we employed loss functions that account for the discrepancy between the GAN's output distribution and the real distribution. The total loss for the discriminator is the sum of the losses for real and fake images [101]:

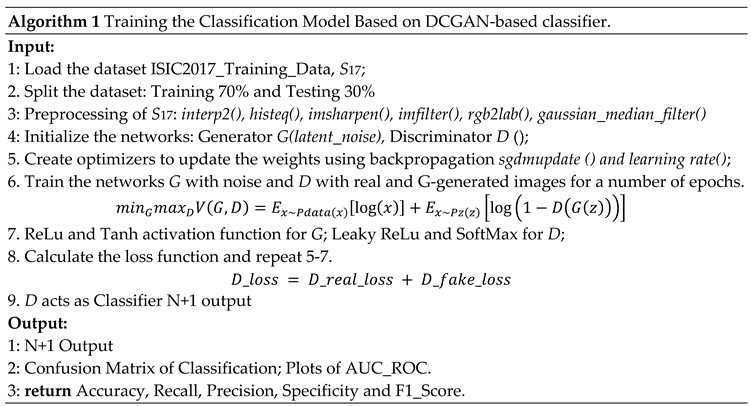
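The target values described in the text (1 for real images, 0 for generated images when training the discriminator, and 1 for generated images when training the generator) can be sketched with binary cross-entropy. This is a generic formulation under those assumptions, not necessarily the exact loss used by the authors; the function names and the clipping constant are hypothetical.

```python
import math


def bce(prediction, target, eps=1e-12):
    """Binary cross-entropy for one prediction in (0, 1)."""
    p = min(max(prediction, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """Sum of the loss on real images (target 1) and fake images (target 0)."""
    real_loss = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_loss = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_loss + fake_loss


def generator_loss(d_fake):
    """The generator wants the discriminator to output 1 on its images."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs for a small batch.
d_on_real = [0.9, 0.8]
d_on_fake = [0.2, 0.1]

d_loss = discriminator_loss(d_on_real, d_on_fake)
g_loss = generator_loss(d_on_fake)
```

In this toy batch the discriminator is doing well, so its loss is small while the generator's loss is large; the two move in opposite directions as training alternates between the networks.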

G_loss is the cross entropy resulting from the difference between the generator's generated data and the discriminator's input data. In our study, the discriminator also serves as a classifier to distinguish between benign and malignant lesions. The mathematical description of a classifier with a training algorithm is shown below:

5. Experiments and Results

In this section, experimental tests, including quantitative evaluation of the GANs and classifier evaluation, were carried out to assess the performance of the proposed skin lesion DCGANs in image synthesis and their application to image classification. The experiments used preprocessing steps such as image scaling with bicubic interpolation, normalization of image pixel values to the range [0, 1], sharpening techniques, color transformation and median filtering. The study was performed on an Intel Core i7 processor running at 4 GHz with an NVIDIA K80 GPU providing 12 GB of GPU RAM and 4.1 TFLOPS of performance.

5.1. Evaluation Metrics

The authors employed qualitative and quantitative evaluation measures to assess the effectiveness of the DCGAN model. The qualitative approach focused on the quality of the images, while the quantitative approach evaluated overfitting, image diversity, and mode-dropping problems. In this study, evaluation is done in two phases: (1) the Image Preprocessing phase and (2) the Classification phase. In the Image Preprocessing phase, Mean Square Error (MSE) [100], Structural Similarity Index (SSIM) [104] and Peak Signal-to-Noise Ratio (PSNR) [100] were used. To assess the classification performance of the model, Balanced Accuracy Score (BAS) [99], accuracy, recall, precision, specificity, and F1-Score [103] were used as evaluation metrics, which are derived from True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN) predictions. The confusion matrix and AUC-ROC plot are used for the graphical presentation of the accuracy of the proposed improved model [100].

In a binary classification problem, each prediction has one of two outcomes, positive or negative. The key metrics in medical diagnosis are recall and specificity, which are used to compute BAS. Recall (sensitivity) is the proportion of actual positives that are correctly identified, whereas specificity is the proportion of actual negatives that are correctly identified. The Balanced Accuracy Score (BAS) is a metric used to assess a classifier's performance on imbalanced datasets. It is determined by averaging the Classifier's sensitivity and specificity. The ROC curve illustrates the trade-off between sensitivity (the true positive rate) and specificity (one minus the false positive rate) as a function of the threshold value. The false positive rate is the proportion of real negatives incorrectly identified as positives. In contrast, the true positive rate measures how successfully positive samples are recognized. The ROC plot compares different thresholds in terms of the true positive rate (y-axis) and the false positive rate (x-axis). The curve of a random classifier is the diagonal from the bottom-left to the top-right corner, along which the true positive rate equals the false positive rate. A perfect classifier, however, would have a ROC curve that passes through the upper left corner of the plot, with a sensitivity and specificity of 100%. The area under the curve (AUC) measures the Classifier's overall efficacy. An AUC of 1 indicates an ideal classifier, while an AUC of 0.5 indicates a classifier that is no better than random guessing. The Classifier's performance is generally better the closer the AUC is to 1 [100].
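The classification metrics named above follow directly from the four confusion-matrix counts. The sketch below uses the standard definitions; the function name and the example counts are illustrative only and are not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard binary metrics; assumes every denominator is non-zero."""
    recall = tp / (tp + fn)           # sensitivity, true positive rate
    specificity = tn / (tn + fp)      # true negative rate
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    bas = (recall + specificity) / 2  # balanced accuracy score
    return {
        "recall": recall,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
        "bas": bas,
    }

# Hypothetical counts for a test set of 100 malignant and 100 benign images.
metrics = classification_metrics(tp=90, tn=95, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because BAS averages recall and specificity, it is not inflated when one class greatly outnumbers the other, which is why it is preferred over plain accuracy on imbalanced data.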

5.2. Results of Image Preprocessing Techniques

This section presents the experimental results of the image processing phase. MSE, SSIM and PSNR evaluation methods are used to measure the quality of the image.
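As a point of reference, MSE and PSNR can be computed as in the following NumPy sketch. It assumes 8-bit images (peak value 255); the function names are illustrative, and SSIM is omitted because it needs a windowed computation that is usually taken from an image-processing library.

```python
import numpy as np

def mse(reference, test):
    """Mean squared error between two images of the same shape."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    return float(np.mean(diff ** 2))

def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, test)
    if err == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / err))

# Toy example: a black image versus one offset by 10 grey levels.
reference = np.zeros((8, 8), dtype=np.uint8)
shifted = np.full((8, 8), 10, dtype=np.uint8)
print("MSE :", mse(reference, shifted))
print("PSNR:", psnr(reference, shifted))
```

A lower MSE and a higher PSNR both indicate that the processed image is closer to the reference.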

5.2.1. Image Scaling

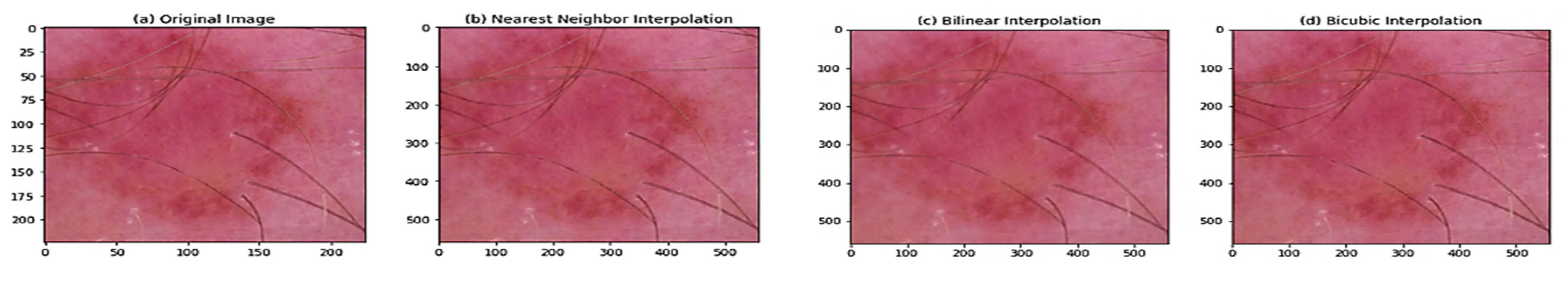

In this study, we used bicubic interpolation to estimate each pixel's intensity by interpolating from the values of neighbouring pixels. We compared the image produced by bicubic interpolation with those of two other image scaling techniques, namely nearest-neighbor and bilinear interpolation, as shown in Figure 6. Because bicubic interpolation determines the value of each pixel as a weighted average of the 16 nearest neighbouring pixels (a 4 × 4 neighbourhood), its output is smoother and preserves fine features of the input image more accurately. As shown in Figure 6 and Table 2, bicubic interpolation yields sharper images than the other two techniques and offers the best balance between processing speed and output quality among the three.
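The 16-neighbour weighted average can be sketched as follows. This is an illustrative NumPy implementation for a grayscale image, assuming the Keys cubic convolution kernel with a = -0.5 and edge replication at the borders; library implementations may use a different kernel parameter, and the paper does not state which was used.

```python
import numpy as np

def cubic_kernel(x, a=-0.5):
    """Keys cubic convolution kernel (a = -0.5 is an assumed, commonly used value)."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0

def bicubic_resize(image, out_h, out_w):
    """Resize a 2D array; each output pixel is a weighted sum of a 4 x 4 neighbourhood."""
    in_h, in_w = image.shape
    src = image.astype(np.float64)
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        y = (i + 0.5) * in_h / out_h - 0.5  # output pixel centre in source coordinates
        y0 = int(np.floor(y))
        for j in range(out_w):
            x = (j + 0.5) * in_w / out_w - 0.5
            x0 = int(np.floor(x))
            value = 0.0
            for m in range(-1, 3):       # 4 rows
                for n in range(-1, 3):   # 4 columns, 16 neighbours in total
                    yy = min(max(y0 + m, 0), in_h - 1)  # replicate edge pixels
                    xx = min(max(x0 + n, 0), in_w - 1)
                    weight = cubic_kernel(y - (y0 + m)) * cubic_kernel(x - (x0 + n))
                    value += src[yy, xx] * weight
            out[i, j] = value
    return out

ramp = np.arange(16, dtype=float).reshape(4, 4)
print(bicubic_resize(ramp, 8, 8).round(2))
```

The kernel has small negative lobes, so the result can slightly overshoot the input range near sharp edges; in practice the output is clipped before conversion back to 8-bit.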

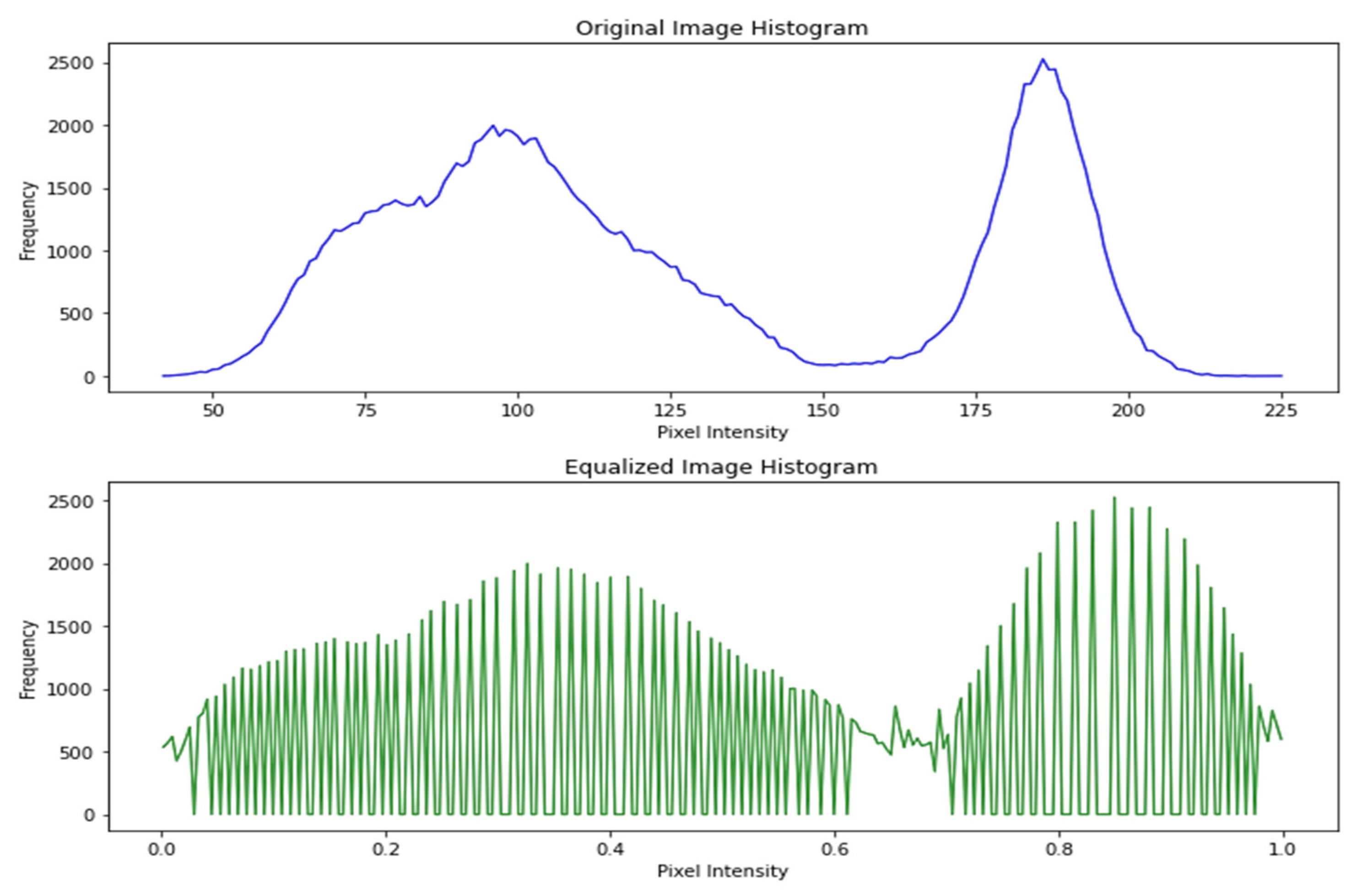

5.2.2. Histogram Equalization

After bicubic interpolation, histogram equalization is performed to increase the contrast of the image. The method spreads out the most frequently occurring intensity values, which stretches the intensity range of the image. It usually increases the overall contrast, which is helpful when the meaningful data are represented by close contrast levels, and it allows areas of lower local contrast to gain contrast. The histogram is normalized by dividing it by the number of pixels in the analysed image. To assess the performance of histogram equalization, we analysed the histograms before and after equalization to see how the distribution of pixel intensities changed, as shown in Figure 7.

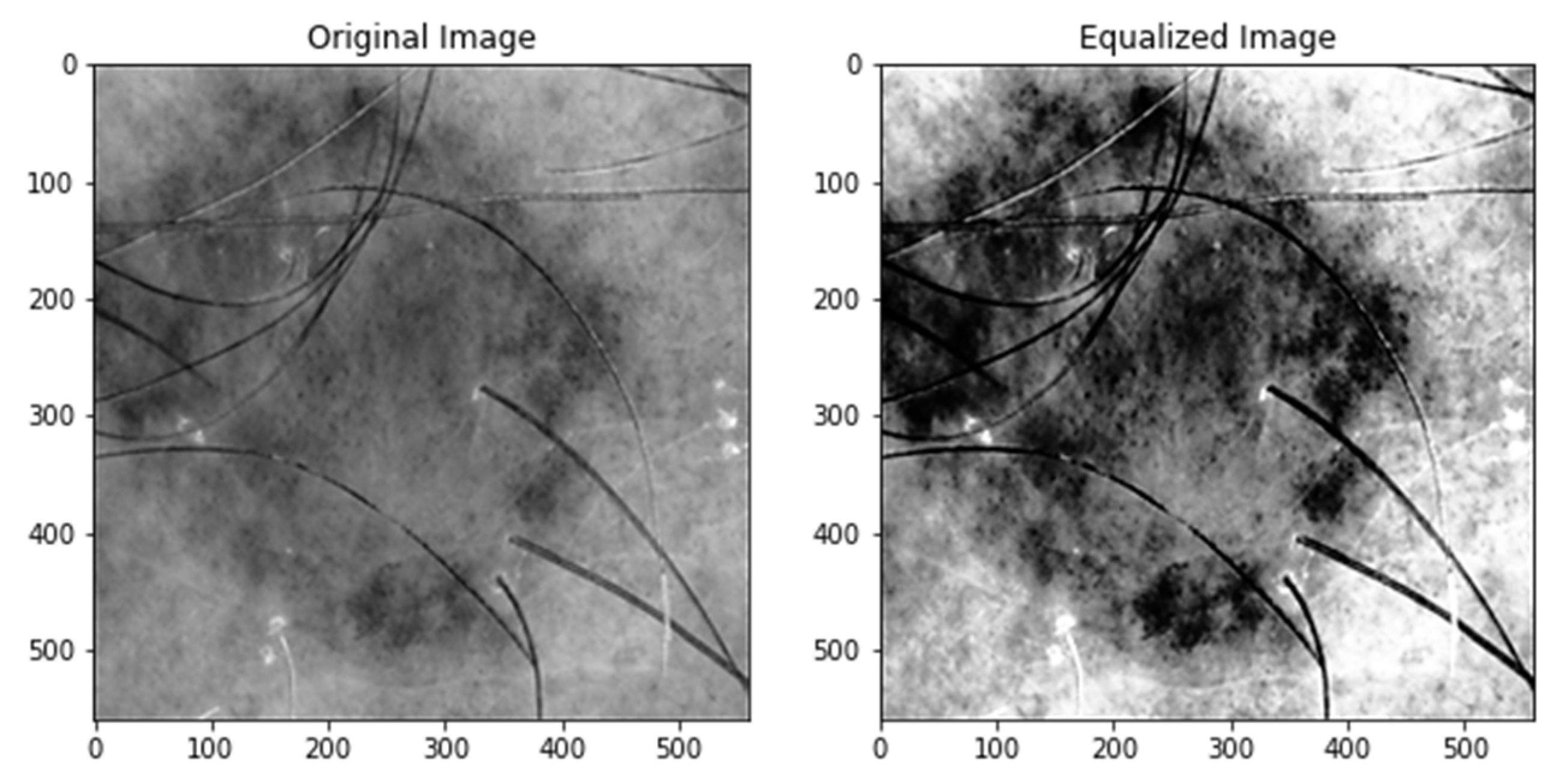

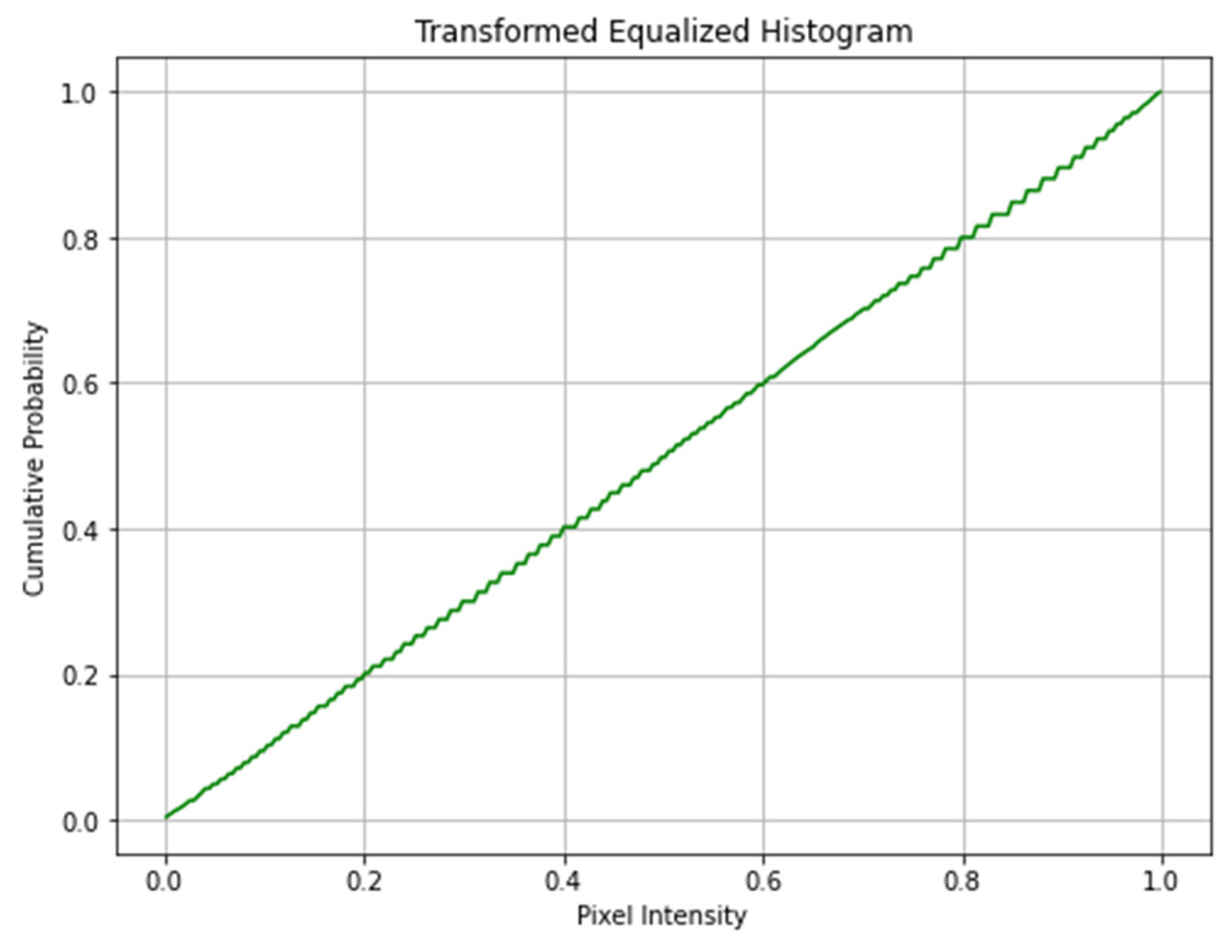

Figure 8 displays the outcome of applying histogram equalization to the low-contrast test image. After adjusting the contrast and brightness, the equalized image's histogram was computed. The cumulative sum of the histogram values was then normalized to generate the cumulative distribution function (CDF). Finally, the CDF of the equalized image was plotted, with the pixel intensity values on the x-axis and the cumulative probability on the y-axis.

Figure 9 demonstrates the distribution of pixel intensities across the image and provides an overview of the transformation caused by histogram equalization.
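The histogram, its normalization, and the CDF-based intensity mapping can be sketched in a few lines of NumPy. This is the simplest textbook form for an 8-bit grayscale image; the function name and the synthetic low-contrast image are illustrative and not from the authors' code.

```python
import numpy as np

def equalize_histogram(image):
    """Equalize an 8-bit grayscale image through its normalized CDF."""
    hist = np.bincount(image.ravel(), minlength=256)  # counts per intensity
    pdf = hist / image.size                           # normalize by number of pixels
    cdf = np.cumsum(pdf)                              # cumulative distribution in [0, 1]
    lookup = np.round(255 * cdf).astype(np.uint8)     # new intensity for each old one
    return lookup[image], cdf

# Synthetic low-contrast image with intensities confined to [100, 140).
rng = np.random.default_rng(0)
low_contrast = rng.integers(100, 140, size=(64, 64), dtype=np.uint8)
equalized, cdf = equalize_histogram(low_contrast)
print("input range :", low_contrast.min(), low_contrast.max())
print("output range:", equalized.min(), equalized.max())
```

Plotting `cdf` against the intensity values 0 to 255 gives the kind of curve described above, with intensity on the x-axis and cumulative probability on the y-axis.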

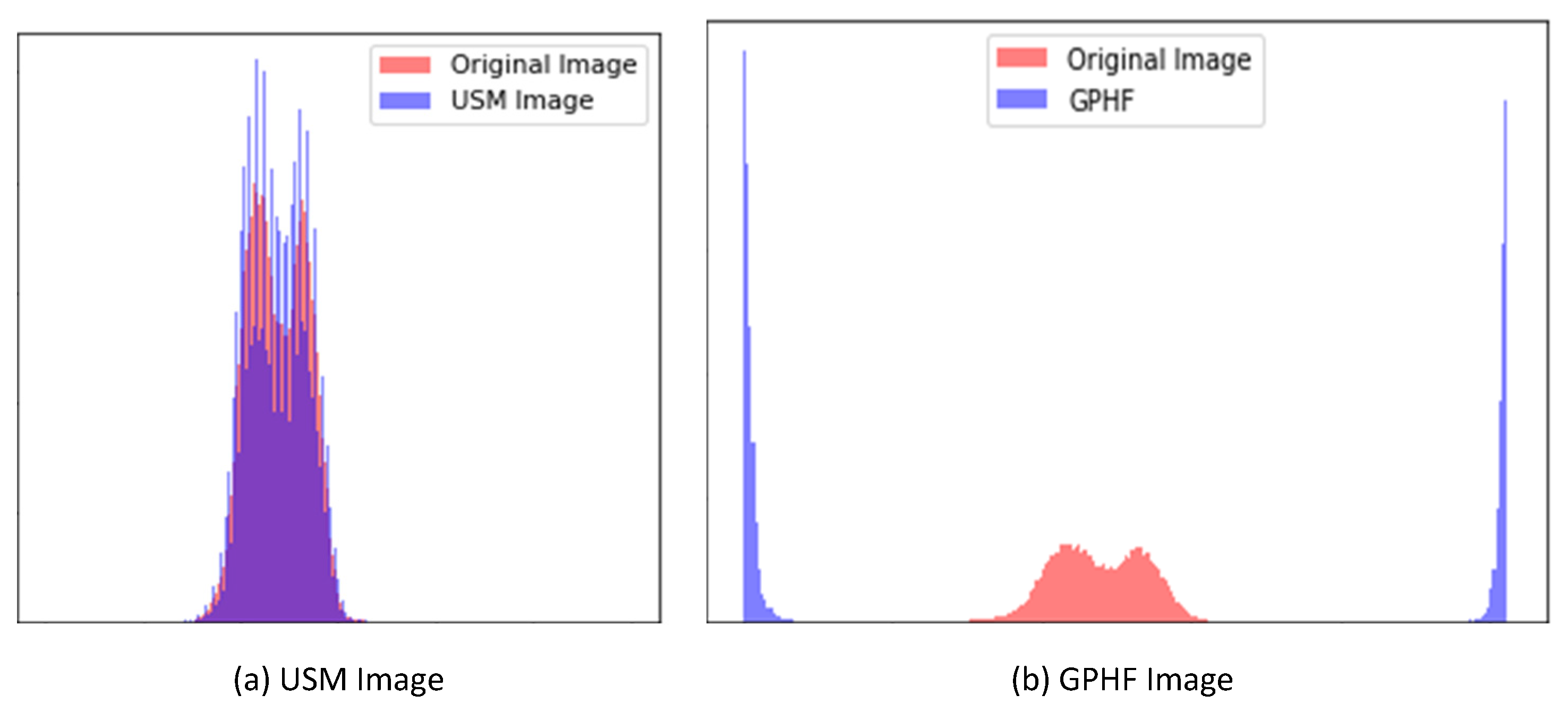

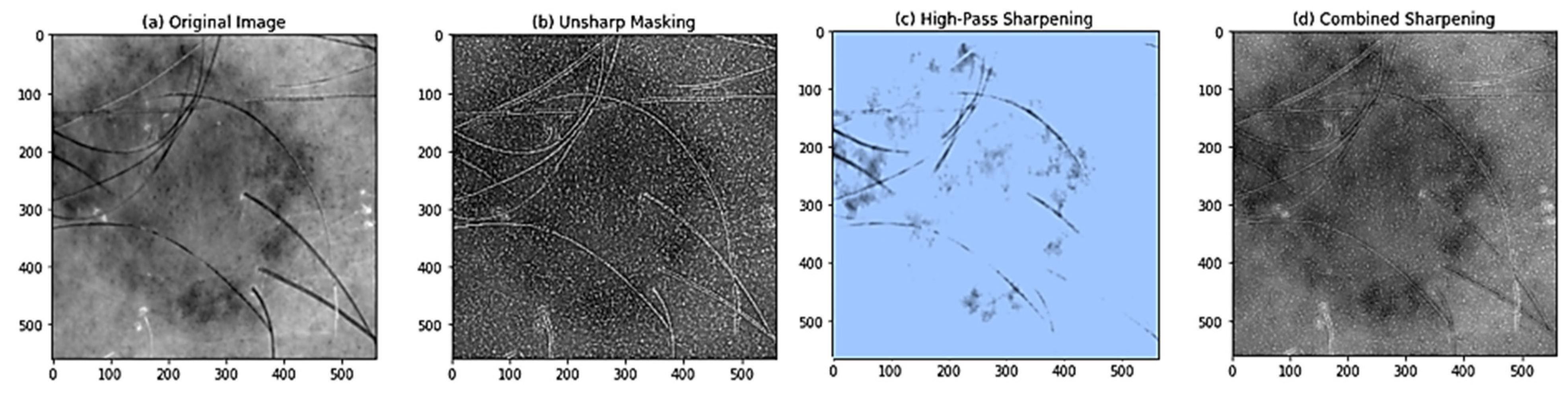

5.2.3. Unsharp Masking and Gaussian High Pass Filtering

We applied the unsharp masking (USM) and Gaussian high-pass filtering (GHPF) techniques to the equalised image to sharpen it and capture the fine details of the skin lesion. The authors first used the USM method to enhance the image's boundaries and fine details, followed by the GHPF method to isolate and sharpen the image's high-frequency components.

Figure 10 illustrates the histogram comparison between the original image, the USM-enhanced image and the GHPF-enhanced image. The image contrast is adjusted with a histogram equalization method. Then the USM- and GHPF-enhanced images are added and multiplied by a weight factor.

Figure 11 shows the combined sharpened image, in which the skin lesions are more visible and the high-frequency details are sharper.
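The following NumPy sketch illustrates one way the two filters and their weighted combination could be realised for a grayscale image stored as floats. The blur width `sigma`, the `amount`, the `cutoff` frequency and the `weight` factor are all assumed values chosen for illustration; the paper does not report the ones actually used.

```python
import numpy as np

def gaussian_blur(image, sigma=2.0):
    """Separable Gaussian blur with edge replication."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def unsharp_mask(image, sigma=2.0, amount=1.0):
    """USM: add back the detail removed by a Gaussian blur."""
    return image + amount * (image - gaussian_blur(image, sigma))

def gaussian_high_pass(image, cutoff=10.0):
    """GHPF in the frequency domain: H = 1 - exp(-D^2 / (2 * cutoff^2))."""
    h, w = image.shape
    u = np.fft.fftfreq(h) * h  # frequency indices in FFT order
    v = np.fft.fftfreq(w) * w
    d2 = u[:, None] ** 2 + v[None, :] ** 2
    transfer = 1.0 - np.exp(-d2 / (2.0 * cutoff**2))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * transfer))

def combined_sharpen(image, weight=0.5):
    """Add the USM and GHPF outputs and multiply by an assumed weight factor."""
    combined = weight * (unsharp_mask(image) + gaussian_high_pass(image))
    return np.clip(combined, 0, 255)

# Toy image with a vertical step edge.
step = np.zeros((16, 16))
step[:, 8:] = 100.0
print(combined_sharpen(step)[0].round(1))
```

On a perfectly flat region the high-pass output is zero and USM leaves the image unchanged, so only edges and fine texture are amplified.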

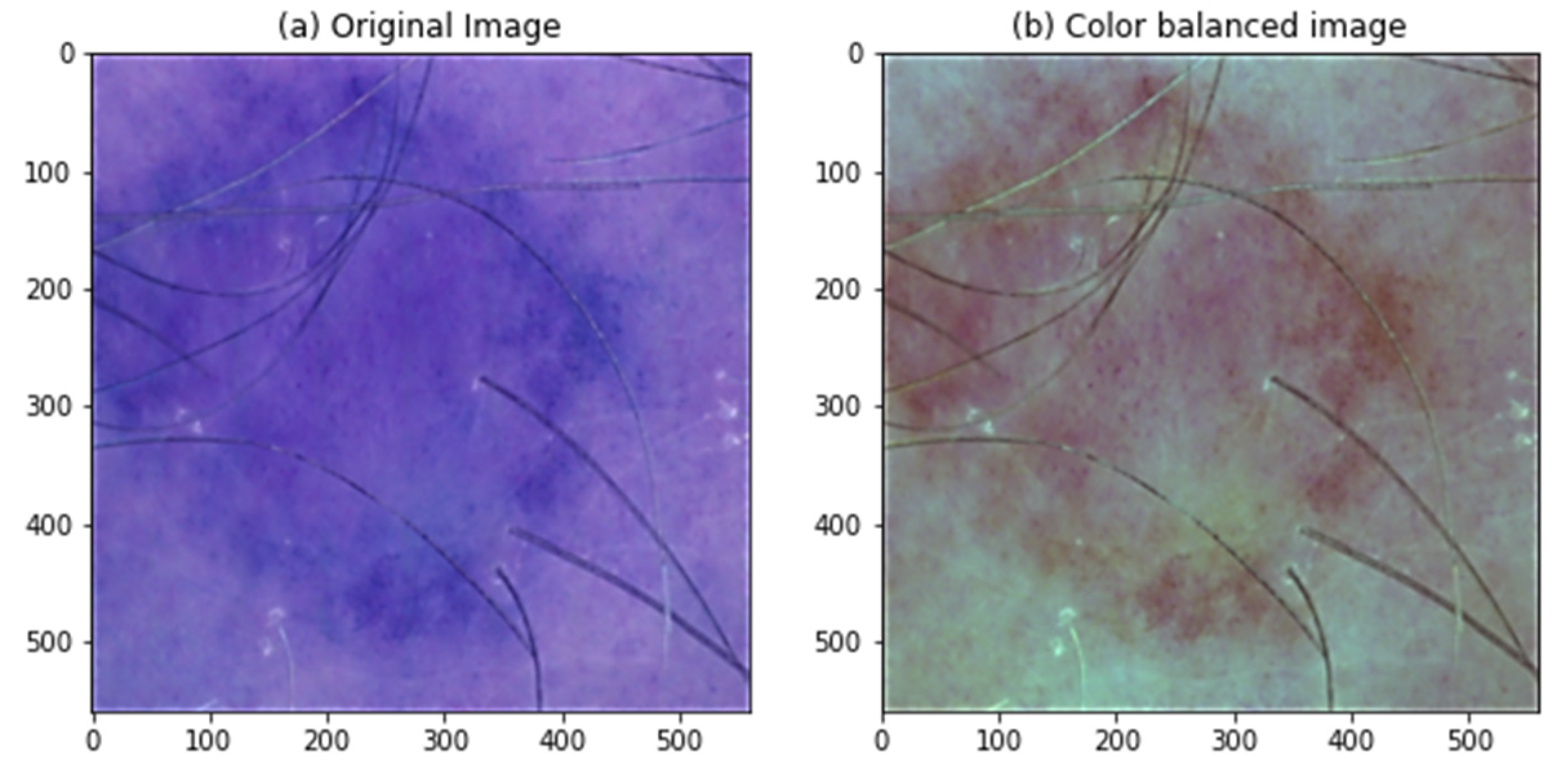

5.2.4. Color Space Transformation

We used the CIELAB transformation so that changes in numerical values correspond to perceived colour changes. The CIELAB colour balance approach preserves color by choosing each channel's scale factor separately. First, each uint8 image is converted to float32 format and mapped to the LAB colour space, the channels are split, and the mean and standard deviation are calculated along the first axis. The mean and standard deviation determine each colour channel's scale factor. The scale factors are then applied to each colour channel along the first axis. Next, the pixel values are clipped so that each colour channel lies in the range 0-255. Finally, the image is converted back to uint8 format. Figure 12 shows the color balance transformation, and Table 3 presents the image's performance after applying the color transformation.

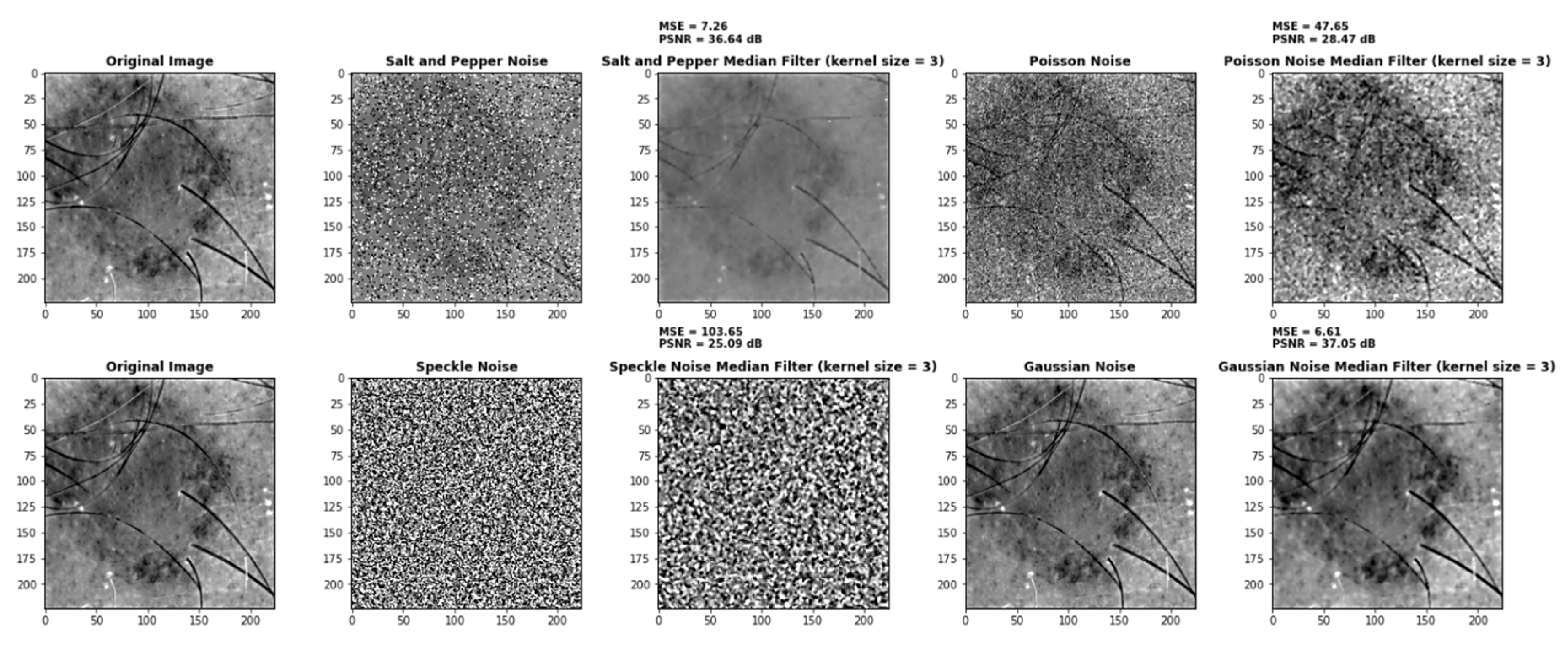

5.2.5. Median Filter

In 2D images, the median filter is widely used to remove noise such as salt-and-pepper, speckle, Poisson, and Gaussian noise [93]. Figure 13 shows the application of the median filter to these noise types. The Mean Square Error (MSE) and Peak Signal-to-Noise Ratio (PSNR) metrics were used to evaluate the results. MSE indicates how much unwanted noise remains in the image, so its value should generally be low; a low MSE suggests that the filter is well suited to reducing that noise. As illustrated in Figure 13, the MSE values are compared to select the most effective noise-reduction filter. From Table 4, we can conclude that the median filter is most effective at removing Gaussian noise.
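A minimal NumPy sketch of a 3 × 3 median filter, evaluated with MSE on synthetic salt-and-pepper noise, is given below. The window size, the noise level and the uniform test image are assumptions made for illustration; they are not the settings or data used in the experiments.

```python
import numpy as np

def median_filter(image, size=3):
    """Replace each pixel with the median of its size x size neighbourhood."""
    pad = size // 2
    padded = np.pad(image, pad, mode="edge")
    h, w = image.shape
    # Stack every shifted view of the window, then take the per-pixel median.
    windows = [padded[i:i + h, j:j + w] for i in range(size) for j in range(size)]
    return np.median(np.stack(windows), axis=0).astype(image.dtype)

def mean_squared_error(a, b):
    return float(np.mean((a.astype(np.float64) - b.astype(np.float64)) ** 2))

# Uniform grey image corrupted with roughly 10% salt-and-pepper noise.
rng = np.random.default_rng(0)
clean = np.full((32, 32), 128, dtype=np.uint8)
noisy = clean.copy()
mask = rng.random(clean.shape)
noisy[mask < 0.05] = 0      # pepper
noisy[mask > 0.95] = 255    # salt

filtered = median_filter(noisy)
print("MSE before filtering:", mean_squared_error(clean, noisy))
print("MSE after filtering :", mean_squared_error(clean, filtered))
```

Comparing the two MSE values in this way mirrors the selection procedure described above: the filter that yields the lower error against the clean reference is preferred.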

5.3. Results of Improved DCGAN-based Classifier

The ISIC 2017 dataset is used to train the proposed DCGAN-based Classifier. Image preprocessing techniques are applied before DCGAN modelling to ensure the model can learn reliable representations. As discussed in sub-section 4.4.1, for each image class, 70% of the original dataset was used for training and 30% for testing. Notably, 30% of the images were taken as unlabeled data. The adversarial network takes random noise as input and outputs the discriminator's final prediction on the generated images. Altering the noise vector makes it possible to examine how the generator works and which noise vectors result in the desired class. In our experiments, we used 100 random latent input noises.

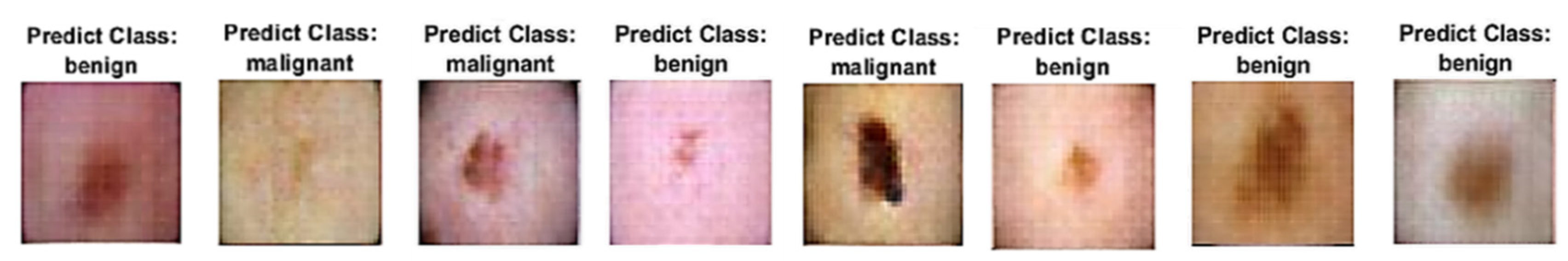

A mini-batch size of 64 was used with batch normalization. All weights were initialized from a normal distribution with a mean of zero and a standard deviation of 0.02. Both the generator and discriminator networks are composed of five layers. The generator comprises four deconvolutional layers with ReLU activations followed by a final deconvolutional layer with tanh activation. The discriminator consists of four convolutional layers with Leaky ReLU activations followed by a final softmax layer. During the training process, both real data and data from the generator network are fed to the discriminator. After a few epochs of training, the generator produces highly realistic images, which are also used for additional training. To verify the Improved DCGAN model's effectiveness, we randomly generated 100 images for each of the two skin lesion classes and assessed how well they could be discriminated from real images using the BAS evaluation metric; sample images are shown in Figure 14 (b). Figure 14 (a) presents original images from the training data, and Figure 14 (b) shows random synthetic images generated after training. The images produced by the DCGAN look realistic and are hard to distinguish from the real images.
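To make the five-layer layout concrete, the sketch below traces the spatial size of the feature maps through the generator and the discriminator using the standard output-size formulas for transposed and strided convolutions. The kernel size, strides, padding and the 64 × 64 image size follow the usual DCGAN configuration and are assumptions here; the paper does not list these values.

```python
def deconv_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (no output padding, dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel

def conv_out(size, kernel, stride, padding):
    """Output size of a strided convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

# Assumed (kernel, stride, padding) for each layer.
generator_layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
discriminator_layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

# Generator: the latent vector is treated as a 1 x 1 feature map.
g_sizes = [1]
for kernel, stride, padding in generator_layers:
    g_sizes.append(deconv_out(g_sizes[-1], kernel, stride, padding))

# Discriminator: four convolutions shrink the image before the final softmax layer.
d_sizes = [g_sizes[-1]]
for kernel, stride, padding in discriminator_layers:
    d_sizes.append(conv_out(d_sizes[-1], kernel, stride, padding))

print("generator feature-map sizes    :", g_sizes)
print("discriminator feature-map sizes:", d_sizes)
```

Under these assumptions each stride-2 layer doubles the resolution in the generator and halves it in the discriminator, so the two networks mirror each other.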

Figure 14. (a) Real Images from the ISIC 2017 Dataset.

Figure 14. (b) Synthetic Images Generated by Generator.

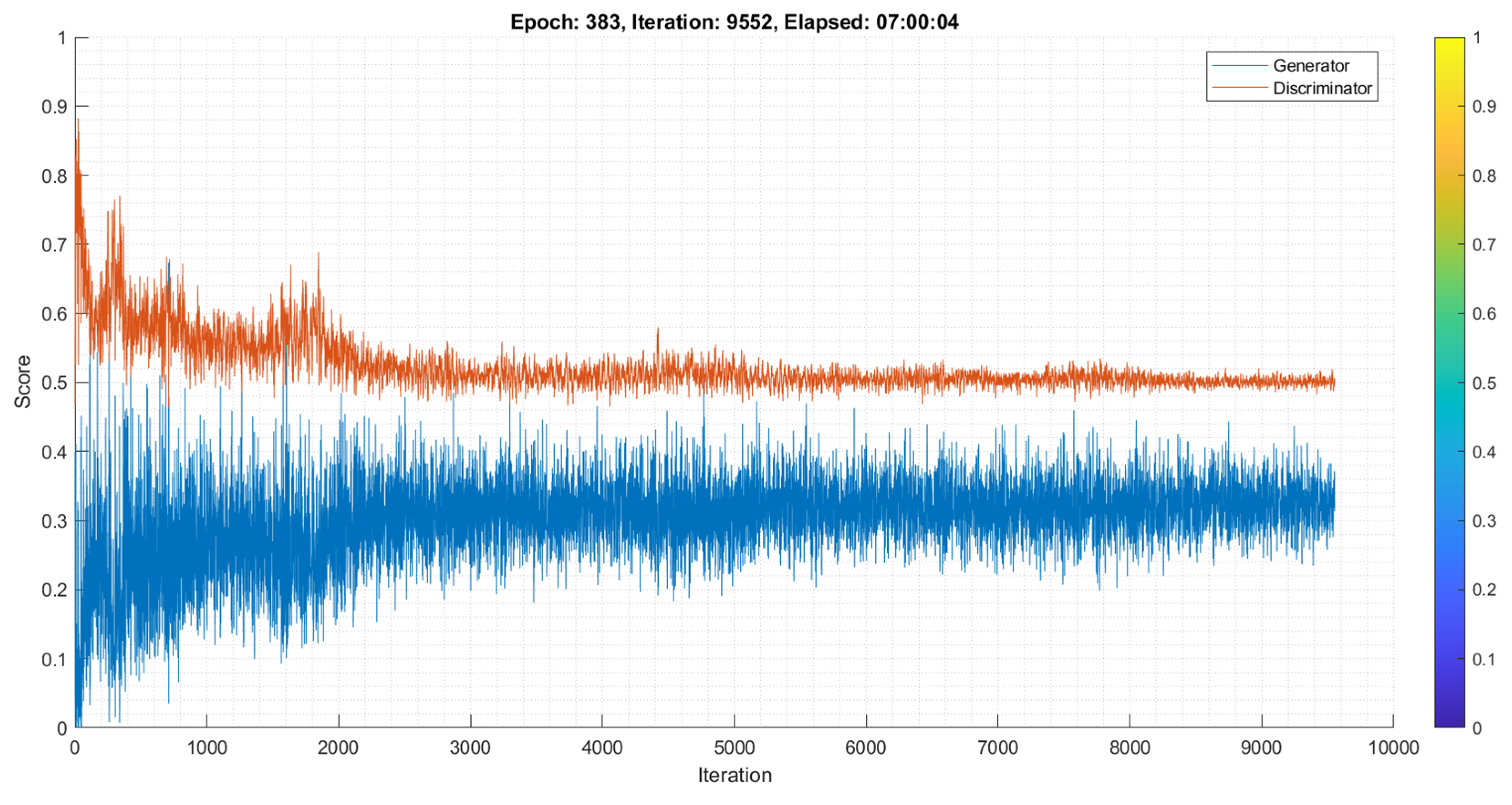

Figure 15 shows the DCGAN model's training process, including the scores of the generator and discriminator after 383 epochs (9552 iterations). The scores lie between 0 and 1, and 0.5 is the ideal score at each iteration [102]. After each iteration, the training losses for the generator G and the discriminator D are recorded. Early in training, the generator receives random noise from which it must learn to generate realistic data, while the discriminator, which has seen few samples at that stage, can still distinguish real from fake images. During training, either network may overpower the other. If the discriminator becomes too accurate, it returns values close to 0 or 1, which can leave the generator with little useful signal to learn from. If the generator becomes too strong, it continually exploits the discriminator's mistakes, producing undesirable results. In our experiment, the discriminator's score is close to 0.5, indicating that the generator creates synthetic images that the discriminator cannot reliably classify as real or fake.
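The reason 0.5 is the ideal score follows from the standard GAN analysis of Goodfellow et al. [20], restated here for reference. For a fixed generator with sample distribution $p_g$, the discriminator that minimizes its loss is

$$
D^{*}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_{g}(x)},
$$

so that when the generator matches the real distribution, $p_g = p_{\text{data}}$, the optimal discriminator outputs $D^{*}(x) = \tfrac{1}{2}$ for every input. The symbols $p_{\text{data}}$ and $p_g$ are notation introduced for this note and do not appear elsewhere in the paper.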

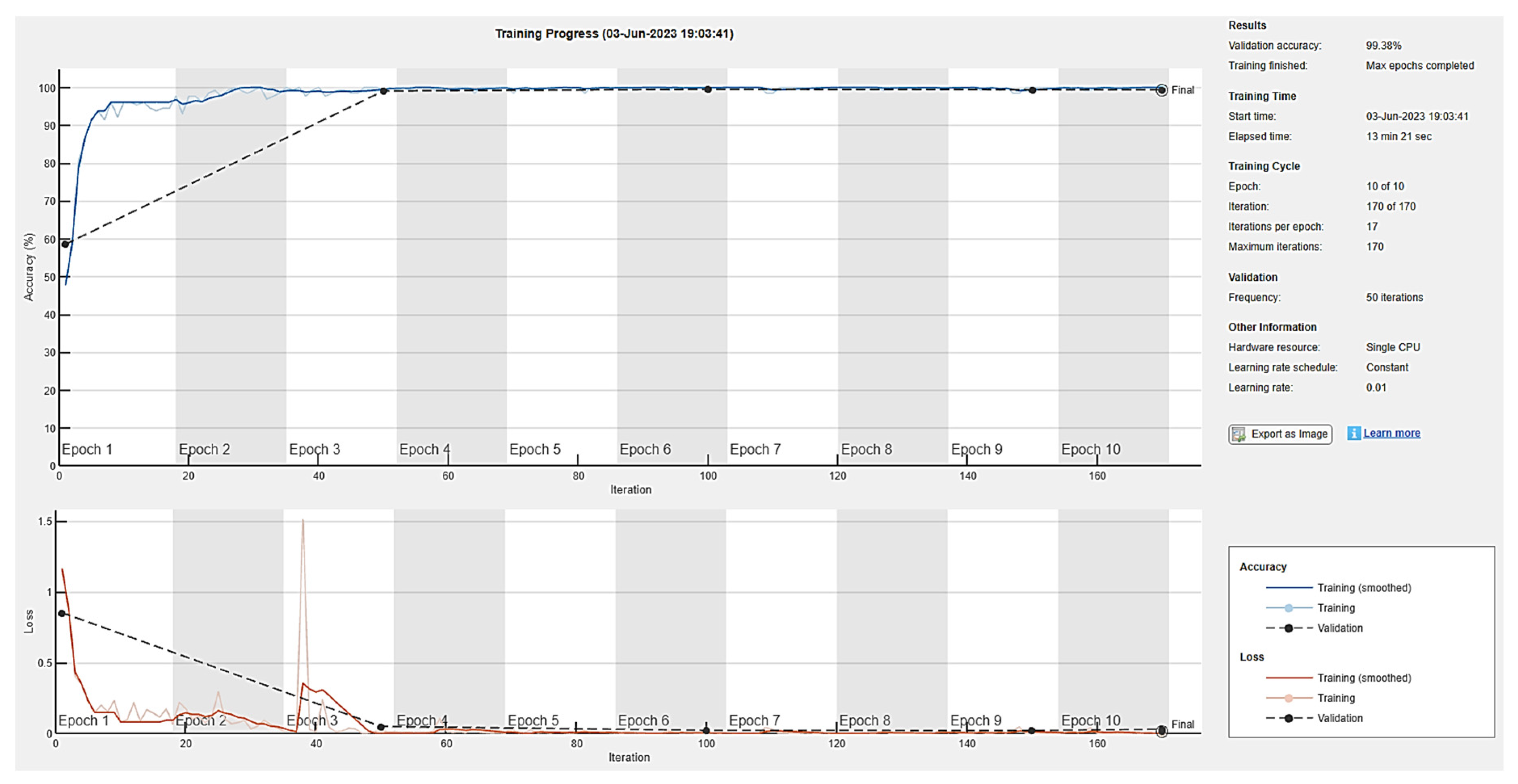

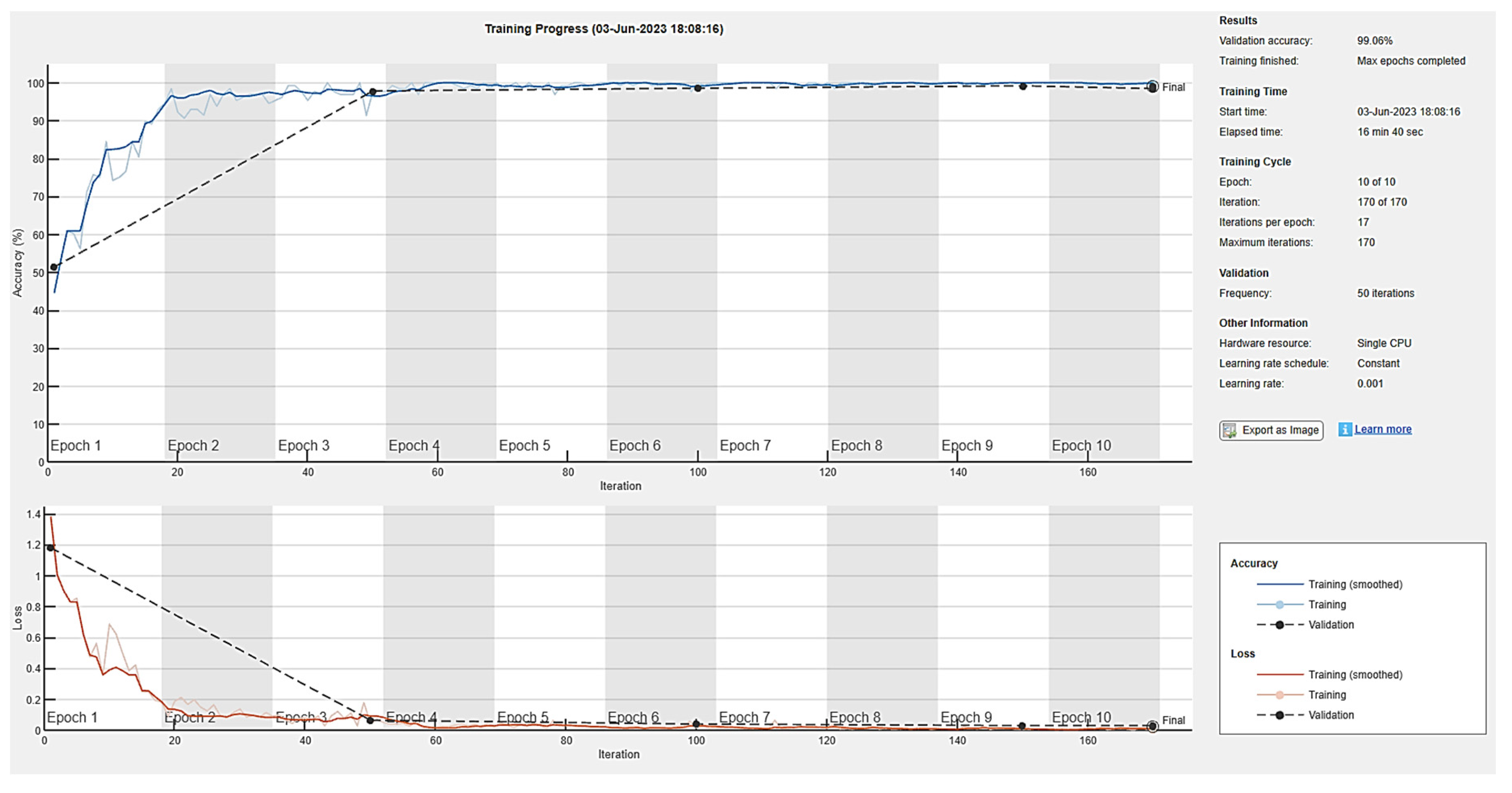

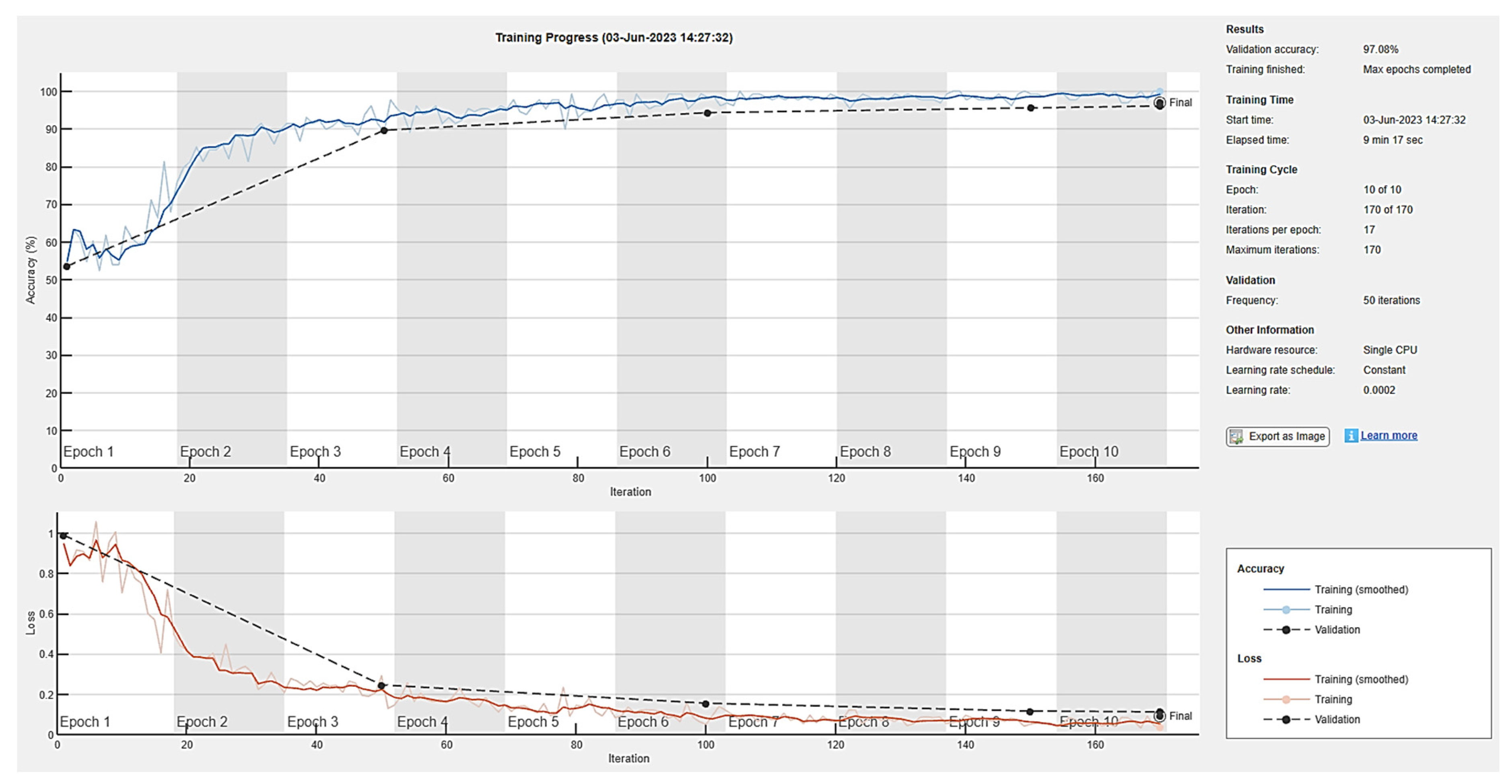

The generator and discriminator networks were trained for ten epochs to examine the validation accuracy of the model at fixed learning rates of 0.01, 0.001, and 0.0002. Training used seventeen iterations per epoch, with validation performed every 50 iterations. Accuracy and loss during training for the generator and discriminator over the ten epochs are depicted in Figure 16, Figure 17 and Figure 18. The convergence of the loss at the end of training suggests that the GAN model has reached a stable state. With a learning rate of 0.01, the proposed DCGAN model achieves its best accuracy of 99.38%, higher than with learning rates of 0.001 and 0.0002 (Figure 16, Figure 17 and Figure 18), and this outperforms the state-of-the-art GAN-based methods reported in the literature. The model was trained on a single GPU to accelerate the matrix operations and to allow faster, parallel computation.

Table 5 shows the elapsed time, validation accuracy and loss for the three learning rates, computed over ten epochs with a batch size of 64. In modern deep-learning models, batch size is one of the most important hyperparameters to tune for model performance. To enable the model to detect the patterns in the data without having to train on a huge dataset, the authors additionally evaluated the model with batch sizes of 128 and 256 at a learning rate of 0.01, which gives higher accuracy than 0.001 and 0.0002. Table 6 demonstrates that the training time for the model is substantially reduced as the batch size increases.

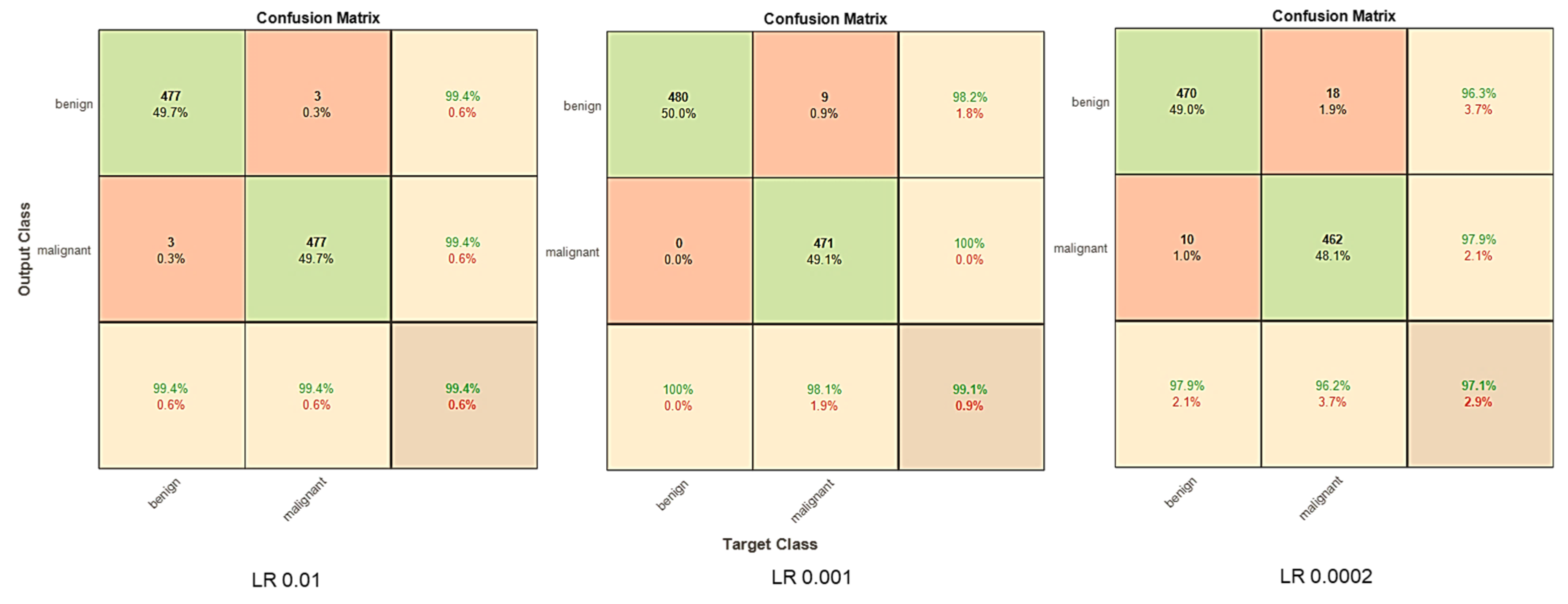

The confusion matrix is a graphical representation of a classification model's performance, from which metrics such as accuracy, precision, recall, and F1 score are calculated. As shown in Figure 19, the discriminator classifies benign and malignant skin lesions with a very high degree of accuracy, as demonstrated by the diagonal elements of the matrix. Figure 19 compares the confusion matrices for the three learning rates.

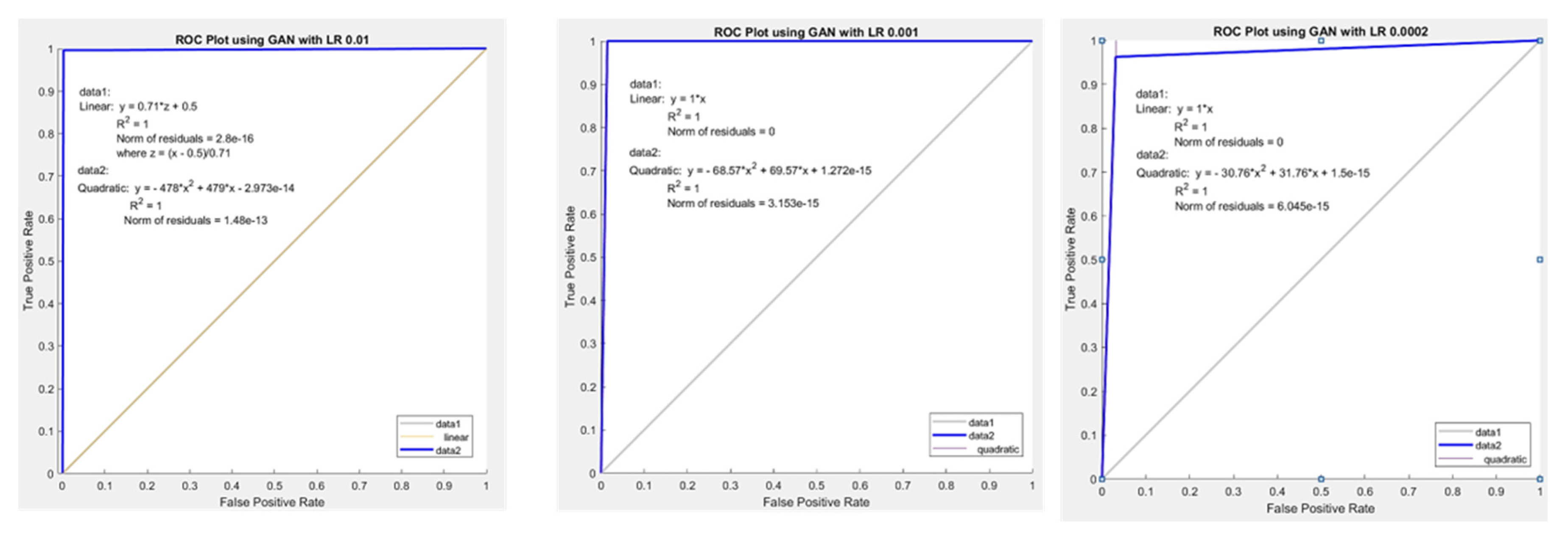

The ROC curves in Figure 20 summarise the binary classification results, particularly for the positive class, for learning rates of 0.01, 0.001, and 0.0002, respectively. As mentioned in Section 5.1, the ROC curve plots the true positive rate (TPR) on the y-axis against the false positive rate (FPR) on the x-axis. The grey line in the plot is the ROC curve of a random classifier. Our ROC curve (Figure 20) depicts the trade-off between sensitivity (TPR) and specificity (1 − FPR). The nearer a classifier's curve lies to the top-left corner, the better it performs, whereas a curve close to the 45-degree diagonal of the ROC space indicates a less accurate test. Given the ROC curve's deviation from the diagonal and the small gap between the curve and the top-left corner, Figure 20 indicates that the Classifier performs well. In Figure 20, data1 is a straight line, and data2 is the ROC curve, which follows a quadratic pattern. R-squared (R²) equals 1 for all three learning rates, indicating that the predicted values are identical to the actual values.
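The construction of a ROC curve and its AUC can be sketched in plain Python as follows. The labels and scores are made-up illustrative values, not outputs of the proposed model, and the function names are hypothetical.

```python
def roc_curve(labels, scores):
    """Return (FPR, TPR) points obtained by sweeping the decision threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    # Lower the threshold one distinct score at a time, highest first.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area

# Hypothetical labels (1 = malignant) and predicted malignancy scores.
labels = [1, 1, 1, 0, 1, 0, 0, 0]
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.10]
curve = roc_curve(labels, scores)
print("ROC points:", curve)
print("AUC:", auc(curve))
```

Each threshold contributes one point, so a curve that rises quickly toward the top-left corner corresponds to scores that rank most positives above most negatives.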

The performance analysis of the improved DCGAN model in terms of BAS, accuracy, recall, precision, specificity, and F1 Score is shown in Table 6 and Figure 21 for the three fixed learning rates of 0.01, 0.001, and 0.0002. The model produces good results after 170 epochs, with the test loss reaching a minimum and the resulting BAS being 99 for learning rates 0.01 and 0.001, and 97 for 0.0002, indicating good classifier performance. The model with a learning rate of 0.01 attains the highest accuracy, 99.38%, compared with 98.44% for 0.001 and 96.04% for 0.0002. These findings suggest that the proposed model generalizes well and could perform well when applied to other skin lesions.

5.4. Discussion

The results show that the DCGAN method employed in the model's creation accurately reproduces real skin lesions. A DCGAN-based model allows the creation of more realistic and diverse skin lesions by capturing global structures and detailed textures. Although data augmentation methods such as rotation, scaling, and flipping can expand the dataset size, the improved strategy goes beyond these standard methods by synthesizing new lesions. It allows high-quality synthetic samples to be added to incomplete or unbalanced datasets, improving the model's ability to generalize to different lesion types. The enormous diversity and complexity of skin lesions may challenge handcrafted feature extraction methods, such as texture analysis or color-based descriptors, which rely on domain-specific knowledge. The DCGAN Classifier learns and extracts essential features from synthetic skin lesion images automatically, resulting in better generalization, robustness, and accuracy, and thereby reducing the need for manual feature engineering. Owing to its improved generalization abilities, the DCGAN-based Classifier can handle differences in lesion appearance, illumination, and image quality. Furthermore, image preprocessing procedures that improved feature extraction and learning were used to increase the accuracy, generalization, and flexibility of the DCGAN-based model: bicubic interpolation, histogram equalization, USM and GHPF, CIELAB color transformation, and median filtering of Gaussian noise. In this study, the ISIC 2017 dataset containing 4000 benign and malignant images, with 2000 images per class, is used to train and fine-tune the model.

As a result, classification performance is more robust and consistent even on novel or challenging datasets. The approach can also enhance training datasets and allow researchers to analyse lesion types for which samples are sparse or unavailable.

Table 7 and Figure 21 show that the DCGAN-based Classifier reliably classifies skin lesions. It achieves an accuracy of 99.38% and 99% for precision, recall, and F1-Score, demonstrating that it can detect and differentiate between different skin lesions. The Classifier is particularly responsive to visual differences between lesions, which supports precise diagnosis and classification. By capitalizing on DCGANs, it addresses problems such as class imbalance, limited annotated data, overfitting, and generalization.

We compared our proposed model with existing GAN-based models in the literature that were applied to classify skin lesions, such as StyleGAN, WGAN, DGAN, SLA-StyleGAN and DDGAN, as listed in Table 12. The improved DCGAN outperforms these approaches for synthesizing and classifying skin lesions. The results show that the proposed improved model generates accurate predictions on the test images, attaining 99.38% accuracy, which is a significant outcome for skin lesion classification.

Bissoto et al. [19] achieved a performance of 84.7 using the Pix2Pix GAN model on ISIC 2017. Wu et al. [34] applied a GAN with Raman spectroscopy to augment and generate synthetic images, achieving an accuracy of 92%. Mutepfe et al. [67] achieved a test accuracy of 93.5% using DCGAN. Qin et al. [97] used StyleGAN and achieved an accuracy of 95.2% and a balanced multiclass accuracy of 83.1%. Khan et al. [98] applied DGAN to unlabeled and labelled datasets, achieving accuracies of 91.1% and 92.3%, respectively. Our proposed model therefore achieved a higher accuracy, 99.38%, than the models listed in Table 12.

6. Conclusion and Future Scope

This study investigated Deep Convolutional Generative Adversarial Networks (DCGANs) for their potential application in creating synthetic data for data augmentation. The technique has been used successfully to classify images of skin lesions with high accuracy on the ISIC 2017 dataset. Furthermore, the results demonstrate that adding GAN-generated images to the training data significantly outperforms conventional approaches of fine-tuning pre-existing deep neural network architectures. Data creation and augmentation processes such as this are helpful when large-scale training datasets are not easily accessible. They enable the addition of high-quality synthetic samples to incomplete or unbalanced datasets, enhancing the model's ability to generalize to diverse lesion types. We observed a significant improvement in training after performing image enhancement and preprocessing operations. After fine-tuning the network's parameters, we obtained an overall test accuracy of 99.38%.

Despite achieving high accuracy, this study had limited capacity to fine-tune its hyper-parameters, and conducting all the necessary tests to fine-tune our model took considerable time. The most problematic aspect of this study was the duration of each training session, particularly when training ran for more than 100 epochs, which made it significantly more difficult to optimize the DCGAN on the dataset.

In our future work, we intend to investigate how different lesion-generation approaches can enhance the quality and authenticity of synthetic skin lesions. To improve the precision and dependability of cutaneous lesion classification, we aim to examine multi-modal fusion with other diagnostic information, such as histological information or patient metadata. Exploring interpretability methods for the DCGAN-based Classifier may provide valuable insights into its decision-making and increase trust in the model's predictions.

Author Contributions

The authors planned for the study and contributed to the idea and field of information. Conceptualization, K.B.; methodology, K.B.; software, K.B.; validation, K.B., E.B. and J.T.; formal analysis, K.B.; investigation, K.B.; resources, K.B.; data curation, K.B.; writing—original draft preparation, K.B.; writing—review and editing, E.B. and J.T.; visualization, E.B. and J.T.; supervision, E.B. and J.T.; project administration, E.B.; funding acquisition, E.B. and J.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are openly available in the reference list.

Acknowledgments

I would like to sincerely thank and express my appreciation to my Supervisor, Dr Bhero, and Co-Supervisor, Prof Agee, for excellent supervision and for assisting me in paying attention to detail. Moreover, I wish to thank my family for their continuous support and countless sacrifices.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hoffman, M. Picture of the skin: human anatomy. 2014. Available online: https://www.webmd.com/skin-problems-and-treatments/picture-of-the-skin.

- Stöppler, M.C. Medical definition of skin. 2021. Available online: https://www.medicinenet.com/skin/definition.htm.

- Anonymous. Skin cancer-index 2018. 2018. Available online: https://derma.plus/en/skin-cancer-index-2018/.

- Amarathunga, A.; Ellawala, E.P.W.C.; Abeysekara, G.; Amalraj, C.R.J. Expert system for diagnosis of skin diseases. International Journal of Scientific & Technology Research. 2015, 4, 174–178.

- Ambad, P.S.; Shirsat, A.S. A image analysis system to detect skin diseases. IOSR J. of VLSI and Signal Processing. 2016, 6, 17–25.

- ALKolifi ALEnezi, N.S. A method of skin disease detection using image processing and machine learning. Procedia Computer Science. 2019, 163, 85–92.

- Wu, H.; Yin, H.; Chen, H.; et al. A deep learning, image-based approach for automated diagnosis for inflammatory skin diseases. Annals of Translational Medicine. 2020, 8, 581.

- Liu, J.; Wang, W.; Chen, J.; Sun, G.; Yang, A. Classification and research of skin lesions based on machine learning. Computers, Materials & Continua. 2020, 62, 1187–1200.

- Yan, Y.; Kawahara, J.; Hamarneh, G. Melanoma recognition via visual attention. In Proceedings of the International Conference on Information Processing in Medical Imaging, Hong Kong, China, 2019; Lecture Notes in Computer Science; Springer; pp. 793–804.

- Duan, M.; Li, K.; Liao, X.; Li, K. A parallel multiclassification algorithm for big data using an extreme learning machine. IEEE Transactions on Neural Networks and Learning Systems. 2018, 29, 2337–2351.

- Sun, X.; Yang, J.; Sun, M.; Wang, K. A benchmark for automatic visual classification of clinical skin disease images. In Computer Vision – ECCV 2016; Springer International Publishing: New York, NY, USA, 2016.

- Srinivasu, P.N.; SivaSai, J.G.; Ijaz, M.F.; Bhoi, A.K.; Kim, W.; Kang, J.J. Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors. 2021, 21, 2852.

- Vulli, A.; Srinivasu, P.N.; Sashank, M.S.; Shafi, J.; Choi, J.; Ijaz, M.F. Fine-tuned DenseNet-169 for breast cancer metastasis prediction using FastAI and 1-cycle policy. Sensors. 2022, 22, 2988.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet Classification with Deep Convolutional Neural Networks. Communications of the ACM. 2017, 60, 84–90.

- Esteva, A.; Kuprel, B.; Novoa, R. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017, 542, 115–118.

- Krawczyk, B. Learning from imbalanced data: open challenges and future directions. Prog Artif Intell. 2019, 5, 221–232.

- Rice, L.; Wong, E.; Kolter, J.Z. Overfitting in adversarially robust deep learning. Proceedings of the 37th International Conference on Machine Learning. 2020, 119, 8093–8104.

- Stutz, D.; Hein, M.; Schiele, B. Disentangling Adversarial Robustness and Generalization. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 2019, pp. 6969–6980.

- Bissoto, A.; Perez, F.; Valle, E.; Avila, S. Skin Lesion Synthesis with Generative Adversarial Networks. International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). 2018.

- Goodfellow, I.J.; Mirza, M.; Xu, B.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Advances in Neural Information Processing Systems. 2014, 2672–2680.

- Iqbal, T.; Ali, H. Generative adversarial network for medical images (MI-GAN). Journal of medical systems. 2018, 42, 1–11.

- Bi, L.; Feng, D.D.; Fulham, M.; Kim, J. Multi-Label classification of multi-modality skin lesion via hyper-connected convolutional neural network. Pattern Recognition. 2020, 107, 107502.

- ASRT. The ASRT Practice Standards for Medical Imaging and Radiation Therapy. American Society of Radiologic Technologists. 2019.

- ISO 12052:2017. Digital imaging and communication in medicine (DICOM), including workflow and data management. Health Informatics. 2017. Available online: https://www.iso.org/obp/ui/#iso:std:iso:12052:ed-2v1:en (accessed on 6 April 2023).

- Cassidy, B.; Kendrick, C.; Brodzicki, A.; Jaworek-Korjakowska, J.; Yap, M.H. Analysis of the ISIC image datasets: Usage, benchmarks, and recommendations. Medical Image Analysis. 2022, 75, 102305.

- WHO Regional Office for Africa. Handbook for Cancer Research in Africa. WHO/AFRO. 2013, p. 147. Available online: https://apps.who.int/iris/handle/10665/100065.

- Ibbott, G.S.; Van Dyk, J. Quality Assurance for Treatment Planning (IEC 62083 and IAEA Report). 2017.

- Romero-Lopez, A.; Giro, X.; Burdick, J.; Marques, O. Skin lesion classification from dermoscopic images using deep learning techniques. The IASTED International Conference on Biomedical Engineering. Biomedical engineering 2017. Innsbruck: ACTA Press, 2017, pp. 1–6.

- Mahbod, A.; Schaefer, G.; Wang, C.; Ecker, R.; Ellinge, I. Skin Lesion Classification Using Hybrid Deep Neural Networks. ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Brighton, UK, 2019, pp. 1229–1233.

- Ayan, E.; Ünver, H.M. Data augmentation importance for classification of skin lesions via deep learning. 2018 Electric Electronics, Computer Science, Biomedical Engineering Meeting (EBBT), Istanbul, Turkey, 2018, pp. 1–4.

- Zhang, J.; Xie, Y.; Wu, Q.; Xia, Y. Skin Lesion Classification in Dermoscopy Images Using Synergic Deep Learning. Medical Image Computing and Computer Assisted Intervention – MICCAI 2018. Lecture Notes in Computer Science, vol 11071. Springer, Cham.

- Motamed, S.; Rogalla, P.; Khalvati, F. Data augmentation using Generative Adversarial Networks (GANs) for GAN-based detection of Pneumonia and COVID-19 in chest X-ray images. Informatics in Medicine Unlocked. 2021, 27, 100779.

- Diamant, I.; Klang, E.; Amitai, M.; Goldberger, J.; Greenspan, H. GAN-based Synthetic Medical Image Augmentation for increased CNN Performance in Liver Lesion Classification. Neurocomputing. 2018, 321, 321–331.

- Wu, M.; Wang, S.; Pan, S.; Terentis, A.C.; Strasswimmer, J.; Zhu, X. Deep learning data augmentation for Raman spectroscopy cancer tissue classification. Scientific Reports. 2021, 11, 1–13.

- Mumuni, A.; Mumuni, F. Data augmentation: A comprehensive survey of modern approaches. Array. 2022, 16, 100258.

- Razghandi, M.; Zhou, H.; Turgut, D. Variational Autoencoder Generative Adversarial Network for Synthetic Data Generation in Smart Home. IEEE International Conference on Communications (ICC). 2022.

- Yi, X.; Walia, E.; Babyn, P. Generative adversarial network in medical imaging: A review. Med Image Anal. 2019, 58, 101552.

- Kazeminia, S.; Baur, C.; Kuijper, A.; van Ginneken, B.; Navab, N.; Albarqouni, S.; Mukhopadhyay, A. GANs for medical image analysis. Artificial Intelligence in Medicine. 2020, 109, 101938.

- Sampath, V.; Maurtua, I.; Aguilar Martín, J.J.; Gutierrez, A. A survey on generative adversarial networks for imbalance problems in computer vision tasks. Journal of Big Data. 2021, 8, 1–59.

- Bissoto, A.; Avila, S. Improving Skin Lesion Analysis with Generative Adversarial Networks. In Anais Estendidos do XXXIII Conference on Graphics, Patterns and Images, 2020; 70–76.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. In Proc. International Conference on Learning Representations, 2016. [Google Scholar] [CrossRef]

- Gurumurthy, S.; Sarvadevabhatla, R.K.; Babu, R.V. DeLiGAN: Generative adversarial networks for diverse and limited data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA. 2017; pp. 166–174. [Google Scholar] [CrossRef]

- Ma, Y.; Zhong, G.; Wang, Y.; Liu, W. MetaCGAN: A Novel GAN Model for Generating High Quality and Diversity Images with Few Training Data. 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK. 2020, pp. 1–7. [CrossRef]

- Van Den Oord, A.; Kalchbrenner, N.; Kavukcuoglu, K. Pixel recurrent neural networks. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA. 2016, 4, 2611–2620. [Google Scholar] [CrossRef]

- Van Den Oord, A.; Kalchbrenner, N.; Vinyals, O.; Espeholt, L.; Graves, A.; Kavukcuoglu, K. Conditional image generation with PixelCNN decoders. Adv. Neural Inf. Process. Syst. 2016, 4797–4805. [Google Scholar] [CrossRef]

- Yi, X.; Walia, E.; Babyn, P. Unsupervised and semi-supervised learning with Categorical Generative Adversarial Networks assisted by Wasserstein distance for dermoscopy image Classification. in arXiv:1804.03700, 2018. arXiv:1804.03700, 2018. [CrossRef]

- Springenberg, J.T. Unsupervised and semi-supervised learning with categorical generative adversarial networks, International Conference on Learning Representations. 2016. [CrossRef]

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A. Improved training of Wasserstein GANs, In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS'17). Curran Associates Inc., Red Hook, NY, USA; 5769–57792017.2017. [Google Scholar] [CrossRef]

- Gutman, D.; Codella, N.C.; Celebi, E.; Helba, B.; Marchetti, M.; Mishra, N.; Halpern, A. Skin Lesion Analysis Toward Melanoma Detection: A Challenge at The International Symposium on Biomedical Imaging (ISBI) 2016, Hosted by The International Skin Imaging Collaboration (ISIC), 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 2018, pp. 168-172. [CrossRef]

- Liu, Y.; Chen, A.; Shi, H.; Huang, S.; Zheng, W.; Liu, Z.; Zhang, Q.; Yang, X. CT Synthesis From MRI Using Multi-Cycle GAN For Head-And-Neck Radiation Therapy. Comput. Med. Imaging Graph. 2021, 91, 101953. [Google Scholar] [CrossRef] [PubMed]

- Baur, C.; Albarqouni, S.; Navab, N. MelanoGANs: high-resolution skin lesion synthesis with GANs. 1st Conference on Medical Imaging with Deep Learning (MIDL 2018), Amsterdam, The Netherlands, 2018. [CrossRef]

- Yan, S.; Liu, Y.; Li, J.; Xiao, H. DDGAN: Double Discriminators GAN for Accurate Image Colorization. 2020 6th International Conference on Big Data and Information Analytics (BigDIA), Shenzhen, China, 2020, pp. 214–219. [CrossRef]

- Denton, E.L.; Chintala, S.; Szlam, A.; Fergus, R. Deep generative image models using a Laplacian pyramid of adversarial networks. Advances in Neural Information Processing Systems 28 (NIPS), 2015, pp. 1486–1494.

- Fossen-Romsaas, S.; Storm-Johannessen, A.; Lundervold, A.S. Synthesizing skin lesion images using CycleGANs - a case study. In Proc. NIK-2020 Conference. 2020. [Google Scholar]

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In Proceedings of the 6th International Conference on Learning Representations (ICLR 2018), 2018.

- Baur, C.; Albarqouni, S.; Navab, N. Generating Highly Realistic Images of Skin Lesions with GANs. In OR 2.0 Context-Aware Operating Theaters, Computer-Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis. CARE CLIP OR 2.0 ISIC 2018, 11041, 260–267, Springer, Cham. [Google Scholar] [CrossRef]

- Jiang, M.; Zhi, M.; Wei, L.; Yang, X.; Zhang, J.; Li, Y.; Wang, P.; Huang, J.; Yang, G. FA-GAN: Fused Attentive Generative Adversarial Networks for MRI Image Super-Resolution. Comput. Med. Imaging Graph. 2021, 92, 101969. [Google Scholar] [CrossRef]

- Wang, T.; Liu, M.; Zhu, J.; Tao, A.; Kautz, J.; Catanzaro, B. High-resolution image synthesis and semantic manipulation with conditional GANs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- Beynek, B.; Bora, Ş.; Evren, V.; Ugur, A. Synthetic Skin Cancer Image Data Generation Using Generative Adversarial Neural Network. International Journal of Multidisciplinary Studies and Innovative Technologies. 2021, 5, 147–150. [Google Scholar]

- Pang, T.; Wong, J.H.D.; Ng, W.L.; Chan, C.S. Semi-supervised GAN-based Radiomics Model for Data Augmentation in Breast Ultrasound Mass Classification. Comput. Methods Programs Biomed. 2021, 203, 106018. [Google Scholar] [CrossRef]

- Shahsavari, A.; Ranjbari, S.; Khatibi, T. Proposing a novel Cascade Ensemble Super-Resolution Generative Adversarial Network (CESR-GAN) method for the reconstruction of super-resolution skin lesion images. Inform. Med. Unlocked. 2021, 24, 100628. [Google Scholar] [CrossRef]

- Rashid, H.; Tanveer, M.A.; Khan, H.A. Skin lesion classification using GAN-based data augmentation. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), 2019.

- Adhikari, A. Skin Cancer Detection using Generative Adversarial Network and an Ensemble of deep Convolutional Neural Networks, Master of Science in Engineering, The University of Toledo, Ohio, Dec 2019.

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset: a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161. [CrossRef]

- Huang, G.; Liu, Z.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016. [Google Scholar]

- Mutepfe, F.; Kalejahi, B.K.; Meshgini, S.; Danishvar, S. Generative Adversarial Network Image Synthesis Method for Skin Lesion Generation and Classification. J Med Signals Sens. 2021, 11, 237–252. [Google Scholar] [CrossRef]

- Wei, L.S.; Gan, Q.; Ji, T. Skin Disease Recognition Method Based on Image Color and Texture Features. Comput Math Methods Med. 2018, 8145713. [Google Scholar] [CrossRef]

- Devaraj, S.J. Emerging Paradigms in Transform-Based Medical Image Compression for Telemedicine Environment. Telemedicine Technologies. 2019, 15–29. [Google Scholar] [CrossRef]

- Zhu, Y.; Dai, Y.; Han, K. An efficient bicubic interpolation implementation for real-time image processing using hybrid computing. J Real-Time Image Proc. 2022, 19, 1211–1223. [Google Scholar] [CrossRef]

- Rajarapollu, P.R.; Mankar, V.R. Bicubic Interpolation Algorithm Implementation for Image Appearance Enhancement. Int. J. of Computer Science and Tech. 2017.

- Nuno-Maganda, M.A.; Arias-Estrada, M.O. Real-time FPGA-based architecture for bicubic interpolation: an application for digital image scaling. 2005 International Conference on Reconfigurable Computing and FPGAs (ReConFig'05), Puebla, Mexico, 2005, pp. 8. [CrossRef]

- Triwijoyo, B.; Adil, A. Analysis of Medical Image Resizing Using Bicubic Interpolation Algorithm. Journal Ilmu Komputer. 2021, 14, 20–29. [Google Scholar] [CrossRef]

- Yuan, S.; Abe, M.; Taguchi, A.; Kawamata, M. High Accuracy Bicubic Interpolation Using Image Local Features. IEICE Transactions on Fundamentals of Electronics Communications and Computer Sciences. 2007, E90-A (8), 1611–1615. [Google Scholar] [CrossRef]

- Xie, Y.; Ning, L.; Wang, M.; Li, C. Image Enhancement Based on Histogram Equalization. Journal of Physics: Conference Series. 2019, 1314, 012161. [Google Scholar] [CrossRef]

- Gaddam, P.C.S.K.; Sunkara, P. Advanced Image Processing Using Histogram Equalization and Android Application Implementation. Master of Science in Electrical Engineering, Blekinge Institute of Technology, Sweden, 2016.

- Atta, M.; Ahmed, O.; Rashed, A., Eds.; Ahmed, M. Advances in Image Enhancement for Performance Improvement: Mathematics, Machine Learning and Deep Learning Solutions. 2021; pp. 1–14. [Google Scholar]

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Pearson International Edition, Florida, 2008; pp. 184–186.

- Wubuli, A.; Zhen-Hong, J.; Xi-Zhong, Q.; Jie, Y.; Kasabov, N. Medical image enhancement based on shearlet transform and unsharp masking. J. Med. Imag. Health Informat. 2014, 4, 814–818. [Google Scholar] [CrossRef]

- Reza, A.M. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44. [Google Scholar] [CrossRef]

- Agaian, S.S.; Panetta, K.; Grigoryan, A.M. Transform-based image enhancement algorithms with performance measure. IEEE Trans. Image Process. 2001, 10, 367–382. [Google Scholar] [CrossRef]

- Polesel, A.; Ramponi, G.; Mathews, V.J. Image enhancement via adaptive unsharp masking. IEEE Trans. Image Process. 2000, 9, 505–510. [Google Scholar] [CrossRef]

- Munadi, K.; Muchtar, K.; Maulina, N.; Pradhan, B. Image Enhancement for Tuberculosis Detection Using Deep Learning. IEEE Access. 2020, 8, 217897–217907. [CrossRef]

- Nevils, B.; Mimbs, T.; Sailesh, A.; Naheed, N. High Frequency Emphasis Filter Instead of Homomorphic Filter. 2018 International Conference on Computational Science and Computational Intelligence (CSCI), 2018; 482–484. [Google Scholar] [CrossRef]

- Santhosh, B.; Rishikesan, J.; Sundar, K.; Kalaiyarasi, M. Filters in Medical Image Processing. Suraj Punj Journal for Multidisciplinary Research. 2021, 11, 135–140. [Google Scholar]

- Rodríguez-Rodríguez, J.A.; Molina-Cabello, M.A.; Benítez-Rochel, R.; López-Rubio, E. The effect of image enhancement algorithms on convolutional neural networks. 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 2021; 3084–3089. [Google Scholar] [CrossRef]

- Hasan, M.K.; Dahal, L.; Samarakoon, P.N.; Tushar, F.I.; Martí, R. DSNet: Automatic dermoscopic skin lesion segmentation. Computers in Biology and Medicine. 2020, 120, 103738. [Google Scholar] [CrossRef]

- Hoshyar, A.N.; Al-Jumaily, A.; Hoshyar, A.N. The Beneficial Techniques in Preprocessing Step of Skin Cancer Detection System Comparing. Procedia Computer Science. 2014, 42, 25–31. [Google Scholar] [CrossRef]

- International Color Consortium, Specification ICC.1: 2004-10 (Profile version 4.2.0.0) Image Technology Colour Management — Architecture, Profile Format, and Data Structure, International Color Consortium, 2006, Revised 2019.

- Al-saleem, R.M.; Al-Hilali, B.M.; Abboud, I.K. Mathematical Representation of Color Spaces and Its Role in Communication Systems. Journal of Applied Mathematics. 2020, 2020, 7. [Google Scholar] [CrossRef]

- Ruslau, M.F.V.; Pratama, R.A.; Nurhayati; Asmal, S. Edge detection in noisy images with different edge types. IOP Conf. Ser.: Earth Environ. Sci. 2019, 343, 012198. [Google Scholar] [CrossRef]

- Church, J.C.; Chen, Y.; Stephen, V.; Rice, A. Spatial Median Filter for Noise Removal in Digital Images. Rice Department of Computer and Information Science, University of Mississippi; 2009; pp. 618–623.

- Rajlaxmi, C.; Pradeep, K.C.; Kumar, R. Contrast enhancement of dark images using stochastic resonance in the wavelet domain. International Journal of Machine Learning and Computing. 2012, 2, 671–676. [Google Scholar]

- Janani, P. Image Enhancement Techniques: A Study. Indian Journal of Science and Technology. 2015, 8, 1–12. [CrossRef]

- Yadav, S.S.; Jadhav, S.M. Deep convolutional neural network based medical image classification for disease diagnosis. Journal of Big Data. 2019, 6 (1). [CrossRef]

- Wang, J.; Yang, Y.; Wang, T.; Sherratt, R.S.; Zhang, J. Big data service architecture: a survey. Journal of Internet Technology. 2020, 21, 393–405. [Google Scholar]

- Qin, Z.; Liu, Z.; Zhu, P.; Xue, Y. A GAN-based image synthesis method for skin lesion classification. Computer methods and programs in biomedicine. 2020, 195, 105568. [Google Scholar] [CrossRef]

- Khan, M.H.M.; Kaudeer, N.G.S.; Dayalen, M. Multiclass Skin Problem Classification Using Deep Generative Adversarial Network (DGAN). Computational Intelligence and Neuroscience. 2022, 2022, 13. [Google Scholar] [CrossRef]

- Zhao, C.; Shuai, R.; Ma, L.; Liu, W.; Hu, D.; Wu, M. Dermoscopy Image Classification Based on StyleGAN and DenseNet201. IEEE Access. 2021, 9, 8659–8679. [Google Scholar] [CrossRef]

- Liu, B.; Lv, J.; Fan, X.; Luo, J.; Zou, T. Application of an Improved DCGAN for Image Generation. Mobile Information Systems, 2022, 14. [CrossRef]

- Zhong, G.; Gao, W.; Liu, Y.; Yang, Y. Generative Adversarial Networks with Decoder-Encoder Output Noise. Neural Networks. 2020, 127, 19–28. [Google Scholar] [CrossRef]

- Ghassemi, N.; Shoeibi, A.; Rouhani, M. Deep neural network with generative adversarial networks training for brain tumour classification based on MR images. Biomed Signal Process Control. 2020, 57. [Google Scholar] [CrossRef]

- Behara, K.; Bhero, E.; Agee, J.T.; Gonela, V. Artificial Intelligence in Medical Diagnostics: A Review from a South African Context. Scientific African, 2022, 17.

- Nilsson, J. Understanding SSIM. Nvidia Inc., 2020. [CrossRef]

Figure 1.

Sample images of Benign and Malignant.

Figure 2.

A Framework of the Proposed GAN-based Classifier.

Figure 3.

Preprocessing Pipeline.

Figure 4.

Flow diagram of proposed DCGAN Architecture for Skin Lesion Classification.

Figure 5.

Improved DCGAN Framework.

Figure 6.

Three Methods of Interpolation for Rescaling the Image (a) Original image (b) Nearest Neighbor (c) Bilinear (d) Bicubic Interpolation.

Figure 7.

Distribution of Pixel Intensities.

Figure 8.

Equalized Image Histogram.

Figure 9.

Transformed Equalized Histogram.

Figure 10.

Histogram Comparison (a) USM image (b) GHPF.

Figure 11.

Image comparison between Original, USM, GHPF and combined sharpening.

Figure 12.

Balanced Color Space Image Transformation.

Figure 13.

Implementation of Median Filter on various noises.

Figure 15.

DCGAN Generator and Discriminator Score for each iteration.

Figure 16.

DCGAN Training Accuracy and Loss with a Learning Rate of 0.01.

Figure 17.

DCGAN Training Accuracy and Loss with a Learning Rate of 0.001.

Figure 18.

DCGAN Training Accuracy and Loss with a Learning Rate of 0.0002.

Figure 19.

DCGAN Confusion Matrix.

Figure 20.

DCGAN AUC-ROC Plot.

Figure 21.

Performance Metrics: Improved DCGAN.

Table 1.

State-of-the-art Methods Comparison.

| Authors | Techniques | Dataset | Observations | Accuracy (%) |
|---|---|---|---|---|
| [19] | Pix2Pix GAN | ISIC 2017 | The image-to-image translation was done via binary classification using a combination of semantic and instance mappings. | 84.7 |
| [34] | GAN with Raman Spectroscopy | Raman Spectroscopy | The authors created a data augmentation module that uses a GAN to generate RS data comparable to the training data classes. | 92 |

| [46] |

cGAN and WGAN |

ISIC 2016 |