1. Introduction

Securing passengers and mitigating risks have become challenging with growing populations and varying threats. There is, therefore, an increasing demand for detection systems that can reveal risks to the public, and in particular for devices that can reveal these risks while individuals walk in a continuous flow.

Even though this need is obvious, many studies in the microwave [1, 2, 3, 4] or millimeter-wave [5, 6] regions have addressed the stationary case, where an individual must stand still and be illuminated for several seconds. In the coming years, due to the increasing number of passengers, such solutions will not be sufficient to scan the predicted flow in a reasonable time.

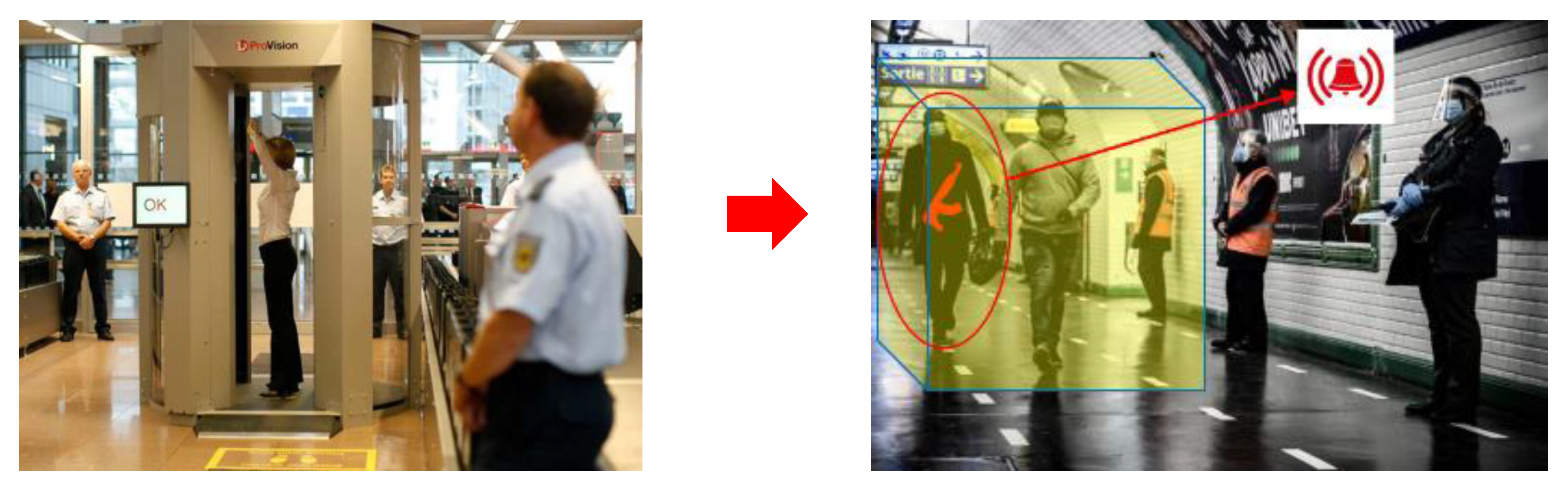

The objective of MIC (Microwave Imaging Curtain) is to serve as a proof-of-concept prototype for the automatic standoff detection of firearms and explosive belts hidden beneath the clothing of individuals walking in a continuous flow. Microwave signals can penetrate non-metallic materials, and millimeter-wave sensors are already used for passenger inspection before aircraft boarding [4]. However, the individual under inspection must remain stationary at the center of the scanner and be illuminated for several seconds (Figure 1, left). These detection systems have numerous drawbacks, including their lack of discretion and the creation of a crowd of vulnerable people in front of the checkpoint.

MIC seeks to complement this detection principle by automating the inspection of a continuous flow of individuals over a much larger search volume and on a much shorter timescale. This challenge can be met by targeting larger objects than in aircraft boarding scenarios (e.g., automatic rifles instead of small ceramic knives). The scientific and technical challenges therefore lie in real-time operation over a large observable volume, with the possibility of relaxing the resolution constraint to the level needed to detect and identify the targeted threats (Figure 1, right).

The MIC project aims to design, develop, and test a radar-based imaging device in a representative environment that addresses non-checkpoint firearms detection issues, which are increasingly challenging for mass transportation system operators and organizers of large public events. In accordance with current regulations regarding the impact of radiation on human health and privacy protection, the project integrates off-the-shelf high-performance microwave modules and develops specific signal processing algorithms to reconstruct 3D images of objects carried by moving individuals in the field of view of transmitting modules and scattered wave-receiving modules. Post-processing of such images will perform automatic detection of dangerous objects.

2. The imaging system hardware description

2.1. System Modular Structure

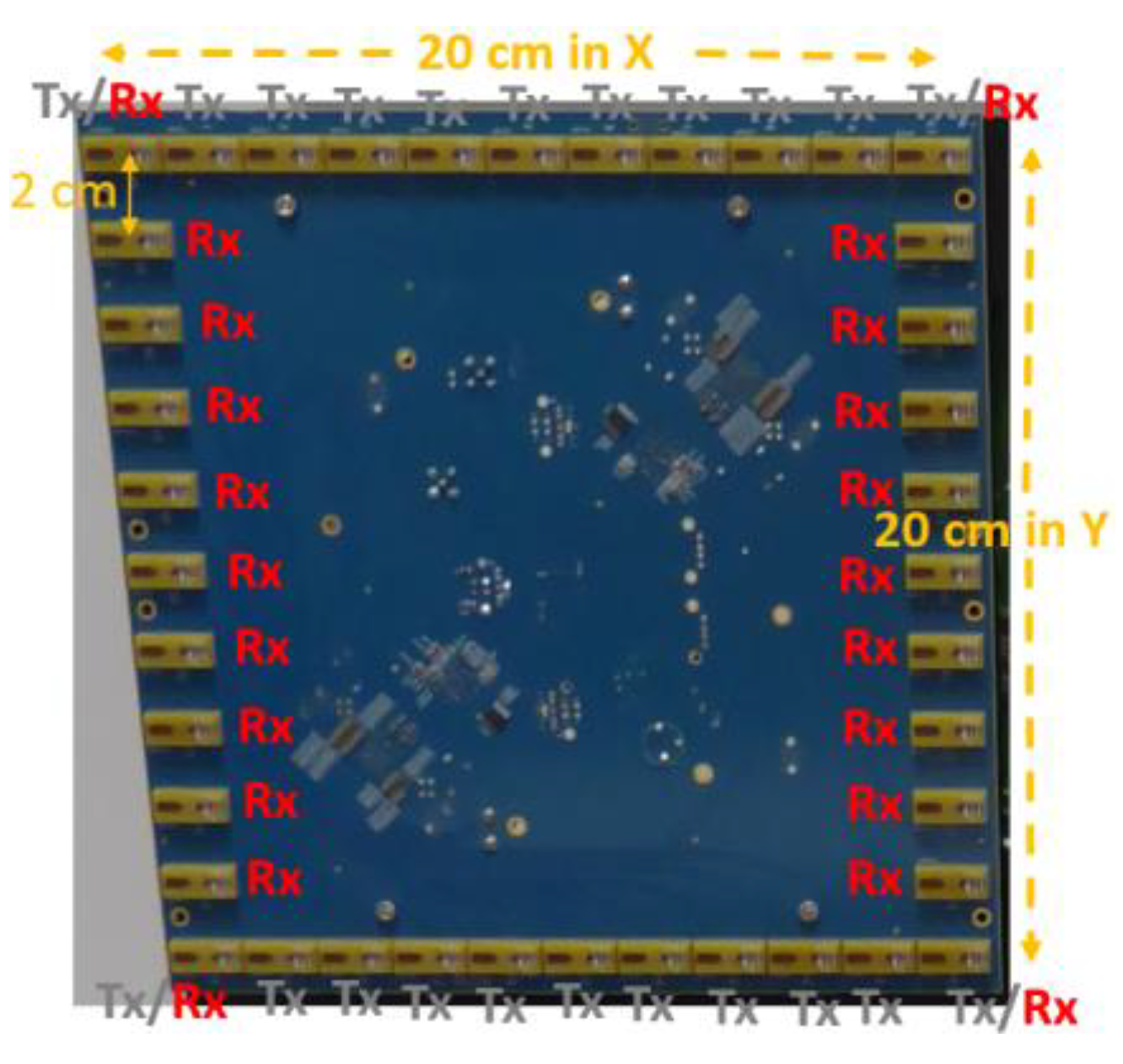

The system is based on a modular structure. Each module (that is an off-the-shelf device, produced by the Vayyar company [

7]) in

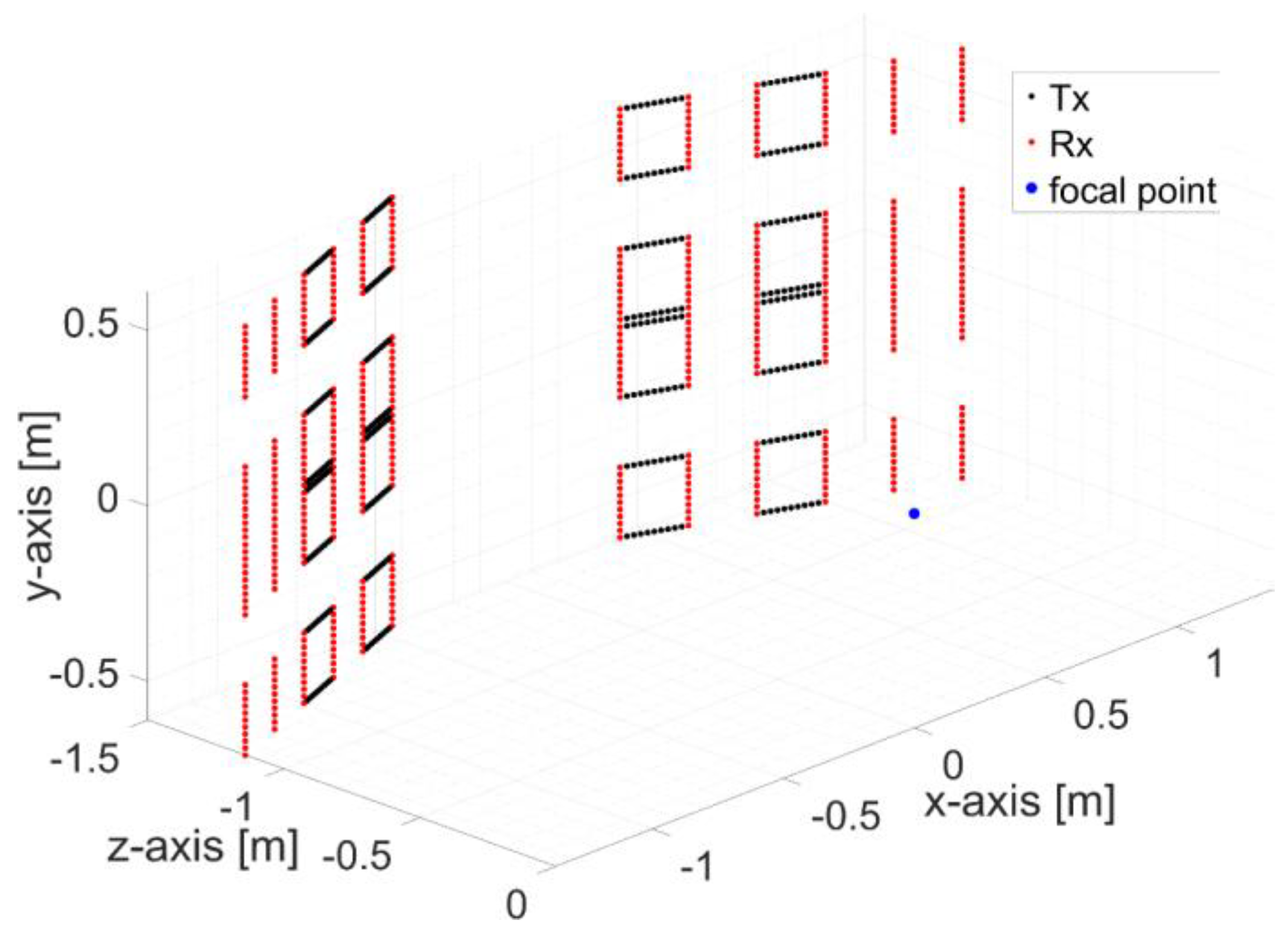

Figure 2 consists of 20 transmit and 20 receive elements that are distributed along a quadratic perimeter. The array size is 20 cm in both x-y-axes. The module operates at 8.5 GHz central frequency with 4 GHz bandwidth (3.53 cm central wavelength). The transmitted radar waveform is a classic Step-Frequency Continuous Wave (SFCW). 3 dB antenna aperture is about 100°.
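As a quick consistency check on these figures, the sketch below derives the central wavelength and band edges from the stated 8.5 GHz centre frequency and 4 GHz bandwidth. It also adds the textbook SFCW range resolution c/(2B), which is not given in the text; function and key names are illustrative.

```python
C0 = 299_792_458.0  # speed of light in vacuum (m/s)


def sfcw_parameters(f_center_hz, bandwidth_hz):
    """Basic SFCW figures for a given centre frequency and bandwidth."""
    return {
        "f_min_hz": f_center_hz - bandwidth_hz / 2,
        "f_max_hz": f_center_hz + bandwidth_hz / 2,
        "wavelength_m": C0 / f_center_hz,
        # textbook range resolution of a stepped-frequency waveform: c / (2B)
        "range_resolution_m": C0 / (2 * bandwidth_hz),
    }


params = sfcw_parameters(8.5e9, 4e9)
print(f"{params['wavelength_m'] * 100:.2f} cm")  # 3.53 cm
```

The band edges come out at 6.5 and 10.5 GHz, and the range resolution at about 3.75 cm.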

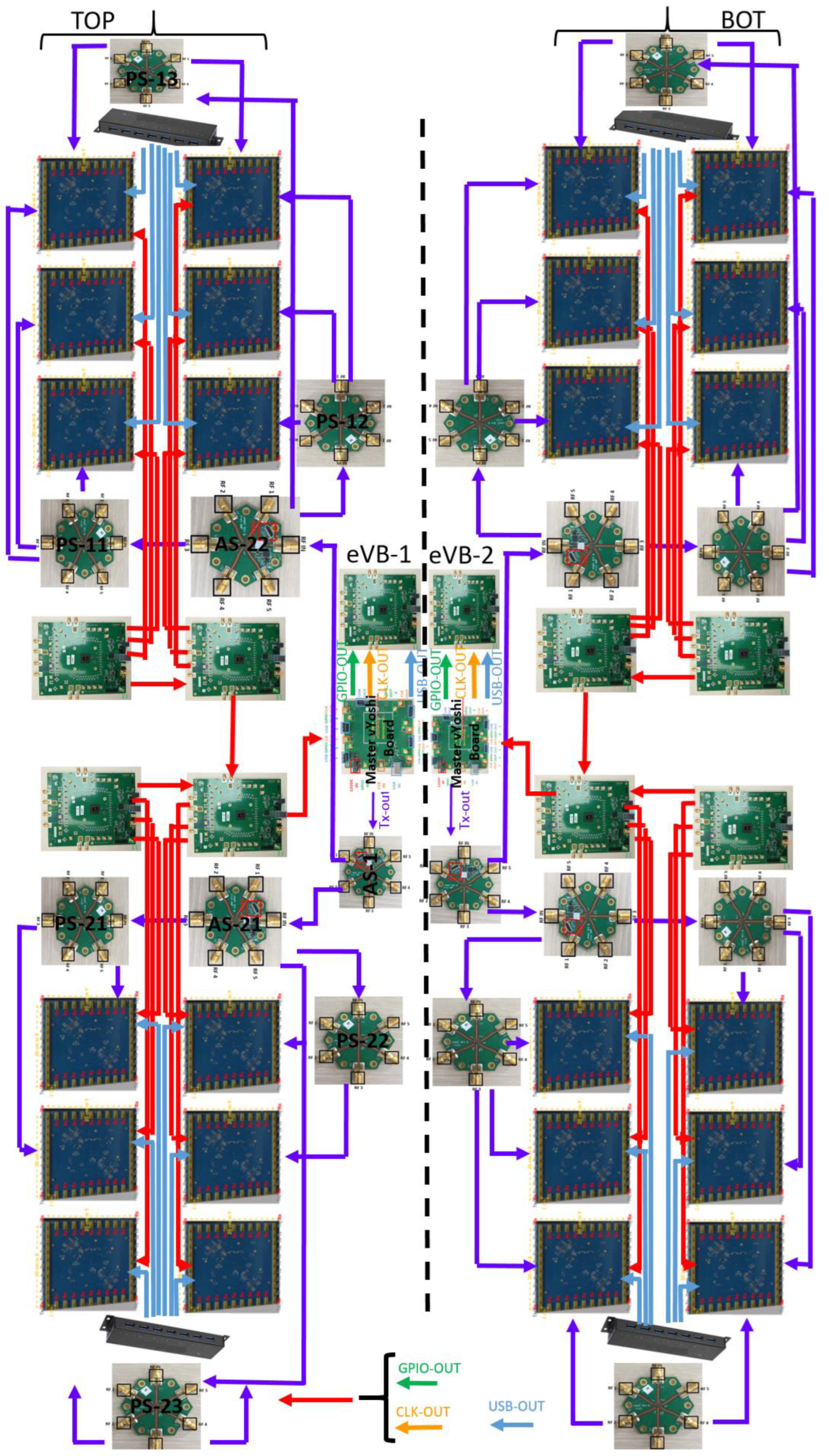

The complete imager is composed of two independent sub-systems, a top one (TOP) and a bottom one (BOT), mounted on top of each other along the vertical axis. Each sub-system is built as a tree structure that combines 12 MIMO modules (Figure 3). This MIMO technique enables the creation of a large, high-resolution synthetic antenna. Each sub-system operates fully coherently because, instead of using a T/R module for each antenna, the RF signal for every transmitter element is generated by a single MMIC placed on a central master board. These signals are then distributed to every module through passive and active signal splitters (Figure 3).

Four of the antennas on each module can operate as both transmit and receive elements. Thus, although each module has 40 antennas, it provides 22 Tx and 22 Rx antennas.

Together, the two sub-systems comprise 24 modules with 528 transmit and 528 receive antennas. Only 352 transmit antennas are used, while all receive antennas are employed in both sub-systems. Each sub-system works independently: its transmit antennas are paired only with its own receive antennas. This limits the total number of antenna pairs to 92,928, half of them formed by the TOP sub-system and the other half by the BOT sub-system. This design reduces both the complexity of the final imaging system and the computational cost of the radar image.
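The antenna-pair count follows directly from the per-module figures. The sketch below assumes the 176 active transmitters per sub-system quoted later in the velocity compensation paragraph, and compares the independent-sub-system design with a hypothetical fully cross-paired alternative; all names are illustrative.

```python
# Per-module and per-sub-system figures quoted in the text
TX_PER_MODULE = 22
RX_PER_MODULE = 22
MODULES_PER_SUBSYSTEM = 12
N_SUBSYSTEMS = 2
ACTIVE_TX_PER_SUBSYSTEM = 176  # transmitters multiplexed in time per sub-system


def antenna_budget():
    """Count antennas and Tx/Rx pairs for independent vs. fully cross-paired sub-systems."""
    rx_per_sub = RX_PER_MODULE * MODULES_PER_SUBSYSTEM       # 264
    tx_per_sub = TX_PER_MODULE * MODULES_PER_SUBSYSTEM       # 264 available
    pairs_per_sub = ACTIVE_TX_PER_SUBSYSTEM * rx_per_sub     # each Tx pairs only with its own Rx
    return {
        "tx_total": N_SUBSYSTEMS * tx_per_sub,
        "rx_total": N_SUBSYSTEMS * rx_per_sub,
        "tx_active": N_SUBSYSTEMS * ACTIVE_TX_PER_SUBSYSTEM,
        "pairs_independent": N_SUBSYSTEMS * pairs_per_sub,
        # hypothetical alternative: every active Tx paired with every Rx of both sub-systems
        "pairs_cross_paired": (N_SUBSYSTEMS * ACTIVE_TX_PER_SUBSYSTEM) * (N_SUBSYSTEMS * rx_per_sub),
    }


print(antenna_budget())
```

The independent design gives 176 × 264 = 46,464 pairs per sub-system, i.e. 92,928 in total, exactly half of the 185,856 pairs that full cross-pairing would require.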

System hardware parameters are summarized in Table 1.

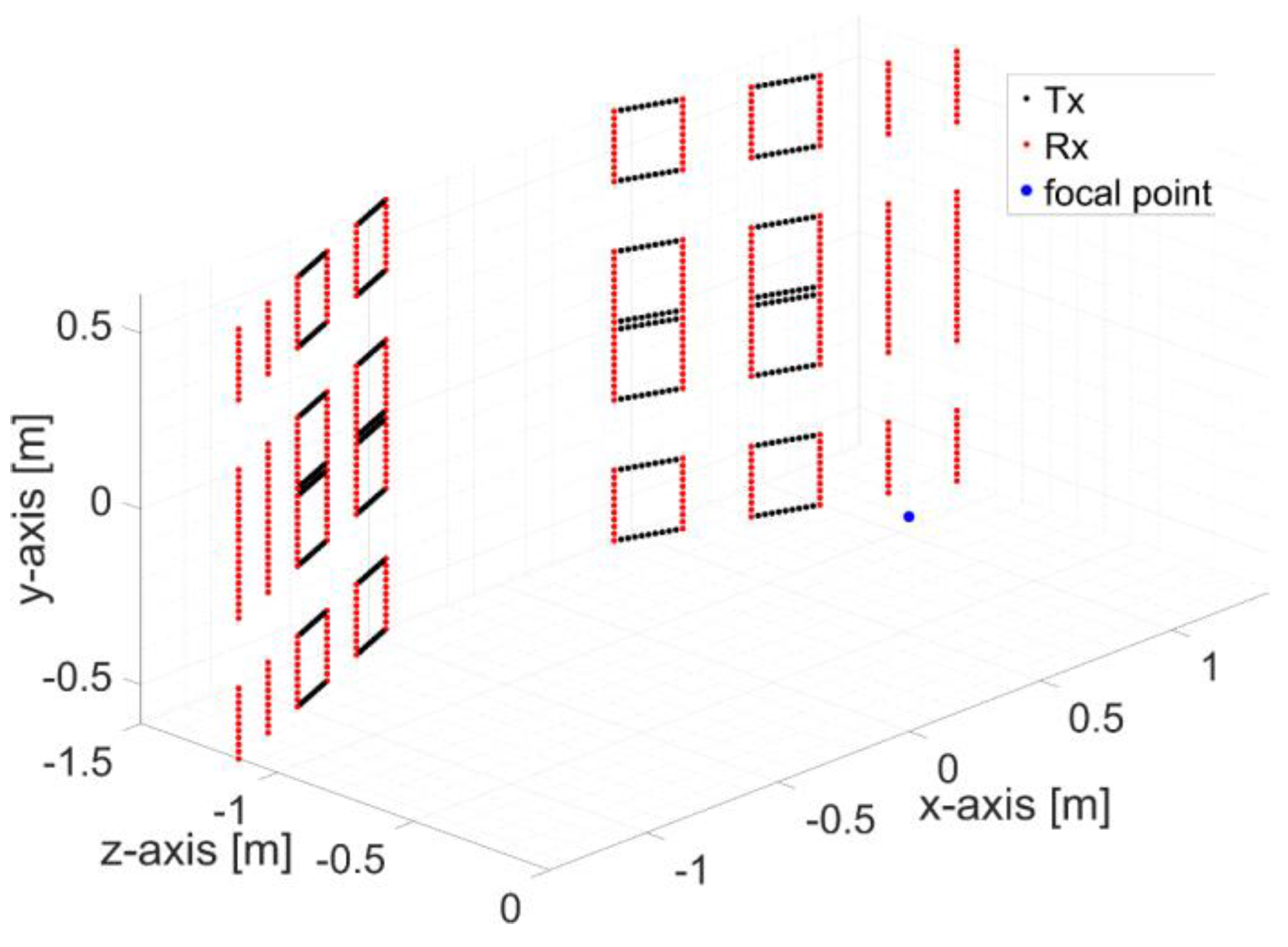

2.2. The MIC System Configuration

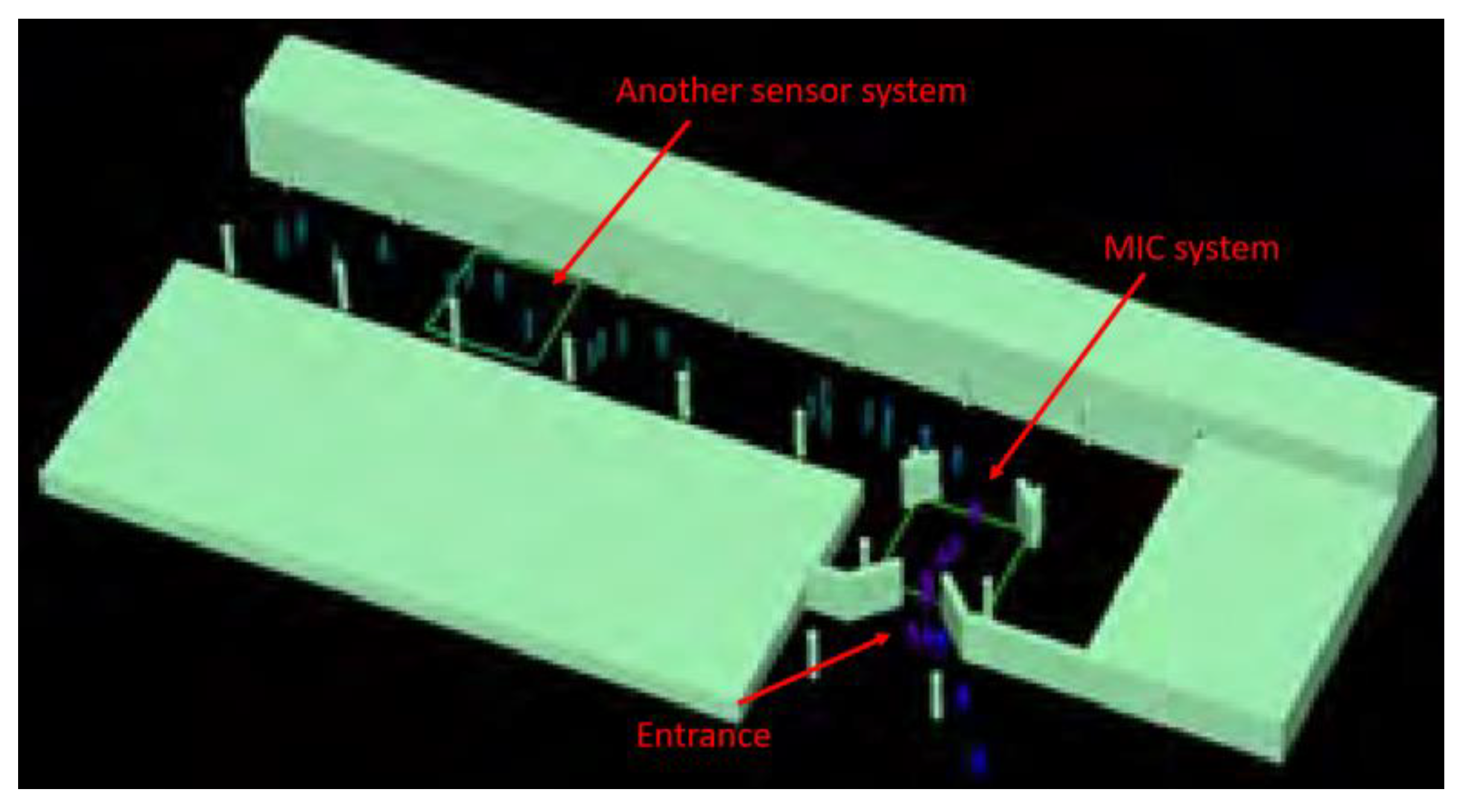

The flexible tree structure allows many antenna array configurations. After studies and simulations, as a compromise between cross-range resolution, system discretion, and control of the trajectory of the persons to be imaged, a door configuration was selected with an 87 cm width between the panels, typical of a standard office door. The panels are oriented to focus energy on the area of interest (from 0.5 to 2.5 m from the door threshold). Passengers walk through the MIC sensor one by one.

The two sub-systems, 24 panels in total, are assembled one on top of the other to image a volume from 0.8 to 2 m above the ground (Figure 4).

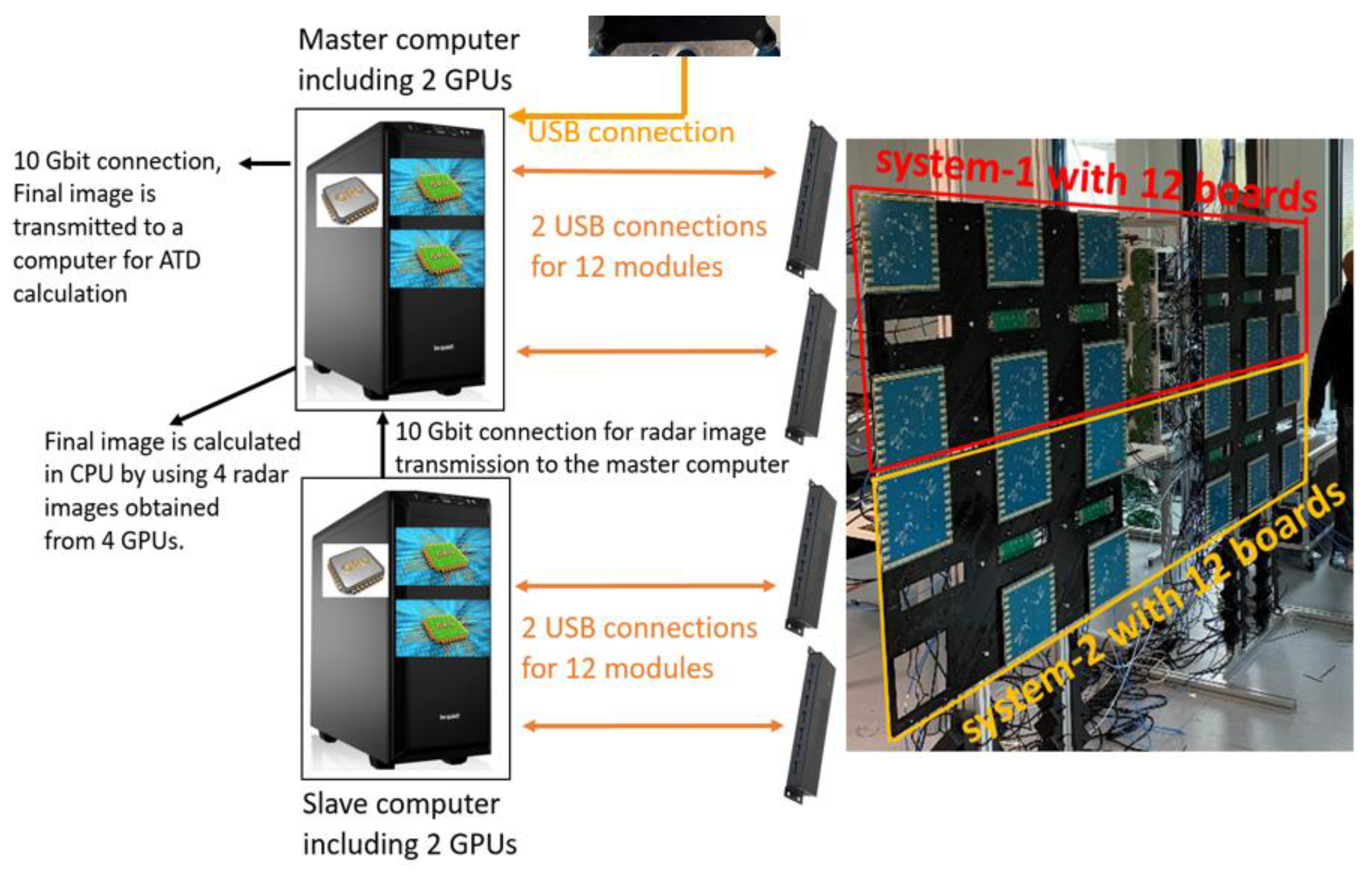

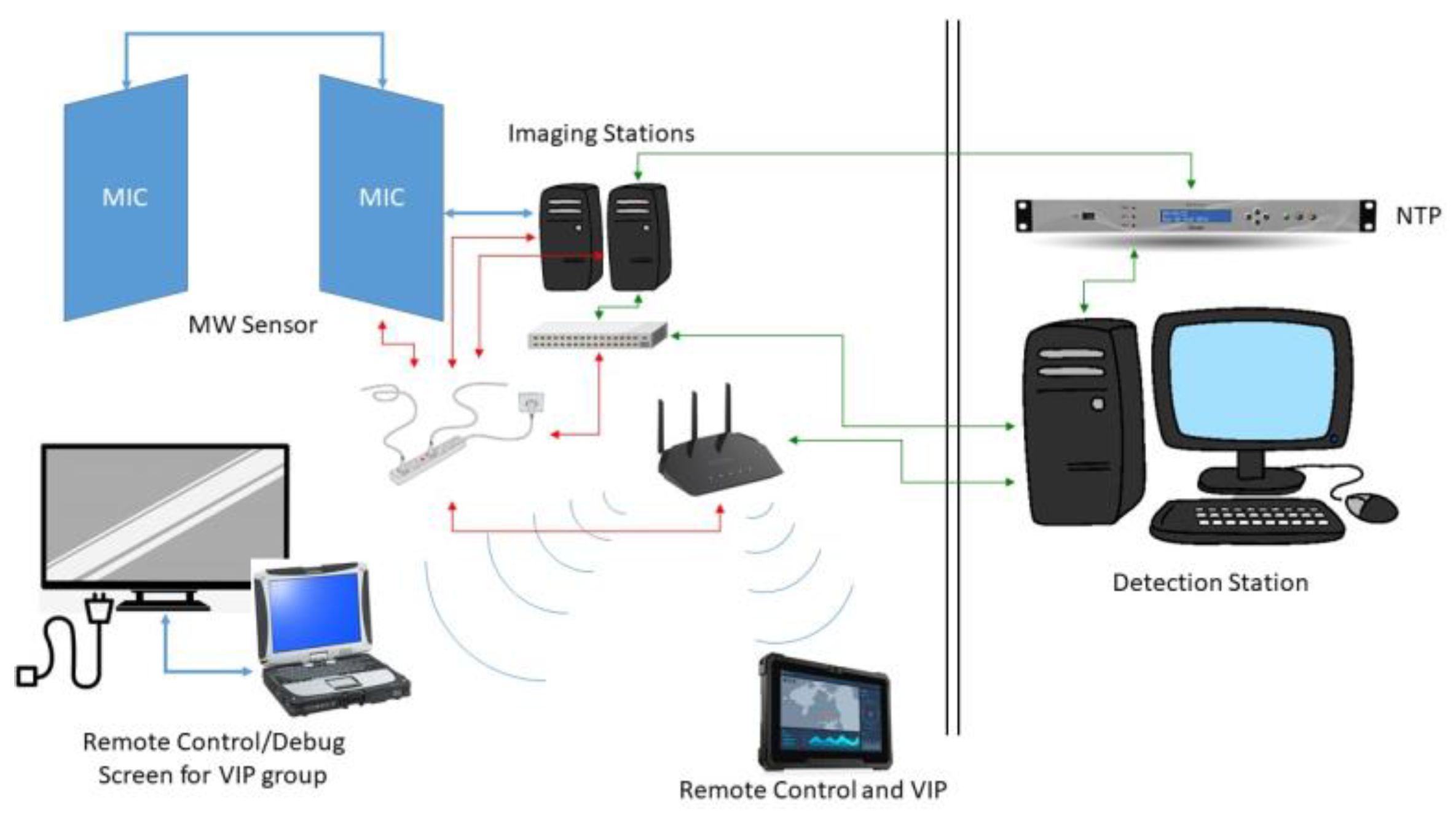

2.2.1. The MIC System Connection to Workstations

The imaging system is connected to its workstations as shown in Figure 5; each workstation includes two GPUs.

Once a measurement is performed, the data collected by the TOP and BOT radar sub-systems are transferred to their respective workstations for processing. The radar image reconstructed for the BOT sub-system is then transferred to the TOP workstation, where a coherent summation is performed on the CPU to create the final radar image. This final image, a 3D matrix of complex values, is then transferred to the Automated Target Detection processing station for automatic target detection.

2.3. The Imaging System Algorithm

2.3.1. Imaging Algorithm

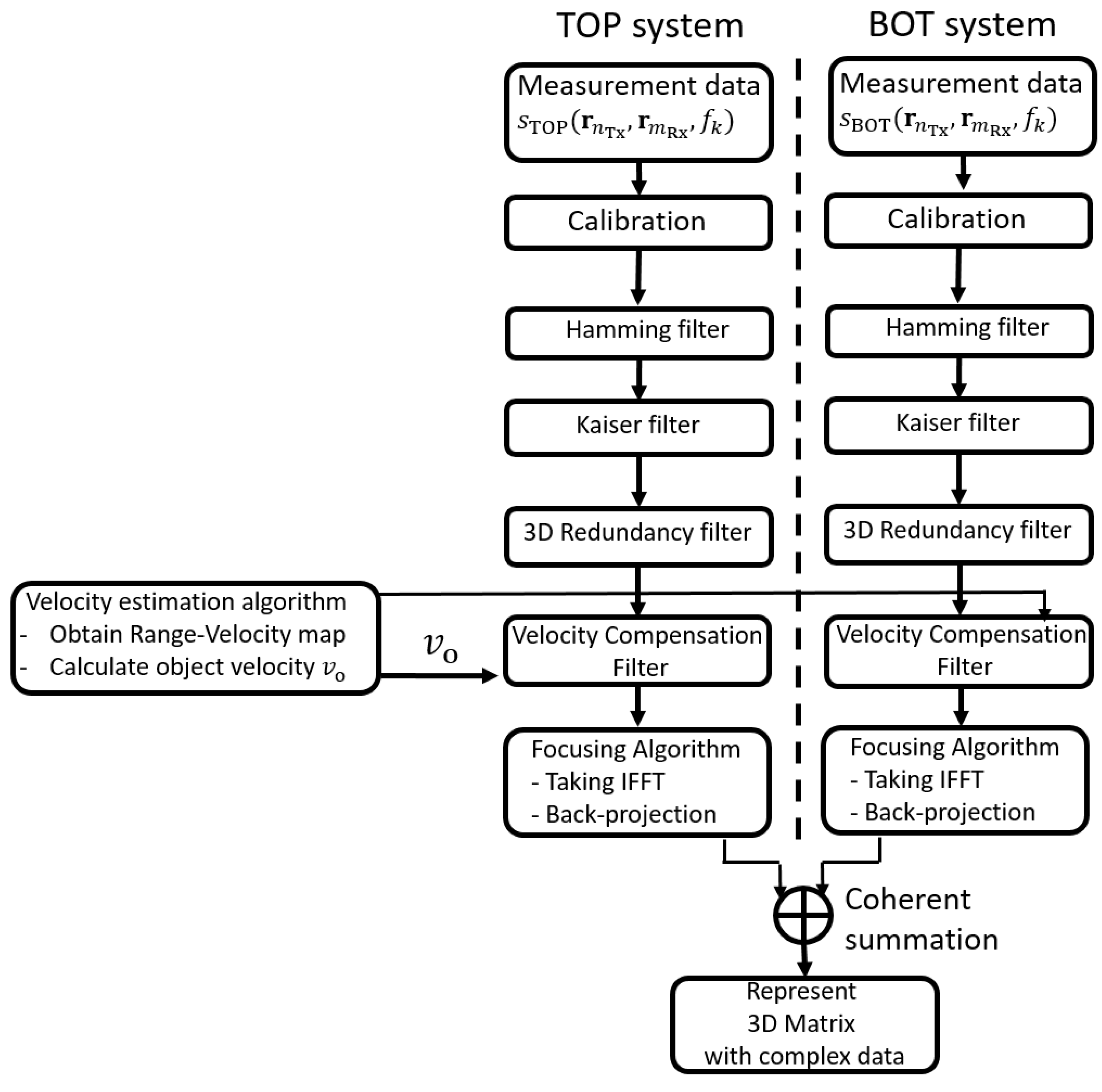

The imaging algorithm is critical to the quality of the radar image, as it chains the numerous signal processing functions that ultimately reveal the focused targets.

Figure 6 summarizes the imaging algorithm. The two sub-systems, TOP and BOT, illuminate the region of interest with SFCW signals and store the measurement data in complex format over the frequency band for every transmit/receive pair. Calibration coefficients, obtained with the active calibration method, are then applied to the measurement data. A Hamming filter is applied along the frequency axis for every transmit/receive pair to suppress range side lobes, and a Kaiser filter is subsequently applied to suppress lateral side lobes. Next, a multiplicity (virtual element redundancy) filter is employed to obtain a more homogeneous illumination over the 2D array aperture. A velocity compensation filter then compensates for the phase difference caused by the displacement of the person. An inverse fast Fourier transform (IFFT) is used to obtain the signal in the spatial domain, and a back-projection (BP) algorithm focuses the data into a 3D complex image. Finally, the 3D complex radar image of the BOT sub-system is transferred to the master workstation, where it is coherently summed with the TOP image to obtain the final 3D complex radar image.
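To make the focusing step concrete, here is a minimal back-projection sketch. It works directly in the frequency domain, summing phase-compensated samples over all frequencies and antenna pairs, which is equivalent in principle to the IFFT-then-back-project order described above but shorter to show. The data layout (n_tx, n_rx, n_freq), the exp(−j2πfR/c) signal convention, and all names are assumptions.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in vacuum (m/s)


def backproject(signal, freqs, tx_pos, rx_pos, voxels):
    """Frequency-domain back-projection (sketch).

    signal : (n_tx, n_rx, n_freq) calibrated, filtered complex data
    freqs  : (n_freq,) frequencies in Hz
    tx_pos : (n_tx, 3) transmit antenna positions in metres
    rx_pos : (n_rx, 3) receive antenna positions in metres
    voxels : (n_vox, 3) voxel centres in metres
    returns: (n_vox,) complex reflectivity estimate
    """
    d_tx = np.linalg.norm(voxels[:, None, :] - tx_pos[None, :, :], axis=-1)  # (n_vox, n_tx)
    d_rx = np.linalg.norm(voxels[:, None, :] - rx_pos[None, :, :], axis=-1)  # (n_vox, n_rx)
    path = d_tx[:, :, None] + d_rx[:, None, :]  # bistatic path length (n_vox, n_tx, n_rx)
    image = np.zeros(len(voxels), dtype=complex)
    for k, f in enumerate(freqs):
        # undo the propagation phase exp(-j 2 pi f R / c) and sum coherently
        phase = np.exp(2j * np.pi * f * path / C0)
        image += np.einsum("vnm,nm->v", phase, signal[:, :, k])
    return image
```

Under the same assumptions, the coherent TOP/BOT summation amounts to adding the two complex images computed on a common voxel grid.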

The steps below describe how the measurement data of the two sub-systems are processed to yield the final 3D complex radar image.

Calibration: Calibration is the first filter in the imaging algorithm and determines the quality of the radar image by compensating the channel responses. If the calibration is not performed rigorously, the quality of the radar image can degrade distinctly. More details are given in Section 2.3.2.

Hamming Filter: The Hamming filter is a well-known window frequently used in radar imaging. Here, a 1D Hamming filter is applied along the frequency axis to suppress the range side lobes that would otherwise degrade the quality of the radar image.
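A minimal sketch of the range side-lobe suppression, assuming the calibrated data are stored as (n_tx, n_rx, n_freq) with frequency on the last axis; NumPy's `np.hamming` supplies the window and the function name is illustrative.

```python
import numpy as np


def hamming_along_frequency(data):
    """Apply a 1D Hamming window along the last (frequency) axis.

    data: complex array shaped (n_tx, n_rx, n_freq), assumed layout.
    """
    window = np.hamming(data.shape[-1])  # 0.54 - 0.46*cos(2*pi*n/(N-1))
    return data * window                  # broadcasts over every Tx/Rx pair
```

For a flat spectrum, this lowers the first range side lobe of the IFFT profile from about −13 dB (rectangular window) to about −43 dB, at the cost of a wider main lobe.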

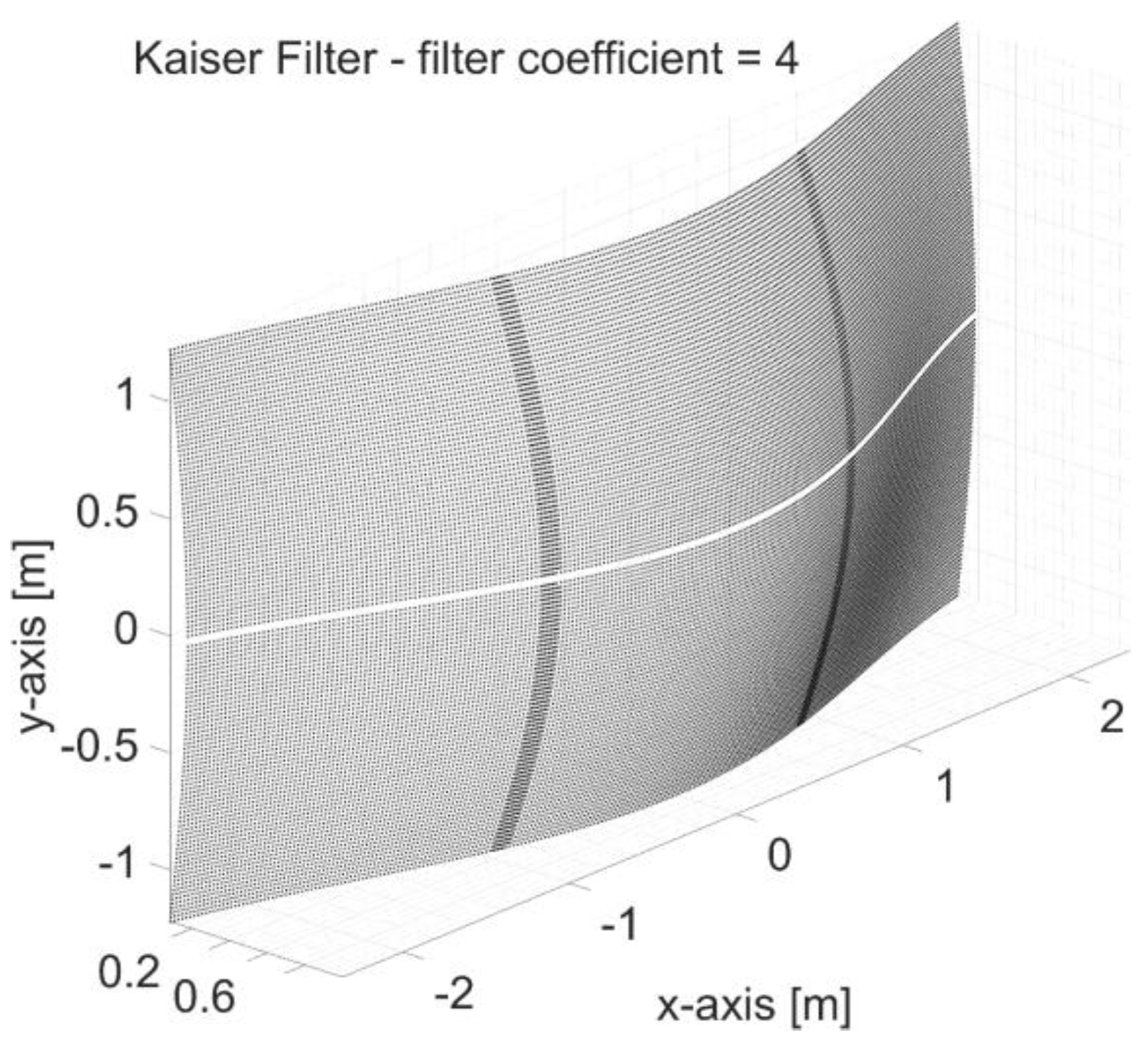

Kaiser Filter: The virtual elements lie over the virtual aperture of the MIMO array. Their abrupt truncation at the edges of the virtual aperture can also degrade image quality, so further smoothing may be required. The choice of the window coefficient influences the lateral focusing quality of the array, including both the side-lobe level and the image resolution.

Figure 7 shows a Kaiser filter with a coefficient of 4. The complex data of virtual elements located closer to the edges of the virtual aperture are multiplied, over the whole frequency range, by values closer to zero, whereas the data of virtual elements at the center of the virtual aperture are multiplied by one.
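The weighting described above can be sketched as a separable 2D Kaiser taper evaluated at each virtual element position. The sketch assumes that a virtual element sits at the midpoint of its transmit and receive antennas and that the aperture is described by a centre and a half-size in x and y; names are illustrative. With β = 4 the edge weight is 1/I0(4) ≈ 0.09 and the centre weight is one.

```python
import numpy as np


def kaiser_weight(u, beta=4.0):
    """Continuous Kaiser taper: 1 at u = 0, 1/I0(beta) at |u| = 1 (aperture edge)."""
    u = np.clip(np.asarray(u, dtype=float), -1.0, 1.0)
    return np.i0(beta * np.sqrt(1.0 - u ** 2)) / np.i0(beta)


def kaiser_aperture_weights(tx_xy, rx_xy, center_xy, half_size_xy, beta=4.0):
    """Separable 2D Kaiser weight for every Tx/Rx pair.

    tx_xy: (n_tx, 2), rx_xy: (n_rx, 2) antenna positions in the array plane.
    A virtual element is assumed to sit at the Tx/Rx midpoint.
    Returns (n_tx, n_rx) weights to multiply onto all frequency samples of each pair.
    """
    virt = (tx_xy[:, None, :] + rx_xy[None, :, :]) / 2.0
    u = (virt - center_xy) / half_size_xy  # normalised position: 0 at centre, +/-1 at edges
    return kaiser_weight(u[..., 0], beta) * kaiser_weight(u[..., 1], beta)
```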

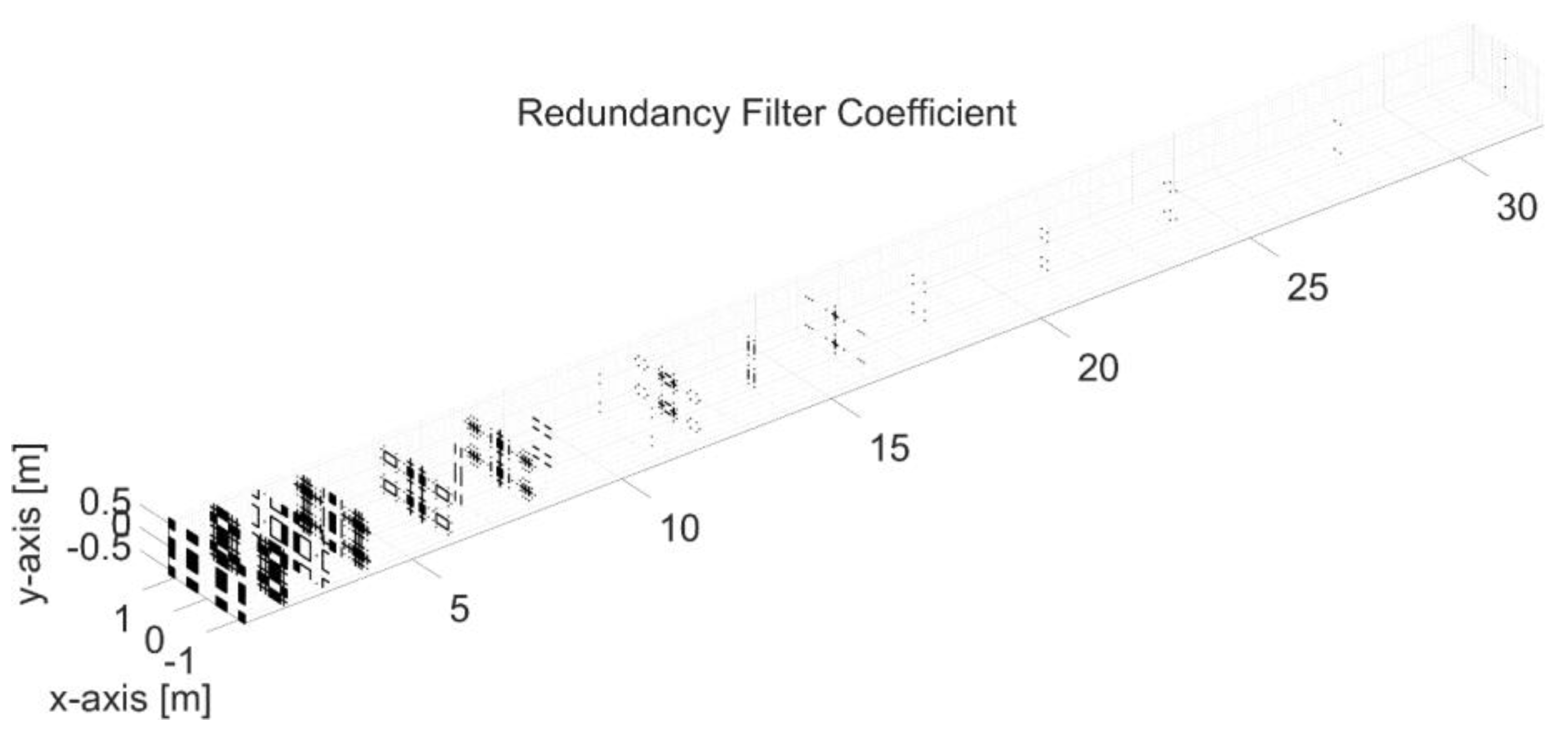

Virtual Element Redundancy Filter: The illumination over the virtual array aperture is generally not uniform and can include abrupt changes. Smoothing it is therefore necessary to avoid abrupt illumination changes and preserve image quality.

The filter first counts how many virtual elements are co-located at the same spatial position, using their 2D Cartesian coordinates, and then divides the corresponding complex data by this count.

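The redundancy-filtered signal is obtained as

$$s_{\mathrm{R}}(f_k, \mathbf{r}^{\mathrm{Tx}}_n, \mathbf{r}^{\mathrm{Rx}}_m) = \frac{s_{\mathrm{K}}(f_k, \mathbf{r}^{\mathrm{Tx}}_n, \mathbf{r}^{\mathrm{Rx}}_m)}{C_{nm}}, \qquad (1)$$

where $s_{\mathrm{K}}$ is the Kaiser-filtered signal, $C_{nm}$, shown in Figure 8, is a coefficient matrix indexed by transmit/receive antenna pair that stores the number of co-located virtual elements, $f_k$ is the frequency value, $\mathbf{r}^{\mathrm{Tx}}_n$ is the position of the n-th transmit antenna, and $\mathbf{r}^{\mathrm{Rx}}_m$ is the position of the m-th receive antenna.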
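A sketch of the redundancy filter, under the assumption that each virtual element sits at the midpoint of its transmit and receive antennas. Positions are snapped to a tolerance grid (`tol`, a hypothetical parameter) so that numerically equal midpoints are counted as co-located.

```python
import numpy as np


def redundancy_filter(signal, tx_xy, rx_xy, tol=1e-3):
    """Divide each Tx/Rx pair's data by the number of co-located virtual elements.

    signal: (n_tx, n_rx, n_freq) Kaiser-filtered data
    tx_xy, rx_xy: (n_tx, 2), (n_rx, 2) antenna positions in metres
    tol: grid (m) used to decide that two virtual elements coincide (hypothetical)
    Returns the filtered signal and the (n_tx, n_rx) multiplicity matrix.
    """
    virt = (tx_xy[:, None, :] + rx_xy[None, :, :]) / 2.0          # assumed midpoint model
    keys = np.round(virt.reshape(-1, 2) / tol).astype(np.int64)   # snap to a tolerance grid
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    multiplicity = counts[inverse.reshape(-1)].reshape(virt.shape[:2])
    return signal / multiplicity[:, :, None], multiplicity
```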

Velocity Estimation and Compensation: The illuminated person is in motion during the acquisition. Although a single transmission lasts less than a millisecond, the total measurement time is 70 ms because the 176 transmitter antennas of each sub-system operate in time-division multiplexing. The resulting movement shifts the target in the spatial domain and must be compensated by a velocity compensation filter.

The velocity estimation algorithm calculates the velocity of a walking person from an additional single-channel radar sensor operating at 80 GHz. First, static clutter is removed from the data of this speed measurement system. Then, a 2D fast Fourier transform (FFT) of the up-chirp and down-chirp data is computed to obtain two range-velocity maps. These two maps are multiplied pointwise to enhance the dynamic range. The center of gravity of the resulting spectrum gives the velocity of the person. Finally, the estimated object velocity is passed to the velocity compensation filter, as shown in Figure 6.
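The velocity estimation steps can be sketched as follows. The data layout (chirps along axis 0, fast-time samples along axis 1), the chirp interval, and computing the centre of gravity along the Doppler axis are assumptions; only the sequence of operations (clutter removal, 2D FFT of up- and down-chirp data, pointwise product, centre of gravity) comes from the text. The result is the radial velocity seen by the 80 GHz sensor, v = f_D·λ/2.

```python
import numpy as np


def range_doppler_map(chirps):
    """|2D FFT| of clutter-free chirp data; chirps: (n_chirps, n_samples) complex."""
    x = chirps - chirps.mean(axis=0, keepdims=True)  # static clutter: constant across chirps
    rd = np.fft.fft2(x)                               # axis 0 -> Doppler, axis 1 -> range
    return np.abs(np.fft.fftshift(rd, axes=0))        # put zero Doppler in the middle


def estimate_velocity(up, down, wavelength, chirp_interval):
    """Centre-of-gravity radial velocity (m/s) from up- and down-chirp data."""
    product = range_doppler_map(up) * range_doppler_map(down)  # enhances the common target
    f_doppler = np.fft.fftshift(np.fft.fftfreq(up.shape[0], d=chirp_interval))
    velocity_axis = f_doppler * wavelength / 2.0                # v = f_D * lambda / 2
    weights = product.sum(axis=1)                               # collapse the range axis
    return float(np.sum(velocity_axis * weights) / np.sum(weights))
```

A real system would restrict the centre of gravity to cells above a noise threshold; the sketch weights the whole map for brevity.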

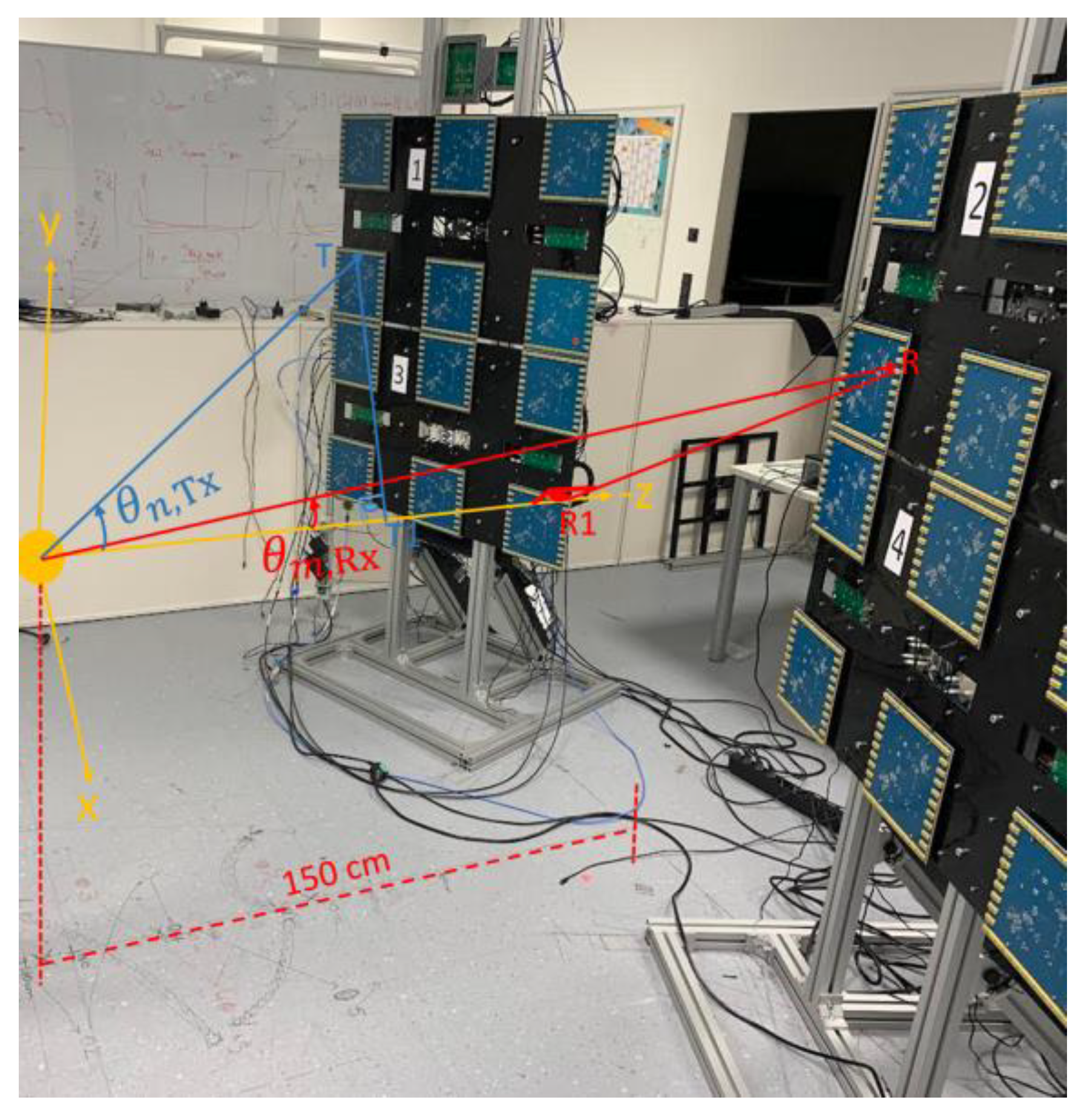

Velocity compensation

The filter first calculates, for every transmit and receive antenna, the transmission and reception angles shown in Figure 9, that is, the angles between the z-axis and the line segments from the center of the region of interest to the corresponding transmit and receive antennas.

For each transmitter/receiver pair, the total range displacement is the sum of the range displacements ΔTx and ΔRx of the transmitter and receiver antennas, respectively. These displacements are obtained from the estimated velocity, the corresponding transmission and reception angles, and tSingleTime, the transmission time of a single transmitter antenna. Finally, the velocity compensation coefficients are calculated as phase corrections from the total range displacement at each frequency fk, where c is the speed of electromagnetic waves in free space. These coefficients are then multiplied with the redundancy-filtered signal of Equation (1).
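A first-order sketch of the compensation, under explicit assumptions not stated in the text: the person moves at constant velocity along +z, transmitters fire in index order every t_single seconds while all receivers record during each slot, and the signal convention is exp(−j2πfR/c). For a displacement δ along z, the length of the segment from the region of interest to an antenna changes by about −δ·cos θ, where θ is the angle defined above. Function names are illustrative.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in vacuum (m/s)


def angles_to_z(antenna_pos, roi_center):
    """Angle between the z-axis and the segment from the ROI centre to each antenna."""
    d = antenna_pos - roi_center
    return np.arccos(d[:, 2] / np.linalg.norm(d, axis=1))


def velocity_compensation(freqs, theta_tx, theta_rx, v, t_single):
    """(n_tx, n_rx, n_freq) phase coefficients undoing the motion-induced range change.

    Assumptions: constant velocity v along +z, Tx fired in index order every t_single
    seconds, all receivers sampled during each Tx slot, signal model exp(-j 2 pi f R / c).
    """
    disp = v * np.arange(len(theta_tx)) * t_single    # target shift when Tx n fires
    # first-order change of each leg length: dR = -disp * cos(theta)
    d_tx = -disp * np.cos(theta_tx)                    # (n_tx,)
    d_rx = -disp[:, None] * np.cos(theta_rx)[None, :]  # (n_tx, n_rx)
    d_total = d_tx[:, None] + d_rx                     # total bistatic range change
    return np.exp(2j * np.pi * freqs[None, None, :] * d_total[:, :, None] / C0)
```

With the figures in the text, t_single = 70 ms / 176 ≈ 0.4 ms; at the 2 m/s walking speed quoted for the field trial, the person moves about 14 cm during one acquisition, roughly four central wavelengths, which is why the compensation is needed.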

2.3.2. Calibration Procedure

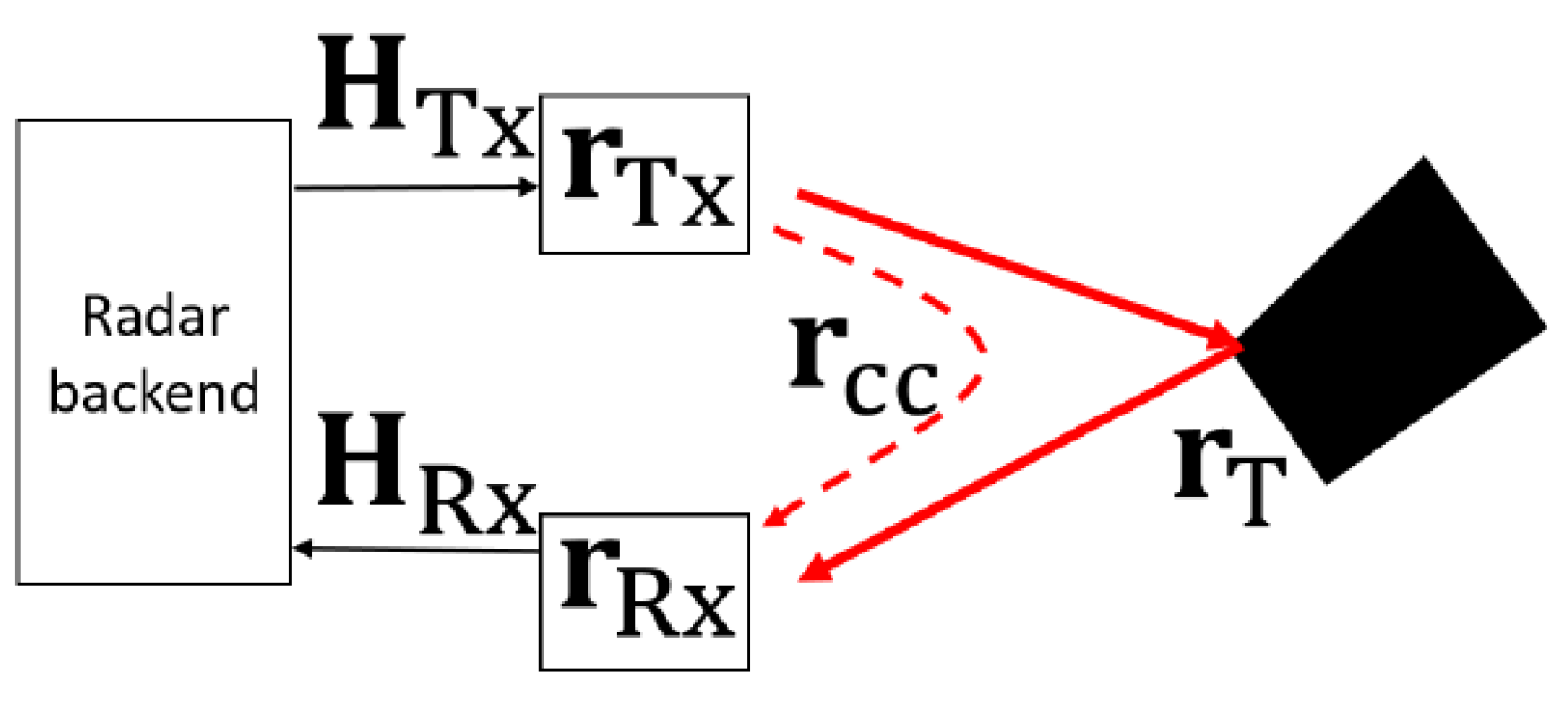

For a reliable reconstruction of the reflectivity map, the cross coupling and the channel responses in the measured signal must be compensated. Cross coupling alters the phase and amplitude of the measured signal and thereby degrades the radar image. In addition to cross coupling, the channel responses of all transmitter and receiver antennas also have a prominent effect on the measured signal.

As shown in

Figure 10, measurement

and background

signals can be represented as

where

and where

is the back reflected target response,

the channel responses for n-th transmit and m-th receive antennas, respectively, and

the cross coupling signal introduced with an amplitude of

. The background signals only consists of the cross coupling signals for every transmitter/receiver pair due to measurements performed in empty space. The cross coupling signal with its components is minimized by considering the background signal in a way. To isolate the channel responses

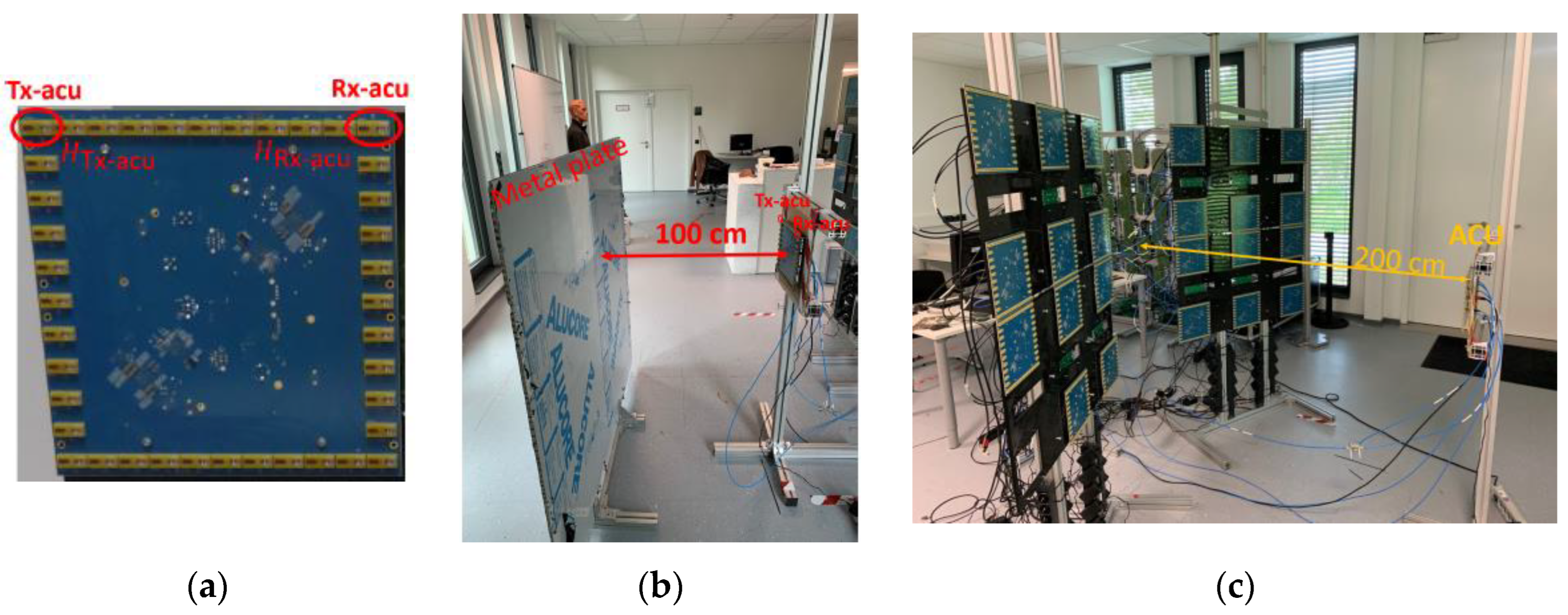

, a well-known scene has to be chosen which can be modeled without numerical effort. For the given configuration a reflective setup is not capable to cover the different orientation angles of the modules. Therefore, an active calibration method, based on an external “repeater” was used acting as an artificial point scatterer.

With the proposed calibration method, an external transceiver, the active calibration unit (ACU), is used as shown in Figure 11a. A pair of transmit (Tx-ACU) and receive (Rx-ACU) antennas on the ACU is assigned as the active calibration pair. In the active calibration method, the channel responses of the Tx-ACU and Rx-ACU antennas must be obtained first. To this end, 10 sequential measurements are performed with a metal plate positioned 100 cm from the ACU, as shown in Figure 11b. To minimize cross coupling, 10 sequential background measurements are subtracted from the metal plate measurements. The resulting signal measured on the ACU combines the ACU channel responses with the propagation path between the metal plate and the active calibration pair. The channel response of the ACU is then calculated by dividing this signal by a simulated propagation path between the active calibration pair and the metal plate, computed from the metal plate position and the positions of the Tx-ACU and Rx-ACU antennas.
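A sketch of the ACU channel-response step. The text does not give the simulated propagation model, so a free-space phase delay with 1/d spreading over the path Tx-ACU → plate → Rx-ACU is assumed here, with the plate reduced to a single reflection point. Because only the product of the Tx-ACU and Rx-ACU responses is observable in this measurement, the function returns that product; names are illustrative.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in vacuum (m/s)


def simulated_path(freqs, path_length):
    """Assumed free-space model: phase delay exp(-j 2 pi f d / c) with 1/d spreading."""
    return np.exp(-2j * np.pi * freqs * path_length / C0) / path_length


def acu_channel_response(plate_meas, background_meas, freqs, r_plate, r_tx_acu, r_rx_acu):
    """Product of the Tx-ACU and Rx-ACU channel responses from the metal-plate test.

    plate_meas, background_meas: (n_repeats, n_freq) sequential measurements
    r_plate, r_tx_acu, r_rx_acu: (3,) positions in metres (plate as one reflection point)
    """
    signal = plate_meas.mean(axis=0) - background_meas.mean(axis=0)  # remove cross coupling
    d = np.linalg.norm(r_plate - r_tx_acu) + np.linalg.norm(r_plate - r_rx_acu)
    return signal / simulated_path(freqs, d)
```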

The next step is the transmission measurement shown in Figure 11c. Here, the center of the ACU is positioned 200 cm from the center of the array aperture. The Tx-ACU illuminates all receive antennas of the imaging system, while the Rx-ACU receives from all transmit antennas of the imaging system. Because of the 200 cm range separation between the imaging system and the ACU, the transmission measurement data are free of cross-coupling influence.

The transmission measurement data, measured between each transmit antenna of the imaging system and the Rx-ACU, and between the Tx-ACU and each receive antenna of the imaging system, combine the channel response of the system antenna, the channel response of the ACU antenna, and the propagation path from the n-th transmit antenna of the imaging system to the Rx-ACU, or from the Tx-ACU to the m-th receive antenna of the imaging system, respectively. Given the positions of the imaging system and ACU antennas in the measurement scene, the channel responses of the imaging system antennas are then calculated from these transmission measurements using simulated propagation paths.

Finally, the channel responses of the imaging system are calculated for every transmit/receive antenna pair from the corresponding transmit and receive channel responses.

To avoid numerical artifacts from directly dividing the noisy measured target signal by the channel responses, the calibration coefficients are obtained with a regularized inversion [12]. The term factor_smoothing is added to avoid overweighting small amplitudes arising from the division by a measured quantity, while factor_amplitude scales the amplitude values of the radar imaging system into the range needed by the Automatic Target Detection algorithm.

Finally, the pure object signal is obtained for every transmitter/receiver pair by applying the calibration coefficients to the background-subtracted measurement.
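The chain from transmission measurements to the pure object signal can be sketched as below. The exact regularized form of reference [12] is not reproduced in the text; the Tikhonov-style expression conj(H)/(|H|² + factor_smoothing·max|H|²) is an assumed stand-in that shows the roles of factor_smoothing and factor_amplitude. The sketch also relies on the observation that, when per-antenna responses are multiplied into a pair response, only the product of the two ACU responses enters. All names are illustrative.

```python
import numpy as np


def system_channel_responses(s_tx, s_rx, p_tx, p_rx, h_acu):
    """Pair channel responses H_n^Tx * H_m^Rx from the two transmission measurements.

    s_tx: (n_tx, n_freq) system Tx n -> Rx-ACU;  p_tx: matching simulated paths
    s_rx: (n_rx, n_freq) Tx-ACU -> system Rx m;  p_rx: matching simulated paths
    h_acu: (n_freq,) product of the Tx-ACU and Rx-ACU channel responses
    """
    num = s_tx[:, None, :] * s_rx[None, :, :]
    den = p_tx[:, None, :] * p_rx[None, :, :] * h_acu[None, None, :]
    return num / den  # (n_tx, n_rx, n_freq)


def calibration_coefficients(h, factor_smoothing=1e-3, factor_amplitude=1.0):
    """Regularized inverse of h (assumed Tikhonov-style form, not necessarily that of [12])."""
    power = np.abs(h) ** 2
    return factor_amplitude * np.conj(h) / (power + factor_smoothing * power.max())


def object_signal(s_meas, s_bg, coeff):
    """Background subtraction (removes cross coupling) followed by calibration."""
    return (s_meas - s_bg) * coeff
```

With factor_smoothing = 0 the coefficients reduce to a plain division by the channel response; any positive value caps the gain applied to weak channels.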

2.4. Imaging System Characterization

The imaging performance of a radar system is closely linked to its attributes, including the illuminated power density, the signal-to-noise ratio (SNR) of its channels, and the point spread function (PSF).

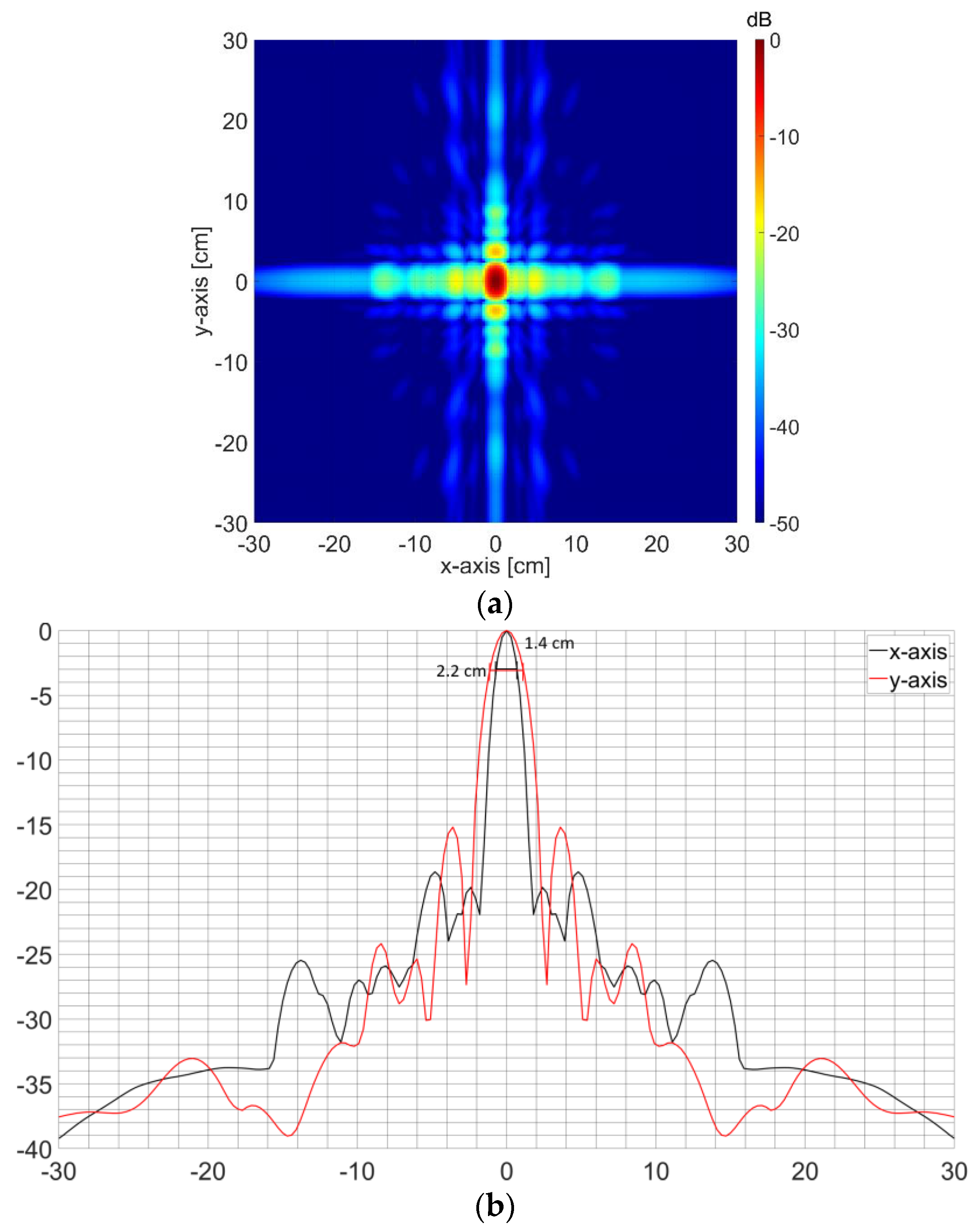

2.4.1. Point Spread Function Characterization

Since no PSF measurement data were acquired during system development, the PSF is characterized with simulated data. A point-like target is placed at the origin of the coordinate system, and the proposed MIMO array is positioned 150 cm from this focal point, as given in Figure 11. The simulated data are then focused with the imaging algorithm described in Section 2.3.1.

Figure 12a shows that pedestal side lobes form along the horizontal and vertical directions, where the shadowing effect within the virtual array is strongest. The maximum side-lobe levels are about -18.6 dB and -15.1 dB along the x and y axes, respectively, which is low enough to obtain a high-contrast radar image. The ultra-wide frequency band helps confine the PSF around the main lobe through the strong interference of the transmitted waves; this interference weakens at larger x and y offsets (Figure 12b). The simulated resolutions of the system, obtained from Figure 13, are 1.4 cm and 2.2 cm along the x and y axes, respectively.
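Side-lobe and resolution figures like these can be extracted from a 1D cut through a PSF. The sketch below measures the main-lobe width at −3 dB and the peak side-lobe level (highest lobe outside the first nulls); the function name and the −3 dB resolution criterion are assumptions. As a sanity check, a uniform aperture's sinc pattern gives the textbook values of about 0.886 (normalized units) and −13.3 dB.

```python
import numpy as np


def psf_metrics(x, psf, level_db=-3.0):
    """Main-lobe width at `level_db` and peak side-lobe level (dB) of a 1D PSF cut."""
    mag = np.abs(psf) / np.abs(psf).max()
    mag_db = 20 * np.log10(np.maximum(mag, 1e-12))  # floor avoids log10(0) at exact nulls
    i0 = int(np.argmax(mag_db))
    # width: walk outwards while the response stays above the level
    left, right = i0, i0
    while left > 0 and mag_db[left - 1] >= level_db:
        left -= 1
    while right < len(x) - 1 and mag_db[right + 1] >= level_db:
        right += 1
    width = x[right] - x[left]
    # main lobe ends at the first local minimum on each side
    lo, hi = i0, i0
    while lo > 0 and mag_db[lo - 1] < mag_db[lo]:
        lo -= 1
    while hi < len(x) - 1 and mag_db[hi + 1] < mag_db[hi]:
        hi += 1
    side = np.concatenate([mag_db[:lo], mag_db[hi + 1:]])
    psll = float(side.max()) if side.size else float("-inf")
    return float(width), psll
```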

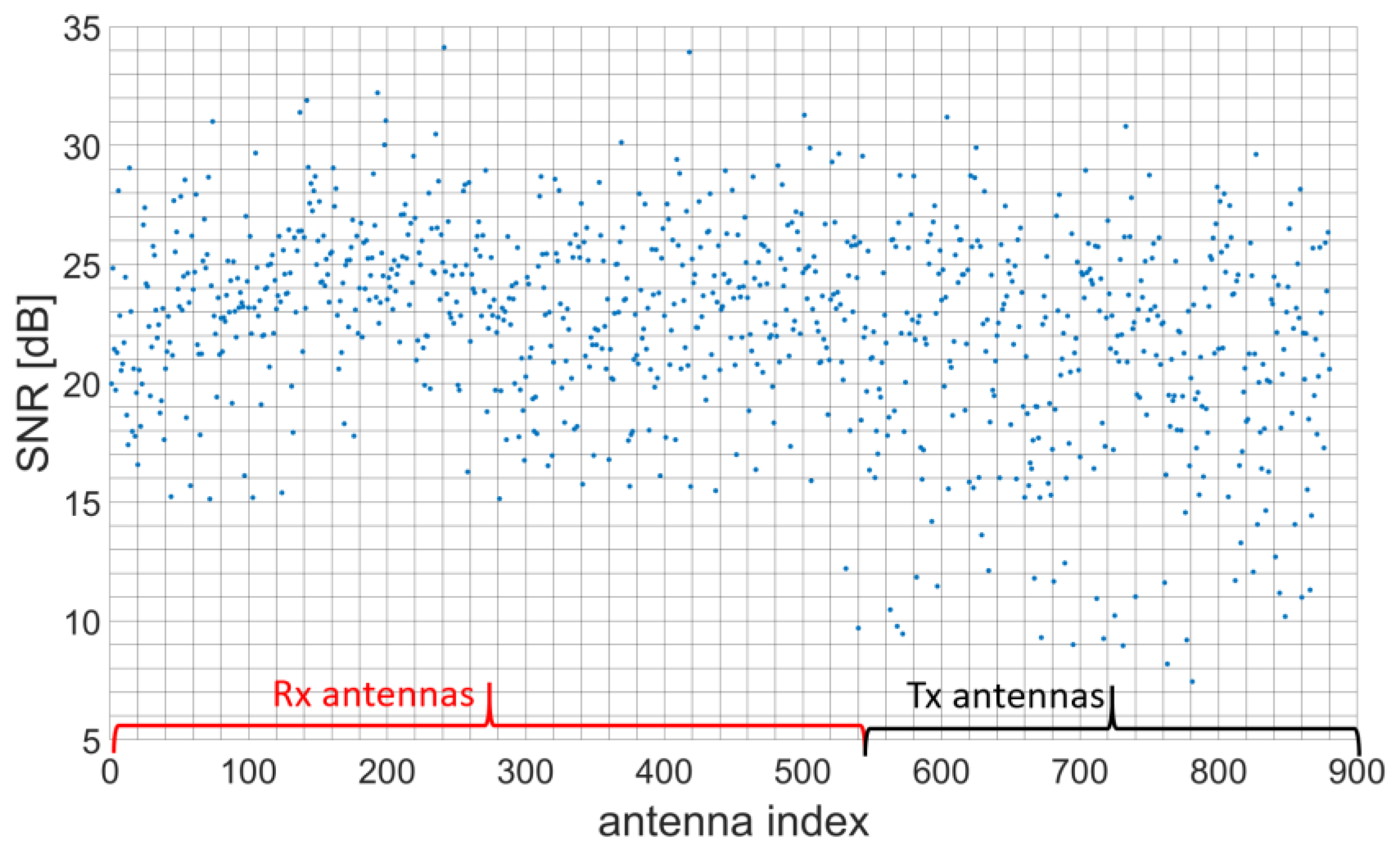

2.4.2. Signal-to-Noise Ratio Measurement

The signal-to-noise ratio (SNR) is defined as the ratio of the power of the desired signal to the power of the random noise component. In a static radar measurement, the measured complex value at each range bin should be constant over time. Assuming white Gaussian receiver noise, the noise power can be calculated as the variance over time of the complex measured signal at the range bin under test. A sequence of calibration measurements in a static environment can therefore be used to calculate the SNR of every transmitter and receiver as the ratio of the squared magnitude of the mean value at the range bin under test to the corresponding variance:

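$$\mathrm{SNR}^{\mathrm{Tx}}_n = \frac{|\mu^{\mathrm{Tx}}_n|^2}{(\sigma^{\mathrm{Tx}}_n)^2}, \qquad \mathrm{SNR}^{\mathrm{Rx}}_m = \frac{|\mu^{\mathrm{Rx}}_m|^2}{(\sigma^{\mathrm{Rx}}_m)^2},$$

where $\mu^{\mathrm{Tx}}_n$ and $\mu^{\mathrm{Rx}}_m$ are the signal means for the n-th transmitter and the m-th receiver, respectively, and $\sigma^{\mathrm{Tx}}_n$ and $\sigma^{\mathrm{Rx}}_m$ are the standard deviations of the noise over time for the corresponding transmit and receive antennas.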

Using the same transmission measurement setup (Figure 11c), 10 sequential transmission measurements are performed between each transmit antenna on the system and the ACU receive antenna, and between each receive antenna on the system and the ACU transmit antenna. In total, 880 transmission measurements are obtained from the 528 receive and 352 transmit antennas. An inverse Fourier transform of these measurements then gives the range distribution in the spatial domain.

Figure 14 shows the SNR obtained for every antenna. Most antennas operate with an SNR above 15 dB; nevertheless, 1 Rx and 9 Tx antennas have an SNR below 10 dB.
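The per-antenna SNR estimate above can be sketched as follows, assuming the repeated transmission measurements are stored as (n_repeats, n_antennas, n_freq) and that the range bin under test is known; names are illustrative. NumPy's `np.var` on complex input returns the mean of |x − μ|², which is the noise power in the SNR expression.

```python
import numpy as np


def snr_db(freq_data, bin_index):
    """Per-antenna SNR (dB) from repeated static measurements.

    freq_data: (n_repeats, n_antennas, n_freq) complex transmission measurements
    bin_index: range bin under test after the inverse FFT
    """
    profiles = np.fft.ifft(freq_data, axis=-1)  # range profiles
    x = profiles[:, :, bin_index]               # (n_repeats, n_antennas)
    mu = x.mean(axis=0)
    var = np.var(x, axis=0)                     # mean(|x - mu|^2) for complex data
    return 10 * np.log10(np.abs(mu) ** 2 / var)
```

Antennas below a threshold, for example 10 dB as in Figure 14, can then be listed with `np.flatnonzero(snr_db(data, b) < 10)`.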

2.4.3. Radiation Power

The power density transmitted by security scanners is addressed in a report [8], which indicates that, for the frequencies between 2 and 300 GHz that millimeter-wave security scanners would use, the maximum recommended power density is 10 W/m² for members of the public and 50 W/m² for exposed workers.

The microwave imaging system transmits between 6.5 and 10.5 GHz, which falls within this range, so it is necessary to verify that the transmitted power density is below the maximum threshold.

The measurement is performed at three ranges, 1 m, 2 m, and 3 m (Figure 15). One transmit antenna is activated and continuously illuminates the measurement scene, which includes a double-ridged horn antenna. The polarizations of the horn antenna and of the Tx antenna on the imaging system are deliberately matched.

The powers received on the spectrum analyzer at 8.5 GHz at 1 m, 2 m, and 3 m are -66.4, -71.1, and -82.2 dBm, respectively. After accounting for losses and the receive antenna gain, the corresponding power densities are 6.2897 × 10⁻⁷ W/m², 2.1313 × 10⁻⁷ W/m², and 0.1652 × 10⁻⁷ W/m².

The obtained power densities are more than seven orders of magnitude below the recommended level of 10 W/m² for members of the public [8]. This confirms that the MIC system can be used on members of the public while respecting European regulations.
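The conversion from received power to power density is not spelled out in the text; a standard approach, assumed here, divides the loss-corrected received power by the horn's effective aperture G·λ²/(4π). The gain and loss values are not given, so the example only evaluates the safety margin from the reported densities: the 1 m value lies about 72 dB below the 10 W/m² limit. Names are illustrative.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in vacuum (m/s)


def power_density(p_rx_dbm, freq_hz, gain_dbi, loss_db=0.0):
    """Power density (W/m^2) seen by a receive antenna of known gain (far-field assumption)."""
    p_w = 10 ** ((p_rx_dbm + loss_db - 30.0) / 10.0)                  # add back losses, dBm -> W
    wavelength = C0 / freq_hz
    a_eff = 10 ** (gain_dbi / 10.0) * wavelength ** 2 / (4 * np.pi)  # effective aperture (m^2)
    return p_w / a_eff


def margin_db(limit_w_m2, density_w_m2):
    """How far (dB) a measured density lies below a limit."""
    return 10 * np.log10(limit_w_m2 / density_w_m2)


# Reported densities at 1 m, 2 m, 3 m versus the 10 W/m^2 public limit
for r, s in [(1, 6.2897e-7), (2, 2.1313e-7), (3, 0.1652e-7)]:
    print(f"{r} m: {margin_db(10.0, s):.1f} dB below the limit")
```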

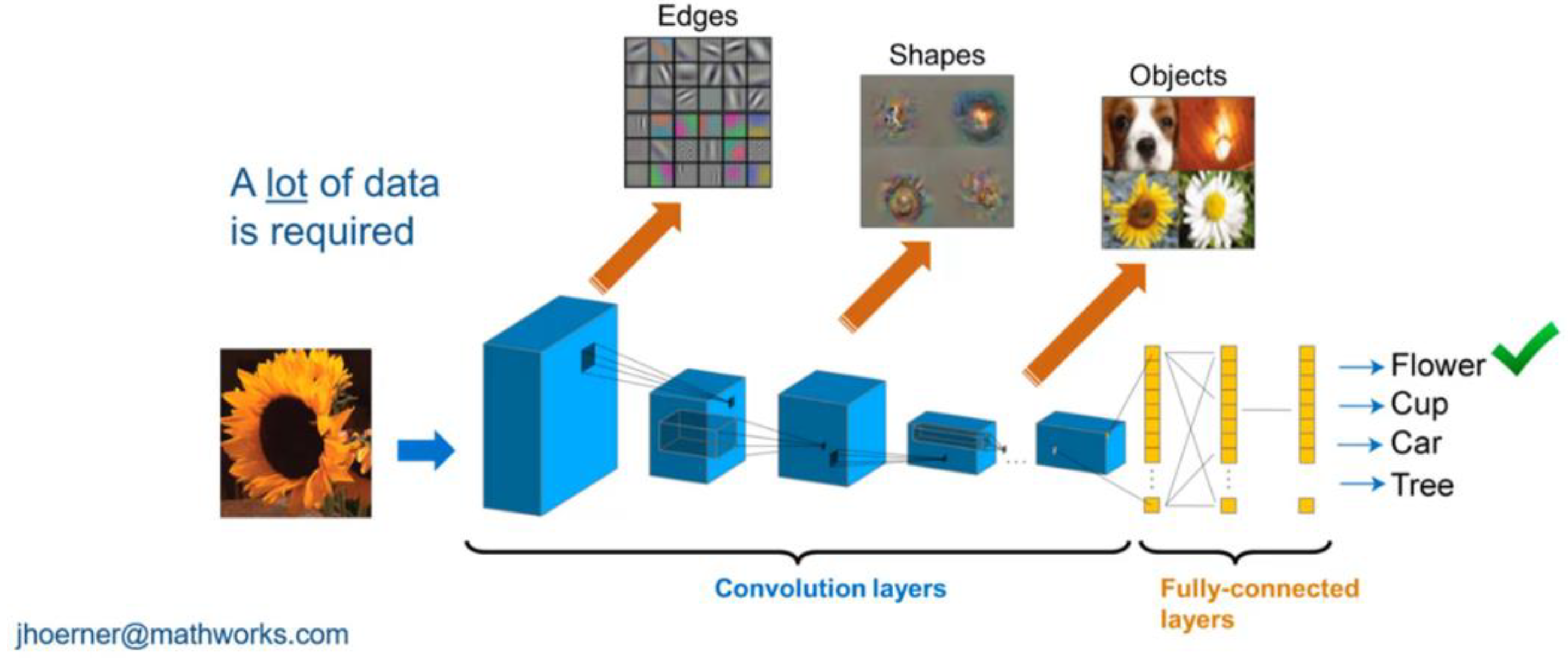

2.5. Artificial Intelligence algorithm for automatic detection on radar imagery

Data are a central element of AI methods. Having large quantities of good-quality data is crucial for the learning to converge and for measuring performance correctly during tests. As MIC is a prototype developed in a time-constrained project, only a few measurement campaigns for training data were conducted; an operational system would require much more data.

All available, non-empty, and exploitable voxel volumes were processed and transformed into a common format called "voxel cuts": two-channel images of size (120, 100, 2), where one channel represents the amplitude and the other the relative distance of the brightest pixel. The voxel cuts are stored in the same Matlab format. The format compresses each 3D voxel volume into a simple two-channel image at the estimated center position of the subject. This compression causes virtually no information loss, since most of the 3D voxel space contains pure noise. The voxel cuts are used for training and testing the CNN-based classifier discussed in the next section.
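One possible way to compress a 3D complex image into a two-channel voxel cut is a maximum-intensity projection along depth, plus the depth of the brightest voxel relative to the subject centre. The axis order (height, width, depth) and all names are assumptions; the text only specifies the (120, 100, 2) shape and the meaning of the two channels.

```python
import numpy as np


def voxel_cut(volume, center_depth_index, depth_step_m):
    """Compress a complex 3D image (height, width, depth) into a 2-channel voxel cut.

    Channel 0: maximum amplitude along depth.
    Channel 1: depth of the brightest voxel relative to the subject centre (m).
    """
    mag = np.abs(volume)
    amplitude = mag.max(axis=2)
    rel_distance = (mag.argmax(axis=2) - center_depth_index) * depth_step_m
    return np.stack([amplitude, rel_distance], axis=-1)
```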

A total of 15,251 usable voxel cuts from 83 different subjects were produced during the training phases. A convolutional neural network (CNN) classifier was trained with 4 selected threats (AK47, mid-size automatic rifle, small gun, and explosive belt mock-up), confusers (umbrella, smartphone, metallic bottle, …), and without any object.

2.5.1. MIC Convolutional Neural Network classifier and Explainable Artificial Intelligence description

A convolutional neural network (CNN, or ConvNet) is a class of artificial neural network most commonly applied to image analysis. CNNs are regularized versions of multilayer perceptrons and use several convolutional layers stacked on top of each other (hence the expression "deep learning"). CNNs require relatively little pre-processing compared with other image classification algorithms: the network learns to optimize its filters (or kernels) automatically, whereas in traditional algorithms these filters are hand-engineered. This independence from prior knowledge and human intervention in feature extraction is a major advantage.

In a CNN, the input is a tensor with shape (number of inputs, or batch size) × (input height) × (input width) × (input channels). After passing through a convolutional layer, the image is abstracted to a feature map, also called an activation map, with shape (number of inputs) × (feature map height) × (feature map width) × (feature map channels). Convolutional layers convolve the input and pass the result to the next layer. They are well suited to data with a grid-like topology, such as images, because spatial relations between separate features are taken into account during convolution and/or pooling. The filters of the first convolutional layers respond to very simple features, such as outlines, and those of successive layers grow in complexity and granularity toward features that uniquely define the object (Figure 16).

Like other neural networks, a convolutional neural network is composed of an input layer, an output layer, and many hidden layers. These perform operations that modify the data in order to learn specific characteristics.

The four layers that are the most common building blocks of the intermediate layers of CNNs are a convolution layer, a normalization layer, an activation layer, and a reduction (pooling) layer. Each stack of these elementary layers is often called a "down-sampling layer". A deep CNN then comprises a sequence of several of these stages (blocks shown in blue in Figure 16).

To perform classification, the network ends with one or more linear layers that map the learned patterns to probabilities of belonging to each output class. The last layer of the architecture is a classification layer, such as softmax, that generates the classification output. Classification is therefore carried out in a low-dimensional, so-called "latent" space found at the output of the last convolutional layer (output of the down-sampling blocks).

For the MIC project, a rather "classical" CNN architecture was chosen, using 5 down-sampling blocks (convolution, normalization, activation) followed by one fully connected layer and a softmax layer. The following random data augmentations were applied: x-flip of the voxels, ±20 cm translation in x (x-centered), ±10 cm translation in height, and ±4 cm translation in distance (centered).
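The random augmentations can be sketched on a single voxel cut. Assumed: axis order (height, width, channel) with a 1 cm pixel pitch (which would map the 120 rows onto the 1.2 m imaged height), the second channel holding relative distance in metres, and zero-filling of pixels shifted in from outside the image. All function names are illustrative.

```python
import numpy as np


def shift2d(img, dy, dx):
    """Shift an (h, w, ...) array by (dy, dx) pixels, filling with zeros."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    src_y = slice(max(0, -dy), max(0, min(h, h - dy)))
    dst_y = slice(max(0, dy), max(0, min(h, h + dy)))
    src_x = slice(max(0, -dx), max(0, min(w, w - dx)))
    dst_x = slice(max(0, dx), max(0, min(w, w + dx)))
    out[dst_y, dst_x] = img[src_y, src_x]
    return out


def augment(cut, flip, dx_m, dh_m, dd_m, px_m=0.01):
    """Apply one deterministic augmentation to a (height, width, 2) voxel cut."""
    out = cut[:, ::-1, :] if flip else cut
    out = shift2d(out, int(round(dh_m / px_m)), int(round(dx_m / px_m)))  # returns a new array
    occupied = out[..., 0] > 0
    out[..., 1][occupied] += dd_m  # distance translation only where there is signal
    return out


def random_augment(cut, rng, px_m=0.01):
    """Draw the ranges quoted in the text: x-flip, +/-20 cm x, +/-10 cm height, +/-4 cm distance."""
    return augment(
        cut,
        flip=bool(rng.integers(2)),
        dx_m=rng.uniform(-0.20, 0.20),
        dh_m=rng.uniform(-0.10, 0.10),
        dd_m=rng.uniform(-0.04, 0.04),
        px_m=px_m,
    )
```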

Interpretability is the degree to which machine learning algorithms can be understood by humans. Machine learning models are often referred to as "black boxes" because their representations of knowledge are not intuitive, and as a result, it is often difficult to understand how they work. Interpretability techniques help to reveal how black-box machine learning models make predictions. In the context of deep neural networks, interpretability is more often referred to as "AI explainability".

By revealing how various features contribute (or do not contribute) to predictions, interpretability techniques help validate that the model uses appropriate evidence for its predictions.

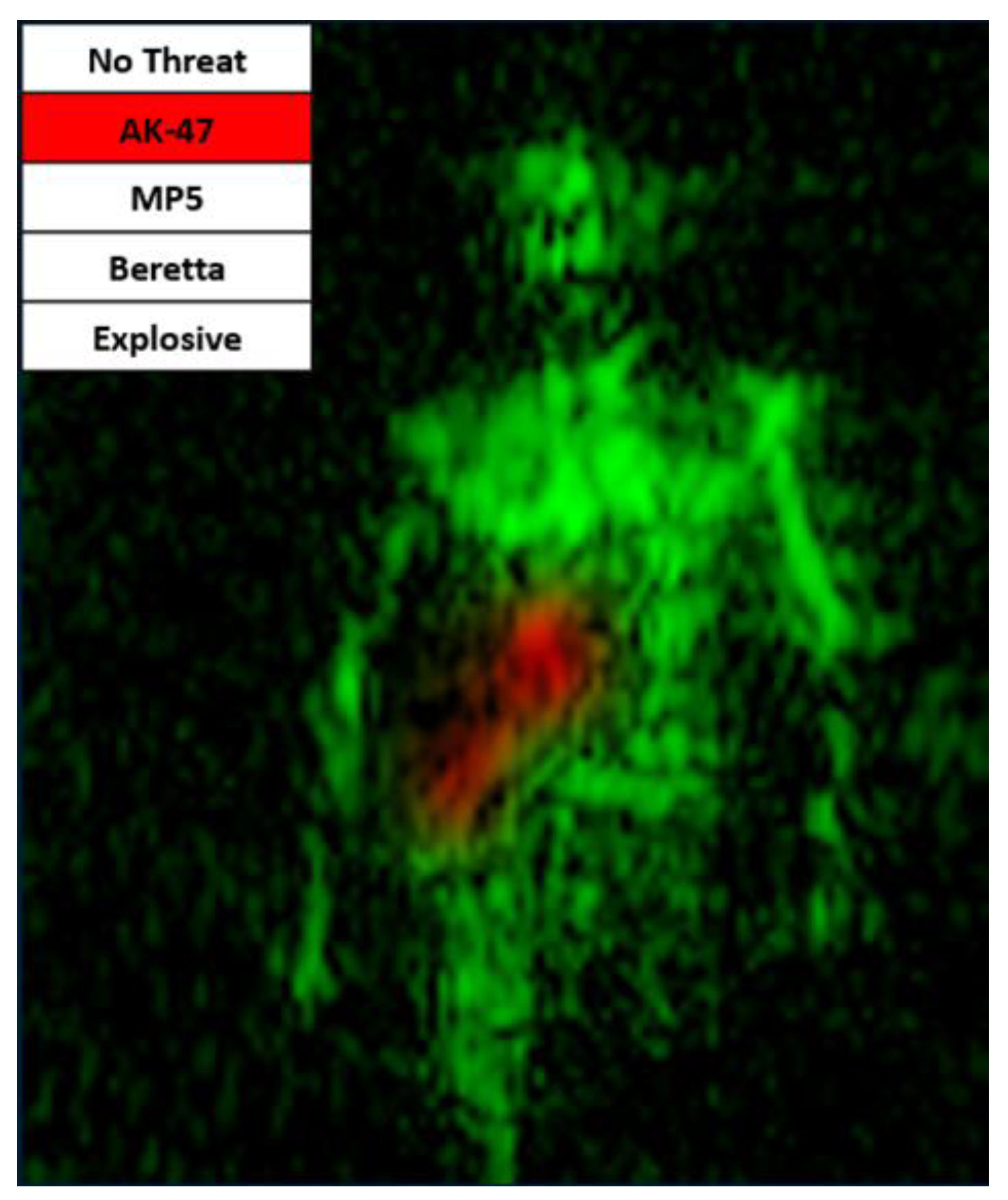

We used the LIME [9] and Grad-CAM [10] techniques to highlight the regions of an image that contribute most to the predictions of the image classification network. An example result is shown in Figure 17. These techniques clearly help to interpret decisions. For instance, they showed that explosive threat detection relied mainly on deviations from the "natural" signature of the belly region, caused by the dielectric masking effect of the explosive belt, and on the abnormal presence of scattered metal parts. During AI development, they also helped us improve the model, by checking whether the classifier was trustworthy when comparing the highlighted regions with the available ground truth.
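Grad-CAM weights each feature map of a convolutional layer by the spatially averaged gradient of the class score, and keeps the positive part of the weighted sum. The framework-independent sketch below assumes that the activations and gradients for one image and one class have already been extracted (for instance with a framework hook); upsampling the map to the (120, 100) input size is omitted, and the function name is illustrative.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map for one image and one target class.

    activations, gradients: (n_channels, h, w) feature maps of a conv layer and
    the gradients of the class score with respect to them.
    """
    weights = gradients.mean(axis=(1, 2))             # global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum over channels -> (h, w)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam            # normalize to [0, 1]
```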

2.5.2. MIC Classification Performance assessment on static targets

MIC classification performance was measured with several threats of interest of different sizes and shapes (Figure 18).

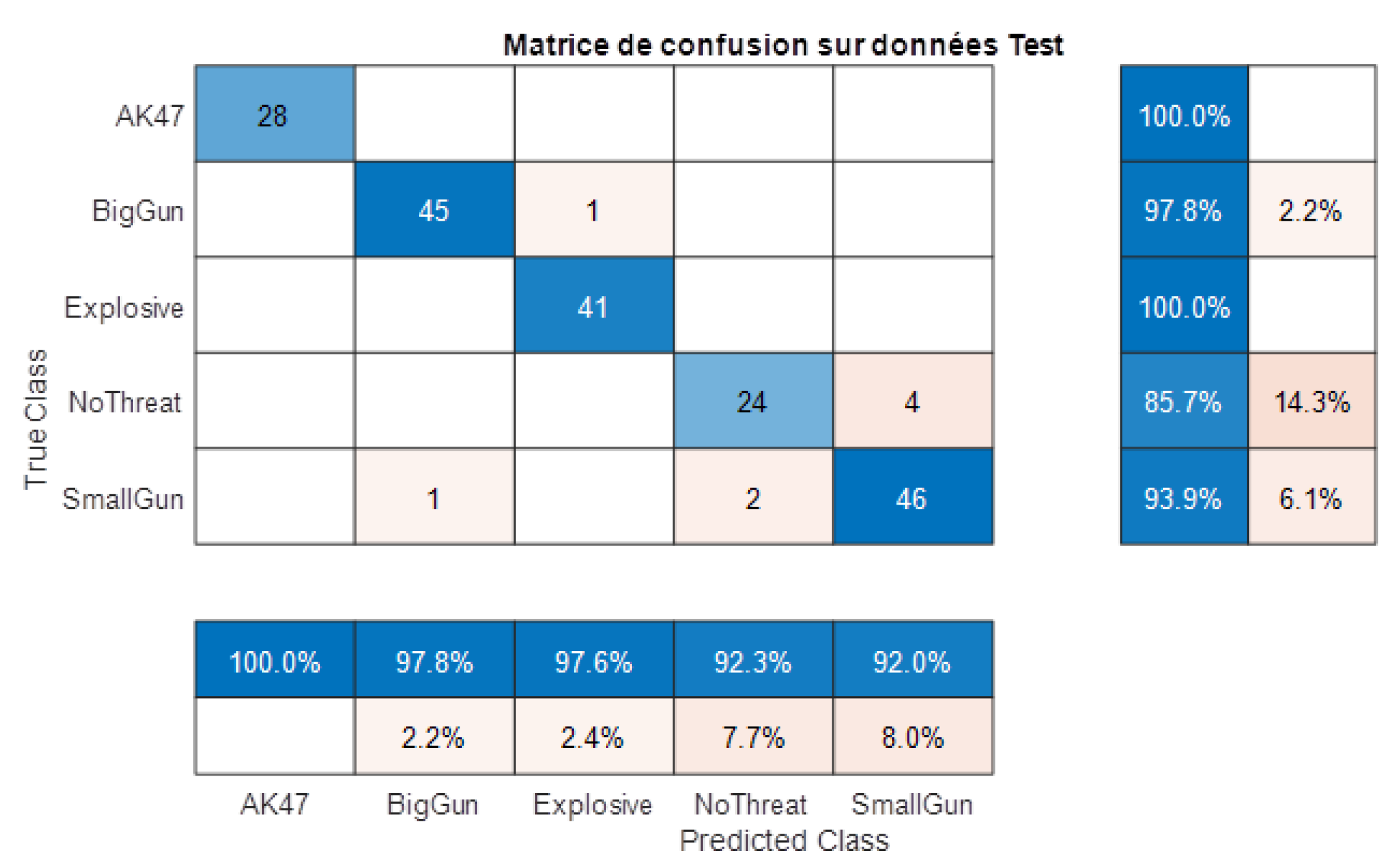

Before the field trial, a first classification performance analysis was conducted on a reduced dataset using only static target measurements (20 subjects). A random split into 841 training and 192 independent test voxel cuts across all subjects led to the confusion matrix shown in Figure 19.

Accuracies (and prediction errors) are given to the right of the confusion matrix (mean accuracy 95.8%), and probabilities of correct (and false) declarations are given at the bottom.
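Both kinds of figures around a confusion matrix can be computed as below, assuming rows are true classes and columns are declared classes: row-normalized diagonal entries give the per-class accuracy (right-hand side), column-normalized ones give the probability that a declaration is correct (bottom). The text does not state whether the 95.8% mean accuracy is the overall fraction correct or the average of per-class accuracies, so both are returned; the toy matrix in the check is invented.

```python
import numpy as np


def confusion_metrics(cm):
    """cm[i, j] = number of samples of true class i declared as class j."""
    cm = np.asarray(cm, dtype=float)
    correct = np.diag(cm)
    per_class_accuracy = correct / cm.sum(axis=1)  # right-hand column of the figure
    declaration_prob = correct / cm.sum(axis=0)    # bottom row of the figure
    overall = correct.sum() / cm.sum()             # fraction of all samples correct
    return per_class_accuracy, declaration_prob, overall, per_class_accuracy.mean()
```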

The confusion matrix shows excellent class separation (the residual errors are due to the Small Gun, which is at the limit of detectable target size), which confirms the chosen CNN as a good candidate for Automated Target Detection. However, such a confusion matrix is useful for selecting and optimizing the classifier architecture, but not sufficient for a fair performance evaluation, because the training and test measurements are not separated by subject.

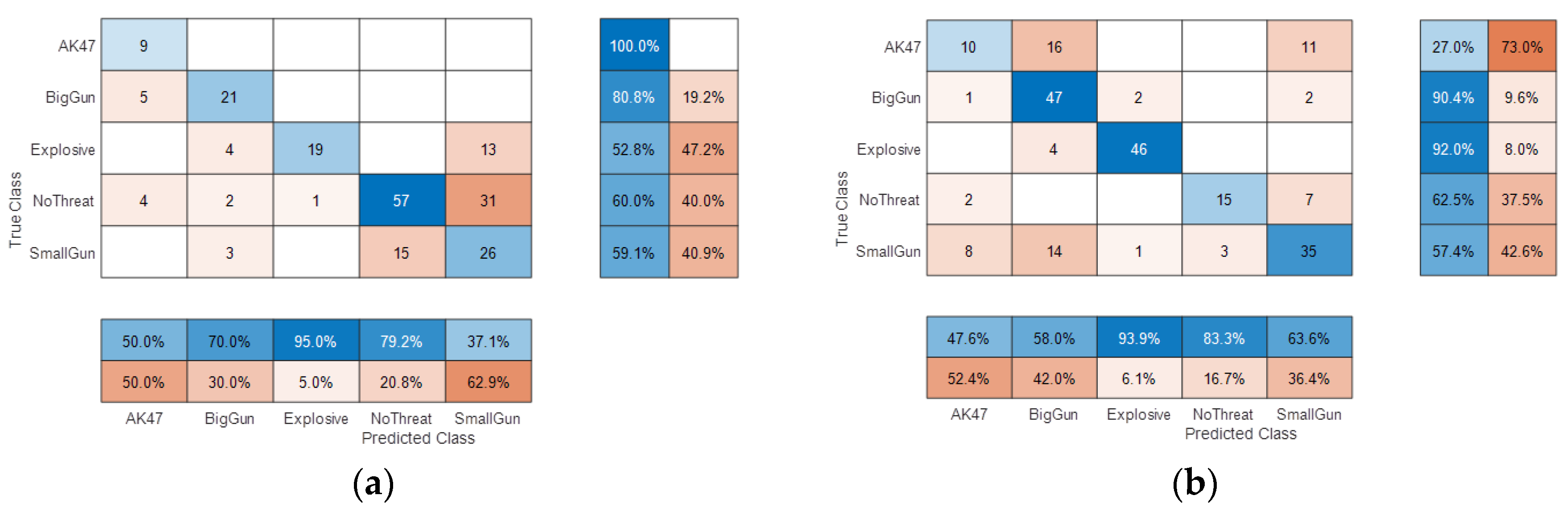

It is important to check that the performance of a network is not biased by the particular subjects in the dataset it is trained on. The best way to ensure this is to separate the subjects between the training and test subsets. Figure 20 shows the confusion matrices obtained with two different separations of the 20 available subjects.
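A subject-disjoint split can be built by drawing whole subjects rather than individual voxel cuts. The subject IDs and the number of test subjects are hypothetical inputs; the sketch only guarantees that no subject appears in both sets.

```python
import numpy as np


def split_by_subject(subject_ids, test_subjects):
    """Return (train_idx, test_idx) so that no subject appears in both sets."""
    subject_ids = np.asarray(subject_ids)
    in_test = np.isin(subject_ids, np.asarray(list(test_subjects)))
    return np.flatnonzero(~in_test), np.flatnonzero(in_test)


def random_subject_split(subject_ids, n_test_subjects, seed=0):
    """Draw whole subjects (not samples) for the test set."""
    rng = np.random.default_rng(seed)
    subjects = np.unique(subject_ids)
    test_subjects = rng.choice(subjects, size=n_test_subjects, replace=False)
    return split_by_subject(subject_ids, test_subjects)
```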

These two confusion matrices show some similarities (e.g., in the probabilities of declaration) and major discrepancies in performance (in the accuracies). In both cases, the overall performance is rather low (mean accuracy ~65%).

The high error variance is also due to the small number of subjects (and to the difference in the amount of threat data available between the two tests). However, both show good and consistent results for the "Explosive" declaration (~95%).

The missed alarms (threats declared as "NoThreat") are mainly due to the Small Gun target, which corresponds to the detectable size limit; this poor performance was expected given the rather low signature of this threat, which leads to low confidence in the declaration. Confusion between "BigGun" and "AK47" was also expected, as weapons of similar size can produce similar signatures.

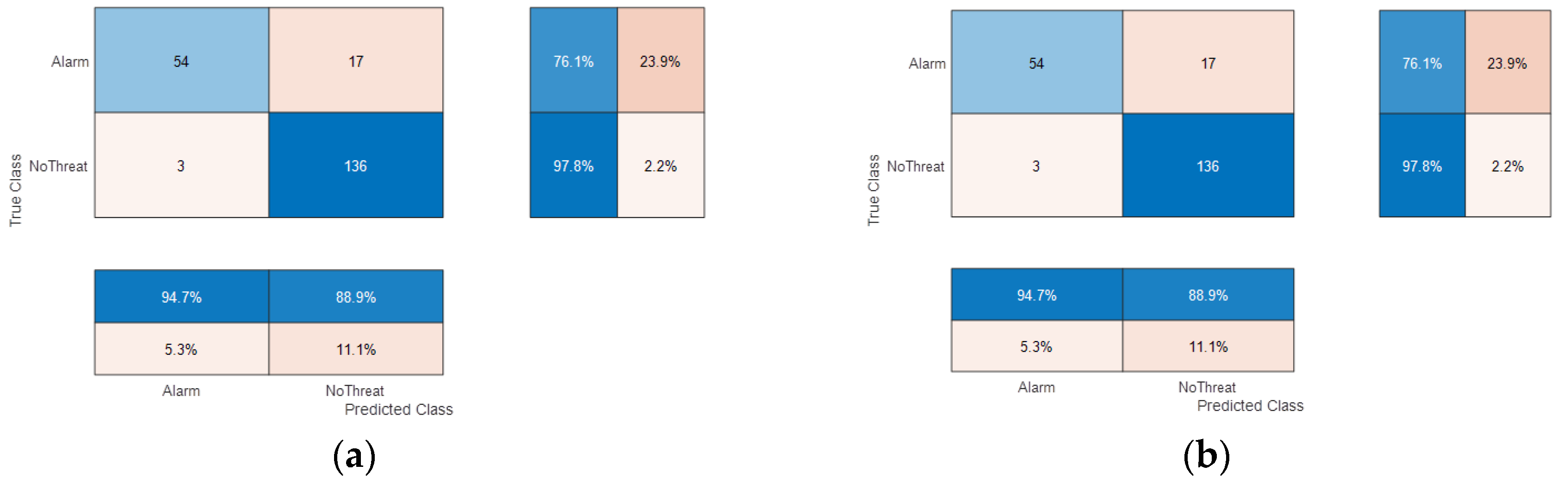

The performance can be further studied by reducing the problem to a binary classification: "big" threat alarm (AK47, BigGun, Explosive) versus no alarm. The corresponding confusion matrices are shown in Figure 21. The overall performance is slightly more consistent, with a mean accuracy above 90%.
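Collapsing a multi-class confusion matrix into the binary alarm/no-alarm form is a matter of summing blocks. Note that an AK47 declared as BigGun then counts as a correct alarm. The class indices passed as `alarm_classes` and the toy matrix in the check are hypothetical.

```python
import numpy as np


def collapse_to_binary(cm, alarm_classes):
    """Sum a multi-class confusion matrix into [[alarm->alarm, alarm->no], [no->alarm, no->no]]."""
    cm = np.asarray(cm)
    alarm = np.zeros(cm.shape[0], dtype=bool)
    alarm[list(alarm_classes)] = True
    return np.array([
        [cm[np.ix_(alarm, alarm)].sum(), cm[np.ix_(alarm, ~alarm)].sum()],
        [cm[np.ix_(~alarm, alarm)].sum(), cm[np.ix_(~alarm, ~alarm)].sum()],
    ])
```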

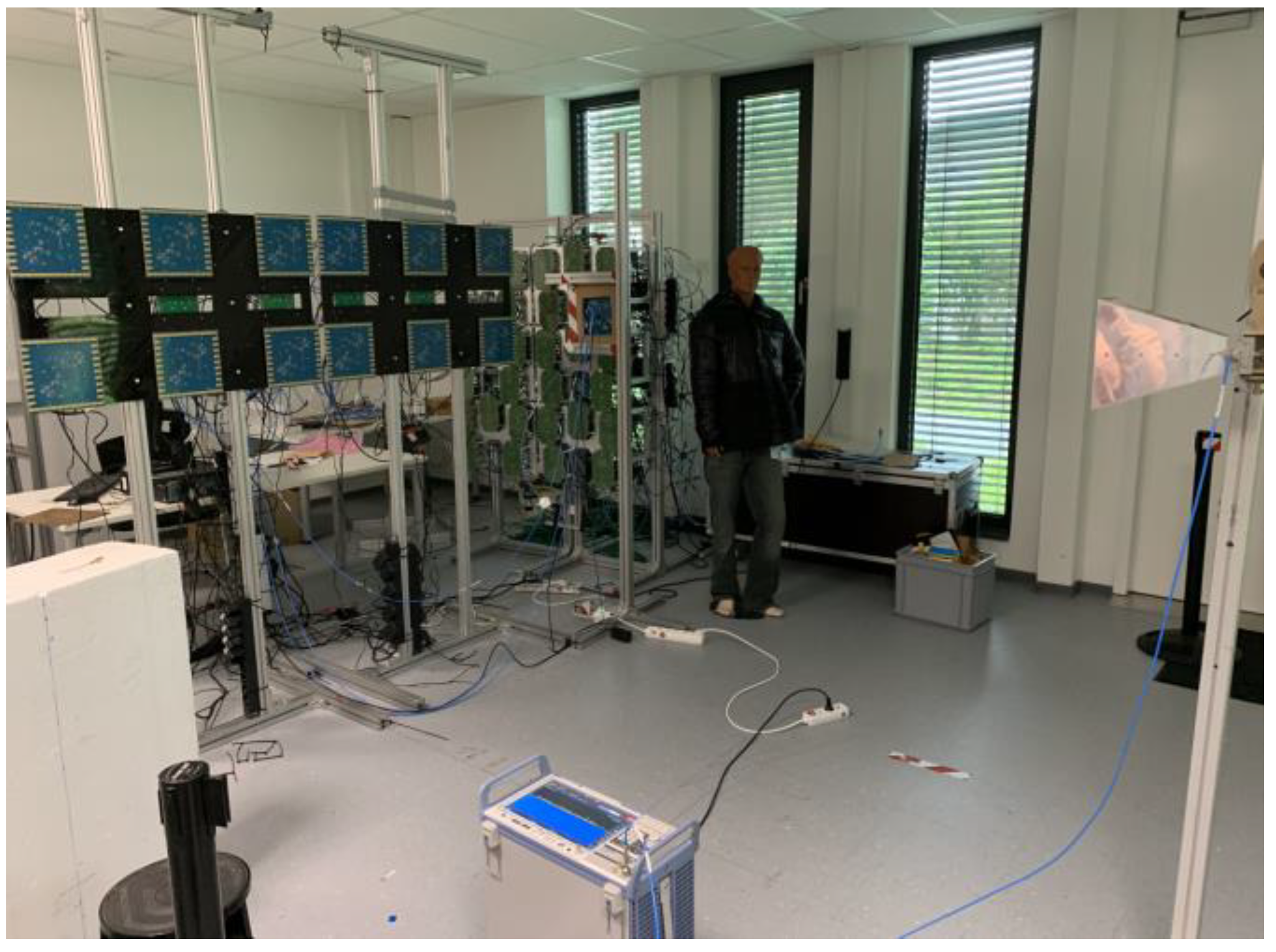

With the processing chain validated by these static laboratory tests, the complete system was transported to Rome, Italy, to be tested in the Anagnina metro station.

3. Performance evaluation

Setup

The MIC imaging system was integrated within a dedicated area of a public metro station in Rome as part of the field trial and system demonstration of DEXTER. The integration phase was conducted in the first week of May 2022, followed by a demonstration for VIPs, customers, experts, and journalists at the end of the month.

The MIC system is built as a walk-through solution. Passengers entering the measurement scene (Figure 22) first pass through the MIC system. The resulting radar image is processed, and potential threats are automatically identified and characterized. The DEXTER prototype is then informed of any threat detected by the MIC system so that it can automatically initialize the other sensor systems. The MIC system is thus an essential component of the DEXTER system prototype.

The final appearance of the MIC system is presented in Figure 23.

The MIC imaging system was integrated into the DEXTER prototype via an NTP server, to which two other sensor systems are also connected, as shown in Figure 24. The MIC system can also be controlled remotely from a laptop.

During the experiments, the MIC system discreetly scanned 550 people carrying various objects under their jackets: 189 volunteers carried a threat (weapon or explosive belt), 203 carried civilian objects (confusers), and 158 carried no threat or object.

These volunteers took part in blind test scenarios covering different cases: walking one by one, four people walking in a row, or two people side by side. All volunteers crossed the MIC observation area with a natural gait and trajectory, at a typical walking speed (~2 m/s).

The MIC system achieved a success rate of around 95% in these measurements, although performance can be defined in different ways depending on the concept of operations:

92.6% threat detection and identification

93% threat detection

94% detection of objects concealed under clothes

96% detection of large concealed objects (excluding the small gun cases, which were at the limit of the system in terms of target size)

Table 2 lists the MIC failure cases. MIC confuses the explosive belt with a backpack carried on the front, an error that could be mitigated by a video camera and/or security agents. Most false positives and false negatives concern small-gun scenarios, which are the identified limit of the system and its objectives (owing to the threat size relative to the resolution and image quality).

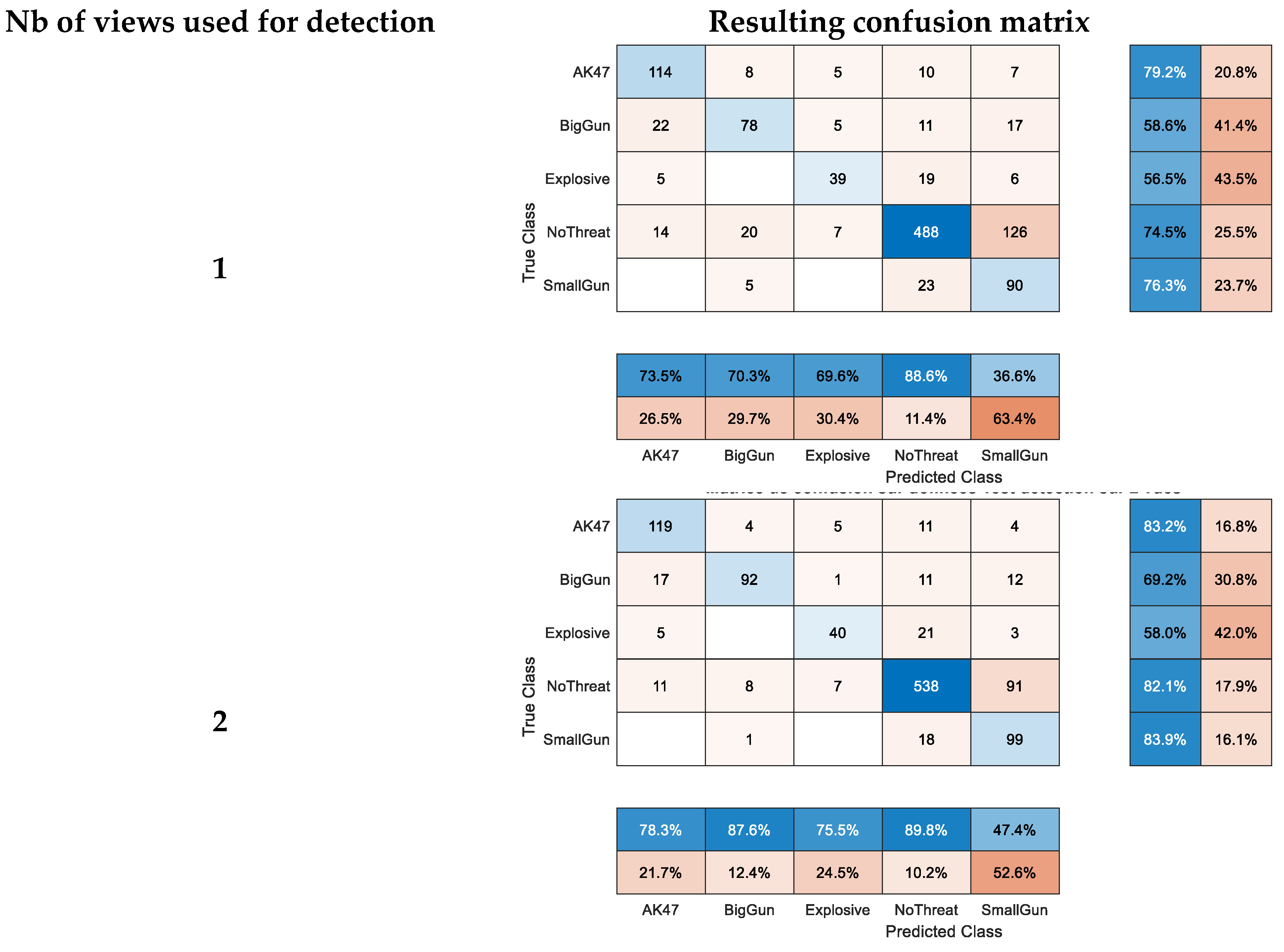

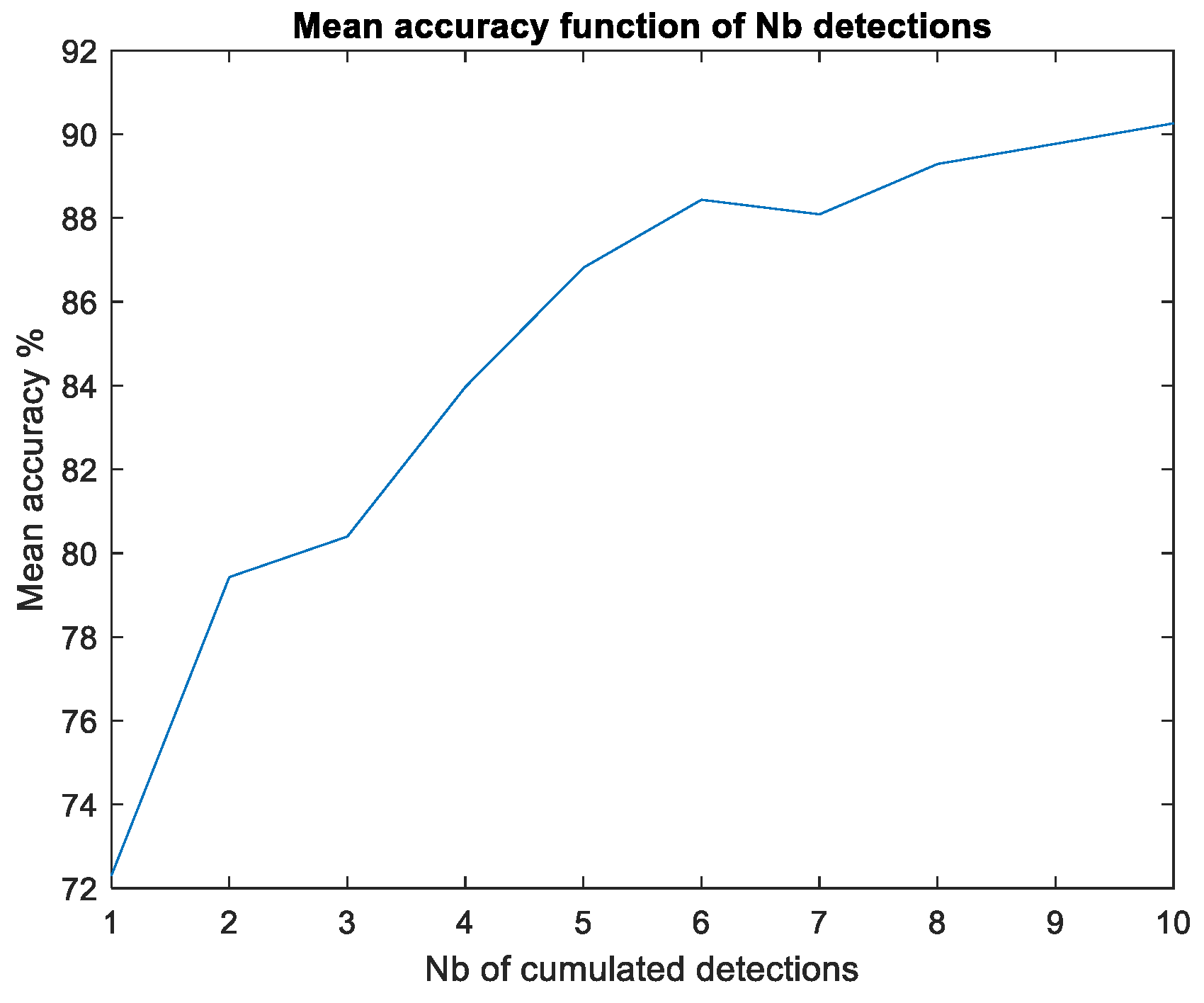

We can simulate the performance that a higher acquisition frame rate would provide by combining several passages of the same subject carrying the same threat. The positive effect of accumulating individual detections is illustrated in Figure 25 and Figure 26.
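The paper does not state how individual detections are combined. One simple possibility, sketched below, is to pool the per-frame class scores of all passages of the same subject carrying the same threat, average them over non-overlapping groups of N detections, and take the arg-max of the average as the decision. The record layout `(subject, threat, scores)` and the score-averaging rule are assumptions.

```python
from collections import defaultdict

import numpy as np


def simulate_accumulation(detections, n):
    """Pool detections per (subject, threat) and fuse them in groups of n.

    detections: iterable of (subject_id, true_class, scores), where scores is a
    per-class probability vector for one frame (hypothetical layout).
    Returns a list of (true_class, predicted_class) decisions.
    """
    pooled = defaultdict(list)
    for subject, threat, scores in detections:
        pooled[(subject, threat)].append(np.asarray(scores, dtype=float))

    decisions = []
    for (_, threat), frames in pooled.items():
        # Non-overlapping groups of n frames; an incomplete trailing group is dropped.
        for start in range(0, len(frames) - n + 1, n):
            mean_scores = np.mean(frames[start:start + n], axis=0)
            decisions.append((threat, int(np.argmax(mean_scores))))
    return decisions


def accuracy(decisions):
    return float(np.mean([truth == pred for truth, pred in decisions]))


# Toy data: one subject carrying class 1, four frames from pooled passages.
toy = [("s1", 1, [0.6, 0.4]), ("s1", 1, [0.3, 0.7]),
       ("s1", 1, [0.2, 0.8]), ("s1", 1, [0.45, 0.55])]
print(accuracy(simulate_accumulation(toy, 1)), accuracy(simulate_accumulation(toy, 2)))
```

With single frames, the first (ambiguous) frame is misclassified; averaging pairs of frames lets the confident frames outvote it, which is the mechanism behind the accuracy gain with accumulated detections.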

These results confirm the value of increasing the image frame rate in a future operational system.

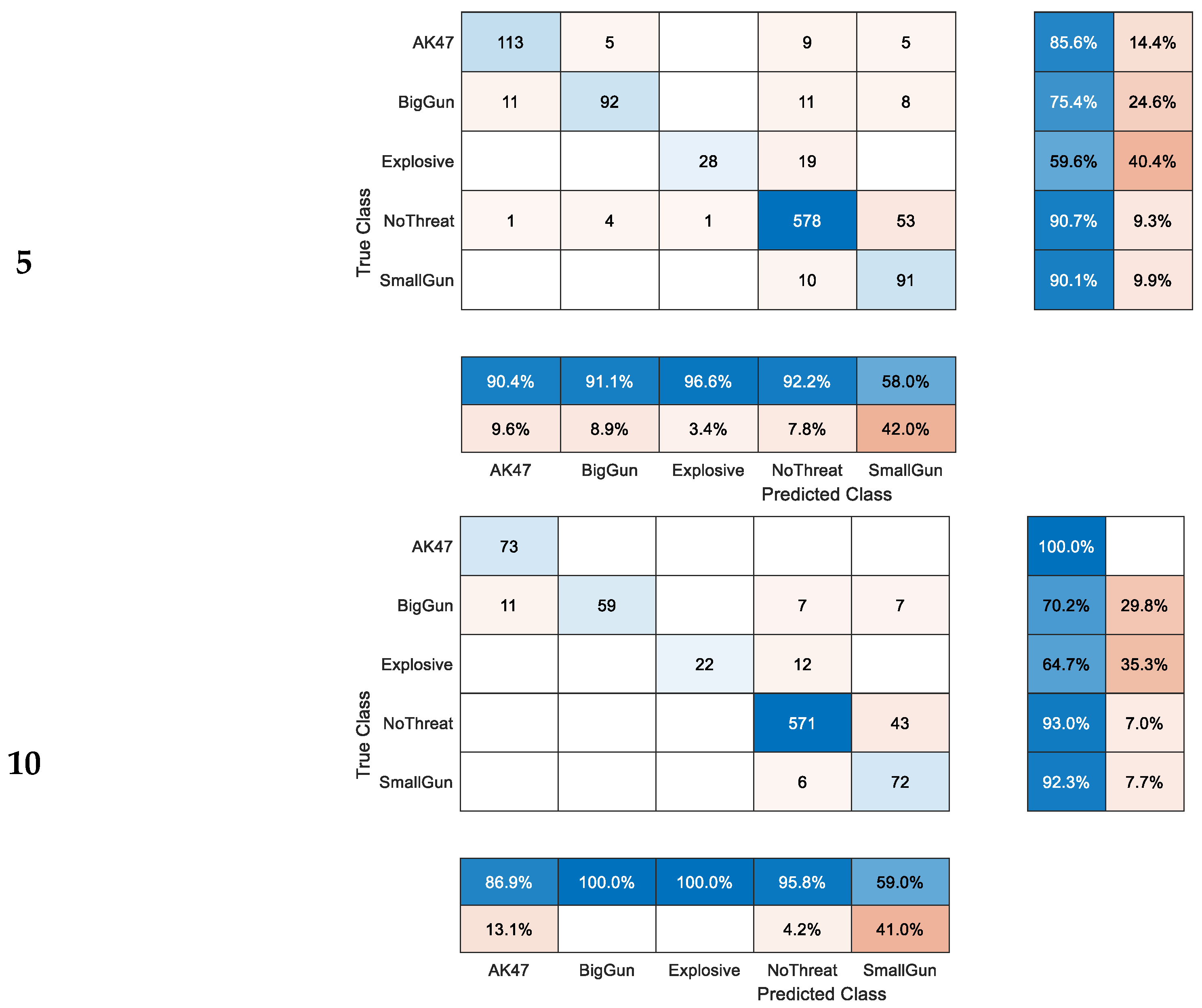

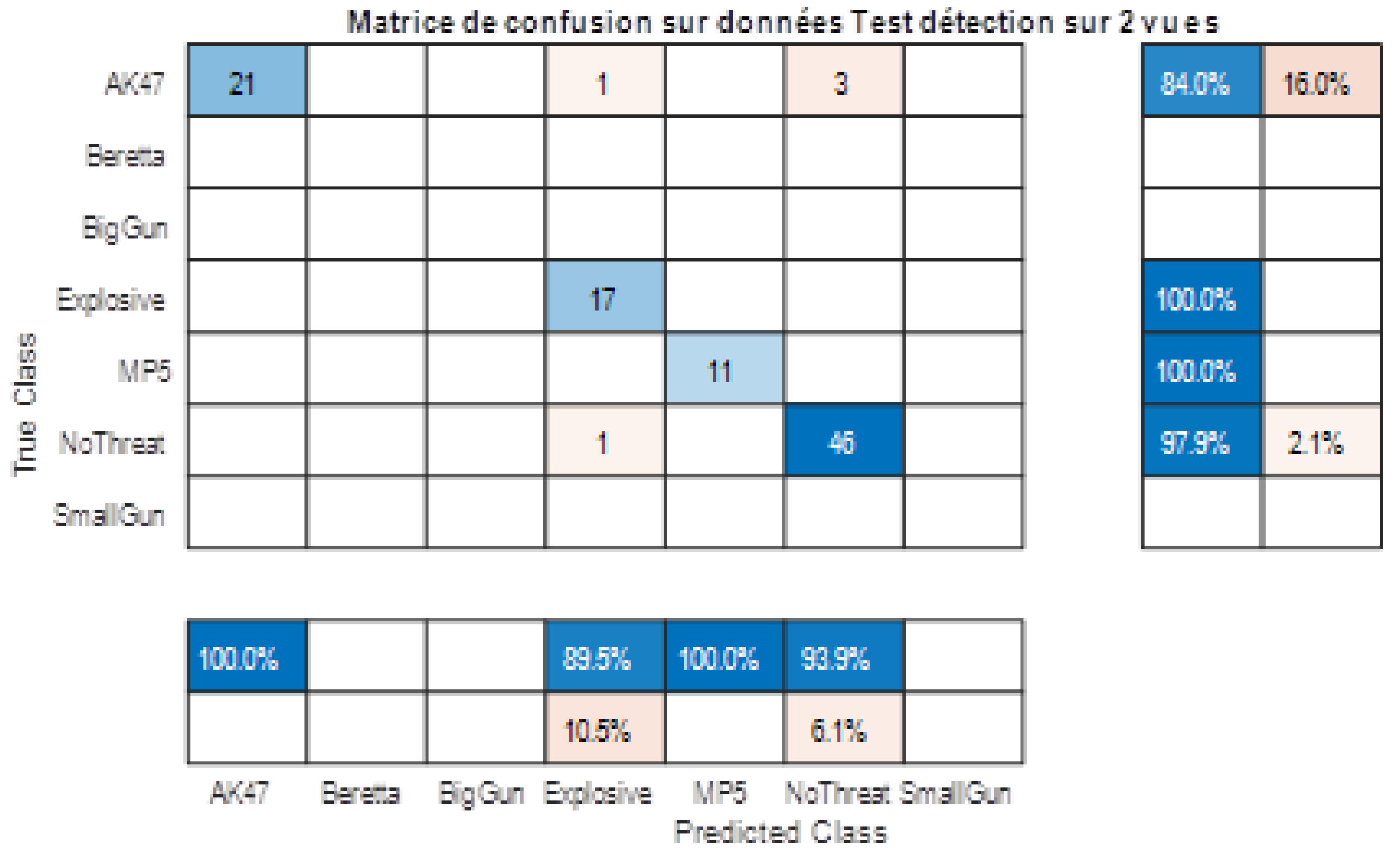

Field trial results must be interpreted with caution, because the tests did not fully separate subjects between the test and training sets, owing to the relatively limited number of volunteers. To mitigate this issue, we undertook a post-trial reprocessing of the data, carefully checking subject identities against the available ground truth.
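The leakage described above occurs when frames of the same volunteer appear in both the training and the test sets. A minimal way to avoid it, sketched below in plain Python, is to split on subject identifiers rather than on individual frames. The record layout and the 25% test fraction are assumptions for illustration; scikit-learn's `GroupShuffleSplit` implements the same idea.

```python
import random


def split_by_subject(records, test_fraction=0.25, seed=0):
    """Split records so that no subject appears in both the train and test sets.

    records: list of dicts with at least a "subject" key (hypothetical layout).
    """
    subjects = sorted({r["subject"] for r in records})
    rng = random.Random(seed)
    rng.shuffle(subjects)
    n_test = max(1, round(test_fraction * len(subjects)))
    test_subjects = set(subjects[:n_test])
    train = [r for r in records if r["subject"] not in test_subjects]
    test = [r for r in records if r["subject"] in test_subjects]
    return train, test


# Toy data: 8 subjects with 5 frames each.
records = [{"subject": f"S{s:02d}", "frame": f} for s in range(8) for f in range(5)]
train, test = split_by_subject(records)
overlap = {r["subject"] for r in train} & {r["subject"] for r in test}
print(len(train), len(test), overlap)
```

A fixed list of held-out subjects, as in the 4-subject test sets of Figures 20 and 21, achieves the same separation deterministically.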

We then retrained and tested the AI on fully independent datasets. We also added two classes with threats available only in laboratory experiments (BigGun and SmallGun, different from the Beretta). The resulting confusion matrix is shown in Figure 27 (decision on 2 successive frames).

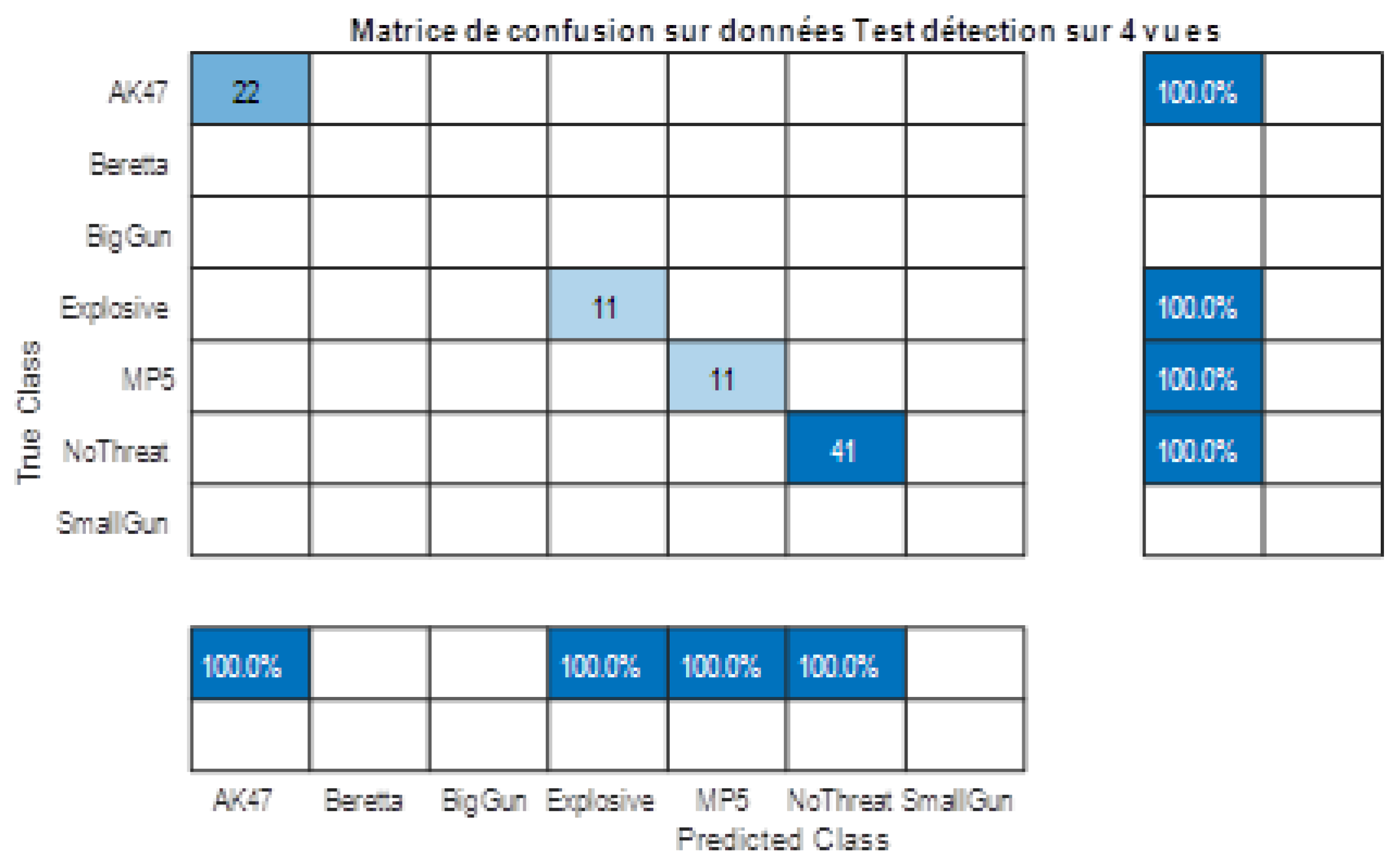

Overall performance increases with the number of training subjects and the number of accumulated detections, and confirms the announced ~95% accuracy on major threat detection (decision on 2 successive detections).

Moreover, this reprocessing enables us to simulate an increase in the system frame rate by combining several passages of the same subject (with, of course, the same threat conditions). The positive impact of doubling the frame rate is clearly shown in the confusion matrix of Figure 28.

4. Perspectives for an Operational Sensor: Wider Door and Wall Configurations

The MIC prototype exhibits the ability to automatically detect threats concealed under the clothing of pedestrians in motion, without interrupting their movement. This is accomplished using COTS low-cost components that comply with EU regulations concerning civilian health in the presence of electromagnetic sources.

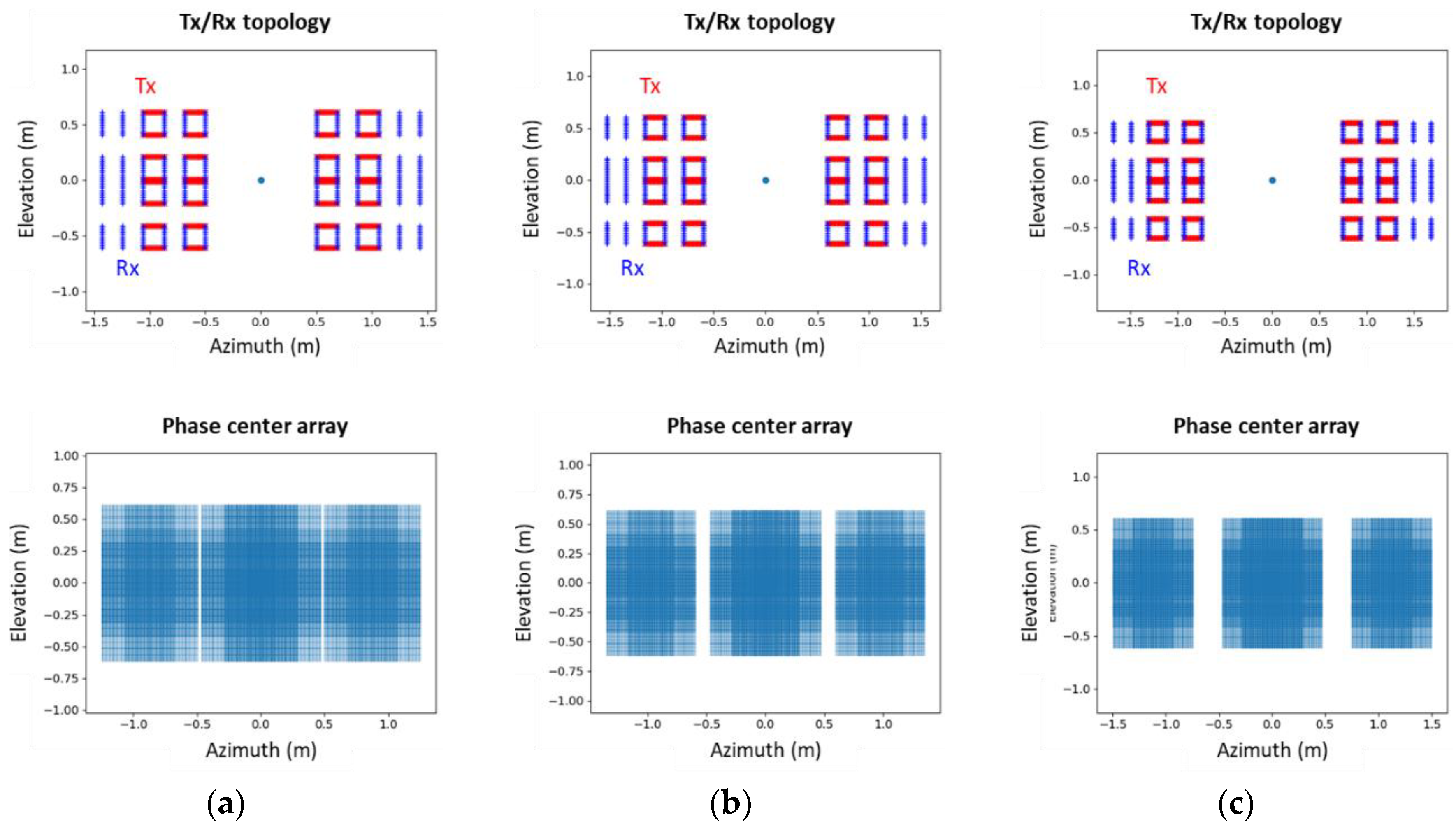

However, certain aspects require improvement before the prototype can be transferred to a higher TRL and proposed to end users as a COTS solution. One such aspect is discretion and, because MIC is built as a walkthrough solution, the space between the left and right panels. While an 87 cm passage is typical of a standard office door, it may prove too narrow for people carrying large wheeled suitcases or for people with disabilities, such as wheelchair users. Widening the passage is possible, but it could lead to a loss in performance.

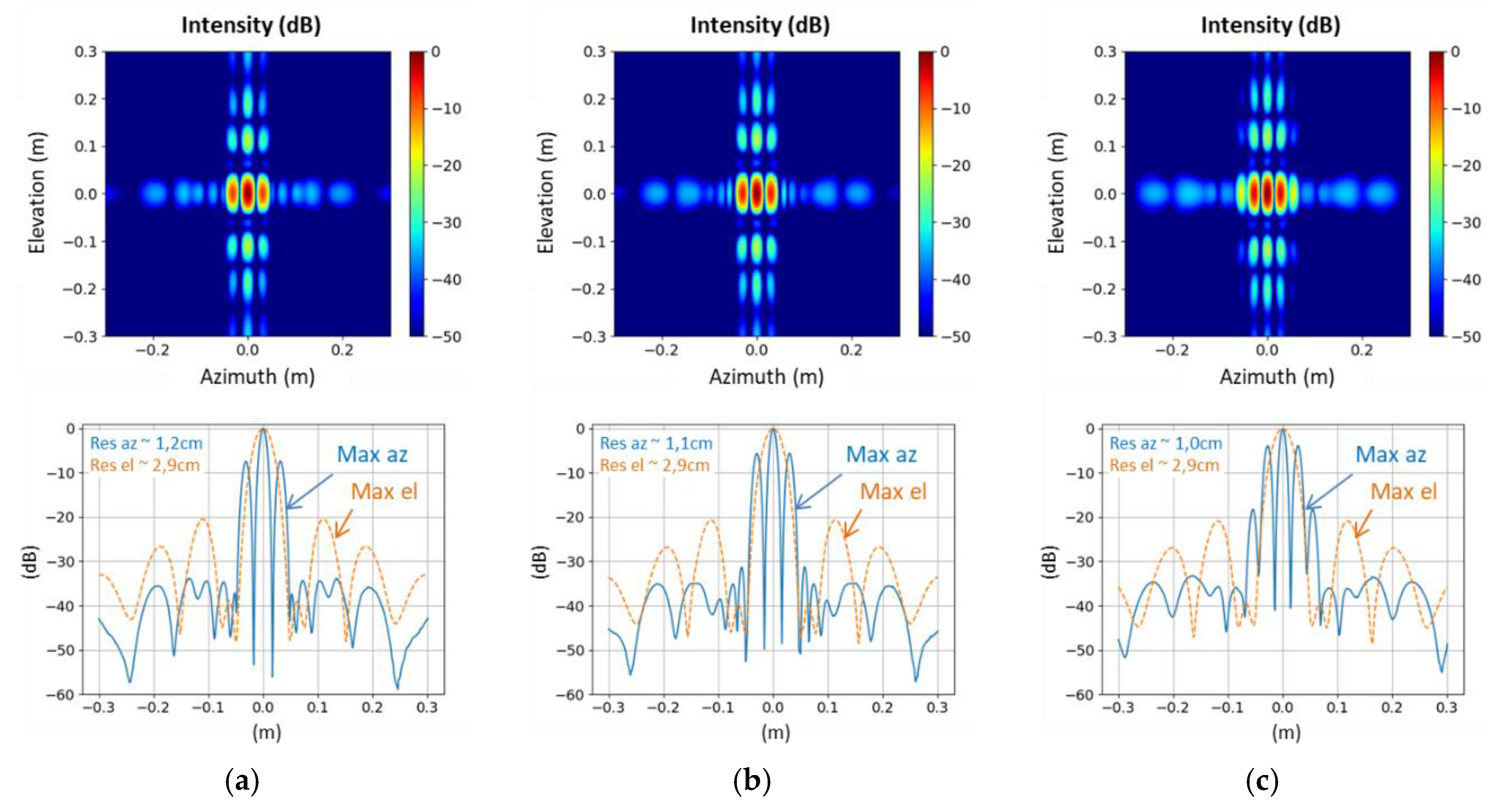

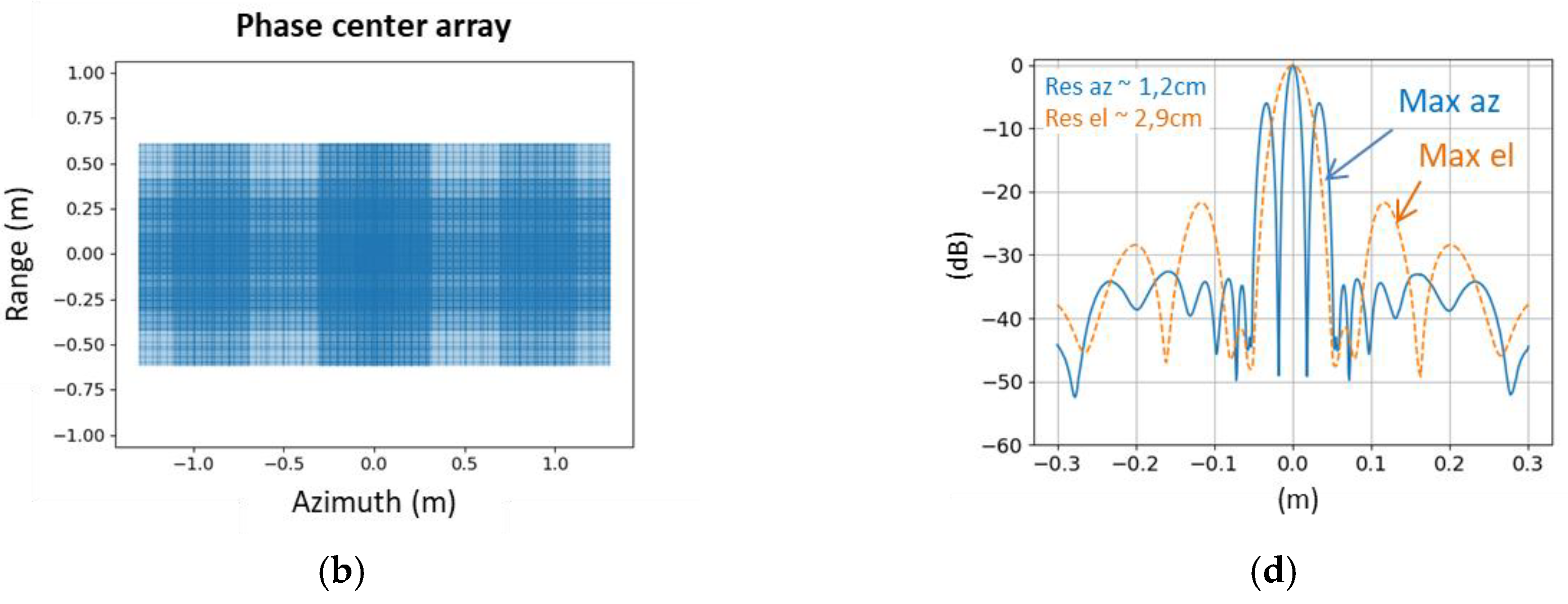

Simulations of possible artefacts arising when this “door” passage is widened, with a system configuration similar to the one used for the field trial in Rome, are presented in Figure 29 for three door widths: 1 m, 1.2 m and 1.5 m.

Figure 30 shows the maximal intensity in the azimuth/elevation plane obtained for a point-like target located at 1.5 m from the door passage.

As the gap between the left and right panels widens, the antenna aperture along the azimuth axis grows as well, which improves the resolution in the azimuth direction. However, gaps appear in the virtual antenna array, raising the side-lobe level in the azimuth direction.
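The link between panel spacing, aperture length and gaps in the virtual array can be checked with the usual monostatic-equivalent approximation, in which each transmitter/receiver pair is represented by a phase centre at the midpoint of the two antennas. The sketch below keeps only the azimuth coordinate and assumes, hypothetically, eight antenna positions per panel spread over a 0.3 m panel width, with transmitters and receivers at the same positions; the real MIC layout (Figure 29) differs.

```python
import numpy as np


def panel_antennas_x(door_width, panel_width=0.3, n_per_panel=8):
    """Hypothetical azimuth positions (m) of antennas on the left and right panels."""
    left = np.linspace(-door_width / 2 - panel_width, -door_width / 2, n_per_panel)
    right = -left[::-1]  # the right panel mirrors the left one
    return np.concatenate([left, right])


def phase_centres_x(tx_x, rx_x):
    """Midpoint of every Tx/Rx pair, sorted: the virtual (phase-centre) array."""
    return np.sort(((tx_x[:, None] + rx_x[None, :]) / 2).ravel())


for width in (1.0, 1.2, 1.5):
    ant = panel_antennas_x(width)
    pc = phase_centres_x(ant, ant)
    aperture = pc.max() - pc.min()
    largest_gap = np.diff(pc).max()
    print(f"door {width:.1f} m: virtual aperture {aperture:.2f} m, largest gap {largest_gap:.2f} m")
```

Under these assumptions, cross-panel pairs always produce phase centres within half a panel width of the door centre, whereas same-panel pairs stay at the panels. The aperture therefore grows as w + 2p (better azimuth resolution) while the hole between the two groups grows as (w - p)/2 (higher side lobes), with w the door width and p the panel width.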

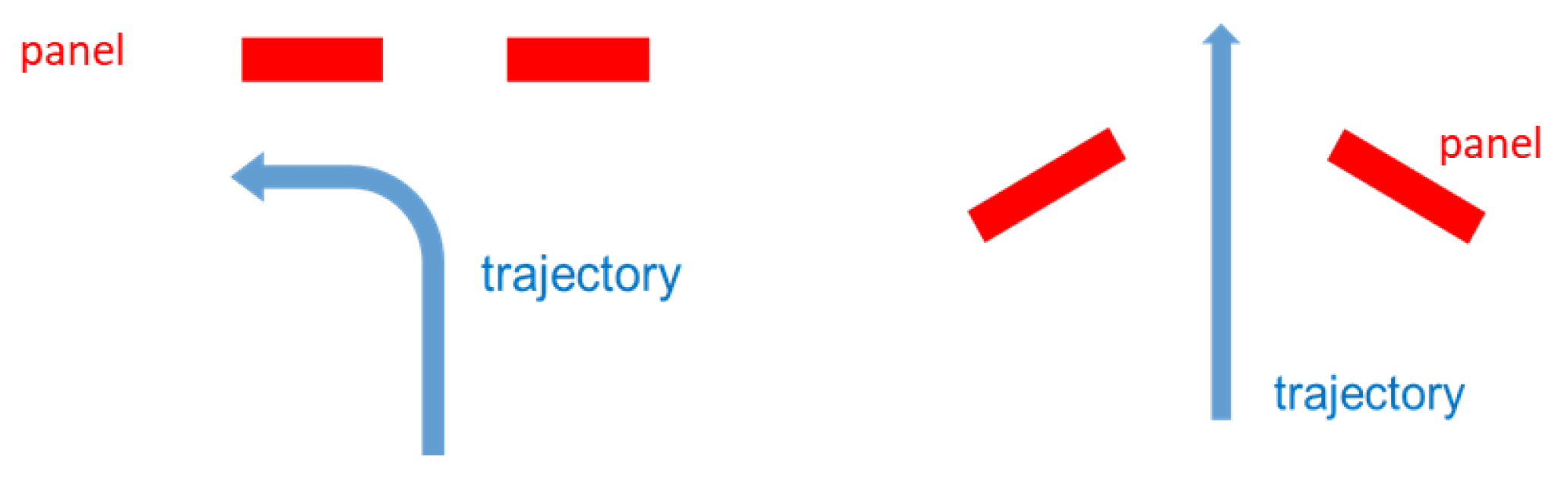

This will inevitably degrade image quality. However, the impact on the performance of the Automated Target Detection has yet to be assessed. Since the detection relies on a CNN, training on a database of degraded images may still yield an acceptable threat detection rate. This point deserves investigation in future work.

It would be of significant interest to analyze the performance of the Automated Target Detection algorithm when the system is configured in the "Wall" layout. This configuration is more discreet than the "Door" configuration and imposes no constraints on the flow of passengers. However, a significant drawback is that individuals would have to turn a corner located in front of the system (as illustrated in Figure 31), which may reduce weapon visibility and hence detection. On the other hand, this configuration also offers an opportunity to view people from the side or the back, particularly when two wall sensors are installed in a corner.

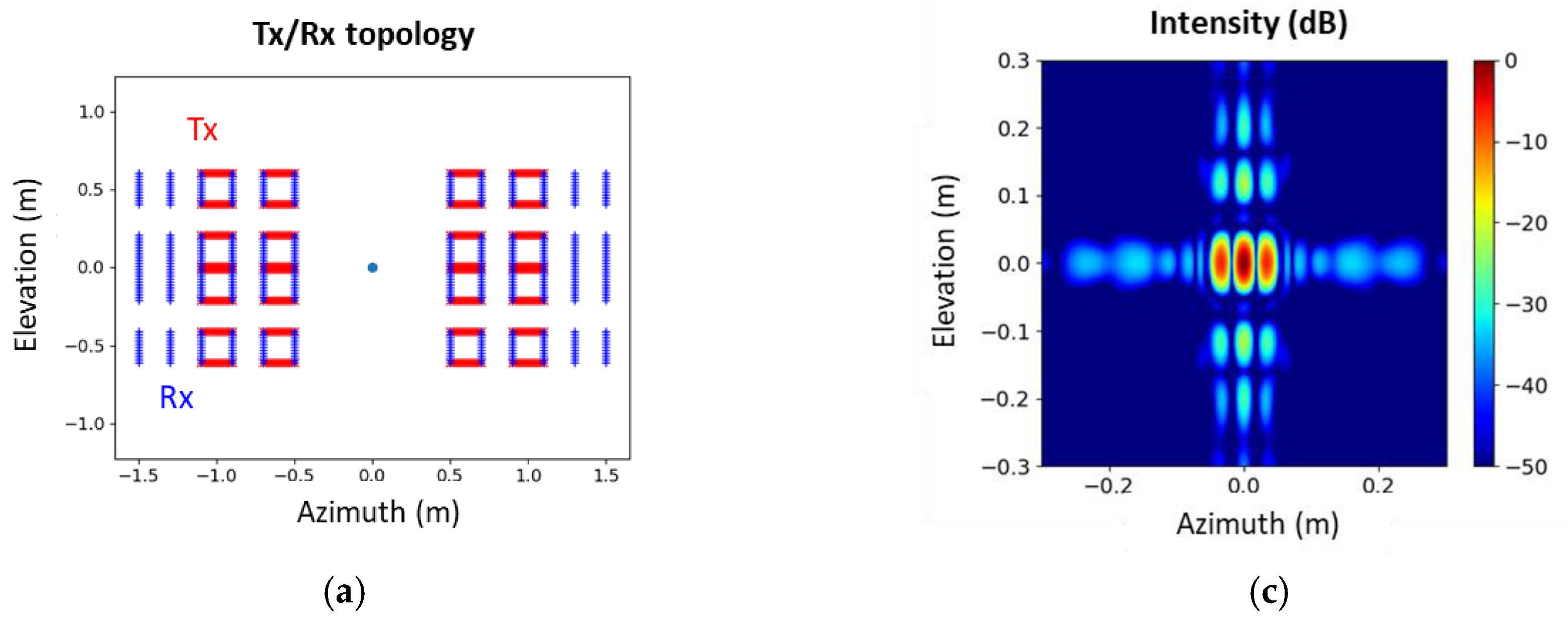

The results of applying the back-projection algorithm in the "Wall" layout are presented in Figure 32 for a point-like target. Notably, the virtual antenna array is a full array without any gaps, in contrast to the "Door" configurations illustrated in Figures 29 and 30. It would therefore be worthwhile to evaluate the performance of a newly trained Automated Target Detection algorithm on this sensor configuration, as proposed above for larger distances between panels in the "Door" configuration.
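For readers unfamiliar with back-projection, the sketch below images a single point target with a one-dimensional, gap-free virtual array, keeping only the azimuth coordinate and the range. It assumes the monostatic-equivalent phase-centre model, an ideal noiseless point scatterer and the frequency sweep of Table 1 (81 points from 6.5 GHz to 10.5 GHz). The array length, element spacing and target position are hypothetical, and the actual MIC reconstruction is three-dimensional and includes calibration, a Kaiser filter and a redundancy filter (Figures 7 and 8), all omitted here.

```python
import numpy as np

C = 299_792_458.0                       # speed of light (m/s)
freqs = np.linspace(6.5e9, 10.5e9, 81)  # frequency sweep from Table 1

# Hypothetical gap-free virtual array: phase centres along azimuth x at z = 0.
array_x = np.linspace(-0.6, 0.6, 121)
target = (0.1, 1.5)  # point target at x = 0.1 m, range z = 1.5 m


def two_way_phase(x_pc, x, z, f):
    """exp(-j 4 pi f R / c) for a phase centre at (x_pc, 0) and a point at (x, z)."""
    r = np.hypot(x - x_pc, z)
    return np.exp(-1j * 4 * np.pi * f * r / C)


# Simulated measurements s[k, f] for every phase centre k and frequency f.
s = two_way_phase(array_x[:, None], target[0], target[1], freqs[None, :])

# Back-projection along an azimuth line at the target range: compensate the
# two-way phase expected for each pixel, then sum coherently over elements and frequencies.
pixels_x = np.linspace(-0.5, 0.5, 501)
image = np.array([
    np.abs(np.sum(s * np.conj(two_way_phase(array_x[:, None], px, target[1], freqs[None, :]))))
    for px in pixels_x
])
image_db = 20 * np.log10(image / image.max())  # normalized intensity in dB

peak_x = pixels_x[np.argmax(image)]
width_3db = (image_db > -3).sum() * (pixels_x[1] - pixels_x[0])
print(f"peak at x = {peak_x:.3f} m, -3 dB azimuth width = {width_3db * 100:.1f} cm")
```

Only the pixel at the true target position receives a fully coherent sum; removing a block of elements from `array_x` to mimic the door gap and plotting `image_db` shows the side-lobe rise discussed above.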

5. Conclusions

To our knowledge, the MIC system represents a breakthrough in MIMO radar imaging, as it is the first low-cost system of its kind for imaging walking persons using COTS components. The system has a transmission time of 70 ms and a total measurement time of approximately 165 ms, allowing quasi-real-time measurements of walking persons.

The MIC system has been installed and operated within the DEXTER system prototype, a multi-sensor detection system, at the Anagnina metro station in Rome, Italy. The system discreetly scanned 550 people carrying different objects, achieving a success rate of around 95% in these measurements.

The MIC system provides a resolution of 2 cm at 150 cm from the array aperture. The system's power consumption is 72 W, and it illuminates with a power density significantly lower than the reference level of 10 W/m² for members of the public.
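Two textbook stepped-frequency relations help put these figures in context: the range resolution c/(2B) set by the bandwidth B, and the maximum unambiguous range c/(2Δf) set by the frequency step Δf. The sketch below evaluates them with the Table 1 parameters, and also evaluates the common cross-range approximation λ_c R/(2D) to show the aperture length D that a 2 cm lateral resolution at 1.5 m would call for. These are idealized estimates under our assumptions, not the authors' derivation.

```python
C = 299_792_458.0  # speed of light (m/s)

f_min, f_max, n_freq = 6.5e9, 10.5e9, 81  # Table 1
bandwidth = f_max - f_min                 # 4 GHz
f_step = bandwidth / (n_freq - 1)         # 50 MHz between frequency points
wavelength = C / ((f_min + f_max) / 2)    # wavelength at the centre frequency


def range_resolution(b):
    """Range resolution of a sweep with bandwidth b (Hz)."""
    return C / (2 * b)


def unambiguous_range(df):
    """Maximum unambiguous range of a stepped-frequency sweep with step df (Hz)."""
    return C / (2 * df)


def aperture_for_cross_range(resolution, distance, lam):
    """Aperture length D from the approximation resolution ~ lam * distance / (2 D)."""
    return lam * distance / (2 * resolution)


print(f"range resolution: {range_resolution(bandwidth) * 100:.2f} cm")
print(f"unambiguous range: {unambiguous_range(f_step):.2f} m")
print(f"aperture for 2 cm at 1.5 m: {aperture_for_cross_range(0.02, 1.5, wavelength):.2f} m")
```

Under these idealized relations the bandwidth alone limits range resolution to about 3.75 cm, so the quoted 2 cm figure presumably refers to lateral resolution, and the roughly 3 m unambiguous range comfortably covers the 50 cm to 250 cm reconstruction window.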

The MIC system is currently operational, producing 3D radar images at 2.8 frames per second (fps). Once the image reconstruction time (currently about 350 ms) is reduced, the system will be able to produce 3D radar images at 6 fps. The reconstructed range currently spans 50 cm to 250 cm with 100 points. The reconstruction time could be reduced further by restricting the voxels reconstructed along the z-axis to the range position of the walking person.
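The quoted frame rates are consistent with a simple pipelined model in which measurement and reconstruction of successive frames overlap, so that the slower stage sets the frame rate: 1/0.35 s gives about 2.9 fps today, close to the reported 2.8 fps, and 1/0.165 s gives about 6 fps once reconstruction is faster than the ~165 ms measurement. This pipelining model is our assumption, not a description of the MIC software. The sketch also estimates, for a subject walking at ~2 m/s straight along the range axis (another assumption), how far they move during one measurement and how many frames fall within the 50 cm to 250 cm reconstructed range.

```python
def frame_rate(measurement_s, reconstruction_s):
    """Frames per second if measurement and reconstruction are pipelined (assumed)."""
    return 1.0 / max(measurement_s, reconstruction_s)


def frames_in_range(speed_mps, fps, range_span_m):
    """Number of frames captured while a subject crosses the reconstructed range."""
    return range_span_m * fps / speed_mps


MEASUREMENT = 0.165  # s, total measurement time reported above
SPEED = 2.0          # m/s, typical walking speed in the field trial
SPAN = 2.5 - 0.5     # m, reconstructed range from 50 cm to 250 cm

print(f"motion during one measurement: {SPEED * MEASUREMENT * 100:.0f} cm")
for recon in (0.350, 0.150):  # current and a hypothetical faster reconstruction
    fps = frame_rate(MEASUREMENT, recon)
    print(f"reconstruction {recon * 1e3:.0f} ms -> {fps:.1f} fps, "
          f"{frames_in_range(SPEED, fps, SPAN):.1f} frames per passage")
```

Under these assumptions the current rate yields roughly three frames per passage, which is consistent with decisions based on 2 successive detections, and a 6 fps rate would roughly double that, in line with the doubled-frame-rate simulation of Figure 28.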

Lastly, antenna configuration and discretion could be improved by enlarging the door width or moving to a fully discreet wall configuration. However, these tasks represent significant scientific challenges that will require further research.

Author Contributions

Conceptualization, Luc Vignaud, Valentine Wasik, Reinhold Herschel, Harun Cetinkaya and Thomas Brandes; Methodology, Remi Baque, Luc Vignaud, Valentine Wasik and Harun Cetinkaya; Software, Luc Vignaud, Valentine Wasik, Nicolas Castet, Harun Cetinkaya and Thomas Brandes; Validation, Luc Vignaud, Valentine Wasik and Harun Cetinkaya; Investigation, Remi Baque, Luc Vignaud, Nicolas Castet, Reinhold Herschel, Harun Cetinkaya and Thomas Brandes; Resources, Reinhold Herschel; Writing – original draft, Remi Baque; Supervision, Remi Baque and Reinhold Herschel.

References

- D. M. Sheen, J. L. Fernandes, J. R. Tedeschi, D. L. McMakin, A. M. Jones, W. M. Lechelt, and R. H. Severtsen, “Wide-bandwidth, wide-beamwidth, high-resolution, millimeter-wave imaging for concealed weapon detection,” in Passive and Active Millimeter-Wave Imaging XVI, D. A. Wikner and A. R. Luukanen, Eds., vol. 8715, International Society for Optics and Photonics. SPIE, 2013, pp. 65–75.

- D. M. Sheen, D. L. McMakin, W. M. Lechelt, and J. W. Griffin, “Circularly polarized millimeter-wave imaging for personnel screening,” in Passive Millimeter-Wave Imaging Technology VIII, R. Appleby and D. A. Wikner, Eds., vol. 5789, International Society for Optics and Photonics. SPIE, 2005, pp. 117–126.

- X. Zhuge and A. Yarovoy, “A sparse aperture MIMO-SAR-based UWB imaging system for concealed weapon detection,” IEEE Transactions on Geoscience and Remote Sensing, vol. 49, no. 9, pp. 509–518, 2011.

- E. Anadol, I. Seker, S. Camlica, T. O. Topbas, S. Koc, L. Alatan, F. Oktem, and O. A. Civi, “UWB 3D near-field imaging with a sparse MIMO antenna array for concealed weapon detection,” in Radar Sensor Technology XXII, K. I. Ranney and A. Doerry, Eds., vol. 10633, International Society for Optics and Photonics. SPIE, 2018, pp. 458–472.

- S. S. Ahmed, A. Schiessl, and L.-P. Schmidt, “A novel fully electronic active real-time imager based on a planar multistatic sparse array,” IEEE Transactions on Microwave Theory and Techniques, vol. 59, no. 12, pp. 3567–3576, 2011.

- D. M. Sheen, D. L. McMakin, H. D. Collins, T. E. Hall, and R. H. Severtsen, “Concealed explosive detection on personnel using a wideband holographic millimeter-wave imaging system,” in Signal Processing, Sensor Fusion, and Target Recognition V, I. Kadar and V. Libby, Eds., vol. 2755, International Society for Optics and Photonics. SPIE, 1996, pp. 503–513.

- https://vayyar.com/public-safety/.

- Council of the European Union, 1999/519/EC: Council Recommendation of 12 July 1999 on the limitation of exposure of the general public to electromagnetic fields (0 Hz to 300 GHz), 1999.

- M. T. Ribeiro, S. Singh, and C. Guestrin, “‘Why should I trust you?’ Explaining the predictions of any classifier,” in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Aug. 2016, pp. 1135–1144.

- R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra, “Grad-CAM: Visual explanations from deep networks via gradient-based localization,” in Proceedings of the IEEE International Conference on Computer Vision, Oct. 2017, pp. 618–626.

- S. Ahmed, “Electronic microwave imaging with planar multistatic arrays,” PhD thesis, University of Erlangen, Germany, 2015.

- S. Thomas, A. Froehly, C. Bredendiek, R. Herschel and N. Pohl, "High Resolution SAR Imaging Using a 240 GHz FMCW Radar System with Integrated On-Chip Antennas," 2021 15th European Conference on Antennas and Propagation (EuCAP), Dusseldorf, Germany, 2021, pp. 1-5.

Figure 1.

Objective to evolve from short-range detection with check-point issue to automatic discreet detection in the passenger flow.

Figure 2.

COTS module with MIMO transmit and receive antenna array.

Figure 3.

Tree structure assembling the 24 COTS transmit and receive modules in two sub-systems (TOP and BOT).

Figure 4.

MIC Door configuration based on 24 COTS transmit and receive modules organized in two sub-systems (TOP and BOT).

Figure 5.

Overall system with 2 workstations including 4 GPUs.

Figure 6.

Imaging algorithm with functions.

Figure 7.

Kaiser filter with a coefficient of 4 over the virtual array aperture.

Figure 8.

Redundancy filter coefficients represent how many virtual elements are co-located at the same spatial position.

Figure 9.

Transmission and reception angles in the measurement scene.

Figure 10.

Measured signal consists of channel responses of transmit and receive antennas, coupling effect, and target reflection.

Figure 11.

Active calibration method. (a) An active calibration pair is employed on the ACU; (b) Metal plate measurement to obtain a channel response of this active calibration pair; (c) Transmission measurement between the active calibration pair and the proposed imaging system.

Figure 12.

(a) 2D PSF in the x-y plane; (b) 1D PSF along the x- and y-axes.

Figure 13.

Simulation geometry.

Figure 14.

SNR values for transmitter and receiver antennas.

Figure 15.

Signal measurements at 3 m.

Figure 16.

Illustration of the extraction of parameters of increasing granularity by a 2D CNN network, here on a natural image (Matlab © illustration).

Figure 17.

Explainable AI example on MIC AK47 detection: red spot indicates main body area contributing to threat detection by the AI classifier.

Figure 18.

Threats used for MIC performance measurement scenarios (Small Gun, semi-automatic MP5 also called Big Gun, AK-47, explosive belt with nuts and bolts).

Figure 19.

Confusion matrix with random separation in static 5-class classification.

Figure 20.

5-class confusion matrices with: (a) Test subjects #1, #2, #3 & #4 and training on all other subjects; (b) Test subjects #7, #8, #14 & #20 and training on all other subjects.

Figure 21.

2-class confusion matrices with: (a) Test subjects #1, #2, #3 & #4 and training on all other subjects; (b) Test subjects #7, #8, #14 & #20 and training on all other subjects.

Figure 22.

Layout of the measurement scene in Anagnina metro station. Passengers first pass through the MIC system.

Figure 23.

MIC implementation in Rome Anagnina metro station without and with advertising panels hiding antennas.

Figure 24.

Synchronization of the imaging system MIC is accomplished via Ethernet connection to NTP server.

Figure 25.

Post-field-trial confusion matrices on field trial data, using simulated accumulation of 1 to 10 detections.

Figure 26.

Post field trial mean accuracy versus simulated detections accumulation.

Figure 27.

Post field trial confusion matrix on independent subject selection (decision made on 2 successive detections).

Figure 28.

Post field trial confusion matrix on independent subject selection (decision made on 4 simulated successive detections).

Figure 29.

Sensor antennas array and corresponding phase center array in the azimuth/elevation plane for different spacing between MIC left and right panels: (a) 1 m; (b) 1.2 m; (c) 1.5 m.

Figure 30.

Maximal intensity in the azimuth/elevation plane and cuts in azimuth and elevation direction in dB (normalized) for different spacing between MIC left and right panels: (a) 1 m; (b) 1.2 m; (c) 1.5 m.

Figure 31.

Two different configurations for MIC: (left) Wall and (right) Door.

Figure 32.

(a) Sensor antennas array in the azimuth/elevation plane for “Wall” configuration. (b) Corresponding phase center array. (c) Maximal intensity in the azimuth/elevation plane in dB (normalized). (d) Maximal intensity levels in the azimuth or elevation direction in dB (normalized).

Table 1.

System hardware parameters.

| Parameters | Value |
|---|---|
| Module – Frequency Range | 6.5 GHz – 10.5 GHz |
| Module – Number of Frequencies | 81 points |
| Total number of transmitter antennas | 352 |
| Total number of receiver antennas | 528 |
| Total number of modules | 24 |

Table 2.

MIC fail cases, occurrences appear in parenthesis.

| Confusions | False positives | False negatives |
|---|---|---|
| Small gun instead of Big gun (2) | Small gun on Woman (2) | Big gun on woman (2) |
| Explosive belt instead of Backpack on front (3) | Explosive belt on woman (1) | Small gun on woman (2) |
| AK47 instead of big Metallic Bottle on front (1) | AK47 on woman with arms crossed (1) | Small gun on man (3) |
| | Small gun on “phone texting” (1) | Explosive belt on woman (1) |
| | Big gun on “phone texting” (1) | Explosive belt on man (2) |
| | Umbrella confused with Small gun (1) | AK47 in wrong position |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).