1. Introduction

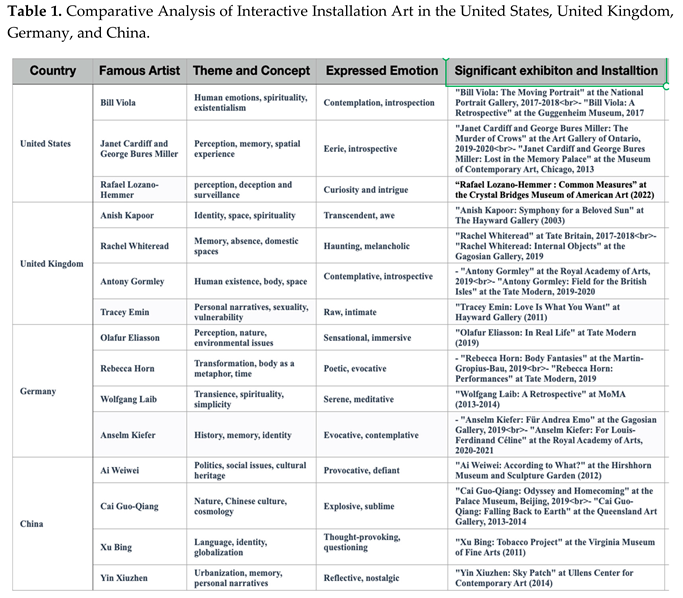

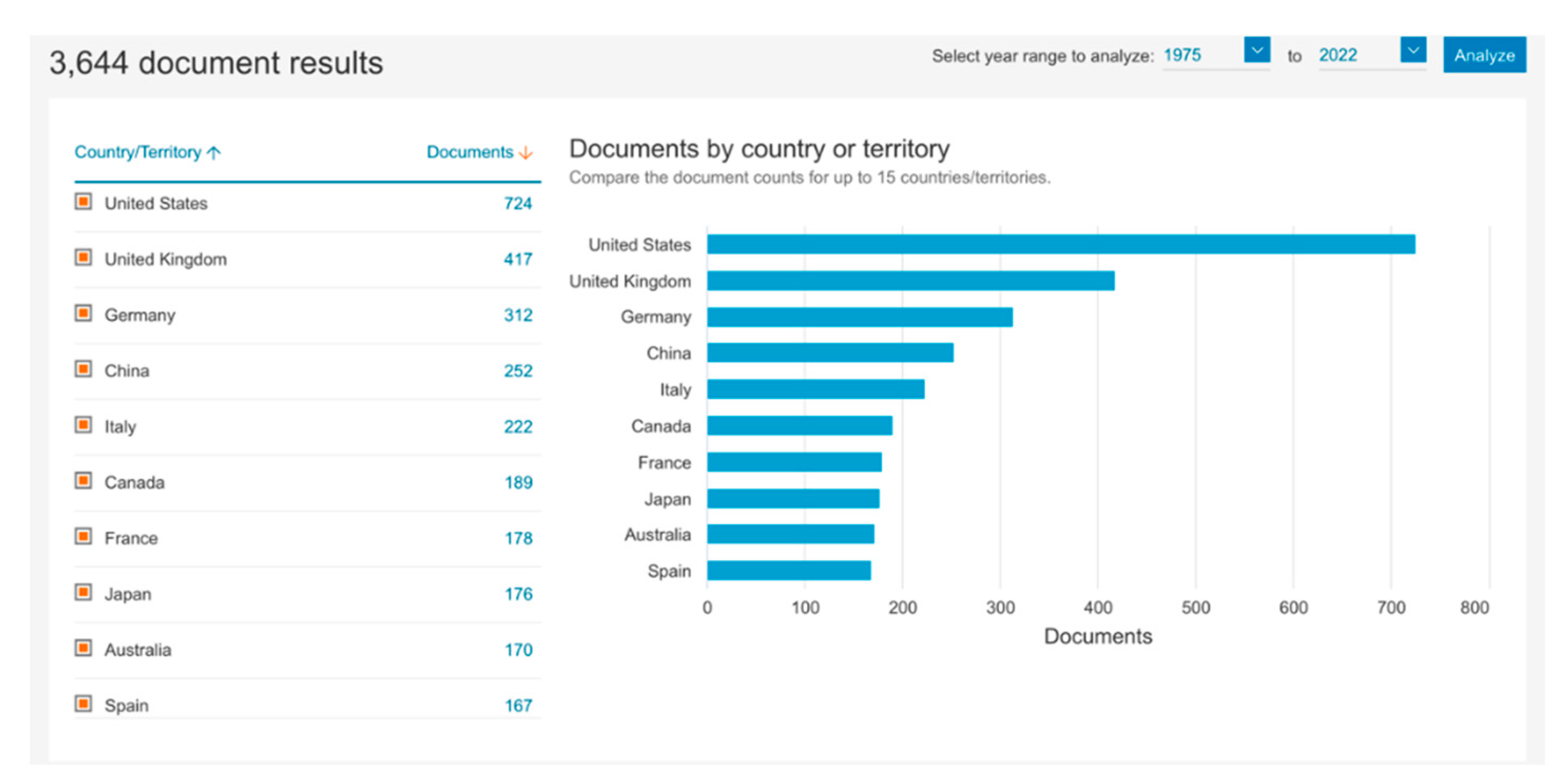

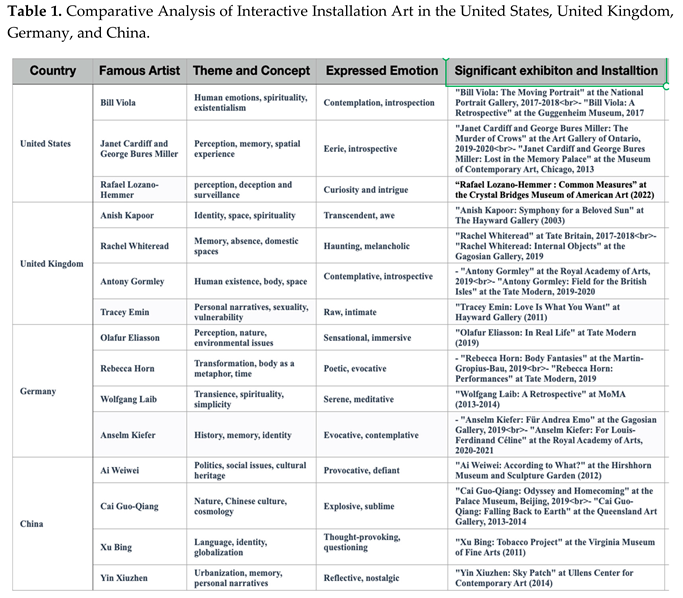

Interactive installations have gained significant prominence as a form of artistic expression, fueled by technological advances and the quest for more personalized and adaptable art forms. This trend has led to the integration of artificial intelligence (AI) into interactive installations, enabling them to detect and respond to people's gestures, movements, and facial expressions (Patel et al., 2020; Cao et al., 2021). Through natural language processing, such installations can also recognize and interpret spoken or written language, allowing visitors to interact through speech or text (Tidemann, 2015; Gao & Lin, 2021; Ronchi & Benghi, 2014). Research in this field is relatively well developed in the United States, the United Kingdom, Germany, and other countries (Figure 1).

AI algorithms can process and analyze large volumes of data in real time, allowing installations to respond dynamically to participants' behavior and emotions. This opens new possibilities for artists and designers to create emotionally rich, engaging experiences that adapt intelligently to individuals. AI also contributes to the immersive nature of interactive installations by capturing and interpreting participants' emotional cues: with a better model of participants' emotional states and reactions, an installation can tailor its responses and thereby heighten engagement.

However, despite the growing popularity and continuous advancement of interactive installations, a comprehensive understanding of the specific emotional responses they elicit is still lacking. The interplay between sensory stimuli, multi-dimensional physical interaction, participant engagement, and emotional response has yet to be fully explored, which limits the ability of artists and designers to create emotionally resonant experiences for audiences. Furthermore, traditional data analysis methods struggle to extract meaningful patterns and insights from the data these installations collect, creating demand for more advanced techniques such as AI algorithms. The central challenge is to unravel the complex relationships among the many factors that influence emotional responses in interactive installations. AI algorithms can help overcome this hurdle through sophisticated data analysis, pattern recognition, and forecasting, ultimately providing a deeper understanding of the underlying dynamics.

2. Literature Review

Rapid advances in computing power, big data, and machine learning are enabling artists to incorporate artificial intelligence techniques into their installation art, providing audiences with immersive and engaging experiences. These installations use AI algorithms to create dynamic, responsive environments that react to the viewer's movements, gestures, or other input (Patel et al., 2020; Cao et al., 2021). They can also use computer vision to track audience movement and generate music or visual effects based on audience behavior (Raptis et al., 2021b; Pelowski et al., 2018). This research focuses on using artificial intelligence algorithms to measure emotional responses to interactive installation art. To this end, we review the relevant literature to examine the current state of interactive installation art and identify research gaps.

2.1. Artificial intelligence in interactive installation art

The impact of artificial intelligence on art and design is profound: it is changing how art develops at an unprecedented speed, and artists use AI in various ways (Manovich, 2019), gaining new means to create dynamic, responsive, and personalized experiences for their audiences. One essential application of AI in interactive installation art is to make the artwork respond to the audience's behavior in real time through machine learning algorithms. For example, a smart mirror can recognize the viewer's face, track their movements, and change its appearance or behavior accordingly, creating a more personalized experience (Patel et al., 2020). Another use of AI in interactive art installations is natural language processing (NLP), which enables an artwork to understand and respond to written or spoken language. For example, the "Molten Memories" installation uses NLP to create a responsive and immersive visitor experience, transforming the building's walls into a dynamic, changing display of light and sound (Rajapakse & Tokuyama, 2021). "You Are What You See" uses NLP and machine learning algorithms to generate appropriate responses to audience communication (Akten et al., 2019). Such work opens new possibilities for interactive installations that respond to the viewer's questions or commands, creating a more conversational and personal experience.

By creating responsive and immersive experiences, AI algorithms can elicit stronger emotional responses from the audience. A large body of literature explores the role of emotion in interactive installations and its effect on user experience, emphasizing its contribution to a sense of presence and immersion. Interactive devices can respond to the user's emotional state, creating a more personalized and engaging experience and deepening the interaction by evoking emotions such as surprise, joy, excitement, curiosity, or awe (Xu & Wang, 2021). Emotions can be elicited by various elements of an installation, such as visual and auditory stimuli, physical interaction with the artwork, and narrative or conceptual content, and they range from joy and excitement to more complex emotions such as fear, anxiety, or confusion (Savaş et al., 2021).

Interactive installations can evoke a range of positive and negative emotions, and designers and curators must understand the role of emotion in the aesthetic experience of interactive installations to create impactful experiences for viewers (Duarte & Baranauskas, 2020). Emotional experience is an integral part of user experience, and emotional responses are influenced by multiple factors, including the physical environment and the audience's experience (Szubielska et al., 2021). Designers should prioritize emotions when creating interactive experiences by understanding the importance of the environment and the causes of emotional responses and by developing strategies for emotional experiences (Lim et al., 2008). Elements to consider in designing interactive systems include storytelling, personalization, interactivity, users' prior knowledge and expectations, and cultural and social factors (Duarte & Baranauskas, 2020). The presence of other people in the physical environment of an installation can also affect the audience's emotional experience (Szubielska et al., 2021). The emotional impact of an interactive installation depends largely on its design and the sensory experience it provides (Capece & Chivăran, 2020; Szubielska et al., 2021; Rajcic & McCormack, 2020), as well as on how the environment is presented and on the characteristics of the actors involved (Her, 2014). For example, the "Rain Room" installation at the Museum of Modern Art in New York City allows visitors to walk through heavy rain without getting wet. It creates a sense of wonder and awe and evokes a strong emotional response (Random International, 2013). Movement is an essential element of the installation: visitors can move around and interact with the rain, experiencing the thrill of being surrounded by a downpour without getting wet.

The "Ripple" interactive installation uses light and sound to create a calming, meditative experience. Movement is integral to the installation, as users move and interact with light and sound waves, creating ripples and patterns (Rajcic & McCormack, 2020). The installation uses sensory cues to evoke an emotional response, allowing users to reflect on their emotional experience and connect with others who have had a similar experience.

In short, emotion plays a vital role in successful interactive installations, helping them create meaningful experiences for viewers. As AI becomes increasingly integrated into our lives, the literature reviewed above highlights the importance of emotional design for creating engaging and evocative interactive installations.

2.2. Research dimensions: sensory stimulation, multi-dimensional interaction, and engagement

Interactive installations have been studied extensively in four countries: the United States, Germany, the United Kingdom, and China. Table 1 compares interactive installation art in these countries, highlighting the design factors that elicit emotional responses. In particular, sensory stimulation, multi-dimensional interaction, and participation are crucial elements that contribute significantly to the emotional experiences associated with interactive installations.

2.2.1. Sensory stimulation

Sensory stimulation, meaning any input that activates our senses (visual, auditory, olfactory, gustatory, or tactile), is a crucial aspect of interactive installations. Different sensory stimuli can elicit different emotional responses, and multi-sensory combinations can evoke yet other emotions (Schreuder et al., 2016). Studies by Tidemann (2015) and Ronchi and Benghi (2014) highlight the potential of sensory stimuli, including music, sound effects, lighting, color, and CG animation, to create immersive environments and enhance emotional responses. These studies emphasize the importance of carefully designing and integrating sensory elements within interactive installations to elicit specific emotional states. Visually appealing and engaging content tends to elicit positive emotional responses, while repetitive or annoying sounds elicit negative ones (De Alencar et al., 2016). Music can significantly affect mood, and certain types of music elicit positive emotions and reduce symptoms of depression (Cappelen & Andersson, 2018). In the "Molten Memories" installation, for instance, the walls of the building are transformed into a dynamic, changing display of light and sound (Rajapakse & Tokuyama, 2021).

With the rapid development of science and technology, sensory technologies have emerged that stimulate not only vision and hearing but also touch, smell, and taste (Velasco & Obrist, 2021). Recent research explores touch, taste, and smell to enhance engagement in multi-sensory experiences. An interdisciplinary team at the SCHI Lab led by Marianna Obrist conducts extensive research on multi-sensory experiences, including interactive touch, taste, and smell experiences (Obrist et al., 2017). The team explored mid-air haptic technology to create an immersive experience for exhibition visitors (Vi et al., 2017). For example, the "I'm Sensing in the Rain" study created the illusion of raindrops falling through mid-air tactile stimuli, enhancing realism and immersion in virtual environments (Pittera et al., 2019). The Tate Sensorium exhibition engaged visitors' senses through a multi-sensory design combining taste, touch, smell, sound, and sight (Ablart et al., 2017). Advances in immersive technologies such as virtual reality (VR) and wearable devices have also produced interfaces involving smell, taste, and temperature that reshape multi-sensory experiences. Odor stimuli can influence whether people perceive their bodies as lighter or heavier (Brianza et al., 2019), while temperature perception can prime notions of social proximity and influence human behavior (Brooks et al., 2021). Likewise, the sense of taste plays a crucial role in our understanding of the consumption process and reflects emotional states.

According to Velasco and Obrist (2021), a multi-sensory experience is an impression formed by a specific event in which sensory elements such as color, texture, and smell are coordinated to create an impression of an object. These impressions can alter cognitive processes such as attention and memory, and designers draw on existing multi-sensory perception research and concepts to design them (Zald, 2003). Multi-sensory experiences can be physical, digital, or a combination of the two, spanning a continuum from completely real to completely virtual, and they can enhance participation (Raptis et al., 2021a).

2.2.2. Multi-dimensional interaction

By incorporating sensory engagement, spatial design, human-machine interaction, data-driven approaches, and narrative elements, interactive installation art offers a multi-dimensional experience beyond traditional visual art forms (Cao et al., 2021). Anadol (2022) explores how interactive installations, data visualizations, and spatial narratives can enable humans to engage with and navigate this multi-dimensional space, blurring the boundaries between the physical and digital realms. The interactive installation at the Mendelssohn Memorial Hall provides a multi-dimensional experience through immersive interaction, sensory engagement, technological integration, and spatial transformation. Merging technology and art, it allows visitors to participate actively in a virtual orchestra performance, creating a dynamic and captivating experience (Liu, 2021). The interactive system developed by Raptis et al. (2021) also provides a multi-dimensional experience. The visitor visually explores an art exhibit, trying to discover interesting areas, while an audio guide provides brief information about the exhibit and the gallery theme. The system uses eye tracking and voice commands to deliver real-time information to the visitor. Its evaluation study sheds light on evoking natural interactions within cultural heritage environments, on using micro-narratives for self-exploration and understanding of cultural content, and on the intersection between human-computer interaction and artificial intelligence in cultural heritage. The Reefs and Edge interactive installation (De Bérigny et al., 2014) likewise embodies a multi-dimensional experience, using tangible user interface objects and combining environmental science with multiple art forms to explore coral reef ecosystems threatened by climate change. The authors argue that a tangible user interface in an installation-art setting can help engage and inform the public about crucial environmental issues. The installation uses reacTIVision, which allows the computer simulation to pinpoint the location, identity, and orientation of the objects on the table's surface in real time as users move them.
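For readers unfamiliar with reacTIVision: it reports tracked fiducial markers to client software using the TUIO protocol, in which each object carries a session ID (unique to one placement on the table), a fiducial ID (which marker it is), a normalized x/y position, and a rotation angle, while periodic "alive" messages list the session IDs still present. The Python sketch below illustrates how a simulation could keep its model of the tabletop in sync with those messages. It assumes the messages have already been decoded from OSC into plain values; the class and method names (`TableState`, `on_set`, `on_alive`, `by_fiducial`) are hypothetical and not part of reacTIVision or any TUIO client library.

```python
from dataclasses import dataclass
import math


@dataclass
class TangibleObject:
    """State of one fiducial-tagged object on the table surface."""
    session_id: int   # unique per placement of an object on the table
    fiducial_id: int  # identifies which physical marker this is
    x: float          # normalized horizontal position, 0.0 to 1.0
    y: float          # normalized vertical position, 0.0 to 1.0
    angle: float      # rotation in radians, wrapped to [0, 2*pi)


class TableState:
    """Hypothetical tracker mirroring TUIO 'set' and 'alive' semantics."""

    def __init__(self) -> None:
        self.objects: dict[int, TangibleObject] = {}

    def on_set(self, session_id: int, fiducial_id: int,
               x: float, y: float, angle: float) -> None:
        # A 'set' message creates or updates the object with this session ID.
        self.objects[session_id] = TangibleObject(
            session_id, fiducial_id, x, y, angle % (2 * math.pi))

    def on_alive(self, alive_ids: list[int]) -> list[TangibleObject]:
        # Objects whose session ID is missing from 'alive' were lifted off.
        alive = set(alive_ids)
        removed = [o for sid, o in self.objects.items() if sid not in alive]
        for obj in removed:
            del self.objects[obj.session_id]
        return removed

    def by_fiducial(self, fiducial_id: int) -> list[TangibleObject]:
        # Several copies of the same marker may be on the table at once.
        return [o for o in self.objects.values() if o.fiducial_id == fiducial_id]
```

Keying the model on session ID rather than fiducial ID lets two copies of the same marker coexist on the table, and lets the simulation treat the removal of an object as a distinct event it can respond to.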

Interface design is also a critical factor in structuring shared experiences. Fortin and Hennessy (2015) describe how electronic artists used cross-modal interfaces based on intuitive modes of interaction such as gesture, touch, and speech to design interactive installations that engage people beyond the ubiquitous single-user "social cocooning" scenario. They explain that the multi-dimensional experience of interactive installations is achieved through thoughtful interface design that encourages collaboration, play, and meaning. Gu et al. (2022) find that diversifying the interfaces of affective interactive installations can enhance users' emotional interaction and create a more engaging atmosphere. By incorporating facial affect detection and implementing a wide range of input and output interfaces, installations can offer a diverse and immersive experience.

Body movement is essential to multi-dimensional interaction. 3D vision sensors such as the Microsoft Kinect and the Leap Motion Controller enable body-motion interaction, which can be combined with other modalities such as speech, touch, and vision to create more natural and powerful interactive experiences. According to Saidi et al. (2019), on-body tangible interaction with augmented and mixed reality devices uses the body as a physical support that constrains the movement of a device with multiple degrees of freedom (a 3D mouse). The 3D mouse offers enough degrees of freedom and accuracy to support the interaction, and using the body as a support lets users move around their environment while avoiding the fatigue of mid-air interaction. Turk (2014) notes challenges associated with body-motion interaction, such as the need for accurate sensing and recognition of movements, as well as issues of privacy and security.
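Depth sensors of this kind typically expose body tracking as a stream of 3D joint positions, on top of which gestures are recognized. The sketch below illustrates one simple rule-based approach, a "hand raised above the head" detector. It assumes joint coordinates in metres with the y axis pointing up; the joint names, margin values, and the `Joint` and `RaisedHandDetector` classes are hypothetical and do not come from the Kinect or Leap Motion SDKs. The hysteresis (separate thresholds for starting and ending the gesture) is one common way to cope with the sensing noise that makes accurate recognition a challenge.

```python
from dataclasses import dataclass


@dataclass
class Joint:
    x: float
    y: float  # height in metres, y axis pointing up (assumption)
    z: float


class RaisedHandDetector:
    """Rule-based 'hand above head' detector with hysteresis (illustrative)."""

    def __init__(self, enter_margin: float = 0.10, exit_margin: float = 0.05) -> None:
        # The hand must rise enter_margin above the head to start the gesture
        # and drop below head + exit_margin to end it, so jitter around a
        # single threshold does not make the state flicker on and off.
        self.enter_margin = enter_margin
        self.exit_margin = exit_margin
        self.raised = False

    def update(self, skeleton: dict[str, Joint]) -> bool:
        head = skeleton["head"].y
        hand = max(skeleton["hand_left"].y, skeleton["hand_right"].y)
        if not self.raised and hand > head + self.enter_margin:
            self.raised = True
        elif self.raised and hand < head + self.exit_margin:
            self.raised = False
        return self.raised
```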

2.2.3. Engagement

The user experience should be designed to create engagement and opportunities for active participation, enhancing emotional response and overall user satisfaction. Meaningful engagement with art involves a fusion of cognitive and affective elements that goes beyond passive observation and requires active involvement, interaction, and participation on the viewer's part. Interactive installation art, which responds to the actions and inputs of the viewer, creates a dynamic and reciprocal relationship between the artwork and the participant. This interactivity enables individuals to engage with the artwork on their own terms, influencing and shaping the experience (Edmonds, 2011). In interactive installation art, artificial intelligence is increasingly employed to create immersive experiences by analyzing participants' biometric data, such as heart rate and skin conductance.

Artificial intelligence is often combined with engagement measures to capture how people participate. AI allows installations to respond dynamically to biometric data, and analyzing participants' biometric data can improve engagement, fostering stronger emotional and sensory connections and enriching the interactive experience. For example, Röggla et al. (2017) used galvanic skin response (GSR) sensors to measure the engagement of the audience and the presenter. The gathered data were visualized in real time through a projection on a screen and a physical electro-mechanical installation that changed the height of helium-filled balloons depending on the atmosphere in the auditorium, a tangible way of making the invisible visible. AI algorithms can also adjust the experience in real time. In [Self.] (Tidemann, 2015), AI algorithms analyze incoming sounds, identify their patterns and characteristics, and classify them, distinguishing musical instruments, voices, and other auditory elements, which are linked to corresponding faces or visual representations; the visuals are then generated and adjusted dynamically from the live audio input. "Learning to See" (Akten et al., 2019) uses machine learning algorithms to analyze live video footage and create abstract image representations in real time. In these ways, AI algorithms enhance participant engagement and foster emotional resonance in interactive installation art. Personalized content and feedback can create a sense of ownership of the experience, enhancing emotional response and user engagement (Raptis et al., 2021a). Research thus shows that engagement is vital for eliciting emotional responses and creating meaningful connections between installations and viewers.
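The balloon installation described by Röggla et al. (2017) turns a physiological measure into a physical output. A minimal sketch of such a mapping is shown below. It assumes raw skin conductance readings in microsiemens, a per-participant resting baseline, and a balloon whose height can be commanded between two limits; the normalization scheme, the smoothing factor, the height limits, and the names `engagement_level` and `BalloonController` are illustrative assumptions, not the method reported by the original authors.

```python
def engagement_level(conductance_us: list[float], baseline_us: float,
                     max_rise_us: float = 5.0) -> float:
    """Map the mean rise in skin conductance above baseline to 0-1 (assumed scale)."""
    mean = sum(conductance_us) / len(conductance_us)
    rise = max(0.0, mean - baseline_us)     # ignore drops below resting level
    return min(1.0, rise / max_rise_us)     # saturate at an assumed maximum rise


class BalloonController:
    """Smooths audience engagement over time and converts it to a target height."""

    def __init__(self, min_height_m: float = 0.5, max_height_m: float = 3.0,
                 alpha: float = 0.2) -> None:
        self.min_height_m = min_height_m
        self.max_height_m = max_height_m
        self.alpha = alpha  # weight of the newest reading in the moving average
        self.smoothed = 0.0

    def update(self, audience_levels: list[float]) -> float:
        # Average across audience members, then apply an exponential moving
        # average so the balloon drifts rather than jumping with every reading.
        current = sum(audience_levels) / len(audience_levels)
        self.smoothed = self.alpha * current + (1 - self.alpha) * self.smoothed
        span = self.max_height_m - self.min_height_m
        return self.min_height_m + self.smoothed * span
```

The smoothing step reflects a design trade-off: a physical output such as a balloon cannot follow every fluctuation in a noisy sensor, so a slower, averaged signal better conveys the overall "atmosphere" in the room.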

2.3. Research gap

With the rapid advancement of science and technology, interest in multi-sensory experience design in human-computer interaction has grown. The literature review reveals several research gaps that must be addressed to understand emotional responses in interactive installations comprehensively. Existing research has focused primarily on individual sensory modalities or specific stimuli, so the combined effects of different sensory stimuli remain poorly understood. Furthermore, although the importance of multi-dimensional interaction in interactive installations is recognized, little research has explored how specific combinations and variations of these interactions relate to the emotional responses they elicit. Understanding how these interactive elements work together to evoke specific emotional experiences is an essential direction for future investigation.

Additionally, previous studies have touched upon participant engagement in interactive environments. However, there is a need for more comprehensive research that specifically examines the connection between participant engagement and emotional responses. It is crucial to investigate the mechanisms through which participant engagement, including factors such as immersion, interactivity, and meaningful engagement with the stimuli and the experimental environment, influences emotional experiences.

In summary, the literature review emphasizes the significance of sensory stimulation, multi-dimensional interaction, and participant engagement for measuring emotions in interactive installation art. By addressing these research gaps with artificial intelligence techniques, we can better understand these dimensions and develop more impactful, emotionally resonant artworks.

3. Framework and hypothesis

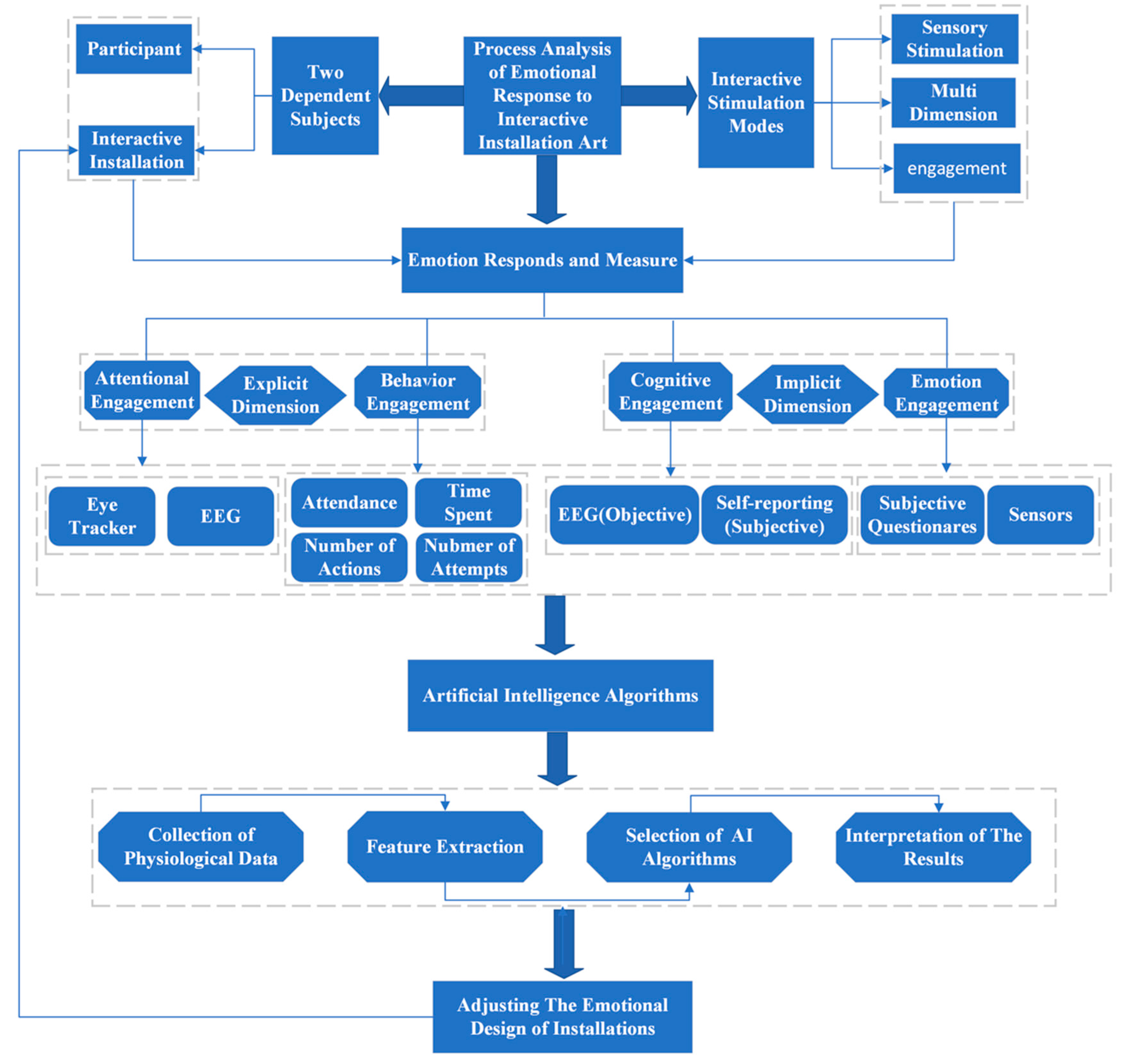

This research aims to investigate the emotional responses evoked during interactions with interactive installations. It focuses on capturing explicit and implicit data from the interaction between participants and the artwork, using artificial intelligence algorithms for emotion measurement. We propose a theoretical framework to analyze the emotional response process in interactive installations, as shown in Figure 2. The framework measures the emotional responses participants experience across the different stimulation modes the installations offer. This process involves a complex interplay between attention, behavior, cognition, and emotion (Ma, 2018). Attention and behavior are treated as explicit data, whereas cognition and emotion are categorized as implicit data. In interactive settings, emotional responses can be fully understood only by considering explicit and implicit data together. Each dimension (attention, behavior, cognition, and emotion) can be measured through physiological signals, including brain activity, heart rate variability, skin conductance, facial expressions, and eye tracking. AI algorithms can then analyze and interpret these signals, providing insight into participants' emotional experiences during their interactions with the installation.
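Heart rate variability, one of the signals listed above, is commonly summarized with time-domain indices such as RMSSD, the root mean square of successive differences between adjacent inter-beat (RR) intervals. The function below is a minimal sketch of that calculation. It assumes RR intervals in milliseconds that have already been extracted from an ECG or pulse sensor and cleaned of artifacts and ectopic beats, which a real pipeline would have to handle separately; the function name `rmssd` is our own.

```python
import math


def rmssd(rr_ms: list[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each pair of consecutive inter-beat intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))
```

For example, RR intervals of 800, 810, 790, and 805 ms give successive differences of 10, -20, and 15 ms, so RMSSD is the square root of (100 + 400 + 225) / 3, roughly 15.6 ms. RMSSD is usually read as an index of parasympathetic activity; mapping it to a specific emotion requires combining it with the other explicit and implicit data described in the framework.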

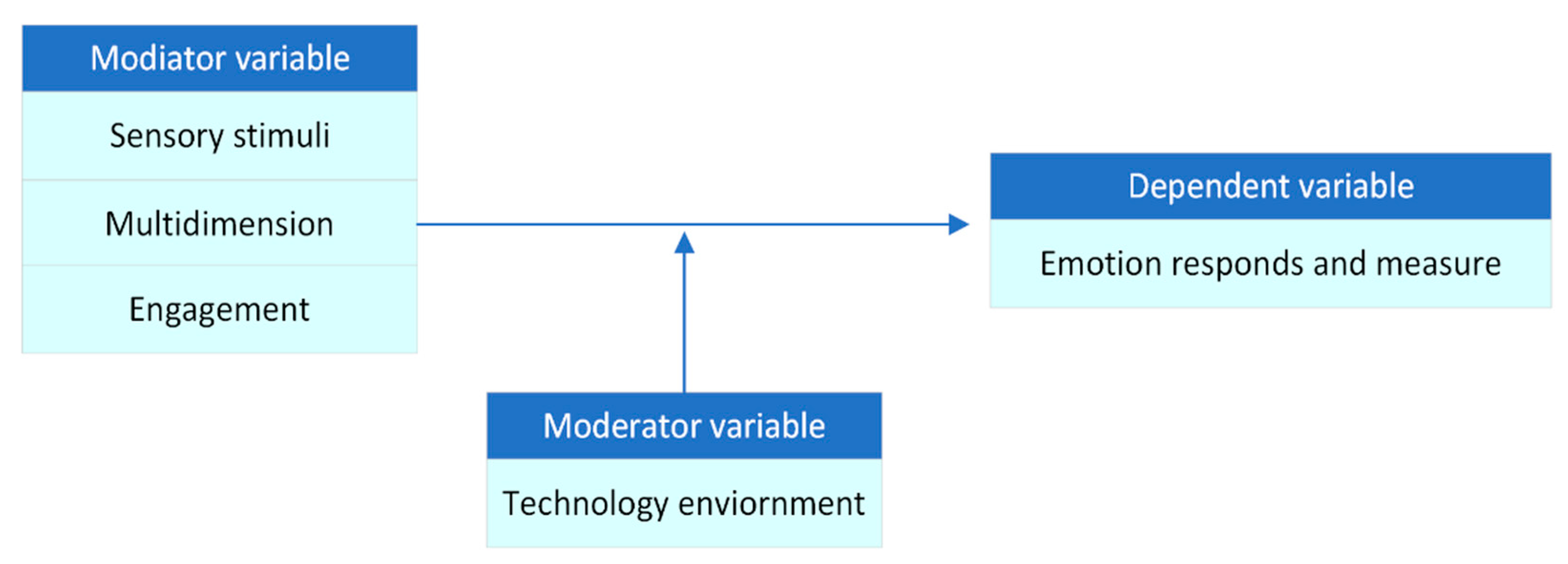

Based on the analysis of the interaction process in Figure 2, we propose a conceptual framework to better explore the factors that affect emotional responses, as shown in Figure 3. The independent variables (sensory stimulation, multi-dimensional interaction, and engagement) are designed to elicit emotional reactions. Emotional responses, the dependent variable, are measured using methods such as self-report measures, physiological signals (e.g., heart rate, skin conductance), or facial expression analysis. Contextual factors such as virtual reality (VR), augmented reality (AR), or mixed reality (MR) act as moderator variables that influence the strength or direction of the relationship between the independent variables and the dependent variable.
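One conventional way to formalize a moderated relationship of this kind is a regression model with an interaction term. The equations below are an illustrative sketch, not a model specified in this study: Y denotes an emotional-response score, X one of the independent variables (for example, a sensory-stimulation condition), M a contextual moderator (for example, a VR versus non-VR setting coded 0/1), and ε the error term.

$$
Y = \beta_0 + \beta_1 X + \beta_2 M + \beta_3 (X \times M) + \varepsilon
$$

$$
\frac{\partial Y}{\partial X} = \beta_1 + \beta_3 M
$$

The second line shows why the interaction coefficient captures moderation: the effect of X on the emotional response equals β1 when M = 0 and β1 + β3 when M = 1, so it changes with the context whenever β3 is nonzero.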

Our study aims to explore the emotional responses triggered by sensory stimuli and multi-dimensional interactions during meaningful engagement. We will study various interactive stimulation modes to capture participants' emotional responses, employing artificial intelligence algorithms to measure emotion. Accordingly, the research hypotheses are as follows:

Hypothesis 1: Different types of sensory stimuli will evoke specific emotional states or intensities.

Hypothesis 2: Multi-dimensional interactions will affect emotional responses.

Hypothesis 3: Participant engagement in the interactive environment will influence emotional responses.

Hypothesis 4: Artificial intelligence algorithms can effectively analyze physiological signals (EEG, facial expressions) to quantify emotional responses.
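Hypothesis 4 concerns whether AI algorithms can turn physiological signals into measures of emotion. Such pipelines often start from engineered features that a classifier then consumes. One widely used EEG feature in affective computing is frontal alpha asymmetry: the natural log of alpha-band power at a right frontal electrode (e.g., F4) minus that at the homologous left electrode (e.g., F3), where higher values are commonly interpreted as relatively greater left frontal activity and approach-related affect. The NumPy sketch below computes it from a simple periodogram. The 8 to 13 Hz band, the channel pairing, and the function names are assumptions for illustration; a practical system would add filtering, artifact rejection, windowed spectral estimates, and a trained model on top.

```python
import numpy as np


def band_power(signal: np.ndarray, fs: float, low: float, high: float) -> float:
    """Mean periodogram power of `signal` within [low, high] Hz."""
    signal = signal - signal.mean()  # remove DC offset
    spectrum = np.abs(np.fft.rfft(signal)) ** 2 / len(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    mask = (freqs >= low) & (freqs <= high)
    return float(spectrum[mask].mean())


def frontal_alpha_asymmetry(left: np.ndarray, right: np.ndarray, fs: float,
                            band: tuple[float, float] = (8.0, 13.0)) -> float:
    """ln(right alpha power) - ln(left alpha power), e.g. F4 versus F3."""
    return float(np.log(band_power(right, fs, *band))
                 - np.log(band_power(left, fs, *band)))
```

Because alpha power is inversely related to cortical activity, a negative value (more alpha on the left) indicates relatively greater right frontal activity under this common interpretation.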

To test these hypotheses and achieve the research objectives, we will employ the ASSURE model combined with an experimental design (Richey & Klein, 2007). The ASSURE model is a widely recognized instructional design model whose name stands for Analyze learners, State objectives, Select media and materials, Utilize media and materials, Require learner participation, and Evaluate and revise. In this research context, the ASSURE model provides a structured approach to ensure effective implementation and evaluation of the experimental design.

Figure 2. Process Analysis of Emotional Response to Interactive Installation Art.


Figure 3. The Conceptual Framework for Measuring Emotional Responses in Interactive Installation Art.


4. Conclusion

This paper has comprehensively examined emotional response and measurement in the context of interactive installation art, mainly focusing on integrating artificial intelligence. The review highlighted three key aspects: sensory stimulation, multi-dimensional interactions, and engagement, which are identified as significant contributors to eliciting profound emotional responses in interactive installation art. Additionally, research gaps were identified within these aspects, emphasizing the need for further investigation.

We propose the Process Analysis of Emotional Response to Interactive Installation Art to address these research gaps. Through this analysis, we develop a conceptual framework that explores the various variables involved in eliciting emotional responses. By understanding the underlying processes, we can gain deeper insights into the mechanisms that drive emotional experiences in interactive installation art. Based on the conceptual framework, we formulated hypotheses to guide our research efforts. These hypotheses predict the relationships between sensory stimulation, multi-dimensional interactions, engagement, and emotional responses. By testing these hypotheses, we aim to bridge the identified research gaps. To effectively test our hypotheses and achieve our research objectives, we have chosen the ASSURE model combined with experimental design as our research methodology. The ASSURE model provides a structured approach to instructional design, which is well-suited for our research context. By following this model, we can ensure a systematic and comprehensive implementation of our experimental study, ultimately facilitating the exploration of the emotional impact of sensory stimuli, multi-dimensional interactions, and engagement in interactive installation art.

In conclusion, this paper presents a roadmap for future research in emotional response and measurement in interactive installation art under artificial intelligence. By integrating theoretical frameworks, hypotheses, and the ASSURE model, we aim to advance our understanding of the emotional experiences evoked by these interactive artworks and contribute to the broader field of human-computer interaction.

References

- Ablart, D., Vi, C. T., Gatti, E., & Obrist, M. (2017). The how and why behind a multi-sensory art display. Interactions, 24(6), 38–43. [CrossRef]

- Akten, M., Fiebrink, R., & Grierson, M. (2019). Learning to see: You are what you see. ACM SIGGRAPH 2019 Art Gallery, SIGGRAPH 2019, 1–6. [CrossRef]

- Anadol, R. (2022). Space in the Mind of a Machine: Immersive Narratives. Architectural Design, 92(3), 28–37. [CrossRef]

- Brianza, G., Tajadura-Jiménez, A., Maggioni, E., Pittera, D., Bianchi-Berthouze, N., & Obrist, M. (2019). As Light as Your Scent: Effects of Smell and Sound on Body Image Perception. Lecture Notes in Computer Science, 11749, 179–202. [CrossRef]

- Brooks, J., Lopes, P., Amores, J., Maggioni, E., Matsukura, H., Obrist, M., Lalintha Peiris, R., & Ranasinghe, N. (2021). Smell, Taste, and Temperature Interfaces. Conference on Human Factors in Computing Systems - Proceedings. [CrossRef]

- Cao, Y., Han, Z., Kong, R., Zhang, C., & Xie, Q. (2021). Technical Composition and Creation of Interactive Installation Art Works under the Background of Artificial Intelligence. Mathematical Problems in Engineering, 2021. [CrossRef]

- Capece, S., & Chivăran, C. (2020). The sensorial dimension of the contemporary museum between design and emerging technologies*. IOP Conference Series: Materials Science and Engineering, 949(1). [CrossRef]

- Cappelen, B., & Andersson, A. P. (2018). Cultural Artefacts with Virtual Capabilities Enhance Self-Expression Possibilities for Children with Special Needs. In Transforming our World Through Design, Diversity, and Education (pp. 634–642). IOS Press.

- De Bérigny, C., Gough, P., Faleh, M., et al. (2014). Tangible User Interface Design for Climate Change Education in Interactive Installation Art. Leonardo (MIT Press - Journals), 47(5), 451–456. [CrossRef]

- Duarte, E. F., & Baranauskas, M. C. C. (2020). An Experience with Deep Time Interactive Installations within a Museum Scenario.

- Edmonds, E. (2011). Art, interaction, and engagement. Proceedings of the International Conference on Information Visualisation, pp. 451–456. [CrossRef]

- Fortin, C., & Hennessy, K. (2015). Designing Interfaces to Experience Interactive Installations Together. International Symposium on Electronic Art, August 1–8.

- Gu, S., Lu, Y., Kong, Y., Huang, J., & Xu, W. (2022). Diversifying Emotional Experience by Layered Interfaces in Affective Interactive Installations. In Proceedings of the 2021 DigitalFUTURES: The 3rd International Conference on Computational Design and Robotic Fabrication (CDRF 2021) 3 (pp. 221–230). Springer Singapore.

- Her, J. J. (2014). An analytical framework for facilitating interactivity between participants and interactive artwork: Case studies in MRT stations. Digital Creativity, 25(2), 113–125. [CrossRef]

- Lim, Y., Donaldson, J., Jung, H., Kunz, B., Royer, D., Ramalingam, S., Thirumaran, S., & Stolterman, E. (2008). Emotional Experience and Interaction Design.

- Liu, J. (2021). Science popularization-oriented art design of interactive installation based on the protection of endangered marine life-the blue whales. Journal of Physics Conference Series, 1827(1), 012116–012118. [CrossRef]

- Ma, X. (2018). Data-Driven Approach to Human-Engaged Computing Definition of Engagement.

- Manovich, L. (2019). Defining AI arts: Three proposals. … Catalog. Saint-Petersburg: Hermitage Museum, June 2019, pp. 1–9. https://www.academia.edu/download/60633037/Manovich.Defining_AI_arts.201920190918-80396-1vdznon.pdf.

- Obrist, M., Van Brakel, M., Duerinck, F., & Boyle, G. (2017). Multi-sensory experiences and spaces. Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces, ISS 2017, pp. 469–472. [CrossRef]

- Patel, S. V., Tchakerian, R., Morais, R. L., Zhang, J., & Cropper, S. (2020). The Emoting City Designing feeling and artificial empathy in mediated environments. In L. C. Werner & D. Koering (Eds.), ECAADE 2020: ANTHROPOLOGIC - ARCHITECTURE AND FABRICATION IN THE COGNITIVE AGE, VOL 2 (pp. 261–270).

- Pittera, D., Gatti, E., & Obrist, M. (2019). I’m sensing in the rain: Spatial incongruity in visual-tactile mid-air stimulation can elicit ownership in VR users. Conference on Human Factors in Computing Systems - Proceedings, 1–15. [CrossRef]

- Rajapakse, R. P. C. J., & Tokuyama, Y. (2021). Thoughtmix: Interactive watercolor generation and mixing based on EEG data. Proceedings of International Conference on Artificial Life and Robotics, 2021, 728–731. https://www.scopus.com/inward/record.uri?eid=2-s2.0-85108839509&partnerID=40&md5=05392d3ad25a40e51753f7bb8fa37cde.

- Rajcic, N., & McCormack, J. (2020). Mirror ritual: Human-machine co-construction of emotion. TEI 2020 - Proceedings of the 14th International Conference on Tangible, Embedded, and Embodied Interaction, 697–702. [CrossRef]

- Random International. (2013). Rain room. Retrieved February 2022, from https://www.moma.org/calendar/exhibitions/1352.

- Raptis, G. E., Kavvetsos, G., & Katsini, C. (2021). Mumia: Multimodal interactions to better understand art contexts. Applied Sciences (Switzerland), 11(6). [CrossRef]

- Richey, R. C., & Klein, J. D. (2007). Design and development research. Lawrence Erlbaum Associates.

- Röggla, T., Wang, C., Romero, L. P., et al. (2017). Tangible Air: An Interactive Installation for Visualising Audience Engagement.

- Ronchi, G., & Benghi, C. (2014). Interactive light and sound installation using artificial intelligence. International Journal of Arts and Technology, 7(4), 377–379. [CrossRef]

- Saidi, H., Serrano, M., Irani, P., Hurter, C., & Dubois, E. (2019). On-body tangible interaction: Using the body to support tangible manipulations for immersive environments. In Human-Computer Interaction–INTERACT 2019: 17th IFIP TC 13 International Conference, Paphos, Cyprus, September 2–6, 2019, Proceedings, Part IV 17 (pp. 471–492). Springer International Publishing.

- Savaş, E. B., Verwijmeren, T., & van Lier, R. (2021). Aesthetic experience and creativity in interactive art. Art and Perception, 9(2), 167–198. [CrossRef]

- Schreuder, E., van Erp, J., Toet, A., & Kallen, V. L. (2016). Emotional Responses to Multi-sensory Environmental Stimuli: A Conceptual Framework and Literature Review. SAGE Open, 6(1). [CrossRef]

- Szubielska, M., Imbir, K., & Szymańska, A. (2021). The influence of the physical context and knowledge of artworks on the aesthetic experience of interactive installations. Current Psychology, 40(8), 3702–3715. [CrossRef]

- Tidemann, A. (2015). [Self.]: An Interactive Art Installation that Embodies Artificial Intelligence and Creativity. 181–184.

- Turk, M. (2014). Multimodal interaction: A review. Pattern Recognition Letters, 36(1), 189–195. [CrossRef]

- Velasco, C., & Obrist, M. (2021). Multi-sensory Experiences: A Primer. Frontiers in Computer Science, 3(March), 1–6. [CrossRef]

- Vi, C. T., Ablart, D., Gatti, E., Velasco, C., & Obrist, M. (2017). Not just seeing, but also feeling art: Mid-air haptic experiences integrated into a multi-sensory art exhibition. International Journal of Human-Computer Studies, 108(May 2016), 1–14. [CrossRef]

- Xu, S., & Wang, Z. (2021). DIFFUSION: Emotional Visualization Based on Biofeedback Control by EEG Feeling, listening, and touching real things through human brainwave activity. ARTNODES, 28. [CrossRef]

- Zald, D. H. (2003). The human amygdala and the emotional evaluation of sensory stimuli. Brain Research Reviews, 41(1), 88–123. [CrossRef]


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).