1. Introduction

In the transportation sector, the greenhouse effect and the shortage of fossil fuels have been major global concerns over the past few decades [1]. Replacing traditional fuels with electricity, known as electrification, is considered an effective approach to mitigating energy problems by reducing the consumption of natural resources [2,3,4]. In addition, this initiative offers great potential for improving energy efficiency [5,6]. The ongoing electrification trend in the transportation sector involves the gradual electrification of planes, cars, trains, and ships, transitioning onboard fossil fuel-powered systems to electrical power systems [7,8].

In this transition, a conventional mechanical structure within the transportation vehicle is substituted with an electrical structure. The onboard microgrid (OBMG) is a crucial part of the power system in electrified transportation because it directly determines the onboard power distribution and the vehicle operational performance. [

9,

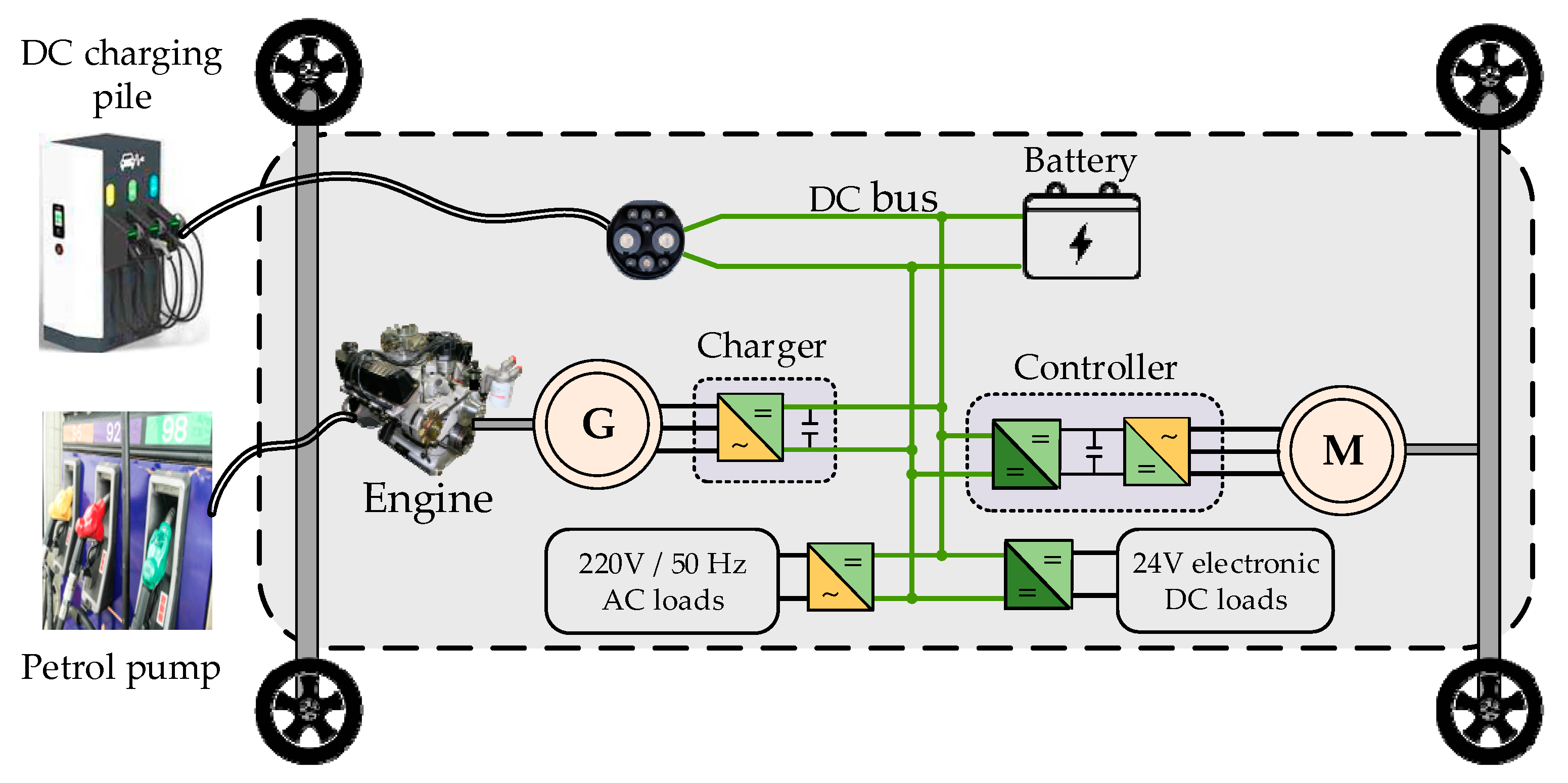

10]. A typical OBMG on a hybrid-electric vehicle is shown in

Figure 1. The OBMG usually comprises various electric power sources and power buses feeding different loads, where the allocation of electrical energy and fuel usage can be a big challenge [

3]. Therefore, energy management strategies (EMS) play a crucial role in achieving optimal energy utilization in OBMGs [

9,

11]. For example, the energy efficiency can be enhanced by capturing excess energy from regenerative braking, and then storing energy into the battery during braking or inertial motion [

12,

13].

Conventional EMS can be classified into rule-based and optimization-based approaches [14,15]. Rule-based EMS, known for its flexibility and adjustability, is widely used to control OBMGs. However, this strategy requires a complex set of rules to be developed in advance, which is difficult when all potential driving scenarios must be considered, and it may also yield a suboptimal EMS [2,16]. Optimization-based EMS aims to improve energy utilization efficiency and reduce emissions by dynamically adjusting energy conversion and distribution [17,18]. However, this approach typically demands substantial computational resources and time, so real-time feasibility is a challenge [19,20]. In summary, traditional rule-based and optimization-based EMS remain constrained by limited prior knowledge or by substantial computational demands [21]. This motivates researchers to explore advanced control techniques for OBMGs in transportation applications.

With the development of information technology, emerging technologies such as artificial intelligence (AI) and digital twin (DT) technology are being extensively integrated into EMS, improving the performance and efficiency of OBMGs [22,23]. In contrast to traditional strategies, combining AI and DT enables the system to make decisions using real-time data, to leverage prediction and optimization capabilities, and to benefit from intelligent decision-making, control, adaptive learning, and multi-objective optimization [23,24,25]. This approach has been shown to outperform traditional EMS in terms of energy efficiency, cost, and decision support for drivers and energy managers, making it a promising path for the future [22,26].

The transportation sector is undergoing a digital transformation driven by the rapid development of information technology. This shift lays the foundation for the recent development of DT technology and the enhancement of AI techniques in OBMGs. In this context, AI mainly serves as the optimization algorithm within the energy management system of an OBMG [27], while DT acts as a platform for data acquisition [22,28]. Although both technologies originated in the last century, their recent rapid advancement is attributable to remarkable progress in computing technology and data science. However, AI and DT in energy management are often regarded as black boxes whose decision-making process and underlying reasoning are hard to explain. Therefore, there is an urgent need to review these emerging techniques in the operation of transportation OBMGs.

While a few papers have reported EMS using AI or DT technologies, limited literature provides a comprehensive summary of these studies on state-of-the-art information technologies. For example, Reference [29] classified practical EMS and developed a simplified multifunctional EMS by further simplifying this classification. The simplified EMS provides a valuable framework for future energy management that deals with complex information. The application of AI in energy management was discussed in [23,25,30]. Among these studies, [30] proposed an intelligent energy management system and compared different AI techniques for energy management; Reference [23] compared traditional techniques with AI-based techniques and summarized the application of machine learning (ML) techniques to energy management systems; while Reference [25] focused on the application of deep reinforcement learning (DRL), a branch of ML, to energy management and proposed a learning-based classification of EMS. Reference [31] also explored the use of AI in energy management; however, it focuses predominantly on topics such as electric vehicle charging in the transportation grid and provides limited coverage of energy management within OBMGs. Additionally, Reference [22] provided a comprehensive examination of the application of DT in smart electric vehicles, including a brief discussion of each vehicle subsystem, but without an in-depth discussion of energy management applications.

Differing from the above review literature, this review analyses the integration of both AI and DT in the EMS of an OBMG and suggests future advancements that use DT as a data platform and AI as an algorithm to optimize the EMS. To summarise, the main contributions of this review are as follows:

Provide an overview of recent EMS research, focusing on the applications of two emerging information technologies, AI and DT;

In the AI domain, classify reinforcement learning-based EMS into model-based and model-free approaches according to whether a model is used;

Assess the current state of DT-based EMS research and elucidate the application scenarios of DT in the intelligent transportation environment;

Outline the future trends of AI and DT technology in OBMG energy management, exploring current challenges and future directions.

The rest of the paper is organized as follows. Section 2 describes the two emerging technologies, AI and DT, and Section 3 describes energy management systems for OBMGs. The applications of AI and DT in energy management are then discussed in Sections 4 and 5, respectively. Finally, the future trends of AI and DT in OBMG energy management are discussed, and the last section draws conclusions.

2. Emerging information technologies

This section introduces the fundamentals of two typical emerging techniques: AI and DT. On this basis, the applications of AI and DT in vehicle operation and control are presented separately later in this paper.

2.1. AI technology

AI refers to the capacity of machines to exhibit or mimic intelligence, as distinct from the natural intelligence demonstrated by humans or other animals. Although AI can be implemented using preset rules, specific AI tasks are most often performed by ML algorithms through a model training process. ML algorithms can efficiently learn rules and relations from training data; during training, models improve automatically by incorporating data and accumulating experience. ML is the most prominent form of AI used in electrical power systems. Other commonly used AI methods are expert systems, fuzzy logic, and meta-heuristic methods [32]. ML algorithms are divided into three categories: supervised learning (SL), unsupervised learning (UL), and reinforcement learning (RL) [33].

2.1.1. Three groups in ML

SL algorithms learn to produce outputs (also referred to as targets) that match those in the training datasets. These targets can be either discrete values or continuous numbers. When the outputs are continuous, the learning task is called regression; when they are class labels or integer values, it is called classification. Unlike regression, classification requires targets defined as categories or classes tailored to the particular problem, in the simplest case binary 0/1 outputs. SL algorithms have been reported in both online and offline use. Statistics described in [27] show that SL is the most popular of the three ML categories: across 444 journal articles published from 1990 to 2020 on the use of ML in power electronics-related sectors, SL accounted for roughly 91% of all reported uses.

Unlike SL, UL has no predefined output in the training data. The task of UL is to recognize patterns and extract information from a dataset without predefined outputs or labels. Such methods also make it possible to reduce the dimensionality of a dataset without losing relevant information [34], so UL can capture the essential information of a dataset in a compact representation with only a few features. UL problems are further characterized as clustering and association problems. Clustering focuses on identifying patterns or structures in an uncategorized or unlabeled dataset; grouping flowers into species based on their measurements is a typical example of a clustering problem. Association rules, in turn, uncover relationships between elements in large databases.
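As a concrete illustration of clustering, the minimal sketch below groups unlabeled load-power samples into operating modes with Lloyd's k-means algorithm. The sample values, the choice of k = 2, and the function name `kmeans_1d` are hypothetical assumptions for illustration only, not data or methods from the cited studies.

```python
def kmeans_1d(samples, k, iters=100):
    """Cluster 1-D samples into k groups with Lloyd's k-means algorithm."""
    data = sorted(samples)
    # Spread the initial centroids evenly across the sorted data.
    step = (len(data) - 1) / max(k - 1, 1)
    centroids = [data[round(i * step)] for i in range(k)]
    labels = [0] * len(samples)
    for _ in range(iters):
        # Assignment step: attach each sample to its nearest centroid.
        labels = [min(range(k), key=lambda j: abs(x - centroids[j])) for x in samples]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [x for x, lab in zip(samples, labels) if lab == j]
            new_centroids.append(sum(members) / len(members) if members else centroids[j])
        if new_centroids == centroids:  # converged: assignments no longer change
            break
        centroids = new_centroids
    return centroids, labels


# Hypothetical load-power samples (kW) mixing light-load and heavy-load operation.
load_kw = [2.1, 1.8, 2.5, 2.0, 14.9, 15.3, 16.1, 2.2, 15.7]
centroids, labels = kmeans_1d(load_kw, k=2)
print(centroids, labels)
```

No labels are supplied: the two operating modes emerge purely from the structure of the data, which is what distinguishes this from the SL setting above.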

The third category of ML, RL, learns how intelligent agents should perform tasks by interacting with their environment. Throughout learning, these agents take actions in their environment, which provides a reward at each step or trial, and update their policy so as to maximize the overall reward [35]. Initially, the agents do not know how to operate in the environment; therefore, they must try various actions to obtain the corresponding rewards. The accumulated rewards allow them to formulate a suitable policy (i.e., how to act in a particular state) within the environment. Unlike SL, RL is a form of online learning that does not require labeled input/output data as a starting point.

2.1.2. RL

Since RL has recently attracted significant attention, its concept, preliminaries, and classification are introduced in more detail below.

RL is a technique for learning a controller or decision-maker by interacting with an initially unknown environment.

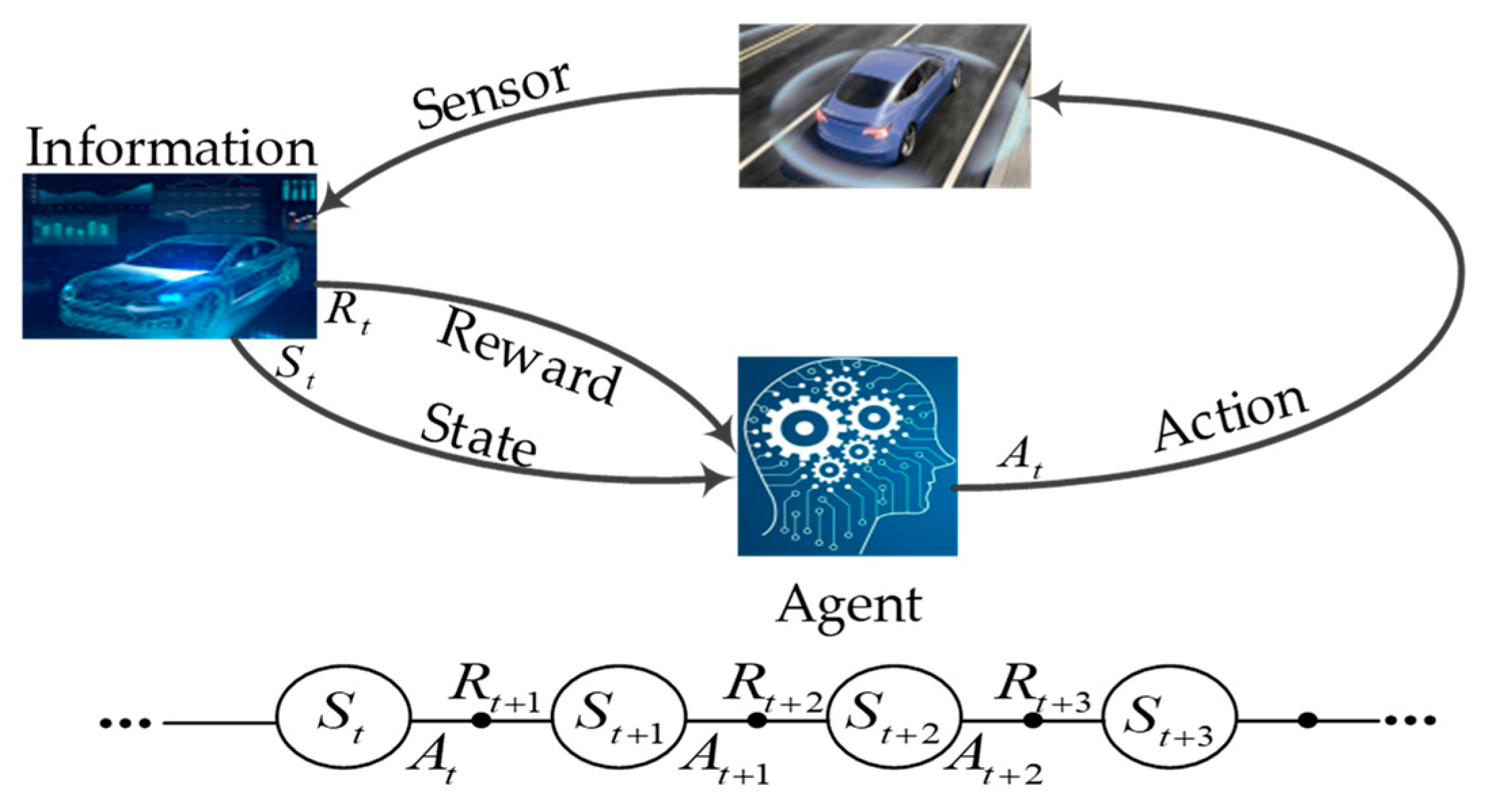

Figure 2 illustrates an RL framework used in the OBMG of a vehicle. The four fundamental elements of the RL system are strategy, reward, value, and environment. The strategy (policy) defines the behavior of the agent in a given state; specifically, it is a mapping from states to actions. The reward signal defines the immediate goal of the RL problem: at each step, the environment sends a scalar reward to the agent. The value captures the long-term objective of RL; it measures the long-term return, typically as cumulative reward. The environment consists of information both internal and external to the learning object, which usually has to be measured by sensors and passed to the agent.

The trained agent automatically selects and executes actions based on the current environmental state (or observation). These actions affect how the environment is updated and the reward feedback in subsequent steps. As shown in Figure 2, $S$ represents the set of states, and $A_i$ and $R_i$ represent the agent's action set and reward set, respectively. In each state $S_i$, the agent executes a new action $A_{t+1}$ according to the current reward $R_{t+1}$ and the previous action $A_t$, and then receives a new reward $R_{t+2}$. Training is an iterative process that maximizes the reward and yields a mapping from states to actions (namely, a policy) for the agents in the environment.
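The interaction loop described above can be sketched in a few lines. The toy environment below, in which the state is a battery SoC in percent and each action is an SoC step, together with its reward and both policies, is a hypothetical assumption for illustration; it only shows how states, actions, and rewards alternate and how a trajectory and its cumulative reward are collected.

```python
import random


class ToySocEnv:
    """Hypothetical environment: the state is battery SoC (%), an action is an SoC step."""

    def __init__(self, soc=50, target=60, horizon=10):
        self.soc, self.target, self.horizon, self.t = soc, target, horizon, 0

    def step(self, action):
        """Apply an action and return (next_state, reward, done)."""
        self.soc = min(100, max(0, self.soc + action))  # keep SoC in its physical range
        self.t += 1
        reward = -abs(self.soc - self.target)  # penalize distance from the target SoC
        done = self.t >= self.horizon
        return self.soc, reward, done


def run_episode(env, policy):
    """Roll out one episode and return the trajectory as (state, action, reward) tuples."""
    trajectory, state, done = [], env.soc, False
    while not done:
        action = policy(state)
        next_state, reward, done = env.step(action)
        trajectory.append((state, action, reward))
        state = next_state
    return trajectory


rng = random.Random(0)


def random_policy(state):
    """Exploring agent: ignores the state and picks an action at random."""
    return rng.choice([-5, 0, 5])


def target_seeking_policy(state):
    """Hand-written policy that steps toward the 60 % target and then holds."""
    if state < 60:
        return 5
    if state > 60:
        return -5
    return 0


random_traj = run_episode(ToySocEnv(), random_policy)
random_return = sum(r for _, _, r in random_traj)
seeking_return = sum(r for _, _, r in run_episode(ToySocEnv(), target_seeking_policy))
print(random_return, seeking_return)
```

A learning agent would use such rollouts to move its policy from the random one toward one that collects a higher cumulative reward.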

The mathematical basis of RL is the Markov decision process (MDP). An MDP is usually composed of a state space ($S$), an action space ($A$), a state transition function ($P$), a reward function ($R$), and a discount factor ($\gamma$). Using an MDP involves the following requirements/assumptions: the state can be observed; trials can be repeated multiple times; and the next state of the system depends only on the current state and action, not on earlier states. As shown in Figure 2, the agent observes the environmental state and takes an action that changes the environment. The environment then returns a reward $r_t$ and a new state $s_{t+1}$ to the agent. This process continues until the end of the episode, producing a trajectory

$$\tau = (s_0, a_0, r_0, s_1, a_1, r_1, \ldots, s_{T-1}, a_{T-1}, r_{T-1}, s_T)$$

where $T$ is the time horizon of the episode. The objective of RL is to optimize a policy that maximizes the expected return over all possible trajectories. To this end, the value of the current state must first be defined; formula (2-2) gives the state value:

$$v_\pi(s) = \sum_{a \in A} \pi(a \mid s) \left( R_s^a + \gamma \sum_{s' \in S} P_{ss'}^a \, v_\pi(s') \right) \tag{2-2}$$

Based on the Bellman equation [36], the current value function can be decomposed into two parts: the immediate reward and the discounted value of the next state. In the above trajectory, the action space $A$ and the state space $S$ are both finite sets, so the expectations can be calculated as sums. In the formula, $\pi(a \mid s)$ represents the probability distribution over actions $a$ in a given state $s$; $R_s^a$ represents the immediate reward; $\gamma$ represents the discount factor; $P_{ss'}^a$ represents the probability of transitioning to the next state $s'$; and $v_\pi(s')$ represents the value function of the next state. The transition probability is defined as

$$P_{ss'}^a = \Pr\left[ S_{t+1} = s' \mid S_t = s, A_t = a \right]$$

This is the probability of reaching state $S_{t+1}$ when the current state is $S_t$ and action $A_t$ is executed. The expected future return obtainable from a state then gives the state value function:

$$v_\pi(s) = \mathbb{E}_\pi\left[ G_t \mid S_t = s \right], \qquad G_t = \sum_{k=0}^{T-t-1} \gamma^k \, r_{t+k}$$

This formula gives the value of state $s$ at time $t$. The value function measures how good a certain state or state-action pair is: it is the expected cumulative reward accumulated from the current state into the future.
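Because the state value is the expected discounted return from a state, it can be estimated by sampling: simulate many episodes from the state, compute each return $G_t = r_t + \gamma G_{t+1}$ backwards, and average. The sketch below does this for a hypothetical chain in which every step costs a reward of -1 and the episode ends with probability 0.5 after each step; the chain and its parameters are assumptions chosen so that the exact value, $v = -1/(1 - 0.5\gamma)$, is known for comparison.

```python
import random


def discounted_return(rewards, gamma):
    """G_t = sum_k gamma^k * r_{t+k} for a finite reward sequence starting at t."""
    g = 0.0
    # Accumulate backwards using G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def sample_rewards(rng, p_end=0.5, step_reward=-1.0):
    """Hypothetical episode: each step costs step_reward; it ends with probability p_end."""
    rewards = [step_reward]
    while rng.random() >= p_end:
        rewards.append(step_reward)
    return rewards


def mc_state_value(gamma, n_episodes=20000, seed=0):
    """Monte Carlo estimate of v(s) = E[G_t | S_t = s] by averaging sampled returns."""
    rng = random.Random(seed)
    total = sum(discounted_return(sample_rewards(rng), gamma) for _ in range(n_episodes))
    return total / n_episodes


gamma = 0.9
estimate = mc_state_value(gamma)
exact = -1.0 / (1.0 - 0.5 * gamma)  # closed form for this chain: v = -1 + 0.5 * gamma * v
print(round(estimate, 3), round(exact, 3))
```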

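RL training seeks the best strategy, which is found through the optimal value function:

$$v_*(s) = \max_{\pi} v_\pi(s), \qquad \pi_*(s) = \arg\max_{a \in A} \left( R_s^a + \gamma \sum_{s' \in S} P_{ss'}^a \, v_*(s') \right)$$

When the maximum of the objective function converges, the optimal strategy has been trained.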
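When the transition probabilities and rewards are known, the optimal value function can be computed directly by value iteration: repeatedly replace each state value with the best one-step lookahead until the values converge, then read off the greedy policy. The sketch below applies this to a hypothetical two-state MDP (SoC "low"/"high", actions "charge"/"discharge"); every probability, reward, and the discount factor are made-up assumptions, not data from the reviewed works.

```python
STATES = ["low", "high"]
ACTIONS = ["charge", "discharge"]
# P[s][a][s2]: hypothetical probability of moving from s to s2 under action a.
P = {
    "low": {"charge": {"low": 0.2, "high": 0.8}, "discharge": {"low": 1.0, "high": 0.0}},
    "high": {"charge": {"low": 0.0, "high": 1.0}, "discharge": {"low": 0.7, "high": 0.3}},
}
# R[s][a]: hypothetical expected immediate reward R_s^a (e.g., negative fuel cost).
R = {
    "low": {"charge": -2.0, "discharge": -5.0},
    "high": {"charge": -1.0, "discharge": 0.0},
}
GAMMA = 0.9


def backup(s, a, v):
    """One-step lookahead R_s^a + gamma * sum_{s'} P_{ss'}^a v(s')."""
    return R[s][a] + GAMMA * sum(p * v[s2] for s2, p in P[s][a].items())


def value_iteration(tol=1e-10):
    """Apply v(s) <- max_a backup(s, a, v) until the values converge to v_*."""
    v = {s: 0.0 for s in STATES}
    while True:
        new_v = {s: max(backup(s, a, v) for a in ACTIONS) for s in STATES}
        if max(abs(new_v[s] - v[s]) for s in STATES) < tol:
            break
        v = new_v
    # Greedy policy with respect to the converged values: pi_*(s) = argmax_a backup.
    policy = {s: max(ACTIONS, key=lambda a: backup(s, a, new_v)) for s in STATES}
    return new_v, policy


v_star, pi_star = value_iteration()
print(v_star, pi_star)
```

Model-free methods such as Q-learning reach the same kind of solution without access to `P` and `R`, by learning from sampled transitions instead.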

2.2. DT technology

DTs are developed to replicate real-life physical entities in a virtual space. Typically, a DT digitally represents a physical product, system, or process, and was initially aimed at product introduction. DT technology digitizes and models physical entities to reduce the cost and complexity of eliminating uncertainty in complex systems, which enables effective management of the entire physical entity throughout its life cycle. With this virtual "mirror", instruments, tools, and parts can be conveniently maintained or adjusted. However, the implementation in the digital space must be modeled with a careful selection of data or knowledge.

The development of modern sensor technology and edge computing has significantly improved the accuracy and speed of data acquisition, enabling real-time operation. The combination of the Internet of Things (IoT) and big data has significantly increased the feasibility of collecting and processing data for practical applications. DT technology has thus become an inevitable outcome once these technologies matured beyond a certain level, since they form the foundation for constructing data twins. In turn, DT technology can facilitate the advancement of these associated technologies. DT is commonly integrated with digital technologies including the IoT, cloud computing, blockchain, and 5G/6G to create a network twin.

2.2.1. Development and evolution of DT

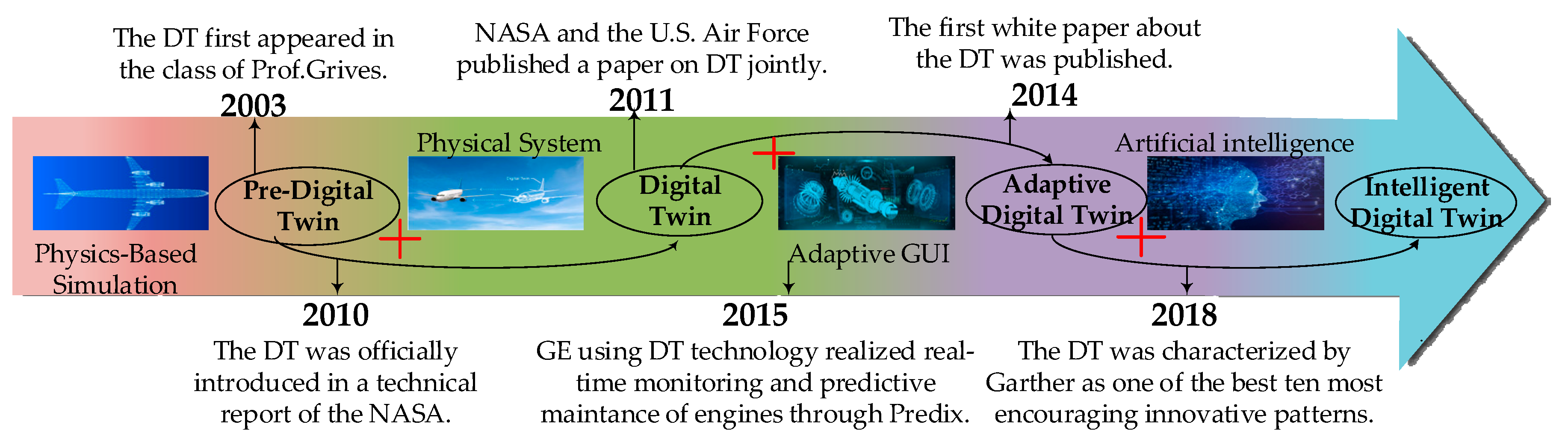

The development of DT technology can be categorized into four stages: the pre-DT stage, the DT stage, the adaptive DT stage, and the smart DT stage [37]. Figure 3 presents an overview of the evolutionary history of DTs.

To begin with, before physical systems could be transferred into digital space in real time, engineers relied on physical model simulations. The critical difference between DT and simulation is that DTs are synchronized with physical entities in real time, whereas simulations are purely digital and lack real-time synchronization. During the DT stage, experts developed data twins using their knowledge of DT output parameters and continually refined the twins through professional expertise. Nonetheless, the technology was still relatively non-intuitive and relied heavily on expert knowledge. To address this lack of intuitiveness, an adaptive graphical user interface (GUI) was introduced in the adaptive DT stage. This GUI provides a visual window that lets operators intuitively monitor changes in the twin, and operators can use the feedback obtained from the interface to comprehensively control and assess the physical entity.

In the smart DT stage, the rapid development of AI led to the gradual replacement of human decision-making with AI-trained models. By collecting, analyzing, and evaluating information from the DT, new intelligence can be incorporated, thereby achieving autonomous operation. In some cases, well-trained AI models can even outperform humans and enable physical entities to perform optimally based on data transmitted through the DT.

In 2003, Professor Michael Grieves introduced the concept of DT in his product life cycle management course, although, as depicted in Figure 3, the term DT itself was not coined by Grieves. Grieves introduced a three-dimensional model of DT, which is now widely recognized and used: a DT comprises three elements, namely physical entities, the digital model, and the data interaction between the two [38]. Subsequently, in 2010, NASA published a technical report featuring a twin of the aircraft and identifying the "twin" as a future development trend [39]. A 2012 joint paper by NASA and the US Air Force identified DTs as a critical technology for future aircraft [40]. The first white paper on DTs appeared in 2014, demonstrating the gradual standardization and increasing popularity of DT technology across industries [41]. In 2015, GE used DT technology to achieve real-time monitoring and predictive maintenance of its engines. Moreover, in 2018, Gartner recognized DT as one of the pioneering innovations for future industry [42].

2.2.2. Composition of DT

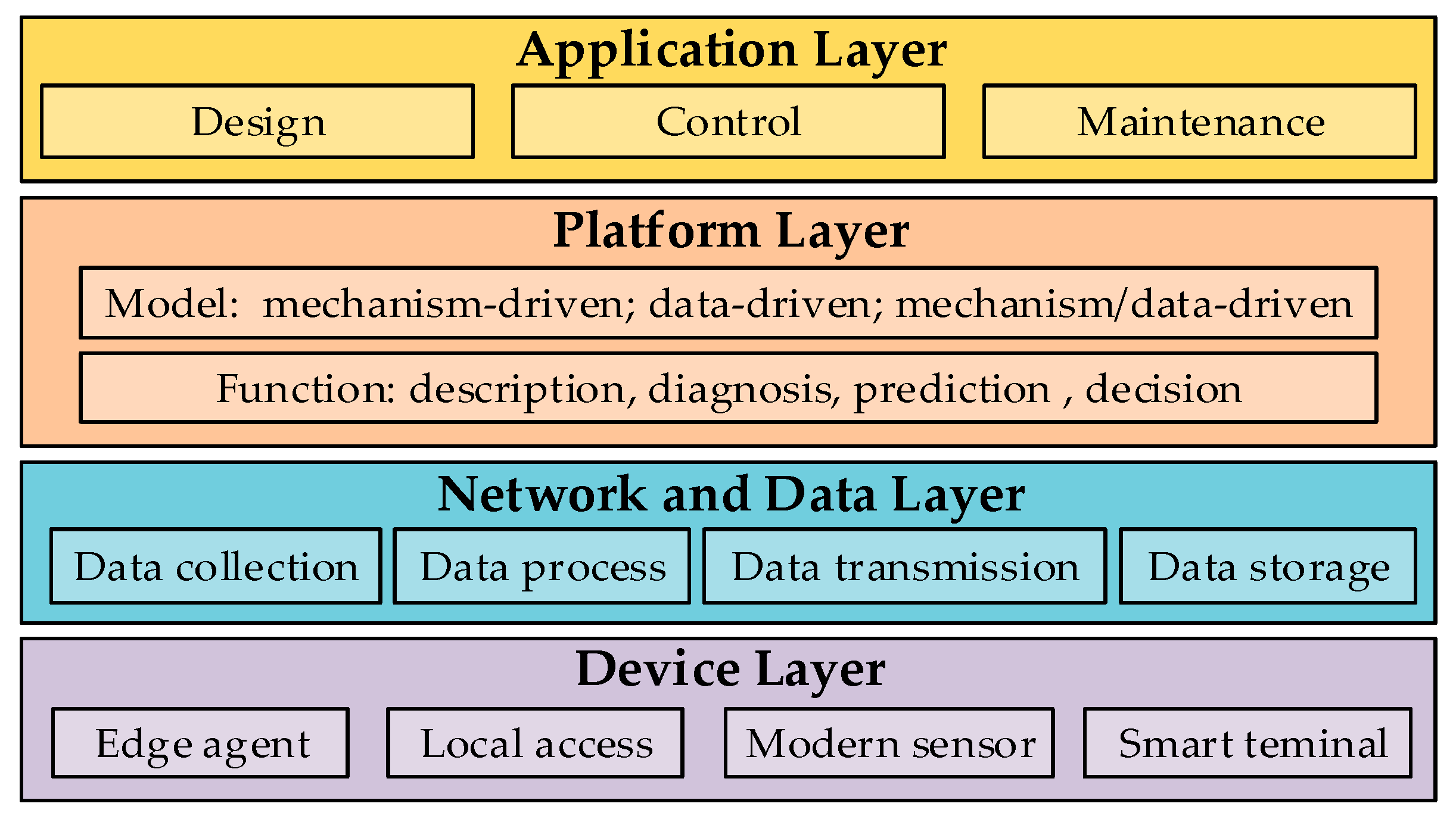

Figure 4 shows a typical technical structure of DT comprising four layers: the device layer, the network and data layer, the platform layer, and the application layer [43]. Within the device layer, edge agents combine local support with advanced sensor technologies to gather and transmit local device information. The data-layer information correlation is managed via smart terminal services. The network and data layer implements data collection, processing, storage, and transmission. The platform layer constructs DT models, which can be mechanism-driven, data-driven, or hybrid mechanism/data-driven. Once established, these models describe physical entities, predict and diagnose failures, and facilitate decision-making. The application layer encompasses design, control, and maintenance, representing the three stages of a complete product lifecycle.

Data forms the foundation of building a twin. System parameter information, collected sensor data, real-time operating data, and historical data are kept in storage, providing adequate data support for the DT. The DT model can be either a mechanism model based on the known physics of the object or a data-driven one. In both cases, the model's key feature is its dynamic nature, which allows it to update and learn autonomously. The mapping layer involves creating a model that mirrors the physical entity in real time as a digital "mirror". For instance, the vehicle's internal electrical system has different mapping relationships for its various components: battery mapping mainly focuses on monitoring the State of Charge (SoC) and the charging/discharging state, whereas fuel cell (FC) mapping primarily monitors fuel consumption and energy release. Finally, interaction is essential for keeping the virtual and physical entities synchronized. Using communication technology, DTs enable real-time information collection and control of physical entities, providing prompt diagnosis and analysis.
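To make the battery mapping more concrete, the sketch below keeps a twin's SoC synchronized with measured pack current by coulomb counting and exposes the charging/discharging state. The class name `BatteryTwin`, the 50 Ah capacity, the 1 Hz samples, and the sign convention (positive current means discharge) are hypothetical assumptions; practical SoC estimators often correct this open-loop integration with voltage-based feedback, which is omitted here.

```python
from dataclasses import dataclass, field


@dataclass
class BatteryTwin:
    """Hypothetical digital-twin mirror of a battery pack's SoC."""

    capacity_ah: float
    soc: float  # state of charge as a fraction in [0, 1]
    history: list = field(default_factory=list)

    def sync(self, current_a: float, dt_s: float) -> float:
        """Update the mirrored SoC from one current sample (positive = discharge)."""
        delta = current_a * dt_s / 3600.0 / self.capacity_ah  # charge moved, as SoC fraction
        self.soc = min(1.0, max(0.0, self.soc - delta))
        self.history.append(self.soc)
        return self.soc

    @staticmethod
    def mode(current_a: float) -> str:
        """Report the charging/discharging state that the twin exposes to the EMS."""
        if current_a > 0:
            return "discharging"
        if current_a < 0:
            return "charging"
        return "idle"


twin = BatteryTwin(capacity_ah=50.0, soc=0.80)
# Hypothetical 1 Hz current samples: 1 h of 10 A discharge, then 30 min of 20 A charging.
for _ in range(3600):
    twin.sync(10.0, 1.0)
for _ in range(1800):
    twin.sync(-20.0, 1.0)
print(round(twin.soc, 3), twin.mode(-20.0))
```

The stored `history` plays the role of the twin's data layer, while `sync` is the mapping step that keeps the virtual SoC aligned with the physical pack.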

Leveraging these four elements (data, model, mapping, and interaction), network twins can analyze, diagnose, simulate, and control the physical entity through AI technology, expert knowledge, and other means, leading to efficient system operation at lower cost.

3. Onboard microgrid energy management system

Before the specific AI and DT applications are reviewed in Sections 4 and 5, this section first introduces general energy management studies for transportation OBMG systems and then summarizes traditional EMSs in this field.

3.1. Overview of the OBMG energy management system

An efficient energy management system can reduce fuel consumption and improve the overall efficiency of a power system architecture [13]. Optimized fuel economy can be achieved by efficiently allocating power from different energy sources to loads [14]. At the same time, energy management systems should guarantee stable operation of the system under all constraints [13,44].

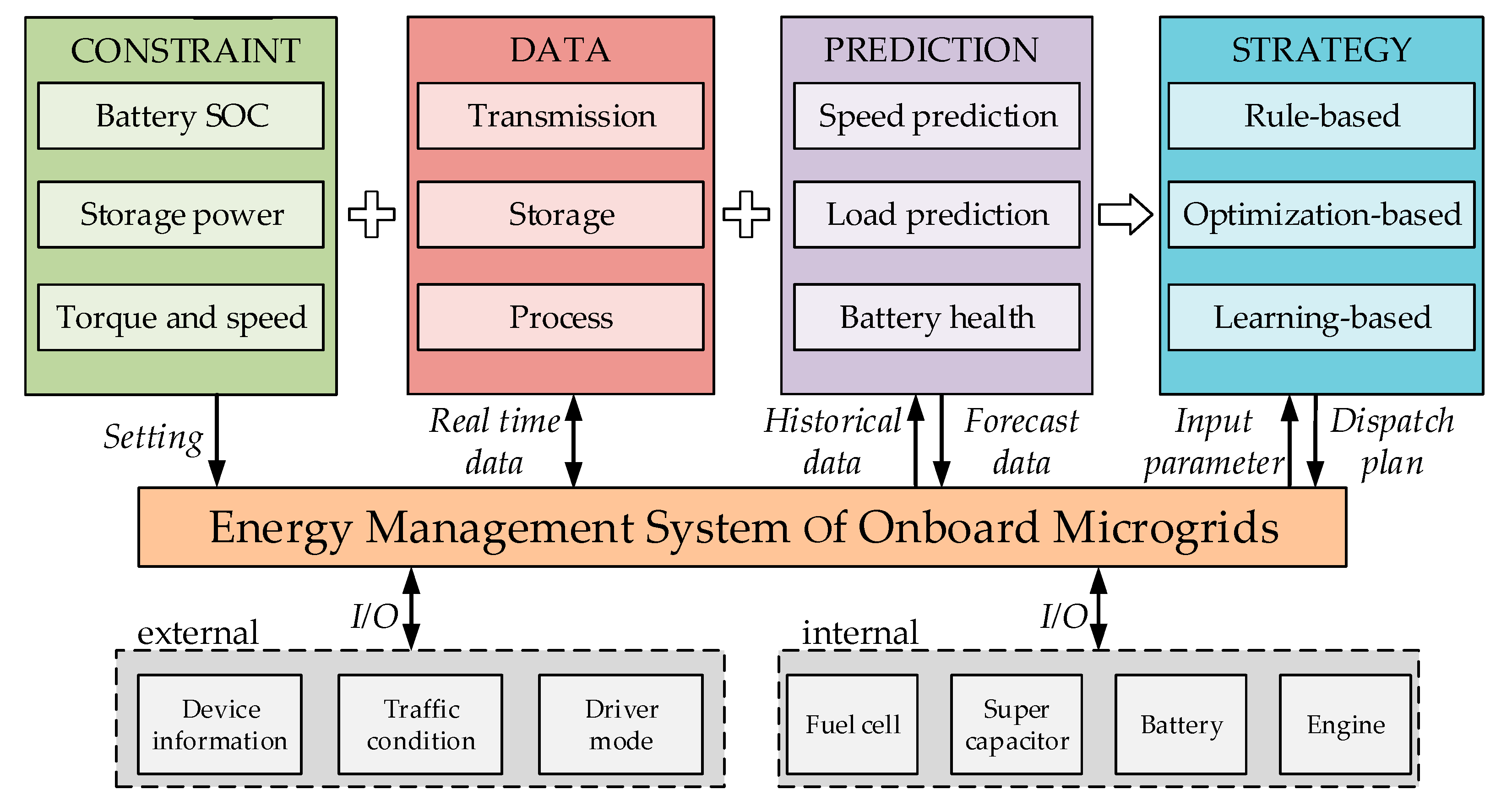

Figure 5 depicts the energy management system of a typical OBMG in transportation applications. The system interacts with information from internal and external sources. Engines and generators, the primary power sources, each have an optimal operating point in terms of fuel conversion efficiency. Typically, the battery pack compensates for the difference between the power demanded by the loads and the power supplied by the primary sources; however, its power dynamics are relatively slow. Supercapacitors are therefore introduced to meet rapid power demands, supplying power over short periods.

As shown in Figure 5, the system can be divided into four layers, namely the constraint layer, the data layer, the prediction layer, and the strategy layer. Energy management involves balancing energy flows between devices while adhering to specific constraints, which include the battery pack SoC and maximum charging/discharging power, as well as the torque and speed limits of the generators. The battery pack SoC is typically maintained between 20% and 80% to extend battery life and optimize efficiency [45].

The system data depicted in Figure 5 can be used to construct a DT model, which can in turn optimize, train, or verify the EMS. Energy management data handling involves three stages: data transmission, storage, and processing. Data transmission is the core function, enabling seamless real-time data exchange among devices. Data storage accumulates system data and operational information to facilitate future upgrading and optimization of the system. Finally, data processing reduces EMS complexity by compressing data and extracting feature values from it.
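As an illustration of the data-processing stage, the sketch below compresses a raw speed trace into a handful of feature values that an EMS could consume instead of the full signal. The chosen features (mean speed, maximum acceleration, idle ratio), the 1 s sampling period, and the 1 km/h idle threshold are hypothetical assumptions, not features prescribed by the reviewed works.

```python
from statistics import mean


def extract_features(speed_kmh, dt_s=1.0, idle_kmh=1.0):
    """Compress a uniformly sampled speed trace (km/h) into a few summary features."""
    speed_ms = [v / 3.6 for v in speed_kmh]
    # Finite-difference acceleration between consecutive samples (m/s^2).
    accel = [(b - a) / dt_s for a, b in zip(speed_ms, speed_ms[1:])]
    return {
        "mean_speed_kmh": mean(speed_kmh),
        "max_accel_ms2": max(accel) if accel else 0.0,
        "idle_ratio": sum(v < idle_kmh for v in speed_kmh) / len(speed_kmh),
    }


# Hypothetical 8 s speed trace: pull away, cruise briefly, then brake to a stop.
trace = [0, 0, 7.2, 14.4, 21.6, 21.6, 10.8, 0]
features = extract_features(trace)
print(features)
```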

The system predicts future states by analysing historical and real-time data, as shown in Figure 5. Speed prediction projects the speed and acceleration of the vehicle so that the output of the energy resources can be adjusted accordingly. Load prediction estimates the load power requirements of the vehicle so that power can be redistributed accordingly. Battery health prediction is critical to maintaining system stability and is a key factor in advanced energy management approaches. The strategy layer in Figure 5 lies at the core and can be classified into rule-based, optimization-based, and learning-based strategies, which are discussed in detail below.

3.2. Traditional energy management system

The OBMG energy management system typically works by regulating the distribution of energy between the engine and the onboard battery while driving, as illustrated in Figure 1. The crucial distinction between traditional and contemporary energy management systems lies in their EMS approaches. Traditional EMS can be categorized into two types: rule-based (deterministic and fuzzy) and optimization-based (global and real-time) [16], corresponding to the strategy layer of Figure 5 excluding the learning-based strategies. These two traditional control methods are briefly described below.

3.2.1. Rule-based control strategies

Deterministic rule-based control: it is generally implemented via lookup tables that split the demand power among power converters. There are four types: thermostat control, power tracking control, modified power tracking control, and state machine control [16]. Among them, the state machine strategy is the most representative, as it can adapt to external factors [46]. This adaptability allows the performance of the entire system to be monitored effectively. However, it remains challenging for such strategies to achieve economical fuel consumption and low emissions.

Fuzzy rule-based control: it encodes the operator's control experience as fuzzy sets and rules, and thus responds faster to sudden load changes and tolerates measurement errors better than deterministic rule-based methods. For example, an adaptive fuzzy control strategy was proposed in [47] to optimize fuel efficiency and emissions by dynamically adjusting controller parameters in different situations.
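A fuzzy rule base of this kind can be sketched with shoulder/triangular membership functions on the SoC and weighted-average (zero-order Sugeno) defuzzification. The membership breakpoints, the three rules, and their output values are hypothetical assumptions chosen for illustration, not the controller of [47].

```python
def falling(x, a, b):
    """Left-shoulder membership: 1 below a, decreasing linearly to 0 at b."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    return (b - x) / (b - a)


def rising(x, a, b):
    """Right-shoulder membership: 0 below a, increasing linearly to 1 at b."""
    return 1.0 - falling(x, a, b)


def tri(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


# Rule base: IF SoC is <set> THEN the engine's share of the demand is <value>.
RULES = [
    (lambda soc: falling(soc, 0.2, 0.5), 0.9),   # SoC low    -> engine supplies most power
    (lambda soc: tri(soc, 0.2, 0.5, 0.8), 0.5),  # SoC medium -> split evenly
    (lambda soc: rising(soc, 0.5, 0.8), 0.1),    # SoC high   -> battery supplies most power
]


def fuzzy_engine_share(soc):
    """Fire all rules and defuzzify by the weighted average of the rule outputs."""
    weights = [mu(soc) for mu, _ in RULES]
    return sum(w * out for w, (_, out) in zip(weights, RULES)) / sum(weights)


def split_power(soc, p_demand_kw):
    """Split the demand into (engine, battery) power using the fuzzy engine share."""
    share = fuzzy_engine_share(soc)
    return share * p_demand_kw, (1.0 - share) * p_demand_kw


print([round(fuzzy_engine_share(s), 3) for s in (0.1, 0.35, 0.5, 0.9)])
```

Because neighboring sets overlap, the engine share changes smoothly with SoC instead of jumping at fixed thresholds as in the thermostat sketch.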

3.2.2. Optimization-based control strategies

Global optimization: it uses future and past power demand data to determine the best control command. This method can be used to design online implementation rules or to assess the efficacy of other control strategies, but it is unsuitable for real-time energy management. Global optimization methods include linear programming, the control theory approach, dynamic programming (DP), stochastic DP, and genetic algorithms (GA) [16]. Notably, among these methods, DP is frequently used as the benchmark for comparing other control strategies.
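Because DP serves as the usual benchmark, a compact backward-recursion sketch may help. It assumes the whole demand profile is known in advance (which is exactly why DP is an offline method), discretizes battery energy into a grid, and at each step chooses how much of the demand the battery covers, with a terminal constraint that the battery ends no lower than it started. The demand profile, fuel model, grid, and limits are hypothetical assumptions.

```python
import math


def dp_energy_management(demand, e0, e_max, actions, fuel, p_eng_max):
    """Backward DP over a discretized battery-energy grid.

    demand[k]: known demand at step k (kWh per step); actions: allowed battery
    outputs per step (kWh, positive = discharge). Returns (minimum fuel, battery plan).
    """
    n, levels = len(demand), range(e_max + 1)
    # Terminal cost: charge sustenance, i.e. end at or above the initial energy.
    cost_to_go = {e: (0.0 if e >= e0 else math.inf) for e in levels}
    policy = []
    for k in reversed(range(n)):
        new_cost, best_u = {}, {}
        for e in levels:
            new_cost[e], best_u[e] = math.inf, None
            for u in actions:
                e_next, p_eng = e - u, demand[k] - u  # battery dynamics and power balance
                if not (0 <= e_next <= e_max and 0 <= p_eng <= p_eng_max):
                    continue
                c = fuel(p_eng) + cost_to_go[e_next]
                if c < new_cost[e]:
                    new_cost[e], best_u[e] = c, u
        cost_to_go = new_cost
        policy.append(best_u)
    policy.reverse()
    # Forward pass: replay the optimal decisions from the initial state.
    e, plan = e0, []
    for k in range(n):
        u = policy[k][e]
        plan.append(u)
        e -= u
    return cost_to_go[e0], plan


def fuel(p_eng):
    """Hypothetical fuel model (arbitrary units): idle offset when running plus a linear term."""
    return 0.0 if p_eng == 0 else 1.0 + 0.5 * p_eng


demand = [2, 2, 6, 2]
total, plan = dp_energy_management(demand, e0=2, e_max=4, actions=[-2, -1, 0, 1, 2],
                                   fuel=fuel, p_eng_max=6)
print(total, plan)
```

Its cost grows with the number of steps times grid points times actions, which is manageable offline but illustrates why DP is used as a benchmark rather than a real-time controller.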

Real-time optimization: its cost functions involve various factors, such as fuel consumption, self-sustainability, and optimal driving performance [48]. Two strategies are commonly used: the equivalent consumption minimization strategy (ECMS) and frequency decoupling control. ECMS aims to minimize the sum of fuel consumption and the equivalent fuel consumption of the battery while maintaining the SoC [49]. In contrast, frequency decoupling control uses the FC system to meet the low-frequency load demand while other energy sources fulfill the high-frequency load demand [17,50].
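Both real-time strategies can be sketched briefly. `ecms_step` picks, at a single time step, the battery power that minimizes fuel use plus an equivalence factor times battery power, where the factor is corrected linearly by the SoC error; `frequency_split` uses a first-order low-pass filter so that the FC follows the slow component of demand while another source covers the fast residual. The fuel map, equivalence factor, SoC correction gain, candidate grid, and filter time constant are all hypothetical assumptions in arbitrary consistent units.

```python
def fuel_rate(p_eng_kw):
    """Hypothetical engine fuel map (arbitrary units): idle offset plus a convex term."""
    return 0.0 if p_eng_kw <= 0 else 1.0 + 0.2 * p_eng_kw + 0.01 * p_eng_kw ** 2


def ecms_step(p_demand_kw, soc, candidates_kw, s0=0.6, k_soc=2.0, soc_target=0.6):
    """ECMS: choose the battery power minimizing fuel + s * battery power right now.

    Positive battery power means discharge. The equivalence factor s rises when the
    SoC is below target, which makes discharging expensive and favors charging.
    """
    s = s0 + k_soc * (soc_target - soc)
    feasible = [p for p in candidates_kw if p_demand_kw - p >= 0]
    return min(feasible, key=lambda p: fuel_rate(p_demand_kw - p) + s * p)


def frequency_split(p_demand_kw, dt_s=1.0, tau_s=10.0):
    """Frequency decoupling with a first-order low-pass filter.

    Returns (fuel cell power, residual power) per step: the FC tracks the filtered,
    low-frequency demand and the battery/supercapacitor supplies the high-frequency rest.
    """
    alpha = dt_s / (tau_s + dt_s)  # discrete-time smoothing coefficient
    p_fc = p_demand_kw[0]
    split = []
    for p in p_demand_kw:
        p_fc += alpha * (p - p_fc)
        split.append((p_fc, p - p_fc))
    return split


candidates = [-10, -5, 0, 5, 10, 15, 20]
print([ecms_step(20.0, soc, candidates) for soc in (0.3, 0.6, 0.9)])
demand = [10.0] * 5 + [30.0] * 5  # a step increase in load
split = frequency_split(demand)
print([round(hf, 2) for _, hf in split])
```

At low SoC the sketch charges the battery, near the target it lets the engine carry the load, and at high SoC it discharges; during the load step the residual source absorbs the sudden change while the FC output ramps up gradually.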

It is noteworthy that, as transportation electrification and information technologies continue to develop, energy management approaches and technologies are also advancing. Advanced EMSs are employed to optimize energy utilization within the OBMG for higher energy efficiency. The following section examines the use of emerging information technologies in energy management.

4. AI technology for energy management

4.1. Overview of AI in energy management

In recent years, AI has emerged as one of the most popular technologies in EMS and shows significant potential in the field of energy management. AI can deliver intelligent optimization, energy demand prediction, real-time monitoring and control, and energy planning to facilitate efficient energy use, decrease costs, and minimize the carbon footprint. Implementing AI in energy management can make it more intelligent, sustainable, and environmentally friendly. In contrast to traditional EMS, AI-enhanced EMS offers advantages such as faster response, higher prediction accuracy, adaptability, and versatility [25].

Among these techniques, neural networks (NN) are powerful tools that are extensively employed in EMS owing to their excellent nonlinear modeling, adaptive learning, and multi-objective optimization capabilities [51,52]. For example, Reference [52] trains an NN controller for a solar-powered aircraft using RL to optimize the flight trajectory, improving energy management capability and operation time. The final energy of the aircraft guided by the RL controller was found to be 3.53% higher than with the rule-based state machine; in addition, the SoC was 8.84% higher and solar energy absorption was 3.15% higher. Similarly, in [51], an NN is applied to build a shift controller for power distribution, trained on data generated by DP over different driving cycles. This NN model comes very close to DP in fuel consumption, with less than a 2.6% increase, while offering better computational efficiency and real-time control capability.

Furthermore, the researchers in [53] exploit the nonlinear modeling capability of NNs to recalibrate the components of energy management and construct a novel set of control torque models. Unlike traditional approaches, this method does not require extracting driving cycle characteristics, and it outperforms them in fuel economy and accuracy. The use of NNs in energy management represents just one facet of AI; more specifically, NNs fall within the domain of ML, which is the primary focus of this discussion.

In the context of electric vehicles, for instance, model-free sliding mode control-based ML approaches are employed to optimize battery energy management, aiming to extend battery life and improve overall system efficiency [54]. By considering the battery state and power conditions, these approaches determine the most favorable charging mode. As discussed in Section 2, ML can be divided into SL, UL, and RL; among them, RL is by far the most commonly used for energy management control, especially in the form of DRL. The two main functions of UL are clustering and compression, which are applied mainly during data processing and have few applications in energy management control strategies. Unlike UL, SL and RL are widely applied in control [55]. Since previous research [27] has thoroughly introduced SL in control applications, this article devotes more space to RL technology.

4.2. RL for energy management

In EMS applications, the factors usually considered in the general reward function (see Section 2.1) are fuel economy and the range of the battery SoC. The reward functions of different RL methods can be uniformly summarized as formula (3-1),

where α is the linear weight that balances the fuel consumption rate against SoC maintenance, fuel is the fuel consumption rate, and ∆SoC is the deviation of the current SoC from its target. The negative sign in front of the function transforms the minimization problem into a maximization problem.
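Since formula (3-1) combines a fuel term with an α-weighted SoC-deviation term under a leading negative sign, a minimal sketch of such a reward is given below. Whether the deviation enters squared or as an absolute value varies between studies; this sketch assumes a squared deviation, and the weight α = 350 and the target SoC of 0.6 are placeholder assumptions.

```python
def ems_reward(fuel_rate, soc, soc_target=0.6, alpha=350.0):
    """Reward of the form r = -(fuel_rate + alpha * dSoC^2).

    The squared deviation, alpha, and soc_target are assumptions for illustration.
    Negating the cost turns fuel/SoC minimization into reward maximization.
    """
    delta_soc = soc - soc_target
    return -(fuel_rate + alpha * delta_soc ** 2)


# Same fuel use, but drifting away from the target SoC lowers the reward.
print(ems_reward(1.2, 0.60), ems_reward(1.2, 0.50))
```

Raising α makes the agent hold the SoC near its target more tightly, usually at some cost in fuel; tuning this trade-off is part of designing the reward.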

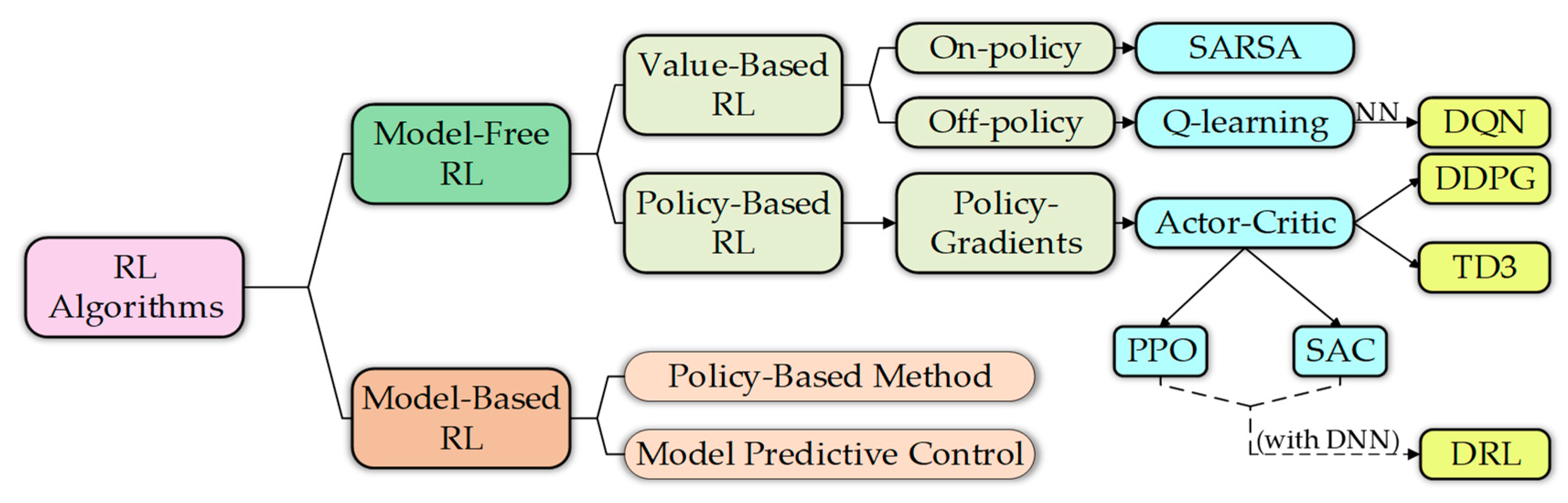

The implementation of RL in optimal energy management for OBMG hinges on the specific characteristics of the problem and the available data. Generally, these applications can be categorized into two distinct approaches, model-free and model-based, as depicted in Figure 6. Model-free RL algorithms are of two types: value-based and policy-based. Value-based algorithms can be further divided into on-policy and off-policy methods, depending on whether the policy being learned is the same as the policy used to generate experience. State-action-reward-state-action (SARSA) [56] is a typical on-policy algorithm, while Q-learning [57] is a classic off-policy algorithm that can be combined with NN technology to form the deep Q-network (DQN) [58]. Among policy-based methods, actor-critic algorithms built on the idea of policy gradients include four types: proximal policy optimization (PPO) [59], soft actor–critic (SAC) [60], deep deterministic policy gradient (DDPG) [61], and twin delayed DDPG (TD3) [62]. Among them, SARSA, Q-learning, PPO, and SAC are classified here as RL algorithms, whereas DQN, DDPG, and TD3 are DRL algorithms. However, this classification is not fixed: RL algorithms that use deep neural networks (DNN) as function approximators, such as PPO and SAC, can also be regarded as DRL algorithms [63].
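The on-policy/off-policy distinction between SARSA and Q-learning comes down to one term in the tabular update. In the Python sketch below, the dictionary-based Q-table, the discretized SoC states, and the engine on/off actions are hypothetical stand-ins for an EMS state-action space. SARSA bootstraps from the action actually taken next, whereas Q-learning bootstraps from the greedy action.

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, Iterable, Tuple

QTable = DefaultDict[Tuple[Hashable, Hashable], float]


def sarsa_update(q: QTable, s, a, r: float, s_next, a_next, lr: float, gamma: float) -> None:
    """On-policy: the target uses the action a_next chosen by the behavior policy."""
    target = r + gamma * q[(s_next, a_next)]
    q[(s, a)] += lr * (target - q[(s, a)])


def q_learning_update(q: QTable, s, a, r: float, s_next, actions: Iterable,
                      lr: float, gamma: float) -> None:
    """Off-policy: the target uses the greedy action in s_next, whatever is executed next."""
    target = r + gamma * max(q[(s_next, b)] for b in actions)
    q[(s, a)] += lr * (target - q[(s, a)])


# Hypothetical EMS example: discretized SoC levels as states, engine on/off as actions.
ACTIONS = ("engine_off", "engine_on")
q_sarsa: QTable = defaultdict(float, {("soc_mid", "engine_off"): 1.0,
                                      ("soc_mid", "engine_on"): 5.0})
q_qlearn: QTable = defaultdict(float, q_sarsa)  # independent copy of the same table

# Same transition; the agent happens to pick "engine_off" in the next state.
sarsa_update(q_sarsa, "soc_high", "engine_off", -1.0, "soc_mid", "engine_off", lr=0.5, gamma=0.9)
q_learning_update(q_qlearn, "soc_high", "engine_off", -1.0, "soc_mid", ACTIONS, lr=0.5, gamma=0.9)
print(q_sarsa[("soc_high", "engine_off")], q_qlearn[("soc_high", "engine_off")])
```

Given the same transition, Q-learning assigns a higher value because it assumes the best next action, while SARSA evaluates the action its own exploratory policy actually took.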

Model-based RL algorithms can be divided into policy-based methods and model predictive control (MPC), so their categorization is much simpler than that of model-free RL. Policy-based methods use a policy model for decision-making during training, whereas MPC starts from the current state and uses a dynamics model to predict future behavior step by step and select actions accordingly.

4.2.1. Model-free RL

Traditional RL Algorithms: As shown in Figure 6 (marked in blue), there are various traditional RL algorithms that do not take the system model into account. They are purely mathematical learning algorithms that are independent of the specific system under study. Traditional RL was initially the mainstream model-free approach [27]. Reference [64] proposed an integrated RL strategy to improve fuel economy. Applied to a parallel hybrid electric vehicle (HEV) model, it integrates two common energy management strategies: the thermostat (constant temperature) strategy and the equivalent consumption minimization strategy. The integrated strategy improves fuel economy by 3.2% over the best single strategy. To enforce safety constraints during RL training, the researchers in [12] proposed an RL framework called "Coach-Actor-Double-Critic" for energy management optimization. This framework comprises an NN-based online EMS actor and a rule-based coach. Once the output of the actor falls outside the feasible solution space, the coach takes over energy management to ensure safety. The method outperforms existing RL-based strategies and achieves more than 95% of the energy-saving rate of the offline global optimum. However, traditional RL cannot cope with the large-scale, high-dimensional state-action spaces of practical applications. Therefore, deep learning is introduced into RL, yielding DRL.
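The coach mechanism described for [12] can be sketched as a safety filter wrapped around the learned actor. In this minimal Python sketch, the feasibility checks (engine power limits and SoC bounds), the sign convention, and the engine-follows-demand fallback rule are all hypothetical simplifications, not the actual rules of the framework in [12].

```python
from typing import Tuple


def coach_filter(actor_engine_kw: float, demand_kw: float, soc: float,
                 engine_max_kw: float, soc_min: float, soc_max: float) -> Tuple[float, str]:
    """Return (engine power, who decided). Positive battery power means discharge.

    ASSUMPTION: illustrative feasibility rules and fallback, not those of [12].
    """
    battery_kw = demand_kw - actor_engine_kw  # battery covers the remainder
    feasible = 0.0 <= actor_engine_kw <= engine_max_kw
    if soc <= soc_min and battery_kw > 0.0:   # would discharge an almost-empty battery
        feasible = False
    if soc >= soc_max and battery_kw < 0.0:   # would charge an almost-full battery
        feasible = False
    if feasible:
        return actor_engine_kw, "actor"
    # Rule-based coach: the engine follows the non-negative demand within its limit,
    # which keeps battery power near zero while the actor output is infeasible.
    coach_kw = min(max(demand_kw, 0.0), engine_max_kw)
    return coach_kw, "coach"


print(coach_filter(20.0, 30.0, soc=0.50, engine_max_kw=60.0, soc_min=0.3, soc_max=0.8))
print(coach_filter(20.0, 30.0, soc=0.25, engine_max_kw=60.0, soc_min=0.3, soc_max=0.8))
```

Keeping the fallback outside the learned policy means an untrained or exploring actor can never push the system outside the feasible region.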

DRL Algorithms: The yellow part of Figure 6 shows the model-free DRL algorithms. DRL combines the decision-making ability of RL with the perception and representation ability of deep learning, which allows it to solve complex control problems effectively, including energy management [65]. As a specialized variant of RL, DRL leverages DNNs to address challenging problems that traditional RL methods struggle to solve. The boundary between them is often blurred, with various intermediate forms and variant techniques. In this paper, we classify these approaches and depict the transition techniques between traditional RL and DRL, as shown in Figure 6.

DRL is widely used in EMS to manage the energy within the OBMG. For example, a self-adaptive EMS for HEVs based on DRL and transfer learning (TL) was proposed to address drawbacks of DRL such as its prolonged training time [66]. A double-layer control framework is built to derive the EMS: the upper layer uses the DDPG algorithm to train the EMS, and the lower layer uses TL to transfer pre-trained NNs. This control strategy improves both energy efficiency and system performance. The researchers in [67] proposed a heuristic DRL control strategy for the energy management of a series HEV. In this framework, a heuristic experience replay is proposed to achieve more reasonable experience sampling and improve training efficiency, and a self-adaptive torque optimization method based on the Nesterov accelerated gradient is proposed for the optimization step. This control strategy achieves faster training and higher fuel economy, approaching the global optimum.
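The Nesterov accelerated gradient (NAG) used in the torque optimization of [67] differs from plain momentum in that the gradient is evaluated at a look-ahead point x + μv. The Python sketch below applies the core NAG iteration to a hypothetical one-dimensional quadratic cost whose minimum sits at an assumed optimal torque of 120 N·m; the cost, learning rate, and momentum are illustrative assumptions, not the self-adaptive scheme of [67].

```python
from typing import Callable


def nesterov_minimize(grad: Callable[[float], float], x0: float,
                      lr: float, momentum: float, steps: int) -> float:
    """Nesterov accelerated gradient for a scalar decision variable."""
    x, v = x0, 0.0
    for _ in range(steps):
        lookahead = x + momentum * v          # peek ahead along the current velocity
        v = momentum * v - lr * grad(lookahead)
        x = x + v
    return x


# Hypothetical cost J(T) = (T - 120)^2 for an engine torque command T in N·m.
def torque_cost_grad(t: float) -> float:
    return 2.0 * (t - 120.0)


t_opt = nesterov_minimize(torque_cost_grad, x0=0.0, lr=0.05, momentum=0.9, steps=300)
print(round(t_opt, 6))
```

The look-ahead gradient lets the method brake before overshooting, which is the usual argument for its faster convergence compared with plain momentum.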

Moreover, reference [68] combined a DRL-based EMS with a rule-based engine start-stop strategy, using DDPG to control the engine throttle opening. The DRL-based EMS achieves simultaneous multi-objective control through different types of learning algorithms. Compared with deterministic DP, this method ensures both optimality and real-time performance. An EMS that leverages DRL in the context of a cyber-physical system is proposed in [69]. The EMS is trained using DRL algorithms, incorporating input from experts and multi-state traffic information. Notably, the system considers not only internal physical system information but also external information at the network layer to optimize performance. Moreover, the method employs TL to transfer previously trained knowledge to a different type of vehicle. Ultimately, this approach improves fuel economy by over 5% compared with the DP method.

Furthermore, to account for the impact of battery health in EMS, the researchers in [70] put forward an energy management framework based on battery health and DRL. The proposed strategy reduces the battery aging severity factor at a small cost in fuel economy, thereby slowing battery aging. To further reduce fuel consumption and improve the adaptability of the algorithm, the researchers in [71] proposed an online-updated EMS based on DRL with accelerated training. The online framework continuously updates the NN parameters. By combining DDPG with prioritized experience replay, both fuel economy and SoC performance are improved, and training time is reduced. The fuel economy of the resulting EMS reached 93.9% of the DP benchmark.
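Prioritized experience replay, as combined with DDPG for [71], samples transitions with probability proportional to a power of their priority (typically the magnitude of the TD error) and corrects the resulting bias with importance-sampling weights. The Python sketch below is a minimal list-based version under assumed hyperparameters (`alpha`, `beta`, `eps`); practical implementations usually use a sum-tree for O(log n) sampling, and the exact variant used in [71] may differ.

```python
import random
from typing import Any, List, Sequence, Tuple


class PrioritizedReplay:
    """Proportional prioritized replay buffer with a fixed capacity (ring buffer)."""

    def __init__(self, capacity: int, alpha: float = 0.6, eps: float = 1e-6) -> None:
        self.capacity, self.alpha, self.eps = capacity, alpha, eps
        self.data: List[Any] = []
        self.priorities: List[float] = []
        self.pos = 0  # next slot to overwrite once the buffer is full

    def add(self, transition: Any) -> None:
        # New transitions get the current maximum priority so they are replayed at least once.
        max_p = max(self.priorities, default=1.0)
        if len(self.data) < self.capacity:
            self.data.append(transition)
            self.priorities.append(max_p)
        else:
            self.data[self.pos] = transition
            self.priorities[self.pos] = max_p
        self.pos = (self.pos + 1) % self.capacity

    def probabilities(self) -> List[float]:
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        return [s / total for s in scaled]

    def sample(self, batch_size: int, beta: float = 0.4,
               rng: Any = random) -> Tuple[List[int], List[Any], List[float]]:
        probs = self.probabilities()
        idx = rng.choices(range(len(self.data)), weights=probs, k=batch_size)
        n = len(self.data)
        # Importance-sampling weights, normalized so the largest weight is 1.
        weights = [(n * probs[i]) ** (-beta) for i in idx]
        w_max = max(weights)
        return idx, [self.data[i] for i in idx], [w / w_max for w in weights]

    def update_priorities(self, idx: Sequence[int], td_errors: Sequence[float]) -> None:
        for i, err in zip(idx, td_errors):
            self.priorities[i] = abs(err) + self.eps  # eps keeps every transition sampleable
```

After each learning step, the agent would call `update_priorities` with the new TD errors of the sampled batch and scale each sample's loss by its importance-sampling weight.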

As autonomous driving technology has matured in recent years, EMS studies for autonomous vehicles have increased. A layered control structure was proposed to facilitate energy management in autonomous vehicles using visual technology [72]. It combines one-stage object detection with intelligent control based on DRL. This layered control structure achieves high computing efficiency on embedded devices and has potential for real vehicle control. In contrast, the researchers in [73] studied EMS in a car-following scenario. A new DDPG-based eco-driving strategy is proposed, and the weights of multiple objectives are analyzed to optimize the training results. While ensuring vehicle performance, the proposed strategy achieves more than 90% of the fuel economy of the DP method.

4.2.2. Model-based RL

Policy-Based Method: For the policy-based method, we need to build a policy model for the training in RL. When building such a new model, it is usually combined with traditional methods. Using new models for planning, RL can be transformed into an optimal control problem, and the optimal strategy is obtained through the planning algorithm. The RL method has strong adaptability to different working conditions and has strong application. In terms of the optimal control of energy management, the control method based on RL [

74] is compared with the control method of using deterministic DP or stochastic DP. The control problem obtains the global optimality, and the convergence characteristics of RL-based control strategies are verified. The researchers in [

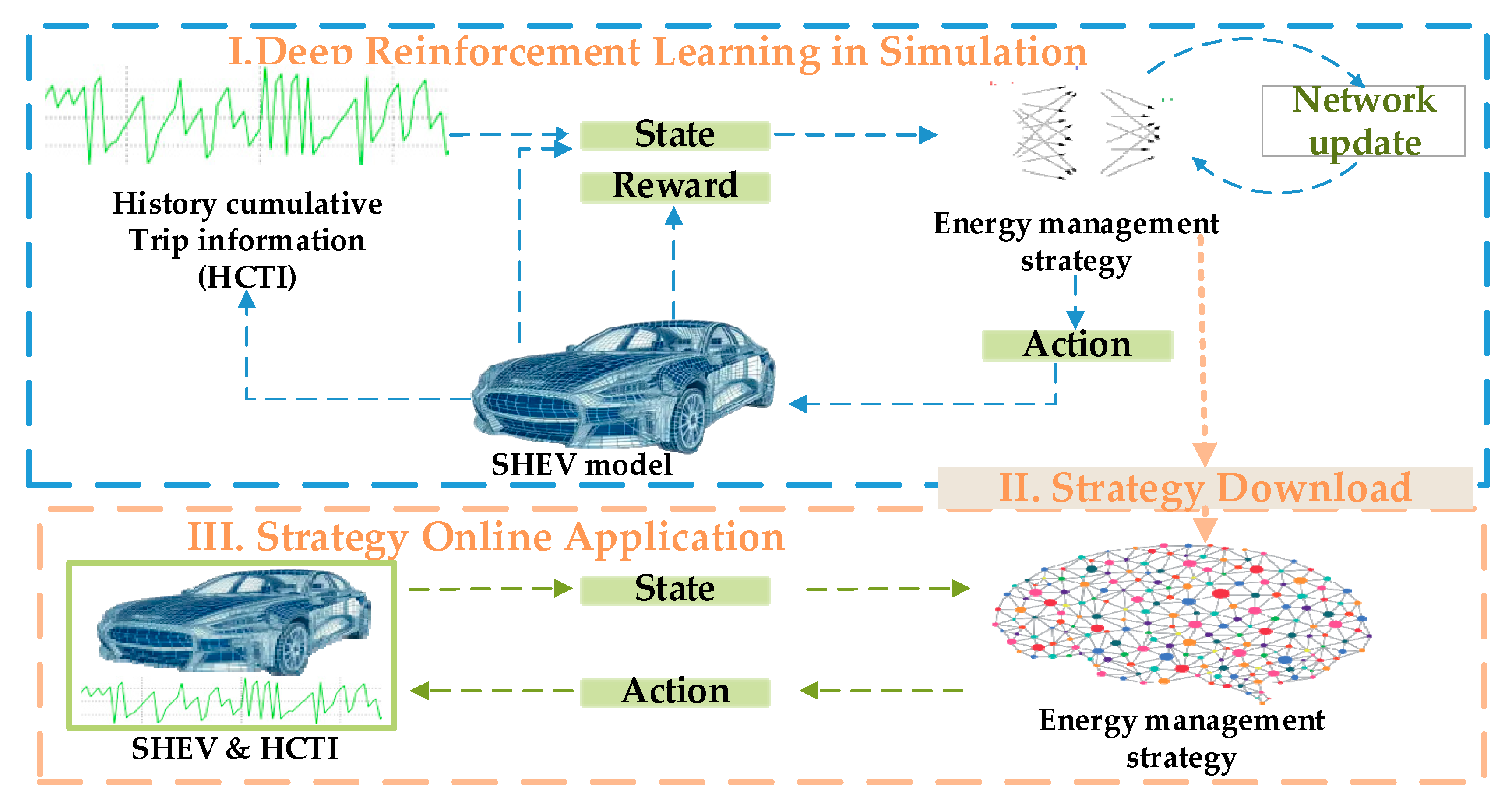

75] propose a DRL-based EMS method to use historically accumulated stroke information to build a dynamic model for NN construction.

Figure 7 is the principle of this method. It is found that the fuel economy and calculation speed of this method is better than that of EMS based on MPC.

In contrast, the researchers in [76] establish a dynamic model of the vehicle and then apply an online EMS based on heuristic DP. Considering the uncertain, nonlinear dynamics of vehicles in real transportation environments, a backpropagation NN is used to build the dynamic model. The torque distributed by this strategy effectively tracks the vehicle speed, with a speed-tracking accuracy above 98%. Compared with existing online EMSs, the strategy further reduces the fuel consumption and emissions of the HEV. In [77], the vehicle's internal powertrain model is used for reinforcement learning. The learning process is stable and convergent, and the powertrain model is learned well. Compared with rule-based strategies, the proposed algorithm reduced fuel consumption by 5.7%.

In addition, reference [78] put forward a predictive energy management strategy for a hybrid electric tracked vehicle based on DP with RL-based online correction. A DP algorithm is applied to obtain a local control strategy over the short-term future driving cycle, and the RL algorithm is combined with a fuzzy logic controller to refine the control strategy by eliminating the impact of inaccurate predictions. Compared with the original predictive energy management, the proposed method improves fuel economy by 4% and reaches 90.51% of the DP benchmark. To adjust the parameters of ECMS in a dynamic environment, the researchers in [79,80] use RL to evaluate performance and determine the best control parameters, automatically generating the equivalence factors of ECMS from the interaction between the learning agent and the driving environment.

For example, the method proposed in [79] obtains a near-optimal solution close to that of DP (on average 96.7% of the DP result) and improves by 4.3% on the existing adaptive ECMS. The researchers in [81] propose an online update framework for the EMS of multi-mode hybrid systems. This method can quickly generate near-optimal strategies for unknown driving cycles of any type, consuming only 6%–12% more fuel than the globally optimal EMS. To adjust the SoC constraint settings according to the requirements of future tasks, the researchers in [82] proposed an adaptive layered EMS combining knowledge and DDPG. The fuel consumption after SoC correction is very close to that of DP-based control, and the training of this method provides effective, efficient, and safe exploration for real-world applications.
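In ECMS, battery power is converted into an equivalent fuel cost through an equivalence factor s, and the engine/battery split that minimizes the instantaneous equivalent cost is selected; the RL-based approaches in [79,80] adapt s online. The Python sketch below shows only the instantaneous ECMS step for a given s. The quadratic fuel map (`fuel_power_kw`), the 1 kW search grid, and the 60 kW engine limit are hypothetical, battery power limits are ignored, and no RL agent is included.

```python
def fuel_power_kw(engine_kw: float) -> float:
    """Hypothetical fuel map: chemical fuel power needed for a given engine output."""
    if engine_kw <= 0.0:
        return 0.0  # engine off
    return 5.0 + 2.5 * engine_kw + 0.01 * engine_kw ** 2


def ecms_split(demand_kw: float, s: float, engine_max_kw: float = 60.0,
               step_kw: float = 1.0) -> float:
    """Pick the engine power minimizing fuel power + s * battery power."""
    n = int(round(engine_max_kw / step_kw))
    candidates = [i * step_kw for i in range(n + 1)]

    def equivalent_cost(engine_kw: float) -> float:
        battery_kw = demand_kw - engine_kw  # positive = discharge, negative = charge
        return fuel_power_kw(engine_kw) + s * battery_kw

    return min(candidates, key=equivalent_cost)


# A larger equivalence factor makes battery energy "expensive", so the engine works harder.
for s in (0.0, 3.0, 10.0):
    print(s, ecms_split(demand_kw=30.0, s=s))
```

An RL agent in the spirit of [79,80] would observe driving conditions and SoC and output s, with ECMS then resolving the power split at each time step.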

Model Predictive Control: MPC, the other category of model-based RL, starts from the current state and builds a dynamics model to predict the outcomes of potential actions and select among them. In MPC-based energy management, predicting certain system quantities, such as future power demand, is important. A nonlinear MPC system based on a stochastic power prediction method was proposed to achieve optimal performance in heavy-duty HEVs that lack navigation support [83]. A data-driven prediction method is used to obtain high-precision ultra-short-term power predictions, and the optimal numerical solution is then found through nonlinear MPC in real time. This online method performs much better than the rule-based control strategy, and its results are close to those of the offline global optimization strategy.
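The receding-horizon principle behind MPC-based EMS (predict over a short horizon, optimize, apply only the first action, then repeat) can be illustrated with a brute-force Python sketch. Everything here is a simplifying assumption: a perfect demand forecast, a linear SoC model, three discrete engine power levels, a hypothetical quadratic fuel map, and exhaustive search in place of the nonlinear or tube-MPC solvers used in [83,84].

```python
from itertools import product
from typing import List, Sequence, Tuple


def fuel_power_kw(engine_kw: float) -> float:
    """Hypothetical quadratic fuel map; the engine draws no fuel when off."""
    return 0.0 if engine_kw <= 0.0 else 5.0 + 2.5 * engine_kw + 0.01 * engine_kw ** 2


def mpc_step(soc: float, forecast_kw: Sequence[float], actions: Sequence[float], dt_h: float,
             capacity_kwh: float, soc_target: float, w_soc: float,
             soc_min: float, soc_max: float) -> float:
    """Search all engine-power sequences over the forecast horizon; return the first move."""
    best_cost, best_first = float("inf"), None
    for seq in product(actions, repeat=len(forecast_kw)):
        s, cost, feasible = soc, 0.0, True
        for engine_kw, demand_kw in zip(seq, forecast_kw):
            s -= (demand_kw - engine_kw) * dt_h / capacity_kwh  # linear SoC model
            if not soc_min <= s <= soc_max:
                feasible = False
                break
            cost += fuel_power_kw(engine_kw) * dt_h               # fuel energy in kWh
        if feasible:
            cost += w_soc * (s - soc_target) ** 2                 # terminal SoC penalty
            if cost < best_cost:
                best_cost, best_first = cost, seq[0]
    # ASSUMPTION: if no sequence is feasible, fall back to the largest engine power.
    return max(actions) if best_first is None else best_first


def run_mpc(soc0: float, demand_kw: Sequence[float], horizon: int,
            **params) -> Tuple[List[float], float]:
    """Closed loop: re-plan at every step and apply only the first action."""
    soc, applied = soc0, []
    for k in range(len(demand_kw)):
        forecast = demand_kw[k:k + horizon]  # assumes a perfect forecast
        engine_kw = mpc_step(soc, forecast, **params)
        soc -= (demand_kw[k] - engine_kw) * params["dt_h"] / params["capacity_kwh"]
        applied.append(engine_kw)
    return applied, soc


params = dict(actions=(0.0, 20.0, 40.0), dt_h=0.01, capacity_kwh=10.0,
              soc_target=0.5, w_soc=1e6, soc_min=0.2, soc_max=0.9)
print(run_mpc(0.5, [20.0] * 5, horizon=3, **params))
```

With a heavy terminal SoC weight the controller holds the SoC at its target; with zero weight it drains the battery to avoid fuel use, as long as the SoC bounds allow it.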

The researchers in [84] proposed an uncertainty-based HEV EMS that combines a convolutional NN with a long short-term memory (LSTM) NN and uses tube-MPC to solve the optimal control problem in a receding-horizon manner. Compared with traditional rule-based and MPC methods, the tube-MPC method achieves average energy-saving improvements of 10.7% and 3.0%, respectively. Reference [85] developed a new learning MPC strategy, proposing a reference-tracking MPC solution that is readily applicable in real time. This method can optimize the energy flow in the vehicle's power supply in real time and is expected to perform favorably.

Model-based RL has high sample efficiency, but training the environment model is often time-consuming. Improving the learning efficiency of such models is therefore a direction for future research.

As mentioned above, a popular way of applying RL is to couple it with transfer learning (TL). TL applies the knowledge learned in a previous domain to a different but related domain. References [66,69] use TL to improve the training efficiency of RL. In addition to TL, federated learning (FL) can be used to protect user data privacy [86]. For example, in vehicle-to-vehicle energy management, DRL is applied in [87] to learn the long-term returns of matching actions, and an FL framework is proposed to enable cooperation among different electric vehicles without sharing sensitive information. This method can help electric vehicle owners save costs with low trading risk and reduce the burden on the power grid.
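In an FL framework such as the one described for [87], each vehicle trains locally and shares only model parameters, which a coordinator aggregates. A common aggregation rule is federated averaging (FedAvg), a sample-size-weighted mean of client parameters. The Python sketch below assumes flat parameter lists and FedAvg-style weighting; [87] may use a different aggregation scheme.

```python
from typing import List, Sequence


def federated_average(client_params: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Weighted mean of client parameter vectors; raw driving data never leaves a client."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one sample count per client and at least one client")
    n_params = len(client_params[0])
    if any(len(p) != n_params for p in client_params):
        raise ValueError("all clients must share the same parameter shape")
    total = sum(client_sizes)
    return [
        sum(params[j] * size for params, size in zip(client_params, client_sizes)) / total
        for j in range(n_params)
    ]


# Two hypothetical EVs: the second has three times as much local experience.
global_params = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_params)
```

In a full round, the coordinator would broadcast `global_params` back to the vehicles, which would continue local DRL training from that point.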

4.3. Other AI methods for energy management

In addition to the above ML methods, metaheuristic methods and fuzzy logic control have also been considered for EMSs. Metaheuristic methods are generally inspired by natural processes: e.g., GA is inspired by natural selection, and the particle swarm optimization (PSO) algorithm simulates a flock of birds searching for food [27]. Metaheuristic methods in EMS can provide feasible solutions at an acceptable cost (such as computation time). Researchers in [8] proposed a self-adaptive layered EMS for plug-in HEVs that combines DL and GA to derive the power distribution between the battery and the internal combustion engine. This approach can greatly improve fuel economy, with strong adaptability and real-time capability. Building on the concept of metaheuristics, researchers in [88] proposed a new coyote optimization algorithm to reduce the hydrogen consumption of hybrid systems and improve the durability of the power supply. Compared with the ECMS method, this algorithm reduces hydrogen consumption by 38.8%.
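The PSO mechanism mentioned above (each particle is pulled toward its own best position and toward the swarm's best position) fits in a few lines. This Python sketch minimizes a hypothetical two-parameter stand-in cost, for example an SoC threshold and an engine-on power of a rule-based EMS; the objective `toy_ems_cost`, its bounds, and the coefficients (w = 0.729, c1 = c2 = 1.49445, a commonly used setting) are assumptions for illustration.

```python
import random
from typing import Callable, List, Sequence, Tuple


def pso_minimize(f: Callable[[List[float]], float], bounds: Sequence[Tuple[float, float]],
                 n_particles: int = 20, iters: int = 100, w: float = 0.729,
                 c1: float = 1.49445, c2: float = 1.49445,
                 seed: int = 0) -> Tuple[List[float], float]:
    """Global-best PSO with positions clipped to the box bounds."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                   # each particle's best position so far
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # swarm's best position so far
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # cognitive pull
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # social pull
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def toy_ems_cost(x: List[float]) -> float:
    """Hypothetical stand-in cost with its minimum at (0.3, 40.0)."""
    soc_threshold, engine_on_kw = x
    return (soc_threshold - 0.3) ** 2 + ((engine_on_kw - 40.0) / 100.0) ** 2


best, best_val = pso_minimize(toy_ems_cost, bounds=[(0.0, 1.0), (0.0, 100.0)])
print([round(v, 3) for v in best], best_val)
```

In a real EMS study, `toy_ems_cost` would be replaced by a drive-cycle simulation returning fuel consumption, which is why population-based methods that need only cost evaluations are attractive here.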

Regarding fuzzy logic control, reference [89] proposes an intelligent DC microgrid energy management controller that combines fuzzy logic with a fractional-order proportional-integral-derivative (PID) controller. The source-side converters are controlled by the new intelligent fractional-order PID strategy to extract maximum power from renewable energy sources and improve the power quality supplied to the DC microgrid. Thus, the fuzzy logic control in [89] is not used as a rule-based strategy but is applied in combination with PID control.
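A fractional-order PID controller, as used in [89], replaces the integer-order integral and derivative with operators of order λ and μ. A common discrete approximation is the Grünwald–Letnikov (GL) sum, whose binomial weights follow the recursion w_0 = 1, w_j = w_{j-1} * (1 - (q + 1) / j) for an operator of order q. The Python sketch below implements a fixed-gain PI^λ D^μ law on a stored error history; the fuzzy gain adaptation of [89] is omitted, and the gains, orders, and error samples are hypothetical.

```python
from typing import List, Sequence


def gl_weights(order: float, n: int) -> List[float]:
    """Grünwald–Letnikov binomial weights for a fractional operator of the given order."""
    w = [1.0]
    for j in range(1, n):
        w.append(w[-1] * (1.0 - (order + 1.0) / j))
    return w


def gl_operator(history: Sequence[float], order: float, h: float) -> float:
    """GL approximation at the newest sample (history[-1]).

    order > 0 approximates a derivative, order < 0 an integral; h is the sample time.
    """
    w = gl_weights(order, len(history))
    return h ** (-order) * sum(wj * x for wj, x in zip(w, reversed(history)))


def fopid(history: Sequence[float], kp: float, ki: float, kd: float,
          lam: float, mu: float, h: float) -> float:
    """Fixed-gain PI^lam D^mu control law on the error history."""
    return (kp * history[-1]
            + ki * gl_operator(history, -lam, h)
            + kd * gl_operator(history, mu, h))


errors = [0.0, 0.4, 0.7, 0.9, 1.0]  # hypothetical DC-bus voltage error samples
print(fopid(errors, kp=1.2, ki=0.8, kd=0.05, lam=0.9, mu=0.6, h=0.001))
```

With λ = μ = 1 the law reduces to an ordinary discrete PID (rectangular integral, backward-difference derivative); because the full GL sum grows with the history, practical controllers usually truncate it to a fixed memory length.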

Although AI offers numerous advantages in energy management, it also has challenging drawbacks. These include its heavy reliance on large quantities of high-quality data for effective training and learning, and its opaque decision-making process, which makes its reasoning difficult to explain. Additionally, AI applications in energy management require substantial data collection and processing, which raises privacy and security concerns. To address these challenges, another information technology, DT, is introduced in the next section.

5. DT technology for energy management

DT technology provides a platform for the design, control, and maintenance of energy management systems. It can be regarded as an advanced form of modeling whose core is maintaining consistency between the digital world and the physical world. To this end, AI techniques are widely used in creating digital models to ensure their accuracy [90]. On the other hand, DT provides an economical and efficient platform for AI to address complex high-dimensional problems [45]. Thus, combining AI techniques with DT technology is a popular trend.

The utilization of DT enables the real-time monitoring and collection of diverse data from energy systems [22]. This facilitates the prompt identification of issues and opportunities for optimization, as well as the provision of instantaneous feedback and decision support. Additionally, DT technology possesses strong collaborative capabilities. Through the sharing of DT models and data, the realization of real-time information sharing and collaborative decision-making is attainable, consequently enhancing the efficiency and coordination of energy management as a whole [90,91].

This section categorizes the energy management applications of DT into two distinct parts based on their specific applications. The first part focuses on energy management applied to the OBMG system within vehicles, while the second part addresses energy management applied to the broader transportation grid.

5.1. DT applied in OBMG

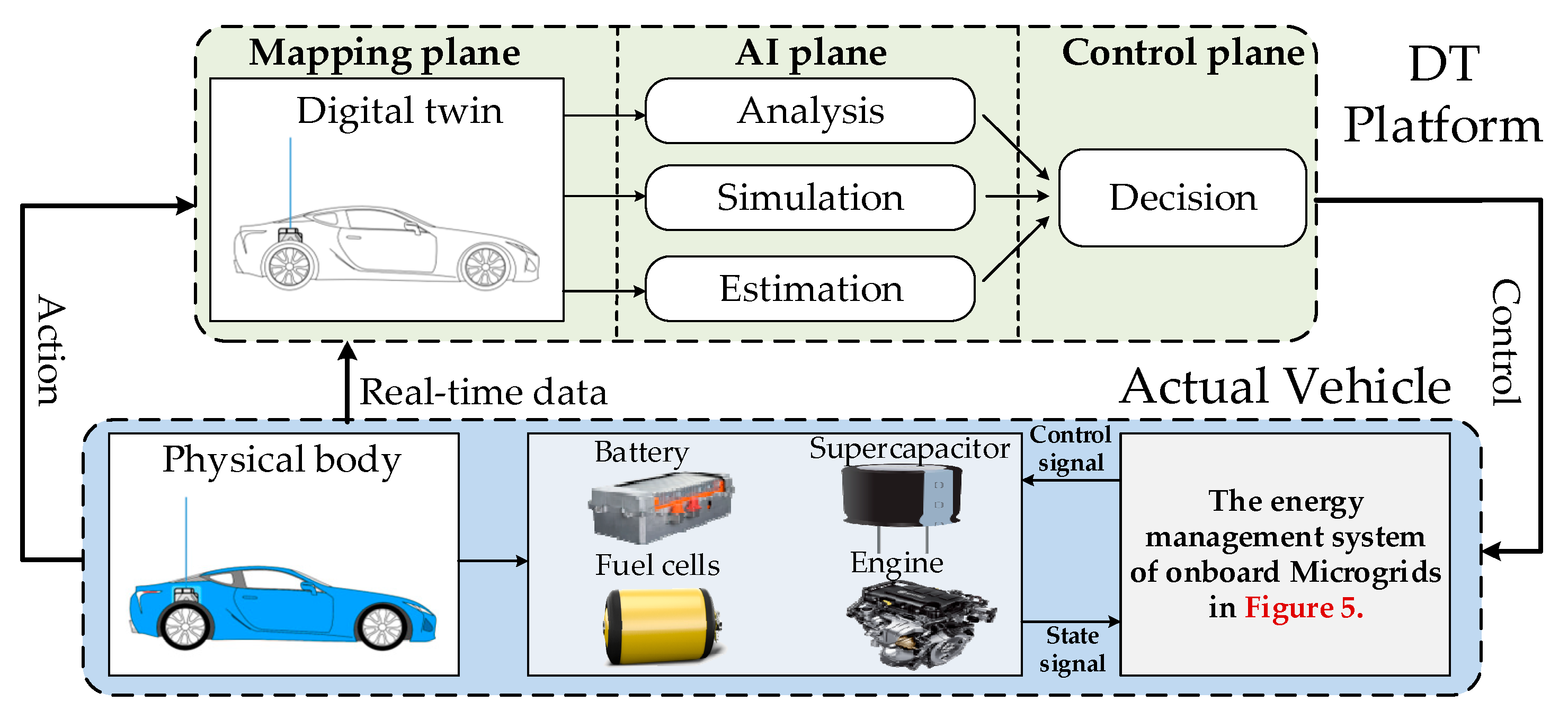

Applying DT technology in an OBMG can increase its power utilization. As shown in Figure 8, DT achieves data fusion by combining data acquired from the physical entity and the digital model. The blue rounded rectangle represents the OBMG inside the actual vehicle, and the green rectangle represents the DT platform built in virtual space. The physical entity transmits real-time data to the DT system, allowing the DT mapping layer to stay synchronized with the data model of the physical entity. Meanwhile, instructions and actions issued by the driver can be transmitted to the DT model via the IoT platform. The mapping layer of the IoT platform reflects the real state of the physical entity, which is analyzed, simulated, and evaluated by the AI layer. The AI layer then makes a comprehensive decision based on the results and transmits it to the control layer. The control layer relays the control signal to the EMS of the physical entity, which performs energy scheduling by managing power sources such as battery packs, supercapacitors, FCs, and engines.

The battery management system (BMS) is a key part of the power system in all-electric vehicles [22,91,92]. The state of health of the battery is related to both its state of charge and its lifespan, and it affects the accuracy of the overall digital model. Reference [91] offers a battery-based DT model structure for vehicles, which enhances the perception capability of the BMS and enables the battery storage unit to operate at peak efficacy. Besides, developing predictive models based on DT technology can improve the safety, reliability, and performance of the BMS. For instance, DT can be used to monitor the battery status and provide additional real-time information to the BMS for informed decision-making [93].
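A battery DT keeps a virtual state synchronized with the physical pack. As an illustration only, the Python sketch below tracks SoC by coulomb counting, periodically blends in an external SoC estimate (a hypothetical fixed correction gain stands in for proper filtering such as a Kalman filter), and exposes a simple low-SoC flag that a BMS could consume. The class `BatteryTwin` and its methods are hypothetical; practical battery DTs generally rely on richer electrochemical or equivalent-circuit models and also track state of health.

```python
from dataclasses import dataclass


@dataclass
class BatteryTwin:
    """Minimal virtual battery state; positive current means discharge."""
    capacity_ah: float
    soc: float

    def step(self, current_a: float, dt_s: float) -> float:
        """Coulomb counting: integrate measured current over one time step."""
        self.soc -= current_a * dt_s / (3600.0 * self.capacity_ah)
        self.soc = min(max(self.soc, 0.0), 1.0)
        return self.soc

    def correct(self, measured_soc: float, gain: float) -> float:
        """Pull the twin toward an external SoC estimate (hypothetical fixed gain)."""
        self.soc += gain * (measured_soc - self.soc)
        return self.soc

    def needs_attention(self, soc_min: float) -> bool:
        """Simple flag the BMS could use for decision-making."""
        return self.soc < soc_min


twin = BatteryTwin(capacity_ah=50.0, soc=0.8)
twin.step(current_a=50.0, dt_s=360.0)   # 50 A for 6 minutes removes 5 Ah, i.e. 10% SoC
twin.correct(measured_soc=0.6, gain=0.5)
print(round(twin.soc, 3), twin.needs_attention(soc_min=0.2))
```

The correction step is what keeps the twin from drifting: pure coulomb counting accumulates sensor bias, so periodic synchronization with measurements is essential.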

Moreover, DT is also used to explore the fuel-saving potential of engines. For example, a high-performance Atkinson cycle gasoline engine was developed by multi-objective evolutionary optimization using DT technology [94]. The approach uses DT data as input and then applies a non-dominated sorting genetic algorithm to identify the optimal input parameter values. To improve energy utilization efficiency, a DT-based regenerative braking approach was proposed [95]. It analyzes the characteristics of each component in the traction-powered AC-DC hybrid grid on a DT platform, on which the recovery and reuse of traction braking energy are realized while accounting for more safety factors than conventional methods.
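The non-dominated sorting step at the heart of NSGA-type algorithms, as used with DT data in [94], ranks candidate designs into Pareto fronts. The Python sketch below is a simple repeated-peeling version (not the faster bookkeeping used in NSGA-II) with all objectives minimized; the two objectives and the sample design points are hypothetical.

```python
from typing import List, Sequence, Tuple


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """a dominates b if it is no worse in every objective and better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_fronts(points: Sequence[Sequence[float]]) -> List[List[int]]:
    """Peel off successive Pareto fronts; returns lists of point indices."""
    remaining = list(range(len(points)))
    fronts: List[List[int]] = []
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(points[j], points[i]) for j in remaining if j != i)]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts


# Hypothetical engine designs: (fuel consumption in g/kWh, NOx emissions in g/kWh).
designs: List[Tuple[float, float]] = [(230.0, 5.0), (240.0, 3.0), (250.0, 4.0), (225.0, 6.0)]
print(non_dominated_fronts(designs))
```

The first front holds the trade-off designs among which an engineer, or a further selection rule, would choose; the GA then breeds preferentially from the lower-ranked fronts.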

Apart from optimizing subsystems in energy distribution, DT also benefits the overall OBMG design and optimization process. Using DT, an embedded control system can be integrated into the initial ship power system design to optimize cost, availability, safety, and emission levels at the top layer [96]. The optimized design tool created using DT is compatible with the actual ship control design bandwidth during operation. An adaptive PSO algorithm was introduced to enhance the optimality and trustworthiness of the DT-based optimization of a parallel HEV [97]. The method improves the algorithm's performance by implementing an adaptive swarm control strategy. Its objective function minimizes fuel consumption while accounting for the battery SoC. The method has been verified to perform better in controlling the final SoC while reducing both fuel consumption and calculation time.

Although the advantages of DT for energy management in OBMGs have been described above, limitations remain. First, DT requires real-time data collection from multiple sensors and devices within the vehicle; if the sensor data are inaccurate or delayed, the resulting energy management control signals may be erroneous or outdated [22]. Second, the energy management system of an OBMG is typically composed of components and subsystems from different suppliers, which may adopt different communication protocols and data formats, making the DT difficult to construct. Lastly, building DT models requires user data, including vehicle performance and driving behavior, which raises security and privacy concerns [98]. In many cases, these concerns are neglected in transportation due to cost considerations. However, they become critical in larger systems such as the transportation grid. The following section elucidates the application of energy management in the context of the transportation grid.

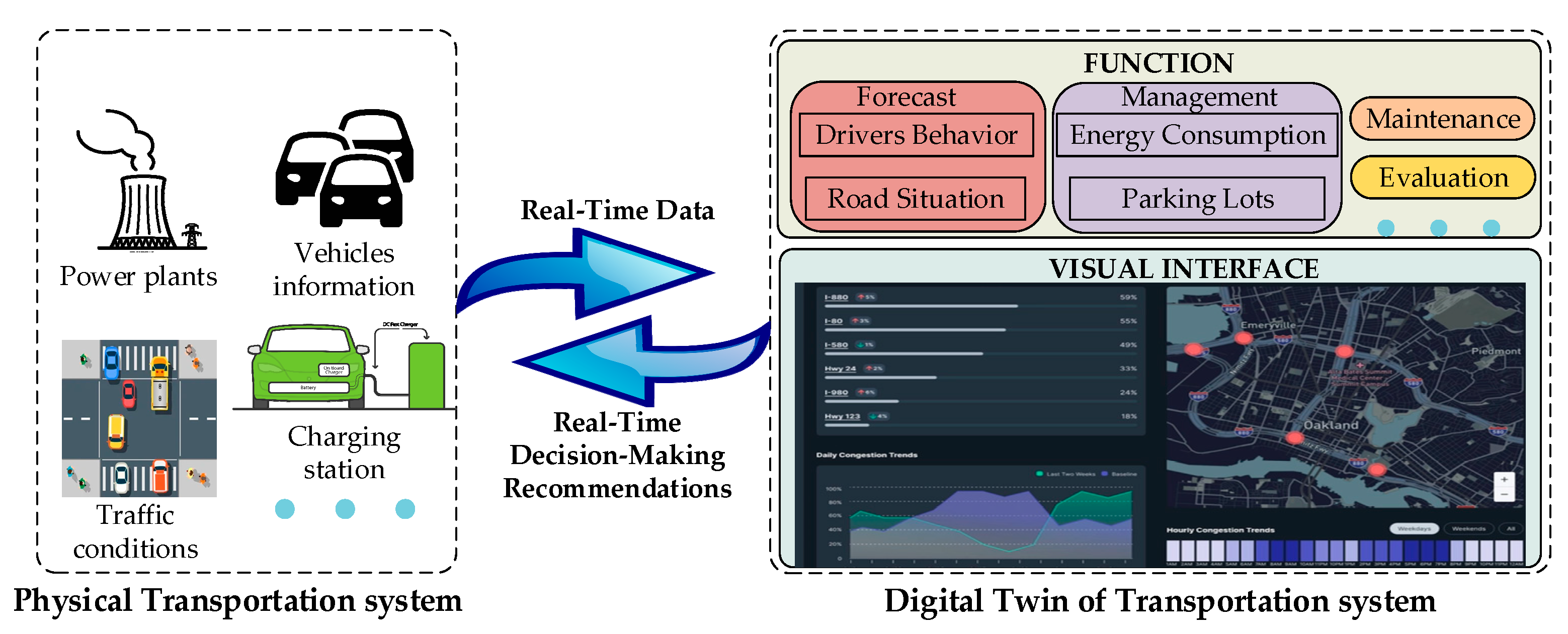

5.2. DT applied in the transportation grid

DT technology can help realize a smart transportation system by effectively managing road network traffic, as shown in Figure 9 [99,100]. Data on vehicles, road traffic conditions, charging station status, and power plants are transmitted to the DT of the transportation system in real time. After the data are processed by ML, DRL, and other AI algorithms, the results can be used to predict driver behavior, manage energy, and evaluate the overall system. In addition, the results are presented to users in real time through a visual interface that aids decision-making.

The concept of the Internet of Vehicles (IoV) is introduced in combination with DT. In a smart transportation system, IoV provides wireless connectivity and computing services, while DT is used to digitize smart transportation for prediction, management, maintenance, and evaluation [101], as shown in Figure 9. Because transportation power systems are time-varying and unpredictable, it is crucial to develop a dynamic DT model for power distribution. For example, an aerial-assisted IoV was established to capture the time-varying energy supply and demand, thereby facilitating unified power scheduling and allocation [102]. This approach improves user satisfaction and energy efficiency simultaneously.

Moreover, DT technology can be used to improve the performance of electric railway power systems. In [103], a model is developed for a future 9 kV electric railway system (ERS) that integrates distributed energy sources and electric vehicle charging infrastructure. The ERS algorithm is modified to accept real data from a physical railway system and simulate a DT-based model. The proposed architecture has the potential to enhance ERS capacity and efficiency.

6. Future trends

There is a significant correlation between DT technology and AI technology. Within the context of the DT, AI technologies can be utilized for data analysis, pattern recognition, and decision support. By incorporating AI technologies, a DT can automatically analyze and interpret data, extract crucial information, and make decisions through deep learning and pattern recognition techniques using vast amounts of real-time data. AI algorithms and models are instrumental in helping DT systems identify patterns, anticipate trends, make intelligent decisions based on intricate data, optimize system performance, and provide intelligent operations and maintenance. Furthermore, DT and AI have the potential to mutually reinforce and enhance one another. DT technology provides a wealth of real data and scenarios that serve as the foundation for AI training and validation. The simulation data generated by DT can be utilized to train AI models to simulate diverse situations and changes within real environments, thus elevating the robustness and generalization capabilities of the models.

The application areas of DT and AI in the electrical industry encompass smart grid and energy management, smart manufacturing and industrial automation, smart appliances, and smart home, as well as data-driven decision support. Among these, the smart grid and energy management domain presents extensive prospects for application. By establishing DT models of power systems and integrating real-time monitoring data with AI algorithms, intelligent monitoring, fault diagnosis, and optimal scheduling of power networks can be achieved, thus enhancing power systems' reliability, efficiency, and sustainability. These applications find widespread use in transportation-based electrical infrastructures. Additionally, data-driven decision support, when combined with DT and AI algorithms, facilitates the analysis and mining of large-scale data, real-time monitoring of electrical equipment status, performance, and efficiency, identification of potential issues, and provision of intelligent decision suggestions. Such capabilities contribute to the optimization of operation and management strategies.

Although DT and AI offer a plethora of promising applications in the electrical industry, several issues still need to be resolved. These include concerns regarding data quality and reliability, model accuracy and precision, privacy and security protection, as well as standards and interoperability.

Data quality and reliability are crucial for the effective utilization of DT and AI. Nevertheless, in the electrical industry, data quality and reliability are often challenged by factors such as sensor noise and data collection errors. Consequently, how to enhance data quality and reliability to mitigate the impact of data uncertainty remains an unresolved issue.

Model accuracy and precision are crucial aspects in the development of DT models and AI algorithms. Due to the inherent complexity of electrical systems, building accurate models and designing precise algorithms becomes increasingly challenging. Therefore, further research and improvement are necessary to enhance the accuracy of models and algorithms in this domain.

Privacy and security protection are of paramount importance in the utilization of DT and AI. Given the substantial amount of data and information collected and processed in these applications, it is crucial to address the protection of sensitive business and personal privacy information within the electrical industry. Ensuring the security and privacy of such information remains a significant concern that requires attention and resolution.

Standards and interoperability are vital for promoting the widespread adoption of DT and AI. To accomplish this, it is essential to develop common standards and specifications, as well as enhance interoperability among diverse systems. The establishment of such measures will facilitate the seamless cross-platform and cross-system integration and application of DT and AI technologies.

7. Conclusions

The paper commences by providing a background on transportation electrification and transportation informatization, subsequently introducing OBMG. The emphasis is particularly on their internal energy management. Subsequently, the paper provides an overview of AI and DT technologies, encompassing their key frameworks and classifications. The paper offers an overview of AI techniques applied in energy management, with a specific emphasis on the implementation of RL. Furthermore, the paper discusses the application of DT technology in energy management, highlighting its utility beyond OBMG to encompass larger transportation grids. In contrast to AI techniques, DT does not offer specific algorithms but rather provides a platform that aligns with the physical object. Lastly, the study explores the future trends of these two emerging information technologies (AI and DT) in the realm of transportation power systems.

The future trend of transportation systems is toward electrification and informatization. AI and DT technologies are advanced forms of information technology and can contribute significantly to the development of transportation and power systems. This study aims to consolidate recent research on the application of these technologies in transportation power systems, providing a robust foundation for future exploration in this field. In the future, AI and DT technologies will be used more widely in transportation power systems, increasing energy efficiency in OBMGs. An overarching theme for future research would be to merge AI technology with DT technology by training AI algorithms on data generated from DT platforms, thereby enhancing the design, control, and maintenance of transportation power systems. This approach may be either data-based or model-based. Although it may be too computationally demanding for real-time control, it can substantially optimize the transportation power system as a whole.

Author Contributions

Conceptualization, Z.H., and Y.G.; investigation and resources, X.X.; writing original draft preparation, X.X.; writing—review and editing, Z.H. and Y.G.; supervision, T.D. and P.W.; funding acquisition, Y.G. and Y.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly funded by the Natural Science Foundation of Jiangxi Province, grant number 20224BAB214058.

Data Availability Statement

The study did not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| BMS | Battery management system |
| DDPG | Deep deterministic policy gradient |
| DNN | Deep neural network |
| DP | Dynamic programming |
| DQN | Deep Q-network |
| DRL | Deep reinforcement learning |
| DT | Digital twin |
| ECMS | Equivalent consumption minimization strategy |
| EMS | Energy management strategies |
| ERS | Electric railway system |
| FC | Fuel cell |
| GA | Genetic algorithms |
| GUI | Graphical user interface |
| HEV | Hybrid electric vehicle |
| IoT | Internet of Things |
| IoV | Internet of vehicles |
| MDP | Markov decision process |
| ML | Machine learning |
| MPC | Model predictive control |
| NN | Neural network |
| OBMG | Onboard microgrid |
| PID | Proportional-integral-derivative |
| PPO | Proximal policy optimization |
| PSO | Particle swarm optimization |
| RL | Reinforcement learning |
| SAC | Soft actor–critic |
| SARSA | State-action-reward-state-action |
| SL | Supervised learning |
| SoC | State of charge |
| TD3 | Twin delayed DDPG |
| UL | Unsupervised learning |

References

- Singh, N. India’s Strategy for Achieving Net Zero. Energies 2022, 15, 5852.

- Deng, R.; Liu, Y.; Chen, W.; Liang, H. A Survey on Electric Buses—Energy Storage, Power Management, and Charging Scheduling. IEEE Trans. Intell. Transp. Syst. 2021, 22, 9–22.

- Hu, X.; Han, J.; Tang, X.; Lin, X. Powertrain Design and Control in Electrified Vehicles: A Critical Review. IEEE Trans. Transp. Electrification 2021, 7, 1990–2009.

- Tarafdar-Hagh, M.; Taghizad-Tavana, K.; Ghanbari-Ghalehjoughi, M.; Nojavan, S.; Jafari, P.; Mohammadpour Shotorbani, A. Optimizing Electric Vehicle Operations for a Smart Environment: A Comprehensive Review. Energies 2023, 16, 4302.

- Chu, S.; Majumdar, A. Opportunities and Challenges for a Sustainable Energy Future. Nature 2012, 488, 294–303.

- Mavlutova, I.; Atstaja, D.; Grasis, J.; Kuzmina, J.; Uvarova, I.; Roga, D. Urban Transportation Concept and Sustainable Urban Mobility in Smart Cities: A Review. Energies 2023, 16, 3585.

- Patnaik, B.; Kumar, S.; Gawre, S. Recent Advances in Converters and Storage Technologies for More Electric Aircrafts: A Review. IEEE J. Miniaturization Air Space Syst. 2022, 3, 78–87.

- Wang, X.; Bazmohammadi, N.; Atkin, J.; Bozhko, S.; Guerrero, J.M. Chance-Constrained Model Predictive Control-Based Operation Management of More-Electric Aircraft Using Energy Storage Systems under Uncertainty. J. Energy Storage 2022, 55, 105629.

- Buticchi, G.; Bozhko, S.; Liserre, M.; Wheeler, P.; Al-Haddad, K. On-Board Microgrids for the More Electric Aircraft—Technology Review. IEEE Trans. Ind. Electron. 2019, 66, 5588–5599.

- Ajanovic, A.; Haas, R.; Schrödl, M. On the Historical Development and Future Prospects of Various Types of Electric Mobility. Energies 2021, 14, 1070.

- Cao, Y.; Yao, M.; Sun, X. An Overview of Modelling and Energy Management Strategies for Hybrid Electric Vehicles. Appl. Sci. 2023, 13, 5947.

- Zhang, H.; Peng, J.; Tan, H.; Dong, H.; Ding, F. A Deep Reinforcement Learning-Based Energy Management Framework With Lagrangian Relaxation for Plug-In Hybrid Electric Vehicle. IEEE Trans. Transp. Electrification 2021, 7, 1146–1160.

- Leite, J.P.S.P.; Voskuijl, M. Optimal Energy Management for Hybrid-Electric Aircraft. Aircr. Eng. Aerosp. Technol. 2020, 92, 851–861.

- Njoya Motapon, S.; Dessaint, L.-A.; Al-Haddad, K. A Comparative Study of Energy Management Schemes for a Fuel-Cell Hybrid Emergency Power System of More-Electric Aircraft. IEEE Trans. Ind. Electron. 2014, 61, 1320–1334.

- Xue, Q.; Zhang, X.; Teng, T.; Zhang, J.; Feng, Z.; Lv, Q. A Comprehensive Review on Classification, Energy Management Strategy, and Control Algorithm for Hybrid Electric Vehicles. Energies 2020, 13, 5355.

- Salmasi, F.R. Control Strategies for Hybrid Electric Vehicles: Evolution, Classification, Comparison, and Future Trends. IEEE Trans. Veh. Technol. 2007, 56, 2393–2404.

- Worku, M.Y.; Hassan, M.A.; Abido, M.A. Real Time-Based under Frequency Control and Energy Management of Microgrids. Electronics 2020, 9, 1487.

- Rasool, M.; Khan, M.A.; Zou, R. A Comprehensive Analysis of Online and Offline Energy Management Approaches for Optimal Performance of Fuel Cell Hybrid Electric Vehicles. Energies 2023, 16, 3325.

- Wang, X.; Atkin, J.; Bazmohammadi, N.; Bozhko, S.; Guerrero, J.M. Optimal Load and Energy Management of Aircraft Microgrids Using Multi-Objective Model Predictive Control. Sustainability 2021, 13, 13907.

- Teng, F.; Ding, Z.; Hu, Z.; Sarikprueck, P. Technical Review on Advanced Approaches for Electric Vehicle Charging Demand Management, Part I: Applications in Electric Power Market and Renewable Energy Integration. IEEE Trans. Ind. Appl. 2020, 56, 5684–5694.

- Xu, L.; Guerrero, J.M.; Lashab, A.; Wei, B.; Bazmohammadi, N.; Vasquez, J.C.; Abusorrah, A. A Review of DC Shipboard Microgrids—Part II: Control Architectures, Stability Analysis, and Protection Schemes. IEEE Trans. Power Electron. 2022, 37, 4105–4120.

- Bhatti, G.; Mohan, H.; Raja Singh, R. Towards the Future of Smart Electric Vehicles: Digital Twin Technology. Renew. Sustain. Energy Rev. 2021, 141, 110801.

- Joshi, A.; Capezza, S.; Alhaji, A.; Chow, M.-Y. Survey on AI and Machine Learning Techniques for Microgrid Energy Management Systems. IEEE/CAA J. Autom. Sin. 2023, 10, 1513–1529.

- Zhang, Y.; Chen, Z.; Li, G.; Liu, Y.; Chen, H.; Cunningham, G.; Early, J. Machine Learning-Based Vehicle Model Construction and Validation—Toward Optimal Control Strategy Development for Plug-In Hybrid Electric Vehicles. IEEE Trans. Transp. Electrification 2022, 8, 1590–1603.

- Gan, J.; Li, S.; Wei, C.; Deng, L.; Tang, X. Intelligent Learning Algorithm and Intelligent Transportation-Based Energy Management Strategies for Hybrid Electric Vehicles: A Review. IEEE Trans. Intell. Transp. Syst. 2023, 1–17.

- Feiyan, Q.; Weimin, L. A Review of Machine Learning on Energy Management Strategy for Hybrid Electric Vehicles. In Proceedings of the 2021 6th Asia Conference on Power and Electrical Engineering (ACPEE); IEEE: Chongqing, China, April 2021; pp. 315–319.

- Zhao, S.; Blaabjerg, F.; Wang, H. An Overview of Artificial Intelligence Applications for Power Electronics. IEEE Trans. Power Electron. 2021, 36, 4633–4658.

- Park, H.-A.; Son, W.; Jo, H.-C.; Kim, J.; Kim, S. Digital Twin for Operation of Microgrid: Optimal Scheduling in Virtual Space of Digital Twin. Energies 2020, 13, 5504.

- Biswas, A.; Emadi, A. Energy Management Systems for Electrified Powertrains: State-of-the-Art Review and Future Trends. IEEE Trans. Veh. Technol. 2019, 68, 6453–6467.

- Ali, A.M.; Moulik, B. On the Role of Intelligent Power Management Strategies for Electrified Vehicles: A Review of Predictive and Cognitive Methods. IEEE Trans. Transp. Electrification 2022, 8, 368–383.

- Celsi, L.R.; Valli, A. Applied Control and Artificial Intelligence for Energy Management: An Overview of Trends in EV Charging, Cyber-Physical Security and Predictive Maintenance. 2023.

- Ozay, M.; Esnaola, I.; Yarman Vural, F.T.; Kulkarni, S.R.; Poor, H.V. Machine Learning Methods for Attack Detection in the Smart Grid. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 1773–1786.

- Singh, S.; Ramkumar, K.R.; Kukkar, A. Machine Learning Techniques and Implementation of Different ML Algorithms. In Proceedings of the 2021 2nd Global Conference for Advancement in Technology (GCAT); October 2021; pp. 1–6.

- Ravi Kumar, R.; Babu Reddy, M.; Praveen, P. A Review of Feature Subset Selection on Unsupervised Learning. In Proceedings of the 2017 Third International Conference on Advances in Electrical, Electronics, Information, Communication and Bio-Informatics (AEEICB); IEEE: Chennai, India, February 2017; pp. 163–167.

- Kiumarsi, B.; Vamvoudakis, K.G.; Modares, H.; Lewis, F.L. Optimal and Autonomous Control Using Reinforcement Learning: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2042–2062.

- Raković, S.V. The Minkowski-Bellman Equation; 2019.

- Nguyen, S.; Abdelhakim, M.; Kerestes, R. Survey Paper of Digital Twins and Their Integration into Electric Power Systems. In Proceedings of the 2021 IEEE Power & Energy Society General Meeting (PESGM); IEEE: Washington, DC, USA, 26 July 2021; pp. 1–5.

- Dimogiannis, K.; Sankowski, A.; Holc, C.; Newton, G.; Walsh, D.A.; O’Shea, J.; Khlobystov, A.; Sankowski, A. Understanding the Mg Cycling Mechanism on a MgTFSI-Glyme Electrolyte. ECS Meet. Abstr. 2022, MA2022-01, 574.

- Shafto, M.; Conroy, M.; Doyle, R.; Glaessgen, E.; Kemp, C.; LeMoigne, J.; Wang, L. Modeling, Simulation, Information Technology and Processing Roadmap; 2010.

- Ríos, J.; Morate, F.M.; Oliva, M.; Hernández, J.C. Framework to Support the Aircraft Digital Counterpart Concept with an Industrial Design View. Int. J. Agile Syst. Manag. 2016, 9, 212.

- Grieves, M. Digital Twin: Manufacturing Excellence through Virtual Factory Replication. 2015.

- Park, H.-A.; Son, W.; Jo, H.-C.; Kim, J.; Kim, S. Digital Twin for Operation of Microgrid: Optimal Scheduling in Virtual Space of Digital Twin. Energies 2020, 13, 5504.

- Zhang, G.; Huo, C.; Zheng, L.; Li, X. An Architecture Based on Digital Twins for Smart Power Distribution System. In Proceedings of the 2020 3rd International Conference on Artificial Intelligence and Big Data (ICAIBD); IEEE: Chengdu, China, May 2020; pp. 29–33.

- Gomozov, O.; Trovao, J.P.F.; Kestelyn, X.; Dubois, M.R. Adaptive Energy Management System Based on a Real-Time Model Predictive Control With Nonuniform Sampling Time for Multiple Energy Storage Electric Vehicle. IEEE Trans. Veh. Technol. 2016, 66, 5520–5530.

- Li, J.; Zhou, Q.; Williams, H.; Xu, H.; Du, C. Cyber-Physical Data Fusion in Surrogate-Assisted Strength Pareto Evolutionary Algorithm for PHEV Energy Management Optimization. IEEE Trans. Ind. Inform. 2022, 18, 4107–4117.

- Garcia-Triviño, P.; Fernández-Ramírez, L.; García-Vázquez, C.; Jurado, F. Energy Management System of Fuel-Cell-Battery Hybrid Tramway. IEEE Trans. Ind. Electron. 2010, 57, 4013–4023.

- Li, C.-Y.; Liu, G.-P. Optimal Fuzzy Power Control and Management of Fuel Cell/Battery Hybrid Vehicles. J. Power Sources 2009, 192, 525–533.

- Pisu, P.; Koprubasi, K.; Rizzoni, G. Energy Management and Drivability Control Problems for Hybrid Electric Vehicles. In Proceedings of the 44th IEEE Conference on Decision and Control; IEEE: Seville, Spain, 2005; pp. 1824–1830.

- García, P.; Torreglosa, J.; Fernández, L.; Jurado, F. Viability Study of a FC-Battery-SC Tramway Controlled by Equivalent Consumption Minimization Strategy. Int. J. Hydrog. Energy 2012, 37, 9368–9382.

- Vural, B.; Boynuegri, A.; Nakir, I.; Erdinc, O.; Balikci, A.; Uzunoglu, M.; Gorgun, H.; Dusmez, S. Fuel Cell and Ultra-Capacitor Hybridization: A Prototype Test Bench Based Analysis of Different Energy Management Strategies for Vehicular Applications. Int. J. Hydrog. Energy 2010, 35, 11161–11171.

- Li, G.; Görges, D. Fuel-Efficient Gear Shift and Power Split Strategy for Parallel HEVs Based on Heuristic Dynamic Programming and Neural Networks. IEEE Trans. Veh. Technol. 2019, 68, 9519–9528.

- Xi, Z.; Wu, D.; Ni, W.; Ma, X. Energy-Optimized Trajectory Planning for Solar-Powered Aircraft in a Wind Field Using Reinforcement Learning. IEEE Access 2022, 10, 87715–87732.

- Kong, H.; Fang, Y.; Fan, L.; Wang, H.; Zhang, X.; Hu, J. A Novel Torque Distribution Strategy Based on Deep Recurrent Neural Network for Parallel Hybrid Electric Vehicle. IEEE Access 2019, 7, 65174–65185.

- Mosayebi, M.; Gheisarnejad, M.; Farsizadeh, H.; Andresen, B.; Khooban, M.H. Smart Extreme Fast Portable Charger for Electric Vehicles-Based Artificial Intelligence. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 586–590.

- Anselma, P.G.; Huo, Y.; Roeleveld, J.; Belingardi, G.; Emadi, A. Integration of On-Line Control in Optimal Design of Multimode Power-Split Hybrid Electric Vehicle Powertrains. IEEE Trans. Veh. Technol. 2019, 68, 3436–3445.

- Alfakih, T.; Hassan, M.M.; Gumaei, A.; Savaglio, C.; Fortino, G. Task Offloading and Resource Allocation for Mobile Edge Computing by Deep Reinforcement Learning Based on SARSA. IEEE Access 2020, 8, 54074–54084.

- Zhu, J.; Song, Y.; Jiang, D.; Song, H. A New Deep-Q-Learning-Based Transmission Scheduling Mechanism for the Cognitive Internet of Things. IEEE Internet Things J. 2018, 5, 2375–2385.

- Wang, Y.; Liu, H.; Zheng, W.; Xia, Y.; Li, Y.; Chen, P.; Guo, K.; Xie, H. Multi-Objective Workflow Scheduling With Deep-Q-Network-Based Multi-Agent Reinforcement Learning. IEEE Access 2019, 7, 39974–39982.

- Bøhn, E.; Coates, E.M.; Moe, S.; Johansen, T.A. Deep Reinforcement Learning Attitude Control of Fixed-Wing UAVs Using Proximal Policy Optimization. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS); June 2019; pp. 523–533.

- Wu, J.; Wei, Z.; Li, W.; Wang, Y.; Li, Y.; Sauer, D.U. Battery Thermal- and Health-Constrained Energy Management for Hybrid Electric Bus Based on Soft Actor-Critic DRL Algorithm. IEEE Trans. Ind. Inform. 2021, 17, 3751–3761.

- Qiu, C.; Hu, Y.; Chen, Y.; Zeng, B. Deep Deterministic Policy Gradient (DDPG)-Based Energy Harvesting Wireless Communications. IEEE Internet Things J. 2019, 6, 8577–8588.

- Khalid, J.; Ramli, M.A.M.; Khan, M.S.; Hidayat, T. Efficient Load Frequency Control of Renewable Integrated Power System: A Twin Delayed DDPG-Based Deep Reinforcement Learning Approach. IEEE Access 2022, 10, 51561–51574.

- Qiu, D.; Wang, Y.; Hua, W.; Strbac, G. Reinforcement Learning for Electric Vehicle Applications in Power Systems: A Critical Review. Renew. Sustain. Energy Rev. 2023, 173, 113052.

- Xu, B.; Hu, X.; Tang, X.; Lin, X.; Li, H.; Rathod, D.; Filipi, Z. Ensemble Reinforcement Learning-Based Supervisory Control of Hybrid Electric Vehicle for Fuel Economy Improvement. IEEE Trans. Transp. Electrification 2020, 6, 717–727.