Submitted: 26 July 2023

Posted: 27 July 2023


Abstract

Keywords:

1. Introduction

2. Background and Related Works

3. The Proposed Method

- i. Draw a random value between 0 and 1 for the chromosome;

- ii. If this value is greater than the mutation threshold (a value between 0 and 1), proceed to the next step and mutate; otherwise, skip mutation;

- iii. Choose a random number that indexes one of the chromosome's genes, and apply a numerical mutation to that gene.
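The three mutation steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes genes are floating-point values (for example MLP weights and biases), and it assumes the "numerical mutation" adds a uniform perturbation in `[-step, step]`; the function name `mutate` and the `step` parameter are hypothetical.

```python
import random


def mutate(chromosome, mutation_threshold, step=0.1, rng=random):
    """Mutate at most one gene of `chromosome`, following steps i-iii.

    Assumptions (not from the paper): genes are floats, and the numerical
    mutation adds a uniform perturbation drawn from [-step, step].
    """
    child = list(chromosome)  # copy so the parent chromosome is not modified
    # Step i: draw a random value between 0 and 1 for this chromosome.
    r = rng.random()
    # Step ii: mutate only if the draw exceeds the threshold; otherwise skip.
    if r <= mutation_threshold:
        return child
    # Step iii: pick a random gene index and perturb that gene numerically.
    idx = rng.randrange(len(child))
    child[idx] += rng.uniform(-step, step)
    return child
```

Note that under this rule a higher threshold means fewer mutations: with a threshold of 0.9, roughly 10% of chromosomes are mutated in each pass, so the threshold behaves like one minus the usual mutation probability.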

4. Implementation and Experiments

- i.

- Defining the initial parameters such as population, number of iterations, mutation rate, and crossover rate of GA,

- ii.

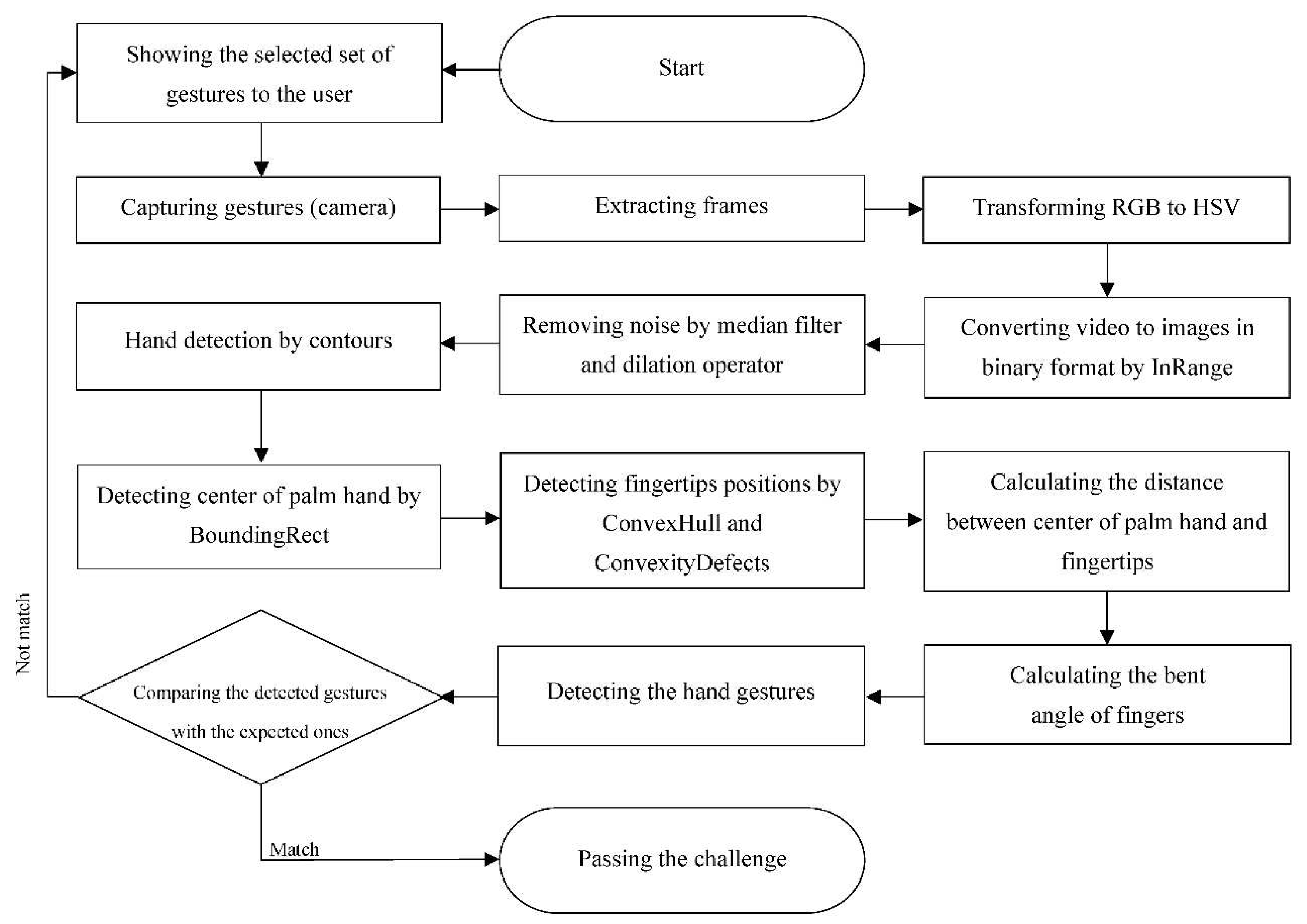

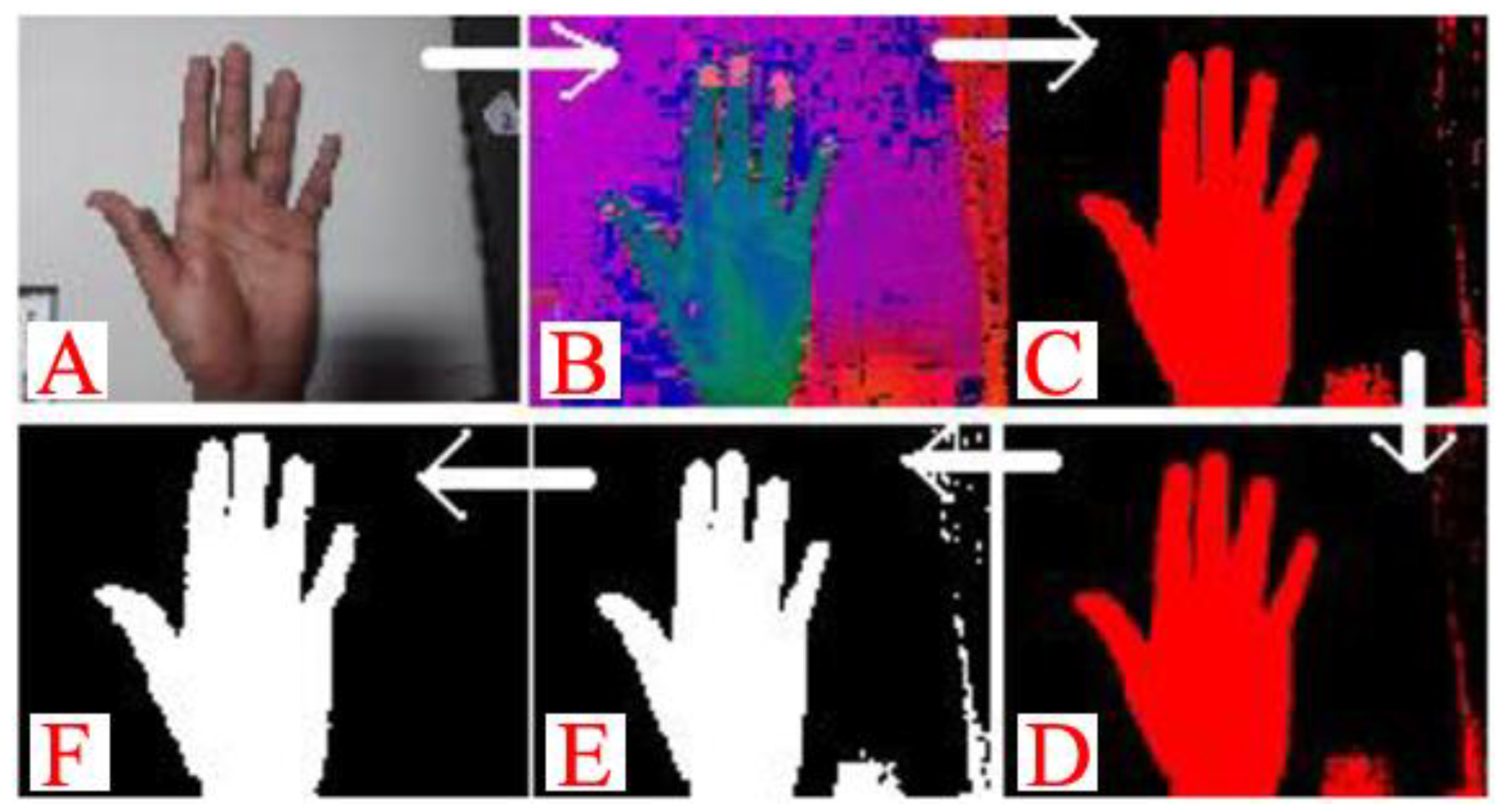

- Preprocessing and normalizing part of input data to reduce learning errors and enhance performance in hand movement detection,

- iii.

- Dividing the dataset into training and evaluation parts,

- iv.

- Producing chromosomes for MLP and applying mutation and crossover on the chromosomes to find optimum weight and bias and reduce the error rate,

- v.

- Evaluating the method according to the metrics in section 4.2.
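The implementation steps i to v listed above can be sketched end to end in plain Python. This is a hedged, minimal sketch under several assumptions that the text does not specify: min-max normalization, a one-hidden-layer MLP with `tanh` hidden units and argmax output, a flat gene layout of weights followed by a bias per neuron, single-point crossover, two elite members, parents drawn from the better half of the population, and classification error rate as the fitness. All function names, default values, and the toy data are hypothetical.

```python
import math
import random


def normalize(X):
    """Step ii: min-max scale every feature to [0, 1]."""
    cols = list(zip(*X))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [[(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
            for row in X]


def split(X, y, train_frac, rng):
    """Step iii: shuffle, then divide into training and evaluation parts."""
    idx = list(range(len(X)))
    rng.shuffle(idx)
    cut = int(len(idx) * train_frac)

    def take(ids):
        return [X[i] for i in ids], [y[i] for i in ids]

    return take(idx[:cut]), take(idx[cut:])


def predict(chrom, x, n_in, n_hid, n_out):
    """Decode a flat chromosome as a one-hidden-layer MLP and return a class index.

    Assumed gene layout: for each hidden neuron, n_in weights then a bias;
    for each output neuron, n_hid weights then a bias.
    """
    k, hidden = 0, []
    for _ in range(n_hid):
        s = sum(w * v for w, v in zip(chrom[k:k + n_in], x)) + chrom[k + n_in]
        hidden.append(math.tanh(s))
        k += n_in + 1
    scores = []
    for _ in range(n_out):
        scores.append(sum(w * h for w, h in zip(chrom[k:k + n_hid], hidden)) + chrom[k + n_hid])
        k += n_hid + 1
    return max(range(n_out), key=lambda j: scores[j])


def error_rate(chrom, X, y, shape):
    """Fraction of misclassified samples (the fitness to minimize)."""
    return sum(predict(chrom, x, *shape) != t for x, t in zip(X, y)) / len(X)


def mutate(chrom, threshold, rng, step=0.5):
    """Section 3 rule: perturb one random gene only if a random draw exceeds the threshold."""
    child = list(chrom)
    if rng.random() > threshold:
        child[rng.randrange(len(child))] += rng.uniform(-step, step)
    return child


def crossover(a, b, rate, rng):
    """Single-point crossover; the operator itself is an assumption."""
    if rng.random() >= rate:
        return list(a)
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def train_ga(X, y, shape, population=30, iterations=40,
             mutation_threshold=0.7, crossover_rate=0.8, elite=2, seed=0):
    """Steps i and iv: evolve MLP chromosomes to minimize the training error rate."""
    rng = random.Random(seed)
    n_in, n_hid, n_out = shape
    length = n_hid * (n_in + 1) + n_out * (n_hid + 1)
    pop = [[rng.uniform(-1, 1) for _ in range(length)] for _ in range(population)]
    for _ in range(iterations):
        pop.sort(key=lambda c: error_rate(c, X, y, shape))
        parents = pop[:max(2, population // 2)]
        children = [list(c) for c in pop[:elite]]  # elites survive unchanged
        while len(children) < population:
            a, b = rng.sample(parents, 2)
            child = crossover(a, b, crossover_rate, rng)
            children.append(mutate(child, mutation_threshold, rng))
        pop = children
    return min(pop, key=lambda c: error_rate(c, X, y, shape))


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical toy data standing in for hand-pose features: 2 features, 2 classes.
    X = [[rng.gauss(3 * c, 1), rng.gauss(3 * c, 1)] for c in (0, 1) for _ in range(40)]
    y = [c for c in (0, 1) for _ in range(40)]
    (X_tr, y_tr), (X_ev, y_ev) = split(normalize(X), y, 0.7, rng)
    best = train_ga(X_tr, y_tr, shape=(2, 4, 2))
    # Step v: report the held-out error rate (the paper's own metrics are in Section 4.2).
    print("evaluation error rate:", error_rate(best, X_ev, y_ev, (2, 4, 2)))
```

Because the GA never computes gradients, the MLP only needs a forward pass; the chromosome is simply the flattened weight and bias vector, and the fitness is whatever error measure the forward pass yields on the training part.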

4.1. Dataset

4.2. Evaluation Metrics

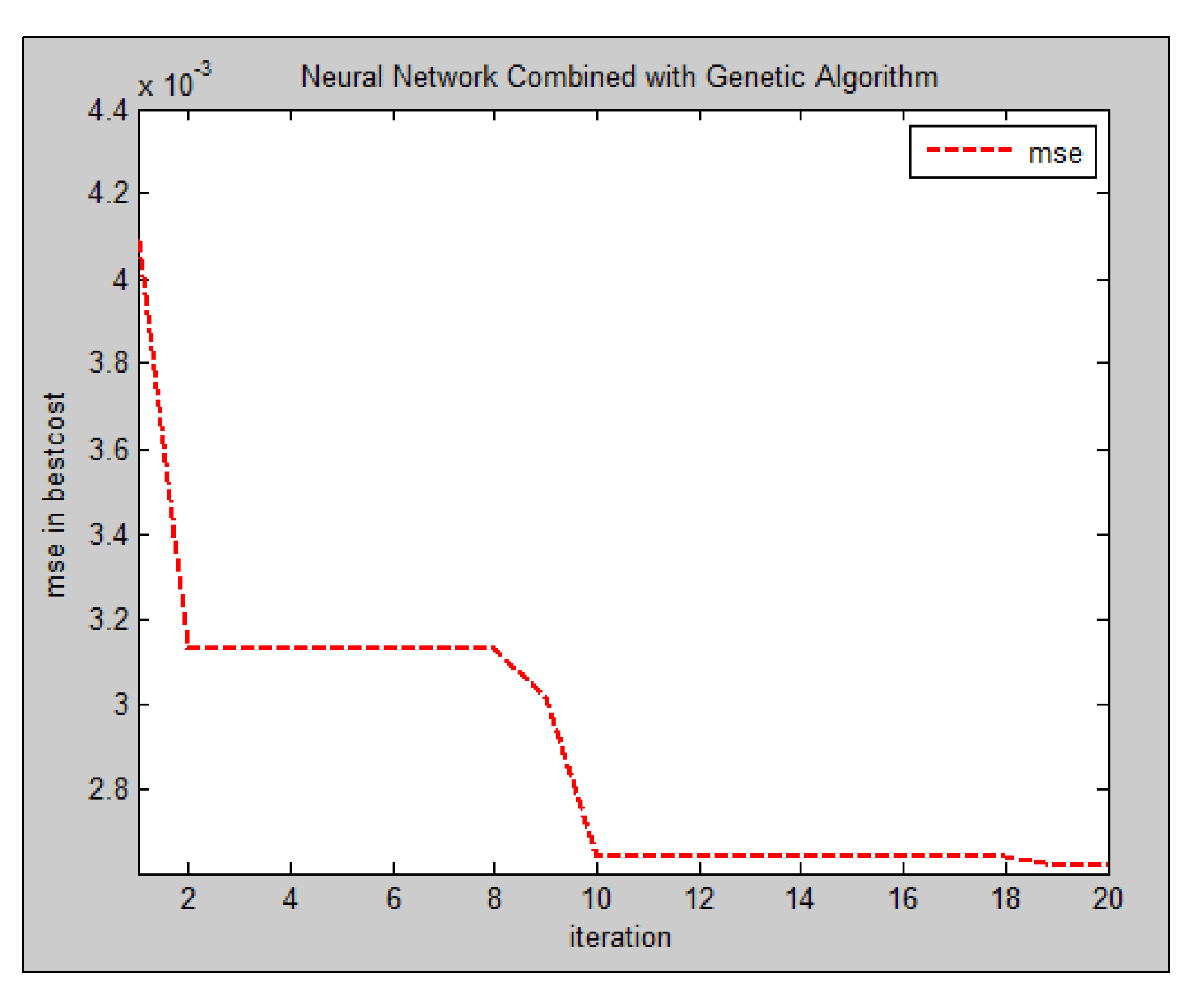

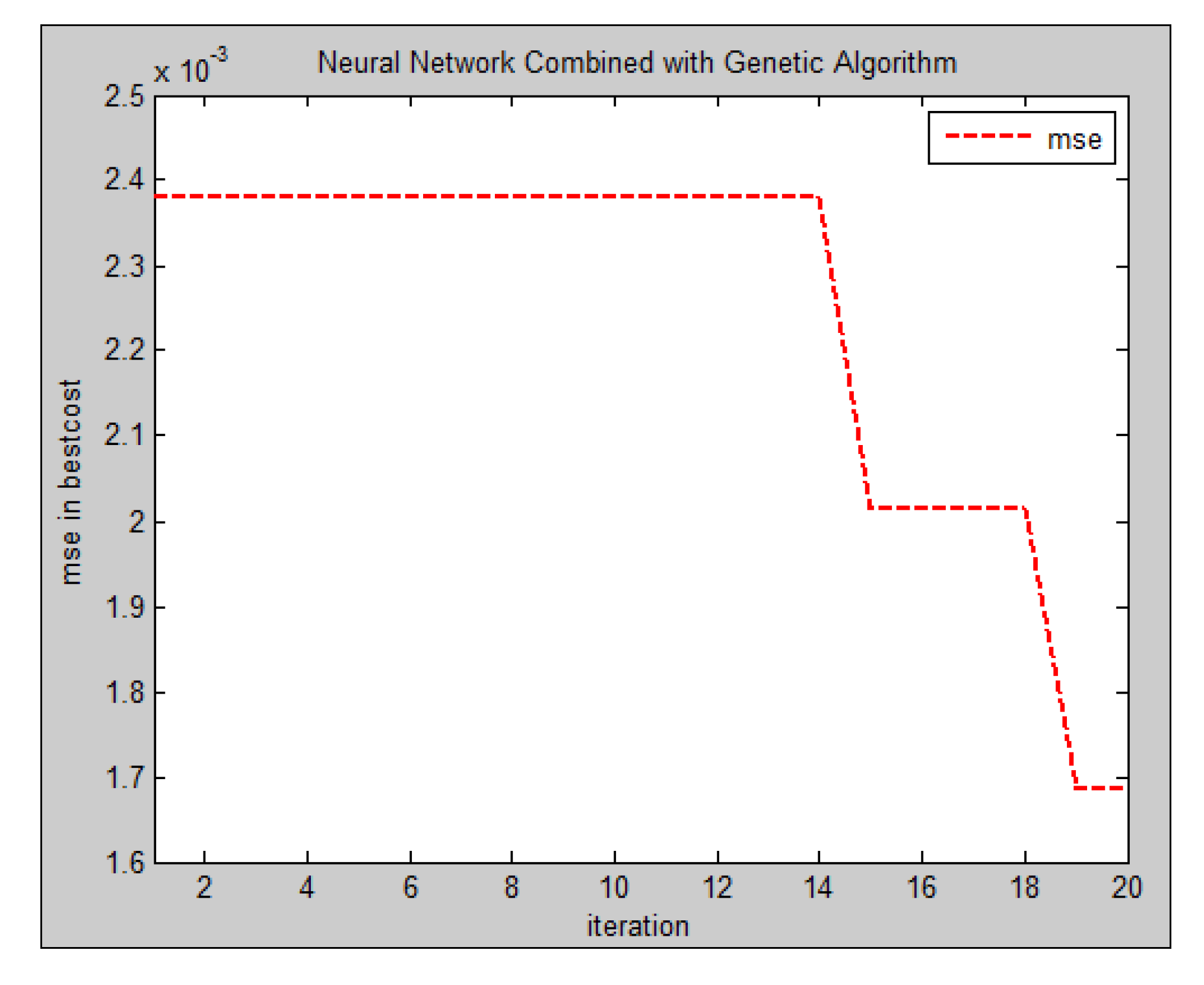

4.3. Error Analysis by Increasing Population and Iterations

- I. Increasing the number of chromosomes increases the number of candidate neural networks available for prediction, which results in more accurate classification;

- II. Increasing the population produces a larger and more diverse set of candidate solutions in the GA, and accordingly increases the chance of finding an accurate final answer;

- III. Increasing the number of chromosomes lets the GA cover more of the problem's search space, which normally helps it reach optimal answers and reduce error;

- IV. Increasing the population increases the number of elite members and the number of mutation and crossover operations performed in each generation. This increases the chance of obtaining a more accurate MLP for hand pose prediction.
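The effect of population size described in points I to IV can be checked empirically with a small sweep. The sketch below is an illustration under stated assumptions, not the experiment behind the figures: it uses a toy error (squared distance to a hypothetical target vector) instead of the MLP error, single-point crossover, two elite members, and the Section 3 mutation rule; `run_ga` and `sweep` are hypothetical names.

```python
import random
import statistics


def run_ga(population, iterations, n_genes=10, seed=0,
           mutation_threshold=0.7, crossover_rate=0.8, elite=2):
    """Tiny real-valued GA returning the best error it found.

    The error (squared distance to a fixed, hypothetical target vector)
    is a toy stand-in for the MLP error used in the paper.
    """
    rng = random.Random(seed)
    target = [0.5] * n_genes

    def error(c):
        return sum((g - t) ** 2 for g, t in zip(c, target))

    pop = [[rng.uniform(-1, 1) for _ in range(n_genes)] for _ in range(population)]
    for _ in range(iterations):
        pop.sort(key=error)
        parents = pop[:max(2, population // 2)]
        children = [list(c) for c in pop[:elite]]  # elite members kept as-is
        while len(children) < population:
            a, b = rng.sample(parents, 2)
            child = list(a)
            if rng.random() < crossover_rate:  # single-point crossover (assumed)
                cut = rng.randrange(1, n_genes)
                child = a[:cut] + b[cut:]
            if rng.random() > mutation_threshold:  # mutation rule from Section 3
                child[rng.randrange(n_genes)] += rng.uniform(-0.5, 0.5)
            children.append(child)
        pop = children
    return min(error(c) for c in pop)


def sweep(populations, iterations, seeds=range(5)):
    """Mean best error over several seeds for each population size."""
    return {p: statistics.mean(run_ga(p, iterations, seed=s) for s in seeds)
            for p in populations}


if __name__ == "__main__":
    for size, err in sweep([4, 16, 64], iterations=30).items():
        print(f"population={size:3d}  mean best error={err:.4f}")
```

Because elite members are copied unchanged, the best error can never rise from one generation to the next, which is the mechanism behind point IV. Averaging over seeds matters because single GA runs are noisy, and a larger population also costs more fitness evaluations (population times iterations), so a fair comparison may want to fix that budget.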

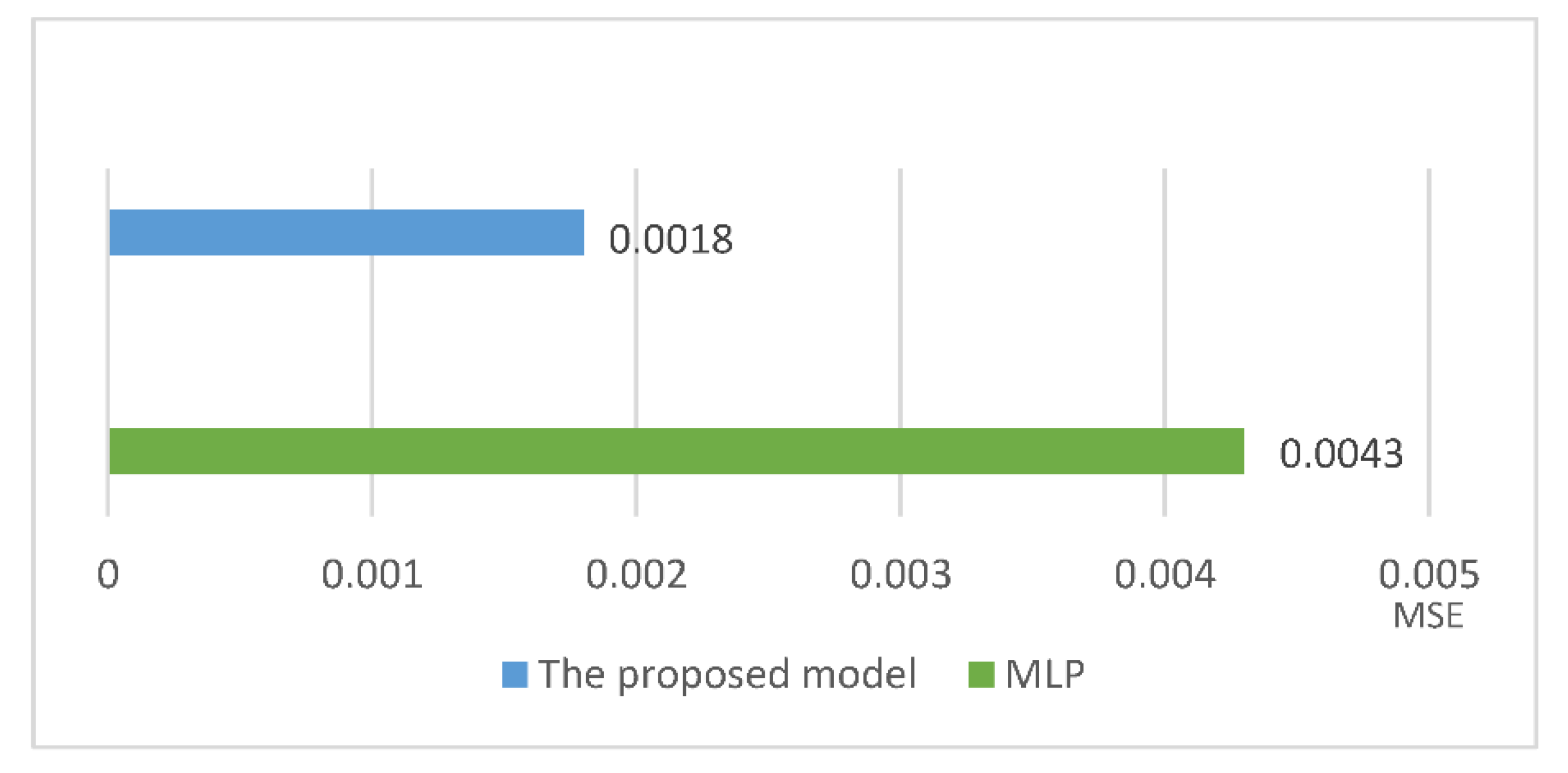

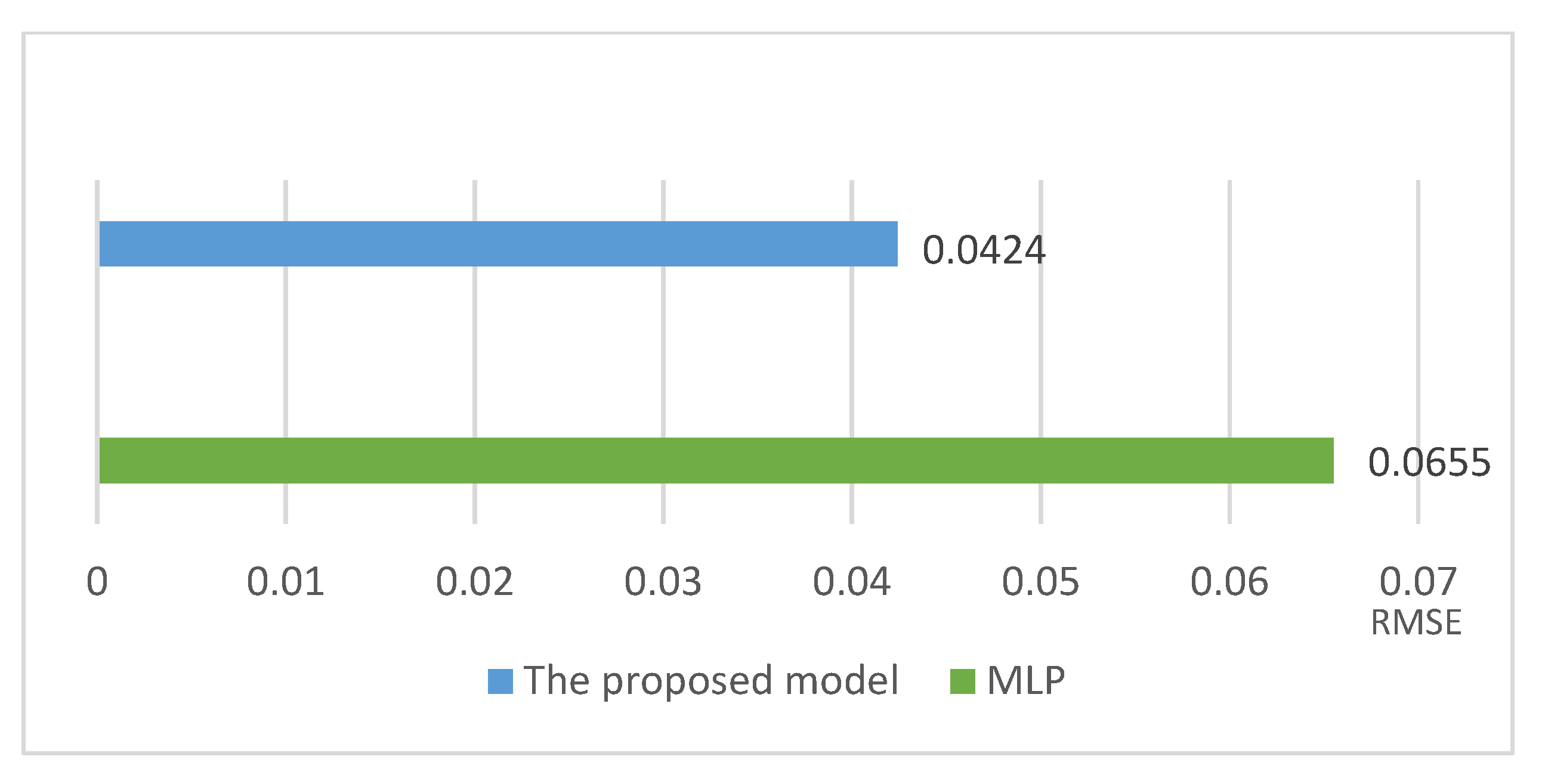

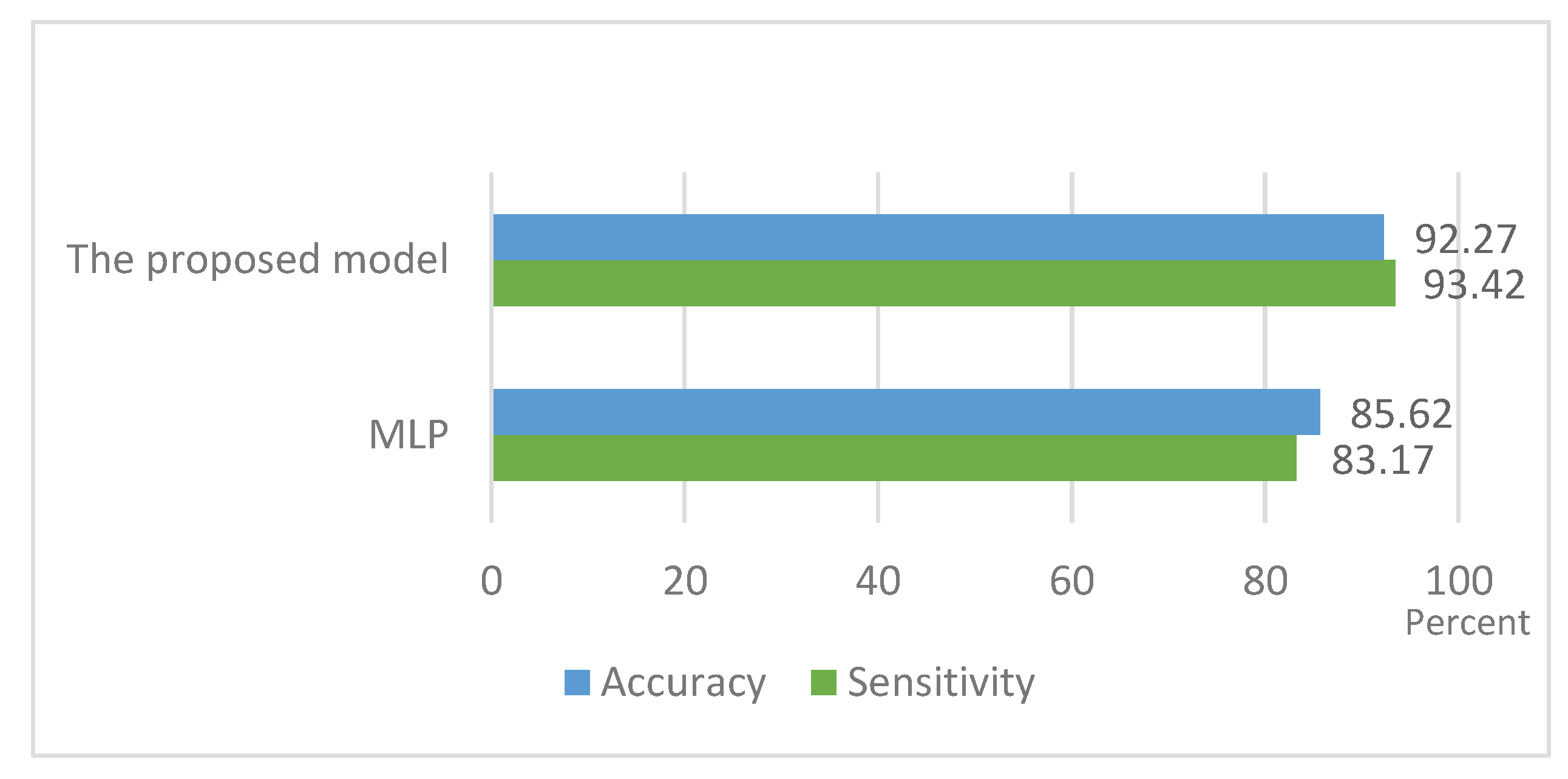

5. Comparison and Discussion

6. Conclusion


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).