Submitted: 26 July 2023

Posted: 31 July 2023

Abstract

Keywords:

1. Introduction

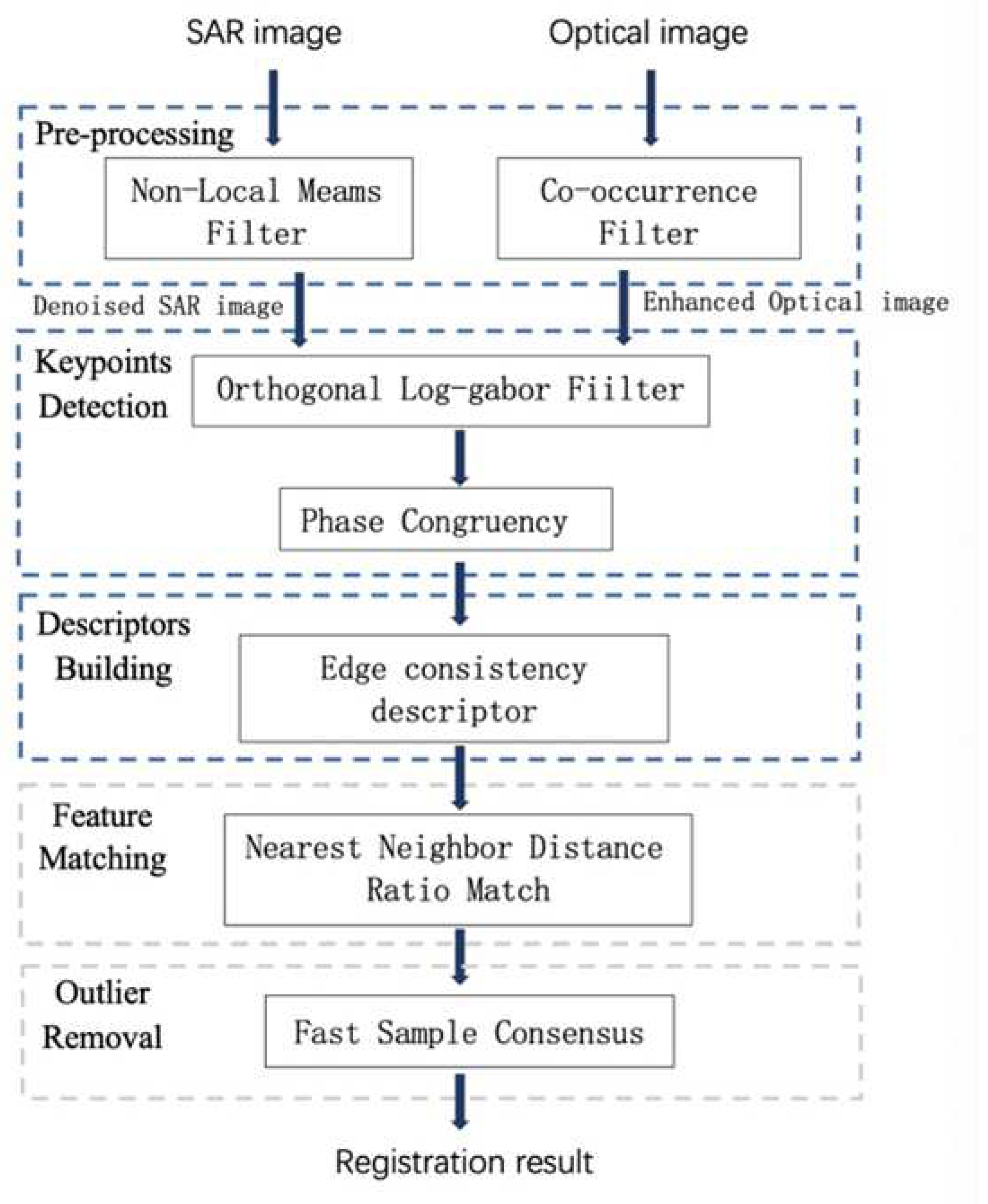

- 1.

- To capture the similarity of geometric structures and morphological features, phase congruency is used to extract image edges, and the differences between multi-source remote sensing images are suppressed by eliminating noise and weakening texture.

- 2.

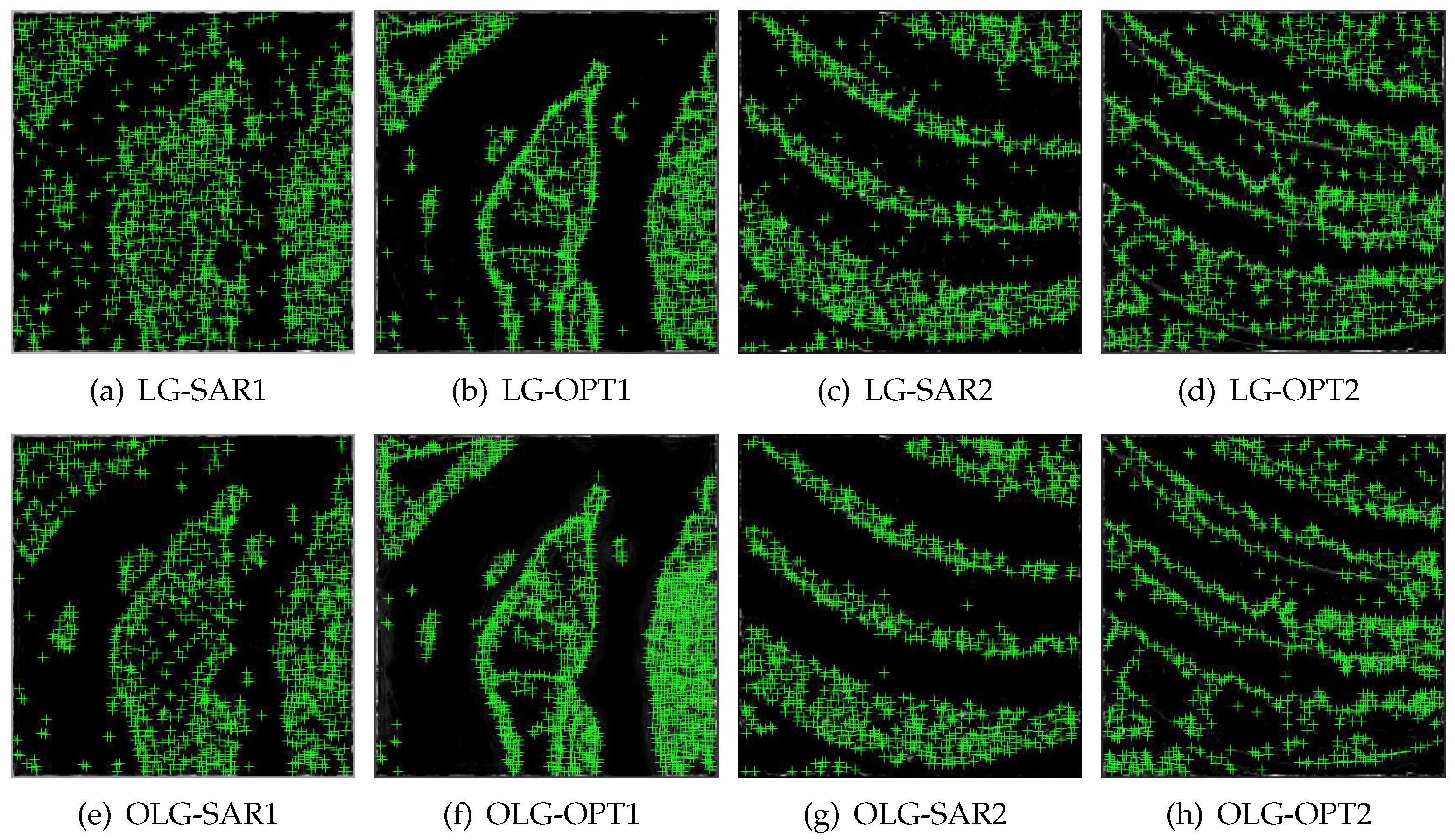

- To reduce computing costs, phase congruency models are constructed using Log-Gabor filtering in orthogonal directions.

- 3.

- To increase the dependability of descriptor features with richer description information, sector descriptors are built based on edge consistency features.
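
The second contribution above (phase congruency from Log-Gabor filtering in orthogonal directions) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the two orientations (0 and π/2), the filter parameters (`n_scales`, `min_wavelength`, `mult`, `sigma_on_f`, `sigma_theta`) and the function names are assumptions chosen for illustration, and the measure is the basic local-energy ratio without the noise compensation or frequency-spread weighting used in full phase congruency models.

```python
import numpy as np


def log_gabor_bank(shape, n_scales=4, min_wavelength=3.0, mult=2.1,
                   sigma_on_f=0.55, orientations=(0.0, np.pi / 2),
                   sigma_theta=np.pi / 4):
    """Frequency-domain Log-Gabor filters, grouped as bank[orientation][scale].

    All parameter values are illustrative assumptions, not values from the paper.
    """
    rows, cols = shape
    # Normalised frequency grids (cycles per pixel) with DC at index [0, 0].
    fy = np.fft.fftfreq(rows)[:, None]
    fx = np.fft.fftfreq(cols)[None, :]
    radius = np.hypot(fx, fy)
    radius[0, 0] = 1.0  # placeholder to avoid log(0); DC is zeroed below
    theta = np.arctan2(-fy, fx)

    bank = []
    for angle in orientations:
        # Angular distance to the filter orientation, wrapped to [-pi, pi].
        dtheta = np.arctan2(np.sin(theta - angle), np.cos(theta - angle))
        spread = np.exp(-dtheta ** 2 / (2 * sigma_theta ** 2))
        per_scale = []
        for s in range(n_scales):
            f0 = 1.0 / (min_wavelength * mult ** s)  # centre frequency
            radial = np.exp(-np.log(radius / f0) ** 2
                            / (2 * np.log(sigma_on_f) ** 2))
            radial[0, 0] = 0.0  # a Log-Gabor filter has no DC component
            per_scale.append(radial * spread)
        bank.append(per_scale)
    return bank


def phase_congruency(image, bank, eps=1e-4):
    """Simplified phase congruency: local energy over summed amplitudes."""
    spectrum = np.fft.fft2(image)
    energy = np.zeros(image.shape)
    amplitude = np.zeros(image.shape)
    for per_scale in bank:
        # Complex responses: real part is even-symmetric, imaginary part odd.
        responses = [np.fft.ifft2(spectrum * f) for f in per_scale]
        energy += np.abs(np.sum(responses, axis=0))
        amplitude += np.sum([np.abs(r) for r in responses], axis=0)
    return energy / (amplitude + eps)
```

Calling `phase_congruency(img, log_gabor_bank(img.shape))` on a 2-D float array returns a map in [0, 1) that is high where the filter responses agree in phase across scales, as at step edges. Using two orientations instead of a denser set halves or better the number of inverse FFTs, which is the kind of cost saving the contribution refers to.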

2. Materials and Methods

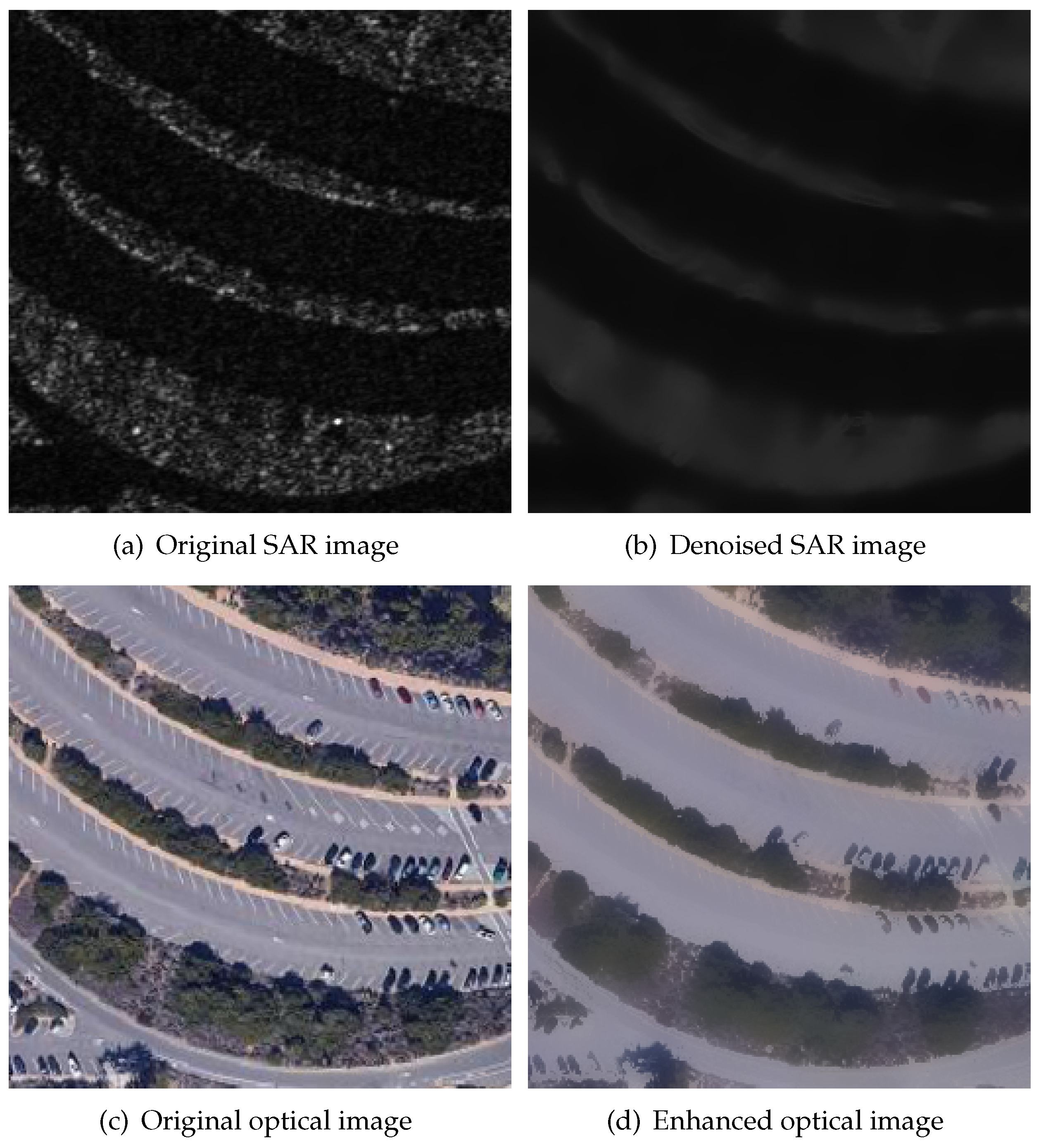

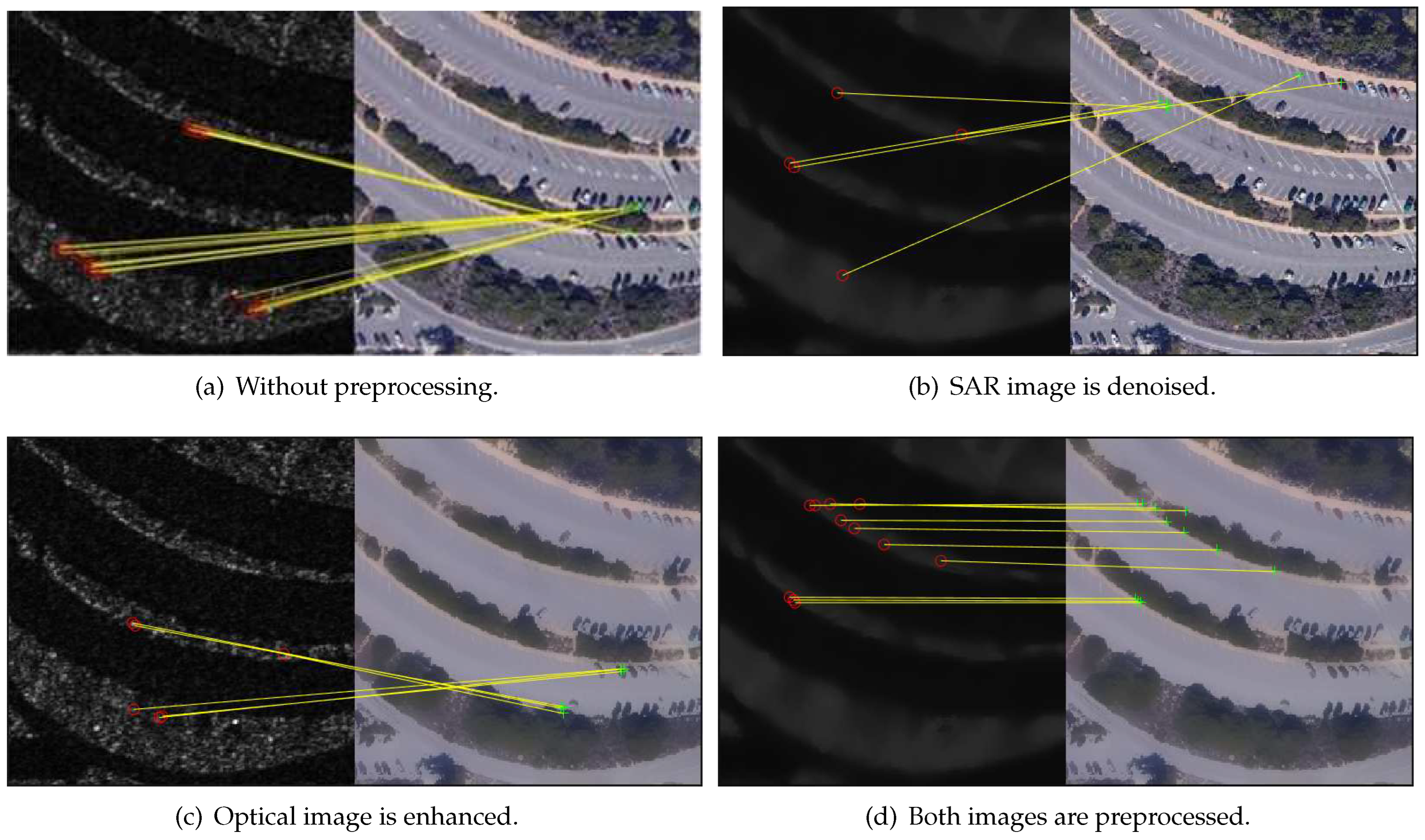

2.1. Multi-source Image Preprocessing

2.1.1. Non-local Mean Filtering

2.1.2. Co-occurrence Filtering

2.2. Edge Feature Detection

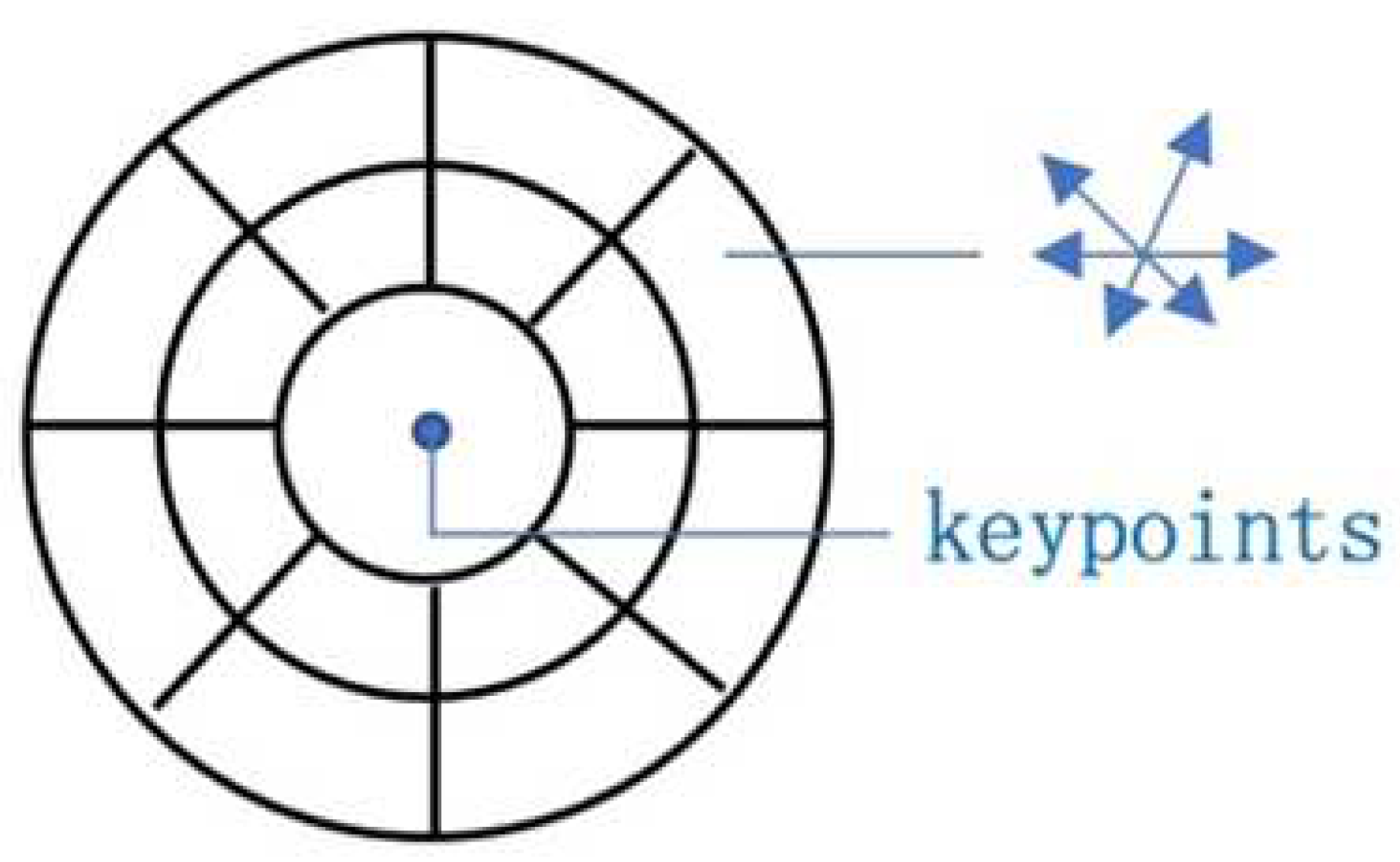

2.3. Sector Descriptor Construction
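
As a rough illustration of what a sector-shaped descriptor can look like, the sketch below partitions a circular neighbourhood into rings and angular sectors and histograms an integer-valued feature map in each cell. Everything here is hypothetical: the function name, the `index_map` input (imagined as a per-pixel index of the strongest filter orientation), and the choices of `radius`, `n_rings`, `n_sectors` and `n_bins` are assumptions for illustration and are not taken from the paper.

```python
import numpy as np


def sector_descriptor(index_map, center, radius=16, n_rings=2,
                      n_sectors=8, n_bins=6):
    """Histogram an integer feature map over a ring/sector grid.

    index_map: 2-D integer array with values in [0, n_bins).
    center:    (row, col) keypoint, at least `radius` pixels from the border.
    """
    cy, cx = center
    ys, xs = np.mgrid[cy - radius:cy + radius + 1,
                      cx - radius:cx + radius + 1]
    dy, dx = ys - cy, xs - cx
    dist = np.hypot(dx, dy)
    inside = dist <= radius  # keep only pixels within the circular support

    # Ring index from the radial distance, sector index from the polar angle.
    ring = np.minimum((dist / radius * n_rings).astype(int), n_rings - 1)
    angle = np.mod(np.arctan2(dy, dx), 2 * np.pi)
    sector = np.minimum((angle / (2 * np.pi) * n_sectors).astype(int),
                        n_sectors - 1)

    patch = index_map[cy - radius:cy + radius + 1,
                      cx - radius:cx + radius + 1]
    hist = np.zeros((n_rings, n_sectors, n_bins))
    # Unbuffered accumulation so repeated (ring, sector, bin) triples all count.
    np.add.at(hist, (ring[inside], sector[inside], patch[inside]), 1.0)

    desc = hist.ravel()
    norm = np.linalg.norm(desc)
    return desc / norm if norm > 0 else desc
```

With the default arguments the descriptor has 2 × 8 × 6 = 96 dimensions. Compared with a square grid, the polar layout keeps cell boundaries aligned with rotations about the keypoint, which is one common motivation for sector-style partitions.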

2.4. Feature Matching and Outlier Removal

3. Experiments and Results

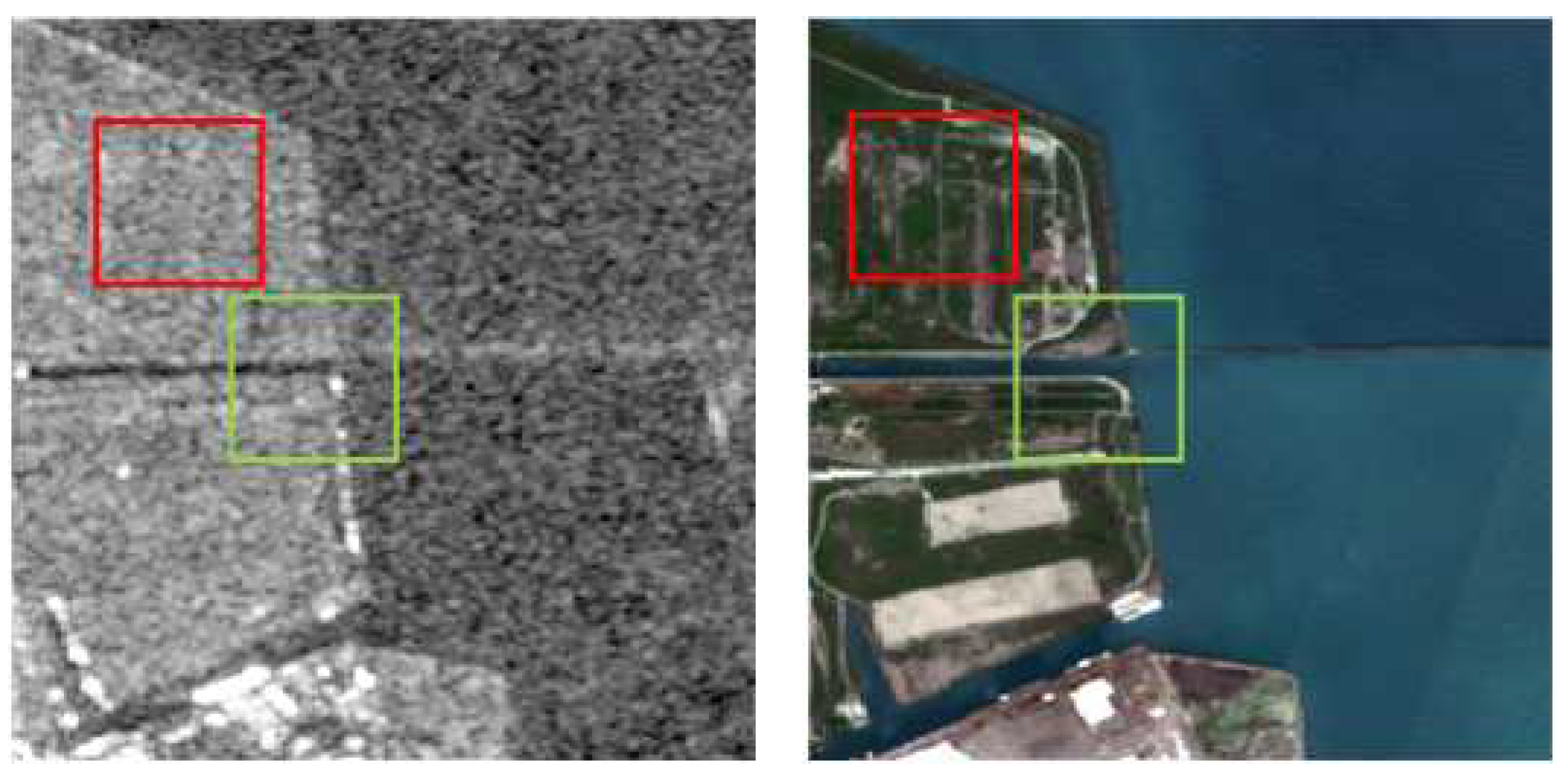

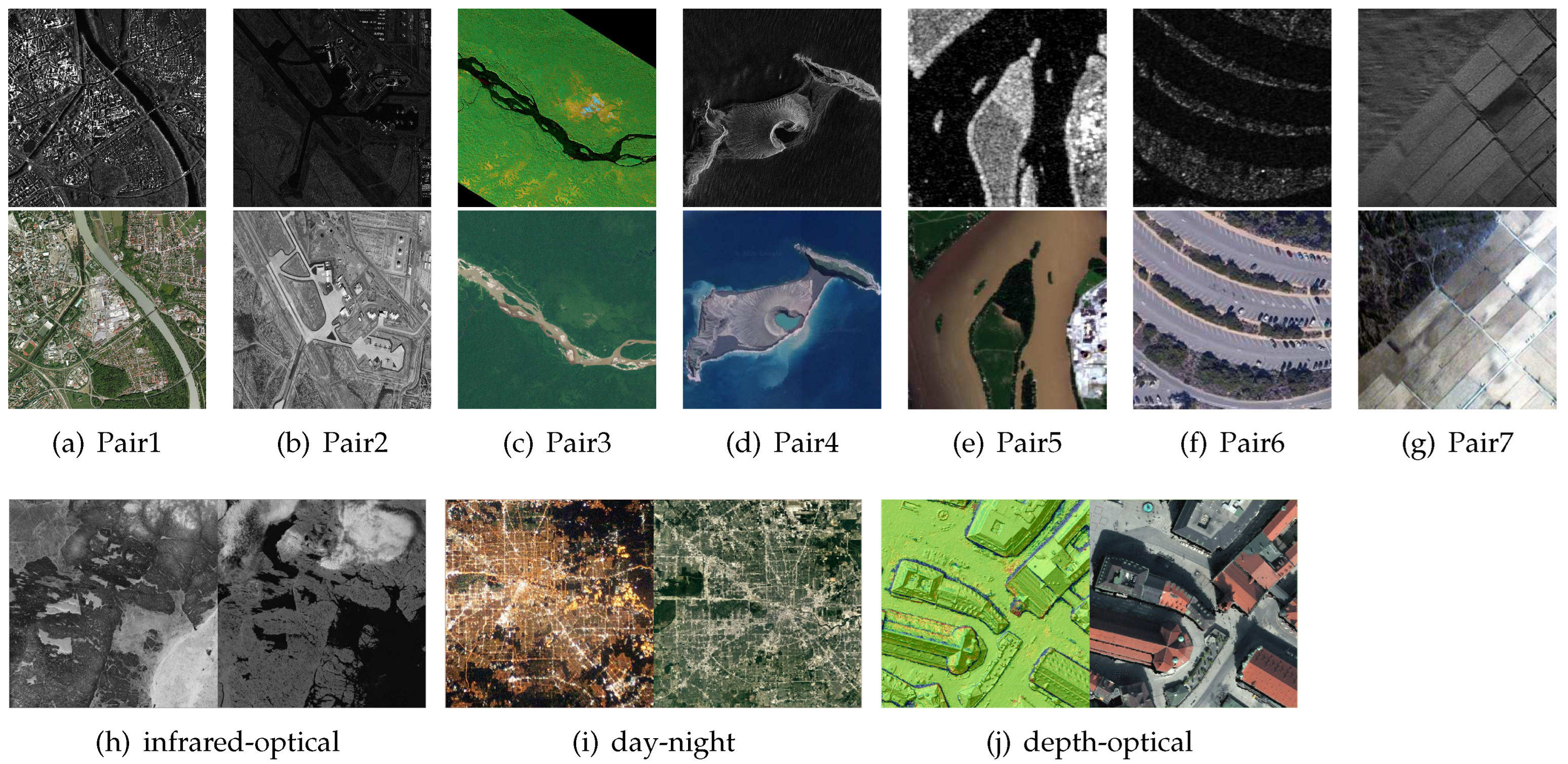

3.1. Datasets

3.2. Evaluation Criterion
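
The result tables report RMSE in pixels. A minimal sketch of how such a value is commonly computed for matched point pairs is given below, assuming the estimated transform is a 3 × 3 homogeneous matrix mapping sensed-image coordinates to reference-image coordinates. The function name and argument layout are illustrative assumptions; the paper's exact checkpoint selection is not reproduced here.

```python
import numpy as np


def registration_rmse(ref_pts, sensed_pts, transform):
    """Root mean square error (pixels) of matched points under a transform.

    ref_pts, sensed_pts: (N, 2) arrays of corresponding (x, y) coordinates.
    transform:           3x3 homogeneous matrix, sensed -> reference.
    """
    ref = np.asarray(ref_pts, dtype=float)
    sen = np.asarray(sensed_pts, dtype=float)
    # Lift to homogeneous coordinates, apply the transform, project back.
    homog = np.hstack([sen, np.ones((len(sen), 1))])
    mapped = homog @ np.asarray(transform, dtype=float).T
    mapped = mapped[:, :2] / mapped[:, 2:3]
    residuals = mapped - ref
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))
```

For an affine or rigid model the last row of `transform` is `[0, 0, 1]`, so the projective division leaves the coordinates unchanged.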

3.3. Registration Results and Analysis

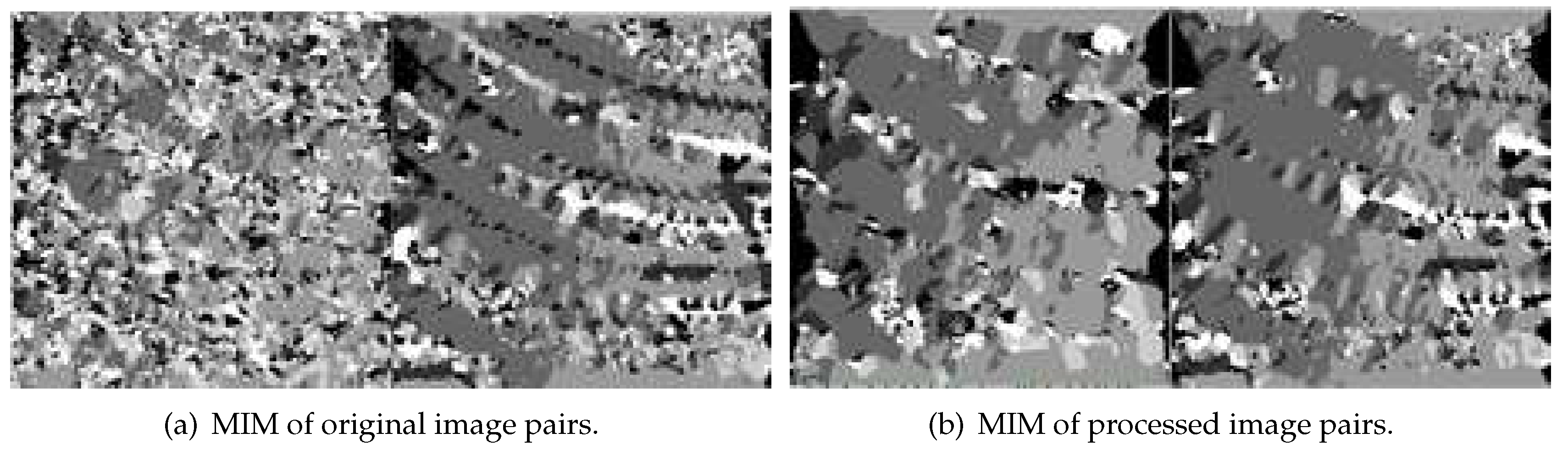

3.3.1. Comparison Experiment of Preprocessing Algorithms

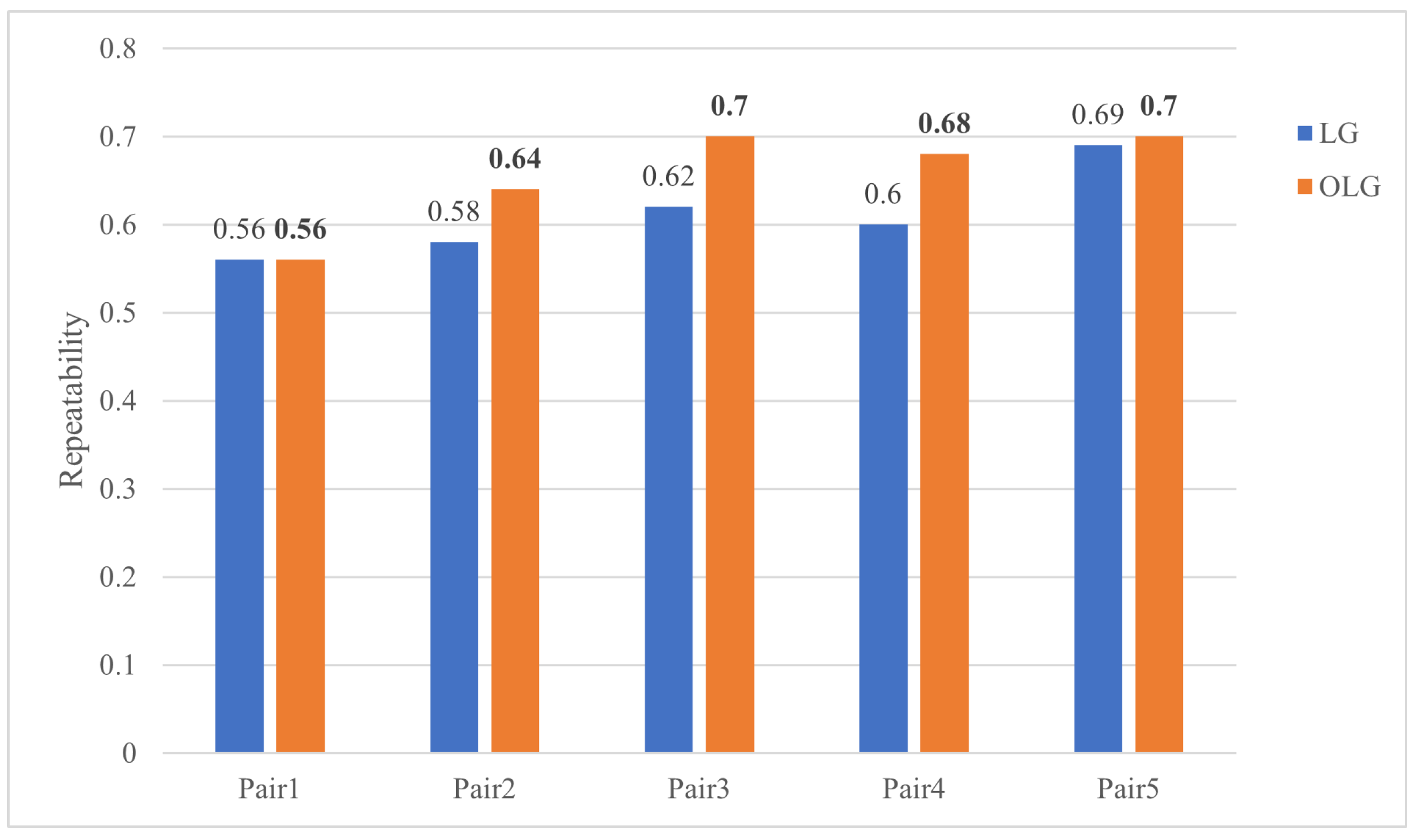

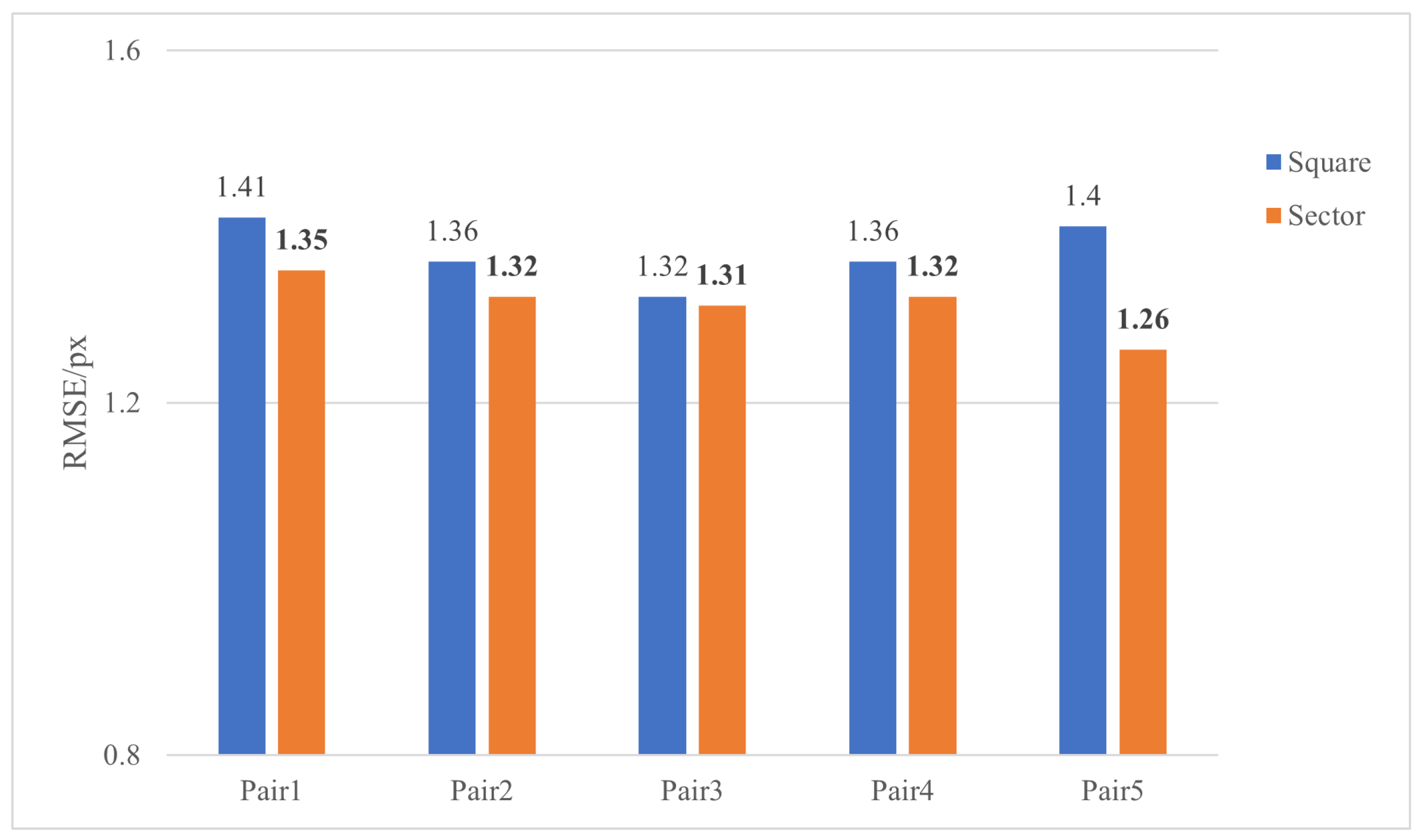

3.3.2. Comparison Experiment of OLG and LG

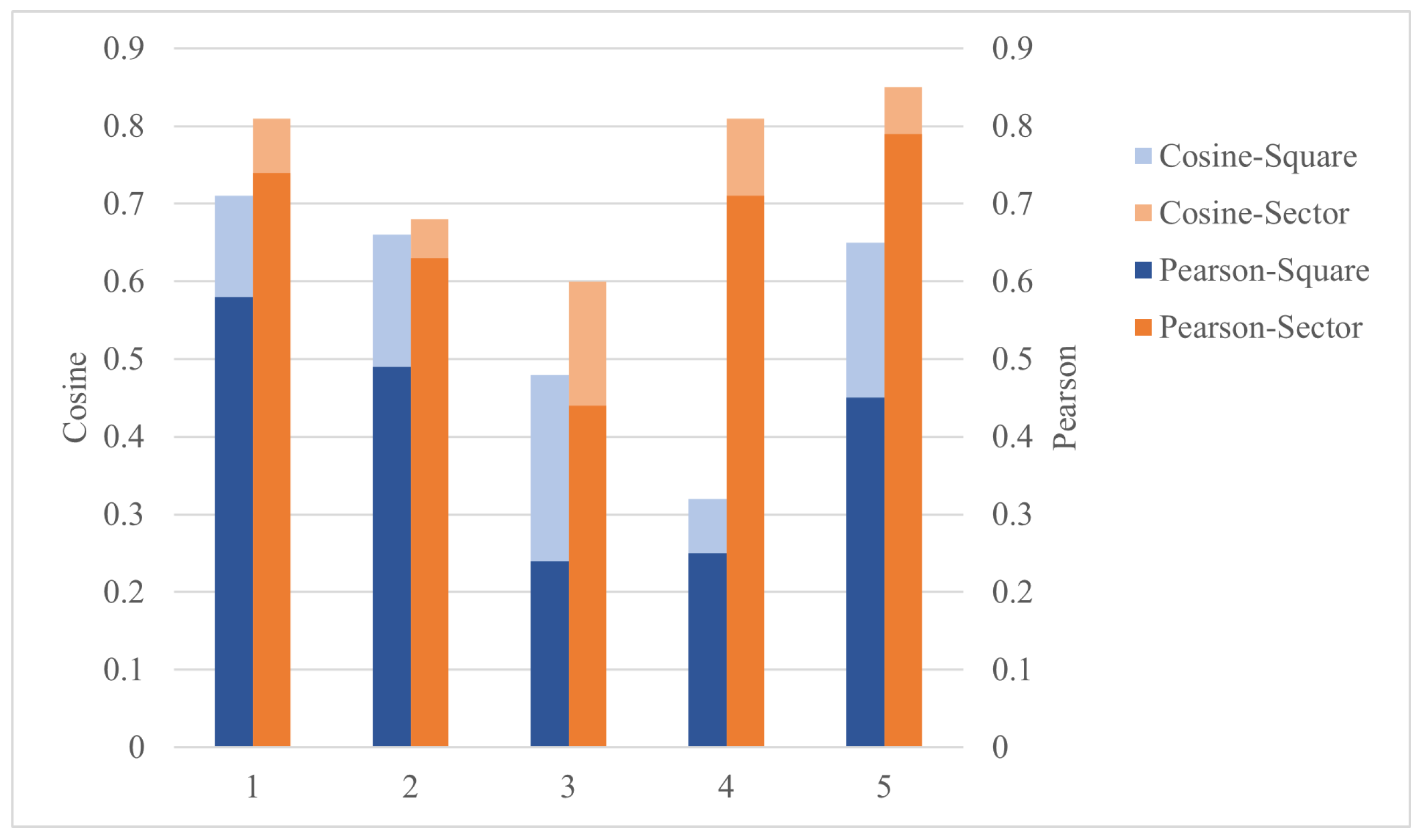

3.3.3. Comparison Experiment of Square Descriptor and Sector Descriptor

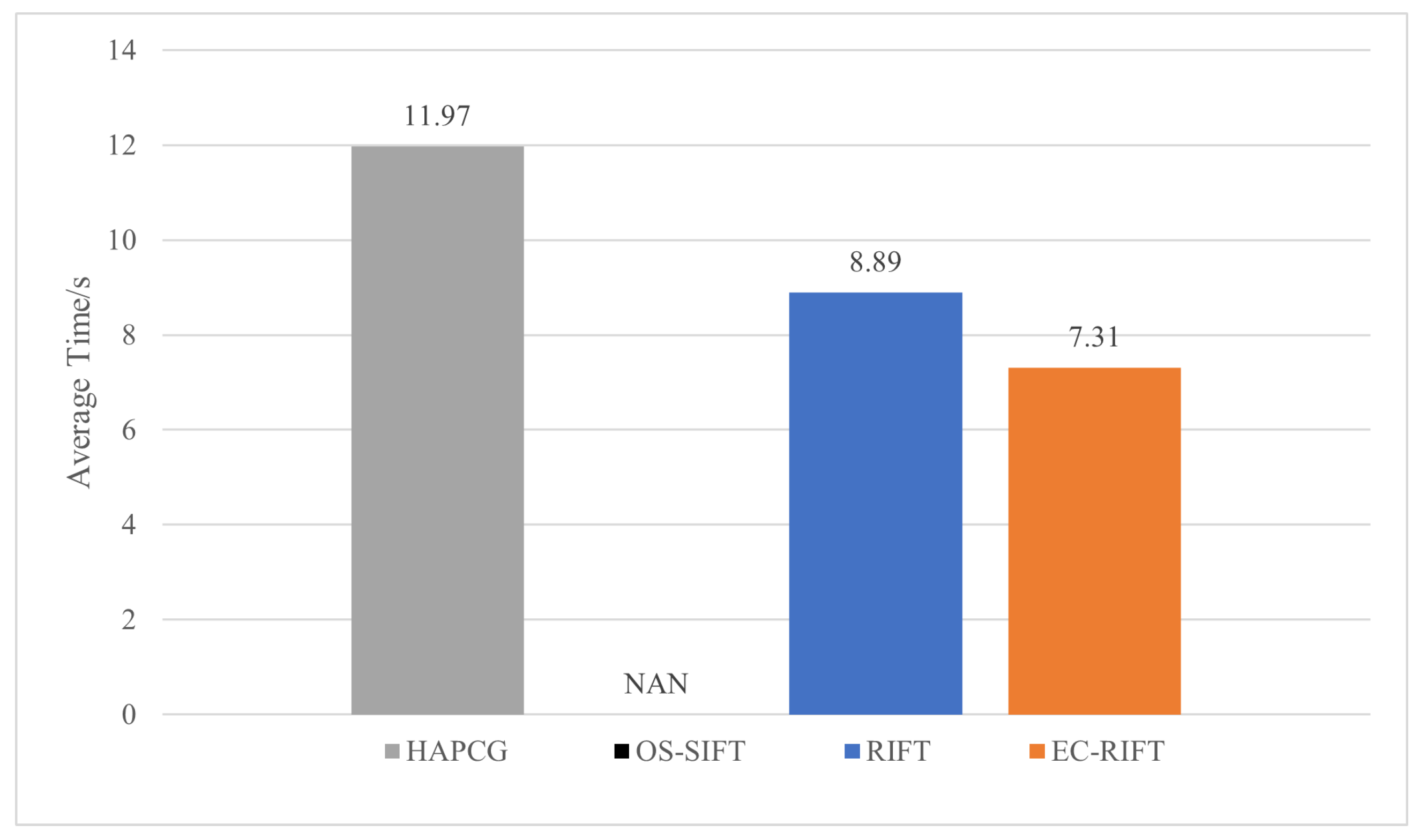

3.3.4. Comparative Results with Other Methods

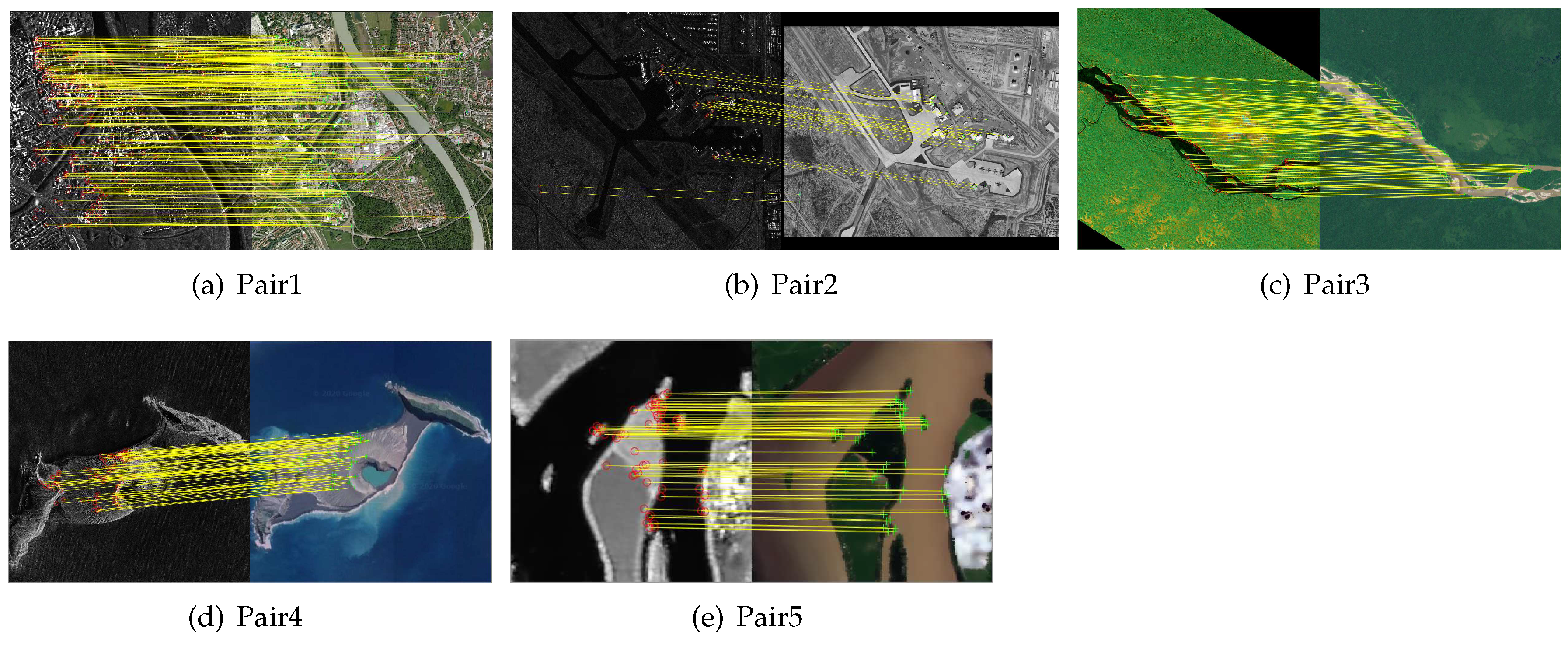

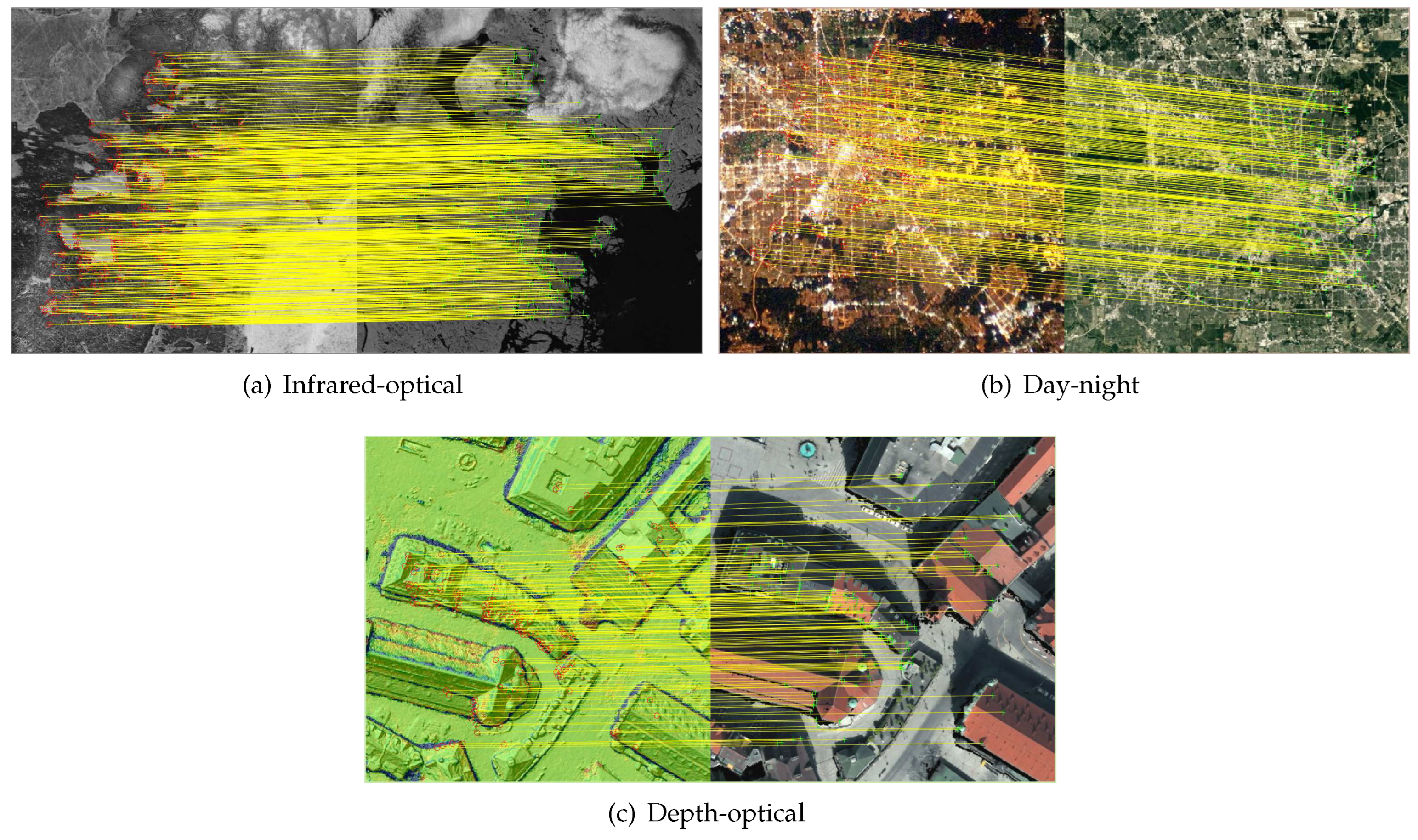

3.4. Experiments on Multi-source Images

4. Discussion

4.1. The Effect of Noise on MIM

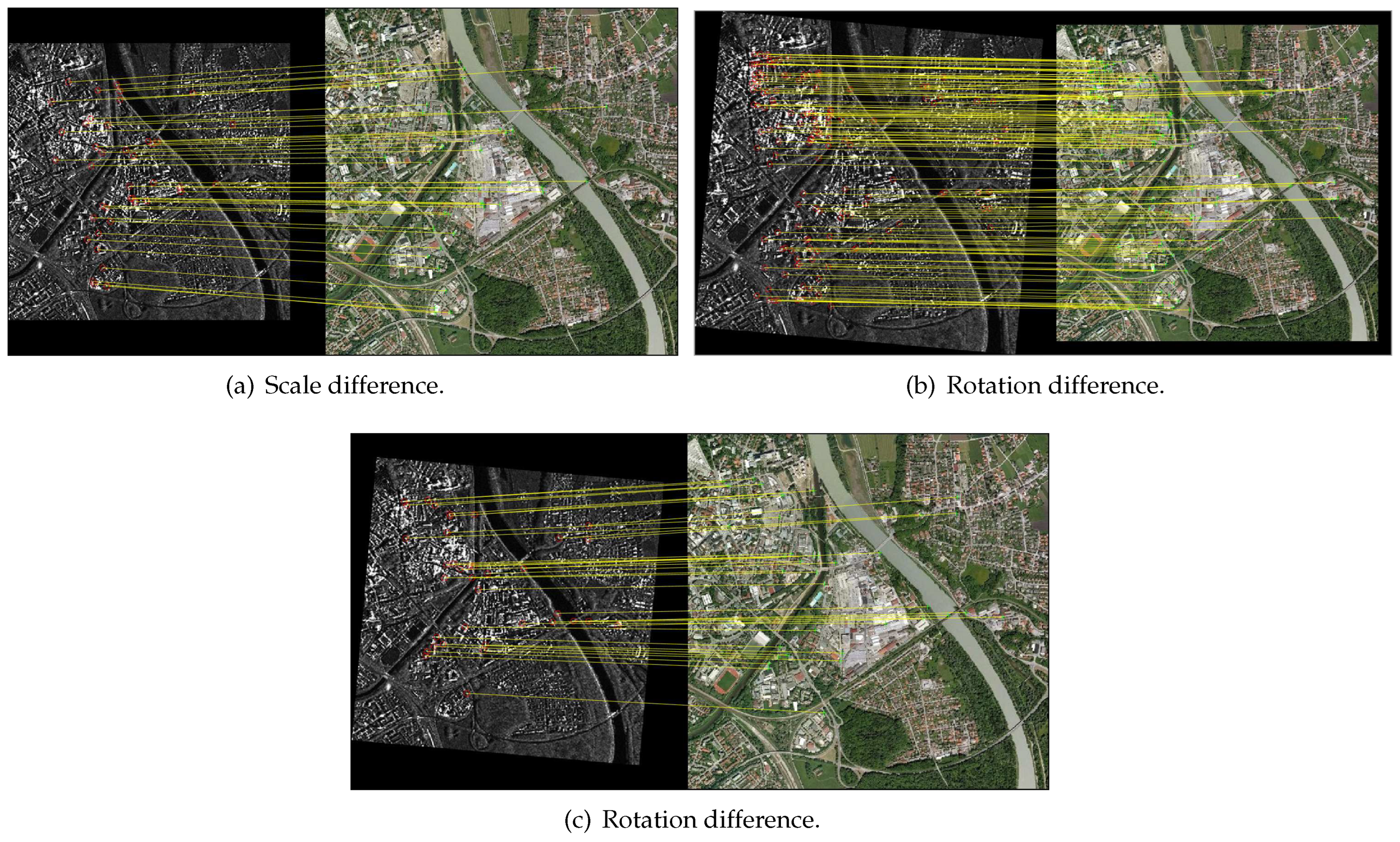

4.2. Fine-registration and Considerable Difference

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SAR | Synthetic Aperture Radar |
| EC-RIFT | Edge Consistency Radiation-variation Insensitive Feature Transform |
| NLM | Non-local Mean |
| CoF | Co-occurrence Filter |
| OLG | Orthogonal Log-Gabor |
| RMSE | Root Mean Square Error |

| Data | Method | RMSE/px | Running time/s |
|---|---|---|---|
| Pair6 | HAPCG | 0.88 | 2.8 |
| | OS-SIFT | × | × |
| | RIFT | 0.99 | 2.26 |
| | EC-RIFT | 0.95 | 1.99 |
| Pair7 | HAPCG | 1.59 | 2.56 |
| | OS-SIFT | × | × |
| | RIFT | 1.11 | 1.79 |
| | EC-RIFT | 0.92 | 1.76 |

| Data | Filter | Detection time/s | RMSE/px |
|---|---|---|---|
| Pair1 | LG | 0.99 | 1.41 |
| | OLG | 0.58 | 1.35 |
| Pair2 | LG | 3.20 | 1.36 |
| | OLG | 1.81 | 1.32 |
| Pair3 | LG | 1.50 | 1.32 |
| | OLG | 1.04 | 1.27 |
| Pair4 | LG | 0.99 | 1.34 |
| | OLG | 0.65 | 1.33 |
| Pair5 | LG | 0.23 | 1.40 |
| | OLG | 0.15 | 1.29 |
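
To make the LG versus OLG comparison easier to read, the short script below computes the relative reduction in detection time per image pair from the values in the table above. The dictionary layout and function name are illustrative; the numbers are copied from the table.

```python
# (LG detection time, OLG detection time) in seconds, from the table above.
detection_time = {
    "Pair1": (0.99, 0.58),
    "Pair2": (3.20, 1.81),
    "Pair3": (1.50, 1.04),
    "Pair4": (0.99, 0.65),
    "Pair5": (0.23, 0.15),
}


def relative_reduction(lg_seconds, olg_seconds):
    """Percentage of LG detection time saved by switching to OLG."""
    return (lg_seconds - olg_seconds) / lg_seconds * 100.0


reductions = {pair: relative_reduction(lg, olg)
              for pair, (lg, olg) in detection_time.items()}

for pair, saved in reductions.items():
    print(f"{pair}: {saved:.1f}% less detection time with OLG")
```

On these five pairs the reduction ranges from roughly 31% to 43%, while the RMSE column shows OLG matching or slightly improving on LG in every case.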

| Data | Method | RMSE/px | Running time/s |
|---|---|---|---|
| Pair1 | HAPCG | 1.95 | 9.98 |
| | OS-SIFT | 1.38 | 9.15 |
| | RIFT | 1.37 | 10.45 |
| | EC-RIFT | 1.35 | 8.81 |
| Pair2 | HAPCG | 1.95 | 26.13 |
| | OS-SIFT | × | × |
| | RIFT | 1.34 | 14.26 |
| | EC-RIFT | 1.19 | 11.01 |
| Pair3 | HAPCG | 1.93 | 13.64 |
| | OS-SIFT | NAN | NAN |
| | RIFT | 1.32 | 6.41 |
| | EC-RIFT | 1.28 | 5.06 |
| Pair4 | HAPCG | 1.97 | 7.98 |
| | OS-SIFT | × | × |
| | RIFT | 1.35 | 9.36 |
| | EC-RIFT | 1.24 | 9.27 |
| Pair5 | HAPCG | 1.76 | 2.12 |
| | OS-SIFT | NAN | NAN |
| | RIFT | 1.36 | 3.95 |
| | EC-RIFT | 1.31 | 2.39 |

| Data | Method | RMSE/px | Running time/s |
|---|---|---|---|
| Infrared-optical | RIFT | 1.16 | 14.70 |
| | EC-RIFT | 1.16 | 13.13 |
| Day-night | RIFT | 1.27 | 12.37 |
| | EC-RIFT | 1.26 | 10.68 |
| Depth-optical | RIFT | 1.36 | 11.98 |
| | EC-RIFT | 1.33 | 11.50 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).