Submitted:

28 July 2023

Posted:

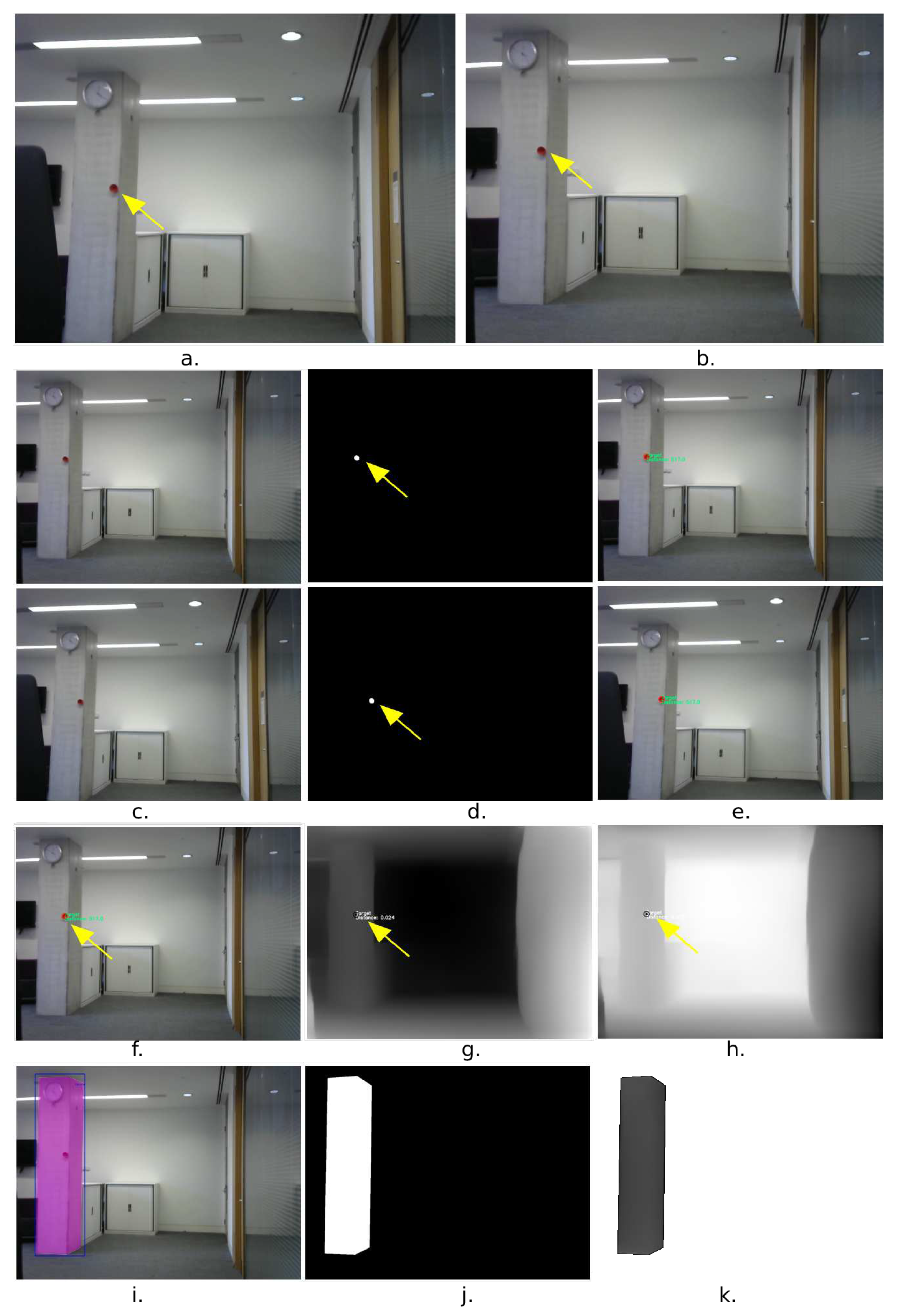

01 August 2023


Abstract

Keywords:

1. Introduction

2. Literature Review

3. Instrumentation

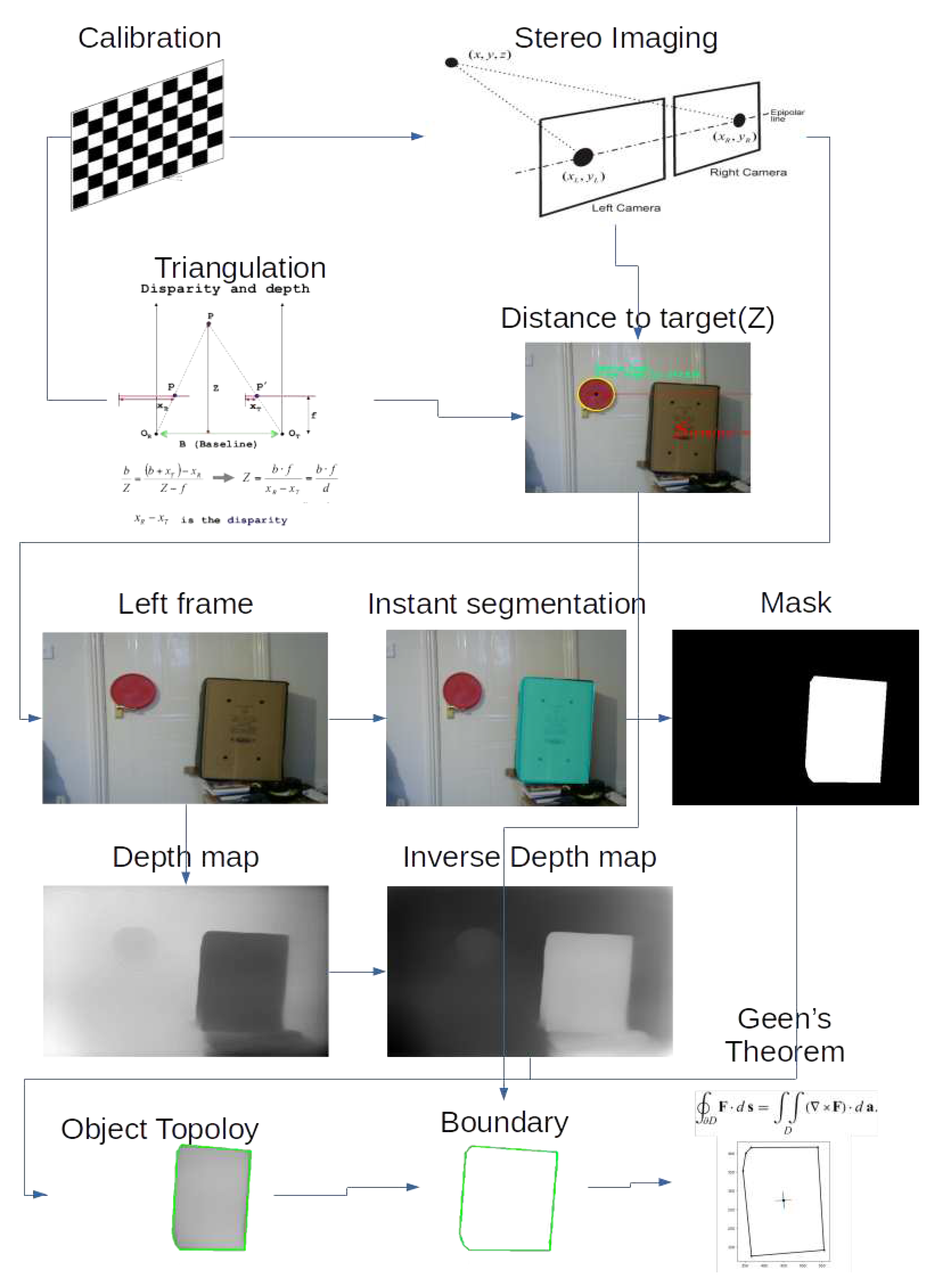

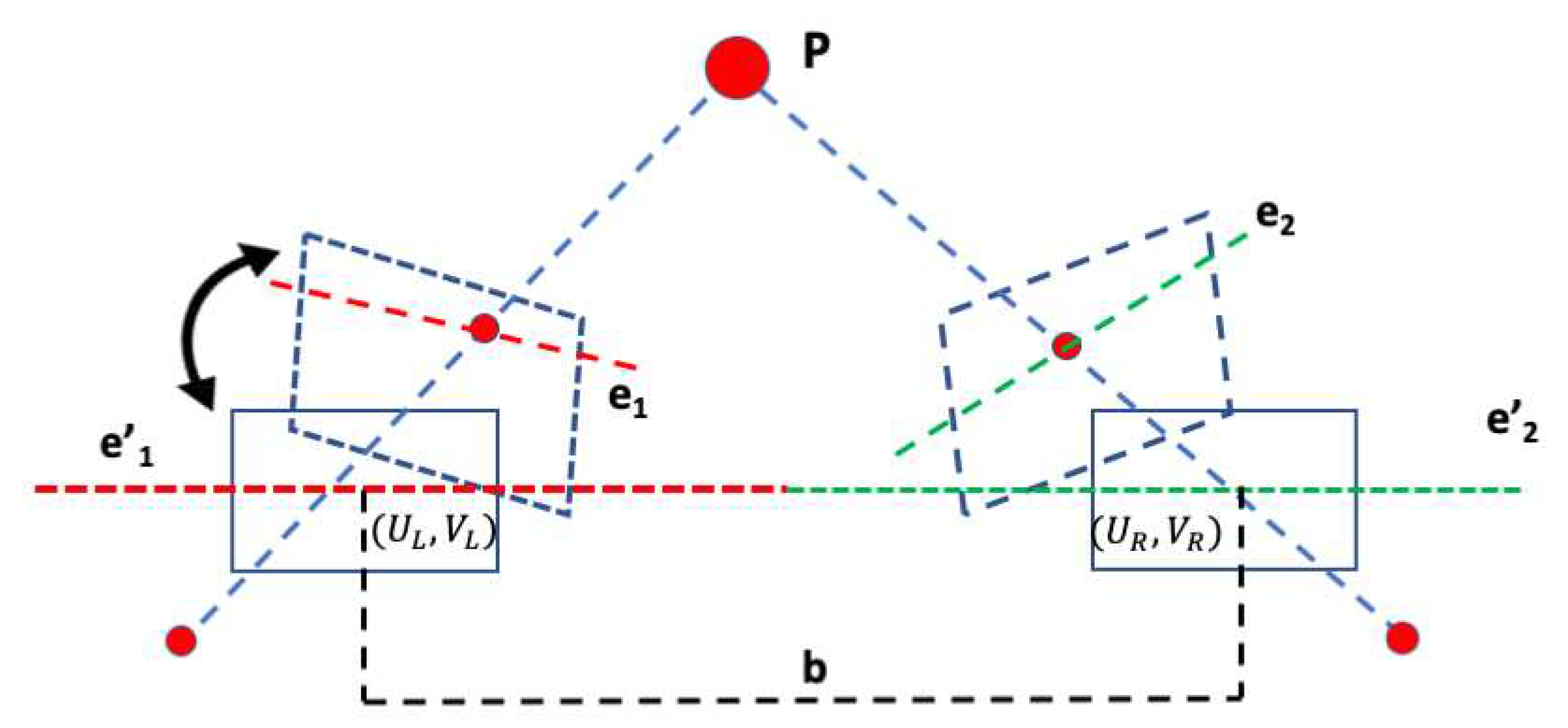

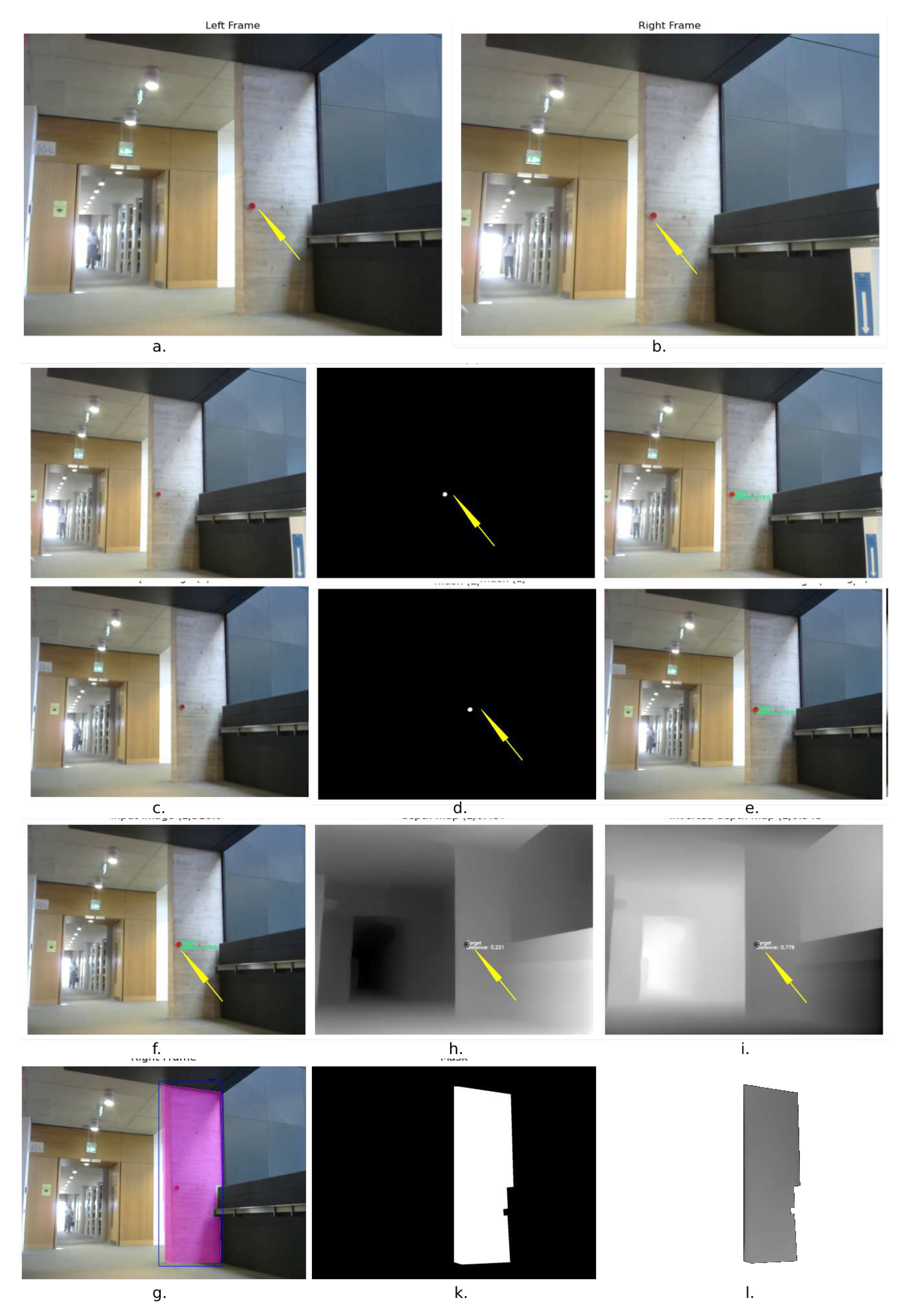

3.1. Initialisation

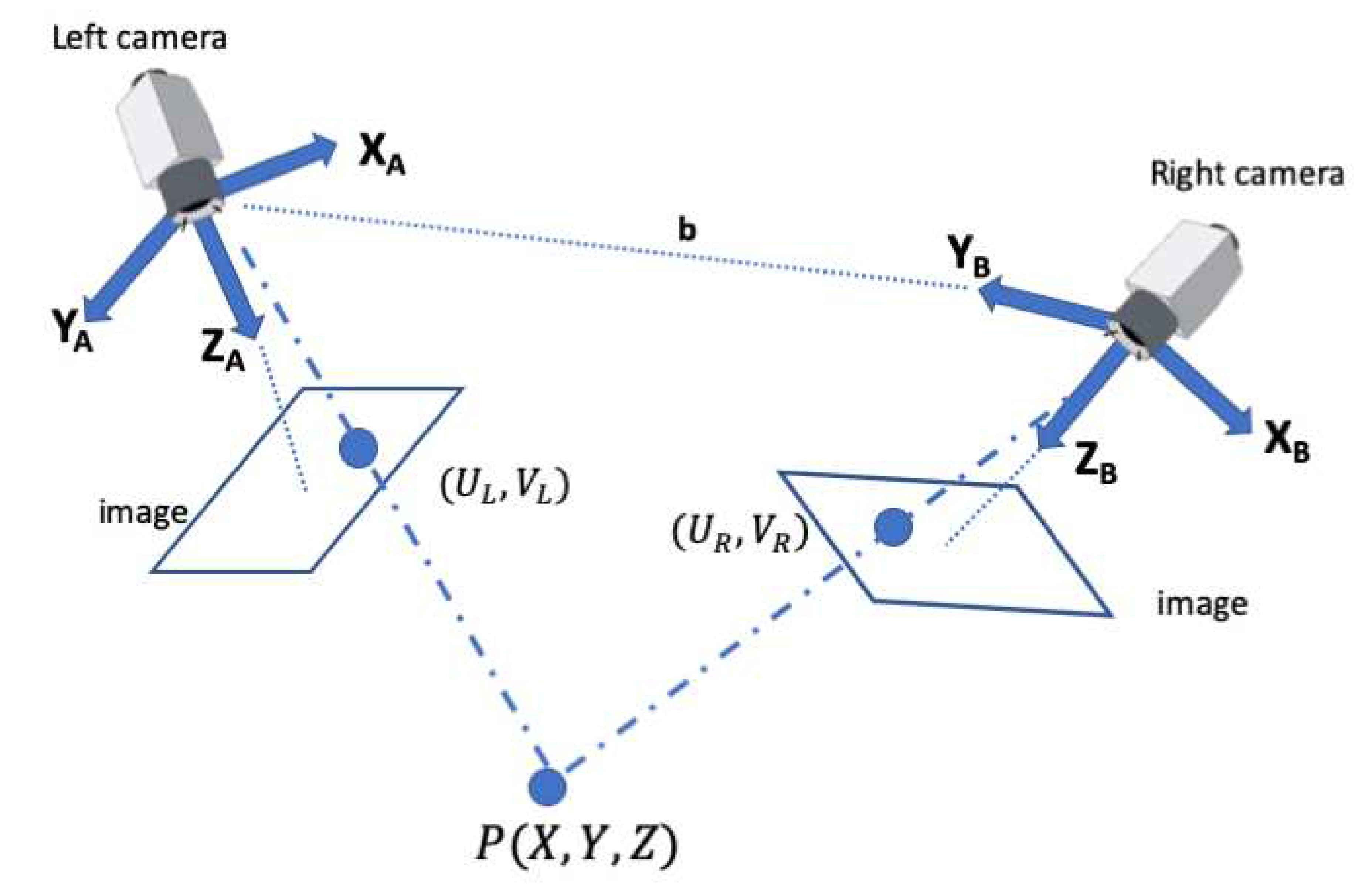

3.2. Data Acquisition

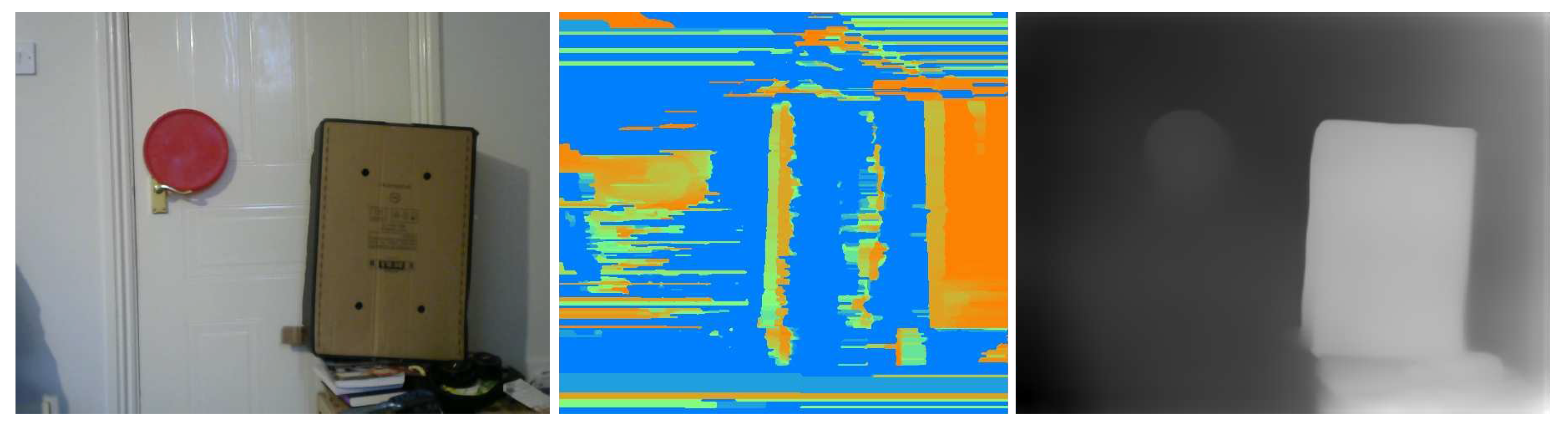

3.3. Information Retrieval and Processing

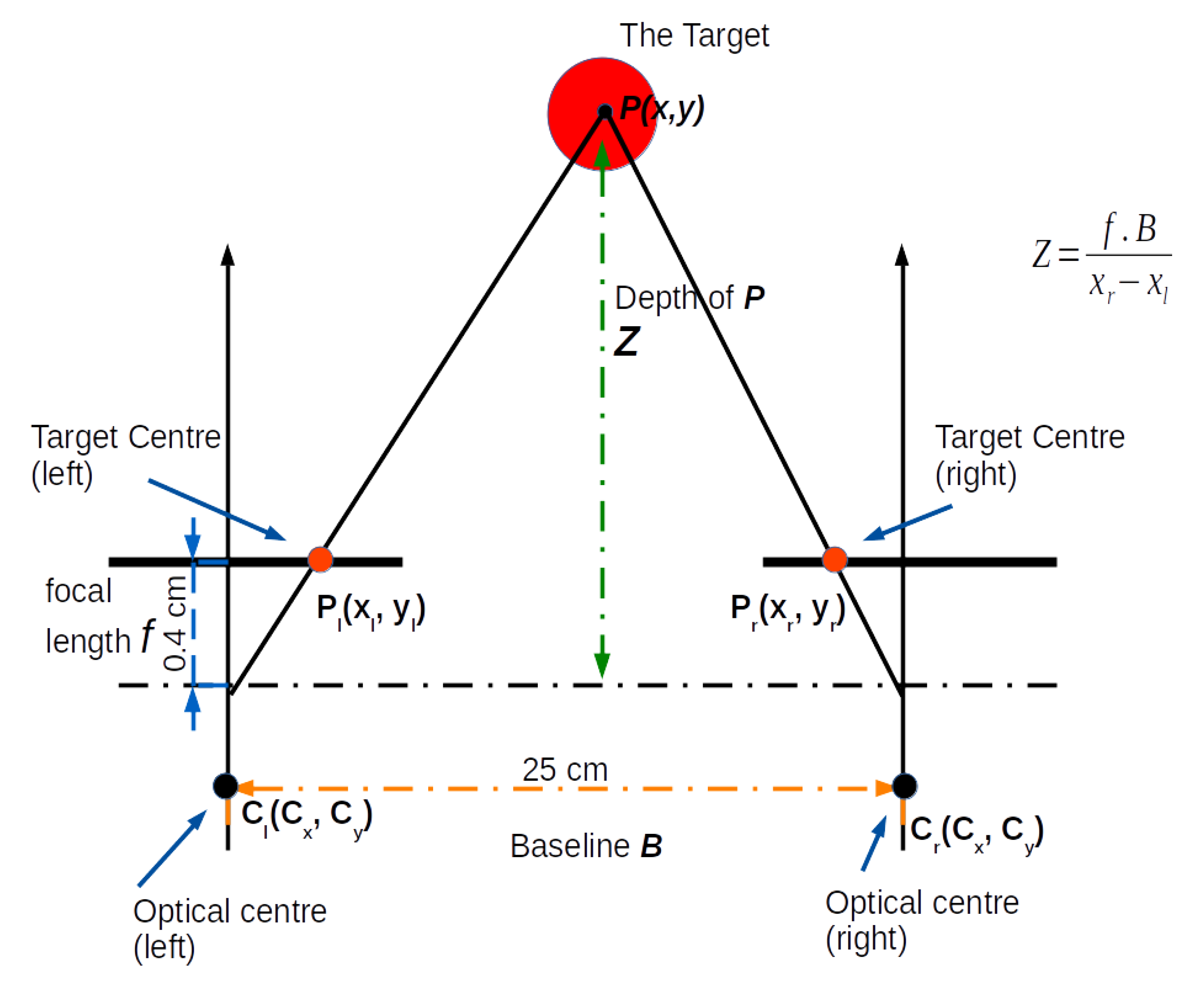

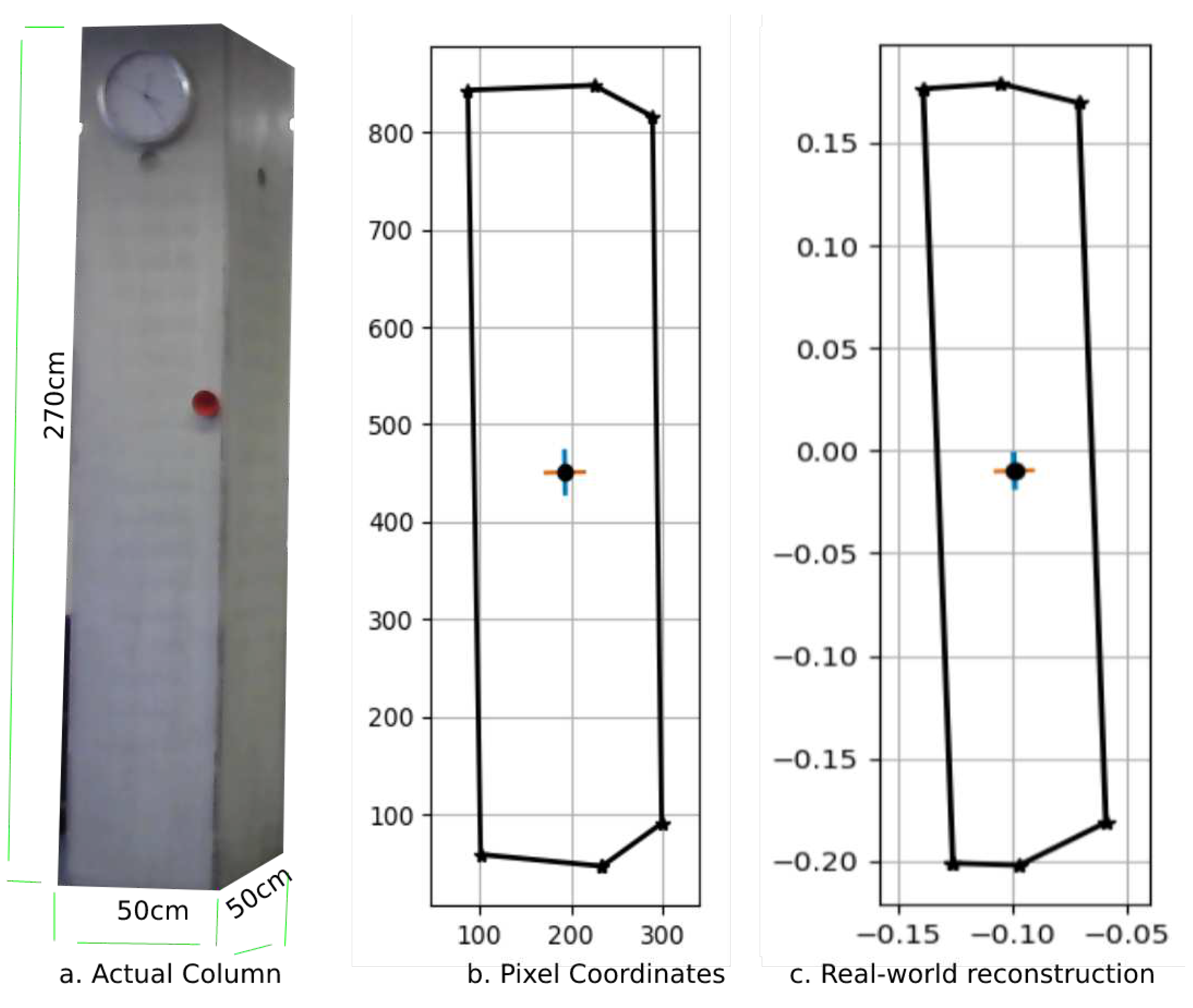

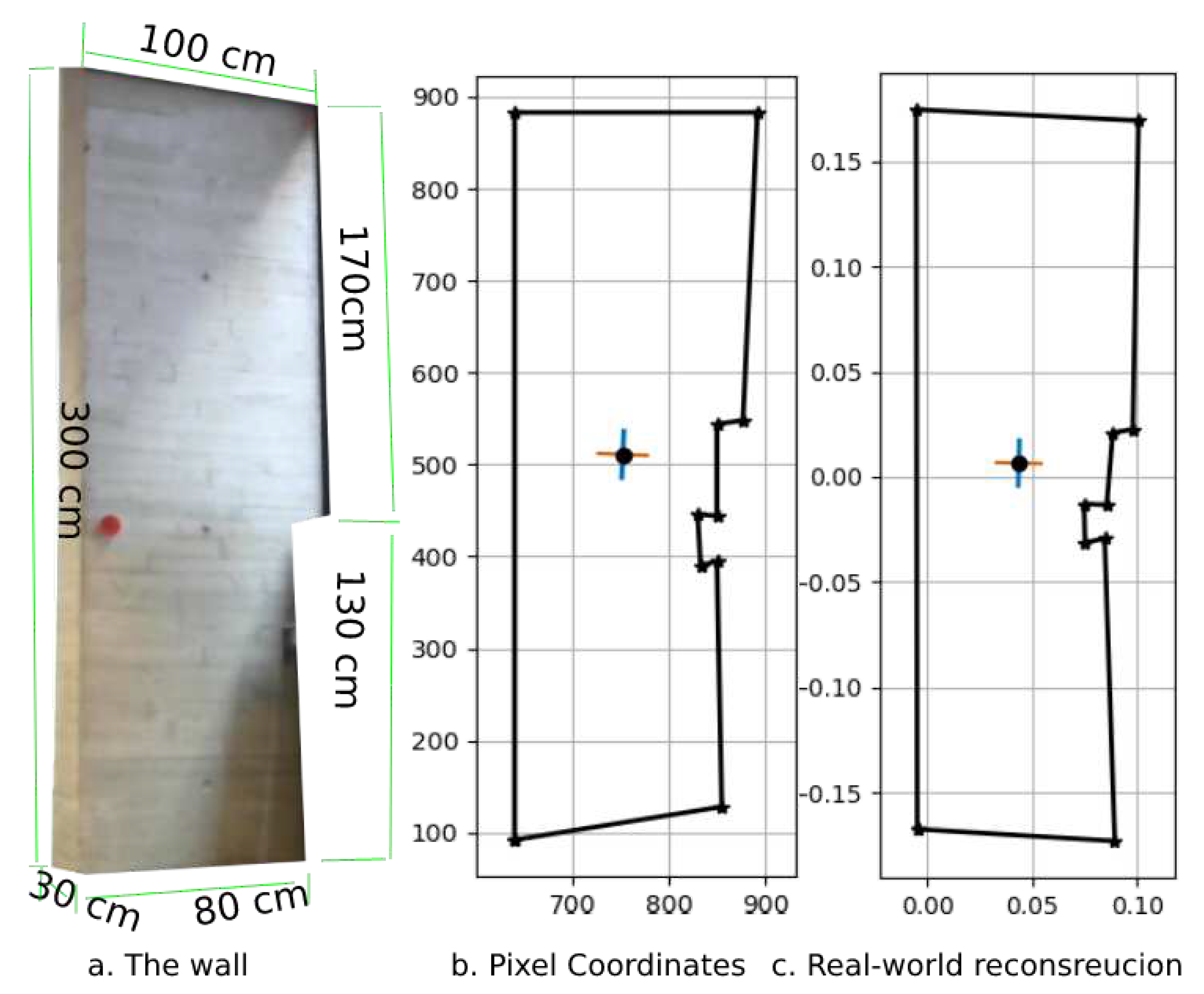

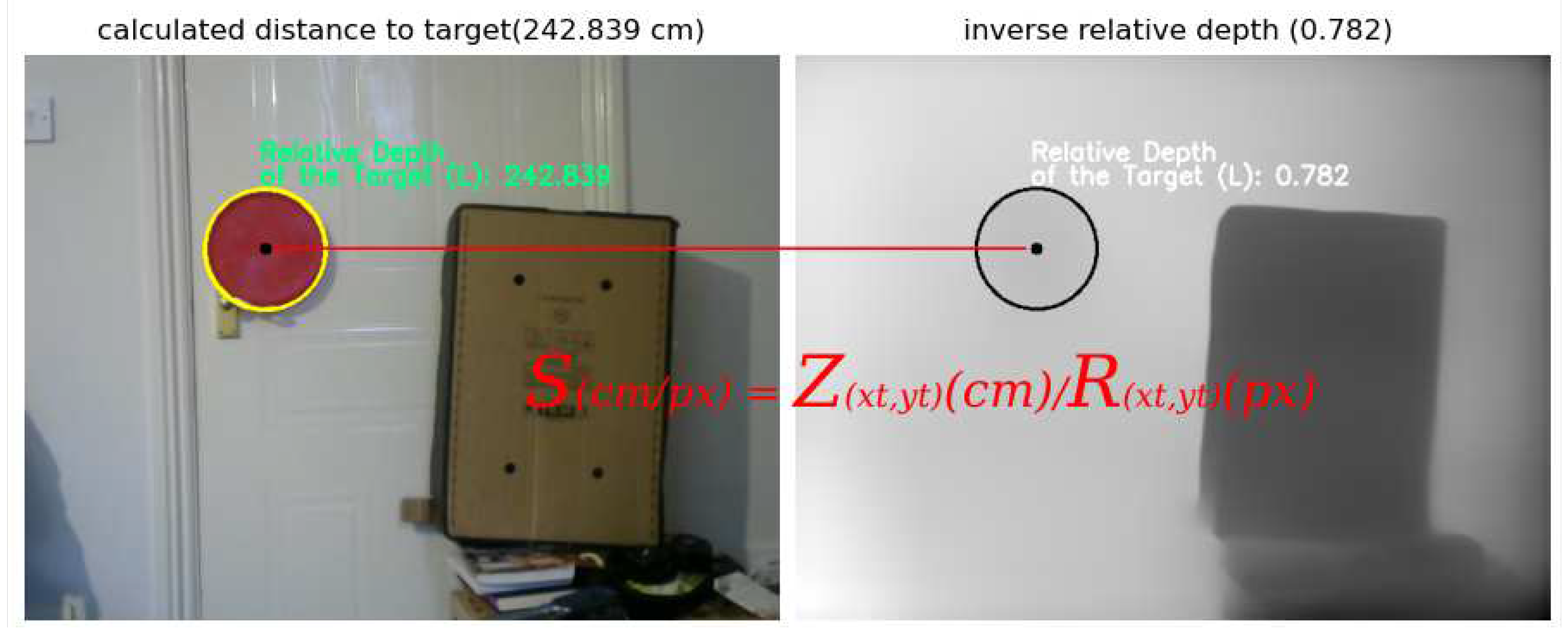

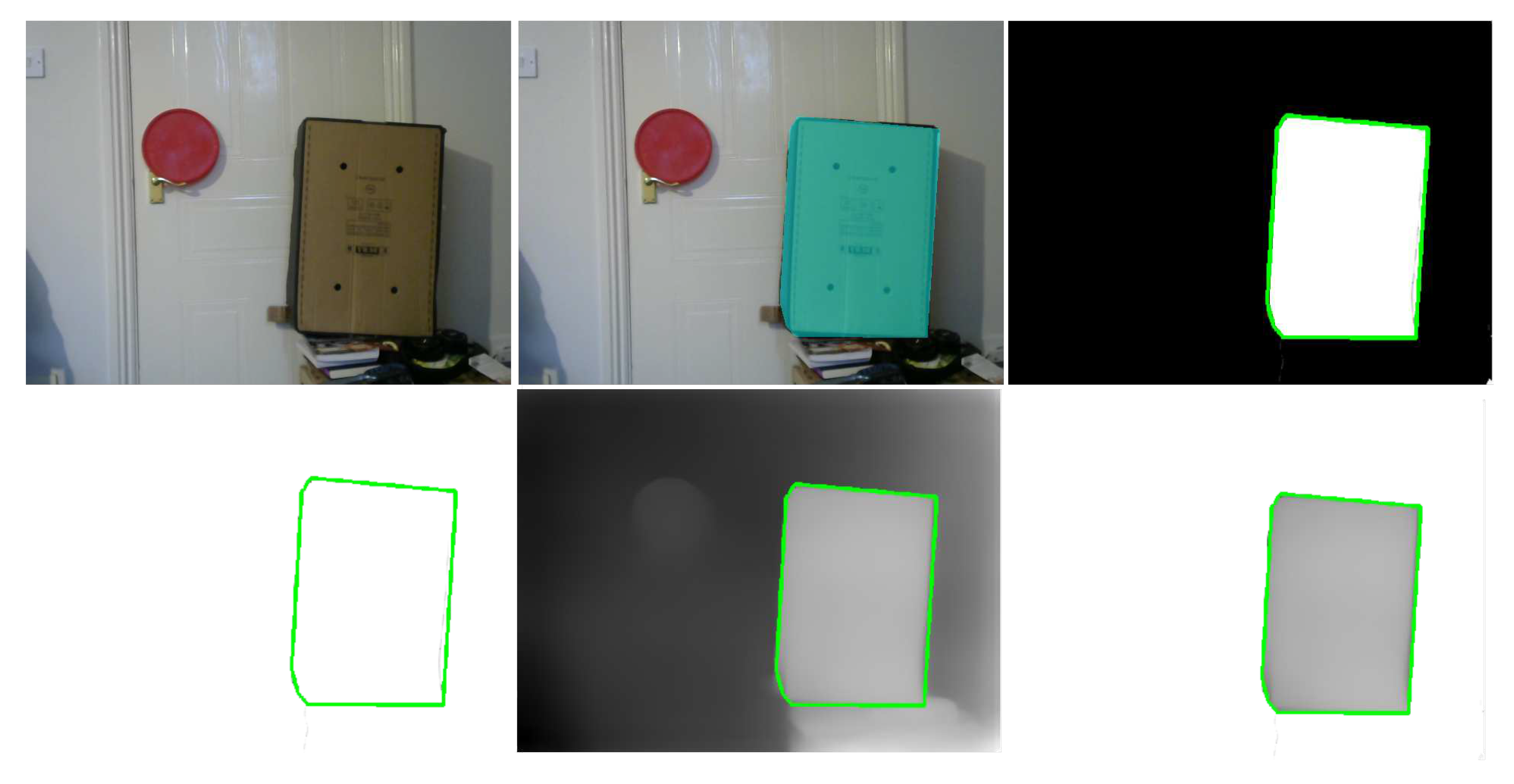

3.4. As-Built Component Measurement

Algorithm 1: Real World Coordinates

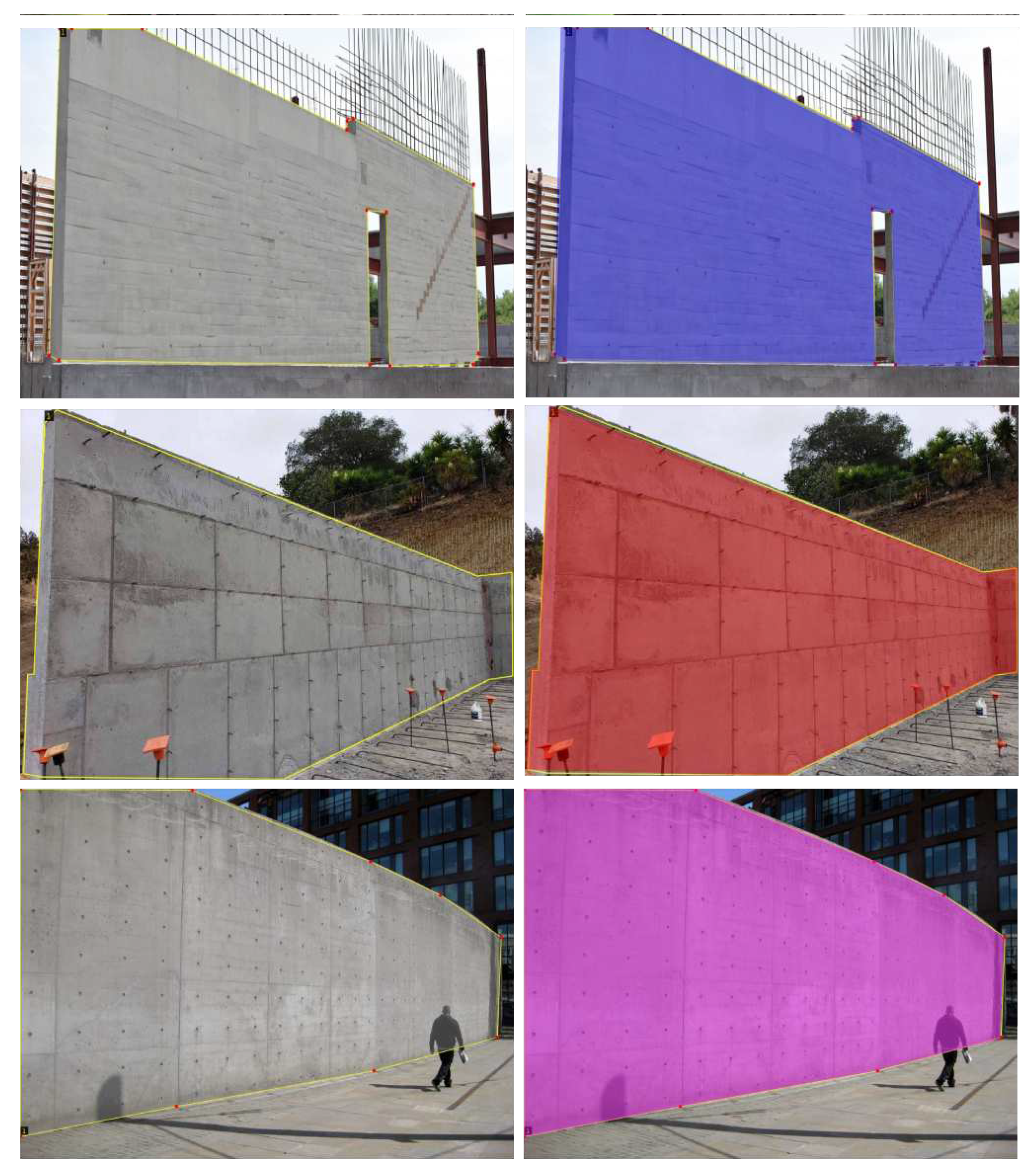

3.5. Visualisation of the Output

4. Results and Discussion

4.1. Limitations

5. Conclusion

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | Three-Dimensional |
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Networks |
| CV | Computer Vision |
| DLT | Direct Linear Transformation |
| UAV | Unmanned Aerial Vehicles |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).