1. Introduction

1.1. The necessary first axiom

Integrated Information Theory (IIT) starts with a bold assertion in a world dominated by materialism. Its first axiom [1] states that consciousness has intrinsic existence: that it is as fundamentally real as a lump of coal or a jumbo jet. The reason this axiom is necessary is that if it were not true, there would be nothing to discuss. The reason it is contentious in scientific circles is the lack of objective evidence and the fact that the inability to interact directly with consciousness prevents experimentation. To many, these factors exclude phenomenal consciousness from the list of the scientifically real, making any discussion on the topic a philosophical enterprise.

Considering the lack of evidence, what is the justification for the first axiom? Put simply, it is the compelling subjective experience that is the foundation of my every personal waking day. It is this that underlies my objection to the physicalist’s claim that there is nothing unusual about consciousness [2]. Yet strictly speaking this applies only to my own consciousness. I have no such direct evidence that other people are conscious, only their own claims logically supported by the fact that their brains share the same origins and physical characteristics as my own. However, beyond the normal human brain the lack of objective evidence leads to a slippery slope of unreliable assumptions. These include anthropomorphic responses to non-sentient robots [3] and animals [4], the uncertainties underlying the Uncanny Valley [5], and ethical discussions about the conscious status of the fetus [6] or patients in a vegetative state [7].

The demonstrable relationship between the brain and phenomenal consciousness provides indirect evidence for it being considered an integral part of reality. Neural correlates of conscious experiences can be identified [8], and consciousness can be demonstrably influenced by interventions in the brain such as anesthesia [9] and psychoactive drugs [10]. The functional output of a brain can also be seen as indirect evidence of consciousness, although this risks confrontation with conscious inessentialism and the possibility of philosophical zombies [11]. Such observations necessitate explanation of the relationship between the phenomenal and the physical.

1.2. Detectability and subjectivity

Difficulty in detection and experimentation is nothing new to the natural sciences. For most of human history our understanding of ourselves and our world was limited by the restrictions imposed by our unaided senses and manual abilities. It was only in the Enlightenment that the invention and use of scientific instruments and devices allowed us to probe and manipulate reality more extensively [12]. What we know today about the natural world is almost entirely based on the use of such supplementary tools. Indeed, the progressive expansion of scientific detection technologies is one of humanity’s most impressive areas of ongoing achievement. Now far beyond the historical detection of radio [13], x-rays [14], the microscopic [15] and the cosmological [16], our contemporary ingenuity stretches to the Higgs boson [17] and gravitational waves [18]. Often such phenomena are first theoretically deduced, after which instrumentation is designed to provide empirical confirmation.

If, as stated in the first axiom, we have theoretical reasons to be convinced of the factual existence of conscious qualia it is reasonable to ask why we make no attempt to build a device to detect them. Such a device would, after all, immediately end all discussion and doubt concerning their reality. It would provide a secure footing to facilitate progress in the science of consciousness and supply empirical answers to questions concerning which biological and physical systems are conscious. In doing so, it would present us with a means of determining the accuracy and validity of theories of consciousness such as IIT.

Given these motivations, why do we accept our own subjective experience and ability to report it as the only possible evidence for the existence of consciousness? Why do we not attempt to build such a device, instead of proposing that the puzzle will somehow disappear when we fully understand the physical brain, a standpoint that only evades Chalmers’ hard problem [19]? The most obvious answer lies in the assumption that consciousness is not a physical phenomenon. If this is so, then the creation of a detection device is not just a challenge to our technical ingenuity. Instead, it is an impossibility ensuing from the fact that detection requires interaction, and a physical device cannot interact with a non-physical phenomenon.

IIT proposes a direct relationship between the characteristics of consciousness (axioms) and those of its physical substrate (postulates). If the theory is true, then this allows the level of consciousness of any physical system to be calculated. However, it still does not aim to provide evidence for these predictions in the form of quantitative measurements of consciousness itself. Consequently, it is not possible to objectively confirm them or empirically compare their accuracy with competing theories. Instead, IIT remains dependent on subjective reporting: a serious limitation when considering potentially conscious systems without this capability.

The acceptance of consciousness as non-detectable is effectively a form of substance dualism: if it is non-physical and the first axiom is correct that it is real, then it must be some other kind of ‘substance’. This leads inevitably to the question of whether it is the only such phenomenon or whether it has company.

1.3. Physicalism and information

There is one other phenomenon with an intangibility comparable to that of consciousness: information. This starts with the term itself, which is used in different contexts to label broadly related but distinct phenomena. For example, the topics of Information Theory [20] are ontologically very different from those of semantic information [21]. This article uses the term in a manner similar to Roederer’s pragmatic information [22], which sees informational rather than physical characteristics determining the outcome of interactions. However, it differs from Roederer’s definition in also forming the basis for the function of designed information systems such as programmed computers.

The starting point in considering information in computers is the humble bit, designed to be in one of two magnetic states. We can measure these magnetic states and, knowing the process that caused this orientation, we can deduce which binary information value is associated with the bit. We may also be able to derive the value from its effect, for instance in the result of a calculation performed by the system. Following Bateson, there is a ‘difference that makes a difference’ [23]. However, the causative element in this effect is not the magnetic state. After all, if the computer were designed to use the opposite magnetic state to carry the binary value – or indeed an electrical current, pulse of light or any other physical characteristic – the effect would be the same. The processes taking place within the computer use this information, and not the physical property it is associated with, as causative difference. It is this specific type of information that is the topic of this article.

While a computer may be designed to report the existence of such information, or to use it in some information process whose output provides evidence for its existence, this does not constitute detection. Instead it is strikingly similar to the way in which consciousness can only be deduced through reporting or behavior but not objectively detected. Yet it is clearly not the case that the bit is even rudimentarily conscious. Since the isolated bit has no cause-effect repertoire, applying Integrated Information Theory gives it a Phi value of 0. It would therefore appear that consciousness does have company and that (this type of) information is also a non-physical yet real phenomenon. Just like consciousness, “information is not matter and not energy but is a fundamental part of reality” [24]. Both are, then, some kind of non-material substance, connected to their physical substrate in such a way that they can exert influence.

2. Physical systems

Before further addressing the relationship between consciousness and this kind of information it is useful to first consider the better-understood origins of physical entities, properties and processes. After all, if consciousness is to be considered an element of reality then it must comply with the general characteristics of real phenomena.

2.1. Emergence in natural systems

Physical science involves investigation of the specific laws applying to the interactions taking place between a particular type of entity. Physicists study subatomic events, meteorologists investigate weather systems and cosmologists consider the celestial. The entities and processes concerned originate through the combination of components to form larger scale systems which invariably exhibit weak emergence: they have characteristics that cannot exist in their components [25]. It is these characteristics and their interactions that scientists investigate.

Take, for instance, a grain of sand. None of its defining properties such as density, hardness, crystalline structure and color have any meaning at the level of its constituent atoms. Instead, they arise and exist only at a specific level of organization as a result of interaction between components which themselves lack these properties. Consequently, while these properties can be observed at a system level, they cannot be derived from atomic properties in any way other than creating (or simulating) the grain of sand. "Given the properties of the parts and the laws of their interaction, it is not a trivial matter to infer the properties of the whole" [26]. The whole is more than the sum of the parts.

This effect is not limited to a single level: grains of sand are built from atoms that are already an emergent level above the fundamental, and many grains blown in the wind can form ripples which cannot exist in a single grain of sand. In this way “a nested hierarchy of successively greater macro levels gives rise to multiple levels of emergence” [27]. This distinction in levels also applies to the processes taking place. Ripple formation cannot take place at the atomic level, and crystal formation cannot take place at the ripple level.

While it may already be difficult to derive the emergent properties of a particular level of organization from its immediate components, explanatory spanning of multiple levels becomes increasingly intractable. This is because the emergent properties of each level are based not on the fundamental, but on the organizational level immediately below it. For instance, while it may be possible to explain the formation of ripples on the beach on the basis of the interactions between wind-blown grains of sand, there is no direct relationship between these ripples and the properties of the subatomic particles from which grains of sand are built. The only way to achieve explanation is to progress stepwise through the intermediate levels. As pointed out by Davies and Brown in their book on superstrings “even if we identify the fundamental elements of the physical world, we cannot expect an understanding of its most complex features to follow automatically” [28].

To provide a foundation for the next level, each level must supply stable ordered entities with consistent interactional properties. Without stable atoms, there would be no grains of sand, without stable grains of sand there would be no ripples on the beach. The emergent properties observed in these systems are the causative result of the specific interactions and processes taking place between components: “mass-based and process/structure entities are intrinsically bound, being held together by forces internal to themselves.” [29]. Indeed, the very definition of a system is a persistent collection of components which interact more strongly with each other than with entities that are not members of the system.

This type of emergence is also relevant to the concept of entropy: a measure of the degree of disorder within a particular demarcated system. Each level of organization has its own degree of order or disorder. For instance, silica molecules can be in a disordered state, or regularly placed in the crystal lattice of a grain of sand; grains of sand may be in a minimal energy state of a flat layer or an ordered state of a rippled surface.

2.2. Designed machines

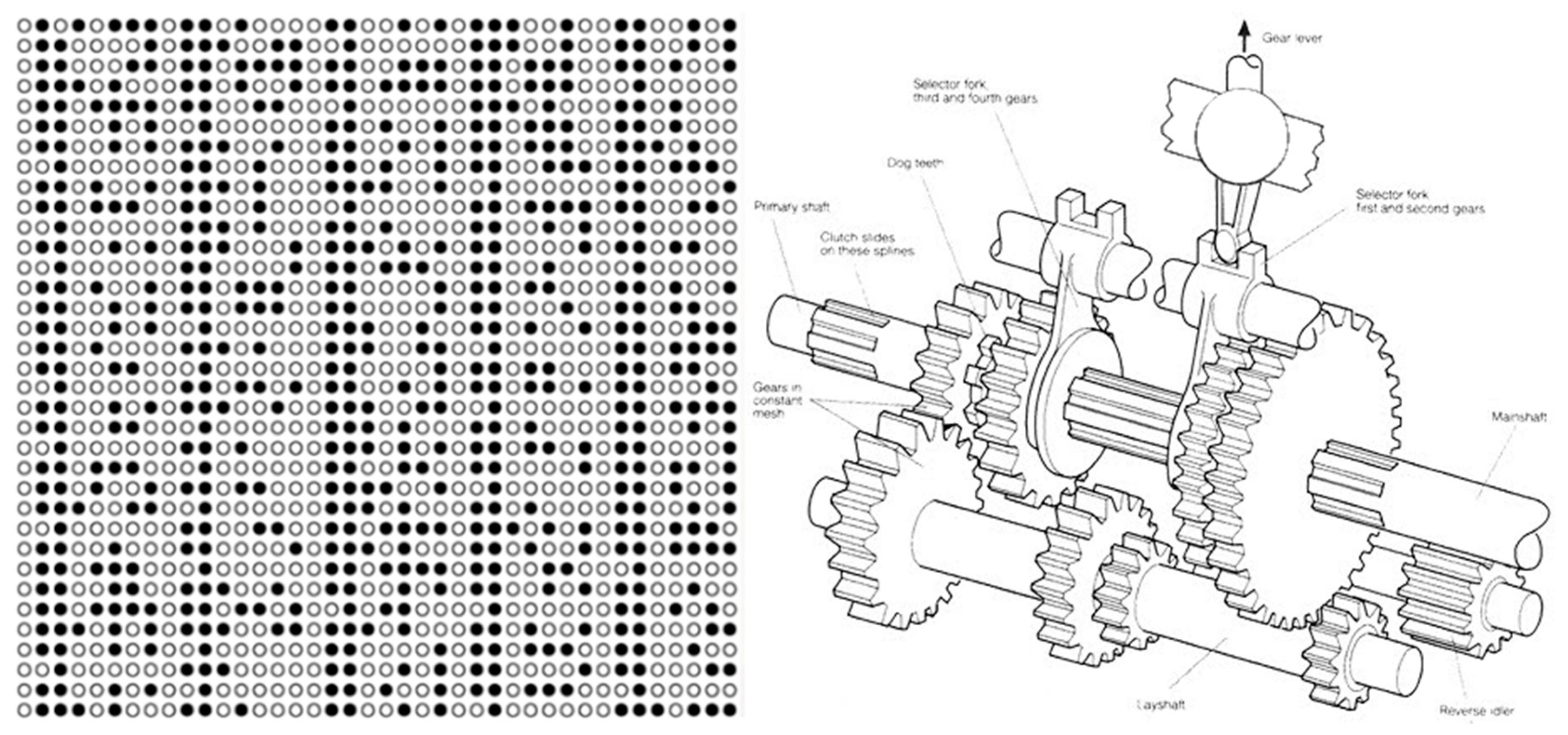

When we design machines, we invariably exploit weak emergence to create functions in the whole that do not exist in the components. A single bolt is of no use to us but combined with a diversity of other components in a specifically ordered way can be used to build a car with a transportation function. The design process is top-down: it is the required overall function that determines which parts are used, and how they are combined and contribute. The fact that machines are designed means that the relationship between system and component is entirely transparent. We know how the bolt contributes to the car’s function because we included it for that particular reason.

2.3. Living systems

Biological systems take emergence to a new level. In comparison to physical systems the levels of emergent organization in living organisms are extremely compact and the diversity and complexity of systems at each level immense. An ant weighing just 1 mg embodies complex structures and processes at the molecular, organelle, cell, tissue, organ and organismal levels [30]. This is a functional necessity since it would not be possible to leap from the properties of the molecule to the emergent characteristics of the organism in one step. An organism can only be built out of organs, an organ out of tissues and a tissue out of cells [31]. Indeed, life itself is one of the most remarkable emergent phenomena: a high-level systemic phenomenon that does not exist and has no meaning below the molecular level [32]. A particular carbon atom may be a constituent of a hemoglobin molecule transporting oxygen from the lungs to a muscle to allow the organism to flee from a predator, but this is not apparent at the atomic level. It is only when we step back and view the entire system that we can see that the systemic function of this carbon atom is entirely different from that of an identical atom in a carbon dioxide molecule drifting through the air, or an atom locked in a diamond buried deep underground. This function is determined not by the carbon atom, but by the system it is a part of.

As pointed out in Herbert Simon’s seminal paper [33], this sequential hierarchical organization is a prerequisite for the evolution of stable complex systems. Prokaryotic cells needed to evolve and stabilize before a number of these could combine to form the eukaryotic cell; the existence of eukaryotic cells made possible the evolution of the multicellular organism; only through the interactions between multicellular organisms can social structures arise. At each level, the creation of structure is optional. Prokaryotic cells, eukaryotic cells and organisms need not order themselves to form new emergent entities; they can also exist as unstructured collections of independent entities or looser collaborations. However, the formation of higher-level systems increases the persistence of the system and will therefore be selected by evolution when possible.

These systems are the products of selective evolutionary processes taking place at a particular level of organization (usually the single-celled or multicellular organism). This necessitates a strict demarcation of the boundaries of the selective unit and creates a strong mutual dependency between components that makes them inevitably subservient to the whole: “all processes at the lower levels of a hierarchy are restrained by and act in conformity to the laws of the higher levels” [34]. For instance, organisms routinely destroy individual cells when this is required in morphogenesis [35], and gene transcription may be determined by requirements at the much higher organizational level of the tissue [36].

The existence of such extremely non-equilibrium dynamic systems requires these autopoietic systems to use large amounts of external energy in the specific ways required to build and maintain multiple layers of complex organization from the molecular to the ecosystem [37]. The evolutionary and autopoietic origins of living systems make them inherently complex and introduce a range of unique phenomena and laws [38].

3. Information systems

3.1. Designed information in computers

How do these considerations of emergence in physical and biological systems relate to information? As we saw earlier, the smallest functional physical components of digital computers are magnetic bits in one of two states. These are not fundamental physical entities but structures built of atoms, which are in turn built of sub-atomic particles. In other words, they are themselves physically emergent. However, we also saw that it is the associated non-physical binary information, not these physical entities themselves, that is the ‘difference that makes a difference’. In contrast to physical bits, these binary values are not built from smaller information entities. They are indivisible and in this sense comparable to fundamental physical particles, which has led to the suggestion of giving these ‘information quarks’ a comparable name: quirks [39].

The computer’s binary values are of no more practical use than the bolt used to build a car. What interests us are forms of information with properties that cannot exist at this fundamental level: decimal numbers, texts, images, audio and video. These properties are created by combining the computer’s quirks into structured composite entities.

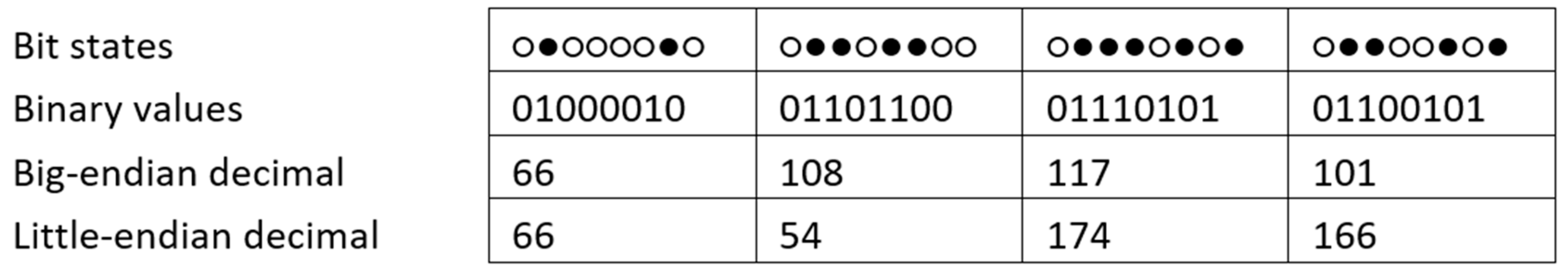

Strict coding rules allow us to create such higher level information entities by determining the physical states of the individual bits carrying binary values. For instance, to create the decimal value 66 we set the magnetic states of a series of 8 bits to down-up-down-down-down-down-up-down.

That this higher level information is more detached from its physical substrate than the binary values is demonstrated in Figure 1. This shows that exactly the same sequence of bit states can be associated with different decimal values depending on whether a big- or little-endian coding system is used, and these are just two of the limitless number of alternative coding systems that could theoretically be used to create decimal numbers out of binary values. There is consequently no inherent relationship between the physical states and the decimal number, and it is impossible to determine what information is present from the physical bit sequence alone.
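
The dependence on the coding system can be sketched in a few lines of Python. This is only an illustration under assumed conventions: the two-byte frame and the mapping of "up" to 1 and "down" to 0 are choices made here, not taken from Figure 1.

```python
# Two bytes of stored bit states (assumed convention: up = 1, down = 0).
# The second byte is the down-up-down-down-down-down-up-down pattern from the text.
raw = bytes([0b00000000, 0b01000010])

# The same physical sequence read under two byte-order conventions.
big = int.from_bytes(raw, byteorder="big")        # first byte is most significant
little = int.from_bytes(raw, byteorder="little")  # first byte is least significant

print(big)     # 66
print(little)  # 16896
```

Nothing in `raw` itself indicates which of the two readings is intended; that choice belongs to the coding convention applied by the reader.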

In this example the nature of the binary information is similar to the decimal values created: both are numbers.

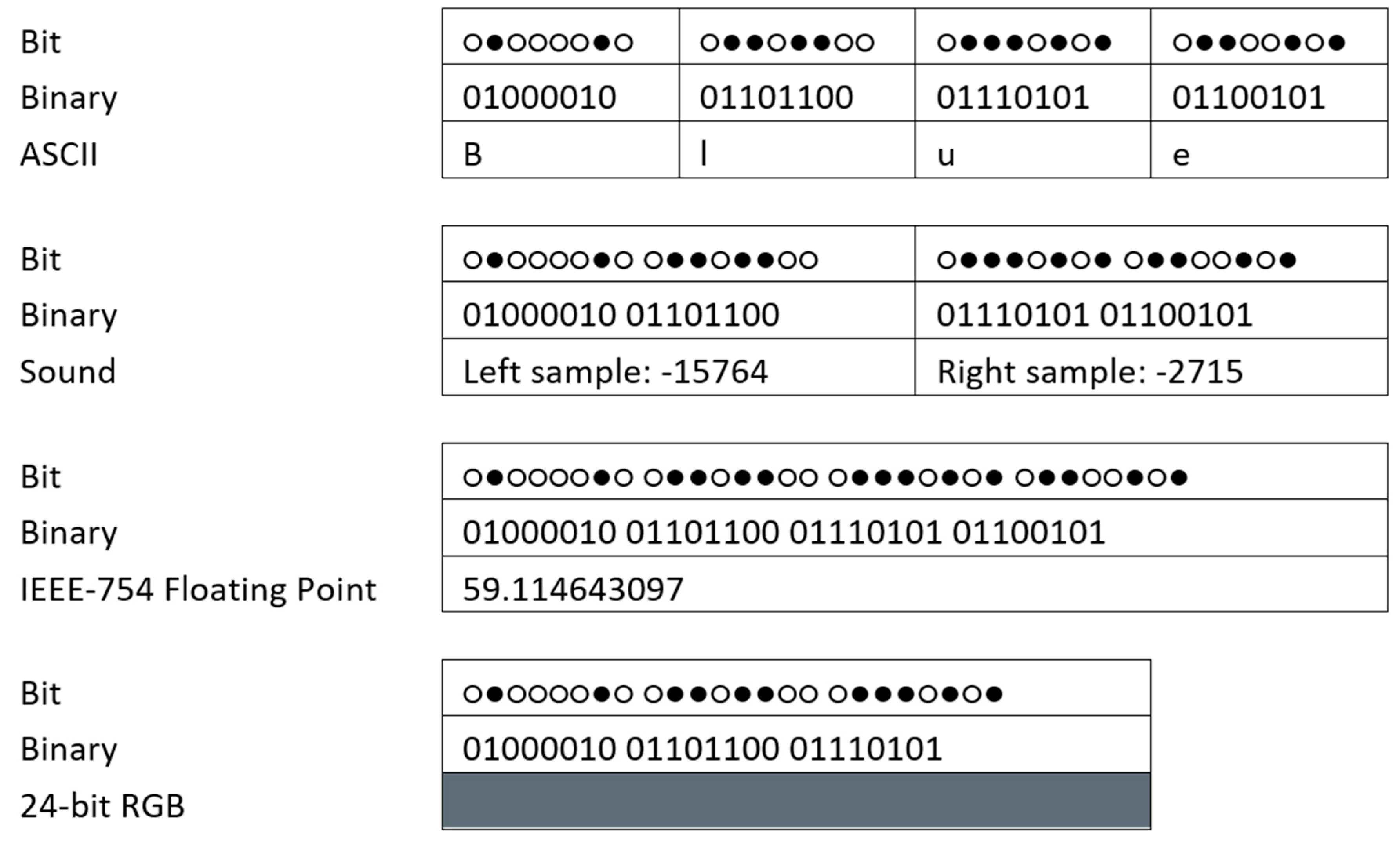

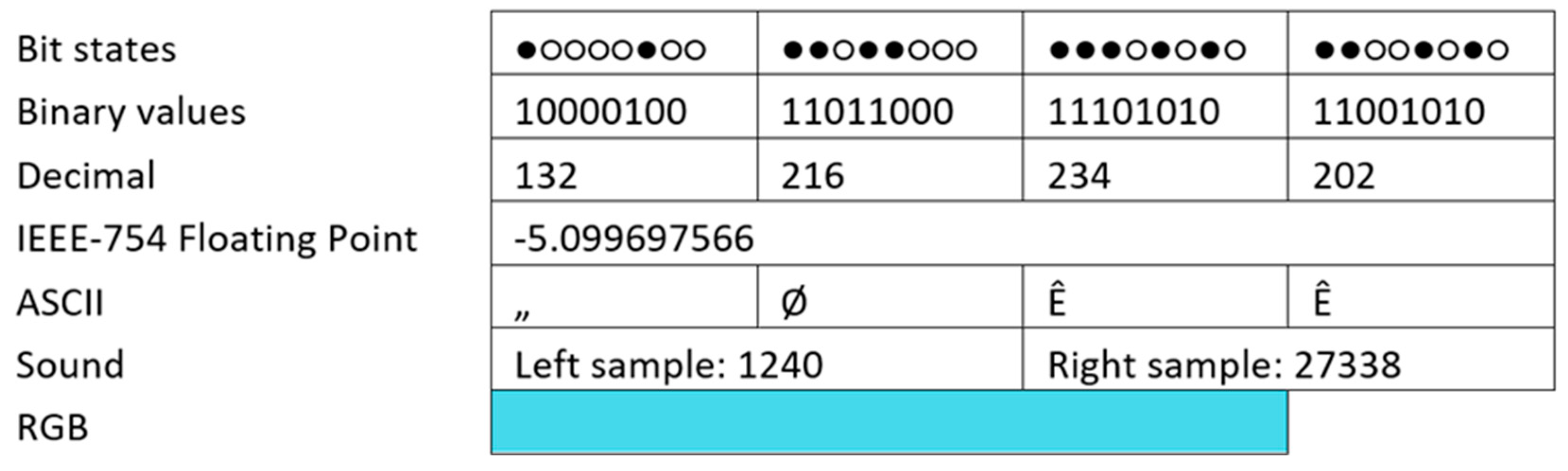

Figure 2 shows that, in addition to different outcomes, entirely different types of information can be created from the same bit sequences. While a floating-point value is again similar by nature to the binary values used to create it, this is not the case for the other examples. There is nothing numerical about a textual word, a sound or a color. Here, then, fundamental information entities are used to create emergent information with an entirely different nature and properties.
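
A similar sketch illustrates how one bit sequence can yield different types of information. The four byte values and the particular decoding conventions below (ASCII text, big-endian numbers, 24-bit RGB, 16-bit samples) are assumptions chosen for illustration and are not the examples used in Figure 2.

```python
import struct

# One fixed 32-bit sequence (hypothetical example values).
raw = bytes([0x42, 0x61, 0x63, 0x68])

as_uint32 = int.from_bytes(raw, "big")    # an unsigned integer
as_float32 = struct.unpack(">f", raw)[0]  # an IEEE 754 single, roughly 56.35
as_text = raw.decode("ascii")             # a four-letter word
as_rgb = tuple(raw[:3])                   # a 24-bit color (first three bytes)
as_samples = struct.unpack(">2h", raw)    # two signed 16-bit audio samples

print(as_uint32)   # 1113678696
print(as_text)     # Bach
print(as_rgb)      # (66, 97, 99)
print(as_samples)  # (16993, 25448)
```

Each result is equally valid as a reading of the same bytes; which one counts as the stored information depends entirely on the protocol applied.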

Figure 2 also demonstrates the importance of framing: while one type of information is based on 8-bit frames, others require 16, 24 or 32. Yet there is nothing inherent to the physical bit sequence that tells us how we should break it down into these blocks or indeed where. The consequent fragility of our ability to determine the higher level information associated with a particular bit sequence is demonstrated by the impact of framing errors (Figure 3). Shifting the frame by just one bit leads us to derive entirely erroneous higher-level information using the same bits and protocols.
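
The effect of a framing error can be reproduced with a minimal sketch. The helper names `to_bits` and `frames` and the three-letter message are hypothetical and serve only to illustrate the point.

```python
def to_bits(data: bytes) -> str:
    """Write each byte as eight characters '0' or '1', most significant bit first."""
    return "".join(f"{byte:08b}" for byte in data)

def frames(bits: str, offset: int = 0, width: int = 8) -> list:
    """Cut the bit string into fixed-width frames starting at `offset`.
    Trailing bits that do not fill a whole frame are discarded."""
    usable = bits[offset:]
    count = len(usable) // width
    return [int(usable[i * width:(i + 1) * width], 2) for i in range(count)]

bits = to_bits(b"ABC")

aligned = frames(bits)            # correct framing
shifted = frames(bits, offset=1)  # the same bits, framed one position late

print(bytes(aligned))  # b'ABC'
print(shifted)         # [130, 132]
```

With the frame shifted by one bit the values fall outside the ASCII range altogether, although neither the bits nor the decoding rule has changed.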

In general, this second level of emergence is still insufficient. Instead, it only provides the components required for the creation of meaningful higher levels such as an image, music, text or video. In each case, a specific coding protocol is used that is suited to the type of information involved [40].

These examples illustrate a fundamental difference between the information emergence used in computers and the physical emergence used in machines. Because these phenomena are based on the informational properties of binary quirks rather than the physical properties of magnetic bits they are not physically ordered structures. The energy consumed to create this information does not lead to a corresponding reduction of physical entropy.

This is not to say that the resulting bit sequences do not have structure. However, these regularities relate to the structure of the linear binary code, which is only indirectly related to the higher-level information stored. Strictly speaking, the Shannon entropy [41] of a bit sequence relates to the predictability of each physical bit. The direct relationship between the bit and its associated binary value means that the latter is equally (dis)ordered. However, we have seen that it is higher-level numerical, textual, sound, image and video information that matters. Just as the emergent properties of living systems cannot be seen in the atoms they are composed of, this emergent information is not visible in the bit sequences on the surface of a computer disk. The only way to know which information is present is to reconstruct it using the appropriate hierarchical sequence of coding systems.
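
The limited reach of a bit-level entropy measure can be shown with a simple estimate. The sketch below assumes a zeroth-order model in which each bit is treated as independent; the function names are hypothetical.

```python
from math import log2

def to_bits(data: bytes) -> str:
    """Write each byte as eight characters '0' or '1'."""
    return "".join(f"{byte:08b}" for byte in data)

def bit_entropy(bits: str) -> float:
    """Zeroth-order Shannon entropy in bits per symbol.
    Uses only the relative frequencies of 0 and 1 (an assumed simplification)."""
    p_one = bits.count("1") / len(bits)
    probabilities = [p_one, 1.0 - p_one]
    return sum(-p * log2(p) for p in probabilities if p > 0)

h_ab = bit_entropy(to_bits(b"AB"))
h_ba = bit_entropy(to_bits(b"BA"))

print(h_ab == h_ba)  # True
```

Under this estimate the two byte sequences are indistinguishable, although they decode to different text. The measure describes the statistics of the carrier, not the higher-level information reconstructed from it.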

The importance of hierarchical order is evident in the fact that bit-level regularities are not used in the compression of digital data. Far better results can be achieved by operating on the structures existing at higher levels of informational organization. The fact that each type of information is characterized by different regularities, structures and redundancies means that type-specific methods are required to optimize storage. For instance, removing one of two identical adjacent 24-bit colors in a digital image is more productive than looking for bit-level patterns, and working with the similarities between subsequent frames is an effective way of compressing video [42]. Another advantage of working at the level of the functional information is that it permits lossy compression: not all bit-level details are relevant and need to be kept. In this we can exploit the characteristics of our sense organs, for which the information is destined [43].
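
As a rough illustration of compression at the level of the functional information, the following sketch applies run-length encoding to whole 24-bit colors instead of to individual bits. The pixel values are invented, and run-length encoding stands in here for the more elaborate methods used by real image formats.

```python
from itertools import groupby

# A hypothetical row of pixels, each a 24-bit (R, G, B) color.
red, blue = (255, 0, 0), (0, 0, 255)
pixels = [red, red, red, red, blue, blue, red]

# Collapse runs of identical adjacent colors into (color, run length) pairs.
encoded = [(color, len(list(run))) for color, run in groupby(pixels)]

# Expanding the pairs restores the original row exactly (lossless).
decoded = [color for color, count in encoded for _ in range(count)]

print(encoded)  # [((255, 0, 0), 4), ((0, 0, 255), 2), ((255, 0, 0), 1)]
```

The encoder operates on colors as units and never inspects their bits, which is the sense in which the regularity exploited belongs to the higher informational level.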

The use of emergence in information technologies is not limited to computers. It is also seen in written texts. A book is a device designed to transfer semantic information from the mind of the author to the mind of the reader. To do so, specific hierarchical conventions are used relating to the markings used. Here, the first level of information is the letter, which is combined sequentially into emergent words, sentences, paragraphs, chapters and so forth. The first levels in particular demonstrate clear functional emergence: a sentence can carry syntactic meaning that cannot exist in a word; a word can carry nominative information that cannot exist in a letter.

Given only ink-marked paper without knowing the conventions used it is impossible to determine the information it carries – or, indeed, whether it carries any. At best, by measuring the Kolmogorov entropy of the physical markings we could calculate the maximum amount of information that they could carry [44]. Regularities in these patterns could also be used as evidence that it carries semantic information rather than being a random sequence of markings. However, such statistical methods give no guarantee of the actual presence of meaningful information, as demonstrated by the unsolved riddle of the Voynich manuscript [45]. Further evidence for the distinction between physical states and the associated information is seen in encryption, which exploits this detachment to make the information intentionally difficult to extract. Such concealment would be impossible if information was indeed a property of the physical substrate.
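
The detachment exploited by encryption can be illustrated with a toy one-time pad. The messages and the hard-coded key below are hypothetical, and the sketch is meant as a demonstration of the principle and not as a usable cipher.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Combine two equal-length byte strings with bitwise XOR."""
    return bytes(x ^ y for x, y in zip(a, b))

plaintext = b"ATTACK"
key = bytes([0x13, 0x9A, 0x5C, 0x07, 0xE1, 0x42])  # hypothetical key, fixed for reproducibility
ciphertext = xor_bytes(plaintext, key)

# For any other message of the same length there is a key that maps
# the very same stored bits to that message instead.
other_key = xor_bytes(ciphertext, b"RETIRE")

print(xor_bytes(ciphertext, key))        # b'ATTACK'
print(xor_bytes(ciphertext, other_key))  # b'RETIRE'
```

The stored bit sequence is identical in both cases; which message it carries is fixed only by the key used to read it.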

3.2. Designed processing in computers

The previous paragraph dealt with the information processed by a computer – its raw materials and products – but emergence is equally important to its functions. At the functional heart of the computer is a processor that works according to Turing’s design for linear information processing [46]. This is constructed in a machine-like fashion [47]. Its Central Processing Unit includes an arithmetic logic unit (ALU), a control unit (CU) and a number of registers, each with its own function, which together allow the computer to run its programs. However, this machinery does not determine which information processes the computer performs, only that it is capable of performing them. Instead, the functions of the computer are created in the same way as the emergent information they operate on: by setting bit states according to specific coding rules and logical architectures such as programming languages [48] and object-oriented programming [49]. Like the information they work on, these high-level functions are not physically apparent and can only be reconstructed by applying the appropriate coding system to measured bit sequences.

Like physical machines, computer programs are created through top-down design. The function of the program determines sequentially which subroutines, code lines and operations are required, and thus ultimately the required states of the physical bits involved. In contrast with physical machines, the operation of the program does not result in the creation of a physical product, but informational output that is associated with but distinct from the sequences of physical bits that are used to create it.

The functions of software are the result of at least four levels of emergent organization: the operator, the code line, the subroutine and the program. Each hierarchical level has functional capabilities that cannot exist in its component parts, with the top level far removed from the basic operations performed by the CPU. The construction of higher-level functions is not based on the combination of the physical functions of components as it is in physical machines, but on the integration of the informational functions of underlying levels (Figure 4).
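
The four levels can be made concrete with a small sketch. The function names and the choice of a statistical summary as the program's purpose are hypothetical and chosen only for illustration.

```python
# Level 1, operators: +, -, * and / are the primitive operations.
# Level 2, code lines: each statement below combines a few operators.

# Level 3, subroutines: named units built from code lines.
def mean(values):
    total = 0.0
    for v in values:
        total = total + v
    return total / len(values)

def variance(values):
    m = mean(values)
    return mean([(v - m) * (v - m) for v in values])

# Level 4, program: a function that exists only through the subroutines it integrates.
def report(values):
    return f"mean={mean(values):.2f} variance={variance(values):.2f}"

print(report([2, 4, 4, 4, 5, 5, 7, 9]))  # mean=5.00 variance=4.00
```

No single operator or code line computes a variance; that capability appears only at the level of the subroutine, and the report only at the level of the program.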

A consequence of this separation between mechanism and function is that, in contrast with physical machines, new physical mechanisms are not required to create new functions. Indeed, as theoretically proven by Turing, the same physical programmable computer can perform any algorithmic function. Furthermore, in contrast with physical machines which generally perform just one function, computers have a vast array of potential stored functions of which only one can be performed by the CPU at any particular moment. The selection of functions may be initiated by physical events such as keyboard input, but this in itself first needs to be processed and interpreted using other functions. Ultimately, which code is run is determined not by some physical mechanism but by one of the fundamental logical functions performed by its ALU: the conditional branching function. This logical function is itself controlled by higher-level functions through top-down causation to produce the outcome required of the overall program. Significantly (and in contrast with physical machines) this causation is not based on emergent physical processes, but on non-physical emergent information processes.

Another consequence of the separation between the physical and the informational is the fact that running a computer program does not lead to the wear and tear that is inevitable in physical machines. Instead, the maintenance that computers require is of an entirely different nature [50] and software entropy [51] is an entirely different parameter from the entropy of the physical substrate used to store the software. Here, too, we see the separation between the physical substrate and the informational phenomena that rest upon it.

5. The brain

The previous paragraphs provide necessary evidence for the central thesis of this article: that the phenomena and functions created by the brain, including consciousness, are the product of the strongly emergent hierarchical organization of fundamental non-physical information entities and processes that are associated with, but not synonymous with, observable states and mechanisms in the physical substrate. It is, however, essential to carefully identify and acknowledge the similarities and differences between the brain and the other systems discussed.

5.2. Emergent living systems

Any explanation of the brain must be founded on the special characteristics of living systems. We know that a brain is not a machine constructed on the basis of a design, but an organic self-organizing system. Like all of the body’s organs, it has an observable large [53] and small scale [54] anatomy created by the differentiation and spatial distribution of different types of cells. However, in contrast with organs with a physical function such as the heart or kidney, this form of physical organization does not equate to the brain’s functions.

Instead, its physiology is only supportive of the network of connections between its cells: the connectome. It is this network that determines its functions. While the positions of neurons in the mature brain are relatively static, the connections between them are highly dynamic. This is comparable to the distinction between system and function seen in Artificial Intelligence systems, with the difference that the brain itself is also a product of self-organization while AI systems are created through design and construction.

A significant and generic characteristic of living systems is their strong emergence, which is necessary to create and maintain their non-equilibrium structures and functions [33]. This factor will be of particular importance in the dynamic connectome [55] in which the continual making and breaking of connections must, like all formative processes in living systems, be under the control of higher-level systems [56]. If this were not the case, the Second Law of Thermodynamics would prevail, resulting ultimately in random connectivity that would produce only noise.

Further evidence for emergence in the brain can be found in its immense scale relative to its smallest components. The human brain is the current end product of a long evolutionary line that started with animals with very simple nervous systems. Like in early computers, the limited scale of these primitive versions can support little emergence. However, even in the 302-neuron connectome of the roundworm Caenorhabditis elegans there is evidence for functional emergence [57]. A further step takes us to the nervous systems of insects, which include ganglia built from tens of thousands of strongly interconnected neurons [58]. Such nervous systems are capable of supporting a far more considerable degree of emergence. The further progression to cephalization seen in vertebrates such as lizards [59] brings us to the scale of millions of neurons, and in mammals to the hundreds of millions [60]. In primates [61] and humans [62] the numbers escalate to tens of billions, providing more than ample material for the construction of multiple hierarchical levels.

Together, these considerations make it implausible that the brain’s functions are simply a result of linear processes taking place at a single level of organization. Instead, like in all machines and living systems, they must be the product of several levels of emergence, each with its own characteristics that form the foundation for the next. The question is then what form these hierarchical levels take.

5.3. Information systems

The comparison between physical machines and programmed computers is relevant because, having designed and built both, we fully understand their workings. This makes them useful in illustrating the functional emergence that both exhibit, as well as the significant differences between physical and informational systems. This distinction is comparable to that between brains and other organs. We must therefore expect functional emergence in brains, as in computers, to be informational rather than physical.

However, the fact that the brain’s neural networks [63] operate in an entirely different way from computers means that specific insights into informational mechanisms and phenomena in brains cannot be derived from what we know about computers. While Artificial Neural Networks (ANNs) are more comparable, closer examination reveals that even the most advanced are far less complex and lack many of the characteristics of biological neural networks [64]. This will inevitably lead to significant differences between the emergent phenomena created by ANNs and brains, even if they exhibit comparable input-output relationships.

As a consequence of the brain’s informational function, the significance of its operational mechanisms lies not in their physical effect, but in their associated informational effect. It is for this reason that the brain’s high energy expenditure [65] does not produce physical work (such as the heart’s pumping of blood) or lead to the creation of physically useful products (such as protein synthesis). As in the computer, its energy expenditure instead results in the creation of informational functions and products.

5.4. Morphology and networks

The connections in the brain do not only link neighboring neurons. Since distant neurons can be connected while neighboring cells may not be, functional proximity does not correspond with physical proximity. The functionally relevant structures are therefore not based on physical features but on the topology of the connectome [66] which can better be approached using graph theory [67] than anatomical morphology.
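The distinction between physical and functional proximity can be illustrated with a minimal sketch. The four-node network, its coordinates and its connections below are entirely hypothetical and are not taken from any connectome data; the sketch only compares Euclidean distance with the number of connections on the shortest path between two nodes.

```python
from collections import deque
from math import dist

# Hypothetical toy network: node -> (x, y) position in arbitrary units.
positions = {"a": (0.0, 0.0), "b": (1.0, 0.0), "c": (2.0, 0.0), "d": (9.0, 0.0)}

# Hypothetical undirected connections. "a" and "b" are physical neighbors but
# are only linked via a detour; "a" and "d" are far apart but directly linked.
edges = [("a", "d"), ("d", "c"), ("c", "b")]


def build_adjacency(edge_list):
    """Turn a list of undirected edges into a node -> neighbors mapping."""
    adjacency = {}
    for u, v in edge_list:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency


def hop_distance(adjacency, start, goal):
    """Number of connections on the shortest path (breadth-first search)."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, hops = queue.popleft()
        if node == goal:
            return hops
        for neighbor in adjacency.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, hops + 1))
    return None  # goal not reachable from start


adjacency = build_adjacency(edges)
for source, target in [("a", "b"), ("a", "d")]:
    physical = dist(positions[source], positions[target])
    functional = hop_distance(adjacency, source, target)
    print(f"{source}-{target}: physical distance {physical}, hops {functional}")
```

In this toy case the physically closest pair is the most distant in terms of connections, which is the sense in which a graph-theoretical description can diverge from an anatomical one.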

Analysis of neural networks demonstrates the existence of regular, random, scale-free and small-world topologies [68] [69]. Such analyses also reveal that the connectome itself is hierarchically organized, necessitating consideration of neural circuits [70] and modularity [71]. Viewing the connectome purely from the lowest level of nodes and connections misses such significant features.

5.5. Complexity and non-detectability

Attempts to scale up our understanding of simple biological neural networks to the level of the entire brain are fraught with technical difficulties [72] but this approach also faces a more fundamental issue. As in computers, the brain’s informational phenomena are not directly detectable as physical properties or processes. It may be possible to derive some very basic information processing capabilities from physical mechanisms. For instance, analysis of the physical functions of the brain’s neural networks shows how they can perform Boolean operations [73]. However, the organic origins of the brain cause even its basic level of neuronal structures and processes to be more complex than the computer’s bits and CPU [74]. The brain’s neural circuits [75] are also far more complex and varied than the wiring of a computer’s CPU. A synapse, for instance, is not an all-or-none binary connection: it can be excitatory or inhibitory, have varying strengths and use different neurotransmitters. In addition, the function of the brain relies on more than just wiring. Dynamic features such as spike train frequency and timing [76] also play an important role. Even in sensory areas, where information input can be directly correlated to neural activity, the relationship is far from simple [77].
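As a rough illustration of how a Boolean operation can be read off a simple neuron-like mechanism, the sketch below uses a textbook threshold unit in the style of McCulloch and Pitts. This is an assumption made for illustration and is not the spiking model analyzed in [73]; the function names, weights and thresholds are hypothetical. Positive weights stand in for excitatory synapses and negative weights for inhibitory ones.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def neuron_and(a, b):
    # Two excitatory inputs; both must be active to reach the threshold.
    return threshold_neuron((a, b), (1, 1), 2)


def neuron_or(a, b):
    # Two excitatory inputs; either one is enough to reach the threshold.
    return threshold_neuron((a, b), (1, 1), 1)


def neuron_not(a):
    # One inhibitory input; the unit fires only when the input is silent.
    return threshold_neuron((a,), (-1,), 0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", neuron_and(a, b), "OR:", neuron_or(a, b))
print("NOT 0:", neuron_not(0), "NOT 1:", neuron_not(1))
```

Even this simplified unit already needs signed, graded weights rather than all-or-none connections, and it ignores spike timing altogether.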

Mapping the connectome therefore brings us to the same challenge as reading byte sequences on a computer disk: how to reconstruct the higher information levels from such low-level data. In computers we span multiple levels through reconstruction using formal protocols and languages, but we cannot use this method in the brain. After all, since connectomes are created through self-organization there is no design or protocol available to be used in this way. This relationship between physical processes and informational functions is comparable to but even more obscure than that seen in the deep neural networks used in Artificial Intelligence systems [78].
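The dependence of reconstruction on a known protocol can be sketched in a few lines. The four bytes below are an arbitrary, hypothetical example; the point is only that the same physical byte sequence yields different higher-level information depending on which decoding convention is assumed.

```python
import struct

# An arbitrary four-byte sequence, as it might be read from a disk.
raw = bytes([0x48, 0x69, 0x21, 0x00])

# Interpretation 1: the first three bytes as ASCII text.
as_text = raw[:3].decode("ascii")

# Interpretation 2: all four bytes as one little-endian unsigned 32-bit integer.
as_uint32 = struct.unpack("<I", raw)[0]

# Interpretation 3: the first three bytes as a red/green/blue color value.
as_rgb = tuple(raw[:3])

print("text:   ", as_text)
print("integer:", as_uint32)
print("color:  ", as_rgb)
```

Nothing in the bytes themselves indicates which of these readings is intended; that knowledge resides in the design of the system, which is exactly what is unavailable for a self-organized connectome.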

5.6. Multifunctionality and informational determinism

A significant similarity between brains and computers is that, as a consequence of the separation of physical mechanisms from informational functions, they store an immense diversity of information and functions, most of which are inactive at any particular moment. Activation may in some cases be initiated by external stimuli, but even then the connection between the stimulus and the activation is itself (with the exception of simple reflex reactions) mediated by internal information processes. As with the programmatic control of the CPU branching function in computers, this means that while choices and decision making are ultimately supported by cellular events, they are causally determined by processes taking place at higher levels of information organization.

This causality applies not only to selection between alternative activities but also to the activities themselves. Like high-level physical phenomena, these will operate according to their own laws that are not relevant or applicable at lower levels. As in physical biological systems, these higher level processes will exert strong top-down control on underlying levels, all the way down to the basal information associated with the neuronal structures and functions.

We can therefore conclude that one of the most remarkable properties of brains is that they have evolved to transfer top-down control from high-level physical processes to high-level informational processes. In this way, an integrated causality is created that combines physical determinism with influences from the information dimension.

5. Discussion: emergent information and Integrated Information Theory

In the context of this special issue, we need to ask how the conclusions presented in this article relate to IIT, which aims to connect the characteristics of consciousness with those of the physical substrate supporting it. There is certainly agreement on a number of key concepts. Perhaps most importantly, both see consciousness as a real but non-physical phenomenon. Both also recognize the importance of large-scale causal integration of interacting neurons in the brain, as quantified in IIT’s measure of Phi [1]. Furthermore, IIT sees consciousness as an emergent phenomenon related to the information generated by a physical system, although its definitions of the terms ‘emergent’ and ‘information’ differ from those used in this article.

By taking a wider view of physical systems, and also of designed machines, this article demonstrates that internal causal integration is in fact a characteristic of all demarcated dynamic systems and underlies the general organization of physical reality into hierarchical levels, each with its own unique emergent properties. Living systems are shown to take this to a new level. Emergent Information Theory (EIT) distinguishes such physical systems from designed technological and evolved biological systems (computers and nervous systems) in which the interactions underlying the integration are not based on physical characteristics but on the information associated with them. A system that operates on the basis of physical mechanisms alone will not produce emergent information, however high its complexity and integration, as measured by Phi.

All but the simplest of information functions in the brain necessitate hierarchical emergence to create information functions not present in basic components and low-level processes. This approach therefore supports an association of consciousness with high values of Phi, but in a more specific way. Just as you need 10² interacting atoms to create a molecule, 10¹² to create an organelle, 10¹⁴ to produce a cell and 10²⁰ to reach the level of the organism, so too will a minimum number of fundamental information entities be required to create a particular level of emergent information. This is clearly evident in computers: 8 bits for a small integer, 24 for a color, thousands for a simple image, millions for a few seconds of uncompressed sound and billions for a few seconds of uncompressed video. It is not the case that a binary value has a low level of the characteristics of a video. At this level video simply does not exist.
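The bit counts involved in such a comparison depend strongly on the format and duration assumed. As an order-of-magnitude check, the sketch below counts bits for a few uncompressed formats. The specific formats (a 32 × 32 pixel icon, CD-quality stereo audio, 1080p video at 30 frames per second, a duration of 3 seconds) are illustrative assumptions and are not taken from this article.

```python
# All formats below are assumed for illustration and are uncompressed.
SECONDS = 3  # "a few seconds"

small_integer_bits = 8                       # one byte, values 0 to 255
color_bits = 3 * 8                           # red, green, blue at 8 bits each
icon_bits = 32 * 32 * color_bits             # 32 x 32 pixel image
audio_bits = 44_100 * 16 * 2 * SECONDS       # 44.1 kHz, 16-bit, stereo
video_bits = 1920 * 1080 * color_bits * 30 * SECONDS  # 1080p at 30 frames/s

for label, bits in [
    ("small integer", small_integer_bits),
    ("color", color_bits),
    ("simple image", icon_bits),
    ("sound", audio_bits),
    ("video", video_bits),
]:
    print(f"{label:>13}: {bits:,} bits")
```

Under these assumptions each step up in the kind of information requires roughly three or more orders of magnitude more binary values than the one before it.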

This tells us that we should not expect low-Phi informational systems to have a low level of consciousness. Instead, at these scales consciousness will have no meaning. This conclusion is consistent with empirical estimates of Phi associated with various brain states [79], and in particular with the state transitions observed. Falling into (or returning from) deep sleep or anesthesia does not involve a graded change in consciousness, but a cessation (or resumption) as integration crosses a particular, but still very high, threshold value.

This evidence, combined with the potential of the brain to support multiple levels of emergence, the fact that consciousness appears to be one of the brain’s more advanced functions, and the difference in nature between qualia and the simple information processes supported by mechanisms operating at the neuronal level, is consistent with consciousness emerging only at a relatively high level of organization.

Rather than consciousness being a gradient present to varying degrees in all integrated information systems, this article therefore concludes that it is just one member of a large family of emergent information phenomena created by the brain, each at its own level of organization. A high Phi value is therefore a necessary but not a sufficient criterion for the existence of consciousness, and then only in informational systems.

Consciousness is not unique in this sense. The majority of the brain’s observed functions, of which many require high levels of information processing and considerable integration, are entirely non-conscious. Furthermore, it is often possible to perform the same functions, for instance sporting abilities [80], consciously or subconsciously. These considerations strongly suggest that consciousness does not exclusively inhabit its particular level of organization. Instead, other functionalities lacking the unique characteristic of phenomenal experience can also be expected to exist at this level.

This consideration of consciousness as a specific form of emergent information has consequences for the possibility of it existing in digital systems. If the underlying physical substrate and mechanisms are significantly different then the emergence of identical higher-level phenomena is unlikely even if Phi values and input-output relationships are comparable. To suggest otherwise is akin to proposing that a tractor uses muscles because it is comparably complex and can pull a plough in the same way as a muscle-powered horse. The only reason there is still discussion on this topic [81] is that, while we can observe the physical mechanisms supporting plough pulling in these two systems and see them to be different, this is not possible with emergent informational mechanisms. Even at the fundamental level, information is shown to be a non-detectable, non-physical phenomenon that can only be derived from the physical measurements it is associated with on the basis of knowledge of the workings of the system. We have seen that in emergent physical systems ontological spanning of several levels of organizational emergence is not possible. The relationship between the ripples on the beach and the atoms in the silica crystals of the grains of sand only exists through the successive steps of the intermediate levels. Similarly, there is no direct relationship between the function of an entire computer program and the Boolean operations performed by the computer’s CPU. Instead, this function is the culmination of successive levels of operations, code lines, and subroutines.
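The layering between Boolean operations and program-level function can be made concrete with a small sketch. The example below is a hypothetical illustration, not a description of any particular CPU: it builds integer addition in successive steps from a single NAND operation, with each level defined only in terms of the level directly beneath it. All function names are chosen for this illustration.

```python
# Level 0: a single Boolean operation.
def nand(a, b):
    return 0 if (a and b) else 1


# Level 1: other logic gates, each built only from NAND.
def gate_and(a, b):
    return nand(nand(a, b), nand(a, b))


def gate_or(a, b):
    return nand(nand(a, a), nand(b, b))


def gate_xor(a, b):
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


# Level 2: a one-bit full adder, built only from level-1 gates.
def full_adder(a, b, carry_in):
    partial = gate_xor(a, b)
    total = gate_xor(partial, carry_in)
    carry_out = gate_or(gate_and(a, b), gate_and(partial, carry_in))
    return total, carry_out


# Level 3: multi-bit integer addition, built only from full adders.
def add(x, y, width=8):
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # any overflow beyond `width` bits is discarded


print(add(19, 23))
```

The notion of a sum only appears at the top level; inspecting a single NAND operation reveals nothing about addition, although every addition is carried out entirely by such operations.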

This situation has consequences for the derivation of the specific characteristics of consciousness from the physical characteristics of neural networks, as proposed by IIT. While this may be achievable for the simplest information functions they support, it will be impossible for the higher level phenomena (including consciousness) which ultimately emerge from these functions. Instead, the characteristics of consciousness as a systemic phenomenon will be derived from the interactions taking place between its immediate informational components, which will themselves only be indirectly derived from the brain’s lowest level structures and functions. This obscurity is already apparent in far simpler Artificial Neural Networks in which function is also created through self-organization rather than design.

Phi can therefore be useful in determining the scale and overall complexity of an integrated information system required to construct emergent hierarchies culminating in consciousness. However, these higher levels also house non-conscious processes that are equally dependent on this degree of integration. This is comparable to the fact that millions of water molecules can combine to form either a snowflake or steam, or in a computer millions of binary values may be combined to create either a text or a sound: it is not the scale of the system that determines the outcome, but the specific ways in which components combine to form high level emergent phenomena.

In its current early stage of development, EIT cannot solve the hard problem by explaining the properties of specific forms of emergent information such as qualia, nor can it quantify the value of Phi (or other potential factors) necessary for their existence. In its favor, by encompassing all processes in natural and artificial information-based systems it provides a far more generic theoretical framework: a measure characteristic of scientific progress. In doing so it explains why it is not possible to detect consciousness and other high-level information phenomena using physical devices and provides a direction for the development of methods and means for such empirical investigation. The quantitative results produced by IIT may be useful in focusing effort on those levels of organization at which consciousness emerges.

While this challenge may currently seem insurmountable, there is no justification for despondency that it is beyond our ingenuity. After all, while it took 40 years, the existence of the theoretically predicted Higgs boson was also eventually experimentally confirmed [17]. Rather, this approach opens up a new and promising avenue of theoretical and scientific investigation.

6. Conclusion

Considering the way in which all living systems work, we must assume that brain functions are based on stratified functional emergence. On account of their informational (rather than physical) function we can conclude that this emergence is not based on physical characteristics and interactions, but on the relationships and interactions between information associated with the physical substrate. The systems so created will therefore not equate to physical brain structures created through morphogenic processes.

In computers binary values are associated with bit states, and basic logical functions with electronic operations; in brains comparably simple information and information processes will be associated with cellular states and the flow of electrical currents through its networks. These fundamental information entities and processes, and the emergent levels constructed from them, are non-physical but real phenomena. The indirect correlation with the physical substrate exists equally for both conscious and non-conscious phenomena created by the brain, as does the necessity for a high degree of causal systemic integration, as quantified in IIT’s Phi value. This unified explanation for conscious and non-conscious brain functions is a necessity considering their common origins from the same physical system and the lack of sharp demarcation between them.

As non-physical phenomena, all forms of the information involved in the function of brains are undetectable using physical instruments. This therefore constitutes substance dualism in the literal sense of the word, but with a far broader scope than the historical focus on human consciousness. Indeed, this dualism also underlies the function of information technologies. In programmed computers the use of coding systems provides a workaround to the problem of non-detectability, allowing higher levels of emergent information to be reconstructed from physical state measurements. In both AI systems and brains the creation of function through self-organization makes this approach impossible. Consequently, while phenomenal consciousness provides indirect evidence, and the advanced capabilities of the brain provide functional evidence, empirical investigation of emergent information in the brain remains problematic. What is eminently clear is that the base-level information and processes coupled to the physical substrate provide insufficient explanation. As in physical reality, understanding of the fundamental level does not constitute understanding of the emergent levels constructed from it.

Acknowledging emergent information as a generic explanation does not in itself explain the distinguishing features of consciousness. It does, however, provide a context within which such non-physical features are to be expected. It could be claimed that the phenomenal properties of consciousness necessitate special attention and a distinguishing explanation. However, doing so isolates consciousness and prevents us from seeing it as part of an integral physico-informational reality: an isolation that is far from splendid and scientifically implausible. Furthermore, this is to do its fellow high-level information phenomena an injustice. Indeed, it would seem that we may need to invert Chalmers’s definitions of the hard and easy problems [19]. After all, the way in which consciousness is subjectively experienced and can be reported actually makes it easier to investigate than equally significant, advanced and potentially remarkable emergent informational phenomena for which no such evidence is available.

References

- Oizumi, M.; Albantakis, L.; Tononi, G. From the Phenomenology to the Mechanisms of Consciousness: Integrated Information Theory 3.0. PLOS Comput. Biol. 2014, 10, e1003588.

- Papineau, D. What Exactly is the Explanatory Gap? Philosophia 2011, 39, 5–19.

- Riek, L.; Rabinowitch, T.; Chakrabarti, B.; Robinson, P. Empathizing with robots: Fellow feeling along the anthropomorphic spectrum. In 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops; IEEE, 2009; pp. 43–48.

- Wynne, C.D.L. The Perils of Anthropomorphism. Nature 2004, 428, 606.

- Mori, M.; MacDorman, K.; Kageki, N. The uncanny valley [from the field]. IEEE Robotics & Automation Magazine 2012, 19, 98–100.

- Padilla, N.; Lagercrantz, H. Making of the mind. Acta Paediatrica 2020, 109, 883–892.

- Jennett, B. The vegetative state. Journal of Neurology, Neurosurgery & Psychiatry 2002, 355–357.

- Rees, G.; Kreiman, G.; Koch, C. Neural correlates of consciousness in humans. Nat. Rev. Neurosci. 2002, 3, 261–270.

- Mashour, G.A. Integrating the Science of Consciousness and Anesthesia. Anesth. Analg. 2006, 103, 975–982.

- Millière, R. Looking for the Self: Phenomenology, Neurophysiology and Philosophical Significance of Drug-induced Ego Dissolution. Front. Hum. Neurosci. 2017, 11, 245.

- Flanagan, O.; Polger, T. Zombies and the function of consciousness. Journal of Consciousness Studies 1995, 313–321.

- Turner, G.; Turner, A. Scientific Instruments, 1500-1900: An Introduction. University of California Press, 1998.

- Hertz, H. Electric waves: being researches on the propagation of electric action with finite velocity through space. Macmillan: London, 1893.

- Röntgen, W.C. On a New Kind of Rays. Science 1896, 3, 227–231.

- Zuylen, J. The microscopes of Antoni van Leeuwenhoek. J. Microsc. 1981, 121, 309–328.

- Brown, H.I. Galileo on the Telescope and the Eye. J. Hist. Ideas 1985, 46, 487.

- Cho, A. The Discovery of the Higgs Boson. Science 2012, 338, 1524–1525.

- Barish, B.C.; Weiss, R. LIGO and the Detection of Gravitational Waves. Phys. Today 1999, 52, 44–50.

- Chalmers, D. Facing up to the problem of consciousness. Journal of Consciousness Studies 1995, 2, 200–219.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley: New York, 1991.

- Hintikka, J. On semantic information. In Physics, Logic, and History; Springer: Boston MA, 1970; pp. 147–172.

- Roederer, J. Pragmatic information in biology and physics. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 2016, 374, 20150152.

- Bateson, G. Steps to an Ecology of Mind; Chandler: Toronto, 1972.

- McIntosh, A. Information and entropy – top-down or bottom-up development in living systems? Int. J. Des. Nat. Ecodynamics 2010, 4, 351–385.

- Bedau, M.A. Is Weak Emergence Just in the Mind? Minds Mach. 2008, 18, 443–459.

- Simon, H. The sciences of the artificial; MIT Press: Cambridge MA, 1996.

- Bedau, M. Downward Causation and Autonomy in Weak Emergence. Principia: an international journal of epistemology 2002, 6, 5–50.

- Davies, P.; Brown, J. Superstrings: A theory of everything? Cambridge University Press: Cambridge, 1992.

- Abbott, R. Emergence, entities, entropy, and binding forces. In Conference on: Social Dynamics: Interaction, Reflexivity, and Emergence; University of Chicago Press: Chicago, 2004; pp. 453–468.

- Nation, J., Sr. Insect physiology and biochemistry; CRC Press: Boca Raton, 2015.

- Günther, F.; Folke, C. Characteristics of nested living systems. J. Biol. Syst. 1993, 3, 257–274.

- Morowitz, H. The Emergence of Everything; Oxford University Press: Oxford, 2002.

- Simon, H. The architecture of complexity. Proc. Am. Philos. Soc. 1962, 467–482.

- Campbell, D. ‘Downward causation’ in hierarchically organised biological systems. In Studies in the Philosophy of Biology; Palgrave: London, 1974; pp. 179–186.

- Hinchliffe, J. Cell death in embryogenesis. In Cell death in biology and pathology; Springer: Dordrecht, 1981; pp. 35–78.

- Goldspink, G. Selective gene expression during adaptation of muscle in response to different physiological demands. Comp. Biochem. Physiol. Part B: Biochem. Mol. Biol. 1998, 120, 5–15.

- Schneider, E.; Kay, J. Order from Disorder: The Thermodynamics of Complexity in Biology. In What is life? The next fifty years: Speculations on the future of biology; Cambridge University Press: Cambridge, 1995; pp. 161–172.

- Dorato, M. Mathematical Biology and the Existence of Biological Laws. In Probabilities, Laws, and Structures; Springer: Dordrecht, 2012; pp. 109–121.

- Boyd, D. Design and self-assembly of information systems. Interdiscip. Sci. Rev. 2020, 45, 71–94.

- Naik, U.; Shivalingaiah, D. Digital Library: File Formats, Standards and Protocols. In Proceedings of the Conference on Recent Advances in Information Technology, Kalpakkam, IGCAH, 2005; pp. 94–102.

- Shannon, C. A Mathematical Theory of Communication. Bell System Technical Journal 1948, 27, 379–423.

- Ponlatha, S.; Sabeenian, R. Comparison of video compression standards. International Journal of Computer and Electrical Engineering 2013, 5, 549–554.

- Marzen, S.; DeDeo, S. The evolution of lossy compression. Journal of The Royal Society Interface 2017, 14, 20170166.

- Papadimitriou, C.; Karamanos, K.; Diakonos, F.K.; Constantoudis, V.; Papageorgiou, H. Entropy analysis of natural language written texts. Physica A: Statistical Mechanics and its Applications 2010, 389, 3260–3266.

- Montemurro, M.A.; Zanette, D.H. Keywords and Co-Occurrence Patterns in the Voynich Manuscript: An Information-Theoretic Analysis. PLOS ONE 2013, 8, e66344.

- Turing, A. On computable numbers, with an application to the Entscheidungsproblem. Proceedings of the London Mathematical Society 1937, 2, 230–265.

- Schuurman, D. Step-by-step design and simulation of a simple CPU architecture. In Proceedings of the 44th ACM technical symposium on Computer science education, Denver, SIGCSE, 2013; pp. 335–340.

- Nofre, D.; Priestley, M.; Alberts, G. When Technology Became Language: The Origins of the Linguistic Conception of Computer Programming, 1950–1960. Technol. Cult. 2014, 55, 40–75.

- Black, A. Object-oriented programming: Some history, and challenges for the next fifty years. Information and Computation 2013, 231, 3–20.

- Lehman, M. Programs, life cycles, and laws of software evolution. Proceedings of the IEEE 1980, 68, 1060–1076.

- Harrison, W. An entropy-based measure of software complexity. IEEE Trans. Softw. Eng. 1992, 18, 1025–1029.

- Thórisson, K.R.; Nivel, E.; Sanz, R.; Wang, P. Editorial: Approaches and Assumptions of Self-Programming in Achieving Artificial General Intelligence. J. Artif. Gen. Intell. 2012, 3, 1–10.

- Damasio, H. Human brain anatomy in computerized images; OUP: Oxford, 2005.

- Jones, E. Microcolumns in the cerebral cortex. Proceedings of the National Academy of Sciences 2000, 97, 5019–5021.

- Zheng, P.; Dimitrakakis, C.; Triesch, J. Network Self-Organization Explains the Statistics and Dynamics of Synaptic Connection Strengths in Cortex. PLOS Comput. Biol. 2013, 9, e1002848.

- Seth, A.K. Causal connectivity of evolved neural networks during behavior. Network: Comput. Neural Syst. 2005, 16, 35–54.

- Azulay, A.; Itskovits, E.; Zaslaver, A. The C. elegans connectome consists of homogenous circuits with defined functional roles. PLoS Computational Biology 2016, 12, e1005021.

- Scheffer, L.K.; Xu, C.S.; Januszewski, M.; Lu, Z.; Takemura, S.-Y.; Hayworth, K.J.; Huang, G.B.; Shinomiya, K.; Maitlin-Shepard, J.; Berg, S.; et al. A connectome and analysis of the adult Drosophila central brain. eLife 2020, 9, e57443.

- Storks, L.; Powell, B.; Leal, M. Peeking inside the lizard brain: Neuron numbers in Anolis and its implications for cognitive performance and vertebrate brain evolution. Integrative and Comparative Biology 2020, icaa129.

- Herculano-Houzel, S.; Mota, B.; Lent, R. Cellular scaling rules for rodent brains. Proceedings of the National Academy of Sciences 2006, 103, 12138–12143.

- Herculano-Houzel, S.; Collins, C.; Wong, P.; Kaas, J. Cellular scaling rules for primate brains. Proceedings of the National Academy of Sciences 2007, 104, 3562–3567.

- Herculano-Houzel, S. The human brain in numbers: a linearly scaled-up primate brain. Front. Hum. Neurosci. 2009, 3, 31.

- Ghysen, A. The origin and evolution of the nervous system. International Journal of Developmental Biology 2003, 47, 555–562.

- Beniaguev, D.; Segev, I.; London, M. Single cortical neurons as deep artificial neural networks. Neuron 2021, 109, 2727–2739.

- Wang, Z.; Ying, Z.; Bosy-Westphal, A.; Zhang, J.; Schautz, B.; Later, W.; Heymsfield, S.B.; Müller, M.J. Specific metabolic rates of major organs and tissues across adulthood: evaluation by mechanistic model of resting energy expenditure. Am. J. Clin. Nutr. 2010, 92, 1369–1377.

- Yeh, F.-C.; Panesar, S.; Fernandes, D.; Meola, A.; Yoshino, M.; Fernandez-Miranda, J.C.; Vettel, J.M.; Verstynen, T. Population-averaged atlas of the macroscale human structural connectome and its network topology. NeuroImage 2018, 178, 57–68.

- Sporns, O. Graph theory methods for the analysis of neural connectivity patterns. In Neuroscience databases; Springer: Boston MA, 2003; pp. 171–185.

- Bassett, D.S.; Bullmore, E. Small-World Brain Networks. Neurosci. 2006, 12, 512–523.

- Shin, C.-W.; Kim, S. Self-organized criticality and scale-free properties in emergent functional neural networks. Phys. Rev. E 2006, 74, 045101.

- Yuste, R. From the neuron doctrine to neural networks. Nat. Rev. Neurosci. 2015, 16, 487–497.

- Meunier, D.; Lambiotte, R.; Fornito, A.; Ersche, K.; Bullmore, E.T. Hierarchical modularity in human brain functional networks. Front. Neuroinformatics 2009, 3, 37.

- Bargmann, C.I.; Marder, E. From the connectome to brain function. Nat. Methods 2013, 10, 483–490.

- Song, T.; Zheng, P.; Wong, M.D.; Wang, X. Design of logic gates using spiking neural P systems with homogeneous neurons and astrocytes-like control. Inf. Sci. 2016, 372, 380–391.

- Gerstner, W.; Kistler, W.; Naud, R.; Paninski, L. Neuronal dynamics: From single neurons to networks and models of cognition; Cambridge University Press: Cambridge, 2014.

- Hopfield, J.J.; Tank, D.W. Computing with Neural Circuits: A Model. Science 1986, 233, 625–633.

- Brette, R. Philosophy of the spike: rate-based vs. spike-based theories of the brain. Frontiers in Systems Neuroscience 2015, 9, 151.

- Di Lorenzo, P.M.; Chen, J.-Y.; Victor, J.D. Quality Time: Representation of a Multidimensional Sensory Domain through Temporal Coding. J. Neurosci. 2009, 29, 9227–9238.

- Beniaguev, D.; Segev, I.; London, M. Single cortical neurons as deep artificial neural networks. Neuron 2021, 109, 2727–2739.

- Kim, H.; Hudetz, A.G.; Lee, J.; Mashour, G.A.; Lee, U.; Avidan, M.S.; Bel-Bahar, T.; Blain-Moraes, S.; Golmirzaie, G.; et al.; the ReCCognition Study Group. Estimating the Integrated Information Measure Phi from High-Density Electroencephalography during States of Consciousness in Humans. Front. Hum. Neurosci. 2018, 12, 42.

- Cappuccio, M. Flow, choke, skill: the role of the non-conscious in sport performance. In Before consciousness: in search of the fundamentals of mind; Imprint Academic: Exeter, 2017; pp. 246–283.

- Proudfoot, D. Rethinking Turing’s Test and the Philosophical Implications. Minds Mach. 2020, 30, 487–512.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).