In this study, we aim to predict the risk of course failure for students. To this end, we adopt a quantitative approach that uses machine learning methods rather than relying solely on statistical approaches. To complement the dataset used in [6], we conducted a survey of students pursuing a bachelor’s degree in Systems Engineering at the University of Córdoba, Colombia. The data provided by the students were anonymized to protect their privacy. We consider only their grades and exclude personal information such as identification numbers, names, gender, and economic stratum.

2.1. Research Context and Problem Formalization

In many bachelor’s degree programs, courses are organized and grouped per semester, gradually increasing in complexity. Foundational subjects are typically taught in the first semester, followed by more advanced topics in the subsequent semesters. This organization of courses leads to the concept of prerequisite courses, where the successful completion of certain courses is assumed to be required for advancing to others.

Prerequisite courses play a crucial role in preparing students for more advanced subjects. They lay the groundwork and provide the necessary knowledge and skills for succeeding in later courses. For instance, a student who struggled to pass the Differential Calculus course might face challenges in the Differential Equations course, as the latter builds upon the concepts learned in the former.

The logical progression from prerequisite courses to advanced courses highlights the significance of a strong foundation in earlier subjects. Students who excel in prerequisite courses are more likely to perform well in subsequent, more complex courses, setting them on a path to academic success.

The relationship among courses and the results obtained in [6], where course failure risk was predicted from students’ academic history and admission test outcomes, motivated us to explore the following research question: Can an artificial intelligence system effectively learn patterns in students’ academic history to predict whether a given student is at risk of failing a course, based only on their performance in prerequisite courses?

To answer this question, we extended the research in [6], which predicted course failure risk from students’ academic history and admission test outcomes. Building upon that study, our goal was to investigate whether an artificial intelligence system can effectively predict course failure risk solely from students’ performance in prerequisite courses.

In the same context as [6], we collected additional data from students pursuing a bachelor’s degree in Systems Engineering at the University of Córdoba, a public university in Colombia. The dataset now includes a larger sample of students, providing a more comprehensive representation of students’ academic performance.

We continued our examination of the numerical methods course, a critical subject in the curriculum, whose theoretical and practical topics depend on foundational courses such as calculus, physics, and computer programming. By focusing on this specific course, we aim to uncover the relationships between prerequisite courses and course failure risk, offering valuable insights to support academic interventions and enhance student success.

At Colombian universities, students pursuing any bachelor’s degree are graded on a scale from 0 to 5. In our research context, the University of Córdoba requires students to maintain a global average grade of at least 3.3, as specified in Article 16 of the university’s student code [11]. However, Article 28 of the code outlines additional student retention policies for students whose global average grade falls below this threshold (i.e., 3.3).

Specifically, students with a global average grade between 3.0 and 3.3 are placed on academic probation and must raise it to at least 3.3 in the next semester to maintain their student status. Failure to do so might result in dismissal from the university (cf. Article 16 of the student code [11]). Additionally, if a student’s global average grade falls below 3.0, the student is automatically withdrawn from the university. Losing student status because of course failure is a significant concern and is commonly referred to as student dropout.
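The retention rules above reduce to a threshold rule on the global average grade. The following is a minimal sketch of that rule; the function name `academic_status` and the status labels are hypothetical, and it deliberately ignores the time-based condition (recovering to 3.3 within the next semester) and any other provisions of the student code.

```python
def academic_status(global_average: float) -> str:
    """Map a global average grade (0-5 scale) to a simplified academic status.

    Hypothetical simplification of the rules described in the text:
    >= 3.3 -> good standing, [3.0, 3.3) -> probation, < 3.0 -> withdrawn.
    """
    if not 0.0 <= global_average <= 5.0:
        raise ValueError("grades are on a 0-5 scale")
    if global_average >= 3.3:
        return "good standing"
    if global_average >= 3.0:
        return "probation"
    return "withdrawn"


for avg in (4.1, 3.3, 3.15, 2.8):
    print(avg, academic_status(avg))
```

A grade of exactly 3.0 is treated as probation here, since the text only triggers withdrawal when the average falls below 3.0.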

The performance of students in prerequisite courses is assessed based on their grades. For each prerequisite course, we consider three key input variables for the prediction: (i) the number of semesters in which the student took the course until passing it, (ii) the best grade achieved, and (iii) the worst grade received.
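As an illustration, the three per-course variables can be derived from the chronological list of grades a student obtained in one course. This is a sketch under stated assumptions: the input format is hypothetical, and the passing grade of 3.0 is an assumption not given in the text; attempts after the first passing grade are ignored.

```python
from typing import List, Tuple


def course_features(grades: List[float], passing_grade: float = 3.0) -> Tuple[float, int, float]:
    """Return (best grade, semesters attended until passing, worst grade).

    `grades` holds one final grade per semester in which the course was taken,
    in chronological order. Assumption: the course counts as passed at the
    first grade >= passing_grade; later entries are not considered.
    """
    if not grades:
        raise ValueError("the student must have taken the course at least once")
    attempts = []
    for grade in grades:
        attempts.append(grade)
        if grade >= passing_grade:  # stop counting once the course is passed
            break
    return max(attempts), len(attempts), min(attempts)


print(course_features([2.1, 2.8, 3.6]))  # failed twice, passed on the third try
```

The tuple order (best, semesters, worst) mirrors the order in which the variables are listed for each course in the vector components.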

To represent the input variables corresponding to the $i$th student’s academic record, we use a real-valued $D$-dimensional vector $\mathbf{x}_i = (x_{i,1}, x_{i,2}, \ldots, x_{i,D}) \in \mathbb{R}^D$. Here, $D = 33$, as we have eleven prerequisite courses and three variables are associated with each course. Each component of the vector represents a specific input variable as follows:

$x_{i,1}$ is the best grade the $i$th student achieved in the Calculus I course.

$x_{i,2}$ is the number of semesters the $i$th student attended the Calculus I course.

$x_{i,3}$ is the worst grade the $i$th student received in the Calculus I course.

$x_{i,4}$ is the best grade the $i$th student achieved in the Calculus II course.

$x_{i,5}$ is the number of semesters the $i$th student attended the Calculus II course.

$x_{i,6}$ is the worst grade the $i$th student received in the Calculus II course.

$x_{i,7}$ is the best grade the $i$th student achieved in the Calculus III course.

$x_{i,8}$ is the number of semesters the $i$th student attended the Calculus III course.

$x_{i,9}$ is the worst grade the $i$th student received in the Calculus III course.

$x_{i,10}$ is the best grade the $i$th student achieved in the Linear Algebra course.

$x_{i,11}$ is the number of semesters the $i$th student attended the Linear Algebra course.

$x_{i,12}$ is the worst grade the $i$th student received in the Linear Algebra course.

$x_{i,13}$ is the best grade the $i$th student achieved in the Physics I course.

$x_{i,14}$ is the number of semesters the $i$th student attended the Physics I course.

$x_{i,15}$ is the worst grade the $i$th student received in the Physics I course.

$x_{i,16}$ is the best grade the $i$th student achieved in the Physics II course.

$x_{i,17}$ is the number of semesters the $i$th student attended the Physics II course.

$x_{i,18}$ is the worst grade the $i$th student received in the Physics II course.

$x_{i,19}$ is the best grade the $i$th student achieved in the Physics III course.

$x_{i,20}$ is the number of semesters the $i$th student attended the Physics III course.

$x_{i,21}$ is the worst grade the $i$th student received in the Physics III course.

$x_{i,22}$ is the best grade the $i$th student achieved in the Introduction to Computer Programming course.

$x_{i,23}$ is the number of semesters the $i$th student attended the Introduction to Computer Programming course.

$x_{i,24}$ is the worst grade the $i$th student received in the Introduction to Computer Programming course.

$x_{i,25}$ is the best grade the $i$th student achieved in the Computer Programming I course.

$x_{i,26}$ is the number of semesters the $i$th student attended the Computer Programming I course.

$x_{i,27}$ is the worst grade the $i$th student received in the Computer Programming I course.

$x_{i,28}$ is the best grade the $i$th student achieved in the Computer Programming II course.

$x_{i,29}$ is the number of semesters the $i$th student attended the Computer Programming II course.

$x_{i,30}$ is the worst grade the $i$th student received in the Computer Programming II course.

$x_{i,31}$ is the best grade the $i$th student achieved in the Computer Programming III course.

$x_{i,32}$ is the number of semesters the $i$th student attended the Computer Programming III course.

$x_{i,33}$ is the worst grade the $i$th student received in the Computer Programming III course.
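To make the component ordering concrete, the sketch below flattens a per-course record into the 33-dimensional vector, three entries per course in the order listed above (best grade, semesters attended, worst grade). The dictionary-based record format and the names `PREREQUISITES` and `academic_record_vector` are hypothetical; how the study handled students with a missing course is not stated, so this sketch simply raises `KeyError` in that case.

```python
PREREQUISITES = [
    "Calculus I", "Calculus II", "Calculus III", "Linear Algebra",
    "Physics I", "Physics II", "Physics III",
    "Introduction to Computer Programming", "Computer Programming I",
    "Computer Programming II", "Computer Programming III",
]


def academic_record_vector(record):
    """Flatten {course: (best, semesters, worst)} into a list of 3 * 11 = 33 floats."""
    x = []
    for course in PREREQUISITES:  # fixed order defines the vector components
        best, semesters, worst = record[course]  # KeyError if a course is missing
        x.extend([float(best), float(semesters), float(worst)])
    return x


# Placeholder record (illustrative values, not data from the study).
record = {course: (3.5, 1, 3.5) for course in PREREQUISITES}
record["Calculus II"] = (3.2, 2, 2.4)
x = academic_record_vector(record)
print(len(x), x[3:6])
```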

As part of the data collection process, we also obtained admission test outcomes for each student. However, we did not incorporate them into our analysis, as they fall outside the scope of our research question. Consequently, although the dataset contains a total of 38 independent variables per student, we used only the 33 variables corresponding to performance in prerequisite courses for our predictive models.

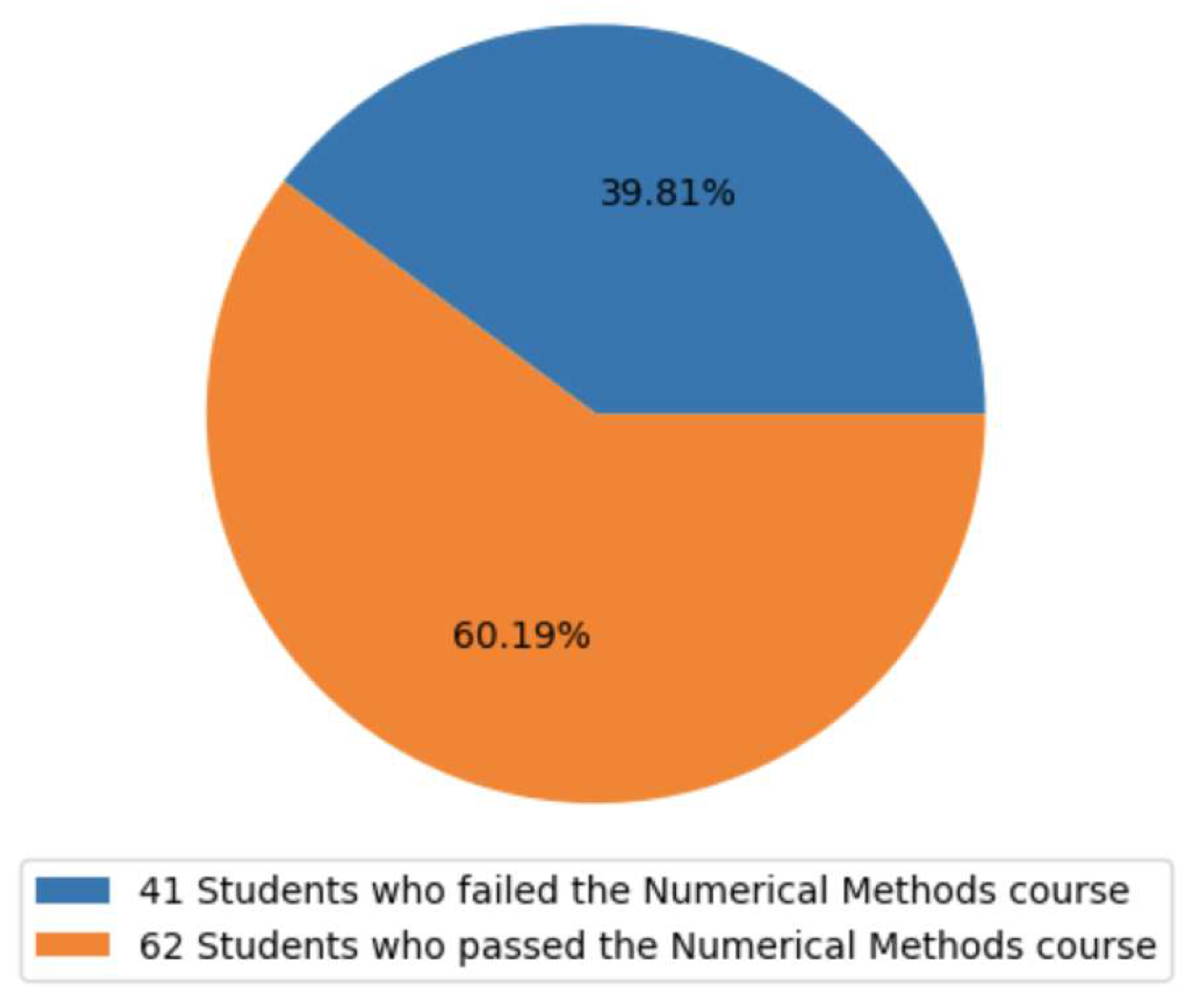

To formalize the problem, let $\mathcal{D} = \{(\mathbf{x}_i, y_i)\}_{i=1}^{n}$ be the training dataset, where $n$ is the number of instances in the dataset (i.e., $n < 103$, because a portion of the data is set aside for evaluation). Each instance in $\mathcal{D}$ consists of a real-valued vector $\mathbf{x}_i \in \mathbb{R}^D$ representing the academic record of the $i$th student, along with the corresponding target variable $y_i \in \{0, 1\}$, which takes the value one if the student either failed or dropped out of the numerical methods course ($y_i = 1$), and zero otherwise ($y_i = 0$).

The problem addressed in our study is to find a function $f: \mathbb{R}^D \to \{0, 1\}$ that maps the input variables in the academic record to the target variable, given the aforementioned training dataset. To tackle this problem, we adopted a supervised learning approach, specifically employing classification methods.
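Setting aside part of the data for evaluation, as described above, can be sketched as a seeded random hold-out split. This is an illustration under assumptions: the 20% evaluation fraction, the seed, the assumed total of 103 instances, and the helper name `hold_out_split` are all hypothetical (it is not scikit-learn's `train_test_split`), and the study may have used a different split or cross-validation scheme.

```python
import random


def hold_out_split(X, y, test_fraction=0.2, seed=0):
    """Randomly set apart a fraction of the (x_i, y_i) pairs for evaluation.

    Hypothetical helper; the split ratio and seed are illustrative.
    Returns (training pairs, evaluation pairs).
    """
    if len(X) != len(y):
        raise ValueError("X and y must have the same number of instances")
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must lie strictly between 0 and 1")
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    n_test = max(1, round(test_fraction * len(X)))
    train = [(X[i], y[i]) for i in indices[n_test:]]
    test = [(X[i], y[i]) for i in indices[:n_test]]
    return train, test


# Dummy placeholder data (NOT the study's data): 103 all-zero records with D = 33.
X = [[0.0] * 33 for _ in range(103)]
y = [i % 2 for i in range(103)]
train, test = hold_out_split(X, y)
print(len(train), len(test))
```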

2.3. Machine Learning Methods

To solve the previously defined problem of predicting the risk of course failure, we adopted classification methods such as logistic regression, which is well suited for binary outcome prediction tasks. Logistic regression applies the logistic function to a linear combination of the input variables and weights, and the classifier is fitted by maximizing the log-likelihood of the binary outcomes in the training data [12]. In our study, we employed the Limited-memory Broyden-Fletcher-Goldfarb-Shanno (L-BFGS) algorithm [13,14] to efficiently fit the logistic regression classifier.
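The objective described above can be written out directly. The sketch below computes the predicted probability, the log-likelihood that fitting maximizes, and its gradient, which are the quantities a gradient-based optimizer such as L-BFGS consumes. It assumes no regularization term (the text does not say whether one was used); note that scikit-learn's `LogisticRegression(solver="lbfgs")`, one off-the-shelf option, adds an L2 penalty by default.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """P(y = 1 | x): logistic function of the linear combination w . x + b."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def log_likelihood(w, b, X, y):
    """Objective maximized during fitting: sum of y log p + (1 - y) log(1 - p)."""
    total = 0.0
    for xi, yi in zip(X, y):
        p = predict_proba(w, b, xi)
        total += yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return total


def log_likelihood_gradient(w, b, X, y):
    """Gradient of the log-likelihood with respect to (w, b)."""
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    for xi, yi in zip(X, y):
        residual = yi - predict_proba(w, b, xi)
        for j, xij in enumerate(xi):
            grad_w[j] += residual * xij
        grad_b += residual
    return grad_w, grad_b
```

The gradient has the familiar form "residual times input", so moving the weights along it increases the likelihood of the observed outcomes.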

With the logistic regression method, it is assumed that a hyperplane exists to separate vectors into two classes within a multidimensional real-valued vector space. While this assumption might be reasonable taking into account the high dimensionality (i.e.,

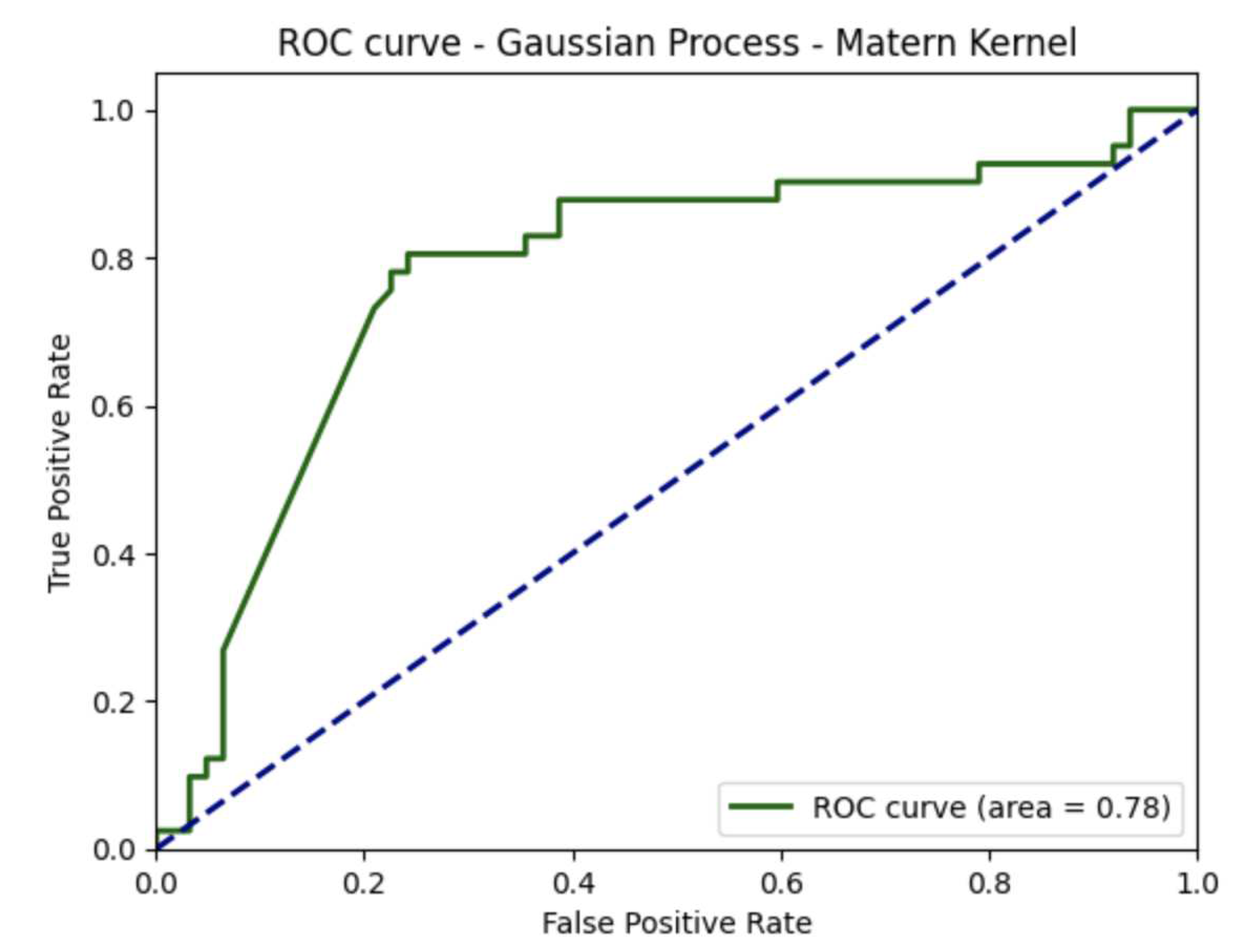

) of the dataset used in this study, we also adopted other classifiers more suited for non-linear classification problems, such as the Gaussian process classifier. The Gaussian process is a probabilistic method based on Bayesian inference, where the probability distribution of the target variable is Gaussian or normal, explaining the name of the method [

15,

16]. One of the main advantages of the Gaussian process classifier is its ability to incorporate prior knowledge about the problem, thereby improving its forecasting even with a small training dataset. Furthermore, in the context of this study, where the dataset is rather small, the Gaussian process classifier is a suitable choice.

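In this study, we used several kernels (a.k.a. covariance functions) with Gaussian processes. One of them is the radial basis function kernel, defined as follows:

$$k(\mathbf{x}_i, \mathbf{x}_j) = \sigma^2 \exp\left(-\frac{\lVert \mathbf{x}_i - \mathbf{x}_j \rVert^2}{2\ell^2}\right), \tag{1}$$

where $\mathbf{x}_i, \mathbf{x}_j \in \mathbb{R}^D$ are two $D$-dimensional vectors in real-valued space, and $\sigma^2$ and $\ell$ are scalars corresponding to the weight and length scale of the kernel, respectively.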
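For illustration, the radial basis function kernel and the covariance (Gram) matrix a Gaussian process builds from it can be sketched as follows. The function names, toy records, and hyperparameter values are illustrative assumptions; in scikit-learn, the same form corresponds to a product `ConstantKernel() * RBF()`.

```python
import math


def rbf_kernel(xi, xj, weight=1.0, length_scale=1.0):
    """Radial basis function kernel: weight * exp(-||xi - xj||^2 / (2 * l^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(xi, xj))
    return weight * math.exp(-sq_dist / (2.0 * length_scale ** 2))


def gram_matrix(X, kernel):
    """Covariance (Gram) matrix K[i][j] = kernel(X[i], X[j])."""
    return [[kernel(a, b) for b in X] for a in X]


# Toy 3-dimensional records (illustrative values, not data from the study).
X = [[3.5, 1.0, 3.5], [2.9, 2.0, 1.8], [4.2, 1.0, 4.2]]
K = gram_matrix(X, lambda a, b: rbf_kernel(a, b, weight=1.0, length_scale=2.0))
```

The diagonal of `K` equals the weight (here 1.0), and entries shrink towards zero as records move apart relative to the length scale.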

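Besides, we used the Matern kernel, which is defined as follows:

$$k(\mathbf{x}_i, \mathbf{x}_j) = \sigma^2 \frac{2^{1-\nu}}{\Gamma(\nu)} \left(\frac{\sqrt{2\nu}\,\lVert \mathbf{x}_i - \mathbf{x}_j \rVert}{\ell}\right)^{\nu} K_{\nu}\left(\frac{\sqrt{2\nu}\,\lVert \mathbf{x}_i - \mathbf{x}_j \rVert}{\ell}\right), \tag{2}$$

where $K_{\nu}(\cdot)$ and $\Gamma(\cdot)$ are the modified Bessel function of the second kind and the gamma function, respectively. The hyperparameter $\nu > 0$ controls the smoothness of the kernel function.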
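For half-integer values of the smoothness parameter, the Bessel-function expression of the Matern kernel reduces to closed forms, which makes a dependency-free sketch possible. The values of $\nu$ covered below (0.5, 1.5, 2.5) are a common choice but an assumption here, since the text does not say which $\nu$ was used; general $\nu$ would need $K_\nu$, e.g. `scipy.special.kv`. scikit-learn's `Matern` kernel, for example, exposes $\nu$ as its `nu` parameter.

```python
import math


def matern_kernel(xi, xj, nu=1.5, weight=1.0, length_scale=1.0):
    """Matern kernel for the half-integer cases nu in {0.5, 1.5, 2.5}.

    For these values the Bessel-function form reduces to closed expressions;
    other values of nu would require the modified Bessel function K_nu.
    """
    r = math.dist(xi, xj) / length_scale
    if nu == 0.5:
        k = math.exp(-r)
    elif nu == 1.5:
        s = math.sqrt(3.0) * r
        k = (1.0 + s) * math.exp(-s)
    elif nu == 2.5:
        s = math.sqrt(5.0) * r
        k = (1.0 + s + s * s / 3.0) * math.exp(-s)
    else:
        raise NotImplementedError("only nu in {0.5, 1.5, 2.5} are sketched here")
    return weight * k


# Larger nu gives a smoother kernel that approaches the RBF kernel.
values = {nu: matern_kernel([0.0], [1.0], nu=nu) for nu in (0.5, 1.5, 2.5)}
```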

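Moreover, we employed the rational quadratic kernel, defined as follows:

$$k(\mathbf{x}_i, \mathbf{x}_j) = \sigma^2 \left(1 + \frac{\lVert \mathbf{x}_i - \mathbf{x}_j \rVert^2}{2\alpha\ell^2}\right)^{-\alpha}, \tag{3}$$

where $\ell$ is used for the same purpose as in Equation (1), while $\alpha$ is the scale mixture parameter, such that $\alpha > 0$.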
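A short sketch of the rational quadratic kernel also shows what the scale mixture parameter does: as $\alpha$ grows, the kernel converges to the radial basis function kernel with the same length scale. Function name and parameter values are illustrative.

```python
import math


def rational_quadratic_kernel(xi, xj, alpha=1.0, weight=1.0, length_scale=1.0):
    """weight * (1 + ||xi - xj||^2 / (2 * alpha * l^2)) ** (-alpha), with alpha > 0."""
    if alpha <= 0:
        raise ValueError("the scale mixture parameter alpha must be positive")
    sq_dist = sum((a - b) ** 2 for a, b in zip(xi, xj))
    return weight * (1.0 + sq_dist / (2.0 * alpha * length_scale ** 2)) ** (-alpha)


# As alpha grows, the value approaches the RBF kernel exp(-||xi - xj||^2 / (2 l^2)).
for alpha in (0.5, 1.0, 10.0, 1e6):
    print(alpha, rational_quadratic_kernel([0.0], [1.0], alpha=alpha))
```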

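Furthermore, we combined the Matern and radial basis function kernels by summing them as follows:

$$k(\mathbf{x}_i, \mathbf{x}_j) = w_1\, k_{\text{Matern}}(\mathbf{x}_i, \mathbf{x}_j) + w_2\, k_{\text{RBF}}(\mathbf{x}_i, \mathbf{x}_j), \tag{4}$$

where $w_1$ and $w_2$ are the weights assigned to the two kernels.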
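Summing kernels with non-negative weights yields another valid (positive semi-definite) kernel, so the combination can be built as a higher-order function over two existing kernels. In the minimal sketch below, the Matern smoothness $\nu = 1.5$ and the weights 0.7 and 0.3 are illustrative assumptions; in scikit-learn, a comparable expression would be `ConstantKernel() * Matern() + ConstantKernel() * RBF()`.

```python
import math


def rbf(xi, xj, length_scale=1.0):
    """Unit-weight RBF kernel."""
    return math.exp(-math.dist(xi, xj) ** 2 / (2.0 * length_scale ** 2))


def matern15(xi, xj, length_scale=1.0):
    """Unit-weight Matern kernel with nu = 1.5 (assumed smoothness)."""
    s = math.sqrt(3.0) * math.dist(xi, xj) / length_scale
    return (1.0 + s) * math.exp(-s)


def sum_kernel(k1, k2, w1, w2):
    """Return k(x, x') = w1 * k1(x, x') + w2 * k2(x, x'), with w1, w2 >= 0."""
    if w1 < 0 or w2 < 0:
        raise ValueError("negative weights can break positive semi-definiteness")

    def k(xi, xj):
        return w1 * k1(xi, xj) + w2 * k2(xi, xj)

    return k


k = sum_kernel(matern15, rbf, w1=0.7, w2=0.3)
print(k([0.0, 0.0], [1.0, 1.0]))
```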

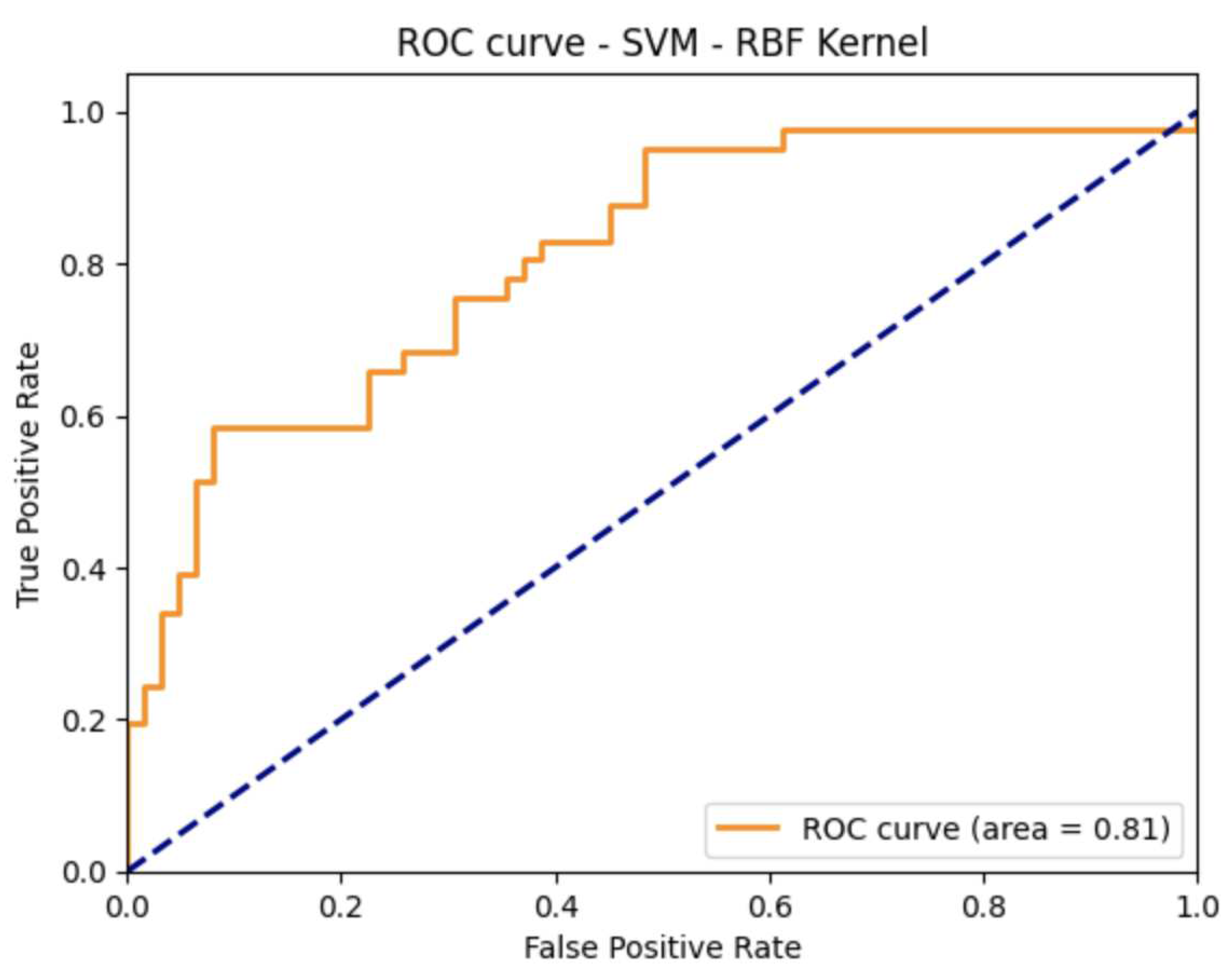

On the other hand, the support vector machine (SVM) method is, so far, the best theoretically motivated method and one of the most successful methods in the practice of modern machine learning [17]. It is based on convex optimization, which guarantees that a globally optimal solution can be found; this is its main advantage. However, the SVM method does not lend itself well to interpretation in data mining and is better suited for training accurate machine learning-based systems. A detailed description of this method is given in [18].

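Both SVM and logistic regression are linear classification methods that assume the input vector space can be separated by a linear decision boundary (i.e., a hyperplane in a multidimensional real-valued space). However, when this assumption is not satisfied, SVM can be combined with kernel methods to handle non-linear decision boundaries (see [18] for further details). In this study, we used the radial basis function kernel, which is similar to the one presented in Equation (1) and is defined as follows:

$$k(\mathbf{x}_i, \mathbf{x}_j) = \exp\left(-\gamma \lVert \mathbf{x}_i - \mathbf{x}_j \rVert^2\right), \tag{5}$$

where $\gamma > 0$ controls the radius of this spherical kernel, whose center is $\mathbf{x}_j$. Additionally, we used the polynomial and sigmoid kernels defined in Equations (6) and (7), respectively:

$$k(\mathbf{x}_i, \mathbf{x}_j) = \left(\gamma\, \mathbf{x}_i^{\top} \mathbf{x}_j + r\right)^{d}, \tag{6}$$

$$k(\mathbf{x}_i, \mathbf{x}_j) = \tanh\left(\gamma\, \mathbf{x}_i^{\top} \mathbf{x}_j + r\right). \tag{7}$$

In Equation (6), $d$ is the degree of the kernel, and $r$ is the coefficient in Equations (6) and (7).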
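The three SVM kernels can be sketched directly. The parameter names `gamma`, `coef0`, and `degree` follow the conventions of scikit-learn's `SVC`, which is an assumption about tooling rather than something the text states; the sigmoid kernel is not positive semi-definite for all parameter choices, which is worth keeping in mind when tuning it.

```python
import math


def dot(a, b):
    """Inner product x_i^T x_j."""
    return sum(p * q for p, q in zip(a, b))


def svm_rbf_kernel(xi, xj, gamma=1.0):
    """exp(-gamma * ||xi - xj||^2); larger gamma gives a narrower kernel."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(xi, xj)))


def polynomial_kernel(xi, xj, gamma=1.0, coef0=0.0, degree=3):
    """(gamma * xi^T xj + coef0) ** degree."""
    return (gamma * dot(xi, xj) + coef0) ** degree


def sigmoid_kernel(xi, xj, gamma=1.0, coef0=0.0):
    """tanh(gamma * xi^T xj + coef0)."""
    return math.tanh(gamma * dot(xi, xj) + coef0)


print(polynomial_kernel([1.0, 2.0], [3.0, 4.0], gamma=1.0, coef0=1.0, degree=2))
```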

Although the SVM method is considered one of the most successful methods in the practice of modern machine learning, multilayer perceptrons and their variants (i.e., artificial neural networks) are the most successful methods in deep learning and big data, particularly in tasks such as speech recognition, computer vision, and natural language processing [19]. In this research, we adopted multilayer perceptrons fitted by back-propagating the cross-entropy error [20] with the optimization algorithm known as Adam [21]. We used multilayer perceptrons with one and five hidden layers.
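The Adam update rule itself is compact: it keeps exponential moving averages of the gradient and of its square, corrects their initialization bias, and scales each step accordingly. The sketch below applies it to a scalar quadratic rather than to a multilayer perceptron, purely to show the update; the learning rate of 0.1 is chosen for this toy problem (the commonly cited default is 0.001), and `adam_minimize` is a hypothetical name.

```python
import math


def adam_minimize(grad, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Minimize a scalar function given its gradient, using the Adam update rule."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1.0 - beta1) * g       # first-moment (mean) estimate
        v = beta2 * v + (1.0 - beta2) * g * g   # second-moment estimate
        m_hat = m / (1.0 - beta1 ** t)          # bias corrections
        v_hat = v / (1.0 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Toy objective f(w) = (w - 3)^2 with gradient 2 (w - 3); its minimum is at w = 3.
w = adam_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w)
```

In a multilayer perceptron, the same per-parameter update is applied to every synaptic weight, with gradients of the cross-entropy error obtained by back-propagation.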

The main advantage of the multilayer perceptron method is that it is a universal approximator (i.e., given enough hidden units, it can approximate any continuous function to arbitrary precision, for either classification or regression). However, its main disadvantage is that the objective function (a.k.a. loss function) based on the cross-entropy error is not convex. Because this objective function may have several local minima, the synaptic weights obtained through the fitting process might converge to a local rather than the global minimum, and the solution found depends on the random initialization of the synaptic weights. Furthermore, multilayer perceptrons have more hyperparameters to tune than other learning algorithms (e.g., support vector machines or naive Bayes), which is an additional shortcoming.

Except for logistic regression, none of the above-mentioned methods is easily interpretable. Therefore, we adopted decision trees, which are classification algorithms commonly used in data mining and knowledge discovery. In decision tree training, a tree is created from the dataset, where each internal node represents a test on an independent variable, each branch represents an outcome of the test, and each leaf represents a forecasted class. The tree is constructed recursively, beginning with the whole dataset at the root node; at each iteration, the fitting algorithm selects the attribute that best separates the data into different classes. The fitting algorithm can be stopped based on several criteria, such as when all the training instances are correctly classified or when the accuracy or performance of the classifier cannot be further improved.
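The "attribute that best separates the data" is usually chosen by minimizing an impurity measure over candidate thresholds. The sketch below uses the Gini impurity, the criterion of CART-style trees and the default of scikit-learn's `DecisionTreeClassifier`; which criterion the study used is not stated, so this is an assumption. It searches one numeric variable, with labels coded as 1 (at risk) and 0 (not at risk); a full tree would repeat this over all 33 variables at every node.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    p1 = sum(labels) / n
    return 1.0 - p1 ** 2 - (1.0 - p1) ** 2


def best_threshold(values, labels):
    """Greedy search for the split value <= t minimizing weighted Gini impurity.

    Returns (threshold, weighted impurity); candidate thresholds are midpoints
    between consecutive distinct sorted values.
    """
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best_score, best_t = float("inf"), None
    for k in range(1, n):
        lo, hi = pairs[k - 1][0], pairs[k][0]
        if lo == hi:
            continue
        t = (lo + hi) / 2.0
        left = [y for x, y in pairs if x <= t]
        right = [y for x, y in pairs if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_score, best_t = score, t
    return best_t, best_score


# Toy example: best grades in one prerequisite vs. a hypothetical risk label.
print(best_threshold([2.0, 2.5, 3.5, 4.0], [1, 1, 0, 0]))
```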

Decision trees are fitted through heuristic algorithms, such as greedy algorithms, which make only locally optimal choices at each node. This is one of the reasons why there is no guarantee that the learning algorithm will converge to the globally optimal tree, as is also the case with multilayer perceptrons. This is the main drawback of decision trees, and it can also lead to completely different tree structures from small variations in the training dataset. Breiman et al. presented the decision tree method in detail in 1984 [22]. We also adopted ensemble methods based on multiple decision trees, such as AdaBoost (adaptive boosting) [23], random forest [24], and extreme gradient boosting, a.k.a. XGBoost [25].
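To illustrate how boosting ensembles differ from a single tree, the sketch below performs one reweighting round of the classic discrete AdaBoost algorithm with labels in $\{-1, +1\}$. It is not necessarily the exact variant used in the study, and the function name is hypothetical. Misclassified instances gain weight so that the next weak learner (e.g., a shallow tree) focuses on them.

```python
import math


def adaboost_reweight(weights, y_true, y_pred):
    """One round of discrete AdaBoost. Returns (learner weight alpha, new weights)."""
    total = sum(weights)
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / total
    if not 0.0 < err < 0.5:
        raise ValueError("weak learner error must lie strictly between 0 and 0.5")
    alpha = 0.5 * math.log((1.0 - err) / err)
    # t * p = +1 for a correct prediction, -1 for a mistake.
    new = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    norm = sum(new)
    return alpha, [w / norm for w in new]


alpha, w = adaboost_reweight([0.25] * 4, [1, 1, -1, -1], [1, 1, -1, 1])
print(alpha, w)
```

After the update, the misclassified instances hold exactly half of the total weight, so the previous weak learner performs no better than chance on the reweighted data.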