Submitted: 03 August 2023

Posted: 03 August 2023


Abstract

Keywords:

1. Introduction

2. Background and Related Work

3. Methods and Data Analysis

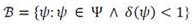

3.1. Development and Deployment of Chatbot

3.2. Sentiment Analysis

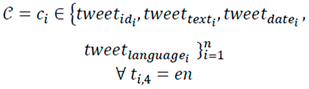

3.1.1. Data Collection

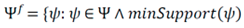

3.1.2. Data Pre-Processing

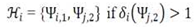

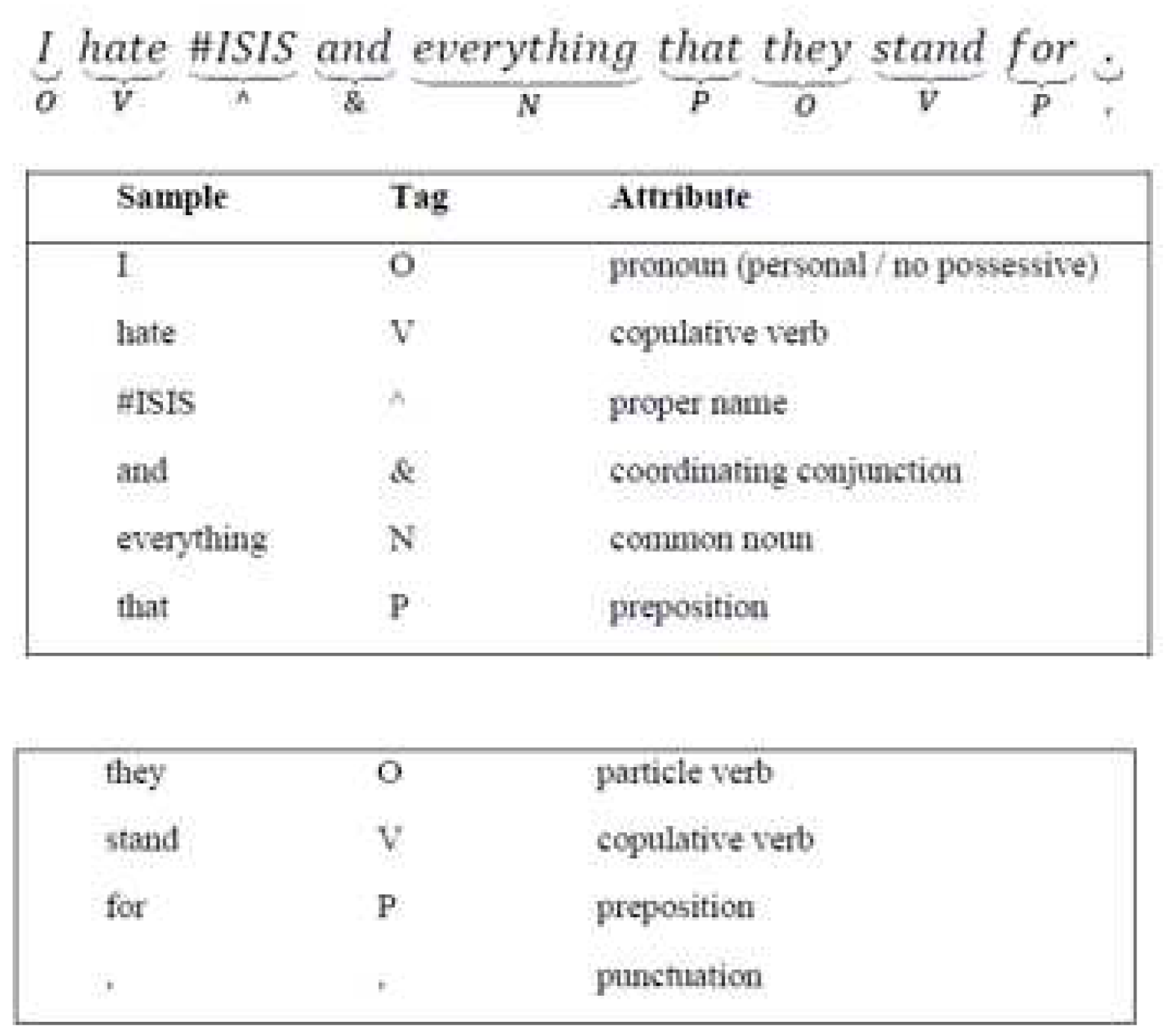

3.1.3. Sentiment Extraction

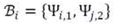

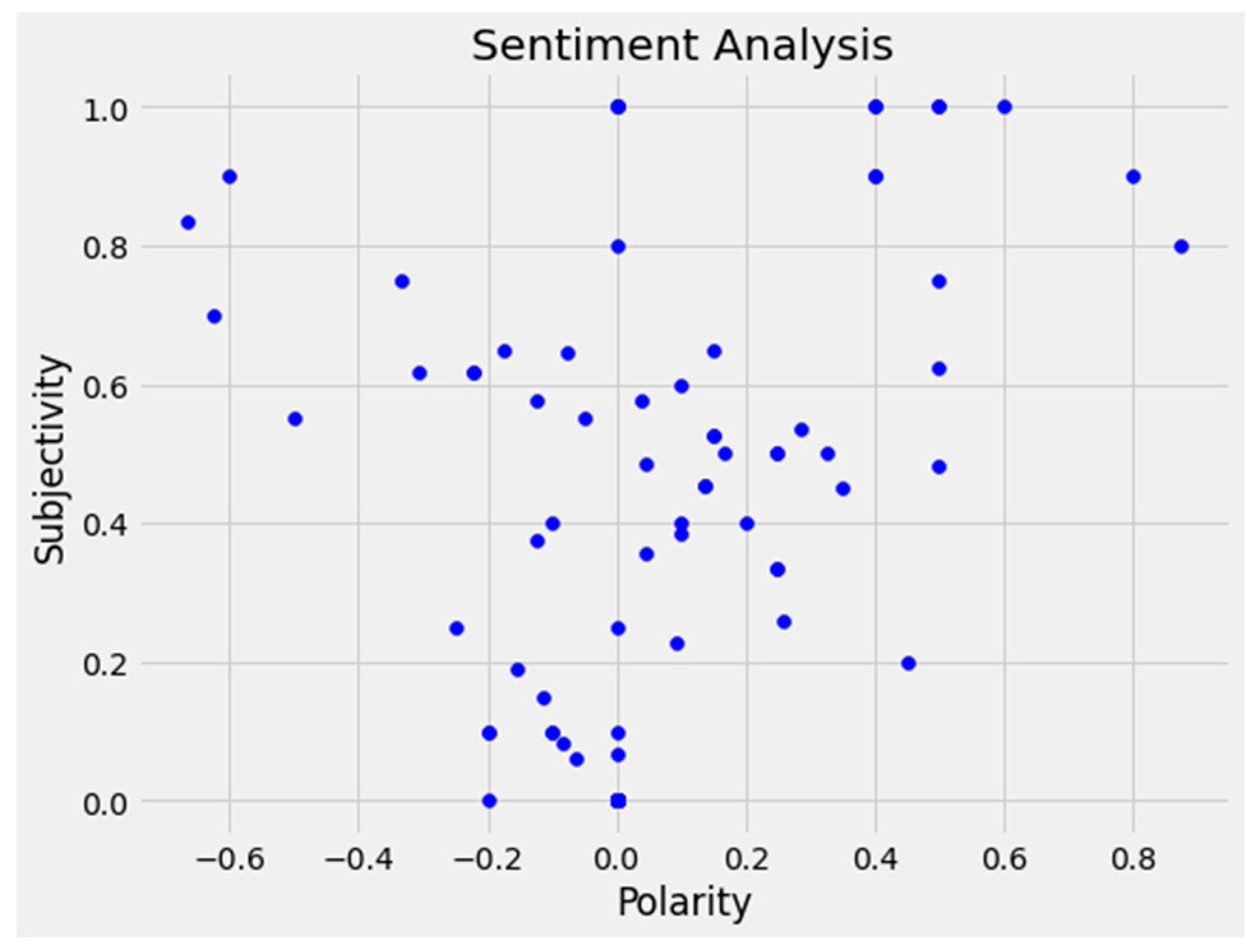

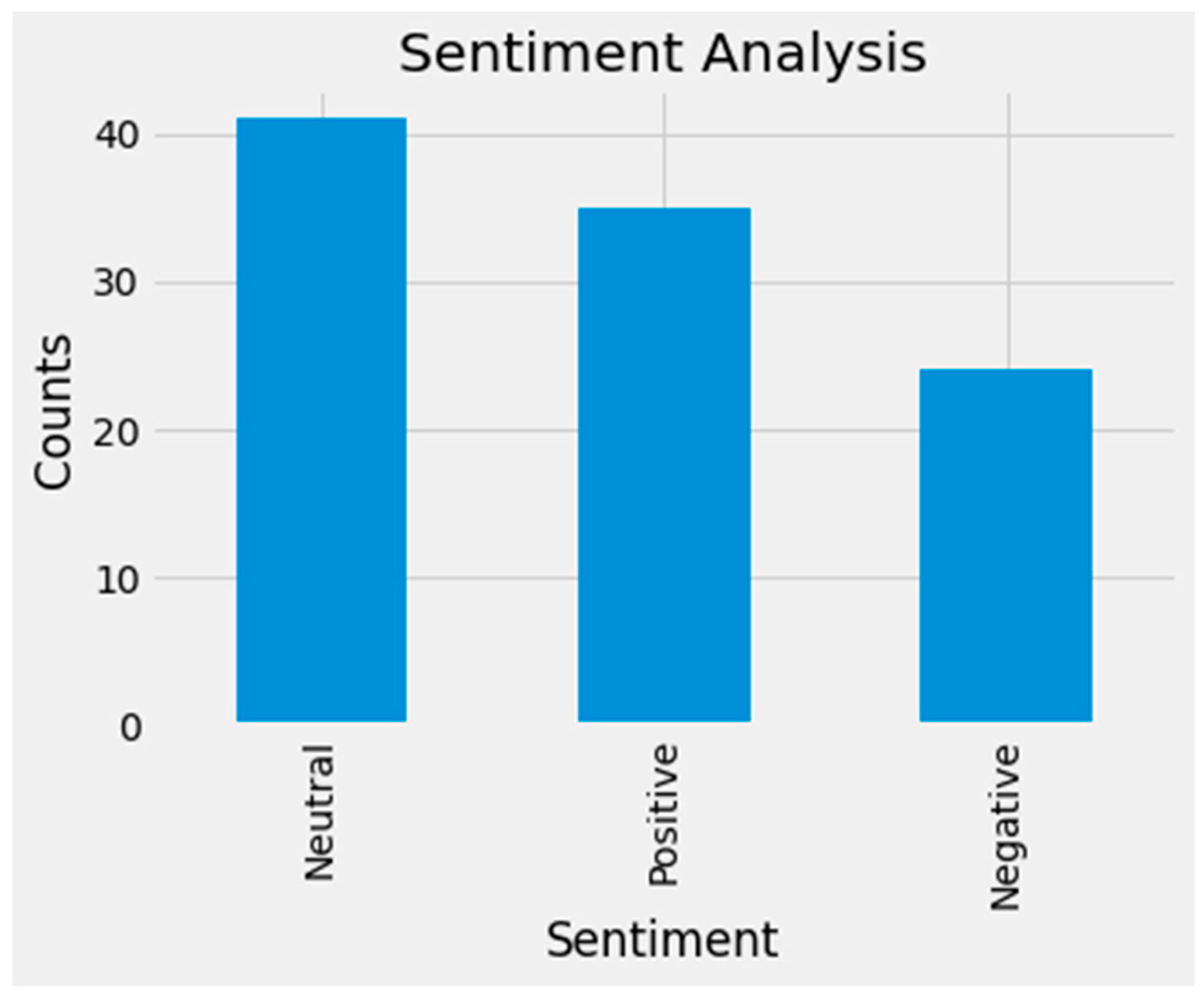

3.1.4. Sentiment Orientation and Analysis

4. General Discussion

- Which AI-related technological advancements are best suited to promote environmental, economic, and social sustainability for global organizations?

- To what extent do privacy and security risks affect sustainability? How can social media chatbots prevent these risks and challenges?

- To what extent do technical factors (e.g., infrastructure, design) affect environmental, economic, and social sustainability for global organizations?

- Which sustainable business models can be developed and evaluated for AI and AI-related technology adoption, including circular and sharing economy models?

- What are the drivers of and barriers to promoting sustainable AI-based systems, and how can AI technology adoption support sustainable business practices globally?

5. Conclusion and Future Directions

Funding

Appendix A

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).