Submitted: 03 August 2023

Posted: 04 August 2023

Abstract

Keywords:

1. Introduction

2. Materials and Methods

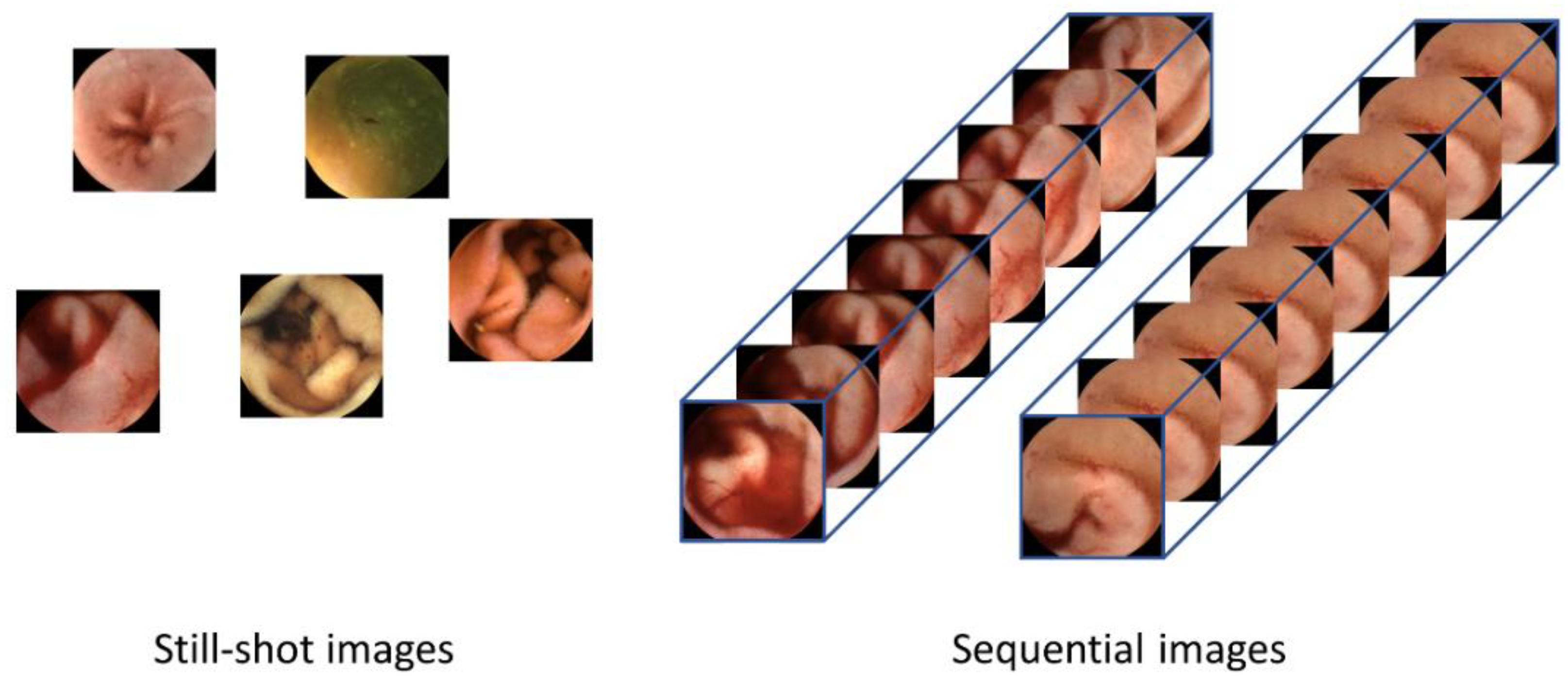

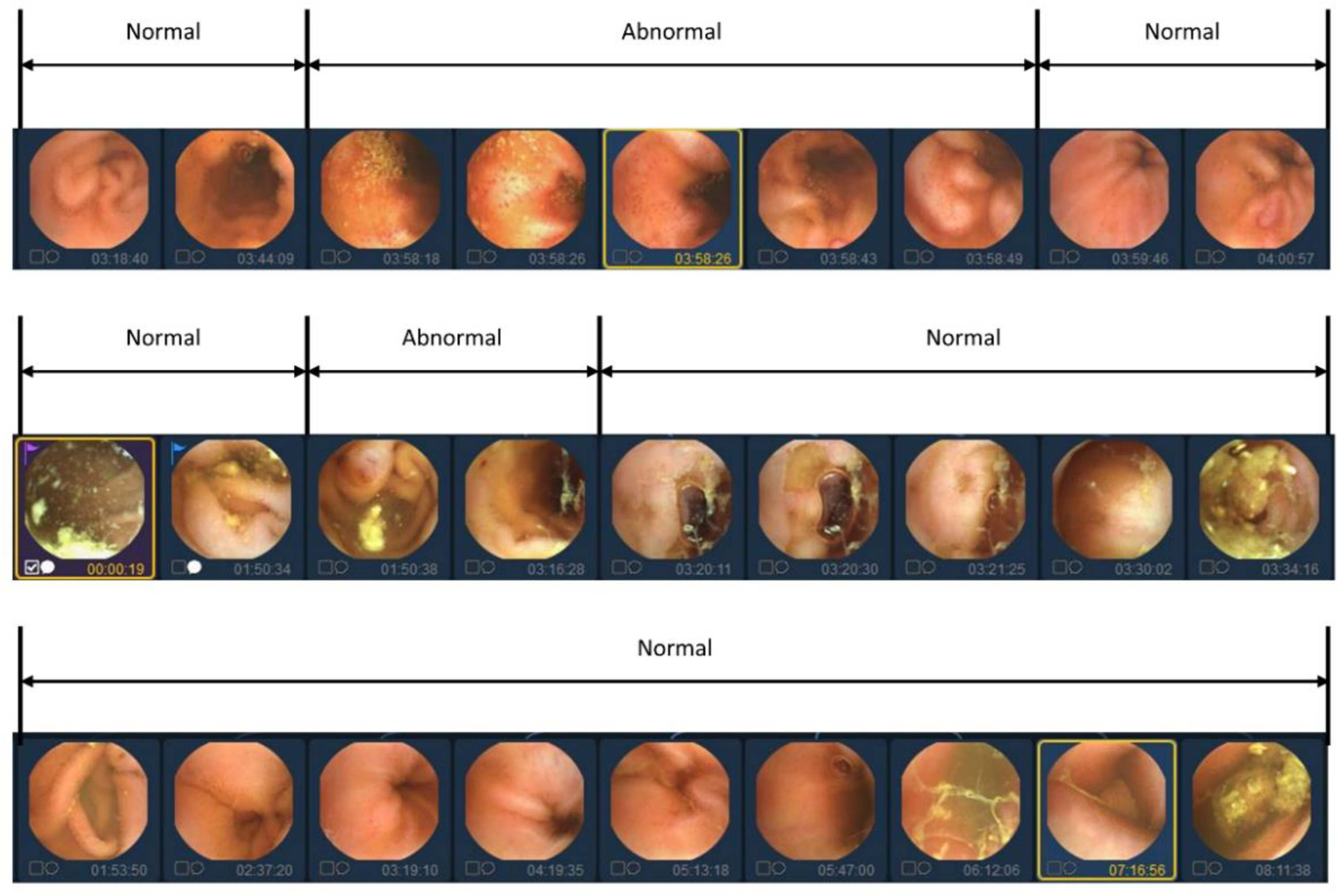

2.1. Data Acquisition (Data Characteristics)

2.2. Data Preparation

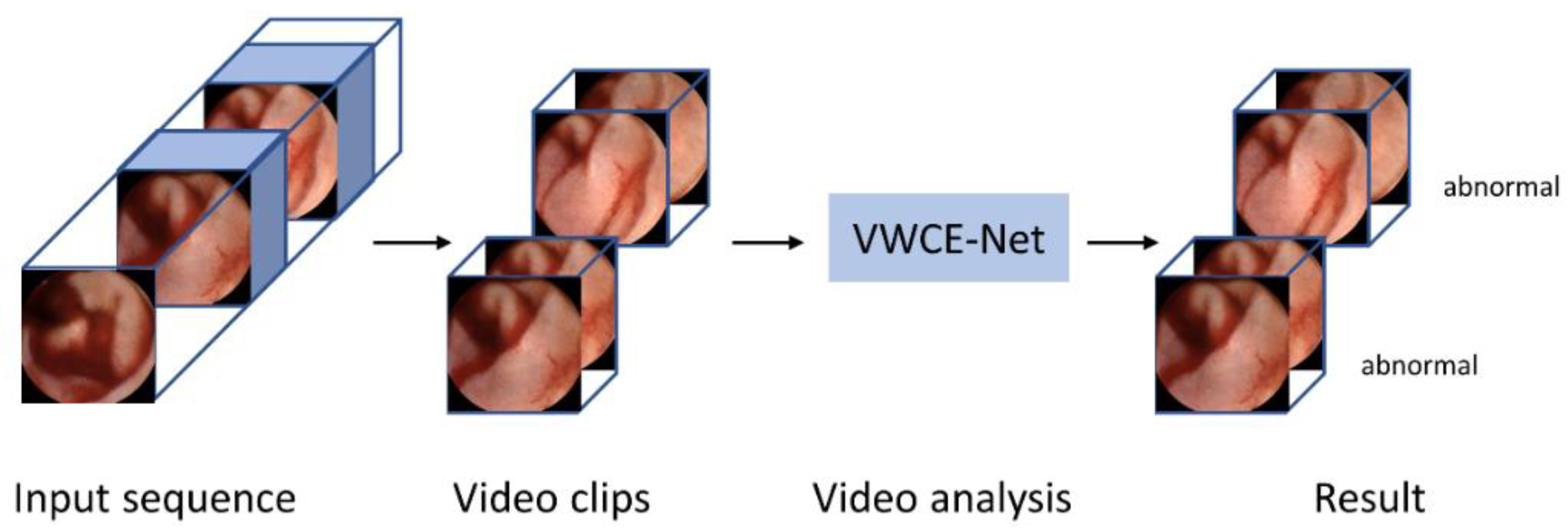

2.3. Study Design

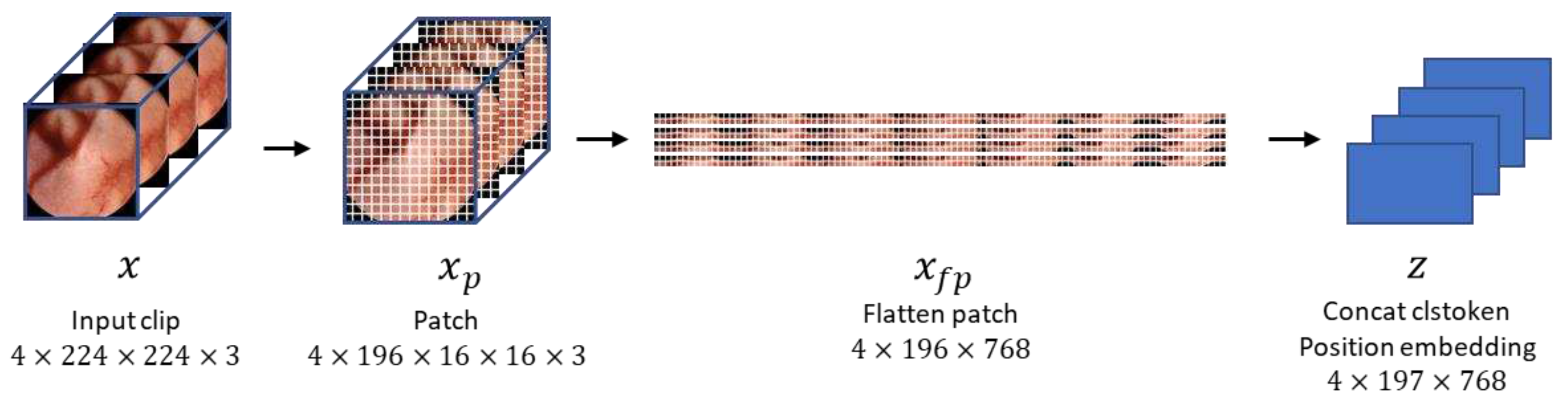

2.4. Implementation Detail

3. Results

3.1. Comparison of Our Video-Level Analysis with Existing Still-Shot Image Analysis Methods

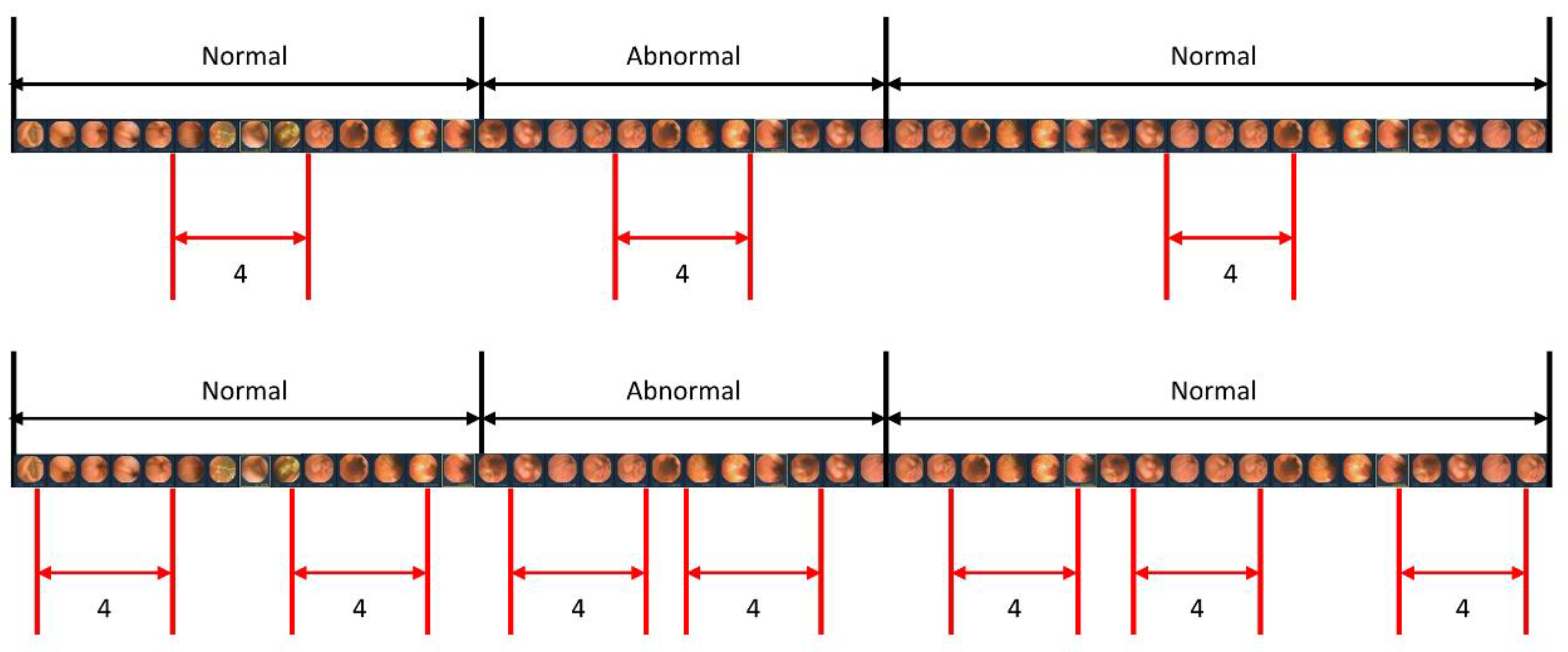

3.2. Determining the Clip Length for the Video-Level Analyses

3.3. Sampling Strategy

4. Discussion

4.1. The Necessity of Utilizing Transformer Networks and Video Input

4.2. Model Setup Analysis

5. Conclusions

Funding

| Classes | Whole Dataset (Clips) | Whole Dataset (Images) | Training Dataset (Clips) | Training Dataset (Images) | Test Dataset (Clips) | Test Dataset (Images) |
|---|---|---|---|---|---|---|
| Normal | 365,956 | 1,463,824 | 322,751 | 1,291,004 | 43,205 | 172,820 |
| Bleeding | 36,247 | 144,988 | 35,197 | 140,788 | 1,050 | 4,200 |
| Inflammation | 2,804 | 11,216 | 2,728 | 10,912 | 76 | 304 |
| Vascular | 805 | 3,220 | 582 | 2,328 | 223 | 892 |
| Polyp | 3,714 | 14,856 | 3,708 | 14,832 | 6 | 24 |
| Cases | 40 | | 36 | | 4 | |
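The dataset counts can be cross-checked with a few lines of arithmetic. The sketch below copies the clip and image counts from the table and tests two relationships: that each split's image count equals its clip count times a fixed number of frames, and that the training and test clips add up to the whole dataset. The value of 4 frames per clip is inferred from the table's numbers rather than stated in this section, and the names `DATASET`, `FRAMES_PER_CLIP`, and `check_table` are illustrative only.

```python
# Clip and image counts copied from the dataset table above.
# Each entry is (clips, images) for the whole, training, and test splits.
DATASET = {
    "Normal":       {"whole": (365_956, 1_463_824), "train": (322_751, 1_291_004), "test": (43_205, 172_820)},
    "Bleeding":     {"whole": (36_247, 144_988),    "train": (35_197, 140_788),    "test": (1_050, 4_200)},
    "Inflammation": {"whole": (2_804, 11_216),      "train": (2_728, 10_912),      "test": (76, 304)},
    "Vascular":     {"whole": (805, 3_220),         "train": (582, 2_328),         "test": (223, 892)},
    "Polyp":        {"whole": (3_714, 14_856),      "train": (3_708, 14_832),      "test": (6, 24)},
}

# Assumption: inferred from the images/clips ratio in the table.
FRAMES_PER_CLIP = 4


def check_table(dataset, frames_per_clip):
    """Return a list of inconsistencies found in the table (empty if none)."""
    problems = []
    for name, splits in dataset.items():
        # Every split should satisfy images == clips * frames_per_clip.
        for split, (clips, images) in splits.items():
            if clips * frames_per_clip != images:
                problems.append(f"{name}/{split}: {clips} clips vs {images} images")
        # Training and test clips should sum to the whole-dataset clips.
        if splits["train"][0] + splits["test"][0] != splits["whole"][0]:
            problems.append(f"{name}: train + test clips != whole")
    return problems


problems = check_table(DATASET, FRAMES_PER_CLIP)
print(problems if problems else "table is internally consistent")
```

Running the check on the copied numbers reports no inconsistencies, which supports reading each clip as four images.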

| Model | Sensitivity (%) | Specificity (%) |
|---|---|---|
| VWCE-Net | 95.1 | 83.4 |
| XceptionNet | 43.2 | 90.4 |
| YOLOv4 | 88.6 | 51.5 |
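For readers comparing the two columns, the standard definitions of sensitivity and specificity can be written out as below. This is a generic sketch, not the authors' evaluation code: the confusion-matrix counts are hypothetical and chosen only to make the arithmetic easy to follow, and treating lesion clips as the positive class is an assumption, since this section does not say which class is positive.

```python
def sensitivity(tp: int, fn: int) -> float:
    """True-positive rate: share of actual positives that were detected."""
    if tp + fn == 0:
        raise ValueError("sensitivity is undefined without positive samples")
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """True-negative rate: share of actual negatives correctly left unflagged."""
    if tn + fp == 0:
        raise ValueError("specificity is undefined without negative samples")
    return tn / (tn + fp)


# Hypothetical counts (NOT taken from the paper), with lesion clips assumed
# to be the positive class and normal clips the negative class.
tp, fn = 190, 10    # lesion clips detected / missed
tn, fp = 830, 170   # normal clips passed / wrongly flagged

print(f"sensitivity: {100 * sensitivity(tp, fn):.1f}%")
print(f"specificity: {100 * specificity(tn, fp):.1f}%")
```

The two metrics are computed on disjoint subsets of the data, which is why a model can score high on one and low on the other, as the table shows.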

| Clip length | Sensitivity (%) | Specificity (%) |
|---|---|---|
| 2 | 98.51 | 72.81 |
| 4 | 95.13 | 83.43 |
| 6 | 93.93 | 83.25 |
| 8 | 97.89 | 80.76 |
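To make the clip-length parameter concrete, the sketch below splits an ordered frame sequence into fixed-length clips. It assumes consecutive, non-overlapping clips and drops any incomplete trailing clip; this section does not describe how the authors actually formed clips, so the function `split_into_clips` and its behavior are illustrative assumptions only.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_into_clips(frames: Sequence[T], clip_length: int) -> List[List[T]]:
    """Group frames into consecutive, non-overlapping clips of clip_length.

    Assumption: frames left over at the end that cannot fill a whole clip
    are discarded.
    """
    if clip_length <= 0:
        raise ValueError("clip_length must be a positive integer")
    n_full = len(frames) // clip_length
    return [
        list(frames[i * clip_length:(i + 1) * clip_length])
        for i in range(n_full)
    ]


# Ten hypothetical frame indices split with each clip length from the table.
frames = list(range(10))
for clip_length in (2, 4, 6, 8):
    clips = split_into_clips(frames, clip_length)
    print(clip_length, len(clips), clips)
```

Under this scheme a longer clip gives the model more temporal context per sample but yields fewer clips from the same video.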

| Sampling Strategy | Sensitivity (%) | Specificity (%) |
|---|---|---|
| center | 95.13 | 83.43 |
| random | 72.84 | 89.62 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).