1. Introduction

Synthetic aperture radar (SAR) deception jamming is effective in concealing important military facilities and operational equipment [1,2], enabling covert military operations [3,4]. Because of its low power requirement, it has become a popular research topic in SAR jamming technology [5,6,7,8]. At present, software-level methods for SAR deception jamming fall into two groups: those that use a SAR deception jamming template library and those that use electromagnetic scattering models. Of the two, using a template library costs less time and manpower than using electromagnetic scattering models, which facilitates the rapid implementation of deception jamming in practical scenarios. The effect of template-based deception jamming depends on the refinement level of the deception jamming templates [9,10,11]. Templates with low authenticity can be easily detected by an enemy, which reduces the effectiveness of the jamming. In side-looking SAR imaging, real targets exhibit shadow features. Therefore, SAR deception jamming templates with shadows are more deceptive than templates without shadows. By augmenting the existing templates, a library of SAR deception jamming templates with shadows can be established efficiently.

Currently, there are two types of sample augmentation schemes for SAR deception jamming templates with shadows: traditional schemes and deep learning-based schemes. Traditional schemes apply geometric operations, such as translation, rotation, and scaling, to obtain augmented SAR deception jamming template libraries with shadows [12]. However, these schemes do not alter the internal information of the images; they only transform the image targets geometrically. The processed shadows often lose their authentic correspondence with the targets, which limits the utility of the resulting templates for deception jamming. Deep learning-based schemes capture the complex data distribution and features of SAR deception jamming templates with shadows, thus enabling the generation of more realistic and diverse templates [13]. Deep learning schemes can in turn be divided into those that generate from a single template and those that generate from a dataset. Because individual templates are easier to obtain, single-template schemes are more usable in practice [14,15].

However, neither type of scheme considers SAR’s inherent speckle noise, which lowers the similarity to the input template and significantly reduces the authenticity of the deception jamming templates. Therefore, it is necessary to consider the characteristics of SAR speckle noise and design a fast and accurate sample augmentation network specifically for SAR deception jamming templates with shadows. Such a network makes it possible to build, within a short time, a library of shadowed SAR deception jamming templates that are adaptable, diverse, and authentic.

The remainder of this paper is organized as follows. Section 2 describes the features of the input SAR deception jamming template with shadows, as well as the structure and training process of the proposed generative adversarial network (GAN) model. Section 3 evaluates the generated images and compares the proposed method with the SinGAN method. Finally, Section 4 concludes this paper.

2. Materials and Methods

2.1. Characteristics of the input template

Training the proposed network requires a single SAR target deception jamming template with shadows as input. The network performs sample augmentation on this template based on its speckle noise characteristics and shadow features.

Speckle noise refers to the granular patterns that appear in SAR images due to the interference between echoes with different phases during the SAR imaging process [16,17,18]. This noise is an inherent characteristic of SAR images. Coherent speckle is a multiplicative noise, and a SAR image can be mathematically modeled as follows [19]:

$$I(x, y) = R(x, y) \cdot N(x, y)$$

where $I(x, y)$ represents the observed SAR image, $R(x, y)$ is the ideal image without speckle noise, and $N(x, y)$ denotes the speckle noise generated during the SAR system imaging. The amplitude of speckle noise in a SAR image follows a Rayleigh distribution, which is expressed by [20]:

$$p(A) = \frac{A}{\sigma^2} \exp\left(-\frac{A^2}{2\sigma^2}\right), \quad A \geq 0$$

where $A$ is the speckle amplitude and $\sigma^2$ represents the variance of the underlying Gaussian in-phase and quadrature components.
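As a minimal sketch of the multiplicative model and the Rayleigh amplitude statistics described above, the following Python snippet applies simulated speckle to a clean image. The function name `add_rayleigh_speckle`, the unit-mean normalization, and the constant test image are illustrative assumptions and are not taken from the proposed scheme.

```python
import numpy as np

def add_rayleigh_speckle(clean, sigma=1.0, seed=0):
    """Multiply a clean image by Rayleigh-distributed amplitude speckle (assumed helper)."""
    rng = np.random.default_rng(seed)
    # Rayleigh amplitude noise with scale parameter sigma.
    noise = rng.rayleigh(scale=sigma, size=clean.shape)
    # A Rayleigh variable has mean sigma * sqrt(pi / 2); dividing by it gives
    # unit-mean noise so the average brightness of the image is preserved.
    noise = noise / (sigma * np.sqrt(np.pi / 2.0))
    # Multiplicative model: observed = ideal * speckle.
    return clean * noise, noise

clean = np.full((64, 64), 0.5)            # hypothetical speckle-free image
observed, noise = add_rayleigh_speckle(clean)
```

Because the noise is multiplicative, dark regions such as shadows stay dark after the noise is applied, which is consistent with the shadow discussion that follows.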

Because SAR is side-looking, certain areas behind a target may be occluded and not illuminated by the radar, so no echo is generated from them. As a result, in the image domain, unilluminated areas appear as dark regions, known as shadows [21,22,23]. Since the receiver does not receive any echo signals from the shadow regions, there is no interference between echoes with different phases, and speckle noise is absent in the shadow regions of a SAR deception jamming template. Because the pixel intensity values of shadow regions should be the lowest in the entire image, shadow points can be identified by searching for the subregion with the minimum average intensity. In a SAR deception jamming template with shadows, a sliding window with a size of

is moved within an image, and the average intensity value within each window is computed. The shadow point

corresponds to the center point of the subregion with the minimum average intensity, which can be expressed as follows:

where

represents the side image length;

, and

.
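The sliding-window search described above can be sketched in Python as follows. The window size `w`, the function name `find_shadow_point`, and the toy template are assumptions chosen for illustration; the integral image is only an implementation convenience for computing window means and is not claimed to be the authors' procedure.

```python
import numpy as np

def find_shadow_point(image, w):
    """Return the centre (row, col) of the w-by-w window with the lowest mean intensity."""
    img = np.asarray(image, dtype=float)
    # Integral image with a zero row and column prepended, so that every
    # window sum can be read off with four lookups.
    integral = np.pad(img.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    sums = (integral[w:, w:] - integral[:-w, w:]
            - integral[w:, :-w] + integral[:-w, :-w])
    means = sums / (w * w)
    # Top-left corner of the darkest window, then shift to its centre.
    i, j = np.unravel_index(np.argmin(means), means.shape)
    return (int(i) + w // 2, int(j) + w // 2), float(means[i, j])

# Toy template: uniform background with one dark 5x5 patch standing in for a shadow.
template = np.full((32, 32), 0.6)
template[10:15, 20:25] = 0.0
point, mean_intensity = find_shadow_point(template, w=5)
```

In this toy case the darkest window coincides with the dark patch, so the returned point is the patch centre.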

The shadow region can be expressed by:

2.2. Proposed Scheme

2.2.1. Scheme Overview

To realize rapid and realistic sample augmentation of SAR deception jamming templates with shadows, it is necessary to design a network that considers the speckle noise and shadow features of the templates. The design aims to generate SAR deception jamming templates with shadows that are highly similar to the input template and accurate in their details. Since speckle noise is inherent in SAR images, simulating it and using it as one of the noise inputs is essential to enhance the deceptiveness of the generated templates. Through processing by a GAN, the speckle noise can be preserved in the generated templates with shadows. Moreover, in SAR deception jamming templates with shadows, the shadowed areas are usually only slightly darker than the surrounding background, so the brightness difference between the shadow regions and their surroundings is small. This makes it challenging for a GAN to learn the shadow features effectively. Therefore, it is necessary to enhance the network’s ability to extract shadow features. The specific workflow of the proposed scheme is as follows.

The proposed network employs a pyramid-like multiscale structure as its overall framework to capture the internal information of SAR deception jamming templates with shadows. Each level of the pyramid has a GAN responsible for generating and discriminating samples of SAR deception jamming templates with shadows at the current scale [14]. The specific network architecture is shown in Figure 1. This approach requires only a single SAR deception jamming template with shadows, whose structure at different scales serves as the training set. That is, the approach captures both the global information and the detailed local information of the template.
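To illustrate how a single template can serve as a multiscale training set, the sketch below builds a pyramid of downsampled copies. The scale factor `r`, the number of scales, the nearest-neighbour resampling, and the function names are all assumptions; the text does not specify the resampling filter or these values.

```python
import numpy as np

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize; a stand-in for the unspecified resampling filter."""
    rows = np.arange(out_h) * img.shape[0] // out_h
    cols = np.arange(out_w) * img.shape[1] // out_w
    return img[rows][:, cols]

def build_pyramid(template, num_scales, r):
    """Return a list whose index 0 is the full-size template and whose last entry is the coarsest."""
    pyramid = []
    for n in range(num_scales + 1):
        h = max(1, round(template.shape[0] / r ** n))
        w = max(1, round(template.shape[1] / r ** n))
        pyramid.append(resize_nearest(template, h, w))
    return pyramid

template = np.random.default_rng(0).random((128, 128))   # placeholder for a real template
pyramid = build_pyramid(template, num_scales=4, r=2.0)
sizes = [level.shape for level in pyramid]
```

Each pyramid level would then play the role of the real sample for the GAN at that scale.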

In

Figure 1,

represents the scale of the pyramid, which is defined by the size of the input SAR deception jamming template with shadows;

represents the downsampled result set of the original input SAR deception jamming template with shadows at different scales, with a downsampling factor of

, where

and

. The pyramid model starts training from the coarsest scale, and the first generator

is capable of generating augmented samples

based on the input mixed noise

, which is a combination of Gaussian white noise

and speckle noise

. The specific process can be expressed as follows:

After generating sample

by mixing noise

, the upsampled result of

and Gaussian white noise are both inputted to the next scale

’s generator

; then, generator

generates a new sample

. This process is repeated iteratively, and the output of each scale

’s generator can be represented as follows:

where

represents the generator output at the (

)th scale, where

is the generator at that scale;

refers to the mixed noise input specific to the (

)th scale, and

represents the upsampled output of

from the

th scale generator.

Noise

from the

th scale and upsampled output

of the generator at the (

)th scale are simultaneously inputted into the generator. The main function of the generator is to generate the missing data in

and incorporate them into

. This process generates a new sample of SAR deception jamming template with shadows, which is denoted by

and can be expressed as follows:

where

represents the mapping function from the upsampled output

and noise

, which is used to generate the details for generator at the (

)th scale.
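The equations referred to in this subsection are not reproduced in this text. Since the scheme adopts the multiscale structure of SinGAN [14], the SinGAN recursion gives one plausible reading of the description above. All symbols below ($N$, $G_n$, $z_n$, $\tilde{x}_n$, $\psi_n$, $r$) and the indexing convention, in which $n = N$ is the coarsest scale, are taken from the SinGAN formulation and are assumptions here; the only departure suggested by the text is that the noise input at the coarsest scale mixes Gaussian white noise with simulated speckle noise.

```latex
% Coarsest scale: the generator maps (mixed) noise to a sample.
\tilde{x}_N = G_N(z_N)

% Finer scales: the generator receives noise and the upsampled coarser output.
\tilde{x}_n = G_n\left(z_n, (\tilde{x}_{n+1})\uparrow^{r}\right), \quad n < N

% Residual form: the convolutional mapping \psi_n adds the missing details.
\tilde{x}_n = (\tilde{x}_{n+1})\uparrow^{r}
            + \psi_n\left(z_n + (\tilde{x}_{n+1})\uparrow^{r}\right)
```

The residual form makes explicit that each generator only has to supply the details absent from the upsampled coarser output.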

Generators at the same scale share a similar structure. Training proceeds from bottom to top, that is, from coarse to fine scales. At each scale, the generator output is passed to the generator at the next scale and is also fed to the discriminator at the current scale. The discriminator compares the generated output with the input SAR deception jamming template with shadows downsampled to the current scale. Training at a scale continues until the discriminator is unable to distinguish between real and generated samples.

A SAR deception jamming template with shadows is composed of three regions with different features: the target region, the shadow region, and the background region, as given in Eq. (8). The target region contains complex, bright details with regular shapes; the shadow region is darker and relatively clean; the background region usually exhibits distinct texture details and lacks clear geometric shapes, which makes it easier for the network to learn.

2.2.2. Specific Description of Scheme

- 1. Generator Structure

The generator uses Gaussian white noise as its original input and incorporates speckle noise to form a mixed noise input. Between the five fully convolutional blocks, each composed of a convolutional layer (Conv) [24], a batch normalization layer (BN), and a leaky rectified linear unit (Leaky ReLU), a spatial attention mechanism (SAM) block is introduced [25,26,27,28]. This mechanism enhances the network’s ability to learn the target and shadow regions. At the image region level, the SAM block helps the network capture the high-response areas in a feature map, particularly the regions corresponding to shadows, which facilitates the processing of the shadow features in the SAR deception jamming template. Since the target and its shadow are crucial during learning and shadow features are difficult to extract, the attention mechanism is adopted to improve the network’s capability of extracting the shape and contour features of the shadow region. The inception block [29], which is placed at the front of the generator, operates at multiple scales, enabling a more detailed extraction of the shape contours and internal details of a SAR target and its shadow and thereby enhancing the authenticity of the generated samples. This block also reduces redundant information and accelerates convergence. The residual dense block with the attention mechanism makes full use of the features extracted by the convolutional layers at various levels [30], further improving the extraction of shadow features. Additionally, it mitigates the vanishing gradient problem that may occur in deep networks and enhances network stability. The structure of the generator is shown in Figure 2.
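The exact form of the SAM block is not given in this text. As a rough illustration of how a spatial attention mask reweights a feature map, the sketch below pools over channels and applies a sigmoid. This is an assumption modeled on common spatial attention designs: the learned convolution that usually produces the mask is replaced here by fixed scalar weights (`w_avg`, `w_max`, `bias`), which are hypothetical parameters.

```python
import numpy as np

def spatial_attention(features, w_avg=1.0, w_max=1.0, bias=0.0):
    """features has shape (C, H, W). Returns (reweighted features, attention mask)."""
    avg_map = features.mean(axis=0)           # per-pixel average over channels
    max_map = features.max(axis=0)            # per-pixel maximum over channels
    # Stand-in for a learned convolution over the two pooled maps.
    logits = w_avg * avg_map + w_max * max_map + bias
    mask = 1.0 / (1.0 + np.exp(-logits))      # sigmoid, values strictly in (0, 1)
    # Broadcast the (H, W) mask over all channels.
    return features * mask[None, :, :], mask

features = np.random.default_rng(0).normal(size=(8, 16, 16))   # placeholder feature map
attended, mask = spatial_attention(features)
```

Positions with a mask value close to 1 are passed through almost unchanged, while positions with a low value are suppressed, which is how the block steers later layers toward selected regions.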

- 2. Discriminator Structure

The discriminator adopts the patch-GAN approach [31,32,33], which is inspired by the Markovian discriminator concept. It consists of five fully convolutional layers that use a downsampling scheme to capture the data distribution at the current scale, as shown in Figure 3. The discriminator takes both the input SAR deception jamming template with shadows, downsampled to the current scale, and the generated SAR deception jamming template with shadows as input data. The fully convolutional network learns the internal distribution of the two templates at the same scale and, through the loss function, discriminates between the real and generated templates at the current scale. Through the adversarial game between the generator and the discriminator, the generated SAR deception jamming templates with shadows become increasingly realistic.
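A patch-based discriminator judges local patches rather than the whole image, and the patch size equals the receptive field of its last layer. The sketch below computes that receptive field for a stack of convolutions. The kernel sizes and strides of the five layers are not stated in this text, so both configurations shown are assumptions used only to illustrate the calculation.

```python
def receptive_field(layers):
    """layers is a list of (kernel_size, stride) pairs, ordered from input to output."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        # Each layer widens the field by (kernel - 1) input-pixel steps of size `jump`.
        rf += (kernel - 1) * jump
        # Striding increases the spacing between neighbouring output positions.
        jump *= stride
    return rf

# Assumed configuration A: five 3x3 convolutions with stride 1.
rf_plain = receptive_field([(3, 1)] * 5)
# Assumed configuration B: three 4x4 stride-2 layers followed by two 4x4 stride-1 layers.
rf_strided = receptive_field([(4, 2)] * 3 + [(4, 1)] * 2)
```

Under configuration A each discriminator output sees an 11 x 11 patch, while configuration B gives a 70 x 70 patch, which shows how strongly downsampling layers enlarge the region each patch decision covers.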

- 3. Loss Function

The model training starts from the coarsest scale and follows the multiscale structure shown in Figure 1. After a scale has been trained, the GAN at that scale is fixed. The training loss of the GAN at each scale includes the adversarial loss and the reconstruction loss, which can be expressed as follows:

where

represents the weight of the reconstruction loss in the training loss.

Adversarial loss: The generator at each scale is paired with a Markovian discriminator, which judges the authenticity of the generated shadowed SAR deception jamming templates at that scale. The discriminator’s discrimination of the mean value of the spectrum can be expressed by:

where

is the average value function;

is the distribution of a real image;

is the distribution of the generated image;

is the distribution of

obeying

;

is the distribution of

obeying

;

is the concentration area of the real sample;

is the concentration area of the generated sample;

is randomly interpolated between

and

, and

;

is the distribution of

obeying

;

represents the discriminator output when discriminating the input shadowed SAR deception jamming template;

represents the output of the discriminator when discriminating the generated shadowed SAR deception jamming template;

represents the expectation;

is the gradient operator;

represents the

norm;

represents the weight of the gradient loss function.
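The adversarial loss equation itself is missing from this text. The quantities defined above (real and generated distributions, a sample interpolated randomly between a real and a generated sample, the gradient operator, a norm, and a gradient-loss weight) match the Wasserstein loss with gradient penalty (WGAN-GP) used by SinGAN [14]. A sketch of that loss is given below; the symbols $P_r$, $P_g$, $\hat{x}$, $\varepsilon$, and $\lambda$ follow the usual WGAN-GP notation and are assumptions rather than the authors' confirmed notation.

```latex
L_{\mathrm{adv}}(G_n, D_n) =
    \mathbb{E}_{\tilde{x} \sim P_g}\left[ D_n(\tilde{x}) \right]
  - \mathbb{E}_{x \sim P_r}\left[ D_n(x) \right]
  + \lambda \, \mathbb{E}_{\hat{x} \sim P_{\hat{x}}}
      \left[ \left( \left\| \nabla_{\hat{x}} D_n(\hat{x}) \right\|_2 - 1 \right)^2 \right],
\qquad
\hat{x} = \varepsilon x + (1 - \varepsilon)\tilde{x}, \quad \varepsilon \sim U[0, 1]
```

The last term penalizes the discriminator when the norm of its gradient at interpolated samples deviates from 1, which stabilizes training.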

Reconstruction loss: To generate a specific noise map of the original image

, assume that

, where

is the noise reconstructed at the

th scale, and

;

is the fixed noise map;

is the image generated at the

th scale using the noise map. When

, the reconstruction loss can be expressed by:

When

, the reconstruction loss can be expressed by:
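The loss equations of this subsection are not reproduced in this text. Assuming that they follow the SinGAN formulation [14], on which the multiscale structure is based, the total loss and the reconstruction loss can be sketched as follows. The symbols $\alpha$, $z^{*}$, $z_n^{\mathrm{rec}}$, $\tilde{x}_n^{\mathrm{rec}}$, and $x_n$, as well as the convention that $n = N$ is the coarsest scale, are assumptions borrowed from SinGAN.

```latex
% Training loss at scale n: adversarial term plus weighted reconstruction term.
L_n = L_{\mathrm{adv}}(G_n, D_n) + \alpha \, L_{\mathrm{rec}}(G_n)

% Fixed set of reconstruction noise maps: noise only at the coarsest scale.
\{ z_N^{\mathrm{rec}}, z_{N-1}^{\mathrm{rec}}, \ldots, z_0^{\mathrm{rec}} \}
    = \{ z^{*}, 0, \ldots, 0 \}

% Reconstruction loss at the finer scales.
L_{\mathrm{rec}} = \left\| G_n\left( 0, (\tilde{x}_{n+1}^{\mathrm{rec}})\uparrow^{r} \right) - x_n \right\|^2,
    \quad n < N

% Reconstruction loss at the coarsest scale.
L_{\mathrm{rec}} = \left\| G_N(z^{*}) - x_N \right\|^2, \quad n = N
```

The reconstruction term ensures that one specific set of noise maps reproduces the input template, which anchors the generated samples to the original.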

3. Results

3.1. Data Description

The experimental data were taken from the MSTAR dataset [34,35,36]. The MSTAR data were collected using the Sandia National Laboratories X-band SAR sensor platform operating in the spotlight mode with a resolution of 0.3 m. The publicly available MSTAR dataset consists of ten categories of ground targets: armored vehicles (BMP-2, BRDM-2, BTR-60, and BTR-70), tanks (T-62 and T-72), a self-propelled howitzer (2S1), a self-propelled anti-aircraft gun (ZSU-23-4), a truck (ZIL-131), and a bulldozer (D7). Furthermore, the MSTAR dataset covers various depression angles and orientations and has been widely used for testing and performance comparison of SAR automatic target recognition algorithms.

In the experiment, the MSTAR data on a T72 tank with shadows were used as input data for training the network proposed in this scheme. The goal was to generate a library of SAR deception jamming templates with shadows.

3.2. Experimental Result

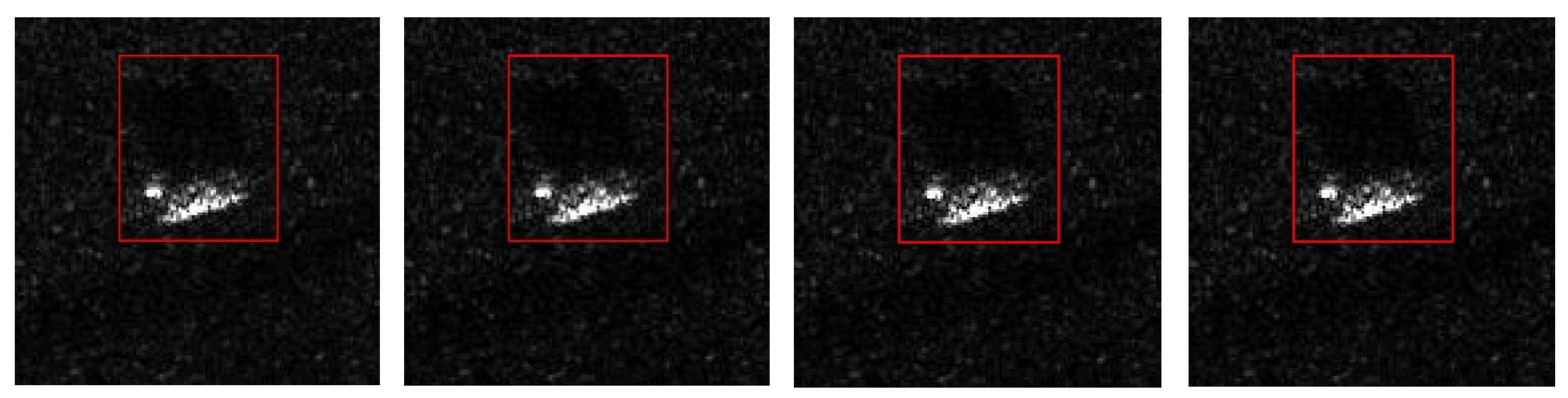

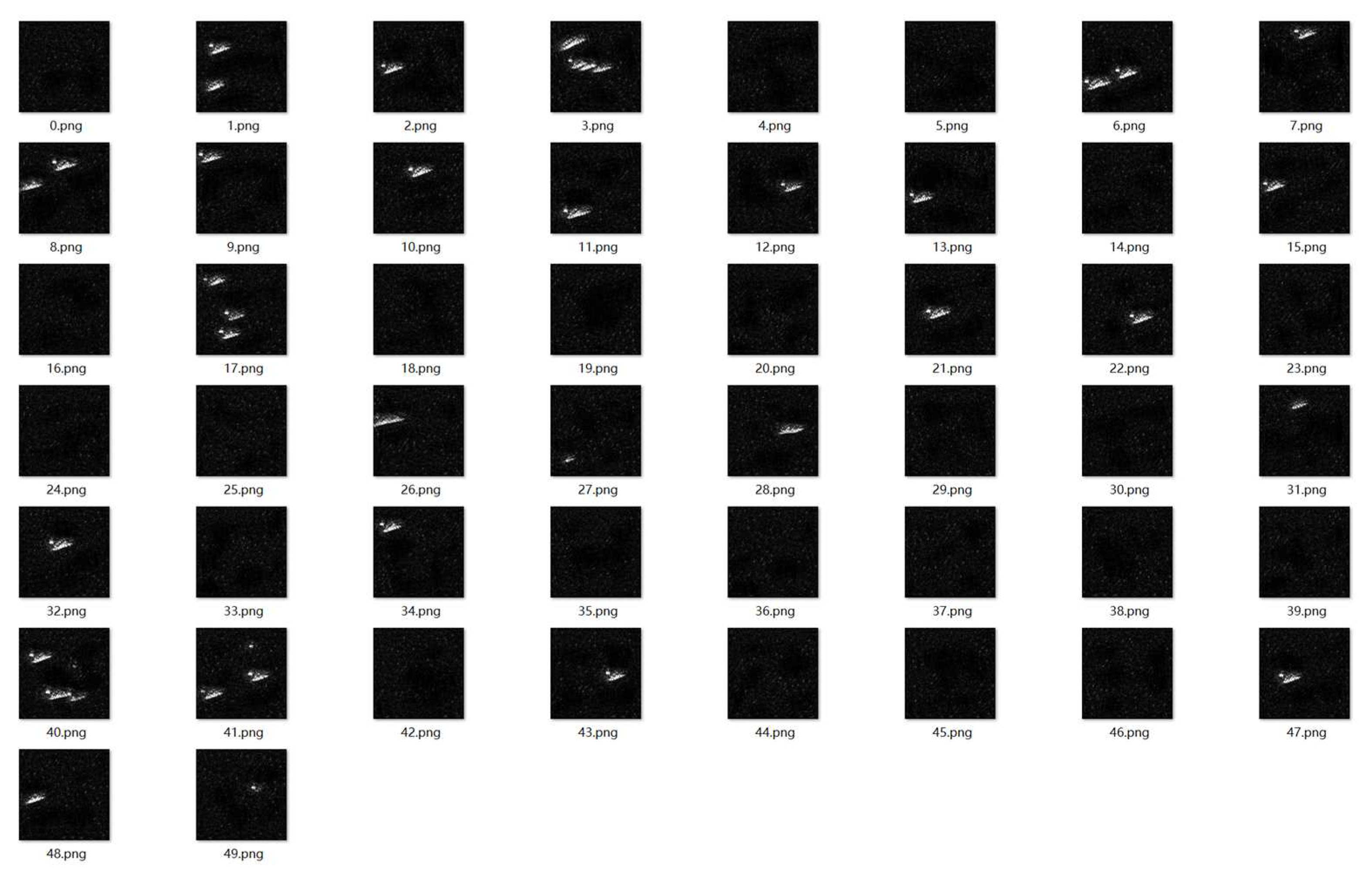

The main objective of the proposed network is to extract multilevel features of a target and generate SAR deception jamming templates with shadows that have a high similarity to the input SAR deception jamming template with shadows of the T72 tank. The 50 SAR deception jamming templates with shadows of the T72 tank generated by the proposed network are presented in Figure 4. The comparison between the real SAR deception jamming template with shadows of the T72 tank and the template generated by the proposed network is presented in Figure 5.

The SAR deception jamming template with shadows of the T72 tank generated by the proposed network showed that the image exhibited prominent features of speckle noise, indicating a high level of authenticity. Moreover, the shadow contour of the T72 tank was realistic and well defined, and the edges and internal details of the tank were accurately represented. The visual comparison of the real and generated SAR deception jamming template with shadows demonstrated that the generated template had a high level of authenticity.

3.3. Effectiveness Analysis of Scheme

3.3.1. Quantitative Analysis of Image Quality

To evaluate the quality of the generated SAR deception jamming templates with shadows, their targets, shadows, and speckle noise were assessed.

The equivalent number of looks (ENL) was used to measure the relative strength of speckle noise in SAR images [37,38,39]. A lower ENL value indicated a greater presence of speckle noise. The ENL was calculated by:

$$\mathrm{ENL} = \frac{\mu^2}{\sigma^2}$$

where $\mu$ represents the mean value of a SAR image, and $\sigma$ represents the standard deviation of the SAR image. The ENL was calculated for the background regions in each image.
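As a minimal sketch of the ENL computation described above, the snippet below evaluates the squared mean divided by the variance on a homogeneous region. The synthetic data are an assumption: fully developed single-look speckle in an intensity image is modeled as exponentially distributed, and averaging four independent looks should raise the ENL to about 4. The function name `enl` is illustrative.

```python
import numpy as np

def enl(region):
    """Equivalent number of looks of a homogeneous region: mean squared over variance."""
    region = np.asarray(region, dtype=float)
    return region.mean() ** 2 / region.var()

rng = np.random.default_rng(0)
# Single-look intensity speckle over a uniform background (assumed exponential model).
single_look = rng.exponential(scale=1.0, size=(256, 256))
# Averaging four independent looks reduces the speckle and raises the ENL.
four_look = rng.exponential(scale=1.0, size=(4, 256, 256)).mean(axis=0)

enl_single = enl(single_look)
enl_four = enl(four_look)
```

The stronger the speckle, the larger the variance relative to the mean and the lower the ENL, which matches the interpretation used in the text.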

The structural similarity (SSIM) index [40,41,42], which reflects the similarity between two images in terms of luminance, contrast, and structure, was also used. A higher SSIM value indicated a higher similarity of images. The SSIM was obtained by:

where

represents the average value of an image

;

is the average value of an image

;

and

represent the standard deviations of images

and

, respectively;

, where

is the dynamic range of pixel values;

and

are constants set to default values of 0.0001 and 0.0009, respectively. The SSIM was calculated for the red rectangular regions in each image.
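The SSIM equation is not reproduced in this text. The sketch below implements the standard SSIM formula over a single global window, under several assumptions: the stabilizing constants are $(k_1 L)^2$ and $(k_2 L)^2$ with the commonly used defaults $k_1 = 0.01$ and $k_2 = 0.03$ (whose squares, 0.0001 and 0.0009, may be what the constants quoted above refer to), the dynamic range $L$ is 1, and statistics are computed over the whole region rather than over sliding local windows as in most library implementations.

```python
import numpy as np

def ssim_global(x, y, dynamic_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized regions (simplified sketch)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

rng = np.random.default_rng(0)
reference = rng.random((64, 64))                         # placeholder region
distorted = reference + rng.normal(scale=0.2, size=reference.shape)
ssim_same = ssim_global(reference, reference)
ssim_distorted = ssim_global(reference, distorted)
```

Identical regions give an SSIM of 1, and any distortion lowers the value, so the metric is read as higher is better.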

The evaluation results of the first three images in Figure 4 regarding the ENL and SSIM metrics are presented in Table 1.

Table 1 shows that the original image in Figure 5(a) had an ENL value of 2.5604. The generated SAR deception jamming templates with shadows had ENL values of 1.9764, 1.9090, and 1.9092, with an average value of 1.9315. The difference between the ENL values of the generated templates and the original image was therefore small: the average ENL of the generated templates was 75.44% of the ENL of the original image, which is taken as the speckle similarity between the images. This result suggested that the generated SAR deception templates have high authenticity.

For the original image and the generated SAR deception templates, the SSIM values of the target and shadow regions were calculated. The SSIM values between the original image and the three generated templates were 0.9617, 0.9620, and 0.9643, with an average value of 0.9627, which indicated a high similarity of 96.27% between the generated and original images. Therefore, the proposed method could generate SAR deception jamming templates with shadows that exhibit high similarity to the original image and have high authenticity.

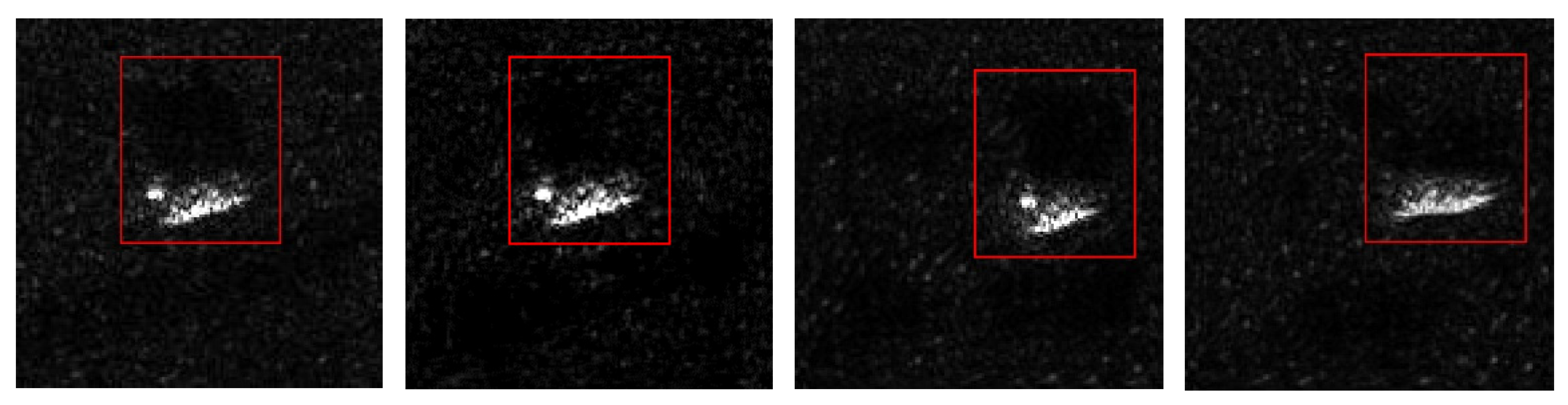

3.3.2. Comparison with SinGAN Method

This experiment was performed on shadowed T72 tank samples from the MSTAR dataset. Since SinGAN is currently one of the methods that can perform sample augmentation on a shadowed SAR deception jamming template and obtain good results, it was employed for sample augmentation of SAR deception templates with shadows, resulting in a dataset of 50 T72 tank SAR deception templates, as shown in Figure 6. The comparison between the real SAR deception jamming template with shadows of the T72 tank and the template generated by the SinGAN method is presented in Figure 7.

Table 2 presents the evaluation results of the first three images using the two metrics, ENL and SSIM.

The results in Table 2 show that the ENL value of the original image in Figure 7(a) was 2.5604, and the ENL values of the SAR deception jamming templates with shadows generated by SinGAN were 1.6677, 1.7150, and 0.5637, with an average value of 1.3155. The average ENL of the generated templates differed significantly from the ENL of the original image, indicating a low similarity of only 51.38%. This result suggested that the speckle noise of the SinGAN-generated templates matched that of the original image less closely than the speckle noise produced by the proposed approach, resulting in lower authenticity.

For the original image and the SAR deception templates generated by SinGAN, the SSIM values of the target and shadow regions were calculated. The SSIM values were 0.3846, 0.3794, and 0.3925, with an average value of 0.3855, indicating a 38.55% similarity between the generated templates and the original image. These values were lower than those of the proposed approach for the target and shadow regions. Therefore, compared with SinGAN, the proposed approach could generate SAR deception jamming templates with shadows that exhibit higher similarity to the original image and higher authenticity.
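The text does not state how the speckle similarity percentages are derived. The short check below shows that the reported figures are reproduced when the similarity is taken as the ratio of the average ENL of the generated templates to the ENL of the original image; this interpretation is an inference from the reported numbers, and the variable and function names are illustrative.

```python
original_enl = 2.5604
proposed_enl = [1.9764, 1.9090, 1.9092]   # proposed network, Table 1
singan_enl = [1.6677, 1.7150, 0.5637]     # SinGAN, Table 2

def mean(values):
    return sum(values) / len(values)

def enl_similarity_percent(generated, reference):
    """Average generated ENL as a percentage of the reference ENL (assumed definition)."""
    return 100.0 * mean(generated) / reference

proposed_mean = round(mean(proposed_enl), 4)
singan_mean = round(mean(singan_enl), 4)
proposed_similarity = round(enl_similarity_percent(proposed_enl, original_enl), 2)
singan_similarity = round(enl_similarity_percent(singan_enl, original_enl), 2)
```

Under this reading the averages are 1.9315 and 1.3155, and the similarities are 75.44% and 51.38%, in agreement with the values quoted in the text.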

4. Discussion

To achieve fast and effective SAR deception jamming, it is necessary to perform sample augmentation on SAR deception jamming templates to generate a high-quality template dataset. Currently, the existing sample augmentation methods for SAR deception jamming templates face two main problems: low authenticity due to the absence of speckle noise and low similarity between the shadow regions in the generated templates and the original image. Therefore, this paper proposes a sample augmentation method based on GANs that can generate high-quality SAR deception jamming templates with shadows.

The proposed method adopts a pyramid-style multiscale structure as an overall framework to capture the internal information on SAR deception jamming templates with shadows. Each level of the pyramidal structure has a GAN responsible for generating and discriminating SAR deception jamming samples at that level. The generator uses residual dense blocks with attention mechanisms, multiscale modules, and region attention modules to enhance the network’s learning capability of shadow features. In addition, the speckle noise is introduced as input data to the generator, ensuring that the generated images contain the characteristic features of speckle noise. The discriminator adopts a patch-GAN approach with five fully convolutional layers used to assess the quality of generated images and compute the corresponding loss function, which improves the ability of both the generator and the discriminator iteratively to produce increasingly realistic images.

The effectiveness of the proposed method is demonstrated by comparing its results with those of the SinGAN method on two evaluation metrics, the ENL and the SSIM. The comparison shows that the proposed approach achieves a 75.44% similarity between the generated and real images in terms of speckle noise, which is significantly higher than the 51.38% achieved by the SinGAN method. Moreover, the average SSIM between the generated and real images for the target and shadow regions reaches 0.9627 (96.27%) with the proposed method, compared with 0.3855 (38.55%) for the SinGAN approach. This validates the effectiveness of the proposed approach in generating SAR deception jamming templates with shadows.

In future research, more complex inception modules, such as Inception V3, could be considered to improve the computational efficiency of the generator further.

Author Contributions

Conceptualization, S.L.; methodology, Q.Z.; validation, Q.Z., W.L. and G.L.; formal analysis, Y.L.; investigation, K.C.; data curation, W.L.; writing—original draft preparation, G.L.; writing—review and editing, S.L.; supervision, K.C.; project administration, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Stable-Support Scientific Project of China Research Institute of Radiowave Propagation, grant number No.A132003W02.

Data Availability Statement

Not applicable.

Acknowledgments

This experiment was supported by the Aerospace Information Innovation Institute of the Chinese Academy of Sciences and the China Radio Propagation Institute, and we would like to express our heartfelt thanks!

Conflicts of Interest

The authors declare no conflict of interest.

References

- Brown, W.M. Synthetic Aperture Radar. IEEE Trans. Aerosp. Electron. Syst. 1967, AES-3, 217–229.

- Doerry, A.W.; Dickey, F.M. Synthetic aperture radar. Opt. Photonics News. 2004, 15, 28–33.

- Qin, J.; Liu, Z.; Ran, L.; Xie, R.; Tang, J.; Zhu, H. An SAR Image Automatic Target Recognition Method Based on the Scattering Parameter Gaussian Mixture Model. Remote Sens. 2023, 15, 3800.

- Pei, J.; Huo, W.; Wang, C.; Huang, Y.; Zhang, Y.; Wu, J.; Yang, J. Multiview deep feature learning network for SAR automatic target recognition. Remote Sens. 2021, 13, 1455.

- Zhou F, Zhao B, Tao M, et al. A large scene deceptive jamming method for space-borne SAR. IEEE Transactions on Geoscience and Remote Sensing, 2013, 51(8): 4486-4495.

- Long S, Hong-rong Z, Yue-sheng T, et al. Research on deceptive jamming technologies against SAR. In Proceedings of the 2009 2nd Asian-Pacific Conference on Synthetic Aperture Radar. IEEE, China, 26-29 October 2009; pp. 521-525.

- Wang H, Zhang S, Wang W Q, et al. Multi-scene deception jamming on SAR imaging with FDA antenna. IEEE Access, 2019, 8: 7058-7069.

- Sun Q, Shu T, Yu K B, et al. Efficient deceptive jamming method of static and moving targets against SAR. IEEE Sensors Journal, 2018, 18(9): 3610-3618.

- Tian T, Zhou F, Bai X, et al. A partitioned deceptive jamming method against TOPSAR. IEEE Transactions on Aerospace and Electronic Systems, 2019, 56(2): 1538-1552.

- Zhao B, Huang L, Li J, et al. Deceptive SAR jamming based on 1-bit sampling and time-varying thresholds. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2018, 11(3): 939-950.

- Zhao B, Huang L, Li J, et al. Target reconstruction from deceptively jammed single-channel SAR. IEEE Transactions on Geoscience and Remote Sensing, 2017, 56(1): 152-167.

- Vlahakis V, Ioannidis M, Karigiannis J, et al. Archeoguide: an augmented reality guide for archaeological sites. IEEE Computer Graphics and Applications, 2002, 22(5): 52-60.

- Wenzel M. Generative Adversarial Networks and Other Generative Models. Machine Learning for Brain Disorders. New York, NY: Springer US, 2012: 139-192.

- Shaham T R, Dekel T, Michaeli T. Singan: Learning a generative model from a single natural image. In Proceedings of the IEEE/CVF international conference on computer vision, Korea, 27 October-2 November 2019; pp. 4570-4580.

- Fan W, Zhou F, Zhang Z, et al. Deceptive jamming template synthesis for SAR based on generative adversarial nets. Signal processing, 2020, 172: 107528.

- Goodman J W. Some fundamental properties of speckle. JOSA, 1976, 66(11): 1145-1150.

- Lee J S, Grunes M R, De Grandi G. Polarimetric SAR speckle filtering and its implication for classification. IEEE Transactions on Geoscience and remote sensing, 1999, 37(5): 2363-2373.

- Raney R K, Wessels G J. Spatial considerations in SAR speckle consideration. IEEE Transactions on Geoscience and Remote Sensing, 1988, 26(5): 666-672.

- Mullissa A G, Marcos D, Tuia D, et al. DeSpeckNet: Generalizing deep learning-based SAR image despeckling. IEEE Transactions on Geoscience and Remote Sensing, 2020, 60: 1-15.

- Lee J S, Jurkevich L, Dewaele P, et al. Speckle filtering of synthetic aperture radar images: A review. Remote sensing reviews, 1994, 8(4): 313-340.

- Tang X, Zhang X, Shi J, et al. SAR deception jamming target recognition based on the shadow feature. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO). IEEE, Greece, 28 August-2 September 2017; pp. 2491-2495.

- Papson S, Narayanan R M. Classification via the shadow region in SAR imagery. IEEE Transactions on Aerospace and Electronic Systems, 2012, 48(2): 969-980.

- Cui J, Gudnason J, Brookes M. Radar shadow and superresolution features for automatic recognition of MSTAR targets. In Proceedings of the IEEE International Radar Conference, 2005. IEEE, USA, 9-12 May 2005; pp. 534-539.

- Nebauer C. Evaluation of convolutional neural networks for visual recognition. IEEE transactions on neural networks, 1998, 9(4): 685-696.

- Zhu X, Cheng D, Zhang Z, et al. An empirical study of spatial attention mechanisms in deep networks. In Proceedings of the IEEE/CVF international conference on computer vision, Korea, 27 October-2 November 2019; pp. 6688-6697.

- Chun M M, Jiang Y. Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive psychology, 1998, 36(1): 28-71.

- Hoffman J E, Subramaniam B. The role of visual attention in saccadic eye movements. Perception & psychophysics, 1995, 57(6): 787-795.

- Deubel H, Schneider W X. Saccade target selection and object recognition: Evidence for a common attentional mechanism. Vision research, 1996, 36(12): 1827-1837.

- Szegedy C, Vanhoucke V, Ioffe S, et al. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE conference on computer vision and pattern recognition, USA, 26 June-1 July 2016; pp. 2818-2826.

- Zhang Y, Tian Y, Kong Y, et al. Residual dense network for image super-resolution. In Proceedings of the IEEE conference on computer vision and pattern recognition, USA, 18-22 June 2018; pp. 2472-2481.

- Wang Y, Yan X, Guan D, et al. Cycle-snspgan: Towards real-world image dehazing via cycle spectral normalized soft likelihood estimation patch gan. IEEE Transactions on Intelligent Transportation Systems, 2022, 23(11): 20368-20382.

- Leihong Z, Zhai Y, Xu R, et al. An End-to-end Computational Ghost Imaging Method that Suppresses. Phys. Rev. Lett, 2002, 89(11): 113601.

- Saypadith S. A Study on Anomaly Detection in Surveillance. neural networks, 2006, 313(5786): 504-507.

- Lin C, Peng F, Wang B H, et al. Research on PCA and KPCA self-fusion based MSTAR SAR automatic target recognition algorithm. Journal of Electronic Science and Technology, 2012, 10(4): 352-357.

- Keydel E R, Lee S W, Moore J T. MSTAR extended operating conditions: A tutorial. Algorithms for Synthetic Aperture Radar Imagery III, 1996, 2757: 228-242.

- Yang Y, Qiu Y, Lu C. Automatic target classification-experiments on the MSTAR SAR images. In Proceedings of the Sixth international conference on software engineering, artificial intelligence, networking and parallel/distributed computing and first ACIS international workshop on self-assembling wireless network. IEEE, USA, 20-22 June 2005; pp. 2-7.

- Vespe M, Greidanus H. SAR image quality assessment and indicators for vessel and oil spill detection. IEEE Transactions on Geoscience and Remote Sensing, 2012, 50(11): 4726-4734.

- Tang Z, Yu C, Deng Y, et al. Evaluation of Deceptive Jamming Effect on SAR Based on Visual Consistency. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2021, 14: 12246-12262.

- Cui Y, Zhou G, Yang J, et al. Unsupervised estimation of the equivalent number of looks in SAR images. IEEE Geoscience and remote sensing letters, 2011, 8(4): 710-714.

- Wang S, Rehman A, Wang Z, et al. SSIM-motivated rate-distortion optimization for video coding. IEEE Transactions on Circuits and Systems for Video Technology, 2011, 22(4): 516-529.

- Hore A, Ziou D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th international conference on pattern recognition. IEEE, Turkey, 23-26 August 2010; pp. 2366-2369.

- Al-Najjar Y, Chen D. Comparison of image quality assessment: PSNR, HVS, SSIM, UIQI. International Journal of Scientific and Engineering Research, 2012, 3(8): 1-5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).