Introduction

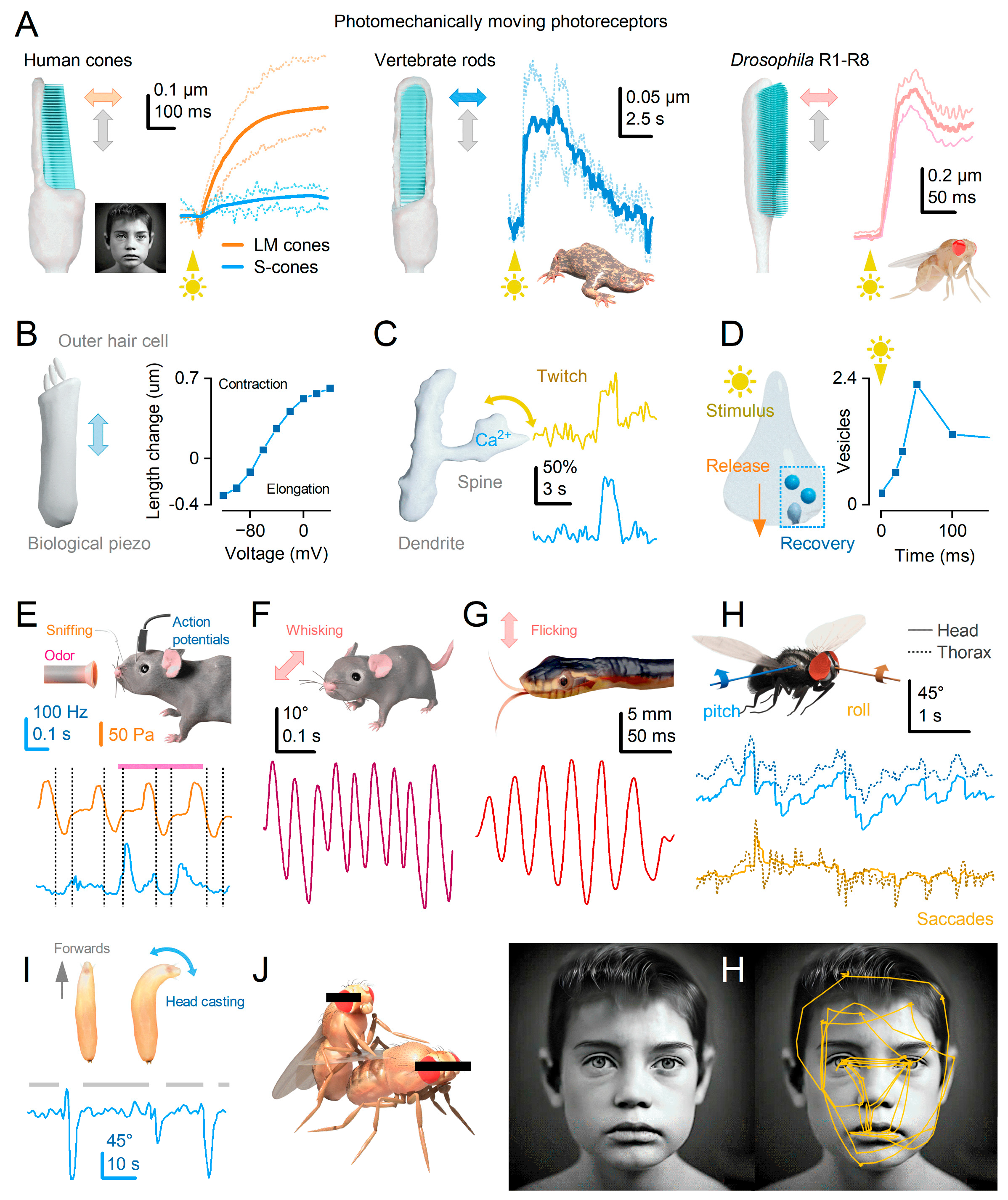

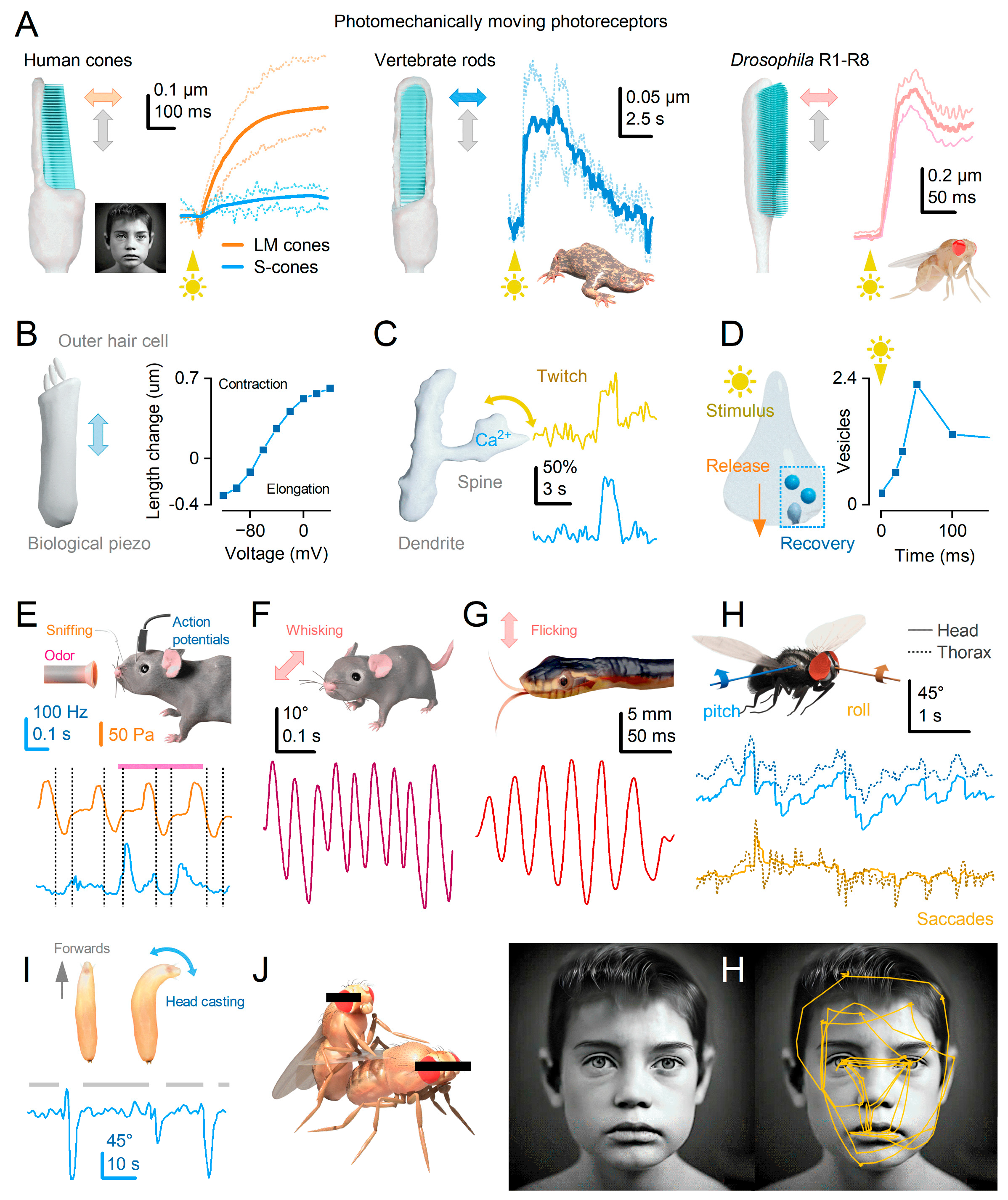

Behaviour arises from intrinsic changes in brain activity and from responses to external stimuli, guided by animals’ heritable characteristics and cognition, which shape and adjust nervous systems to maximise survival. In this dynamic world governed by the laws of thermodynamics, brains are never static. Instead, their inner workings actively utilise and store electrochemical, kinetic, and thermal energy, constantly moving and adapting in response to intrinsic activity and environmental shifts, orchestrated by genetic information encoded in DNA. However, our attempts to comprehend the resulting neural information sampling, processing, and codes often rely on stationarity assumptions and on reductionist analyses of behaviour or brain activity. Unfortunately, these preconceptions can prevent us from appreciating the role in sensing and behaviour of rapid biomechanical movements and microstructural changes of neurons and synapses, which we call neural morphodynamics.

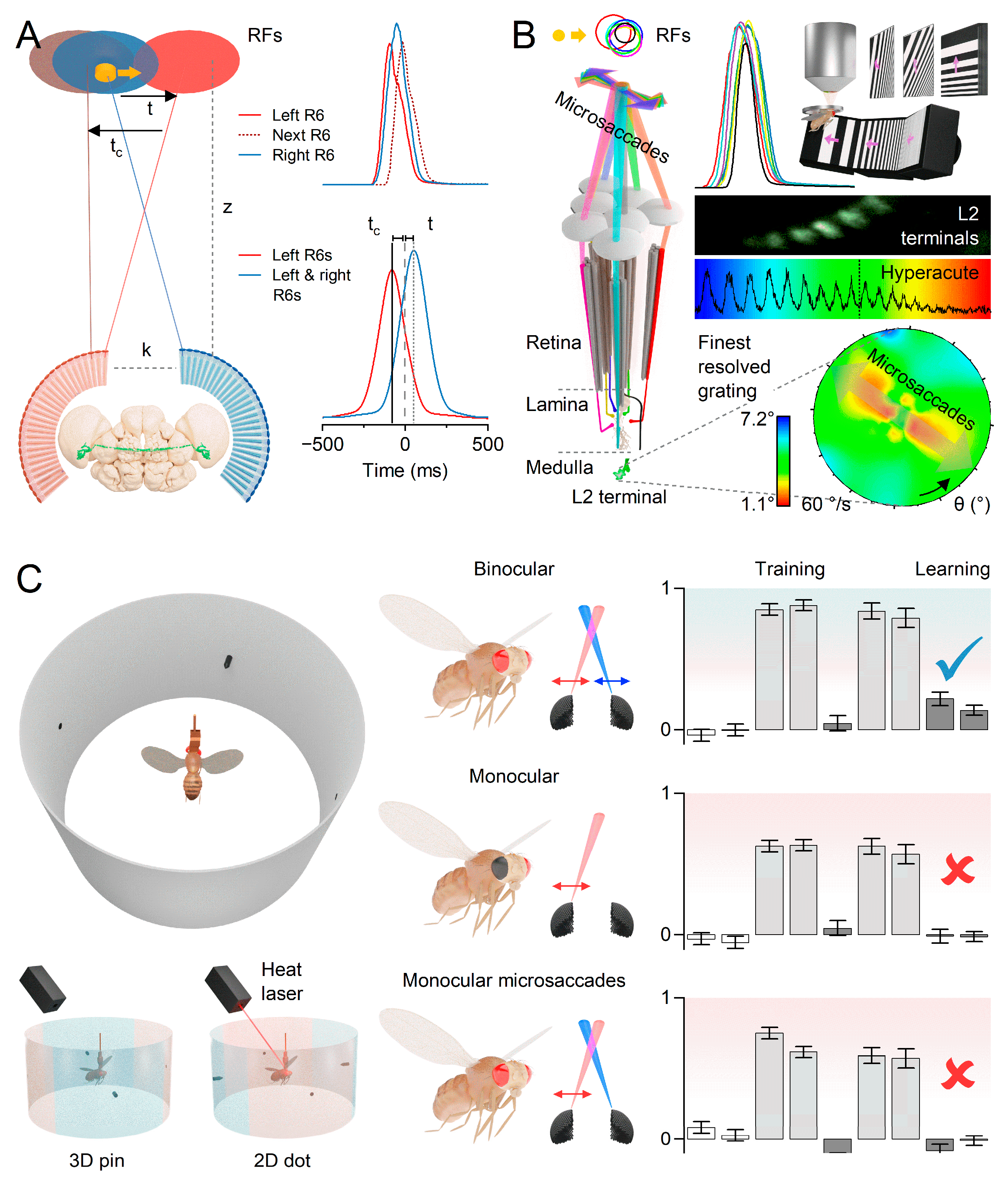

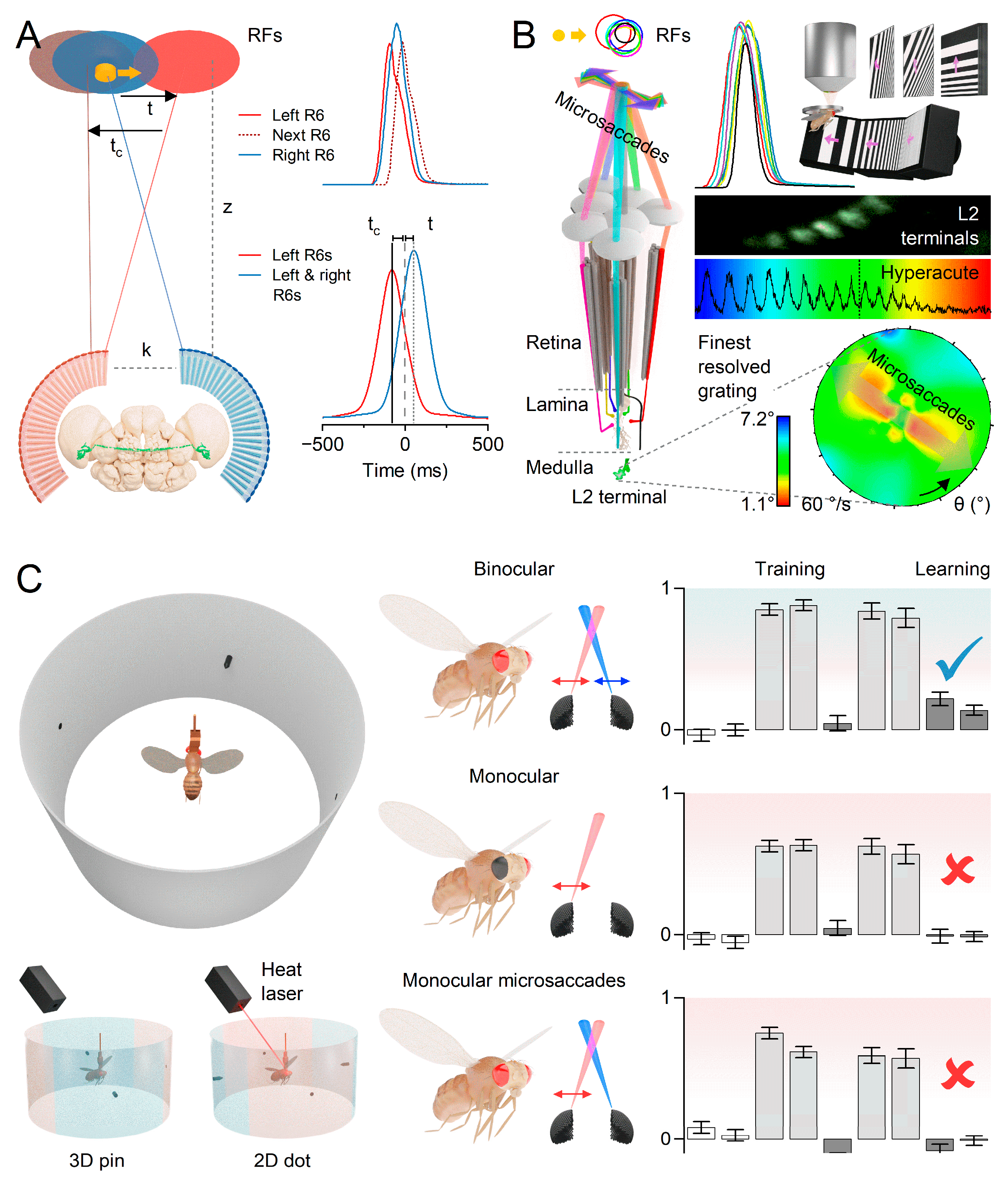

While electron-microscopic brain atlases provide detailed wiring maps at the level of individual synapses1-4, they fail to capture the continuous motion of cells. Fully developed neurons actively move, with their constituent molecules, molecular structures, dendritic spines and cell bodies engaging in twitching motions that facilitate signal processing and plasticity5-18 (Figure 1A–D). Additionally, high-speed in vivo X-ray holography14, electrophysiology19 and calcium imaging10 of neural activity suggest that ultrafast bursty or microsaccadic motion influences the release of neurotransmitter quanta, adding an extra layer of complexity to neuronal processing.

Recent findings on sensory organs and graded potential synapses provide compelling evidence for the crucial role of rapid morphodynamic changes in neural information sampling and synaptic communication6,8,12-14,19,23,34. In Drosophila, these phasic changes enhance performance and efficiency by synchronising responses to moving stimuli, effectively operating as a form of predictive coding12,14. These changes empower the small fly brain to achieve remarkable capabilities12-14, such as hyperacute stereovision14 and time-normalised, aliasing-free responsiveness to naturalistic light contrasts of changing velocity, starting from photoreceptors and the first visual synapse12,35. Importantly, given the compound eyes’ small size, these encoding tasks would be physically possible only with active movements12,14,35. Ultrafast photoreceptor microsaccades enable flies to perceive 2- and 3-dimensional patterns 4-10 times finer than the static pixelation limit imposed by photoreceptor spacing36,37. Thus, neural morphodynamics can be considered a natural extension of animals’ efficient saccadic encoding strategy to maximise sensory information while linking perception with behaviour (Figure 1E–J)12,14,26-29,32.

Overarching questions remain: Are morphodynamic information sampling and processing prevalent across all brain networks, coevolving with morphodynamic sensing to amplify environmental perception, action planning, and behavioural execution? How does the genetic information, accumulated over hundreds of millions of years and stored in DNA, shape and drive brain morphodynamics to maximise the sampling and utilisation of sensory information within animals’ biological neural networks throughout their relatively short lifespans, ultimately improving fitness? Despite the diversity of animal functions and morphologies, the fact that neurons across species operate similar molecular motors and reaction cascades within compartmentalised substructures suggests that the morphodynamic code may be universal.

This review delves into this phenomenon, specifically focusing on recent discoveries in insect vision and visually guided behaviour. Insects have adapted to colonise all habitats except the deep sea, producing complex building behaviour and societies, exemplified by ants, bees, and termites. Furthermore, insects possess remarkable cognitive abilities that often rely on hyperacute perception. For instance, paper wasps can recognise individual faces among their peers38,39, while Drosophila can distinguish minute parasitic wasp females from harmless males40. These findings alone challenge the prevailing theoretical concepts41,42, as the visual patterns tested may occupy only a pixel or two if viewed from the experimental positions through static compound eyes. Instead, we elucidate how such heightened performance naturally emerges from ultrafast morphodynamics in sensory processing and behaviours12,14, emphasising their crucial role in enhancing perception and generating reliable neural representations of the variable world. Additionally, we propose underlying representational rules and general mechanisms governing morphodynamic sampling and information processing, to augment intelligence and cognition. We hope these ideas will pave the way for new insights and avenues in neuroscience research and our understanding of behaviour.

Photoreceptor movements enhance vision

The structure and function of sense organs have long been recognised as factors that limit the quantity and variety of information they can gather36,41,43. However, a more recent insight reveals that the process of sensing itself is an active mechanism, utilising bursty or saccadic movements to enhance information sampling12-14,26,29,33,44-50 (Figure 1). These movements encompass molecular, sensory cell, whole organ, head, and body motions, collectively and independently enhancing perception and behaviour.

Because compound eyes extend from the rigid head exoskeleton and appear stationary to an outside observer, the prima facie assumption is that their inner workings are also immobile41,42,51. Therefore, as the eyes’ ommatidial faceting sets their photoreceptor spacing, the influential static theory of compound eye optics postulates that insects can see only a “pixelated”, low-resolution image of the world. According to this traditional static viewpoint, the ommatidial grid limits the granularity of the retinal image and thus visual acuity. Resolving two stationary objects requires at least three photoreceptors, and the task becomes harder when the objects move, further reducing visual acuity. The presumed characteristics of small static compound eyes, including large receptive fields, slow integration times, and the spatial separation of photoreceptors commonly attributed to spherical eye geometry, contribute to motion blur that impairs the detailed resolution of moving objects within the visual field41. As a result, a male Drosophila relying on coarse visual information faces a real dilemma in distinguishing between a receptive female fly and a hungry spider. To tell them apart, the male must closely approach the subject to detect distinguishing characteristics such as body shape, colour patterns, or movements. In this context, the difference between sex and death may hinge on an invisible line.
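The “three photoreceptors” rule above is the classical sampling (Nyquist) argument applied to a regular grid of immobile photoreceptors. As a worked sketch under that static assumption only, using the average interommatidial angle φ ≈ 5° quoted for Drosophila in this review (the symbols ν_max and λ_min are our labels), the highest resolvable spatial frequency and the shortest resolvable grating wavelength are:

$$
\nu_{\max} = \frac{1}{2\varphi} \approx \frac{1}{2 \times 5^\circ} = 0.1\ \text{cycles}/^\circ,
\qquad
\lambda_{\min} = 2\varphi \approx 10^\circ
$$

Two bright objects separated by a dark gap therefore need three receptors spaced φ apart, and the narrowest resolvable stripe, half of λ_min, is about φ ≈ 5°. This is the 4°-5° limit the static theory predicts.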

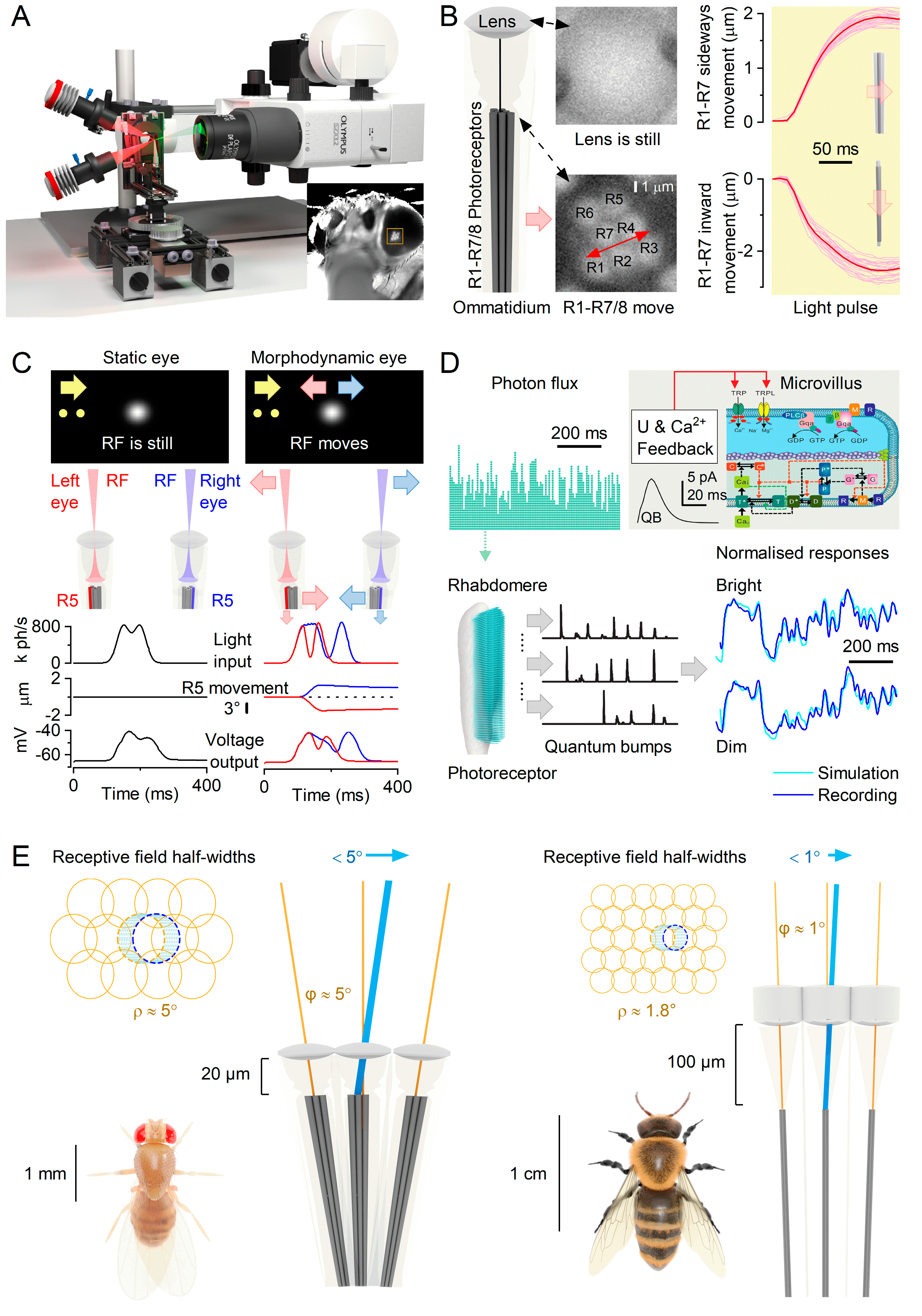

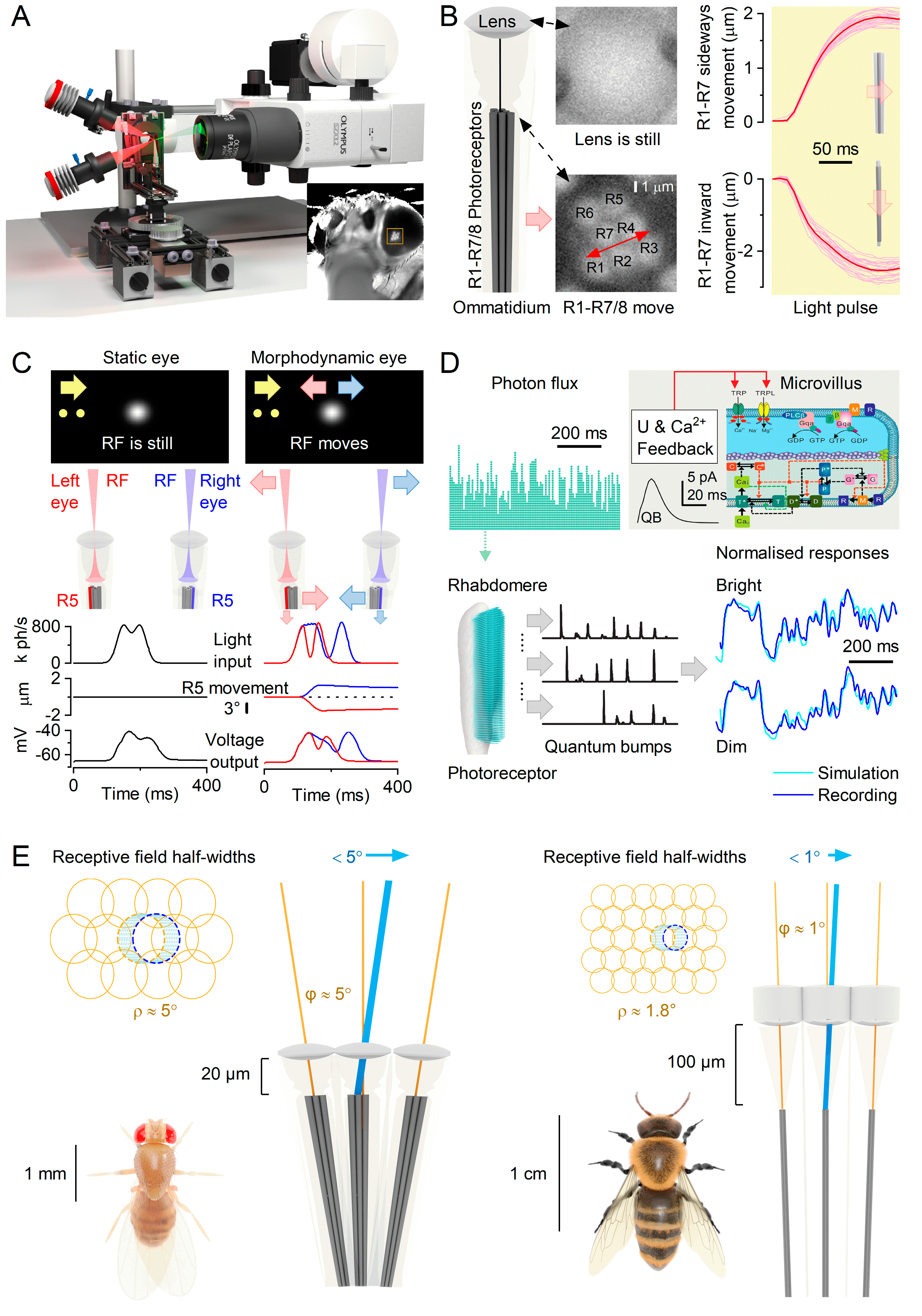

Recent studies on Drosophila have challenged the notion that fixed factors such as photoreceptor spacing, integration time, and receptive field size solely determine visual acuity12,14. Instead, these characteristics are dynamically regulated by photoreceptor photomechanics, leading to significant improvements in vision through morphodynamic processes. Intricate experiments (Figure 2A) have revealed that photoreceptors rapidly move in response to light intensity changes12-14. Referred to as high-speed photomechanical microsaccades12,14, these movements, which resemble a complex piston motion (Figure 2B), occur in less than 100 milliseconds and involve simultaneous axial recoil and lateral swinging of the photoreceptors within a single ommatidium. These local morphodynamics result in adaptive optics (Figure 2C), enhancing spatial sampling resolution and sharpening moving light patterns over time by narrowing and shifting the photoreceptors’ receptive fields12,14.

To understand the core concept and its impact on compound eye vision, let us compare the photoreceptors’ receptive fields to image pixels in a digital camera (Figure 2E). Imagine shifting the camera sensor so that two consecutive images are captured with a half-pixel displacement. This movement effectively doubles the spatial sampling of the image, and integrating the two images over time significantly improves the resolution. However, if a pixel moves even further, it eventually merges with its neighbouring pixel (provided the neighbouring pixel remains still and does not detect changes in light). This complete pixel fusion decreases acuity, because the resulting neural image contains fewer pixels. Therefore, by restricting photoreceptors’ micro-scanning to the interommatidial angle, Drosophila can effectively time-integrate a neural image that exceeds the optical limits of its compound eyes.
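The half-pixel argument can be checked numerically. The sketch below is a minimal idealisation, not the cited model: it assumes point sampling in place of real receptive fields, a one-dimensional cosine grating, and arbitrary units; `PIXEL`, `EXTENT`, `GRATING_FREQ` and `dominant_frequency` are illustrative names. A grating at 0.8 cycles per pixel exceeds the static Nyquist limit of 0.5 and aliases in a single frame, whereas two frames offset by half a pixel sample it at twice the density and recover its true frequency. A whole-pixel shift, by contrast, only revisits positions that neighbouring pixels already cover.

```python
import numpy as np


def dominant_frequency(samples: np.ndarray, spacing: float) -> float:
    """Return the non-DC frequency (cycles per unit) with the largest FFT magnitude."""
    spectrum = np.abs(np.fft.rfft(samples))
    freqs = np.fft.rfftfreq(samples.size, d=spacing)
    spectrum[0] = 0.0  # ignore the mean (DC) component
    return float(freqs[np.argmax(spectrum)])


PIXEL = 1.0         # hypothetical pixel spacing (arbitrary units)
EXTENT = 20.0       # hypothetical length of the 1D scene covered by the sensor
GRATING_FREQ = 0.8  # cycles per unit: finer than the static Nyquist limit of 0.5


def scene(x: np.ndarray) -> np.ndarray:
    # Idealised 1D grating; point sampling stands in for a receptive field.
    return np.cos(2 * np.pi * GRATING_FREQ * x)


static_x = np.arange(0.0, EXTENT, PIXEL)  # one frame from an immobile sensor
shifted_x = static_x + PIXEL / 2          # second frame after a half-pixel shift
combined_x = np.sort(np.concatenate([static_x, shifted_x]))

static_f = dominant_frequency(scene(static_x), PIXEL)
combined_f = dominant_frequency(scene(combined_x), PIXEL / 2)

# A whole-pixel shift lands each sample on its neighbour's old position,
# a loose analogue of the "pixel fusion" described above.
full_shift_x = static_x + PIXEL
n_unique = np.unique(np.concatenate([static_x, full_shift_x])).size

print(f"single static frame: {static_f:.2f} cycles/unit (aliased)")
print(f"two half-shifted frames: {combined_f:.2f} cycles/unit")
print(f"distinct positions after a whole-pixel shift: {n_unique}")
```

With these values the static frame reports the aliased frequency (0.2) and the interleaved frames the true one (0.8), while the whole-pixel shift yields 21 distinct sample positions rather than 40.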

Microsaccades are photomechanical adaptations in phototransduction

Drosophila photoreceptors exhibit a distinctive toothbrush-like morphology, characterised by “bristled” light-sensitive structures known as rhabdomeres. In the outer photoreceptors (R1-6), approximately 30,000 bristles, called microvilli, act as photon sampling units (Figure 2D)7,12,14. These microvilli collectively function as a waveguide, capturing light information across the photoreceptor’s receptive field13,14. Each microvillus compartmentalises the complete set of phototransduction cascade reactions52 and contributes to the refractive index and waveguide properties of the rhabdomere54. The phototransduction reactions within the rhabdomeric microvilli of insect photoreceptors generate ultrafast contractions of the whole rhabdomere, caused by the PLC-mediated cleavage of PIP2 headgroups (InsP3) from the microvillar membrane7,52. These photomechanics rapidly adjust the photoreceptor, enabling it to dynamically adapt its light input as its receptive field reshapes and interacts with the surrounding environment. Because photoreceptor microsaccades result directly from phototransduction reactions7,12,14,52, they are an inevitable consequence of compound eye vision: without microsaccades, insects with microvillar photoreceptors would be blind7,12,14,52.

Insects possess impressively rapid vision, operating approximately 3 to 15 times faster than ours. This remarkable ability stems from the microvilli’s swift conversion of captured photons into brief unitary responses, known as quantum bumps52 (Figure 2D), and from their ability to generate photomechanical micromovements7,12 (Figure 2C). Moreover, the size and speed of microsaccades adapt to the refractory photon sampling dynamics of the microvilli population12,53 (Figure 2D). As light intensity increases, both the quantum efficiency and the duration of photoreceptors’ quantum bumps decrease53,55, resulting in more transient microsaccades12,14. These adaptations extend the dynamic range of vision53,56 and enhance the detection of environmental contrast changes12,57, making visual objects highly noticeable under various lighting conditions. Consequently, Drosophila can perceive moving objects across a wide range of velocities and light intensities, surpassing the resolution limit set by the static eye’s pixelation by 4-10 times (Figure 2E; the average interommatidial angle, φ ≈ 5°)12,14.

Morphodynamic adaptations involving photoreceptor microvilli play a crucial role in insect vision by enabling rapid and efficient visual information processing. These adaptations lead to contrast-normalised (Figure 2D) and more phasic photoreceptor responses, achieved through a significantly reduced integration time12,57,58. Evolution further refines these dynamics to match species-specific visual needs (Figure 2E). For example, honeybee microsaccades are smaller than those of Drosophila13, consistent with honeybee photoreceptors being positioned farther away from the ommatidial lenses. Consequently, the smaller receptive fields and interommatidial angles of honeybees are likely an adaptation that allows optimal image resolution during scanning13. Similarly, fast-flying flies such as houseflies and blowflies, whose eyes have a higher density of ommatidia, are expected to exhibit smaller and faster photoreceptor microsaccades than the slower-flying Drosophila, with its fewer and less densely packed ommatidia14. This adaptation would enable fast-flying flies to capture visual information at higher velocity53,57,59,60 and resolution, albeit at a higher metabolic cost57.

Matching saccadic behaviours to microsaccadic sampling

Photoreceptors’ microsaccadic sampling likely evolved to align with animals’ saccadic behaviours, maximising visual information capture12,14. Insects and humans use saccades to explore their environment (Figure 1J), interspersed with fixation periods during which the gaze remains relatively still31. Previously, it was believed that detailed information was sampled only during fixation, because photoreceptors were thought to have slow integration times that blur the image during saccades31. However, fixation intervals can lead to perceptual fading through powerful adaptation, reducing visual information and potentially limiting perception to average light levels12,61,62. Therefore, to maximise information capture, fixation durations and saccade speeds should adapt dynamically to the statistical properties of the natural environment12. This sampling strategy would enable animals to adjust their behavioural speed and movement patterns efficiently in diverse environments, optimising vision (for example, moving slowly in darkness and faster in daylight)12.

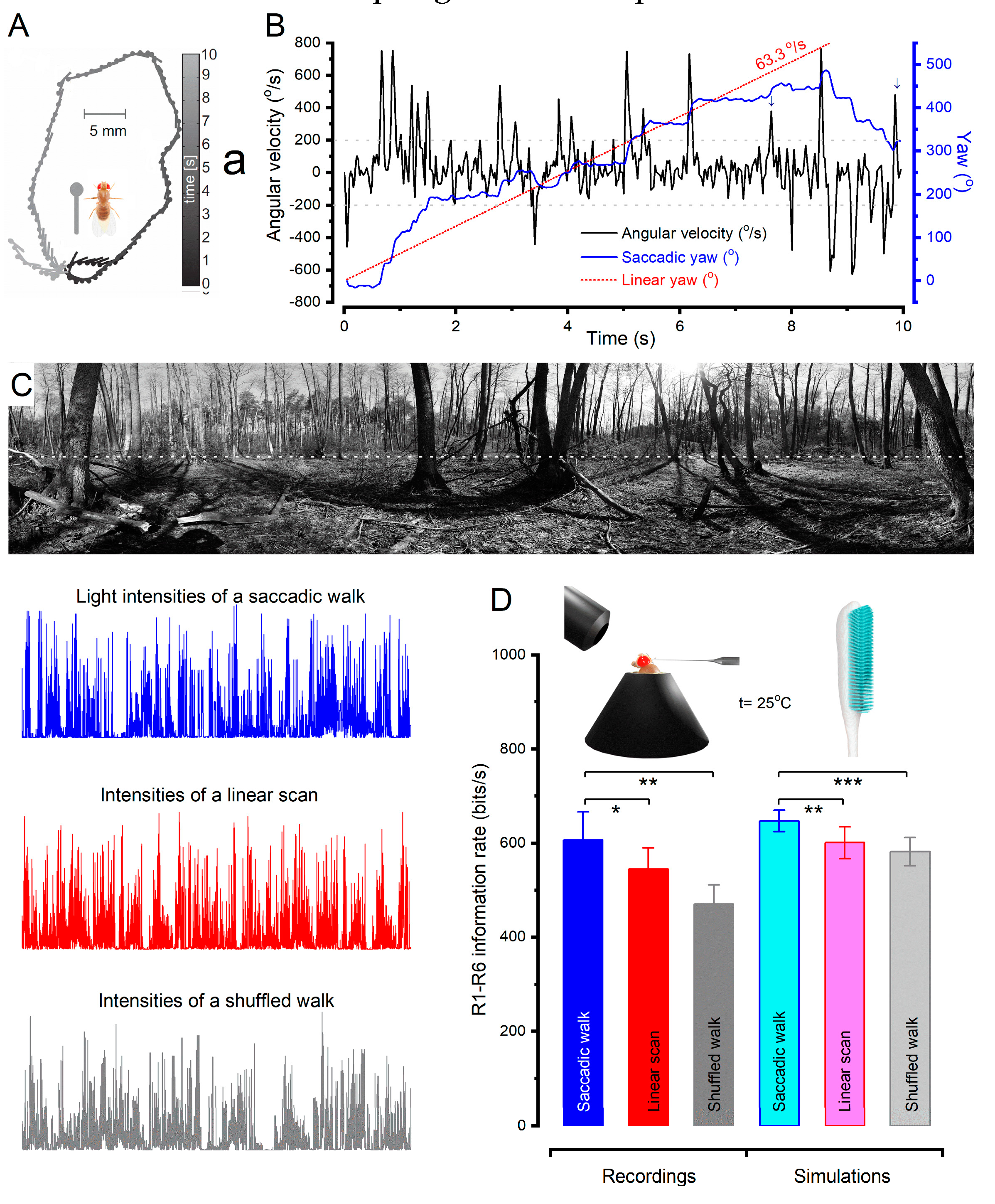

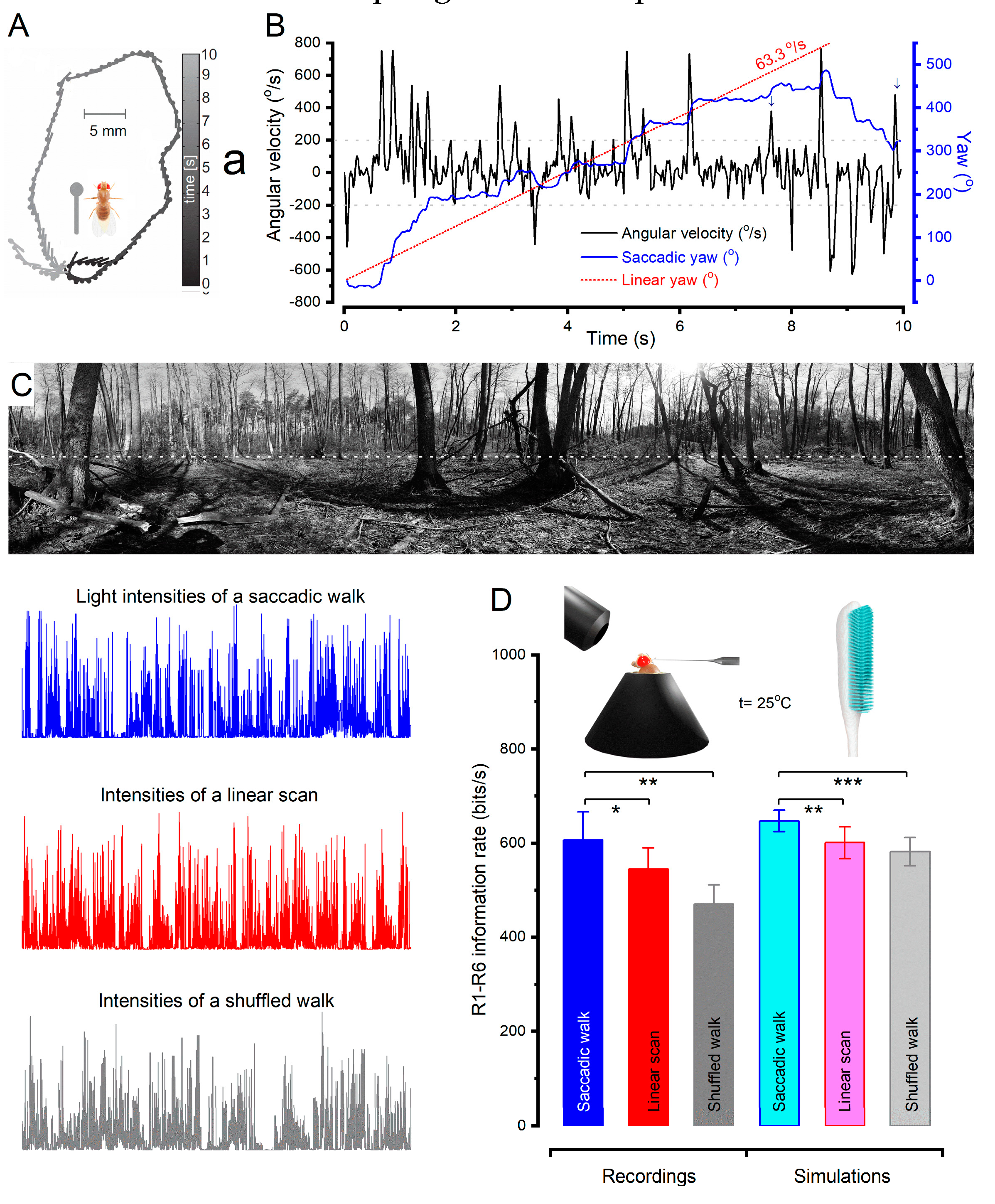

To investigate this theory, researchers used the body yaw velocities of walking fruit flies63 to sample light intensity information from natural images12 (Figure 3). They found that saccadic viewing of these images improved the photoreceptors’ information capture compared with linear or shuffled velocity walks12. This improvement was attributed to saccadic viewing generating bursty, high-contrast stimulation that maximises the photoreceptors’ ability to gather information. Specifically, the photomechanical and refractory phototransduction reactions of Drosophila R1-6 photoreceptors, which are associated with motion vision64, were found to be finely tuned to saccadic behaviour for sampling quantal light information, enabling them to capture two to four times more information in a given time than previous estimates suggested12,55.

Further analysis using multiscale biophysical modelling58 investigated the stochastic refractory photon sampling by 30,000 microvilli12. The findings revealed that the improved information capture during saccadic viewing can be attributed to the interspersed fixation intervals12,56. When the eye fixates on darker objects, which alleviates microvilli refractoriness, photoreceptors can sample more information from transient light changes, capturing larger photon rate variations12. Photomechanical photoreceptor movements and refractory sampling worked synergistically to enhance spatial acuity, reduce motion blur during saccades, facilitate adaptation during gaze fixation, and emphasise instances when visual objects crossed a photoreceptor’s receptive field. Consequently, high-resolution spatial information was encoded through temporal mechanisms induced by physical motion12.
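To make the refractory-sampling idea concrete, here is a toy Monte Carlo sketch; it is not the multiscale model cited above. It assumes, as our own simplification, that each absorbed photon lands on a uniformly random microvillus among the 30,000, that a non-refractory microvillus produces exactly one quantum bump, and that it then stays refractory for a fixed period. The refractory period, photon rates, time step and the function name `quantum_efficiency` are hypothetical placeholders, not measured values. As the photon rate rises, more photons hit microvilli that are still refractory, so the fraction of photons producing bumps falls, qualitatively matching the intensity-dependent drop in quantum efficiency described earlier.

```python
import numpy as np


def quantum_efficiency(photon_rate: float,
                       n_microvilli: int = 30_000,
                       refractory_s: float = 0.1,   # hypothetical placeholder
                       duration_s: float = 1.0,
                       dt_s: float = 0.001,
                       seed: int = 0) -> float:
    """Fraction of absorbed photons that produce a bump in a toy refractory model.

    Each photon hits a uniformly random microvillus; a microvillus that is not
    refractory produces one bump and then stays refractory for `refractory_s`.
    """
    rng = np.random.default_rng(seed)
    ready_at = np.zeros(n_microvilli)  # time when each microvillus can respond again
    photons = bumps = 0
    for step in range(int(round(duration_s / dt_s))):
        t = step * dt_s
        n = rng.poisson(photon_rate * dt_s)           # photons absorbed this step
        hit = rng.integers(0, n_microvilli, size=n)   # which microvilli they hit
        # Distinct, currently available microvilli each yield exactly one bump.
        responders = np.unique(hit[ready_at[hit] <= t])
        ready_at[responders] = t + refractory_s
        photons += n
        bumps += responders.size
    return bumps / photons if photons else float("nan")


for rate in (1e3, 1e5, 1e6):  # photons per second (hypothetical)
    print(f"{rate:>9.0f} photons/s -> efficiency {quantum_efficiency(rate):.2f}")
```

The model deliberately ignores bump waveforms, variable refractory periods and photomechanical feedback; it only isolates how refractoriness alone makes sampling efficiency intensity-dependent.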

These discoveries underscore how animals have adapted to use movements across different scales, ranging from nanoscale molecular dynamics to microscopic brain morphodynamics, to maximise visual information capture and acuity12. The new understanding from the Drosophila studies is that, contrary to popular assumptions, neither saccades41 nor fixations61 hinder vision. Instead, they work together to enhance visual perception, highlighting the complementary nature of these active sampling movement patterns12.

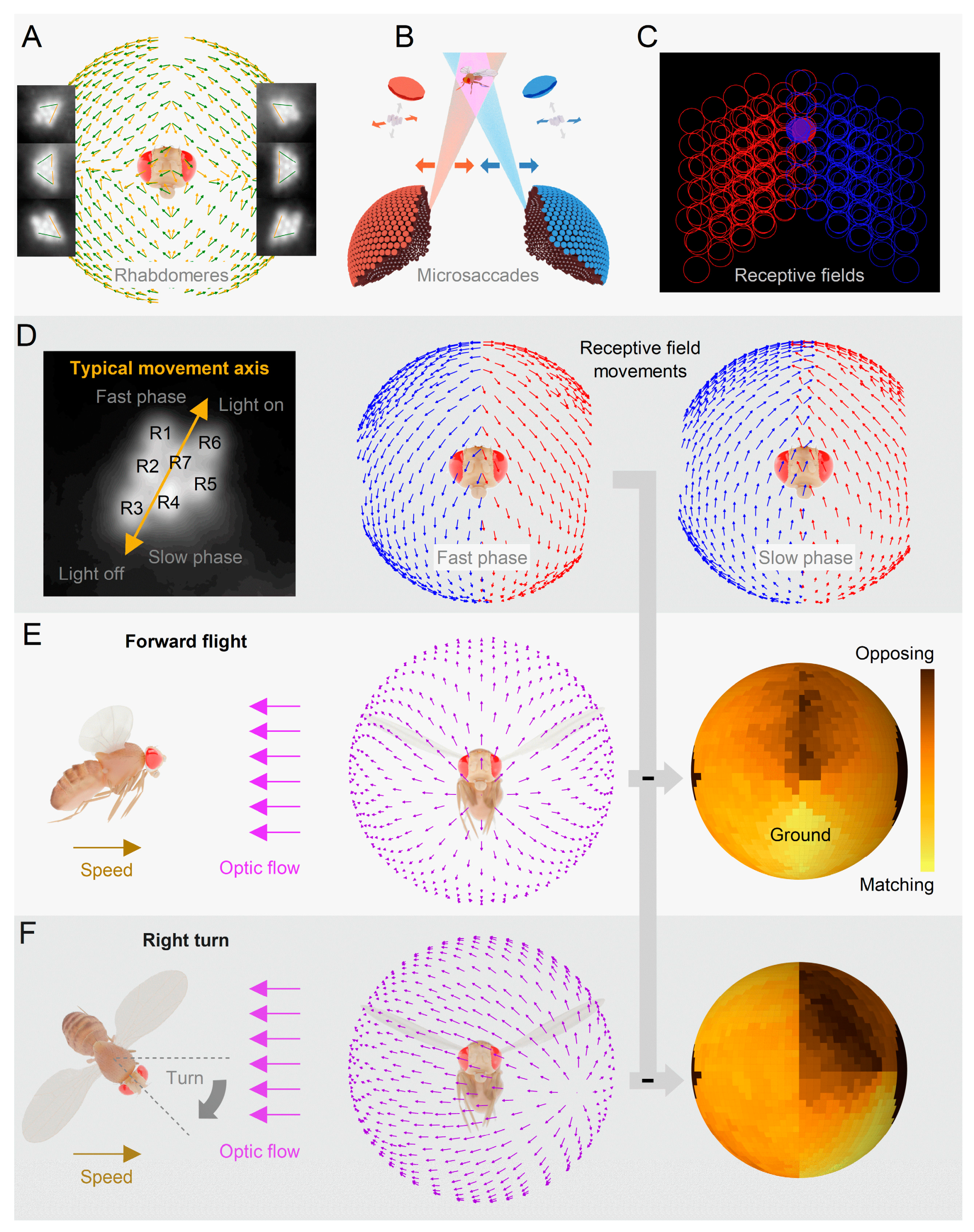

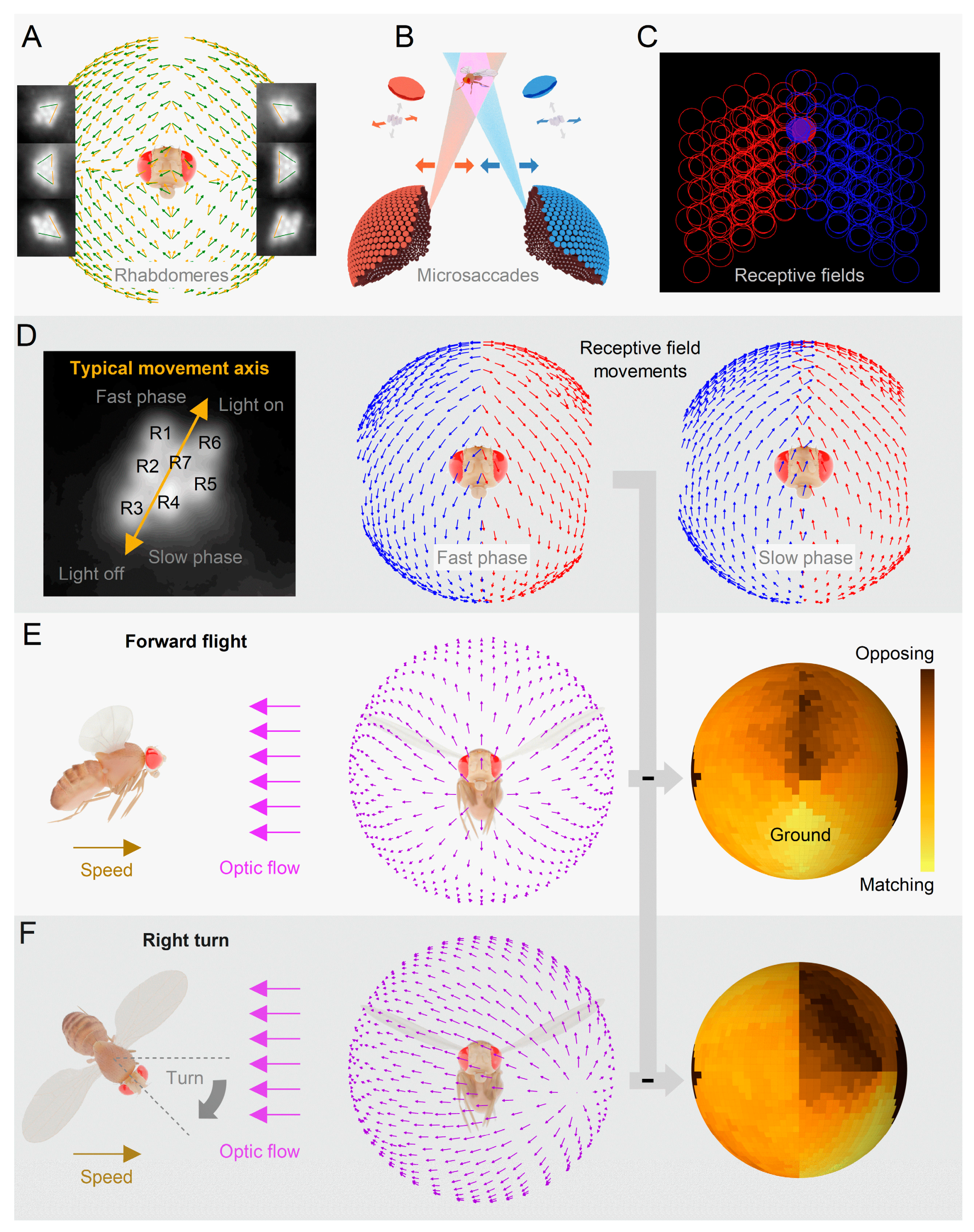

Left and right eyes’ mirror-symmetric microsaccades are tuned to optic flow

When Drosophila encounters moving objects in natural environments, its left and right eye photoreceptor pairs generate microsaccadic movements that synchronise their receptive field scanning in opposite directions (Figure 4)12,14. To analyse these morphodynamics quantitatively, researchers used a custom-designed high-speed microscope system13, tailored explicitly for recording photoreceptor movements within insect compound eyes (Figure 2A). Using infrared illumination, which flies cannot see, they measured the positions and orientations of photoreceptors in both eyes, revealing mirror-symmetric angular orientations between the eyes and symmetry within each eye (Figure 4A). They found that a single point in space within the frontal region, where the receptive fields overlap (Figure 4B), is detected by at least 16 photoreceptors, eight in each eye. This highly ordered, mirror-symmetric rhabdomere organisation, which leads to a massively over-complete tiling of the eyes’ binocular visual fields14 (Figure 4C), challenges the historical belief that small insect eyes, such as those of Drosophila, are optically too coarse and too closely positioned to support stereovision41.

By selectively stimulating the rhabdomeres with targeted light flashes, researchers determined the specific photomechanical contraction directions at each location of the eye (Figure 4D). Analysis of the resulting microsaccades enabled the construction of a 3D-vector map encompassing the frontal and peripheral areas of the eyes. These microsaccades exhibited mirror symmetry between the eyes and aligned with the rotation axis of the R1-R2-R3 photoreceptors in each ommatidium (Figure 4D, left), indicating that the photoreceptors’ movement directions were predetermined during development (Figure 4A)13,14. Strikingly, the 3D-vector map representing the movements of the corresponding photoreceptor receptive fields (Figure 4D) coincides with the optic flow field generated by the fly’s forward thrust (Figure 4E)13,14. This alignment provides microsaccade-enhanced detection and resolution of moving objects (cf. Figure 2C) across the eyes’ extensive visual fields (approximately 360°), suggesting that fly vision has been evolutionarily optimised for this capability.

The microsaccadic receptive field movements comprise a fast phase (Figure 4D, left) aligned with the flow-field direction during light-on (Figure 4D, middle), followed by a slower phase in the opposite direction during light-off (Figure 4D, right). When a fly is in forward flight with an upright head (Figure 4E, left and middle), the fast and slow phases reach equilibrium (Figure 4E, right). The fast phase represents “ground-flow,” while the slower phase represents “sky-flow.” In the presence of real-world structures, locomotion enhances sampling through a push-pull mechanism: photoreceptors transition between fast and slow phases, thereby collectively improving neural resolution over time14 (Figure 2C). Fast microsaccades are expected to aid in resolving intricate visual clutter, whereas slower microsaccades are expected to enhance the perception of the surrounding landscape and sky14. Moreover, this eye-location-dependent, orientation-tuned, bidirectional enhancement of visual objects makes any moving object that deviates from the prevailing self-motion-induced optic flow field stand out. Insect brains likely use the resulting phasic contrast differences in the neural image to detect or track predators or conspecifics across the eyes’ visual fields. For example, this mechanism could help a honeybee drone spot and track the queen amidst a competing drone swarm66, enabling efficient approach and social interaction.

Rotation (yaw) (Figure 4F, left and middle) further enhances binocular contrasts (Figure 4F, right): one eye’s phases are synchronised with the field rotation, while the other eye’s phases show the reverse pattern14. Many insects, including bees and wasps, perform elaborately patterned learning or homing flights, involving fast saccadic turns and bursty repetitive waving, when leaving their nest or food sources67,68 (Figure 4G). Given the mirror symmetry and ultrafast photoreceptor microsaccades of bee eyes13, these flight patterns are expected to drive enhanced binocular detection of behaviourally relevant objects, landmarks, and patterns, utilising the phasic differences in microsaccadic visual information sampling between the two eyes12,14. Thus, learning flights likely make effective use of optic-flow-tuned and morphodynamically enhanced binocular vision, enabling insects to navigate and return to their desired locations successfully.

Mirror-symmetric microsaccades enable 3D vision

Crucially, Drosophila uses mirror-symmetric microsaccades to sample the three-dimensional visual world, enabling the extraction of depth information (Figure 5). This process entails comparing the resulting morphodynamically sampled neural images from its left and right eye photoreceptors14. The disparities in x- and y-coordinates between corresponding “pixels” provide information about scene depth. In response to light intensity changes, the left and right eye photoreceptors contract mirror-symmetrically, narrowing and sliding their receptive fields in opposite directions and thus shaping neural responses (Figure 5A; also see Figure 2C)12,14. By cross-correlating these photomechanical responses between neighbouring ommatidia, the Drosophila brain is predicted to achieve a reliable stereovision range spanning from less than 1 mm to approximately 150 mm14. The crucial aspect lies in using the responses’ phase differences as temporal cues for perceiving 3D space (Figure 5A,B). Furthermore, researchers assessed whether a static Drosophila eye model with immobile photoreceptors could discern depth14. These calculations indicate that, without the scanning activity of moving photoreceptors, the small distance between the eyes (Figure 5A, k = 440 μm) would enable only a significantly reduced depth perception range, underlining the physical and evolutionary advantages of moving photoreceptors for depth perception.
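The phase-difference cue can be illustrated with the generic signal-processing step the paragraph names, cross-correlation. The sketch assumes two idealised, noise-free transient responses (Gaussian pulses standing in for photoreceptor responses from two receptive fields), a hypothetical 7 ms offset between them, and a 1 ms sampling interval; `estimate_lag` is an illustrative helper, not the cited stereo model. The lag at the cross-correlation peak recovers the timing difference that serves as the temporal cue.

```python
import numpy as np

DT = 0.001  # sampling interval in seconds (hypothetical)


def estimate_lag(reference: np.ndarray, delayed: np.ndarray, dt: float = DT) -> float:
    """Time (s) by which `delayed` lags `reference`, from the cross-correlation peak.

    The toy signals have a zero baseline, so no mean removal is applied.
    """
    xcorr = np.correlate(delayed, reference, mode="full")
    # In "full" mode, index (len(reference) - 1) corresponds to zero lag.
    lag_samples = int(np.argmax(xcorr)) - (reference.size - 1)
    return lag_samples * dt


t = np.arange(0.0, 0.3, DT)
true_delay = 0.007  # hypothetical 7 ms phase difference between two responses


def response(t0: float) -> np.ndarray:
    # Toy transient: a Gaussian pulse standing in for a photoreceptor response.
    return np.exp(-0.5 * ((t - t0) / 0.01) ** 2)


left = response(0.100)
right = response(0.100 + true_delay)
print(f"estimated lag: {estimate_lag(left, right) * 1e3:.1f} ms")
```

Real responses are noisy and their phase differences depend on microsaccade dynamics and stimulus geometry; the sketch shows only how a timing difference is read out, not how it is mapped onto distance.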

Behavioural experiments in a flight simulator verified that Drosophila possesses hyperacute stereovision14 (Figure 5C). Tethered, head-fixed flies were presented with 1-4° 3D and 2D objects, smaller than their eyes’ average interommatidial angle (cf. Figure 2E). Notably, the flies preferred to fixate on the minute 3D objects, supporting the new morphodynamic sampling theory of hyperacute stereovision.

In subsequent learning experiments, the flies were trained to avoid specific stimuli and successfully discriminated between small (<< 4°) equal-contrast 3D and 2D objects. Because their heads were immobilised, the flies could not rely on motion parallax signals during learning, so the discrimination relied solely on the eyes’ image disparity signals. Flies with one eye painted over, and mutants incapable of producing the sideways movement of photoreceptor microsaccades in one eye, failed to learn the stimuli. These results firmly establish the significance of binocular mirror-symmetric photoreceptor microsaccades in sampling 3D information. Further investigations revealed that both the R1-6 (associated with motion vision64) and R7/R8 (associated with colour vision71) photoreceptor classes contribute to hyperacute stereopsis. The findings provide compelling evidence that mirror-symmetric microsaccadic sampling, as a form of ultrafast neural morphodynamics, is necessary for hyperacute stereovision in Drosophila14.

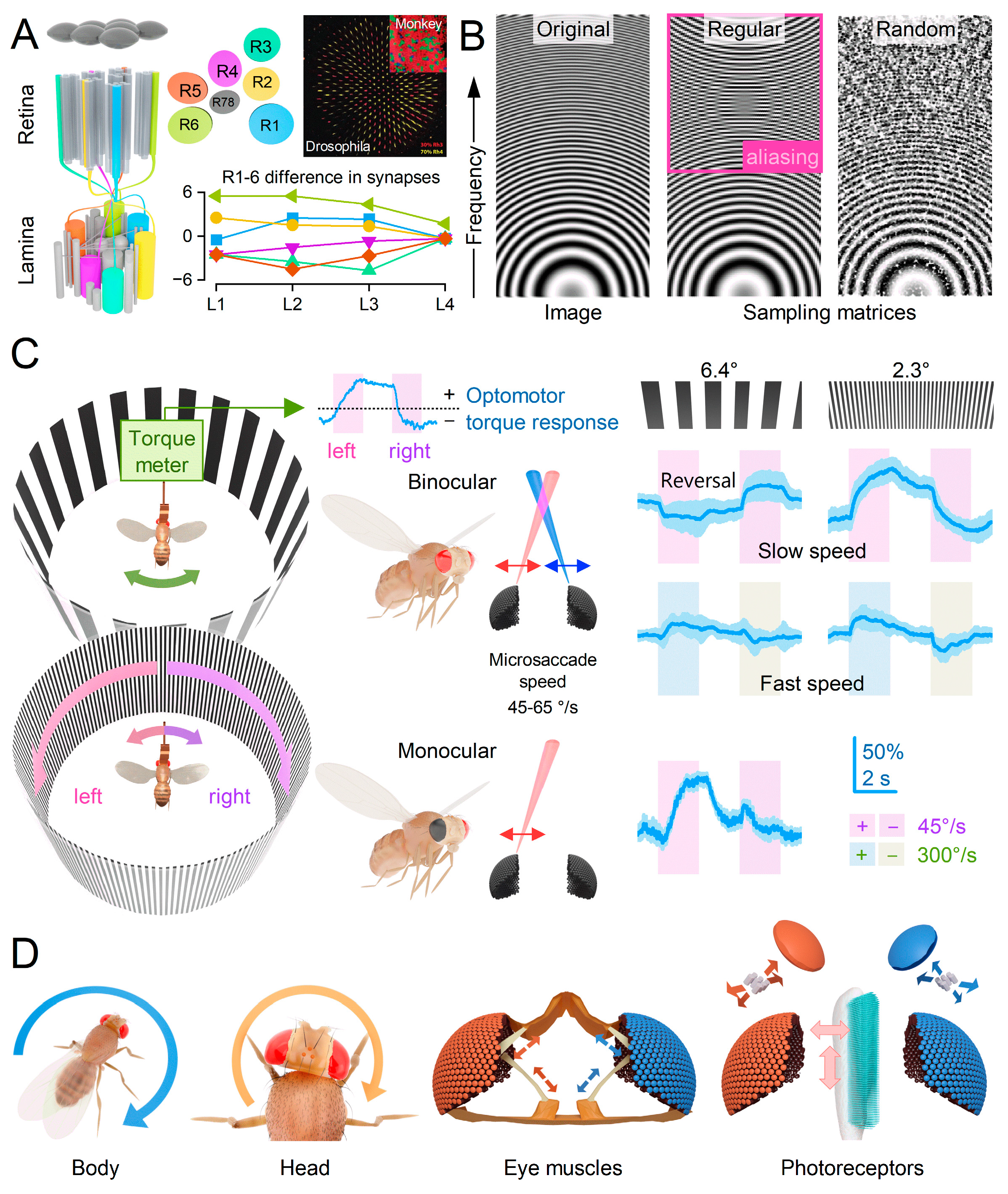

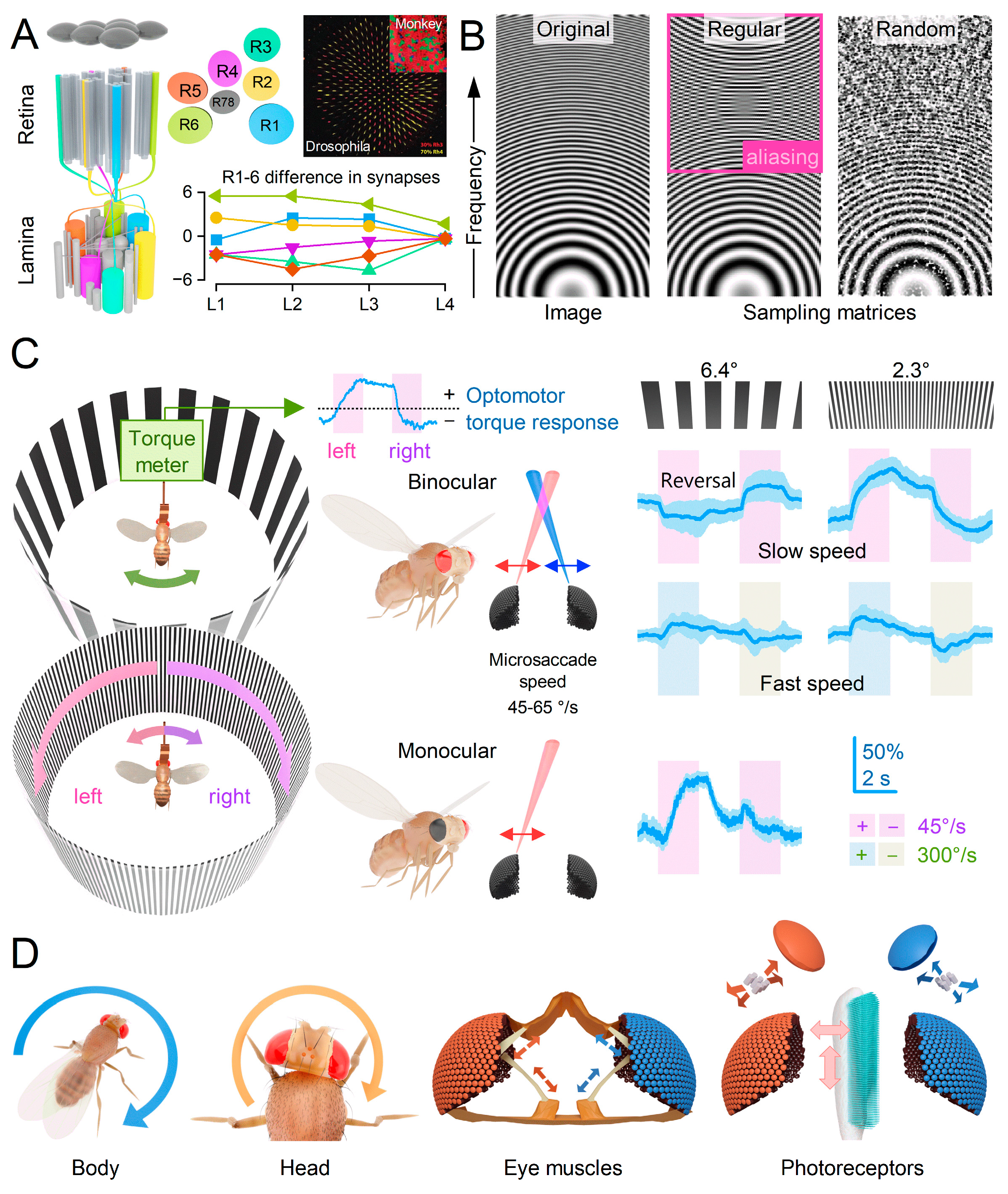

Reliable neural estimates of the variable world

The fly’s retinal sampling matrix is heterogeneous, characterised by varying rhabdomere sizes12 (Figure 4, Figure 5B and Figure 6A), random distributions of visual pigments72 (Figure 6A), variations in photoreceptor synapse numbers3 (Figure 6A), the stochastic integration of single-photon responses53,55,73 (quantum bumps; Figure 2D)12,56,58, and stochastic variability in microsaccade waveforms12-14. This heterogeneity eliminates spatiotemporal aliasing12,14 (Figure 6B), enabling reliable transmission of visual information (Figure 2C,D, Figure 3D and Figure 5B). Thus, the morphodynamic information sampling theory12,14 challenges previous assumptions of static compound eyes41, which held that the ommatidial grid of immobile photoreceptors structurally limits spatial resolution, rendering the eyes susceptible to undersampling the visual world and prone to aliasing41.

Supporting the new morphodynamic theory12,14, tethered, head-fixed Drosophila exhibit robust optomotor behaviour in a flight simulator system (Figure 6C). The flies generated yaw torque responses, represented by the blue traces, indicating their intention to follow the left or right turns of the stripe panorama. These responses are believed to manifest an innate visuomotor reflex aimed at minimising retinal image slippage41,77. Consistent with Drosophila’s hyperacute ability to differentiate small 3D and 2D objects14 (Figure 5C), the tested flies responded reliably to rotating panoramic black-and-white stripe scenes at hyperacute resolutions, tested down to 0.5°12,14. This resolution is about ten times finer than the eyes’ average interommatidial angle (Figure 2E), far exceeding what the traditional static compound eye theory41 can explain, as that theory predicts a maximum resolvability of 4°-5°.

However, when exposed to slowly rotating black-and-white stripe waveforms of 6.4-10° wavelength, a tethered, head-fixed Drosophila displays reversals in its optomotor flight behaviour14 (Figure 6C). This optomotor reversal was previously thought to result from the static ommatidial grid spatially aliasing the sampled panoramic stripe pattern, because the stimulus wavelength is approximately twice the eyes’ average interommatidial angle (Figure 2E). Further analysis, however, contests this interpretation of the reversals as a sign of aliasing41,45. Optomotor reversals occur primarily at stimulus velocities of 40-60°/s, matching the speed of the left and right eyes’ mirror-symmetric photoreceptor microsaccades14 (Figure 6C and Figure 2C). As a result, the moving photoreceptors of one eye are more likely to lock onto the rotating scene than those of the other eye, which move against the stimulus rotation. This discrepancy creates an imbalance that the fly’s brain may interpret as the stimulus rotating in the opposite direction14.

Notably, the optomotor behaviour returns to normal when the tested fly has monocular vision (with one eye covered), during faster rotations14, and with finer stripe waveforms12,14 (Figure 6C). Therefore, the optomotor reversal, which arises under specific and somewhat unnatural stimulus conditions in head-fixed, position-constrained flies, must reflect higher-order processing of binocular information. It cannot be attributed to spatial sampling aliasing, which would be independent of both stimulus velocity and left-versus-right eye input14.
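The static-grid aliasing prediction that this argument rejects can be written down directly. The sketch below is a hypothetical helper, assuming an ideal point-sampling grid with the 5° average interommatidial angle quoted in this review: it folds a drifting grating’s spatial frequency into the grid’s Nyquist band, and a negative folded frequency means the sampled pattern appears to drift backwards. Because the temporal frequency is the same for the true and the aliased pattern, the predicted direction depends only on stripe wavelength, never on rotation speed or on which eye is looking, which is why velocity- and eye-dependent reversals point away from static aliasing.

```python
import math


def static_grid_prediction(wavelength_deg: float, velocity_deg_s: float,
                           spacing_deg: float = 5.0) -> float:
    """Apparent angular velocity (deg/s) of a drifting grating on an immobile grid.

    Implements the classical static-eye aliasing argument: the grating's spatial
    frequency is folded into the grid's Nyquist band; a negative folded
    frequency means the sampled pattern appears to drift the wrong way.
    """
    f = 1.0 / wavelength_deg                      # cycles/deg of the real grating
    fs = 1.0 / spacing_deg                        # samples/deg of the static grid
    f_alias = f - math.floor(f / fs + 0.5) * fs   # fold into [-fs/2, fs/2)
    if abs(f_alias) < 1e-12:
        return 0.0                                # uniform flicker, no net motion
    temporal_freq = velocity_deg_s * f            # Hz, same for true and aliased pattern
    return temporal_freq / f_alias                # sign gives perceived direction


for wavelength in (6.4, 8.0, 15.0):
    verdicts = {v: "reversed" if static_grid_prediction(wavelength, v) < 0 else "veridical"
                for v in (10.0, 50.0, 200.0)}
    print(f"{wavelength:>4} deg stripes: {verdicts}")
```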

Multiple layers of active sampling vs simple motion detection models

In addition to photoreceptor microsaccades, insects possess intraocular muscles capable of orchestrating coordinated oscillations of the whole photoreceptor array across the entire retina12,14,45,78 (Figure 6D). This global motion has been proposed as a means of achieving super-resolution79,80, but not stereopsis. The muscle movements alter the light patterns reaching the eyes and thereby evoke photoreceptor microsaccades; yet it is the combination of local microsaccades and global retinal movements, which include any body and head movements29,44,68,81 (Figure 6D), that collectively governs the eyes’ active sampling of stereoscopic information12-14.

The Drosophila brain effectively integrates depth and motion computations using mirror-symmetrically moving image disparity signals from binocular vision channels14. Previous assumptions that insect brains perform high-order computations on low-resolution sample streams from static eyes are now being questioned. For instance, idealised motion-detection models built on reduced input-output systems64,82, such as the Hassenstein-Reichardt83 and Barlow-Levick84 models, require updates to incorporate ultrafast morphodynamics12-14 and state-dependent synaptic processing85-89. These updates are crucial because such processes actively shape neural responses, perception, and behaviours86, providing essential ingredients of hyperacute 3D vision12,14,38,40 and intrinsic decision-making12,14,90-92 that occur in closed-loop interaction with the changing environment.
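For reference, the classical correlator that such updates would extend can be sketched in a few lines. This is the textbook static-input form of a Hassenstein-Reichardt detector, not a morphodynamic model: two neighbouring inputs, a first-order low-pass filter acting as the delay, and two mirror-symmetric multiplication arms whose difference is direction selective. The drifting-sinusoid stimulus, the time constant `tau`, and the helper names are illustrative assumptions. Its inputs are fixed sample points, which is exactly the assumption the text argues must be relaxed.

```python
import numpy as np


def lowpass(signal: np.ndarray, tau: float, dt: float) -> np.ndarray:
    """First-order low-pass filter (forward Euler), the delay line of the detector."""
    out = np.zeros_like(signal)
    for i in range(1, signal.size):
        out[i] = out[i - 1] + (dt / tau) * (signal[i] - out[i - 1])
    return out


def hr_response(phase_lag: float, freq_hz: float = 2.0, tau: float = 0.05,
                dt: float = 0.001, duration: float = 5.0) -> float:
    """Mean output of one Hassenstein-Reichardt correlator for a drifting sinusoid.

    Input 2 sees the same luminance as input 1, delayed by `phase_lag` radians,
    so positive lags mean motion from input 1 towards input 2.
    """
    t = np.arange(0.0, duration, dt)
    s1 = np.cos(2 * np.pi * freq_hz * t)
    s2 = np.cos(2 * np.pi * freq_hz * t - phase_lag)
    # Each arm multiplies the delayed (low-passed) signal of one input with the
    # undelayed signal of the other; subtracting the arms gives direction selectivity.
    out = lowpass(s1, tau, dt) * s2 - s1 * lowpass(s2, tau, dt)
    return float(out[t >= 1.0].mean())  # discard the filter's start-up transient


print(f"preferred direction: {hr_response(np.pi / 4):+.3f}")
print(f"null direction:      {hr_response(-np.pi / 4):+.3f}")
```

The output is positive for motion in the preferred direction and equal in size but negative for the opposite direction.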

Accumulating evidence, consistent with the idea that brains reduce uncertainty by synchronously integrating multisensory information93, further suggests that object colour and shape information partially streams through the same channels previously thought to be solely for motion information70. Consequently, individual neurons within these channels should engage in multiple parallel processing tasks70, adapting in a phasic and goal-oriented manner. These emerging concepts challenge oversimplified input-output models of insect vision, highlighting the importance of ultrafast neural morphodynamics and active vision in perception and behaviour.

Benefits of neural morphodynamics

Organisms have adapted to the quantal nature of the dynamic physical world, resulting in the ubiquitous, active use of quantal morphodynamic processes and signalling within their constituent parts (cf. Figure 1). We propose that, besides enhancing information sampling and flow in sensory systems for efficient perception5-8,12-15, ultrafast neural morphodynamics likely evolved universally to facilitate effective communication across nervous systems9-11,23. By aligning with the principles of thermodynamics and information theory that govern a moving world94-96, nervous systems have evolved to harness neural morphodynamics to optimise perception and behavioural capabilities, ensuring efficient adaptation to an ever-changing environment. The benefits of ultrafast morphodynamic neural processing are substantial and encompass the following (Figure 7):

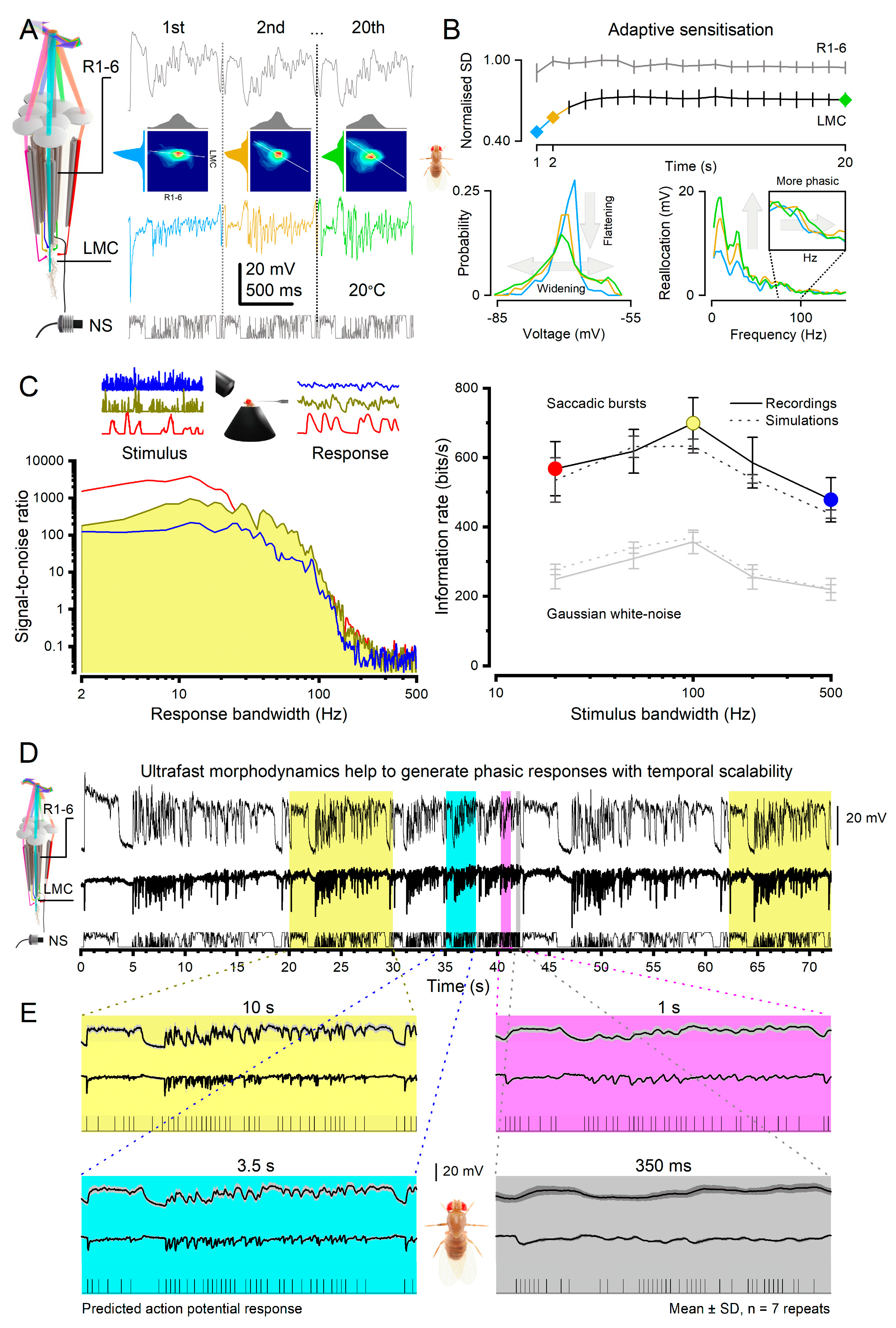

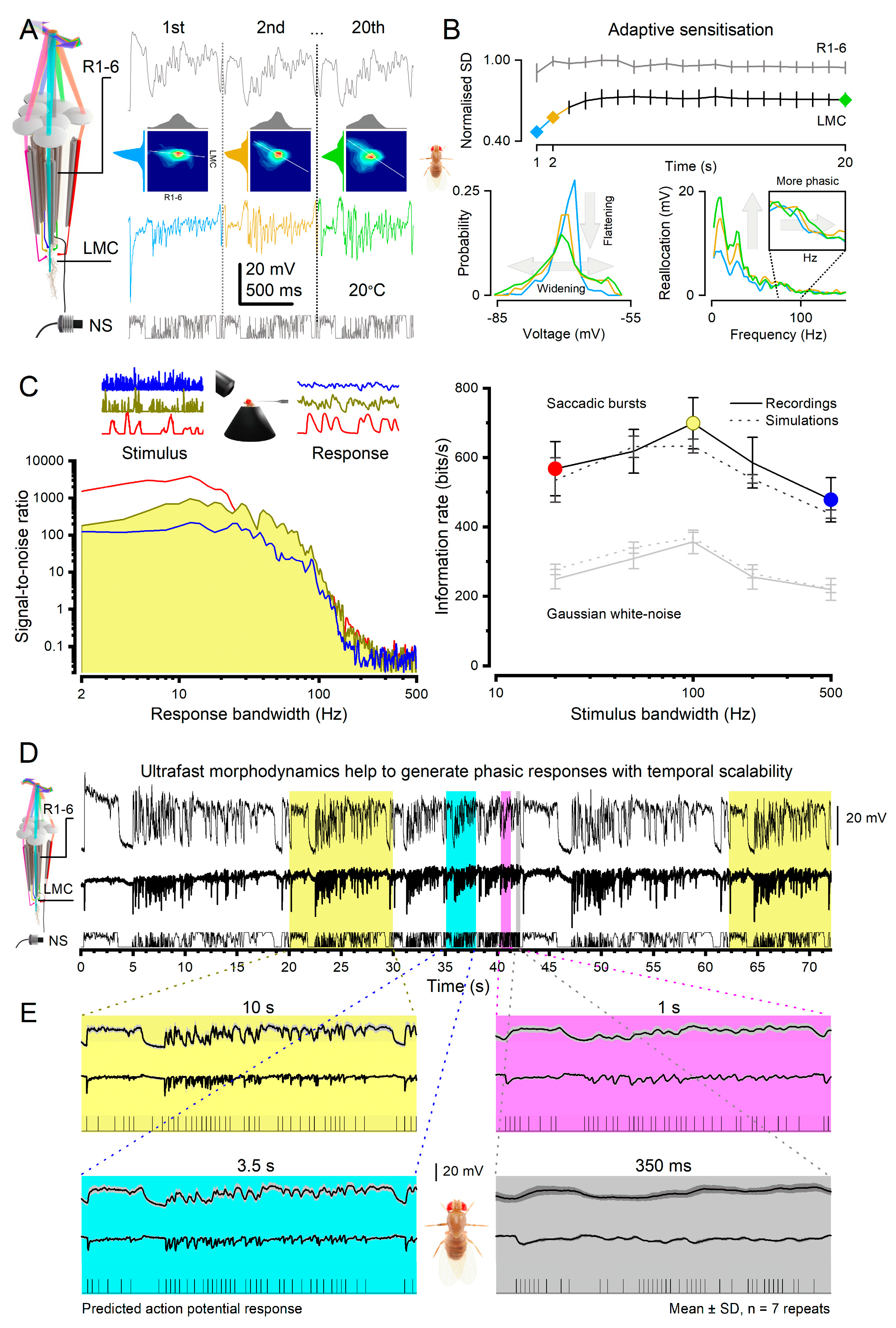

Efficient Neural Encoding of Reliable Representations across Wide Dynamic Range

Neural communication through synapses relies on rapid pre- and postsynaptic ultrastructural movements that facilitate the efficient quantal release and capture of neurotransmitter molecules19,22,23. These processes resemble the way photoreceptor microvilli have evolved to combine photomechanical movements12-14 with quantal refractory photon sampling12,53,56 to maximise information in neural responses (Figure 3D). In both systems, ultrafast morphodynamics work together with refractoriness to sample behaviourally or intrinsically relevant information highly accurately, by rapidly adapting quantum efficiency to vast changes in the rate of incoming samples (photons or neurotransmitter molecules)12,14,53,56.

In synaptic transmission (Figure 1D), molecular motors actively transport presynaptic transmitter vesicles to docking sites18. At these sites, vesicles are depleted through ultrafast exocytosis and replenished via endocytosis23. These processes generate ultrastructural movements, vesicle queuing and refractory pauses18, which occur as vesicle numbers, and potentially vesicle sizes19, adapt to changes in sensory or neural information flow. Because each spherical vesicle contains many neurotransmitter molecules and vesicles are released at a high rate, the number of transmitter molecules, the carriers of information, can vary over a logarithmic range from one moment to the next. On the postsynaptic side, the adaptive morphodynamic processes involve rapid movements of dendritic spines10 (cf. Figure 1C) or of transmitter-receptor complexes18. These ultrastructural movements likely facilitate efficient sampling of information from rapid changes in neurotransmitter concentration, enabling swift and precise integration of macroscopic responses from the sampled postsynaptic quanta19,22.

Interestingly, specific circuits have evolved to integrate synchronous high signal-to-noise information from multiple adjacent pathways, thereby enhancing the speed and accuracy of phasic signals19,22,24,35,97. This mechanism is particularly beneficial for computationally challenging tasks, such as distinguishing object boundaries from the background during variable self-motion. For instance, in the photoreceptor-LMC synapse, the fly eye exhibits neural superposition wiring69 (Figure 7A), allowing each LMC to simultaneously sample and integrate quantal events from six neighbouring photoreceptors (R1-6). Because the receptive fields of these photoreceptors only partially overlap and move in slightly different directions during microsaccades, each photoreceptor conveys a distinct phasic aspect of the visual stimulus to the LMCs14 (L1-3; cf. Figure 5B). The LMCs actively differentiate these inputs, resulting in rapidly occurring phasic responses with notably high signal-to-noise ratios, particularly at high frequencies19,22,24,35.
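The noise-reduction benefit of pooling can be illustrated in its simplest form. The sketch below assumes, unlike the real superposition eye, that all six inputs carry an identical signal plus independent Gaussian noise of equal variance, and that the LMC simply averages them with equal weights; `pooled_snr` is a hypothetical helper. Under those assumptions the power signal-to-noise ratio grows in proportion to the number of inputs, so six inputs give roughly a six-fold gain. The phasic differentiation and the distinct, moving views of the six photoreceptors described above are not modelled.

```python
import numpy as np

def pooled_snr(n_inputs, n_samples=200_000, noise_sd=1.0, seed=0):
    """Power SNR of the equal-weight average of n_inputs noisy inputs.

    Assumption: every input carries the same signal plus independent
    Gaussian noise of equal variance (real R1-6 views differ and move).
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n_samples)
    signal = np.sin(2 * np.pi * t / 100.0)             # shared stimulus component
    noise = rng.normal(0.0, noise_sd, size=(n_inputs, n_samples))
    pooled = (signal + noise).mean(axis=0)              # equal-weight pooling
    residual = pooled - signal                          # what remains is averaged noise
    return signal.var() / residual.var()

single = pooled_snr(1)
six = pooled_snr(6)
print(f"SNR gain from pooling six inputs: {six / single:.2f}")  # close to 6
```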

Moreover, in this system, adaptation dynamically improves coding efficiency by swiftly flattening and widening the LMC’s amplitude and frequency distributions over time19,22 (Figure 7B), improving sensitivity to under-represented signals within seconds. Such performance implies that LMCs strive to use their output range equally in different situations, since a message in which every symbol is transmitted equally often has the highest information content98. Here, an LMC’s sensory information is maximised through pre- and postsynaptic morphodynamic processes in which quantal refractory sampling jointly adapts to light stimulus changes, dynamically adjusting the synaptic gain (Figure 7A; cf. the joint probability at each second of stimulation).
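Shannon's point that equiprobable symbols carry the most information can be checked numerically. A minimal sketch, assuming a hypothetical output of 16 discrete levels: a flat usage distribution reaches the maximum of log2(16) = 4 bits per symbol, whereas a bell-shaped usage of the same 16 levels carries less. `entropy_bits` is an illustrative helper, not part of any published LMC analysis.

```python
import numpy as np

def entropy_bits(p):
    """Shannon entropy (bits) of a discrete distribution p (need not be normalised)."""
    p = np.asarray(p, dtype=float)
    p = p / p.sum()
    p = p[p > 0]                                  # 0 * log(0) is taken as 0
    return float(-(p * np.log2(p)).sum())

n_levels = 16                                     # hypothetical number of output levels
uniform = np.ones(n_levels)                       # every level used equally often
peaked = np.exp(-0.5 * ((np.arange(n_levels) - 7.5) / 2.0) ** 2)  # mid-range levels dominate

print(entropy_bits(uniform))                      # 4.0, the maximum log2(16)
print(entropy_bits(peaked))                       # fewer bits from the same 16 levels
```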

Comparable to LMCs, dynamic adaptive scaling for information maximisation has been shown in the action potential responses (spikes) of blowfly H1 neurons to changing light stimulus velocities99. These neurons reside in the lobula plate of the optic lobe, deeper in the brain, at least three synapses away from LMCs. Therefore, H1s’ adaptive dynamics may partly reflect the earlier morphodynamic information sampling at the photoreceptor-LMC synapse, or adaptive rescaling may be a general property of all neural systems100,101. Either way, the continuously adapting weighted average of the six variable photoreceptor responses, reported independently to each LMC, combats noise and may carry the best (most accurate, unbiased) running estimate of the ongoing light contrast signals. This dynamic maximisation of sensory information is distinct from Laughlin’s original concept of static contrast equalisation102. The latter is based on stationary image statistics of a limited range and necessitates an implausible static synaptic encoder system35 that imposes a constant synaptic gain. Furthermore, Laughlin’s model does not address the issue of noise35.
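Adaptive rescaling of the kind reported for H1 can be sketched with running estimates of the input's mean and variance. This is a generic normalisation scheme chosen for illustration, not the mechanism in H1 or LMCs; the helper `adaptive_rescale` and its time constant `tau` (in samples) are assumptions. When the input's spread jumps ten-fold, the output spread returns to about the same value within a few time constants, whereas a static encoder with a constant gain would pass the ten-fold change straight through.

```python
import numpy as np

def adaptive_rescale(x, tau=200.0):
    """Normalise x by exponential running estimates of its mean and variance.

    Hypothetical sketch: tau (samples) sets how fast the gain adapts.
    """
    alpha = 1.0 / tau
    mean, var = 0.0, 1.0
    y = np.empty_like(x)
    for i, xi in enumerate(x):
        mean += alpha * (xi - mean)                 # running mean
        var += alpha * ((xi - mean) ** 2 - var)     # running variance
        y[i] = (xi - mean) / np.sqrt(var + 1e-12)   # adaptive gain = 1 / running s.d.
    return y

rng = np.random.default_rng(1)
low = rng.normal(0.0, 1.0, 5000)                    # weak stimulus fluctuations
high = rng.normal(0.0, 10.0, 5000)                  # ten-fold stronger fluctuations
out = adaptive_rescale(np.concatenate([low, high]))

# After a brief transient, both halves have a similar output spread.
print(round(float(out[1000:5000].std()), 2), round(float(out[6000:].std()), 2))
```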

Thus, ultrafast morphodynamics actively shapes neurons’ macroscopic voltage response waveforms, maximising information flow. These adaptive dynamics impact both the presynaptic quantal transmitter release and the postsynaptic integration of the sampled quanta, influencing the underlying quantum bump size, latency and refractory distributions12. Advantageously, in vivo intracellular microelectrode recordings provide a means to estimate these distributions statistically with high accuracy19,22,55,73. Knowing these distributions and the number of sampling units obtained from ultrastructural electron microscopy data, one can accurately predict neural responses and their information content for any stimulus pattern22,55,56,73,94. Because their four parameters (the number of sampling units and the bump size, latency and refractory distributions) are measured rather than fitted, these quantal sampling models need no free parameters12,14,53,57,58. They have been experimentally validated12,14,19,53,55,57,58,73,94 (Figure 7C), providing a biophysically realistic multiscale framework to understand the neural computations involved12,58.
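Information content is commonly estimated from repeated responses to the same stimulus: the trial mean is taken as the signal, each trial's deviation from it as noise, and Shannon's formula for a Gaussian channel integrates log2(1 + SNR(f)) over frequency. The sketch below follows that general recipe; the helper names `shannon_info_rate` and `make_trials`, the synthetic data, and the omission of windowing and finite-trial bias corrections are assumptions, so it should not be read as the exact procedure of the cited studies.

```python
import numpy as np

def shannon_info_rate(trials, fs):
    """Return (freqs, SNR(f), information rate in bits/s) from repeated trials.

    trials: array (n_trials, n_samples) of responses to one repeated stimulus.
    Assumes Gaussian, stationary signal and noise; no bias correction.
    """
    trials = np.asarray(trials, dtype=float)
    signal = trials.mean(axis=0)                        # stimulus-locked component
    noise = trials - signal                             # trial-to-trial variability
    freqs = np.fft.rfftfreq(trials.shape[1], d=1.0 / fs)
    p_signal = np.abs(np.fft.rfft(signal)) ** 2
    p_noise = (np.abs(np.fft.rfft(noise, axis=1)) ** 2).mean(axis=0)
    snr = p_signal / np.maximum(p_noise, 1e-30)
    df = freqs[1] - freqs[0]
    # Skip the DC bin (index 0): it reflects the mean level, not fluctuations.
    rate = float(np.sum(np.log2(1.0 + snr[1:])) * df)
    return freqs, snr, rate

def make_trials(noise_sd, n_trials=20, n_samples=1000, seed=2):
    """Synthetic repeats: one smoothed random 'response' plus fresh noise per trial."""
    rng = np.random.default_rng(seed)
    drive = np.convolve(rng.normal(size=n_samples), np.ones(10) / 10, mode="same")
    return drive + noise_sd * rng.normal(size=(n_trials, n_samples))

fs = 1000.0                                             # hypothetical 1 kHz sampling
for sd in (0.1, 1.0):
    _, _, bits_per_s = shannon_info_rate(make_trials(sd), fs)
    print(f"noise s.d. {sd}: about {bits_per_s:.0f} bits/s")
```

Less trial-to-trial noise raises SNR(f) at every frequency and therefore the estimated information rate.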

From a computational standpoint, a neural sampling or transmission system exerts adaptive quantum efficiency regulation that can be likened to division (cf. Box 1). Proportional quantal sample counting is achieved through motion-enhanced refractory transmission, sampling units, or a combination of the two. This refractory adaptive mechanism permits a broad dynamic range, facilitating response normalisation through adaptive scaling and integration of quantal information12,14,56. Consequently, noise is minimised, leading to enhanced reliability of macroscopic responses12,56.
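The comparison with division can be made concrete with a standard dead-time (non-paralysable counter) approximation, assumed here rather than taken from the authors' models. If photons arrive as a Poisson stream of rate λ spread evenly over N sampling units, and each unit ignores photons for a fixed refractory period τ after producing a sample, the steady-state sample rate is R = λ / (1 + λτ/N). Quantum efficiency R/λ is therefore divided down as λ grows, and R saturates at N/τ. The 100 ms refractory period below is hypothetical; the 30,000 units follow the microvillus count given in Box 1.

```python
def steady_sample_rate(photon_rate, n_units, refractory_s):
    """Steady-state sample (quantum bump) rate of n_units refractory samplers.

    Non-paralysable dead-time approximation (an assumption): Poisson photons
    spread evenly over the units; each unit ignores photons for a fixed
    refractory_s seconds after producing a sample.
    """
    load = photon_rate * refractory_s / n_units   # photons per unit per refractory period
    return photon_rate / (1.0 + load)             # divisive normalisation

N, TAU = 30_000, 0.1   # 30,000 microvilli (Box 1); 100 ms refractoriness is hypothetical
for rate in (1e3, 1e5, 1e7):
    r = steady_sample_rate(rate, N, TAU)
    print(f"{rate:9.0e} photons/s -> {r:9.0f} samples/s, efficiency {r / rate:.3f}")
# The sample rate approaches, but never exceeds, N / TAU = 300,000 samples/s.
```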

Therefore, we expect that this efficient information maximisation strategy, which has achieved signal-to-noise ratios of several thousand in insect photoreceptors during bright saccadic stimulation12,56 (Figure 7C), will serve as a fundamental principle for neural computations involving the sampling of quantal bursts of information, such as neurotransmitter or odorant molecules. In this context, it is highly plausible that the pre- and postsynaptic morphodynamic quantal processes of neurons have co-adapted to convert logarithmic sample rate changes into precise phasic responses with limited amplitude and frequency distributions12,19, similar to the performance seen in fly photoreceptors12,14,53,55,73 and in the first visual interneurons, LMCs19,22. Hence, ultrafast refractory quantal morphodynamics may be a prerequisite for efficiently allocating information within the biophysically constrained amplitude and frequency ranges of neurons94,96,103.

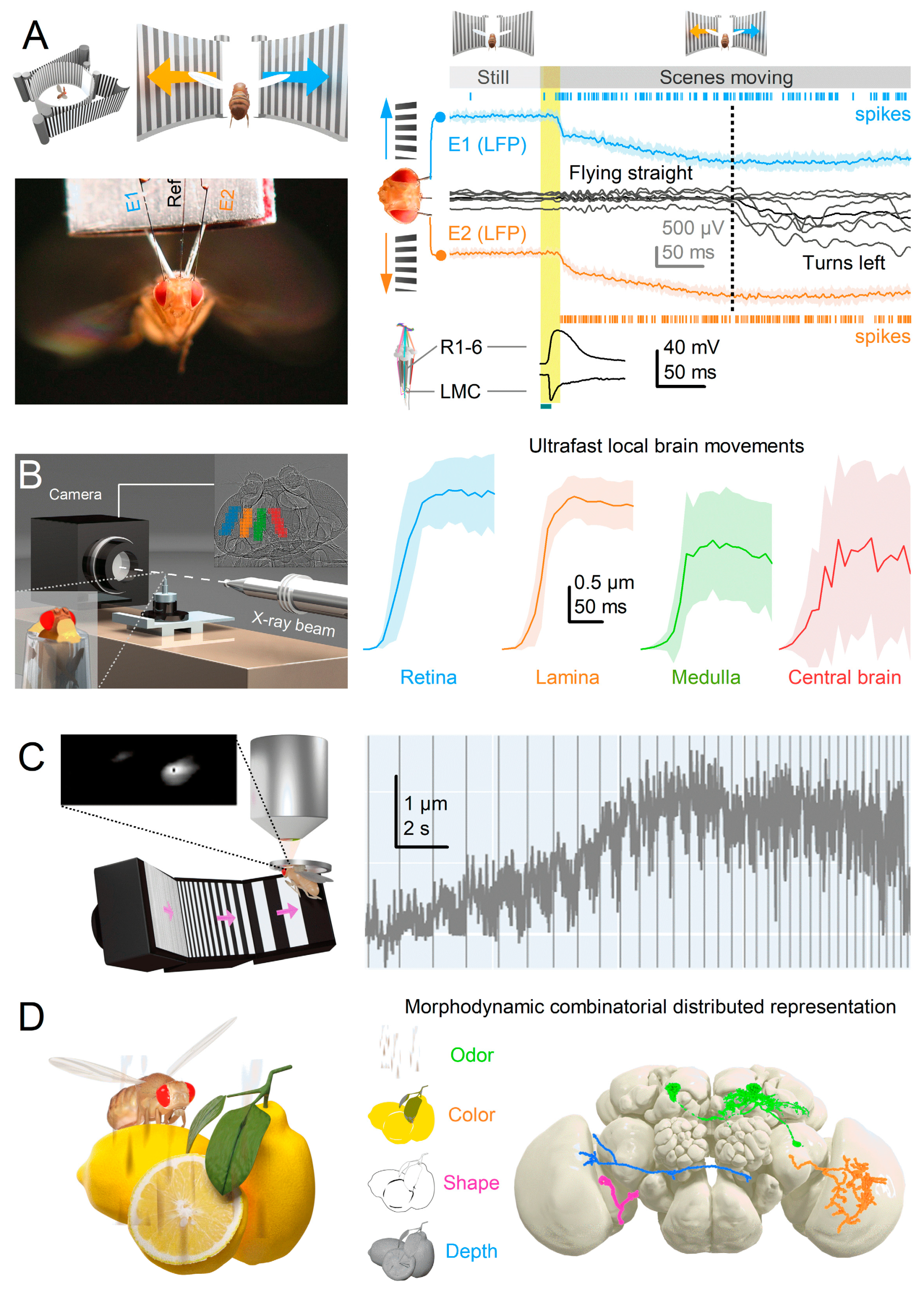

Predictive Coding and Minimal Neural Delays

Hopfield and Brody initially proposed that brain networks might employ transient synchrony as a collective mechanism for spatiotemporal integration in action potential communication104. Interestingly, morphodynamic quantal refractory information sampling and processing may offer the means to achieve this general coding objective.

Neural circuits incorporate predictive coding mechanisms that leverage mechanical, electrical and synaptic feedback to minimise delays12,14,56. This processing, which enhances phasic inputs, synchronises the flow of information right from the first sampling stage12,19,35. It time-locks activity patterns into transient, temporally scalable bursts, as observed in Drosophila photoreceptors’ and LMCs’ voltage responses to accelerating naturalistic light patterns (Figure 7D,E)12,35,104. Such phasic synchronisation and scalability are crucial for the brain to efficiently recognise and represent the changing world irrespective of the animal’s self-motion, and to predict and lock onto its moving patterns. As a result, perception becomes more accurate and behavioural responses to dynamic stimuli more prompt.

Crucially, this adaptive scalability of phasic, graded potential responses is readily translatable to sequences of action potentials (Figure 7E, cf. the scalable spike patterns predicted from the LMC responses). Thus, ultrafast neural morphodynamics may contribute to our brain’s intrinsic ability to effortlessly capture the same meaning from a sentence, whether spoken very slowly or quickly. This dynamic form of predictive coding, which time-locks phasic neural responses to moving temporal patterns, differs markedly from the classic concept of interneurons using static centre-surround antagonism within their receptive fields to exploit spatial correlations in the natural scenes105.

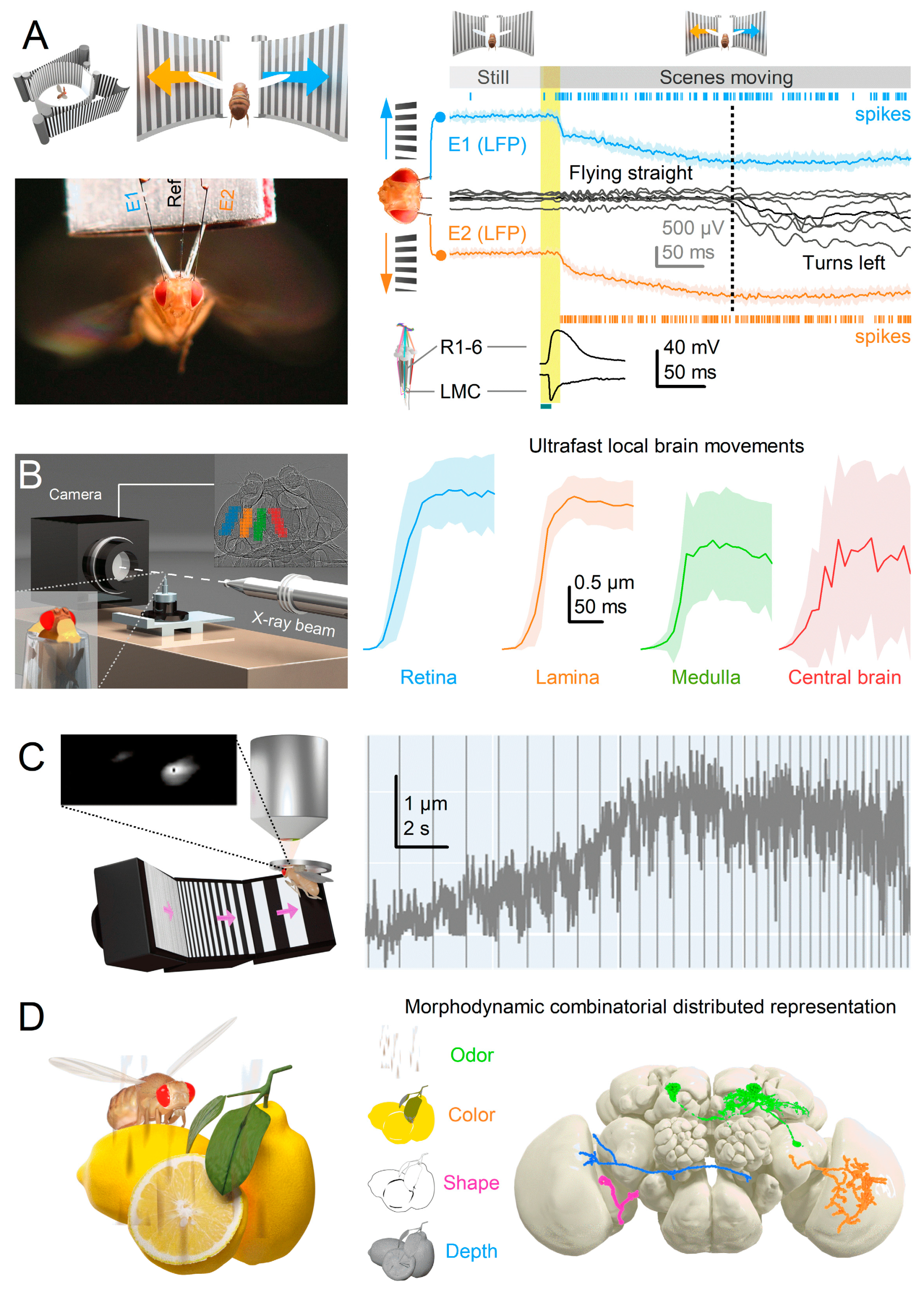

Reinforcing fast morphodynamic synchronisation as a general phenomenon, we observe minimum-phase responses deeper in the brain. In tethered flying Drosophila, electrical activity patterns recorded from the lobula/lobula plate optic lobes86, at least three synapses downstream of the photoreceptors, exhibit minimal-delay responses remarkably similar to those of LMCs (Figure 8A), with the first responses emerging within 20 ms of stimulus onset86. Such rapid signal transmission through multiple neurons and synapses challenges traditional models that assume a stationary eye and brain circuits with significant synaptic (chemical), signal integration and conduction (electrical) delays. Moreover, in vivo high-speed X-ray imaging14 has revealed synchronised phasic movements across the Drosophila optic lobes following the rapid microsaccades of light-activated photoreceptors (Figure 8B). Synchronised tissue movements have also been observed during 2-photon imaging of optic lobe neurons14 (Figure 8C). In the past, such movements have often been dismissed as motion artefacts, with researchers making considerable efforts to eliminate them from calcium imaging data.

The absence of phasic amplification and synchronisation of signals through morphodynamics would have detrimental effects on communication speed and accuracy, resulting in slower perception and behavioural responses. It would significantly prolong the time it takes for visual information from the eyes to reach decision-making circuits, increasing uncertainty and leading to a decline in overall fitness. Thus, we expect the inherent scalability of neural morphodynamic responses (Figure 7D) to be crucial in facilitating efficient communication and synchronisation among different brain regions, enabling the coordination required for complex cognitive processes.

We propose that, to achieve these concerted efforts, neurons exhibit morphodynamic jitter (stochastic oscillations) at the ultrastructural level that sensitises the transmission system. By enhancing phasic sampling, such jitter could minimise delays across the whole network, enabling interconnected circuits to respond in sync to changes in information flow and actively co-differentiating the relevant (or attended) message stream86. Similarly, jitter-enhanced synchronisation could link sensory (bottom-up) information about a moving object with the prediction (efference copy106,107) of movement-producing signals generated by the motor system, or with a top-down prediction of the respective self-motion108. Their difference signal, or prediction error, could then be used to correct the animal’s self-motion more swiftly than without jitter-induced delay minimisation and synchronous phase enhancement, enabling faster behavioural responses.
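One well-known way in which noise can sensitise a threshold system is stochastic resonance: a signal too weak to cross threshold on its own is transmitted best at an intermediate noise level. Whether morphodynamic jitter acts in this way is an assumption made only for illustration. In the sketch, the hypothetical helper `threshold_detection` measures how well a thresholded output tracks a subthreshold sinusoid as the jitter amplitude changes; threshold, amplitude and period are arbitrary choices.

```python
import numpy as np

def threshold_detection(noise_sd, threshold=1.0, amplitude=0.8,
                        n_samples=20_000, seed=3):
    """Correlation between a subthreshold sinusoid and a thresholded output.

    The signal alone (amplitude < threshold) never crosses threshold;
    Gaussian jitter of s.d. noise_sd is added before thresholding.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n_samples)
    signal = amplitude * np.sin(2 * np.pi * t / 200.0)
    jittered = signal + rng.normal(0.0, noise_sd, n_samples)
    out = (jittered > threshold).astype(float)
    if out.std() == 0.0:               # no crossings at all: nothing transmitted
        return 0.0
    return float(np.corrcoef(signal, out)[0, 1])

for sd in (0.0, 0.3, 5.0):
    print(sd, round(threshold_detection(sd), 3))
```

With no jitter nothing crosses threshold; moderate jitter lets crossings cluster at the signal peaks; very large jitter makes crossings nearly random, so the correlation rises and then falls.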

We also expect ultrafast morphodynamics to contribute to multisensory integration by temporally aligning inputs from diverse sensory modalities with intrinsic goal-oriented processing (Figure 8D). This cross-modal synchronisation enhances behavioural certainty93,109. Using synchronised phasic information, a brain network can efficiently integrate yellow colour, shuttle-like shape, rough texture, and sweet scent into a unique neural representation, effectively identifying a lemon amidst the clutter and planning an appropriate action. These ultrafast combinatorial and distributed spatiotemporal responses expand the brain’s capacity to encode information, increasing its representational dimensionality110 beyond what could be achieved through slower processing in static circuits. Thus, the phasic nature of neural morphodynamics may enable animals to think and behave faster and more flexibly.

Anti-aliasing and Robust Communication

Neural morphodynamics incorporates anti-aliasing sampling and signalling mechanisms within the peripheral nervous system12,14,111 to prevent the distortion of sensory information. Like those in Drosophila compound eyes, photoreceptors in the primate retina exhibit varying sizes112, movements6 and partially overlapping receptive fields113. Together with stochastic rhodopsin choices76 (Figure 5B, inset) and microstructural and synaptic variations114, these characteristics should create a stochastically heterogeneous sampling matrix free of spatiotemporal aliasing12,14,53,58. By enhancing sampling speed and phasic integration of changing information through heterogeneous channels, ultrafast morphodynamics reduces ambiguity in interpreting sensory stimuli and enhances the brain’s “frame-rate” of perception. Such clear evolutionary benefits suggest that analogous morphodynamic processing would also be employed in central circuit processes for thinking and planning actions.
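The anti-aliasing benefit of irregular sampling can be sketched in one dimension. The sketch assumes samples that are unit-spaced on average, with each position jittered uniformly by up to half a spacing; `alias_amplitude` is a hypothetical helper that evaluates a non-uniform discrete Fourier sum. A sinusoid above the Nyquist frequency then produces a strong, coherent false pattern when sampled on a regular lattice, but only weak, broadband noise at the same alias frequency when sampled irregularly. This is a standard signal-processing result, offered as an analogy for the heterogeneous sampling matrix rather than a model of it.

```python
import numpy as np

def alias_amplitude(positions, f_signal, f_alias):
    """Amplitude at f_alias in the spectrum of a sinusoid sampled at positions.

    Non-uniform DFT: samples weighted by complex exponentials evaluated at
    the true sample positions, normalised by the number of samples.
    """
    samples = np.sin(2 * np.pi * f_signal * positions)
    weights = np.exp(-2j * np.pi * f_alias * positions)
    return float(np.abs(np.sum(samples * weights)) / positions.size)

n = 4096
regular = np.arange(n, dtype=float)                 # unit spacing: Nyquist = 0.5
rng = np.random.default_rng(4)
jittered = regular + rng.uniform(-0.5, 0.5, n)      # same density, random offsets

f_signal = 0.9                  # above Nyquist for unit spacing
f_alias = 1.0 - f_signal        # where a regular lattice folds it (0.1)
print(alias_amplitude(regular, f_signal, f_alias))  # about 0.5: a coherent false pattern
print(alias_amplitude(jittered, f_signal, f_alias)) # much smaller: spread into noise
```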

Furthermore, the inherent flexibility of neural morphodynamics, which uses moving sampling units to collect and transmit information, should help the brain maintain its functionality even when damaged, thus contributing to its resilience and recovery mechanisms. Because oscillating movements enhance transmission and parallel information channels stream overlapping content70, critical phasic information could potentially bypass or reroute around partially damaged neural tissue. This morphodynamic adaptability equips the brain to offset disturbances and continue processing information. As a result, brain morphodynamics ensures accurate sensory representation and bolsters the robustness of neural communication amidst challenges or impairments.

The Efficiency of Encoding Space in Time

Neural morphodynamics boosts the efficiency of encoding space in time12,14, allowing smaller mobile sense organs, like compact compound eyes with fewer ommatidia, to achieve spatial resolution equivalent to that of larger stationary sense organs (cf. Figure 5C and Figure 6C). The resulting ultrafast phasic sampling and transmission expedite sensory processing, while the reduced signal travel distance promotes faster perception and more efficient locomotion and decreases energy consumption. Therefore, we can postulate that, of two brains of identical size, one incorporating ultrafast morphodynamics across its neural networks and the other not, the brain using morphodynamics has the higher information processing capacity. Its faster and more efficient information processing should enhance cognitive abilities and decision-making. In this light, selecting neural morphodynamics as a pathway for optimising brains would be, for evolution, a no-brainer.

Future avenues of research

Investigating the Integration of Ultrafast Morphodynamic Changes in the Brain and Behaviour

One area of interest is understanding how the brain and behaviour can effectively synchronise with rapid morphodynamic changes, such as adaptive quantal sample rate modifications within the sensory receptor matrix and synaptic information transfer. A fundamental question is how neural morphodynamics enhances the efficiency and speed of synaptic signal transmission. Is there a morphodynamic adaptation of synaptic vesicle sizes and quantities19 that maximises information transfer? It is plausible that synaptic vesicle sizes and numbers adapt morphodynamically to ensure efficient information transfer, potentially using a running memory of previous activity to optimise how transmitter molecule quantities scale in response to environmental information changes (cf. Figure 7A,B). This adaptive process might involve rapid exo- or endocytosis-linked movements of transmitter-receptor complexes. Furthermore, it is worthwhile to explore how brain morphodynamics adaptively regulates the synaptic cleft and the proximity of neurotransmitter receptors to optimise signal transmission.

Neural Activity Synchronisation

It is crucial to understand how neural morphodynamics synchronises brain activity within specific networks in a goal-directed manner and to comprehend the effects of changes in brain morphodynamics during maturation and learning on brain function and behaviour. Modern machine learning techniques now enable us to establish and quantify the contribution of brain morphodynamics to learning-induced structural and functional changes, and behaviour.

For instance, we can employ a deep learning approach to study how Drosophila’s compound eyes use photoreceptor movements to attain hyperacuity115. Could an artificial neural network (ANN), equipped with precisely positioned, photomechanically moving photoreceptors that process and transmit visual information to a lifelike-wired lamina connectome (cf. Figure 2 and Figure 4), reproduce the natural response dynamics of real flies, thereby surpassing their optical pixelation limit? By systematically altering sampling dynamics and synaptic connections in an ANN-based compound eye model, it is now possible to test whether performance falters, and the eye loses its hyperacuity, without realistic orientation-tuned photoreceptor movements and connectome.

Perception Enhancement

Neural morphodynamic mechanisms can enhance perception by sending biomechanical feedback signals to photoreceptors via feedback synapses24, improving the detection of objects against their backgrounds. An object’s movement makes it easier to detect against the background116. When interested in a particular object at a specific position, could the brain send attentive86,117 feedback signals to the set of photoreceptors whose receptive fields point at that position, making them contract electromechanically and causing the object to ‘jump’? This would enhance the object’s boundaries against its background118. Such biomechanical feedback would be the most efficient way to self-generate pop-up attention at the level of the sampling matrix.

Conclusion and future outlook

The theory of neural morphodynamics provides a new perspective that has the potential to revolutionise our understanding of brain function and behaviour. By addressing the key questions and conducting further research, we can explore the applications of ultrafast morphodynamics for neurotechnologies to enhance perception, improve artificial systems, and develop biomimetic devices and robots capable of sophisticated sensory processing and decision-making.

Box 1. Visualising Refractory Quantal Computations

By utilising powerful multi-scale morphodynamic neural models12,14,53, we can predict and analyse the generation and integration of voltage responses during morphodynamic quantal refractory sampling and compare these simulations with actual intracellular recordings made under similar stimulation12,14,53. This approach, combined with information-theoretical analyses53,57,73,94, allows us to explain how phasic response waveforms arise from ultrafast movements and to estimate the signal-to-noise ratio and information transfer rate of the neural responses. Importantly, these methods are applicable to studying the morphodynamic functions of any neural circuit. To illustrate the analytic power of this approach, we present a simple example: an intracellular recording (whole-cell voltage response) of a dark-adapted Drosophila photoreceptor (TB1C) to a bright light pulse. See also Figure 2D, which shows morphodynamic simulations of how a photoreceptor responds to two dots crossing its receptive field in east-to-west and west-to-east directions.

An insect photoreceptor’s sampling units (e.g., 30,000 microvilli in a fruit fly or 90,000 in a blowfly R1-6 photoreceptor) count photons as variable samples (quantum bumps) and sum them into a macroscopic voltage response, generating a reliable estimate of the encountered light stimulus. For clarity, visualise the light pulse as a consistent flow of photons, or golden balls, over time (TB1A). The quantum bumps that the photons elicit in individual microvilli can be thought of as silver coins of various sizes (TB1B). The photoreceptor persistently counts these “coins” produced by its microvilli, thus generating a dynamically changing macroscopic response (TB1C, depicted as a blue trace). These basic counting rules119 shape the photoreceptor response:

Each microvillus can produce only one quantum bump at a time53,120-122.

After producing a quantum bump, a microvillus becomes refractory for up to 300 ms (in Drosophila R1-6 photoreceptors at 25°C) and cannot respond to other photons120,123,124.

Quantum bumps from all microvilli sum up the macroscopic response53,120-122,125.

Microvilli availability sets a photoreceptor’s maximum sample rate (quantum bump production rate), adapting its macroscopic response to a light stimulus53,122.

Global Ca2+ accumulation and membrane voltage affect samples of all microvilli. These global feedbacks strengthen with brightening light to reduce the size and duration of quantum bumps, adapting the macroscopic response55,73,126,127.

Adaptation in the macroscopic response (TB1C) to continuous light (TB1A) is mostly caused by a reduction in the number and size of quantum bumps over time (TB1B). When the stimulus starts, a large portion of the microvilli is simultaneously activated (TB1Ai and TB1Bi), but they subsequently enter a refractory state (TB1Aii and TB1Bii). This means that a smaller fraction of microvilli can respond to the following photons in the stimulus until more microvilli become available again. As a result, the number of activated microvilli initially peaks and then rapidly declines, eventually settling into a steady state (TB1Aiii and TB1Biii) once photon arrivals and refractory periods balance. If all quantum bumps were identical, the macroscopic current would simply reflect the number of activated microvilli set by the photon rate and would settle together with it. However, the light-induced current decays further, towards lower plateau levels, because quantum bumps adapt to brighter backgrounds (TB1Aiii and TB1Biii), becoming smaller and briefer55,73. This adaptation is caused by global negative feedback: Ca2+-dependent inhibition of microvillar phototransduction reactions126-130. Additionally, the concurrent increase in membrane voltage compresses responses by reducing the electromotive force for the light-induced current across all microvilli53.
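The counting rules above can be sketched as a small stochastic simulation. It is a minimal illustration under stated assumptions, not the published multiscale model: photons arrive as a Poisson stream and hit microvilli at random; each microvillus produces at most one bump per time step; a microvillus that has just produced a bump stays refractory for a hypothetical, uniformly distributed 50 to 300 ms; and the global Ca2+ and voltage feedbacks that shrink bumps are omitted, so every bump has the same hypothetical waveform. The helpers `simulate_bump_counts` and `macroscopic_response` are illustrative names. Even so, the bump rate peaks at light onset and then settles to a lower steady state, as described above.

```python
import numpy as np

def simulate_bump_counts(photon_rate, n_microvilli=30_000, refractory_s=(0.05, 0.3),
                         dt=0.001, duration=1.0, seed=5):
    """Quantum bumps per time step for a light step starting at t = 0.

    Hypothetical values: Poisson photons hit microvilli at random; refractory
    periods are uniform within refractory_s (seconds); no global feedback.
    """
    rng = np.random.default_rng(seed)
    ready_at = np.zeros(n_microvilli)            # time each microvillus is available again
    n_steps = int(round(duration / dt))
    counts = np.zeros(n_steps, dtype=int)
    for i in range(n_steps):
        t = i * dt
        n_photons = rng.poisson(photon_rate * dt)
        # Rule 1: several photons hitting one microvillus still give one bump.
        hit = np.unique(rng.integers(0, n_microvilli, n_photons))
        # Rule 2: refractory microvilli cannot respond to their photons.
        fired = hit[ready_at[hit] <= t]
        ready_at[fired] = t + rng.uniform(refractory_s[0], refractory_s[1], fired.size)
        counts[i] = fired.size
    return counts

def macroscopic_response(counts, dt=0.001, bump_tau=0.005):
    """Rule 3: sum identical (hypothetical) bump waveforms into one response."""
    t = np.arange(0.0, 0.05, dt)
    bump = (t / bump_tau) * np.exp(1.0 - t / bump_tau)   # unit-peak bump shape
    return np.convolve(counts, bump)[: counts.size]

counts = simulate_bump_counts(photon_rate=1e6)   # hypothetical bright step
rate = counts / 0.001                            # bumps per second
print(f"onset: ~{rate[:5].mean():.0f} bumps/s, steady: ~{rate[-300:].mean():.0f} bumps/s")
response = macroscopic_response(counts)
```

Rule 4 emerges without being coded explicitly: the steady bump rate stays below the number of microvilli divided by the mean refractory period.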

The signal-to-noise ratio and rate of information transfer increase with the average sampling rate, i.e. the average number of samples per unit time. Thus, the more samples that make up the macroscopic response to a given light pattern, the higher its information transfer rate. However, as brightening stimulation makes a photoreceptor count more photons, the information its responses carry about the saccadic light patterns of natural scenes first increases and then approaches a constant rate. This is because:

(i) When more microvilli are in a refractory state, more photons fail to generate quantum bumps. As quantum efficiency drops, the equilibrium between used and available microvilli approaches a constant (maximum) quantum bump production rate (sample rate).

(ii) Once global Ca2+ and voltage feedbacks saturate, they cannot make quantum bumps any smaller and briefer with increasing brightness.

(iii) After initial acceleration from the dark-adapted state, quantum bump latency distribution remains practically invariable in different light-adaptation states73.

Therefore, when sample rate modulation (i) and sample integration dynamics (ii and iii) of the macroscopic voltage responses settle (at intensities >10⁵ photons/s in Drosophila R1-6 photoreceptors), the allocation of visual information in the photoreceptor’s amplitude and frequency range becomes nearly invariable53,57,131. Correspondingly, stochastic simulations closely predict measured responses and rates of information transfer53,56,57. Notably, when microvillus usage reaches its midpoint (~50%), the information rate encoded by the macroscopic responses to natural light intensity time series saturates53. This is presumably because the sample rate modulations to light increments and decrements, which in the macroscopic response code for the number of different stimulus patterns94, saturate. Quantum bump size, if invariable, does not affect the information transfer rate, as long as the quantum bumps are briefer than the stimulus changes they encode: like any other fixed filter, a fixed bump waveform affects signal and noise equally (data processing theorem53,94). But varying quantum bump size adds noise; when this variation is adaptive (memory-based), less noise is added53,94.
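Two claims above can be checked numerically under simplifying assumptions. First, filtering signal and noise with the same fixed kernel, standing in for an invariant bump waveform, leaves SNR(f) unchanged wherever the kernel's spectrum is non-zero. Second, if bump counts per time window are Poisson with mean λ (an assumption that ignores refractoriness, which makes real counts less variable) and bump sizes vary with coefficient of variation CV, the count-based SNR = mean²/variance falls from λ to λ/(1 + CV²). The kernel, rates and gamma-distributed sizes are all hypothetical, `window_snr` is an illustrative helper, and adaptive, memory-based size variation is not modelled.

```python
import numpy as np

rng = np.random.default_rng(6)

# 1) Fixed filter: signal and noise spectra are both multiplied by the
#    kernel's spectrum H(f), so their ratio SNR(f) is unchanged.
n = 1024
signal = np.sin(2 * np.pi * 8 * np.arange(n) / n)
noise = rng.normal(0.0, 0.5, n)
H = np.fft.rfft(np.exp(-np.arange(n) / 5.0))        # hypothetical fixed bump shape
S, N = np.fft.rfft(signal), np.fft.rfft(noise)
snr_before = np.abs(S) ** 2 / np.abs(N) ** 2
snr_after = np.abs(S * H) ** 2 / np.abs(N * H) ** 2  # circular filtering via FFT
print(np.allclose(snr_before, snr_after))            # True

# 2) Variable bump sizes: Poisson bump counts per window (mean lam), each
#    bump of mean size 1 and coefficient of variation cv.
lam = 100.0
counts = rng.poisson(lam, 20_000)

def window_snr(cv):
    """mean^2 / variance of the summed bump response per window."""
    if cv == 0:
        totals = counts.astype(float)                # identical unit bumps
    else:
        # Sum of c Gamma(1/cv^2, cv^2) sizes is Gamma(c/cv^2, cv^2).
        totals = rng.gamma(counts / cv**2, cv**2)
    return totals.mean() ** 2 / totals.var()

print(round(window_snr(0.0)), round(window_snr(0.5)))  # near 100 and 80 (= 100 / 1.25)
```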

In summary, insect photoreceptors count photons through microvilli, integrate the responses, and adapt their macroscopic response according to the basic counting rules and global feedback mechanisms. The information transfer rate increases with the average sampling rate but eventually approaches a constant rate as the brightness of the stimulus increases. Variability in quantum bump size, rather than bump size itself, adds noise, and adaptive variation adds less noise.

Author Contributions

MJ wrote the paper with content contributions from JT, JK, KRH, BS, JM and LC. All authors discussed and edited the manuscript.

Acknowledgments

We thank G. de Polavieja, G. Belušič, B. Webb, J. Howard, M. Göpfert, A. Lazar, P. Verkade, R. Mokso, S. Goodwin, M. Mangan, S.-C. Liu, P. Kuusela, R.C. Hardie and J. Bennett for fruitful discussions. We thank G. de Polavieja, G. Belušič, S. Goodwin and R.C. Hardie for comments on the manuscript. This work was supported by BBSRC (BB/F012071/1 and BB/X006247/1) and EPSRC (EP/P006094/1 and EP/X019705/1) grants to MJ, a Horizon Europe Framework Programme NimbleAI grant to LC, and a BBSRC White Rose studentship (1945521) to BS.

Declaration of Interests

The authors declare no competing interests.

References

- Eichler, K.; Li, F.; Litwin-Kumar, A.; Park, Y.; Andrade, I.; Schneider-Mizell, C.M.; Saumweber, T.; Huser, A.; Eschbach, C.; Gerber, B.; et al. The complete connectome of a learning and memory centre in an insect brain. Nature 2017, 548, 175–182. [Google Scholar] [CrossRef]

- Oh, S.W.; Harris, J.A.; Ng, L.; Winslow, B.; Cain, N.; Mihalas, S.; Wang, Q.; Lau, C.; Kuan, L.; Henry, A.M.; et al. A mesoscale connectome of the mouse brain. Nature 2014, 508, 207–214. [Google Scholar] [CrossRef]

- Rivera-Alba, M.; Vitaladevuni, S.N.; Mishchenko, Y.; Lu, Z.; Takemura, S.-Y.; Scheffer, L.; Meinertzhagen, I.A.; Chklovskii, D.B.; de Polavieja, G.G. Wiring Economy and Volume Exclusion Determine Neuronal Placement in the Drosophila Brain. Curr. Biol. 2011, 21, 2000–2005. [Google Scholar] [CrossRef]

- Winding, M.; Pedigo, B.D.; Barnes, C.L.; Patsolic, H.G.; Park, Y.; Kazimiers, T.; Fushiki, A.; Andrade, I.V.; Khandelwal, A.; Valdes-Aleman, J.; et al. The connectome of an insect brain. Science 2023, 379, eadd9330–eadd9330. [Google Scholar] [CrossRef]

- Hudspeth, A. Making an Effort to Listen: Mechanical Amplification in the Ear. Neuron 2008, 59, 530–545. [Google Scholar] [CrossRef]

- Pandiyan, V.P.; Maloney-Bertelli, A.; Kuchenbecker, J.A.; Boyle, K.C.; Ling, T.; Chen, Z.C.; Park, B.H.; Roorda, A.; Palanker, D.; Sabesan, R. The optoretinogram reveals the primary steps of phototransduction in the living human eye. Sci. Adv. 2020, 6, eabc1124. [Google Scholar] [CrossRef]

- Hardie, R.C.; Franze, K. Photomechanical Responses in Drosophila Photoreceptors. Science 2012, 338, 260–263. [Google Scholar] [CrossRef]

- Bocchero, U.; Falleroni, F.; Mortal, S.; Li, Y.; Cojoc, D.; Lamb, T.; Torre, V. Mechanosensitivity is an essential component of phototransduction in vertebrate rods. PLOS Biol. 2020, 18, e3000750. [Google Scholar] [CrossRef]

- Korkotian, E.; Segal, M. Spike-Associated Fast Contraction of Dendritic Spines in Cultured Hippocampal Neurons. Neuron 2001, 30, 751–758. [Google Scholar] [CrossRef]

- Majewska, A.; Sur, M. Motility of dendritic spines in visual cortex in vivo : Changes during the critical period and effects of visual deprivation. Proc. Natl. Acad. Sci. 2003, 100, 16024–16029. [Google Scholar] [CrossRef]

- Crick, F. (1982). Do dendritic spines twitch? Trends in Neurosciences 5, 44-46.

- Juusola, M.; Dau, A.; Song, Z.; Solanki, N.; Rien, D.; Jaciuch, D.; Dongre, S.A.; Blanchard, F.; de Polavieja, G.G.; Hardie, R.C.; et al. Microsaccadic sampling of moving image information provides Drosophila hyperacute vision. eLife 2017, 6. [Google Scholar] [CrossRef]

- Kemppainen, J.; Mansour, N.; Takalo, J.; Juusola, M. High-speed imaging of light-induced photoreceptor microsaccades in compound eyes. Commun. Biol. 2022, 5, 1–16. [Google Scholar] [CrossRef] [PubMed]

- Kemppainen, J.; Scales, B.; Haghighi, K.R.; Takalo, J.; Mansour, N.; McManus, J.; Leko, G.; Saari, P.; Hurcomb, J.; Antohi, A.; et al. Binocular mirror–symmetric microsaccadic sampling enables Drosophila hyperacute 3D vision. Proc. Natl. Acad. Sci. 2022, 119. [Google Scholar] [CrossRef]

- Senthilan, P.R.; Piepenbrock, D.; Ovezmyradov, G.; Nadrowski, B.; Bechstedt, S.; Pauls, S.; Winkler, M.; Möbius, W.; Howard, J.; Göpfert, M.C. Drosophila Auditory Organ Genes and Genetic Hearing Defects. Cell 2012, 150, 1042–1054. [Google Scholar] [CrossRef]

- Reshetniak, S.; Rizzoli, S.O. The vesicle cluster as a major organizer of synaptic composition in the short-term and long-term. Curr. Opin. Cell Biol. 2021, 71, 63–68. [Google Scholar] [CrossRef]

- Reshetniak, S.; Ußling, J.; Perego, E.; Rammner, B.; Schikorski, T.; Fornasiero, E.F.; Truckenbrodt, S.; Köster, S.; O Rizzoli, S. A comparative analysis of the mobility of 45 proteins in the synaptic bouton. EMBO J. 2020, 39, e104596. [Google Scholar] [CrossRef]

- Rusakov, D.A.; Savtchenko, L.P.; Zheng, K.; Henley, J.M. Shaping the synaptic signal: molecular mobility inside and outside the cleft. Trends Neurosci. 2011, 34, 359–369. [Google Scholar] [CrossRef] [PubMed]

- Juusola, M.; O Uusitalo, R.; Weckström, M. Transfer of graded potentials at the photoreceptor-interneuron synapse. J. Gen. Physiol. 1995, 105, 117–148. [Google Scholar] [CrossRef] [PubMed]

- Fettiplace, R.; Crawford, A.C.; Kennedy, H.J. SIGNAL TRANSFORMATION BY MECHANOTRANSDUCER CHANNELS OF MAMMALIAN OUTER HAIR CELLS. 2006. [CrossRef]

- Kennedy, H.J.; Crawford, A.C.; Fettiplace, R. Force generation by mammalian hair bundles supports a role in cochlear amplification. Nature 2005, 433, 880–883. [Google Scholar] [CrossRef]

- Juusola, M.; French, A.S.; O Uusitalo, R.; Weckström, M. Information processing by graded-potential transmission through tonically active synapses. Trends Neurosci. 1996, 19, 292–297. [Google Scholar] [CrossRef]

- Watanabe, S.; Rost, B.R.; Camacho-Pérez, M.; Davis, M.W.; Söhl-Kielczynski, B.; Rosenmund, C.; Jorgensen, E.M. Ultrafast endocytosis at mouse hippocampal synapses. Nature 2013, 504, 242–247. [Google Scholar] [CrossRef] [PubMed]

- Zheng, L.; de Polavieja, G.G.; Wolfram, V.; Asyali, M.H.; Hardie, R.C.; Juusola, M. Feedback Network Controls Photoreceptor Output at the Layer of First Visual Synapses in Drosophila . J. Gen. Physiol. 2006, 127, 495–510. [Google Scholar] [CrossRef] [PubMed]

- Shusterman, R.; Smear, M.C.; Koulakov, A.A.; Rinberg, D. Precise olfactory responses tile the sniff cycle. Nat. Neurosci. 2011, 14, 1039–1044.

- Smear, M.; Shusterman, R.; O'Connor, R.; Bozza, T.; Rinberg, D. Perception of sniff phase in mouse olfaction. Nature 2011, 479, 397–400.

- Bush, N.E.; Solla, S.A.; Hartmann, M.J. Whisking mechanics and active sensing. Curr. Opin. Neurobiol. 2016, 40, 178–188.

- Daghfous, G.; Smargiassi, M.; Libourel, P.-A.; Wattiez, R.; Bels, V. The Function of Oscillatory Tongue-Flicks in Snakes: Insights from Kinematics of Tongue-Flicking in the Banded Water Snake (Nerodia fasciata). Chem. Senses 2012, 37, 883–896.

- van Hateren, J.H.; Schilstra, C. Blowfly flight and optic flow. II. Head movements during flight. J. Exp. Biol. 1999, 202, 1491–1500.

- Schütz, A.C.; Braun, D.I.; Gegenfurtner, K.R. Eye movements and perception: A selective review. J. Vis. 2011, 11, 9.

- Land, M. Eye movements in man and other animals. Vis. Res. 2019, 162, 1–7.

- Davies, A.; Louis, M.; Webb, B. A Model of Drosophila Larva Chemotaxis. PLOS Comput. Biol. 2015, 11, e1004606.

- Gomez-Marin, A.; Stephens, G.J.; Louis, M. Active sampling and decision making in Drosophila chemotaxis. Nat. Commun. 2011, 2, 441.

- Schöneich, S.; Hedwig, B. Hyperacute Directional Hearing and Phonotactic Steering in the Cricket (Gryllus bimaculatus deGeer). PLOS ONE 2010, 5, e15141.

- Zheng, L.; Nikolaev, A.; Wardill, T.J.; O'Kane, C.J.; de Polavieja, G.G.; Juusola, M. Network Adaptation Improves Temporal Representation of Naturalistic Stimuli in Drosophila Eye: I Dynamics. PLOS ONE 2009, 4, e4307.

- Barlow, H.B. Possible principles underlying the transformations of sensory messages. In Sensory Communication; Rosenblith, W., Ed.; MIT Press, 1961; pp. 217–234.

- Darwin, C. On the Origin of Species by Means of Natural Selection, or the Preservation of Favoured Races in the Struggle for Life; John Murray: London, UK, 1859.

- Sheehan, M.J.; Tibbetts, E.A. Specialized Face Learning Is Associated with Individual Recognition in Paper Wasps. Science 2011, 334, 1272–1275.

- Miller, S.E.; Legan, A.W.; Henshaw, M.T.; Ostevik, K.L.; Samuk, K.; Uy, F.M.K.; Sheehan, M.J. Evolutionary dynamics of recent selection on cognitive abilities. Proc. Natl. Acad. Sci. 2020, 117, 3045–3052.

- Kacsoh, B.Z.; Lynch, Z.R.; Mortimer, N.T.; Schlenke, T.A. Fruit Flies Medicate Offspring After Seeing Parasites. Science 2013, 339, 947–950.

- Land, M.F. Visual acuity in insects. Annu. Rev. Entomol. 1997, 42, 147–177.

- Laughlin, S.B. The role of sensory adaptation in the retina. J. Exp. Biol. 1989, 146, 39–62.

- Laughlin, S.B.; de Ruyter van Steveninck, R.R.; Anderson, J.C. The metabolic cost of neural information. Nat. Neurosci. 1998, 1, 36–41.

- Schilstra, C.; van Hateren, J.H. Blowfly flight and optic flow. I. Thorax kinematics and flight dynamics. J. Exp. Biol. 1999, 202, 1481–1490.

- Fenk, L.M.; Avritzer, S.C.; Weisman, J.L.; Nair, A.; Randt, L.D.; Mohren, T.L.; Siwanowicz, I.; Maimon, G. Muscles that move the retina augment compound eye vision in Drosophila. Nature 2022, 612, 116–122.

- Guiraud, M.; Roper, M.; Chittka, L. High-Speed Videography Reveals How Honeybees Can Turn a Spatial Concept Learning Task Into a Simple Discrimination Task by Stereotyped Flight Movements and Sequential Inspection of Pattern Elements. Front. Psychol. 2018, 9, 1347.

- Nityananda, V.; Chittka, L.; Skorupski, P. Can Bees See at a Glance? J. Exp. Biol. 2014, 217, 1933–1939.

- Vasas, V.; Chittka, L. Insect-Inspired Sequential Inspection Strategy Enables an Artificial Network of Four Neurons to Estimate Numerosity. iScience 2018, 11, 85–92.

- Chittka, L.; Skorupski, P. Active vision: A broader comparative perspective is needed. Constr. Found. 2017, 13, 128–129.

- Sorribes, A.; Armendariz, B.G.; Lopez-Pigozzi, D.; Murga, C.; de Polavieja, G.G. The Origin of Behavioral Bursts in Decision-Making Circuitry. PLOS Comput. Biol. 2011, 7, e1002075.

- Exner, S. Die Physiologie der facettierten Augen von Krebsen und Insecten. 1891.

- Hardie, R.C.; Juusola, M. Phototransduction in Drosophila. Curr. Opin. Neurobiol. 2015, 34, 37–45.

- Song, Z.; Postma, M.; Billings, S.A.; Coca, D.; Hardie, R.C.; Juusola, M. Stochastic, Adaptive Sampling of Information by Microvilli in Fly Photoreceptors. Curr. Biol. 2012, 22, 1371–1380.

- Stavenga, D.G. Angular and spectral sensitivity of fly photoreceptors. II. Dependence on facet lens F-number and rhabdomere type in Drosophila. J. Comp. Physiol. A 2003, 189, 189–202.

- Juusola, M.; Hardie, R.C. Light Adaptation in Drosophila Photoreceptors. J. Gen. Physiol. 2000, 117, 27–42.

- Juusola, M.; Song, Z. How a fly photoreceptor samples light information in time. J. Physiol. 2017, 595, 5427–5437.

- Song, Z.; Juusola, M. Refractory Sampling Links Efficiency and Costs of Sensory Encoding to Stimulus Statistics. J. Neurosci. 2014, 34, 7216–7237.

- Song, Z.; Zhou, Y.; Feng, J.; Juusola, M. Multiscale ‘whole-cell’ models to study neural information processing – New insights from fly photoreceptor studies. J. Neurosci. Methods 2021, 357, 109156.

- Gonzalez-Bellido, P.T.; Wardill, T.J.; Juusola, M. Compound eyes and retinal information processing in miniature dipteran species match their specific ecological demands. Proc. Natl. Acad. Sci. 2011, 108, 4224–4229.

- Juusola, M. Linear and non-linear contrast coding in light-adapted blowfly photoreceptors. J. Comp. Physiol. A 1993, 172, 511–521.

- Ditchburn, R.W.; Ginsborg, B.L. Vision with a Stabilized Retinal Image. Nature 1952, 170, 36–37.

- Riggs, L.A.; Ratliff, F. The effects of counteracting the normal movements of the eye. J. Opt. Soc. Am. 1952, 42, 872–873.

- Geurten, B.R.H.; Jähde, P.; Corthals, K.; Göpfert, M.C. Saccadic body turns in walking Drosophila. Front. Behav. Neurosci. 2014, 8, 365.

- Borst, A. Drosophila's View on Insect Vision. Curr. Biol. 2009, 19, R36–R47.

- Franceschini, N.; Kirschfeld, K. Les phénomènes de pseudopupille dans l'œil composé de Drosophila. Kybernetik 1971, 9, 159–182.

- Woodgate, J.L.; Makinson, J.C.; Rossi, N.; Lim, K.S.; Reynolds, A.M.; Rawlings, C.J.; Chittka, L. Harmonic radar tracking reveals that honeybee drones navigate between multiple aerial leks. iScience 2021, 24, 102499.

- Schulte, P.; Zeil, J.; Stürzl, W. An insect-inspired model for acquiring views for homing. Biol. Cybern. 2019, 113, 439–451.

- Boeddeker, N.; Dittmar, L.; Stürzl, W.; Egelhaaf, M. The fine structure of honeybee head and body yaw movements in a homing task. Proc. R. Soc. B Biol. Sci. 2010, 277, 1899–1906.

- Kirschfeld, K. [Neural superposition eye]. Fortschr. Zool. 1973, 21, 229–257.

- Wardill, T.J.; List, O.; Li, X.; Dongre, S.; McCulloch, M.; Ting, C.-Y.; O'Kane, C.J.; Tang, S.; Lee, C.-H.; Hardie, R.C.; et al. Multiple Spectral Inputs Improve Motion Discrimination in the Drosophila Visual System. Science 2012, 336, 925–931.

- Song, B.-M.; Lee, C.-H. Toward a Mechanistic Understanding of Color Vision in Insects. Front. Neural Circuits 2018, 12, 16.

- Johnston, R.J.; Desplan, C. Stochastic Mechanisms of Cell Fate Specification that Yield Random or Robust Outcomes. Annu. Rev. Cell Dev. Biol. 2010, 26, 689–719.

- Juusola, M.; Hardie, R.C. Light adaptation in Drosophila photoreceptors: II. Rising temperature increases the bandwidth of reliable signaling. J. Gen. Physiol. 2001, 117, 27–42.

- Vasiliauskas, D.; Mazzoni, E.O.; Sprecher, S.G.; Brodetskiy, K.; Johnston, R.J., Jr.; Lidder, P.; Vogt, N.; Celik, A.; Desplan, C. Feedback from rhodopsin controls rhodopsin exclusion in Drosophila photoreceptors. Nature 2011, 479, 108–112.

- Dippé, M.A.Z.; Wold, E.H. Antialiasing through stochastic sampling. ACM SIGGRAPH Comput. Graph. 1985, 19, 69–78.

- Field, G.D.; Gauthier, J.L.; Sher, A.; Greschner, M.; Machado, T.A.; Jepson, L.H.; Shlens, J.; Gunning, D.E.; Mathieson, K.; Dabrowski, W.; et al. Functional connectivity in the retina at the resolution of photoreceptors. Nature 2010, 467, 673–677.

- Götz, K.G. Flight control in Drosophila by visual perception of motion. Kybernetik 1968, 4, 199–208.

- Hengstenberg, R. Das Augenmuskelsystem der Stubenfliege Musca domestica. Kybernetik 1971, 9, 56–77.

- Colonnier, F.; Manecy, A.; Juston, R.; Mallot, H.; Leitel, R.; Floreano, D.; Viollet, S. A small-scale hyperacute compound eye featuring active eye tremor: application to visual stabilization, target tracking, and short-range odometry. Bioinspir. Biomim. 2015, 10, 026002.

- Viollet, S.; Godiot, S.; Leitel, R.; Buss, W.; Breugnon, P.; Menouni, M.; Juston, R.; Expert, F.; Colonnier, F.; L'Eplattenier, G.; et al. Hardware Architecture and Cutting-Edge Assembly Process of a Tiny Curved Compound Eye. Sensors 2014, 14, 21702–21721.

- Talley, J.; Pusdekar, S.; Feltenberger, A.; Ketner, N.; Evers, J.; Liu, M.; Gosh, A.; Palmer, S.E.; Wardill, T.J.; Gonzalez-Bellido, P.T. Predictive saccades and decision making in the beetle-predating saffron robber fly. Curr. Biol. 2023, 33, 2912–2924.

- de Polavieja, G.G. Neuronal algorithms that detect the temporal order of events. Neural Comput. 2006, 18, 2102–2121.

- Hassenstein, B.; Reichardt, W. Systemtheoretische Analyse der Zeit-, Reihenfolgen- und Vorzeichenauswertung bei der Bewegungsperzeption des Rüsselkäfers Chlorophanus. Z. Naturforsch. B 1956, 11, 513–524.

- Barlow, H.B.; Levick, W.R. The mechanism of directionally selective units in rabbit's retina. J. Physiol. 1965, 178, 477–504.

- Grabowska, M.J.; Jeans, R.; Steeves, J.; van Swinderen, B. Oscillations in the central brain of Drosophila are phase locked to attended visual features. Proc. Natl. Acad. Sci. 2020, 117, 29925–29936.

- Tang, S.; Juusola, M. Intrinsic Activity in the Fly Brain Gates Visual Information during Behavioral Choices. PLOS ONE 2010, 5, e14455.

- Leung, A.; Cohen, D.; van Swinderen, B.; Tsuchiya, N. Integrated information structure collapses with anesthetic loss of conscious arousal in Drosophila melanogaster. PLOS Comput. Biol. 2021, 17, e1008722.

- Chiappe, M.E.; Seelig, J.D.; Reiser, M.B.; Jayaraman, V. Walking Modulates Speed Sensitivity in Drosophila Motion Vision. Curr. Biol. 2010, 20, 1470–1475.

- Maimon, G.; Straw, A.D.; Dickinson, M.H. Active flight increases the gain of visual motion processing in Drosophila. Nat. Neurosci. 2010, 13, 393–399.

- Maye, A.; Hsieh, C.-H.; Sugihara, G.; Brembs, B. Order in Spontaneous Behavior. PLOS ONE 2007, 2, e443.

- van Hateren, J.H. A Unifying Theory of Biological Function. Biol. Theory 2017, 12, 112–126.

- van Hateren, J.H. A theory of consciousness: computation, algorithm, and neurobiological realization. Biol. Cybern. 2019, 113, 357–372.

- Okray, Z.; Jacob, P.F.; Stern, C.; Desmond, K.; Otto, N.; Talbot, C.B.; Vargas-Gutierrez, P.; Waddell, S. Multisensory learning binds neurons into a cross-modal memory engram. Nature 2023, 617, 777–784.

- Juusola, M.; de Polavieja, G.G. The Rate of Information Transfer of Naturalistic Stimulation by Graded Potentials. J. Gen. Physiol. 2003, 122, 191–206.

- de Polavieja, G.G. Errors drive the evolution of biological signalling to costly codes. J. Theor. Biol. 2002, 214, 657–664.

- de Polavieja, G.G. Reliable biological communication with realistic constraints. Phys. Rev. E 2004, 70, 061910.

- Li, X.; Tayoun, A.A.; Song, Z.; Dau, A.; Rien, D.; Jaciuch, D.; Dongre, S.; Blanchard, F.; Nikolaev, A.; Zheng, L.; et al. Ca²⁺-Activated K⁺ Channels Reduce Network Excitability, Improving Adaptability and Energetics for Transmitting and Perceiving Sensory Information. J. Neurosci. 2019, 39, 7132–7154.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423, 623–656.

- Brenner, N.; Bialek, W.; de Ruyter van Steveninck, R.R. Adaptive Rescaling Maximizes Information Transmission. Neuron 2000, 26, 695–702.

- Maravall, M.; Petersen, R.S.; Fairhall, A.L.; Arabzadeh, E.; Diamond, M.E. Shifts in Coding Properties and Maintenance of Information Transmission during Adaptation in Barrel Cortex. PLOS Biol. 2007, 5, e19.

- Arganda, S.; Guantes, R.; de Polavieja, G.G. Sodium pumps adapt spike bursting to stimulus statistics. Nat. Neurosci. 2007, 10, 1467–1473.

- Laughlin, S.B. A Simple Coding Procedure Enhances a Neuron's Information Capacity. Z. Naturforsch. C 1981, 36, 910–912.

- van Hateren, J.H. A theory of maximizing sensory information. Biol. Cybern. 1992, 68, 23–29.

- Hopfield, J.J.; Brody, C.D. What is a moment? Transient synchrony as a collective mechanism for spatiotemporal integration. Proc. Natl. Acad. Sci. 2001, 98, 1282–1287.