Submitted: 02 September 2024

Posted: 04 September 2024


Abstract

Keywords:

1. Introduction

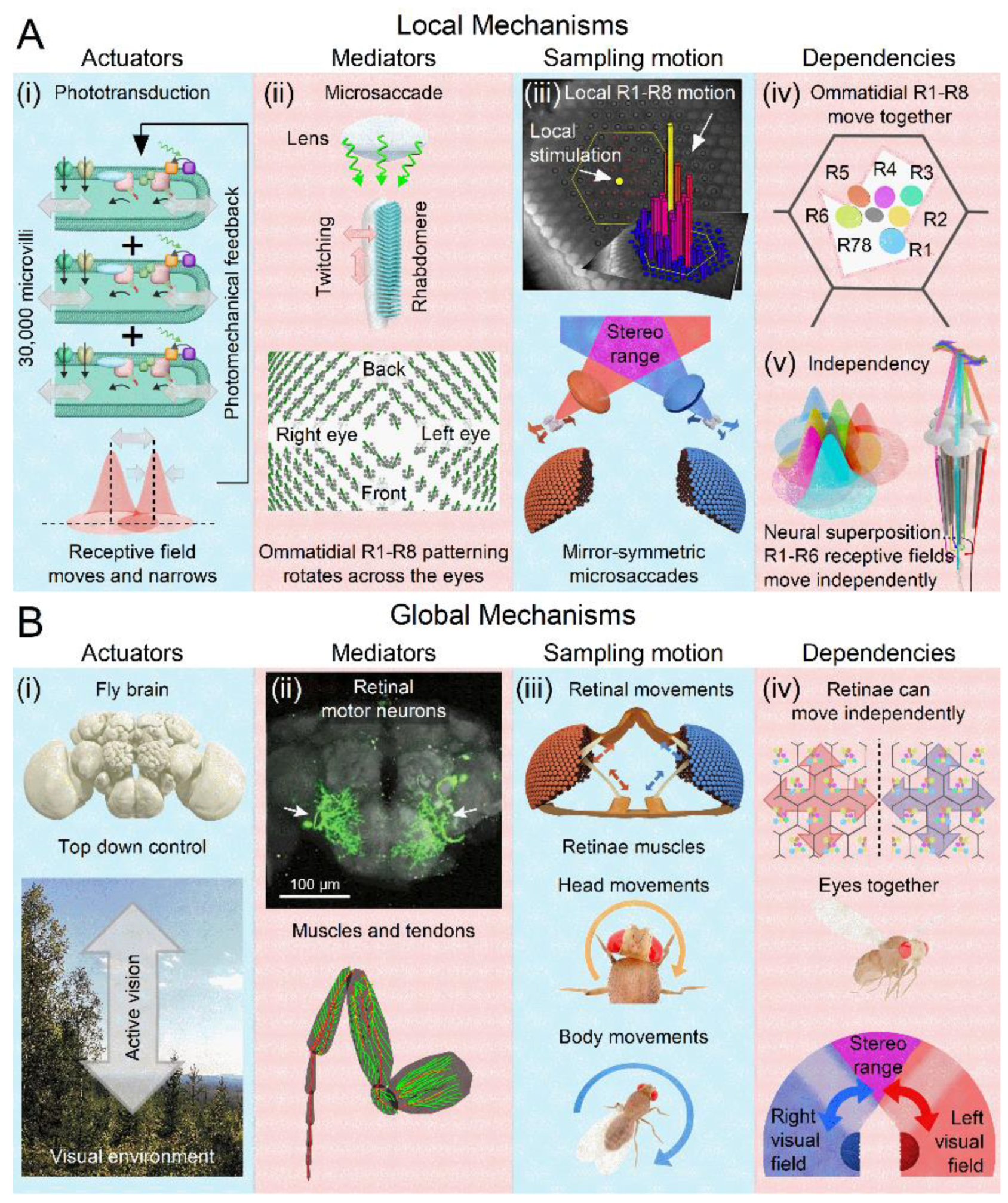

2. Sensing Requires Motion, and Moving Sensors Improve Sensing

- (i)

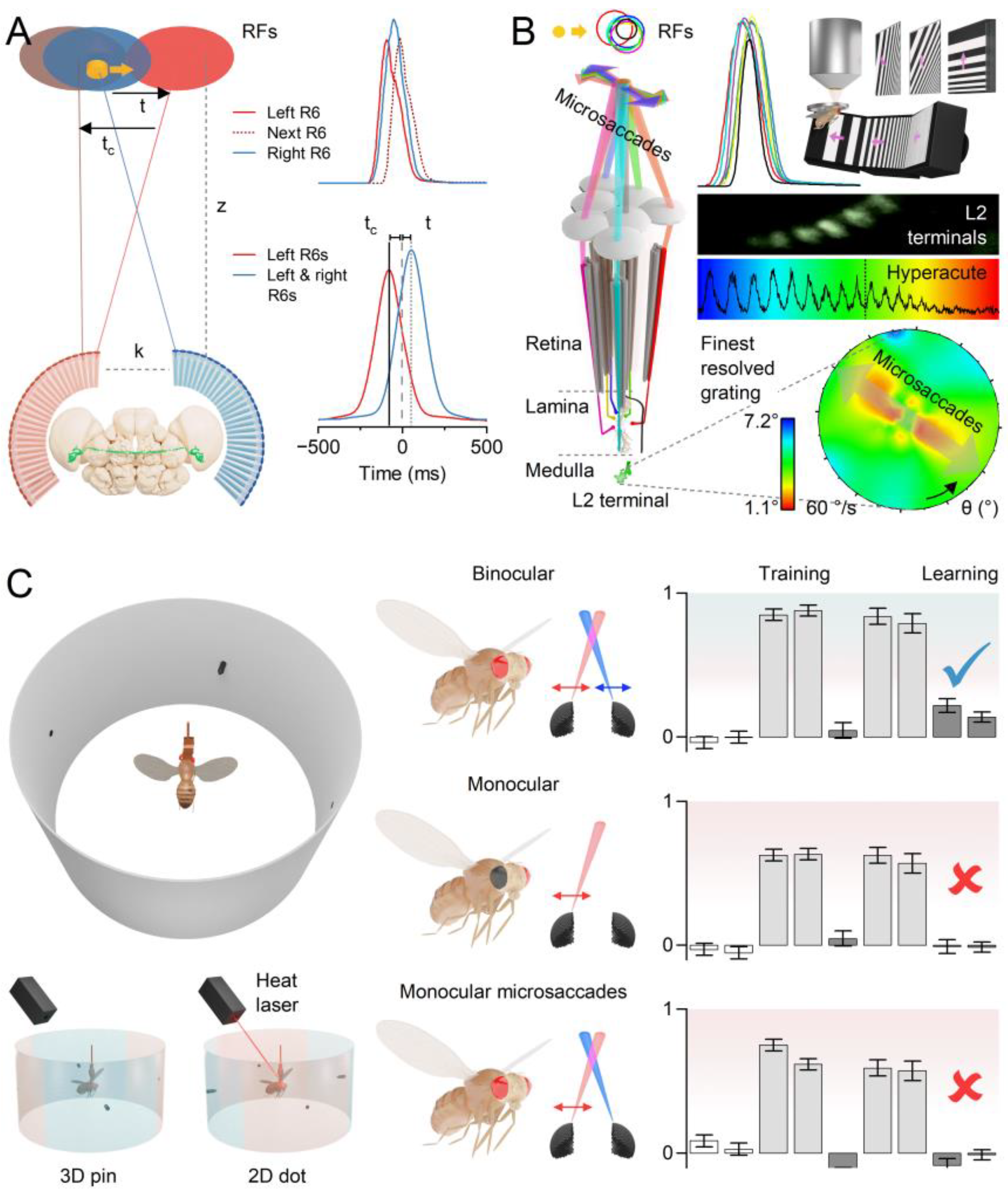

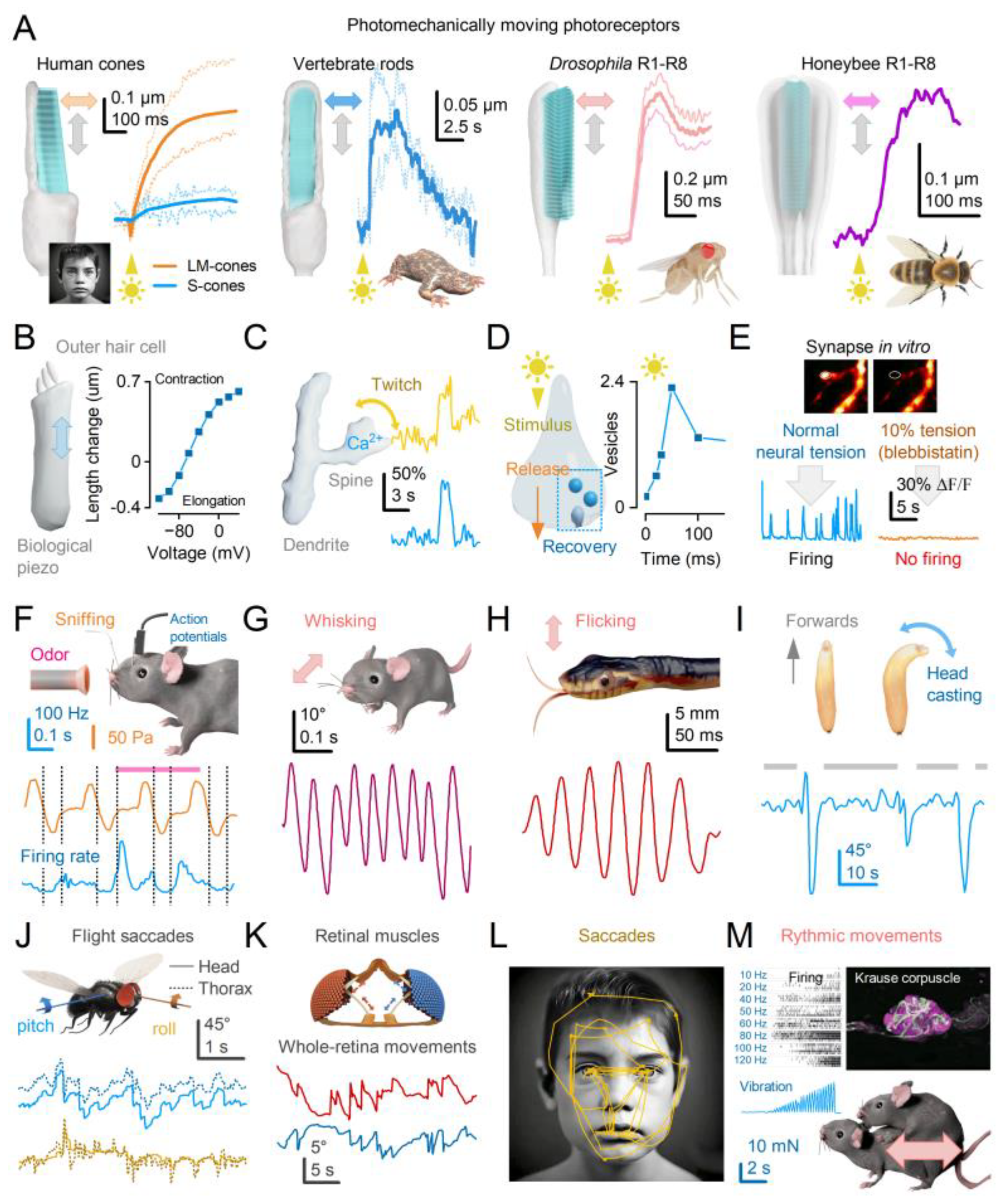

- Photomechanical Receptive Field Scanning Motion: Each photoreceptor’s microvillar phototransduction reactions generate rapid contractions in response to light intensity changes, causing the photoreceptor’s waveguide, the rhabdomere (containing 30,000 microvilli), to twitch[8,13,61] (grey double-headed arrows). These twitches create microsaccades that dynamically shift and narrow the photoreceptor’s receptive field[15] (red Gaussian). Unlike uniform, reflex-like contractions, microsaccades are actively regulated and continuously adjust photon sampling dynamics. This auto-regulation optimises photoreceptors’ receptive fields in response to environmental light changes to maximise information capture[13,14,15]. These dynamics rapidly adapt to ongoing light exposure, varying with both light intensity (dim vs bright conditions) and contrast type (positive for light increments, negative for light decrements)[13,14,15]. From a sampling theory perspective, photoreceptor microsaccades constitute a form of ultrafast, morphodynamic active sampling.

- (ii)

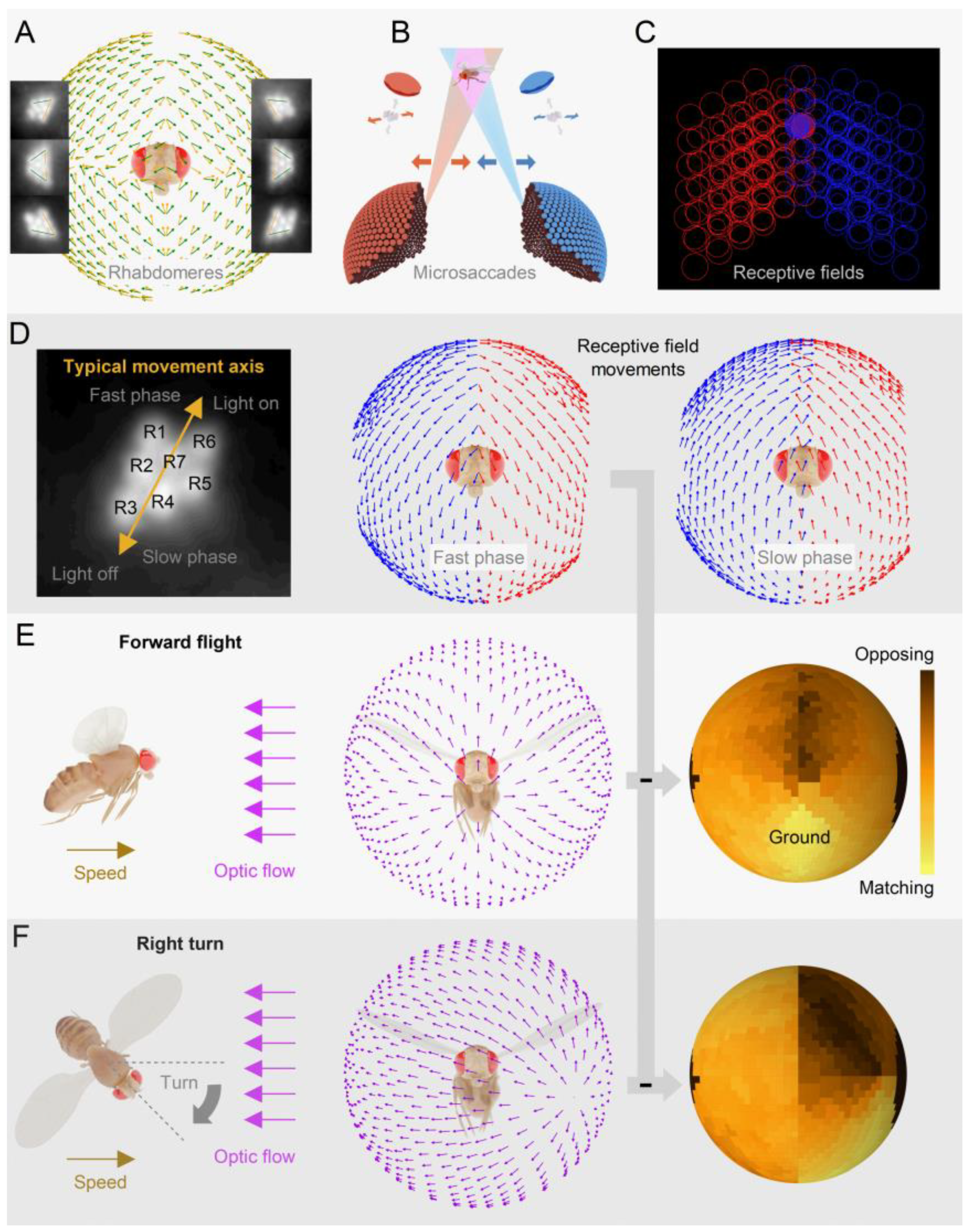

- Local Directionality: During a photomechanical microsaccade, photoreceptors contract axially (up-down arrow), moving away from the lens to narrow the receptive field while swinging sideways (left-right arrow) in a direction specific to their eye location, moving the receptive field[14,15]. These lateral movements are predetermined during development as the ommatidial R1-8 photoreceptor alignment gradually rotates across the eyes[14,15], forming a diamond shape (with green lines indicating the rotation axis).

- (iii) Insularity, Symmetry and Adaptability: Local pinhole light stimulation (yellow dot) triggers microsaccades only in the photoreceptors of the illuminated ommatidia (yellow and red bars), while the photoreceptors in neighbouring ommatidia (dark blue bars) remain still[15]. The left and right eyes show mirror-symmetric microsaccade directions[14,15], but the local microsaccades themselves are not uniform[13,14,15]: their speed and magnitude adapt to changes in ambient light, becoming faster and shorter in brighter environments, which indicates that they depend on light intensity[13,14,15].

- (iv) Collective Motion: Within an ommatidium, the movements of the R1-8 photoreceptors are interdependent. When one photoreceptor is activated by light, all R1-8 photoreceptors move together in the same direction[15]. This coordinated motion likely arises from the photoreceptors’ structural pivoting and from the linkage of their rhabdomere tips to the ommatidial cone cells via adherens junctions[13,62]. If all photoreceptors are activated, their combined microsaccades produce a larger collective movement[15]; a local UV light stimulus that activates all R1-6 photoreceptors and R7 evokes the largest microsaccades[15].

- (v) Asymmetry and Tiling: Because the R1-8 photoreceptors are arranged asymmetrically around the ommatidium lens, the receptive fields of R1-6 photoreceptors (coloured Gaussians), pooled from neighbouring ommatidia, tile the visual space over-completely[15,63] and move independently (correspondingly coloured arrows), in slightly different directions, during synaptic transmission to large monopolar cells[15].
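The scanning idea in (i) and (ii) can be illustrated with a minimal one-dimensional sketch. It assumes a peak-normalised Gaussian receptive field whose centre shifts by 1° and whose width σ narrows from 2° to 1.5° in equal steps during one microsaccade; these values and the function names are hypothetical choices for illustration, not measurements or code from the cited studies. Two point sources placed symmetrically on either side of the resting receptive-field centre evoke identical responses from a stationary Gaussian, but opposite-going responses while the receptive field moves, so the response time course carries positional information that a stationary receptive field discards.

```python
import math

def rf_sensitivity(x_deg, centre_deg, sigma_deg):
    """Peak-normalised Gaussian receptive-field sensitivity at angle x_deg."""
    return math.exp(-0.5 * ((x_deg - centre_deg) / sigma_deg) ** 2)

def microsaccade_response(sources_deg, steps=5, shift_deg=1.0,
                          sigma_start=2.0, sigma_end=1.5):
    """Summed response to point sources while the receptive field moves and narrows.

    Hypothetical model: the centre moves linearly from 0 to shift_deg and
    sigma narrows linearly from sigma_start to sigma_end over `steps` steps.
    Returns steps + 1 response values, one per time step.
    """
    trace = []
    for i in range(steps + 1):
        frac = i / steps
        centre = frac * shift_deg
        sigma = sigma_start + frac * (sigma_end - sigma_start)
        trace.append(sum(rf_sensitivity(s, centre, sigma) for s in sources_deg))
    return trace

# Two stimuli that a stationary receptive field cannot tell apart:
right = microsaccade_response([+1.0])  # source 1 degree to one side
left = microsaccade_response([-1.0])   # source 1 degree to the other side
print("start:", round(right[0], 3), round(left[0], 3))   # identical
print("end:  ", round(right[-1], 3), round(left[-1], 3))  # diverged
```

In this sketch the response to the source on the side towards which the receptive field moves rises monotonically, while the response to the mirror-image source falls, even though both start from the same value.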

- (i)

- (ii)

- (iii)

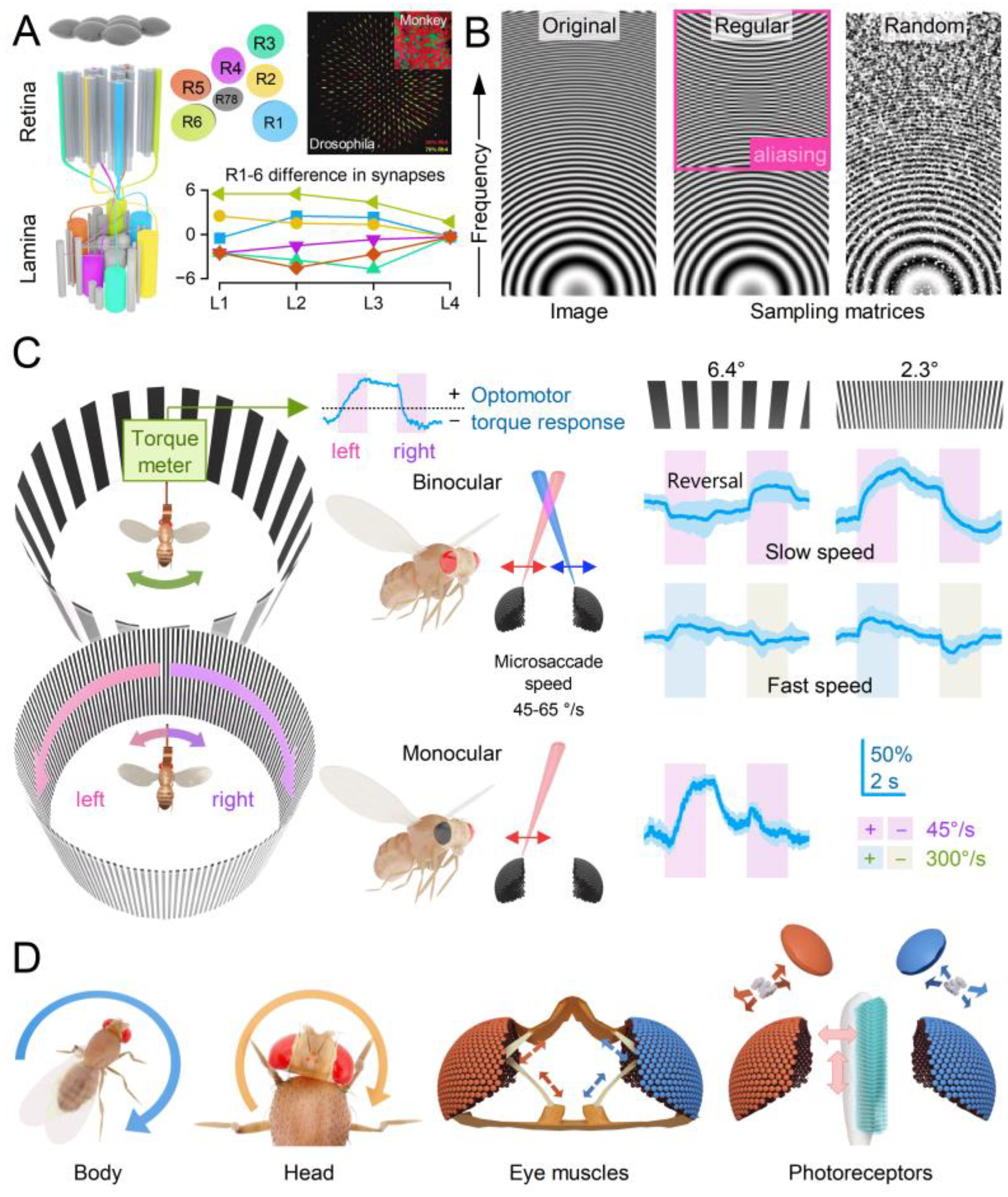

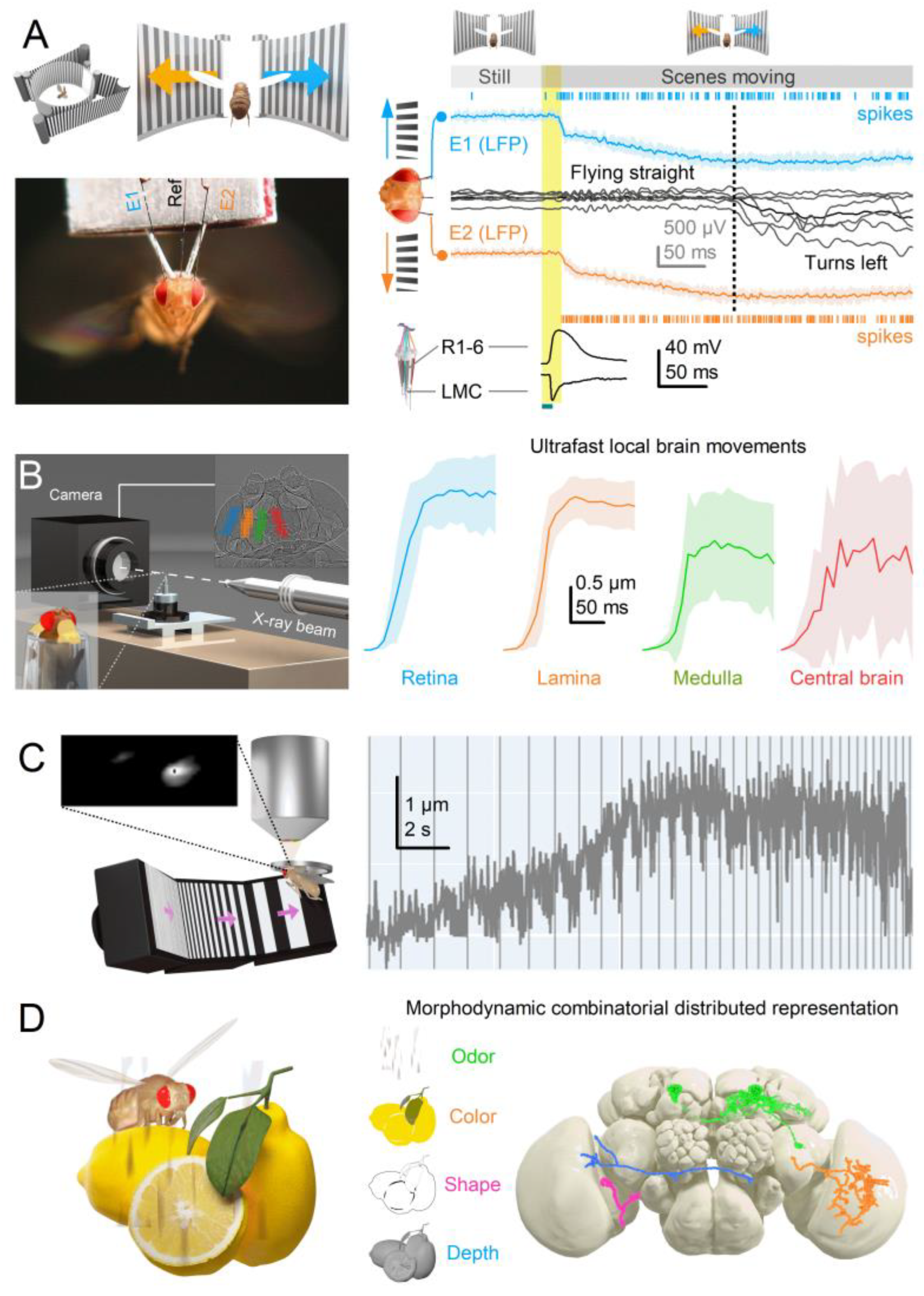

- Self-Motion-Induced Optic Flow: Retinal, head, and body movements, including saccades[33,67,68] and vergence[35,36,37], shift the entire retina, refreshing neural images across the visual field and preventing perceptual fading due to fast adaptation. The interplay between these global movements and (A) orientation-sensitive photoreceptor microsaccades generates complex spatiotemporal sampling dynamics. While microsaccades can independently enhance neural responses to local visual changes, whole retina movements rely on this interaction for full effectiveness. Each retinal movement alters the photoreceptor light input[15], with moving scenes triggering photomechanical microsaccades - rippling wave patterns synchronised with contrast changes - across the retina, except in complete darkness or uniform, zero-contrast environments.

- (iv) Coordinated Adjustments and Activity State: Retinal muscles enable the left and right retinae (illustrated by the left and right ommatidial matrices) to move independently[35] (red and blue four-headed arrows), providing precise control during attentive viewing[35,64,65], including optokinetic retinal tracking and other behaviours. For example, by pulling the retinae inward (convergence) or outward (divergence), these muscles can adjust the number of frontal photoreceptors involved in stereopsis, dynamically altering the eyes’ stereo range while preserving the integrity of the compound eyes’ lens surface and surrounding exoskeleton. Interestingly, whole-retina movements, driven by retinal motor neuron activity, are seldom observed in intact, fully immobilised, head-fixed Drosophila, such as during intracellular electrophysiological recordings or in vivo photoreceptor imaging[13,14,15]. Instead, slow retinal drift is occasionally seen, likely caused by changes in muscle tone affecting retinal tension, which necessitates recentring the light stimulus[13]. Whole-retina movements become more frequent and pronounced, however, when flies are less restricted and actively engaged in stimulus tracking or visual behaviours, such as during tethered flight or while walking on a trackball[35,36,37]. These findings align with previous observations from two-photon calcium imaging[69,70,71,72,73] and extracellular electrophysiology[64,66,74], which demonstrate that the fly’s behavioural state influences neural activity in the visual processing centres of the fly brain.
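How whole-retina movements can counter fading is sketched below with a toy model assumed purely for illustration: each photoreceptor subtracts an adaptation state that tracks its input as an exponential moving average (rate `alpha`), so a constant input yields a response that decays towards zero. The scene, the drift schedule, `alpha` and the function names are hypothetical. A static retina viewing a striped scene fades, a drifting retina keeps refreshing the response, and drifting over a uniform, zero-contrast scene fades as well, in line with the exception noted in (iii).

```python
def adapting_response(inputs, alpha=0.2):
    """Toy photoreceptor with fast subtractive adaptation (hypothetical model).

    The adaptation state starts at 0 (dark-adapted) and moves a fraction
    alpha towards the current input after every time step; the output is
    the input minus the adaptation state.
    """
    state = 0.0
    out = []
    for x in inputs:
        out.append(x - state)
        state += alpha * (x - state)
    return out

def view(scene, positions):
    """Luminance seen by one photoreceptor as the retina visits `positions`."""
    return [scene[p % len(scene)] for p in positions]

grating = [0.2, 1.0]                      # alternating dark/bright stripes
uniform = [0.6, 0.6]                      # zero-contrast scene
steps = 40
still = [1] * steps                       # retina fixed on a bright stripe
drift = [t // 5 for t in range(steps)]    # retina shifts one stripe every 5 steps

static_out = adapting_response(view(grating, still))
moving_out = adapting_response(view(grating, drift))
uniform_out = adapting_response(view(uniform, drift))

for name, out in [("static", static_out), ("moving", moving_out),
                  ("uniform", uniform_out)]:
    print(name, "late peak |response|:", round(max(abs(v) for v in out[-10:]), 3))
```

The subtractive moving-average adaptation is chosen only because it is the simplest mechanism that makes a constant input fade; it is not a model of Drosophila photoreceptor or retinal-muscle physiology.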

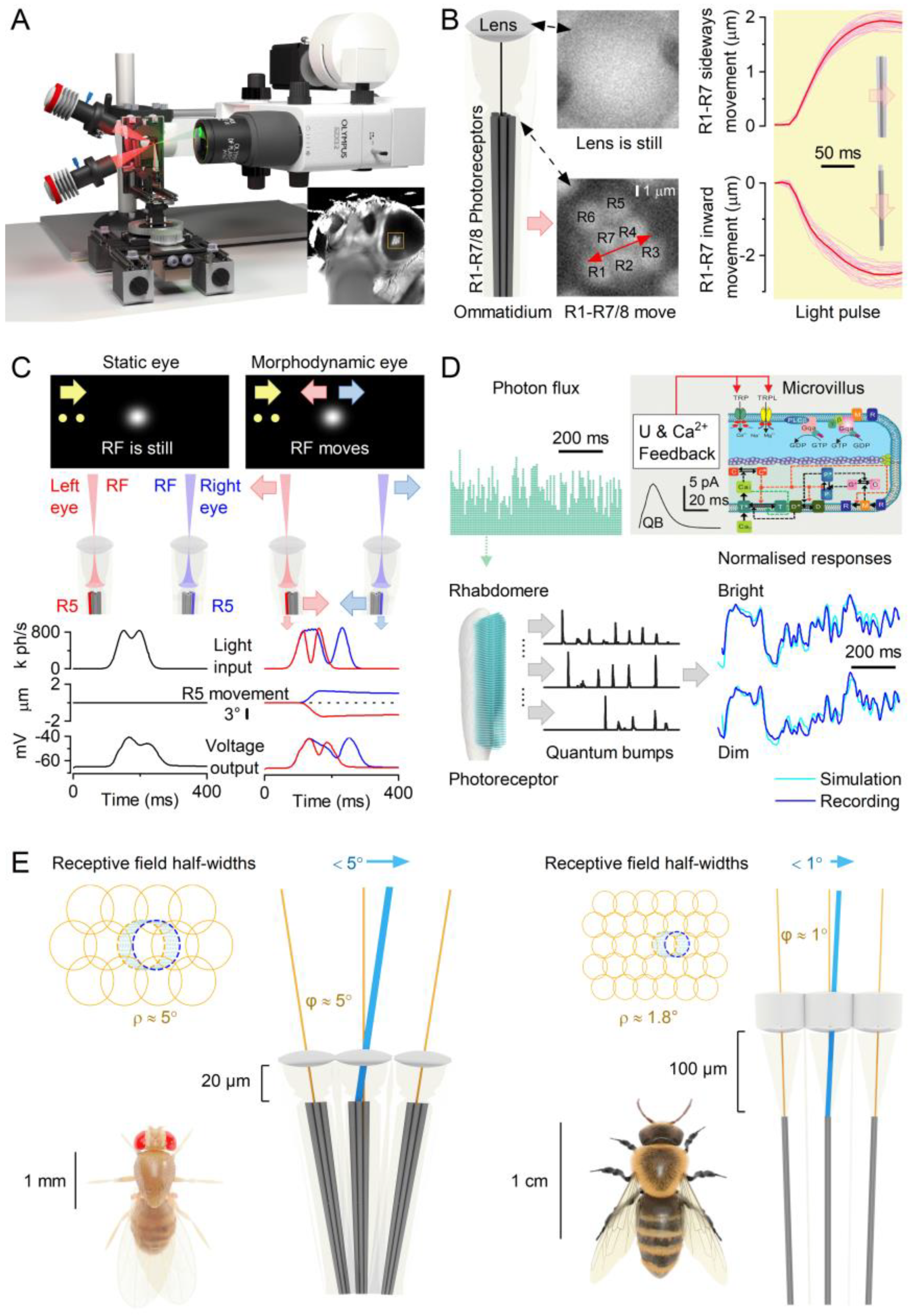

2.1. Photoreceptor Microsaccades Enhance Vision

2.2. Microsaccades Are Photomechanical Adaptations in Phototransduction

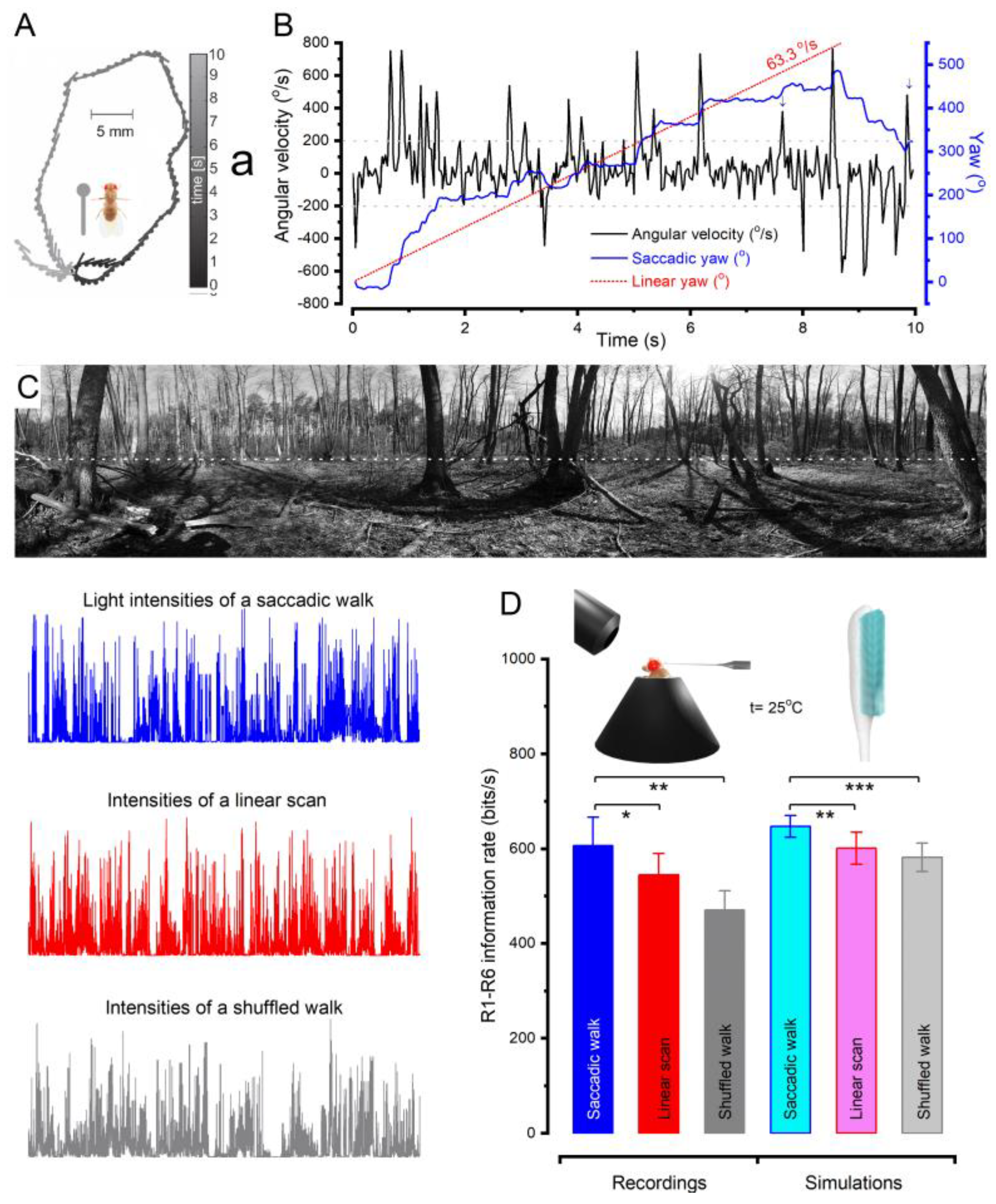

2.3. Microsaccades Maximise Information during Saccadic Behaviours

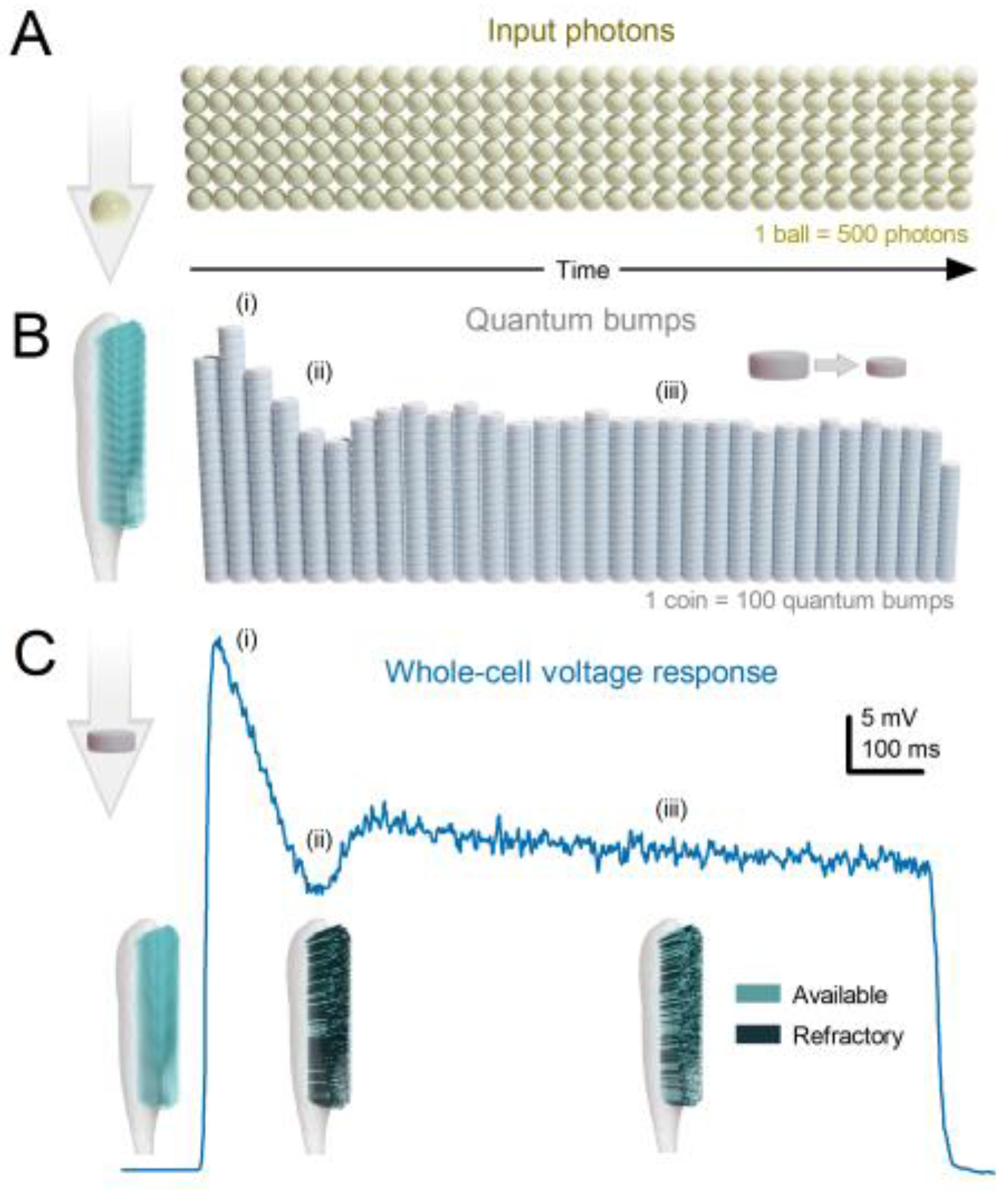

- Each microvillus can produce only one quantum bump at a time[76,91,92,93].

- After producing a quantum bump, a microvillus becomes refractory for up to 300 ms (in Drosophila R1-6 photoreceptors at 25°C) and cannot respond to other photons[91,94,95].

- Quantum bumps from all microvilli sum to form the macroscopic response[76,91,92,93,96].

- The availability of microvilli sets a photoreceptor’s maximum sample rate (quantum bump production rate), adapting its macroscopic response to a light stimulus[76,93].

- Global Ca2+ accumulation and membrane voltage affect the samples of all microvilli. These global feedbacks strengthen as light brightens, reducing the size and duration of quantum bumps and thereby adapting the macroscopic response[78,88,97,98].
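The sampling rules above can be sketched as a small stochastic simulation. This is a simplified illustration under stated assumptions, not the published refractory-sampling model: photons arrive as a Poisson process, each hits a uniformly random microvillus, the refractory period is fixed at a hypothetical 100 ms (in real microvilli it varies), and 1,000 microvilli stand in for about 30,000 to keep the run fast. The global Ca2+ and voltage feedbacks of the last rule, which shrink bumps, are omitted, so only bump counts are tracked. Under these assumptions the maximum sample rate is `n_microvilli / refractory_ms` = 10 bumps/ms, and a bright light step produces a large initial burst of bumps that then settles as microvilli become refractory.

```python
import random

def refractory_sampling(photon_rate, n_microvilli=1000, refractory_ms=100.0,
                        duration_ms=400, seed=0):
    """Quantum bumps per 1-ms bin for a constant light stimulus (hypothetical model).

    photon_rate: mean photon absorptions per ms (Poisson arrivals).
    A photon that hits a non-refractory microvillus produces one bump and
    makes that microvillus refractory for refractory_ms; photons that hit a
    refractory microvillus are lost.
    """
    rng = random.Random(seed)
    ready_at = [0.0] * n_microvilli   # time each microvillus is available again
    bumps = [0] * duration_ms
    t = rng.expovariate(photon_rate)  # arrival time of the first photon (ms)
    while t < duration_ms:
        mv = rng.randrange(n_microvilli)       # photon lands on a random microvillus
        if t >= ready_at[mv]:
            bumps[int(t)] += 1                 # one quantum bump per available microvillus
            ready_at[mv] = t + refractory_ms   # then it ignores photons for a while
        t += rng.expovariate(photon_rate)      # exponential inter-arrival interval
    return bumps

def mean_rate(bumps, start_ms):
    """Mean bumps per ms from start_ms to the end of the record."""
    tail = bumps[start_ms:]
    return sum(tail) / len(tail)

dim = refractory_sampling(photon_rate=0.5)
bright = refractory_sampling(photon_rate=300)
print("dim:    mean sample rate", round(mean_rate(dim, 0), 3), "bumps/ms")
print("bright: first ms", bright[0], "bumps; later mean",
      round(mean_rate(bright, 200), 2), "bumps/ms (ceiling 10)")
```

In dim light almost every absorbed photon yields a bump, whereas in bright light most photons hit refractory microvilli, so the sample rate is capped by microvillus availability rather than by photon supply.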

2.4. Left and Right Eyes’ Mirror-Symmetric Microsaccades Phase-Enhance Moving Objects

2.5. Mirror-Symmetric Microsaccades Enable Hyperacute Stereovision

2.6. Microsaccade Variability Combats Aliasing

2.7. Multiple Layers of Active Sampling vs Simple Motion Detection Models

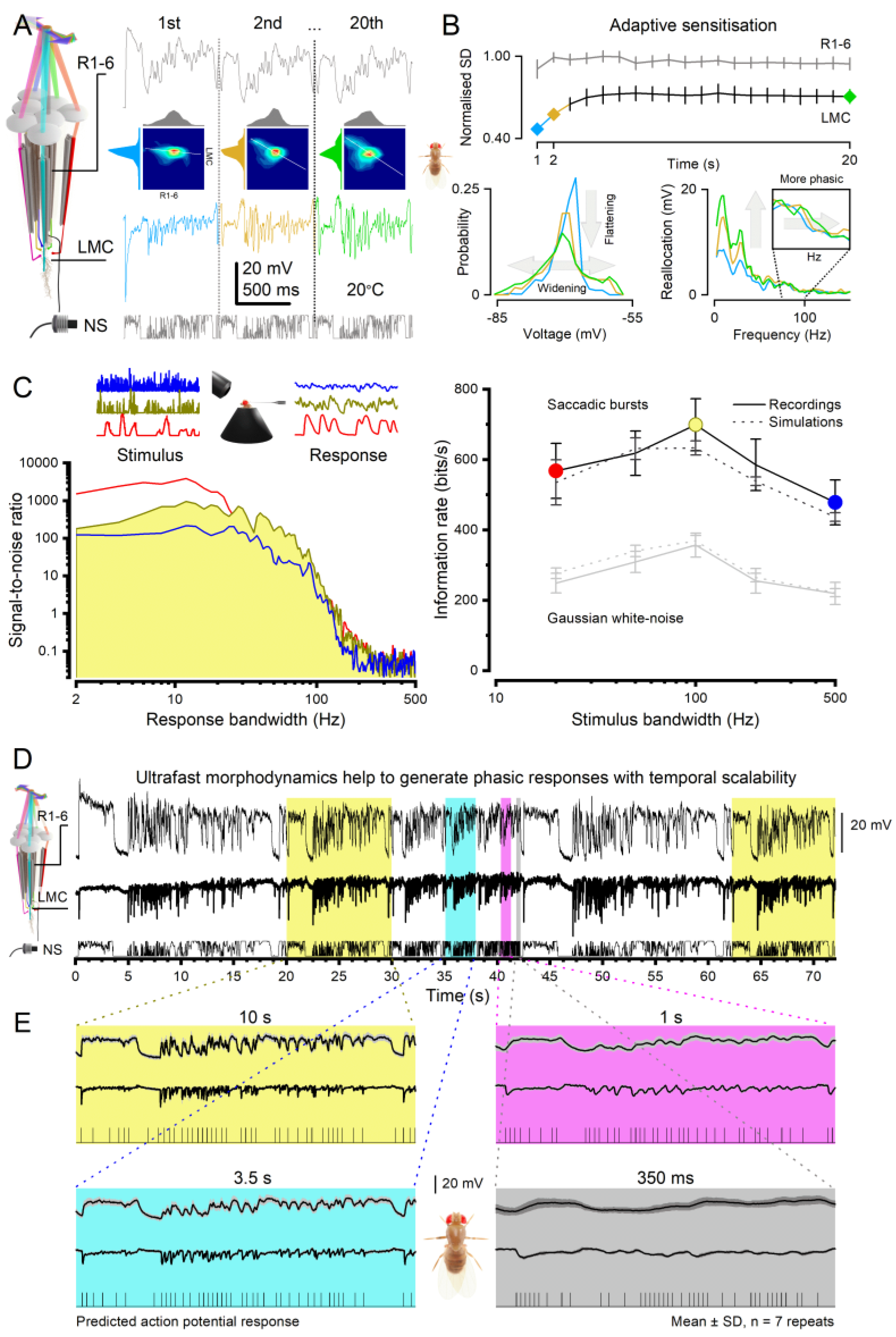

3. Benefits of Neural Morphodynamics

3.1. Efficient Neural Encoding of Reliable Representations across Wide Dynamic Range

3.2. Predictive Coding and Minimal Neural Delays

3.3. Anti-Aliasing and Robust Communication

3.4. Efficiency of Encoding Space in Time

3.5. Expanding Dimensionality in Encoding Space for Cognitive Proficiency

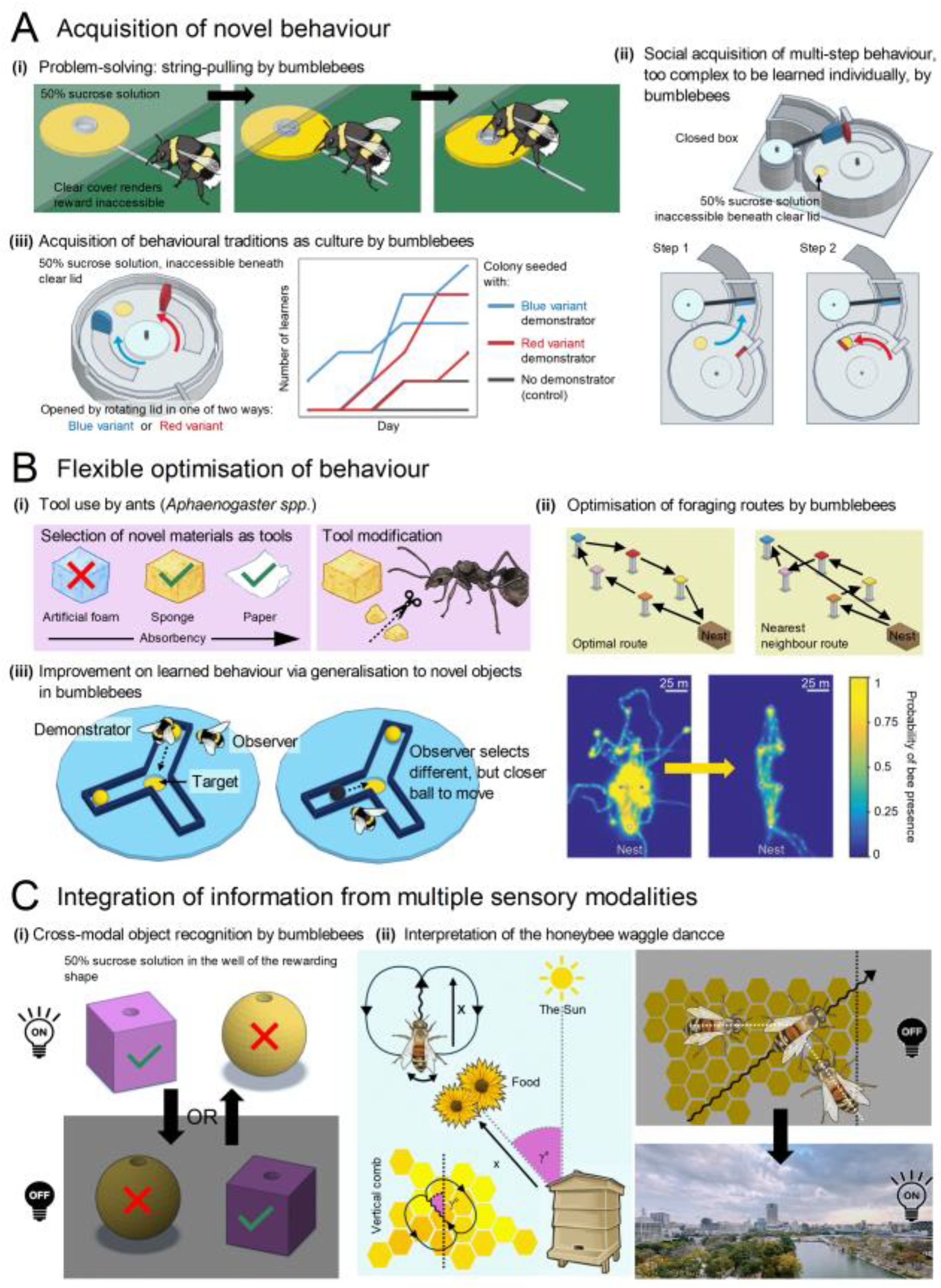

- i. Bumblebees can learn to pull strings to obtain out-of-reach rewards, both through individual learning and through social transmission[188].

- ii. Bumblebees can socially learn a complex two-step behaviour that they cannot learn individually, a capability previously thought to be exclusive to humans and to underlie our species’ cumulative culture[189].

- iii. In laboratory settings, bumblebees can acquire local variants of novel behaviours as a form of culture, even though such behaviours are not observed in the wild[190].

- i. Ants (Aphaenogaster spp.) select and modify tools based on their soaking properties and the viscosity of food sources. They not only learn to use novel objects, such as sponges and paper, as tools but also modify these objects by tearing them into smaller, more manageable pieces[191,192].

- ii. Bumblebees optimise their foraging routes between multiple locations, effectively solving the “travelling salesman problem” by reducing flight distance and duration with experience[193].

- iii. Bumblebees can be trained to roll a ball to a marked location for a reward. After observing knowledgeable conspecifics, they not only learn this behaviour but also generalise it to novel balls, preferring the more efficient option even when it differed from the one used by their conspecifics[194].

- i. Bumblebees (Bombus terrestris) can recognise three-dimensional objects, such as spheres and cubes, by touch when they have only seen them, and by sight when they have only touched them[155].

- ii. Honeybees (Apis mellifera) can interpret the waggle dance of successful foragers in darkness by detecting the dancer’s movements with their antennae. They then translate these movements into an accurate flight vector encoding distance and direction relative to the sun[195].

4. Future Avenues of Research

4.1. Investigating the Integration of Ultrafast Morphodynamics Changes

4.2. Genetically Enhancing Signalling Performance and Speed

4.3. Neural Activity Synchronisation

4.4. Perception Enhancement

5. Conclusion and Future Outlook

Author Contributions

Acknowledgments

Declaration of interests

References

- Eichler, K., Li, F., Litwin-Kumar, A., Park, Y., Andrade, I., Schneider-Mizell, C.M., Saumweber, T., Huser, A., Eschbach, C., Gerber, B., et al. (2017). The complete connectome of a learning and memory centre in an insect brain. Nature 548, 175-182. [CrossRef]

- Oh, S.W., Harris, J.A., Ng, L., Winslow, B., Cain, N., Mihalas, S., Wang, Q.X., Lau, C., Kuan, L., Henry, A.M., et al. (2014). A mesoscale connectome of the mouse brain. Nature 508, 207-214. [CrossRef]

- Rivera-Alba, M., Vitaladevuni, S.N., Mischenko, Y., Lu, Z.Y., Takemura, S.Y., Scheffer, L., Meinertzhagen, I.A., Chklovskii, D.B., and de Polavieja, G.G. (2011). Wiring economy and volume exclusion determine neuronal placement in the Drosophila brain. Curr Biol 21, 2000-2005. [CrossRef]

- Winding, M., Pedigo, B.D., Barnes, C.L., Patsolic, H.G., Park, Y., Kazimiers, T., Fushiki, A., Andrade, I.V., Khandelwal, A., Valdes-Aleman, J., et al. (2023). The connectome of an insect brain. Science 379, 995-1013. ARTN eadd9330. [CrossRef]

- Meinertzhagen, I.A., and O’Neil, S.D. (1991). Synaptic organization of columnar elements in the lamina of the wild type in Drosophila melanogaster. J Comp Neurol 305, 232-263. [CrossRef]

- Hudspeth, A.J. (2008). Making an effort to listen: mechanical amplification in the ear. Neuron 59, 530-545. [CrossRef]

- Pandiyan, V.P., Maloney-Bertelli, A., Kuchenbecker, J.A., Boyle, K.C., Ling, T., Chen, Z.C., Park, B.H., Roorda, A., Palanker, D., and Sabesan, R. (2020). The optoretinogram reveals the primary steps of phototransduction in the living human eye. Sci Adv 6. [CrossRef]

- Hardie, R.C., and Franze, K. (2012). Photomechanical responses in Drosophila photoreceptors. Science 338, 260-263. [CrossRef]

- Bocchero, U., Falleroni, F., Mortal, S., Li, Y., Cojoc, D., Lamb, T., and Torre, V. (2020). Mechanosensitivity is an essential component of phototransduction in vertebrate rods. PLoS Biol 18, e3000750. [CrossRef]

- Korkotian, E., and Segal, M. (2001). Spike-associated fast contraction of dendritic spines in cultured hippocampal neurons. Neuron 30, 751-758. [CrossRef]

- Majewska, A., and Sur, M. (2003). Motility of dendritic spines in visual cortex in vivo: changes during the critical period and effects of visual deprivation. Proc Natl Acad Sci U S A 100, 16024-16029. [CrossRef]

- Crick, F. (1982). Do dendritic spines twitch? Trends in Neurosciences 5, 44-46.

- Juusola, M., Dau, A., Song, Z., Solanki, N., Rien, D., Jaciuch, D., Dongre, S.A., Blanchard, F., de Polavieja, G.G., Hardie, R.C., and Takalo, J. (2017). Microsaccadic sampling of moving image information provides Drosophila hyperacute vision. Elife 6. [CrossRef]

- Kemppainen, J., Mansour, N., Takalo, J., and Juusola, M. (2022). High-speed imaging of light-induced photoreceptor microsaccades in compound eyes. Commun Biol 5, 203. [CrossRef]

- Kemppainen, J., Scales, B., Razban Haghighi, K., Takalo, J., Mansour, N., McManus, J., Leko, G., Saari, P., Hurcomb, J., Antohi, A., et al. (2022). Binocular mirror-symmetric microsaccadic sampling enables Drosophila hyperacute 3D vision. Proc Natl Acad Sci U S A 119, e2109717119. [CrossRef]

- Senthilan, P.R., Piepenbrock, D., Ovezmyradov, G., Nadrowski, B., Bechstedt, S., Pauls, S., Winkler, M., Mobius, W., Howard, J., and Gopfert, M.C. (2012). Drosophila auditory organ genes and genetic hearing defects. Cell 150, 1042-1054. [CrossRef]

- Reshetniak, S., and Rizzoli, S.O. (2021). The vesicle cluster as a major organizer of synaptic composition in the short-term and long-term. Curr Opin Cell Biol 71, 63-68. [CrossRef]

- Reshetniak, S., Ussling, J.E., Perego, E., Rammner, B., Schikorski, T., Fornasiero, E.F., Truckenbrodt, S., Koster, S., and Rizzoli, S.O. (2020). A comparative analysis of the mobility of 45 proteins in the synaptic bouton. Embo J 39. ARTN e104596. [CrossRef]

- Rusakov, D.A., Savtchenko, L.P., Zheng, K.Y., and Henley, J.M. (2011). Shaping the synaptic signal: molecular mobility inside and outside the cleft. Trends Neurosci 34, 359-369. [CrossRef]

- Juusola, M., Uusitalo, R.O., and Weckstrom, M. (1995). Transfer of graded potentials at the photoreceptor-interneuron synapse. J Gen Physiol 105, 117-148. [CrossRef]

- Fettiplace, R., Crawford, A.C., and Kennedy, H.J. (2006). Signal transformation by mechanotransducer channels of mammalian outer hair cells. Auditory Mechanisms: Processes and Models, 245-253. [CrossRef]

- Kennedy, H.J., Crawford, A.C., and Fettiplace, R. (2005). Force generation by mammalian hair bundles supports a role in cochlear amplification. Nature 433, 880-883. [CrossRef]

- Juusola, M., French, A.S., Uusitalo, R.O., and Weckstrom, M. (1996). Information processing by graded-potential transmission through tonically active synapses. Trends Neurosci 19, 292-297. [CrossRef]

- Watanabe, S., Rost, B.R., Camacho-Perez, M., Davis, M.W., Sohl-Kielczynski, B., Rosenmund, C., and Jorgensen, E.M. (2013). Ultrafast endocytosis at mouse hippocampal synapses. Nature 504, 242-247. [CrossRef]

- Zheng, L., de Polavieja, G.G., Wolfram, V., Asyali, M.H., Hardie, R.C., and Juusola, M. (2006). Feedback network controls photoreceptor output at the layer of first visual synapses in Drosophila. J Gen Physiol 127, 495-510. [CrossRef]

- Joy, M.S.H., Nall, D.L., Emon, B., Lee, K.Y., Barishman, A., Ahmed, M., Rahman, S., Selvin, P.R., and Saif, M.T.A. (2023). Synapses without tension fail to fire in an in vitro network of hippocampal neurons. Proc Natl Acad Sci U S A 120, e2311995120. [CrossRef]

- Shusterman, R., Smear, M.C., Koulakov, A.A., and Rinberg, D. (2011). Precise olfactory responses tile the sniff cycle. Nat Neurosci 14, 1039-1044. [CrossRef]

- Smear, M., Shusterman, R., O’Connor, R., Bozza, T., and Rinberg, D. (2011). Perception of sniff phase in mouse olfaction. Nature 479, 397-400. [CrossRef]

- Bush, N.E., Solla, S.A., and Hartmann, M.J.Z. (2016). Whisking mechanics and active sensing. Curr Opin Neurobiol 40, 178-186. [CrossRef]

- Daghfous, G., Smargiassi, M., Libourel, P.A., Wattiez, R., and Bels, V. (2012). The function of oscillatory tongue-flicks in snakes: insights from kinematics of tongue-flicking in the banded water snake (Nerodia fasciata). Chem Senses 37, 883-896. [CrossRef]

- Davies, A., Louis, M., and Webb, B. (2015). A model of Drosophila larva chemotaxis. Plos Comp Biol 11. ARTN e1004606. [CrossRef]

- Gomez-Marin, A., Stephens, G.J., and Louis, M. (2011). Active sampling and decision making in Drosophila chemotaxis. Nature Comm 2. ARTN 441. [CrossRef]

- van Hateren, J.H., and Schilstra, C. (1999). Blowfly flight and optic flow II. Head movements during flight. J Exp Biol 202, 1491-1500.

- Land, M. (2019). Eye movements in man and other animals. Vis Res 162, 1-7. [CrossRef]

- Fenk, L.M., Avritzer, S.C., Weisman, J.L., Nair, A., Randt, L.D., Mohren, T.L., Siwanowicz, I., and Maimon, G. (2022). Muscles that move the retina augment compound eye vision in Drosophila. Nature 612, 116-122. [CrossRef]

- Franceschini, N., Chagneux, R., and Kirschfeld, K. (1995). Gaze control in flies by co-ordinated action of eye muscles.

- Franceschini, N. (1998). Combined optical neuroanatomical, electrophysiological and behavioural studies on signal processing in the fly compound eye. In Biocybernetics of Vision: Integrative Mechanisms and Cognitive Processes, C. Taddei-Ferretti, ed. (World Scientific), pp. 341-361.

- Schutz, A.C., Braun, D.I., and Gegenfurtner, K.R. (2011). Eye movements and perception: a selective review. J Vis 11. [CrossRef]

- Rucci, M., Iovin, R., Poletti, M., and Santini, F. (2007). Miniature eye movements enhance fine spatial detail. Nature 447, 851-854. [CrossRef]

- Casile, A., Victor, J.D., and Rucci, M. (2019). Contrast sensitivity reveals an oculomotor strategy for temporally encoding space. Elife 8. ARTN e40924. [CrossRef]

- Intoy, J., Li, Y.H., Bowers, N.R., Victor, J.D., Poletti, M., and Rucci, M. (2024). Consequences of eye movements for spatial selectivity. Current Biology 34. [CrossRef]

- Rucci, M., and Victor, J.D. (2015). The unsteady eye: an information-processing stage, not a bug. Trends in Neurosciences 38, 195-206. [CrossRef]

- Qi, L.J., Iskols, M., Greenberg, R.S., Xiao, J.Y., Handler, A., Liberles, S.D., and Ginty, D.D. (2024). Krause corpuscles are genital vibrotactile sensors for sexual behaviours. Nature 630. [CrossRef]

- Schoneich, S., and Hedwig, B. (2010). Hyperacute directional hearing and phonotactic steering in the cricket (Gryllus bimaculatus deGeer). Plos One 5. ARTN e15141. [CrossRef]

- Zheng, L., Nikolaev, A., Wardill, T.J., O’Kane, C.J., de Polavieja, G.G., and Juusola, M. (2009). Network adaptation improves temporal representation of naturalistic stimuli in Drosophila eye: I dynamics. PLoS One 4, e4307. [CrossRef]

- Barlow, H.B. (1961). Possible principles underlying the transformations of sensory messages. In Sensory Communication, W. Rosenblith, ed. (M.I.T. Press), pp. 217-234.

- Darwin, C. (1859). On the origin of species by means of natural selection, or the preservation of favoured races in the struggle for life (John Murray).

- Sheehan, M.J., and Tibbetts, E.A. (2011). Specialized face learning is associated with individual recognition in paper wasps. Science 334, 1272-1275. [CrossRef]

- Miller, S.E., Legan, A.W., Henshaw, M.T., Ostevik, K.L., Samuk, K., Uy, F.M.K., and Sheehan, M.J. (2020). Evolutionary dynamics of recent selection on cognitive abilities. Proc Natl Acad Sci U S A 117, 3045-3052. [CrossRef]

- Kacsoh, B.Z., Lynch, Z.R., Mortimer, N.T., and Schlenke, T.A. (2013). Fruit flies medicate offspring after seeing parasites. Science 339, 947-950. [CrossRef]

- Nöbel, S., Monier, M., Villa, D., Danchin, E., and Isabel, G. (2022). 2-D sex images elicit mate copying in fruit flies. Sci Rep-Uk 22. [CrossRef]

- Land, M.F. (1997). Visual acuity in insects. Ann Rev Entomol 42, 147-177. [CrossRef]

- Laughlin, S.B. (1989). The role of sensory adaptation in the retina. J Exp Biol 146, 39-62.

- Laughlin, S.B., van Steveninck, R.R.D., and Anderson, J.C. (1998). The metabolic cost of neural information. Nat Neurosci 1, 36-41. [CrossRef]

- Schilstra, C., and Van Hateren, J.H. (1999). Blowfly flight and optic flow I. Thorax kinematics and flight dynamics. J Exp Biol 202, 1481-1490.

- Guiraud, M., Roper, M., and Chittka, L. (2018). High-speed videography reveals how honeybees can turn a spatial concept learning task into a simple discrimination task by stereotyped flight movements and sequential inspection of pattern elements. Front Psychol 9. [CrossRef]

- Nityananda, V., Skorupski, P., and Chittka, L. (2014). Can bees see at a glance? J Exp Biol 217, 1933-1939. [CrossRef]

- Vasas, V., and Chittka, L. (2019). Insect-inspired sequential inspection strategy enables an artificial network of four neurons to estimate numerosity. Iscience 11, 85-92. [CrossRef]

- Chittka, L., and Skorupski, P. (2017). Active vision: A broader comparative perspective is needed. Constr Found 13, 128-129.

- Sorribes, A., Armendariz, B.G., Lopez-Pigozzi, D., Murga, C., and de Polavieja, G.G. (2011). The origin of behavioral bursts in decision-making circuitry. Plos Comput Biol 7, e1002075. [CrossRef]

- Hardie, R.C., and Juusola, M. (2015). Phototransduction in Drosophila. Curr Opin Neurobiol 34, 37-45. [CrossRef]

- Tepass, U., and Harris, K.P. (2007). Adherens junctions in Drosophila retinal morphogenesis. Trends Cell Biol 17, 26-35. [CrossRef]

- Pick, B. (1977). Specific Misalignments of Rhabdomere Visual Axes in Neural Superposition Eye of Dipteran Flies. Biol Cybern 26, 215-224. [CrossRef]

- Tang, S., and Juusola, M. (2010). Intrinsic activity in the fly brain gates visual information during behavioral choices. PLoS One 5, e14455. [CrossRef]

- Tang, S., Wolf, R., Xu, S., and Heisenberg, M. Visual pattern recognition in Drosophila is invariant for retinal position. Science 305, 1020-1022. [CrossRef]

- van Swinderen, B. (2011). Attention in Drosophila. Int Rev Neurobiol 99, 51-85. [CrossRef]

- Geurten, B.R.H., Jahde, P., Corthals, K., and Gopfert, M.C. (2014). Saccadic body turns in walking Drosophila. Front Behav Neurosci 8. ARTN 365. [CrossRef]

- Blaj, G., and van Hateren, J.H. (2004). Saccadic head and thorax movements in freely walking blowflies. J Comp Physiol A Neuroethol Sens Neural Behav Physiol 190, 861-868. [CrossRef]

- Chiappe, M.E. (2023). Circuits for self-motion estimation and walking control in Drosophila. Curr Opin Neurobiol 81, 102748. [CrossRef]

- Chiappe, M.E., Seelig, J.D., Reiser, M.B., and Jayaraman, V. (2010). Walking modulates speed sensitivity in Drosophila motion vision. Curr Biol 20, 1470-1475. [CrossRef]

- Fujiwara, T., Brotas, M., and Chiappe, M.E. (2022). Walking strides direct rapid and flexible recruitment of visual circuits for course control in Drosophila. Neuron 110, 2124-2138 e2128. [CrossRef]

- Fujiwara, T., Cruz, T.L., Bohnslav, J.P., and Chiappe, M.E. (2017). A faithful internal representation of walking movements in the Drosophila visual system. Nat Neurosci 20, 72-81. [CrossRef]

- Maimon, G., Straw, A.D., and Dickinson, M.H. (2010). Active flight increases the gain of visual motion processing in Drosophila. Nat Neurosci 13, 393-399. [CrossRef]

- Grabowska, M.J., Jeans, R., Steeves, J., and van Swinderen, B. (2020). Oscillations in the central brain of Drosophila are phase locked to attended visual features. Proc Natl Acad Sci U S A 117, 29925-29936. [CrossRef]

- Exner, S. (1891). Die Physiologie der facettierten Augen von Krebsen und Insecten.

- Song, Z., Postma, M., Billings, S.A., Coca, D., Hardie, R.C., and Juusola, M. (2012). Stochastic, adaptive sampling of information by microvilli in fly photoreceptors. Curr Biol 22, 1371-1380. [CrossRef]

- Stavenga, D.G. (2003). Angular and spectral sensitivity of fly photoreceptors. II. Dependence on facet lens F-number and rhabdomere type in Drosophila. J Comp Physiol A 189, 189-202. [CrossRef]

- Juusola, M., and Hardie, R.C. (2001). Light Adaptation in Drosophila Photoreceptors: I. Response Dynamics and Signaling Efficiency at 25°C. J Gen Physiol 117, 3-25. [CrossRef]

- Juusola, M., and Song, Z.Y. (2017). How a fly photoreceptor samples light information in time. J Physiol Lond 595, 5427-5437. [CrossRef]

- Song, Z., and Juusola, M. (2014). Refractory sampling links efficiency and costs of sensory encoding to stimulus statistics. J Neurosci 34, 7216-7237. [CrossRef]

- Song, Z., Zhou, Y., Feng, J., and Juusola, M. (2021). Multiscale ‘whole-cell’ models to study neural information processing - New insights from fly photoreceptor studies. J Neurosci Methods 357, 109156. [CrossRef]

- Gonzalez-Bellido, P.T., Wardill, T.J., and Juusola, M. (2011). Compound eyes and retinal information processing in miniature dipteran species match their specific ecological demands. Proc Natl Acad Sci U S A 108, 4224-4229. [CrossRef]

- Juusola, M. (1993). Linear and nonlinear contrast coding in light-adapted blowfly photoreceptors. J Comp Physiol A 172, 511-521. [CrossRef]

- Ditchburn, R.W., and Ginsborg, B.L. (1952). Vision with a stabilized retinal image. Nature 170, 36-37. [CrossRef]

- Riggs, L.A., and Ratliff, F. (1952). The effects of counteracting the normal movements of the eye. J Opt Soc Am 42, 872-873.

- Borst, A. (2009). Drosophila’s view on insect vision. Curr Biol 19, 36-47. [CrossRef]

- Juusola, M., Dau, A., Zheng, L., and Rien, D.N. (2016). Electrophysiological method for recording intracellular voltage responses of photoreceptors and interneurons to light stimuli. Jove-J Vis Exp. ARTN e54142. [CrossRef]

- Juusola, M., and Hardie, R.C. (2001). Light adaptation in Drosophila photoreceptors: II. Rising temperature increases the bandwidth of reliable signaling. J Gen Physiol 117, 27-42.

- Juusola, M., and de Polavieja, G.G. (2003). The rate of information transfer of naturalistic stimulation by graded potentials. J Gen Physiol 122, 191-206. [CrossRef]

- Juusola, M., Song, Z., and Hardie, R.C. (2022). Phototransduction Biophysics. In Encyclopedia of Computational Neuroscience, D. Jaeger, and R. Jung, eds. (Springer), pp. 2758-2776. [CrossRef]

- Hochstrate, P., and Hamdorf, K. (1990). Microvillar components of light adaptation in blowflies. J Gen Physiol 95, 891-910. [CrossRef]

- Pumir, A., Graves, J., Ranganathan, R., and Shraiman, B.I. (2008). Systems analysis of the single photon response in invertebrate photoreceptors. Proc Natl Acad Sci U S A 105, 10354-10359. [CrossRef]

- Howard, J., Blakeslee, B., and Laughlin, S.B. (1987). The intracellular pupil mechanism and photoreceptor signal: noise ratios in the fly Lucilia cuprina. Proc R Soc Lond B Biol Sci 231, 415-435. [CrossRef]

- Mishra, P., Socolich, M., Wall, M.A., Graves, J., Wang, Z., and Ranganathan, R. (2007). Dynamic scaffolding in a G protein-coupled signaling system. Cell 131, 80-92. [CrossRef]

- Scott, K., Sun, Y., Beckingham, K., and Zuker, C.S. (1997). Calmodulin regulation of Drosophila light-activated channels and receptor function mediates termination of the light response in vivo. Cell 91, 375-383. [CrossRef]

- Liu, C.H., Satoh, A.K., Postma, M., Huang, J., Ready, D.F., and Hardie, R.C. (2008). Ca2+-dependent metarhodopsin inactivation mediated by calmodulin and NINAC myosin III. Neuron 59, 778-789. [CrossRef]

- Wong, F., and Knight, B.W. (1980). Adapting-bump model for eccentric cells of Limulus. J Gen Physiol 76, 539-557. [CrossRef]

- Wong, F., Knight, B.W., and Dodge, F.A. (1980). Dispersion of latencies in photoreceptors of Limulus and the adapting-bump model. J Gen Physiol 76, 517-537. [CrossRef]

- Hardie, R.C., and Postma, M. (2008). Phototransduction in microvillar photoreceptors of Drosophila and other invertebrates. In The senses: a comprehensive reference. Vision, A.I. Basbaum, A. Kaneko, G.M. Shepherd, and G. Westheimer, eds. (Academic), pp. 77-130.

- Postma, M., Oberwinkler, J., and Stavenga, D.G. (1999). Does Ca2+ reach millimolar concentrations after single photon absorption in Drosophila photoreceptor microvilli? Biophys J 77, 1811-1823. [CrossRef]

- Hardie, R.C. (1996). INDO-1 measurements of absolute resting and light-induced Ca2+ concentration in Drosophila photoreceptors. J Neurosci 16, 2924-2933. [CrossRef]

- Friederich, U., Billings, S.A., Hardie, R.C., Juusola, M., and Coca, D. (2016). Fly Photoreceptors Encode Phase Congruency. PLoS One 11, e0157993. [CrossRef]

- Faivre, O., and Juusola, M. (2008). Visual coding in locust photoreceptors. Plos One 3. ARTN e2173. [CrossRef]

- Sharkey, C.R., Blanco, J., Leibowitz, M.M., Pinto-Benito, D., and Wardill, T.J. (2020). The spectral sensitivity of photoreceptors. Sci Rep-Uk 10. ARTN 18242. [CrossRef]

- Wardill, T.J., List, O., Li, X., Dongre, S., McCulloch, M., Ting, C.Y., O’Kane, C.J., Tang, S., Lee, C.H., Hardie, R.C., and Juusola, M. (2012). Multiple spectral inputs improve motion discrimination in the Drosophila visual system. Science 336, 925-931. [CrossRef]

- Franceschini, N., and Kirschfeld, K. (1971). Phenomena of pseudopupil in compound eye of Drosophila. Kybernetik 9, 159-182. [CrossRef]

- Woodgate, J.L., Makinson, J.C., Rossi, N., Lim, K.S., Reynolds, A.M., Rawlings, C.J., and Chittka, L. (2021). Harmonic radar tracking reveals that honeybee drones navigate between multiple aerial leks. Iscience 24. ARTN 102499. [CrossRef]

- Schulte, P., Zeil, J., and Sturzl, W. (2019). An insect-inspired model for acquiring views for homing. Biol Cybern 113, 439-451. [CrossRef]

- Boeddeker, N., Dittmar, L., Sturzl, W., and Egelhaaf, M. (2010). The fine structure of honeybee head and body yaw movements in a homing task. Proc R Soc Lond B Biol Sci 277, 1899-1906. [CrossRef]

- Hoekstra, H.J.W.M. (1997). On beam propagation methods for modelling in integrated optics. Opt. Quantum Electron 29, 157-171.

- Kirschfeld, K. (1973). [Neural superposition eye]. Fortschr Zool 21, 229-257.

- Song, B.M., and Lee, C.H. (2018). Toward a mechanistic understanding of color vision in insects. Front Neur Circ 12. ARTN 16. [CrossRef]

- Johnston, R.J., and Desplan, C. (2010). Stochastic mechanisms of cell fate specification that yield random or robust outcomes. Ann Rev Cell Dev Biol 26, 689-719. [CrossRef]

- Galton, F. (1907). Vox populi. Nature 450-451.

- Vasiliauskas, D., Mazzoni, E.O., Sprecher, S.G., Brodetskiy, K., Johnston, R.J., Lidder, P., Vogt, N., Celik, A., and Desplan, C. (2011). Feedback from rhodopsin controls rhodopsin exclusion in Drosophila photoreceptors. Nature 479, 108-112. [CrossRef]

- Dippé, M.A.Z., and Wold, E.H. (1985). Antialiasing through stochastic sampling. ACM SIGGRAPH Computer Graphics 19, 69-78. [CrossRef]

- Field, G.D., Gauthier, J.L., Sher, A., Greschner, M., Machado, T.A., Jepson, L.H., Shlens, J., Gunning, D.E., Mathieson, K., Dabrowski, W., et al. (2010). Functional connectivity in the retina at the resolution of photoreceptors. Nature 467, 673-677. [CrossRef]

- Götz, K.G. (1968). Flight control in Drosophila by visual perception of motion. Kybernetik 6, 199-208.

- Hengstenberg, R. (1971). Eye muscle system of housefly Musca Domestica .1. Analysis of clock spikes and their source. Kybernetik 9, 56-77. [CrossRef]

- Colonnier, F., Manecy, A., Juston, R., Mallot, H., Leitel, R., Floreano, D., and Viollet, S. (2015). A small-scale hyperacute compound eye featuring active eye tremor: application to visual stabilization, target tracking, and short-range odometry. Bioinspir Biomim 10. Artn 026002. [CrossRef]

- Viollet, S., Godiot, S., Leitel, R., Buss, W., Breugnon, P., Menouni, M., Juston, R., Expert, F., Colonnier, F., L’Eplattenier, G., et al. (2014). Hardware architecture and cutting-edge assembly process of a tiny curved compound eye. Sensors-Basel 14, 21702-21721. [CrossRef]

- Talley, J., Pusdekar, J., Feltenberger, A., Ketner, N., Evers, J., Liu, M., Gosh, A., Palmer, S.E., Wardill, T.J., and Gonzalez-Bellido, P.T. (2023). Predictive saccades and decision making in the beetle-predating saffron robber fly. Curr Biol 33, 1-13. [CrossRef]

- Glasser, A. (2010). Accommodation. In Encyclopedia of the Eye, D.A. Dartt, ed. (Academic Press), pp. 8-17. [CrossRef]

- Osorio, D. (2007). Spam and the evolution of the fly’s eye. Bioessays 29, 111-115. [CrossRef]

- Zelhof, A.C., Hardy, R.W., Becker, A., and Zuker, C.S. (2006). Transforming the architecture of compound eyes. Nature 443, 696-699. [CrossRef]

- Kolodziejczyk, A., Sun, X., Meinertzhagen, I.A., and Nässel, D.R. (2008). Glutamate, GABA and acetylcholine signaling components in the lamina of the Drosophila visual system. PLoS One 3, e2110. [CrossRef]

- de Polavieja, G.G. (2006). Neuronal algorithms that detect the temporal order of events. Neural Comput 18, 2102-2121.

- Yang, H.H., and Clandinin, T.R. (2018). Elementary Motion Detection in Drosophila: Algorithms and Mechanisms. Annu Rev Vis Sci 4, 143-163. [CrossRef]

- Hassenstein, B., and Reichardt, W. (1956). Systemtheoretische Analyse der Zeit-, Reihenfolgen- und Vorzeichenauswertung bei der Bewegungsperzeption des Rüsselkäfers Chlorophanus. Z Naturforsch 11b, 513-524.

- Barlow, H.B., and Levick, W.R. (1965). The mechanism of directionally selective units in rabbit’s retina. J Physiol 178, 477-504. [CrossRef]

- Leung, A., Cohen, D., van Swinderen, B., and Tsuchiya, N. (2021). Integrated information structure collapses with anesthetic loss of conscious arousal in Drosophila melanogaster. Plos Comput Biol 17, e1008722. [CrossRef]

- Maye, A., Hsieh, C.H., Sugihara, G., and Brembs, B. (2007). Order in spontaneous behavior. PLoS One 2, e443. [CrossRef]

- van Hateren, J.H. (2017). A unifying theory of biological function. Biol Theory 12, 112-126. [CrossRef]

- van Hateren, J.H. (2019). A theory of consciousness: computation, algorithm, and neurobiological realization. Biol Cybern 113, 357-372. [CrossRef]

- Okray, Z., Jacob, P.F., Stern, C., Desmond, K., Otto, N., Talbot, C.B., Vargas-Gutierrez, P., and Waddell, S. (2023). Multisensory learning binds neurons into a cross-modal memory engram. Nature 617, 777-784. [CrossRef]

- de Polavieja, G.G. (2002). Errors drive the evolution of biological signalling to costly codes. J Theor Biol 214, 657-664.

- de Polavieja, G.G. (2004). Reliable biological communication with realistic constraints. Phys Rev E 70, 061910.

- Li, X., Abou Tayoun, A., Song, Z., Dau, A., Rien, D., Jaciuch, D., Dongre, S., Blanchard, F., Nikolaev, A., Zheng, L., et al. (2019). Ca2+-activated K+ channels reduce network excitability, improving adaptability and energetics for transmitting and perceiving sensory information. J Neurosci 39, 7132-7154. [CrossRef]

- Shannon, C.E. (1948). A mathematical theory of communication. Bell Syst Technic J 27, 379–423, 623–656.

- Brenner, N., Bialek, W., and de Ruyter van Steveninck, R. (2000). Adaptive rescaling maximizes information transmission. Neuron 26, 695-702. [CrossRef]

- Maravall, M., Petersen, R.S., Fairhall, A.L., Arabzadeh, E., and Diamond, M.E. (2007). Shifts in coding properties and maintenance of information transmission during adaptation in barrel cortex. PLoS Biol 5, e19. [CrossRef]

- Arganda, S., Guantes, R., and de Polavieja, G.G. (2007). Sodium pumps adapt spike bursting to stimulus statistics. Nat Neurosci 10, 1467-1473. [CrossRef]

- Laughlin, S.B. (1981). A simple coding procedure enhances a neuron’s information capacity. Zeitschrift für Naturforschung C 36, 910-912.

- van Hateren, J.H. (1992). A theory of maximizing sensory information. Biol Cybern 68, 23-29. [CrossRef]

- Hopfield, J.J., and Brody, C.D. (2001). What is a moment? Transient synchrony as a collective mechanism for spatiotemporal integration. Proc Natl Acad Sci U S A 98, 1282-1287. [CrossRef]

- Srinivasan, M.V., Laughlin, S.B., and Dubs, A. (1982). Predictive coding: a fresh view of inhibition in the retina. Proc R Soc Lond B Biol Sci 216, 427-459. [CrossRef]

- Mann, K., Deny, S., Ganguli, S., and Clandinin, T.R. (2021). Coupling of activity, metabolism and behaviour across the Drosophila brain. Nature 593, 244-248. [CrossRef]

- Poulet, J.F., and Hedwig, B. (2006). The cellular basis of a corollary discharge. Science 311, 518-522. [CrossRef]

- Poulet, J.F., and Hedwig, B. (2007). New insights into corollary discharges mediated by identified neural pathways. Trends Neurosci 30, 14-21. [CrossRef]

- Peyrache, A., Dehghani, N., Eskandar, E.N., Madsen, J.R., Anderson, W.S., Donoghue, J.A., Hochberg, L.R., Halgren, E., Cash, S.S., and Destexhe, A. (2012). Spatiotemporal dynamics of neocortical excitation and inhibition during human sleep. Proc Natl Acad Sci U S A 109, 1731-1736. [CrossRef]

- Gallego-Carracedo, C., Perich, M.G., Chowdhury, R.H., Miller, L.E., and Gallego, J.A. (2022). Local field potentials reflect cortical population dynamics in a region-specific and frequency-dependent manner. Elife 11. [CrossRef]

- Yap, M.H.W., Grabowska, M.J., Rohrscheib, C., Jeans, R., Troup, M., Paulk, A.C., van Alphen, B., Shaw, P.J., and van Swinderen, B. (2017). Oscillatory brain activity in spontaneous and induced sleep stages in flies. Nat Commun 8, 1815. [CrossRef]

- Miller, E.K., Brincat, S.L., and Roy, J.E. (2024). Cognition is an emergent property. Current Opinion in Behavioral Sciences, 101388. [CrossRef]

- Pinotsis, D.A., Fridman, G., and Miller, E.K. (2023). Cytoelectric coupling: Electric fields sculpt neural activity and “tune” the brain’s infrastructure. Prog Neurobiol 226, 102465. [CrossRef]

- Solvi, C., Al-Khudhairy, S.G., and Chittka, L. (2020). Bumble bees display cross-modal object recognition between visual and tactile senses. Science 367, 910-912. [CrossRef]

- Badre, D., Bhandari, A., Keglovits, H., and Kikumoto, A. (2021). The dimensionality of neural representations for control. Curr Opin Behav Sci 38, 20-28. [CrossRef]

- Yellott, J.I. (1982). Spectral-analysis of spatial sampling by photoreceptors - topological disorder prevents aliasing. Vis Res 22, 1205-1210. [CrossRef]

- Wikler, K.C., and Rakic, P. (1990). Distribution of photoreceptor subtypes in the retina of diurnal and nocturnal primates. J Neurosci 10, 3390-3401. [CrossRef]

- Kim, Y.J., Peterson, B.B., Crook, J.D., Joo, H.R., Wu, J., Puller, C., Robinson, F.R., Gamlin, P.D., Yau, K.W., Viana, F., et al. (2022). Origins of direction selectivity in the primate retina. Nat Commun 13, 2862. [CrossRef]

- Yu, W.Q., Swanstrom, R., Sigulinsky, C.L., Ahlquist, R.M., Knecht, S., Jones, B.W., Berson, D.M., and Wong, R.O. (2023). Distinctive synaptic structural motifs link excitatory retinal interneurons to diverse postsynaptic partner types. Cell Rep 42, 112006. [CrossRef]

- Niven, J.E., and Chittka, L. (2010). Reuse of identified neurons in multiple neural circuits. Behavioral and Brain Sciences, 4.

- Pfeiffer, K., and Homberg, U. (2014). Organization and functional roles of the central complex in the insect brain. Annu Rev Entomol 59, 165-184. [CrossRef]

- Li, F., Lindsey, J., Marin, E.C., Otto, N., Dreher, M., Dempsey, G., Stark, I., Bates, A.S., Pleijzier, M.W., Schlegel, P., et al. (2020). The connectome of the adult mushroom body provides insights into function. Elife 9, e62576. [CrossRef]

- Kohonen, T. (2006). Self-organizing neural projections. Neural Netw 19, 723-733. [CrossRef]

- Li, M., Liu, F., Juusola, M., and Tang, S. (2014). Perceptual color map in macaque visual area V4. J Neurosci 34, 202-217. [CrossRef]

- Dan, C., Hulse, B.K., Kappagantula, R., Jayaraman, V., and Hermundstad, A.M. (2024). A neural circuit architecture for rapid learning in goal-directed navigation. Neuron 112, 2581-2599.e23. [CrossRef]

- Hulse, B.K., Haberkern, H., Franconville, R., Turner-Evans, D., Takemura, S.Y., Wolff, T., Noorman, M., Dreher, M., Dan, C., Parekh, R., et al. (2021). A connectome of the Drosophila central complex reveals network motifs suitable for flexible navigation and context-dependent action selection. Elife 10. [CrossRef]

- Scheffer, L.K., Xu, C.S., Januszewski, M., Lu, Z., Takemura, S.Y., Hayworth, K.J., Huang, G.B., Shinomiya, K., Maitlin-Shepard, J., Berg, S., et al. (2020). A connectome and analysis of the adult Drosophila central brain. Elife 9. [CrossRef]

- Kim, S.S., Hermundstad, A.M., Romani, S., Abbott, L.F., and Jayaraman, V. (2019). Generation of stable heading representations in diverse visual scenes. Nature 576, 126-131. [CrossRef]

- Kim, S.S., Rouault, H., Druckmann, S., and Jayaraman, V. (2017). Ring attractor dynamics in the Drosophila central brain. Science 356, 849-853. [CrossRef]

- Seelig, J.D., and Jayaraman, V. (2015). Neural dynamics for landmark orientation and angular path integration. Nature 521, 186-191. [CrossRef]

- Seelig, J.D., and Jayaraman, V. (2013). Feature detection and orientation tuning in the Drosophila central complex. Nature 503, 262-266. [CrossRef]

- Mussells Pires, P., Zhang, L., Parache, V., Abbott, L.F., and Maimon, G. (2024). Converting an allocentric goal into an egocentric steering signal. Nature 626, 808-818. [CrossRef]

- Lu, J., Behbahani, A.H., Hamburg, L., Westeinde, E.A., Dawson, P.M., Lyu, C., Maimon, G., Dickinson, M.H., Druckmann, S., and Wilson, R.I. (2022). Transforming representations of movement from body- to world-centric space. Nature 601, 98-104. [CrossRef]

- Lyu, C., Abbott, L.F., and Maimon, G. (2022). Building an allocentric travelling direction signal via vector computation. Nature 601, 92-97. [CrossRef]

- Green, J., Adachi, A., Shah, K.K., Hirokawa, J.D., Magani, P.S., and Maimon, G. (2017). A neural circuit architecture for angular integration in Drosophila. Nature 546, 101-106. [CrossRef]

- Honkanen, A., Hensgen, R., Kannan, K., Adden, A., Warrant, E., Wcislo, W., and Heinze, S. (2023). Parallel motion vision pathways in the brain of a tropical bee. J Comp Physiol A Neuroethol Sens Neural Behav Physiol 209, 563-591. [CrossRef]

- Heinze, S. (2021). Mapping the fly’s ‘brain in the brain’. Elife 10. [CrossRef]

- Pisokas, I., Heinze, S., and Webb, B. (2020). The head direction circuit of two insect species. Elife 9. [CrossRef]

- Goulard, R., Buehlmann, C., Niven, J.E., Graham, P., and Webb, B. (2021). A unified mechanism for innate and learned visual landmark guidance in the insect central complex. Plos Comput Biol 17, e1009383. [CrossRef]

- Cope, A.J., Sabo, C., Vasilaki, E., Barron, A.B., and Marshall, J.A.R. (2017). A computational model of the integration of landmarks and motion in the insect central complex. PLoS One 12, e0172325. [CrossRef]

- Heinze, S., and Homberg, U. (2009). Linking the input to the output: new sets of neurons complement the polarization vision network in the locust central complex. J Neurosci 29, 4911-4921. [CrossRef]

- Wu, M., Nern, A., Williamson, W.R., Morimoto, M.M., Reiser, M.B., Card, G.M., and Rubin, G.M. (2016). Visual projection neurons in the Drosophila lobula link feature detection to distinct behavioral programs. Elife 5. [CrossRef]

- Kanerva, P. (1990). Sparse Distributed Memory (The MIT Press).

- Nishimoto, S., Vu, A.T., Naselaris, T., Benjamini, Y., Yu, B., and Gallant, J.L. (2011). Reconstructing visual experiences from brain activity evoked by natural movies. Curr Biol 21, 1641-1646. [CrossRef]

- Willmore, B.D., Prenger, R.J., and Gallant, J.L. (2010). Neural representation of natural images in visual area V2. J Neurosci 30, 2102-2114. [CrossRef]

- Naselaris, T., Prenger, R.J., Kay, K.N., Oliver, M., and Gallant, J.L. (2009). Bayesian reconstruction of natural images from human brain activity. Neuron 63, 902-915. [CrossRef]

- Alem, S., Perry, C.J., Zhu, X.F., Loukola, O.J., Ingraham, T., Sovik, E., and Chittka, L. (2016). Associative mechanisms allow for social learning and cultural transmission of string pulling in an insect. PLoS Biol 14, e1002564. [CrossRef]

- Bridges, A.D., Royka, A., Wilson, T., Lockwood, C., Richter, J., Juusola, M., and Chittka, L. (2024). Bumblebees socially learn behaviour too complex to innovate alone. Nature 627, 572-578. [CrossRef]

- Bridges, A.D., MaBouDi, H., Procenko, O., Lockwood, C., Mohammed, Y., Kowalewska, A., González, J.E.R., Woodgate, J.L., and Chittka, L. (2023). Bumblebees acquire alternative puzzle-box solutions via social learning. PLoS Biol 21, e3002019. doi: 10.1371/journal.pbio.3002019.

- Maák, I., Lorinczi, G., Le Quinquis, P., Módra, G., Bovet, D., Call, J., and d’Ettorre, P. (2017). Tool selection during foraging in two species of funnel ants. Anim Behav 123, 207-216. [CrossRef]

- Lorinczi, G., Módra, G., Juhász, O., and Maák, I. (2018). Which tools to use? Choice optimization in the tool-using ant,. Behav Ecol 29, 1444-1452. [CrossRef]

- Woodgate, J.L., Makinson, J.C., Lim, K.S., Reynolds, A.M., and Chittka, L. (2017). Continuous radar tracking illustrates the development of multi-destination routes of bumblebees. Sci Rep 7, 17323. [CrossRef]

- Loukola, O.J., Solvi, C., Coscos, L., and Chittka, L. (2017). Bumblebees show cognitive flexibility by improving on an observed complex behavior. Science 355, 833-836. [CrossRef]

- Hadjitofi, A., and Webb, B. (2024). Dynamic antennal positioning allows honeybee followers to decode the dance. Curr Biol 34, 1772-1779.e4. [CrossRef]

- Suver, M.P., Medina, A.M., and Nagel, K.I. (2023). Active antennal movements in Drosophila can tune wind encoding. Curr Biol 33, 780-789.e4. [CrossRef]

- Perez-Escudero, A., Rivera-Alba, M., and de Polavieja, G.G. (2009). Structure of deviations from optimality in biological systems. Proc Natl Acad Sci U S A 106, 20544-20549. [CrossRef]

- Razban Haghighi, K. (2023). The Drosophila visual system: a super-efficient encoder. PhD (University of Sheffield).

- Kapustjansky, A., Chittka, L., and Spaethe, J. (2010). Bees use three-dimensional information to improve target detection. Naturwissenschaften 97, 229-233. [CrossRef]

- Chittka, L., and Spaethe, J. (2007). Visual search and the importance of time in complex decision making by bees. Arthropod-Plant Inte 1, 37-44. [CrossRef]

- de Polavieja, G.G., Harsch, A., Kleppe, I., Robinson, H.P., and Juusola, M. (2005). Stimulus history reliably shapes action potential waveforms of cortical neurons. J Neurosci 25, 5657-5665. [CrossRef]

- Juusola, M., Robinson, H.P., and de Polavieja, G.G. (2007). Coding with spike shapes and graded potentials in cortical networks. Bioessays 29, 178-187. [CrossRef]

- de Croon, G.C.H.E., Dupeyroux, J.J.G., Fuller, S.B., and Marshall, J.A.R. (2022). Insect-inspired AI for autonomous robots. Sci Robot 7, eabl6334. [CrossRef]

- Webb, B. (2020). Robots with insect brains. Science 368, 244-245. [CrossRef]

- Land, M.F. (2009). Vision, eye movements, and natural behavior. Visual Neurosci 26, 51-62. [CrossRef]

- Medathati, N.V.K., Neumann, H., Masson, G.S., and Kornprobst, P. (2016). Bio-inspired computer vision: Towards a synergistic approach of artificial and biological vision. Comput Vis Image Und 150, 1-30. [CrossRef]

- Serres, J.R., and Viollet, S. (2018). Insect-inspired vision for autonomous vehicles. Curr Opin Insect Sci 30, 46-51. [CrossRef]

- Song, Y.M., Xie, Y.Z., Malyarchuk, V., Xiao, J.L., Jung, I., Choi, K.J., Liu, Z.J., Park, H., Lu, C.F., Kim, R.H., et al. (2013). Digital cameras with designs inspired by the arthropod eye. Nature 497, 95-99. [CrossRef]

- MaBouDi, H., Roper, M., Guiraud, M., Marshall, J.A., and Chittka, L. (2021). Automated video tracking and flight analysis show how bumblebees solve a pattern discrimination task using active vision. bioRxiv. [CrossRef]

- MaBouDi, H., Roper, M., Guiraud, M.-G., Chittka, L., and Marshall, J.A. (2023). A neuromorphic model of active vision shows spatio-temporal encoding in lobula neurons can aid pattern recognition in bees. bioRxiv. [CrossRef]

- Schuman, C.D., Kulkarni, S.R., Parsa, M., Mitchell, J.P., Date, P., and Kay, B. (2022). Opportunities for neuromorphic computing algorithms and applications (vol 2, pg 10, 2022). Nat Comput Sci 2, 205-205. [CrossRef]

- Wang, H., Sun, B., Ge, S.S., Su, J., and Jin, M.L. (2024). On non-von Neumann flexible neuromorphic vision sensors. npj Flex Electron 8, 28. [CrossRef]

- Millidge, B., Seth, A., and Buckley, C.L. (2022). Predictive Coding: A Theoretical and Experimental Review. arXiv 2107.12979. http://arxiv.org/abs/2107.12979.

- Rao, R.P.N. (2024). A sensory-motor theory of the neocortex. Nat Neurosci 27, 1221-1235. [CrossRef]

- Pfeifer, R., Lungarella, M., and Iida, F. (2007). Self-organization, embodiment, and biologically inspired robotics. Science 318, 1088-1093. [CrossRef]

- Greenwald, A.G. (1970). Sensory Feedback Mechanisms in Performance Control - with Special Reference to Ideo-Motor Mechanism. Psychol Rev 77, 73-99. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).