Submitted: 21 August 2023

Posted: 22 August 2023


Abstract

Keywords:

1. Introduction

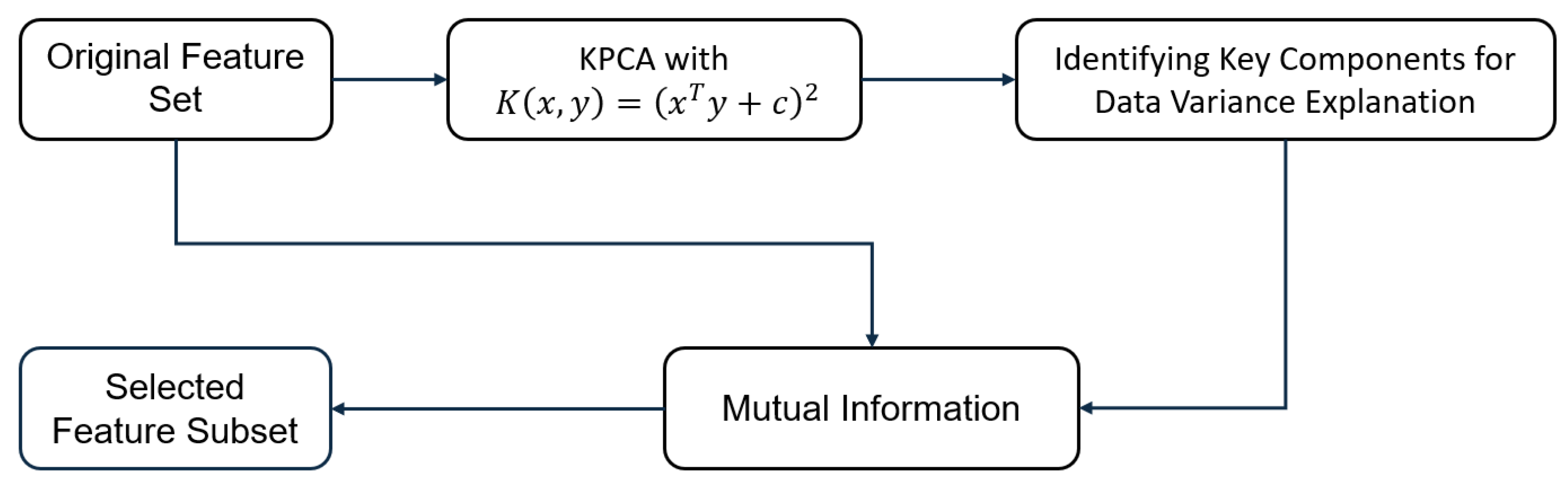

- A new feature selection method based on kernel principal component analysis (KPCA) and Mutual Information was developed to select the features most relevant to PEMFC performance.

- A novel performance prediction method based on XGBRegressor and Tree-structured Parzen Estimator was proposed to predict the polarization curve of the PEMFC.

- The proposed model was compared with traditional machine learning models on a real-world dataset.

2. Experimental Data and Dimensionality Reduction

2.1. Data Description

2.2. PEMFC Dimensionality Reduction

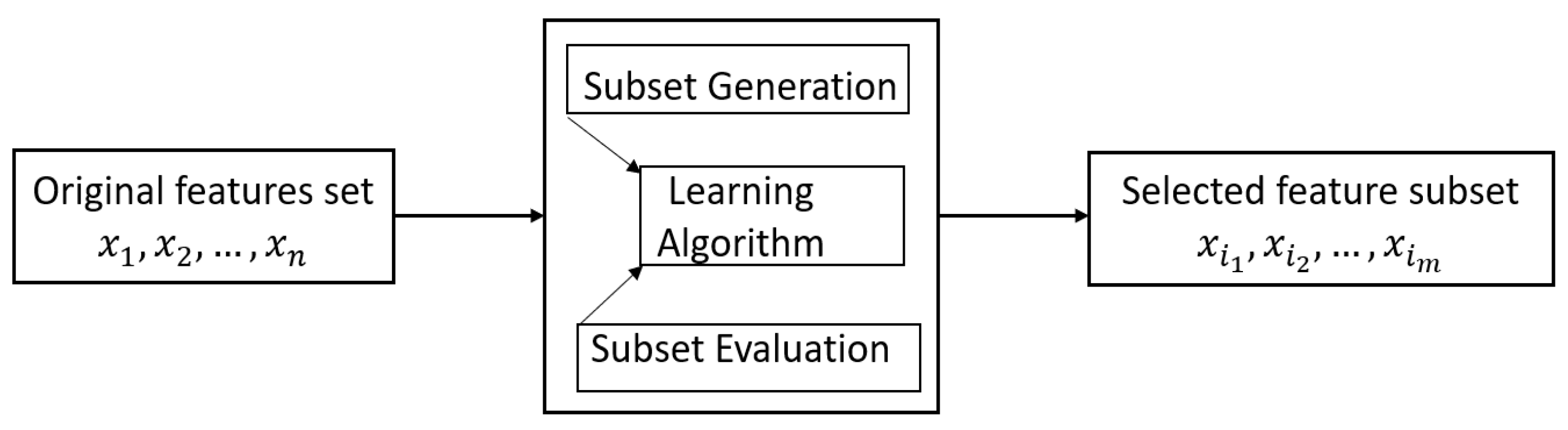

2.2.1. Feature Selection

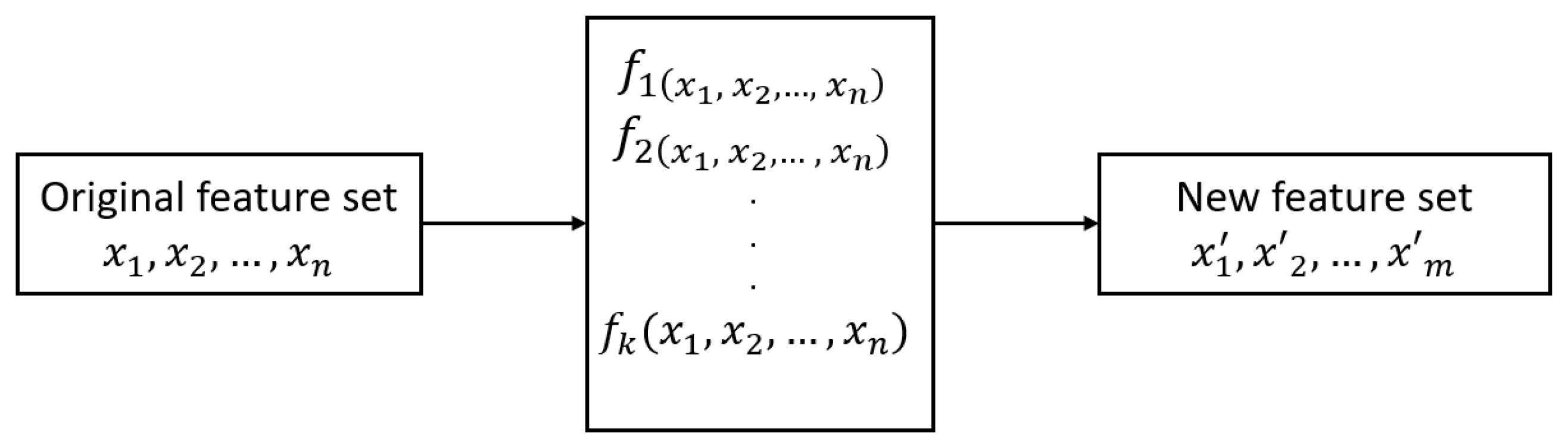

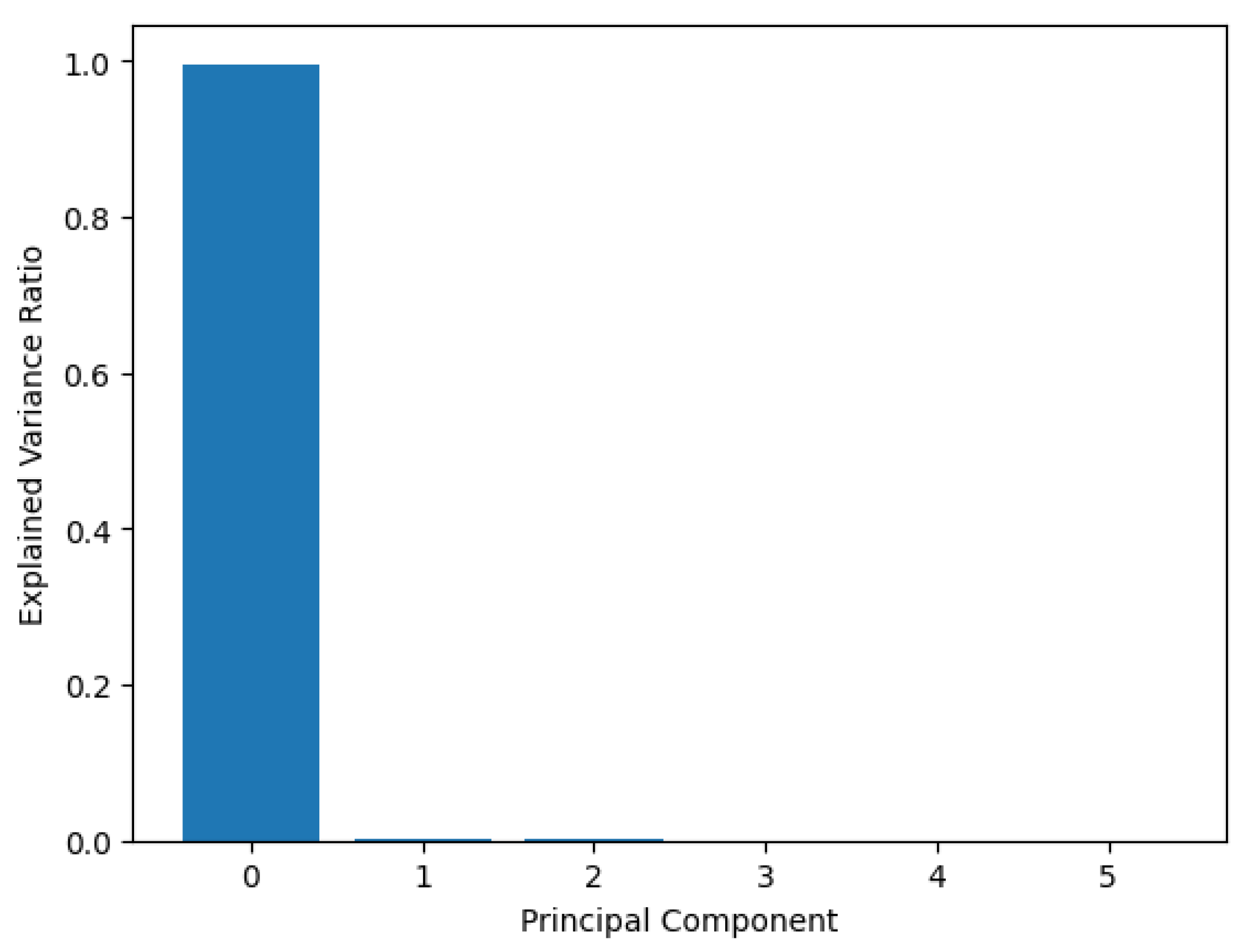

2.2.2. Feature Extraction

2.2.3. PEMFC Feature Selection

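- Construct the kernel matrix $K$, with entries $K_{ij} = k(\mathbf{x}_i, \mathbf{x}_j)$. In our case, we choose the polynomial kernel $k(\mathbf{x}_i, \mathbf{x}_j) = (\mathbf{x}_i^{\top}\mathbf{x}_j + c)^{d}$.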

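- Compute the centered Gram matrix $\tilde{K}$ according to the following equation:

  $$\tilde{K} = K - \mathbf{1}_N K - K\mathbf{1}_N + \mathbf{1}_N K \mathbf{1}_N,$$

  where $N$ is the number of data points and $\mathbf{1}_N$ is the $N \times N$ matrix with all elements equal to $1/N$.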

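- Find the vectors $\mathbf{a}_k$ by solving the following equation:

  $$N\lambda_k \mathbf{a}_k = \tilde{K}\mathbf{a}_k,$$

  where $N\lambda_k$ are the eigenvalues of $\tilde{K}$ and $\mathbf{a}_k$ are the corresponding eigenvectors.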

- Finally, compute the kernel principal components:

- in the discrete case:

- in the continuous case:
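The four KPCA steps listed above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: it assumes the standard polynomial kernel $(\mathbf{x}_i^{\top}\mathbf{x}_j + c)^d$ with illustrative values $c = 1$ and $d = 2$ (the values used in the study are not stated here), and the function names `polynomial_kernel` and `kernel_pca` are hypothetical. It returns the projections of the training points only.

```python
import numpy as np


def polynomial_kernel(X, Y, degree=2, coef0=1.0):
    """k(x, y) = (x^T y + c)^d for every pair of rows in X and Y (assumed c, d)."""
    return (X @ Y.T + coef0) ** degree


def kernel_pca(X, n_components=2, degree=2, coef0=1.0):
    """Return training-set kernel principal components and the eigenvalues of K~."""
    N = X.shape[0]
    # Step 1: kernel matrix K.
    K = polynomial_kernel(X, X, degree, coef0)
    # Step 2: centered Gram matrix, with 1_N the N x N matrix of entries 1/N.
    one_n = np.full((N, N), 1.0 / N)
    K_c = K - one_n @ K - K @ one_n + one_n @ K @ one_n
    # Step 3: eigen-decomposition of the symmetric K~; eigh returns ascending
    # eigenvalues (these are N * lambda_k), so keep the largest ones first.
    eigvals, eigvecs = np.linalg.eigh(K_c)
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Rescale a_k so the implicit feature-space eigenvectors have unit norm.
    alphas = eigvecs / np.sqrt(eigvals)
    # Step 4: y_k(x_j) = sum_i a_ki * k~(x_j, x_i) for every training point.
    return K_c @ alphas, eigvals


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(50, 6))  # hypothetical: 50 samples of 6 standardized features
    Z, eigvals = kernel_pca(X, n_components=2)
    print(Z.shape, eigvals)
```

Projecting unseen samples additionally requires centering the test-versus-training kernel matrix with the training statistics; library implementations such as scikit-learn's `KernelPCA` handle that step.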

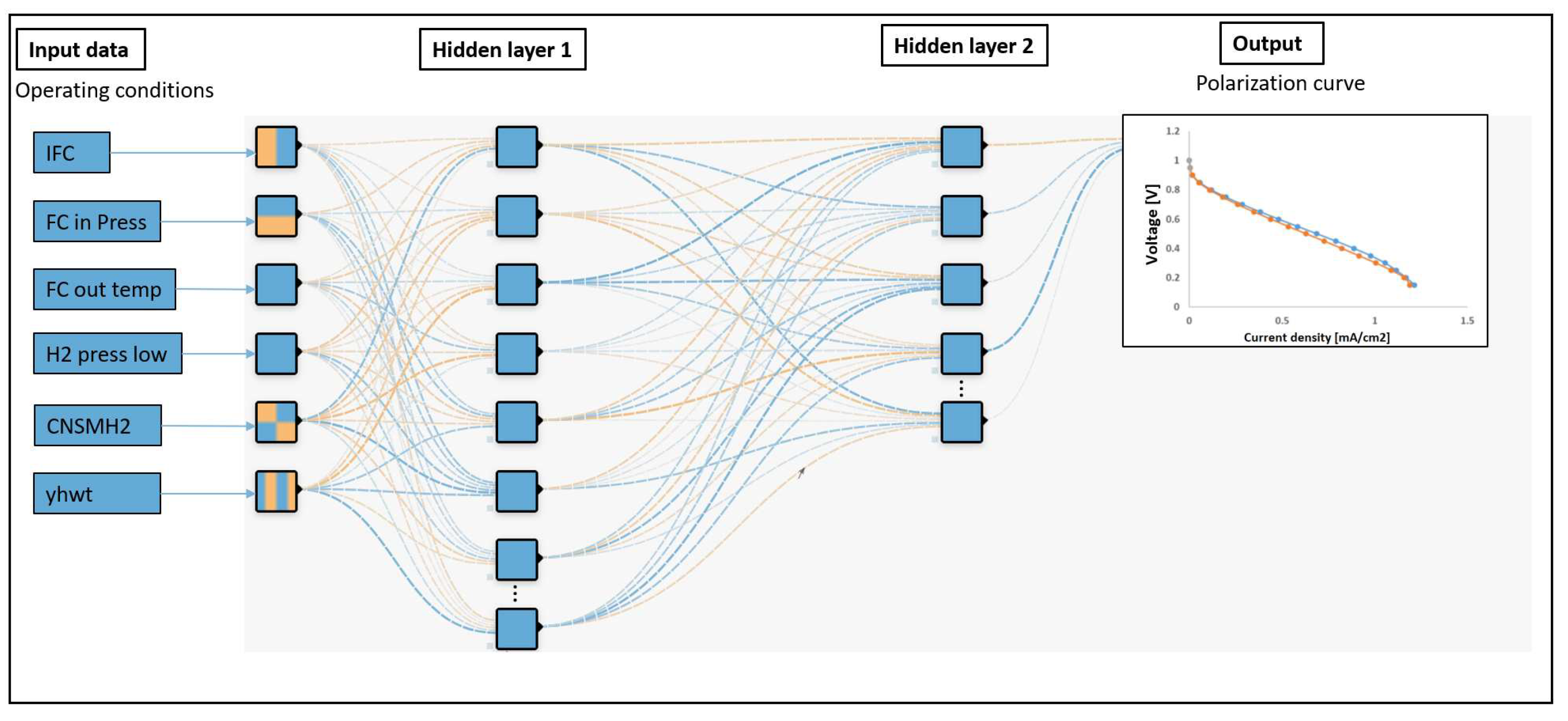

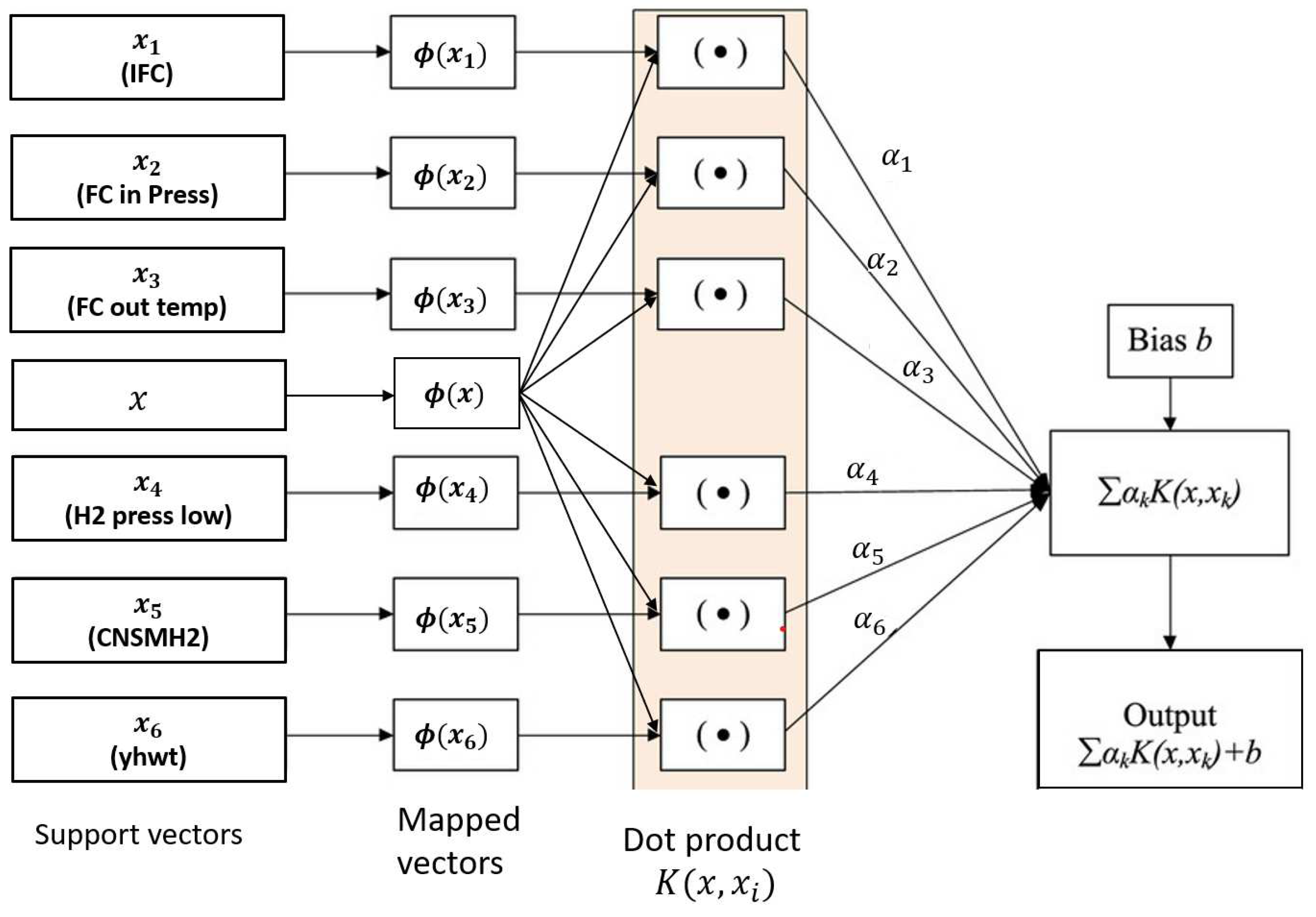

3. Model Development and Evaluation Criteria

3.1. XGBRegressor

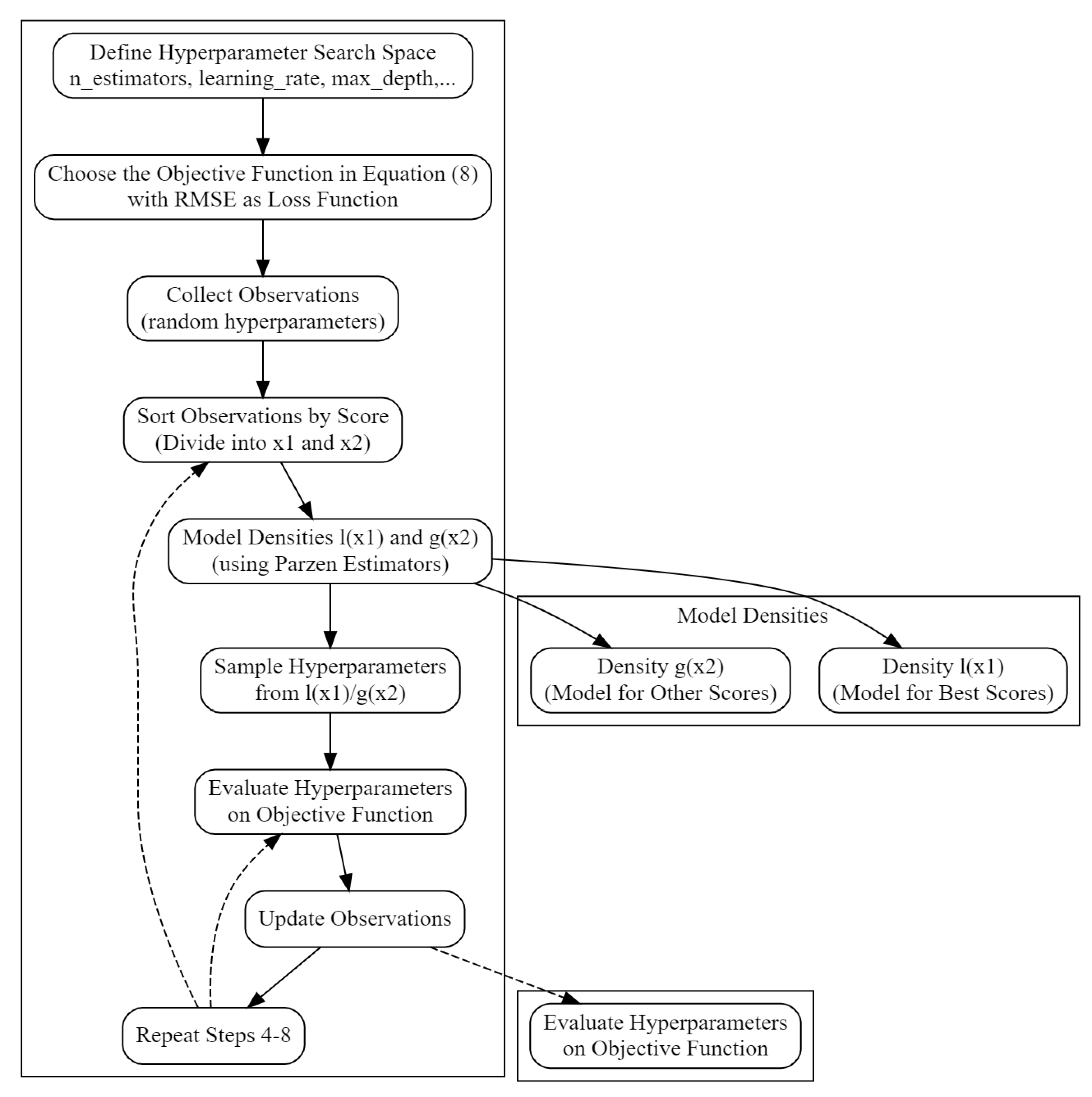

3.2. Tree-Structured Parzen Estimator

3.3. Evaluation Criteria

4. Results and Discussions

4.1. Hyper-parameter Tuning

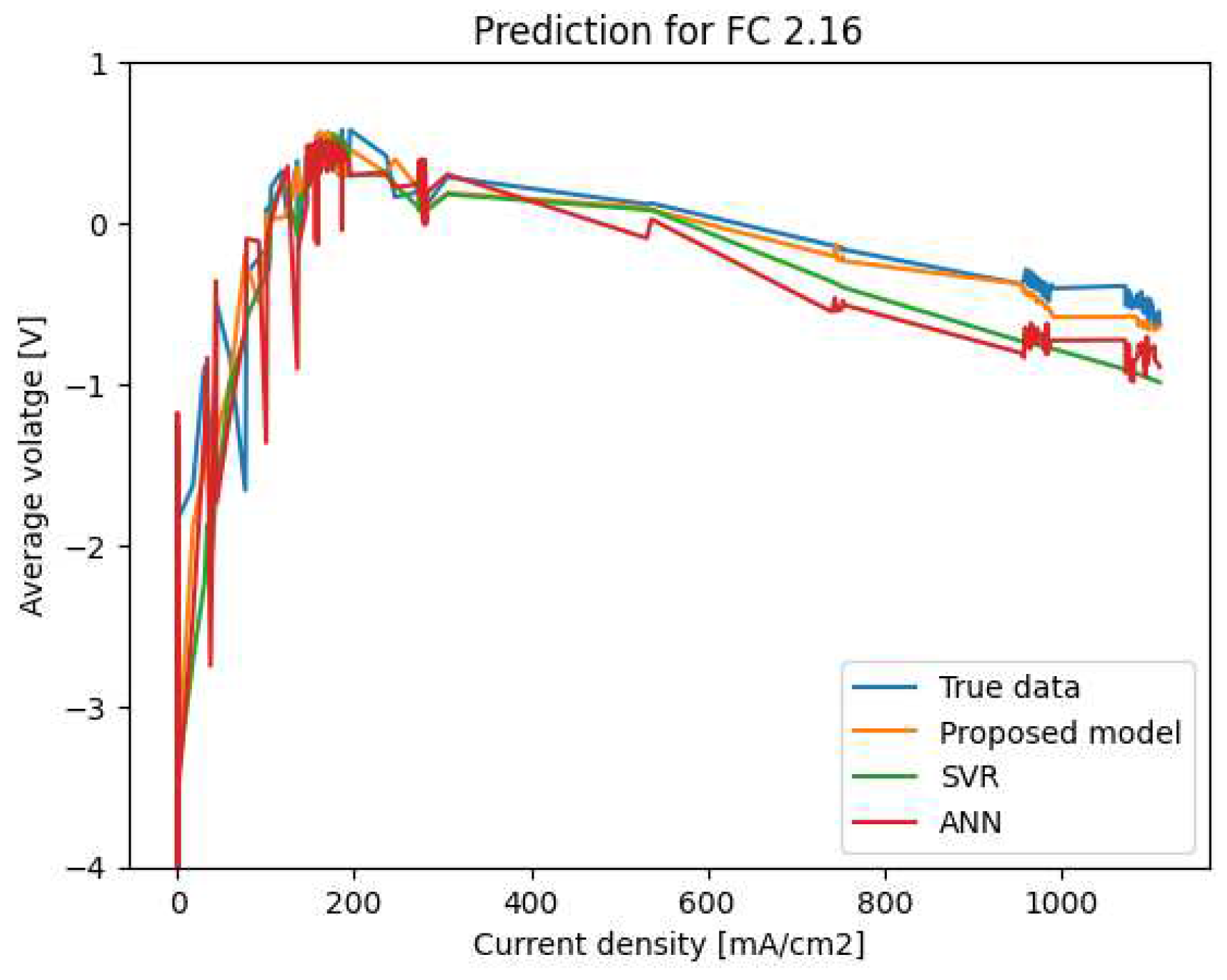
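The hyper-parameters in this section are tuned with the Tree-structured Parzen Estimator (TPE). The core TPE loop can be sketched as follows: past trials are split at the γ-quantile of the loss into a "good" set, modelled by a Parzen density $l(x)$, and a "bad" set, modelled by $g(x)$; the next trial is the candidate drawn from $l(x)$ that maximizes $l(x)/g(x)$. This is a simplified one-dimensional sketch under stated assumptions (fixed Gaussian bandwidth, uniform warm-up trials, and a hypothetical quadratic objective standing in for the validation RMSE); it is not the tuning code used in the study. Production libraries such as Optuna (`TPESampler`) and Hyperopt (`tpe.suggest`) use adaptive bandwidths and support multi-dimensional, mixed-type search spaces.

```python
import math
import random


def parzen_log_density(x, centers, bandwidth):
    """Log-density of an equal-weight Gaussian mixture centred on `centers`."""
    total = sum(math.exp(-0.5 * ((x - c) / bandwidth) ** 2) for c in centers)
    norm = len(centers) * bandwidth * math.sqrt(2.0 * math.pi)
    return math.log(total / norm + 1e-300)  # tiny floor avoids log(0)


def tpe_minimize(objective, low, high, n_init=10, n_iter=40,
                 gamma=0.25, n_candidates=24, seed=0):
    """Simplified 1-D TPE (fixed bandwidth, uniform warm-up: assumptions)."""
    rng = random.Random(seed)
    xs = [rng.uniform(low, high) for _ in range(n_init)]
    ys = [objective(x) for x in xs]
    bandwidth = 0.1 * (high - low)
    for _ in range(n_iter):
        order = sorted(range(len(xs)), key=lambda i: ys[i])
        n_good = max(1, math.ceil(gamma * len(xs)))
        good = [xs[i] for i in order[:n_good]]  # modelled by l(x)
        bad = [xs[i] for i in order[n_good:]]   # modelled by g(x)
        # Draw candidates from l(x), clipped to the search bounds.
        candidates = [min(max(rng.gauss(rng.choice(good), bandwidth), low), high)
                      for _ in range(n_candidates)]
        # Keep the candidate with the largest log l(x) - log g(x).
        x_next = max(candidates,
                     key=lambda c: parzen_log_density(c, good, bandwidth)
                     - parzen_log_density(c, bad, bandwidth))
        xs.append(x_next)
        ys.append(objective(x_next))
    best = min(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best]


if __name__ == "__main__":
    # Hypothetical stand-in for a validation-RMSE curve, minimum at x = 1.5.
    toy_objective = lambda x: (x - 1.5) ** 2
    print(tpe_minimize(toy_objective, low=-5.0, high=5.0))
```

For a real search, `x` would be a hyper-parameter such as `log10(learning_rate)` and the objective would train and validate the XGBRegressor.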

4.2. Prediction and Evaluation

5. Conclusion

Appendix A

Appendix A.1


| Variable | Description | Unit |
|---|---|---|
| VFC | Average PEMFC stack voltage | V |
| IFC | PEMFC stack current | A |
| ACP Inv Temp | Air compressor inverter temperature | °C |
| ACP Mot Temp | Air compressor internal temperature | °C |
| Air comp speed | Air compressor speed | rpm |
| Air Flow | PEMFC air flow | rpm |
| CNSMH2 | Instantaneous H2 consumption | mg |
| f4g fwctrvo ratrvnw | Three-way valve opening rate | % |
| FC in Press | PEMFC input air pressure | kPa |
| FC out temp | PEMFC output temperature | °C |
| FCO TEMP | Output coolant temperature | °C |
| H2 mean pressure | Mean hydrogen pressure | kPa |
| H2 press low | H2 pressure at PEMFC inlet | kPa |
| H2 press target | Target H2 pressure in PEMFC | kPa |
| HP pump speed | H2 pump speed | rpm |
| MES FC | PEMFC net output power | W |
| MOD FC | PEMFC mode | - |
| Rad out temp | Radiator output temperature | °C |
| REVAPREF | Air compressor speed control | rpm |
| Water pump spd | Water pump speed | rpm |
| Water pump spd req | Water pump speed request | rpm |
| yhwt | Coolant temperature | °C |

| Variable | Description |
|---|---|
| IFC | PEMFC stack current |
| FC in Press | PEMFC input air pressure |
| FC out temp | PEMFC output temperature |
| H2 press low | H2 pressure at PEMFC inlet |
| CNSMH2 | Instantaneous H2 consumption |
| yhwt | Coolant temperature |

| Category | Method | XGBRegressor RMSE | XGBRegressor R | ANN RMSE | ANN R | k |
|---|---|---|---|---|---|---|
| Filter method | Mutual Information | 0.0501 | 0.8717 | 0.0588 | 0.7354 | 4 |
| Filter method | Correlation | 0.1065 | 0.5919 | 0.1633 | 0.5615 | 7 |
| Wrapper method | RFE-Random Forest | 0.0490 | 0.8909 | 0.0550 | 0.7312 | 9 |
| Wrapper method | Genetic algorithm | 0.0914 | 0.7590 | 0.0766 | 0.6918 | 12 |
| Embedded method | Auto-encoder | 0.0431 | 0.8984 | 0.0549 | 0.7422 | 8 |
| Embedded method | Lasso | 0.0652 | 0.8577 | 0.0789 | 0.6700 | 13 |
| Embedded method | Ridge | 0.0682 | 0.8505 | 0.0734 | 0.6692 | 9 |
| Proposed method | - | 0.0476 | 0.9233 | 0.0537 | 0.7689 | 6 |
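Both the Mutual Information filter baseline in the table above and, according to the highlights, the proposed method score candidate variables by their mutual information with the target. A minimal plug-in sketch uses the discrete definition $I(X;Y) = \sum_{x,y} p(x,y)\log\frac{p(x,y)}{p(x)\,p(y)}$ after equal-width binning. The 10-bin choice and the helper names are illustrative assumptions, not the authors' settings; scikit-learn's `mutual_info_regression` uses a k-nearest-neighbour estimator for continuous variables instead of binning.

```python
import math
from collections import Counter


def discretize(values, n_bins=10):
    """Map continuous values to equal-width bin indices 0..n_bins-1."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: everything falls in one bin
        return [0] * len(values)
    width = (hi - lo) / n_bins
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def mutual_information(x_bins, y_bins):
    """Plug-in estimate of I(X;Y) in nats from paired discrete labels."""
    n = len(x_bins)
    joint = Counter(zip(x_bins, y_bins))
    px, py = Counter(x_bins), Counter(y_bins)
    # p(x,y) * log(p(x,y) / (p(x) p(y))), written with raw counts.
    return sum((c / n) * math.log(c * n / (px[a] * py[b]))
               for (a, b), c in joint.items())


def rank_features(features, target, n_bins=10):
    """Score each named feature column against the target, highest MI first."""
    y = discretize(target, n_bins)
    scores = {name: mutual_information(discretize(col, n_bins), y)
              for name, col in features.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    current = [float(i) for i in range(100)]       # hypothetical current-like ramp
    voltage = [0.9 - 0.003 * i for i in current]   # hypothetical target
    flat = [1.0] * 100                             # uninformative column
    print(rank_features({"current": current, "flat": flat}, voltage))
```

Keeping the top-k entries of the ranking gives a filter-style subset like the one in the "Mutual Information" row.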

| Hyper-parameter | Description | Estimated value |
|---|---|---|
| n_estimators | Number of boosted decision trees | 1500 |
| max_depth | Maximum depth of each decision tree | 5 |
| learning_rate | Shrinkage (step size) applied to each new tree's contribution | 0.01 |
| colsample_bytree | Fraction of features (columns) sampled when building each tree | 0.060 |
| loss function | RMSE | - |
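The tuned values in the table above map directly onto keyword arguments of the scikit-learn-style `xgboost.XGBRegressor` constructor. A minimal sketch: `TUNED_PARAMS` and `build_regressor` are hypothetical names, and mapping the "RMSE" loss to `objective="reg:squarederror"` is an assumption, since squared error is the objective whose square root is the RMSE.

```python
# Values copied from the hyper-parameter table; "objective" is an assumption.
TUNED_PARAMS = {
    "n_estimators": 1500,
    "max_depth": 5,
    "learning_rate": 0.01,
    "colsample_bytree": 0.060,
    "objective": "reg:squarederror",
}


def build_regressor(**overrides):
    """Return an XGBRegressor configured with TUNED_PARAMS (overrides take priority)."""
    from xgboost import XGBRegressor  # imported lazily; requires the xgboost package
    return XGBRegressor(**{**TUNED_PARAMS, **overrides})
```

Typical usage would be `model = build_regressor(random_state=0)` followed by `model.fit(X_train, y_train)` on the selected features.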

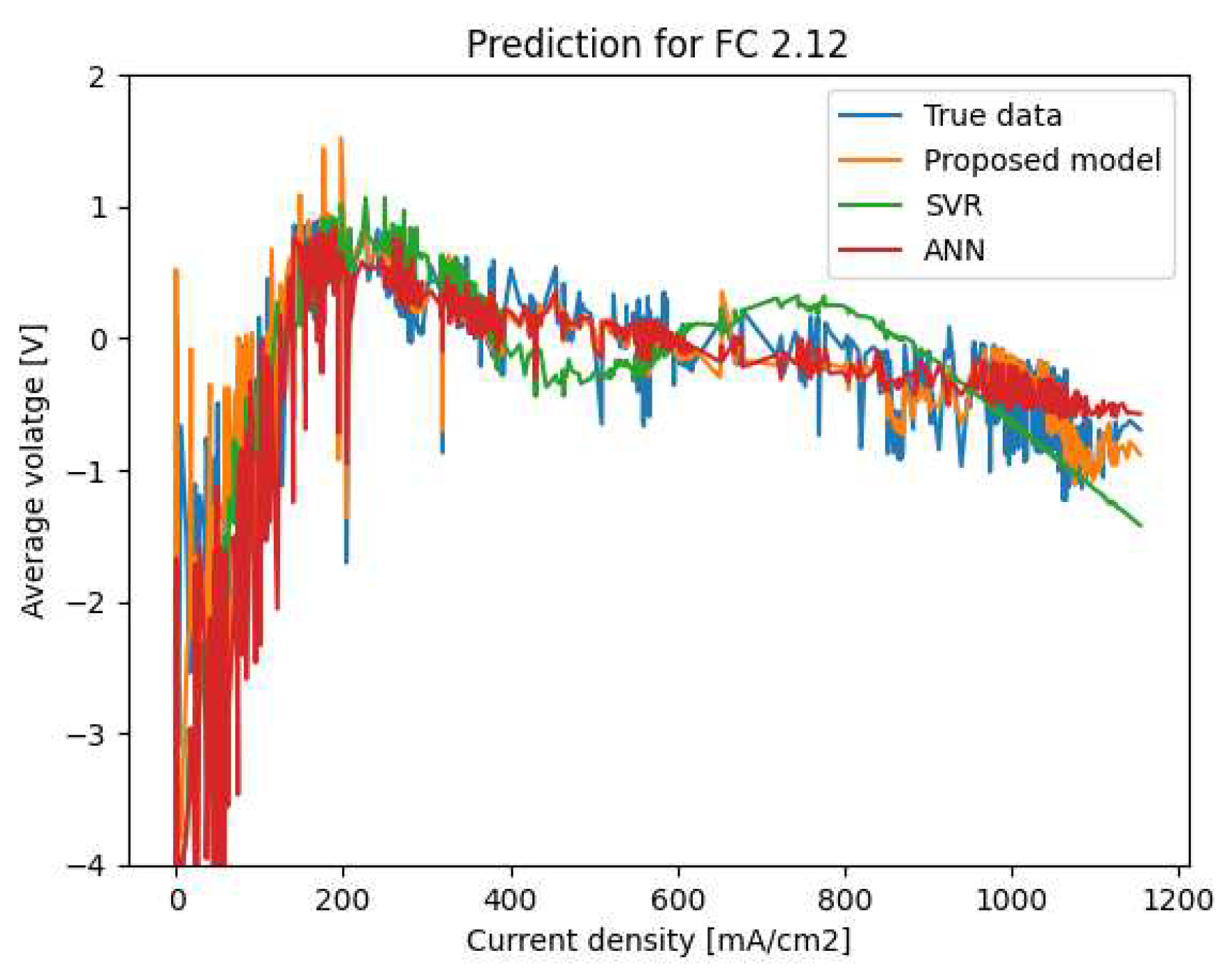

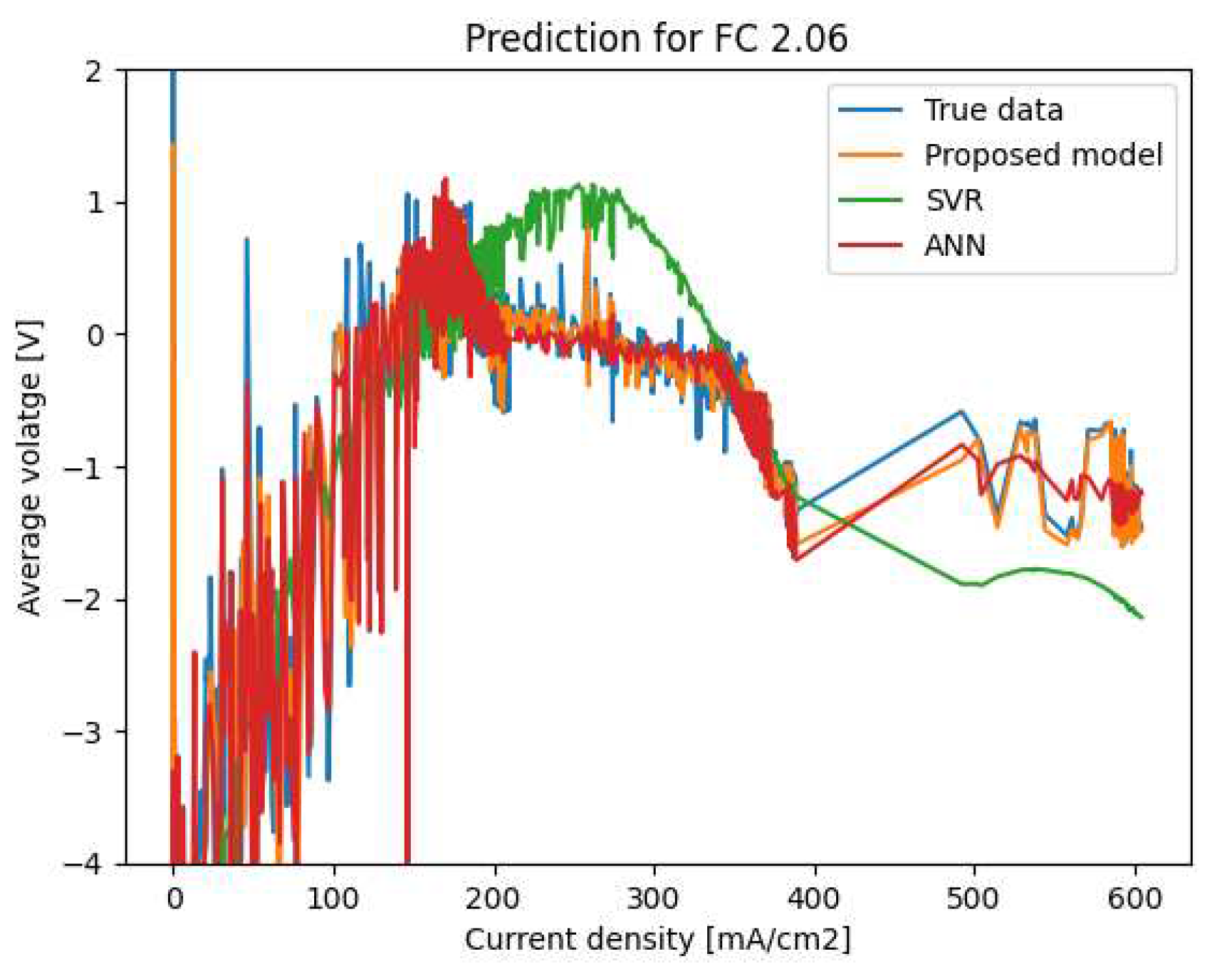

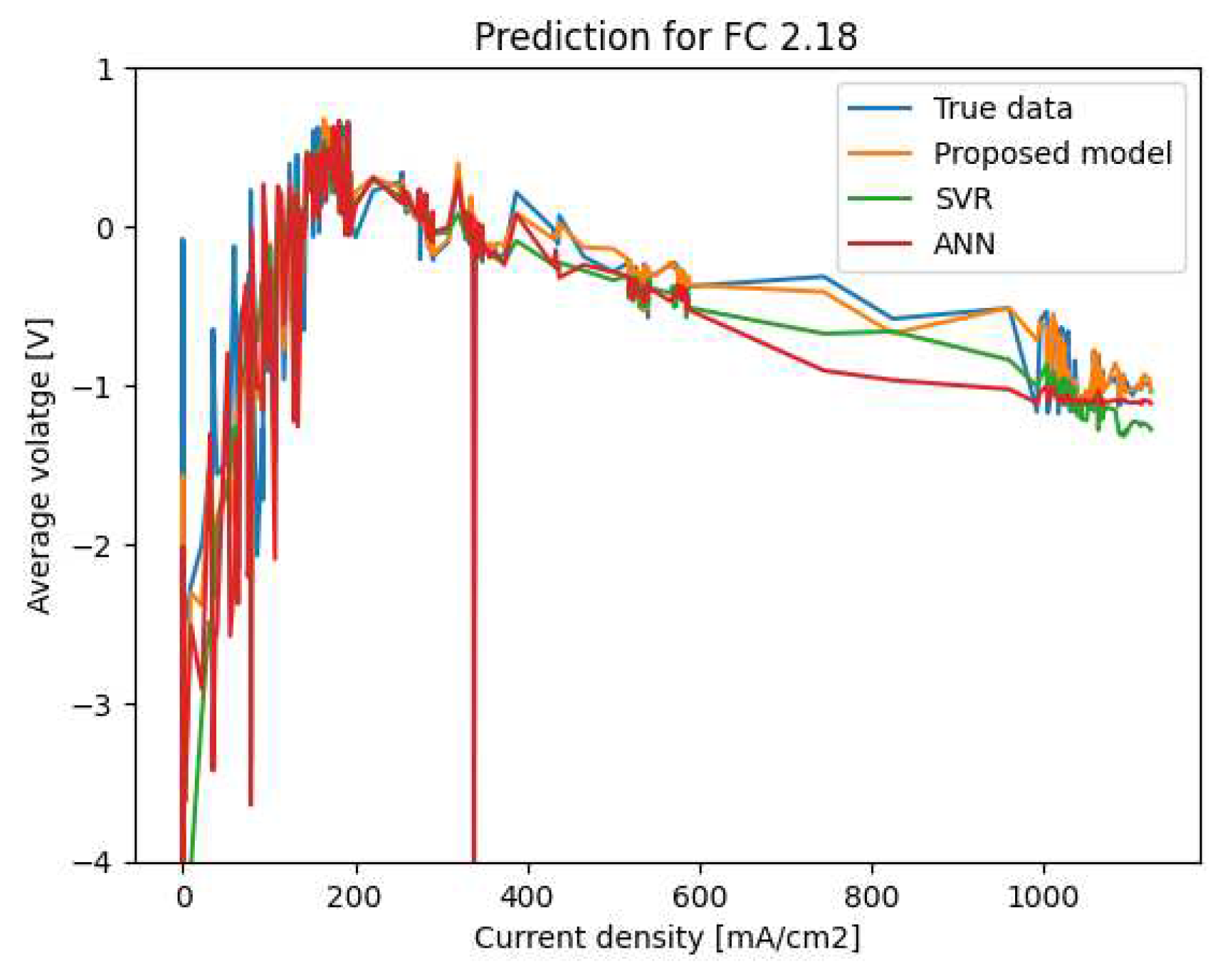

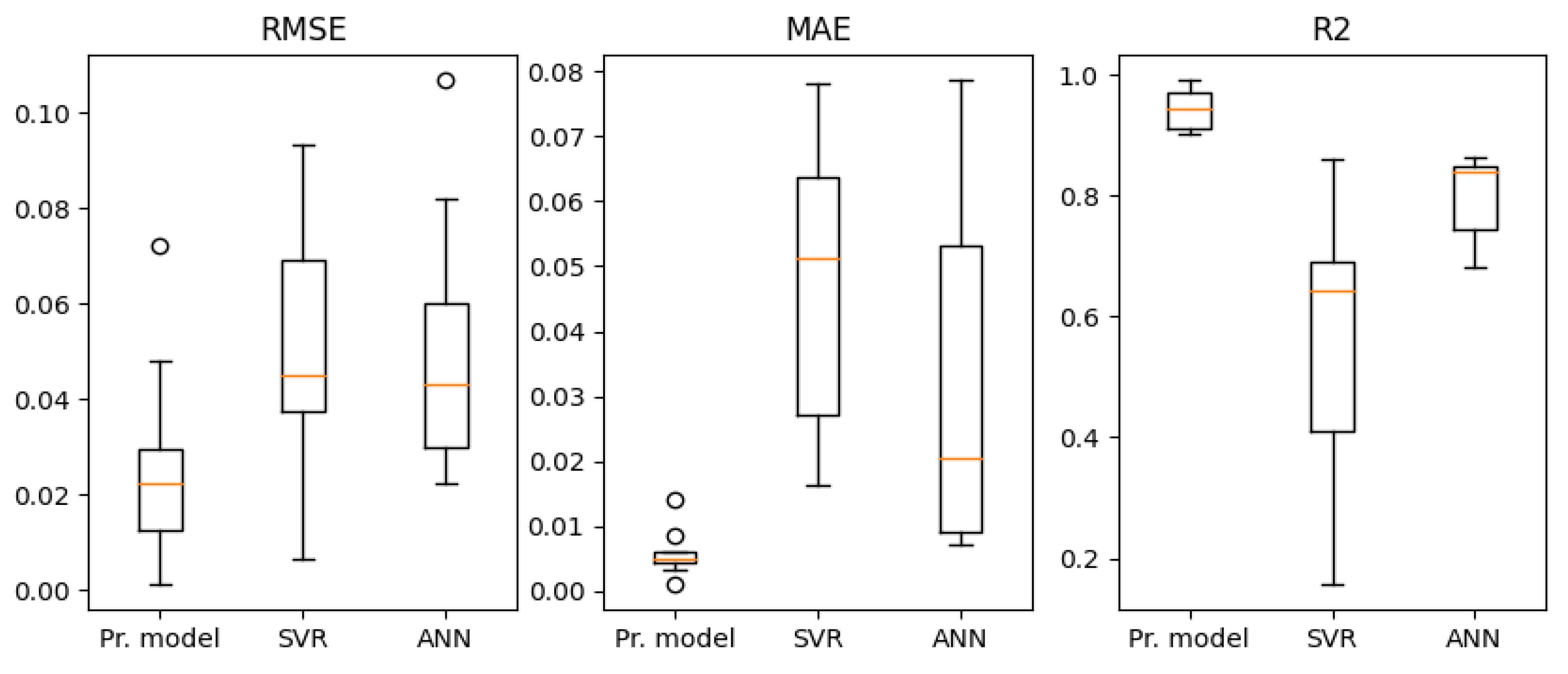

| Fuel cell | Proposed model RMSE | Proposed model MAE | Proposed model R | SVR RMSE | SVR MAE | SVR R | ANN RMSE | ANN MAE | ANN R |
|---|---|---|---|---|---|---|---|---|---|
| FC 2.21 | 0.0100 | 0.0050 | 0.9758 | 0.0350 | 0.0215 | 0.6015 | 0.0424 | 0.0172 | 0.8351 |
| FC 2.19 | 0.0220 | 0.0062 | 0.9512 | 0.0374 | 0.0162 | 0.8602 | 0.0410 | 0.0134 | 0.8476 |
| FC 2.18 | 0.0280 | 0.0085 | 0.9500 | 0.0707 | 0.0641 | 0.6625 | 0.0435 | 0.0641 | 0.8417 |
| FC 2.17 | 0.0194 | 0.0047 | 0.9071 | 0.0373 | 0.0267 | 0.6228 | 0.0236 | 0.0071 | 0.8483 |
| FC 2.16 | 0.0480 | 0.0141 | 0.9060 | 0.0932 | 0.0781 | 0.6622 | 0.0632 | 0.0236 | 0.8446 |
| FC 2.14 | 0.0053 | 0.0034 | 0.9809 | 0.0500 | 0.0433 | 0.2825 | 0.0507 | 0.0307 | 0.7236 |
| FC 2.13 | 0.0011 | 0.0010 | 0.9904 | 0.0064 | 0.063 | 0.3480 | 0.0821 | 0.0606 | 0.6987 |
| FC 2.12 | 0.0720 | 0.0049 | 0.9021 | 0.0921 | 0.0689 | 0.7629 | 0.1068 | 0.0786 | 0.6810 |
| FC 2.08 | 0.0230 | 0.0044 | 0.9192 | 0.0640 | 0.0593 | 0.1560 | 0.0223 | 0.0076 | 0.8024 |
| FC 2.06 | 0.0300 | 0.0055 | 0.9367 | 0.0400 | 0.0280 | 0.7012 | 0.0264 | 0.0076 | 0.8640 |
| Mean | 0.0258 | 0.0057 | 0.9419 | 0.0526 | 0.0469 | 0.5659 | 0.0502 | 0.0310 | 0.7987 |
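The comparison tables report RMSE, MAE and R. The definitions below are a minimal sketch of these criteria. Because the source does not state whether "R" denotes Pearson's correlation coefficient or the coefficient of determination, both are shown; the function names and the sample voltages are hypothetical.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def pearson_r(y_true, y_pred):
    """Pearson correlation coefficient between measurements and predictions."""
    n = len(y_true)
    mt, mp = sum(y_true) / n, sum(y_pred) / n
    cov = sum((t - mt) * (p - mp) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mt) ** 2 for t in y_true)
    var_p = sum((p - mp) ** 2 for p in y_pred)
    return cov / math.sqrt(var_t * var_p)


def r_squared(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mt = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mt) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    v_meas = [0.80, 0.76, 0.72, 0.69]  # hypothetical measured stack voltages (V)
    v_pred = [0.79, 0.77, 0.71, 0.70]  # hypothetical predictions (V)
    print(rmse(v_meas, v_pred), mae(v_meas, v_pred),
          pearson_r(v_meas, v_pred), r_squared(v_meas, v_pred))
```

Lower RMSE and MAE and higher R indicate a closer fit of the predicted polarization curve to the measurements.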


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).