Submitted: 14 September 2023

Posted: 15 September 2023


Abstract

Keywords:

1. Introduction

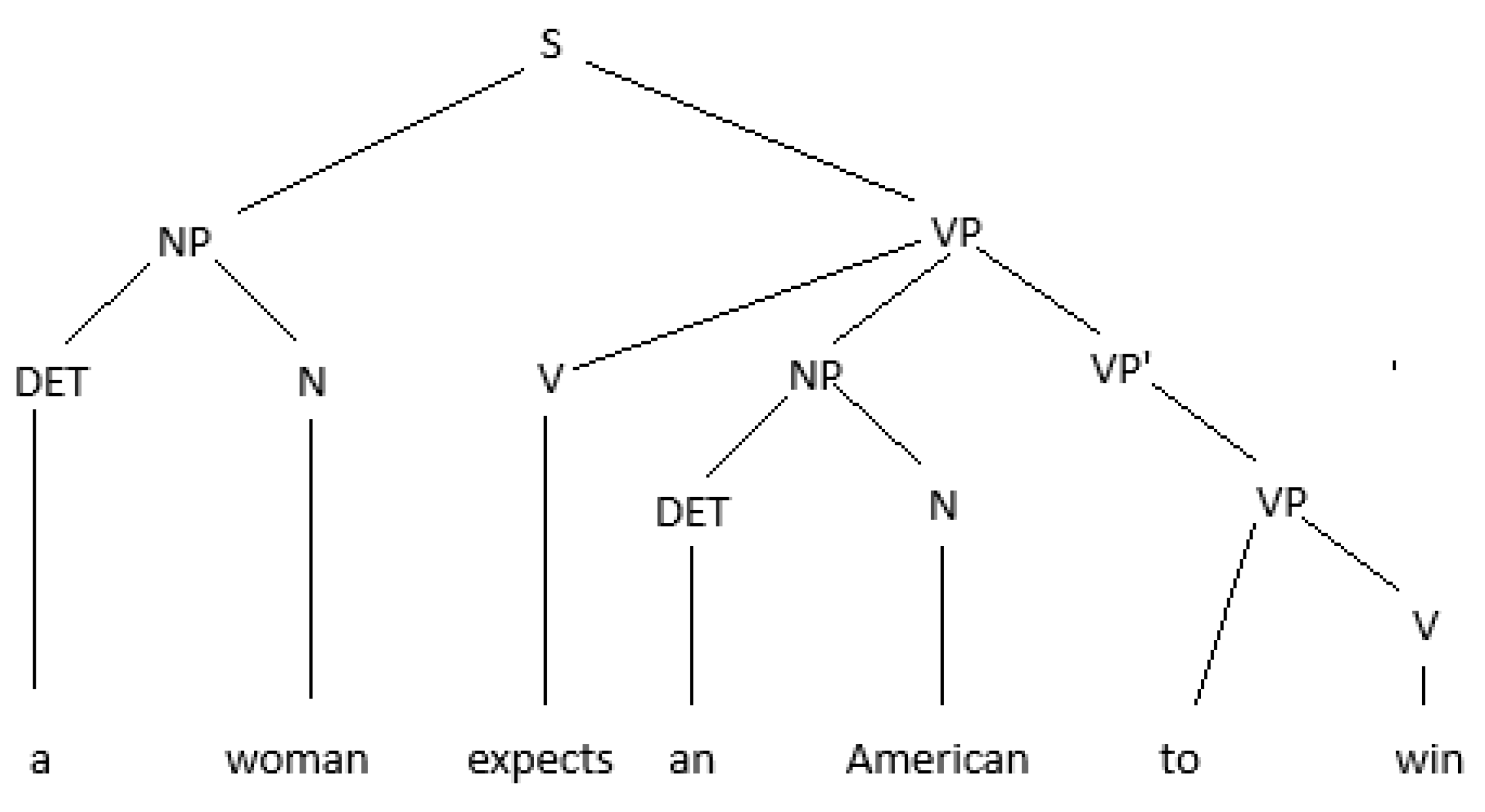

- Word order and meaning - syntactic analysis aims to extract the dependencies between the words of a document; if the word order changes, the sentence becomes difficult to understand;

- Retention of stop words - removing stop words can change the meaning of a sentence altogether;

- Word morphology - stemming and lemmatization reduce words to their base form, thereby altering the grammar of the sentence;

- Parts of speech of words in a sentence - identifying the correct part of speech of each word is essential.
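The effect of the first three points can be seen with a deliberately naive preprocessing step. The stop-word list and the suffix-stripping stemmer in this sketch are hypothetical stand-ins chosen for illustration, not the tools used in this paper:

```javascript
// Hypothetical, deliberately naive preprocessing (not part of the paper's pipeline)
const STOP_WORDS = new Set(["the", "a", "does", "not", "is", "on"]);
const naiveStem = (word) => word.replace(/(es|s|ed|ing)$/, "");

const tokens = "the boy does not eat the apples".split(" ");
const withoutStopWords = tokens.filter((t) => !STOP_WORDS.has(t));
const stemmed = withoutStopWords.map(naiveStem);

console.log(withoutStopWords.join(" ")); // boy eat apples
console.log(stemmed.join(" "));          // boy eat appl
```

Removing stop words drops the negation, so the output asserts the opposite of the input, and stemming produces "appl", which no grammar lexicon entry would match.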

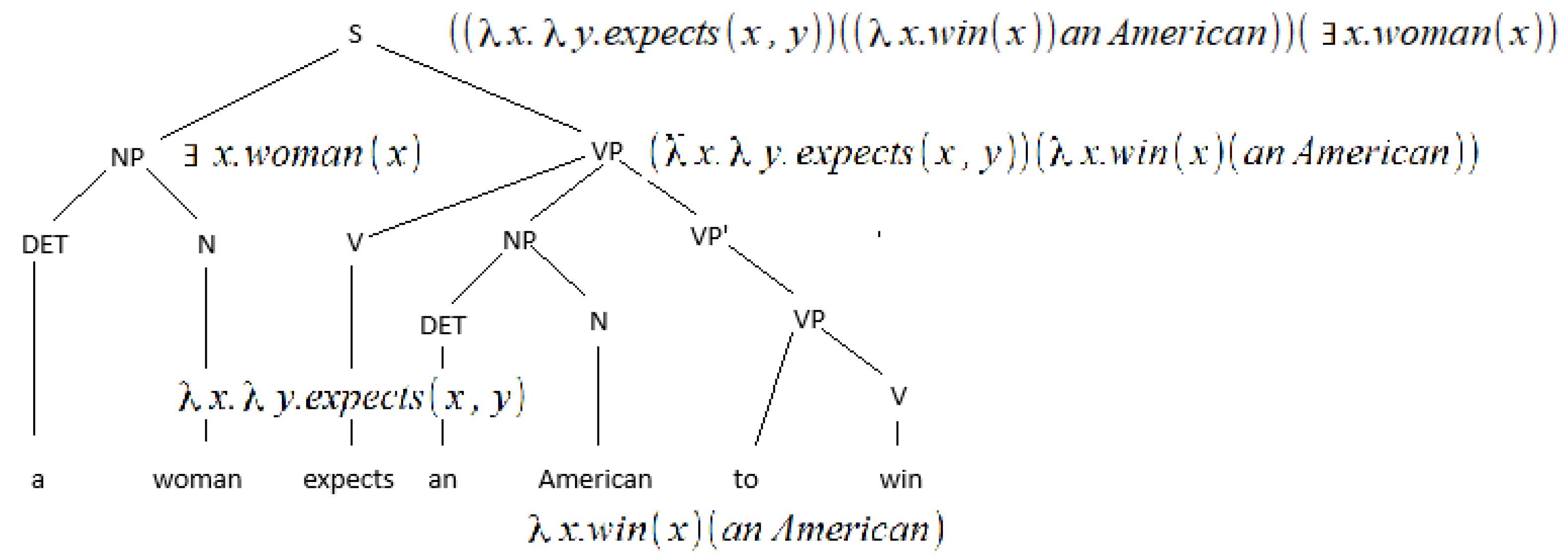

- Interpreting determiners, e.g. "a man" is not the same as "there is a man";
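One way to see the difference is to treat the determiner "a" as a generalized quantifier: a function that takes a noun meaning and returns a function still waiting for a predicate, whereas "there is a man" is already a complete statement. The toy model below (its individuals and predicates) is a hypothetical assumption for illustration:

```javascript
// Hypothetical toy model (not from the paper)
const domain = ["eesha", "eeshan", "rex"];
const man = (x) => x === "eeshan";
const runs = (x) => x === "rex";

// "a" as a generalized quantifier: a(N)(P) holds iff some individual is both N and P
const a = (N) => (P) => domain.some((x) => N(x) && P(x));

const aMan = a(man);                  // still a function, waiting for a predicate
const thereIsAMan = domain.some(man); // already a complete truth value

console.log(typeof aMan);                     // function
console.log(thereIsAMan);                     // true
console.log(aMan(runs));                      // false: the only man in the model does not run
console.log(aMan(() => true) === thereIsAMan); // true: "there is a man" = "a man" + a trivial predicate
```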

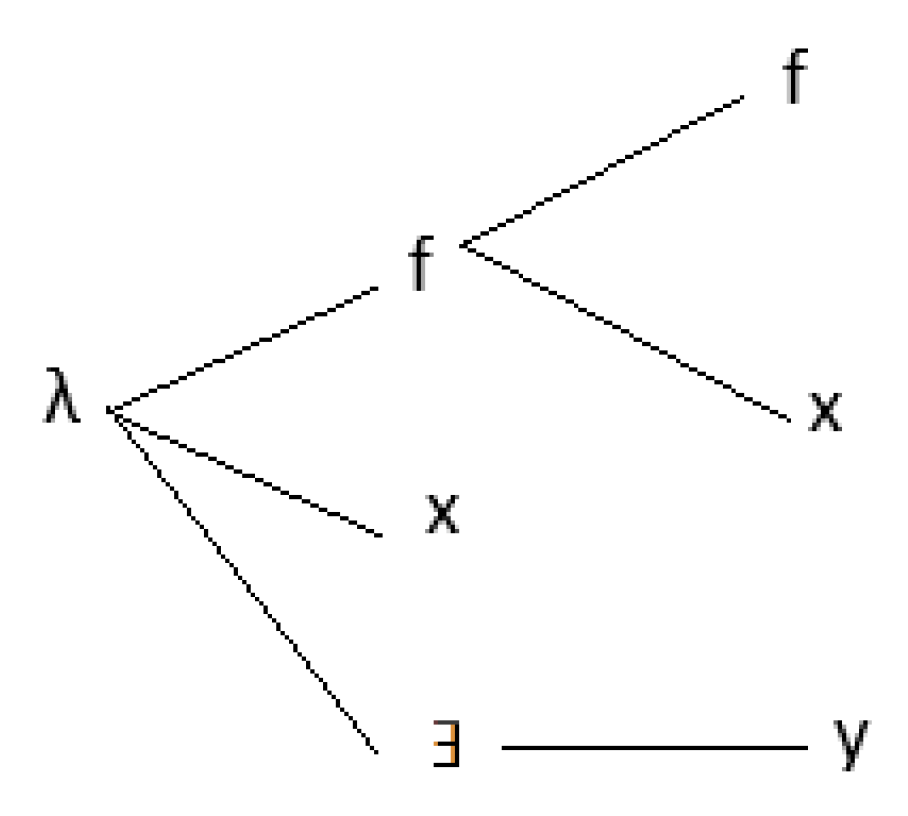

- Type raising, e.g. an argument may become the function, as in callback functions or the inversion of control design pattern;

- Transitive verbs, e.g. the following expression may improve the lexicon in this special case, and the expression will do the same for intransitive verbs;

- Quantifier and scope ambiguity, e.g. "In this country, a woman gives birth every 15 minutes." and "Every man loves a woman", respectively;
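These three phenomena can be sketched with JavaScript closures in the lambda-calculus style: a transitive verb as a curried function, type raising as turning an individual into a function over predicates (the same shape as a value handed to a callback), and the two scope readings of "Every man loves a woman" as two nestings of every and some. The model is a hypothetical assumption, not data from the paper:

```javascript
// Hypothetical toy model (not from the paper): two men, two women, one relation
const men = ["adam", "bob"];
const women = ["carol", "dana"];
const lovesPairs = new Set(["adam:carol", "bob:dana"]);

// Transitive verb as a curried function: it takes its object first, then its subject
const loves = (object) => (subject) => lovesPairs.has(`${subject}:${object}`);

// Type raising: an individual becomes a function that takes a predicate as argument
const raise = (x) => (predicate) => predicate(x);

// Two readings of "Every man loves a woman"
const everyWide = men.every((m) => women.some((w) => loves(w)(m))); // each man loves some woman
const aWide = women.some((w) => men.every((m) => loves(w)(m)));     // one woman is loved by every man

console.log(raise("adam")(loves("carol"))); // true, same as loves("carol")("adam")
console.log(everyWide, aWide);              // true false
```

In this model the two readings differ in truth value, which is exactly what makes the sentence ambiguous.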

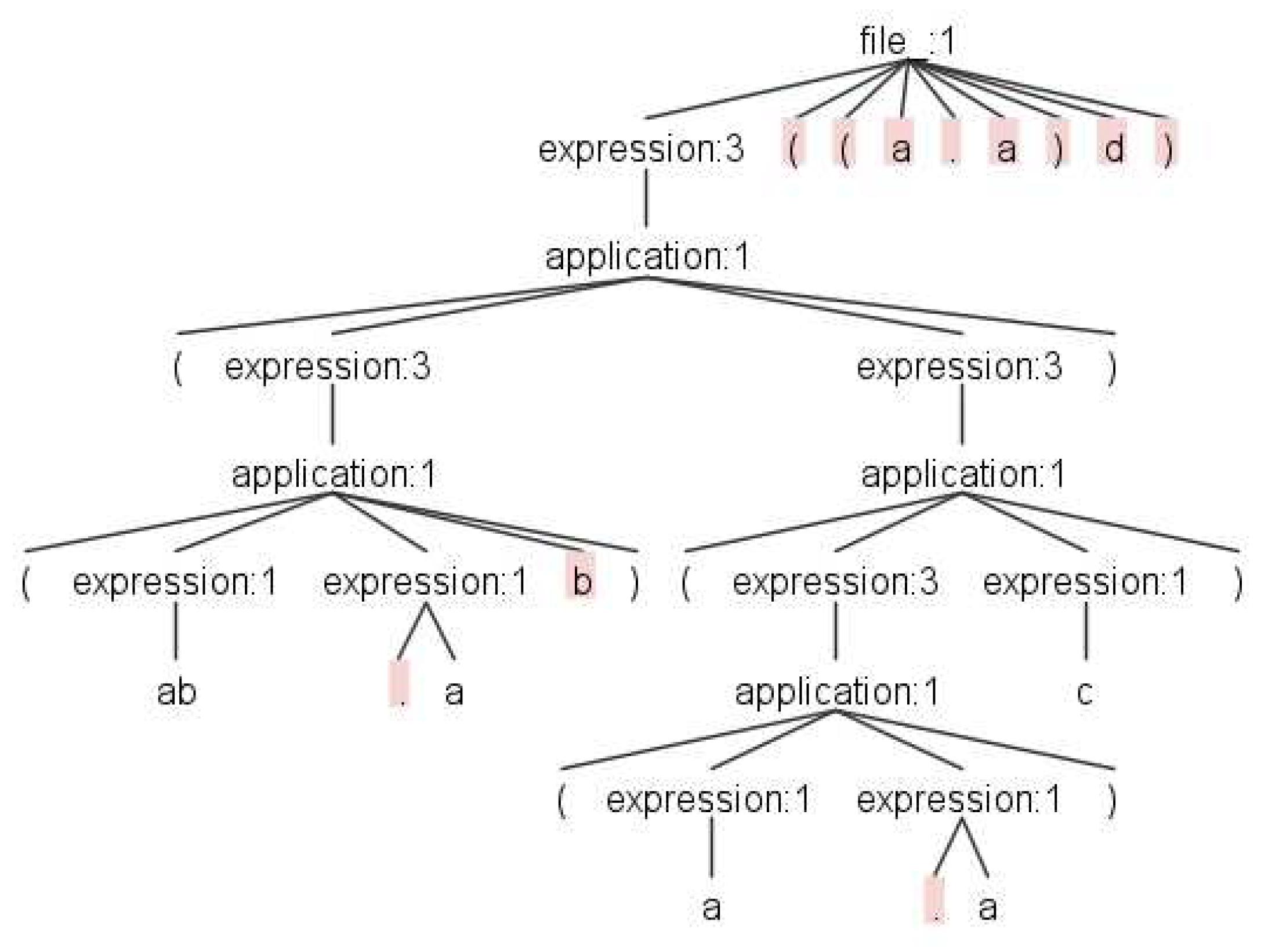

- Coordination or summing up, e.g. .
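Coordination can be illustrated, as a hypothetical sketch rather than the formalization used in this paper, by lifting "and" pointwise over predicates and by letting coordinated names distribute a predicate over both conjuncts. The toy model is an assumption, and only the distributive reading is covered:

```javascript
// Hypothetical toy model (not from the paper)
const runs = (x) => ["eesha", "eeshan"].includes(x);
const laughs = (x) => x === "eesha";

// Generalized conjunction: "and" applied to two predicates yields a new predicate
const and = (P, Q) => (x) => P(x) && Q(x);
// Coordinated (type-raised) names: the predicate must hold of both
const andNP = (a, b) => (P) => P(a) && P(b);

const runsAndLaughs = and(runs, laughs);
console.log(runsAndLaughs("eesha"));         // true:  "Eesha runs and laughs"
console.log(runsAndLaughs("eeshan"));        // false: Eeshan runs but does not laugh
console.log(andNP("eesha", "eeshan")(runs)); // true:  "Eesha and Eeshan run"
```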

2. Materials

- the identification of the special grammatical relation of the subject position in any sentence analyzed by this clause with the NP that appears in it;

- the identification of all grammatical relations of the sentence with those of the VP.
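These two identifications correspond to the functional annotations of the rule S → NP VP in Lexical-Functional Grammar: (↑SUBJ) = ↓ on the NP and ↑ = ↓ on the VP. The sketch approximates them with a hypothetical, much simplified unification of plain JavaScript objects standing in for f-structures; the feature names and sample structures are assumptions made for illustration:

```javascript
// Simplified unification of f-structures represented as nested objects (hypothetical sketch)
function unify(a, b) {
  const result = { ...a };
  for (const [key, value] of Object.entries(b)) {
    if (!(key in result)) {
      result[key] = value;
    } else if (typeof result[key] === "object" && typeof value === "object") {
      result[key] = unify(result[key], value);
    } else if (result[key] !== value) {
      throw new Error(`Unification failure on ${key}`);
    }
  }
  return result;
}

// S -> NP VP: up = down for the VP, (up SUBJ) = down for the NP
const sentenceFStructure = (npF, vpF) => unify(vpF, { SUBJ: npF });

const np = { PRED: "boy", NUM: "sg", SPEC: "the" };
const vp = {
  PRED: "eat<SUBJ,OBJ>",
  TENSE: "present",
  SUBJ: { NUM: "sg" }, // present-tense "eats" requires a singular subject
  OBJ: { PRED: "apple", NUM: "pl", SPEC: "the" },
};

console.log(JSON.stringify(sentenceFStructure(np, vp)));
```

Because the VP already constrains SUBJ, a plural subject NP fails to unify, which is how agreement falls out of the two identifications.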

3. Methodology

-

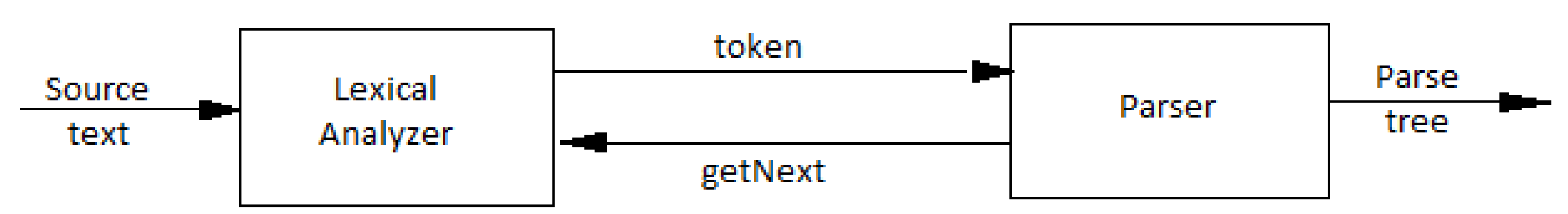

The required packages and modules are imported using the require function. The wink-nlp package is imported as winkNLP, and the English language model is imported accordingly:// Load required packages and modules:const winkNLP = require(’wink-nlp’);const model = require(’wink-eng-lite-web-model’);const pl = require("tau-prolog");.

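The tau-prolog package, imported as pl, is used to create a new session with pl.create(1000); the argument limits the number of resolution steps the interpreter may perform while searching for an answer:

```javascript
// Create a new session
const session = pl.create(1000);
```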

The winkNLP function is invoked with the imported model to instantiate the nlp object:

```javascript
// Instantiate winkNLP
const nlp = winkNLP(model);
```

Two helpers are then defined: its, a shorthand for nlp.its, and showAnswer, a function that logs each answer of the tau-prolog session formatted by session.format_answer:

```javascript
// Define helper functions
const its = nlp.its;
const showAnswer = (x) => console.log(session.format_answer(x));
```

The inputItem variable is assigned the first command-line argument passed to the Node.js script, process.argv[2] (process.argv[0] and process.argv[1] hold the paths of the Node.js executable and of the script):

```javascript
// Get command-line argument, e.g.
//   'the boy eats the apples. the woman runs the alley'
//   'in the back. a woman runs freely on the alley'
const inputItem = process.argv[2];
```

-

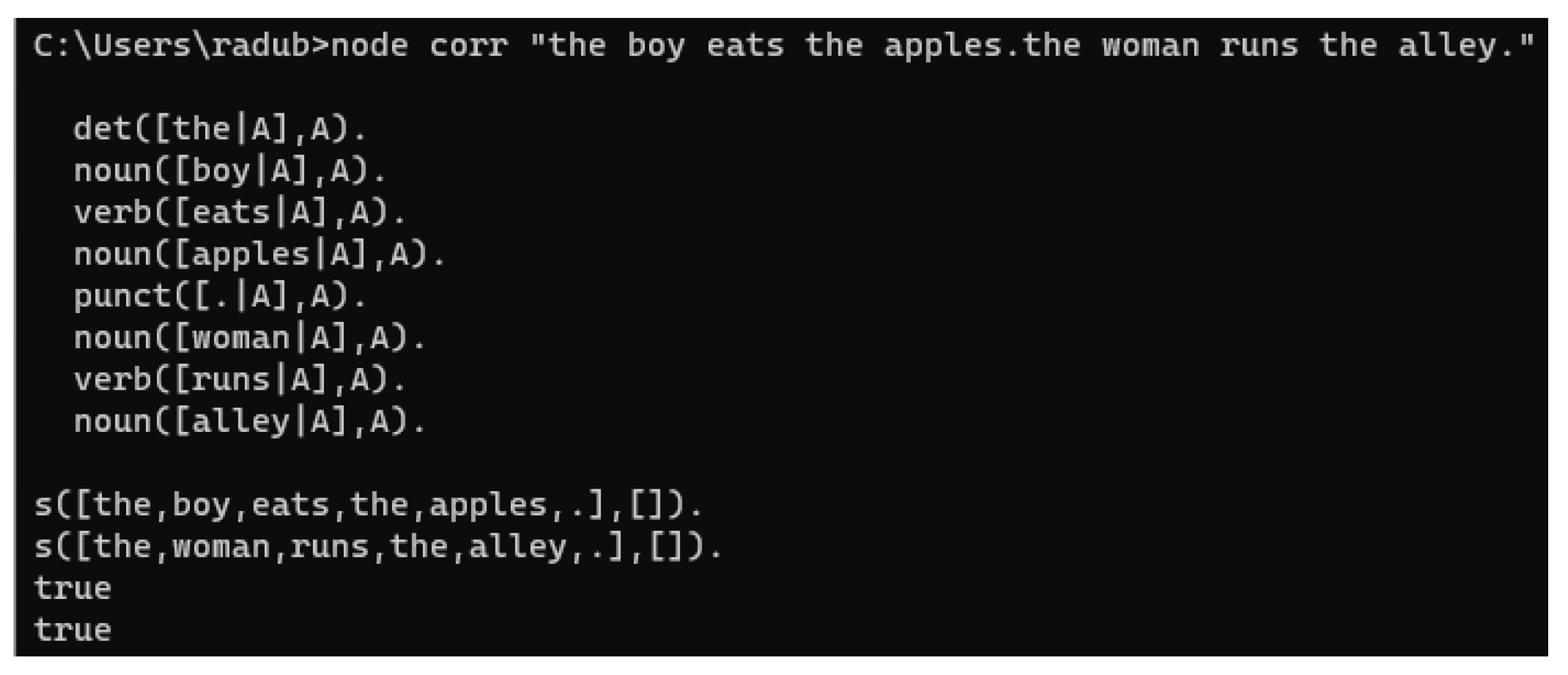

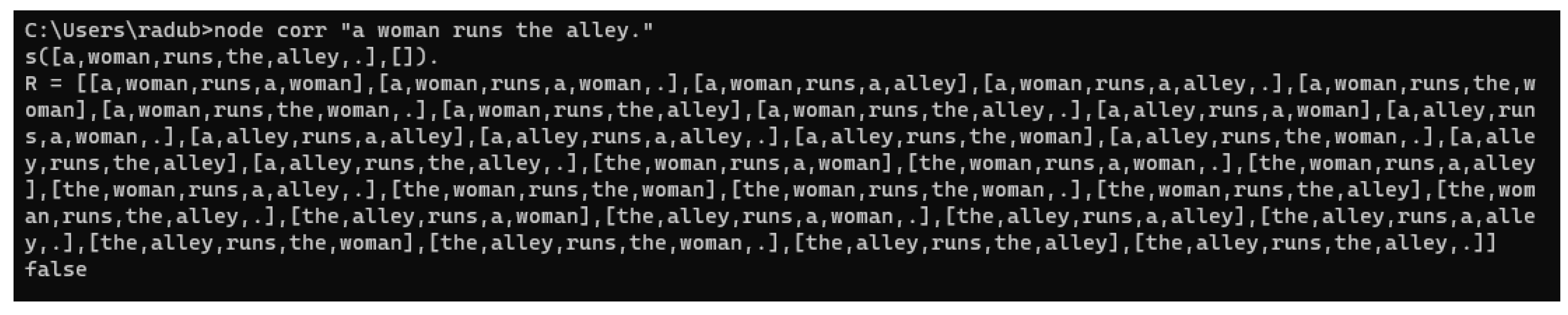

The program variable is assigned a Prolog program represented as a string. It defines rules for sentence structure, including noun phrases, verb phrases, and intransitive verbs. The program also includes rules for intransitive verbs, e.g. "runs" and "laughs":[14]// Define the program and goal:let program = `s(A,B) :- np(A,C), vp(C,D), punct(D,B).np(A,B) :- proper_noun(A,B).np(A,B) :- det(A,C), noun(C,B).vp(A,B) :- verb(A,C), np(C,B).vp(A, B) :- intransitive_verb(A, B).proper_noun([Eesha|A],A).proper_noun([Eeshan|A],A).intransitive_verb([runs|A],A). %intransitive_verb([laughs|A],A). %punct(A,A).`;
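For readers less familiar with difference lists, the same grammar can be re-expressed in plain JavaScript. This is a hypothetical illustration, not part of the paper's program: every nonterminal maps a start position in the token array to the list of positions where it can end, which plays the role of the pair A, B in s(A,B), and collecting all end positions stands in for Prolog's backtracking. The small lexicon is hand-written and assumed:

```javascript
// Hypothetical hand-written lexicon (the paper derives det/noun/verb from wink-nlp tags)
const lexicon = {
  det: new Set(["the", "a"]),
  noun: new Set(["boy", "apples", "woman", "alley"]),
  verb: new Set(["eats", "runs"]),
  proper_noun: new Set(["eesha", "eeshan"]),
  intransitive_verb: new Set(["runs", "laughs"]),
};

function makeSentenceParser(tokens) {
  // Terminal: consumes one token if it belongs to the category, like det([the|A],A)
  const word = (cat) => (i) => (i < tokens.length && lexicon[cat].has(tokens[i]) ? [i + 1] : []);
  // Sequence: thread end positions from one part into the next, like C and D in s(A,B)
  const seq = (...parts) => (i) => parts.reduce((ends, part) => ends.flatMap((e) => part(e)), [i]);
  // Alternatives: keep the end positions of every clause, like backtracking over clauses
  const alt = (...parts) => (i) => parts.flatMap((part) => part(i));

  const np = alt(word("proper_noun"), seq(word("det"), word("noun")));
  const vp = alt(seq(word("verb"), np), word("intransitive_verb"));
  // punct(A,A) consumes nothing; a generated punct clause may also consume "."
  const punct = (i) => (tokens[i] === "." ? [i + 1, i] : [i]);
  return seq(np, vp, punct);
}

// Counterpart of the goal s(Tokens, []): some parse must end after the last token
const recognizes = (tokens) => makeSentenceParser(tokens)(0).includes(tokens.length);

console.log(recognizes(["the", "boy", "eats", "the", "apples", "."])); // true
console.log(recognizes(["eesha", "laughs"]));                          // true
console.log(recognizes(["boy", "the", "eats"]));                       // false
```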

-

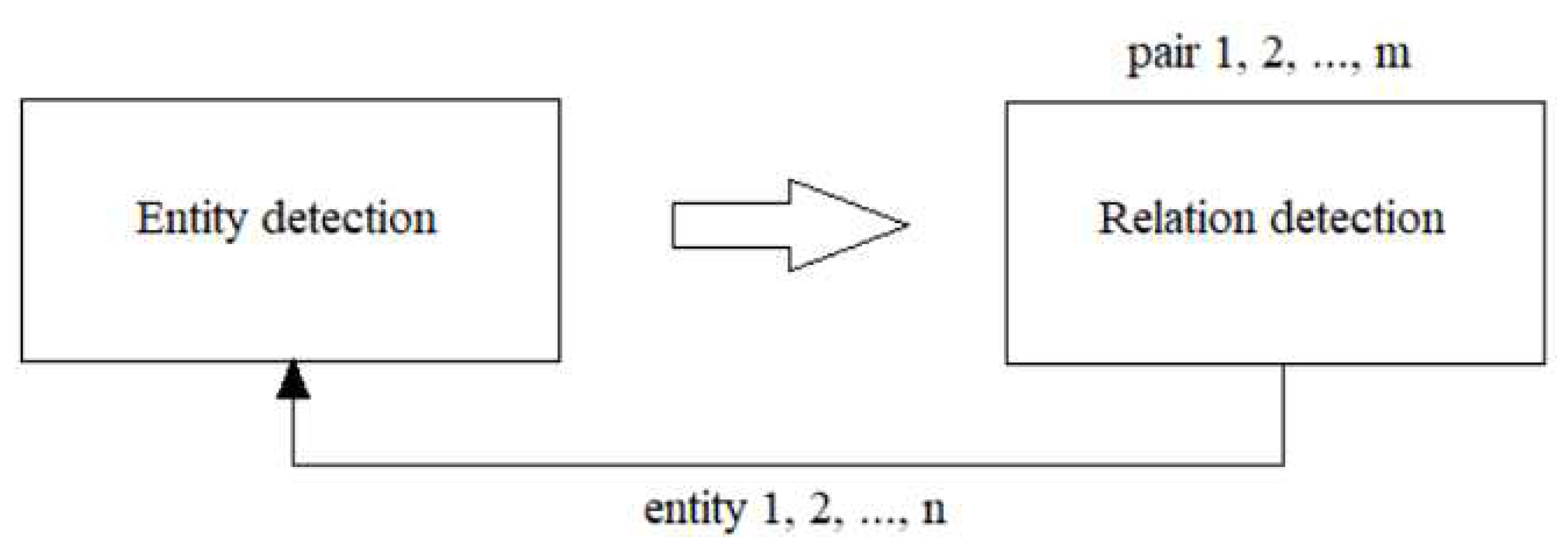

The nlp.readDoc function is used to create a document object from the inputItem. The code then iterates over each sentence and token in the document, extracting the type of entity and its part of speech:const doc = nlp.readDoc(inputItem);let entityMap = new Map();// Extract entities from the text:doc.sentences().each((sentence) => {sentence.tokens().each((token) => {entityMap.set(token.out(its.value), token.out(its.pos));});});
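Because entityMap is keyed by the word alone, a word tagged differently in two sentences keeps only its last tag, so lexical ambiguity is lost before it reaches Prolog. This sketch uses hand-written (word, tag) pairs standing in for wink-nlp output and contrasts that behaviour with a hypothetical alternative that keeps every tag seen for a word:

```javascript
// Hypothetical (word, tag) pairs for "eesha can fish . the fish swims ."
const tagged = [
  ["eesha", "PROPN"], ["can", "AUX"], ["fish", "VERB"], [".", "PUNCT"],
  ["the", "DET"], ["fish", "NOUN"], ["swims", "VERB"], [".", "PUNCT"],
];

// One tag per word, as in entityMap: a later tag silently replaces an earlier one
const lastTag = new Map();
for (const [word, pos] of tagged) lastTag.set(word, pos);

// Alternative: keep every tag seen for a word
const allTags = new Map();
for (const [word, pos] of tagged) {
  if (!allTags.has(word)) allTags.set(word, new Set());
  allTags.get(word).add(pos);
}

console.log(lastTag.get("fish"));                  // NOUN
console.log([...allTags.get("fish")].join(", ")); // VERB, NOUN
```

Emitting one clause per (word, tag) pair from allTags would let Prolog's backtracking pick the reading that fits the grammar.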

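The Map entries are then converted into Prolog clauses, one per word, in which the lowercased tag names the predicate and the lowercased word, written as a quoted atom, is the terminal the clause consumes:

```javascript
// Convert map entries into clauses such as noun(S0,S) :- S0=['boy'|S].
// Quoting keeps capitalised words, punctuation and apostrophes as plain atoms.
const mapEntriesToString = (entries) =>
  Array.from(entries, ([k, v]) =>
    `\n${v.toLowerCase()}(S0,S) :- S0=['${k.toLowerCase().replace(/'/g, "''")}'|S].`
  ).join("") + "\n";
// console.log(mapEntriesToString([...entityMap.entries()]));
```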

The generated Prolog clauses are appended to the program string:

```javascript
program += mapEntriesToString([...entityMap.entries()]);
```

-

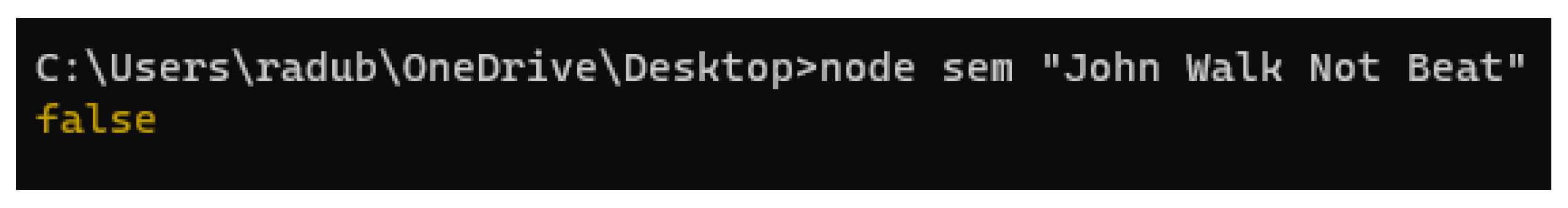

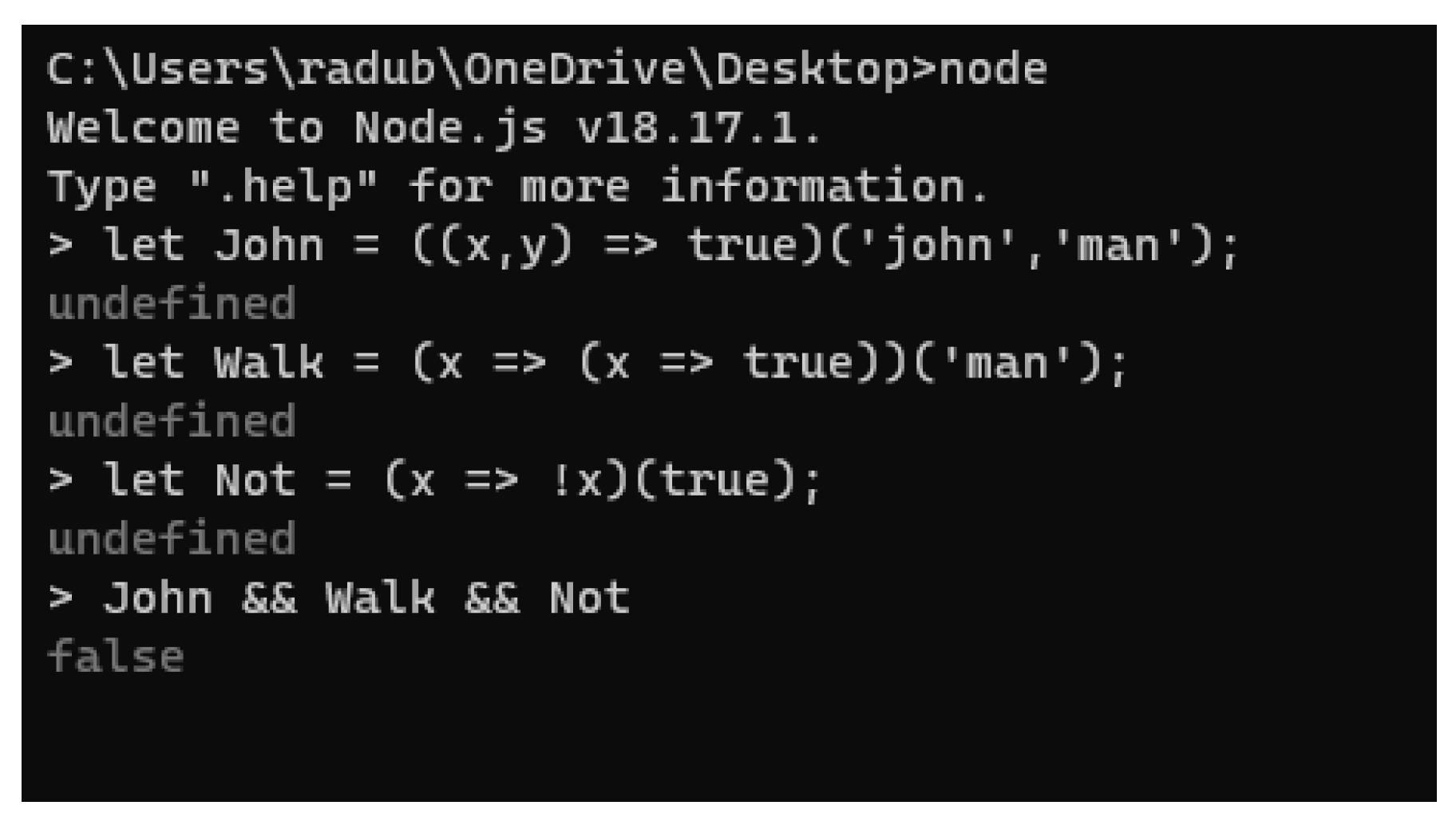

The session.consult function is used to load the Prolog program into the tau-prolog session. Then, the session.query function is used to query the loaded program with the specified goals. The session.answers function is used to display the answers obtained from the query:doc.sentences().each((sentence) => {let goals = `s([${sentence.tokens().out()}],[]).`;session.consult(program,{success: function() {session.query(goals, {success: function() {session.answers(showAnswer);}})}})});
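The quoting step matters because each goal is parsed as Prolog text. The following sketch isolates it in a hypothetical buildGoal helper (not part of the paper's code) and contrasts it with interpolating the token array directly, which yields unquoted words: the capitalised The becomes a variable that matches any word, and a token containing an apostrophe, such as n't, opens an unterminated quoted atom:

```javascript
// Hypothetical helpers for turning a token array into the goal s(Tokens, [])
const toAtom = (token) => `'${token.toLowerCase().replace(/'/g, "''")}'`;
const buildGoal = (tokens) => `s([${tokens.map(toAtom).join(",")}],[]).`;
// Direct interpolation, as in s([${tokens}],[]): the array is joined with commas, unquoted
const naiveGoal = (tokens) => `s([${tokens}],[]).`;

console.log(buildGoal(["The", "boy", "eats", "the", "apples", "."]));
// s(['the','boy','eats','the','apples','.'],[]).
console.log(buildGoal(["Eesha", "does", "n't", "run"]));
// s(['eesha','does','n''t','run'],[]).
console.log(naiveGoal(["The", "boy", "."]));
// s([The,boy,.],[]).
```

Doubling the apostrophe is the ISO Prolog way of writing a quote inside a quoted atom, so n't is read back as the three-character atom n't.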

4. Results

5. Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DCG | Definite Clause Grammar |
| NLP | Natural Language Processing |
| NLU | Natural Language Understanding |
| AI | Artificial Intelligence |
| SVO | Subject Verb Object |
| VSO | Verb Subject Object |
| OSV | Object Subject Verb |
| OS | Operating System |
| DOAJ | Directory of Open Access Journals |
| LFG | Lexical-Functional Grammar |
| LP | Logic Programming |
| ANTLR | ANother Tool for Language Recognition |

References

- Seuren, P. The Chomsky Hierarchy in Perspective. In From Whorf to Montague: Explorations in the Theory of Language; Oxford University Press: Oxford, UK, 2013; pp. 205–238.

- NLP: Building a Grammatical Error Correction Model. Available online: https://towardsdatascience.com/nlp-building-a-grammatical-error-correction-model-deep-learning-analytics-c914c3a8331b (accessed on 16 August 2023).

- Turaev, S.; Abdulghafor, R.; Amer Alwan, A.; Abd Almisreb, A.; Gulzar, Y. Binary Context-Free Grammars. Symmetry 2020, 12, 1209.

- Syntactic Analysis - Guide to Master Natural Language Processing (Part 11). Available online: https://www.analyticsvidhya.com/blog/2021/06/part-11-step-by-step-guide-to-master-nlp-syntactic-analysis (accessed on 16 August 2023).

- Relation Extraction and Entity Extraction in Text using NLP. Available online: https://nikhilsrihari-nik.medium.com/identifying-entities-and-their-relations-in-text-76efa8c18194 (accessed on 16 August 2023).

- Discourse Representation Theory. Available online: https://plato.stanford.edu/entries/discourse-representation-theory (accessed on 16 August 2023).

- Introduction to Semantic Parsing. Available online: https://stanford.edu/class/cs224u/2018/materials/cs224u-2018-intro-semparse.pdf (accessed on 22 August 2023).

- Bercaru, G.; Truică, C.-O.; Chiru, C.-G.; Rebedea, T. Improving Intent Classification Using Unlabeled Data from Large Corpora. Mathematics 2023, 11, 769.

- Morales, J.F.; Haemmerlé, R.; Carro, M.; Hermenegildo, M.V. Lightweight Compilation of (C)LP to JavaScript. Theory and Practice of Logic Programming 2012, 12, 755–773.

- An Open Source Prolog Interpreter in JavaScript. Available online: https://socket.dev/npm/package/tau-prolog (accessed on 16 August 2023).

- Frey, W.; Reyle, U. A Prolog Implementation of Lexical Functional Grammar as a Base for a Natural Language Processing System. In Proceedings of the Conference of the European Chapter of the Association for Computational Linguistics, 1983. URL: https://api.semanticscholar. 1716.

- Logic Programming in JavaScript Using LogicJS. Available online: https://abdelrahman.sh/2022/05/logic-programming-in-javascript (accessed on 16 August 2023).

- Saint-Dizier, P. An Approach to Natural-Language Semantics in Logic Programming. Journal of Logic Programming 1986, 3, 329–356.

- Kamath, R.; Jamsandekar, S.; Kamat, R. Exploiting Prolog and Natural Language Processing for Simple English Grammar. In Proceedings of the National Seminar NSRTIT-2015, CSIBER, Kolhapur, India, 20 March 2015. Available online: https://www.researchgate.net/publication/280136353_Exploiting_Prolog_and_Natural_Language_Processing_for_Simple_English_Grammar.

- Paulson, L.C. Writing Interpreters for the λ-Calculus. In ML for the Working Programmer; Cambridge University Press: Cambridge, UK, 2007.

- Parr, T. Introducing ANTLR and Computer Languages. In The Definitive ANTLR 4 Reference; Davidson Pfalzer, 2013; p. 328. ISBN 9781934356999.

- An Introduction to the Roots of Functional Programming. Available online: https://medium.com/@ahlechandre/lambda-calculus-with-javascript-897f7e81f259 (accessed on 28 August 2023).

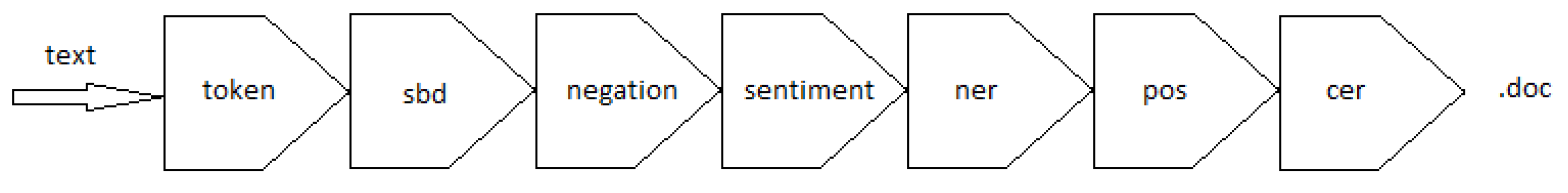

| Stage | Description |
|---|---|
| tokenization | Splits text into tokens. |
| sbd | Sentence boundary detection: determines the span of each sentence in terms of start and end token indexes. |
| negation | Negation handling: sets the negation flag for every token whose meaning is negated due to a "not" word. |
| sentiment | Computes the sentiment score of each sentence and of the entire document. |
| ner | Named entity recognition: detects all named entities and determines their type and span. |
| pos | Performs part-of-speech tagging. |
| cer | Custom entity recognition: detects all custom entities and their type and span. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).