4.3.3. Quantitative Evaluation Results

Quantitative evaluation expresses registration outcomes in numerical terms. Commonly used metrics include the number of control point pairs ($N_{red}$), the root mean square error (RMSE) of all control point residuals ($RMS_{all}$), and the RMSE calculated with the leave-one-out method ($RMS_{LOO}$). These metrics are frequently normalized to the pixel size to facilitate comparisons [22].

The transformation matrix ($T$) for the control point pairs is estimated by the least squares method, and the residuals of the control point pairs computed from this matrix give $RMS_{all}$ and $RMS_{LOO}$. More accurately matched point pairs lead to a better estimate of the parameters of the geometric transformation model and therefore to better performance [12]. However, a larger number of point pairs matched by a registration method does not necessarily guarantee a lower RMSE, because errors can still persist in the point pairs matched by the algorithm.
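The two RMSE measures described above can be sketched as follows. This is a minimal illustration, not the implementation used in the paper: it assumes a six-parameter affine model for $T$, and the helper names (`solve3`, `fit_affine`, `rms_all`, `rms_loo`) are hypothetical. The leave-one-out variant refits the transformation without each control point in turn and measures the residual at the held-out point.

```python
import math

def solve3(m, b):
    """Solve a 3x3 linear system m x = b by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        s = a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = s / a[r][r]
    return x

def fit_affine(src, dst):
    """Least-squares affine fit (assumed model): x' = a*x + b*y + c, y' = d*x + e*y + f."""
    rows = [(x, y, 1.0) for x, y in src]
    # Normal equations: (A^T A) p = A^T b, solved once per output coordinate.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    params = []
    for k in range(2):
        atb = [sum(r[i] * d[k] for r, d in zip(rows, dst)) for i in range(3)]
        params.append(solve3(ata, atb))
    return params

def residual(T, p, q):
    """Euclidean distance between the transformed point T(p) and its match q."""
    x, y = p
    px = T[0][0] * x + T[0][1] * y + T[0][2]
    py = T[1][0] * x + T[1][1] * y + T[1][2]
    return math.hypot(px - q[0], py - q[1])

def rms_all(src, dst):
    """RMSE of the residuals of all control points under one global fit."""
    T = fit_affine(src, dst)
    return math.sqrt(sum(residual(T, p, q) ** 2 for p, q in zip(src, dst)) / len(src))

def rms_loo(src, dst):
    """Leave-one-out RMSE: each point is evaluated with a fit that excludes it."""
    sq = 0.0
    for i in range(len(src)):
        T = fit_affine(src[:i] + src[i + 1:], dst[:i] + dst[i + 1:])
        sq += residual(T, src[i], dst[i]) ** 2
    return math.sqrt(sq / len(src))
```

Both functions take lists of `(x, y)` tuples in pixel units. Under this least-squares setup the leave-one-out value is never smaller than the all-points value, which is why it is the more conservative of the two.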

Gonçalves et al. took the distribution of control points and residuals into account and proposed several additional evaluation metrics: the quadrant residual distribution ($P_{quad}$); the proportion of poorly matched points whose residual norm exceeds 1.0 (Bad Point Proportion, BPP), denoted BPP(1.0); the detection of a preferred axis in the residual scatter plot ($Skew$); and the statistical attributes of the control point distribution in the image ($Scat$). These are considered in conjunction with $N_{red}$, $RMS_{all}$, and $RMS_{LOO}$. For all of these metrics except $N_{red}$, smaller values signify better registration performance [2].
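As an illustration of the residual-based metrics, the sketch below computes BPP for a given threshold and tallies residual vectors by quadrant. It is a simplified example under stated assumptions: the function names are hypothetical, residuals are given as `(dx, dy)` pairs in pixels, and only the raw quadrant counts are produced, because the exact statistic behind the quadrant residual distribution is not given in this section.

```python
import math

def bad_point_proportion(residuals, threshold=1.0):
    """Fraction of control points whose residual norm exceeds `threshold` pixels."""
    norms = [math.hypot(dx, dy) for dx, dy in residuals]
    return sum(n > threshold for n in norms) / len(norms)

def quadrant_counts(residuals):
    """Count residual vectors in each quadrant of the (dx, dy) scatter plot.

    Returned order: [+x +y, -x +y, -x -y, +x -y].
    Residuals lying on an axis are assigned using >= 0 (an arbitrary convention).
    """
    counts = [0, 0, 0, 0]
    for dx, dy in residuals:
        if dx >= 0 and dy >= 0:
            counts[0] += 1
        elif dx < 0 and dy >= 0:
            counts[1] += 1
        elif dx < 0 and dy < 0:
            counts[2] += 1
        else:
            counts[3] += 1
    return counts
```

A strongly uneven quadrant count suggests a systematic bias in the residuals, whereas roughly equal counts suggest that the remaining errors have no preferred direction.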

These seven metrics are not completely independent of one another. For instance, a larger number of control point pairs together with a lower RMSE indicates greater accuracy in point matching. Conversely, if both are high or both are low, the matching is less accurate.

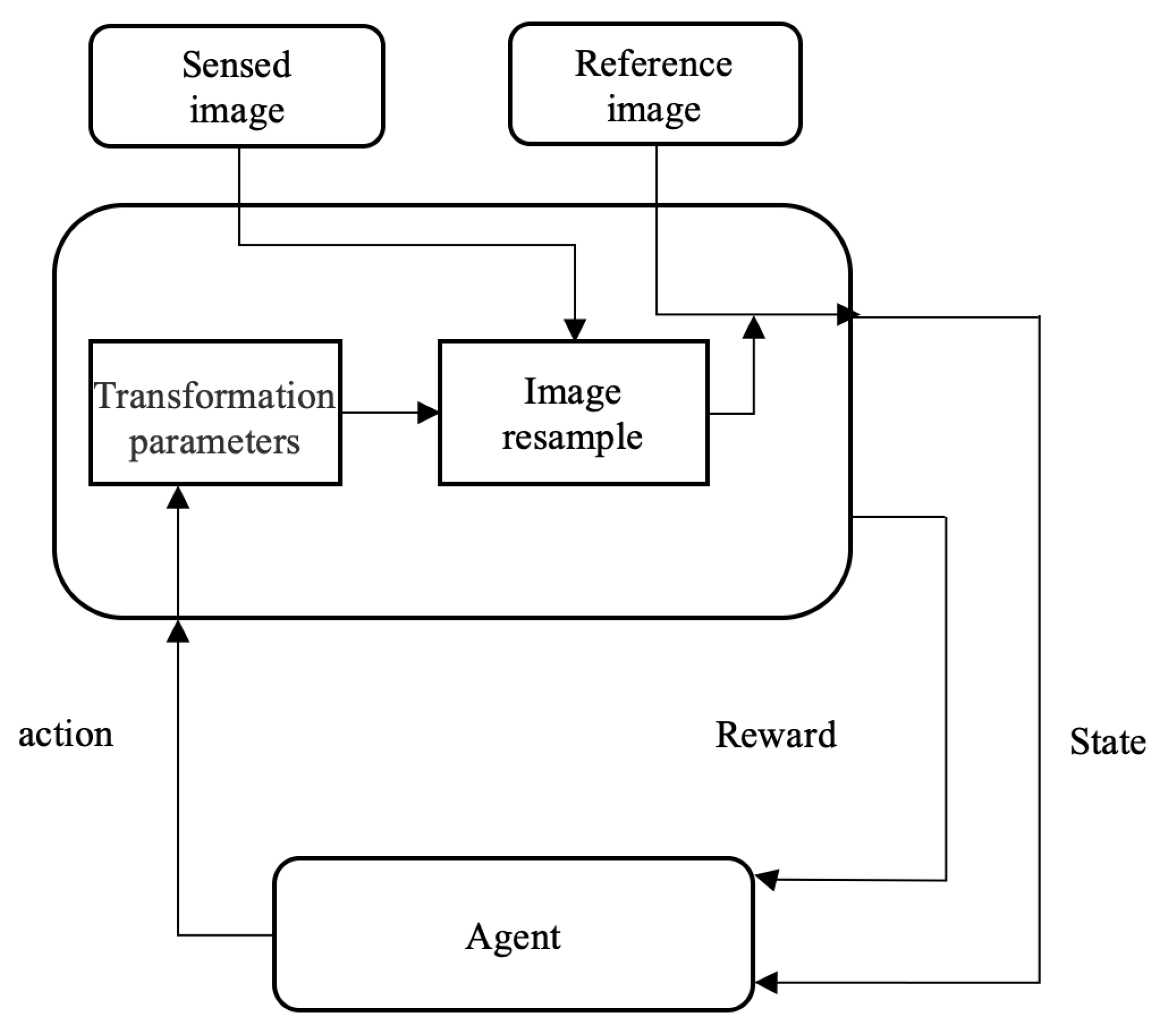

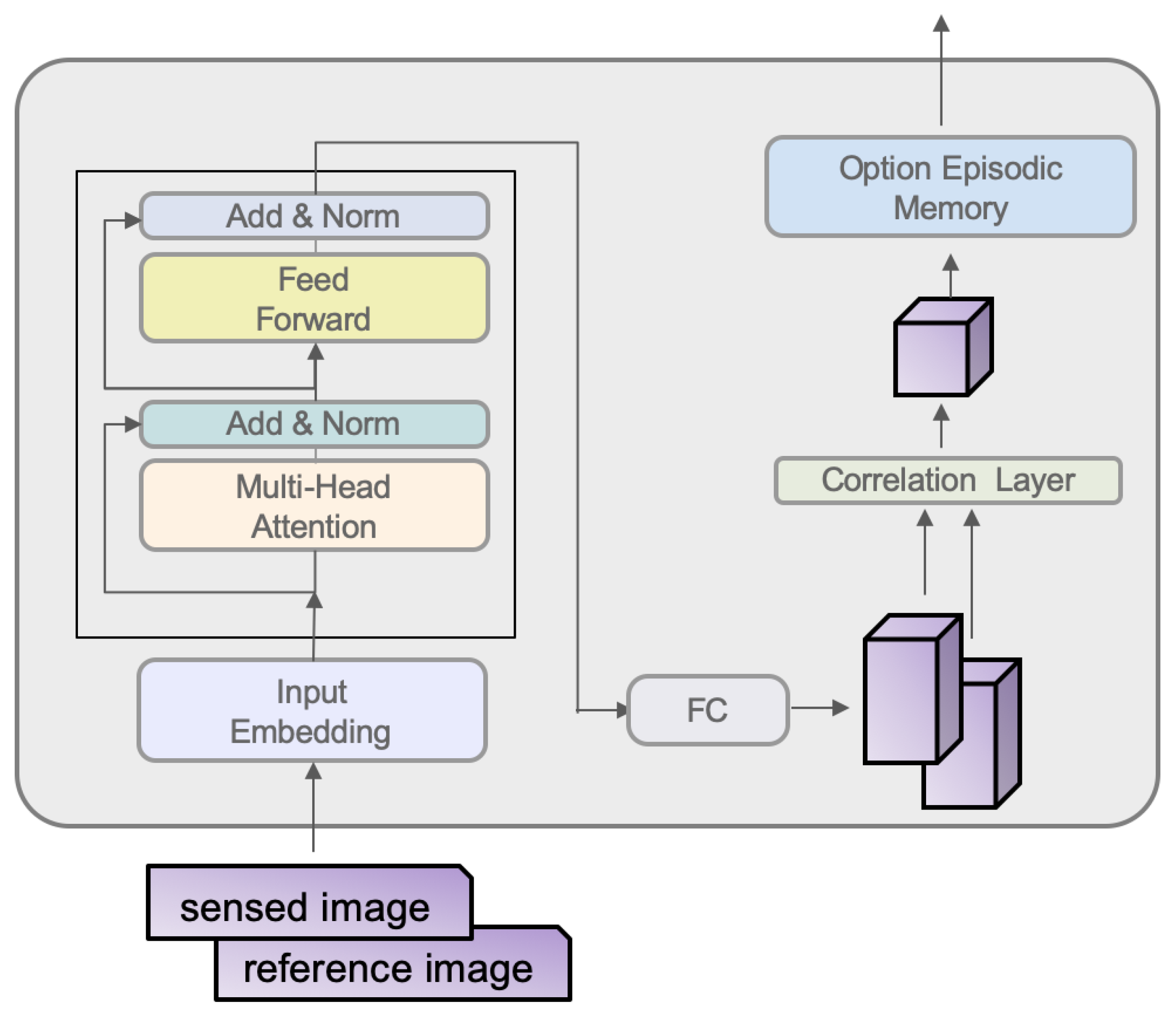

To compare our method with traditional and deep learning-based approaches, we selected SAR-SIFT and the method of Wang Shuang (referred to as DL-WangS) [2] as benchmarks.

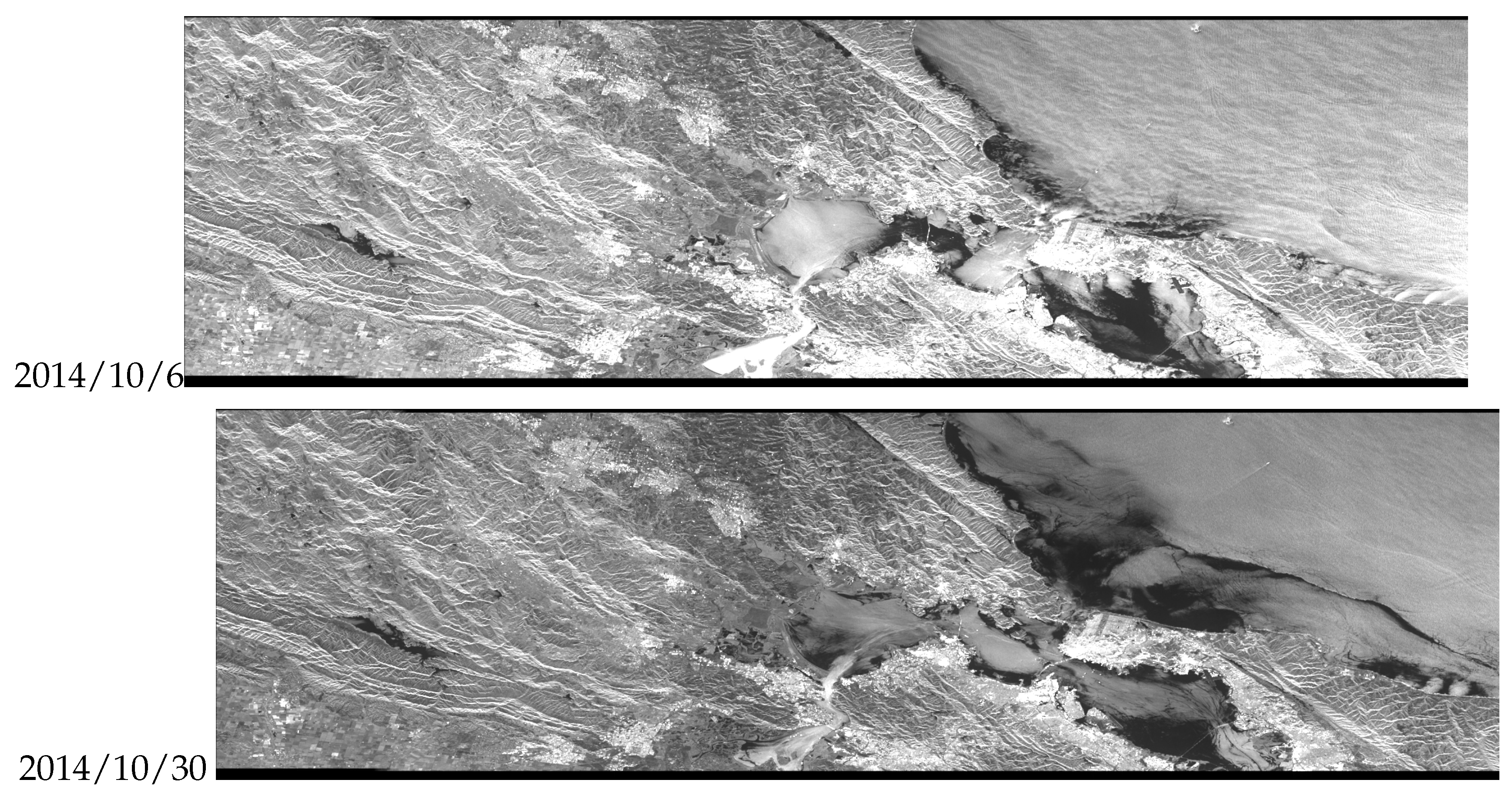

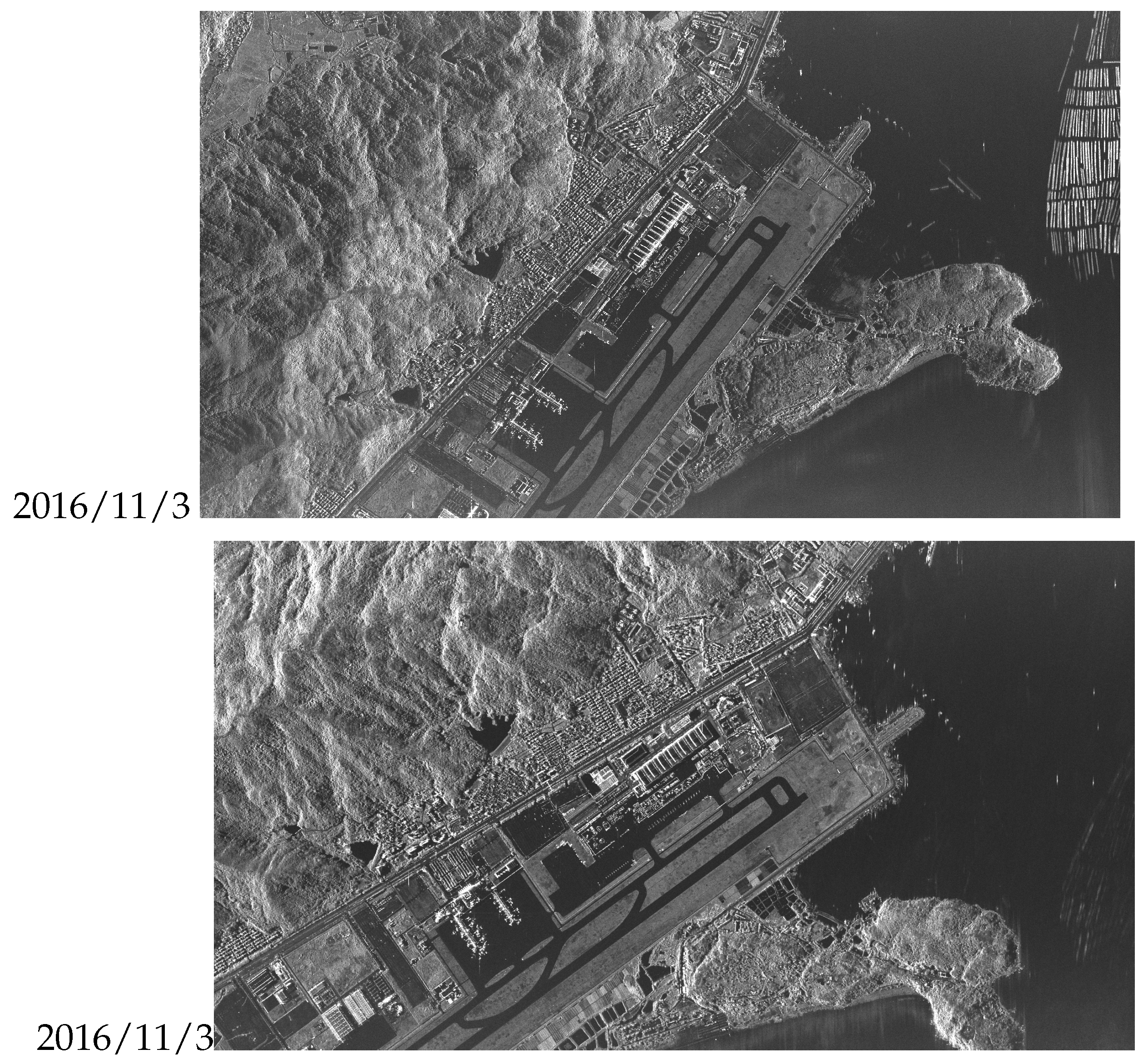

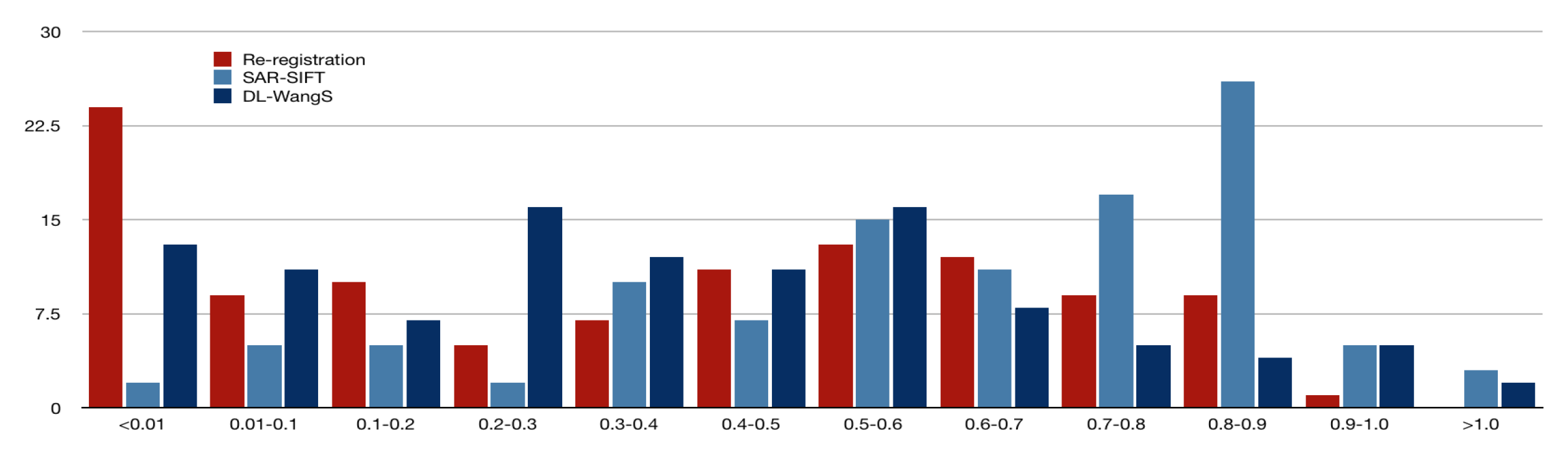

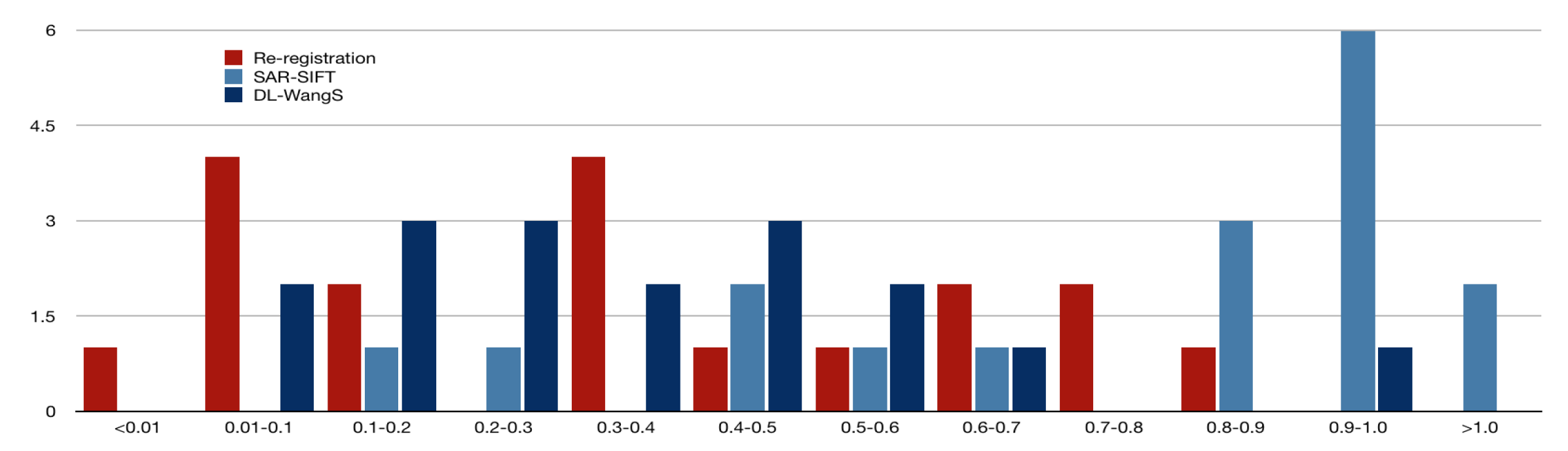

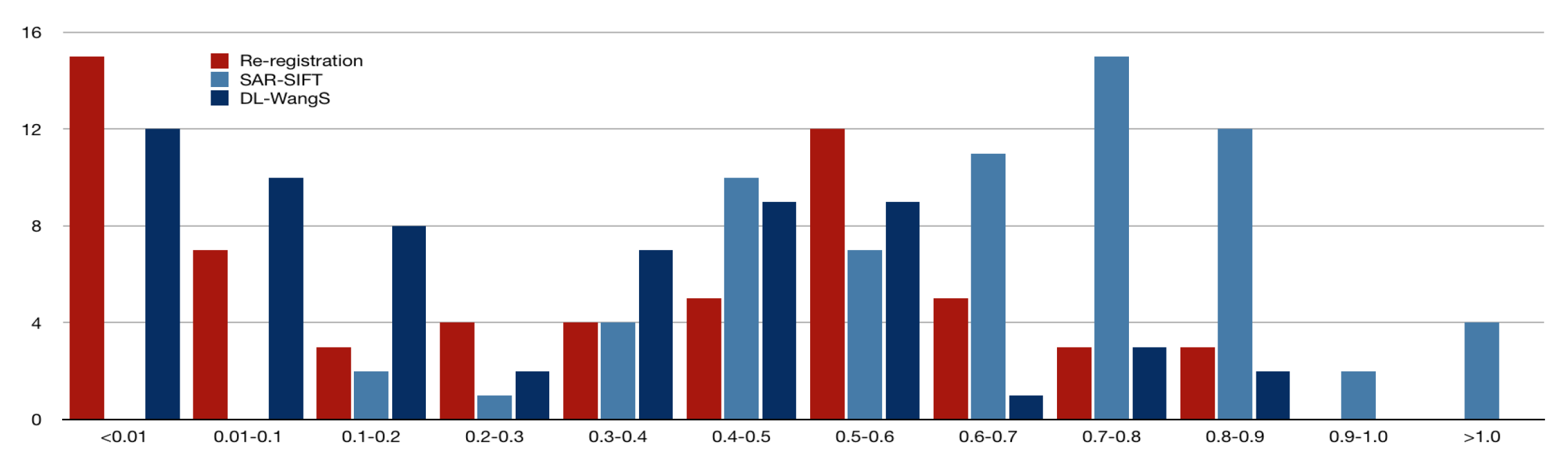

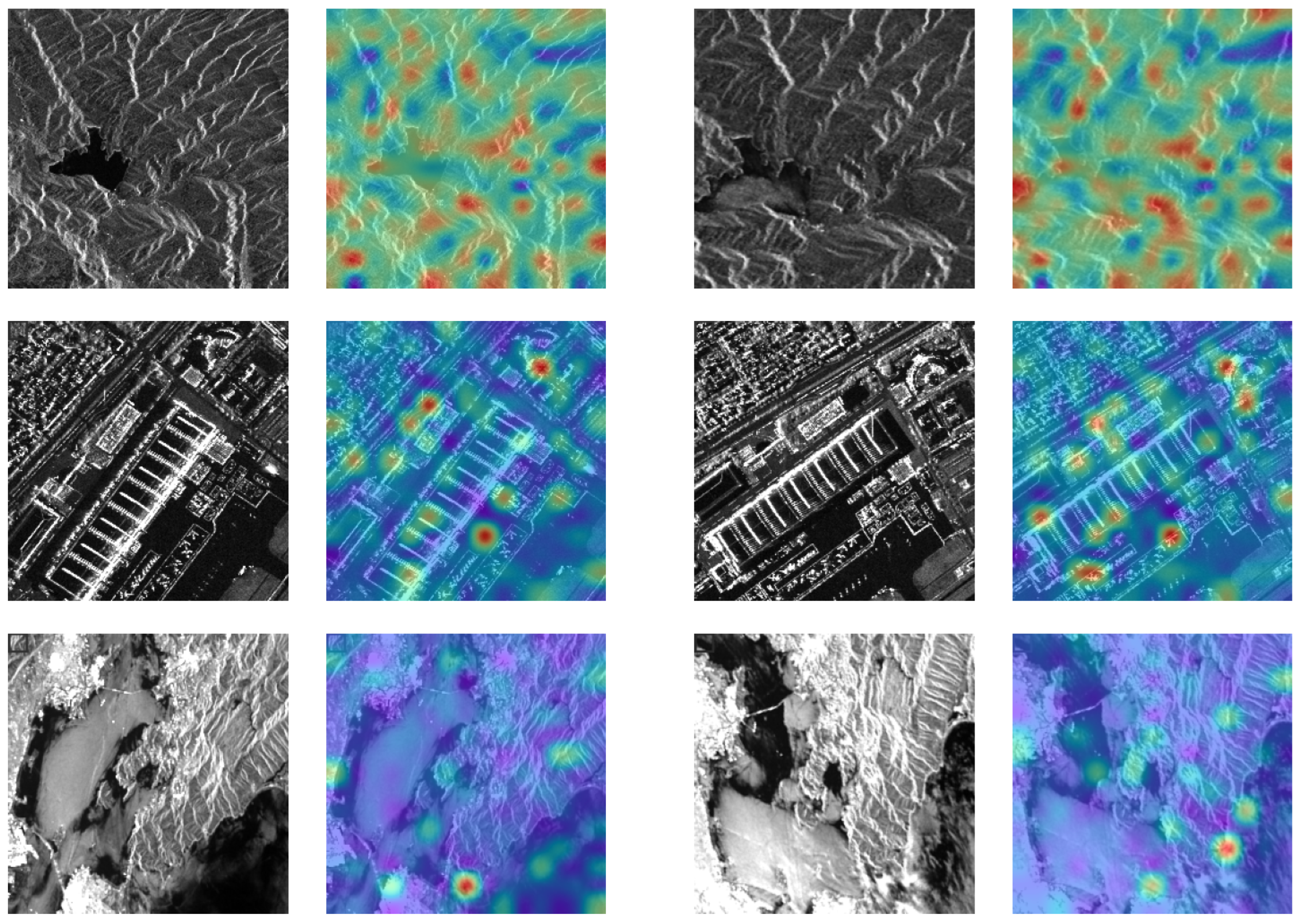

Figure 10, Figure 11 and Figure 12 show the interval statistics of the Root Mean Square Error (RMSE) values for SAR-SIFT, DL-WangS [2], and our re-registration results on the three datasets.

The RMSE values in these figures are grouped into discrete intervals.

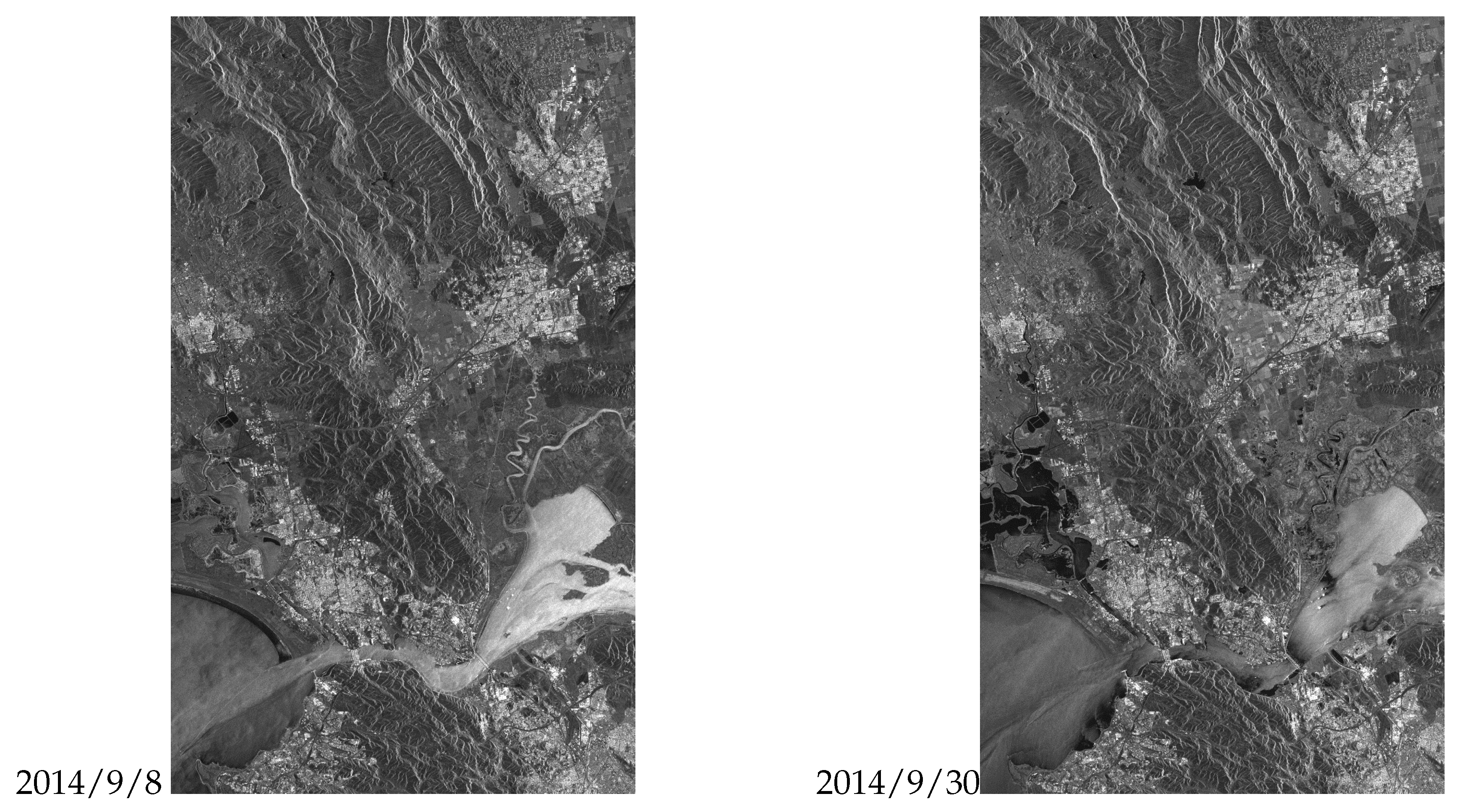

As the figures show, the re-registration results obtained after the initial registration of the sensed and reference test image pairs are significantly better. Results from the TerraSAR-X Napa 2014 dataset and the TerraSAR-X Zhuhai 2016 dataset outperform those from the Sentinel-1A Napa 2014 dataset. About of re-registrations in the TerraSAR-X Napa 2014 dataset achieved RMSE values below , while only in the Sentinel-1A Napa 2014 dataset achieved this. Similarly, of re-registrations in the TerraSAR-X Zhuhai 2016 dataset achieved RMSE values below . The RMSEs obtained by SAR-SIFT are concentrated around 1 pixel, with a small portion larger than 1 pixel. The RMSEs obtained by DL-WangS are mostly smaller than 0.6 pixels, but for the Napa region images some are close to 1 pixel. The RMSEs obtained by our proposed re-registration method are all below 1 pixel and are concentrated near 0. This suggests that our re-registration achieves sub-pixel accuracy and outperforms SAR-SIFT, while being comparable to or even slightly better than DL-WangS.

Table 2 and Table 3 present the median and mean values of the quantitative results for SAR-SIFT, DL-WangS [2], and our SAR-RL model after re-registration on the three datasets. The registration results for all image pairs in the test datasets are evaluated with the seven metrics introduced by Gonçalves et al. (2009).

Several observations can be made from the quantitative results in these tables. The control point count ($N_{red}$) obtained by re-registration is comparable to that of SAR-SIFT, indicating that a similar number of control point pairs are correctly matched. However, our re-registration achieves more accurate registration results. The value obtained by re-registration is lower than that of DL-WangS, but the accuracy achieved is comparable. The value obtained by re-registration is comparable to that of both SAR-SIFT and DL-WangS, suggesting that the distribution of matched feature point pairs obtained by the three methods is similar. The running time of SAR-SIFT is on the order of tens of seconds, while DL-WangS takes seconds to run. In contrast, our re-registration method is significantly faster, with running times in the sub-second range.

These tables provide a comprehensive overview of the quantitative evaluation results and highlight the strengths of our re-registration method in terms of accuracy and efficiency compared to the other methods.

It should be emphasized that the re-registration approach does not directly measure the performance of the SAR-RL method. The calculation of metrics still relies on the SIFT and SAR-SIFT algorithms. However, the metrics indicate that SAR-RL registration is significantly more accurate than these two traditional methods.

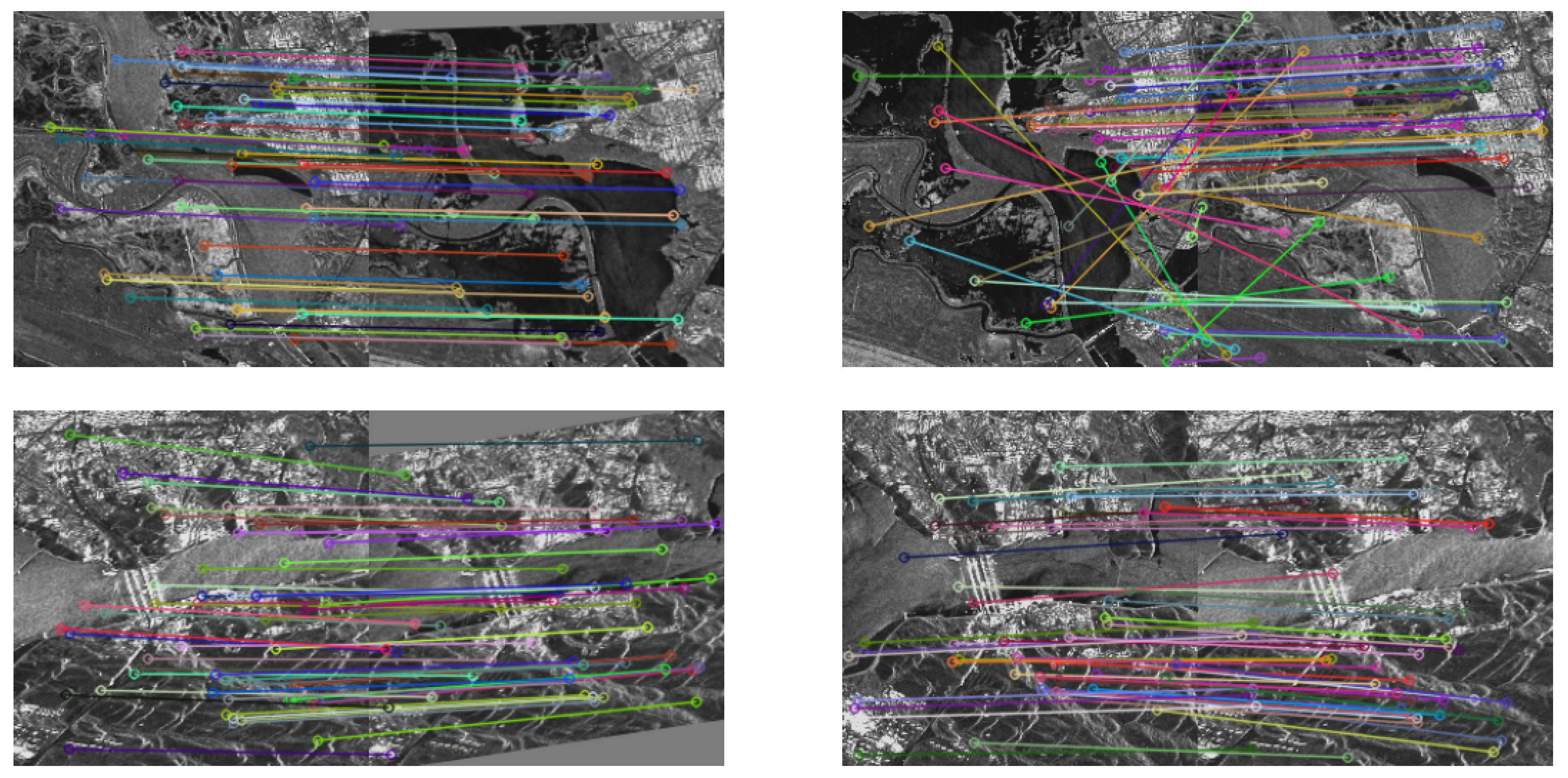

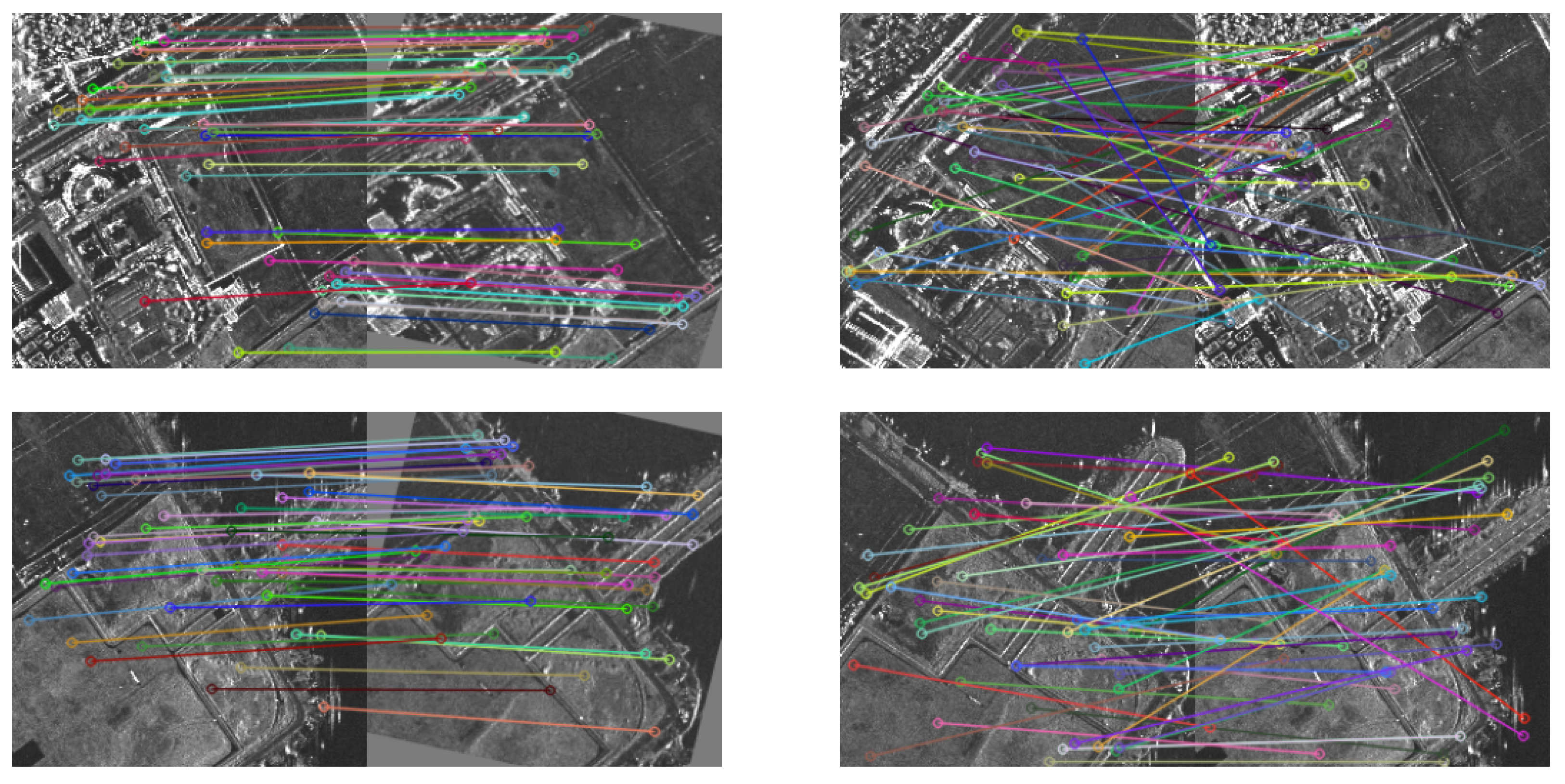

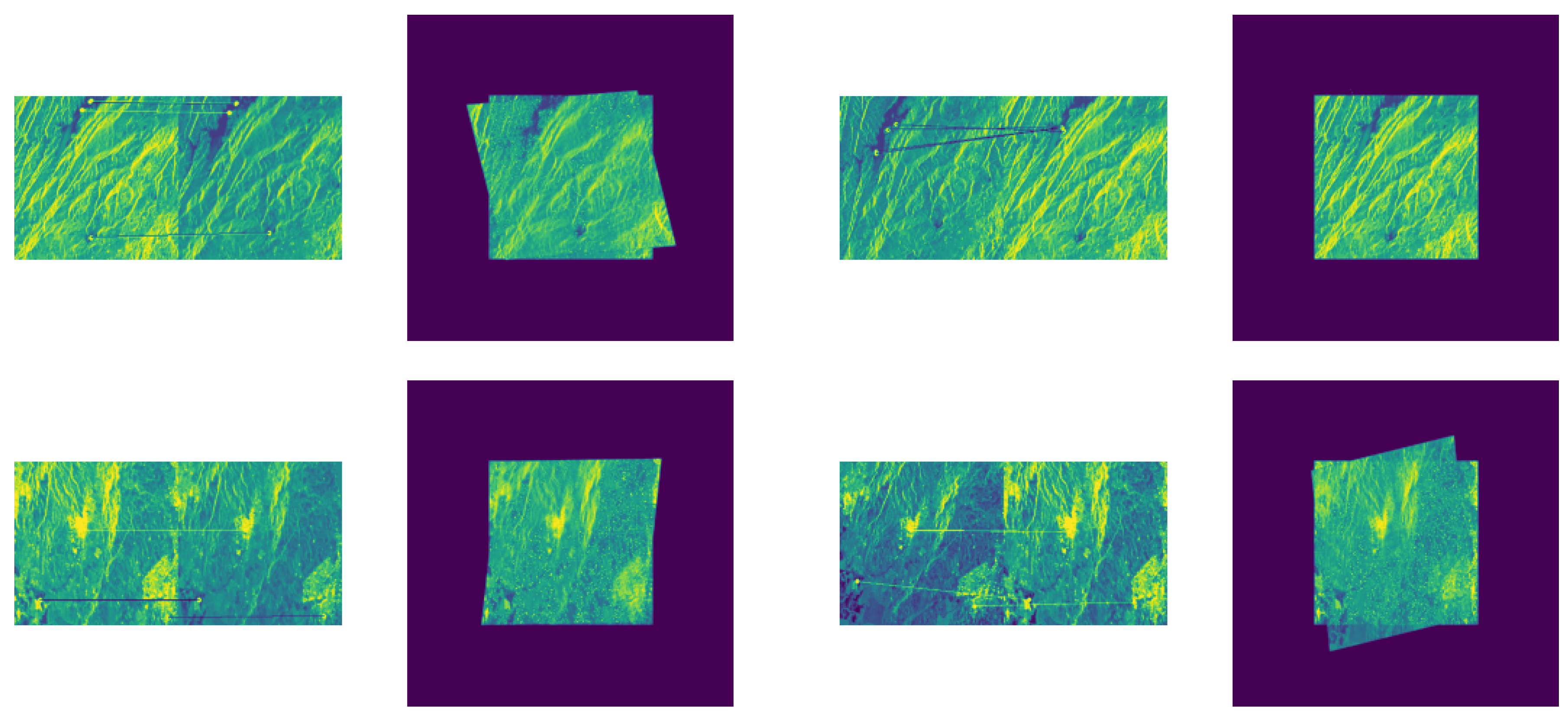

Figure 13, Figure 14 and Figure 15 compare the matched feature point pairs obtained by re-registration and once-registration, using feature points extracted with SIFT, for the three sets of test data samples. These comparisons highlight the strengths of the re-registration approach.

In the examples shown, the matched point pairs obtained by re-registration are more accurate and less cluttered than those obtained by once-registration. The re-registration approach finds correct matching point pairs even in image regions that differ significantly in grayscale, structural features, or image content. This is a strong indication that the SAR-RL method obtains accurate and robust registrations even under challenging conditions.

However, even the re-registration approach can produce some incorrectly matched point pairs, as seen in Figure 15. This can be attributed to factors such as differences in satellite orbital directions and potential blurring in SAR images. Despite these challenges, the re-registration approach consistently outperforms the once-registration method, as evidenced by the overall accuracy and quality of the matched feature point pairs.

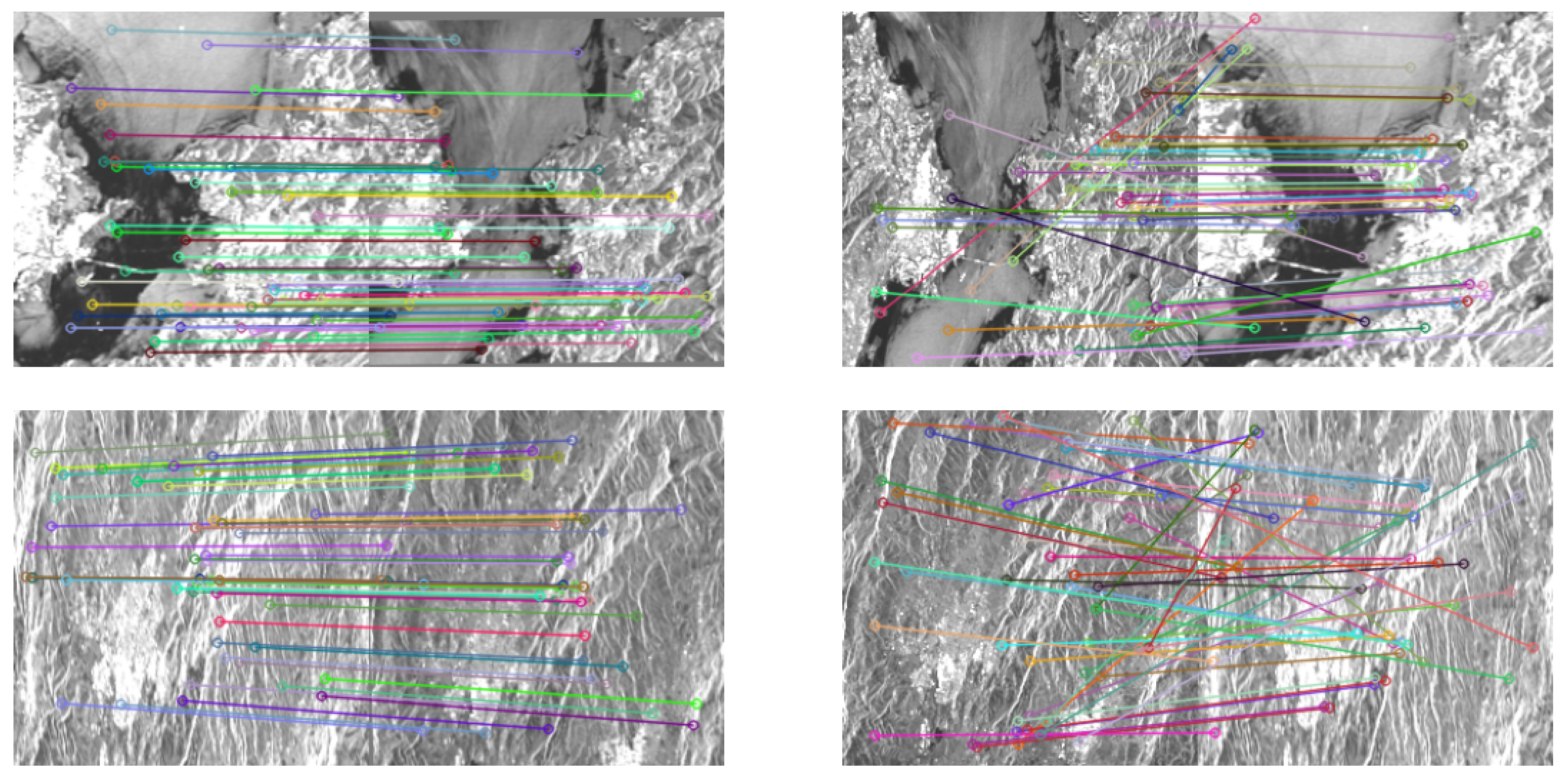

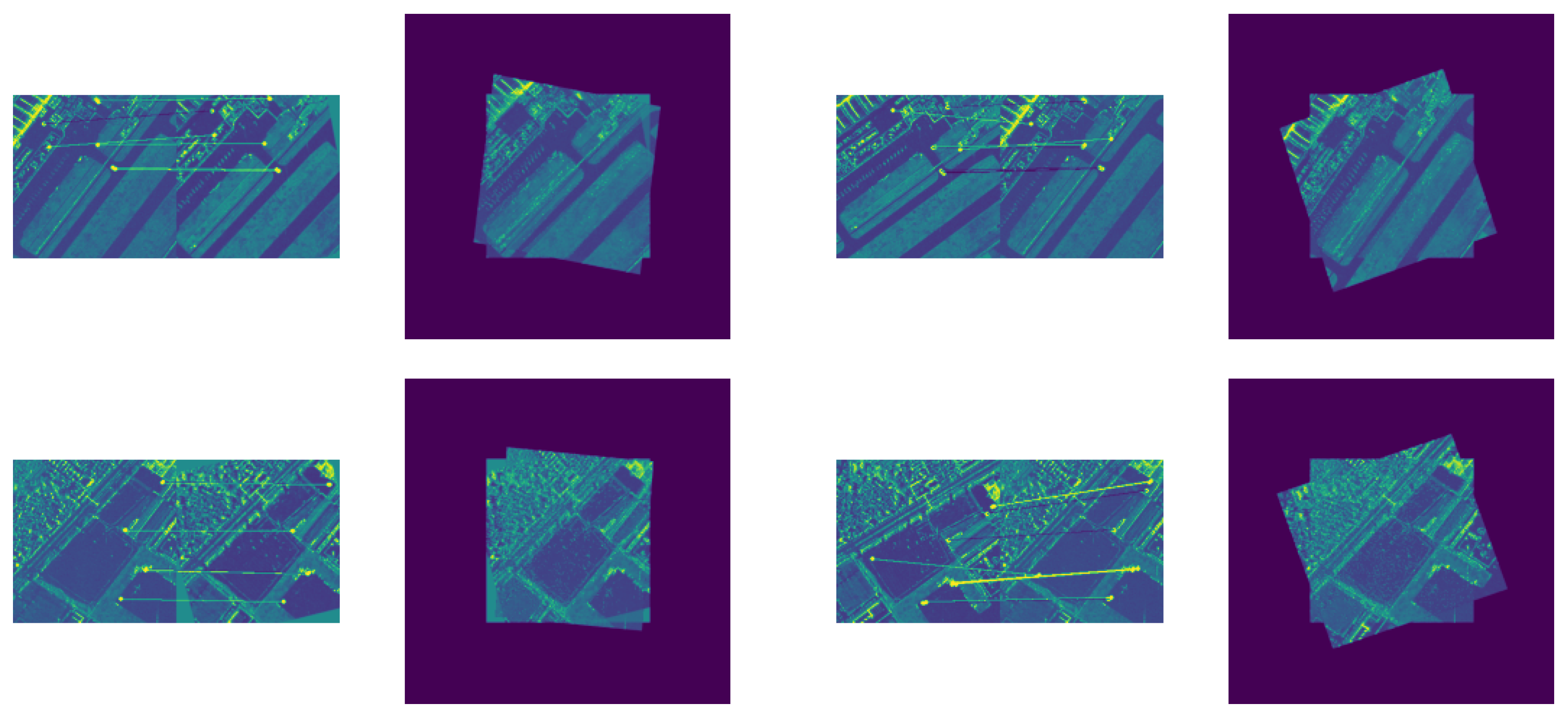

Figure 16, Figure 17 and Figure 18 show samples of matched feature point pairs obtained through re-registration and once-registration, using feature points extracted with SAR-SIFT, for the three sets of test data samples. Compared with the previous set of experiments, SAR-SIFT clearly yields fewer feature point pairs than SIFT.

In Figure 16, the connecting lines of the matched point pairs obtained from the once-registration of sample 1 are cluttered, and all pairs are incorrectly matched, so the image registration fails. The fusion result therefore presents the information from only one of the images, the sensed image. Conversely, the matched point pairs acquired through re-registration align with the correct positions. The fusion image exhibits continuous splicing boundaries, substantial overlap of most image regions, and visible splicing boundaries between the two images. For sample 2, the positions of the matched point pairs obtained from once-registration are accurate, resulting in successful image registration. However, the overlapping area of the fused image is blurred, which hinders the acquisition of image information. Re-registration, on the other hand, yields more correct and evenly distributed matching point pairs, and the fusion image displays continuous splicing boundaries, extensive region overlap, and visible splicing boundaries and geometric structural features.

In Figure 17, the feature point pairs derived from the once-registration of sample 1 are concentrated and inaccurately matched, and the image registration fails. The fusion image primarily shows the sensed image. In contrast, the feature point pairs acquired through re-registration for sample 1 correspond to the correct locations and are relatively scattered. The fusion image exhibits continuous splicing boundaries and substantial overlap in most image regions. For sample 2, both re-registration and once-registration yield accurately corresponding feature point pairs. The fusion images show extensive region overlap, with no noticeable misalignment at the splicing boundary.

The two samples in Figure 18 display evident linear structural features and grayscale similarity. In both cases, re-registration and once-registration yield correctly corresponding feature point pairs. The fusion images reveal significant overlap in most image regions, with continuous splicing boundaries, indicating a favorable registration outcome.

It’s crucial to note that in the context of SAR images, SIFT and SAR-SIFT can sometimes extract incorrect feature points or produce mismatched feature point pairs. As a result, the comparison between once-registration and re-registration, as presented above, serves as a reference. A comprehensive evaluation should encompass various indicators discussed in the preceding sections, along with other methodologies such as mosaicked images.
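One common way to build a mosaicked image for this kind of visual check is a checkerboard composite, in which alternating tiles are taken from the reference image and the registered sensed image so that misalignment shows up as breaks at tile boundaries. The sketch below is an assumption about how such a mosaic could be formed, not a description of the fusion procedure used for the figures; the function name and the `tile` parameter are hypothetical, and images are represented as plain lists of rows.

```python
def checkerboard_mosaic(reference, registered, tile=2):
    """Alternate square tiles from two equally sized grayscale images.

    Tiles whose (row block + column block) index is even come from `reference`,
    the others from `registered`.
    """
    same_rows = len(reference) == len(registered)
    same_cols = all(len(a) == len(b) for a, b in zip(reference, registered))
    if not (same_rows and same_cols):
        raise ValueError("images must have the same shape")
    mosaic = []
    for r, (row_ref, row_reg) in enumerate(zip(reference, registered)):
        mosaic.append([
            row_ref[c] if ((r // tile) + (c // tile)) % 2 == 0 else row_reg[c]
            for c in range(len(row_ref))
        ])
    return mosaic
```

With a well-registered pair, linear structures such as roads and field boundaries continue smoothly across tile edges; visible offsets at those edges indicate residual misregistration.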

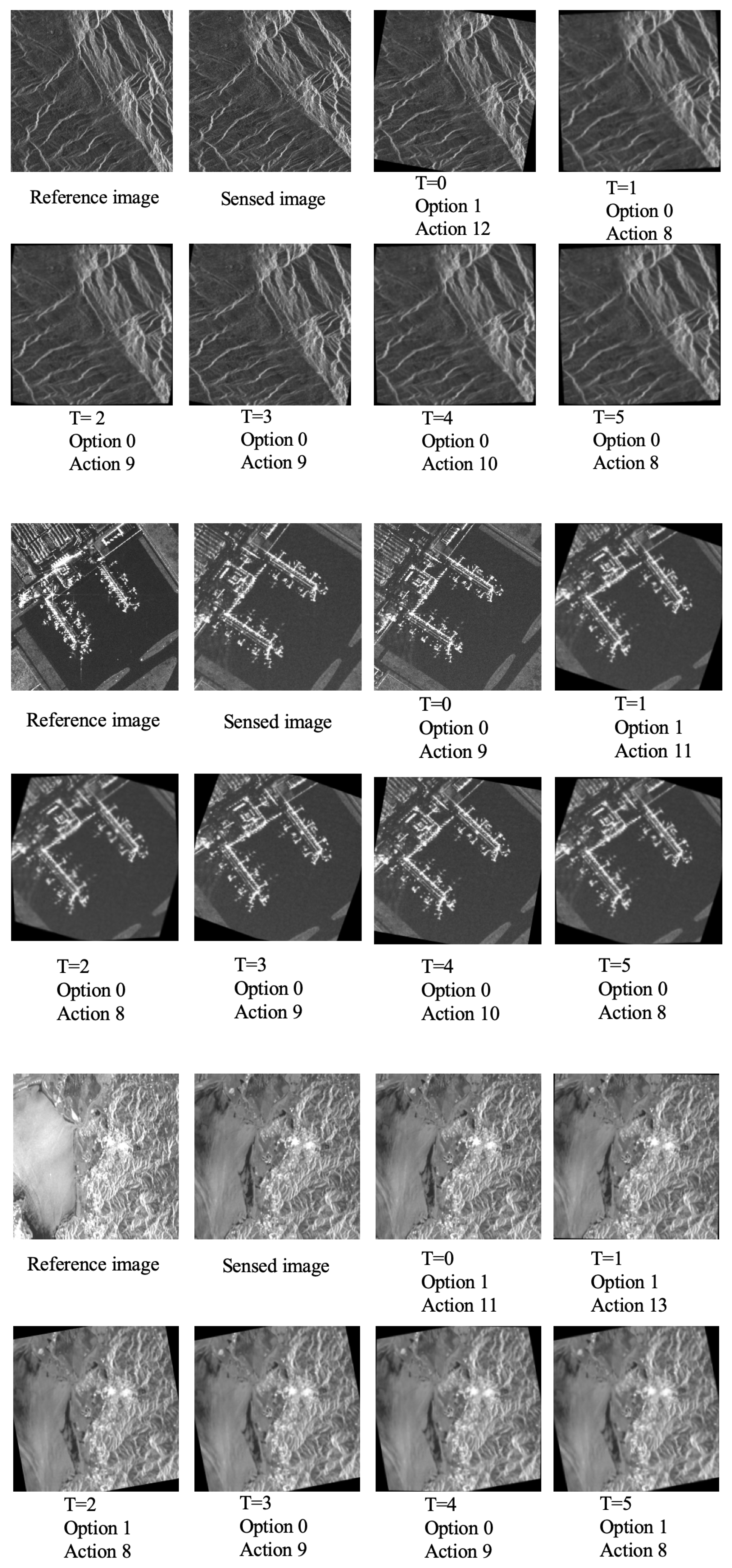

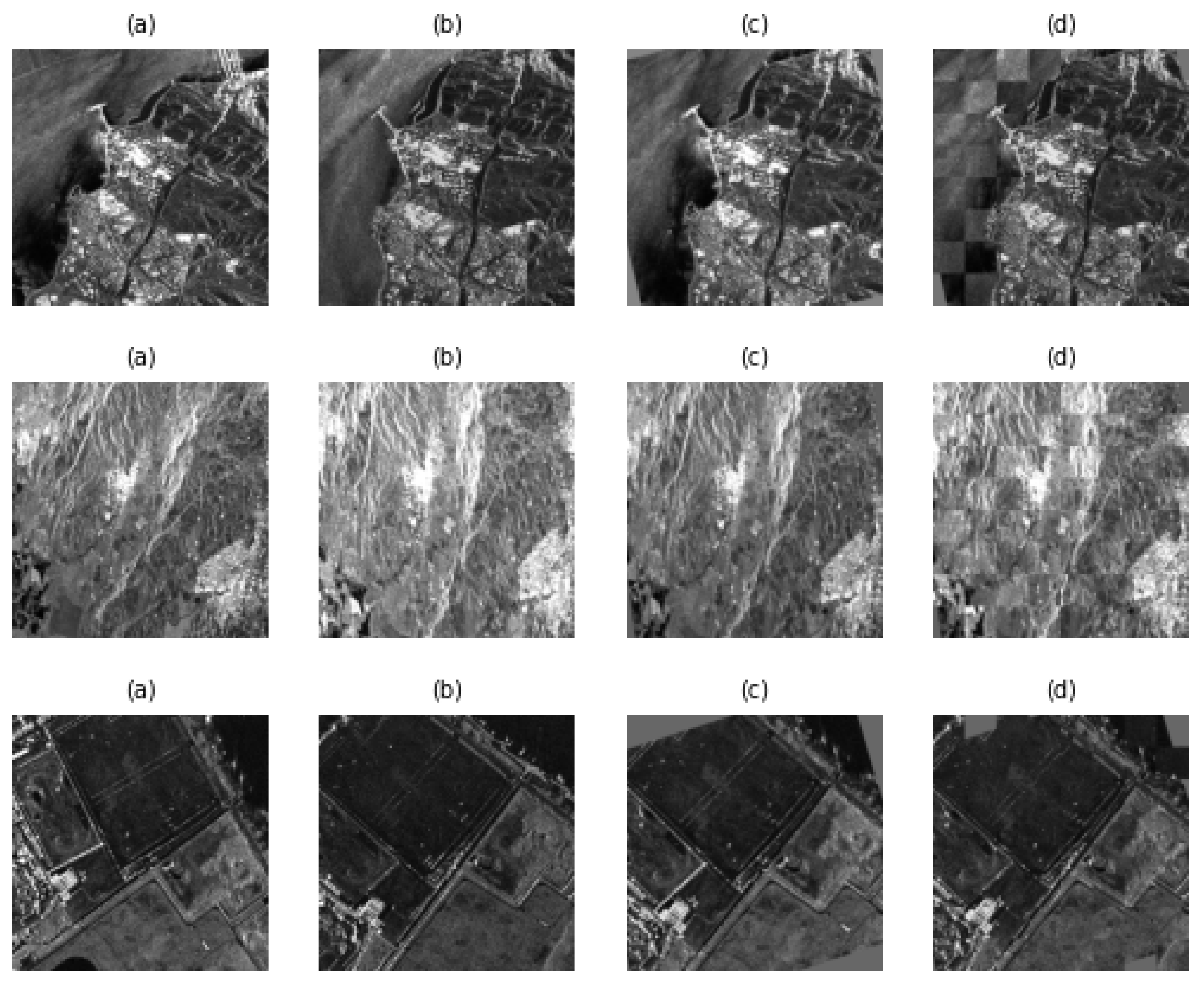

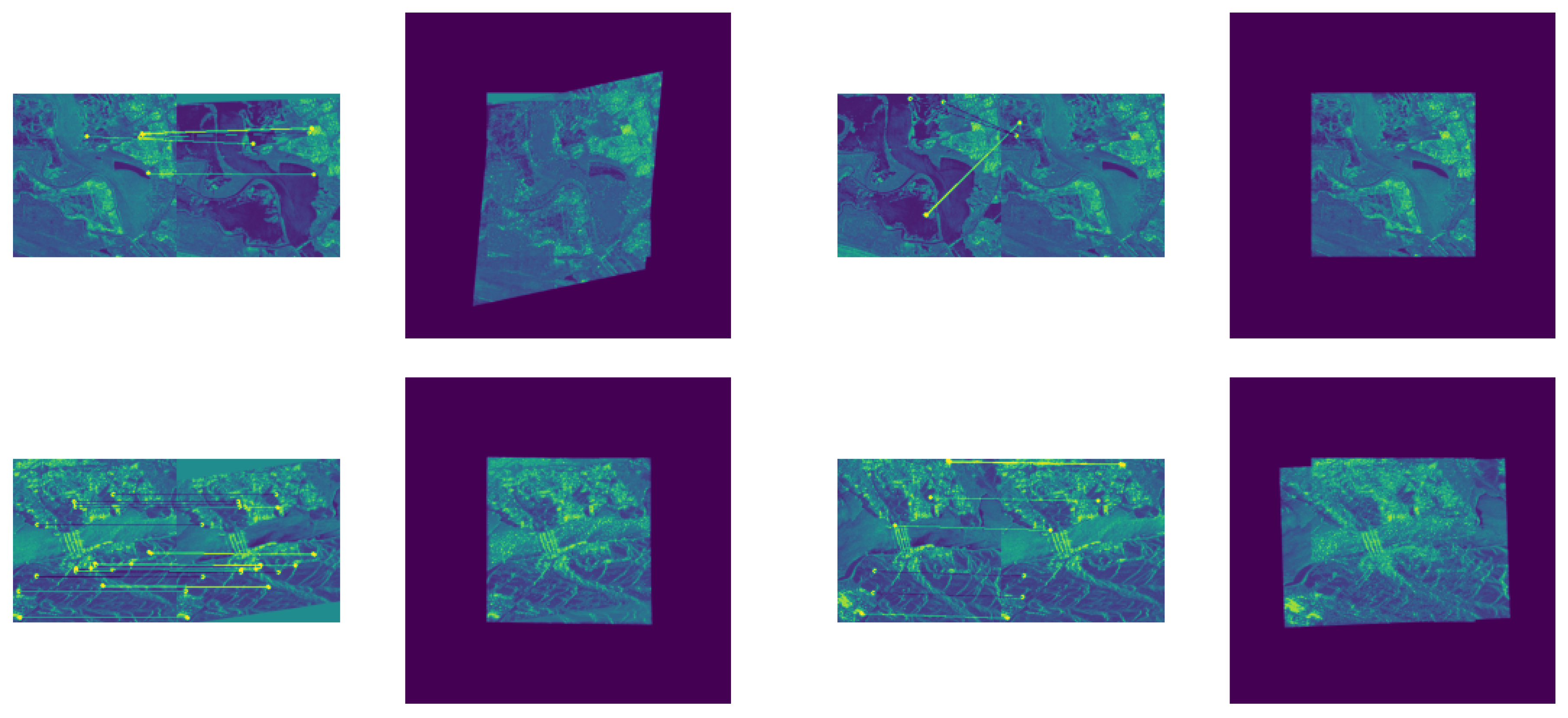

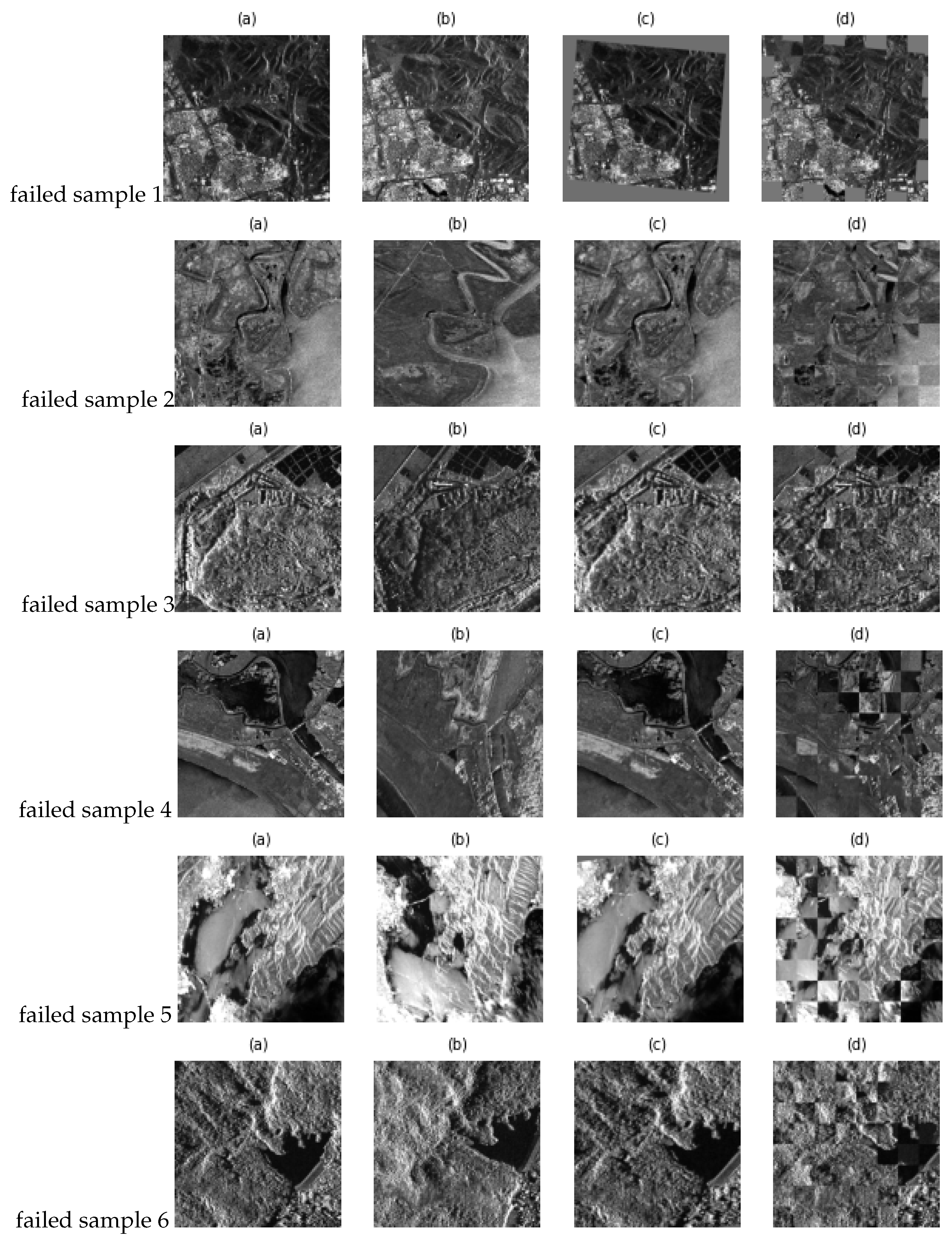

4.3.5. Analysis of Failed Cases

Figure 20 illustrates instances of failed registration. The third column shows the sensed image generated by the reinforcement learning framework of this paper from the reference image, i.e., the sensed image to be registered. The fourth column displays the registration results of the proposed method. Differences in terrain features and imaging viewpoints between sensed and reference images acquired at different times can lead to significant grayscale differences, as in failed sample 4, and to different structural features, as in failed samples 3 and 6. In some cases, SAR images of the same ground scene do not look alike at first glance, as demonstrated in rows 2 and 4 of the figure. Although the reinforcement learning in this paper generates sensed images that partially reduce the structural differences from the original reference images, it does not perform grayscale transformations on the sensed images.

The mosaic image of the sensed image and the reference image after the registration can exhibit pronounced grayscale misregistration at the splicing location, as seen in failed sample 5, or show insufficient smoothness in the splicing of line features and region features, as seen in failed sample 4. The registration scheme based on the entire image requires a certain level of grayscale and structural similarity between the sensed image and the reference image pairs to be registered. However, the reinforcement learning approach in this paper does not perform grayscale transformations on the SAR image during the transformation of the sensed image. Consequently, its applicability and scalability for registering SAR image pairs with substantial grayscale differences need to be enhanced.

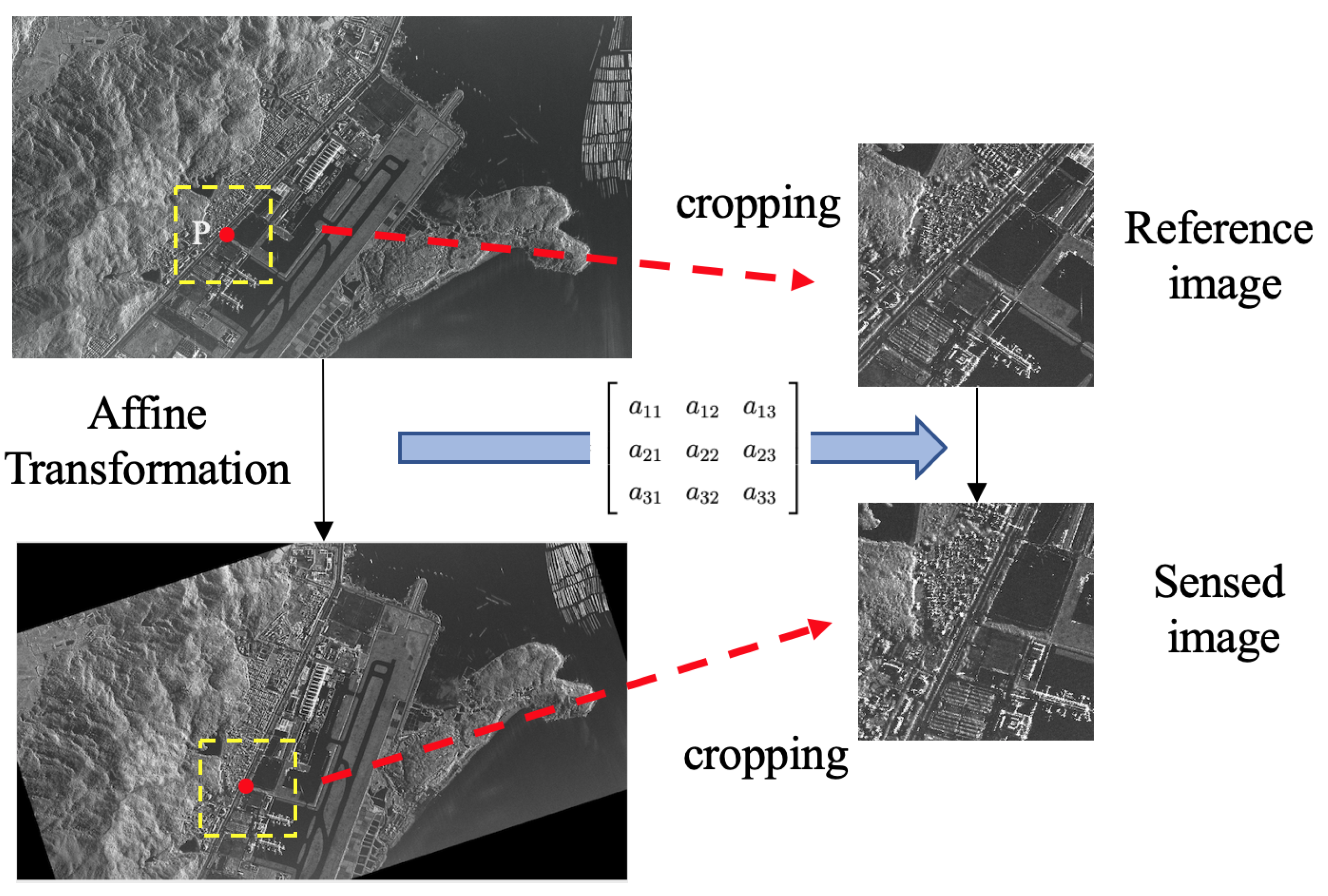

This paper generates training samples with a self-learning method that applies various affine transformations to the training data. Feature differences between sensed and reference images that affine transformations cannot reproduce are therefore not represented in training; these include significant geometric distortions (e.g., failed samples 3 and 6) and grayscale changes (e.g., failed sample 4). Such differences affect the correlation between image pairs and can lead to suboptimal performance of the correlation matching network on which the registration method in this paper is based. A potential solution is to use manually annotated matching labels together with a machine learning network that performs grayscale transformation, image matching, and evaluation.
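The self-learning sample generation can be pictured with the following sketch, which draws a random transformation, warps an image with it, and keeps the transformation as the training label. Everything specific here is an assumption made for illustration: the parameter ranges, the restriction to rotation, isotropic scale, and translation (a subset of affine transformations), the nearest-neighbour sampling, and the function names are not taken from the paper.

```python
import math
import random

def random_affine(rng, max_rot_deg=10.0, scale_range=(0.9, 1.1), max_shift=5.0):
    """Draw a 2x3 matrix combining rotation, isotropic scale, and translation (assumed ranges)."""
    theta = math.radians(rng.uniform(-max_rot_deg, max_rot_deg))
    s = rng.uniform(*scale_range)
    tx = rng.uniform(-max_shift, max_shift)
    ty = rng.uniform(-max_shift, max_shift)
    return [[s * math.cos(theta), -s * math.sin(theta), tx],
            [s * math.sin(theta), s * math.cos(theta), ty]]

def warp_nearest(image, T, fill=0):
    """Warp a grayscale image (list of rows) with T using inverse mapping and nearest-neighbour sampling."""
    h, w = len(image), len(image[0])
    a, b, tx = T[0]
    c, d, ty = T[1]
    det = a * d - b * c
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Map each output pixel back to its source location: A^{-1} (p - t).
            u, v = x - tx, y - ty
            sx = (d * u - b * v) / det
            sy = (-c * u + a * v) / det
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = image[iy][ix]
    return out

def make_training_pair(image, rng):
    """Return (reference, synthetic sensed image, ground-truth transformation)."""
    T = random_affine(rng)
    return image, warp_nearest(image, T), T
```

Because the warp never alters pixel intensities and only applies a global linear mapping, pairs produced this way cannot expose a network to grayscale changes or local geometric distortions, which is consistent with the failure modes discussed above.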

Furthermore, failed sample 1 highlights the significant challenge of dealing with complex and variable ground topographic features. In this scenario, the feature point pairs obtained by SAR-RL are sparse and unevenly distributed in the images to be registered, affecting the global registration performance of the image pairs. Some of the failure cases involve images with densely distributed point features and line features. This can be attributed to the fact that the SAR-RL in this paper is a global registration method, and the uniform distribution of weights for the globally densely distributed point features and line features can easily result in the failure of the registration.