1. Introduction

Quality control (QC) is the bedrock of industrial manufacturing and is intrinsically tied to operational efficiency, product reliability, and overall customer satisfaction [1,2,3]. In industry, the criticality of QC is amplified by the inherent complexity of manufacturing processes, the diversity of parts and components, and the high-stakes safety implications of the final product [4]. As such, the ability to perform quality inspections rapidly and accurately can significantly affect an organization’s bottom line and influence the larger industry’s trajectory.

Defects, especially those detected late in the manufacturing process or after the product’s release, can lead to high costs, including reworking, recalls, or even reputational damage to the manufacturer [1]. Consequently, thorough inspections during various stages of production can significantly reduce these costs, maximizing productivity and profitability. Moreover, a robust quality control system fosters customer confidence in the brand, driving increased sales and maintaining a competitive edge in the market [5,6].

Traditionally, quality inspections in industry have been manual processes, relying heavily on the experience and judgment of human inspectors [7,8]. However, the increasingly intricate nature of modern products, combined with the growing demand for efficiency and productivity, has pushed these traditional inspection methods to their limits. Manual inspections can be time-consuming, prone to errors, and may struggle to detect subtle or complex defects [9]. Furthermore, the potential for inconsistency among inspectors poses a significant challenge to maintaining uniform quality standards [10,11].

In the quest for greater efficiency and precision, industries worldwide are turning to technology. In particular, Mixed Reality (MR), characterized by the enhancement of the real world with digital information, has emerged as a promising solution to these QC challenges. MR allows for real-time, interactive overlays of data in the user’s field of view, potentially revolutionizing inspection processes [12].

MR, when integrated into quality control processes, offers a range of advantages. First, it increases efficiency [13,14,15,16]. By overlaying digital information directly onto real-world components, MR allows inspectors to rapidly identify and assess parts without the need for time-consuming manual checks against plans or specifications. This can significantly speed up the inspection process, allowing for quicker detection of defects and faster correction of production issues.

Second, MR improves accuracy [16]. By leveraging sophisticated imaging and recognition technology, an MR system can detect defects that may be missed by the human eye. It can also promote consistency in inspections by applying the same standards to every part, every time, reducing the variability that can occur with human inspections.

MR technology also opens the possibility of real-time tracking of inspection cycles and individual tasks. This additional feature can provide valuable insights into the efficiency of the manufacturing process, enabling managers to identify bottlenecks and improve workflow designs [17,18].

Understanding cycle times and individual task times is paramount in industrial environments like automotive manufacturing. The time an operator spends on a task or the cycle time of a specific process can provide deep insights into the operational efficiency and productivity of the manufacturing process [16,19]. Moreover, this information, when systematically captured and analyzed, can reveal patterns, inconsistencies, and bottlenecks that might otherwise go unnoticed.
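As an illustration of how such timing data could be analyzed, the following minimal sketch computes cycle times and per-task durations from a timestamped event log. The log layout, the task names, and the use of the mean task duration as a bottleneck indicator are assumptions made for this example and are not taken from the system described in this paper.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical event log: (cycle_id, task_name, start_seconds, end_seconds).
events = [
    (1, "pick part", 0.0, 4.0),
    (1, "inspect", 4.0, 13.0),
    (2, "pick part", 20.0, 25.0),
    (2, "inspect", 25.0, 40.0),
]


def task_durations(log):
    """Group the duration of every task occurrence by task name."""
    durations = defaultdict(list)
    for _cycle, task, start, end in log:
        durations[task].append(end - start)
    return dict(durations)


def cycle_times(log):
    """Cycle time = latest task end minus earliest task start in a cycle."""
    bounds = {}
    for cycle, _task, start, end in log:
        first, last = bounds.get(cycle, (start, end))
        bounds[cycle] = (min(first, start), max(last, end))
    return {cycle: last - first for cycle, (first, last) in bounds.items()}


def slowest_task(log):
    """Task with the highest mean duration (a simple bottleneck indicator)."""
    means = {task: mean(values) for task, values in task_durations(log).items()}
    return max(means, key=means.get)
```

Comparing cycle times across cycles exposes inconsistencies, while the task with the highest mean duration is a natural first candidate when looking for a bottleneck.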

However, despite the potential benefits, the integration of MR into industrial quality control processes is still in its early stages, with many questions remaining about its practical feasibility and effectiveness. Motivated by the pressing need for more efficient and reliable quality control methods and by the potential of MR to transform industrial inspections, this study investigates how MR can be effectively used in industrial QC.

The primary objective of this study is to develop an MR-based quality control system using smart glasses, integrated with a server-based image recognition system, and to evaluate this system’s effectiveness in a controlled environment.

This study also intends to evaluate the performance and viability of this system under real-world conditions. The objective is to identify possible problems and opportunities for improvement and to verify the feasibility of implementing this MR system on a larger operational scale in the manufacturing industry.

In doing so, it is intended to provide a practical roadmap for the adoption of MR in industrial quality control and to contribute to the understanding and advancement of this technology.

The remainder of this paper is organized as follows. Section 2 provides an overview of object detection. Section 3 details the proposed methodology. Section 4 presents the pilot test carried out in a controlled environment, which is intended to evaluate the performance of the system, identify possible problems, and assess its scalability. Section 5 concludes the paper by summarizing the essential findings and highlighting the significance of the proposed methodology for improving quality control systems.

2. Object Detection

In quality control processes enhanced by mixed reality, object detection plays a fundamental role. It links the real-world and digital environments, so that quality assessment and assurance can be carried out directly on the physical part.

Mixed reality, which blends elements of both physical reality and virtual environments, demands a robust mechanism to identify, localize, and analyze objects within the merged context. Object detection technologies, often harnessed through advanced computer vision techniques, enable the system to precisely discern and categorize relevant objects of interest.

The efficacy of quality control processes hinges on accurate and timely identification of specific items, defects, or anomalies. Object detection, operating at the heart of the framework, empowers the system to perform these functions with precision. By seamlessly detecting objects in real-time and overlaying relevant digital information, such as annotations or diagnostic insights, the quality control process achieves an elevated level of comprehensiveness and accuracy.

Over the years, the development of object detection algorithms has undergone significant evolution, driven by advancements in deep learning and the need for more accurate and efficient solutions. One of the pivotal distinctions in this evolution lies in the categorization of algorithms into two-stage and one-stage approaches [20,21].

2.1. Two-Stage Object Detection Algorithms

The inception of two-stage object detection algorithms marked a transformative shift in the field. This paradigm introduced a clear separation between region proposal and object classification stages, addressing the challenges posed by computational inefficiency in previous methods [22]. The R-CNN (Region-based Convolutional Neural Network) family of algorithms, including R-CNN, Fast R-CNN, and Faster R-CNN, played a pivotal role in establishing this approach.

R-CNN, introduced by Girshick et al. in 2014 [23], presented a novel strategy by proposing regions of interest (RoIs) using selective search and then classifying objects within these regions using a convolutional neural network (CNN). This method demonstrated promising results in terms of accuracy but suffered from slow processing speeds, because the CNN was run separately on every proposed region. The subsequent refinement, Fast R-CNN, computed the convolutional features once per image and pooled them for each region of interest, sharing computation across regions and leading to improved efficiency [24].

The pinnacle of the two-stage approach was reached with the introduction of Faster R-CNN by Ren et al. in 2015 [25]. This approach integrated the region proposal process into the network architecture itself using a Region Proposal Network (RPN), enabling end-to-end training. Faster R-CNN achieved remarkable accuracy while significantly reducing processing time, making it a cornerstone in the field of object detection [26,27].

2.2. One-Stage Object Detection Algorithms

While two-stage algorithms were achieving remarkable accuracy, the computational overhead associated with region proposal hindered their real-time applicability. This limitation spurred the development of one-stage object detection algorithms, which aimed to address this issue by performing object classification and localization in a single pass.

The YOLO (You Only Look Once) family of algorithms and the SSD (Single Shot MultiBox Detector) algorithm exemplify the one-stage approach. YOLO, introduced by Redmon et al. in 2016 [28], divided the input image into a grid and predicted bounding boxes and class probabilities directly from grid cells. This design drastically reduced computation time, making real-time object detection feasible [29,30]. YOLO demonstrated competitive accuracy, but its weak point was the challenge of detecting small objects accurately due to the inherent nature of its grid-based architecture.
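The grid assignment described above can be sketched as follows. The function is a simplified illustration, assuming the original YOLO convention in which the grid cell containing the center of a box is responsible for predicting it; the function name and the 448-pixel, 7 × 7 example values are chosen for this illustration only.

```python
def encode_box(cx, cy, w, h, img_w, img_h, grid=7):
    """Map a pixel-space box (center x, center y, width, height) to its grid cell.

    Returns (row, col, x_off, y_off, w_rel, h_rel), where the offsets are the
    position of the box center inside the cell (0..1) and the sizes are
    relative to the whole image.
    """
    gx = cx / img_w * grid          # center position in grid units
    gy = cy / img_h * grid
    col = min(int(gx), grid - 1)    # clamp centers that lie on the image border
    row = min(int(gy), grid - 1)
    return row, col, gx - col, gy - row, w / img_w, h / img_h


# Two small, nearby objects whose centers fall into the same cell.
first = encode_box(10, 10, 8, 8, 448, 448)
second = encode_box(20, 20, 8, 8, 448, 448)
same_cell = first[:2] == second[:2]
```

In this example both small objects are assigned to cell (0, 0), so they compete for the predictions of a single cell, which illustrates why a coarse grid makes small, closely spaced objects difficult to detect.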

On the other hand, SSD, introduced by Liu et al. in 2016 [31], adopted a different strategy. It utilized a set of predefined default bounding boxes with varying aspect ratios and sizes, predicting object classes and offsets for these boxes. This design allowed SSD to effectively address the challenge of detecting objects at different scales and improved its accuracy in small object detection. However, SSD still struggled to match the accuracy of two-stage approaches for larger and more complex scenes.
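A minimal sketch of how such default boxes can be generated for one square feature map is given below. It assumes the common SSD parameterization in which a box of scale s and aspect ratio a has width s·√a and height s/√a, so that its area stays constant across ratios; the feature map size, scale, and ratios in the example are illustrative values, not settings from this study.

```python
from math import sqrt


def default_boxes(fmap_size, scale, aspect_ratios=(1.0, 2.0, 0.5)):
    """Return normalized (cx, cy, w, h) default boxes for a square feature map.

    One box per aspect ratio is centered on every feature map cell.
    """
    boxes = []
    for row in range(fmap_size):
        for col in range(fmap_size):
            cx = (col + 0.5) / fmap_size
            cy = (row + 0.5) / fmap_size
            for ratio in aspect_ratios:
                boxes.append((cx, cy, scale * sqrt(ratio), scale / sqrt(ratio)))
    return boxes


# A coarse 4 x 4 map with large boxes; finer maps would use smaller scales.
coarse = default_boxes(fmap_size=4, scale=0.2)
```

Repeating this for several feature maps of different resolution, each with its own scale, yields boxes that cover both small and large objects.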

2.3. Evolutionary Trends

The evolution of object detection algorithms has been a dynamic interplay between the two-stage and one-stage approaches, each catering to specific requirements and challenges. Two-stage algorithms excelled in accuracy but often sacrificed speed, whereas one-stage algorithms offered real-time capabilities at the expense of accuracy, especially for small objects and complex scenes [32,33].

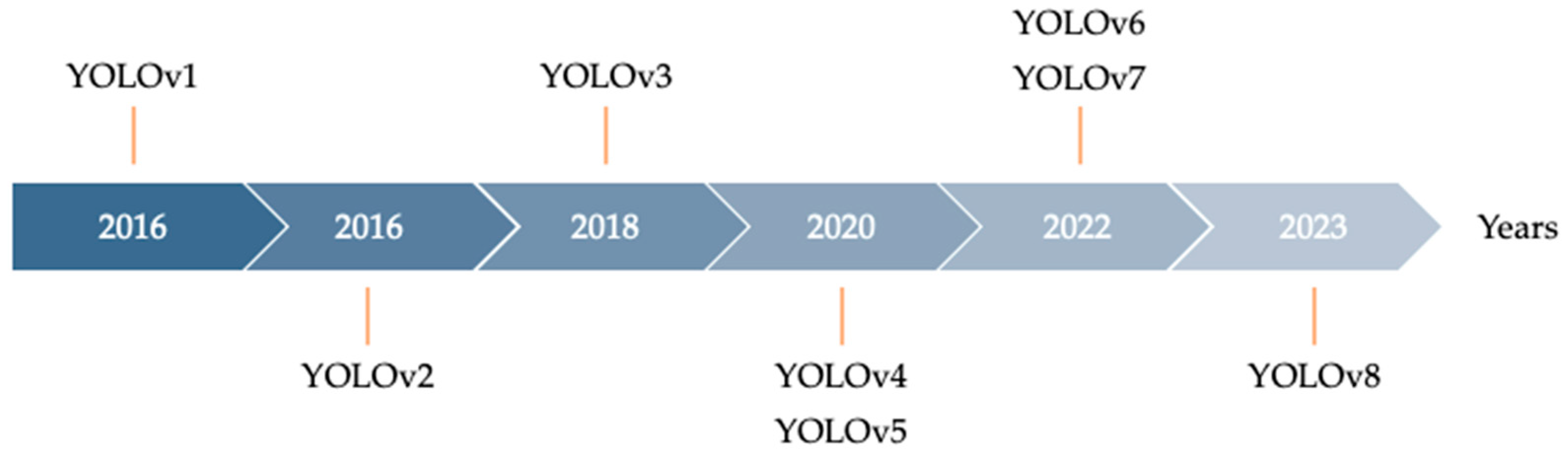

Recent advancements have further refined these paradigms. The YOLO series, culminating in YOLOv8 in 2023, has shown significant improvements in accuracy and speed, making it increasingly competitive with two-stage approaches [34,35,36].

Figure 1 presents a concise timeline of the historical dates corresponding to various versions of the YOLO algorithm. The visual representation highlights the progression of YOLO from its inception to the latest versions, including YOLOv8.

In the historical evolution of the YOLO algorithm, certain versions have stood out due to their prominence and importance in shaping the landscape of object detection. Notably, YOLOv1, YOLOv3, and YOLOv4 have garnered significant attention and impact within the computer vision community.

The inaugural version of YOLO introduced the concept of one-stage object detection, revolutionizing the field. Its pioneering design, which predicted bounding boxes and class probabilities directly in a single pass, laid the foundation for subsequent iterations. Despite its relative simplicity, YOLOv1 showcased the potential of real-time object detection, setting the stage for further advancements.

YOLOv3 marked a substantial leap in terms of accuracy and versatility. With the introduction of a feature pyramid network and multiple detection scales, YOLOv3 improved detection performance across various object sizes. This version’s increased accuracy and the ability to handle small and large objects alike further solidified YOLO’s reputation as a powerful object detection algorithm [37].

YOLOv4 represented a significant milestone by substantially improving both accuracy and speed. The architecture was optimized with techniques such as a CSPDarknet53 backbone, spatial pyramid pooling, and mosaic data augmentation. This version achieved state-of-the-art performance by enhancing feature extraction, introducing novel training strategies, and incorporating efficient design choices. YOLOv4’s holistic approach to accuracy and speed propelled it to the forefront of object detection algorithms [38,39].

Although YOLOv8 may not yet be as widely recognized as its predecessors, it has gained prominence due to its reported advancements in accuracy. With the promise of achieving the highest accuracy in the YOLO series, YOLOv8 signifies ongoing efforts to refine the algorithm. This version demonstrates the continued commitment to enhancing object detection performance, particularly in scenarios involving small objects and complex scenes [36].

The YOLOv8 version presents distinct advantages over other solutions in the field of object detection. Its advanced feature extraction capabilities coupled with heightened accuracy ensure precise identification of objects in various scenarios. This version’s holistic approach to accuracy and speed positions it as a standout choice for applications requiring both precision and efficiency in object detection [40,41,42].

3. Methodology

The development of a robust MR-based quality control system for industrial manufacturing necessitates a carefully designed architecture. This architecture revolves around integrating a smart glasses device, that is, an MR headset, with a server-based image recognition system.

The architecture can be conceptually divided into two main segments: the client-side MR system and the server-side image recognition system.

The client-side MR system, which the quality inspector interacts with, employs the smart glasses device. This MR headset captures the video stream of the real-world environment, primarily components inspected for quality control, and streams it to the server.

The device is equipped with depth sensors and multiple cameras that capture a rich, three-dimensional view of the world. This information is crucial in correctly overlaying the augmented reality elements on the real-world view.

The device runs a custom-built application developed with a compatible development platform such as Unity or Unreal [43,44], combined with the Mixed Reality Toolkit (MRTK) [45,46], a collection of scripts and components designed to accelerate the development of mixed reality applications.

The server-side system is responsible for analyzing the incoming video stream, identifying objects, and detecting defects. The server runs a machine learning model and uses a computer vision framework (OpenCV in the proposed methodology, see Section 3.2) for real-time video processing and computer vision tasks.

This architecture, combining an MR headset with a powerful server-based image recognition system, is intended to enable a robust and efficient MR-based quality control system that can outperform traditional manual inspection in terms of speed and accuracy.

The proposed framework architecture for the server-side image recognition system operates on a two-stage basis: training the model and real-time processing. These stages are fundamental to the successful implementation of an MR-based quality control system.

3.1. Model Training

The proposed process for model training, the first stage, is presented in Figure 2.

The proposed process for training a custom image dataset is based on five main steps.

The first step is data collection and preprocessing.

In the context of quality control in industrial manufacturing, and in the automotive industry in particular, there is a need to create a custom image dataset sourced directly from real-world field environments.

The intricacy and specificity of tasks inherent to this industry demand a dataset that faithfully replicates the complex conditions and nuances encountered during actual operations. By harnessing images captured from real field settings, the dataset becomes a potent tool for training models that can accurately and effectively detect defects, anomalies, and quality deviations. This approach not only aligns with the industry’s unique challenges but also ensures that the models developed are finely attuned to the intricacies of automotive quality control, resulting in robust, reliable, and industry-relevant solutions.

For each product and component that needs to be inspected, images should ideally capture a variety of both faulty and non-faulty examples.

Images should be collected under various lighting conditions and angles and should feature a wide array of parts and defect types.

Preprocessing the images involves resizing, normalizing, and augmenting the data to enhance the model’s ability to generalize and perform well on real-world data. By applying various transformations to the images, such as rotation, scaling, and cropping, the augmented data set is created to simulate different possible scenarios the model may encounter during real-time processing.
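The preprocessing and augmentation operations named above can be illustrated with the following dependency-free sketch. Plain nested lists stand in for grayscale images so that the example runs without additional packages; in practice, a library such as OpenCV or NumPy would be used. All function names are hypothetical, and the set of transformations is only one possible choice.

```python
def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2-D list of pixel values."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def normalize(img):
    """Scale 8-bit pixel values to the range [0, 1]."""
    return [[p / 255.0 for p in row] for row in img]


def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate_90(img):
    """Rotate the image clockwise by 90 degrees."""
    return [list(row) for row in zip(*img[::-1])]


def center_crop(img, size):
    """Cut a size x size window from the middle of the image."""
    top = (len(img) - size) // 2
    left = (len(img[0]) - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def augment(img):
    """Return the original image plus three transformed variants."""
    return [img, flip_horizontal(img), rotate_90(img), rotate_90(rotate_90(img))]


sample = [[0, 255], [128, 64]]
resized = resize_nearest(sample, 4, 4)
variants = augment(normalize(resized))
```

When images are annotated with bounding boxes, any geometric transformation applied to an image must also be applied to its boxes, which is one reason dedicated augmentation tools are usually preferred.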

Following data collection is the equally critical step of image annotation. Here, each image in the dataset is meticulously reviewed, and the relevant objects are marked or labeled with pertinent details about the type of part and the nature of the defect, if any. The annotation process is often a manual and time-consuming endeavor, yet it is paramount for creating a reliable training dataset. This annotation gives the model the ground truth data points to learn from.
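To make the notion of ground truth concrete, the sketch below converts one pixel-space bounding box into a line of the plain-text label format commonly used for YOLO training, in which each line holds a class index followed by the box center and size normalized by the image dimensions. The class list is hypothetical and the function name is chosen for this example; annotation platforms typically export such files automatically.

```python
# Hypothetical class list; the index in this list is the class id in the label.
CLASSES = ["part_ok", "part_defect"]


def to_yolo_label(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Format one annotation as 'class cx cy w h' with normalized coordinates."""
    cx = (x_min + x_max) / 2 / img_w
    cy = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# A defect box drawn on a 640 x 480 image.
line = to_yolo_label(CLASSES.index("part_defect"), 100, 50, 300, 150, 640, 480)
```

Because the coordinates are normalized, the same label remains valid when the image is resized during preprocessing.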

With the dataset prepared, the next step is to select the appropriate models for the tasks.

For part identification, a convolutional neural network (CNN) is suitable due to its ability to learn spatial hierarchies of features in images. In the case of object detection, the framework leverages YOLO, a state-of-the-art object detection model known for its accuracy and speed in detecting and localizing multiple objects in a single pass, as indicated in the previous section.

YOLO ushered in a new era of object detection methodologies, veering away from traditional two-step processes that involved identifying regions of interest and subsequently classifying them.

By tackling images in one go, YOLO achieves real-time object detection speeds, making it an ideal candidate for applications that require immediate results such as industrial inspections. This efficiency, however, does not compromise its ability to provide accurate object detection. In fact, YOLO’s holistic view of an image allows it to contextualize information about classes and their appearance, contributing to its precise object detection capabilities. Furthermore, its architecture and training processes enable it to generalize effectively, enhancing its performance in a broad range of scenarios and environments.

The next step is model training. Training the selected models is an iterative and multi-step process. The dataset is split into training and validation sets, ensuring that the models learn from diverse examples and can be validated on unseen data. Hyperparameters are fine-tuned, and various optimization algorithms are tested to achieve high accuracy and robust performance.
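A reproducible split of the annotated images can be sketched as follows. The 70/20/10 proportions, the fixed random seed, and the file names are assumptions made for this example; the held-out test portion is kept aside for the final evaluation.

```python
import random


def split_dataset(items, val_fraction=0.2, test_fraction=0.1, seed=42):
    """Shuffle once with a fixed seed, then cut into train, validation, and test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


# Hypothetical image file names.
images = [f"img_{i:03d}.jpg" for i in range(100)]
train, val, test = split_dataset(images)
```

Fixing the seed keeps the three subsets identical between training runs, so that changes in validation results can be attributed to the hyperparameters rather than to a different split.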

After training, the models are evaluated on a separate test set, and any necessary refinements are made based on the evaluation results.
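The evaluation metrics are not specified here; as one common possibility, the sketch below scores predicted boxes against ground-truth boxes using intersection over union (IoU) and derives precision and recall from a greedy one-to-one matching. The 0.5 threshold and the function names are assumptions for this illustration.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, threshold=0.5):
    """Match each prediction to its best remaining ground-truth box."""
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predictions:
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= threshold:
            unmatched.remove(best)   # each ground-truth box is matched once
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall
```

Low precision points to false alarms, while low recall points to missed parts or defects; which of the two matters more depends on the cost of each error in the inspection process.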

Once the models are trained, evaluated, and refined, they are deployed to the server, ready for the second stage, real-time processing.

3.2. Real-Time Processing

This stage involves analyzing and processing the video stream captured by the smart glasses device worn by the quality inspector. By using OpenCV, YOLO, and other machine learning models, the server-side system can identify component parts, detect defects, and generate augmented reality data to provide real-time feedback to the inspector.

OpenCV, an open-source computer vision and machine learning software library, was chosen for this proposed methodology due to its wide array of functionalities, high performance, and robustness [47,48,49]. It is particularly well-suited for real-time image and video processing tasks, making it ideal for parsing and analyzing the incoming video stream. OpenCV has an extensive collection of algorithms for image processing, feature extraction, and image manipulation, which are invaluable for enhancing the visibility of defects and improving the accuracy of defect detection.

The real-time processing stage starts when the server receives the video stream from the client-side MR system. Using OpenCV, the continuous video stream is parsed into individual frames, enabling frame-by-frame analysis. Once the frames are extracted, the previously trained part identification model scans them to recognize the different component parts present. Subsequently, the YOLO-based object detection model, known for its accuracy and speed, is employed to localize objects of interest within the frames. The identified parts then undergo inspection by the defect detection model to determine the presence and type of defects.
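The frame-by-frame flow described above can be outlined as follows. This is a structural sketch only: the detector and the defect model are replaced by stand-in functions operating on dictionaries, and all names are hypothetical. In a real deployment the frames would come from the decoded video stream and the two callables would wrap the trained models.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Finding:
    part: str    # label assigned to the localized part
    box: Box     # where the part was found in the frame
    defect: str  # "none" when no defect is reported


def process_stream(
    frames: Iterable[object],
    detect_parts: Callable[[object], List[Tuple[str, Box]]],
    classify_defect: Callable[[object, Box], str],
) -> Iterator[List[Finding]]:
    """Yield the findings for each frame, in arrival order."""
    for frame in frames:
        yield [
            Finding(part, box, classify_defect(frame, box))
            for part, box in detect_parts(frame)
        ]


# Stand-in models so the sketch runs without trained weights (hypothetical).
def fake_detector(frame):
    return frame["parts"]


def fake_defect_model(frame, box):
    return frame["defects"].get(box, "none")


demo_frames = [
    {"parts": [("piston", (10, 10, 50, 50))], "defects": {}},
    {"parts": [("seal", (5, 5, 20, 20))], "defects": {(5, 5, 20, 20): "misaligned"}},
]
results = list(process_stream(demo_frames, fake_detector, fake_defect_model))
```

Passing the models in as callables keeps the loop independent of any particular detector, so a retrained model can be substituted without changing the processing code.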

One of the key challenges in defect detection is the variability in defect appearances, which may be due to lighting conditions, angles, or other environmental factors. OpenCV plays a significant role in addressing this challenge. It offers image enhancement techniques such as histogram equalization, edge detection, and contrast adjustment, which can make subtle defects more visible and easier to detect. By incorporating OpenCV, the system can reveal defects that might otherwise be missed by the human eye, contributing to more accurate and reliable defect detection.
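As a worked example of one of these techniques, the following sketch implements global histogram equalization for 8-bit grayscale pixels in plain Python, using the standard mapping based on the cumulative histogram. It is written without dependencies purely for illustration; OpenCV provides an equivalent operation for 8-bit single-channel images (`cv2.equalizeHist`).

```python
def equalize_histogram(pixels, levels=256):
    """Spread the grey levels of a flat list of 8-bit pixels over the full range."""
    histogram = [0] * levels
    for p in pixels:
        histogram[p] += 1

    # Cumulative distribution: number of pixels with value <= each level.
    cdf, running = [], 0
    for count in histogram:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:  # constant image: nothing to stretch
        return list(pixels)
    return [
        round((cdf[p] - cdf_min) * (levels - 1) / (total - cdf_min))
        for p in pixels
    ]


# A low-contrast patch: all values lie between 100 and 120.
patch = [100, 100, 110, 120, 120]
enhanced = equalize_histogram(patch)
```

For this patch the grey-level range grows from 20 to 255, which shows how a faint intensity difference, such as a shallow scratch, becomes easier to separate from its background.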

The analysis of each frame culminates in the creation of augmented reality data, which is a synthesis of insights from part identification and defect detection. This MR data includes information about the part type, defect location, severity, and recommended corrective action. OpenCV is used to create visually compelling MR overlays that are superimposed onto the original frames. These overlays highlight the defective areas, provide annotations detailing the defects, and offer visual cues to guide the inspector in addressing the issues.
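The information listed above could be packaged for transmission as in the following sketch, which serializes the results for one frame to JSON. The field names, the use of JSON, and the example values are assumptions for illustration; the actual message format exchanged between the server and the smart glasses is not described here.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class OverlayItem:
    part_type: str
    defect_box: List[int]    # [x_min, y_min, x_max, y_max] in frame pixels
    severity: str
    corrective_action: str


def encode_overlay(frame_id: int, items: List[OverlayItem]) -> str:
    """Serialize the overlay data of one frame as a JSON string."""
    return json.dumps({"frame_id": frame_id, "items": [asdict(i) for i in items]})


def decode_overlay(message: str):
    """Rebuild the frame id and overlay items from a JSON string."""
    payload = json.loads(message)
    return payload["frame_id"], [OverlayItem(**item) for item in payload["items"]]


item = OverlayItem("seal", [120, 80, 180, 140], "high", "reseat the seal")
message = encode_overlay(42, [item])
```

Including a frame identifier lets the client associate each overlay with the frame it was computed from, which helps when results arrive with some delay.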

The enriched frames with MR overlays are transmitted back to the client-side MR system, where they are presented to the quality inspector in real time. It is vital that this transmission occurs swiftly to minimize latency and ensure a seamless MR experience for the inspector. The inspector can then take immediate action based on the insights provided by the MR overlay.

The real-time processing stage is an iterative process that incorporates feedback from quality inspectors to continuously improve the system. This feedback is invaluable for refining the models and ensuring that the system remains responsive to real-world challenges and evolving defect patterns.

4. Case Study

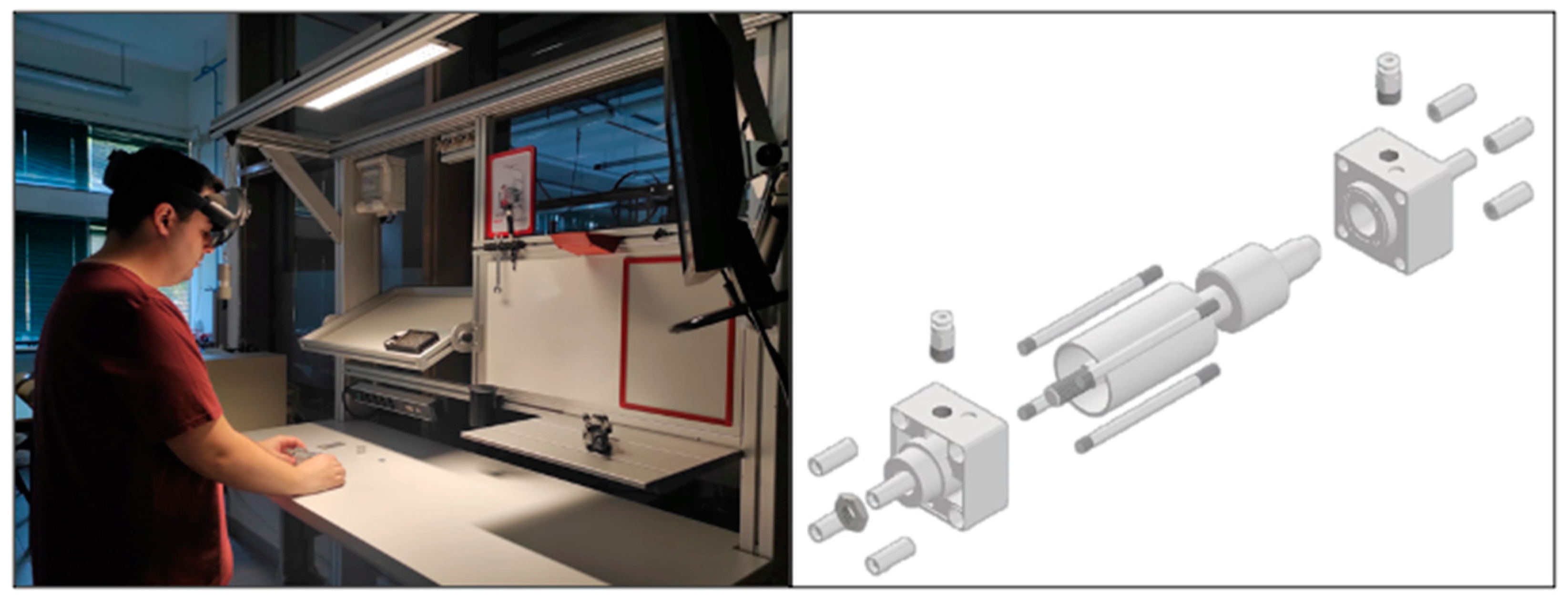

This case study explores the practical application and testing of a mixed reality-based quality control system for the assembly of a pneumatic cylinder. The prototype was developed and tested in a controlled laboratory setting to evaluate its effectiveness in providing real-time feedback on assembly quality.

The prototype setup is presented in Figure 3.

The prototype setup for this case study involved the assembly of a pneumatic cylinder in a laboratory environment. The primary tool for the implementation of the mixed reality-based quality control system was the Microsoft HoloLens 2 smart glasses [50]. These glasses were chosen for their advanced capabilities in capturing and overlaying mixed reality elements in a real-world environment.

The pneumatic cylinder assembly process involved multiple components, including the cylinder barrel, end caps, piston, and seals. Participants were instructed to assemble the cylinder under different conditions, with both correct and incorrect assembly scenarios.

The objective was to determine the effectiveness of the prototype system in accurately assessing the quality of the assembly process and providing real-time feedback to the assembler.

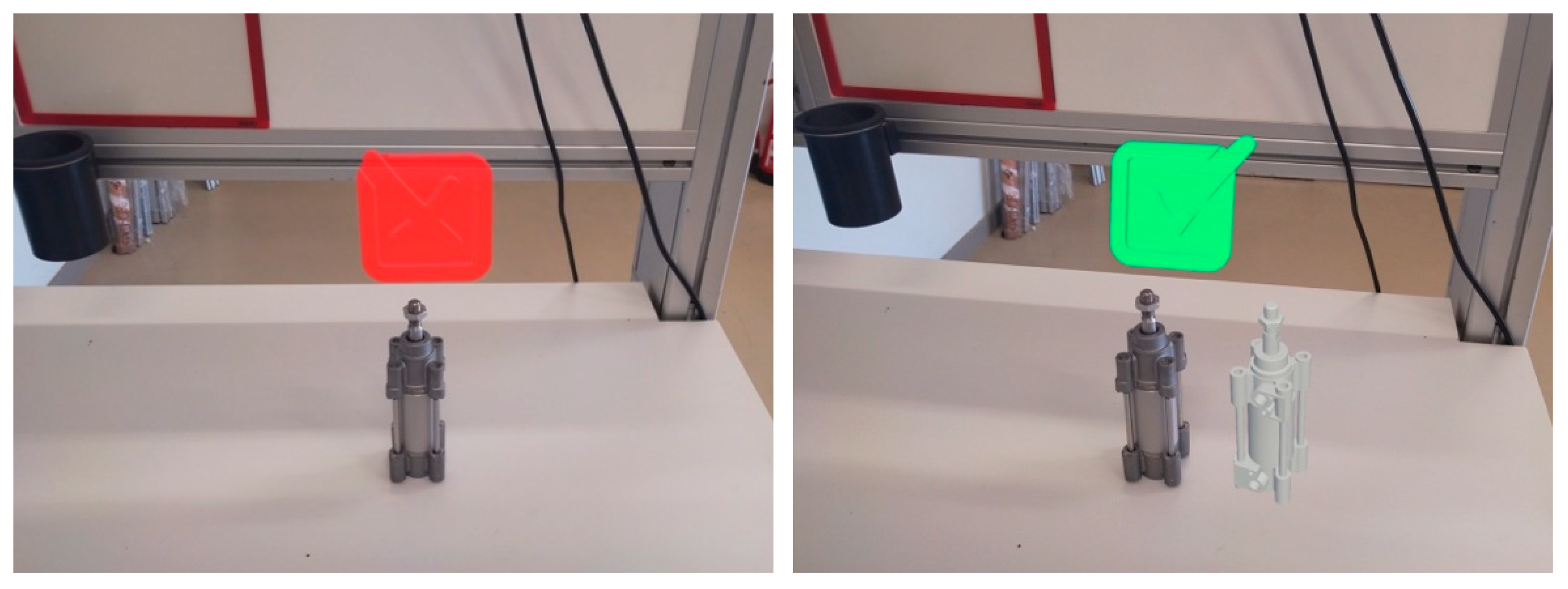

Based on the analysis, appropriate holographic overlays are displayed on the smart glasses to indicate whether the assembly is correct or not.

The initial phase of model training for the quality control system hinges on collecting a set of images from the pneumatic cylinder prototype. The quality and diversity of the image dataset serve as the foundation for the system’s accuracy and robustness.

The pneumatic cylinder is assembled and disassembled in different ways, reflecting a wide range of scenarios, including correct and incorrect assemblies, missing parts, and misaligned components. Images of these various conditions are captured at different stages of assembly, encompassing individual components, partial assemblies, and fully assembled cylinders, as shown in Figure 4.

Figure 4.

Captured images of pneumatic cylinder components and assembly.

This image collection ensures that the dataset includes all potential variations that could occur during the assembly of a pneumatic cylinder.

Once gathered, the images are organized into categories according to the assembly stage, component type, and the correctness of the assembly. Proper categorization simplifies the annotation process, which is a crucial step in training the machine learning model.

Roboflow, a web-based platform for dataset management and image annotation, was used for this purpose [51]. Annotations were made both for parts of the cylinder and for the complete model.

Roboflow was chosen for dataset management and image annotation in this project due to its streamlined interface, comprehensive toolset, and the capability to manage large datasets efficiently. It offers a user-friendly platform that simplifies the annotation process, making it more accurate and consistent.

The model was trained using the YOLOv8 object detection algorithm.

During the real-time processing stage, the video stream captured by the Microsoft HoloLens 2 smart glasses is transmitted to the server for analysis by the trained YOLO model.

Combined with the YOLO model, OpenCV ensures that the system can swiftly and accurately identify components and assess the assembly quality.

The analysis results are then sent back to the smart glasses, where the custom Unity application processes the information and generates an MR overlay on the inspector’s view.

Figure 5 shows the information presented by the HoloLens in the validation process of the pneumatic cylinder assembly quality control.

If the assembly is correct, a holographic indication of “OK” appears on the glasses. If the assembly is found to be incorrect or incomplete, a holographic indication of “NOT OK” appears. This real-time feedback mechanism allows the inspector to instantly identify and correct any errors, enhancing the efficiency and accuracy of the assembly process.
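One simple way to derive such a verdict from the detection results is sketched below: the labels detected in a frame are compared with a list of required components. The component names follow the parts mentioned earlier in this section, but the required quantities and the decision rule itself are assumptions for illustration and do not necessarily reflect how the prototype decides.

```python
from collections import Counter

# Assumed bill of materials for one cylinder (quantities are hypothetical).
REQUIRED = Counter({"cylinder_barrel": 1, "end_cap": 2, "piston": 1, "seal": 2})


def assembly_status(detected_labels, required=REQUIRED):
    """Return ('OK' or 'NOT OK', sorted list of missing components)."""
    found = Counter(detected_labels)
    missing = required - found      # Counter subtraction keeps positive counts only
    unexpected = found - required
    status = "OK" if not missing and not unexpected else "NOT OK"
    return status, sorted(missing.elements())


complete = ["cylinder_barrel", "end_cap", "end_cap", "piston", "seal", "seal"]
status, missing = assembly_status(complete[:-1])   # one seal not detected
```

Returning the list of missing components alongside the verdict would allow the overlay to state not only that an assembly is incorrect but also what needs to be corrected.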

The consistency and accuracy of the server-based image recognition system were evident, contributing to higher levels of quality control and a decreased risk of defective products.

The prototype confirmed the feasibility and potential benefits of the proposed quality control methodology, highlighting the advantages of incorporating mixed reality and computer vision technologies in the inspection process.

5. Conclusions

The study aimed to develop a mixed reality (MR) based quality control system by integrating smart glasses with a server-based image recognition system and assessing its effectiveness in a controlled environment. Moreover, it sought to evaluate the performance and viability of this system under real-world conditions, identify possible problems and areas for improvement, and verify the feasibility of implementing this MR system on a larger operational scale in the manufacturing industry. Lastly, the study intended to contribute to the understanding and advancement of MR technology and provide a practical roadmap for its adoption in industrial quality control.

In terms of the objectives set, the study was highly successful. The MR-based quality control system was developed and successfully integrated smart glasses and a server-based image recognition system. In controlled conditions, the system demonstrated its effectiveness in enhancing the quality control process. The real-time feedback, reduction of human error, and enhanced observational capabilities provided by the smart glasses all contributed to a noticeable improvement in the quality control process.

This case study highlighted the effectiveness of combining YOLO and OpenCV for building robust real-time object detection systems. The integrated systems can automate tasks, enhance accuracy, and streamline operations, offering significant efficiency gains.

Additionally, through prototype testing under real-world conditions, the study was able to identify potential problems and areas for improvement. This valuable insight is instrumental in refining the MR system for larger operational scales, ensuring it is robust and reliable in the manufacturing industry. The successful prototype testing validated the feasibility of implementing this MR system on a broader scale, confirming that it is not just theoretically promising but also practically applicable.

The study successfully provided a practical roadmap for the adoption of MR in industrial quality control. The detailed analysis of the development, implementation, and evaluation process offers a valuable resource for organizations considering the adoption of MR technology in their quality control processes. The study contributes to the advancement of MR technology and its applications in industrial settings.

The use of MR in quality control presents numerous advantages, including real-time feedback, increased accuracy, reduced human error, and improved overall efficiency. In this context, the development of an MR-based quality control system becomes an attractive proposition for industries seeking to innovate and optimize their production processes.

Author Contributions

J.S. conceptualized the study, developed the methodology, and wrote the paper; P.C. and L.S. conceptualized the study and developed the methodology; P.V. provided validation and reviewed the manuscript; P.M. provided validation and reviewed the manuscript; J.H. supervised the study and reviewed the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work is funded by National Funds through the FCT—Foundation for Science and Technology, I.P., within the scope of the project Ref. UIDB/05583/2020. Furthermore, we would like to thank the Research Centre in Digital Services (CISeD) and the Instituto Politécnico de Viseu for their support.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Juran, J.M.; Defeo, J.A. Juran’s Quality Handbook: The Complete Guide to Performance Excellence; McGraw-Hill: New York, USA, 2010.

- Thoben, K.; Wiesner, S.; Wuest, T. Industrie 4.0 and Smart Manufacturing – A Review of Research Issues and Application Examples. Int. J. Automation Technol. 2017, 11, 4–16.

- Jayaram, A. Lean Six Sigma Approach for Global Supply Chain Management Using Industry 4.0 and IIoT. In Proceedings of the 2nd International Conference on Contemporary Computing and Informatics (IC3I), Greater Noida, India, 14–17 December 2016; pp. 89–94.

- Pacana, A.; Czerwińska, K.; Dwornicka, R. Analysis of Quality Control Efficiency in The Automotive Industry. Transportation Research Procedia 2021, 55, 691–698.

- Kim, H.; Lin, Y.; Tseng, T.L.B. A Review on Quality Control in Additive Manufacturing. Rapid Prototyping Journal 2018, 24, 645–669.

- Russell, J.P. The ASQ Auditing Handbook, 4th ed.; ASQ Quality Press: Milwaukee, Wisconsin, USA, 2012.

- Kujawińska, A.; Vogt, K. Human Factors in Visual Quality Control. Management and Production Engineering Review 2015, 6, 25–31.

- Drury, C.G. Human Factors and Automation in Test and Inspection. In Handbook of Industrial Engineering, 3rd ed.; Salvendy, G., Ed.; John Wiley and Sons: Hoboken, NJ, USA, 2001; pp. 1887–1920.

- Gallwey, T.J. Selection Tests for Visual Inspection on a Multiple Fault Type Task. Ergonomics 1982, 25, 1077–1092.

- See, J.E. Visual Inspection Reliability for Precision Manufactured Parts. Hum. Factors 2015, 57, 1427–1442.

- Shahin, M.; Chen, F.F.; Bouzary, H.; Krishnaiyer, K. Integration of Lean Practices and Industry 4.0 Technologies: Smart Manufacturing for Next-Generation Enterprises. Int. J. Adv. Manuf. Technol. 2020, 107, 2927–2936.

- Silva, J.; Nogueira, P.; Martins, P.; Vaz, P.; Abrantes, J. Exploring the Potential of Mixed Reality as a Support Tool for Industrial Training and Production Processes: A Case Study Utilizing Microsoft HoloLens. In Advances in Intelligent Systems and Computing; Iglesia, D., Santana, J., Rivero, A., Eds.; Springer: Cham, Switzerland, 2023; pp. 187–196.

- Escobar-Castillejos, D.; Noguez, J.; Bello, F.; Neri, L.; Magana, A.J.; Benes, B. A Review of Training and Guidance Systems in Medical Surgery. Appl. Sci. 2020, 10, 5752.

- Segovia, D.; Mendoza, M.; Mendoza, E.; González, E. Augmented Reality as a Tool for Production and Quality Monitoring. Procedia Computer Science 2015, 75, 291–300.

- Baroroh, D.K.; Chu, C.H.; Wang, L. Systematic Literature Review on Augmented Reality in Smart Manufacturing: Collaboration Between Human and Computational Intelligence. Journal of Manufacturing Systems 2021, 61, 696–711.

- Muñoz, A.; Martí, A.; Mahiques, X.; Gracia, L.; Solanes, J.E.; Tornero, J. Camera 3D Positioning Mixed Reality-Based Interface to Improve Worker Safety, Ergonomics and Productivity. CIRP J. Manuf. Sci. Technol. 2020, 28, 24–37.

- Rokhsaritalemi, S.; Sadeghi-Niaraki, A.; Choi, S.M. A Review on Mixed Reality: Current Trends, Challenges and Prospects. Appl. Sci. 2020, 10, 636.

- Flavián, C.; Ibáñez-Sánchez, S.; Orús, C. The Impact of Virtual, Augmented and Mixed Reality Technologies on the Customer Experience. J. Bus. Res. 2019, 100, 547–560.

- Runji, J.M.; Lin, C.-Y. Markerless Cooperative Augmented Reality-Based Smart Manufacturing Double-Check System: Case of Safe PCBA Inspection Following Automatic Optical Inspection. Robot. Comput. Integr. Manuf. 2020, 64, 101957.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object Detection Using YOLO: Challenges, Architectural Successors, Datasets and Applications. Multimed. Tools Appl. 2023, 82, 9243–9275.

- Hussain, M. YOLO-v1 to YOLO-v8, the Rise of YOLO and Its Complementary Nature Toward Digital Manufacturing and Industrial Defect Detection. Machines 2023, 11, 677.

- Ren, Y.; Zhu, C.; Xiao, S. Small Object Detection in Optical Remote Sensing Images via Modified Faster R-CNN. Appl. Sci. 2018, 8, 813.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Washington, DC, USA, 11–18 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Transactions on Pattern Analysis and Machine Intelligence 2017, 39, 1137–1149.

- Shao, F.; Wang, X.; Meng, F.; Zhu, J.; Wang, D.; Dai, J. Improved Faster R-CNN Traffic Sign Detection Based on a Second Region of Interest and Highly Possible Regions Proposal Network. Sensors 2019, 19, 2288.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-time Object Detection. In Proceedings of the IEEE Conference on Computer Vision, Las Vegas, USA, 26 June–1 July 2016; pp. 779–788.

- Ravi, N.; El-Sharkawy, M. Real-Time Embedded Implementation of Improved Object Detector for Resource-Constrained Devices. J. Low Power Electron. Appl. 2022, 12, 21.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo Algorithm Developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Padilla, R.; Passos, W.L.; Dias, T.L.B.; Netto, S.L.; da Silva, E.A.B. A Comparative Analysis of Object Detection Metrics with a Companion Open-Source Toolkit. Electronics 2021, 10, 279.

- Liu, H.; Duan, X.; Chen, H.; Lou, H.; Deng, L. DBF-YOLO: UAV Small Targets Detection Based on Shallow Feature Fusion. IEEJ Trans. Electr. Electron. Eng. 2023, 18, 605–612.

- Li, Y.; Fan, Q.; Huang, H.; Han, Z.; Gu, Q. A Modified YOLOv8 Detection Network for UAV Aerial Image Recognition. Drones 2023, 7, 304.

- Kim, J.H.; Kim, N.; Won, C.S. High-Speed Drone Detection Based On Yolo-V8. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–11 June 2023; pp. 1–2.

- Lou, H.; Duan, X.; Guo, J.; Liu, H.; Gu, J.; Bi, L.; Chen, H. DC-YOLOv8: Small-Size Object Detection Algorithm Based on Camera Sensor. Electronics 2023, 12, 2323.

- Liu, G.; Nouaze, J.C.; Touko, P.L.; Kim, J.H. YOLO-Tomato: A Robust Algorithm for Tomato Detection Based on YOLOv3. Sensors 2020, 20, 2145.

- Yu, J.; Zhang, W. Face Mask Wearing Detection Algorithm Based on Improved YOLO-v4. Sensors 2021, 21, 3263.

- Gai, R.; Chen, N.; Yuan, H. A Detection Algorithm for Cherry Fruits Based on the Improved YOLO-v4 Model. Neural Comput. Appl. 2023, 35, 13895–13906.

- Ruiz-Ponce, P.A.; Ortiz-Perez, D.; Garcia-Rodriguez, J.; Kiefer, B. POSEIDON: A Data Augmentation Tool for Small Object Detection Datasets in Maritime Environments. Sensors 2023, 23, 3691.

- Ma, M.; Pang, H. SP-YOLOv8s: An Improved YOLOv8s Model for Remote Sensing Image Tiny Object Detection. Appl. Sci. 2023, 13, 8161.

- Yandouzi, M.; Grari, M.; Berrahal, M.; Idrissi, I.; Moussaoui, O.; Azizi, M.; Ghoumid, K.; Elmiad, A.K. Investigation of Combining Deep Learning Object Recognition with Drones for Forest Fire Detection and Monitoring. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 377–384.

- Samini, A.; Palmerius, K.L.; Ljung, P. A Review of Current, Complete Augmented Reality Solutions. In Proceedings of the International Conference on Cyberworlds (CW), Caen, France, 28–30 September 2021; pp. 49–56.

- Dontschewa, M.; Stamatov, D.; Marinov, M.B. Mixed Reality Smart Glass Application for Interactive Working. In Proceedings of the XXVI International Scientific Conference Electronics, Sozopol, Bulgaria, 13–15 September 2017; pp. 1–4.

- Anjum, T.; Lawrence, S.; Shabani, A. Augmented Reality and Affective Computing on the Edge Makes Social Robots Better Companions for Older Adults. In Proceedings of the 2nd International Conference on Robotics, Computer Vision and Intelligent Systems (ROBOVIS), Online, 27–28 October 2021; pp. 196–204.

- Yoon, Y.-S.; Kim, D.-M.; Suh, J.-W. Augmented Reality Services Using Smart Glasses for Great Gilt-bronze Incense Burner of Baekje. In Proceedings of the International Conference on Electronics, Information, and Communication (ICEIC), Jeju, Republic of Korea, 6–9 February 2022; pp. 1–4.

- Deshpande, H.; Singh, A.; Herunde, H. Comparative Analysis on YOLO Object Detection with OpenCV. Int. Journal of Research in Industrial Engineering 2020, 9, 46–64.

- Sharma, A.; Pathak, J.; Prakash, M.; Singh, J.N. Object Detection Using OpenCV and Python. In Proceedings of the 3rd International Conference on Advances in Computing, Communication Control and Networking (ICAC3N), Greater Noida, India, 17–18 December 2021; pp. 501–505.

- Howse, J.; Minichino, J. Learning OpenCV 4 Computer Vision with Python 3: Get to Grips with Tools, Techniques, and Algorithms for Computer Vision and Machine Learning, 3rd ed.; Packt Publishing: Birmingham, UK, 2020.

- Park, S.; Bokijonov, S.; Choi, Y. Review of Microsoft HoloLens Applications Over the Past Five Years. Appl. Sci. 2021, 11, 7259.

- Protik, A.A.; Rafi, A.H.; Siddique, S. Real-time Personal Protective Equipment (PPE) Detection Using YOLOv4 and TensorFlow. In Proceedings of the 2021 IEEE Region 10 Symposium (TENSYMP), Jeju, Republic of Korea, 23–25 August 2021; pp. 1–6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).