2. Materials and methods

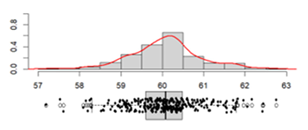

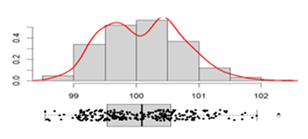

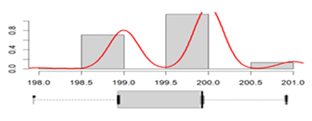

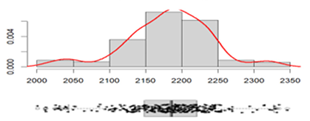

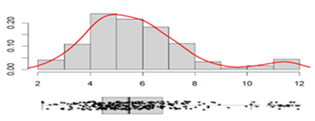

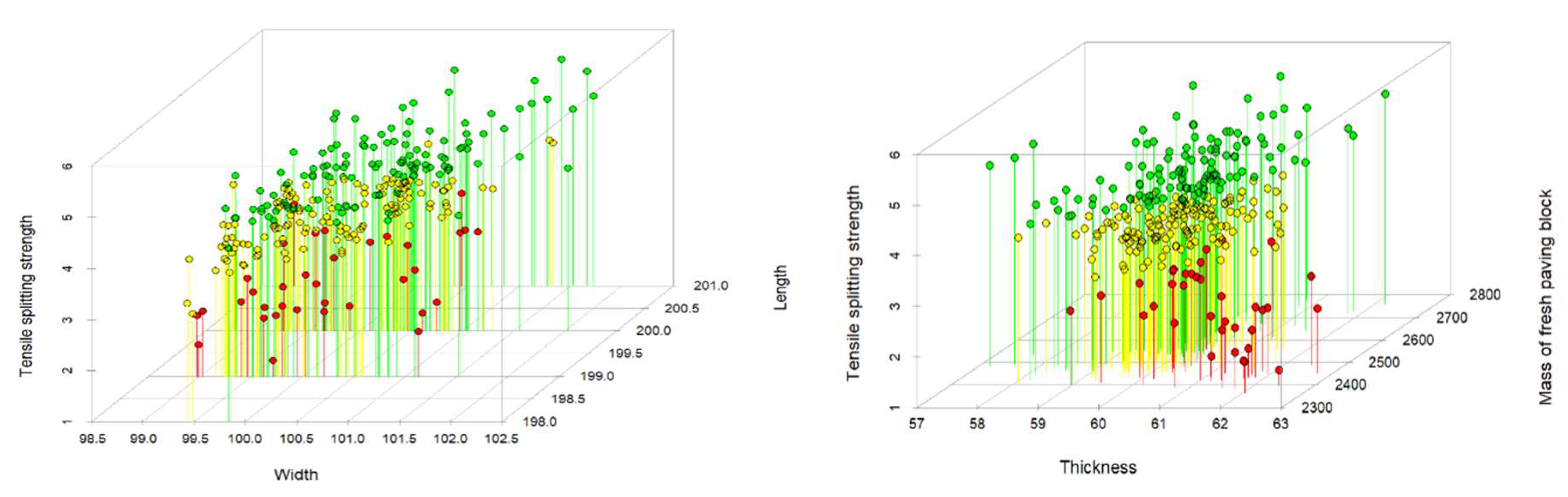

The paving blocks in this investigation were obtained from a factory in Quito, Ecuador, that produces 30 000 units per day. The paving block model is rectangular, with nominal dimensions of 200 mm in length, 100 mm in width and 60 mm in thickness. The research follows a quantitative approach, since all the variables analyzed are continuous and quantitative.

First, the sample size was estimated with the G*Power software for a multiple linear regression with 5 predictors, using a medium-range effect size of 0.0363. This gave a sample size of 300 paving blocks, with an estimated test power of 0.9502764. On production day, the paving blocks were sampled from the population of 30 000 units as the pieces came out of the vibro-compacting machine. The length, width, thickness and mass of each fresh paving block were measured, and the blocks were marked to guarantee traceability through the absorption and strength tests carried out according to the NTE INEN 3040 standard. In the database, each row represents one sampled and numbered paving block (the individual under analysis), and each column represents an analysis variable.

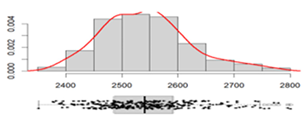

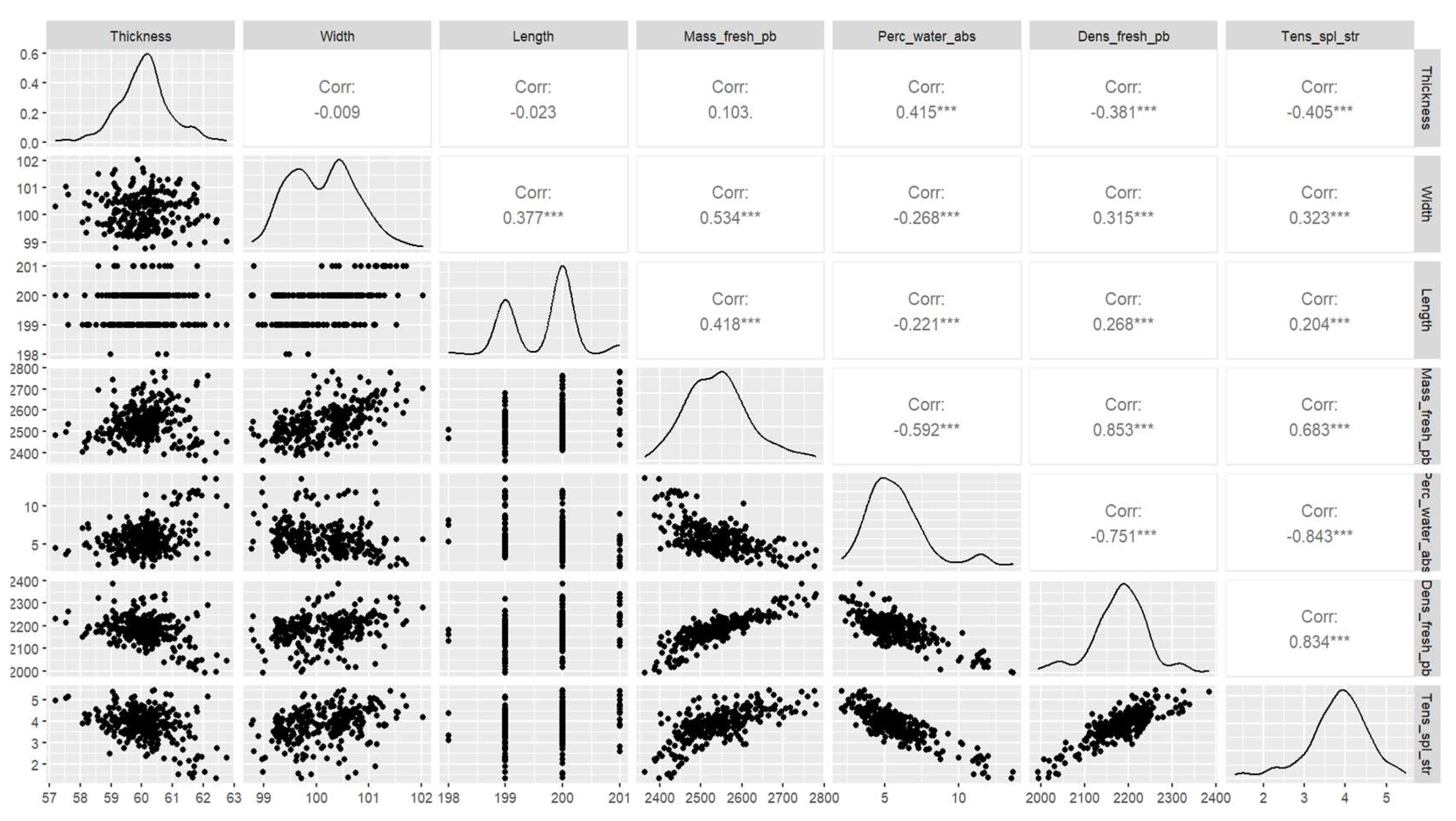

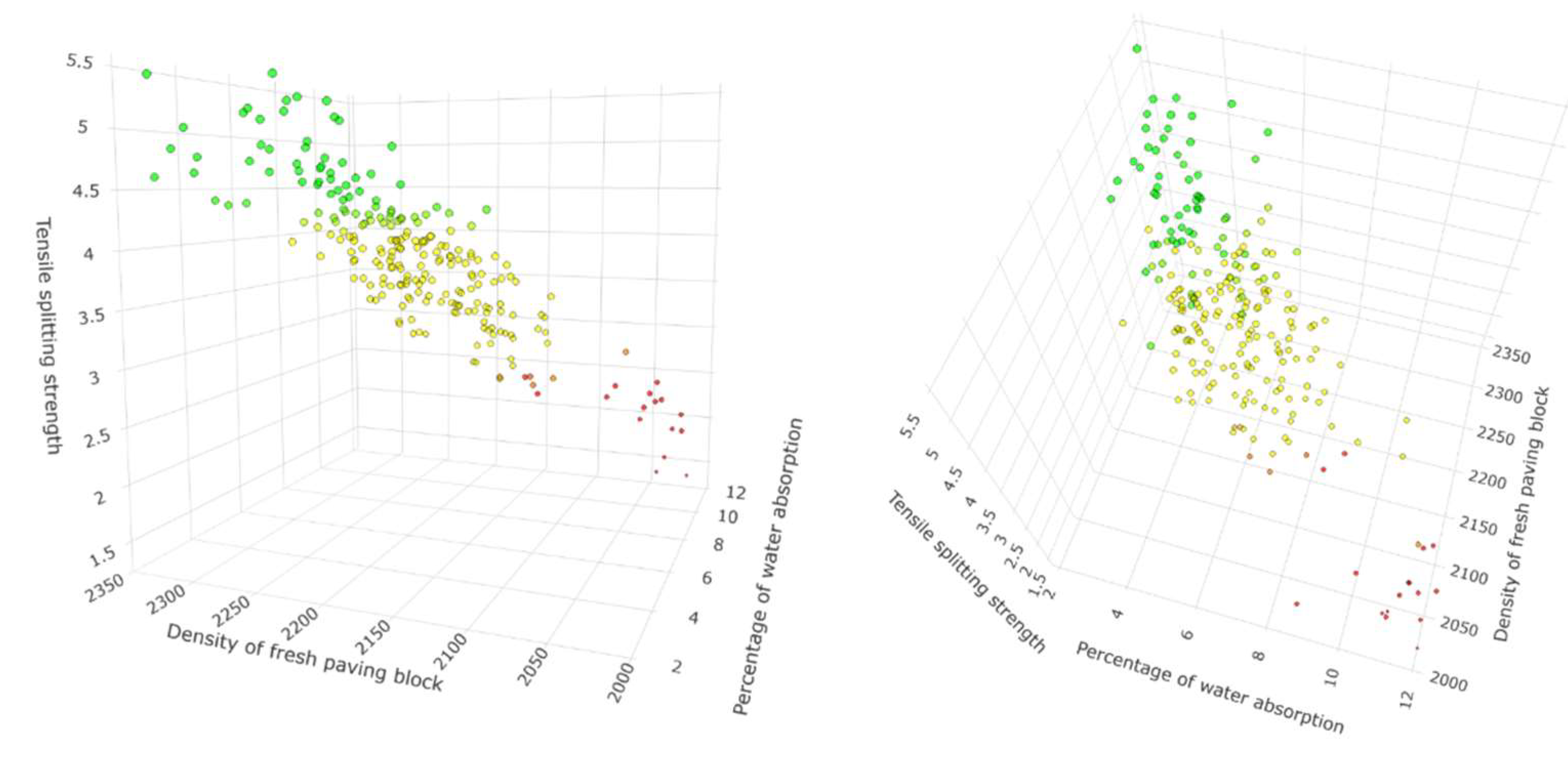

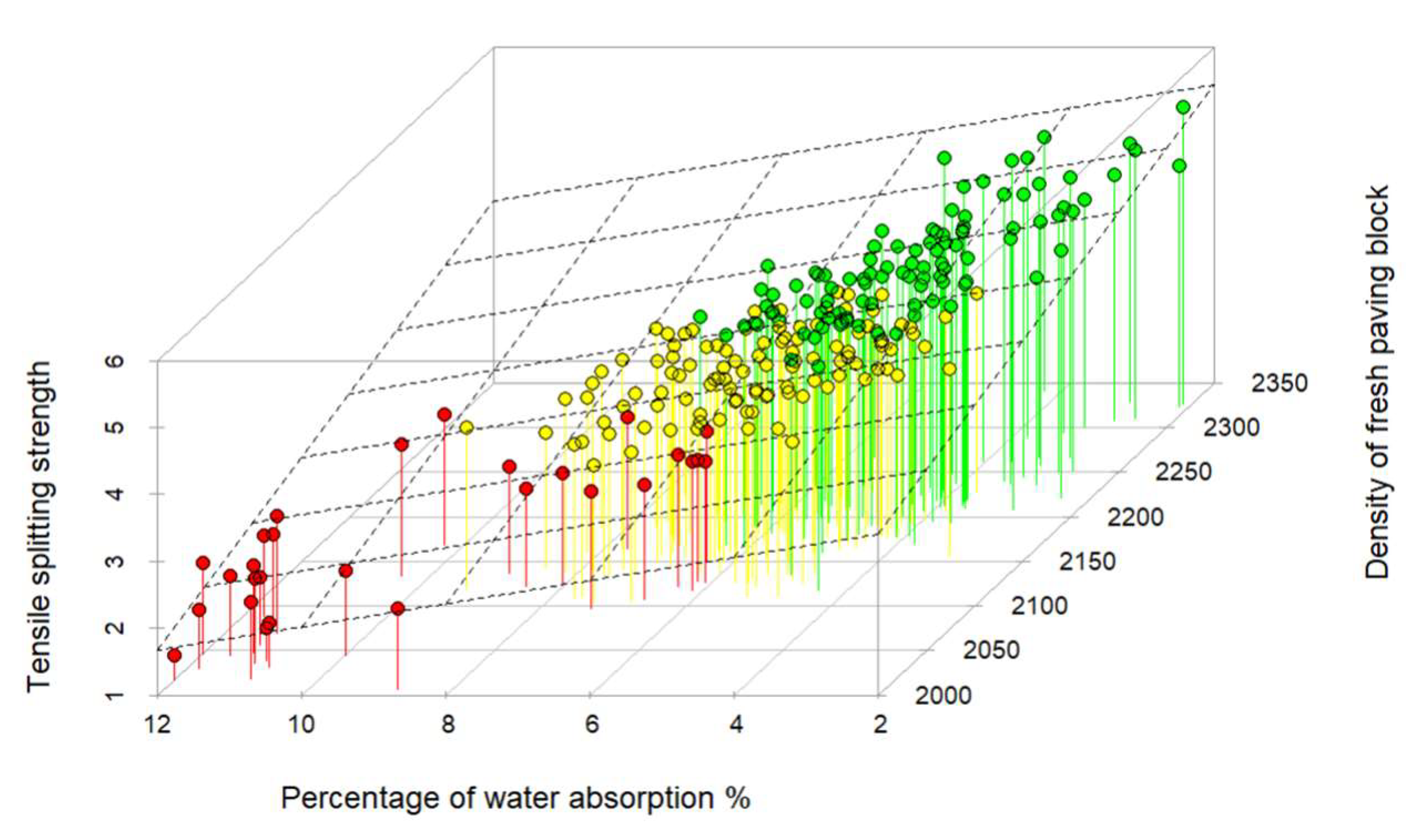

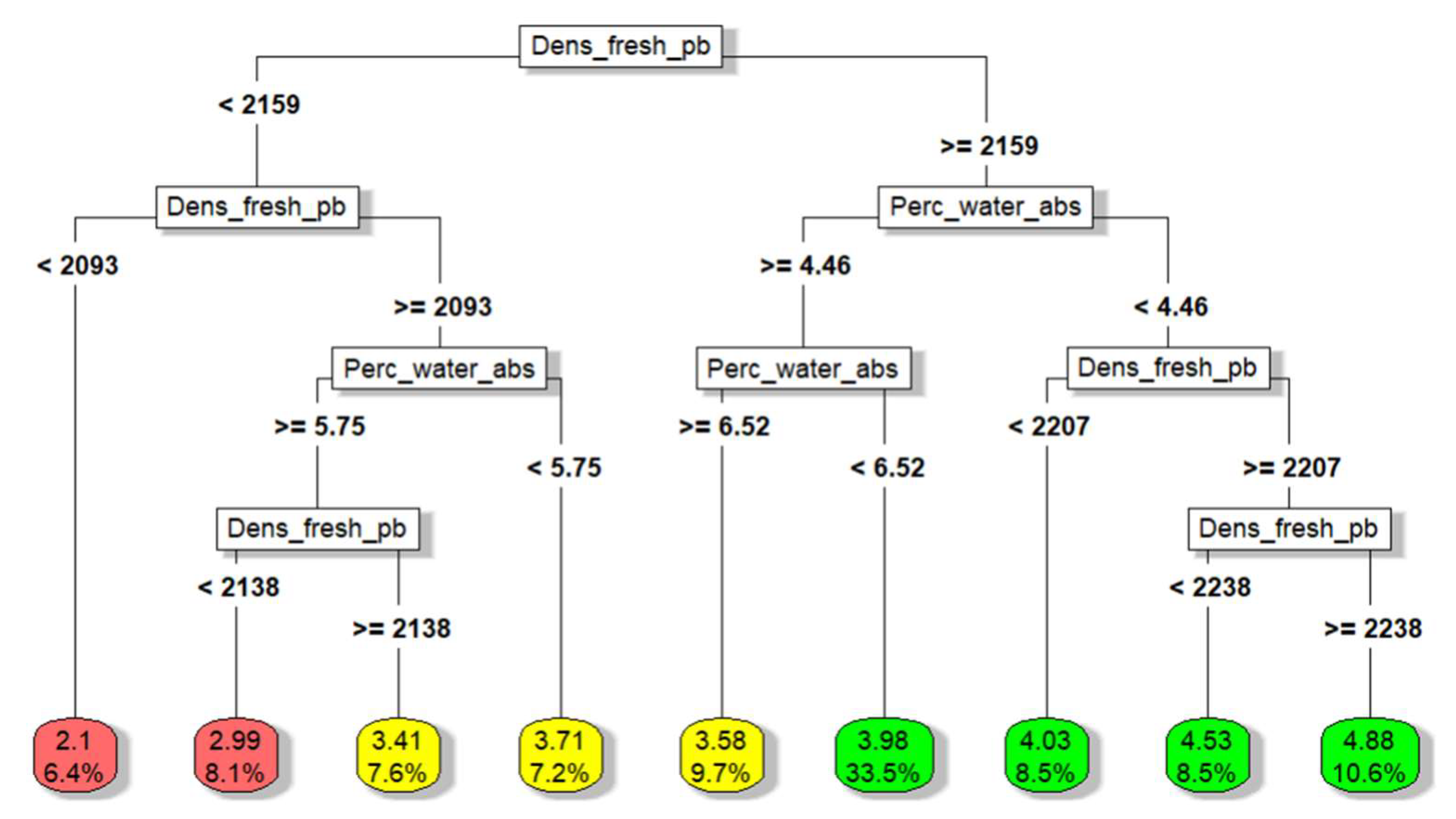

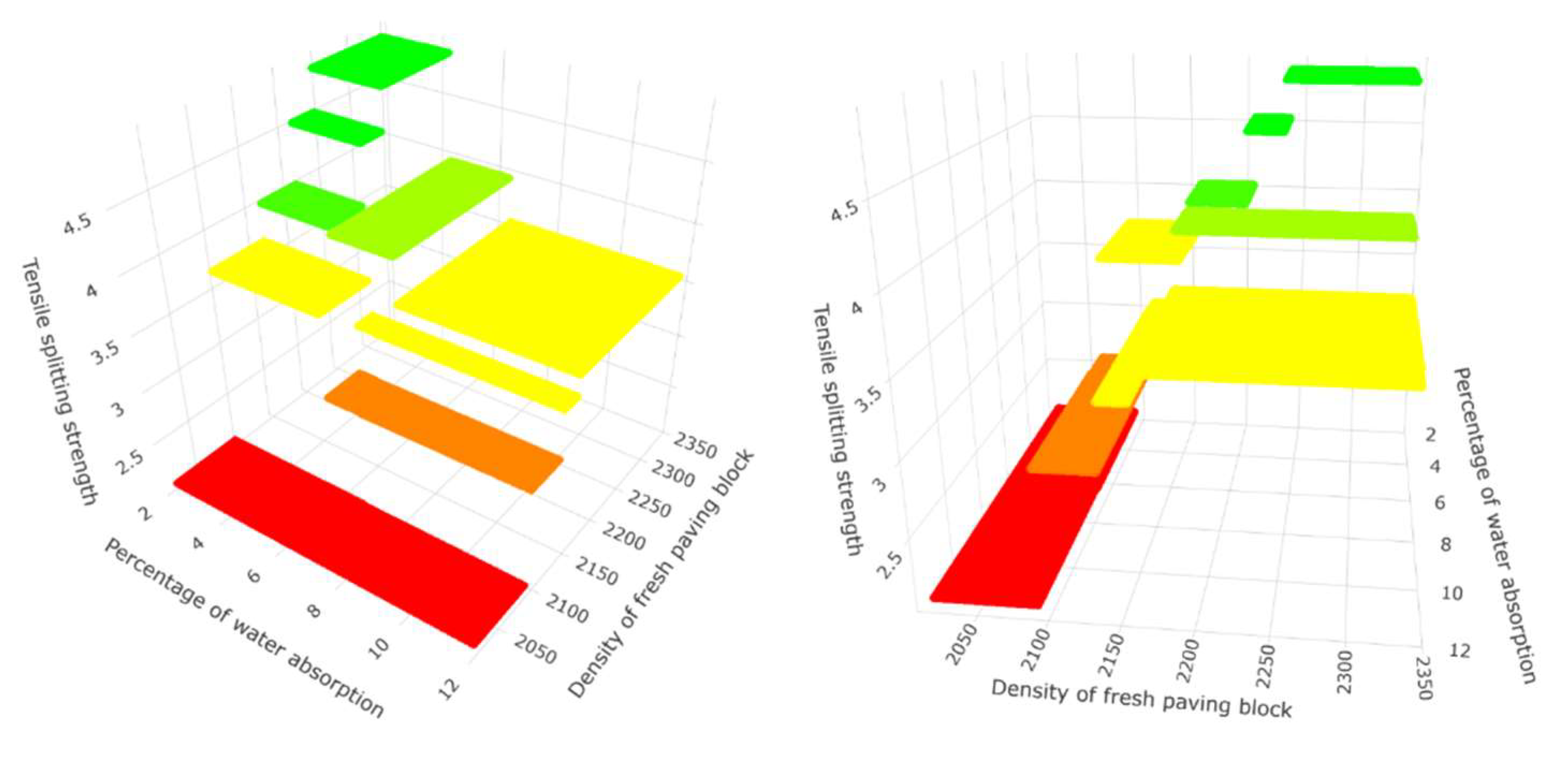

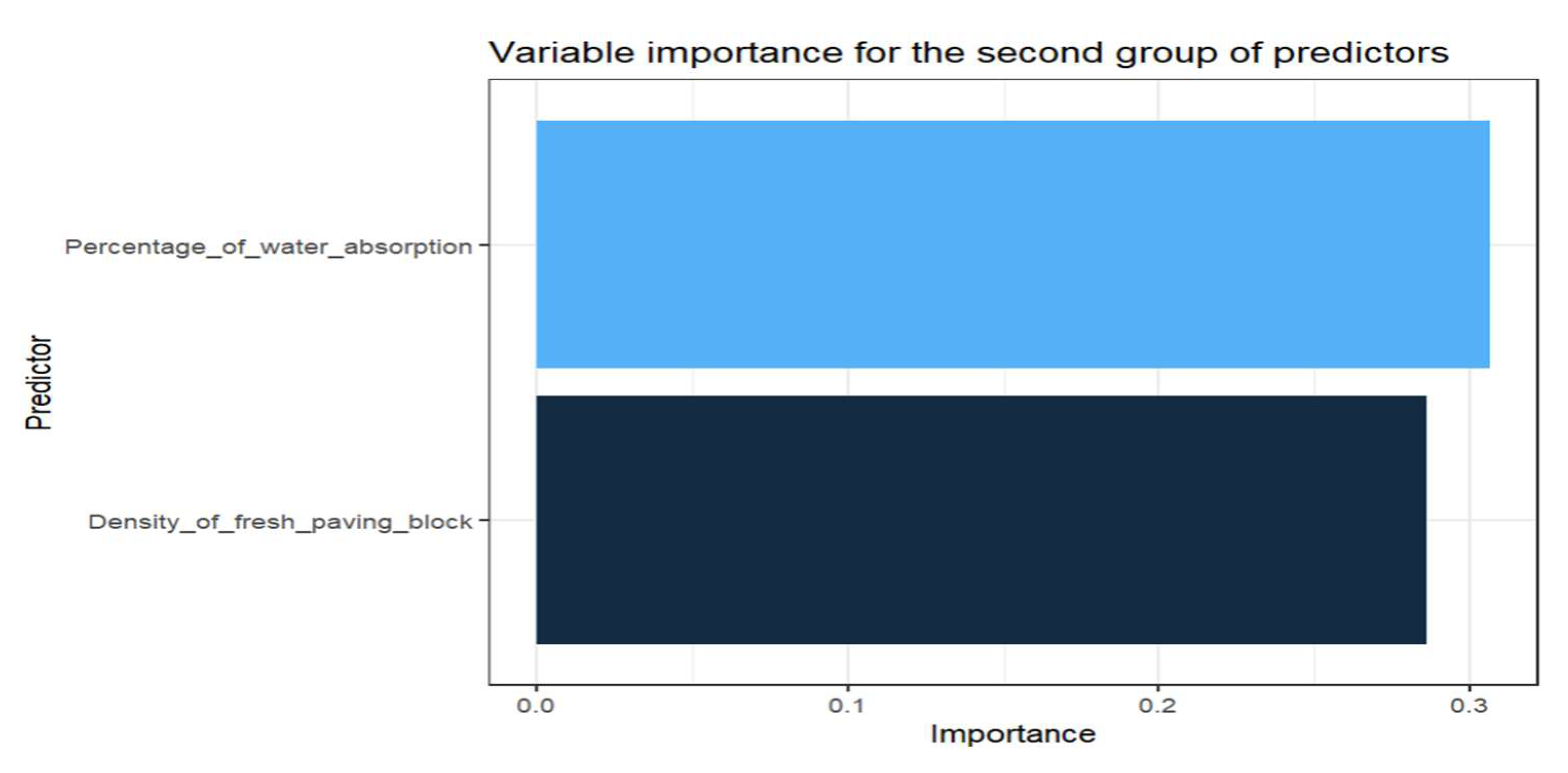

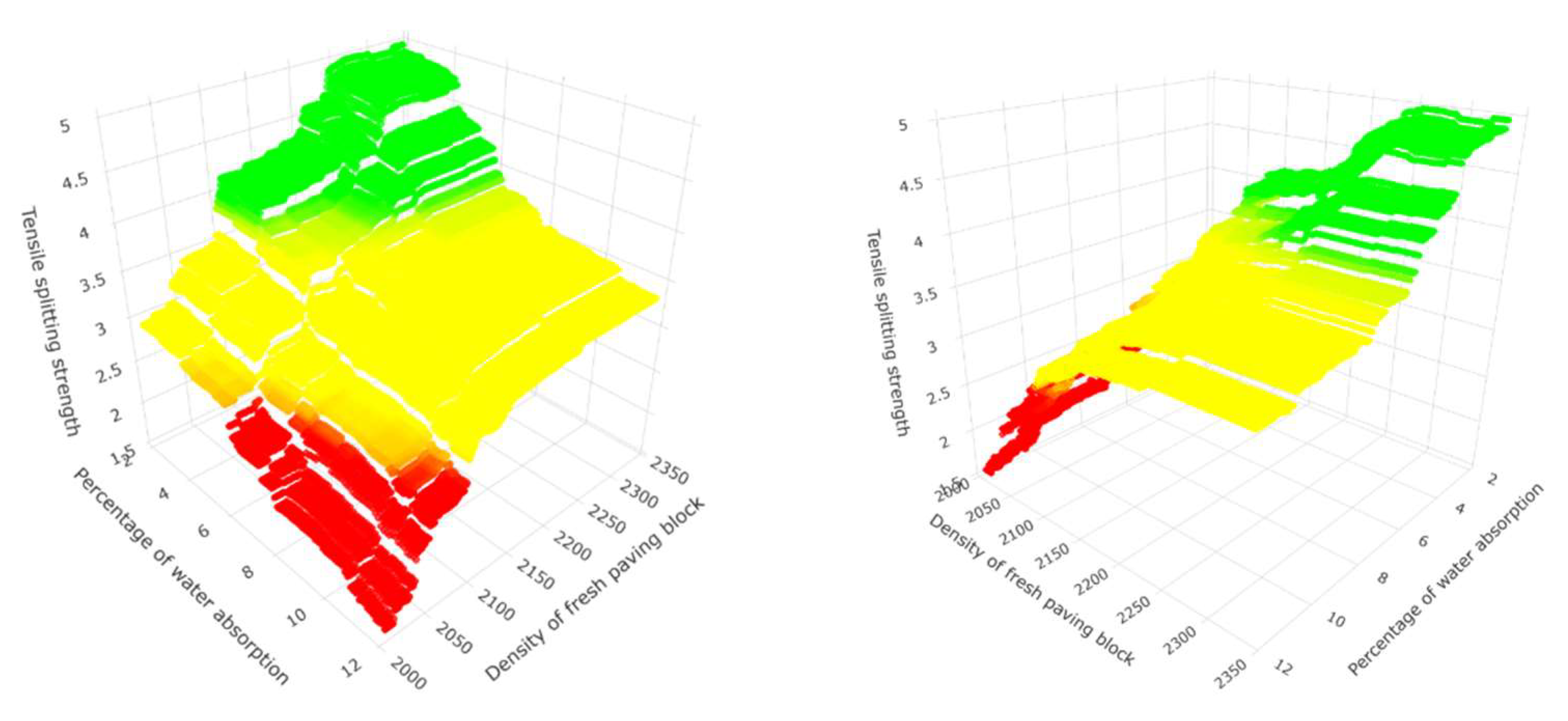

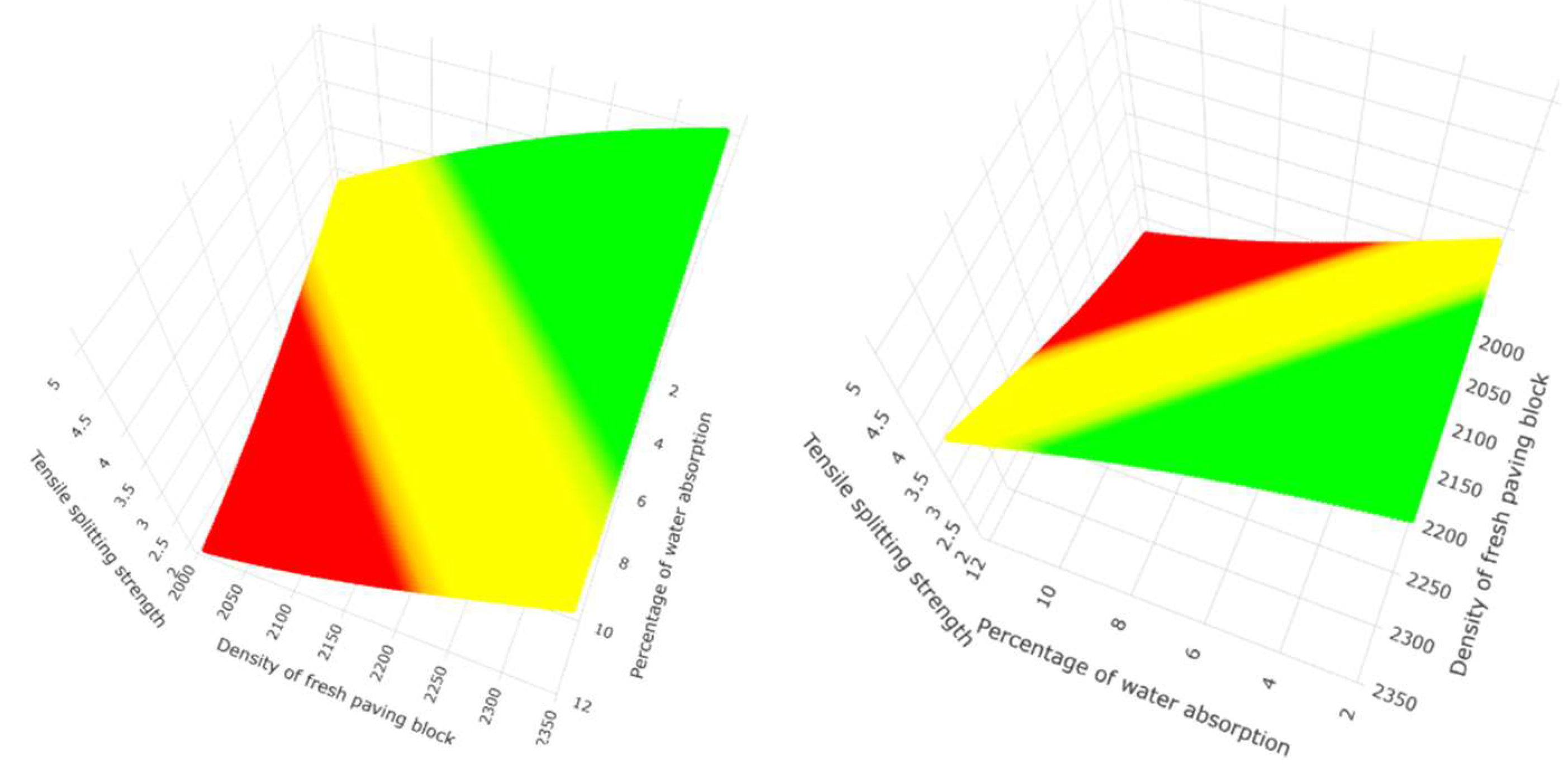

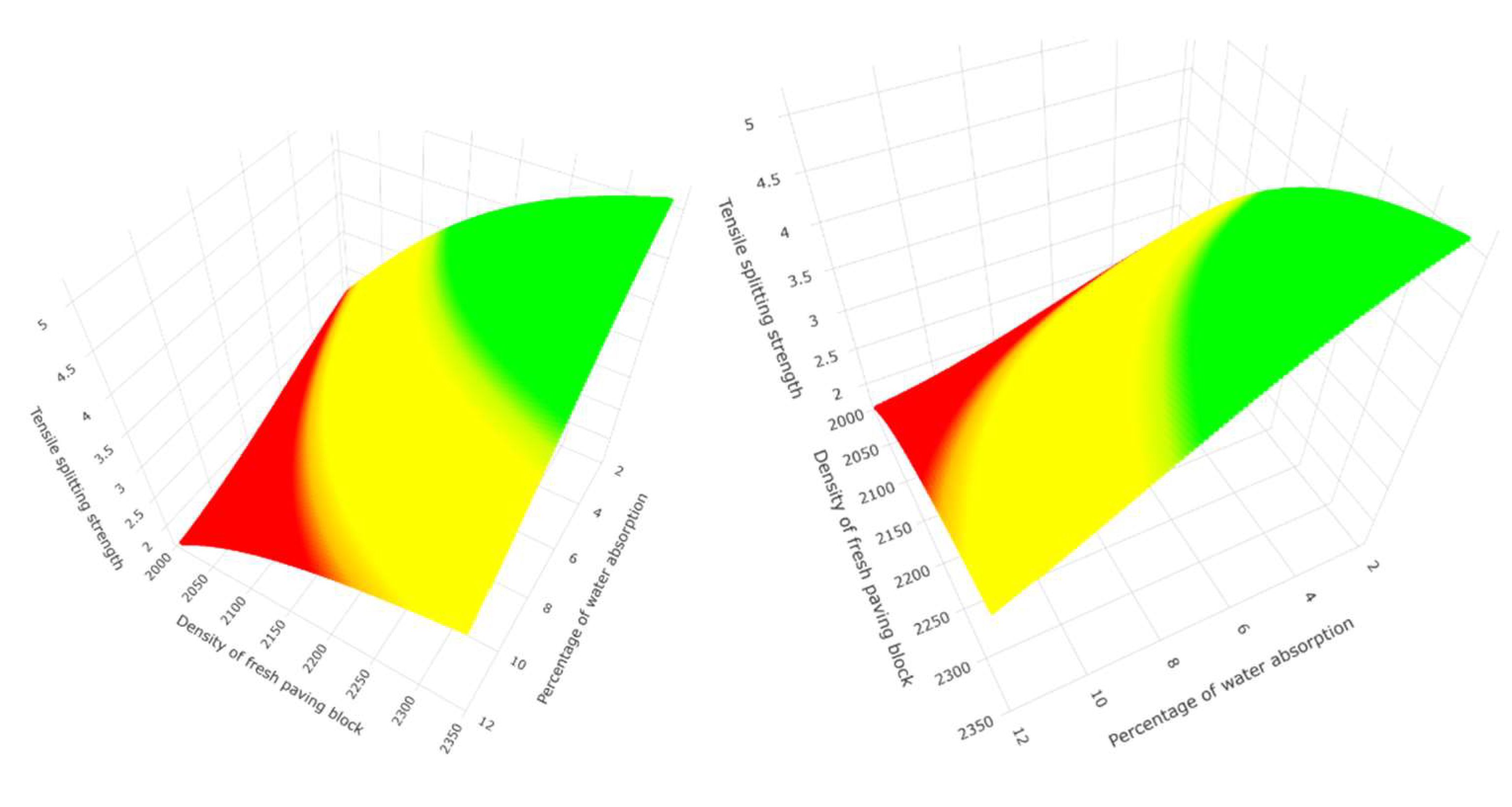

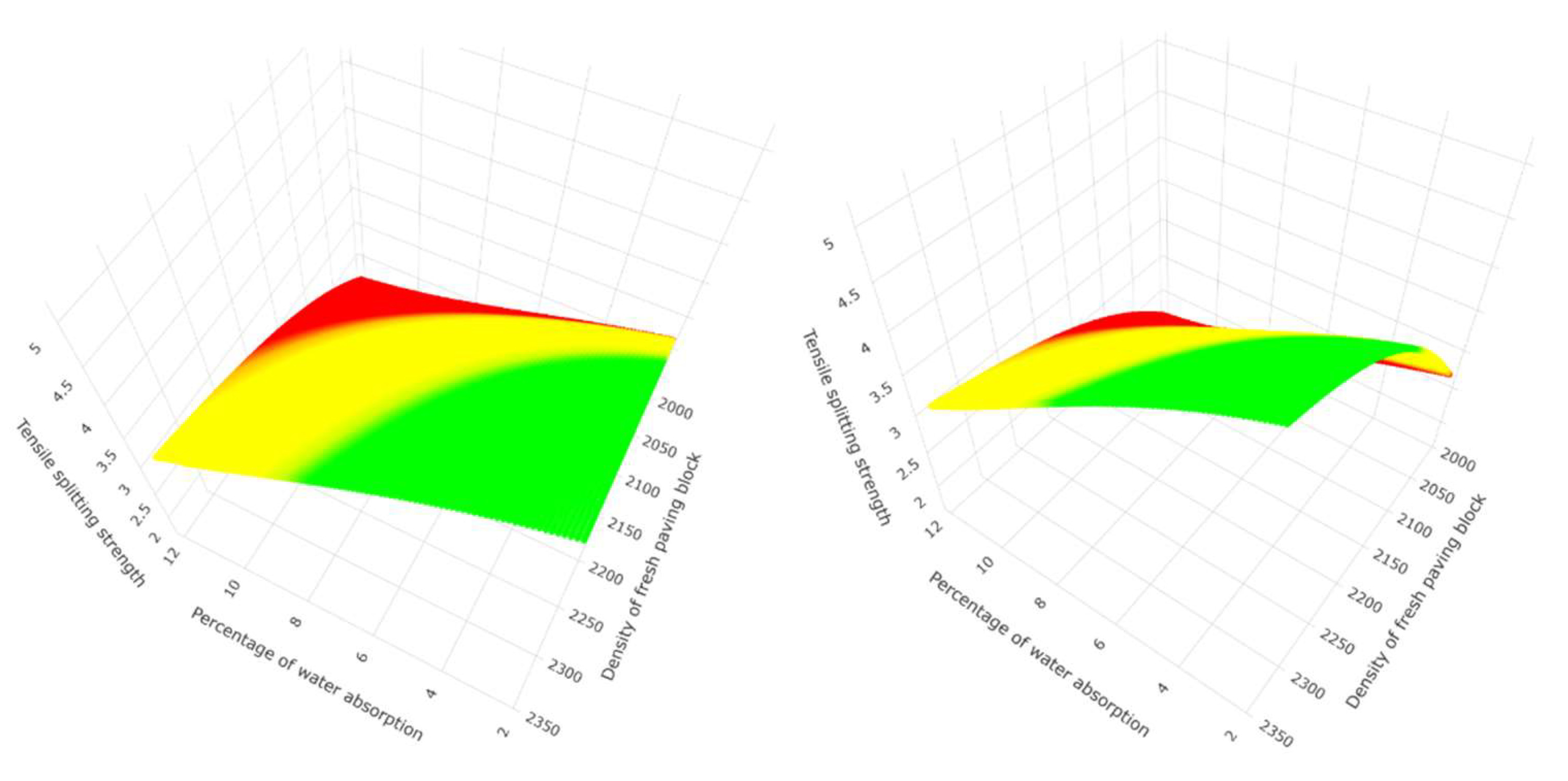

The variables that enter the multivariate models, also called input, predictor or independent variables, are: length (mm), width (mm), height (mm), the mass of the fresh paving block (the piece fresh from the vibro-compacting process) in grams, and the percentage of water absorption determined according to the NTE INEN 3040 standard. Additionally, the dimensional variables and the mass of the fresh product can be combined into a new variable, the density of the fresh product, expressed in kg/m³. This variable was considered together with the percentage of water absorption to make the prediction and to compare the multivariate methods.
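The derived density variable can be illustrated with a minimal sketch. It assumes the block is treated as a rectangular prism (chamfers and spacers ignored); the function name and the 2700 g mass are hypothetical values chosen for illustration, not data from the study.

```python
def fresh_density_kg_m3(length_mm, width_mm, height_mm, mass_g):
    """Density of a fresh paving block in kg/m^3, from dimensions in mm and mass in g."""
    volume_m3 = (length_mm * width_mm * height_mm) * 1e-9  # mm^3 -> m^3
    mass_kg = mass_g / 1000.0                               # g -> kg
    return mass_kg / volume_m3


# Hypothetical block with the nominal dimensions and an assumed fresh mass of 2700 g
density = fresh_density_kg_m3(200.0, 100.0, 60.0, 2700.0)
print(round(density, 1))
```

Collapsing four measured variables into one is what allows the second predictor group to use only two inputs.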

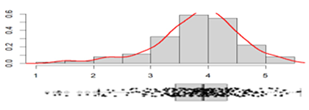

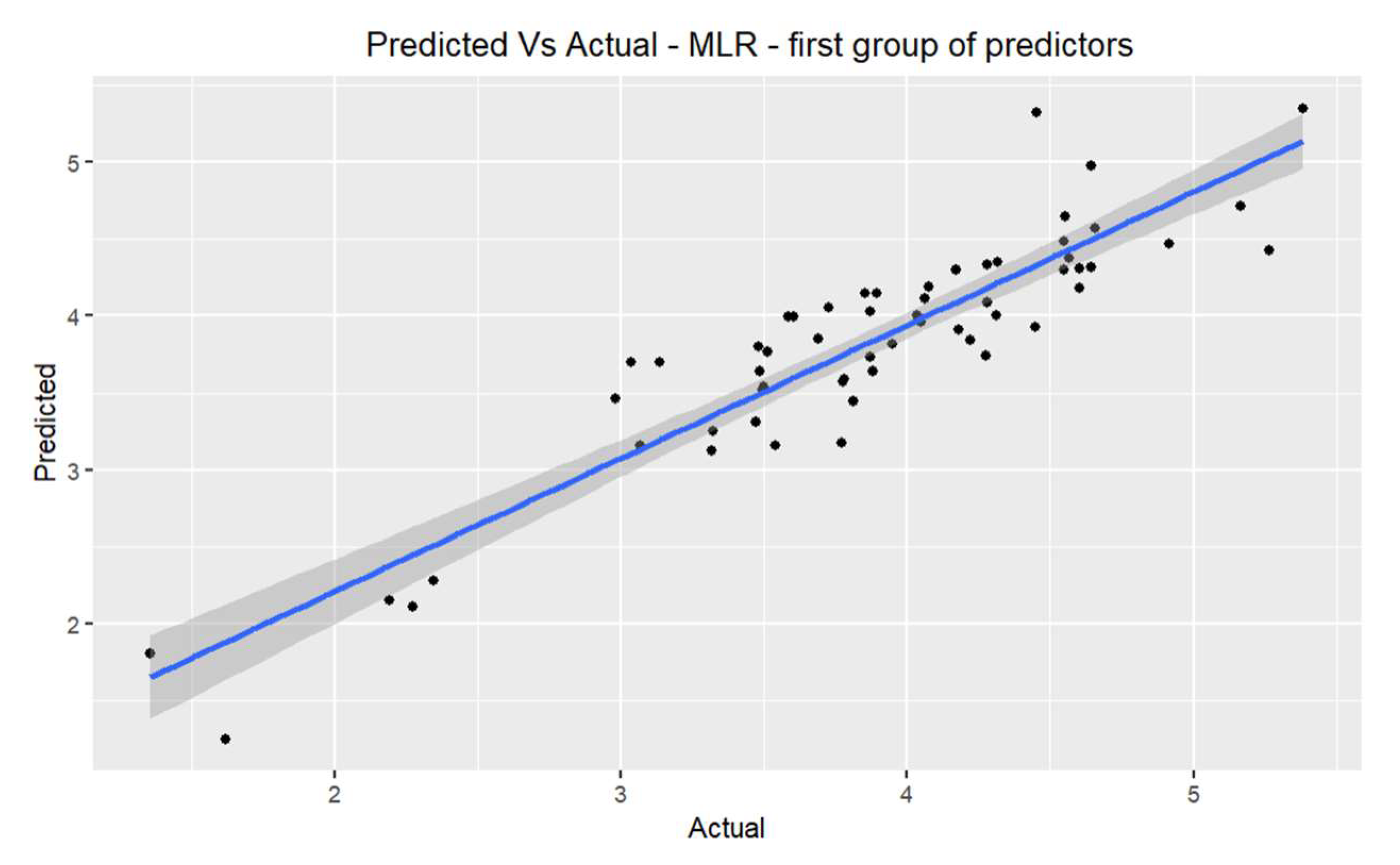

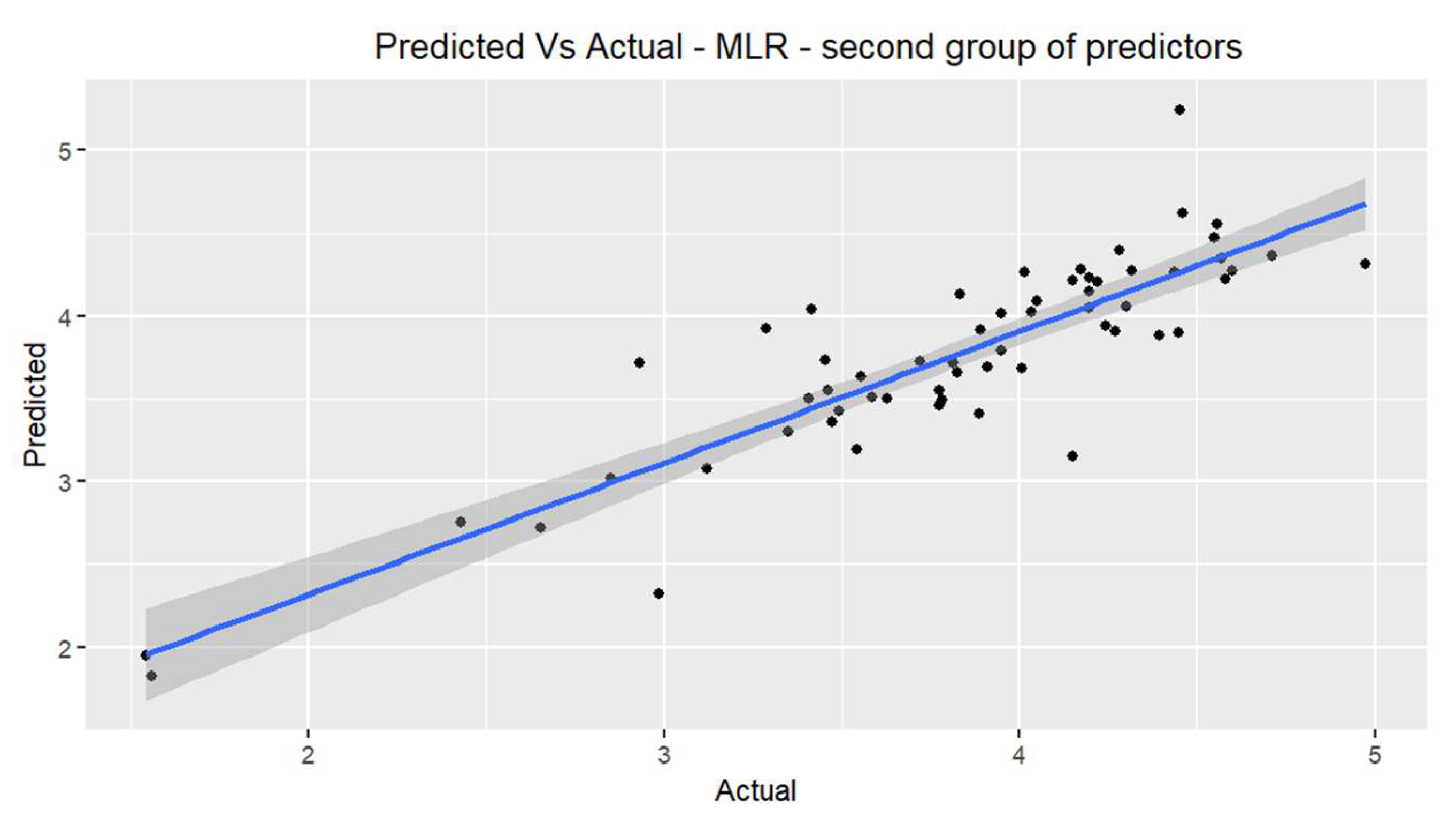

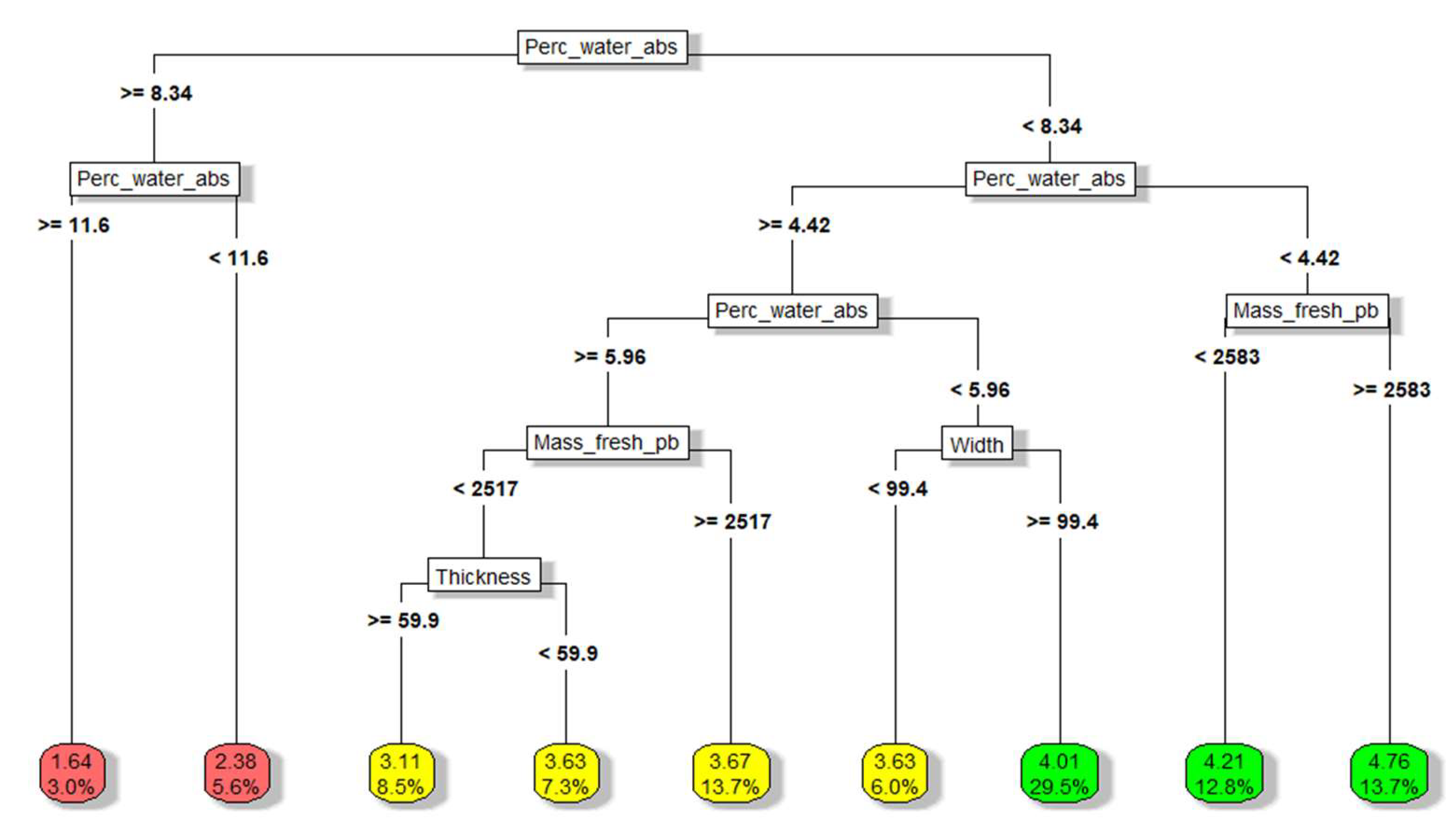

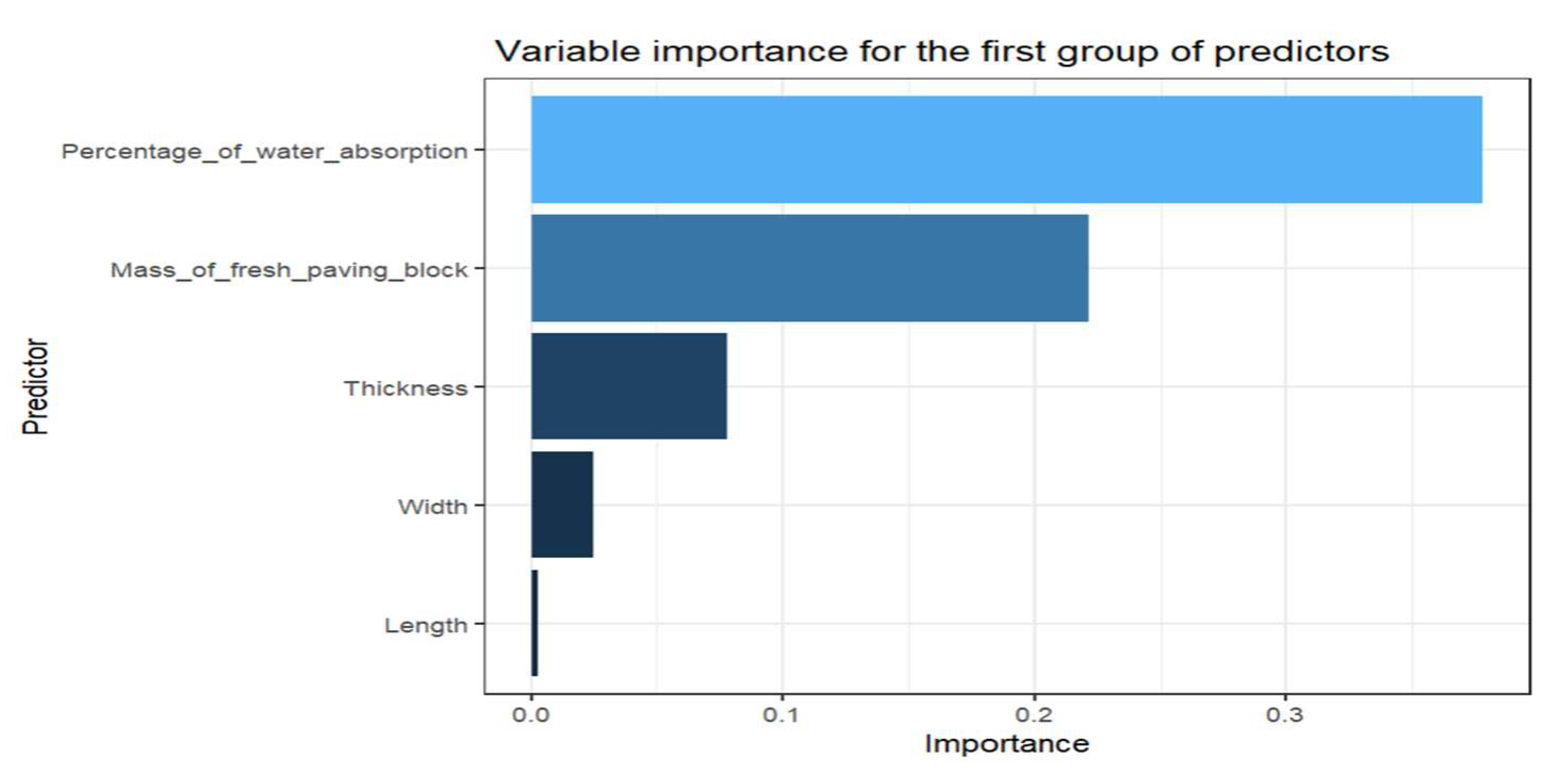

The response variable, also called the dependent or output variable, is the indirect tensile strength in megapascals (MPa). It depends on the predictor variables, which are grouped as follows. The first group (5 predictors) comprises length, width, thickness, mass of the fresh product and percentage of water absorption. The second group (2 predictors) comprises the density of the fresh paving block and the percentage of water absorption.

Water absorption. The water absorption test is carried out according to the NTE INEN 3040 (2016) standard. To determine the absorption rate, the paving block is submerged in potable water at 20 ± 5 °C for a minimum of three days; the excess surface water is then wiped off with a moistened cloth, and the block is weighed until it reaches a constant mass. In the same way, the mass of the dry paving block is found by placing it in an oven at 105 ± 5 °C for a minimum of three days, until constant mass. The absorption is the difference between the saturated and dry masses divided by the dry mass. It corresponds to the maximum mass of water that the paving block absorbs from the dry state to the saturated state, expressed as a percentage of the dry mass. The calculation formula is as follows:

$$W_a = \frac{M_1 - M_2}{M_2} \times 100$$

where $W_a$ is the water absorption in percent,

$M_1$ is the mass of the specimen saturated with water, expressed in grams, and

$M_2$ is the final mass of the dry specimen, expressed in grams.
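As a worked illustration of the absorption calculation (saturated mass minus dry mass, divided by dry mass), the sketch below uses hypothetical masses; the function name and the numbers are assumptions for illustration only.

```python
def water_absorption_percent(saturated_mass_g, dry_mass_g):
    """Water absorption as a percentage of the dry mass."""
    if dry_mass_g <= 0:
        raise ValueError("dry mass must be positive")
    return (saturated_mass_g - dry_mass_g) / dry_mass_g * 100.0


# Hypothetical specimen: 2800 g saturated, 2650 g oven-dry
absorption = water_absorption_percent(2800.0, 2650.0)
print(f"{absorption:.2f} %")
```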

Fresh paving block weight (mass). It is the mass of the paving block expressed in grams taken just after it leaves the mould in the vibro-compaction process.

Thickness (height), length and width of the paving block. The height, or thickness, of the paving block is the distance between its lower and upper faces. In practice, the height variation comes from the vertical mobility of the mechanical parts of the vibro-compacting machine, where the tamping plate compresses the mixture into the mould. The width and length of the paving block are measured from one end to the other.

Tensile splitting strength. The tensile splitting strength test is carried out with a hydraulic press, which gives the measured load at failure in newtons. The strength is calculated with the following formula from the NTE INEN 3040 (2016) standard:

$$T = 0.637 \, k \, \frac{P}{S}$$

where $T$ is the paving block strength in MPa, $P$ is the measured load at failure in newtons, $S$ is the area of the failure plane in mm², obtained by multiplying the measured failure length by the thickness at the failure plane of the paving block, and $k$ is the thickness correction factor, with $k = 0.87$ for a thickness of 60 mm.
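The strength calculation can be sketched as follows. The sketch assumes the splitting-test expression T = 0.637 k P / S used in EN 1338-type standards; the constant 0.637, the function name, the load and the failure length are assumptions for illustration and should be checked against the standard.

```python
def tensile_splitting_strength_mpa(load_n, failure_length_mm, thickness_mm, k=0.87):
    """Tensile splitting strength in MPa (N/mm^2); k = 0.87 corresponds to 60 mm blocks."""
    area_mm2 = failure_length_mm * thickness_mm   # failure plane area S
    return 0.637 * k * load_n / area_mm2          # assumed form T = 0.637 k P / S


# Hypothetical test: 40 kN failure load, 100 mm failure length, 60 mm thickness
strength = tensile_splitting_strength_mpa(40000.0, 100.0, 60.0)
print(round(strength, 3))
```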

Mahalanobis distance. Identifying outliers in a database is important because they can distort the statistical analysis. To detect them, both the distance to the centroid and the shape of the data cloud must be considered, and the Mahalanobis distance accounts for both [23]. The study by [24] indicates that, for multivariate Gaussian data, the squared Mahalanobis distance follows a chi-square distribution with p degrees of freedom, where p is the number of variables. The Mahalanobis distance measures how many standard deviations an observation or individual lies from the mean of a distribution, taking the correlations between variables into account. It reduces to the Euclidean distance when the covariance matrix is the identity matrix [25]. A multivariate normal distribution is defined as:

$$f(x) = \frac{1}{(2\pi)^{p/2}\,|\Sigma|^{1/2}} \exp\left(-\frac{1}{2}(x-\mu)^{T}\,\Sigma^{-1}\,(x-\mu)\right)$$

where $\Sigma$ is the covariance matrix and $\mu$ is the mean vector. If $X$ is a vector of p variables that follows a multivariate normal distribution, $X \sim N_p(\mu, \Sigma)$, then the squared Mahalanobis distance $D^2 = (X-\mu)^{T}\,\Sigma^{-1}\,(X-\mu)$ follows a chi-squared distribution with p degrees of freedom, $D^2 \sim \chi^2_p$. The Mahalanobis distance represents the distance between each data point and the centre of mass of the data and is defined by the following formula:

$$D_M(x) = \sqrt{(x-\mu)^{T}\,\Sigma^{-1}\,(x-\mu)}$$
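The outlier screening idea can be sketched for the two-predictor case (density and absorption). This is a minimal illustration under stated assumptions: the data are hypothetical, the sample covariance replaces the unknown covariance matrix, the significance level of 0.025 is an arbitrary choice, and the chi-square cutoff uses the closed form that holds only for p = 2 degrees of freedom.

```python
import math


def mahalanobis_squared_2d(points):
    """Squared Mahalanobis distance of each 2-D point to the sample centroid."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Sample covariance matrix [[sxx, sxy], [sxy, syy]] with n - 1 in the denominator
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    det = sxx * syy - sxy ** 2
    d2 = []
    for x, y in points:
        dx, dy = x - mx, y - my
        # (dx, dy) Sigma^-1 (dx, dy)^T using the closed-form inverse of a 2x2 matrix
        d2.append((syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det)
    return d2


# Hypothetical (fresh density kg/m^3, absorption %) pairs; the last row is deliberately atypical
points = [(2240, 5.0), (2250, 5.2), (2260, 4.8), (2245, 5.1), (2255, 4.9), (2250, 5.0),
          (2248, 5.3), (2252, 4.7), (2243, 5.2), (2257, 4.8), (2250, 5.0), (2000, 7.5)]

alpha = 0.025
cutoff = -2.0 * math.log(alpha)  # chi-square quantile at 1 - alpha, valid only for p = 2
d2 = mahalanobis_squared_2d(points)
flagged = [i for i, d in enumerate(d2) if d > cutoff]
print(flagged)
```

For more than two variables the same quadratic form applies, but the covariance matrix must be inverted numerically and the cutoff taken from a chi-square table or a statistics library.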

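Simple linear regression. Simple linear regression relates two variables: Y, called the response or dependent variable, and X, the predictor or explanatory variable. The regression of Y on X is the expected value of Y when X takes a specific value, $E(Y \mid X = x)$. If we consider the linear regression $Y = \beta_0 + \beta_1 x + e$, with intercept $\beta_0$, slope $\beta_1$ and $e$ representing the random error of $Y$, [26] explains that the residuals $\hat{e}_i$ are

$$\hat{e}_i = y_i - \hat{y}_i$$

where $\hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i$ is the fitted value of y.

The most common method for obtaining $\hat{\beta}_0$ and $\hat{\beta}_1$ is ordinary least squares, which minimizes the residual sum of squares (RSS), that is, the squared differences between the observed and predicted values:

$$RSS = \sum_{i=1}^{n} \hat{e}_i^{2} = \sum_{i=1}^{n} (y_i - b_0 - b_1 x_i)^2$$

The minimization is done by differentiating RSS with respect to the coefficients $b_0$ and $b_1$ and setting the derivatives equal to 0, which gives $\hat{\beta}_1 = SXY / SXX$ and $\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$, where $SXY = \sum (x_i - \bar{x})(y_i - \bar{y})$ and $SXX = \sum (x_i - \bar{x})^2$.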
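The least squares estimates can be computed directly from the centered sums of squares. The sketch below uses five hypothetical (absorption, strength) pairs; the function name and the data are assumptions for illustration only.

```python
def fit_simple_ols(x, y):
    """Ordinary least squares for y = b0 + b1 * x; returns (b0, b1, rss)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx                 # slope
    b0 = y_bar - b1 * x_bar        # intercept
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    rss = sum(e ** 2 for e in residuals)
    return b0, b1, rss


# Hypothetical data: water absorption (%) and tensile splitting strength (MPa)
absorption = [4.0, 4.5, 5.0, 5.5, 6.0]
strength = [4.2, 3.9, 3.7, 3.2, 3.0]
b0, b1, rss = fit_simple_ols(absorption, strength)
print(round(b0, 3), round(b1, 3), round(rss, 4))
```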

The structural assumptions of the regression model are the following. Linearity: Y depends on x through the linear regression model. Homoscedasticity: the variance of the errors when X = x is common to all x, that is, $\mathrm{Var}(Y \mid X = x) = \sigma^2$. Normality: the errors follow a normal distribution with mean 0 and variance $\sigma^2$. Finally, the errors are independent. Under these assumptions, $\hat{\beta}_1$ follows a normal distribution with mean $\beta_1$ and variance $\sigma^2 / SXX$, where $SXX = \sum_{i=1}^{n} (x_i - \bar{x})^2$. If $\sigma$ is unknown, the test statistic follows a Student's t distribution with n-2 degrees of freedom, where $H_0: \beta_1 = \beta_1^0$ is the null hypothesis and $H_A: \beta_1 \neq \beta_1^0$ is the alternative hypothesis. T is described by the expression:

$$T = \frac{\hat{\beta}_1 - \beta_1^0}{se(\hat{\beta}_1)}, \qquad se(\hat{\beta}_1) = \frac{S}{\sqrt{SXX}}, \qquad S^2 = \frac{RSS}{n-2}$$

Similarly, for $\beta_0$, the T statistic follows a Student's t distribution with n-2 degrees of freedom, where the null hypothesis is $H_0: \beta_0 = \beta_0^0$ and the alternative hypothesis is $H_A: \beta_0 \neq \beta_0^0$.

The analysis of variance decomposes the variability around the mean of Y using the observed and predicted points: the total variability is the sum of the variability explained by the model and the unexplained variability, or error. The total sum of squares is $SST = SYY = \sum (y_i - \bar{y})^2$, the sum of squares of the regression is $SSreg = \sum (\hat{y}_i - \bar{y})^2$, and the sum of squares of the residuals is $RSS = \sum (y_i - \hat{y}_i)^2$, so that

$$SST = SSreg + RSS$$

The F statistic,

$$F = \frac{SSreg / 1}{RSS / (n-2)}$$

follows an F distribution with 1 and n-2 degrees of freedom, where the null hypothesis is $H_0: \beta_1 = 0$ and the alternative hypothesis is $H_A: \beta_1 \neq 0$. If the null hypothesis is rejected, then Y depends on X.

The coefficient of determination in linear regression is given by:

$$R^2 = \frac{SSreg}{SST} = 1 - \frac{RSS}{SST}$$
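The variance decomposition, the F statistic, the t statistic for the slope and the coefficient of determination can be checked together on a small example. The data below are hypothetical, and the function and key names are assumptions for illustration; the slope test uses the null hypothesis that the slope is zero.

```python
import math


def simple_regression_anova(x, y):
    """ANOVA quantities for the simple linear regression of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    fitted = [b0 + b1 * xi for xi in x]
    sst = sum((yi - y_bar) ** 2 for yi in y)                  # total
    ssreg = sum((fi - y_bar) ** 2 for fi in fitted)           # explained by the model
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))    # residual
    s2 = rss / (n - 2)                                        # estimate of sigma^2
    return {
        "sst": sst, "ssreg": ssreg, "rss": rss,
        "f": (ssreg / 1) / s2,              # F with 1 and n - 2 degrees of freedom
        "t_slope": b1 / math.sqrt(s2 / sxx),  # T for H0: beta1 = 0
        "r2": ssreg / sst,
    }


# Hypothetical data: water absorption (%) and tensile splitting strength (MPa)
absorption = [4.0, 4.5, 5.0, 5.5, 6.0]
strength = [4.2, 3.9, 3.7, 3.2, 3.0]
result = simple_regression_anova(absorption, strength)
print({name: round(value, 4) for name, value in result.items()})
```

In simple linear regression the square of the slope t statistic equals the F statistic, which is a convenient sanity check on an implementation.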

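Multiple linear regression (MLR). According to [26], in MLR the response variable (Y) is predicted from, and related to, multiple explanatory or predictor variables. The expectation of Y when each variable X takes a specific value is represented as:

$$E(Y \mid X_1 = x_1, \ldots, X_p = x_p) = \beta_0 + \beta_1 x_1 + \cdots + \beta_p x_p$$

The sum of squares for the multivariate case is:

$$RSS = \sum_{i=1}^{n} (y_i - b_0 - b_1 x_{i1} - \cdots - b_p x_{ip})^2$$

Multiple linear regression is denoted as:

$$Y = X\beta + e$$

Each term expressed in vectors and matrices indicates the following:

$$Y = \begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{pmatrix}, \quad X = \begin{pmatrix} 1 & x_{11} & \cdots & x_{1p} \\ 1 & x_{21} & \cdots & x_{2p} \\ \vdots & \vdots & & \vdots \\ 1 & x_{n1} & \cdots & x_{np} \end{pmatrix}, \quad \beta = \begin{pmatrix} \beta_0 \\ \beta_1 \\ \vdots \\ \beta_p \end{pmatrix}, \quad e = \begin{pmatrix} e_1 \\ e_2 \\ \vdots \\ e_n \end{pmatrix}$$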

The estimated coefficients can be calculated with linear algebra as $\hat{\beta} = (X^{T}X)^{-1}X^{T}Y$. The term $X(X^{T}X)^{-1}X^{T}$ is defined as the hat matrix (H), and the residual maker matrix M is equal to $I - H$, where $I$ is the identity matrix. The projection $\hat{Y}$ is equal to $HY$, so $\hat{Y}$, which lies in the regression hyperplane, is a transformation of Y.
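The matrix form of least squares can be sketched in plain Python for the two-predictor group. The data are hypothetical, the helper functions are illustrative rather than a library API, and the predictors are centered as an added numerical precaution (this changes only the intercept, which becomes the mean response; the slopes and the hat matrix are unaffected).

```python
def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def inverse(a):
    """Gauss-Jordan inverse with partial pivoting, adequate for small matrices."""
    n = len(a)
    aug = [[float(v) for v in row] + [float(i == j) for j in range(n)]
           for i, row in enumerate(a)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        p = aug[c][c]
        aug[c] = [v / p for v in aug[c]]
        for r in range(n):
            if r != c:
                factor = aug[r][c]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


# Hypothetical data: fresh density (g/cm^3), water absorption (%), strength (MPa)
density = [2.20, 2.24, 2.26, 2.30, 2.22, 2.28]
absorption = [5.6, 4.6, 5.2, 4.4, 5.0, 5.3]
strength = [3.1, 3.9, 3.5, 4.2, 3.6, 3.6]

n = len(strength)
d_bar = sum(density) / n
a_bar = sum(absorption) / n
X = [[1.0, d - d_bar, a - a_bar] for d, a in zip(density, absorption)]  # ones = intercept
Y = [[s] for s in strength]                                             # column vector

Xt = transpose(X)
XtX_inv = inverse(matmul(Xt, X))
beta_hat = matmul(XtX_inv, matmul(Xt, Y))       # (X'X)^-1 X'Y
H = matmul(matmul(X, XtX_inv), Xt)              # hat matrix
M = [[float(i == j) - H[i][j] for j in range(n)] for i in range(n)]  # residual maker I - H
Y_hat = matmul(H, Y)                            # projection of Y onto the regression hyperplane
residuals = matmul(M, Y)
print([round(b[0], 4) for b in beta_hat])
```

Two properties worth checking are that H is idempotent (HH = H) and that its trace equals the number of estimated coefficients.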

If the errors follow a normal distribution with constant variance, then the T statistic follows a Student's t distribution with n-p-1 degrees of freedom and is given by:

$$T_i = \frac{\hat{\beta}_i - \beta_i}{se(\hat{\beta}_i)}$$

The term $se(\hat{\beta}_i)$ is the estimated standard deviation of $\hat{\beta}_i$, where the null hypothesis is $H_0: \beta_i = 0$ and the alternative hypothesis is $H_A: \beta_i \neq 0$. In the analysis of variance for the multivariate case, as in simple linear regression, the total variability is equal to the variability explained by the model plus the unexplained variability or error. The F statistic follows an F distribution with p and n-p-1 degrees of freedom, where the null hypothesis is $H_0: \beta_1 = \beta_2 = \cdots = \beta_p = 0$ and the alternative hypothesis is $H_A$: at least one $\beta_i \neq 0$.

Adding predictors increases $R^2$ even when they are irrelevant, so the adjusted coefficient of determination $R^2_{adj}$ is used:

$$R^2_{adj} = 1 - \frac{RSS / (n-p-1)}{SST / (n-1)}$$
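The penalty that the adjusted coefficient applies can be seen in a small numerical sketch. The sums of squares below are hypothetical values for n = 300 blocks, chosen so that the five-predictor model has a slightly higher plain coefficient of determination but a lower adjusted one; the function name is an assumption.

```python
def mlr_summary(rss, sst, n, p):
    """Return (R2, adjusted R2, F) for a regression with p predictors and n observations."""
    ssreg = sst - rss
    r2 = 1.0 - rss / sst
    r2_adj = 1.0 - (rss / (n - p - 1)) / (sst / (n - 1))
    f_stat = (ssreg / p) / (rss / (n - p - 1))   # F with p and n - p - 1 degrees of freedom
    return r2, r2_adj, f_stat


# Hypothetical sums of squares for the two predictor groups
five_predictors = mlr_summary(rss=60.9, sst=100.0, n=300, p=5)
two_predictors = mlr_summary(rss=61.0, sst=100.0, n=300, p=2)
print([round(v, 4) for v in five_predictors])
print([round(v, 4) for v in two_predictors])
```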

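Regression trees. A decision tree is a machine learning algorithm that can be used for regression and classification. It is a white-box model: its decisions are intuitive and easy to interpret. In the regression case, instead of predicting a class, the tree predicts a value, which is the average target value of the training instances in the node. Instead of minimizing impurity, the regression tree minimizes the mean squared error (MSE) [27]. The cost function for regression is:

$$J(k, t_k) = \frac{m_{left}}{m} MSE_{left} + \frac{m_{right}}{m} MSE_{right}, \qquad MSE_{node} = \frac{1}{m_{node}} \sum_{i \in node} \left(\hat{y}_{node} - y^{(i)}\right)^2, \qquad \hat{y}_{node} = \frac{1}{m_{node}} \sum_{i \in node} y^{(i)}$$

where $k$ is the feature and $t_k$ the threshold of the split, $m_{left}$ and $m_{right}$ are the numbers of instances in the left and right subsets, $m$ is the total number of instances in the node being split, and MSE is the mean squared error.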
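A single split of a regression tree can be sketched as an exhaustive search over candidate thresholds of one feature, keeping the threshold with the lowest weighted MSE. The data are hypothetical and the function names are assumptions; a full tree would repeat this search over all features and recurse into each subset.

```python
def mse(values):
    """Mean squared error of a node that predicts the mean of its targets."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def best_split(x, y):
    """Return (threshold, cost) minimizing the weighted-MSE cost for one feature."""
    pairs = sorted(zip(x, y))
    m = len(pairs)
    best = None
    for i in range(1, m):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [target for _, target in pairs[:i]]
        right = [target for _, target in pairs[i:]]
        cost = len(left) / m * mse(left) + len(right) / m * mse(right)
        if best is None or cost < best[1]:
            best = (threshold, cost)
    return best


# Hypothetical data: water absorption (%) and tensile splitting strength (MPa)
absorption = [4.2, 4.5, 4.8, 5.6, 5.9, 6.2]
strength = [4.0, 4.1, 3.9, 3.0, 3.1, 2.9]
threshold, cost = best_split(absorption, strength)
print(round(threshold, 2), round(cost, 5))
```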

The decision boundaries of decision trees are perpendicular to the axes (orthogonal), and the trees are sensitive to small variations in the training data. According to [28], regression trees divide the data into more homogeneous groups through a set of "if-then" conditions that are easy to interpret and can involve different predictors. A disadvantage is their instability under minor changes in the data. One of the oldest and most widely used techniques is CART by [29], whose methodology is to find the predictor and the split value for which the sum of squared errors is smallest:

$$SSE = \sum_{i \in S_1} (y_i - \bar{y}_1)^2 + \sum_{i \in S_2} (y_i - \bar{y}_2)^2$$

where $\bar{y}_1$ represents the mean of subgroup $S_1$ and $\bar{y}_2$ represents the mean of subgroup $S_2$. The division process continues within the sets $S_1$ and $S_2$ until a stopping criterion is met. The relative importance of a predictor can be calculated from the reduction in SSE, where the predictors that appear higher up the tree or more frequently are the most important.

Random forests. The research by [30] introduced an algorithm called the random forest, which makes predictions while reducing overfitting. The procedure is as follows: choose the number of tree models to build, m; for each model from 1 to m, draw a bootstrap sample and train a tree on it, where at each split k predictors are randomly selected and the best one among them is chosen; finally, apply the stopping criteria. Each tree model generates a prediction, and the m predictions are averaged to give the final prediction. Randomly choosing the k variables at each split decreases the correlation between the trees. Random forests are computationally more efficient tree by tree, and the importance of the predictors can also be assessed through the permutation or impurity methodology. According to [28], the tree bagging procedure reduces the prediction variance. An ensemble method is an algorithm that analyzes the predictions as a whole, combining the predictions of individual trees trained on different random subsets [27]. Analyzing the predictions together yields better results than a single prediction. Random forests are trained by bagging, where the max_samples parameter sets the size of each sample.
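The bagging procedure can be sketched with a deliberately simplified forest. The assumptions are stated plainly: each tree is a depth-1 tree (a stump) rather than a fully grown tree, k = 1 predictor is drawn per tree, the data are hypothetical, and all function names are illustrative. A production analysis would normally rely on an established library instead.

```python
import random
from statistics import mean


def fit_stump(X, y, features):
    """Depth-1 regression tree: best (feature, threshold) by SSE among `features`."""
    best = None
    for f in features:
        values = sorted({row[f] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            ml, mr = mean(left), mean(right)
            sse = sum((v - ml) ** 2 for v in left) + sum((v - mr) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, f, t, ml, mr)
    if best is None:  # no valid split in this sample: always predict the mean
        return (features[0], float("inf"), mean(y), mean(y))
    return best[1:]


def fit_forest(X, y, n_trees=25, k=1, seed=0):
    rng = random.Random(seed)
    n, p = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]   # bootstrap sample (with replacement)
        features = rng.sample(range(p), k)           # k randomly selected predictors
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], features))
    return forest


def predict(forest, row):
    """Average the predictions of all trees."""
    return mean(left if row[f] <= t else right for f, t, left, right in forest)


# Hypothetical rows: [fresh density kg/m^3, absorption %]; targets: strength in MPa
X = [[2300, 4.4], [2290, 4.6], [2280, 4.5], [2310, 4.3],
     [2200, 5.9], [2190, 6.1], [2210, 5.8], [2180, 6.2]]
y = [4.0, 3.9, 4.1, 4.0, 3.0, 3.1, 2.9, 3.0]

forest = fit_forest(X, y)
pred_dense = predict(forest, [2320, 4.2])
pred_porous = predict(forest, [2170, 6.3])
print(round(pred_dense, 2), round(pred_porous, 2))
```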

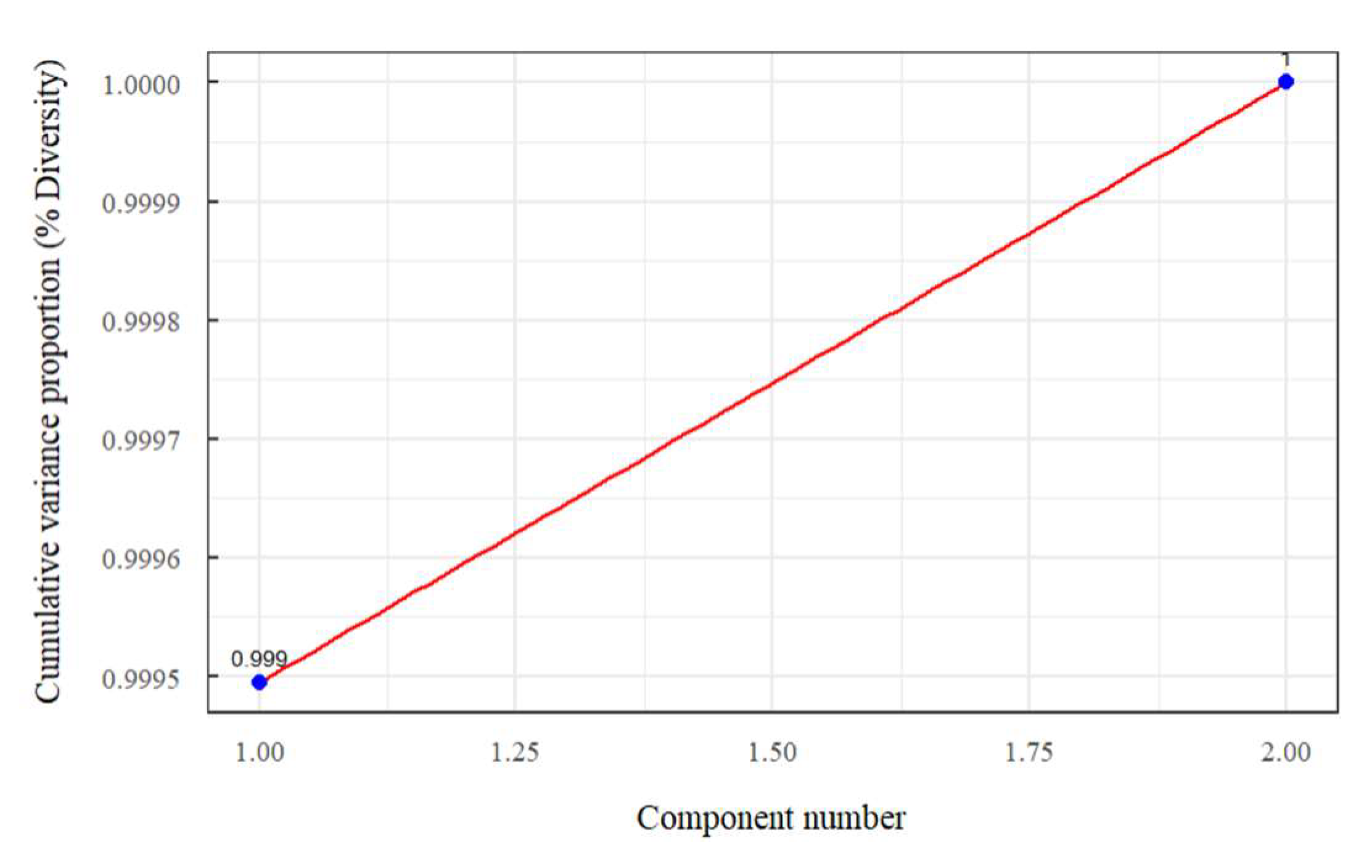

Principal component analysis. It is a dimension reduction technique that uses an orthogonal transformation so that a group of correlated n-dimensional variables retains its variability information in a set of uncorrelated k-dimensional variables. The general process first standardizes the data so that each variable has a mean of zero and a variance of one. The covariance or correlation matrix and its eigenvectors and eigenvalues are then calculated, and the first eigenvectors, which represent the most variability, are chosen [31]. Research by [32] indicates that this technique was developed independently by Karl Pearson and Harold Hotelling. The technique linearly transforms multivariate data into a new set of uncorrelated variables. The eigenvectors are vectors that do not change direction when the data transformation is applied, and they represent the axes of maximum variance, called the principal components.

According to [33], principal components are commonly defined as the matrix multiplication between the eigenvectors of the correlation matrix (A) and the standardized variables ($x^{*}$):

$$z = A^{T} x^{*}$$

Principal components calculated from the covariance matrix have a drawback: they are sensitive to the units of measurement. For this reason, the analysis is done with the correlation matrix of the standardized variables, since each variable has a different unit of measurement. Also, the sizes of the variances of the principal components have the same implications for correlation matrices as for covariance matrices. One property of the principal components obtained from the correlation matrix is that they do not depend on the absolute values of the correlations.
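For two standardized variables the correlation-matrix PCA has a closed form, which makes a compact illustration possible without a linear algebra library. The sketch assumes hypothetical density and absorption values; for the 2x2 correlation matrix with off-diagonal r, the eigenvalues are 1 + r and 1 - r with eigenvectors (1, 1)/√2 and (1, -1)/√2. The function names are assumptions.

```python
import math
from statistics import mean, stdev


def standardize(values):
    """Center to mean 0 and scale to sample standard deviation 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def pca_two_variables(x1, x2):
    """Eigenvalues (descending) and component scores from the 2x2 correlation matrix."""
    z1, z2 = standardize(x1), standardize(x2)
    n = len(z1)
    r = sum(a * b for a, b in zip(z1, z2)) / (n - 1)   # correlation coefficient
    c = 1 / math.sqrt(2)
    pairs = [(1 + r, (c, c)), (1 - r, (c, -c))]        # closed-form eigenpairs
    pairs.sort(key=lambda pair: pair[0], reverse=True)
    eigenvalues = [value for value, _ in pairs]
    # Scores: eigenvectors applied to the standardized variables of each observation
    scores = [[vec[0] * a + vec[1] * b for _, vec in pairs] for a, b in zip(z1, z2)]
    return eigenvalues, scores


# Hypothetical data: fresh density (kg/m^3) and water absorption (%)
density = [2240, 2250, 2260, 2245, 2255, 2270]
absorption = [5.4, 5.1, 4.8, 5.3, 5.0, 4.6]
eigenvalues, scores = pca_two_variables(density, absorption)
explained = [value / sum(eigenvalues) for value in eigenvalues]
print([round(v, 3) for v in explained])
```

The variance of each score column equals its eigenvalue, and the two columns are uncorrelated, which is what makes the components usable as replacement predictors.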

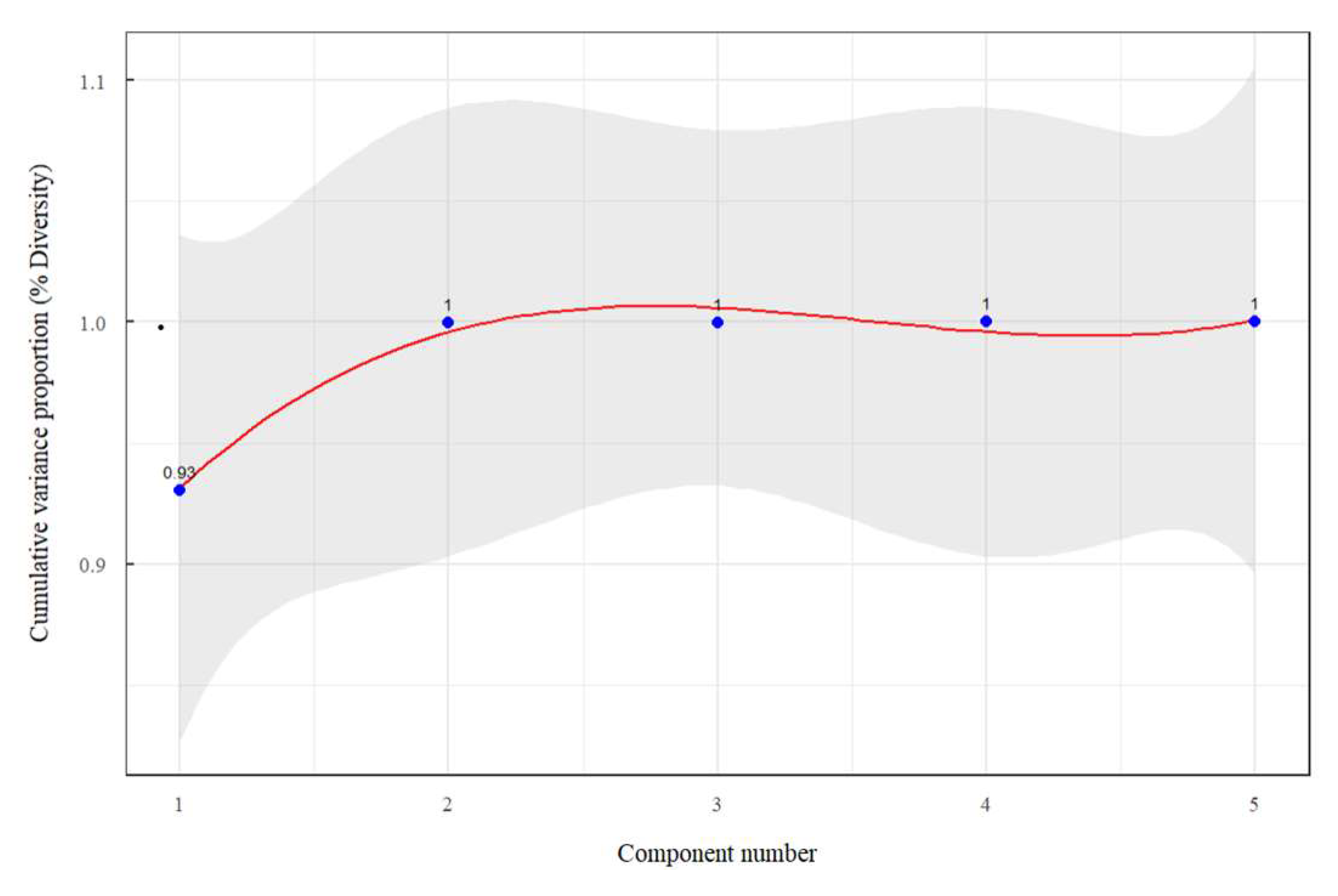

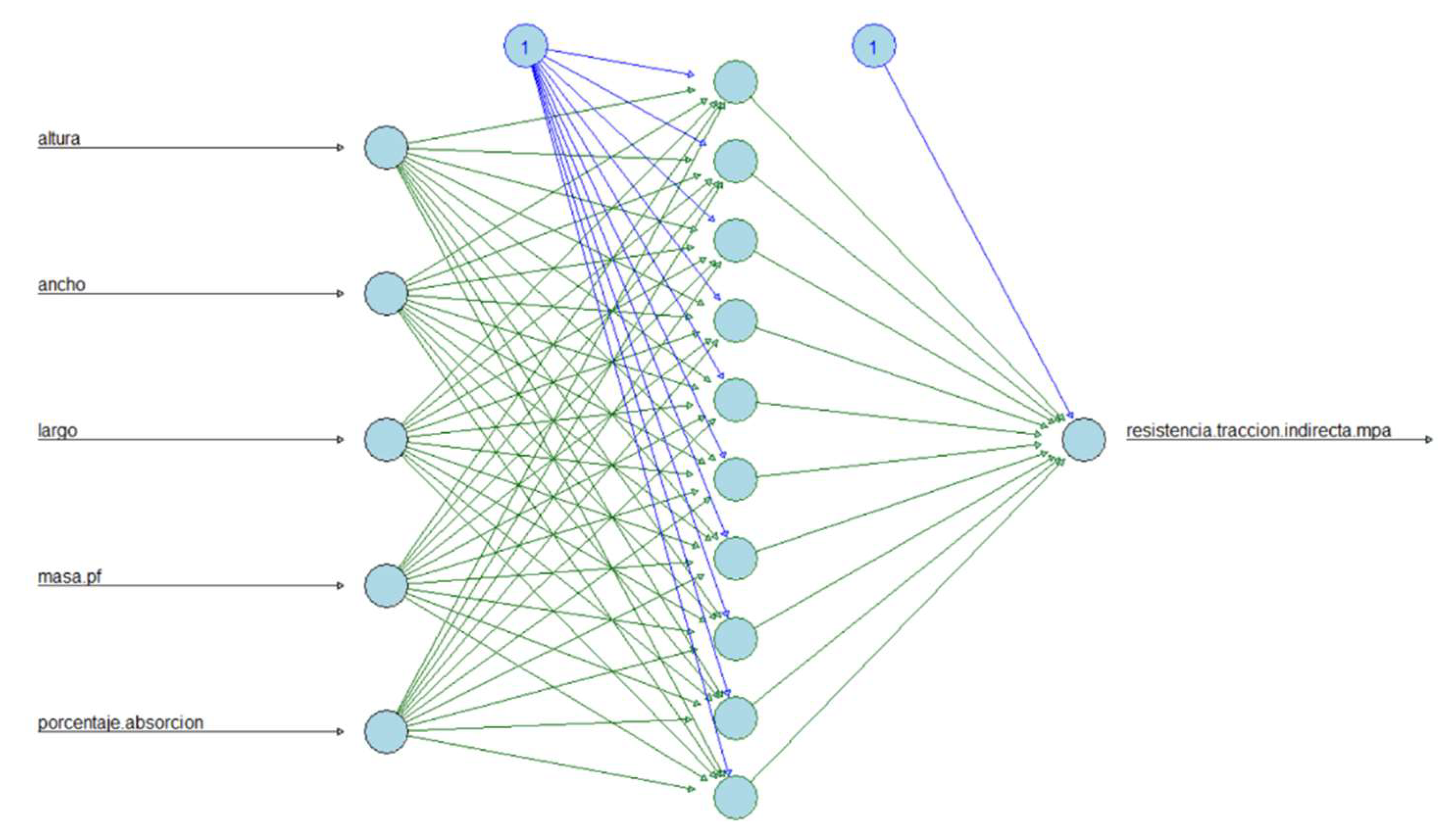

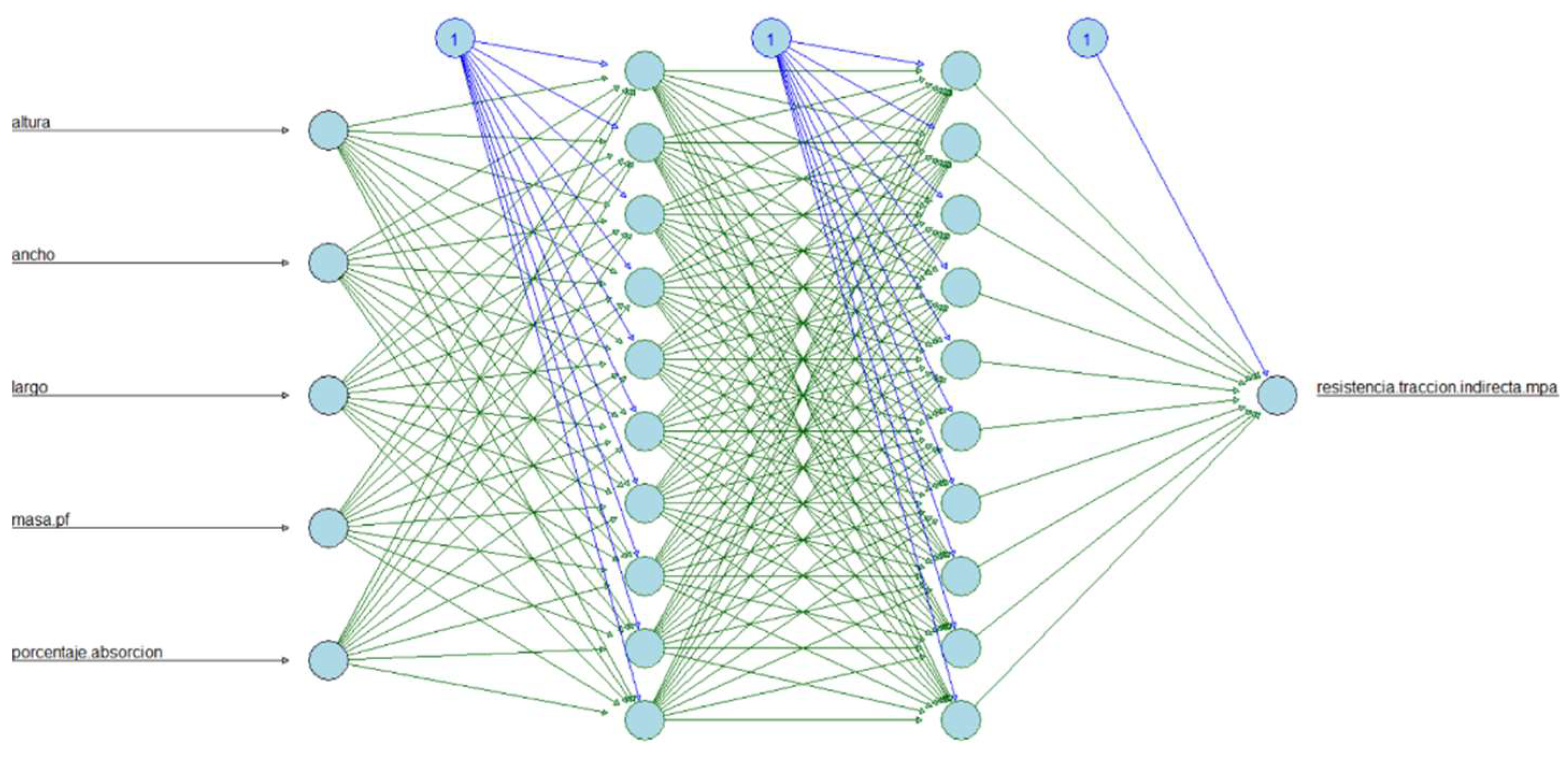

According to [34], principal component analysis can be used to determine the number of hidden layers in artificial neural networks, chosen so that the retained components represent sufficient variability for statistical analysis. Similarly, the study by [35] uses principal component analysis to determine the number of hidden layers in neural networks that predict a continuous variable, obtaining good results according to quality measures, with optimal performance.

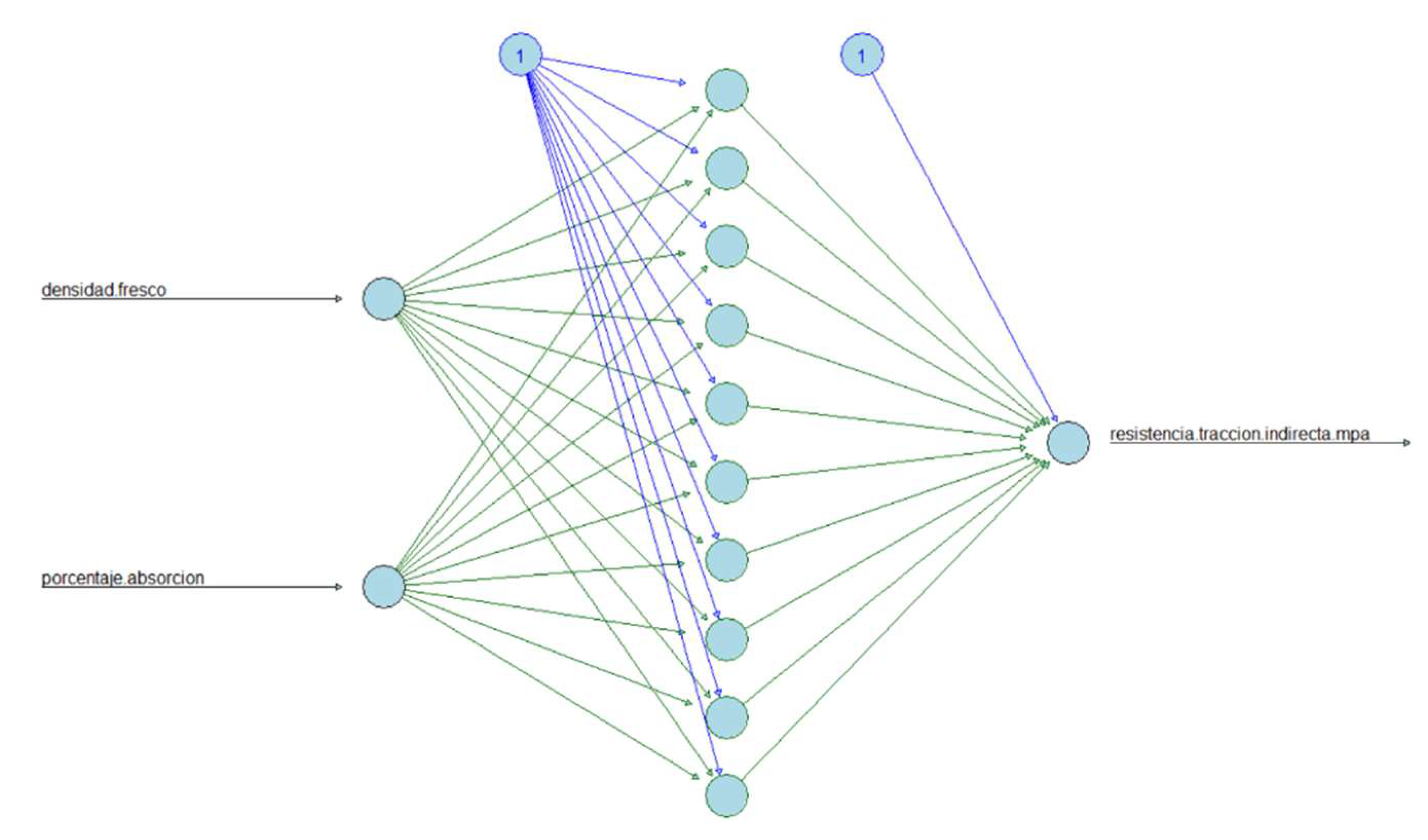

Artificial neural networks. Neural networks were inspired by the biological capacity of the brain and have become highly influential in machine learning, tackling tasks as complex as classifying millions of images, voice recognition, and beating world champions in mental sports. They were first introduced in 1943 by Warren McCulloch and Walter Pitts. The perceptron is based on a different artificial neuron, called a threshold logic unit, in which the inputs and outputs are numbers and each input connection has a weight [27].

A fully connected layer has the following outputs:

$$h_{W,b}(X) = \phi(XW + b)$$

where X is the input matrix (one row per instance and one column per feature), W is the weight matrix (one row per input neuron and one column per artificial neuron), b is the bias vector (the connection weights between the bias neuron and the artificial neurons), and $\phi$ is the activation function.
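The output of a fully connected layer can be computed directly from its definition. The sketch below uses plain lists, a ReLU activation chosen only for illustration, and hypothetical weights and inputs; the function names are assumptions.

```python
def dense_layer(X, W, b, activation):
    """Outputs of a fully connected layer: activation(XW + b), one row per instance."""
    outputs = []
    for row in X:
        # One weighted sum per artificial neuron (one column of W per neuron)
        z = [sum(x * w for x, w in zip(row, col)) + bias
             for col, bias in zip(zip(*W), b)]
        outputs.append([activation(v) for v in z])
    return outputs


def relu(z):
    return max(0.0, z)


X = [[1.0, 2.0], [-1.0, 3.0]]      # two instances, two features
W = [[0.5, -1.0], [0.25, 0.5]]     # rows: input neurons, columns: artificial neurons
b = [0.0, -0.5]                    # one bias per artificial neuron
outputs = dense_layer(X, W, b, relu)
print(outputs)
```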

Learning follows a rule: the perceptron reinforces the connections that help reduce the error, receiving one instance at a time and making a prediction for it. Perceptron learning is done by

$$w_{i,j}^{(\text{next step})} = w_{i,j} + \eta \, (y_j - \hat{y}_j) \, x_i$$

where $w_{i,j}$ is the weight of the connection between the i-th input neuron and the j-th output neuron, $x_i$ is the i-th input value of the instance, $\hat{y}_j$ is the output of the j-th output neuron for the instance, $y_j$ is the target output value, and $\eta$ is the learning rate. The perceptron convergence theorem states that the algorithm converges to a solution if the instances are linearly separable. The multilayer perceptron (MLP) has an input layer (lower layer), hidden layers, and an output layer (upper layer).
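The perceptron learning rule can be sketched on a toy, linearly separable problem (the logical AND of two binary inputs). The data set, the step activation threshold, the learning rate of 1.0 and the function names are assumptions made for this illustration; the bias is updated as a weight attached to a constant input of 1.

```python
def step(z):
    return 1 if z > 0 else 0


def train_perceptron(samples, targets, eta=1.0, max_epochs=50):
    """Single-output perceptron trained one instance at a time."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, y in zip(samples, targets):
            y_hat = step(sum(w * xi for w, xi in zip(weights, x)) + bias)
            if y_hat != y:
                errors += 1
                # w_i <- w_i + eta * (y - y_hat) * x_i
                weights = [w + eta * (y - y_hat) * xi for w, xi in zip(weights, x)]
                bias += eta * (y - y_hat)
        if errors == 0:   # every instance classified correctly: converged
            break
    return weights, bias


samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 0, 0, 1]   # logical AND
weights, bias = train_perceptron(samples, targets)
predictions = [step(sum(w * xi for w, xi in zip(weights, x)) + bias) for x in samples]
print(weights, bias, predictions)
```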

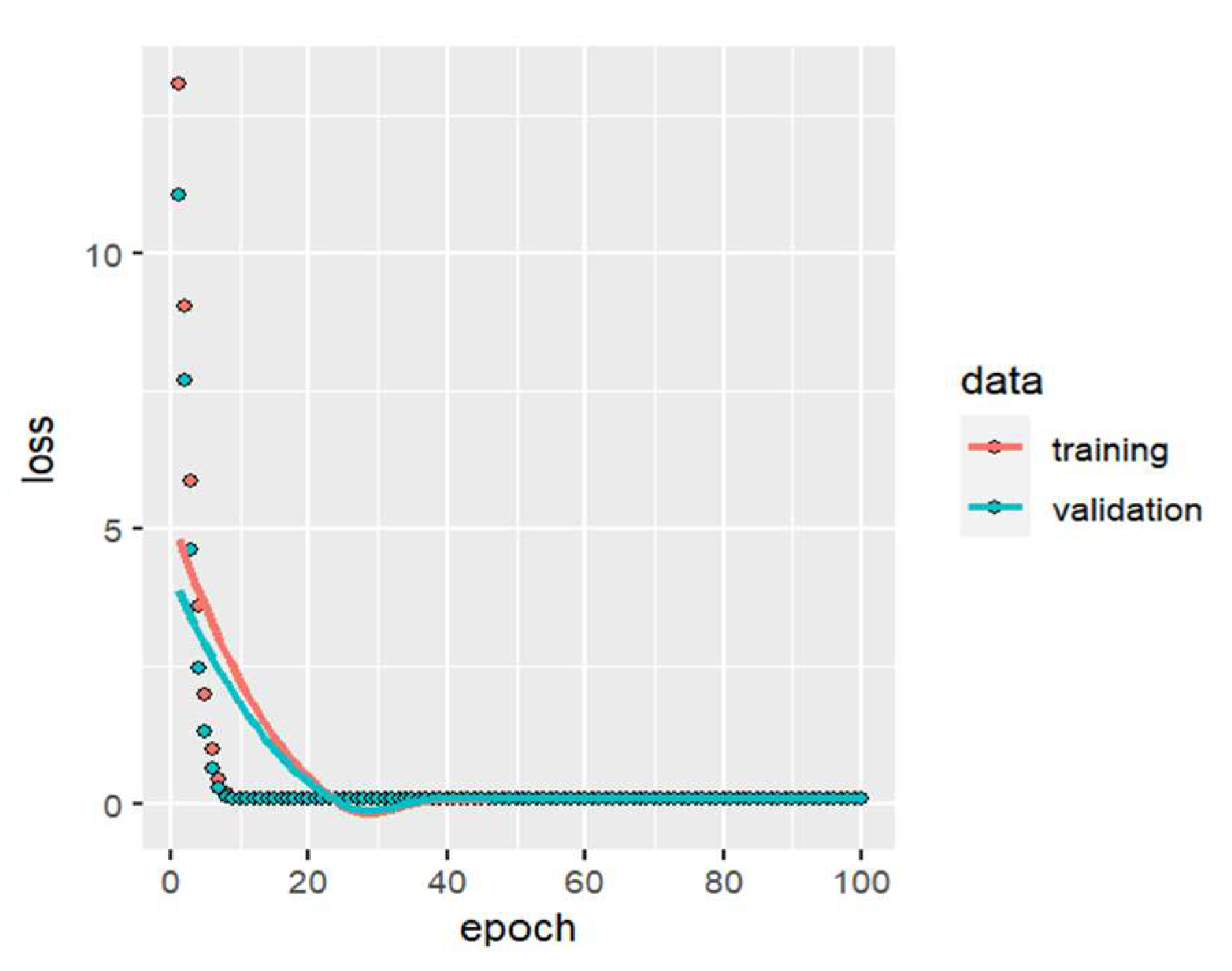

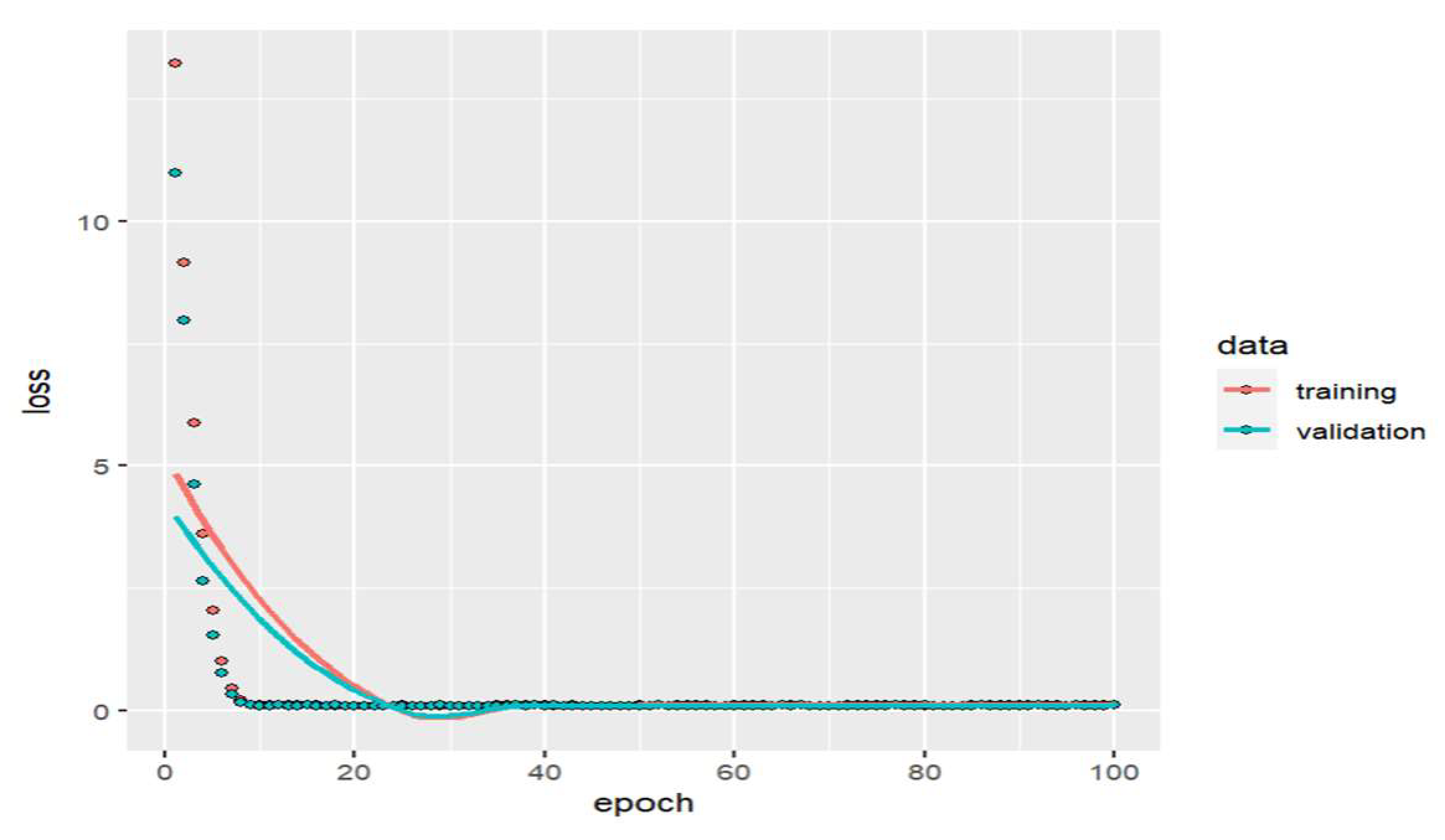

If the artificial neural network (ANN) has more than one hidden layer, it is called a deep neural network (DNN). In 1986, the article by Rumelhart, Hinton and Williams introduced the backpropagation algorithm, which is gradient descent with the gradients calculated automatically in one forward pass and one backward pass; the process is repeated until it converges to the solution. The procedure handles one small group of instances (a mini-batch) at a time and goes through the full training set several times; each pass is called an epoch. Each mini-batch goes through the input layer, the hidden layers and the output layer in a forward pass, preserving the intermediate results, and the output error is measured. The contribution of each connection to the error is calculated by applying the chain rule, which makes the computation precise. The algorithm then works back to the input layer with the same rule, measuring the error contribution of each connection; finally, the gradient descent step adjusts the weights to reduce the error. If there is one output neuron, only one value is predicted. According to [36], the sigmoid neuron has weights and a bias and uses the sigmoid function, defined as

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

where $z = w \cdot x + b = \sum_j w_j x_j + b$, $w_j$ are the weights, $x_j$ are the inputs, and $b$ is the bias.

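Considering the cost function to evaluate the model and quantify how well the objective is achieved, we have:

$$C(w, b) = \frac{1}{2n} \sum_{x} \lVert y(x) - a \rVert^{2}$$

where $w$ represents the weights of the network, b all the biases, n the total number of training inputs, a the vector of outputs when x is the input, y(x) the desired output, the sum runs over all training inputs x, and C is the cost function. The cost function approaches 0 as the output a approaches y(x) for all training inputs. Gradient descent minimizes the cost function; for a small change $\Delta v$ in the variables, the change in cost $\Delta C$

can be written as:

$$\Delta C \approx \nabla C \cdot \Delta v$$

where $\nabla C = \left(\frac{\partial C}{\partial v_1}, \ldots, \frac{\partial C}{\partial v_m}\right)^{T}$ is the gradient vector, which relates changes in $v$ to changes in C, $\Delta v = (\Delta v_1, \ldots, \Delta v_m)^{T}$ is the vector of changes in position, and m is the number of variables.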
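Gradient descent itself is independent of neural networks and can be sketched on a toy quadratic cost with a known minimum. The cost function, the learning rate of 0.1, the number of steps and the function names are assumptions chosen for illustration.

```python
def gradient_descent(grad, v0, eta=0.1, steps=200):
    """Repeat the update v <- v - eta * grad C(v) a fixed number of times."""
    v = list(v0)
    for _ in range(steps):
        g = grad(v)
        v = [vi - eta * gi for vi, gi in zip(v, g)]
    return v


def cost(v):
    # Toy cost with its minimum at (3, -1)
    return (v[0] - 3.0) ** 2 + 2.0 * (v[1] + 1.0) ** 2


def grad(v):
    # Gradient vector (dC/dv1, dC/dv2) of the toy cost
    return [2.0 * (v[0] - 3.0), 4.0 * (v[1] + 1.0)]


v_min = gradient_descent(grad, [0.0, 0.0])
print([round(vi, 6) for vi in v_min], round(cost(v_min), 12))
```

If the learning rate is too large the iterates overshoot and can diverge, and if it is too small convergence is slow, which is why it is treated as a tuning parameter.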

Gradient descent repeatedly computes the gradient $\nabla C$ and applies the update $v \rightarrow v' = v - \eta \nabla C$, taking small steps in the direction in which C decreases the most. The backpropagation algorithm shows how changing the weights and biases changes the behaviour of the neural network. In the notation used, l denotes the l-th layer, k the k-th neuron of layer l - 1, and j the j-th neuron of the l-th layer; $w^{l}_{jk}$ is the weight of the connection from the k-th neuron of layer (l-1) to the j-th neuron of the l-th layer, $b^{l}_{j}$ is the bias of the j-th neuron of the l-th layer, and $a^{l}_{j}$ is the activation of the j-th neuron of the l-th layer.

In matrix and vector notation, $w^{l}$ is the weight matrix for the l-th layer, $b^{l}$ is the bias vector for the l-th layer, and $a^{l}$ is the activation vector for the l-th layer, with $a^{l} = \sigma(w^{l} a^{l-1} + b^{l})$, where $\sigma$ is the function applied to each element of the vector v, that is, $\sigma(v)_j = \sigma(v_j)$, and $z^{l} = w^{l} a^{l-1} + b^{l}$ is the weighted input to the neurons of the l-th layer.

The desired output is expressed as y(x), n is the number of training instances or examples, and $a^{L}(x)$ is the output vector of activations of the output layer L when x is entered. The Hadamard product, ⊙, denotes the elementwise multiplication of two vectors. The error in the j-th neuron of the output layer is:

$$\delta^{L}_{j} = \frac{\partial C}{\partial a^{L}_{j}} \, \sigma'(z^{L}_{j})$$

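A weight learns slowly if the output neuron is saturated or if the input neuron has low activation. The rate of change of the cost with respect to a bias is $\frac{\partial C}{\partial b^{l}_{j}} = \delta^{l}_{j}$, and the rate of change of the cost with respect to a weight is $\frac{\partial C}{\partial w^{l}_{jk}} = a^{l-1}_{k} \delta^{l}_{j}$, giving the summary backpropagation equations:

$$\begin{aligned}
\delta^{L} &= \nabla_a C \odot \sigma'(z^{L}) \\
\delta^{l} &= \left((w^{l+1})^{T} \delta^{l+1}\right) \odot \sigma'(z^{l}) \\
\frac{\partial C}{\partial b^{l}_{j}} &= \delta^{l}_{j} \\
\frac{\partial C}{\partial w^{l}_{jk}} &= a^{l-1}_{k} \, \delta^{l}_{j}
\end{aligned}$$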
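A useful way to gain confidence in a backpropagation implementation is to compare its gradients with finite differences. The sketch below assumes a tiny 2-2-1 network with sigmoid activations, a quadratic cost for a single training example, and arbitrary toy weights; all names and values are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    return sigmoid(z) * (1.0 - sigmoid(z))


def forward(weights, biases, x):
    """Return the weighted inputs z and the activations a of every layer."""
    activations, zs = [x], []
    for w, b in zip(weights, biases):
        z = [sum(wjk * ak for wjk, ak in zip(row, activations[-1])) + bj
             for row, bj in zip(w, b)]
        zs.append(z)
        activations.append([sigmoid(v) for v in z])
    return zs, activations


def cost(weights, biases, x, y):
    _, activations = forward(weights, biases, x)
    return 0.5 * sum((yj - aj) ** 2 for yj, aj in zip(y, activations[-1]))


def backprop(weights, biases, x, y):
    zs, activations = forward(weights, biases, x)
    # Output error: (a - y) elementwise times sigma'(z) for the quadratic cost
    delta = [(a - t) * sigmoid_prime(z) for a, t, z in zip(activations[-1], y, zs[-1])]
    grads_w, grads_b = [], []
    for l in range(len(weights) - 1, -1, -1):
        grads_b.insert(0, delta)                                           # dC/db = delta
        grads_w.insert(0, [[dj * ak for ak in activations[l]] for dj in delta])  # a_k * delta_j
        if l > 0:
            # Propagate the error backwards through the transposed weight matrix
            delta = [sum(weights[l][j][k] * delta[j] for j in range(len(delta)))
                     * sigmoid_prime(zs[l - 1][k]) for k in range(len(zs[l - 1]))]
    return grads_w, grads_b


def numerical_grad_w(weights, biases, x, y, l, j, k, eps=1e-6):
    """Central finite difference of the cost with respect to one weight."""
    w_plus = [[row[:] for row in w] for w in weights]
    w_minus = [[row[:] for row in w] for w in weights]
    w_plus[l][j][k] += eps
    w_minus[l][j][k] -= eps
    return (cost(w_plus, biases, x, y) - cost(w_minus, biases, x, y)) / (2 * eps)


# Toy 2-2-1 network and one training example (all values hypothetical)
weights = [[[0.15, -0.20], [0.25, 0.30]], [[0.40, -0.45]]]
biases = [[0.35, -0.10], [0.60]]
x, y = [0.05, 0.10], [0.9]

grads_w, grads_b = backprop(weights, biases, x, y)
check = numerical_grad_w(weights, biases, x, y, 0, 0, 1)
print(abs(grads_w[0][0][1] - check) < 1e-7)
```

The finite-difference check costs two forward passes per weight, so it is only practical for testing; backpropagation obtains all gradients with one forward and one backward pass.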

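The backpropagation algorithm consists of five steps. First, we set the corresponding activation $a^{1}$ in the input layer. Second, for each layer l = 2, 3, ..., L we calculate $z^{l} = w^{l} a^{l-1} + b^{l}$ and $a^{l} = \sigma(z^{l})$. Third, we calculate the output error vector $\delta^{L} = \nabla_a C \odot \sigma'(z^{L})$. Fourth, we backpropagate the error, $\delta^{l} = \left((w^{l+1})^{T} \delta^{l+1}\right) \odot \sigma'(z^{l})$, for l = L-1, L-2, ..., 2. Lastly, the gradient of the cost function is $\frac{\partial C}{\partial w^{l}_{jk}} = a^{l-1}_{k} \delta^{l}_{j}$ for the weights and $\frac{\partial C}{\partial b^{l}_{j}} = \delta^{l}_{j}$ for the biases. An intuitive way to see the rate of change of C with respect to the weights in the network is: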