1. Introduction

Despite advances in medical technology, medical errors caused by human error still occur. In 2000, the Institute of Medicine report "To Err is Human: Building a Safer Health System" showed that the rate of medical errors caused by human negligence was as high as 2.9% and that 50% of these errors were preventable [1]. Avoiding medical errors caused by human negligence therefore remains a primary research topic in the medical field.

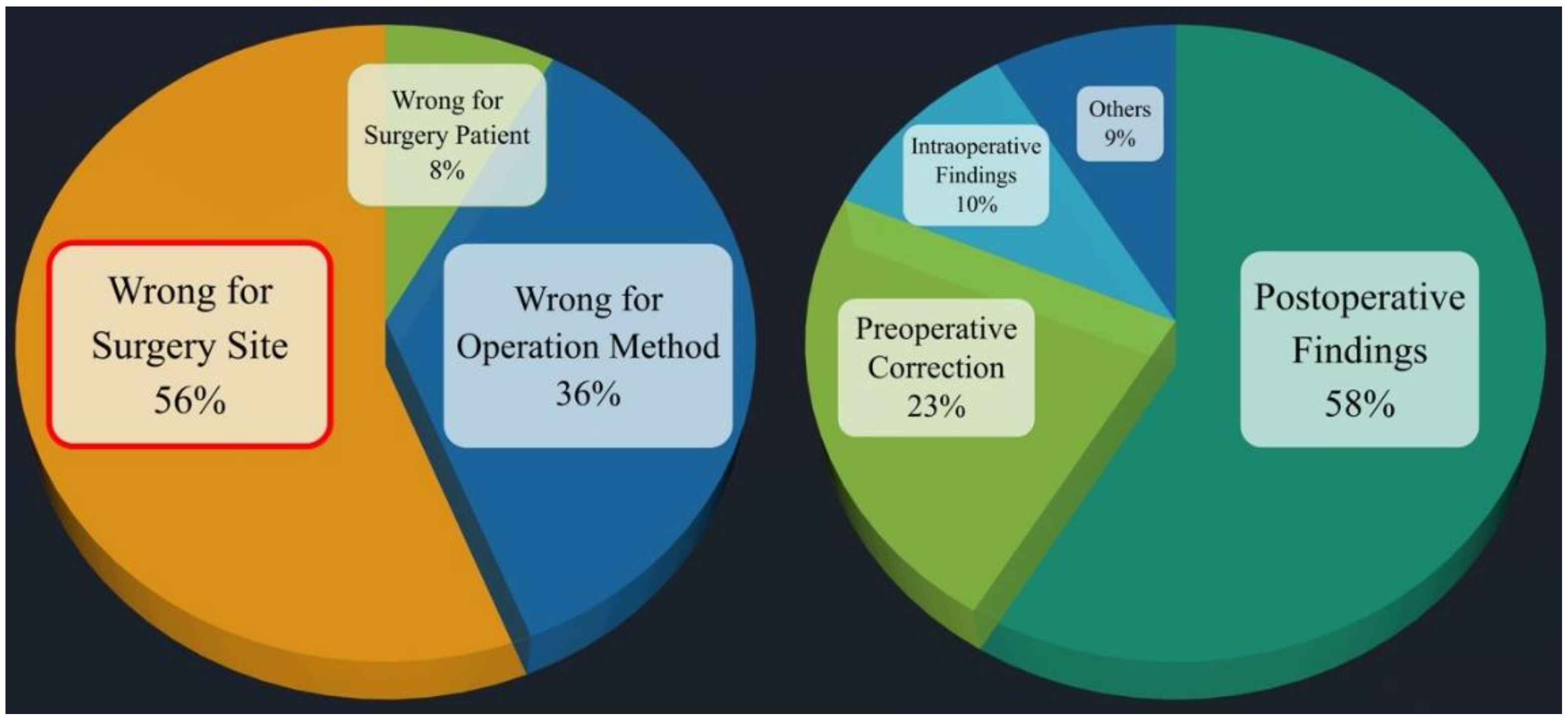

Among all medical errors, surgical errors often cause severe injuries and losses to patients and medical institutions, since surgery is the most prevalent medical action. Medical negligence in surgery falls into several categories. The 2019 annual report of the Taiwan Patient Safety Reporting System [2] pointed out that surgical site errors ranked second in incidence among the types of surgical event errors. Avoiding surgical site errors is therefore an important research issue in surgical malpractice. Among all surgical specialties, orthopedic surgery accounts for the largest share of surgical site errors, as high as 54%, and only 5.4% of these errors are found and corrected before surgery, because orthopedic surgery deals with skeletal and muscular injuries of the extremities, as shown in Figure 1 [3]. Avoiding surgical site errors is therefore a primary research topic for medical errors in orthopedic surgery.

In orthopedic surgery, wrong-site surgery includes operating on the wrong side and operating at the wrong position. As an example of the wrong side, the incision was planned for the patient's right limb but, due to human error, was made on the left limb. As an example of the wrong position, the incision was planned for the right elbow but, after anesthesia, was made on the right wrist. Such operations are often simple, but operating on the wrong site causes more serious injury to the patient and exposes the medical team and the hospital to large compensation claims, pressure from public opinion, and even legal proceedings. In view of this, after discussion with the supervisor and the cooperating orthopedic surgeon, this project first takes upper limb orthopedic surgery as its target and proposes a smart image recognition system that helps orthopedic surgeons prevent left-right errors in upper limb surgery.

According to a literature survey and information obtained from medical institutions, the current methods for preventing wrong-site surgery still rely mainly on marking or barcode scanning, as shown in Figure 2. However, with either marking or barcode scanning, surgical site errors can still easily occur because of human factors such as unusual time pressure, unusual instrument settings or handling in the operating room, the involvement of more than one surgeon, the transfer of the patient to another physician, and the participation of physicians from multiple disciplines. Therefore, this paper aims to integrate medical and artificial intelligence technologies to develop an intelligent image recognition system that replaces marking and barcode scanning and avoids left-right errors in upper limb orthopedic surgery caused by the human factors above.

Susanne Hempel published a systematic review on wrong-site surgery in JAMA Surgery in 2015. It pointed out that the incidence of surgical site errors is about 1 in 100,000. The major cause is poor communication, which makes medical personnel prone to mistakes. Poor communication included miscommunication among staff, missing information that should have been available to the operating room staff, surgical team members not speaking up when they noticed that a procedure targeted the wrong side, and a surgeon ignoring surgical team members who questioned laterality [3]. Therefore, the medical team must communicate fully and raise any questions without hesitation. The chief surgeon must also respect everyone's opinions to avoid wrong-site surgery.

Research published by Mark A. Palumbo in 2013 pointed out that wrong-site surgery is not limited to the limbs. In spinal surgery, operations on the wrong joint often occur. Once the wrong part has been operated on, the outcome is devastating for both the patient and the doctor. The author suggested that, in addition to strict regulation by the medical system, a customized process (patient-specific protocol) is needed to prevent wrong-site surgery [4].

Omid Moshtaghi published a study in 2017 analyzing surgical site errors in California from 2007 to 2014. The authors pointed out that although California introduced the universal surgical safety protocol in 2004 to ensure patient safety during surgery, wrong-site surgery continues to occur. Among the disciplines, orthopedics accounted for the largest share, 35% [5].

A 2015 research report by Ambe P.C. also pointed out that orthopedics is the department with the most frequent surgical site errors. The most common causes included breakdown in communication, time pressure, emergency procedures, multiple procedures on the same patient by different surgeons, and obesity. The author recommends using checklists and checking carefully before surgery as an effective solution [6].

Observation of the operating process shows that, in current orthopedic surgery, a mark is drawn on the site to be operated on before disinfection and draping, to remind the surgeon not to operate on the wrong site. At present, the site of orthopedic surgery is mostly marked by drawing on it with a colored pen, as shown in Figure 2. More recently, some hospitals have adopted barcode scanning: a barcode is pasted on the site to be operated on and then scanned with a barcode reader to confirm that the position is correct, as shown in Figure 2. However, with either marking or barcode scanning, wrong-site surgery can still easily occur because of the following factors: starting or completing surgery under unusual time pressure, instrument settings or handling that are not commonly used in the operating room (such as left-handed surgery), the involvement of more than one surgeon, referral of the patient to another physician, participation of physicians from multiple disciplines, and unusual physical features that require special positioning.

Therefore, after discussion with the orthopedic surgeon (co-host), we consider it necessary to develop an intelligent image recognition system for upper limb orthopedic surgery that replaces marking and barcode scanning before surgical disinfection and draping. According to the background of this research project, orthopedic surgery accounts for 41% of wrong-site surgery, ranking first, and the most frequent position error is a left-right error. However, in current research at home and abroad, most studies related to upper limb surgery focus on rehabilitation, lymph, and nerves [7-29], and no image recognition results have been reported on distinguishing the left and right upper limbs to prevent wrong-side surgery. Developing a new intelligent image recognition system that helps surgeons distinguish the left and right upper limbs, and thereby avoid wrong-side errors during orthopedic upper limb surgery, is therefore of considerable benefit and research value.

Therefore, this paper proposes an intelligent image recognition system, IIRS, for preventing wrong-side upper limb surgery. To comply with academic and human research ethics, this paper first uses laboratory students to simulate orthopedic surgery patients as test subjects in order to establish a training data set and a test data set. After training of the prototype deep learning model is completed and the IRB application is approved, industry-university cooperation with the hospital and the second phase of human trials will be carried out, so that the research results can be applied to orthopedic clinical treatment to achieve the purpose of smart medical treatment.

2. Materials and Methods

Current position marking in orthopedic surgery is mainly based on pen marking or barcode scanning. However, such an approach is still prone to surgical site errors due to time pressure, unfamiliar instrument setup or handling, participation of multiple surgeons, referral of the patient to another physician, and other factors. Preventing wrong-site surgery is therefore a top priority in orthopedic surgery.

After discussions with orthopedic surgeons, this paper proposes an intelligent image recognition system, IIRS, combined with a deep learning neural network model to help orthopedic surgeons prevent left-right errors in upper limb surgery. An internal survey of the members of the Orthopedic Medical Association of the Republic of China also showed that wrong-site operations accounted for 56%, and only 5.4% were found and corrected before the operation. Therefore, the proposed intelligent image recognition system for the left and right sides in upper limb orthopedic surgery is of considerable benefit and research value.

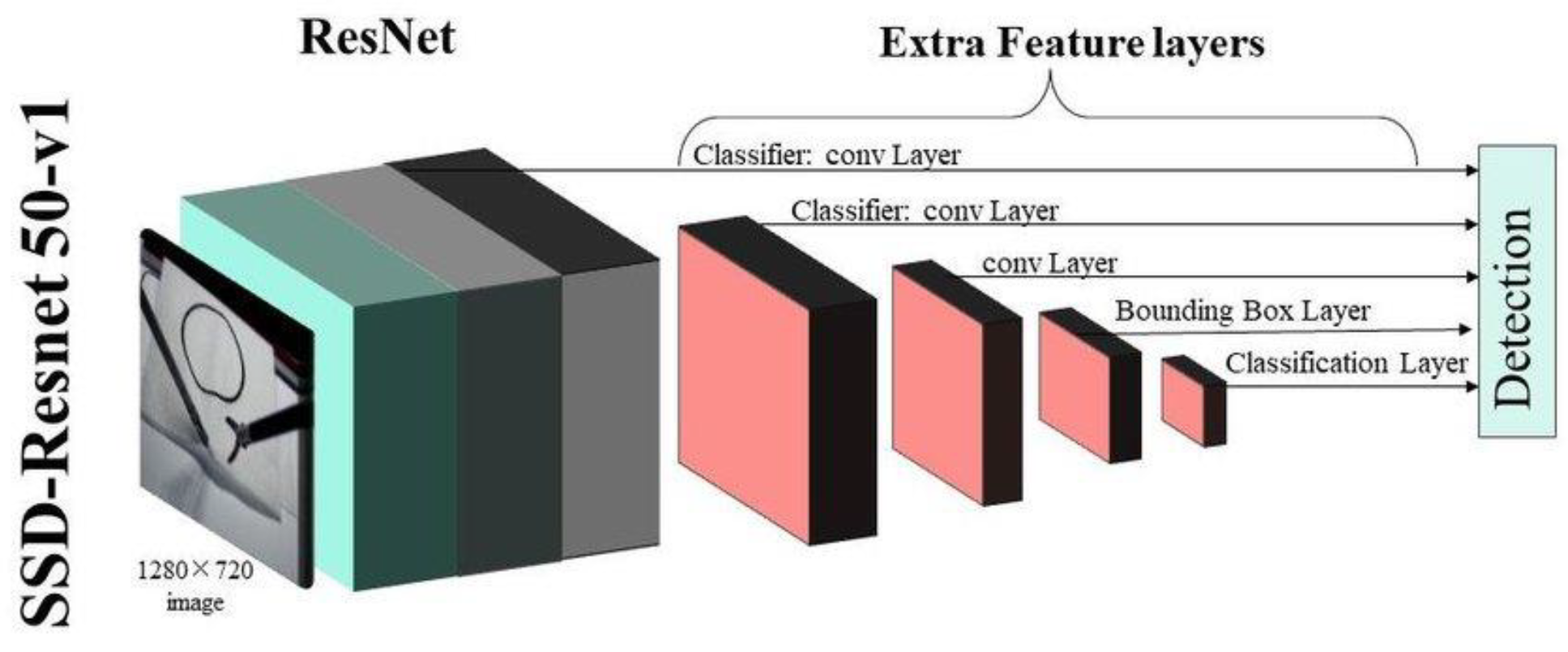

The neural network model in this paper adopts the widely used YoloV4 model. Since CSPNet in YoloV4 is based on the DarkNet53 neural network, and DarkNet53 is developed based on ResNet, two different models, ResNet50 and YoloV4, were both trained for comparison.

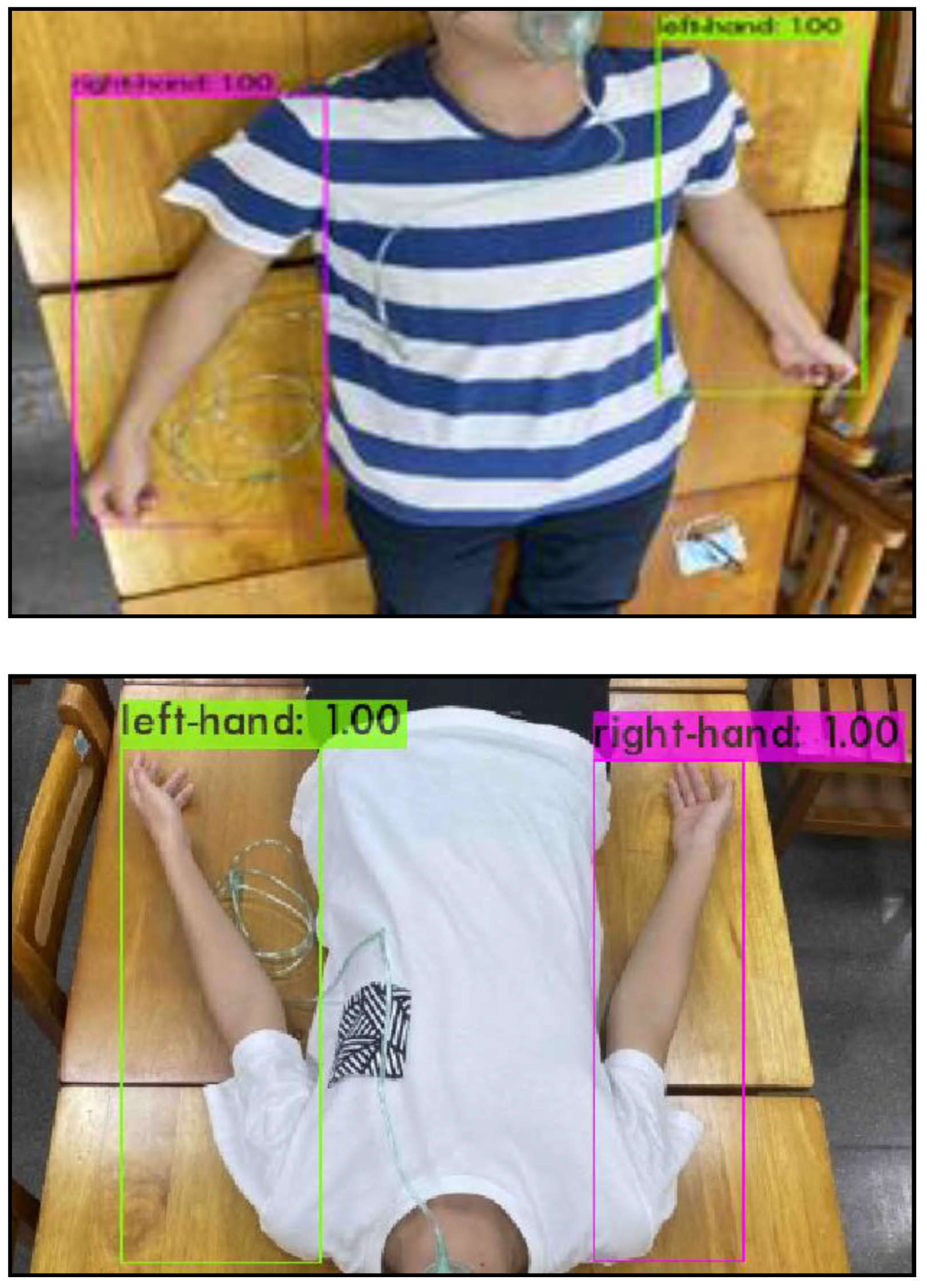

The data set contains 810 photos in total, of which 122 were produced by a data generation method. The ratio of the training set to the test set is 9:1. The photos were labelled one by one with the LabelImg software, with the annotations saved in XML format. Each label marks the training region and assigns it to one of two classes, right-hand or left-hand, as shown in Figure 3.
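As an illustration of the labelling step above, the following minimal sketch reads one annotation in the Pascal VOC XML layout that LabelImg writes. The file name, image size, and box coordinates in `SAMPLE_XML` are hypothetical, and `parse_annotation` is a helper introduced here for illustration; it is not part of the authors' pipeline.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in the Pascal VOC XML layout written by LabelImg.
# The file name, image size, and coordinates are made up for illustration.
SAMPLE_XML = """
<annotation>
  <filename>arm_0001.jpg</filename>
  <size><width>608</width><height>608</height><depth>3</depth></size>
  <object>
    <name>left-hand</name>
    <bndbox><xmin>120</xmin><ymin>80</ymin><xmax>420</xmax><ymax>560</ymax></bndbox>
  </object>
</annotation>
"""


def parse_annotation(xml_text):
    """Return (filename, [(label, xmin, ymin, xmax, ymax), ...]) for one annotation."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    boxes = []
    for obj in root.iter("object"):
        label = obj.findtext("name")
        bndbox = obj.find("bndbox")
        coords = tuple(
            int(bndbox.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((label, *coords))
    return filename, boxes


filename, boxes = parse_annotation(SAMPLE_XML)
print(filename, boxes)
```

Each tuple pairs a class label (right-hand or left-hand) with its bounding box, which is the information a detector needs for training.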

For ResNet50, the labelled data set is divided into a training set and a test set stored in two folders. The two folders are converted into .record files so that the training process can read the image files, and a pre-trained model is added. The choice of pre-trained model affects the final training results; replacing it switches the training to a different model. ResNet50 is shown in Figure 4. The parameters in pipeline.config for ResNet50 are listed in Table 1 [30].
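The division into training and test sets described above, at the 9:1 ratio given for the 810 photos, could be sketched as follows. The file names, the fixed random seed, and the `split_dataset` helper are assumptions made for illustration; the source does not state how the photos were assigned to each set.

```python
import random


def split_dataset(items, train_ratio=0.9, seed=0):
    """Shuffle a copy of items and split it into (train, test) lists."""
    shuffled = list(items)
    # A fixed seed (an assumption here) keeps the split reproducible.
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical file names standing in for the 810 labelled photos.
photos = [f"photo_{i:04d}.jpg" for i in range(810)]
train, test = split_dataset(photos)
print(len(train), len(test))  # 729 81
```

With 810 photos, a 9:1 ratio gives 729 training photos and 81 test photos.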

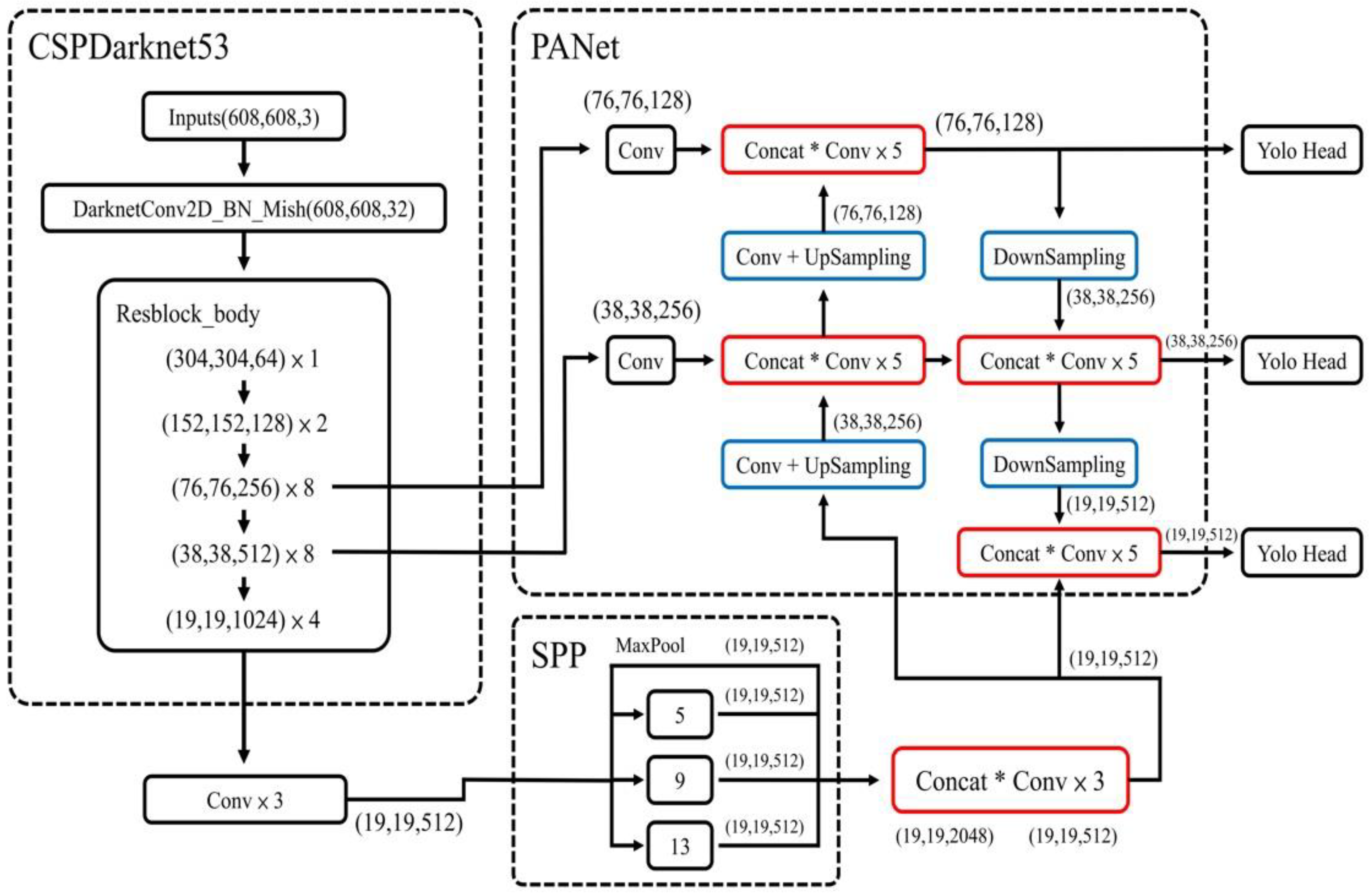

For YoloV4, the parameters are listed in Table 2. YoloTinyV4 requires downloading the official pre-trained weights. The YoloV4 model is shown in Figure 5 [Research on YOLOv4 Detection Algorithm Based on Lightweight Network]. We used the Mish function as the activation function and the CIoU function as the loss function in YoloV4.
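For readers unfamiliar with the two functions named above, the following is a minimal scalar sketch of their standard definitions: Mish is x · tanh(softplus(x)), and the CIoU loss is 1 − IoU plus a center-distance term and an aspect-ratio term. It is written in plain Python for clarity and is not the implementation used inside the YoloV4 training framework. The box format (xmin, ymin, xmax, ymax) and the example boxes are assumptions, and boxes are assumed to have positive width and height.

```python
import math


def mish(x):
    """Mish activation: x * tanh(softplus(x)). Assumes moderate |x| (no overflow guard)."""
    softplus = math.log1p(math.exp(x))
    return x * math.tanh(softplus)


def ciou_loss(pred, target):
    """CIoU loss for two boxes given as (xmin, ymin, xmax, ymax)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Intersection over union of the two boxes.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    area_p = (px2 - px1) * (py2 - py1)
    area_t = (tx2 - tx1) * (ty2 - ty1)
    iou = inter / (area_p + area_t - inter)

    # Squared center distance, normalized by the squared diagonal
    # of the smallest box enclosing both boxes.
    center_dist2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + (
        (py1 + py2) / 2 - (ty1 + ty2) / 2
    ) ** 2
    enclose_w = max(px2, tx2) - min(px1, tx1)
    enclose_h = max(py2, ty2) - min(py1, ty1)
    diag2 = enclose_w ** 2 + enclose_h ** 2

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (
        math.atan((tx2 - tx1) / (ty2 - ty1)) - math.atan((px2 - px1) / (py2 - py1))
    ) ** 2
    alpha = v / (1 - iou + v) if v > 0 else 0.0

    return 1 - iou + center_dist2 / diag2 + alpha * v


print(mish(1.0))
print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))    # identical boxes
print(ciou_loss((0, 0, 10, 10), (20, 20, 30, 30)))  # disjoint boxes
```

Identical boxes give a loss of zero, while disjoint boxes give a loss above 1 because the center-distance term still penalizes them when the IoU is already zero.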

3. Results

The confidence threshold (conf_threshold) is an adjustable threshold applied to the confidence value shown next to each recognition frame. For example, if conf_threshold is set to 0.9, only object frames whose confidence value is larger than 0.9 are shown on the screen, as shown in Figure 6.
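The thresholding rule above can be sketched in a few lines. The detection records, their field names, and the `filter_detections` helper are hypothetical and serve only to illustrate the rule that a frame is displayed when its confidence is larger than conf_threshold.

```python
def filter_detections(detections, conf_threshold=0.9):
    """Keep only detections whose confidence is strictly larger than the threshold."""
    return [d for d in detections if d["confidence"] > conf_threshold]


# Hypothetical detector output; labels follow the two classes used in this paper.
detections = [
    {"label": "left-hand", "confidence": 0.97, "box": (120, 80, 420, 560)},
    {"label": "right-hand", "confidence": 0.62, "box": (100, 90, 400, 540)},
    {"label": "right-hand", "confidence": 0.90, "box": (110, 85, 410, 550)},
]

kept = filter_detections(detections, conf_threshold=0.9)
print([d["label"] for d in kept])
```

Only the first detection is kept: the second falls below the threshold, and the third equals it exactly and is therefore not larger than 0.9.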

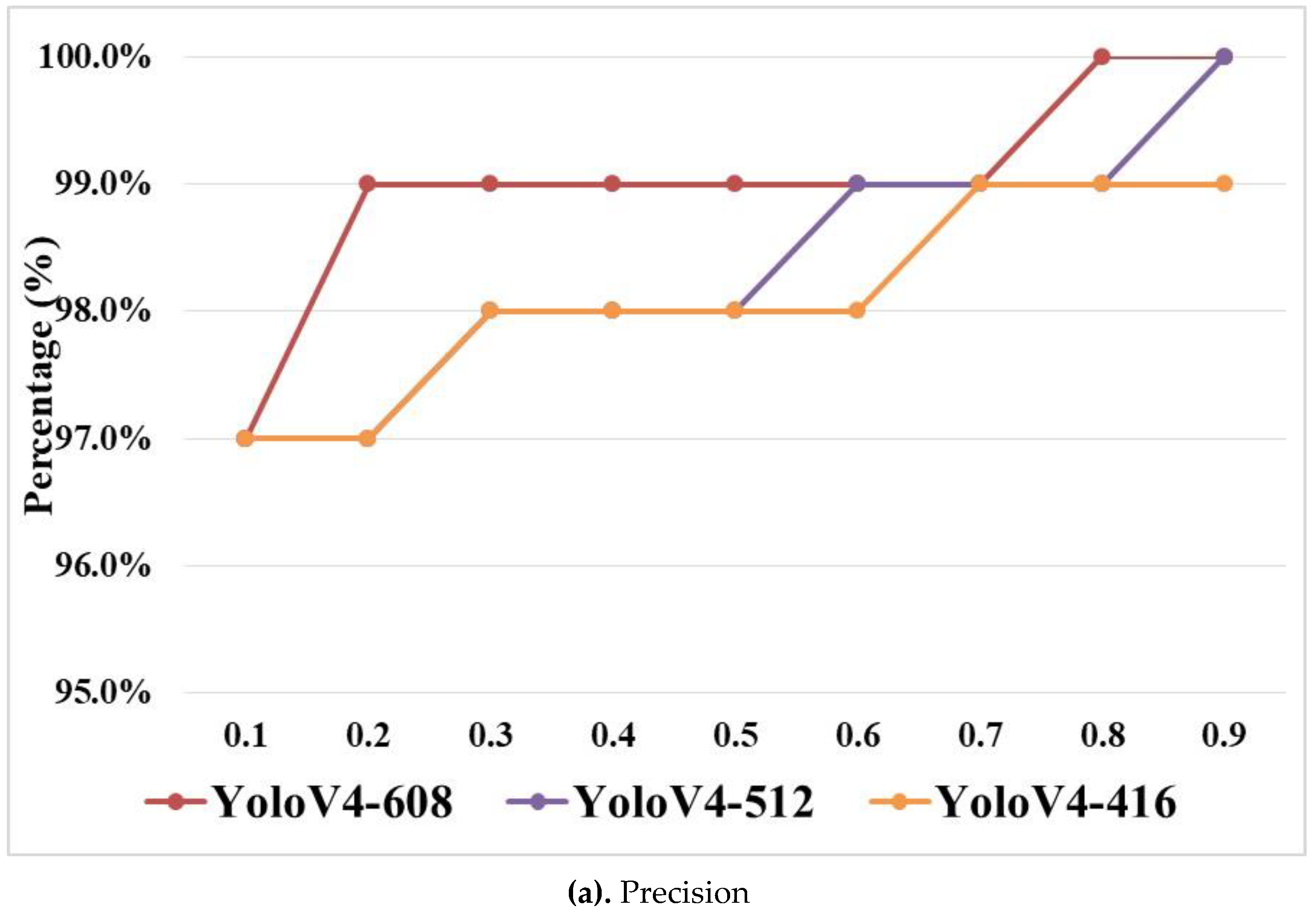

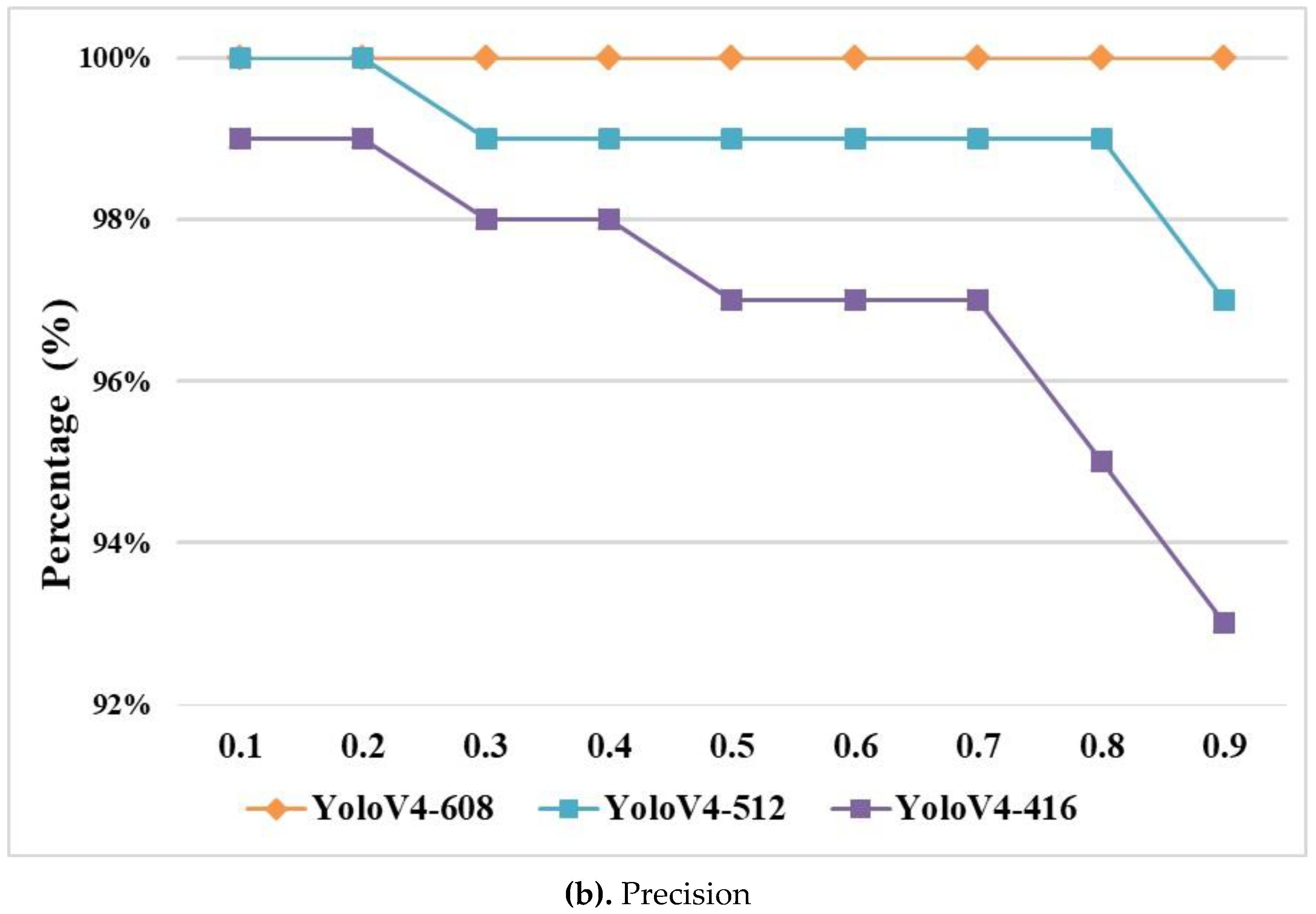

For a fixed input image size, a lower conf_threshold leads to more false positives (FP) and thus to a decrease in overall accuracy. In the experiments in this paper, the optimal conf_threshold was 0.9. The following experimental results compare Precision and Recall with conf_threshold set to 0.9 and an input size of 608 × 608, as shown in Figure 7, where YoloV4-608 denotes YoloV4 with input size 608 × 608, and the other labels follow the same convention.
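Precision and Recall follow their standard definitions, Precision = TP / (TP + FP) and Recall = TP / (TP + FN). The sketch below computes both from counts and shows how fewer false positives at a higher threshold raise Precision while Recall stays unchanged. The counts are made up for illustration and are not results from this paper.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall


# Made-up counts for illustration only; these are not the paper's measurements.
counts_by_threshold = {
    0.5: {"tp": 40, "fp": 10, "fn": 0},
    0.9: {"tp": 40, "fp": 2, "fn": 0},
}

for threshold, c in counts_by_threshold.items():
    p, r = precision_recall(c["tp"], c["fp"], c["fn"])
    print(f"conf_threshold={threshold}: precision={p:.3f}, recall={r:.3f}")
```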

Figure 7 (b) showed that Recall on YoloV4 is 100% in any value of conf_threshold. Precision increased while the value of conf_threshold increased, as shown in

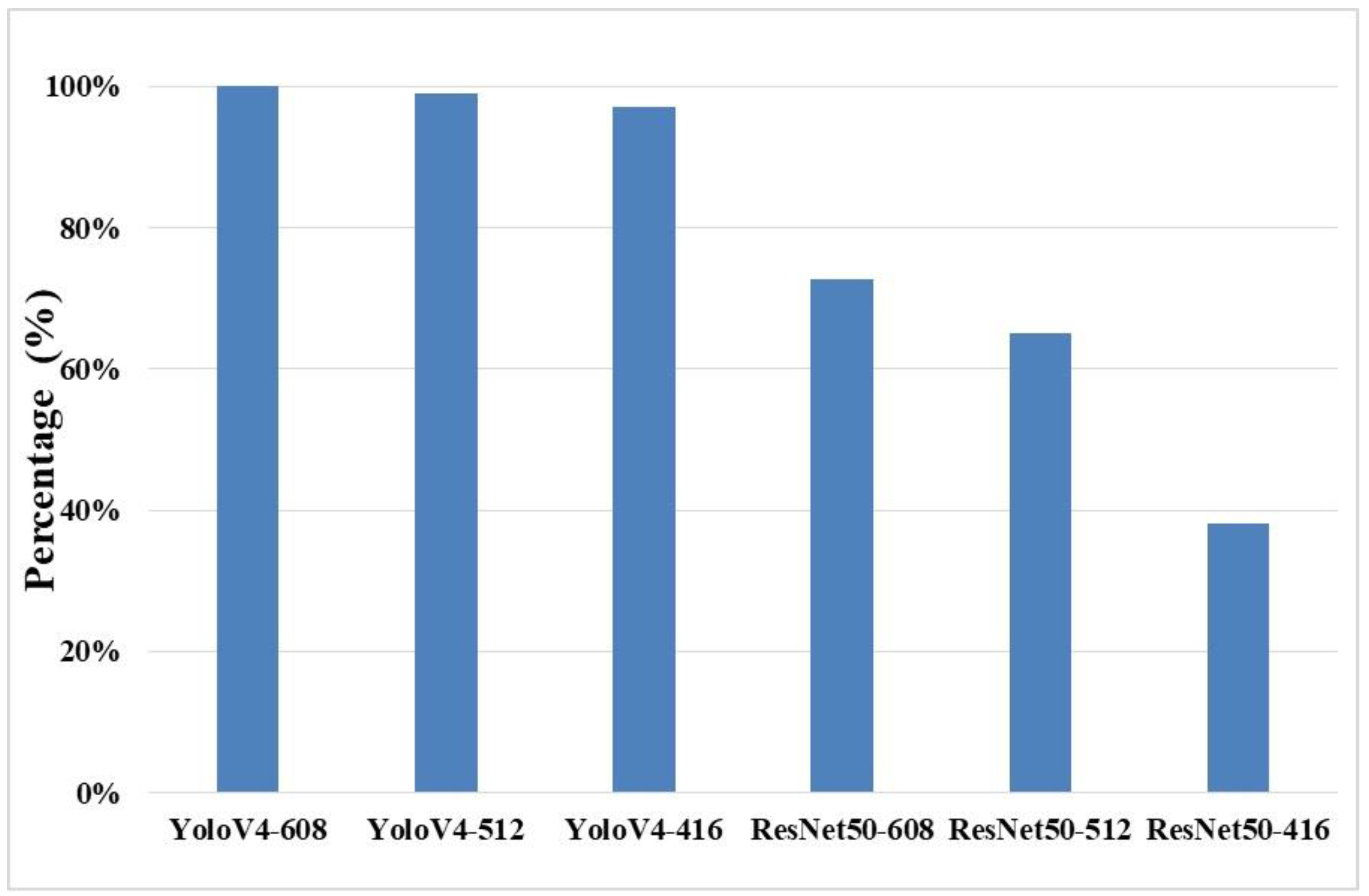

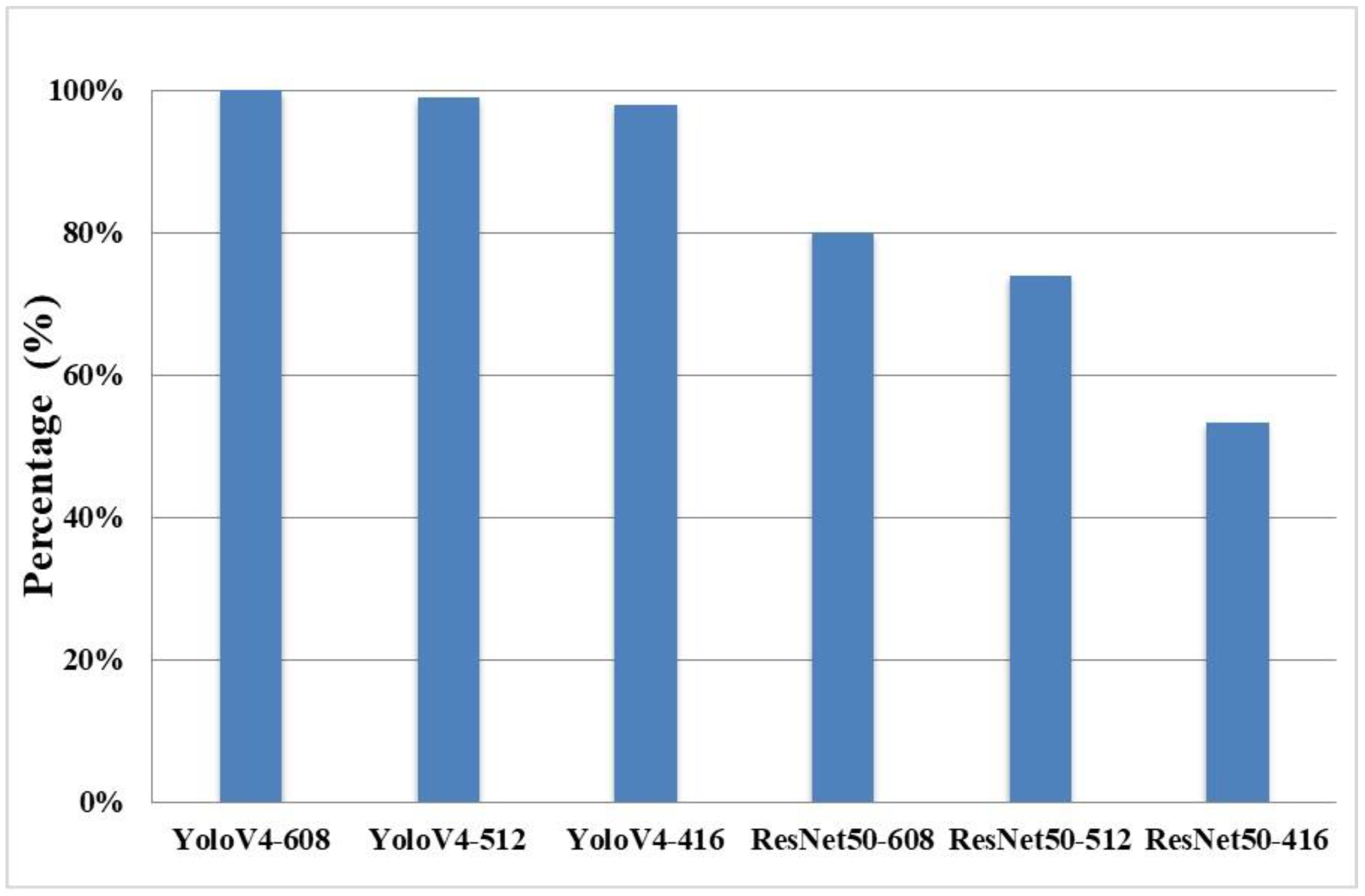

Figure 7 (a). Hence, the optimal value of conf_threshold is set to 0.9 in the experiment under YoloV4 and ResNet50. In

Figure 8, it showed that Precision in YoloV4 could be higher than Precision in ResNet50 for each input size while the value of conf_threshold is set to 0.9, where ResNet50-608 is defined as ResNet50 with input size 608×608 and others are followed in the same way.

Figure 8 also shows that, for both YoloV4 and ResNet50, Precision with input size 608×608 is higher than Precision with the other input sizes.

Figure 9 shows that Recall for YoloV4 is higher than Recall for ResNet50 at each input size when conf_threshold is set to 0.9.

4. Discussion

In the experimental results, Figure 7 and Figure 8 show that the optimal input size is 608×608 for both YoloV4 and ResNet50. Figure 9 shows that, for both YoloV4 and ResNet50, Recall with input size 608×608 is higher than Recall with the other input sizes. The experimental results show that Precision and Recall for YoloV4 were both higher than Precision and Recall for ResNet50. They also indicate that the designed intelligent image recognition system, IIRS, with YoloV4 could be applied to prevent wrong-side upper limb surgery. Moreover, the IRB application for using IIRS with YoloV4 to prevent wrong-side upper limb surgery has been submitted and approved.

5. Conclusions

In the category of surgical medical negligence, wrong-site surgery, whether at the wrong position or on the wrong side, ranks second among the types of surgical event errors. Among surgical site errors, orthopedic surgery is the most common discipline. Among them, the wrong part accounted for 56%, and only 5.4% could be found and corrected before the operation. Surgical site errors are often caused by human negligence, and 50% of medical errors could be prevented. Preventing surgical site errors is therefore a top priority in orthopedic surgery.

The current methods for preventing left-right errors in upper limb orthopedic surgery mainly use marking or barcode scanning, and these methods remain vulnerable to many external factors. Therefore, this study, in cooperation with the orthopedic surgeons of the hospital, integrates medical and artificial intelligence technologies and develops an intelligent image recognition system for the left and right sides in upper limb orthopedic surgery to replace marking and barcode scanning.

In view of this, this study proposes an intelligent image recognition system for the left and right sides in upper limb orthopedic surgery. Through image recognition of the upper limb and machine learning technology, the system judges whether the upper limb in the image is the left or the right upper limb, and the doctor can then be given the correct surgical position, which helps orthopedic surgeons prevent left-right errors in upper limb surgery. We believe that the research results of this project will be of considerable benefit and research value for upper limb orthopedic surgery.

In order to apply the research results to clinical treatment in the hospital and to combine theory with practice, this study not only used laboratory students to simulate patients as test subjects in the prototype stage to complete the deep learning model, but also obtained IRB approval for the human trial plan. The second phase of human trials will be carried out under the approved plan in the future, so that the system can be applied to clinical treatment in hospitals to achieve smart medical treatment.

In the future, we will continue to optimize the model architecture used for training, hoping to achieve the same results with fewer layers and fewer neurons (nodes). This would reduce the system requirements and further improve the recognition speed, bringing the system closer to practical application and allowing it to run smoothly on the hardware available on site.

Author Contributions

Y.-C.W. was responsible for Conceptualization, Methodology, Investigation, Writing - Original Draft, and Writing - Review & Editing. C.-Y.C. was responsible for Resources and Software. Y.-T.U. was responsible for Validation and Formal Analysis. S.-Y.C. and C.-H.C. were responsible for Data Curation and Visualization. H.-K.K. was responsible for Supervision and Project Administration. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

This is an observational study. The study was approved by the Institutional Review Board of Chang Gung Memorial Hospital at Linkou, Taiwan. The IRB number is 202202226B0.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

This paper was supported by the National Science and Technology Council (NSTC) of Taiwan to National Yunlin University of Science and Technology under grant 111-2221-E-143-002.

Conflicts of Interest

The authors declare no conflict of interest.

References

- L. T. Kohn, J. M. Corrigan, and M. S. Donaldson (Eds.), To Err is Human: Building a Safer Health System. Washington DC: National Academy of Sciences, 2000.

- https://www.patientsafety.mohw.gov.tw/Content/Downloads/List01.aspx?SiteID=1&MmmID=621273303702500244.

- H. Susanne, M.-G. Melinda, K. N. David, J. D. Aaron, M.-L. Isomi, M. B. Jessica, J. B. Marika, N. V. M. Jeremy, S. Roberta, and G. S. Paul, "Wrong-Site Surgery, Retained Surgical Items, and Surgical Fires : A Systematic Review of Surgical Never Events," JAMA Surgery, vol. 150, no. 8, pp. 796-805, 2015.

- A. P. Mark, J. B. Aaron, E. Sean, and H. D. Alan, "Wrong-site Spine Surgery," The Journal of the American Academy of Orthopaedic Surgeons, vol. 21, no. 5, pp. 312-320, 2013.

- O. Moshtaghi, Y. M. Haidar, R. Sahyouni, A. Moshtaghi, Y. Ghavami, H. W. Lin, and H. R. Djalilian, "Wrong-Site Surgery in California, 2007-2014," Otolaryngol Head Neck Surg., vol. 157, no. 1, pp. 48-52, 2017. [CrossRef]

- C. Nunes and F. Pádua, "A Convolutional Neural Network for Learning Local Feature Descriptors on Multispectral Images," IEEE Latin America Transactions, Vol. 20, Issue 2, pp. 215-222, 2022. [CrossRef]

- Y. Qi, Y. Guo, and Y. Wang, "Image Quality Enhancement Using a Deep Neural Network for Plane Wave Medical Ultrasound Imaging," IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, Vol. 68, Issue 4, pp. 926-934, 2021. [CrossRef]

- T. Hassanzadeh, D. Essam, and R. Sarker, "2D to 3D Evolutionary Deep Convolutional Neural Networks for Medical Image Segmentation," IEEE Transactions on Medical Imaging, Vol. 40, Issue 2, pp. 712-721, 2021. [CrossRef]

- Z. Duan, T. Zhang, J. Tan, and X. Luo "Non-Local Multi-Focus Image Fusion with Recurrent Neural Networks," IEEE Access, Vol. 8, pp. 135284-135295, 2020. [CrossRef]

- Y. Tian, "Artificial Intelligence Image Recognition Method Based on Convolutional Neural Network Algorithm," IEEE Access, Vol. 8, pp. 125731-125744, 2020. [CrossRef]

- Y. Tan, M. Liu, W. Chen, X. Wang, H. Peng, and Y. Wang, "DeepBranch: Deep Neural Networks for Branch Point Detection in Biomedical Images," IEEE Transactions on Medical Imaging, Vol. 39, Issue 4, pp. 1195-1205, 2020. [CrossRef]

- A. Waseem, M. David, and G. Andrea, "Limbs Detection and Tracking of Head-Fixed Mice for Behavioral Phenotyping Using Motion Tubes and Deep Learning," IEEE Access, vol. 8, pp. 37891-37901, 2020. [CrossRef]

- Y. Zhang, S. Li, J. Liu, Q. Fan, and Y. Zhou, "A Combined Low-Rank Matrix Completion and CDBN-LSTM Based Classification Model for Lower Limb Motion Function," IEEE Access, vol. 8, pp. 205436-205443, 2020. [CrossRef]

- S. Javeed, C. F. Dibble, J. K. Greenberg, J. K. Zhang, J. M. Khalifeh, Y. Park, T. J. Wilson, E. L Zager, A. H Faraji, M. A. Mahan, L. J. Yang, R. Midha, N. Juknis, and W. Z. Ray, "Upper Limb Nerve Transfer Surgery in Patients With Tetraplegia", JAMA Network Open, Vol. 5, Issue 11, pp. 1-14, 2022. [CrossRef]

- M. Li, J. Guo, R. Zhao, J.-N. Gao, M. Li, and L.-Y. Wang, "Sun-Burn Induced Upper Limb Lymphedema 11 Years Following Breast Cancer Surgery: A Case Report", World Journal of Clinical Cases, Vol. 10, Issue 32, pp. 7-12, 2022. [CrossRef]

- W. Shi, J. Dong, J.-F. Chen, and H. Yu, "A Meta-Analysis Showing the Quantitative Evidence Base of Perineural Nalbuphine for Wound Pain From Upper-Limb Orthopaedic Trauma Surgery", International Wound Journal, pp. 1-15, 2022. [CrossRef]

- K. Chmelová and M. Nováčková, "Effect of manual lymphatic drainage on upper limb lymphedema after surgery for breast cancer", Ceska Gynaecology, Vol. 87, Issue 5, pp. 317-323, 2022.

- W. Johanna, R. Carina, and B.-K. Lina, "Linking Prioritized Occupational Performance in Patients Undergoing Spasticity-Correcting Upper Limb Surgery to the International Classification of Functioning, Disability, and Health", Occupational Therapy International, Vol. 2022, pp.1-11, 2022. [CrossRef]

- T. Ramström, C. Reinholdt, J. Wangdell, and J. Strömberg, "Functional Outcomes 6 years After Spasticity Correcting Surgery with Regimen-Specific Rehabilitation in the Upper Limb", Journal of Hand Surgery, Vol. 48, Issue 1, pp. 54-55, 2022. [CrossRef]

- Y. Duan, G.-L. Wang, X. Guo, L.-L. Yang, and F. G. Tian, "Acute Pulmonary Embolism Originating from Upper Limb Venous Thrombosis Following Breast Cancer Surgery: Two Case Reports", World Journal of Clinical Cases, Vol. 10, Issue 21, pp. 8-13, 2022. [CrossRef]

- H.-Z. Zhang, Q.-L. Zhong, H.-T. Zhang, Q.-H. Luo, H.-L. Tang, and L.-J. Zhang, "Effectiveness of Six-Step Complex Decongestive Therapy for Treating Upper Limb Lymphedema After Breast Cancer Surgery", World Journal of Clinical Cases, Vol. 10, Issue 25, pp. 7-16, 2022. [CrossRef]

- E. A. Stanley, B. Hill, D. P. McKenzie, P. Chapuis, M. P. Galea, and N. V. Zyl, "Predicting Strength Outcomes for Upper Limb Nerve Transfer Surgery in Tetraplegia", Journal of Hand Surgery, Vol.47, Issue 11, pp.1114-1120, 2022. [CrossRef]

- H. Alsajjan, N. Sidhoum, N. Assaf, C. Herlin, and R. Sinna, "The Contribution of the Late Dr. Musa Mateev to the Field of Upper Limb Surgery with the Shape-Modified Radial Forearm Flap", Annales de Chirurgie Plastique Esthétique, Vol. 67, Issue 4, pp.196-201, 2022. [CrossRef]

- T. Redemski, D. G. Hamilton, S. Schuler, R. Liang, and Z. A. Michaleff, "Rehabilitation for Women Undergoing Breast Cancer Surgery: A Systematic Review and Meta-Analysis of the Effectiveness of Early, Unrestricted Exercise Programs on Upper Limb Function", Clinical Breast Cancer, Vol. 22, Issue 7, pp. 650-665, 2022. [CrossRef]

- V. Meunier, O. Mares, Y. Gricourt, N. Simon, P. Kuoyoumdjian, and P. Cuvillon, "Patient Satisfaction After Distal Upper Limb Surgery Under WALANT Versus Axillary Block: A Propensity-Matched Comparative Cohort Study", Hand Surgery and Rehabilitation, Vol. 41, Issue 5, pp. 576-581, 2022. [CrossRef]

- Q. Luo, H. Liu, L. Deng, L. Nong, H. Li, Y. Cai, and J. Zheng, "Effects of Double vs Triple Injection on Block Dynamics for Ultrasound-Guided Intertruncal Approach to the Supraclavicular Brachial Plexus Block in Patients Undergoing Upper Limb Arteriovenous Access Surgery: Study Protocol for a Double-Blinded, Randomized Controlled Trial", Trials, Vol. 23, Issue 1, pp.295, 2022. [CrossRef]

- M. Manna, W. B. Mortenson, B. Kardeh, S. Douglas, C. Marks, E. M. Krauss, and M. J. Berger, "Patient Perspectives and Self-Rated Knowledge of Nerve Transfer Surgery for Restoring Upper Limb Function in Spinal Cord Injury", PM&R, Online Ahead of Print, 2022. [CrossRef]

- S. Beiranvand, M. Alvani, and M. M. Sorori, "The Effect of Ginger on Postoperative Nausea and Vomiting Among Patients Undergoing Upper and Lower Limb Surgery: A Randomized Controlled Trial", Journal of PeriAnesthesia Nursing, Vol. 37, Issue 3, pp.365-368, 2022. [CrossRef]

- Y. Gao, P. Dai, L. Shi, W. Chen, W. Bao, L. He, and Y. Tan, "Effects of Ultrasound-Guided Brachial Plexus Block Combined with Laryngeal Mask Sevoflurane General Anesthesia on Inflammation and Stress Response in Children Undergoing Upper Limb Fracture Surgery", Minerva Pediatrics, Vol. 74, Issue 3, pp.385-387, 2021.

- A. Rashidi, J. Grantner, I. Abdel-Qader, and S. A Shebrain, "Box-Trainer Assessment System with Real-Time Multi-Class Detection and Tracking of Laparoscopic Instruments, using CNN", Acta Polytechnica Hungarica, Vol. 19, Issue 2, pp.2022-2029, 2022. [CrossRef]

- X. Zhang and H. Wang, "Research on YOLOv4 Detection Algorithm Based on Lightweight Network", Computer Science and Application, Vol. 11, Issue 9, pp.2333-2341, 2021. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).