1. Introduction

Long ago, Leonhard Euler spoke about the optimal arrangement of everything in the world: "For since the fabric of the universe is most perfect and the work of a most wise Creator, nothing at all takes place in the universe in which some rule of maximum or minimum does not appear." Striving for optimality is natural in every sphere.

To move an autonomous robot optimally to a target position, engineers currently follow a standard procedure: they first solve the optimal control problem to obtain the optimal trajectory, and then solve the additional problem of moving the robot along that trajectory. In most cases, the following approach is used to move the robot along a path. Initially, the object is made stable relative to a certain point in the state space. Then the stability points are positioned along the desired path, and the object is moved along the trajectory by switching from one point to the next [1,2,3,4,5,6,7]. The existing methods differ in how they solve the control synthesis problem that ensures stability relative to an equilibrium point in the state space, and in where they place these stability points.

Often, to ensure stability, the model of the control object is linearized about a certain point in the state space. Then, for the linear model of the object, a linear feedback control is found that places the eigenvalues of the closed-loop system matrix in the left half of the complex plane. Sometimes, to improve the quality of stabilization, control channels are defined, that is, components of the control vector that affect the movement of the object along a specific coordinate axis of the state space. Then controllers, as a rule PI controllers, are inserted into these channels, and their coefficients are adjusted according to the specified control quality criterion [3,4]. In some cases, analytical or semi-analytical methods are used to solve the control synthesis problem and build nonlinear stable control systems [5,7]. However, the stability property that the nonlinear model of the control object obtains from its linearization is generally preserved only in the vicinity of the stable equilibrium point.
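As a minimal illustration of the eigenvalue condition described above, the sketch below uses a double integrator, which is an assumed toy model and not the control object of this paper. The gains `k1` and `k2` and the helper names are hypothetical. For this model the closed-loop matrix has the characteristic polynomial $s^2 + k_2 s + k_1$, and the linear feedback is stabilizing when both roots have negative real parts.

```python
import cmath


def closed_loop_eigenvalues(k1, k2):
    """Eigenvalues of A - B*K for the double integrator with K = [k1, k2].

    A = [[0, 1], [0, 0]] and B = [[0], [1]], so A - B*K = [[0, 1], [-k1, -k2]]
    and the characteristic polynomial is s^2 + k2*s + k1.
    """
    disc = cmath.sqrt(k2 * k2 - 4.0 * k1)
    return ((-k2 + disc) / 2.0, (-k2 - disc) / 2.0)


def is_stable(eigenvalues):
    """True when every eigenvalue lies in the open left half of the complex plane."""
    return all(ev.real < 0.0 for ev in eigenvalues)


# Hypothetical gains chosen only for the illustration.
eigs = closed_loop_eigenvalues(2.0, 3.0)
print(eigs, is_stable(eigs))
```

A negative `k1` moves one root into the right half-plane, so `is_stable` returns `False` for such gains.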

The main drawback of moving the control object along stable points on the trajectory is that, even if the trajectory is obtained by solving the optimal control problem [8], the movement itself will never be optimal. To ensure optimality, the object must move along the trajectory at a certain speed, but when it approaches a stable equilibrium point, its speed tends to zero.
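The slowdown near a stable equilibrium point can be seen on a very small example. The sketch below assumes a scalar object that has already been stabilized so that it obeys $\dot{x} = -k(x - x^*)$; this first-order model, the gain `k`, and the function name are assumptions made only for illustration. The recorded speed decays as the object approaches the point.

```python
def speeds_towards_point(x0, x_star, k=1.0, dt=0.01, steps=500):
    """Integrate dx/dt = -k * (x - x_star) with Euler steps and record |dx/dt|."""
    x = x0
    speeds = []
    for _ in range(steps):
        v = -k * (x - x_star)
        speeds.append(abs(v))
        x += v * dt
    return x, speeds


final_x, speeds = speeds_towards_point(0.0, 1.0)
print(final_x, speeds[0], speeds[-1])
```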

The optimal control problem generally does not require ensuring the stability of the control object. The construction of a stabilization system that ensures the stability of the object relative to the equilibrium point in the state space is carried out by the researcher to achieve predictable behavior of the control object in the vicinity of a given trajectory.

The optimal control problem in the classical formulation is solved for a control object without any stabilization system; therefore, the resulting optimal control and optimal trajectory will not be optimal for the same object once a stabilization system is introduced. It follows that the classical formulation of the optimal control problem [9] is missing something, because its solution cannot be implemented directly in a real object: it leads to open-loop control. An open-loop control system is very sensitive to small disturbances, which are always possible in real conditions, since no model describes the control object exactly. To achieve optimal control in a real object, it is necessary to build a feedback control system that provides some additional properties, for example stability relative to the trajectory or to points on this trajectory. The authors of [10,11] proposed an extended formulation of the optimal control problem that imposes additional requirements on the optimal trajectory: the optimal trajectory must have a non-empty neighborhood with the property of attraction. Meeting these requirements allows the solution of the optimal control problem to be implemented directly in the real control object.
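The sensitivity of open-loop control mentioned above can be illustrated on a scalar toy system. The sketch assumes the unstable plant $\dot{x} = x + u$, which is not the model used in this paper. The open-loop program $u(t) = -2e^{-t}$ and the feedback law $u = -2x$ produce the same nominal trajectory $x(t) = e^{-t}$ from $x(0) = 1$, but they react very differently to a 1% error in the initial state.

```python
import math


def simulate(x0, control, t_end=5.0, dt=0.001):
    """Euler integration of the assumed toy plant dx/dt = x + u."""
    x, t = x0, 0.0
    for _ in range(int(round(t_end / dt))):
        x += (x + control(t, x)) * dt
        t += dt
    return x


def open_loop(t, x):
    # Program control computed in advance for the nominal initial state x(0) = 1.
    return -2.0 * math.exp(-t)


def feedback(t, x):
    # Feedback control that yields the same nominal trajectory.
    return -2.0 * x


# Deviation of the final state caused by a perturbed initial state 1.01.
dev_open = abs(simulate(1.01, open_loop) - simulate(1.0, open_loop))
dev_closed = abs(simulate(1.01, feedback) - simulate(1.0, feedback))
print(dev_open, dev_closed)
```

In this toy case the open-loop deviation grows by roughly two orders of magnitude over five seconds, while the closed-loop deviation shrinks.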

In [12,13], an approach to solving the extended optimal control problem on the basis of synthesized control is presented. This approach yields a solution of the optimal control problem in the class of practically implementable control functions. According to this approach, the control synthesis problem is solved first, so that the control object becomes stable relative to some equilibrium point in the state space. In the second stage, the optimal control problem is solved by determining the optimal positions of the stable equilibrium point. Switching the stable points after a constant time interval moves the control object from the initial state to the terminal one optimally with respect to the given quality criterion. The optimal positions of the stable equilibrium points can be far from the optimal trajectory in the state space; therefore, the control object does not slow down. Studies of synthesized control in various optimal control problems have shown that such control is not sensitive to perturbations and can be implemented directly in a real object [14,15].

In synthesized control, the optimal control problem is solved for a control object that already includes a stabilization system. Another advantage of synthesized control is that the position of the stable point does not change within a time interval; that is, the optimal control function is sought in the class of piecewise constant functions, which simplifies the search for the optimal solution.
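A piecewise constant control of this kind can be represented simply as a list of stable-point positions and a switching interval. The following sketch is only an illustration; the function name, the two-dimensional points, and the interval value are hypothetical.

```python
def stable_point_at(t, points, interval):
    """Return the stable point that is active at time t.

    points:   positions of the stable equilibrium point, one per time interval
    interval: constant duration of each interval
    After the last interval the final point stays active.
    """
    index = min(int(t // interval), len(points) - 1)
    return points[index]


# Hypothetical positions of the stable point in a two-dimensional state space.
points = [(1.0, 0.5), (2.0, 2.0), (3.0, 1.0)]
print(stable_point_at(0.75, points, interval=0.5))
```

With this representation, the search space of the second stage is the finite set of coordinates in `points` rather than a space of time functions.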

It is possible that piecewise constant control in the synthesized approach yields several optimal solutions with practically the same values of the quality criterion. This circumstance prompted the idea of finding, among all nearly optimal solutions, the one that is least sensitive to perturbations. This approach is called adaptive synthesized control.

In this work, the principle of adaptive synthesized control is proposed in Section 2, methods for solving it are discussed in Section 3, and Section 4 presents a computational experiment in which the optimal control problem for the spatial motion of a quadcopter is solved by adaptive synthesized control.

2. Adaptive Synthesized Control

Consider the principle of adaptive synthesized control for solving the optimal control problem in its extended formulation [10].

Initially, the control synthesis problem is solved to make the control object stable relative to some point in the state space.

In this problem, the mathematical model of the control object is given in the form of a system of ordinary differential equations.

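$$\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x}, \mathbf{u}), \tag{1}$$

where $\mathbf{x}$ is a state vector, $\mathbf{x} \in \mathbb{R}^n$, $\mathbf{u}$ is a control vector, $\mathbf{u} \in \mathrm{U} \subseteq \mathbb{R}^m$, and $\mathrm{U}$ is a compact set that determines the restrictions on the control vector.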

The domain of admissible initial states is given

To solve the problem numerically, the initial domain (2) is taken in the form of a finite number of points in the state space

Sometimes it is convenient to set one initial state and deviations from it:

where

is a given initial state,

is a deviations vector,

, ⊙ is Hadamard product of vectors,

is a binary code of the number

j. In this case

.
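The idea of generating the initial states from one nominal state, a deviations vector, the Hadamard product, and the binary code of the index can be sketched as follows. The exact form of equation (4) should be taken from the paper; this sketch assumes that each bit of the binary code selects the sign of the corresponding deviation, which places the generated states at the corners of a box around the nominal state. All function names are hypothetical.

```python
def binary_code(j, n):
    """Binary code of the number j as a list of n bits, most significant bit first."""
    return [(j >> (n - 1 - i)) & 1 for i in range(n)]


def hadamard(a, b):
    """Element-wise (Hadamard) product of two vectors of equal length."""
    return [ai * bi for ai, bi in zip(a, b)]


def initial_states(x0, delta):
    """Assumed rule: bit 0 subtracts the deviation, bit 1 adds it."""
    n = len(x0)
    states = []
    for j in range(2 ** n):
        signs = [2 * bit - 1 for bit in binary_code(j, n)]
        shift = hadamard(signs, delta)
        states.append([xi + si for xi, si in zip(x0, shift)])
    return states


states = initial_states([0.0, 0.0], [0.5, 0.1])
print(states)
```

Under this assumption, a state vector of dimension n yields 2^n initial states.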

The stabilization point as a terminal state is given

It is necessary to find a control function in the form

where

, such that minimizes the quality criterion

where

is the time of reaching the terminal state (5) from the initial state

,

is determined by an equation

is a particular solution from initial state

,

, of the differential equation (1) with the control function (6) substituted into it,

is a given accuracy of reaching the terminal state (5),

is a given maximal time for control process,

p is a weight coefficient.
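The roles of the accuracy, the maximal time, and the weight coefficient can be sketched on a sampled trajectory. The exact forms of (7) and (8) should be taken from the paper; the sketch below assumes that the terminal time is the first moment at which the state is within the given accuracy of the terminal state, and the maximal time otherwise, and that one term of the criterion is this time plus the weighted terminal miss. The names `terminal_time` and `criterion_term` are hypothetical.

```python
import math


def terminal_time(samples, x_f, eps, t_max):
    """First sampled time at which the state is within eps of x_f, else t_max.

    samples: list of (t, x) pairs ordered by time, x being a sequence of floats.
    """
    for t, x in samples:
        if t >= t_max:
            break
        if math.dist(x, x_f) <= eps:
            return t
    return t_max


def criterion_term(samples, x_f, eps, t_max, p):
    """Assumed form of one term: time to target plus weighted terminal miss."""
    t_f = terminal_time(samples, x_f, eps, t_max)
    # State at t_f: the last sample whose time does not exceed t_f.
    x_end = [x for t, x in samples if t <= t_f][-1]
    return t_f + p * math.dist(x_end, x_f)


# Hypothetical trajectory: the first coordinate decreases linearly from 1 to 0.
samples = [(i / 10, (1.0 - i / 10, 0.0)) for i in range(11)]
t_f = terminal_time(samples, (0.0, 0.0), eps=0.25, t_max=2.0)
print(t_f, criterion_term(samples, (0.0, 0.0), 0.25, 2.0, 1.0))
```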

Next, according to the principle of synthesized optimal control, the following optimal control problem is considered. The model of the control object in the form (9) is used

where the terminal state vector (5) is replaced by the new unknown vector

, that will be a control vector in the considered optimal control problem.

In accordance with the classical formulation of the optimal control problem, the initial state of the object (10) is given

Since in engineering practice there can be some deviations in the initial position, adaptive synthesized control uses, instead of the single initial state (11), the set of initial states defined by equation (4). The vector of initial deviations

is defined as a level of disturbances.

The goal of control is defined by achievement of the terminal state

The quality criterion is given

where

is a terminal time,

is not given but is limited,

,

is a given limit time of control process.

According to the principle of synthesized control, it is necessary to choose time interval

and to search for optimal constant values of the control vector

for each interval

where

M is a number of intervals

such that the particular solution of the system (10) from the given initial state (11) reaches the terminal state with the optimal value of the quality criterion (13).

Algorithmically, in the second stage of the adaptive synthesized control approach, the optimal values of the vector

are found by solving an optimization problem with the following quality criterion, which takes into account the given grid of initial conditions

where

K is the number of initial states,

is determined by equation (8).
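The second-stage criterion, summed over a grid of initial states, can be sketched on a toy example. Everything specific here is an assumption made for illustration: the stabilized object is the scalar system $\dot{x} = x^* - x$, the criterion term is the time to reach the terminal state plus a weighted terminal miss, and the names `simulate_time_to_target` and `adaptive_criterion` are hypothetical.

```python
def simulate_time_to_target(x0, points, interval, x_f, eps, t_max, dt=0.001):
    """Toy stabilized object dx/dt = x_star - x with piecewise constant x_star.

    Returns the time at which |x_f - x| <= eps is first satisfied (or t_max)
    together with the state at that time.
    """
    x, t = x0, 0.0
    while t < t_max:
        if abs(x_f - x) <= eps:
            return t, x
        index = min(int(t // interval), len(points) - 1)
        x += (points[index] - x) * dt
        t += dt
    return t_max, x


def adaptive_criterion(points, initial_states, interval, x_f, eps, t_max, p):
    """Sum of the assumed criterion terms over all initial states of the grid."""
    total = 0.0
    for x0 in initial_states:
        t_f, x_end = simulate_time_to_target(x0, points, interval, x_f, eps, t_max)
        total += t_f + p * abs(x_f - x_end)
    return total


# Nominal initial state 0 with deviations of 0.1 on both sides; terminal state 1.
initial_states = [-0.1, 0.0, 0.1]
j_on_target = adaptive_criterion([1.0, 1.0, 1.0], initial_states, 1.0, 1.0, 0.01, 5.0, 1.0)
j_far = adaptive_criterion([2.0, 1.0, 1.0], initial_states, 1.0, 1.0, 0.01, 5.0, 1.0)
print(j_on_target, j_far)
```

In this toy case, placing the first stable point beyond the terminal state gives a much smaller criterion value than placing it on the terminal state, because the object does not slow down before arriving.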

3. Methods of Solving

As described in the previous section, the approach based on the principle of adaptive synthesized optimal control consists of two stages.

To implement the first stage of the approach, that is, to solve the control synthesis problem (1)–(9), any known method can be used, depending mostly on the type of model used to describe the control object. In practice, when developing a stabilization system, engineers do not use the initial performance criterion (7), since the main goal is to ensure stability. To solve the synthesis problem and obtain an equilibrium point, modal control methods [16] can be applied for linear systems, as well as other analytical methods such as backstepping [17] or synthesis based on a Lyapunov function [18]. In practice, stability is usually ensured by linearizing the model (1) at the terminal state and placing PI or PID controllers in the control channels [19,20]. A mathematical formulation of the stabilization problem as a control synthesis problem is needed to apply numerical methods and obtain a feedback control function automatically. Today, modern numerical machine learning methods can be applied to solve the synthesis problem for nonlinear dynamic objects of varying complexity [21]. In the present paper, machine learning by symbolic regression [22] is used.

Symbolic regression makes it possible to find a mathematical expression for the desired function in the form of a special code. Symbolic regression methods differ in the form of this code. The search for solutions is performed in the space of codes by a special genetic algorithm.

Let us demonstrate the main features of symbolic regression using the network operator method (NOP), which was used in the computational experiment in this work. To encode a mathematical expression, NOP uses an alphabet of elementary functions:

- functions without arguments, or parameters and variables of the mathematical expression;

- functions with one argument;

- functions with two arguments.

Each elementary function is coded by two digits: the first is the number of arguments, and the second is the function number in the corresponding set. These digits are written as indices of the elements in the sets of the alphabet (17)–(19). The set of functions with one argument must include the identity function

. Functions with two arguments should be commutative, associative and have a unit element.

NOP encodes a mathematical expression in the form of a directed graph. Source nodes of the NOP-graph are associated with functions without arguments, and the other nodes are associated with functions with two arguments. Arcs of the NOP-graph are associated with functions with one argument. If a node of the NOP-graph has only one input arc, then the second argument is the unit element of the function with two arguments associated with this node.

Let us define the following alphabet of elementary functions:

With this alphabet the following mathematical expressions can be encoded in the form of NOP:

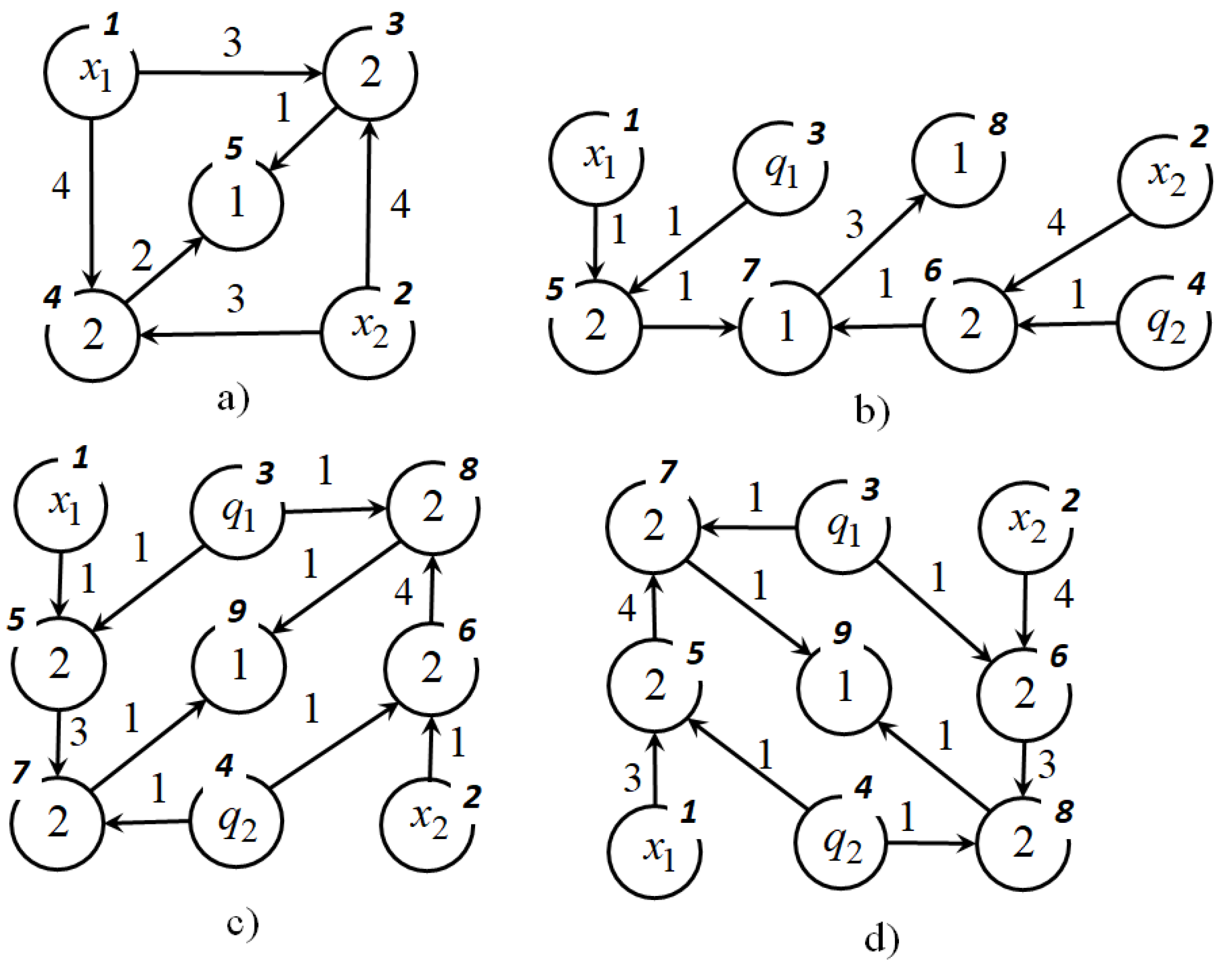

The NOP-graphs of these mathematical expressions are presented in Figure 1. In computer memory, the NOP-graphs are represented as integer matrices.

Since the NOP nodes are numbered so that the number of the node an arc leaves is less than the number of the node it enters, the NOP matrix is upper triangular. Every row of the matrix corresponds to a node of the graph. Rows with zeros on the main diagonal correspond to source nodes of the graph. The other elements on the main diagonal are the numbers of functions with two arguments. Nonzero elements above the main diagonal are the numbers of functions with one argument.

NOP matrices for the mathematical expressions (21) have the following forms

To calculate a mathematical expression from its NOP matrix, the vector of nodes is determined first. The number of components of the vector of nodes equals the number of nodes in the graph. The initial vector of nodes contains variables and parameters in the positions that correspond to source nodes, and the unit elements of the corresponding functions with two arguments in the other positions. Then every row of the matrix is checked. If an element of the matrix is not equal to zero, the corresponding element of the vector of nodes is changed. To calculate the mathematical expression from the NOP matrix, the following equation is used

where

is a unit element for function with two arguments

,

Consider an example of calculating the second mathematical expression in (21) from its NOP matrix

.

The initial vector of nodes is

Then all rows of the matrix

are checked, and the nonzero elements are found.

The last mathematical expression coincides with the required mathematical expression for

(21).
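The evaluation procedure described above can be sketched in a few lines. The alphabet below is hypothetical and is not the alphabet (20) of this paper, and the update rule is an assumption based on the verbal description: for every nonzero element above the diagonal in row i and column j, node j is combined, through its function with two arguments, with the one-argument function of node i. Indices are zero-based, unlike the numbering in the text.

```python
import math

# Hypothetical alphabet, chosen only for this sketch.
UNARY = {1: lambda z: z, 2: lambda z: -z, 3: math.sin, 4: lambda z: z * z}
# Each two-argument function is stored together with its unit element.
BINARY = {1: (lambda a, b: a + b, 0.0), 2: (lambda a, b: a * b, 1.0)}


def evaluate_nop(matrix, sources):
    """Evaluate a NOP matrix; sources maps source-node index to its value."""
    size = len(matrix)
    # Initial vector of nodes: source values or unit elements.
    z = []
    for i in range(size):
        if matrix[i][i] == 0:
            z.append(sources[i])
        else:
            z.append(BINARY[matrix[i][i]][1])
    # Check the rows in order and update the nodes the arcs enter.
    for i in range(size - 1):
        for j in range(i + 1, size):
            if matrix[i][j] != 0:
                combine = BINARY[matrix[j][j]][0]
                z[j] = combine(z[j], UNARY[matrix[i][j]](z[i]))
    return z[-1]


# Nodes: 0 is x1, 1 is x2, 2 is a product node, 3 is a sum node (output).
# Encoded expression: y = x1 * sin(x2) + (-x1).
matrix = [
    [0, 0, 1, 2],
    [0, 0, 3, 0],
    [0, 0, 2, 1],
    [0, 0, 0, 1],
]
value = evaluate_nop(matrix, {0: 2.0, 1: 0.5})
print(value)
```

Because the matrix is upper triangular, every node has received all of its inputs by the time its own row is processed.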

Thus, we have considered the encoding used in the NOP method. To search for an optimal mathematical expression in some task, the NOP method applies the principle of small variations of a basic solution. According to this principle, one possible solution is encoded in the form of the NOP matrix

. This solution is the basic solution, and it is set by the researcher as some good solution. The other possible solutions are represented as sets of small variation vectors. A small variation vector consists of four integers

where

is the type of small variation,

is a row number of the NOP-matrix,

is a column number of the NOP-matrix,

is a new value of a NOP-matrix element. There are four types of small variations:

is an exchange of the function with one argument: if

, then

;

is an exchange of the function with two arguments: if

, then

;

is an insertion of the additional function with one argument: if

, then

;

is an elimination of the function with one argument: if

and

,

,

and

,

, then

.

The initial population includes H possible solutions. Each possible solution

except the basic solution is encoded in the form of the set of small variation vectors

where d is the depth of variations, which is set as a parameter of the algorithm.

The NOP-matrix of a possible solution is obtained by applying all of its small variations to the basic solution

where the small variation vector is written as a mathematical operator that changes the matrix

.
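Applying a set of small variation vectors to a basic NOP-matrix can be sketched as follows. The conditions of the four variation types should be taken from the paper; here they are assumptions inferred from the verbal description: an existing arc or node function may be replaced, a new arc may be inserted only where there is none, and an arc may be removed only if both of its nodes keep another connection. The type codes 0 to 3, the zero-based indices, and the extra guard against arcs entering source nodes are choices made for this sketch.

```python
def apply_variation(matrix, w):
    """Return a copy of the matrix changed by one small variation vector w."""
    kind, row, col, value = w
    m = [r[:] for r in matrix]
    size = len(m)
    if kind == 0:
        # Replace the one-argument function on an existing arc.
        if row < col and m[row][col] != 0:
            m[row][col] = value
    elif kind == 1:
        # Replace the two-argument function of a non-source node.
        if m[row][row] != 0:
            m[row][row] = value
    elif kind == 2:
        # Insert a new arc; the guard on m[col][col] is an added assumption
        # that keeps arcs from entering source nodes.
        if row < col and m[row][col] == 0 and m[col][col] != 0:
            m[row][col] = value
    elif kind == 3:
        # Remove an arc only if both of its nodes keep another connection.
        if row < col and m[row][col] != 0:
            other_out = any(m[row][j] != 0 for j in range(row + 1, size) if j != col)
            other_in = any(m[i][col] != 0 for i in range(col) if i != row)
            if other_out and other_in:
                m[row][col] = 0
    return m


def apply_variations(basic, variations):
    """Apply the variation vectors one after another to the basic matrix."""
    m = basic
    for w in variations:
        m = apply_variation(m, w)
    return m


# Hypothetical basic solution and set of variations of depth 3.
basic = [[0, 0, 1, 2], [0, 0, 3, 0], [0, 0, 2, 1], [0, 0, 0, 1]]
variations = [(0, 1, 2, 4), (2, 1, 3, 1), (3, 0, 3, 0)]
result = apply_variations(basic, variations)
print(result)
```

A variation whose condition is not satisfied leaves the matrix unchanged, so every set of variation vectors maps to some valid matrix.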

During the search, the basic solution is sometimes replaced by the current best possible solution. This process is called a change of epoch.

Consider an example of applying small variations to the NOP-matrix

. Let

and let there be the following three small variation vectors

After application of these small variation vectors to the NOP-matrix

the following NOP-matrix is obtained

This NOP-matrix corresponds to the following mathematical expression

A genetic algorithm is used as the search engine for the optimal solution. To perform a crossover operation, two possible solutions are selected randomly

A crossover point is selected randomly

. Two new possible solutions are obtained by exchanging the elements of the selected possible solutions after the crossover point:
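The exchange after the crossover point can be sketched as a one-point crossover on two lists of small variation vectors. The function name and the example parents are hypothetical, and the sketch assumes that the crossover point is chosen so that both parts of each parent are non-empty, which requires a depth of at least two.

```python
import random


def crossover(parent_a, parent_b, rng=random):
    """One-point crossover of two equally long sets of small variation vectors."""
    depth = len(parent_a)
    # Assumption: the point is drawn so that both parts are non-empty.
    point = rng.randrange(1, depth)
    child_1 = parent_a[:point] + parent_b[point:]
    child_2 = parent_b[:point] + parent_a[point:]
    return child_1, child_2, point


# Hypothetical parents, each a set of four small variation vectors.
parent_a = [(0, 0, 1, 2), (1, 2, 2, 1), (2, 0, 3, 3), (3, 1, 2, 0)]
parent_b = [(2, 1, 3, 4), (0, 0, 2, 3), (1, 3, 3, 2), (0, 1, 2, 1)]
child_1, child_2, point = crossover(parent_a, parent_b, random.Random(0))
print(point, child_1, child_2)
```

Because each child is again a list of variation vectors of the same depth, it can be decoded by applying its vectors to the basic solution.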

The second stage of the synthesized principle under consideration is to solve the optimal control problem by determining the optimal positions of the equilibrium points. Studies have shown [23] that evolutionary algorithms can cope with complex optimal control problems with phase constraints. Good results were demonstrated [24] by such algorithms as the genetic algorithm (GA) [25], the particle swarm optimization (PSO) algorithm [26], the grey wolf optimizer (GWO) algorithm [27], and a hybrid algorithm [28] that involves one population of possible solutions and all three evolutionary transformations of GA, PSO, and GWO, selected randomly.