Submitted: 05 September 2023

Posted: 07 September 2023


Abstract

Keywords:

1. Introduction

1.1. Related Works

1.2. Motivation and Key Contributions

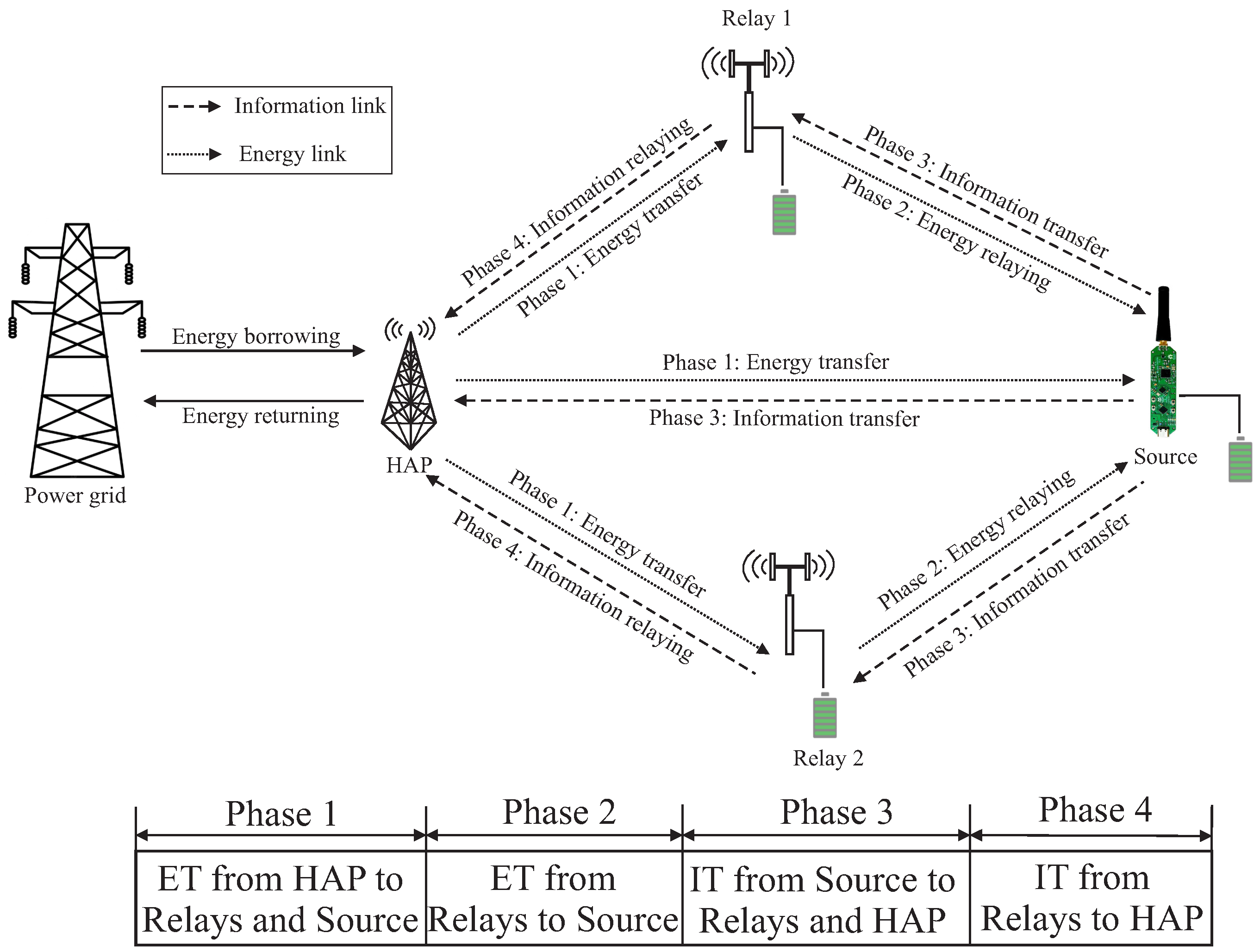

- Considering a novel smart energy borrowing and relaying-enabled EH communication scenario, we investigate the end-to-end net bit rate maximization problem in a WPCN. We jointly optimize the transmit powers of the HAP and the IS, the fractions of harvested energy transmitted by the relays, and the relay selection indicators for DF relaying within the operational time.

- As the originally formulated problem is non-convex and combinatorial, we decompose it into multi-period decision-making steps using an MDP. Specifically, we propose a nontrivial transformation in which the state represents the current onboard battery energy level and the instantaneous channel gains, while the corresponding action indicates the transmit power allocations. Since the HAP selects the relay with the maximum achievable signal-to-noise ratio (SNR) among all the relays for receiving the information, the instantaneous transmission rate attained by the HAP is treated as the immediate reward.

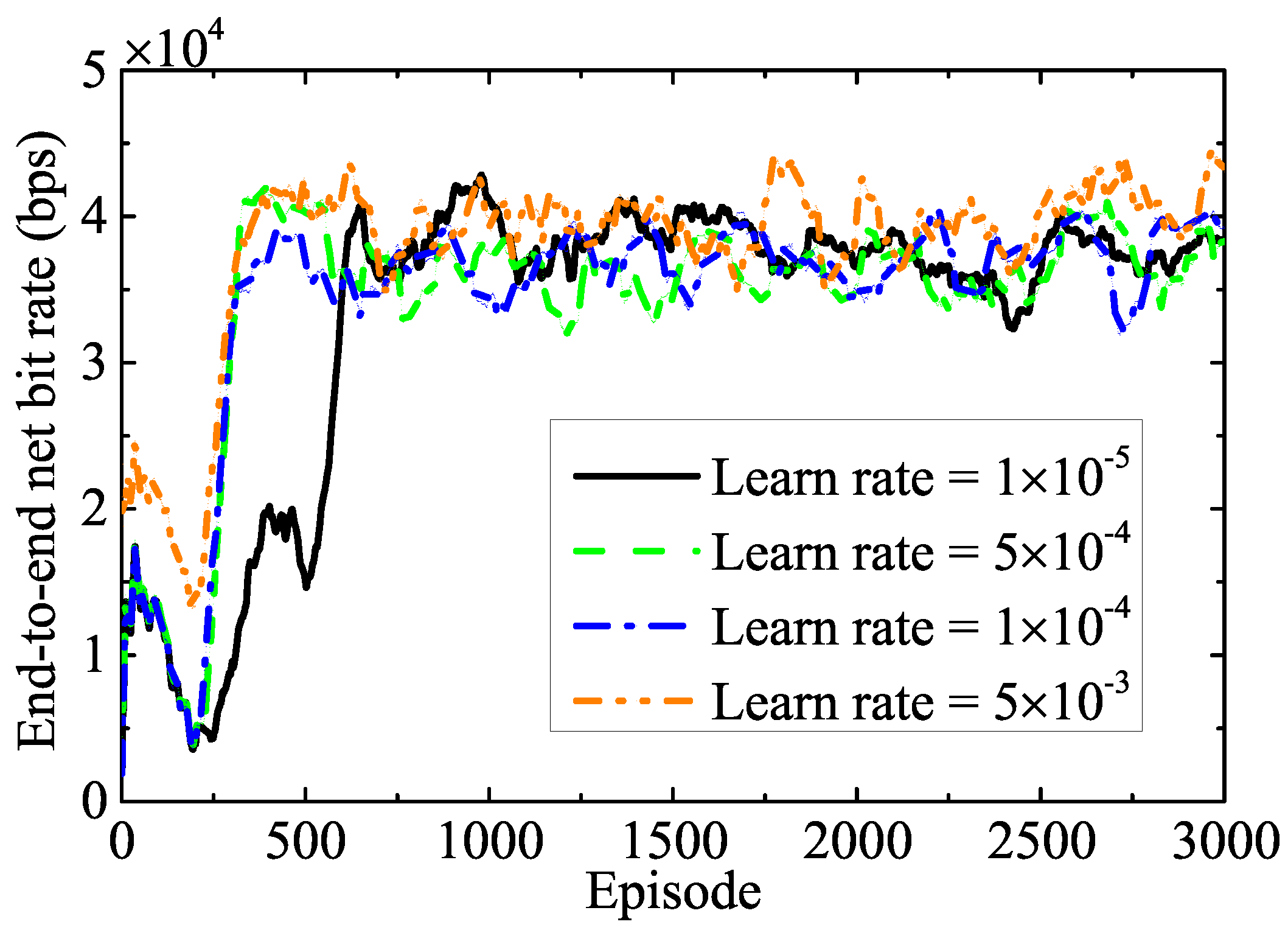

- The initial joint optimization problem is analytically intractable for traditional convex optimization techniques under the realistic parameter settings of complex communication environments. We therefore propose a DRL framework that uses the DDPG algorithm to train the DNN model, enabling the system to discover the best policy in an unfamiliar communication environment. The proposed approach determines the current policy using the Q-value for all state-action pairs. Additionally, we examine the convergence and complexity of the proposed algorithm to improve the learning process.

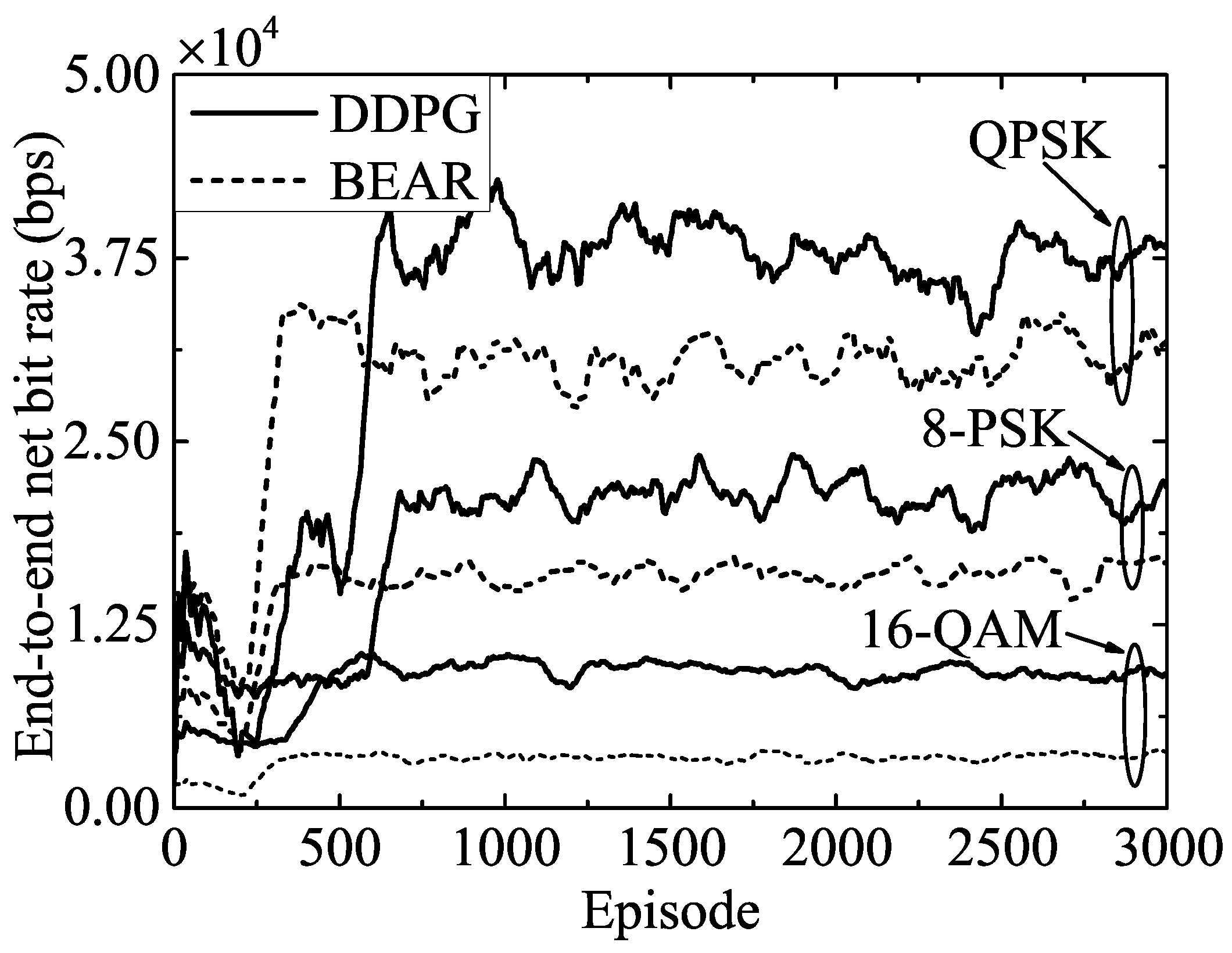

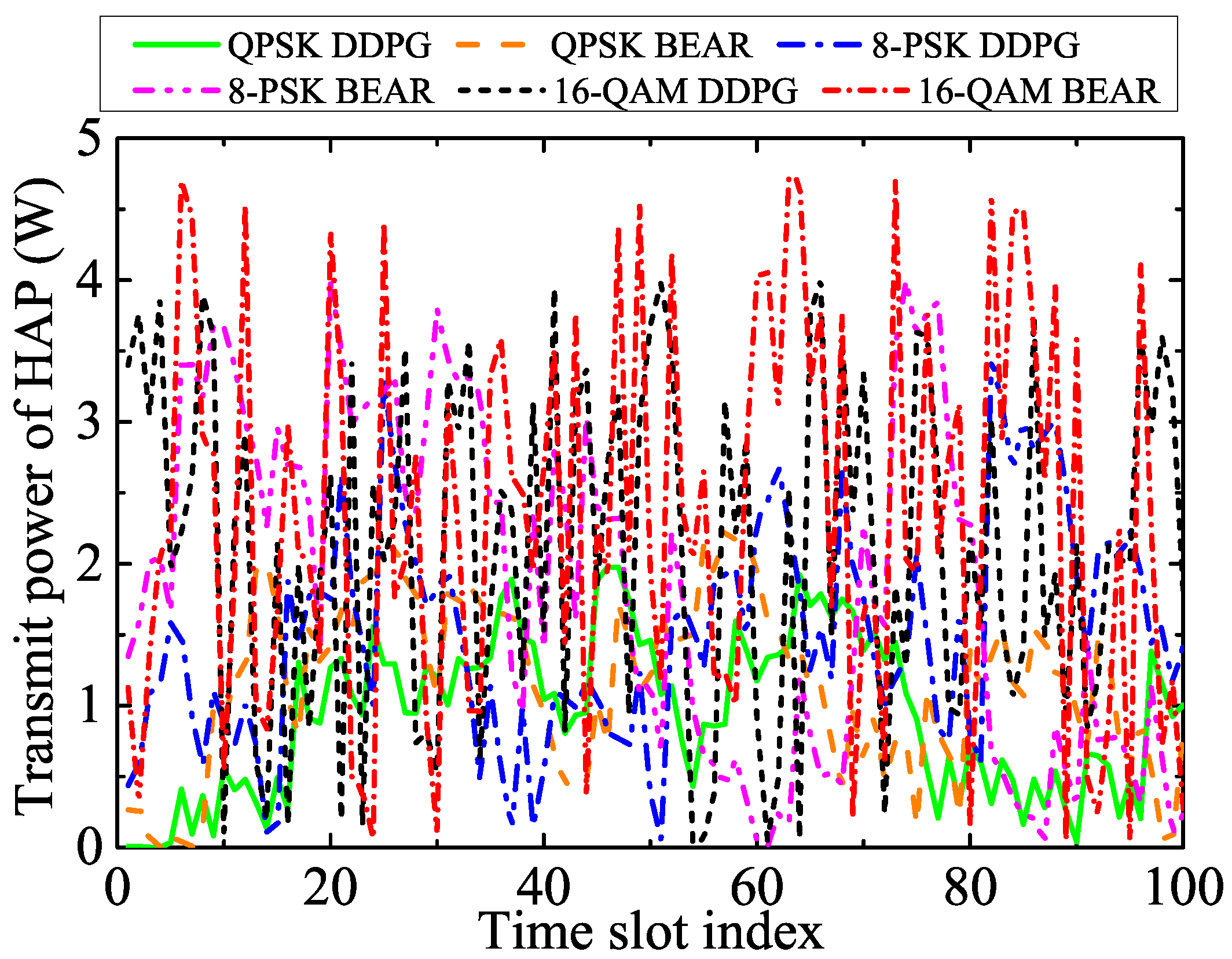

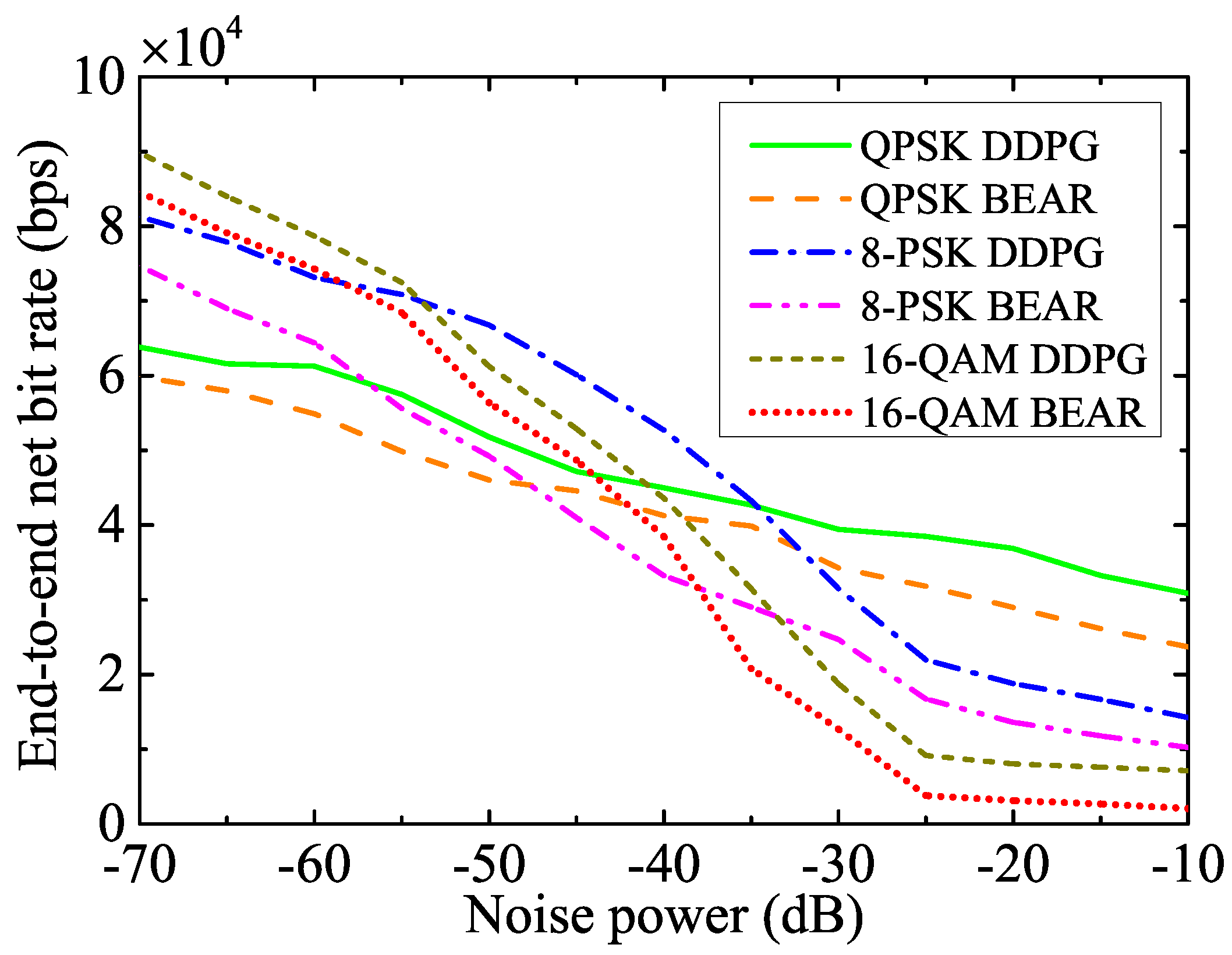

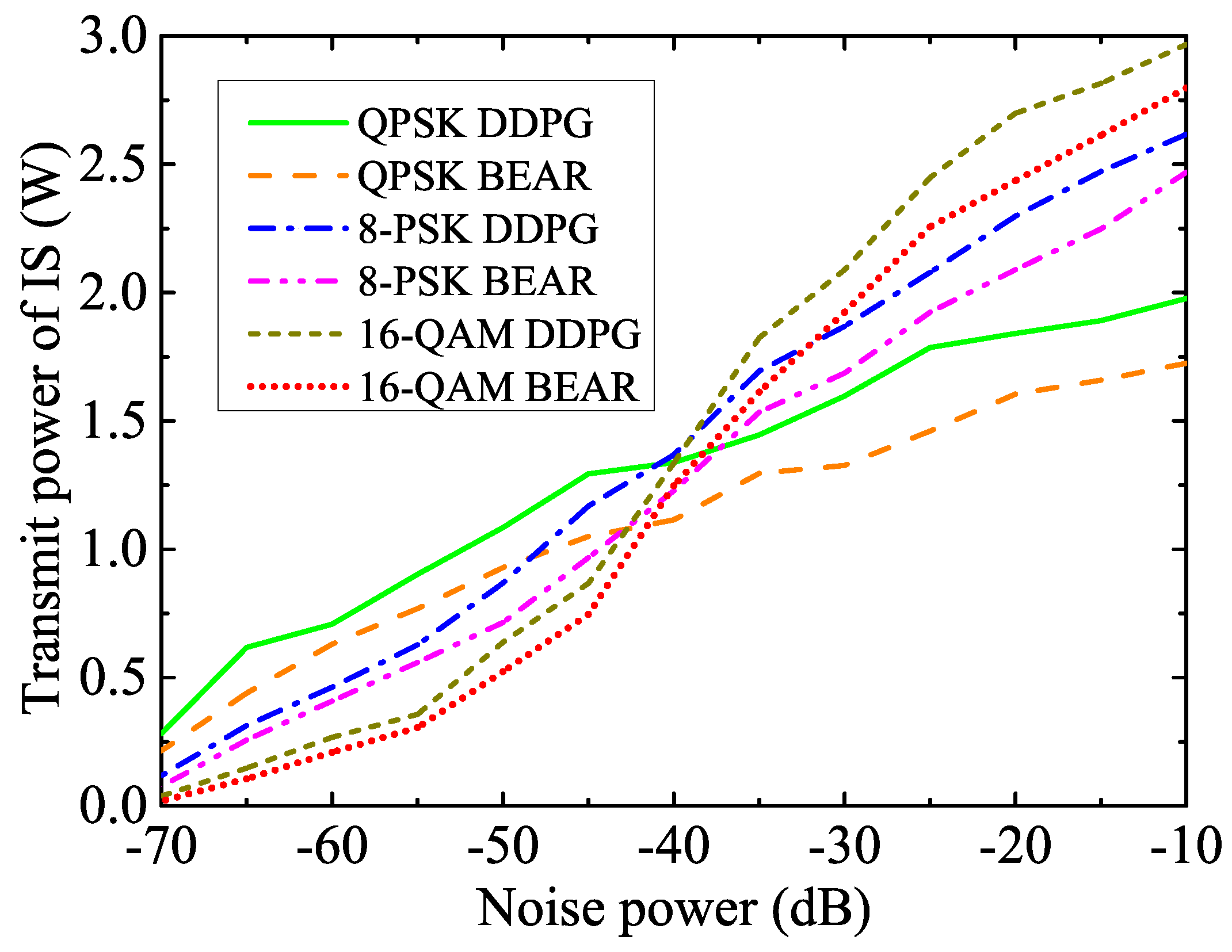

- Extensive simulation results validate our analysis and offer insights into the impact of key system parameters on optimal decision-making. We also compare the performance of several modulation schemes, namely QPSK, 8-PSK, and 16-QAM, under the same algorithm. The proposed resource allocation technique significantly improves the net bit rate of the system compared to the BEAR-based benchmark algorithm.
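The MDP ingredients listed in the contributions (state, action, relay selection, and immediate reward) can be illustrated with a minimal Python sketch. It is not the authors' implementation: the class and function names, the `noise_power` and `bandwidth` parameters, and the use of the Shannon rate in place of the paper's net bit rate expression are all assumptions made for illustration. The end-to-end SNR of a DF link is modeled as the minimum of the two hop SNRs, which is the standard DF bottleneck assumption.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class State:
    """Hypothetical state: battery level plus instantaneous channel gains."""
    battery_energy: float            # current onboard battery energy level (J)
    source_relay_gains: List[float]  # channel gain, IS -> relay i
    relay_hap_gains: List[float]     # channel gain, relay i -> HAP


@dataclass
class Action:
    """Hypothetical action: transmit power allocations."""
    source_power: float        # transmit power of the IS (W)
    relay_powers: List[float]  # transmit power of each relay (W)


def select_relay(state: State, action: Action, noise_power: float) -> Tuple[int, float]:
    """Return (index, end-to-end SNR) of the relay with the highest DF SNR."""
    snrs = []
    for i, (g_sr, g_rh) in enumerate(
        zip(state.source_relay_gains, state.relay_hap_gains)
    ):
        snr_first_hop = action.source_power * g_sr / noise_power
        snr_second_hop = action.relay_powers[i] * g_rh / noise_power
        # Assumption: a DF link is limited by its weaker hop.
        snrs.append(min(snr_first_hop, snr_second_hop))
    best = max(range(len(snrs)), key=snrs.__getitem__)
    return best, snrs[best]


def immediate_reward(state: State, action: Action,
                     noise_power: float = 1e-9, bandwidth: float = 1.0) -> float:
    """Instantaneous rate at the HAP via the selected relay (Shannon rate as a stand-in)."""
    _, snr = select_relay(state, action, noise_power)
    return bandwidth * math.log2(1.0 + snr)
```

For example, with two relays whose hop SNRs are (1000, 1500) and (2000, 500), the sketch selects the first relay, because its weaker hop (1000) exceeds the second relay's weaker hop (500).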

2. System Model

2.1. JIER Protocol

2.2. Energy Scheduling

2.2.1. Energy Borrowing

2.2.2. Energy Returning

2.3. RF Energy Harvesting

3. Problem Definition

3.1. DF Relay Assisted Information Transfer

3.2. Optimization Formulation

4. Proposed Solution Methodology

4.1. MDP-Based DRL Framework

4.1.1. State Space

4.1.2. Action Space

4.1.3. Reward Evaluation

4.1.4. State Transition

4.2. Decision-Making Policy

4.3. DRL using DDPG Algorithm

4.4. Implementation Details

Algorithm 1: DRL based on DDPG for end-to-end net bit rate maximization
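As a generic illustration of the two update rules that any DDPG-based training loop relies on, the following minimal Python sketch shows the critic's Bellman target and the soft (Polyak) update of the target networks. It is a sketch under stated assumptions, not the authors' Algorithm 1: the function names, the default values of `gamma` and `tau`, and the representation of network weights as flat lists of floats are all hypothetical.

```python
from typing import List, Sequence


def critic_target(reward: float, next_q: float,
                  gamma: float = 0.99, done: bool = False) -> float:
    """Bellman target y = r + gamma * Q'(s', mu'(s')) used to train the critic.

    `next_q` is the target critic's value for the next state and the
    target actor's action; terminal transitions use the reward alone.
    """
    return reward if done else reward + gamma * next_q


def soft_update(target: List[float], online: Sequence[float],
                tau: float = 0.005) -> None:
    """In-place Polyak averaging: theta' <- tau * theta + (1 - tau) * theta'."""
    for i, w in enumerate(online):
        target[i] = tau * w + (1.0 - tau) * target[i]
```

A small `tau` makes the target networks track the online networks slowly, which is the usual way DDPG stabilizes the critic's regression targets.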

5. Simulation Results

5.1. Convergence Analysis

5.2. Performance Evaluation

6. Conclusion


| Modulation | |
|---|---|
| QPSK | |
| 8-PSK | |
| 16-QAM | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).