Submitted: 07 September 2023

Posted: 08 September 2023


Abstract

Keywords:

1. Introduction

2. Methods

2.1. Participants

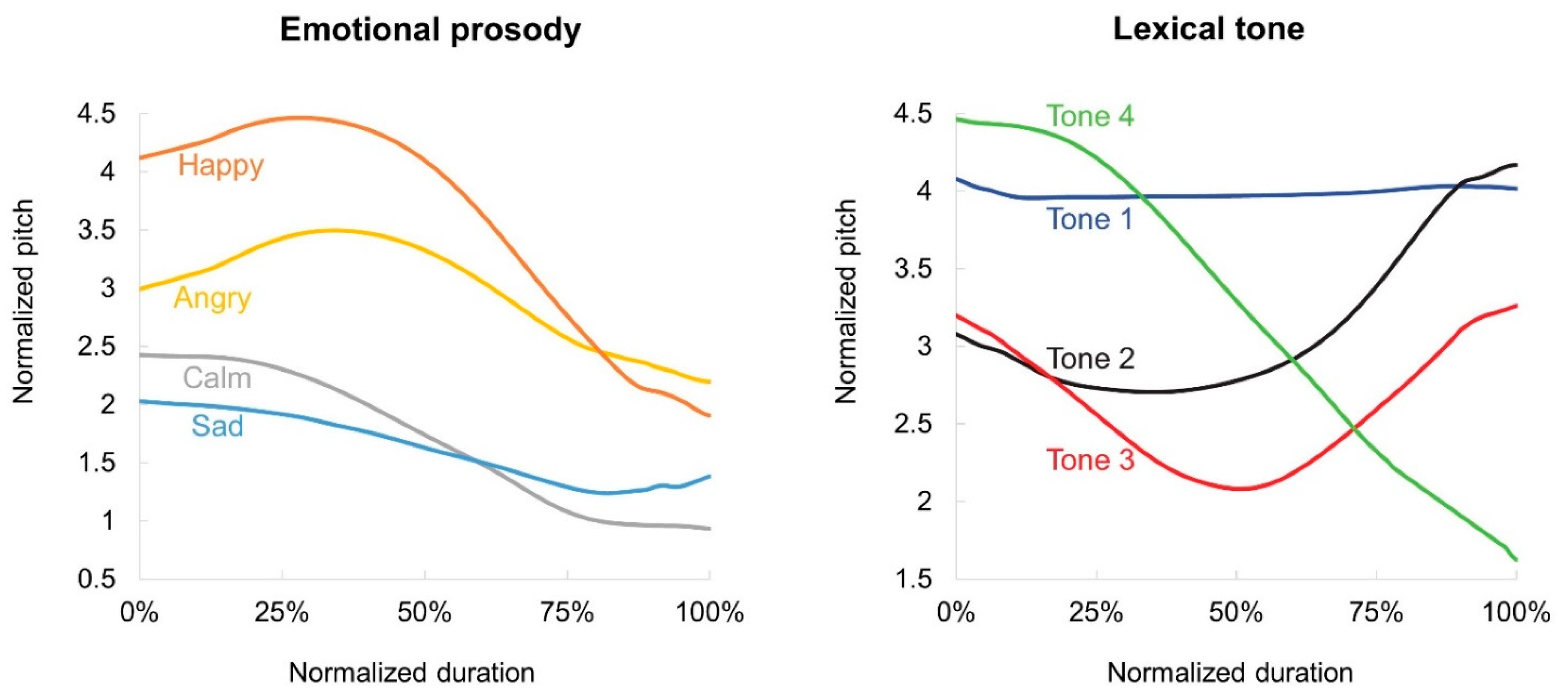

2.2. Stimuli

2.3. Procedure

2.4. Statistical Analyses

3. Results

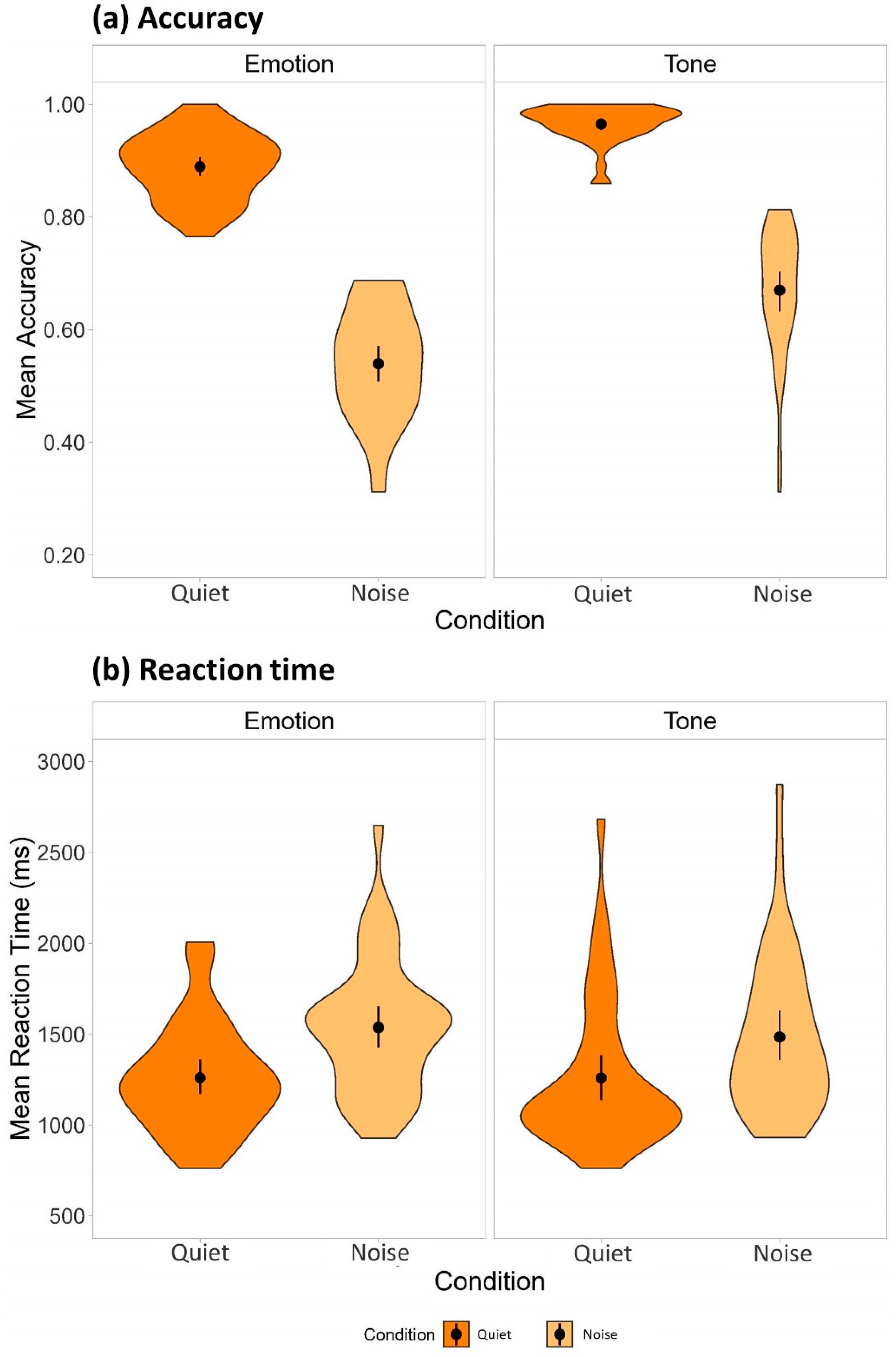

3.1. Accuracy

3.2. Reaction time

4. Discussion

5. Conclusion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Fairbanks, G.; Pronovost, W. Vocal Pitch During Simulated Emotion. Science 1938, 88, 382-383. [CrossRef]

- Monrad-Krohn, G.H. The Prosodic Quality of Speech and Its Disorders: (a Brief Survey from a Neurologist's Point of View). Acta Psychiatr. Scand. 1947, 22, 255-269. [CrossRef]

- Cutler, A.; Pearson, M. On the Analysis of Prosodic Turn-Taking Cues. In Intonation in Discourse, Johns-Lewis, C., Ed.; Routledge: Abingdon, UK, 2018; pp. 139–155.

- Belyk, M.; Brown, S. Perception of Affective and Linguistic Prosody: An ALE Meta-Analysis of Neuroimaging Studies. Soc. Cogn. Affect. Neurosci. 2014, 9, 1395-1403. [CrossRef]

- Ding, H.; Zhang, Y. Speech Prosody in Mental Disorders. Annu. Rev. Linguist. 2023, 9. [CrossRef]

- Fromkin, V.A.; Curtiss, S.; Hayes, B.P.; Hyams, N.; Keating, P.A.; Koopman, H.; Steriade, D. Linguistics: An Introduction to Linguistic Theory; Blackwell: Oxford, UK, 2000.

- Liang, B.; Du, Y. The Functional Neuroanatomy of Lexical Tone Perception: An Activation Likelihood Estimation Meta-Analysis. Front. Neurosci. 2018, 12, 495. [CrossRef]

- Gandour, J.T. Neural Substrates Underlying the Perception of Linguistic Prosody. In Tones and Tunes, Volume 2: Experimental Studies in Word and Sentence Prosody, Gussenhoven, C., Riad, T., Eds.; De Gruyter Mouton: Berlin, New York, 2009; pp. 3-26.

- Massaro, D.W.; Cohen, M.M.; Tseng, C.-y. The Evaluation and Integration of Pitch Height and Pitch Contour in Lexical Tone Perception in Mandarin Chinese. J. Chin. Linguist. 1985, 13, 267-289.

- Xu, Y. Contextual Tonal Variations in Mandarin. J. Phon. 1997, 25, 61-83. [CrossRef]

- Jongman, A.; Wang, Y.; Moore, C.B.; Sereno, J.A. Perception and Production of Mandarin Chinese Tones. In The Handbook of East Asian Psycholinguistics: Volume 1: Chinese, Bates, E., Tan, L.H., Tzeng, O.J.L., Li, P., Eds.; Cambridge University Press: Cambridge, 2006; Volume 1, pp. 209-217.

- Moore, C.B.; Jongman, A. Speaker Normalization in the Perception of Mandarin Chinese Tones. J. Acoust. Soc. Am. 1997, 102, 1864-1877. [CrossRef]

- Yu, K.; Zhou, Y.; Li, L.; Su, J.a.; Wang, R.; Li, P. The Interaction between Phonological Information and Pitch Type at Pre-Attentive Stage: An ERP Study of Lexical Tones. Lang. Cogn. Neurosci. 2017, 32, 1164-1175. [CrossRef]

- Tseng, C.-y.; Massaro, D.W.; Cohen, M.M. Lexical Tone Perception in Mandarin Chinese: Evaluation and Integration of Acoustic Features. In Linguistics, Psychology, and the Chinese Language, Kao, H.S.R., Hoosain, R., Eds.; Centre of Asian Studies, University of Hong Kong: Hong Kong, 1986; pp. 91-104.

- Whalen, D.H.; Xu, Y. Information for Mandarin Tones in the Amplitude Contour and in Brief Segments. Phonetica 1992, 49, 25-47. [CrossRef]

- Lin, M.-C. The Acoustic Characteristics and Perceptual Cues of Tones in Standard Chinese [Putonghua Shengdiao De Shengxue Texing He Zhijue Zhengzhao]. Chinese Linguistics [Zhongguo Yuwen] 1988, 204, 182-193.

- Xu, L.; Tsai, Y.; Pfingst, B.E. Features of Stimulation Affecting Tonal-Speech Perception: Implications for Cochlear Prostheses. J. Acoust. Soc. Am. 2002, 112, 247-258. [CrossRef]

- Kong, Y.-Y.; Zeng, F.-G. Temporal and Spectral Cues in Mandarin Tone Recognition. J. Acoust. Soc. Am. 2006, 120, 2830-2840. [CrossRef]

- Krenmayr, A.; Qi, B.; Liu, B.; Liu, H.; Chen, X.; Han, D.; Schatzer, R.; Zierhofer, C.M. Development of a Mandarin Tone Identification Test: Sensitivity Index D' as a Performance Measure for Individual Tones. Int. J. Audiol. 2011, 50, 155-163. [CrossRef]

- Lee, C.-Y.; Tao, L.; Bond, Z.S. Effects of Speaker Variability and Noise on Mandarin Tone Identification by Native and Non-Native Listeners. Speech Lang. Hear. 2013, 16, 46-54. [CrossRef]

- Qi, B.; Mao, Y.; Liu, J.; Liu, B.; Xu, L. Relative Contributions of Acoustic Temporal Fine Structure and Envelope Cues for Lexical Tone Perception in Noise. J. Acoust. Soc. Am. 2017, 141, 3022-3029. [CrossRef]

- Wang, X.; Xu, L. Mandarin Tone Perception in Multiple-Talker Babbles and Speech-Shaped Noise. J. Acoust. Soc. Am. 2020, 147, EL307-EL313. [CrossRef]

- Apoux, F.; Yoho, S.E.; Youngdahl, C.L.; Healy, E.W. Role and Relative Contribution of Temporal Envelope and Fine Structure Cues in Sentence Recognition by Normal-Hearing Listeners. J. Acoust. Soc. Am. 2013, 134, 2205-2212. [CrossRef]

- Morgan, S.D. Comparing Emotion Recognition and Word Recognition in Background Noise. J. Speech Lang. Hear. Res. 2021, 64, 1758-1772. [CrossRef]

- Lakshminarayanan, K.; Ben Shalom, D.; van Wassenhove, V.; Orbelo, D.; Houde, J.; Poeppel, D. The Effect of Spectral Manipulations on the Identification of Affective and Linguistic Prosody. Brain Lang. 2003, 84, 250-263. [CrossRef]

- van Zyl, M.; Hanekom, J.J. Speech Perception in Noise: A Comparison between Sentence and Prosody Recognition. J. Hear. Sci. 2011, 1, 54-56.

- Krishnan, A.; Xu, Y.; Gandour, J.; Cariani, P. Encoding of Pitch in the Human Brainstem Is Sensitive to Language Experience. Cogn. Brain Res. 2005, 25, 161-168. [CrossRef]

- Klein, D.; Zatorre, R.J.; Milner, B.; Zhao, V. A Cross-Linguistic PET Study of Tone Perception in Mandarin Chinese and English Speakers. NeuroImage 2001, 13, 646-653. [CrossRef]

- Braun, B.; Johnson, E.K. Question or Tone 2? How Language Experience and Linguistic Function Guide Pitch Processing. J. Phon. 2011, 39, 585-594. [CrossRef]

- Ekman, P. An Argument for Basic Emotions. Cogn. Emot. 1992, 6, 169–200. [CrossRef]

- Sauter, D.A.; Eisner, F.; Ekman, P.; Scott, S.K. Cross-Cultural Recognition of Basic Emotions through Nonverbal Emotional Vocalizations. Proc. Natl. Acad. Sci. USA 2010, 107, 2408-2412. [CrossRef]

- Scherer, K.R.; Banse, R.; Wallbott, H.G. Emotion Inferences from Vocal Expression Correlate across Languages and Cultures. J. Cross Cult. Psychol. 2001, 32, 76-92. [CrossRef]

- Pell, M.D.; Monetta, L.; Paulmann, S.; Kotz, S.A. Recognizing Emotions in a Foreign Language. J. Nonverbal Behav. 2009, 33, 107-120. [CrossRef]

- Scherer, K.R. Vocal Affect Expression: A Review and a Model for Future Research. Psychol. Bull. 1986, 99, 143-165. [CrossRef]

- Liu, P.; Pell, M.D. Processing Emotional Prosody in Mandarin Chinese: A Cross-Language Comparison. In Proceedings of the International Conference on Speech Prosody; 2014; pp. 95-99.

- Bryant, G.A.; Barrett, H.C. Vocal Emotion Recognition across Disparate Cultures. J. Cogn. Cult. 2008, 8, 135-148. [CrossRef]

- Wang, T.; Lee, Y.-c.; Ma, Q. Within and across-Language Comparison of Vocal Emotions in Mandarin and English. Appl. Sci. 2018, 8, 2629. [CrossRef]

- Banse, R.; Scherer, K.R. Acoustic Profiles in Vocal Emotion Expression. J. Pers. Soc. Psychol. 1996, 70, 614-636. [CrossRef]

- Dupuis, K.; Pichora-Fuller, M.K. Intelligibility of Emotional Speech in Younger and Older Adults. Ear Hear. 2014, 35, 695-707. [CrossRef]

- Castro, S.L.; Lima, C.F. Recognizing Emotions in Spoken Language: A Validated Set of Portuguese Sentences and Pseudosentences for Research on Emotional Prosody. Behav. Res. Methods 2010, 42, 74-81. [CrossRef]

- Murray, I.R.; Arnott, J.L. Toward the Simulation of Emotion in Synthetic Speech: A Review of the Literature on Human Vocal Emotion. J. Acoust. Soc. Am. 1993, 93, 1097-1108. [CrossRef]

- Juslin, P.N.; Laukka, P. Communication of Emotions in Vocal Expression and Music Performance: Different Channels, Same Code? Psychol. Bull. 2003, 129, 770-814. [CrossRef]

- Zhang, S. Emotion Recognition in Chinese Natural Speech by Combining Prosody and Voice Quality Features. In Advances in Neural Networks, Sun, F., Zhang, J., Tan, Y., Cao, J., Yu, W., Eds.; Springer Berlin Heidelberg: Berlin, Heidelberg, 2008; pp. 457-464.

- Hirst, D.; Wakefield, J.; Li, H.Y. Does Lexical Tone Restrict the Paralinguistic Use of Pitch? Comparing Melody Metrics for English, French, Mandarin and Cantonese. In Proceedings of the International Conference on the Phonetics of Languages in China; 2013; pp. 15–18.

- Zhao, X.; Zhang, S.; Lei, B. Robust Emotion Recognition in Noisy Speech Via Sparse Representation. Neural Comput. Appl. 2014, 24, 1539-1553. [CrossRef]

- Schuller, B.; Arsic, D.; Wallhoff, F.; Rigoll, G. Emotion Recognition in the Noise Applying Large Acoustic Feature Sets. In Proceedings of Speech Prosody 2006, Dresden, Germany; 2006; paper 128.

- Scharenborg, O.; Kakouros, S.; Koemans, J. The Effect of Noise on Emotion Perception in an Unknown Language. In Proceedings of the International Conference on Speech Prosody; 2018; pp. 364–368.

- Parada-Cabaleiro, E.; Baird, A.; Batliner, A.; Cummins, N.; Hantke, S.; Schuller, B. The Perception of Emotions in Noisified Non-Sense Speech. In Proceedings of Interspeech 2017; 2017; pp. 3246–3250.

- Zhang, M.; Ding, H. Impact of Background Noise and Contribution of Visual Information in Emotion Identification by Native Mandarin Speakers. In Proceedings of Interspeech 2022; 2022; pp. 1993-1997.

- Parada-Cabaleiro, E.; Batliner, A.; Baird, A.; Schuller, B. The Perception of Emotional Cues by Children in Artificial Background Noise. Int. J. Speech Technol. 2020, 23, 169-182. [CrossRef]

- Luo, X. Talker Variability Effects on Vocal Emotion Recognition in Acoustic and Simulated Electric Hearing. J. Acoust. Soc. Am. 2016, 140, EL497-EL503. [CrossRef]

- Hockett, C. The Quantification of Functional Load. Word 1967, 23, 320–339. [CrossRef]

- Ross, E.D.; Edmondson, J.A.; Seibert, G.B. The Effect of Affect on Various Acoustic Measures of Prosody in Tone and Non-Tone Languages: A Comparison Based on Computer Analysis of Voice. J. Phon. 1986, 14, 283-302. [CrossRef]

- Xu, Y. Prosody, Tone and Intonation. In The Routledge Handbook of Phonetics, Katz, W.F., Assmann, P.F., Eds.; Routledge: New York, 2019; pp. 314-356.

- Scherer, K.R.; Wallbott, H.G. Evidence for Universality and Cultural Variation of Differential Emotion Response Patterning. J. Pers. Soc. Psychol. 1994, 66, 310–328. [CrossRef]

- Viswanathan, V.; Shinn-Cunningham, B.G.; Heinz, M.G. Temporal Fine Structure Influences Voicing Confusions for Consonant Identification in Multi-Talker Babble. J. Acoust. Soc. Am. 2021, 150, 2664-2676. [CrossRef]

- Cherry, E.C. Some Experiments on the Recognition of Speech, with One and with Two Ears. J. Acoust. Soc. Am. 1953, 25, 975-979. [CrossRef]

- Koerner, T.K.; Zhang, Y. Differential Effects of Hearing Impairment and Age on Electrophysiological and Behavioral Measures of Speech in Noise. Hear. Res. 2018, 370, 130-142. [CrossRef]

- Ameka, F. Interjections: The Universal yet Neglected Part of Speech. J. Pragmat. 1992, 18, 101-118. [CrossRef]

- Howie, J.M. On the Domain of Tone in Mandarin. Phonetica 1974, 30, 129-148. [CrossRef]

- Xu, Y. ProsodyPro: A Tool for Large-Scale Systematic Prosody Analysis. In Tools and Resources for the Analysis of Speech Prosody; Laboratoire Parole et Langage: Aix-en-Provence, France, 2013; pp. 7-10.

- Boersma, P.; Weenink, D. Praat: Doing Phonetics by Computer, Version 6.0.37; 2018.

- Wang, Y.; Jongman, A.; Sereno, J.A. Acoustic and Perceptual Evaluation of Mandarin Tone Productions before and after Perceptual Training. J. Acoust. Soc. Am. 2003, 113, 1033-1043. [CrossRef]

- Liu, S.; Samuel, A.G. Perception of Mandarin Lexical Tones When F0 Information Is Neutralized. Lang. Speech 2004, 47, 109-138. [CrossRef]

- Li, A. Emotional Intonation and Its Boundary Tones in Chinese. In Encoding and Decoding of Emotional Speech: A Cross-Cultural and Multimodal Study between Chinese and Japanese; Springer Berlin Heidelberg: Berlin, Heidelberg, 2015; pp. 133-164.

- Chen, F.; Hu, Y.; Yuan, M. Evaluation of Noise Reduction Methods for Sentence Recognition by Mandarin-Speaking Cochlear Implant Listeners. Ear Hear. 2015, 36. [CrossRef]

- Bates, D.; Mächler, M.; Bolker, B.; Walker, S. Fitting Linear Mixed-Effects Models Using lme4. J. Stat. Softw. 2015, 67, 1–48. [CrossRef]

- Lo, S.; Andrews, S. To Transform or Not to Transform: Using Generalized Linear Mixed Models to Analyse Reaction Time Data. Front. Psychol. 2015, 6, 1171. [CrossRef]

- Baayen, R.H.; Milin, P. Analyzing Reaction Times. Int. J. Psychol. Res. 2010, 3, 12–28. [CrossRef]

- Chien, Y.F.; Sereno, J.A.; Zhang, J. What’s in a Word: Observing the Contribution of Underlying and Surface Representations. Lang. Speech 2017, 60, 643–657. [CrossRef]

- Lenth, R. emmeans: Estimated Marginal Means, aka Least-Squares Means, R package; 2020.

- Brungart, D.S.; Simpson, B.D.; Ericson, M.A.; Scott, K.R. Informational and Energetic Masking Effects in the Perception of Multiple Simultaneous Talkers. J. Acoust. Soc. Am. 2001, 110, 2527-2538. [CrossRef]

- Scott, S.K.; McGettigan, C. The Neural Processing of Masked Speech. Hear. Res. 2013, 303, 58-66. [CrossRef]

- Shinn-Cunningham, B.G. Object-Based Auditory and Visual Attention. Trends Cogn. Sci. 2008, 12, 182-186. [CrossRef]

- Mattys, S.L.; Brooks, J.; Cooke, M. Recognizing Speech under a Processing Load: Dissociating Energetic from Informational Factors. Cogn. Psychol. 2009, 59, 203-243. [CrossRef]

- Rosen, S.; Souza, P.; Ekelund, C.; Majeed, A. Listening to Speech in a Background of Other Talkers: Effects of Talker Number and Noise Vocoding. J. Acoust. Soc. Am. 2013, 133, 2431-2443. [CrossRef]

- Wang, T.; Ding, H.; Kuang, J.; Ma, Q. Mapping Emotions into Acoustic Space: The Role of Voice Quality. In Proceedings of Interspeech 2014; 2014; pp. 1978-1982.

- Ingrisano, D.R.-S.; Perry, C.K.; Jepson, K.R. Environmental Noise. Am. J. Speech Lang. Pathol. 1998, 7, 91-96, doi:10.1044/1058-0360.0701.91.

- Perry, C.K.; Ingrisano, D.R.S.; Palmer, M.A.; McDonald, E.J. Effects of Environmental Noise on Computer-Derived Voice Estimates from Female Speakers. J. Voice 2000, 14, 146-153. [CrossRef]

- Wang, T.; Lee, Y.-c. Does Restriction of Pitch Variation Affect the Perception of Vocal Emotions in Mandarin Chinese? J. Acoust. Soc. Am. 2015, 137, EL117. [CrossRef]

- Schirmer, A.; Kotz, S.A. Beyond the Right Hemisphere: Brain Mechanisms Mediating Vocal Emotional Processing. Trends Cogn. Sci. 2006, 10, 24-30. [CrossRef]

- Fugate, J.M.B. Categorical Perception for Emotional Faces. Emot. Rev. 2013, 5, 84-89. [CrossRef]

- Singh, L.; Fu, C.S.L. A New View of Language Development: The Acquisition of Lexical Tone. Child Dev. 2016, 87, 834-854. [CrossRef]

- Yeung, H.H.; Chen, K.H.; Werker, J.F. When Does Native Language Input Affect Phonetic Perception? The Precocious Case of Lexical Tone. J. Mem. Lang. 2013, 68, 123-139. [CrossRef]

- Shablack, H.; Lindquist, K.A. The Role of Language in Emotional Development. In Handbook of Emotional Development, LoBue, V., Pérez-Edgar, K., Buss, K.A., Eds.; Springer International Publishing: Cham, 2019; pp. 451-478.

- Morningstar, M.; Venticinque, J.; Nelson, E.E. Differences in Adult and Adolescent Listeners’ Ratings of Valence and Arousal in Emotional Prosody. Cogn. Emot. 2019, 33, 1497-1504. [CrossRef]

- Zhao, L.; Sloggett, S.; Chodroff, E. Top-Down and Bottom-up Processing of Familiar and Unfamiliar Mandarin Dialect Tone Systems. In Proceedings of Speech Prosody 2022; 2022; pp. 842-846.

- Zhao, T.C.; Kuhl, P.K. Top-Down Linguistic Categories Dominate over Bottom-up Acoustics in Lexical Tone Processing. J. Acoust. Soc. Am. 2015, 137, 2379. [CrossRef]

- Malins, J.G.; Gao, D.; Tao, R.; Booth, J.R.; Shu, H.; Joanisse, M.F.; Liu, L.; Desroches, A.S. Developmental Differences in the Influence of Phonological Similarity on Spoken Word Processing in Mandarin Chinese. Brain Lang. 2014, 138, 38-50. [CrossRef]

- Shuai, L.; Gong, T. Temporal Relation between Top-Down and Bottom-up Processing in Lexical Tone Perception. Front. Behav. Neurosci. 2014, 8, 97. [CrossRef]

- Başkent, D. Effect of Speech Degradation on Top-Down Repair: Phonemic Restoration with Simulations of Cochlear Implants and Combined Electric–Acoustic Stimulation. J. Assoc. Res. Otolaryngol. 2012, 13, 683-692. [CrossRef]

- Wang, J.; Shu, H.; Zhang, L.; Liu, Z.; Zhang, Y. The Roles of Fundamental Frequency Contours and Sentence Context in Mandarin Chinese Speech Intelligibility. J. Acoust. Soc. Am. 2013, 134, EL91-EL97.

- Sammler, D.; Grosbras, M.-H.; Anwander, A.; Bestelmeyer, P.E.G.; Belin, P. Dorsal and Ventral Pathways for Prosody. Curr. Biol. 2015, 25, 3079-3085. [CrossRef]

- Coulson, S. Sensorimotor Account of Multimodal Prosody. PsyArXiv 2023. [CrossRef]

- Holler, J.; Levinson, S.C. Multimodal Language Processing in Human Communication. Trends Cogn. Sci. 2019, 23, 639-652. [CrossRef]

- Bryant, G.A. Vocal Communication across Cultures: Theoretical and Methodological Issues. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2022, 377, 20200387. [CrossRef]

- Lecumberri, M.L.G.; Cooke, M.; Cutler, A. Non-Native Speech Perception in Adverse Conditions: A Review. Speech Commun. 2010, 52, 864-886. [CrossRef]

- Liu, P.; Rigoulot, S.; Pell, M.D. Cultural Differences in on-Line Sensitivity to Emotional Voices: Comparing East and West. Front. Hum. Neurosci. 2015, 9, 311. [CrossRef]

- Gordon-Salant, S.; Fitzgibbons, P.J. Effects of Stimulus and Noise Rate Variability on Speech Perception by Younger and Older Adults. J. Acoust. Soc. Am. 2004, 115, 1808-1817. [CrossRef]

- Goossens, T.; Vercammen, C.; Wouters, J.; van Wieringen, A. Masked Speech Perception across the Adult Lifespan: Impact of Age and Hearing Impairment. Hear. Res. 2017, 344, 109-124. [CrossRef]

- Van Engen, K.J.; Phelps, J.E.; Smiljanic, R.; Chandrasekaran, B. Enhancing Speech Intelligibility: Interactions among Context, Modality, Speech Style, and Masker. J. Speech Lang. Hear. Res. 2014, 57, 1908-1918. [CrossRef]

- Scott, S.K.; Rosen, S.; Wickham, L.; Wise, R.J.S. A Positron Emission Tomography Study of the Neural Basis of Informational and Energetic Masking Effects in Speech Perception. J. Acoust. Soc. Am. 2004, 115, 813-821. [CrossRef]

- Nygaard, L.C.; Queen, J.S. Communicating Emotion: Linking Affective Prosody and Word Meaning. J. Exp. Psychol. Hum. Percept. Perform. 2008, 34, 1017.

- Wilson, D.; Wharton, T. Relevance and Prosody. J. Pragmat. 2006, 38, 1559-1579.

- Frühholz, S.; Trost, W.; Kotz, S.A. The Sound of Emotions—Towards a Unifying Neural Network Perspective of Affective Sound Processing. Neurosci. Biobehav. Rev. 2016, 68, 96-110. [CrossRef]

- Grandjean, D. Brain Networks of Emotional Prosody Processing. Emot. Rev. 2021, 13, 34-43. [CrossRef]

- Jiang, A.; Yang, J.; Yang, Y. MMN Responses During Implicit Processing of Changes in Emotional Prosody: An ERP Study Using Chinese Pseudo-Syllables. Cogn. Neurodyn. 2014, 8, 499-508. [CrossRef]

- Lin, Y.; Fan, X.; Chen, Y.; Zhang, H.; Chen, F.; Zhang, H.; Ding, H.; Zhang, Y. Neurocognitive Dynamics of Prosodic Salience over Semantics During Explicit and Implicit Processing of Basic Emotions in Spoken Words. Brain Sci. 2022, 12, 1706. [CrossRef]

- Mauchand, M.; Caballero, J.A.; Jiang, X.; Pell, M.D. Immediate Online Use of Prosody Reveals the Ironic Intentions of a Speaker: Neurophysiological Evidence. Cogn. Affect. Behav. Neurosci. 2021, 21, 74-92. [CrossRef]

- Chen, Y.; Tang, E.; Ding, H.; Zhang, Y. Auditory Pitch Perception in Autism Spectrum Disorder: A Systematic Review and Meta-Analysis. J. Speech Lang. Hear. Res. 2022, 65, 4866-4886. [CrossRef]

- Zhang, L.; Xia, Z.; Zhao, Y.; Shu, H.; Zhang, Y. Recent Advances in Chinese Developmental Dyslexia. Annu. Rev. Linguist. 2023, 9, 439-461. [CrossRef]

- Zhang, M.; Xu, S.; Chen, Y.; Lin, Y.; Ding, H.; Zhang, Y. Recognition of Affective Prosody in Autism Spectrum Conditions: A Systematic Review and Meta-Analysis. Autism 2022, 26, 798-813. [CrossRef]

- Seddoh, S.A. How Discrete or Independent Are "Affective Prosody" and "Linguistic Prosody"? Aphasiology 2002, 16, 683-692. [CrossRef]

- Ben-David, B.M.; Gal-Rosenblum, S.; van Lieshout, P.H.H.M.; Shakuf, V. Age-Related Differences in the Perception of Emotion in Spoken Language: The Relative Roles of Prosody and Semantics. J. Speech Lang. Hear. Res. 2019, 62, 1188-1202. [CrossRef]

| Measure | Emotional prosody | Lexical tone |
|---|---|---|
| Mean F0 (Hz) | 195.6 (76.1) | 151.9 (46.3) |
| Duration (ms) | 525.2 (185.6) | 570.0 (84.6) |
| Mean intensity (dB) | 77.7 (2.4) | 78.0 (2.6) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).