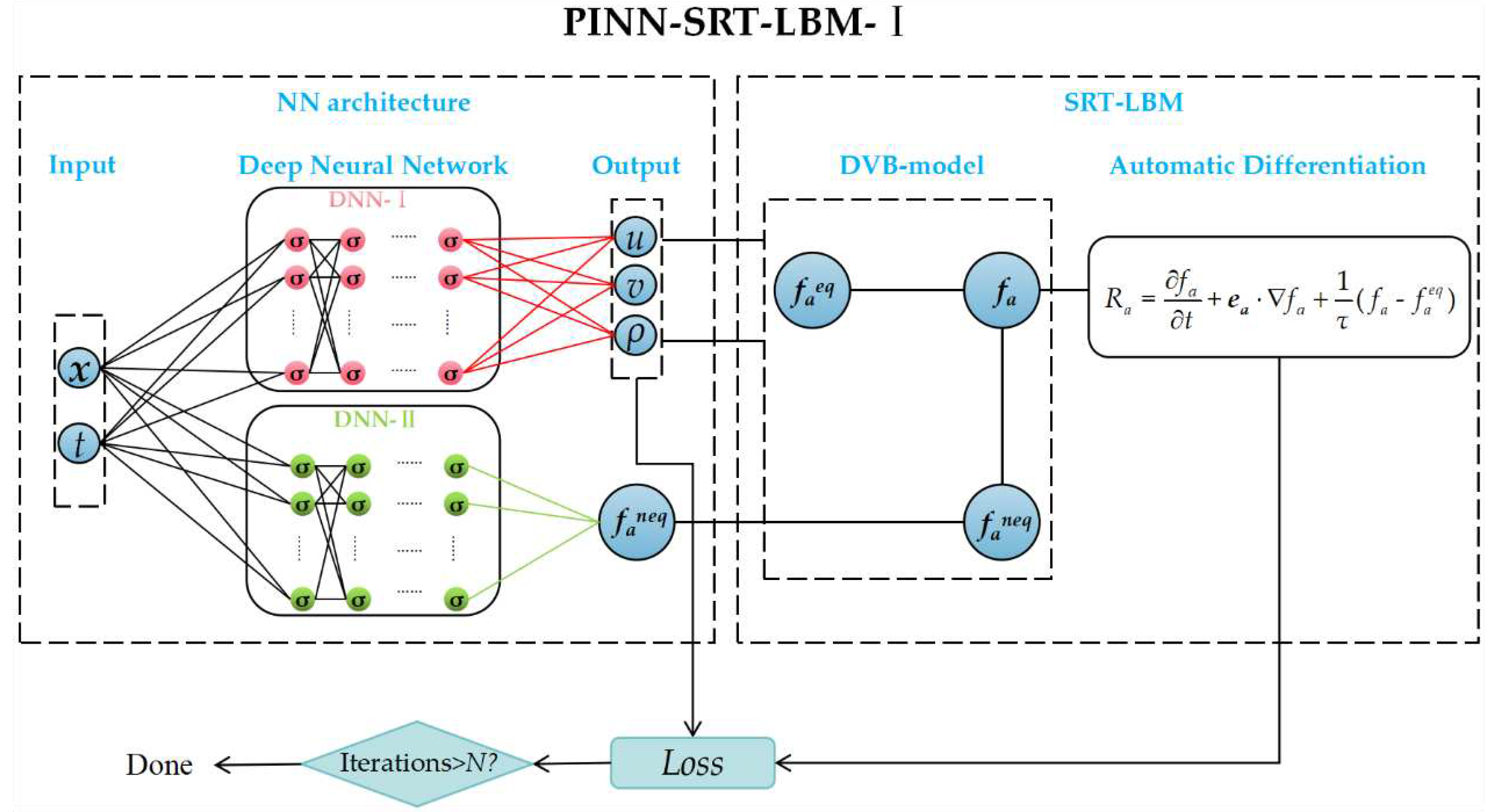

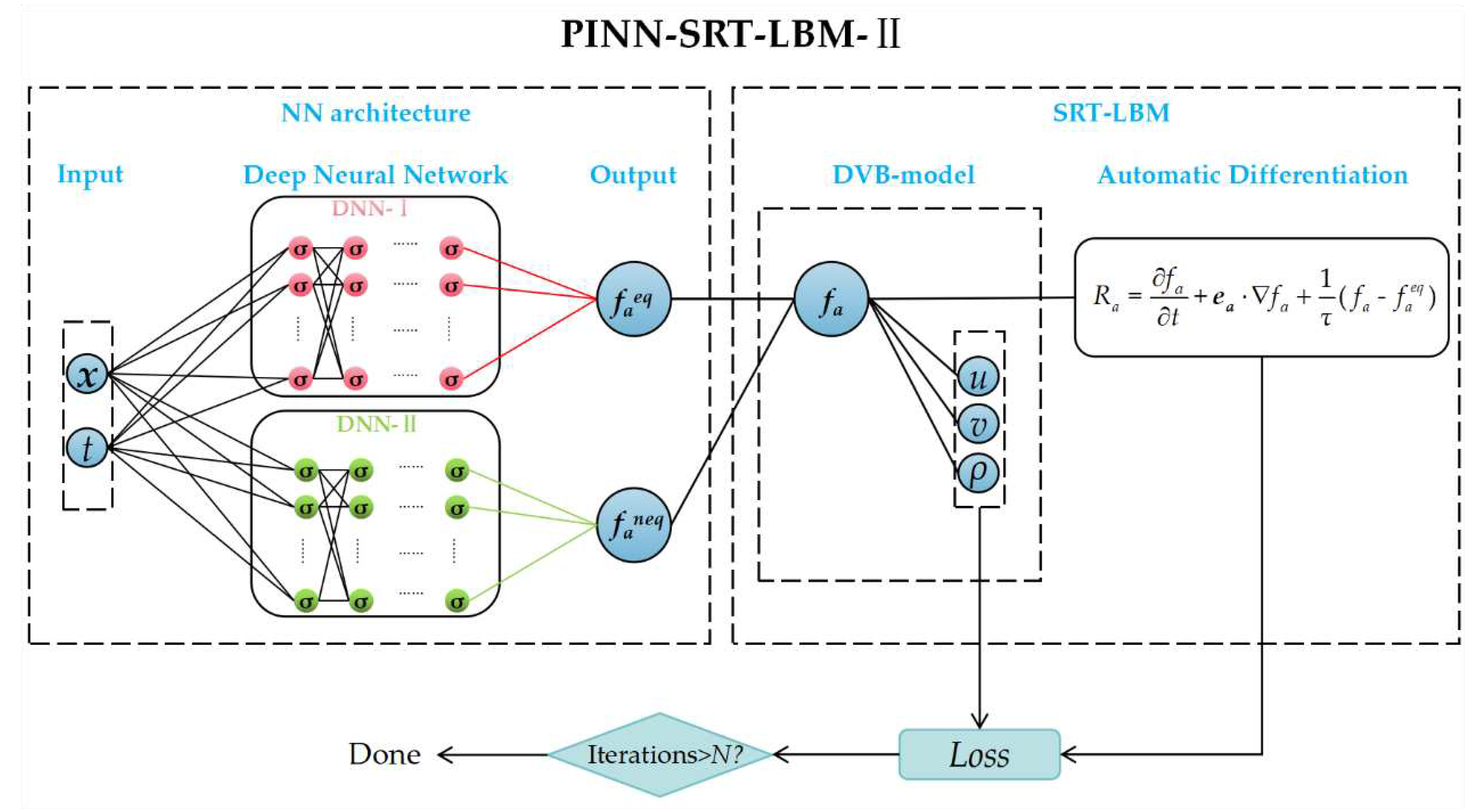

This section presents an experimental validation and analysis of PINN-SRT-LBM-I and PINN-SRT-LBM-II on classical CFD cases. To assess accuracy across dimensions, the experiments encompass both 1D and 2D cases: the Sod shock tube in 1D, and the lid-driven cavity flow and the flow around a circular cylinder in 2D.

The computational resources employed in this paper include an NVIDIA Titan X GPU based on the Pascal architecture. The card offers a floating-point throughput of 11 TFLOPS, 12288 MB of memory, 3584 CUDA cores, and a clock frequency of 1.53 GHz.

3.1. Sod Shock Tube

The shock tube is an experimental apparatus that serves as an important means of studying nonlinear mechanics. The Sod shock tube problem is a common test case in CFD, smoothed particle hydrodynamics (SPH), and similar methods. It is used to assess the efficacy of a computational approach and to expose potential shortcomings, such as those of the numerical scheme. The tube is divided into a left and a right region, separated by a thin membrane. When the membrane ruptures (T = 0), a shock wave propagates from left to right, while an expansion wave moves from right to left [43].

In this part, three methods (PINN-SRT-LBM-I, PINN-SRT-LBM-II, and DNNs) are used to simulate the density, pressure, and velocity fields within the computational region of the Sod shock tube at T = 100.

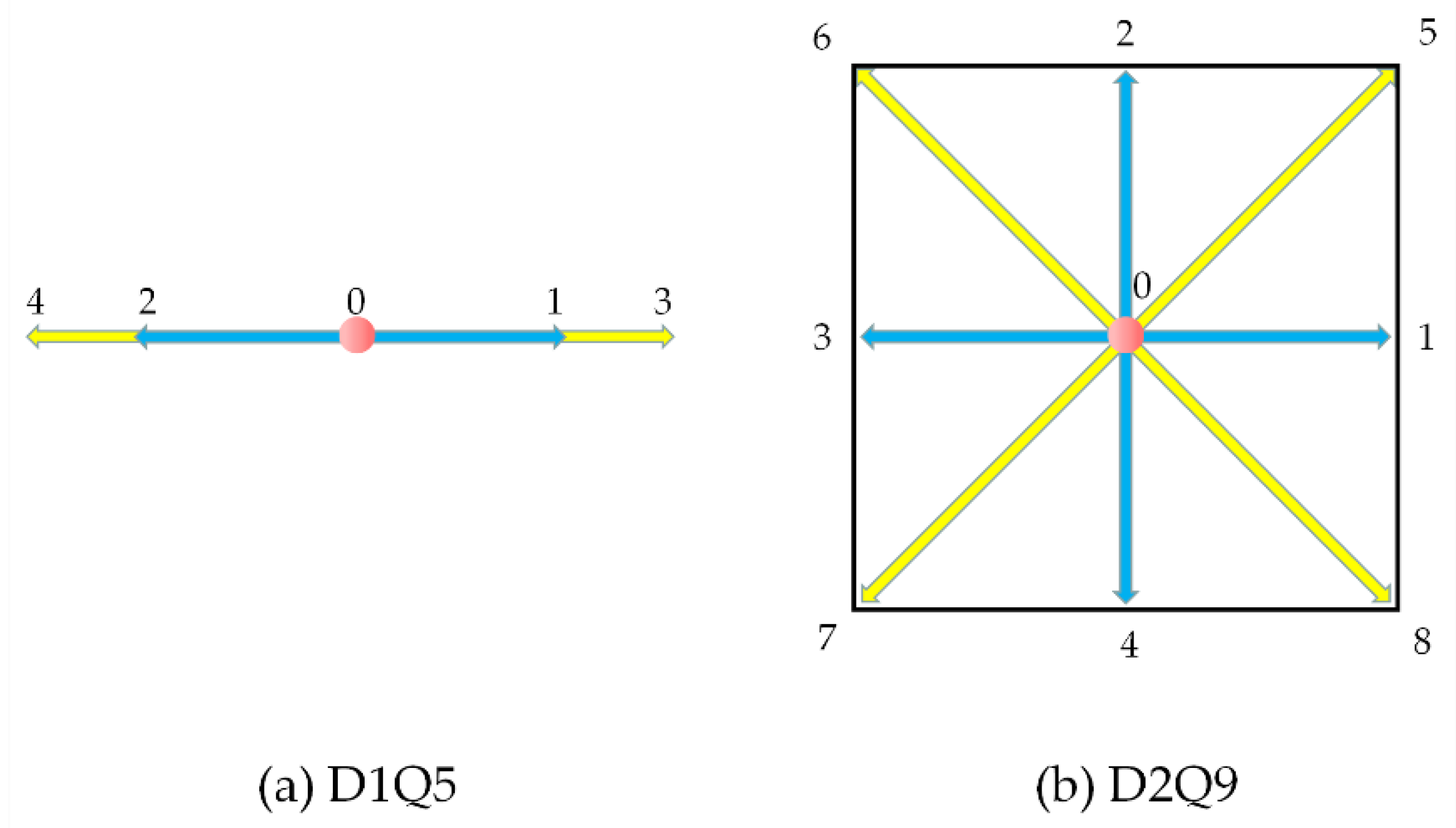

For the Sod shock tube problem, the D1Q5 DVM is used. The model configuration is shown in Figure 1(a), and the model parameters are set according to Equation (4).

The initial values for the exact solution are set as follows:

In the experiments, the density and length are set to ρ0 = 1 and L0 = 1, respectively. The grid size is Δx = 1/1000, the time step is Δt = 0.001, the total duration is t = 0.1, and the number of time slices is T = 100. The position of the shock is chosen at x = 0.5.

To simulate the density ρ, pressure p, and velocity u, we divide the simulation duration of t = 0.1 into T = 100 time slices and extract 1000 training points. With about 1000 grid points per slice (Δx = 1/1000 over L0 = 1), the full space-time grid holds roughly 100,000 points, so the training set constitutes 1% of the available data. These points serve as internal observations for solving the inverse problem and are used for training, while the remaining data are reserved for validation.
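The split described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the grid is taken to hold 1000 points per time slice, the points are drawn uniformly at random without replacement, and the function name `sample_training_indices` and the seed are hypothetical. The paper does not specify how the training points were selected.

```python
import random


def sample_training_indices(n_x, n_t, fraction, seed=0):
    """Pick a random subset of flat space-time indices (assumed uniform sampling)."""
    n_total = n_x * n_t
    n_train = round(n_total * fraction)
    rng = random.Random(seed)  # fixed seed only for reproducibility of the sketch
    train = sorted(rng.sample(range(n_total), n_train))
    return train, n_total


# Values from the text: T = 100 time slices, 1% of the data used for training.
# n_x = 1000 is an assumption derived from dx = 1/1000 and L0 = 1.
N_X, N_T = 1000, 100
train_idx, n_total = sample_training_indices(N_X, N_T, fraction=0.01)

# Convert each flat index back to (time slice, grid cell); flat = t * N_X + x.
pairs = [divmod(i, N_X) for i in train_idx]

# Everything not sampled is kept for validation.
n_validation = n_total - len(train_idx)
print(len(train_idx), n_validation)
```

Sampling without replacement keeps the training and validation sets disjoint, which matches the statement that the remaining data are reserved for validation.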

For DNN-I and DNN-II in both the PINN-SRT-LBM-I and PINN-SRT-LBM-II models, the network consists of 8 hidden layers with 20 neurons each and uses the tanh activation function. The DNNs used for comparison have the same structure: 8 hidden layers with 20 neurons per layer and the tanh activation function. The weight coefficients in the loss function are set to (β1, β2) = (1, 1). The maximum number of iterations is 80,000, and training uses the Adam + L-BFGS-B optimizer combination.

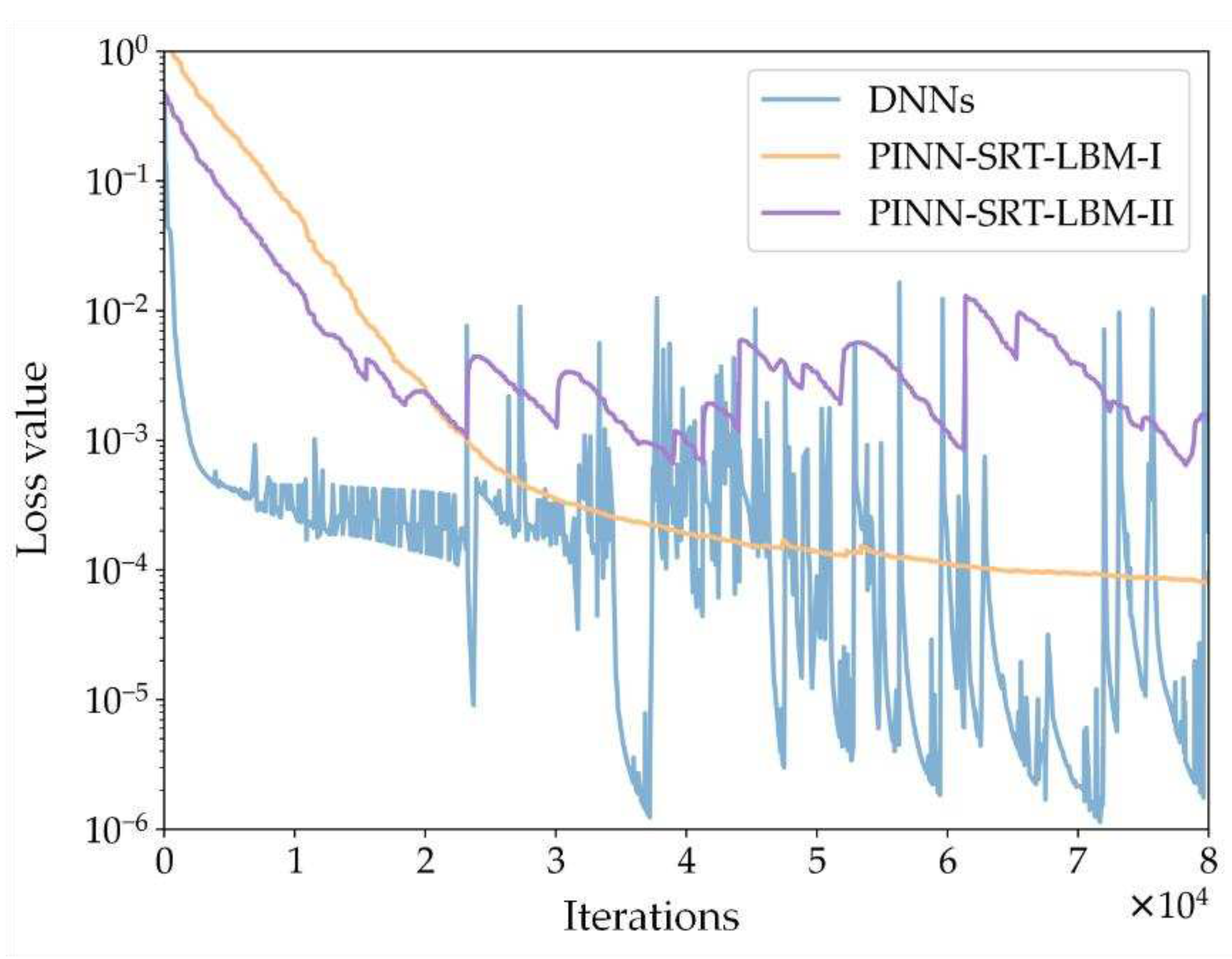

Figure 5 compares the training loss curves of the three methods (PINN-SRT-LBM-I, PINN-SRT-LBM-II, and DNNs) for the Sod shock tube inverse problem. The oscillation amplitude and convergence range of the training loss curves indicate a significant advantage for PINN-SRT-LBM-I.

To further validate the superiority of the PINN-SRT-LBM-I model, Table 2 presents the relative L2 errors of the simulation results obtained with the three methods with respect to the reference solution. Here, err_u, err_p, and err_ρ denote the relative L2 errors for velocity, pressure, and density, respectively.

The data in Table 2 show that the PINN-SRT-LBM-I model solves the Sod shock tube problem more accurately than DNNs and PINN-SRT-LBM-II. This advantage translates into more precise simulation results within the predicted region and enhanced stability.

The relative L2 error is defined as follows:

where y_i represents the predicted value, y_i* represents the exact value, and N is the number of prediction points.
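For reference, a common form of the relative L2 error divides the Euclidean norm of the prediction error by the Euclidean norm of the exact values. That form is assumed here rather than taken from the paper, and the function name `relative_l2_error` is hypothetical. A minimal sketch:

```python
import math


def relative_l2_error(pred, exact):
    """Relative L2 error: ||pred - exact||_2 / ||exact||_2 (assumed common form)."""
    if len(pred) != len(exact):
        raise ValueError("pred and exact must have the same number of points")
    num = math.sqrt(sum((p - e) ** 2 for p, e in zip(pred, exact)))
    den = math.sqrt(sum(e ** 2 for e in exact))
    if den == 0.0:
        raise ValueError("exact values are all zero; relative error is undefined")
    return num / den


# Toy values for illustration only (not data from the paper).
err_example = relative_l2_error([1.0, 2.1, 2.9], [1.0, 2.0, 3.0])
print(err_example)
```

Because the error is normalized by the magnitude of the exact solution, values for quantities with different scales (for example pressure and velocity) can be compared directly.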

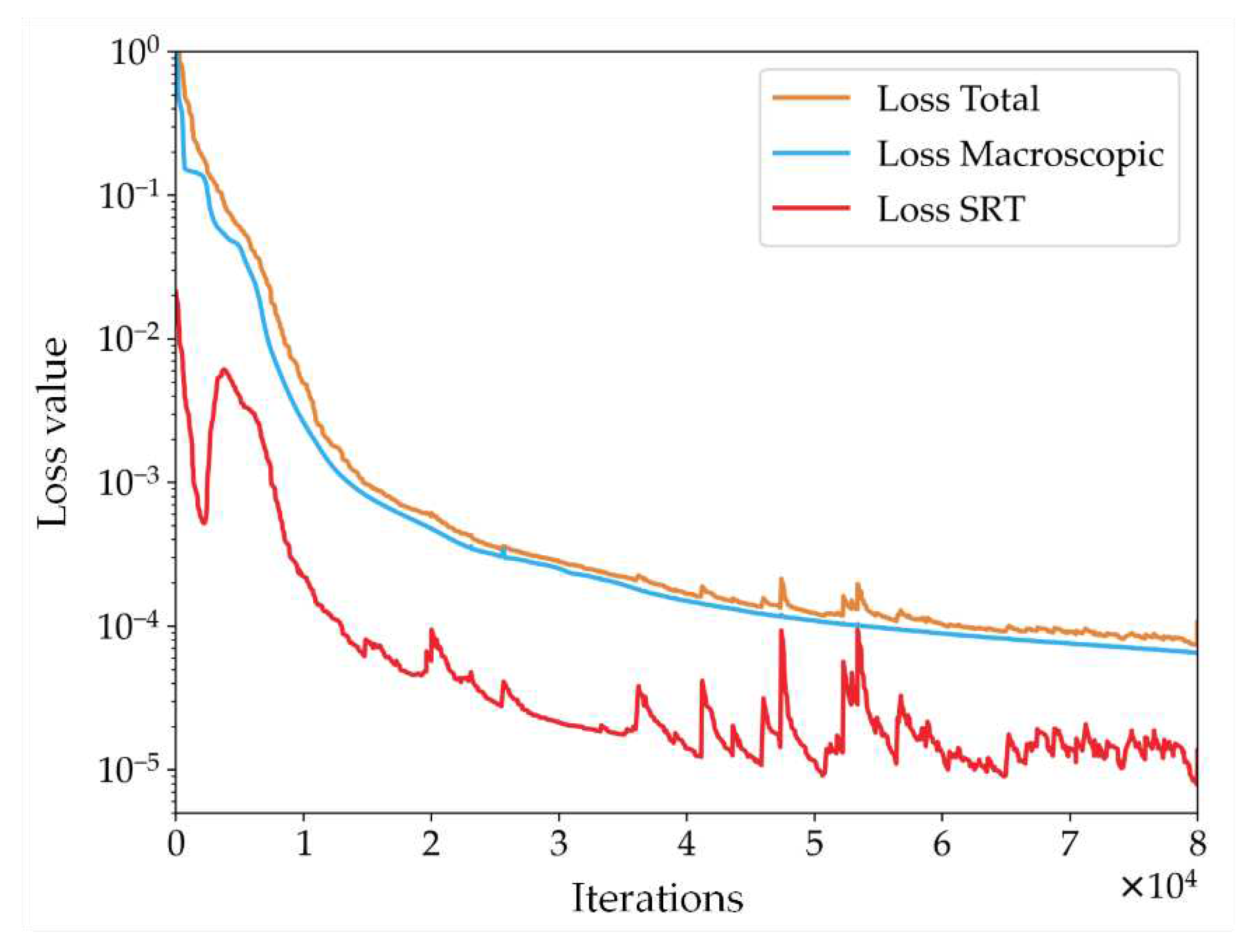

Furthermore, Figure 6 shows how the loss function values of the PINN-SRT-LBM-I model vary during training. The curves are Loss SRT, the loss on the residuals of the SRT-LBM PDEs; Loss Macroscopic, the loss on the macroscopic quantities at the training points; and Loss Total, the sum of the two. Comparing the curves over the iterations shows that Loss SRT has a substantial influence on Loss Total. This confirms that the proposed model enforces the physical constraints during neural network training.
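The composition of the three curves can be illustrated with a short sketch. It rests on assumptions that the text does not confirm: both terms are taken to be mean squared errors, β1 is paired with the SRT residual term and β2 with the macroscopic term, and the names `mse` and `total_loss` are hypothetical.

```python
def mse(values):
    """Mean of squared entries."""
    return sum(v * v for v in values) / len(values)


def total_loss(srt_residuals, macro_pred, macro_obs, beta1=1.0, beta2=1.0):
    """Weighted sum of a PDE-residual loss and a data-misfit loss (assumed form)."""
    loss_srt = mse(srt_residuals)  # residuals of the SRT-LBM equations
    loss_macro = mse([p - o for p, o in zip(macro_pred, macro_obs)])  # data misfit
    return beta1 * loss_srt + beta2 * loss_macro, loss_srt, loss_macro


# Toy numbers for illustration only; (beta1, beta2) = (1, 1) as in the text.
total, l_srt, l_macro = total_loss(
    srt_residuals=[0.0, 0.2],
    macro_pred=[1.0, 0.5],
    macro_obs=[1.0, 0.4],
)
print(total, l_srt, l_macro)
```

With both weights equal to 1, the total is simply the sum of the two terms, so whichever term is larger dominates the total curve.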

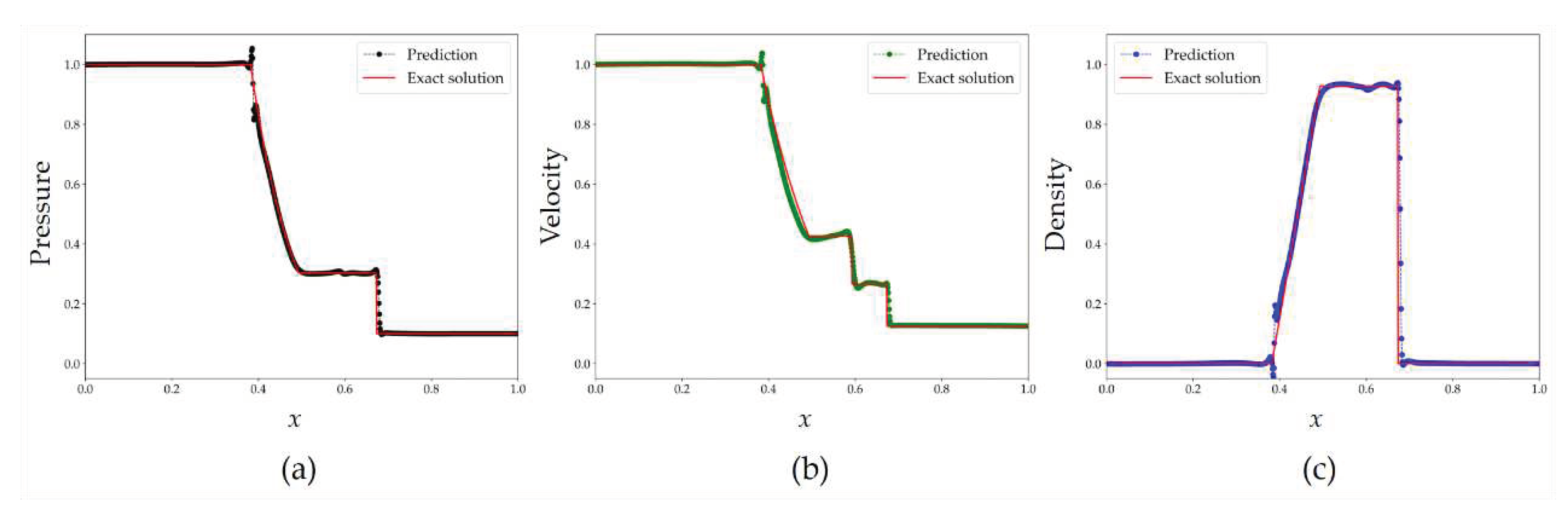

Figure 7 compares the pressure p, density ρ, and velocity u predicted by the PINN-SRT-LBM-I model with the exact solutions.

The results in Figure 7 indicate that the predicted physical quantities closely match the exact solutions, which shows the effectiveness of PINN-SRT-LBM-I in simulating the Sod shock tube problem. However, where the physical quantities change abruptly over a short time, the predictions display oscillations. This suggests that the network's ability to learn such rapid variations is limited by the nonlinear nature of these changes. Networks with stronger nonlinear learning capabilities are worth exploring to improve this aspect.

3.2. Lid-Driven Cavity Flow

In this section, we validate the models on a classic 2D problem: the incompressible steady-state lid-driven cavity flow. The experiments are conducted in a 2D cavity domain, Ω = (0,1) × (0,1). The number of time slices is set to T = 100. A continuous and constant rightward velocity of U = 0.1 is applied at the upper boundary of the cavity. Non-equilibrium extrapolation is employed for the boundary conditions. The initial density of the flow field is ρ0 = 1, and the governing equation is Equation (1). The DVM is D2Q9, as illustrated in Figure 1(b).
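As a reminder of what the D2Q9 model involves, the sketch below lists the standard nine lattice velocities and weights and evaluates the usual second-order equilibrium distribution in lattice units (speed of sound squared equal to 1/3). The velocity ordering, the equilibrium form, and the helper names `equilibrium` and `moments` are textbook conventions assumed here; the ordering in Figure 1(b) and the exact form of the paper's equations may differ.

```python
# Standard D2Q9 set: one rest velocity, four axis-aligned, four diagonal.
E = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1),
     (1, 1), (-1, 1), (-1, -1), (1, -1)]
W = [4 / 9] + [1 / 9] * 4 + [1 / 36] * 4


def equilibrium(rho, u, v):
    """Second-order equilibrium distribution for each of the nine directions."""
    usq = u * u + v * v
    feq = []
    for (ex, ey), w in zip(E, W):
        eu = ex * u + ey * v
        feq.append(w * rho * (1.0 + 3.0 * eu + 4.5 * eu * eu - 1.5 * usq))
    return feq


def moments(f):
    """Recover macroscopic density and velocity from the distribution functions."""
    rho = sum(f)
    u = sum(fi * ex for fi, (ex, _) in zip(f, E)) / rho
    v = sum(fi * ey for fi, (_, ey) in zip(f, E)) / rho
    return rho, u, v


# Lid values from the text: rho0 = 1 and a rightward velocity U = 0.1.
f_lid = equilibrium(1.0, 0.1, 0.0)
rho, u, v = moments(f_lid)
print(rho, u, v)
```

The zeroth and first moments of the equilibrium reproduce the density and velocity it was built from, which is the link between the mesoscopic distribution functions and the macroscopic fields ρ, u, and v compared in this section.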

Four training datasets, each comprising 4225 randomly selected spatiotemporal training points within the computational domain, are gathered at Re = 400, 1000, 2000, and 5000. The reference solutions for the velocity and density fields at these points are taken from reference [44]. The horizontal and vertical velocities are denoted u(T, x, y) and v(T, x, y), respectively, and the density field is denoted ρ(T, x, y). The weights of the loss function are set to (β1, β2) = (1, 1). Of the reference solution, 1% of the entire dataset is used for training, and the remainder is used to validate the predictions. Because the lid-driven cavity flow reaches a steady state at the specified Reynolds numbers, we simulate only the flow field at the final time step (T = 100).

To train the models for the experimental cases, the foundational components DNN-I and DNN-II of both the PINN-SRT-LBM-I and PINN-SRT-LBM-II models are configured with 8 hidden layers, each containing 40 neurons and using the hyperbolic tangent (tanh) activation function. The DNNs used for comparison are set to 8 layers with 40 neurons per layer. The maximum number of training iterations is 80,000, and training uses the Adam + L-BFGS optimizer. For the DNNs in this experiment, the SGD optimizer is used instead, in pursuit of potentially better performance.

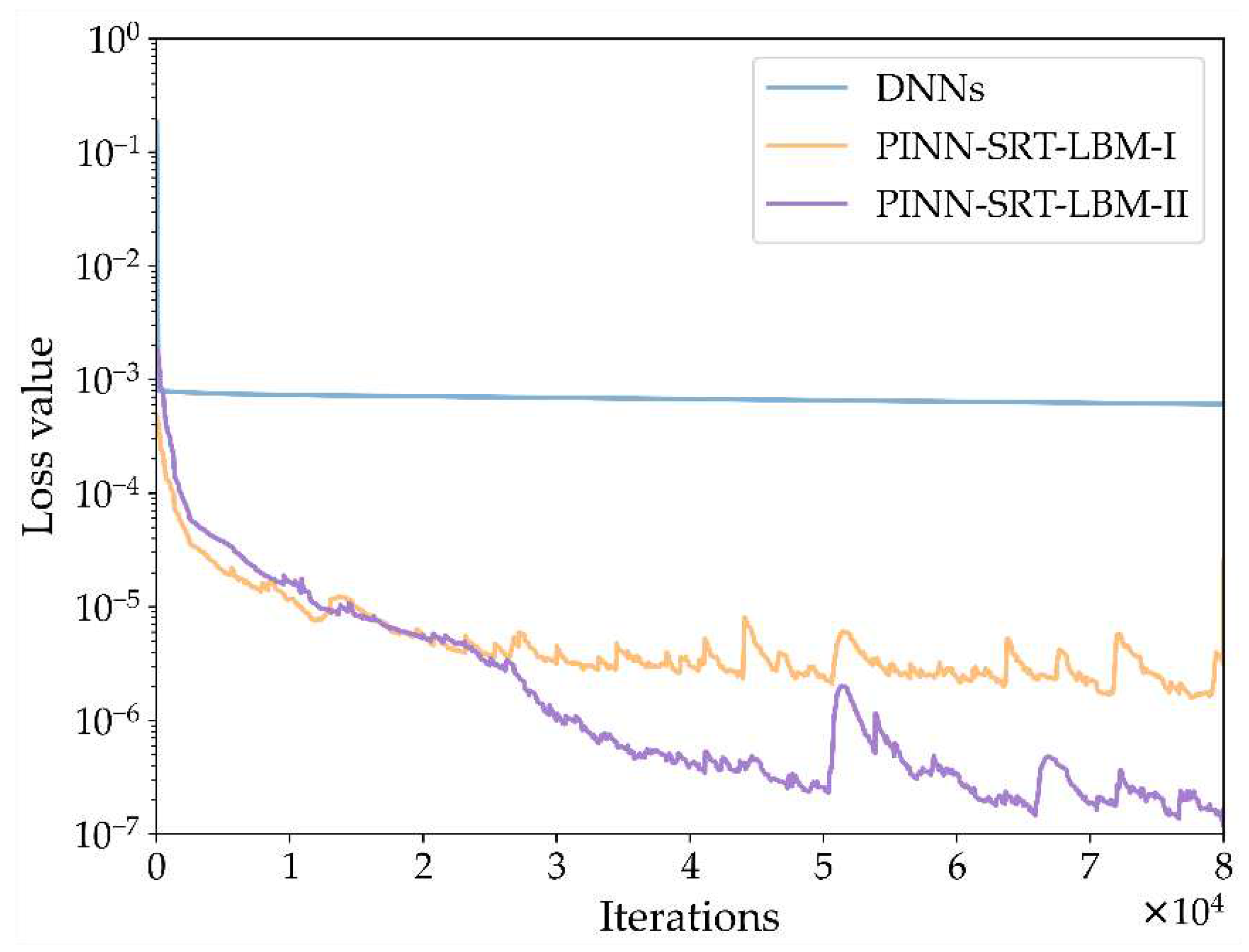

Figure 8 shows the training loss curves of the three methods (PINN-SRT-LBM-I, PINN-SRT-LBM-II, and DNNs) when simulating the lid-driven cavity flow. The loss of the DNNs drops rapidly to below 1×10⁻³, after which overfitting sets in. Meanwhile, the loss of the PINN-SRT-LBM-II model converges within a range one order of magnitude smaller than that of the PINN-SRT-LBM-I model. We attribute this to the fact that the PINN-SRT-LBM-II model uses deep neural networks to approximate both the equilibrium and the non-equilibrium distribution functions directly. For the 2D inverse problem of the lid-driven cavity flow, the PINN-SRT-LBM-II model is therefore expected to capture the details of the underlying physical distribution patterns better than both the DNNs and the PINN-SRT-LBM-I model. To test this conjecture empirically, Table 3 presents the relative L2 errors of the results obtained by PINN-SRT-LBM-I, PINN-SRT-LBM-II, and DNNs at Re = 1000 and T = 100.

In Table 3, err_u, err_v, and err_ρ denote the relative L2 errors of u, v, and ρ, respectively. The results show that the relative L2 errors of the predictions generated by the PINN-SRT-LBM-II model are approximately one order of magnitude lower than those of both the DNNs and the PINN-SRT-LBM-I model.

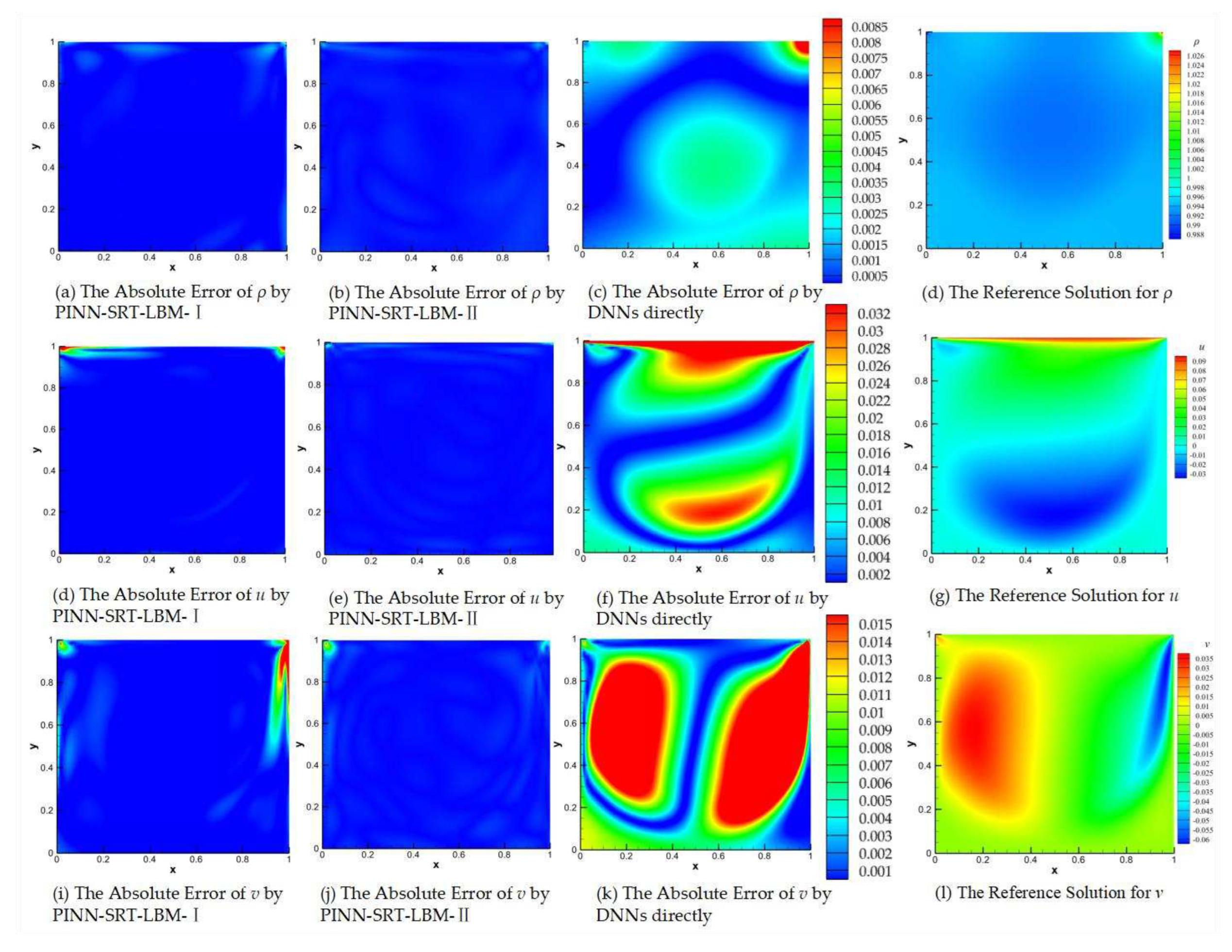

For a more comprehensive comparison of the accuracy of the different models, Figure 9 visualizes the absolute errors between the reference solution and the results simulated by PINN-SRT-LBM-I, PINN-SRT-LBM-II, and DNNs. The first column, (a), (e), (i), shows the absolute errors for PINN-SRT-LBM-I; the second column, (b), (f), (j), shows those for PINN-SRT-LBM-II; and the third column, (c), (g), (k), shows those for DNNs. These images reveal the discrepancies between the predicted and reference values. The last column, (d), (h), (l), shows the reference solutions.

As for the absolute error, let Y_pred(t, x, y) denote the predicted value at position (x, y) in time slice T = t, and let Y_exact(t, x, y) denote the reference solution value at the same position and time. The absolute error function E_abs(t, x, y) is defined as follows:

$$E_{\mathrm{abs}}(t, x, y) = \left| Y_{\mathrm{pred}}(t, x, y) - Y_{\mathrm{exact}}(t, x, y) \right|$$

That is, subtracting the reference value from the predicted value and taking the absolute value yields the absolute error at point (x, y) during the time slice T = t.
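The pointwise definition above maps directly onto a small helper. The sketch below assumes that the predicted and reference fields for one time slice are stored as nested lists of equal shape; the function names and the toy values are hypothetical.

```python
def absolute_error(pred, exact):
    """Pointwise |pred - exact| over a 2D field stored as a list of rows."""
    if len(pred) != len(exact):
        raise ValueError("fields must have the same number of rows")
    return [
        [abs(p - e) for p, e in zip(row_pred, row_exact)]
        for row_pred, row_exact in zip(pred, exact)
    ]


def max_error(field):
    """Largest entry of an error field, useful when comparing against a bound."""
    return max(max(row) for row in field)


# Toy 2 x 2 fields for illustration only (not data from the paper).
y_pred = [[1.0, 2.0], [3.0, 4.0]]
y_exact = [[1.5, 2.0], [2.0, 4.5]]
e_abs = absolute_error(y_pred, y_exact)
print(e_abs, max_error(e_abs))
```

Unlike the relative L2 error, which condenses a whole field into one number, this error field keeps the spatial structure, which is why it is used for the visual comparisons.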

From the results in Figure 9 and the relative L2 errors in Table 3, it is reasonable to infer that DNNs cannot address the inverse problem of 2D lid-driven cavity flow. Moreover, the PINN-SRT-LBM-I model struggles to capture the behavior of the physical quantities at the boundaries when simulating the 2D lid-driven cavity flow. This arises because the physical quantities vary significantly near the boundaries. Consequently, the model has greater difficulty learning the evolution patterns near the boundaries than in other regions, which leads to elevated errors there.

In CFD simulations, the Reynolds number exerts a substantial influence on the results. The experimental cases in this section therefore include the inverse problem of lid-driven cavity flow at several Reynolds numbers.

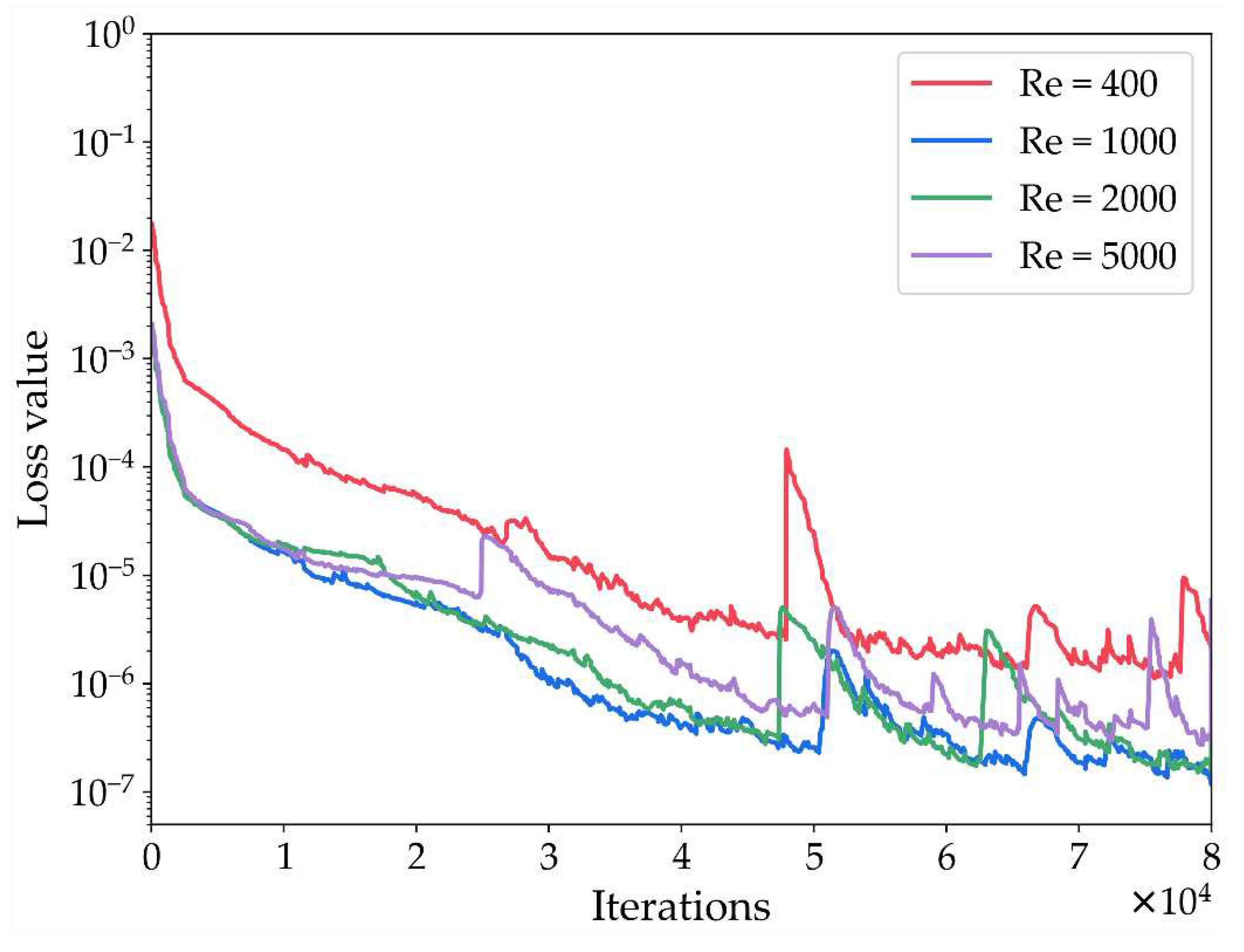

Four Reynolds numbers were considered in the experiments: Re = 400, Re = 1000, Re = 2000, and Re = 5000. Predictions were made for T = 100, at which the flow in the cavity has reached a steady state. Figure 10 shows the training loss curves of PINN-SRT-LBM-II over the course of training for the different Reynolds numbers. The trend of the loss value across iterations in Figure 10 shows that the convergence range of the model's loss remains relatively consistent as the Reynolds number increases. This indicates that the PINN-SRT-LBM-II model is stable across different Reynolds numbers and underscores its capacity for generalization.

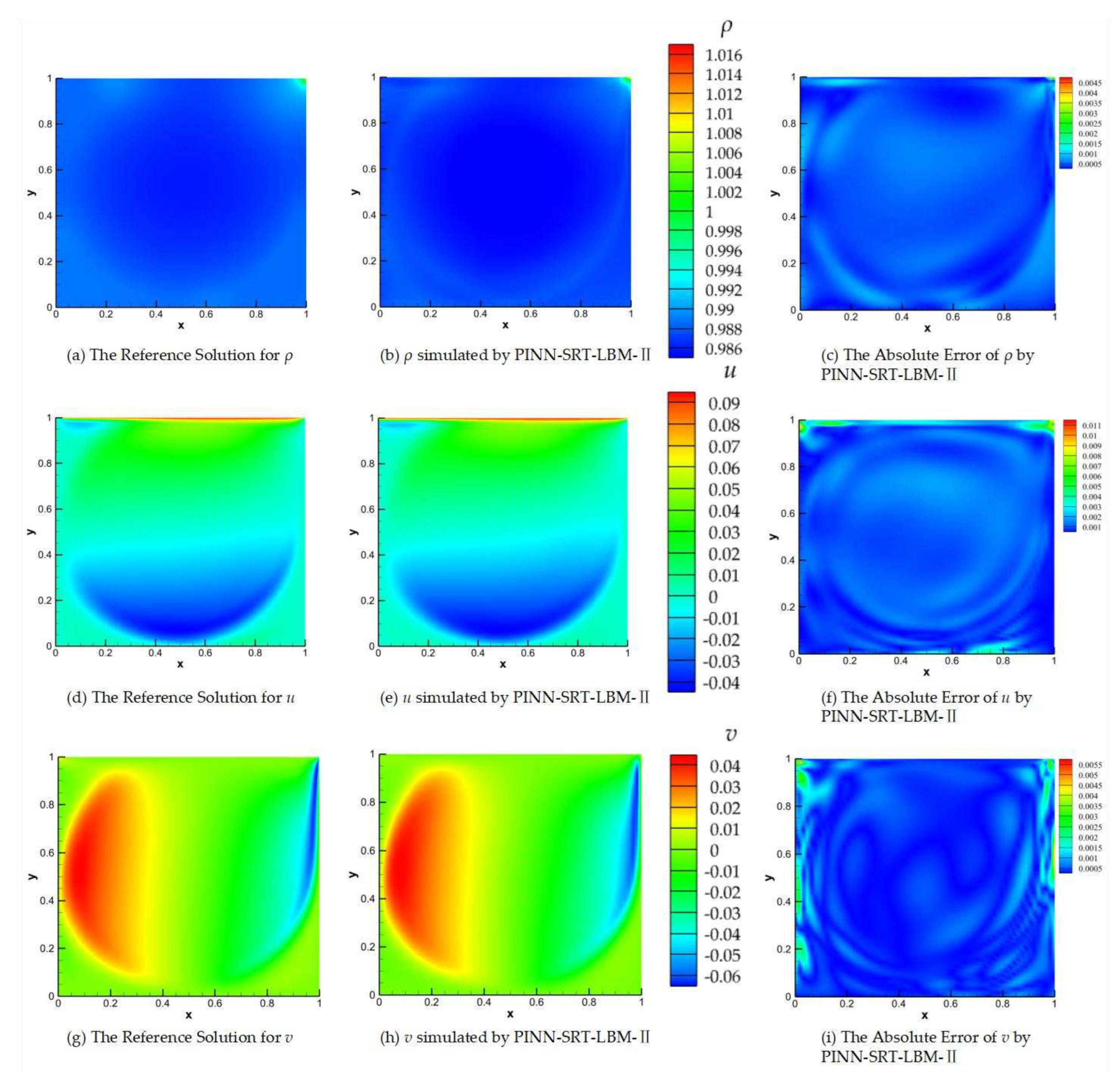

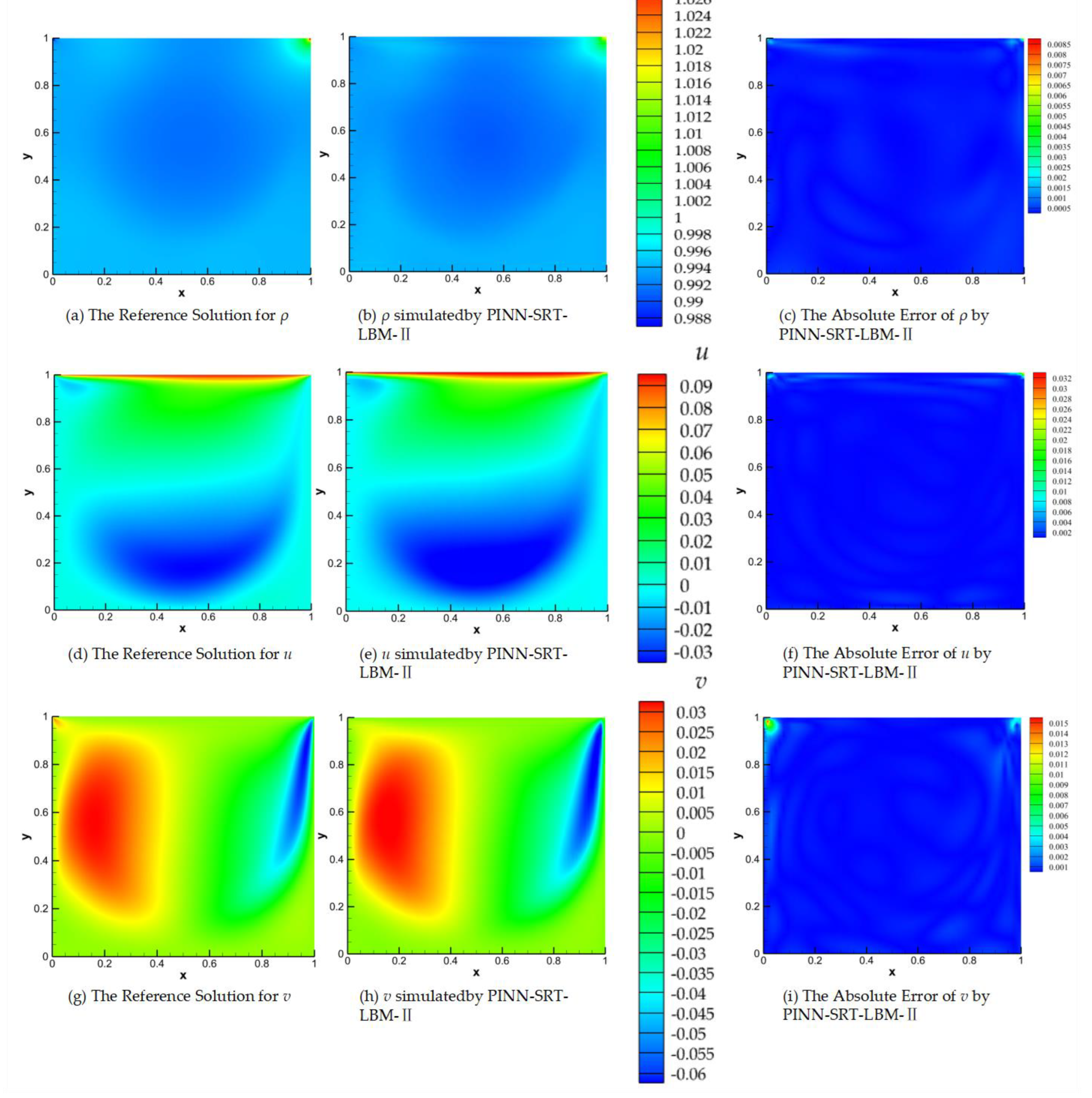

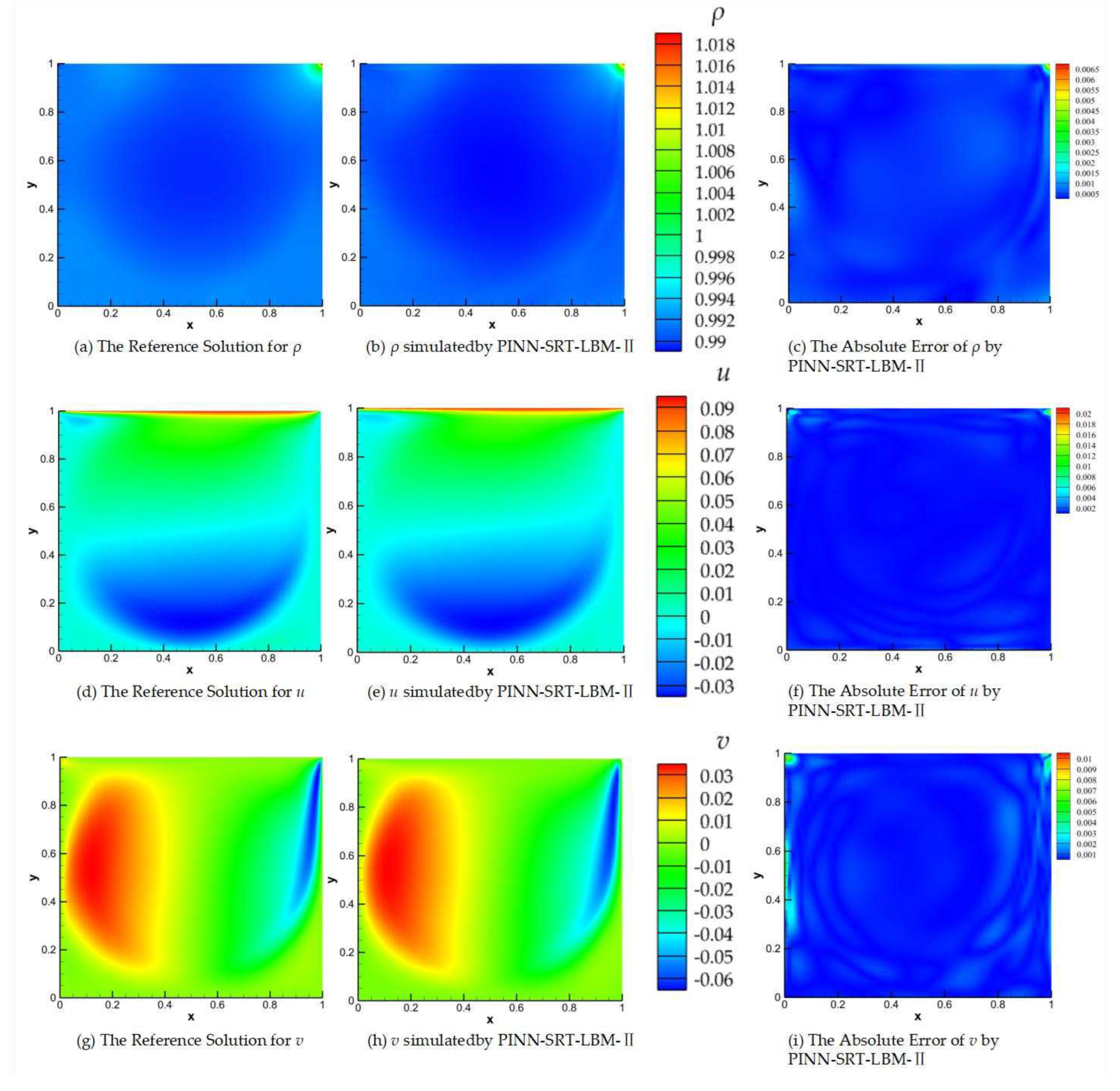

Figure 11 presents the predictions of the PINN-SRT-LBM-II model and the reference solution for Re = 5000 and T = 100. Figure 11(a), (b), and (c) show the reference solution for ρ, the ρ predicted by the PINN-SRT-LBM-II model, and the absolute error of the predicted ρ, respectively. Figure 11(d), (e), and (f) show the reference solution for u, the u predicted by the model, and the absolute error of the predicted u. Similarly, Figure 11(g), (h), and (i) show the same pattern for v.

In Figure 11(c), (f), and (i), elevated absolute errors appear near the cavity boundaries and the primary vortex. Notably, absolute errors surpass 10⁻² at the cavity vertices, and errors near the primary vortex exceed those in other areas by around 10⁻³. These discrepancies are attributed to the accumulation of errors and rapid changes in the physical distribution patterns.

The absolute errors of the predicted density ρ are notably smaller than those of u and v. We can therefore infer that regions with intense variations in the physical patterns within the computational domain pose a challenge for the model's learning process. Moreover, the mesoscopic physics-based approach, SRT-LBM, gives the model a distinct advantage in accurately capturing the distribution patterns of ρ.

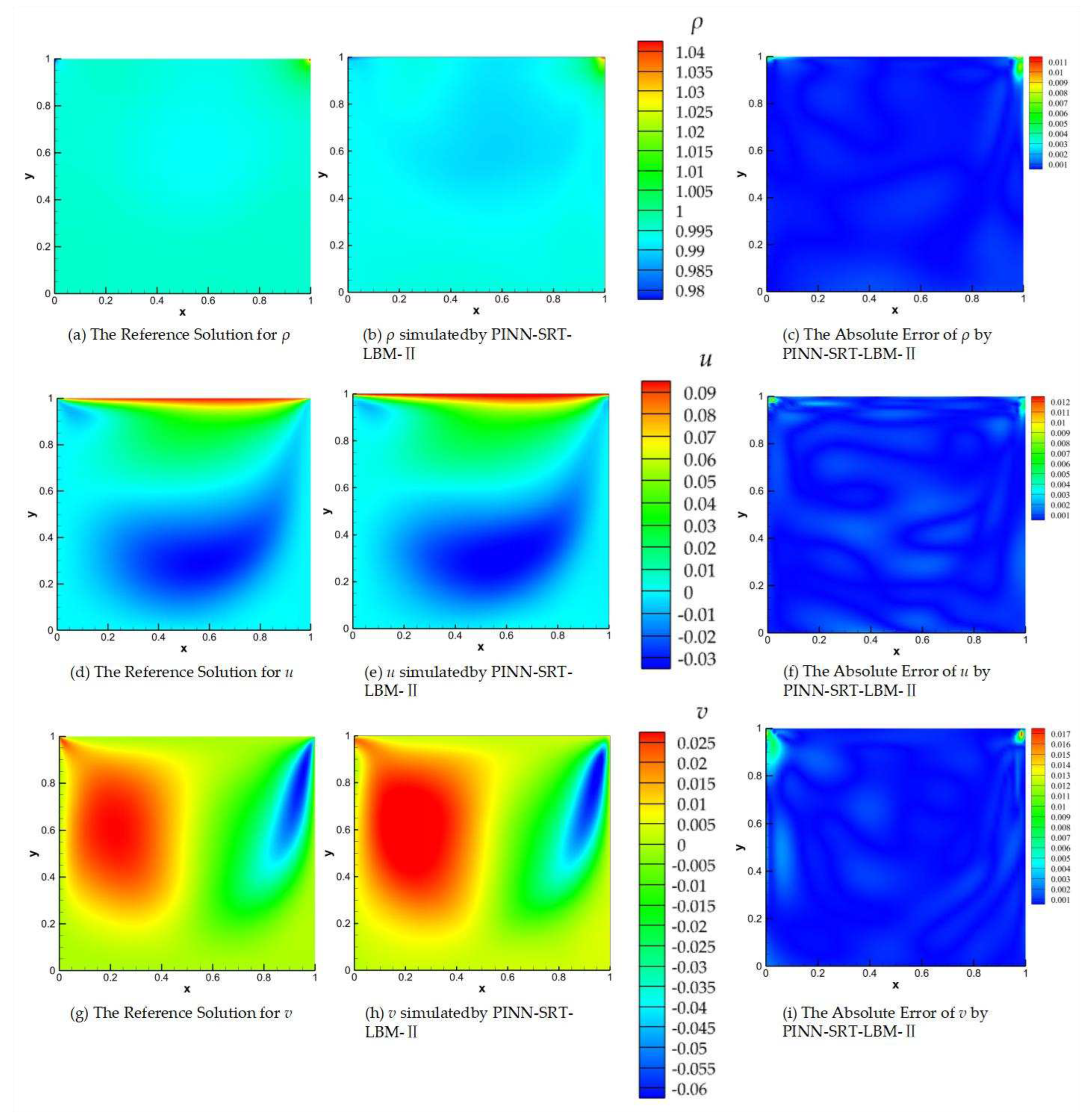

Following the layout of Figure 11, Figures 12, 13, and 14 show the reference solutions, model predictions, and absolute errors for Re = 400, 1000, and 2000 at T = 100.

Comparing Figure 11, Figure 12, Figure 13, and Figure 14 as the Reynolds number increases leads to the following conclusions. Given that the maximum absolute error remains below 10⁻², the PINN-SRT-LBM-II model is stable in simulating 2D lid-driven cavity flow. Furthermore, regions with more pronounced variations in the physical distribution patterns tend to exhibit higher absolute errors than other areas of the computational domain, because the nonlinearity of the flow escalates with the Reynolds number. The nonlinearity captured by the PINN-SRT-LBM-II model, however, does not match it perfectly. For instance, Figure 11(c), (f), and (i) show irregular oscillations and an uneven distribution of absolute errors within the simulation domain. As a result, prediction accuracy gradually decreases at higher Reynolds numbers. To address this, attention should be given to countering overfitting during training [44].

To better assess the agreement between the results of the PINN-SRT-LBM-II model and the reference solution, Table 4 compares the coordinates of the main vortex center. A denotes the predictions of PINN-SRT-LBM-II, while B, C, and D correspond to the results presented in references [45, 46, 47].

The coordinates of the main vortex center show strong physical consistency between the predictions and the reference solutions. This indicates that the predictions of the PINN-SRT-LBM-II model are physically meaningful and that the model successfully incorporates the physical constraints of SRT-LBM.

The relative L2 errors of the simulation results are shown in Table 5. The results indicate that the relative L2 errors do not rise markedly as the Reynolds number increases. This further substantiates the physical reliability and stability of PINN-SRT-LBM.

Through comparative experiments, this work has identified the dimensions for which the PINN-SRT-LBM-I and PINN-SRT-LBM-II models are each suited. PINN-SRT-LBM successfully simulated the inverse problem of 2D lid-driven cavity flow by learning from a sparse, randomly distributed 1% of the data within the computational domain. This extension from one dimension to two demonstrates that dispersed temporal and spatial data, akin to the passive scalar or limited data encountered in inverse problems, can be used to train neural network models.

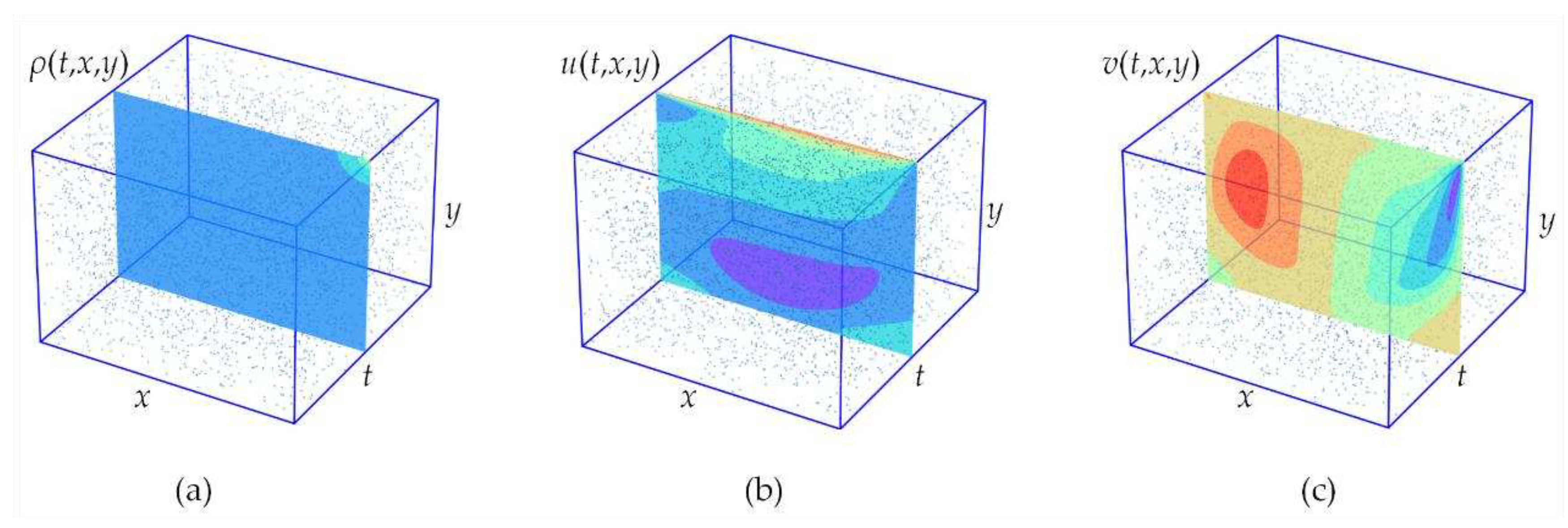

3.3. Flow around Circular Cylinder

In this part, this study employs the 2D flow around a circular cylinder dataset provided by M. Raissi et al. in reference [25]. Flow around a circular cylinder is a classic simulation problem in CFD. The experiments in this subsection have two purposes: to verify the performance of PINN-SRT-LBM in simulating the inverse problem of incompressible unsteady flows, and to compare it with the work of other researchers. Open-source code exists for PINNs that solve the inverse problem of fluid mechanics using the Navier-Stokes equations (hereafter referred to as PINN-NS). The performance of PINN-SRT-LBM on the inverse problem of unsteady flows is described in detail in this part.

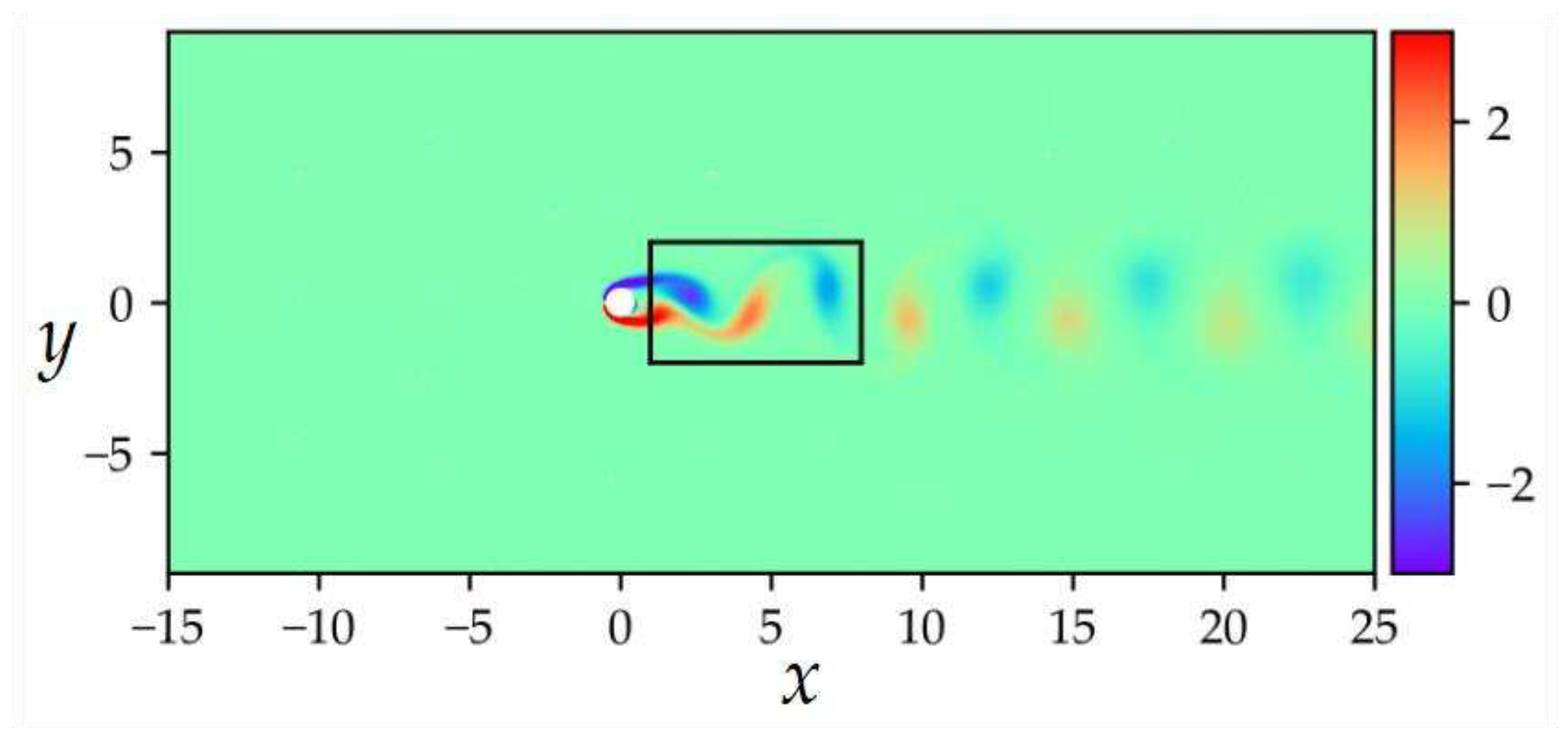

The dataset was set up with Re = 100 and focuses on the wake region of the flow around the circular cylinder. It contains 5000 sampled training points, with a total of T = 200 time slices. For simplicity, data collection was confined to a rectangular region downstream of the cylinder, as shown in Figure 15. The solver used in this case was the spectral/hp element solver NekTar [48]. Since the dataset contains only velocity and pressure, the experiment in this part focuses solely on simulating the velocities u(t, x, y) and v(t, x, y) within the sampled region.

This case assumes that a uniform freestream velocity profile is imposed at the left boundary of the domain Ω = (-15,25) × (-8,8). A zero-pressure outflow condition is applied at the right boundary, 25 units downstream of the cylinder. The top and bottom boundaries of the domain are periodic. The initial velocity of the fluid is U = 1, the cylinder diameter is D = 1, and the viscosity coefficient is ν = 0.01. The system exhibits a periodic steady state characterized by an asymmetric vortex shedding pattern in the cylinder wake, known as the Kármán vortex street [49]. The simulation is confined to the region [1,7] × [-2,2].
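The stated parameters are consistent with the Reynolds number of the dataset, which can be checked with the usual definition Re = U·D/ν. The sketch below assumes that ν is the kinematic viscosity and that the cylinder diameter is the characteristic length; the function name `reynolds_number` is hypothetical.

```python
def reynolds_number(velocity, length, viscosity):
    """Re = U * L / nu for a characteristic velocity, length, and kinematic viscosity."""
    return velocity * length / viscosity


# Values from the text: U = 1, D = 1, nu = 0.01.
re_cylinder = reynolds_number(velocity=1.0, length=1.0, viscosity=0.01)
print(round(re_cylinder))
```

The result agrees with the Re = 100 at which the dataset was generated.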

For the training settings, the foundational components DNN-I and DNN-II within PINN-SRT-LBM-I and PINN-SRT-LBM-II were configured with 8 hidden layers, each containing 40 neurons and using the tanh activation function. The DNNs used for comparison were also designed with 8 hidden layers and 40 neurons per layer. The network configuration of PINN-NS followed the default structure outlined in [25]: 6 layers with 40 neurons each and the tanh activation function. The DNNs were optimized with the SGD optimizer, while the other models used a combination of the Adam and L-BFGS optimizers. The maximum number of iterations was uniformly set to 100,000.

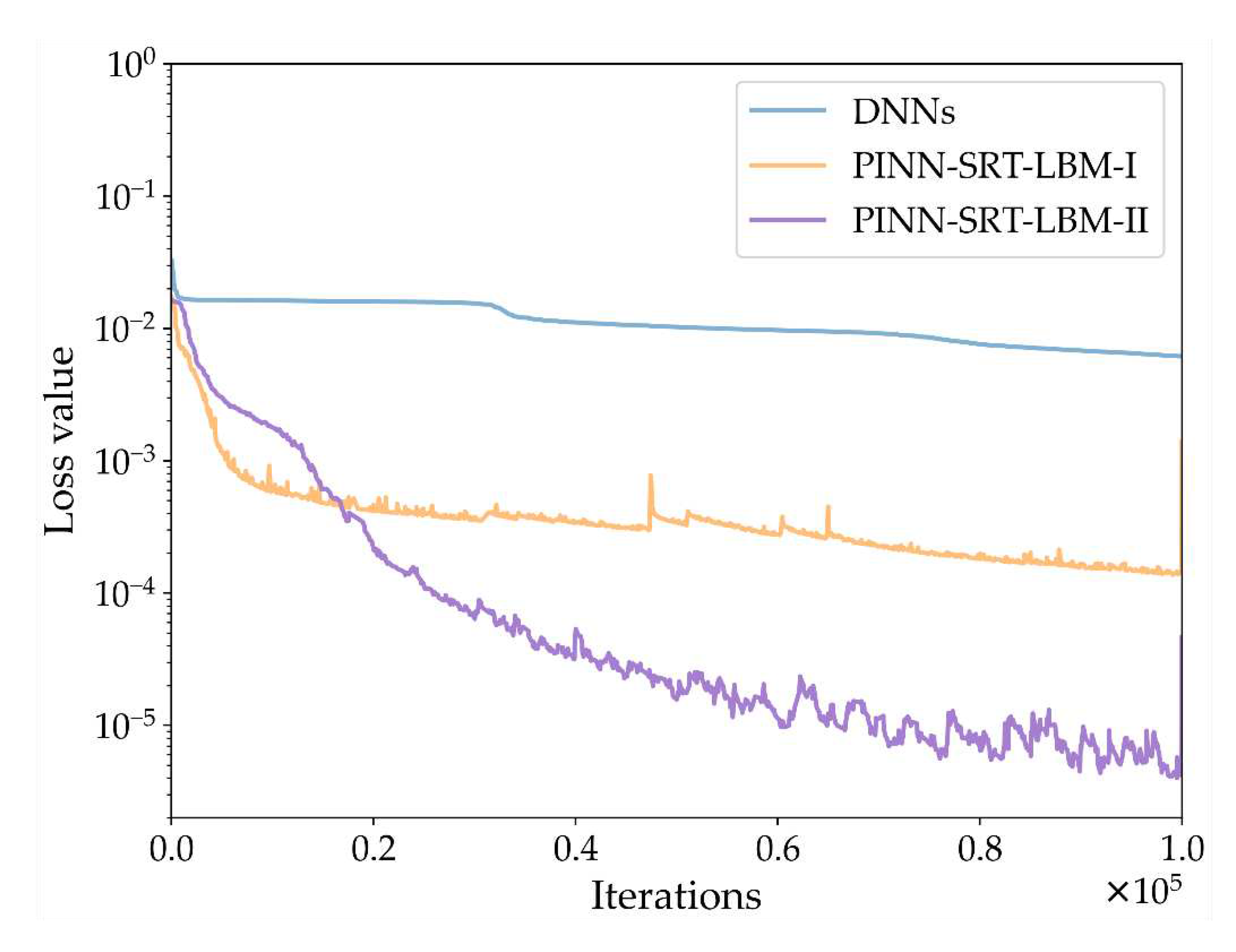

Figure 16 shows the training loss curves of the three models: DNNs, PINN-SRT-LBM-I, and PINN-SRT-LBM-II. The trend of the loss values in Figure 16 shows that the PINN-SRT-LBM-II model exhibits a more distinct convergence range when simulating the inverse problem of 2D flow around a circular cylinder. This suggests that the PINN-SRT-LBM-II model is more precise than the other models. Additionally, Table 6 presents the relative L2 errors for u and v at T = 100.

The relative L2 errors of the four models in Table 6 support the following conclusions. Under the same training conditions, the proposed PINN-SRT-LBM-II model achieves an accuracy comparable to that of the PINN-NS model in [25]. Additionally, PINN-SRT-LBM-II outperforms PINN-SRT-LBM-I in simulating 2D inverse problems. Consequently, the subsequent experiments use the PINN-SRT-LBM-II model.

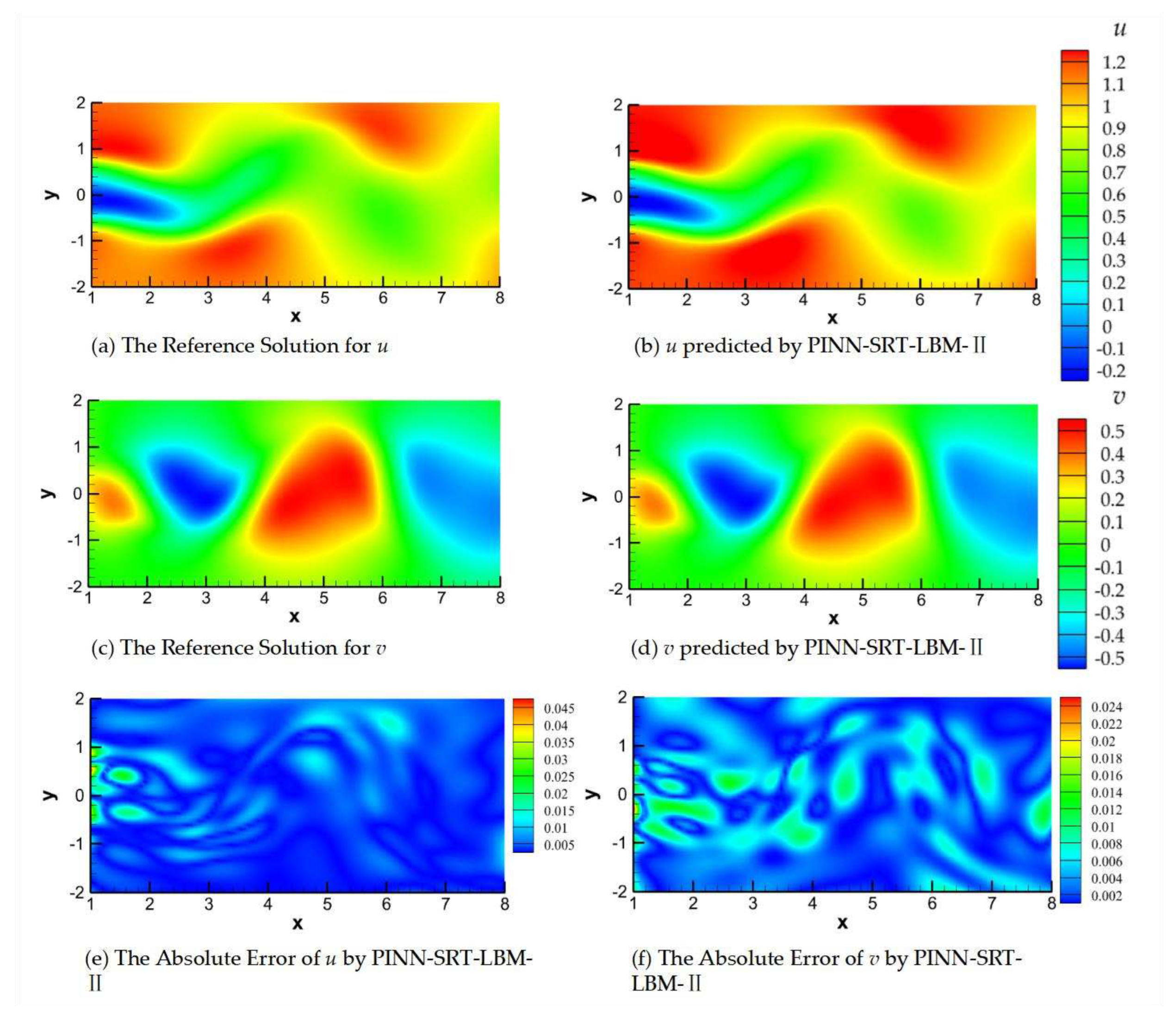

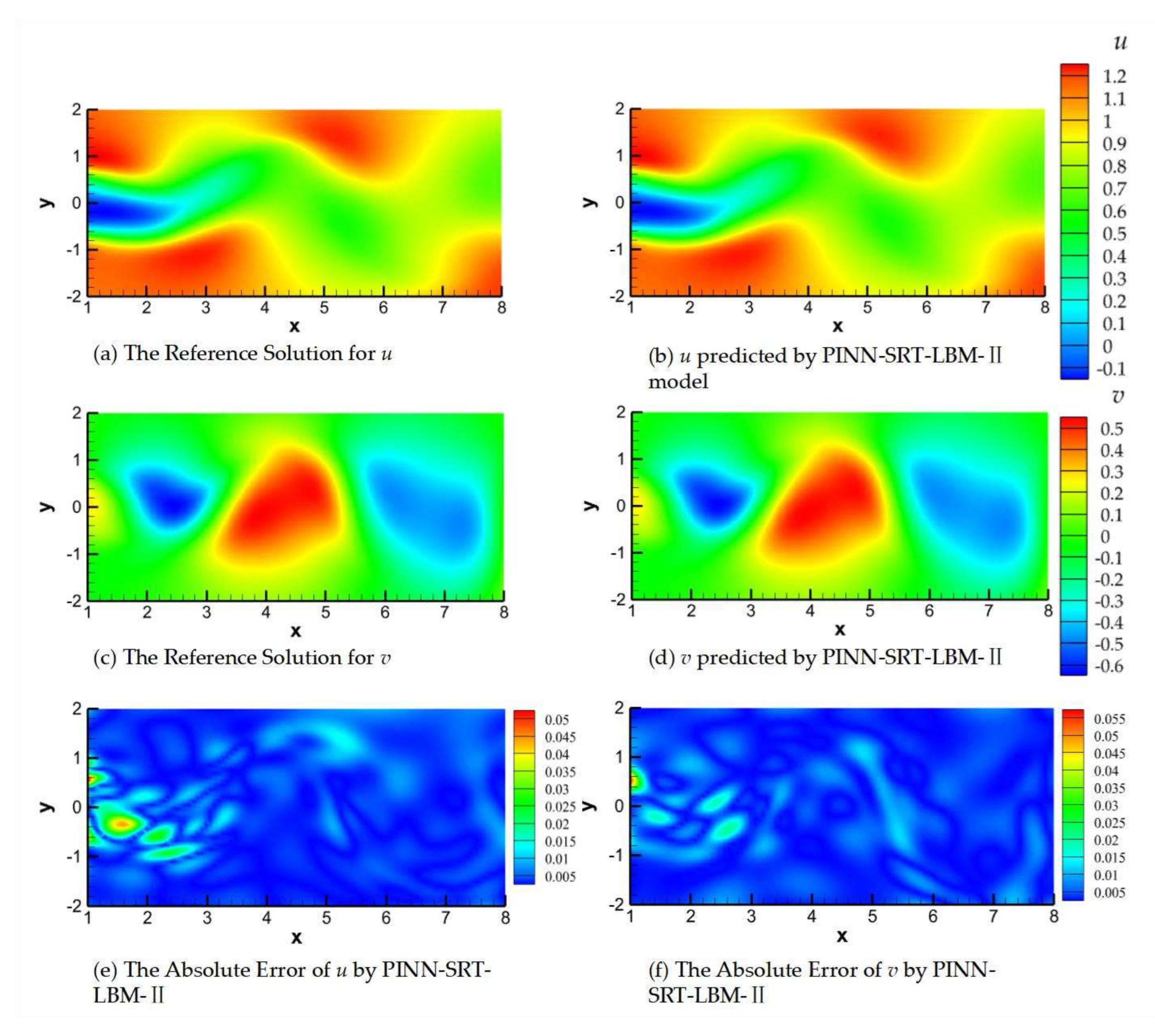

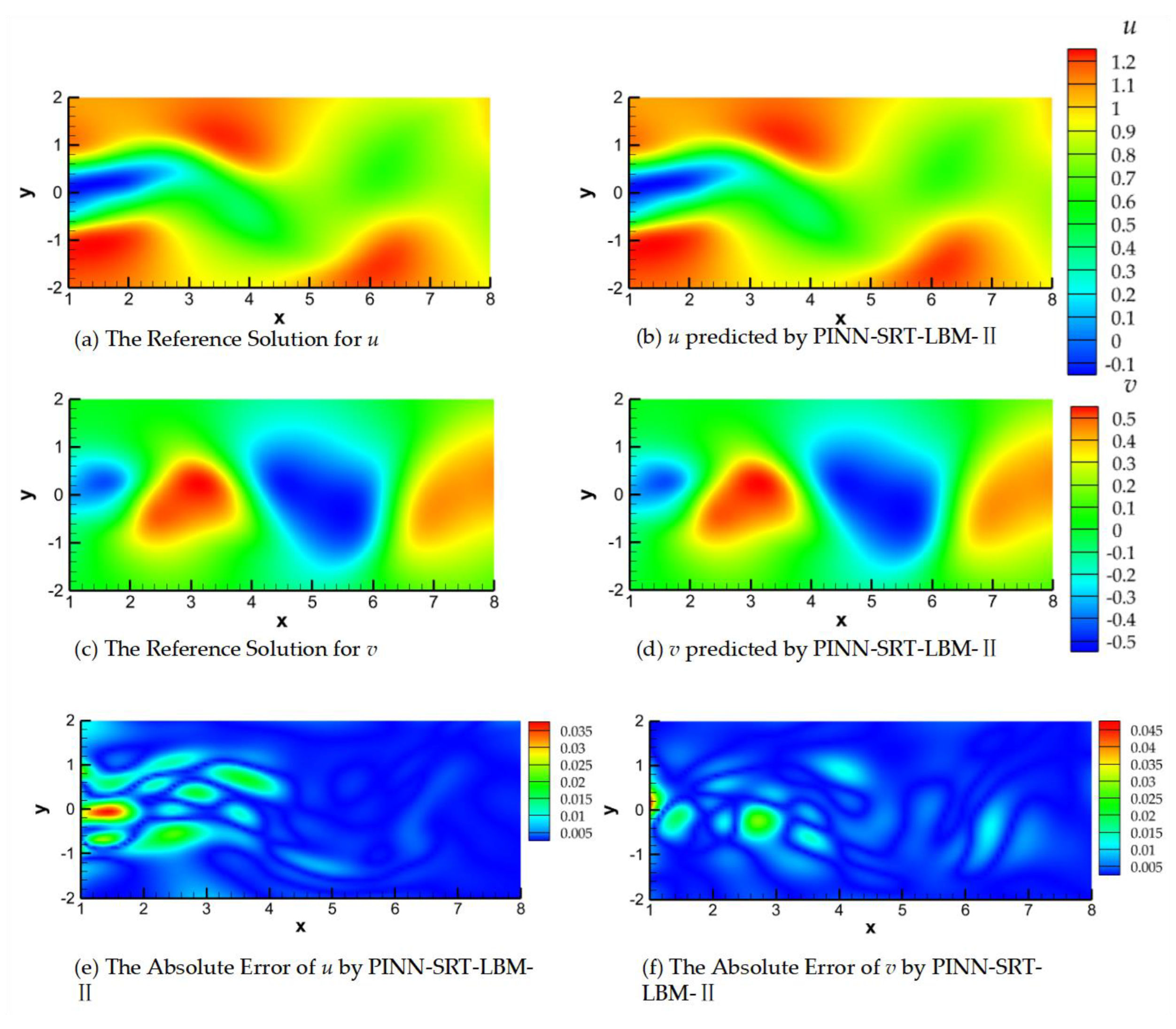

Flow around a circular cylinder is a classic unsteady flow phenomenon. This paper attempts to verify the learning effect of PINN-SRT-LBM over the whole continuous time domain. Predictions with the PINN-SRT-LBM-II model were made at T = 50, 100, and 200; the respective results are shown in Figure 17, Figure 18, and Figure 19.

In Figure 17, Figure 18, and Figure 19, panels (a) and (b) show the reference solution and model prediction for u (the velocity component in the x-direction), panels (c) and (d) show the reference solution and model prediction for v (the velocity component in the y-direction), and panels (e) and (f) show the absolute errors for u and v, respectively.

The absolute errors in panels (e) and (f) of Figure 17, Figure 18, and Figure 19 lead to a reasonable inference. Due to error accumulation, areas with larger absolute errors are located predominantly in regions of significant fluid variation, which aligns well with the flow patterns in the cylinder wake. The shedding of the Kármán vortex street in the wake exhibits the most pronounced variations, making it difficult for neural networks to capture the underlying physical distribution patterns. This issue can potentially be addressed by changing the training methodology and optimizing the network structure. Comparing the time snapshots T = 50, 100, and 200 in sequence, a noteworthy observation emerges at the early time slice (T = 50), shown in Figure 17. Because the flow has not yet reached a relatively stable configuration and the Kármán vortex street sheds periodically, the absolute errors of the PINN-SRT-LBM-II predictions oscillate across the computational domain, owing to the abrupt variations in the underlying physical distribution patterns.

The absolute errors of the model predictions in Figure 17, Figure 18, and Figure 19 do not exceed 1×10⁻¹ in magnitude. Furthermore, across most of the computational domain the absolute errors are smaller than 1×10⁻². We conclude that the PINN-SRT-LBM-II model can provide highly accurate predictions across the continuous temporal and spatial domain of the unsteady 2D inverse problem.

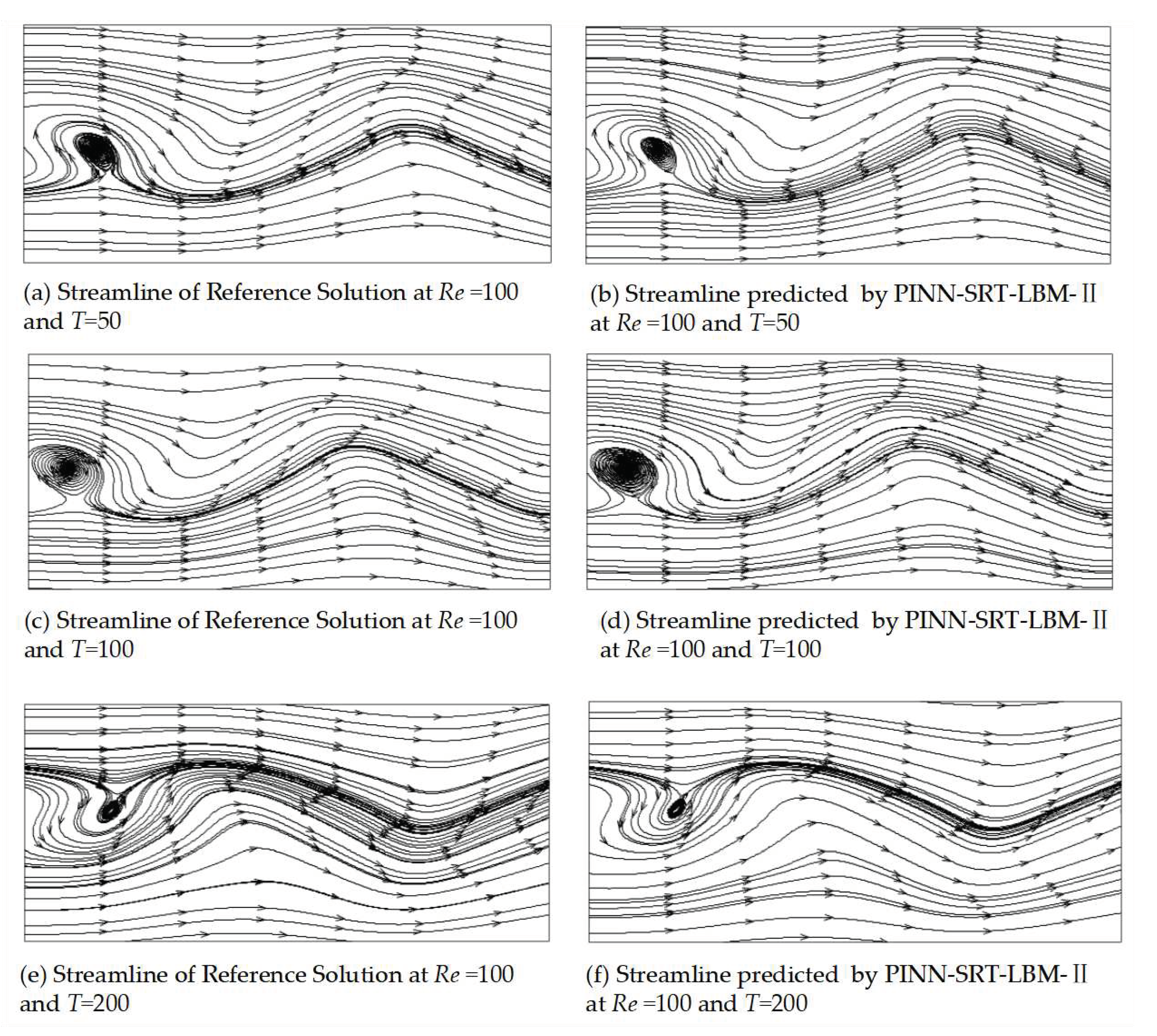

To validate the physical reliability of the predictions generated by the PINN-SRT-LBM-II model, Figure 20 provides streamline diagrams for T = 50, 100, and 200. In Figure 20, panels (a) and (b) show the streamline patterns of the reference solution and the model prediction at T = 50; panels (c) and (d) show the patterns at T = 100; and panels (e) and (f) show the patterns at T = 200. Comparing the streamline patterns of the reference solution with those of the model predictions shows that the positions of vortex shedding are nearly identical. Although the dataset captures only a portion of the wake and excludes the behavior near the boundary, the agreement in vortex shedding positions and streamline patterns between the reference solution and the model predictions still underscores the physical reliability of the results obtained from the PINN-SRT-LBM-II model.

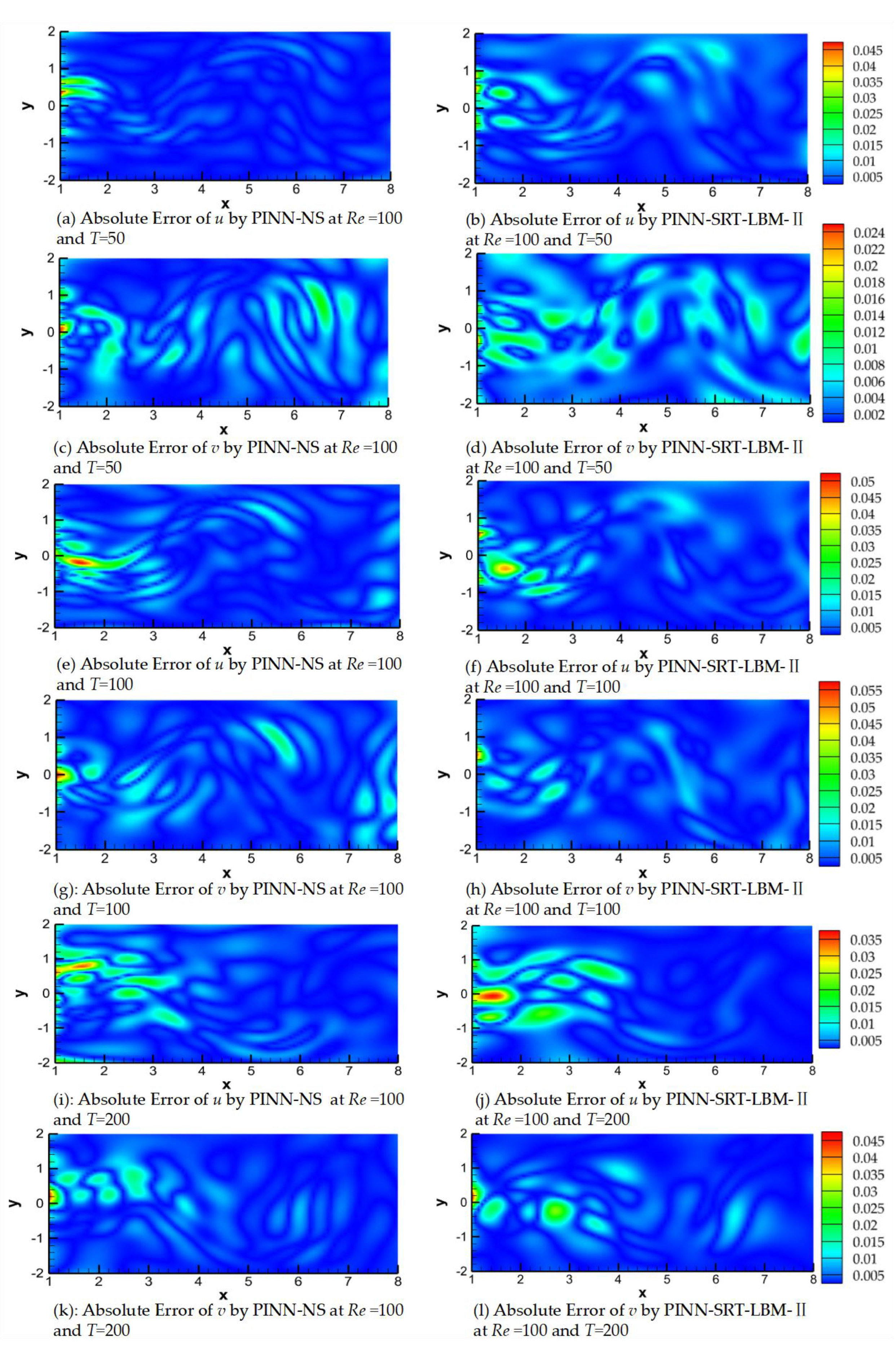

Finally, Figure 21 shows the absolute errors for u and v simulated by PINN-NS and PINN-SRT-LBM-II. The visualizations suggest that, under the same training parameter settings, the accuracy of PINN-SRT-LBM-II is comparable to that of PINN-NS. At the boundaries of the simulation region, PINN-SRT-LBM-II performs better than PINN-NS. However, in regions with more pronounced variations in physical behavior, the predictive accuracy of PINN-SRT-LBM-II is at a disadvantage. Overall, the accuracy of the PINN-SRT-LBM-II model approaches that of PINN-NS. This underscores the potential of combining PINNs with mesoscopic physics methods, in addition to macroscopic ones, and demonstrates the feasibility of this combination for simulating inverse problems in fluid mechanics.

In this part, the PINN-SRT-LBM-II model was tested on 2D unsteady inverse problems in fluid mechanics. Moreover, for an impartial assessment of its capabilities, we compared its prediction accuracy with that of existing PINNs coupled with macroscopic physics methods, in particular the Navier-Stokes equations. The test data and comparative results together indicate the substantial potential of the PINN-SRT-LBM-II model in inverse problem simulations. Notably, for 2D inverse problems in fluid mechanics, the performance of the PINN-SRT-LBM-II model has already reached the level presented in reference [24], where PINNs were combined with the Navier-Stokes equations.