1. Introduction

Entropy is a fundamental measure of the uncertainty about the state of a physical system, that is, of the information that would be needed to specify the disorder, randomness, or uncertainty in the system's micro-structure. For this reason, researchers in many scientific fields have continually extended, interpreted, and applied the notion of entropy, which Clausius [1] introduced in thermodynamics.

Several generalizations of the celebrated Shannon entropy, originally introduced in the context of information processing [2], have been proposed in the literature. For a broader survey of entropy measures, the reader is referred to [3,4,5,6,7,8].

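Given a probability distribution $P = \{p_i\}_{i=1}^{N}$ on a probability space, the Shannon entropy $S$ under $P$ (see [9]) is generated as:

$$S = -\left.\frac{d}{dt}\sum_{i=1}^{N} p_i^{t}\right|_{t=1} = -\sum_{i=1}^{N} p_i \ln p_i, \qquad (1)$$

where $N$ is the total number of (microscopic) possibilities and $\sum_{i=1}^{N} p_i = 1$.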
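The following Python sketch checks the generating procedure of Eq. (1) numerically. It approximates the derivative of $\sum_i p_i^{t}$ at $t = 1$ with a central finite difference and compares the result with the closed form $-\sum_i p_i \ln p_i$. The function names and the step size `h` are illustrative choices, not part of the original method.

```python
import math


def shannon_entropy(p):
    """Closed form S = -sum_i p_i ln p_i (zero probabilities contribute nothing)."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def generating_function(p, t):
    """f(t) = sum_i p_i**t, the function differentiated in Eq. (1)."""
    return sum(pi ** t for pi in p if pi > 0)


def shannon_via_derivative(p, h=1e-6):
    """Approximate -f'(1) with a central finite difference of step h."""
    return -(generating_function(p, 1 + h) - generating_function(p, 1 - h)) / (2 * h)


p = [0.5, 0.25, 0.25]
print(shannon_entropy(p))         # 1.5 ln 2, about 1.0397
print(shannon_via_derivative(p))  # should match the closed form to many decimals
```

For this distribution the exact value is $1.5 \ln 2$, since $-\left(0.5 \ln 0.5 + 2 \cdot 0.25 \ln 0.25\right) = 0.5 \ln 2 + \ln 2$.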

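Similarly, Tsallis entropy (also called $q$-entropy) [10,11,12] is generated by the same procedure but using Jackson's $q$-derivative operator

$$D_q f(t) = \frac{f(qt) - f(t)}{qt - t}, \qquad q \neq 1,$$

[13] (see also [9,14,15]), and it is given by

$$S_q = -\left.D_q \sum_{i=1}^{N} p_i^{t}\right|_{t=1} = \frac{1 - \sum_{i=1}^{N} p_i^{q}}{q - 1} = -\sum_{i=1}^{N} p_i^{q} \ln_q p_i, \qquad (2)$$

where the $q$-logarithm is defined by

$$\ln_q x = \frac{x^{1-q} - 1}{1 - q} \qquad (x > 0,\; q \neq 1), \qquad \ln_1 x = \ln x.$$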

Tsallis entropy is connected to the Shannon entropy through the limit

$$\lim_{q \to 1} S_q = S,$$

which is why it is regarded as a one-parameter extension of the Shannon entropy.
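The Jackson-derivative construction of the Tsallis entropy can be checked numerically in the same way. The sketch below assumes the standard definitions $D_q f(t) = (f(qt) - f(t))/(qt - t)$ and $\ln_q x = (x^{1-q} - 1)/(1 - q)$. It evaluates $-D_q \sum_i p_i^{t}$ at $t = 1$, compares the result with $-\sum_i p_i^{q} \ln_q p_i$, and shows both approaching the Shannon value as $q \to 1$. The names and the test distribution are illustrative.

```python
import math


def jackson_derivative(f, t, q):
    """Jackson q-derivative D_q f(t) = (f(q t) - f(t)) / (q t - t); needs q != 1, t != 0."""
    return (f(q * t) - f(t)) / (q * t - t)


def ln_q(x, q):
    """q-logarithm (x**(1 - q) - 1) / (1 - q); equals ln x when q == 1."""
    if q == 1:
        return math.log(x)
    return (x ** (1 - q) - 1) / (1 - q)


def tsallis_via_jackson(p, q):
    """S_q = -D_q sum_i p_i**t evaluated at t = 1 (q != 1)."""
    def f(t):
        return sum(pi ** t for pi in p)
    return -jackson_derivative(f, 1.0, q)


def tsallis_closed_form(p, q):
    """S_q = -sum_i p_i**q ln_q p_i; assumes every p_i > 0."""
    return -sum(pi ** q * ln_q(pi, q) for pi in p)


p = [0.5, 0.25, 0.25]
for q in (0.5, 2.0, 1.0001):
    print(q, tsallis_via_jackson(p, q), tsallis_closed_form(p, q))
# For q = 2 both give 1 - sum_i p_i**2 = 0.625; near q = 1 both approach 1.5 ln 2.
```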

Several other entropy measures have been derived by following the same procedure, that is, by applying suitable fractional-order differentiation operators to the generating function with respect to the variable $t$ and then taking the corresponding limit in $t$; see, for instance, [16,17,18,19,20,21,22,23].

A measure of information called extropy was introduced by Lad, Sanfilippo and Agrò [24] as the complementary dual of the Shannon entropy. This measure of uncertainty has received considerable attention in recent years [25,26,27]. For a distribution $P = \{p_i\}_{i=1}^{N}$, the extropy is $J(P) = -\sum_{i=1}^{N} (1 - p_i)\ln(1 - p_i)$. When $N = 2$ we have $1 - p_1 = p_2$, so the two sums contain the same terms and the entropy and the extropy of a binary distribution are identical.
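The binary identity can be illustrated with the extropy definition of Lad, Sanfilippo and Agrò, $J(P) = -\sum_{i=1}^{N} (1 - p_i)\ln(1 - p_i)$. The sketch below compares it with the Shannon entropy for a two-outcome and a three-outcome distribution. The example distributions are arbitrary.

```python
import math


def shannon_entropy(p):
    """S = -sum_i p_i ln p_i."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def extropy(p):
    """J = -sum_i (1 - p_i) ln(1 - p_i), the extropy of Lad, Sanfilippo and Agrò."""
    return -sum((1 - pi) * math.log(1 - pi) for pi in p if pi < 1)


for p in ([0.3, 0.7], [0.5, 0.25, 0.25]):
    print(p, shannon_entropy(p), extropy(p))
# The binary case gives the same value up to rounding; the three-outcome case does not.
```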

In recent years, complex networks have been studied extensively because they describe a wide range of systems across many disciplines and help to solve practical problems [28].

In the study of the structure of complex networks, fractional-order information dimensions have been obtained by combining fractional-order entropies with the information dimension; see, for instance, [29,30] and the references therein.

This article proposes a fractional two-parameter non-extensive information dimension of complex networks based on the fractional-order entropy proposed in [31]. This new information dimension is computed on real-world and synthetic complex networks. Besides this introduction, the paper is organized as follows.

Section 2 introduces a fractional entropy measure and the information dimension of complex networks. The new fractional information dimension is then defined in Section 3. Section 4 applies the new measure to several real-world and synthetic complex networks. Finally, the conclusions of this study are given in Section 5.

2. Preliminaries

2.1. Fractional entropy

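Following the same procedure used to obtain the Shannon and Tsallis entropies, a generalized nonextensive two-parameter entropy, named the fractional $(q,q')$ entropy, is developed in [31]. It is obtained by the action of a derivative operator previously proposed by Chakrabarti and Jagannathan [32]:

$$S_{q,q'} = -\left.D_{q,q'} \sum_{i=1}^{N} p_i^{t}\right|_{t=1} = -\sum_{i=1}^{N} \frac{p_i^{q} - p_i^{q'}}{q - q'},$$

where the $(q,q')$-derivative $D_{q,q'}$ of a function $f$ is given by

$$D_{q,q'} f(t) = \frac{f(qt) - f(q't)}{(q - q')\,t}, \qquad q \neq q'.$$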
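The two-parameter construction can be sketched numerically under one explicit assumption: the entropy of [31] is obtained, as in Eqs. (1) and (2), by applying the Chakrabarti–Jagannathan operator $D_{q,q'} f(t) = (f(qt) - f(q't))/((q - q')t)$ to $\sum_i p_i^{t}$ and evaluating at $t = 1$. The hypothetical helper `two_param_entropy` below does exactly that. Setting $q' = 1$ recovers the Tsallis entropy, and letting $q, q' \to 1$ recovers the Shannon entropy.

```python
import math


def cj_derivative(f, t, q, qp):
    """Chakrabarti-Jagannathan (q, q')-derivative:
    D_{q,q'} f(t) = (f(q t) - f(q' t)) / ((q - q') t), for q != q' and t != 0."""
    return (f(q * t) - f(qp * t)) / ((q - qp) * t)


def two_param_entropy(p, q, qp):
    """Hypothetical helper: -D_{q,q'} sum_i p_i**t at t = 1,
    i.e. -sum_i (p_i**q - p_i**q') / (q - q')."""
    def f(t):
        return sum(pi ** t for pi in p if pi > 0)
    return -cj_derivative(f, 1.0, q, qp)


def tsallis(p, q):
    """Tsallis entropy (1 - sum_i p_i**q) / (q - 1), q != 1."""
    return (1 - sum(pi ** q for pi in p if pi > 0)) / (q - 1)


p = [0.5, 0.25, 0.25]
print(two_param_entropy(p, 2.0, 1.0), tsallis(p, 2.0))  # q' = 1: both 0.625
print(two_param_entropy(p, 1 + 1e-5, 1 - 1e-5))        # close to 1.5 ln 2
```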

Following the general idea that extropy is the complementary dual version of entropy, we present the

extropy for a discrete random variable

X as

A straightforward computation shows that Eq. (5) can be expressed in terms of the Tsallis entropy:

The

,

and

for

,

. Consider a system composed of two independent subsystems, A and B, with factorized probabilities

and

then

where,

entropy resembles the Tsallis entropy Eq. (2) and

the Shannon entropy Eq. (1). Thus,

is non-additive for

.

2.2. Information dimensions of complex networks

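The information dimension, which measures the topological complexity of a network, is briefly sketched here. Starting from the Shannon entropy Eq. (1), a definition of the information dimension is introduced in [33] as follows:

$$d_I = -\lim_{\varepsilon \to 0} \frac{I(\varepsilon)}{\ln \varepsilon}, \qquad I(\varepsilon) = -\sum_{i=1}^{N_b(\varepsilon)} p_i(\varepsilon) \ln p_i(\varepsilon), \qquad (9)$$

where $p_i(\varepsilon) = n_i(\varepsilon)/n$, $n_i(\varepsilon)$ is the mass (number of nodes) of the $i$th box of size $\varepsilon$, $n$ is the number of nodes of the network, and $N_b(\varepsilon)$ is the minimum number of boxes needed to cover the network. The reader is referred to [34,35] for in-depth details on obtaining $N_b(\varepsilon)$.

Applying Eq. (9), we can assert that

$$I(\varepsilon) \approx -d_I \ln \varepsilon + \beta \qquad (10)$$

for some constant $\beta$, where $\varepsilon$ is the diameter of the boxes used to cover the network.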
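The quantities behind Eqs. (9) and (10) can be sketched as follows. The sketch assumes the usual form $I(\varepsilon) = -\sum_i p_i(\varepsilon) \ln p_i(\varepsilon)$ with $p_i(\varepsilon) = n_i(\varepsilon)/n$, and a log-linear fit $I(\varepsilon) \approx -d_I \ln \varepsilon + \beta$. The box masses are hypothetical. Ordinary least squares is used for simplicity; it is not the MATLAB nonlinear regression used in Section 4.

```python
import math


def box_information(box_masses, n):
    """I(eps) = -sum_i p_i ln p_i with p_i = n_i(eps) / n over the N_b(eps) boxes."""
    if sum(box_masses) != n:
        raise ValueError("box masses must add up to the number of nodes n")
    return -sum((m / n) * math.log(m / n) for m in box_masses if m > 0)


def fit_log_linear(eps_values, info_values):
    """Least-squares fit of I(eps) = -d_I ln(eps) + beta; returns (d_I, beta)."""
    xs = [math.log(e) for e in eps_values]
    k = len(xs)
    mean_x = sum(xs) / k
    mean_y = sum(info_values) / k
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, info_values))
    slope = sxy / sxx
    return -slope, mean_y - slope * mean_x


# Hypothetical coverings of a 12-node network: box masses for each box size eps.
coverings = {1: [1] * 12, 2: [2] * 6, 3: [4, 4, 4], 4: [6, 6]}
eps_values = sorted(coverings)
info_values = [box_information(coverings[e], 12) for e in eps_values]
d_I, beta = fit_log_linear(eps_values, info_values)
print(d_I, beta)
```

Because $I(\varepsilon)$ decreases as the boxes grow, the fitted slope is negative and the estimate of $d_I$ is positive.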

3. Fractional information dimension of complex networks

Now we proceed to the primary goal of this article, which is to introduce the fractional

information dimension of complex networks, denoted by

as follows:

where $p_i(\varepsilon) = n_i(\varepsilon)/n$, $n_i(\varepsilon)$ is the mass of the $i$th box of size $\varepsilon$, $n$ is the number of nodes of the network, and $N_b(\varepsilon)$ is the minimum number of boxes needed to cover the network. The parameters

depend on the minimal covering of the network; thus, as in previous research on complex networks [29,30,36,37,38], the maximal-entropy minimal covering principle was adopted for the computation of

, where

denotes the diameter of the network.

For some constant

, Eq. (12) is deduced from Eq. (11):

3.1. Computation of the parameters

The computation of the parameter q relies on regarding the network as a system that can be divided into several subsystems. This division is given by the minimal boxes obtained with the box-covering algorithm; hence, the number of subsystems equals the number of boxes for a given box size.
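A box covering can be computed in several ways. The sketch below implements the greedy-coloring box covering described by Song et al. [35], in which two nodes may share a box only if their shortest-path distance is smaller than the box size. It is not the maximal-entropy minimal covering [36] adopted in this work, and it does not guarantee the minimum number of boxes. The adjacency format (a dict mapping each node to its neighbours) and the function names are assumptions made for illustration.

```python
from collections import deque


def bfs_distances(adj, source):
    """Shortest-path (hop) distances from source in an unweighted graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def greedy_box_covering(adj, box_size):
    """Greedy-coloring box covering in the spirit of Song et al. [35].

    Two nodes may share a box (color) only if their distance is < box_size.
    Returns a list of boxes, each a set of nodes. Every node is covered, but
    the number of boxes is not guaranteed to be minimal.
    """
    nodes = sorted(adj)
    dist = {u: bfs_distances(adj, u) for u in nodes}
    color = {}
    for u in nodes:
        # Colors of already-colored nodes that are too far from u to share its box.
        forbidden = {color[v] for v in color
                     if dist[u].get(v, float("inf")) >= box_size}
        c = 0
        while c in forbidden:
            c += 1
        color[u] = c
    boxes = {}
    for u, c in color.items():
        boxes.setdefault(c, set()).add(u)
    return list(boxes.values())


# Usage on a hypothetical path graph 0-1-2-3-4-5.
path = {i: [j for j in (i - 1, i + 1) if 0 <= j < 6] for i in range(6)}
for size in (1, 2, 3):
    boxes = greedy_box_covering(path, size)
    print(size, len(boxes), sorted(sorted(b) for b in boxes))
```

In this picture, each returned box is one subsystem, so `len(boxes)` plays the role of the number of subsystems for the given box size.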

For a given box size

, the value of

q is determined as the average of

, denoted by

, where

Note that

This approximation measures how far the number of subsystems for a given box size is from the mean number of subsystems over all box sizes, which serves as the baseline.

Now, to quantify the interactions among the elements that make up the subsystems (nodes) and among the subsystems themselves (boxes), the

and

were introduced in [30]:

where

is the number of nodes in

,

n is the number of nodes of the network,

are the edges among the nodes that are in

,

are the edges among the sub-networks

,

is the diameter of the box to compute the sub-network

and

is the diameter of the network.

Finally,

is defined by

where

,

are the mean of

and

, respectively, since they are vectors

. Eq. (16) is the interaction index [30], which shows whether

is equal to (

), greater (

) or lesser (

) than

; hence, it reflects which type of interaction is stronger: the inner interactions within subsystems (

), the outer interactions between them (

), or whether both are balanced.

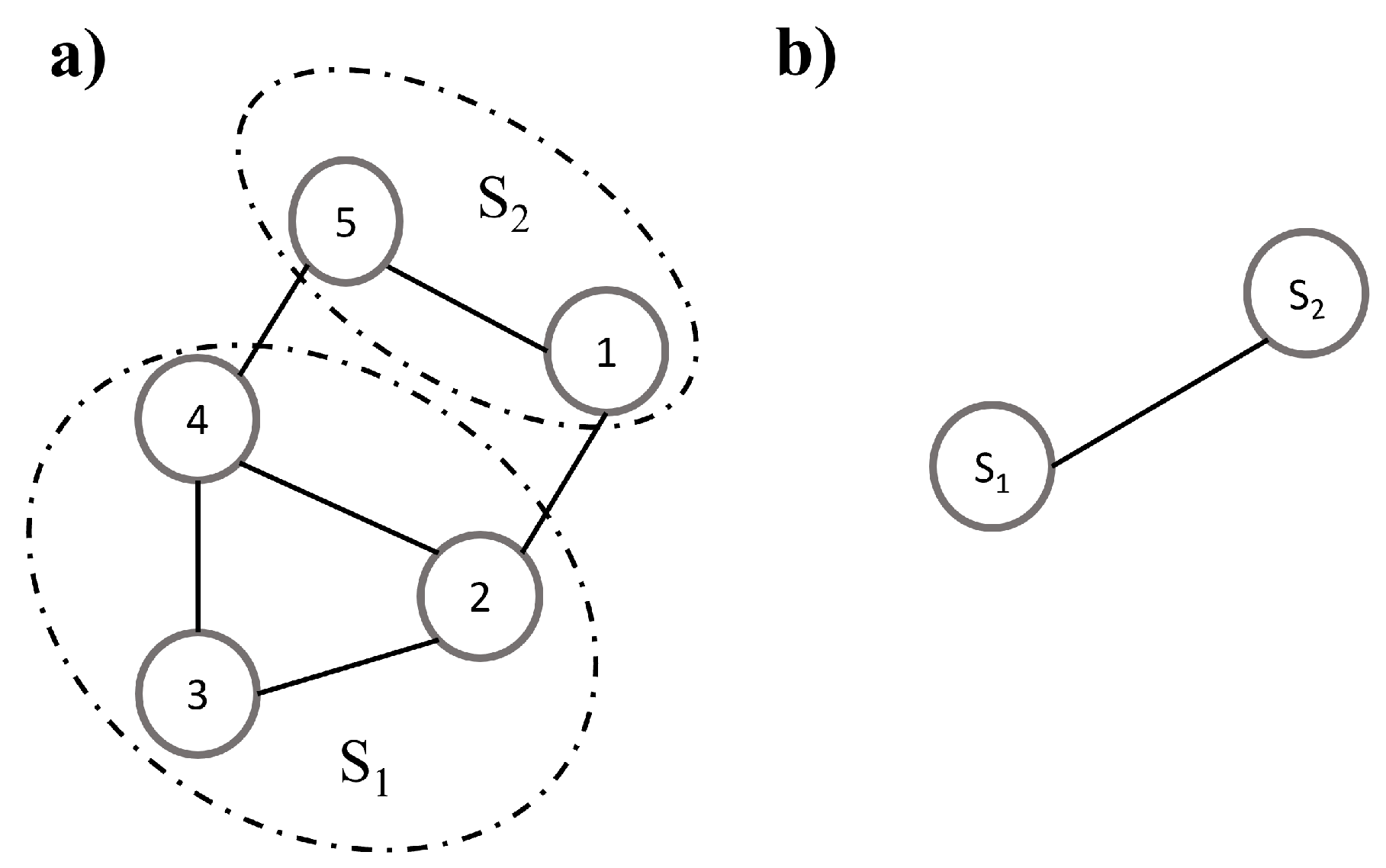

Figure 1 shows an example of the

computation. Once a box covering is performed using the approach in [35] for

, see

Figure 1a), the renormalization merges the nodes of each box into a super node (subsystem)

,

as shown in

Figure 1b). Since

, in the example,

. Furthermore,

,

and

,

which are the degrees of the nodes of the renormalized network in

Figure 1b).
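The renormalization step illustrated in Figure 1 can be sketched as follows. Given a box assignment, the hypothetical function `renormalize` counts the edges inside each box, the edges joining different boxes, and the degree of each super node in the renormalized network. These are raw counts only; the interaction measures of Eqs. (14) and (15) are not reproduced here. Node labels are assumed to be mutually comparable integers.

```python
def renormalize(adj, boxes):
    """Collapse each box into a super node.

    adj: dict mapping each (integer) node to an iterable of neighbours.
    boxes: list of sets of nodes that partition the network.
    Returns (inner_edges, outer_edges, super_degrees): inner_edges[b] counts
    edges with both ends in box b, outer_edges counts edges joining different
    boxes, and super_degrees[b] is the degree of super node b.
    """
    box_of = {u: b for b, box in enumerate(boxes) for u in box}
    inner_edges = [0] * len(boxes)
    outer_edges = 0
    super_adj = [set() for _ in boxes]
    for u in adj:
        for v in adj[u]:
            if u >= v:  # visit each undirected edge only once
                continue
            bu, bv = box_of[u], box_of[v]
            if bu == bv:
                inner_edges[bu] += 1
            else:
                outer_edges += 1
                super_adj[bu].add(bv)
                super_adj[bv].add(bu)
    super_degrees = [len(s) for s in super_adj]
    return inner_edges, outer_edges, super_degrees


# Hypothetical example: a path 0-1-2-3-4-5 covered by three boxes of two nodes.
path = {i: [j for j in (i - 1, i + 1) if 0 <= j < 6] for i in range(6)}
print(renormalize(path, [{0, 1}, {2, 3}, {4, 5}]))  # ([1, 1, 1], 2, [1, 2, 1])
```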

Since

, the results of Eq. (14) are

,

and

,

from Eq. (15); thus,

. The networks studied here have larger diameters than this example, so the steps to estimate

and

are repeated for each box size

, resulting in two vectors that are averaged to obtain

q and

.
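Once the inner and outer interaction values are available for every box size, they form two vectors whose means are compared. The sketch below only reports which mean is larger, as a qualitative reading of what the interaction index indicates. It does not implement the interaction index itself, and the input values are hypothetical.

```python
from statistics import mean


def dominant_interaction(inner_by_size, outer_by_size, tol=1e-12):
    """Average per-box-size inner and outer interaction values and report which
    type dominates. A qualitative comparison only, not the interaction index."""
    m_in, m_out = mean(inner_by_size), mean(outer_by_size)
    if abs(m_in - m_out) <= tol:
        label = "balanced"
    elif m_in > m_out:
        label = "inner"
    else:
        label = "outer"
    return label, m_in, m_out


# Hypothetical vectors, one entry per box size.
print(dominant_interaction([0.6, 0.8, 0.7], [0.3, 0.5, 0.4]))
```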

4. Results

4.1. Real-world networks

The fractional

information dimension Eq. (11) and the classical information dimension Eq. (9) were computed on 28 real-world networks gathered from [30,39]; see Table 1 for the number of nodes, number of edges, diameter, and source of each network. These networks cover several domains, such as biological, social, technological, and communication networks, so they are representative.

Next, the models of Eq. (10) and Eq. (12), which correspond to the classical information dimension and the fractional

information model, respectively, were fitted using nonlinear regression [40] in MATLAB R2022a. The best model was selected by the summed Bayesian information criterion with bonuses (SBICR) [41]. The SBICR penalizes complex models (which were estimated independently), with a penalty that depends on the size of the data sets used to estimate the parameters; hence, the model with the largest SBICR score is selected.
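The SBICR of [41] sums information criteria across data sets and adds bonus terms, and neither feature is reproduced here. As a simpler relative, the sketch below computes the single-data-set Schwarz score from the residuals of a fitted model. The score is the maximized Gaussian log-likelihood minus $(k/2)\ln m$, and larger is better. The residual values and parameter counts in the usage example are hypothetical.

```python
import math


def schwarz_score(residuals, num_params):
    """Schwarz (BIC-type) score for one data set under i.i.d. Gaussian errors:
    maximized log-likelihood minus (k / 2) ln m. Larger is better.
    Requires at least one nonzero residual."""
    m = len(residuals)
    rss = sum(r * r for r in residuals)
    log_lik = -0.5 * m * (math.log(2 * math.pi * rss / m) + 1)
    return log_lik - 0.5 * num_params * math.log(m)


# Hypothetical residuals of two fitted models on the same data points.
res_a = [0.10, -0.20, 0.05, 0.15, -0.10, 0.05]
res_b = [0.08, -0.18, 0.04, 0.12, -0.09, 0.05]
score_a = schwarz_score(res_a, num_params=2)
score_b = schwarz_score(res_b, num_params=3)
print("model A" if score_a > score_b else "model B", score_a, score_b)
```

The penalty term grows with both the number of parameters and the number of data points. This is the trade-off the text describes, but the exact SBICR values reported in the tables cannot be reproduced with this simplified score.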

Table 2 shows the fit of the information model Eq. (10) and the fractional

model Eq. (12) to the information and the fractional

information, respectively. The results of the SBICR,

,

,

q,

computations are also shown. The columns

and

show that Eq. (12) is better than Eq. (10) for all networks except PG and POW (in bold). Additionally,

means that the number of subsystems for a given

is higher than the baseline (the mean number of subsystems over all

). On the other hand, for 12 networks the interaction between subsystems (

) (in bold) is stronger, whereas for 16 networks the interactions among the elements within each subsystem, called inner interactions (

), are stronger than those between subsystems (outer interactions).

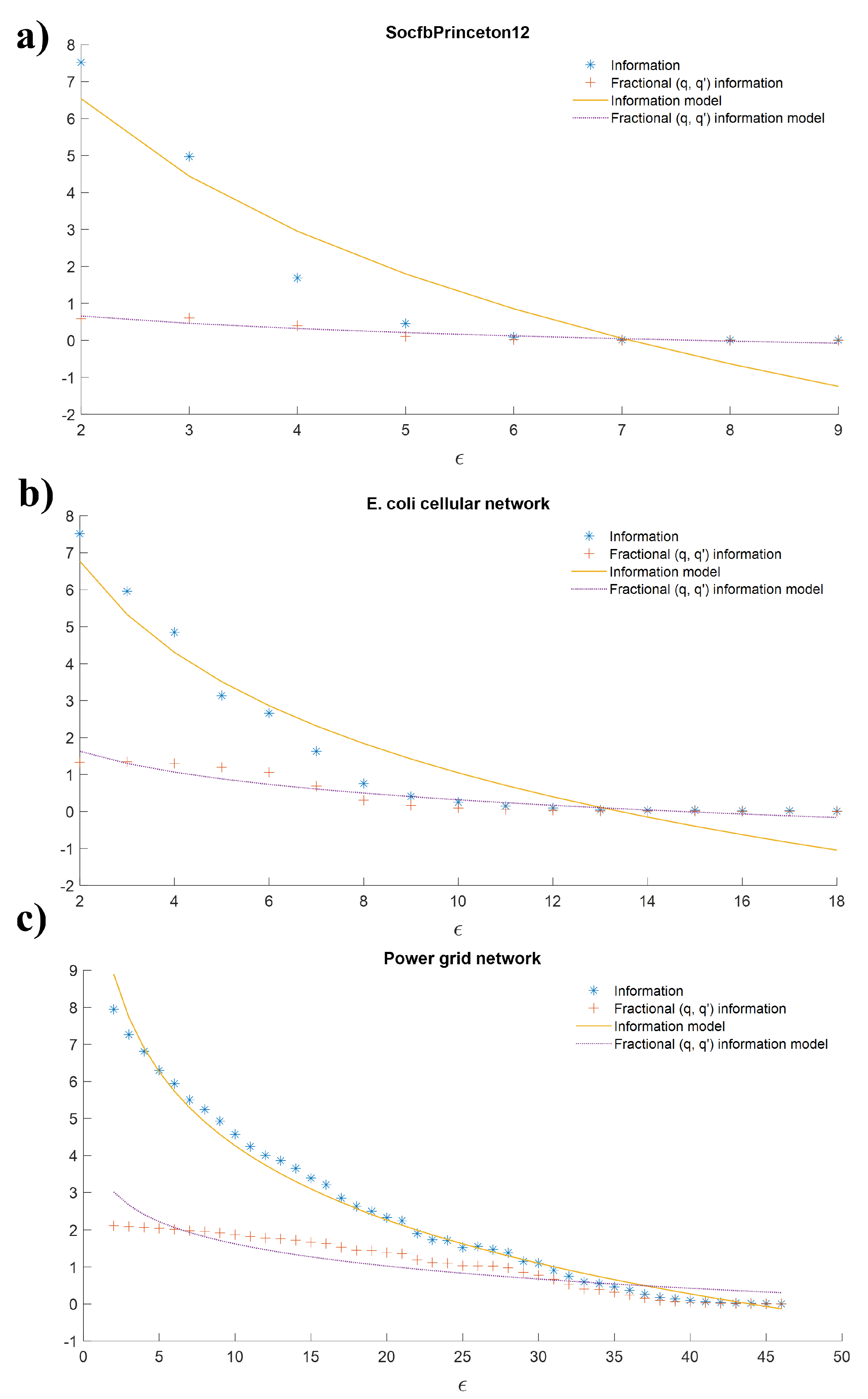

Figure 2a) shows the SocfbPrinceton12 network, where the fractional

model (dotted line) is closer to the fractional

information (+) than the information model (solid line) is to the information (*). This difference is rather difficult to appreciate in

Figure 2b), which is why the SBICR is a valuable tool. The opposite occurs in

Figure 2c) for the power grid network (PG), where the information model is better than the fractional

model, since the value of

is higher than the value

; see

Table 2.

4.2. Synthetic networks

The same procedure was followed for networks generated by the Barabási-Albert (BA) [42], Song-Havlin-Makse (SHM) [43], and Watts-Strogatz (WS) [44] models. First,

,

were computed, and then the better of the models Eq. (10) and Eq. (12) was chosen based on the SBICR. In total, 225 BA, 211 SHM, and 216 WS networks were analyzed.

Table 3 summarizes the nodes, edges,

,

and the information model selected between Eq. (10) and Eq. (12). See the supplementary material for details on the parameters used to generate the networks with each model, as well as the specific

,

,

,

,

q and

.

A remarkable finding for both real-world and synthetic networks is that

. The fractional

model fitted better for all BA and WS networks and for about 71% of the SHM networks.

Table 4 summarizes the parameters of the SHM model that produce

networks for which the information model fits better; see the supplementary material for the meaning of each parameter. The values of the SHM parameters are related to the assortativity (

) and hub repulsion (

), so the only conditions that intersect on

and

were

,

, and

.

Additionally, for BA, an average node degree equal to 1 produces networks with stronger outer interactions than inner ones. This occurs regardless of the values chosen for the number of initial nodes and the total number of nodes (n); see Table S1 of the supplementary material. On the other hand, for SHM, three networks (SHM_G-3 M-4 IB-0.40 BB-0.00 MODE-2, SHM_G-4 M-3 IB-0.00 BB-0.40 MODE-2, and SHM_G-4 M-3 IB-0.40 BB-0.00 MODE-2) and, for WS, one network (WS-2000-2-0.400000) obtained , see Tables S2 and S3. These results suggest that the fractional information dimension captures the complexity of the network topology, since the SHM model tunes the links between nodes within the boxes and the connections between boxes through . The BA and WS models lack this capability.

5. Conclusion

This article introduced a fractional information dimension for complex networks. The rationale of the definition is that a network can be divided into several subsystems. Hence, q measures how far the number of subsystems for a given box size is from the mean number of subsystems over all box sizes, which serves as the baseline. On the other hand, the interaction index measures whether the interactions between subsystems are greater than, less than, or equal to the interactions within these subsystems.

Starting from the experimental results on real-world and synthetic networks, a look at the interactions of the subsystems shows that clear interconnection patterns emerge, especially in the networks generated by the SHM model, whose parameters play a crucial role in obtaining networks for which the information model fits best. For the BA model, an average node degree equal to 1 generated networks in which the outer interactions are stronger than the inner ones, regardless of the values of the remaining parameters. Finally, our experiments reveal that in both types of networks.

Our evidence suggests that a fractional information dimension of complex networks based on extropy could serve as a complementary dual statistical index of the fractional information dimension. Determining to what extent the new formulations will be useful is an exciting direction for future research.

Supplementary Materials

The following supporting information can be downloaded at the website of this paper posted on

Preprints.org, Table S1: The SBICR of information model Eq.(8) and the fractional

information model Eq.(10),

,

and the

q,

values of BA networks; Table S2: The SBICR of information model Eq.(8) and the fractional

information model Eq.(10),

,

and the

q,

values of SHM networks; Table S3: The SBICR of information model Eq.(8) and the fractional

information model Eq.(10),

,

and the

q,

values of WS networks.

Author Contributions

Conceptualization, J.B.-R. and A.R.-A.; formal analysis, A.R.-A. and J.B.-R.; investigation, J.-S.D.-l.-C.-G.; methodology, A.R.-A. and J.-S.D.-l.-C.-G.; supervision, J.B.-R.; writing (original draft), A.R.-A. and J.B.-R.; writing (review & editing), A.R.-A. and J.B.-R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Instituto Politécnico Nacional grant number 20230066.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| SBICR | Summed Bayesian Information Criterion with Bonuses |
| BA | Barabási-Albert |
| SHM | Song, Havlin and Makse |
| WS | Watts and Strogatz |

References

- Clausius, R. The Mechanical Theory of Heat: With Its Applications to the Steam-Engine and to the Physical Properties of Bodies; Creative Media Partners: Sacramento, CA, USA, 1867.

- Shannon, C.E. A mathematical theory of communication. Bell System Technical Journal 1948, 27, 379–423.

- Beck, C. Generalized information and entropy measures in physics. Contemporary Physics 2009, 50, 495–510.

- Esteban, M.D. A general class of entropy statistics. Applications of Mathematics 1997, 42, 161–169.

- Esteban, M.D.; Morales, D. A summary on entropy statistics. Kybernetika 1995, 31, 337–346.

- Ribeiro, M.; Henriques, T.; Castro, L.; Souto, A.; Antunes, L.; Costa-Santos, C.; Teixeira, A. The entropy universe. Entropy 2021, 23, Paper No. 222, 35.

- Lopes, A.M.; Tenreiro Machado, J.A. A review of fractional order entropies. Entropy 2020, 22, Paper No. 1374, 17.

- Amigó, J.M.; Balogh, S.G.; Hernández, S. A brief review of generalized entropies. Entropy 2018, 20, Paper No. 813, 21.

- Abe, S. A note on the q-deformation-theoretic aspect of the generalized entropies in nonextensive physics. Physics Letters A 1997, 224, 326–330.

- Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. Journal of Statistical Physics 1988, 52, 479–487.

- Tsallis, C.; Tirnakli, U. Non-additive entropy and nonextensive statistical mechanics – Some central concepts and recent applications. Journal of Physics: Conference Series 2010, 201, 012001.

- Tsallis, C. Introduction to Nonextensive Statistical Mechanics: Approaching a Complex World; Springer, 2009; chapter Thermodynamical and Nonthermodynamical Applications, pp. 221–301.

- Jackson, F.H. On q-definite integrals. Quart. J. Pure Appl. Math. 1910, 41, 193–203.

- Johal, R.S. q calculus and entropy in nonextensive statistical physics. Physical Review E 1998, 58, 4147.

- Lavagno, A.; Swamy, P.N. q-Deformed structures and nonextensive-statistics: a comparative study. Physica A: Statistical Mechanics and its Applications 2002, 305, 310–315.

- Shafee, F. Lambert function and a new non-extensive form of entropy. IMA Journal of Applied Mathematics 2007, 72, 785–800.

- Ubriaco, M.R. Entropies based on fractional calculus. Physics Letters A 2009, 373, 2516–2519.

- Ubriaco, M.R. A simple mathematical model for anomalous diffusion via Fisher's information theory. Physics Letters A 2009, 373, 4017–4021.

- Karci, A. Fractional order entropy: New perspectives. Optik 2016, 127, 9172–9177.

- Karci, A. Notes on the published article "Fractional order entropy: New perspectives" by Ali KARCI, Optik-International Journal for Light and Electron Optics, Volume 127, Issue 20, October 2016, Pages 9172–9177. Optik 2018, 171, 107–108.

- Radhakrishnan, C.; Chinnarasu, R.; Jambulingam, S. A Fractional Entropy in Fractal Phase Space: Properties and Characterization. International Journal of Statistical Mechanics 2014, 2014.

- Ferreira, R.A.C.; Tenreiro Machado, J. An Entropy Formulation Based on the Generalized Liouville Fractional Derivative. Entropy 2019, 21.

- Ferreira, R.A.C. An entropy based on a fractional difference operator. Journal of Difference Equations and Applications 2021, 27, 218–222.

- Lad, F.; Sanfilippo, G.; Agrò, G. Extropy: complementary dual of entropy. Statist. Sci. 2015, 30, 40–58.

- Xue, Y.; Deng, Y. Tsallis eXtropy. Comm. Statist. Theory Methods 2023, 52, 751–762.

- Liu, J.; Xiao, F. Renyi extropy. Comm. Statist. Theory Methods 2023, 52, 5836–5847.

- Buono, F.; Longobardi, M. A dual measure of uncertainty: the Deng extropy. Entropy 2020, 22, Paper No. 582, 10.

- Newman, M.E.J. The structure and function of complex networks. SIAM Rev. 2003, 45, 167–256.

- Ramirez-Arellano, A.; Sigarreta-Almira, J.M.; Bory-Reyes, J. Fractional information dimensions of complex networks. Chaos: An Interdisciplinary Journal of Nonlinear Science 2020, 30, 093125.

- Ramirez-Arellano, A.; Hernández-Simón, L.M.; Bory-Reyes, J. Two-parameter fractional Tsallis information dimensions of complex networks. Chaos, Solitons & Fractals 2021, 150, 111113.

- Borges, E.P.; Roditi, I. A family of nonextensive entropies. Physics Letters A 1998, 246, 399–402.

- Chakrabarti, R.; Jagannathan, R. A (p, q)-oscillator realization of two-parameter quantum algebras. Journal of Physics A: Mathematical and General 1991, 24, L711.

- Wei, D.; Wei, B.; Hu, Y.; Zhang, H.; Deng, Y. A new information dimension of complex networks. Physics Letters A 2014, 378, 1091–1094.

- Song, C.; Havlin, S.; Makse, H.A. Self-similarity of complex networks. Nature 2005, 433, 392.

- Song, C.; Gallos, L.K.; Havlin, S.; Makse, H.A. How to calculate the fractal dimension of a complex network: the box covering algorithm. Journal of Statistical Mechanics: Theory and Experiment 2007, 2007, P03006.

- Rosenberg, E. Maximal entropy coverings and the information dimension of a complex network. Physics Letters A 2017, 381, 574–580.

- Ramirez-Arellano, A.; Hernández-Simón, L.M.; Bory-Reyes, J. A box-covering Tsallis information dimension and non-extensive property of complex networks. Chaos, Solitons & Fractals 2020, 132, 109590.

- Ramirez-Arellano, A.; Bermúdez-Gómez, S.; Hernández-Simón, L.M.; Bory-Reyes, J. d-summable fractal dimensions of complex networks. Chaos, Solitons & Fractals 2019, 119, 210–214.

- Rossi, R.A.; Ahmed, N.K. The Network Data Repository with Interactive Graph Analytics and Visualization. AAAI, 2015.

- Seber, G.; Wild, C. Nonlinear Regression; Wiley Series in Probability and Statistics, Wiley, 2003.

- Dudley, R.M.; Haughton, D. Information criteria for multiple data sets and restricted parameters. Statistica Sinica 1997, 265–284.

- Barabási, A.L.; Albert, R. Emergence of scaling in random networks. Science 1999, 286, 509–512.

- Song, C.; Havlin, S.; Makse, H.A. Origins of fractality in the growth of complex networks. Nature Physics 2006, 2, 275.

- Watts, D.J.; Strogatz, S.H. Collective dynamics of 'small-world' networks. Nature 1998, 393, 440.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).