1. Introduction

Epilepsy is a chronic neurological disorder characterized by the abrupt, unexplained onset of signs or symptoms caused by abnormal electrical activity in the brain; these signs or symptoms may lead to seizures [1]. The World Health Organization estimates that more than 50 million people worldwide have been diagnosed with epilepsy [2], and it is the third most common neurological disorder after stroke and Alzheimer's disease [3,4,5]. During an epileptic seizure, the regular pattern of activity in the nervous system is disturbed, which can result in impaired movement, loss of bowel or bladder control, and loss of consciousness. Consequently, patients who experience epileptic seizures have an increased risk of paralysis, fractures, and even sudden unexpected death [6].

Moreover, seizures are a common symptom of epilepsy and can cause patients to lose consciousness and become unresponsive. This leaves them susceptible to injury and even death, for example in traffic accidents. The unpredictability of seizures also causes anxiety, depression, and stress for patients and their families [7].

Seizures are typically classified into three categories: focal onset, generalized onset, and unknown onset. Focal-onset seizures are confined to a specific region of the brain, whereas generalized-onset seizures may either propagate from a specific region or manifest simultaneously across the entire brain. For seizures of unknown onset, the point of origin cannot be determined. Focal seizures are further divided into two types: focal onset aware seizures, during which the individual remains conscious and aware, and focal onset impaired awareness seizures, which are characterized by confusion and reduced awareness during the event [8,9].

Several risk factors increase the overall risk of injury, and this risk depends on seizure type. Generalized tonic-clonic, atonic, and complex partial seizures carry the highest risk of harm because they involve loss of consciousness. Patients who have at least one seizure per month and more than three antiepileptic drug side effects are more likely to be injured during seizures, whereas epilepsy duration, sex, and age do not appear to affect this risk. The injury risk of patients with epilepsy also affects their employment, insurance, education, and recreation. Because patients with active epilepsy are at increased risk of injury, it is important to keep them in pharmacological remission to reduce that risk, allow them to enjoy normal occupational and social privileges, and improve their quality of life [8].

It is estimated that more than 70% of people with epilepsy can live a normal, seizure-free life if their condition is detected and treated as early as possible [9]. Therefore, recognizing epilepsy at an earlier stage could help avoid many of its adverse consequences [10].

Continuous monitoring of the brain's electrical activity can reduce the harmful effects of seizures. If the onset of a seizure could be forecast before it occurs, both the patient and the caregivers would be able to take the necessary safety measures. To diagnose epilepsy, however, clinicians must visually analyze lengthy electroencephalography (EEG) recordings, which is a time-consuming process. EEG recordings are widely used in modern medicine because they monitor the electrical function of neurons in the brain [3,4,11]. EEG is the most frequently used non-invasive and painless method for assessing the electrical activity of the cerebral cortex from the scalp, and its popularity among brain imaging modalities stems from its low cost and high portability [2]. EEG directly measures the electrical potentials of the dendrites of neurons close to the cortical surface [12,13]: the flow of current through and around neurons produces a voltage potential that an EEG device records with electrodes placed on the scalp [2,12,14,15]. Because EEG can be sampled a thousand times per second, it offers high temporal resolution and is well suited to real-time applications [16].

The current standard procedure for identifying epileptic seizures relies mainly on physicians' visual analysis of the large amount of information contained in EEG signals [17]. Although interpretation of EEG signals by medical professionals can lead to a diagnosis, the process is time-consuming, requires considerable skill, and is prone to error. Visual analysis of abnormalities in EEG data can help a neurologist recognize both the presence of epileptic seizures and the specific type of seizure a patient is experiencing.

A neurologist diagnoses epilepsy with EEG by visually inspecting the data for the spikes, sharp waves, and slow-wave patterns that indicate an epileptic seizure [18]. However, the presentation of epilepsy varies greatly among patients, so traditional visual analysis of EEG recordings is laborious and may yield false positives and false negatives. Because a misdiagnosis can have negative consequences for a patient, accurate diagnosis of epilepsy is crucial for effective therapy. Consequently, research into automatic systems for epilepsy detection [19,20,21] and seizure prediction [22,23] has been intensive over the past decade.

The automatic analysis of EEG patterns for detecting epileptic seizures is therefore of paramount importance. EEG signals are highly complex, nonlinear, and non-stationary because of the interconnections among the brain's billions of neurons [24,25]. These properties make EEG data difficult to interpret, and the correct diagnosis of epileptic seizures from EEG data remains a major challenge [10]. Several methods have been proposed in the literature to detect seizures automatically from EEG recordings [18].

Feature selection is important in signal processing, machine learning, and data analysis, because the chosen features affect a model's performance, interpretability, and efficiency. The Hurst exponent (Hur), Tsallis entropy (TsEn), improved permutation entropy (impe), and amplitude-aware permutation entropy (AAPE) are time-domain features. The Hurst exponent assesses long-range dependence, or self-similarity, in a time series: a high Hurst exponent indicates correlation and predictability, whereas a low exponent indicates unpredictability [26,27]. TsEn extends Shannon entropy by incorporating non-extensive statistics, which makes it more flexible than conventional entropy measures in describing the distribution of time-series values and useful for complex, heavy-tailed data distributions. In physics, economics, and complex systems analysis, TsEn can reveal patterns and information that standard entropy ignores [28]. impe measures the complexity or irregularity of a time series using ordinal patterns, which makes it computationally efficient and sensitive to short-term patterns; this helps in diagnosing neurological diseases from EEG anomalies, where short-term changes are significant [29,30]. AAPE extends permutation entropy by also accounting for amplitude variations in the time series; by analysing both ordinal patterns and amplitudes, AAPE may capture more features of the dynamics of heterogeneous signals [30,31].

In vibration analysis, for example, changes in signal amplitude may indicate damage to machinery or buildings. More generally, time-domain characteristics help in understanding signal behaviour and in uncovering hidden patterns and complexities that simpler measures may miss. For non-stationary and irregular data such as EEG, these properties can improve the accuracy, generalization, and robustness of models used for medical diagnosis [29].

Seizure prediction models that identify seizure patterns are needed in the medical diagnosis of epilepsy to help patients and clinicians prevent seizures. In this study, the EEG dataset was recorded from children with epileptic seizures and from normal children. Both machine learning (ML) and deep learning (DL) seizure prediction systems have advantages and drawbacks. Therefore, to carry out a comparative study of the automatic diagnosis of epileptic seizures, both ML-based models (support vector machine (SVM), k-nearest neighbours (KNN), and decision tree (DT)) and DL-based recurrent neural network (RNN) classifiers (gated recurrent unit (GRU), long short-term memory (LSTM), and bidirectional LSTM (BiLSTM)) were used. The ML models SVM, KNN, and DT support feature engineering and interpretation. SVMs cope well with high-dimensional feature spaces, which suits recordings from multiple EEG channels, and with an appropriate kernel they handle both linear and non-linear data. KNN is simple, captures local data patterns, and works well when the classes form clusters. DT is easy to interpret and captures non-linear relationships.

Deep learning with GRUs and LSTMs is increasingly used to capture temporal dependencies in sequential data, because these architectures mitigate the vanishing gradient problem and can model long-term relationships. BiLSTMs combine information from past and future time steps, so they suit tasks that need bidirectional context and may capture complex data patterns. The choice of model depends on data quality and task requirements. Because the EEG data used for seizure prediction are sequential recordings of electrical activity, GRUs, LSTMs, and BiLSTMs, which capture temporal dependencies, are attractive choices.

DL can automatically learn a comprehensive set of features from training data, providing a more versatile feature space for modelling than the hand-crafted features of ML [26]. Despite these developments, many issues remain unresolved, and existing approaches still have difficulty detecting epileptic seizures [32]. Although techniques exist for combining multiple EEG signals, the most effective way to use data from the several electrodes of an EEG device is still debated. In addition, epileptic seizure detection is fundamentally an imbalanced classification problem, with long hours of normal states (non-seizure episodes) and only a few seconds of abnormal states (seizure episodes). Most current methods use sampling criteria to divide data evenly between the training and testing sets; however, it is unclear how this affects classification accuracy. The primary contributions of this study are therefore twofold: first, temporal characteristics are extracted from multiple EEG signals to obtain a suitable combination of time-domain entropy-based features that improves classification performance; second, ML-based and DL-based classification models are used to examine the representations of EEG signals in two separate classification sessions.

In session one of this study, the EEG dataset was classified using three ML-based models (SVM, KNN, and DT) and three DL-based RNN classifiers (GRU, LSTM, and BiLSTM). This paper thus compares the performance of ML-based and DL-based models for automatic seizure recognition.

1.1. Related Work

Swami et al. [33] presented an automated system for classifying EEG signals and detecting epileptic seizures. The system uses the wavelet transform, and the feature set extracted from each EEG signal includes the energy, standard deviation, root mean square, Shannon entropy, mean, and maximum value. This feature set is then classified with a general regression neural network (GRNN).

Deep learning algorithms are a type of artificial neural network that can automatically learn to recognize patterns in data. Applied to EEG signals, they can learn to identify specific patterns of brain activity that indicate epilepsy. Several deep learning algorithms have been used for epilepsy detection from EEG, including convolutional neural networks (CNNs), RNNs, and hybrid architectures that combine CNNs and RNNs.

To identify epileptic seizures from EEG recordings, Jaiswal et al. [34] used two feature extraction techniques, local neighbor descriptive pattern (LNDP) and one-dimensional local gradient pattern (1DLGP), with an artificial neural network (ANN). CNNs are a type of deep learning algorithm commonly used for image recognition; applied to EEG signals, they can detect patterns of brain activity that indicate epilepsy [35]. Several studies have reported promising results using CNNs for epilepsy detection from EEG [36]. For example, Zhou et al. [37] selected EEG signals for each patient based on visual evaluation and used a CNN to differentiate between the ictal, preictal, and interictal phases, with either time-domain or frequency-domain signals as the classification inputs.

Moreover, Wahengbam et al. [38] used a CNN to classify EEG signals as either normal or epileptic. RNNs are another type of deep learning algorithm commonly used for time-series analysis. Applied to EEG signals, RNNs can model the temporal dynamics of brain activity and detect patterns that indicate epilepsy, and several studies have reported promising results using RNNs for epilepsy detection from EEG [39].

In addition, Singh et al. [40] segmented multichannel EEG signals into short-duration subsegments by selecting a single channel from the available channels and filtering it. Their approach classified the segments with three deep learning algorithms, a stacked autoencoder (SA), an RNN, and a CNN, and concluded that the CNN model performed better than the other classifiers.

2. Materials and Methods

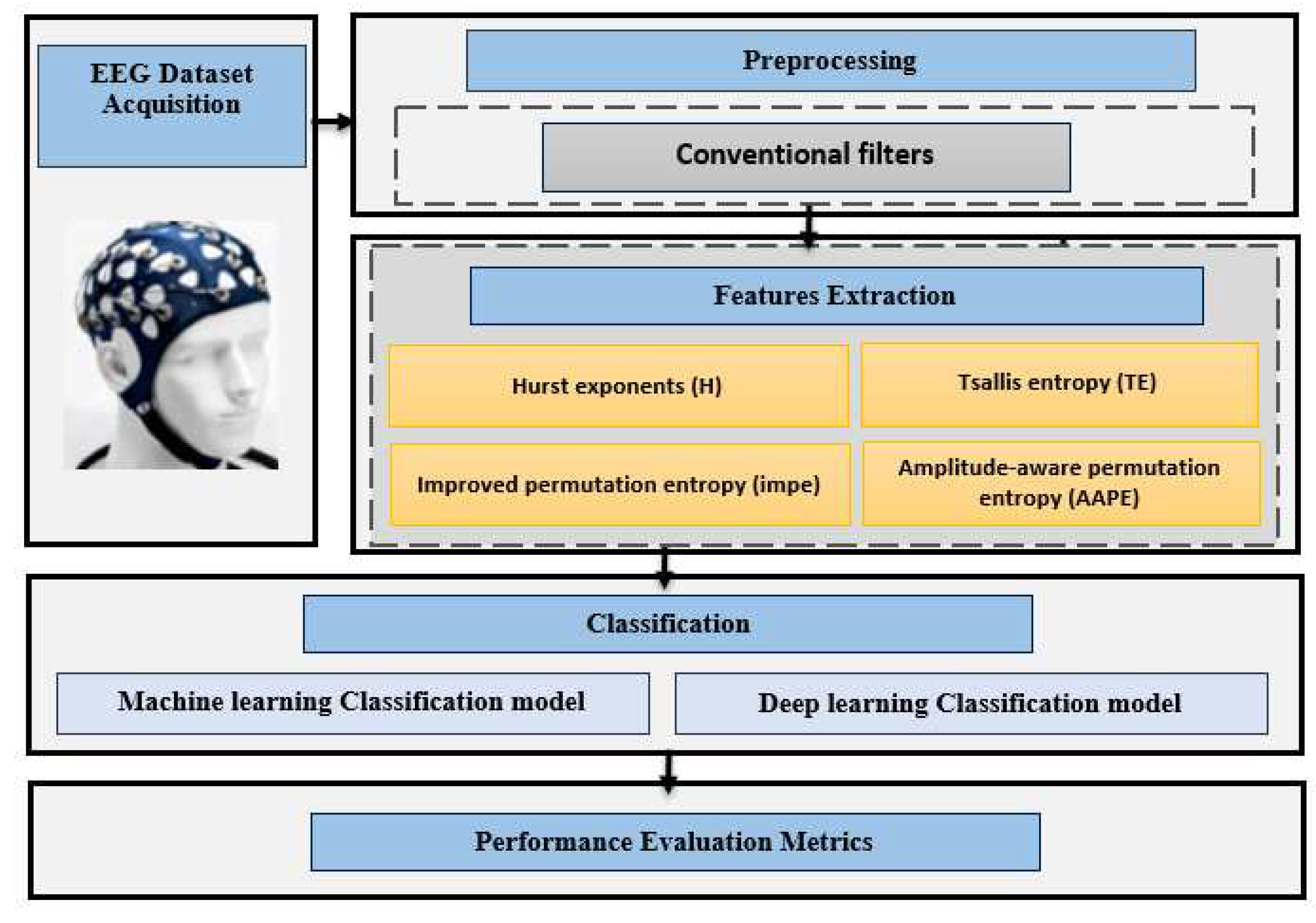

Figure 1 shows the block diagram of the framework used to analyze EEG from children with epileptic seizures in comparison with normal participants.

2.1. EEG Dataset Acquisition

The datasets were collected with a Micromed Brain Spy Plus Embia EEG recording system (Via Giotto 2, 31021 Mogliano Veneto, Treviso, Italy), which can identify spikes and seizures and is used for data analysis. There were 19 electrodes in total, with 2 serving as references and 1 as ground. Following the international 10-20 system, the EEG electrodes were placed at FP1, FP2, F7, F3, Fz, F4, F8, T3, C3, Cz, C4, T5, P3, Pz, P4, T6, O1, and O2. The Micromed equipment provided low-pass, high-pass, and notch filters. With a sampling rate of 256 Hz, frequencies below 0.15 Hz were suppressed using a low-cutoff filter at 0.5 Hz, together with an upper-cutoff filter at 220 Hz and a notch filter at 50 Hz. Electrode impedance was kept below 5 kΩ.

For both normal participants and patients with epilepsy, EEG recording begins with a standard recording, which is usually made while the individual is resting and can last up to ten minutes. The volunteers' brain electrical activity was recorded under several conditions, including eye opening, eye closing, hyperventilation, and photic stimulation.

Twenty children from a single elementary school participated in this EEG study. EEGs were recorded from ten normal subjects (3 females and 7 males; age 14.182 ± 3.027 years, mean ± standard deviation (SD)) and from ten children with epilepsy (5 females and 5 males; age 14.545 ± 3.464 years, mean ± SD). The healthy control children had never been diagnosed with a mental or neurological condition. All experiments were approved in advance by the Human Ethics Committee of the Ibn-Rushd training hospital in Baghdad, Iraq. Children with epilepsy were recruited at random from the Neurology Clinic of the Ibn-Rushd training hospital, and parental consent was obtained through a signed informed consent form.

2.2. Preprocessing

First, external noise from the surrounding environment and internal noise from the body itself must be reduced and removed [11]. Therefore, in the preprocessing stage, a conventional Butterworth notch filter was used to remove power-line interference, and a second-order bandpass filter with a passband of 0.1 to 64 Hz was applied.
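A minimal sketch of this preprocessing stage is shown below, assuming SciPy is available, a sampling rate of 256 Hz, and zero-phase filtering with `sosfiltfilt`. The text does not detail the notch design, so the narrow 49 to 51 Hz second-order Butterworth band-stop used here, and the function name `preprocess`, are illustrative assumptions rather than the authors' implementation.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 256  # sampling rate in Hz (Section 2.1)


def preprocess(eeg, fs=FS):
    """Filter EEG of shape (..., samples): 0.1-64 Hz band-pass, then 50 Hz rejection.

    Assumption: the Butterworth 'notch' is modelled as a narrow second-order
    Butterworth band-stop filter between 49 and 51 Hz.
    """
    # Second-order Butterworth band-pass with a 0.1-64 Hz passband, as in the text.
    bandpass = butter(2, [0.1, 64.0], btype="bandpass", fs=fs, output="sos")
    # Narrow Butterworth band-stop centred on the 50 Hz power-line frequency.
    notch = butter(2, [49.0, 51.0], btype="bandstop", fs=fs, output="sos")
    # Forward-backward filtering avoids phase distortion of the EEG waveforms.
    filtered = sosfiltfilt(bandpass, np.asarray(eeg, dtype=float), axis=-1)
    return sosfiltfilt(notch, filtered, axis=-1)


# Usage: a 10 s test signal with a 10 Hz rhythm plus 50 Hz interference.
t = np.arange(10 * FS) / FS
raw = np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 50 * t)
clean = preprocess(raw)
```

Second-order sections are used instead of transfer-function coefficients because the 0.1 Hz edge is very close to zero frequency, where the `(b, a)` form can become numerically fragile.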

After filtering, each participant's EEG signals are divided into non-overlapping 10-second segments. The segments are then passed to the feature extraction module, which extracts several commonly used EEG features to form one feature matrix for all normal subjects and another for all patients with epilepsy.
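The segmentation step can be sketched with NumPy as follows, assuming each recording is stored as a `(channels, samples)` array sampled at 256 Hz. Discarding the incomplete final window is an assumption, since the text does not say how leftover samples were handled, and `segment_eeg` is a hypothetical helper name.

```python
import numpy as np


def segment_eeg(eeg, fs=256, win_sec=10):
    """Split a (channels, samples) EEG array into non-overlapping windows.

    Returns an array of shape (n_segments, channels, fs * win_sec).
    Trailing samples that do not fill a whole window are discarded.
    """
    eeg = np.atleast_2d(np.asarray(eeg))
    win = int(fs * win_sec)          # 10 s at 256 Hz -> 2560 samples
    n_seg = eeg.shape[-1] // win     # number of complete windows
    trimmed = eeg[:, : n_seg * win]  # drop the incomplete tail
    # (channels, n_seg * win) -> (channels, n_seg, win) -> (n_seg, channels, win)
    return trimmed.reshape(eeg.shape[0], n_seg, win).transpose(1, 0, 2)


# Usage: 2 channels of 6000 samples (about 23.4 s) give 2 complete 10 s segments.
demo = np.arange(2 * 6000).reshape(2, 6000)
segments = segment_eeg(demo)
```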

2.3. Feature Extraction

Identifying significant features in the recorded EEG dataset is a critical step toward improving the automatic detection of epileptic seizures. In this study, four time-domain entropy-based features, the Hurst exponent (Hur), Tsallis entropy (TsEn), improved permutation entropy (impe), and amplitude-aware permutation entropy (AAPE), were extracted from each 10-s EEG segment (i.e., 2560 samples at 256 Hz). The EEG features should convey the neurophysiological behaviour of the brain's underlying structures. In session one of this investigation, the resulting features were classified with three ML-based models (SVM, KNN, and DT) and three DL-based RNN classifiers (GRU, LSTM, and BiLSTM). The extracted features are described below.

Hurst exponent (Hur): The Hurst exponent is formulated as follows [41]:

$$Hur = \frac{\log(R/S)}{\log(n)} \tag{1}$$

where $n$ is the number of samples per second in an EEG, $R/S$ is the rescaled value, $R$ is the range of the first $n$ cumulative deviations from the mean, and $S$ is the series sum of the first $n$ standard deviations [41].
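Equation 1 can be evaluated on a single segment as in the following NumPy sketch. It assumes that $n$ is the segment length and that $S$ is the segment's standard deviation; practical estimators usually fit $\log(R/S)$ against $\log(n)$ over several window sizes instead, so this single-window form is only a simplified illustration.

```python
import numpy as np


def hurst_rs(x):
    """Single-window rescaled-range estimate Hur = log(R/S) / log(n) (Equation 1).

    Assumptions: n is the number of samples in the segment and S is the
    segment's standard deviation.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    deviations = np.cumsum(x - x.mean())      # cumulative deviations from the mean
    r = deviations.max() - deviations.min()   # range R
    s = x.std()                               # scale S
    if s == 0 or r == 0:
        return 0.0  # constant input: R/S is undefined, return 0 by convention (assumption)
    return float(np.log(r / s) / np.log(n))


rng = np.random.default_rng(0)
noise = rng.standard_normal(2560)   # uncorrelated signal, Hur close to 0.5
walk = np.cumsum(noise)             # strongly persistent signal, Hur close to 1
```

A persistent (trending) signal such as a random walk yields a clearly larger value than white noise, which matches the interpretation of a high Hurst exponent as indicating correlation and predictability.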

Tsallis Entropy (TsEn): TsEn has been widely used to characterize changes in EEG. For instance, TsEn derived from EEG data has been used to distinguish brain ischemia damage in Alzheimer's patients from that in patients with other illnesses [28,42]. We used the definition of entropy proposed by Tsallis et al. to measure signal uncertainty [42,43]:

$$TsEn = \sum_{i} p_i \ln_q\!\left(\frac{1}{p_i}\right) \tag{2}$$

where $x_i$ are the information events, $p_i$ are the probabilities of $x_i$, and the q-logarithm function is defined as [29]:

$$\ln_q(x) = \frac{x^{1-q} - 1}{1-q} \tag{3}$$
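Equations 2 and 3 can be computed from a segment's amplitude histogram as sketched below. Estimating $p_i$ from a histogram, the bin count, and the default entropic index $q = 2$ are assumptions, since the text does not state them; as $q \to 1$ the q-logarithm becomes the natural logarithm and TsEn reduces to Shannon entropy.

```python
import numpy as np


def q_log(x, q):
    """q-logarithm of Equation 3; equals ln(x) when q == 1."""
    if np.isclose(q, 1.0):
        return np.log(x)
    return (x ** (1.0 - q) - 1.0) / (1.0 - q)


def tsallis_entropy(x, q=2.0, bins=32):
    """TsEn = sum_i p_i * ln_q(1 / p_i) (Equation 2).

    Assumption: the probabilities p_i are estimated from a histogram
    of the segment's amplitude values.
    """
    counts, _ = np.histogram(np.asarray(x, dtype=float), bins=bins)
    p = counts[counts > 0] / counts.sum()   # empty bins contribute nothing
    return float(np.sum(p * q_log(1.0 / p, q)))


# Usage: four equally likely amplitude levels.
levels = np.repeat(np.arange(4.0), 10)
```

For $N$ equally likely states the sum simplifies to $(1 - N^{1-q})/(q - 1)$, so four levels with $q = 2$ give $0.75$, while $q = 1$ gives $\ln 4$.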

Improved Multiscale Permutation Entropy (impe): Because impe estimates entropy across several time scales, it is a promising method for characterizing physiological changes [29]. impe can be calculated as in Equation 4, where $d$ is the embedding dimension.
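Because Equation 4 is not reproduced in this text, the NumPy sketch below assumes the common formulation of improved multiscale permutation entropy: at scale $\tau$, the signal is coarse-grained by averaging non-overlapping windows of length $\tau$ starting at each offset $k = 0, \ldots, \tau - 1$; the normalized Bandt-Pompe permutation entropy of each coarse-grained series is computed with embedding dimension $d$; and the results are averaged. The default values of $d$, $\tau$, and the time delay are illustrative.

```python
import math

import numpy as np


def permutation_entropy(x, d=3, lag=1):
    """Normalized Bandt-Pompe permutation entropy in [0, 1]."""
    x = np.asarray(x, dtype=float)
    n = x.size - (d - 1) * lag
    # Each row is an embedding vector (x_t, x_{t+lag}, ..., x_{t+(d-1)lag}).
    emb = np.stack([x[i * lag : i * lag + n] for i in range(d)], axis=1)
    patterns = np.argsort(emb, axis=1, kind="stable")   # ordinal pattern of each vector
    _, counts = np.unique(patterns, axis=0, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log(p)) / math.log(math.factorial(d)))


def impe(x, d=3, scale=2, lag=1):
    """Improved multiscale PE: average PE over all coarse-graining offsets."""
    x = np.asarray(x, dtype=float)
    values = []
    for k in range(scale):
        m = (x.size - k) // scale                        # complete windows from offset k
        coarse = x[k : k + m * scale].reshape(m, scale).mean(axis=1)
        values.append(permutation_entropy(coarse, d, lag))
    return float(np.mean(values))


rng = np.random.default_rng(1)
noise = rng.standard_normal(2560)
```

Averaging over every offset, rather than using only the offset $k = 0$ as in plain multiscale PE, is what reduces the variance of the estimate at larger scales. A monotonic ramp contains a single ordinal pattern and gives 0, whereas white noise gives a value close to 1.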

Amplitude-aware permutation entropy (AAPE): To differentiate between the EEG signals of the epileptic and healthy groups, this study employed two forms of entropy, impe and AAPE. AAPE is a modification of Bandt and Pompe's permutation entropy (PE) [44] that overcomes the most significant limitations of conventional PE, namely that it neglects the mean value of the amplitudes and the differences between consecutive samples. AAPE also helps when recorded amplitude samples have identical values, which is relevant for digitized time series. It is sensitive to changes in both the frequency and the amplitude of a signal and identifies signal motifs with high reliability. Unlike PE, AAPE can reduce the effect of background noise and recognize spikes accurately, even when a segment contains only a single spike, and the change recorded by AAPE for multi-sample spikes was significantly larger than that recorded by PE [31]. Mathematically, in conventional PE (the Bandt-Pompe technique), each time an embedding vector matches the $i$-th ordinal pattern, one is added to the count of that pattern. In AAPE, a value between 0 and 1 is added to the relative frequency of that pattern instead, as given in Equation 5 [31].
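Equation 5 is likewise not reproduced in this text. The NumPy sketch below assumes the weighting proposed by Azami and Escudero when they introduced AAPE: each embedding vector contributes $\frac{A}{d}\sum_{k=1}^{d}|x_{t,k}| + \frac{1-A}{d-1}\sum_{k=2}^{d}|x_{t,k}-x_{t,k-1}|$ to its ordinal pattern instead of a count of one, where $x_{t,k}$ is the $k$-th element of the $t$-th embedding vector. The adjustment coefficient $A = 0.5$ and the embedding settings are illustrative assumptions.

```python
import math

import numpy as np


def aape(x, d=3, lag=1, A=0.5):
    """Normalized amplitude-aware permutation entropy (assumed Azami-Escudero weighting)."""
    x = np.asarray(x, dtype=float)
    n = x.size - (d - 1) * lag
    emb = np.stack([x[i * lag : i * lag + n] for i in range(d)], axis=1)
    # Amplitude term: mean absolute amplitude of each vector, weighted by A.
    amp = A / d * np.abs(emb).sum(axis=1)
    # Difference term: mean absolute difference between consecutive samples.
    diff = (1.0 - A) / (d - 1) * np.abs(np.diff(emb, axis=1)).sum(axis=1)
    weights = amp + diff
    patterns = np.argsort(emb, axis=1, kind="stable")
    _, inverse = np.unique(patterns, axis=0, return_inverse=True)
    # Sum the weights per ordinal pattern instead of counting occurrences.
    totals = np.bincount(inverse.ravel(), weights=weights)
    p = totals[totals > 0] / totals.sum()
    return float(-np.sum(p * np.log(p)) / math.log(math.factorial(d)))


rng = np.random.default_rng(2)
value = aape(rng.standard_normal(2560))
```

With $A = 1$ only absolute amplitudes matter, and with $A = 0$ only the differences between consecutive samples do; in both cases, vectors with larger excursions, such as spikes, weigh more heavily in the pattern distribution than in conventional PE.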

2.4. Classification

In this research, after time-domain entropy-based features were extracted from the EEG signals, three ML and three DL techniques were used for classification. EEG from children with epileptic seizures was distinguished from that of healthy children with either an ML classification model (SVM, KNN, or DT) or a DL model, namely an RNN built with GRU, LSTM, or BiLSTM networks.

2.4.1. Machine Learning Classification Model

Classification with one of three models, SVM, KNN, or DT, is the final stage, which separates the EEG segments of children with epilepsy from those of normal children.

This study used multi-class SVM classifiers with a radial basis function (RBF) kernel. The kernel's smoothing parameter, $\sigma$, was set to the value that achieved the highest multi-class classification accuracy on the training dataset [45]. KNN becomes a more reliable non-parametric classifier when more than one neighbour is considered, because this reduces the influence of noisy points in the training set. In this study, each trial was classified with KNN using the Euclidean distance, and the number of neighbours $k$ was set to the value that gave the highest classification accuracy [46].

The decision tree is a recursively generated tree based on the supervised classification strategy of breaking a large problem into several smaller subproblems. Each leaf node is assigned a class label, and the internal nodes, as well as the parent node, apply attribute test criteria to partition records according to their features [30,47]. Because increasing the number of trees did not substantially improve classification accuracy, 100 binary decision trees were used in this analysis.
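A minimal scikit-learn sketch of the three ML classifiers is given below. The values of $\sigma$ and $k$ are hypothetical placeholders, since the tuned values are not given here; scikit-learn parameterizes the RBF kernel by `gamma`, which equals $1/(2\sigma^2)$. Feature standardization, the single `DecisionTreeClassifier` (which does not attempt to reproduce the 100-tree setting), the stratified split, and the helper names `build_models` and `evaluate` are also assumptions.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

SIGMA = 1.0  # hypothetical RBF smoothing parameter
K = 5        # hypothetical number of neighbours


def build_models(sigma=SIGMA, k=K):
    """Return the three ML classifiers compared in this study."""
    return {
        # RBF kernel exp(-||a - b||^2 / (2 sigma^2)) corresponds to gamma = 1 / (2 sigma^2).
        "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf", gamma=1.0 / (2.0 * sigma**2))),
        # KNN with the Euclidean distance, as described in the text.
        "KNN": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=k, metric="euclidean")),
        "DT": DecisionTreeClassifier(random_state=0),
    }


def evaluate(features, labels, test_size=0.5, seed=0):
    """Stratified train/test split, then the test accuracy of each model."""
    X_tr, X_te, y_tr, y_te = train_test_split(
        features, labels, test_size=test_size, stratify=labels, random_state=seed
    )
    return {name: model.fit(X_tr, y_tr).score(X_te, y_te) for name, model in build_models().items()}


# Usage with synthetic, well-separated feature vectors (4 features per segment).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.1, (20, 4)), rng.normal(3.0, 0.1, (20, 4))])
y = np.array([0] * 20 + [1] * 20)
scores = evaluate(X, y)
```

Stratifying the split keeps the proportion of epileptic and normal segments equal in the training and test sets, which is one way to address the class-balance concern raised in the Introduction.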

2.4.2. Deep Learning Classification Model

In this study, RNN-based deep learning models, namely GRU, LSTM, and BiLSTM networks, were used to classify EEG from children with epileptic seizures versus normal individuals.

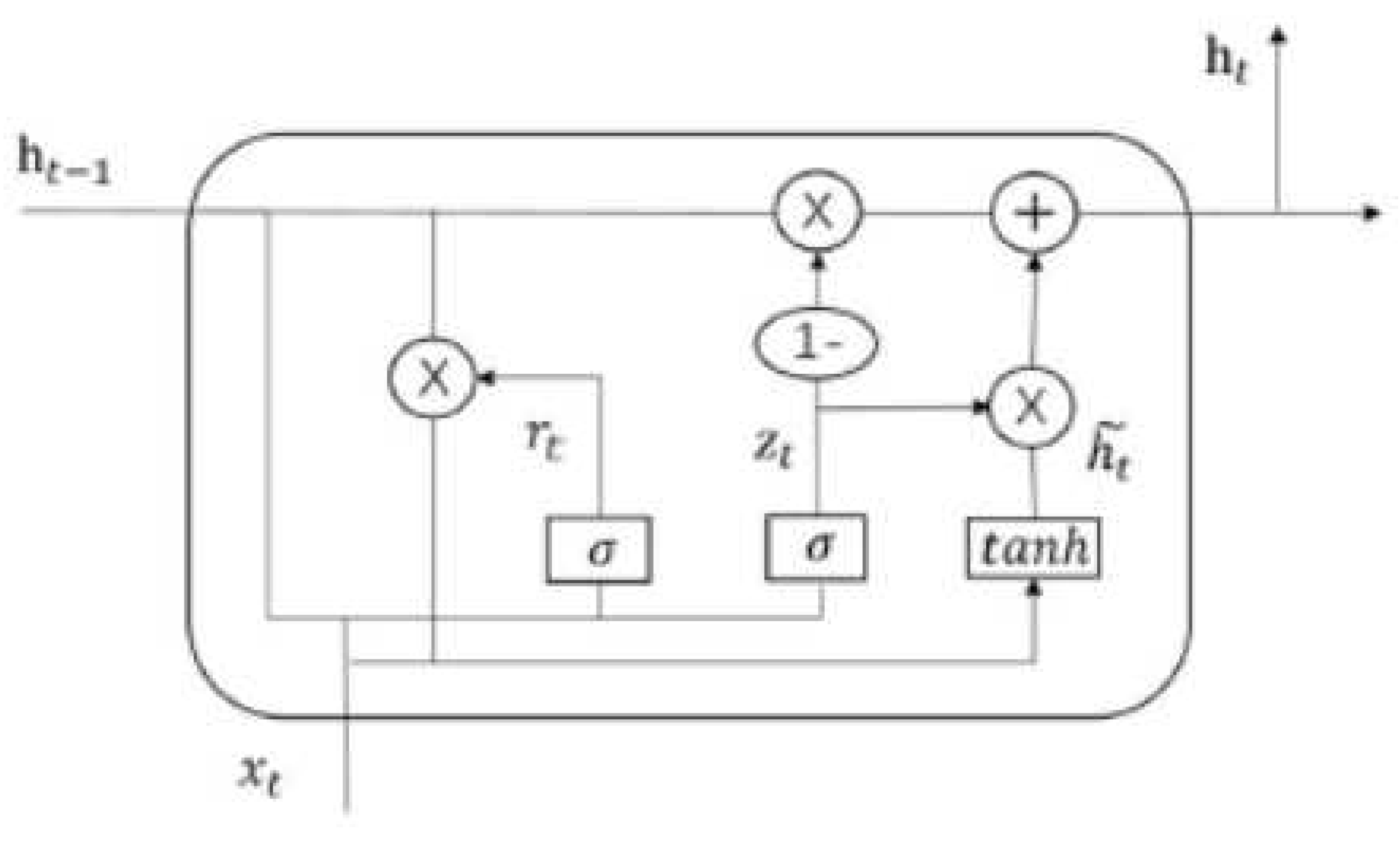

Gated Recurrent Unit (GRU) network: GRUs are an RNN design that uses gates to regulate the flow of information between neurons. A GRU carries a single hidden state from one time step to the next, and the gating operations applied to the hidden state and the input allow it to track both long- and short-term dependencies [48]. The internal structure of the GRU cell is depicted in Figure 2. At time step $t$, the cell receives an input $x_t$ and the hidden state $h_{t-1}$ from the preceding time step, and it produces a new hidden state $h_t$ that is carried forward to the next time step. The GRU performs the same task as a conventional RNN but with distinct gate operations: by combining two gates, the update gate and the reset gate, it addresses the vanishing gradient problem of conventional RNNs, as described in [49].

Figure 2. Structure of a GRU cell.

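The GRU update described above can be sketched in NumPy as a single cell step that is applied along the sequence. The equations follow one common convention, with $z_t$ the update gate, $r_t$ the reset gate, and $\tilde{h}_t$ the candidate state; the weight names, the random initialization, and the helper functions are illustrative assumptions.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def init_gru(n_in, n_hidden, seed=0):
    """Hypothetical random initialisation of GRU weights for gates 'z', 'r', 'h'."""
    rng = np.random.default_rng(seed)
    W = {g: rng.normal(0, 0.1, (n_hidden, n_in)) for g in "zrh"}
    U = {g: rng.normal(0, 0.1, (n_hidden, n_hidden)) for g in "zrh"}
    b = {g: np.zeros(n_hidden) for g in "zrh"}
    return W, U, b


def gru_step(x_t, h_prev, W, U, b):
    """Compute h_t from the input x_t and the previous hidden state h_{t-1}."""
    z = sigmoid(W["z"] @ x_t + U["z"] @ h_prev + b["z"])              # update gate
    r = sigmoid(W["r"] @ x_t + U["r"] @ h_prev + b["r"])              # reset gate
    h_tilde = np.tanh(W["h"] @ x_t + U["h"] @ (r * h_prev) + b["h"])  # candidate state
    return (1.0 - z) * h_prev + z * h_tilde                           # blend old and new


def gru_forward(xs, W, U, b):
    """Run the cell over a sequence of shape (T, n_in); return all hidden states."""
    h = np.zeros(U["z"].shape[0])
    states = []
    for x_t in xs:
        h = gru_step(x_t, h, W, U, b)
        states.append(h)
    return np.array(states)


# Usage: a sequence of 5 time steps with 4 features each, 8 hidden units.
W, U, b = init_gru(n_in=4, n_hidden=8)
H = gru_forward(np.random.default_rng(1).normal(size=(5, 4)), W, U, b)
```

When the update gate is close to 0, the cell copies $h_{t-1}$ almost unchanged, which is how a GRU can carry information across many time steps.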

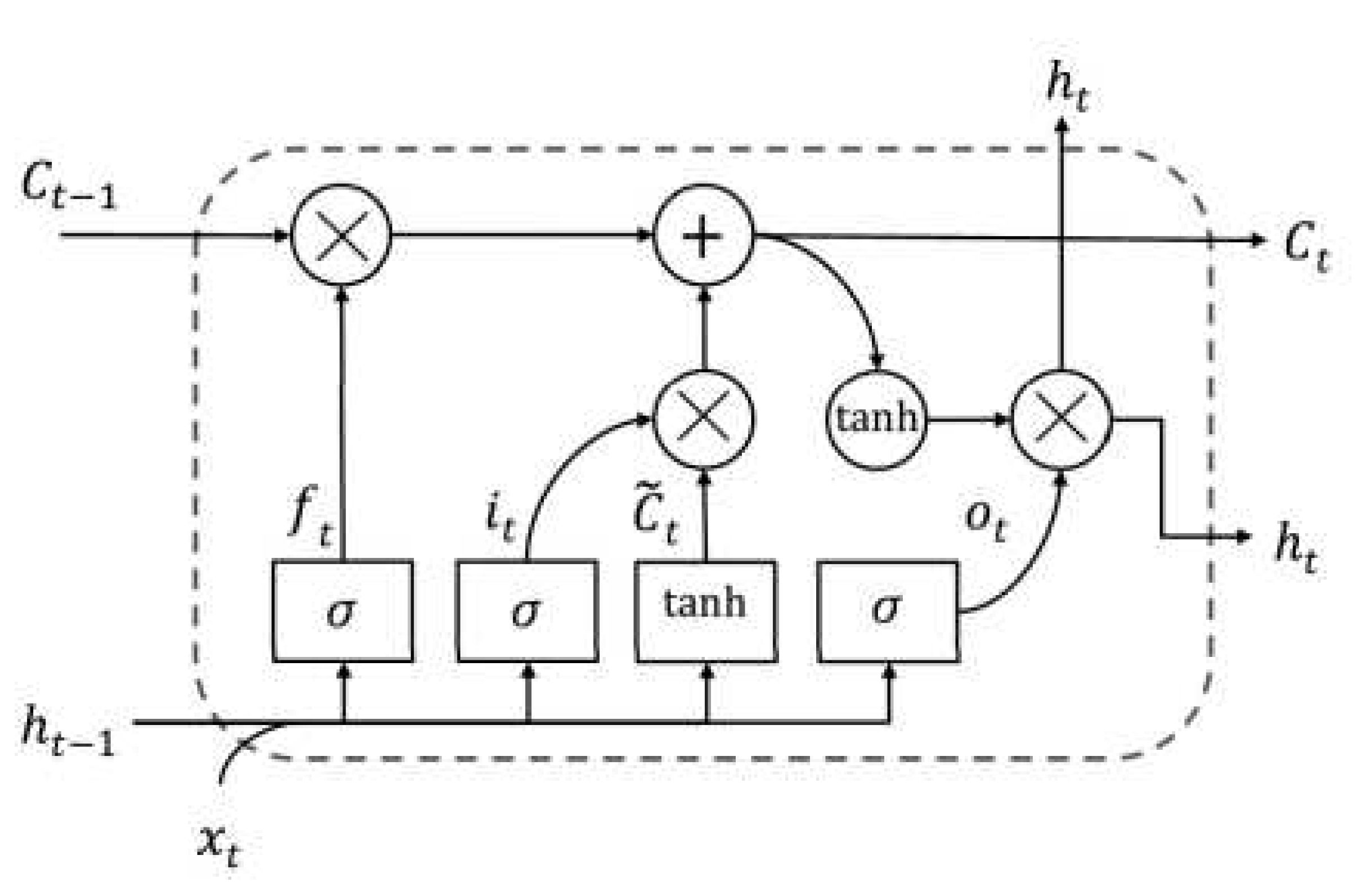

Long Short-Term Memory (LSTM) network: LSTMs and GRUs are based on the same gating idea. GRUs are the more recent development and have a simpler structure, and they are often reported to perform comparably to, or better than, LSTMs. An LSTM maintains a cell state and a hidden state, which are responsible for long-term and short-term storage, respectively [49].

A memory cell is a configuration unique to the LSTM network [50,51].

Figure 3 depicts the four main components of this memory cell: a neuron with a self-recurrent connection, a forget gate, an input gate, and an output gate. These gates enable the cell to store and access information over longer durations [52]. The LSTM, a special type of RNN that overcomes the vanishing gradient problem, was a significant development for handling long-term dependencies. The main difference between a standard RNN and an LSTM lies in the hidden layer, or cell [53]. The memory cell, which allows the LSTM to maintain its state over time, and the nonlinear gating units, which tightly control the flow of information, are both depicted in Figure 3 and are crucial to the LSTM's success [51]. At time $t$, the basic components of an LSTM cell are the layer input $x_t$ and the layer output $h_t$. The cell also stores its candidate input state $\tilde{c}_t$, its output (cell) state $c_t$, and the previous cell's output state $c_{t-1}$; these quantities are obtained during training and used to adjust the network parameters. Three gates, the forget gate $f_t$, the input gate $i_t$, and the output gate $o_t$, protect and regulate the cell state. The gates use the sigmoid function $\sigma$ as their activation, as shown in Figure 3. This gate structure lets the LSTM handle long-term dependencies by passing relevant information through while discarding superfluous data [54]. One LSTM layer consists of multiple memory cells, and its output is the sequence $Y = [h_1, h_2, \ldots, h_T]$, where $T$ is the number of cells (time steps). In this study, only the last element of this output, $h_T$, is used, because it provides the estimate of the epileptic seizure for the following iteration. The depth of a network strongly influences how well it solves tasks in many fields. A deep network can be viewed as a processing pipeline in which each layer solves part of the task before passing its output to the next layer, and the last layer produces the final output [55]. Based on the idea that a deeper network tackles the long-term dependency problem of sequential data more efficiently, four distinct deep LSTM models were built by stacking multiple LSTM layers to estimate epileptic seizures [56].
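The LSTM cell and the "last element of the output" readout described above can be sketched in NumPy as follows. The gate equations use a standard convention; the stacked weight layout `[f, i, o, g]`, the random initialization, and the function names are illustrative assumptions, and the stacked deep models are not reproduced.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (4H, n_in), U: (4H, H), b: (4H,) stacked as [f, i, o, g]."""
    H = h_prev.size
    a = W @ x_t + U @ h_prev + b
    f = sigmoid(a[:H])              # forget gate f_t
    i = sigmoid(a[H : 2 * H])       # input gate i_t
    o = sigmoid(a[2 * H : 3 * H])   # output gate o_t
    g = np.tanh(a[3 * H :])         # candidate state c~_t
    c = f * c_prev + i * g          # new cell state c_t
    h = o * np.tanh(c)              # layer output h_t
    return h, c


def lstm_layer(xs, W, U, b):
    """Run over a (T, n_in) sequence; return the output sequence [h_1, ..., h_T]."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    outputs = []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, W, U, b)
        outputs.append(h)
    return np.array(outputs)


# Usage: 6 time steps, 4 features, 3 hidden units; keep only the last element h_T.
rng = np.random.default_rng(0)
n_in, n_hidden = 4, 3
W = rng.normal(0, 0.1, (4 * n_hidden, n_in))
U = rng.normal(0, 0.1, (4 * n_hidden, n_hidden))
b = np.zeros(4 * n_hidden)
Y = lstm_layer(rng.normal(size=(6, n_in)), W, U, b)
h_T = Y[-1]
```

With the forget gate fully open and the input gate closed, $c_t = c_{t-1}$, which is the mechanism that lets the cell state carry information over long sequences.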

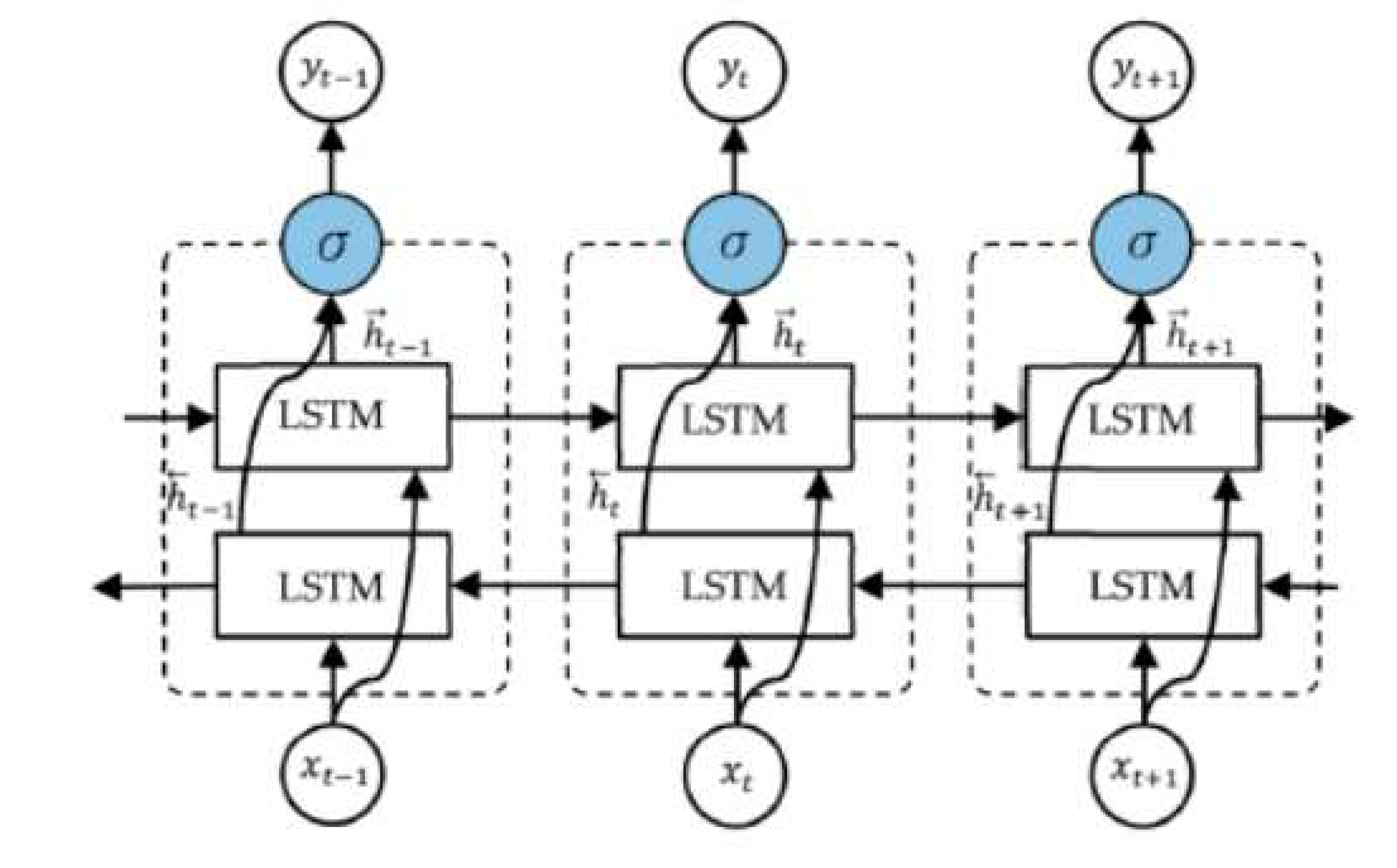

Bidirectional Long Short-Term Memory (BiLSTM) network: A BiLSTM connects two hidden LSTM layers, which process the sequence in opposite directions, to the same output layer. Using two LSTMs in a single layer strengthens the learning of long-term dependencies, which in turn improves model performance [50]. Bidirectional networks have been shown to outperform traditional unidirectional ones [52], which makes them attractive for representing epileptic seizures. As shown in Figure 4, an unfolded BiLSTM layer consists of a forward LSTM layer and a backward LSTM layer. The forward layer's output sequence, $\overrightarrow{h}$, is obtained in the same way as in a unidirectional LSTM, whereas the backward layer's output sequence, $\overleftarrow{h}$, is computed by applying the same algorithm to the inputs in the opposite direction, from time $T$ back to time $1$. A function $\sigma$ then merges the two sequences into the final output vector $y_t$ [54]. The final output of the BiLSTM layer is the vector $Y = [y_1, y_2, \ldots, y_T]$, which is analogous to the output of the LSTM layer; its last element, $y_T$, represents the estimated epileptic seizure for the following iteration, as shown in Figure 4.
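A bidirectional layer can be sketched by running one LSTM over the sequence and a second, independently parameterized LSTM over the reversed sequence, re-aligning the backward outputs in time, and merging the two at each step. In this NumPy sketch the merge function is assumed to be concatenation; the compact LSTM cell, its stacked weight layout, and the random initialization are likewise illustrative assumptions.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def lstm_layer(xs, params):
    """Unidirectional LSTM over xs of shape (T, n_in); returns (T, H) outputs."""
    W, U, b = params                     # gates stacked as [f, i, o, g]
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    out = []
    for x_t in xs:
        a = W @ x_t + U @ h + b
        f, i, o = sigmoid(a[:H]), sigmoid(a[H : 2 * H]), sigmoid(a[2 * H : 3 * H])
        c = f * c + i * np.tanh(a[3 * H :])
        h = o * np.tanh(c)
        out.append(h)
    return np.array(out)


def init_params(n_in, H, rng):
    """Hypothetical random initialisation for one direction."""
    return (rng.normal(0, 0.1, (4 * H, n_in)),
            rng.normal(0, 0.1, (4 * H, H)),
            np.zeros(4 * H))


def bilstm_layer(xs, fw_params, bw_params):
    """Forward pass over x_1..x_T, backward pass over x_T..x_1, merged per step."""
    h_fw = lstm_layer(xs, fw_params)              # forward sequence
    h_bw = lstm_layer(xs[::-1], bw_params)[::-1]  # backward sequence, re-aligned in time
    return np.concatenate([h_fw, h_bw], axis=1)   # y_t = [forward h_t ; backward h_t]


# Usage: 7 time steps, 4 features, 5 hidden units per direction.
rng = np.random.default_rng(0)
xs = rng.normal(size=(7, 4))
fw, bw = init_params(4, 5, rng), init_params(4, 5, rng)
Y = bilstm_layer(xs, fw, bw)
y_T = Y[-1]  # last element, used as the estimate
```

Because the backward half of $y_1$ has already seen the whole sequence in reverse, every output step combines past and future context, which is the property the text attributes to BiLSTMs.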

2.4.3. Architectures of RNN Classifiers Used in This Study

Although RNN models have been used for seizure prediction, there is no established internal design for this task; hence, this section presents a preliminary analysis using GRU, LSTM, and BiLSTM models, each with its own internal architecture.

When the input size was initially for each feature, the classification of 10 patients with epileptic seizures and 10 normal participants was investigated using all three RNN models. The total number of samples used in the study was for each feature taken separately.

All of the RNN networks consist of six layers: a feature input layer, a fully connected layer, a batch normalization layer, a rectified linear unit (ReLU) activation layer, a softmax layer, and a classification output layer that yields a binary classification result (i.e., normal or abnormal).

The adaptive moment estimation (Adam) optimizer was used to train all RNN networks with a learning rate of 0.001 over the course of 30 epochs, and the batch size was set to 16.

Subsequently, the input size of the RNN models was increased because the features were combined into a larger input sequence consisting of . In theory, a better EEG signal representation could be learned if the size of the RNN network were increased substantially.

2.5. Performance Evaluation Metrics

In this research, classification accuracies were calculated first with the ML-based SVM, KNN, and DT models and then with the DL-based GRU, LSTM, and BiLSTM RNN models, classifying the EEG dataset into two classes: children with epileptic seizures and normal control children. To keep the classes balanced, the dataset was split for training and validation and for testing .

The scope of the evaluation is broadened by including segment-based and event-based performance outcomes. Estimating seizure prediction performance requires defining the true positives (TP) as the number of EEG segments correctly identified as preictal, the true negatives (TN) as the number of EEG segments correctly classified as interictal, the false positives (FP) as the number of EEG segments incorrectly classified as preictal, and the false negatives (FN) as the number of EEG segments incorrectly classified as interictal. Equations 6 to 10 were used to calculate accuracy, recall, specificity, precision, and F1-score [9]:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \times 100\% \tag{6}$$

$$\text{Recall} = \frac{TP}{TP + FN} \times 100\% \tag{7}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \times 100\% \tag{8}$$

$$\text{Precision} = \frac{TP}{TP + FP} \times 100\% \tag{9}$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{10}$$
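The five segment-based metrics can be computed from the confusion-matrix counts with a few lines of Python. The function name `segment_metrics` is an illustrative assumption; as a consistency check, the counts in the SVM row of Table 1 (TN = 4, FP = 1, FN = 3, TP = 2) reproduce the percentages reported there.

```python
def segment_metrics(tn, fp, fn, tp):
    """Accuracy, recall, specificity, precision and F1-score in percent."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    recall = tp / (tp + fn) if tp + fn else 0.0          # sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    values = {"accuracy": accuracy, "recall": recall, "specificity": specificity,
              "precision": precision, "f1": f1}
    # Report percentages rounded to one decimal place, as in the tables.
    return {name: round(100 * v, 1) for name, v in values.items()}


print(segment_metrics(tn=4, fp=1, fn=3, tp=2))
# {'accuracy': 60.0, 'recall': 40.0, 'specificity': 80.0, 'precision': 66.7, 'f1': 50.0}
```

Guarding each denominator avoids a division by zero when a classifier never predicts one of the classes, as can happen with very small test sets.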

In the event-based evaluation, performance is measured as the proportion of seizures for which at least one preictal EEG segment was detected, per hour of EEG recording.

3. Results and Discussion

Tables 1 to 4 present the results of the four temporal features, Hur, TsEn, impe, and AAPE, each evaluated with three classifiers: SVM, KNN, and DT. The effectiveness of the classifiers varied with the feature considered. The best classification accuracy was 70%, which DT achieved with every feature, SVM with TsEn, and KNN with AAPE. KNN reached 100% specificity and precision with the Hur, TsEn, and AAPE features, although its recall remained low (20% to 40%).

Table 1. Classification results for epileptic seizures and control samples using the Hur feature with SVM, KNN, and DT classifiers.

| Hur | TN | FP | FN | TP | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-measure (%) |
|---|---|---|---|---|---|---|---|---|---|
| SVM | 4 | 1 | 3 | 2 | 60 | 40 | 80 | 66.7 | 50 |
| KNN | 5 | 0 | 4 | 1 | 60 | 20 | 100 | 100 | 33.3 |
| DT | 5 | 0 | 3 | 2 | 70 | 40 | 100 | 100 | 57.1 |

Table 2.

Classification results for epileptic seizures and control samples using the TsEn feature with SVM, KNN, and DT classifiers.


| TsEn | TN | FP | FN | TP | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-measure (%) |
|---|---|---|---|---|---|---|---|---|---|
| SVM | 4 | 1 | 2 | 3 | 70 | 60 | 80 | 75 | 66.7 |
| KNN | 5 | 0 | 4 | 1 | 60 | 20 | 100 | 100 | 33.3 |
| DT | 3 | 2 | 1 | 4 | 70 | 80 | 60 | 66.7 | 72.7 |

Table 3.

Classification results for epileptic seizures and control samples using the impe feature with SVM, KNN, and DT classifiers.


| impe | TN | FP | FN | TP | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-measure (%) |
|---|---|---|---|---|---|---|---|---|---|
| SVM | 4 | 1 | 3 | 2 | 60 | 40 | 80 | 66.7 | 50 |
| KNN | 4 | 1 | 3 | 2 | 60 | 40 | 80 | 66.7 | 50 |
| DT | 5 | 0 | 3 | 2 | 70 | 40 | 100 | 100 | 57.1 |

Table 4.

Classification results for epileptic seizures and control samples using the AAPE feature with SVM, KNN, and DT classifiers.


| AAPE | TN | FP | FN | TP | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-measure (%) |
|---|---|---|---|---|---|---|---|---|---|
| SVM | 3 | 2 | 2 | 3 | 60 | 60 | 60 | 60 | 60 |
| KNN | 5 | 0 | 3 | 2 | 70 | 40 | 100 | 100 | 57.1 |
| DT | 4 | 1 | 2 | 3 | 70 | 60 | 80 | 75 | 66.7 |
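To check which classifier attains the top accuracy for each feature, the accuracy column of Tables 1 to 4 can be tabulated and scanned. The dictionary and function names below are illustrative only; the values are copied from the tables.

```python
from typing import Dict, List, Tuple

# Accuracy (%) for each feature/classifier pair, copied from Tables 1 to 4.
ML_ACCURACY: Dict[str, Dict[str, float]] = {
    "Hur": {"SVM": 60, "KNN": 60, "DT": 70},
    "TsEn": {"SVM": 70, "KNN": 60, "DT": 70},
    "impe": {"SVM": 60, "KNN": 60, "DT": 70},
    "AAPE": {"SVM": 60, "KNN": 70, "DT": 70},
}


def best_classifiers(
    table: Dict[str, Dict[str, float]]
) -> Dict[str, Tuple[float, List[str]]]:
    """For each feature, return the top accuracy and every classifier reaching it."""
    summary: Dict[str, Tuple[float, List[str]]] = {}
    for feature, scores in table.items():
        top = max(scores.values())
        winners = sorted(name for name, acc in scores.items() if acc == top)
        summary[feature] = (top, winners)
    return summary


for feature, (top, winners) in best_classifiers(ML_ACCURACY).items():
    print(f"{feature}: {top}% with {', '.join(winners)}")
```

Running it reports 70% for every feature, reached by DT alone for Hur and impe, by DT and SVM for TsEn, and by DT and KNN for AAPE.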

The results of the four time-domain and entropy features, namely Hur, TsEn, impe, and AAPE, are shown in Table 5 to Table 8, respectively. These features were evaluated with three different RNN classifiers, namely GRU, LSTM, and BiLSTM, whose efficacy varied with the feature considered. The best classification accuracy, 90%, was obtained with GRU for both the TsEn and AAPE features; every other classifier and feature combination reached 80%. Specificity and precision of 100% were reached by GRU for TsEn and AAPE, by LSTM for Hur and TsEn, and by BiLSTM for impe and AAPE.

Table 5.

Classification results for epileptic seizures and control samples using the Hur feature with GRU, LSTM and BiLSTM classifiers.


| Hur | TN | FP | FN | TP | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-measure (%) |
|---|---|---|---|---|---|---|---|---|---|
| GRU | 4 | 1 | 1 | 4 | 80 | 80 | 80 | 80 | 80 |
| LSTM | 5 | 0 | 2 | 3 | 80 | 60 | 100 | 100 | 75 |
| BiLSTM | 4 | 1 | 1 | 4 | 80 | 80 | 80 | 80 | 80 |

Table 6.

Classification results for epileptic seizures and control samples using the TsEn feature with GRU, LSTM and BiLSTM classifiers.


| TsEn | TN | FP | FN | TP | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-measure (%) |
|---|---|---|---|---|---|---|---|---|---|
| GRU | 5 | 0 | 1 | 4 | 90 | 80 | 100 | 100 | 88.9 |
| LSTM | 5 | 0 | 2 | 3 | 80 | 60 | 100 | 100 | 75 |
| BiLSTM | 4 | 1 | 1 | 4 | 80 | 80 | 80 | 80 | 80 |

Table 7.

Classification results for epileptic seizures and control samples using the impe feature with GRU, LSTM and BiLSTM classifiers.


| impe | TN | FP | FN | TP | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-measure (%) |
|---|---|---|---|---|---|---|---|---|---|
| GRU | 3 | 2 | 0 | 5 | 80 | 100 | 60 | 71.4 | 83.3 |
| LSTM | 4 | 1 | 1 | 4 | 80 | 80 | 80 | 80 | 80 |
| BiLSTM | 5 | 0 | 2 | 3 | 80 | 60 | 100 | 100 | 75 |

Table 8.

Classification results for epileptic seizures and control samples using the AAPE feature with GRU, LSTM and BiLSTM classifiers.


| AAPE | TN | FP | FN | TP | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-measure (%) |
|---|---|---|---|---|---|---|---|---|---|
| GRU | 5 | 0 | 1 | 4 | 90 | 80 | 100 | 100 | 88.9 |
| LSTM | 4 | 1 | 1 | 4 | 80 | 80 | 80 | 80 | 80 |
| BiLSTM | 5 | 0 | 2 | 3 | 80 | 60 | 100 | 100 | 75 |
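The claims about Tables 5 to 8 can be checked the same way. The sketch below copies the accuracy, specificity and precision columns into an illustrative dictionary and lists the classifier and feature pairs that reach the overall top accuracy, and those with both specificity and precision at 100%.

```python
from typing import Dict, List, Tuple

# (accuracy, specificity, precision) in %, copied from Tables 5 to 8.
RNN_RESULTS: Dict[str, Dict[str, Tuple[float, float, float]]] = {
    "Hur": {"GRU": (80, 80, 80), "LSTM": (80, 100, 100), "BiLSTM": (80, 80, 80)},
    "TsEn": {"GRU": (90, 100, 100), "LSTM": (80, 100, 100), "BiLSTM": (80, 80, 80)},
    "impe": {"GRU": (80, 60, 71.4), "LSTM": (80, 80, 80), "BiLSTM": (80, 100, 100)},
    "AAPE": {"GRU": (90, 100, 100), "LSTM": (80, 80, 80), "BiLSTM": (80, 100, 100)},
}

Table = Dict[str, Dict[str, Tuple[float, float, float]]]


def top_accuracy(table: Table) -> List[Tuple[str, str, float]]:
    """Every (classifier, feature, accuracy) triple attaining the overall maximum."""
    best = max(acc for rows in table.values() for acc, _, _ in rows.values())
    return sorted(
        (model, feature, acc)
        for feature, rows in table.items()
        for model, (acc, _, _) in rows.items()
        if acc == best
    )


def perfect_spec_and_precision(table: Table) -> List[Tuple[str, str]]:
    """Every (classifier, feature) pair with 100% specificity and precision."""
    return sorted(
        (model, feature)
        for feature, rows in table.items()
        for model, (_, spec, prec) in rows.items()
        if spec == 100 and prec == 100
    )


print(top_accuracy(RNN_RESULTS))
print(perfect_spec_and_precision(RNN_RESULTS))
```

The first list contains only GRU with AAPE and TsEn at 90%; the second pairs GRU with TsEn and AAPE, LSTM with Hur and TsEn, and BiLSTM with impe and AAPE.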

Combining the individual features is expected to be the most helpful in practice, because each feature describes the EEG from a different point of view and the combination supplies this complementary knowledge to the classifier, which enables better model predictions. Once the features have been extracted, they can be passed to ML classifiers or to DL models, and the recurrent DL models can additionally capture temporal dependencies. Ultimately, the choice between ML and DL techniques should be guided by the size of the dataset, the available computational resources, the required level of interpretability, and the complexity of the temporal interactions present in the data.

Table 9 shows the results of applying the SVM, KNN, and DT machine learning classifiers to the fusion feature set. Combining the four time-domain and entropy features with the DT classifier produces better results than those obtained with the KNN and SVM classifiers.

The fusion feature set thus combines time-domain and entropy information in an effort to improve seizure prediction. Drawing on these complementary characteristics is intended to improve the final classification results, particularly for the DT classifier.
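The section does not state how the four features are combined into the fusion set. A common choice, assumed here, is early (feature-level) fusion: the values computed for one EEG segment are concatenated into a single vector in a fixed order before classification. The function name, the feature order and the example values are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# Fixed order so that every fused vector has the same layout (an assumption of this sketch).
FEATURE_ORDER: Tuple[str, ...] = ("Hur", "TsEn", "impe", "AAPE")


def fuse_features(
    per_feature: Dict[str, Sequence[float]],
    order: Tuple[str, ...] = FEATURE_ORDER,
) -> List[float]:
    """Concatenate the per-feature values of one EEG segment into a single vector."""
    missing = [name for name in order if name not in per_feature]
    if missing:
        raise KeyError(f"missing features: {missing}")
    fused: List[float] = []
    for name in order:
        # Each feature may contribute several values, e.g. one per EEG channel.
        fused.extend(float(value) for value in per_feature[name])
    return fused


# Hypothetical values for a single two-channel segment.
segment = {"Hur": [0.61, 0.58], "TsEn": [1.9, 2.1], "impe": [0.83, 0.80], "AAPE": [0.72, 0.75]}
print(fuse_features(segment))
```

The fused vectors of all segments can then be stacked row by row to form the classifier input; concatenation keeps each feature's values intact, so any scaling or selection step can still be applied afterwards.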

In addition, Table 10 shows the results of applying the GRU, LSTM, and BiLSTM RNN deep learning classifiers to the fusion feature set. Here too, the fused feature set is intended to improve the final classification results by combining information from the time-domain and entropy characteristics.