In finite element calculations of the temperature and stress fields of hydraulic concrete structures [1, 2, 3], especially structures with cooling water pipes [4], analysts usually build a dense model to obtain high accuracy [5, 6], which increases the scale of the calculation [7]. In addition, temperature control calculations should be carried out in real time as construction proceeds [8, 9], so that the boundary conditions and parameters can be continuously adjusted and corrected against measured data to obtain more reasonable results. Because traditional serial programs have low computational efficiency, they cannot meet practical engineering needs while maintaining calculation accuracy. These problems show that the existing serial simulation method needs a comprehensive overhaul to improve its computational efficiency.

In recent years, the growth of CPU clock rates has slowed year by year. In engineering computing [10], efforts to improve computing efficiency have gradually shifted to the parallelization of programs [11]. Many studies have explored multi-core CPU parallelism. Mao parallelized the joint optimal scheduling of multiple reservoirs in the upper reaches of the Huaihe River; the computation took 5671.1 s on 1 CPU core and 2104.6 s on 6 CPU cores, a speedup of 2.704 and a parallel efficiency of 45% [12]. Jia proposed a master-slave parallel MEC based on MPI and analyzed the effects of task allocation, communication overhead, sub-population size, individual evaluation time and the number of processors on the parallel speedup [13]. CPU parallelism relies on adding more CPU cores to achieve speedup. Whether by buying multi-core CPUs or by building a cluster [14], increasing the number of CPU cores by an order of magnitude is very expensive. By contrast, the GPU of a consumer-grade graphics card contains hundreds or thousands of processing cores [15, 16].
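The speedup and efficiency figures quoted above follow the usual definitions: speedup is the serial time divided by the parallel time, and efficiency is the speedup divided by the number of cores. The sketch below applies those definitions to the quoted timings. The function name `speedup_and_efficiency` is hypothetical and chosen only for illustration. The ratio of the quoted timings comes to about 2.695, marginally below the reported 2.704; the small gap may come from rounding in the quoted timings, which is an assumption here. The efficiency agrees with the reported 45%.

```python
def speedup_and_efficiency(t_serial, t_parallel, n_cores):
    """Return (speedup, efficiency) for a parallel run.

    speedup    = t_serial / t_parallel
    efficiency = speedup / n_cores
    """
    if t_serial <= 0 or t_parallel <= 0 or n_cores < 1:
        raise ValueError("times must be positive and n_cores must be >= 1")
    speedup = t_serial / t_parallel
    efficiency = speedup / n_cores
    return speedup, efficiency


if __name__ == "__main__":
    # Timings quoted in the text: 1 CPU core vs. 6 CPU cores.
    s, e = speedup_and_efficiency(5671.1, 2104.6, 6)
    print(f"speedup = {s:.3f}, efficiency = {e:.1%}")
    # prints: speedup = 2.695, efficiency = 44.9%
```

An efficiency well below 100% on only six cores illustrates why adding CPU cores alone gives diminishing returns.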

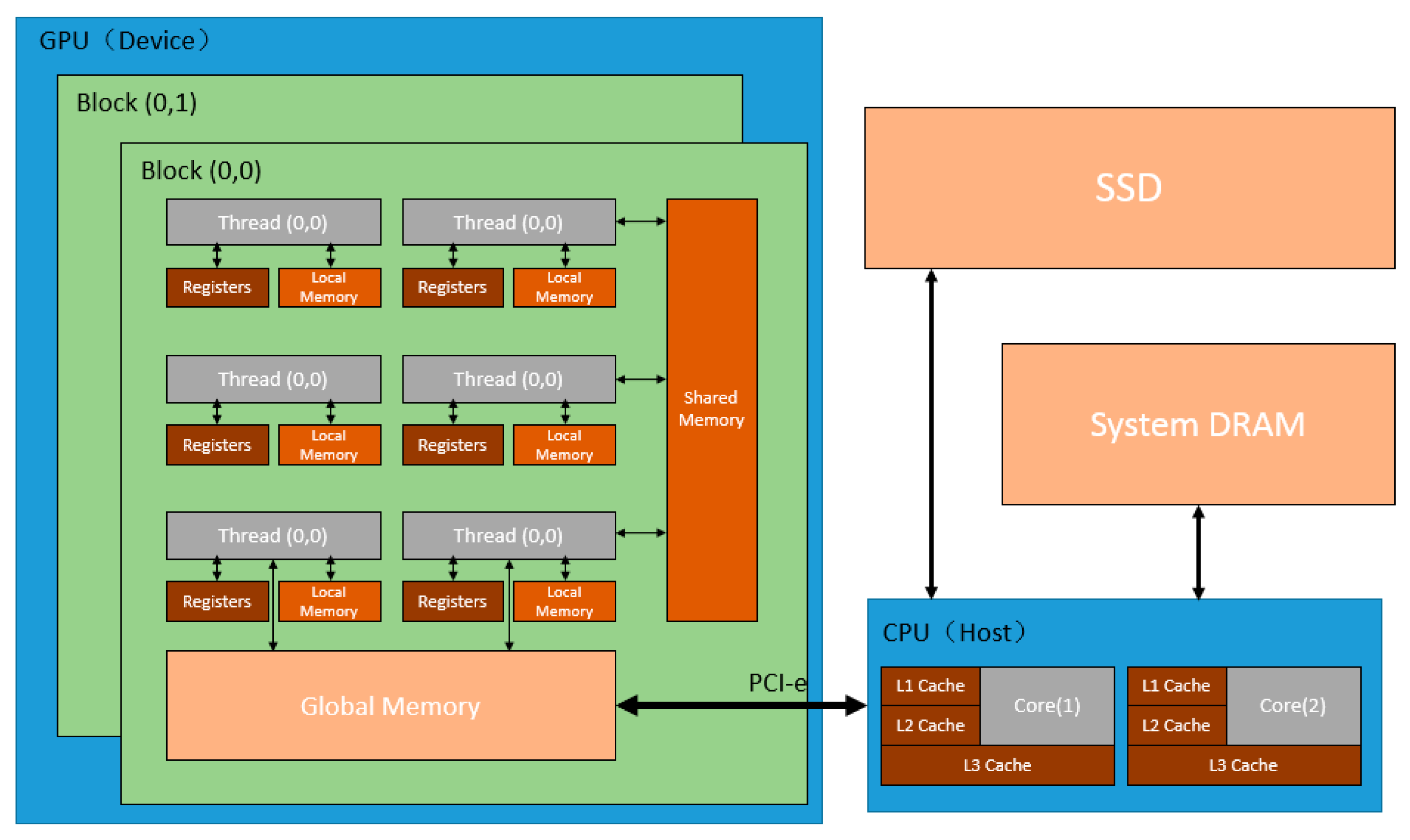

GPU stands for Graphics Processing Unit. It was designed for image computing, a field in which research is already very mature. As a fine-grained parallel device, the GPU was designed for compute-intensive, highly parallel workloads, so more of its transistors are devoted to data processing rather than to data caching or flow control. A GPU has far more processing cores and threads than a CPU. GPU computing is therefore well suited to fields that require large-scale parallel computing. In 2009, the Portland Group (PGI) and NVIDIA jointly launched the CUDA Fortran compiler platform, which greatly expanded the application range of general-purpose GPU computing. Since the release of this platform, GPU computing has been widely used in medicine, meteorology, fluid calculation, hydrological prediction and other numerical computing fields [17, 18, 19, 20].

T. McGraw presented a Bayesian formulation of the fiber model which showed that the inversion method can be used to construct plausible connectivity [21]; an implementation of this fiber model on the GPU was also presented. Qin proposed a fast 3D registration technique based on the CUDA architecture that improves speed by an order of magnitude while maintaining registration accuracy, making it well suited to clinical medical applications [22]. Takashi used GPUs to implement ASUCA, the next-generation weather prediction model developed by the Japan Meteorological Agency, and achieved a significant speedup [23]. Taking prestack time migration and Gazdag depth migration in seismic data processing as a starting point, Liu introduced the idea, architecture and coding environment of GPU and CPU co-processing with CUDA [24]. GPU acceleration has been implemented for OpenFMO, a fragment molecular orbital calculation program, and its performance examined; the GPU-accelerated program shows a 3.3× speedup over the CPU version. M. A. Otaduy presented a parallel molecular dynamics algorithm for on-board multi-GPU architectures, parallelizing a state-of-the-art molecular dynamics algorithm at two levels [25]. Liang applied the GPU platform and the FDTD method to solve Maxwell's equations with complex boundary conditions, large amounts of data and low data correlation [26]. Wen presented a new particle-based SPH fluid simulation method that runs entirely on the GPU; in this method, a hash-based uniform grid is first constructed on the GPU to locate neighboring particles faster in scenes of arbitrary scale [27]. Lin presented a new node reordering method to optimize the bandwidth of sparse matrices, which reduces the communication between GPUs [28]. J. C. Kalita presented an optimization strategy for the BiCGStab iterative solver on the GPU for computing incompressible viscous flows governed by the unsteady N-S equations on a CUDA platform [29]. Tran harnessed accelerators such as GPUs to speed up numerical simulations by up to 23 times [30]. Cohen used a GPU-based sparse matrix solver to improve the solution speed of a flood forecasting model, which greatly benefits early warning and operation management during floods [31]. Ralf used CUDA to numerically solve the equations of motion of a table tennis ball and performed a statistical analysis of the data generated by the simulation [32]. The Mike series of commercial software (Mike11, Mike21 and Mike3) developed by the Danish Institute of Hydraulics has also introduced GPUs to improve the computational efficiency of its models [33, 34, 35].

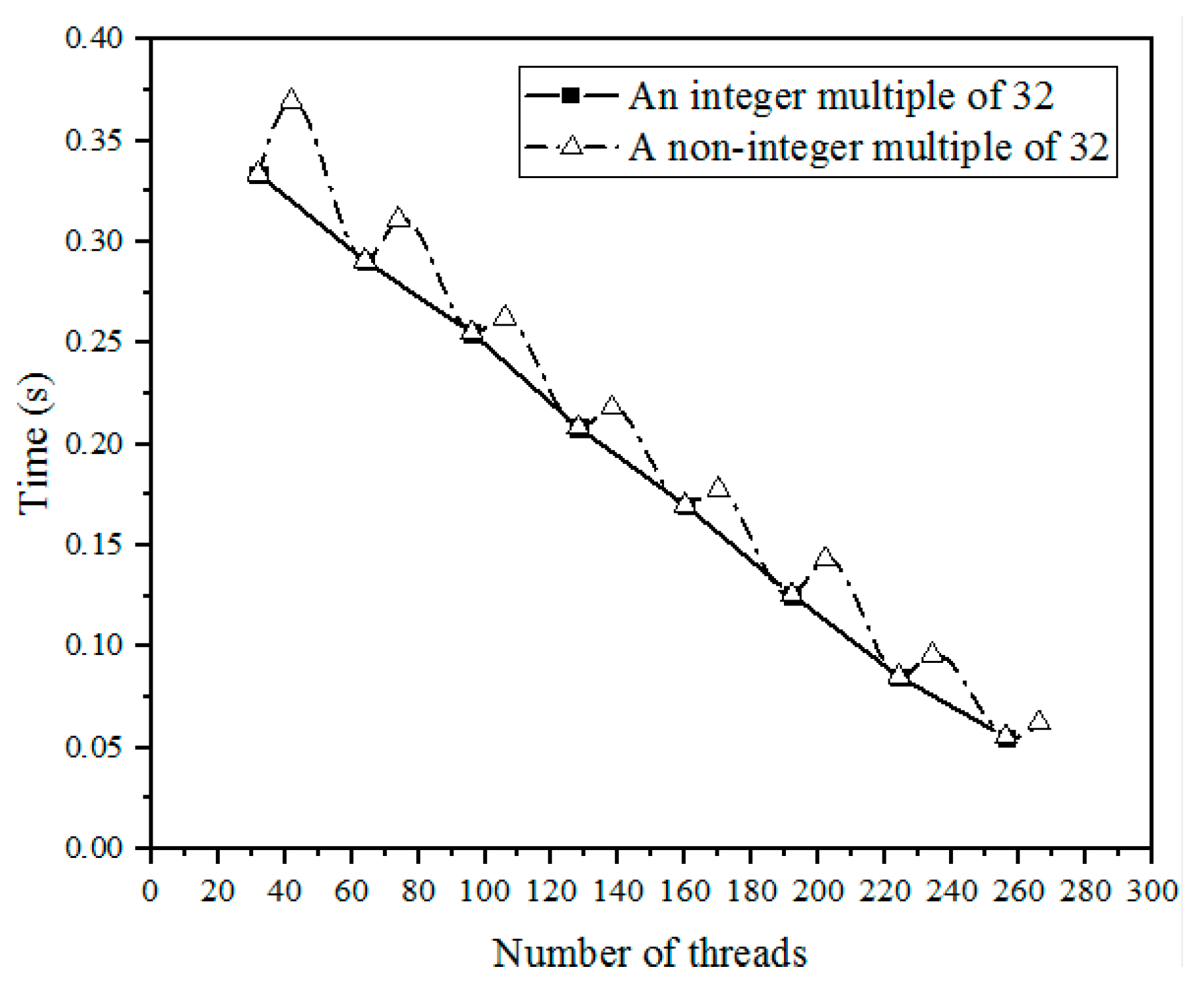

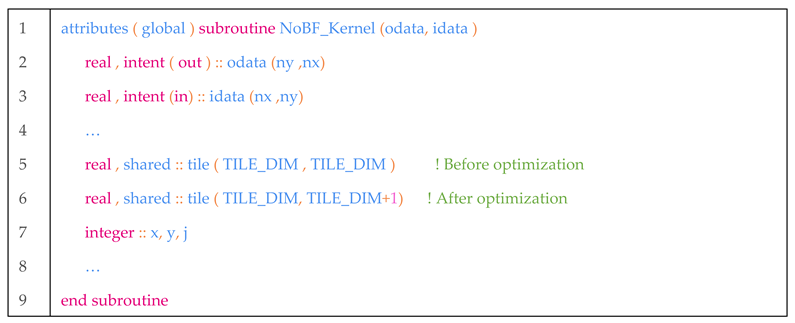

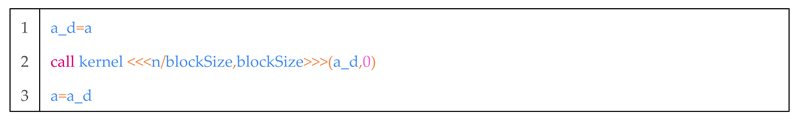

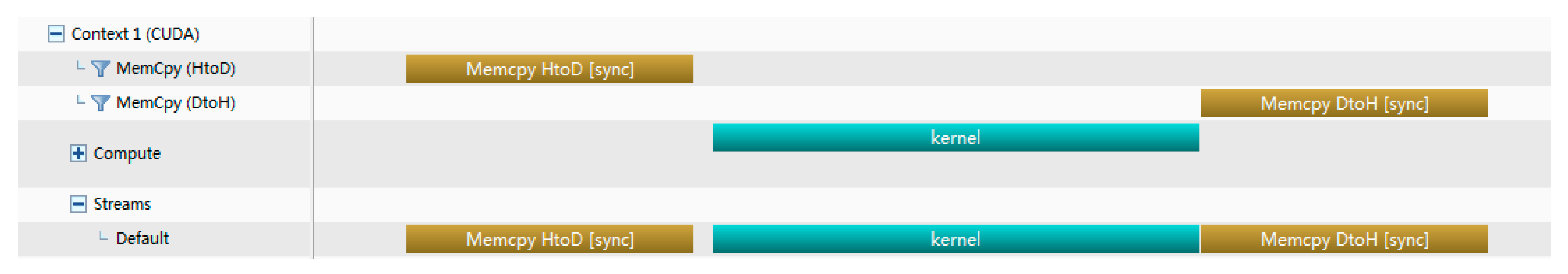

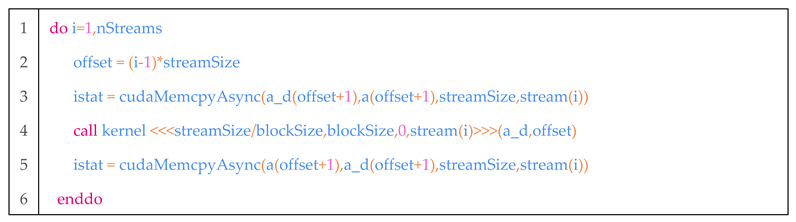

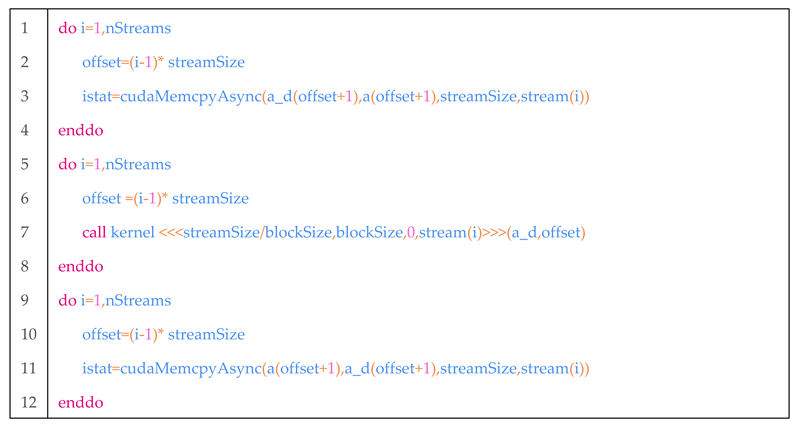

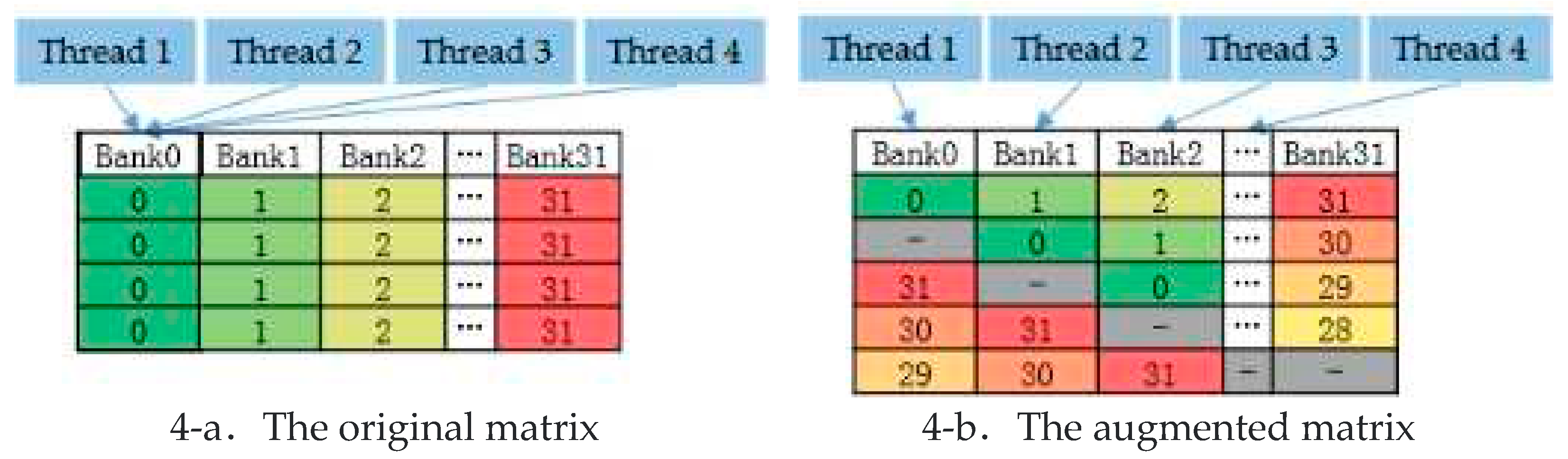

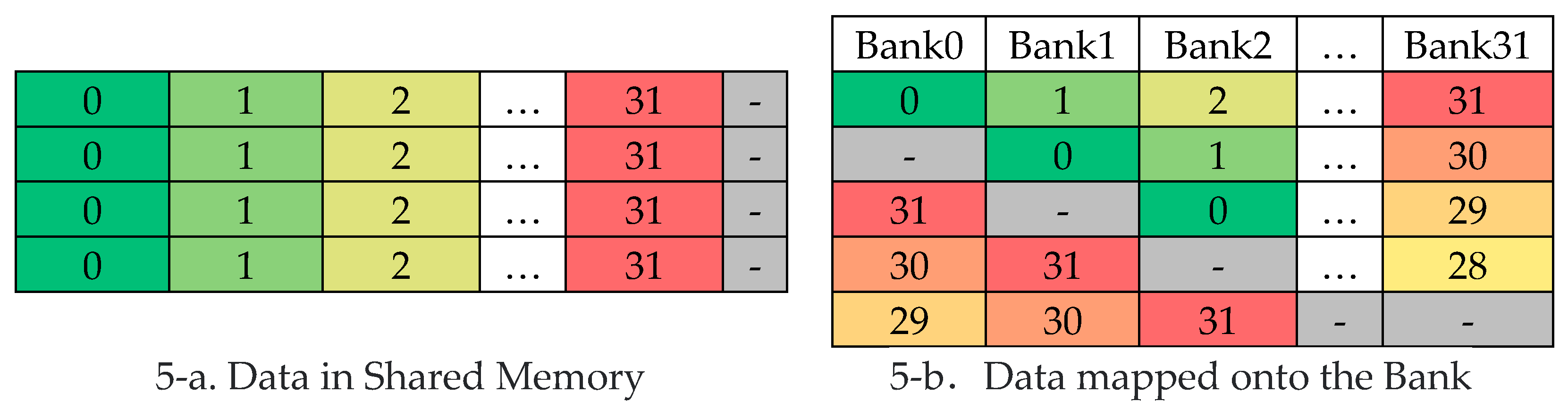

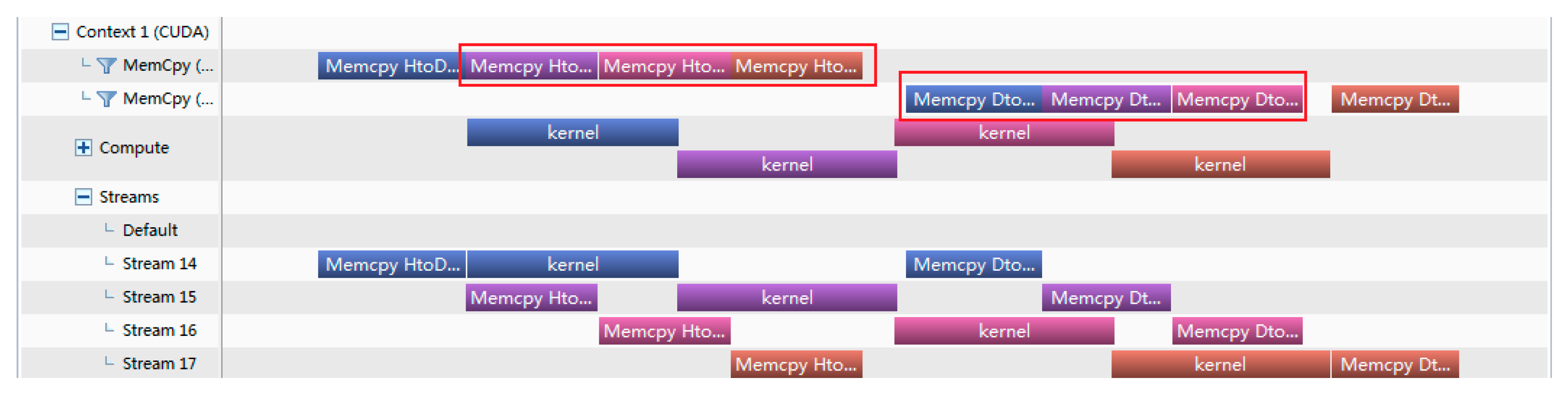

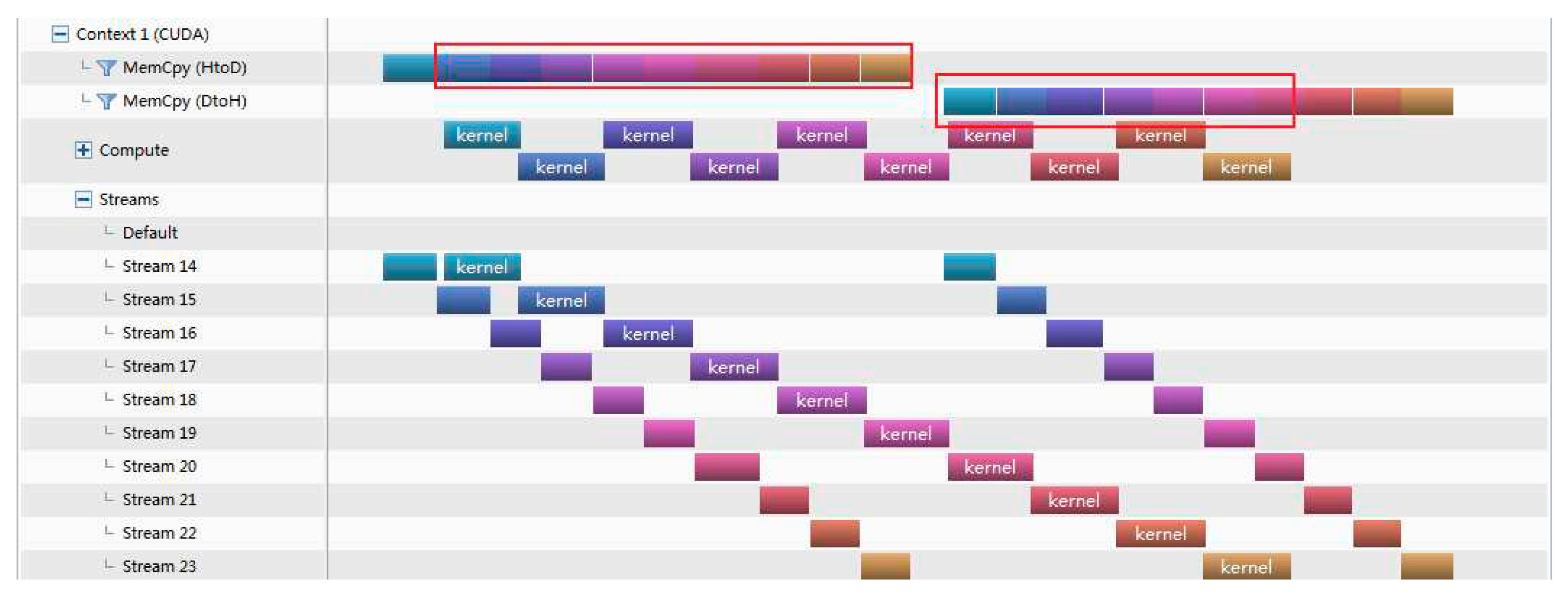

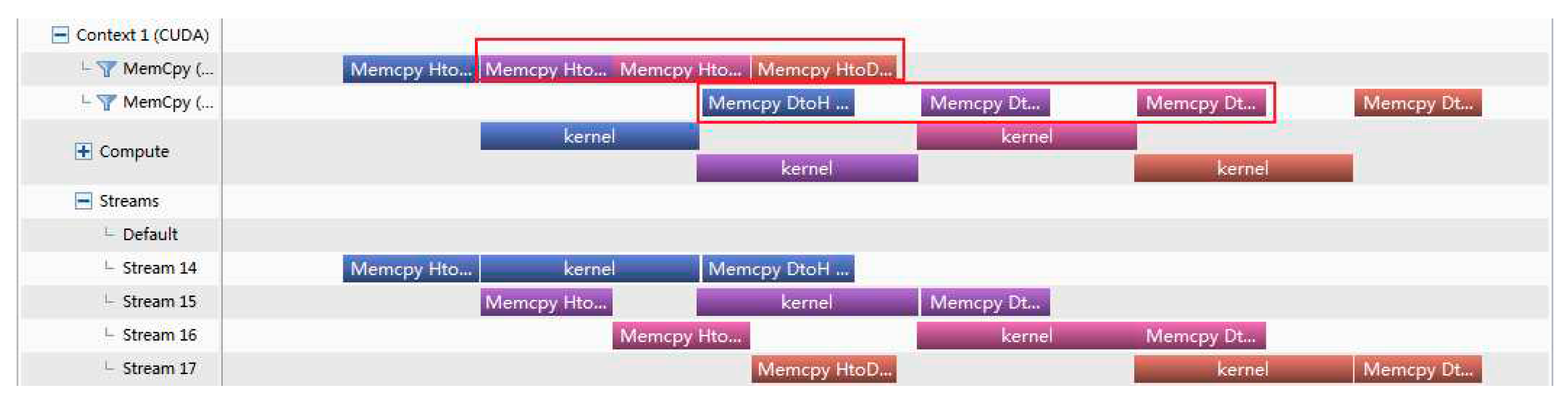

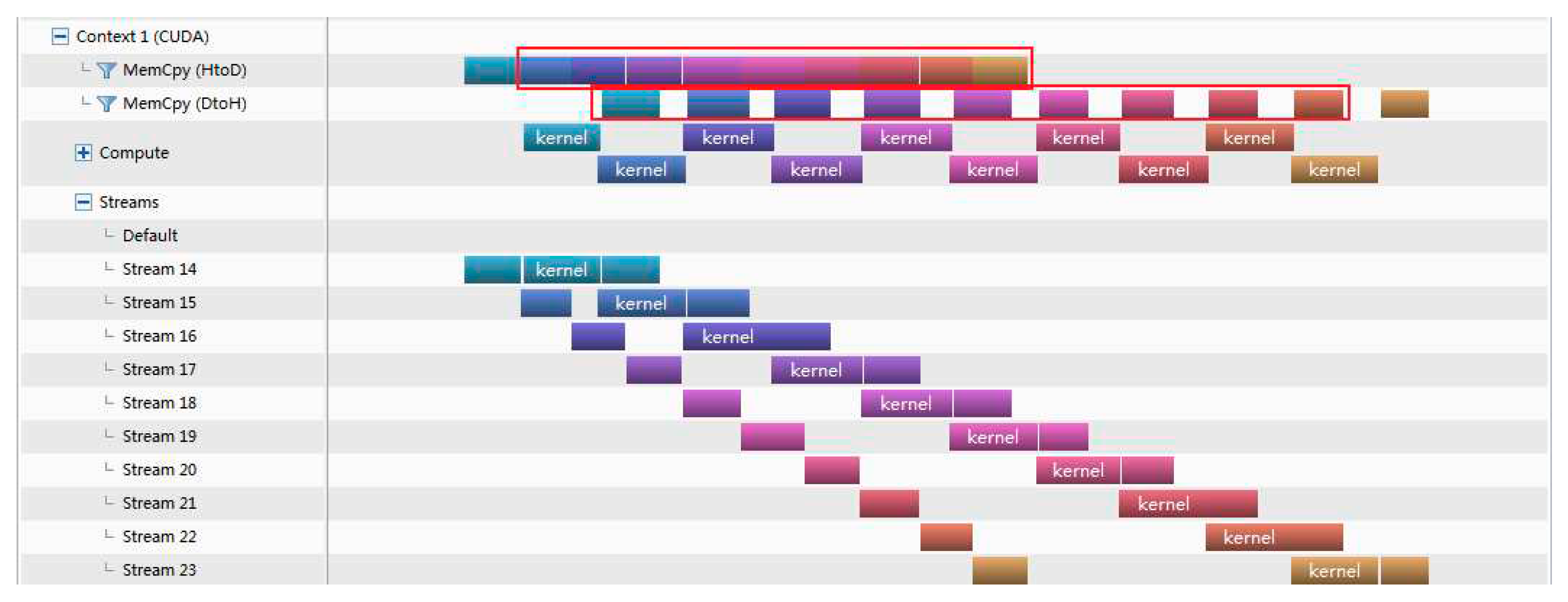

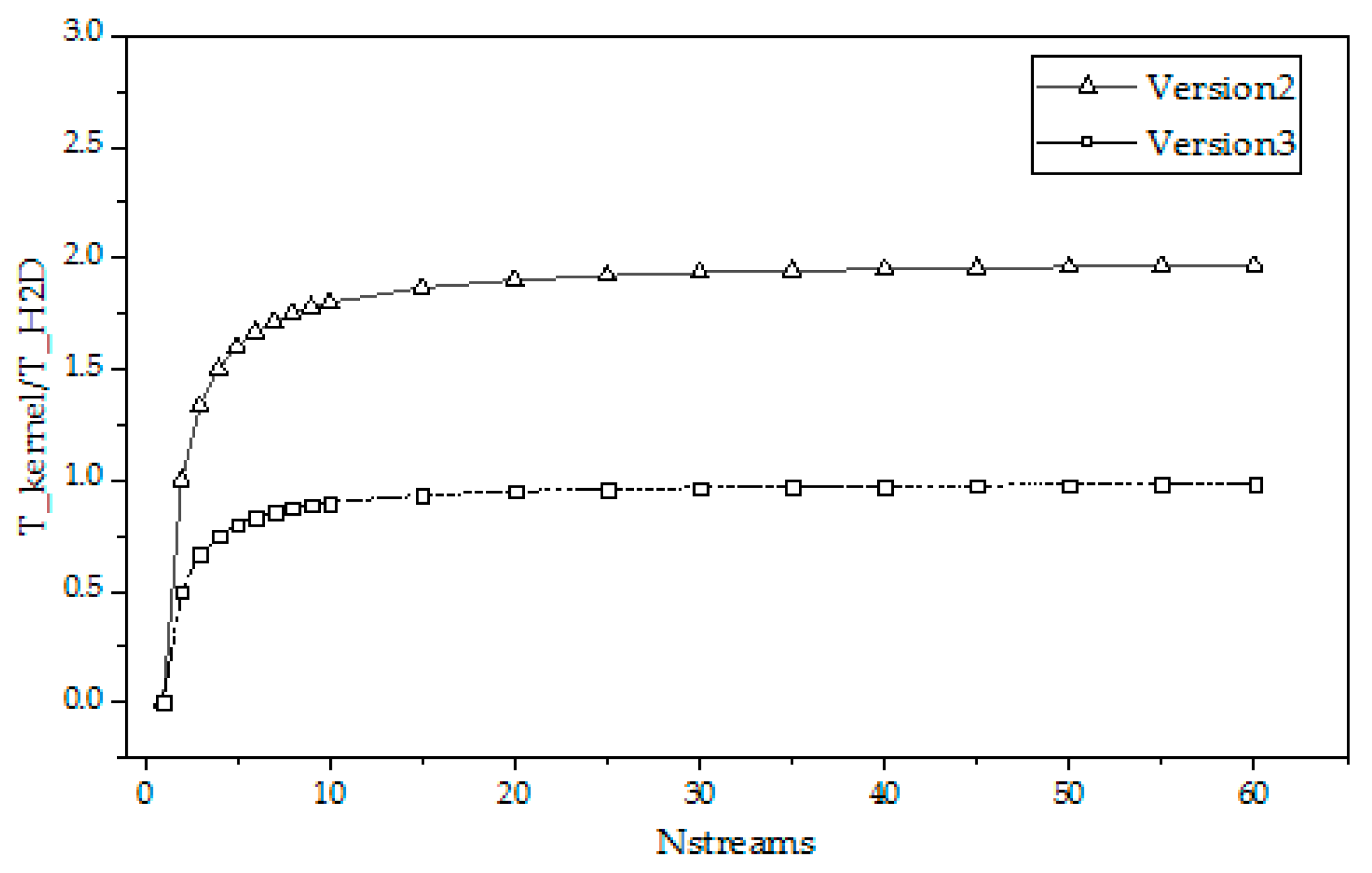

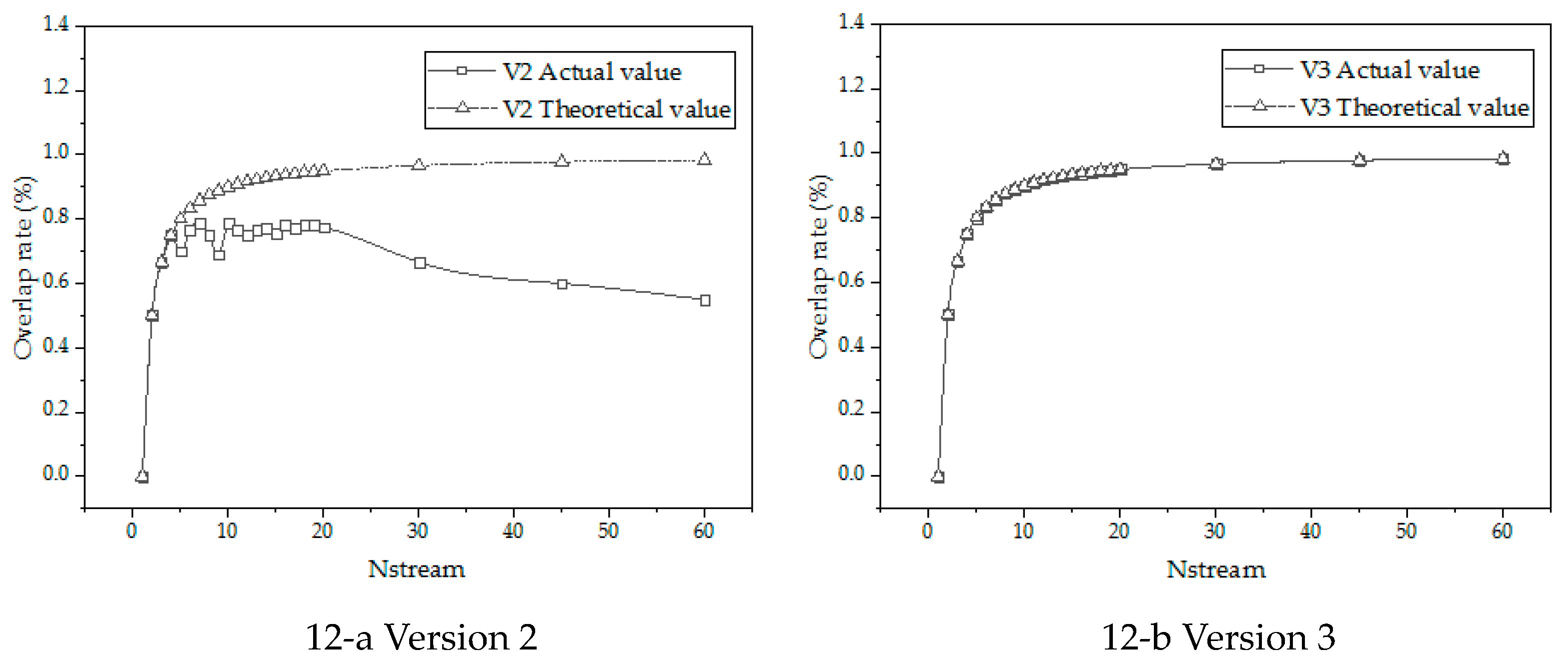

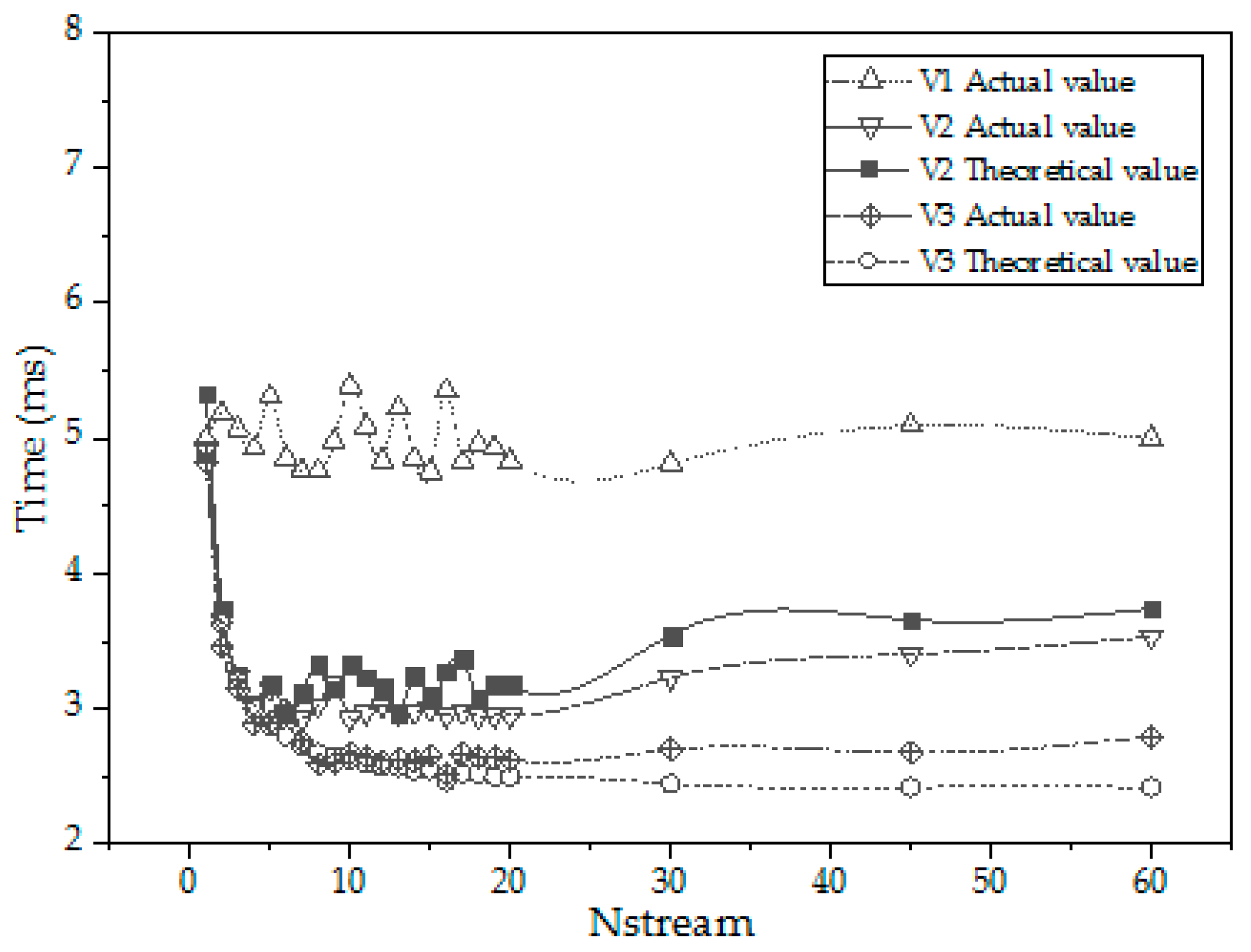

GPU parallel computing is rarely used in the field of concrete temperature and stress field simulation. Concrete simulation calculation is to simulate the construction process[

36], environmental conditions[

37], material property changes and crack prevention measures and other factors as accurately as possible[

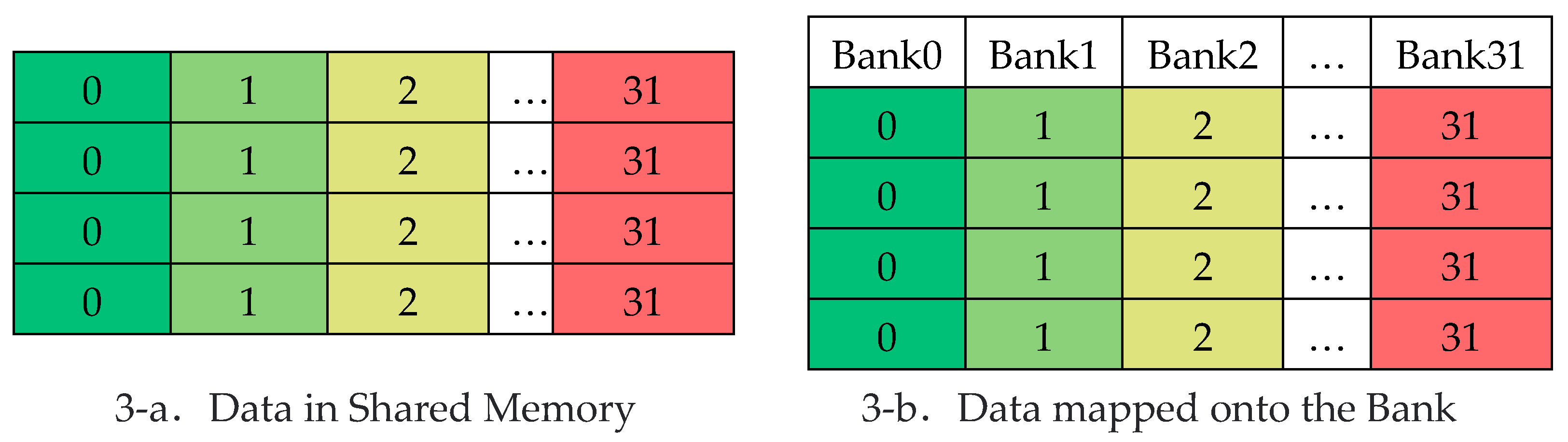

38]. It is of great significance to y GPU parallel computing to concrete temperature control simulation calculation for real-time inversion of temperature field and stress field and shortening calculation time. In this paper, CUDA Fortran compiler platform is used to transform the large massive concrete temperature control simulation program into GPU parallel program. An improved analysis formula of GPU parallel algorithm is proposed. Aiming at the extra time consumption of GPU parallel in the indicators, two measures are proposed to optimize the GPU parallel program: One is to use shared memory to reduce the date access time, and the other is to hide the date access time through asynchronous parallelism.