Submitted: 14 September 2023

Posted: 18 September 2023


Abstract

Keywords:

1. Introduction

2. Literature review

3. General encoding and decoding algorithm

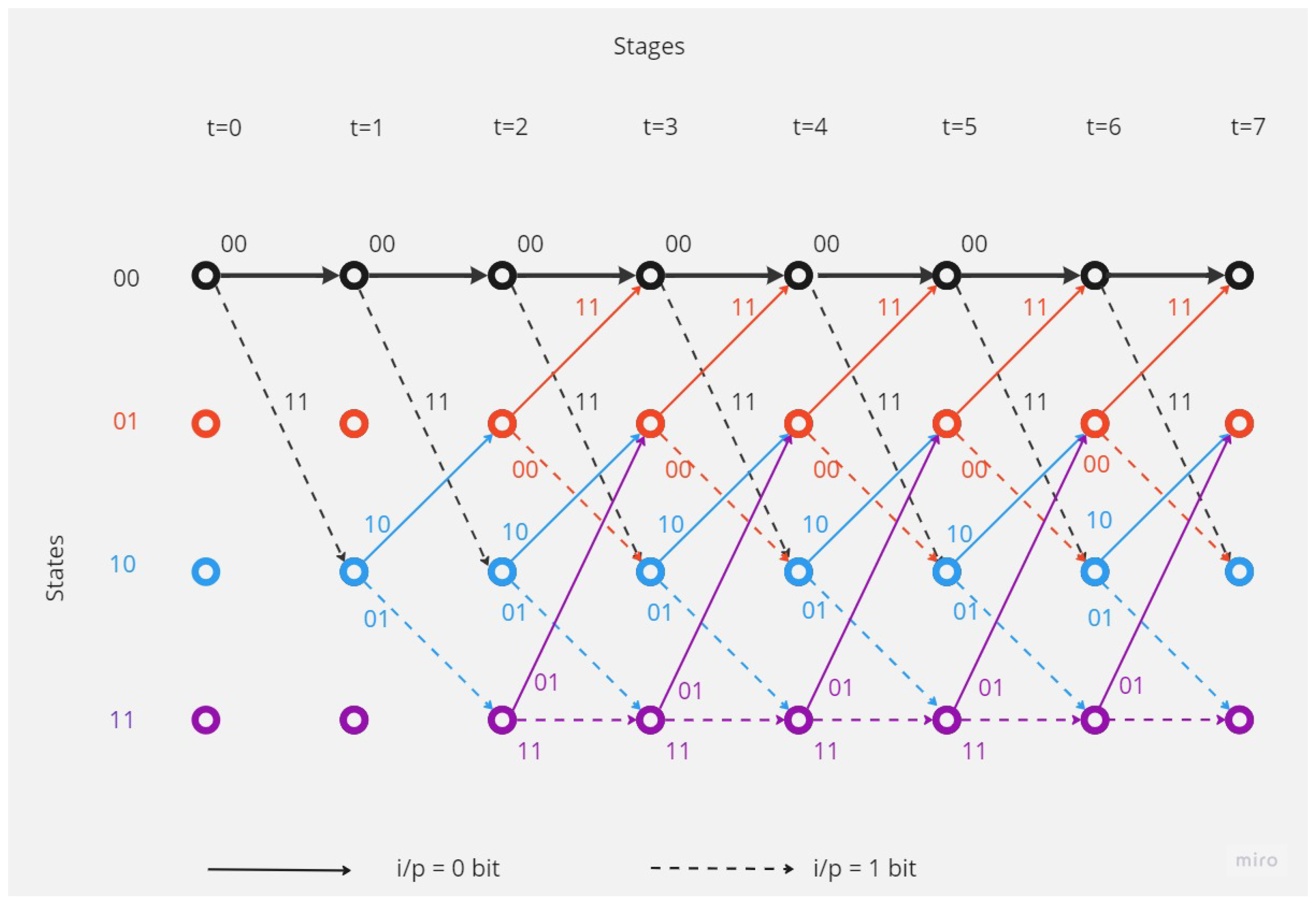

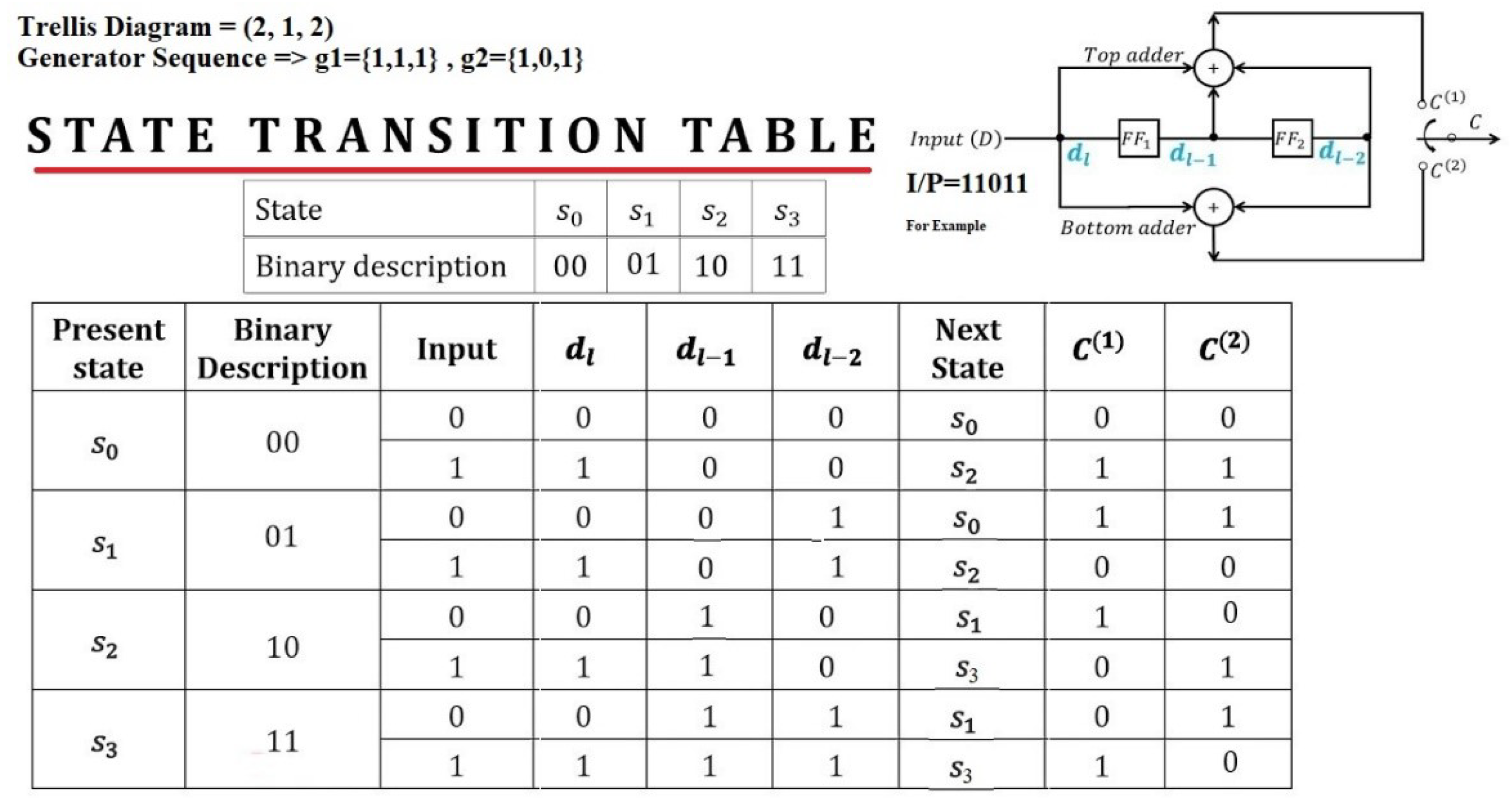

- Trellis Diagram

- Viterbi Algorithm

- PM [s, i+1] is the new path metric for state s at time i+1. It represents the likelihood of the most likely path through the trellis diagram that ends in state s at time i+1.

- PM [s′, i] is the previous path metric for a predecessor state s′ at time i. It represents the likelihood of the most likely path through the trellis diagram that ends in state s′ at time i.

- B [s′, i] is the branch metric for transitioning from state s′ at time i to state s at time i+1. It represents the similarity between the received signal and the expected signal for this state transition.
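The path-metric update described by these definitions can be sketched as a single add-compare-select step. This is a minimal illustration, not the authors' implementation: the function name `acs_step`, the four-state connectivity in `PREDECESSORS`, and all metric values are hypothetical, and a smaller metric is assumed to mean a more likely path (as with Hamming distance).

```python
def acs_step(pm_prev, predecessors, branch_metric):
    """One add-compare-select update of the path metrics.

    pm_prev:       {state: path metric at time i}
    predecessors:  {state: [states that can transition into it]}
    branch_metric: {(previous state, state): branch metric at time i}
    Returns the path metrics at time i+1 and the chosen predecessor per state.
    """
    pm_next, survivor = {}, {}
    for s, preds in predecessors.items():
        # Add: extend every incoming path by its branch metric.
        candidates = [(pm_prev[p] + branch_metric[(p, s)], p) for p in preds]
        # Compare and select: keep the smallest total (ties go to the
        # lower-numbered predecessor because tuples compare element-wise).
        pm_next[s], survivor[s] = min(candidates)
    return pm_next, survivor


# Hypothetical four-state trellis and made-up metrics, for illustration only.
PREDECESSORS = {0: [0, 1], 1: [2, 3], 2: [0, 1], 3: [2, 3]}
pm_prev = {0: 0, 1: 2, 2: 1, 3: 3}
branch_metric = {(0, 0): 2, (1, 0): 0, (2, 1): 1, (3, 1): 1,
                 (0, 2): 0, (1, 2): 2, (2, 3): 1, (3, 3): 1}

pm_next, survivor = acs_step(pm_prev, PREDECESSORS, branch_metric)
print(pm_next)   # {0: 2, 1: 2, 2: 0, 3: 2}
print(survivor)  # {0: 0, 1: 2, 2: 0, 3: 2}
```

Repeating this step for every received symbol and then tracing the recorded predecessors backwards yields the survivor path.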

- 1.

-

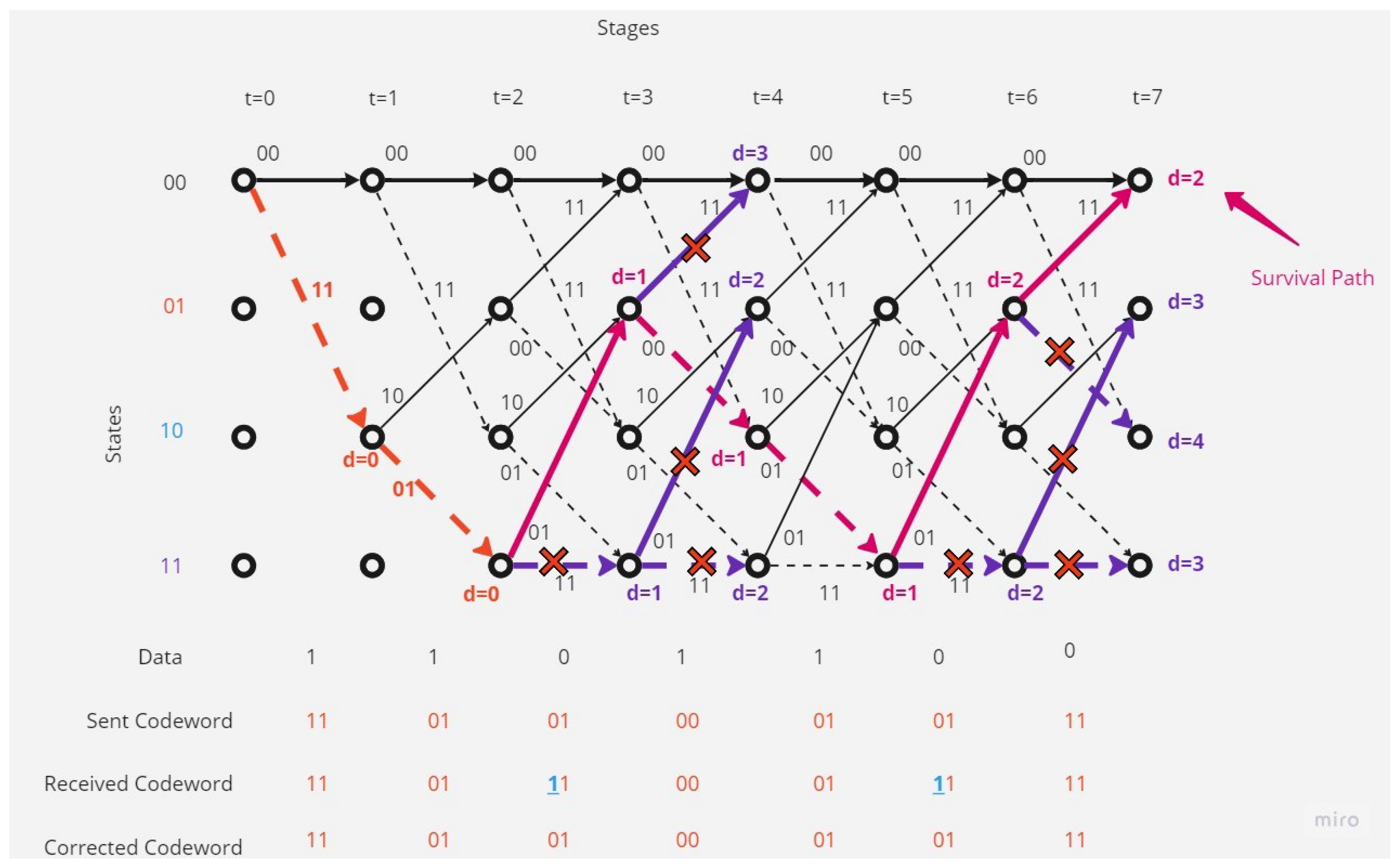

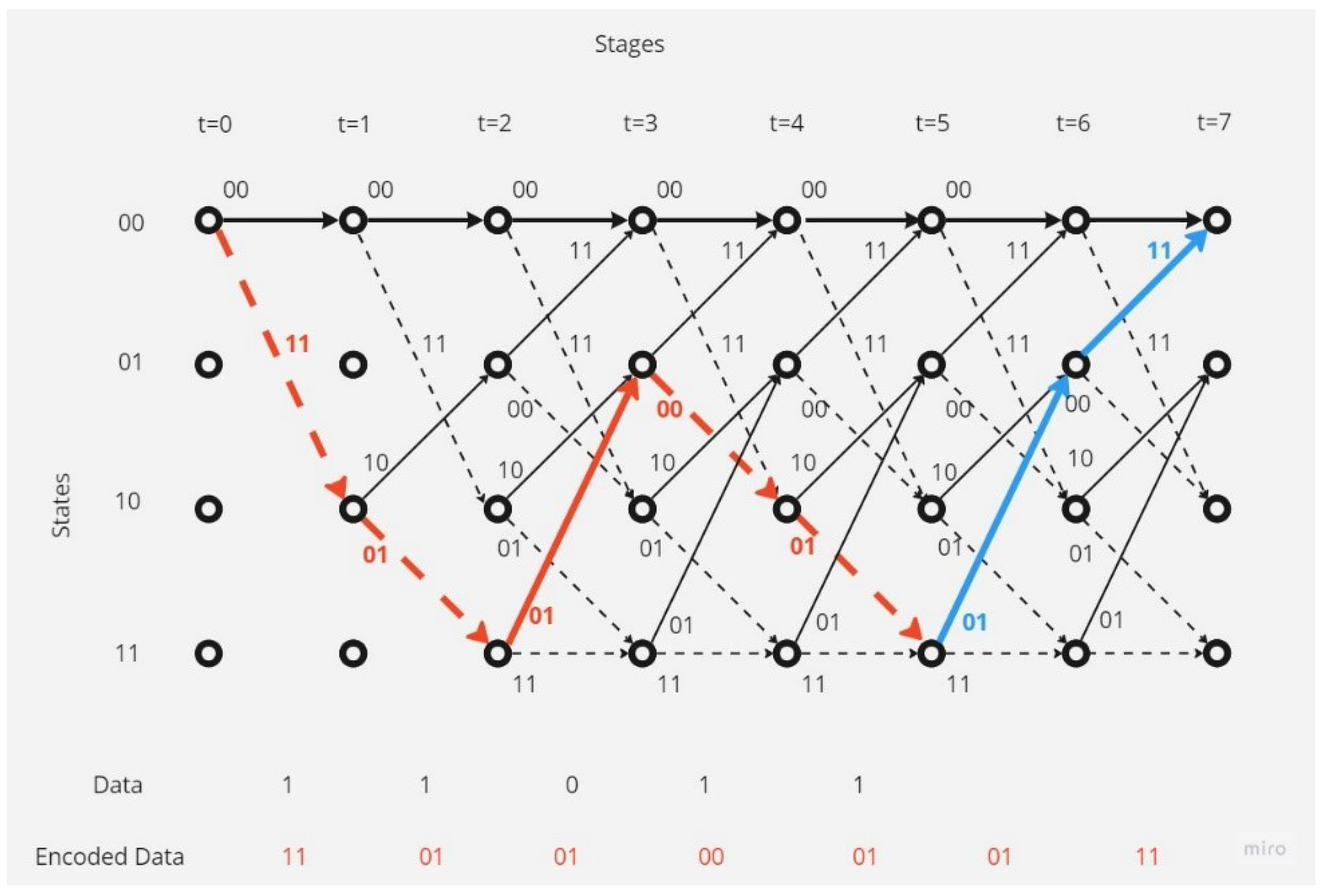

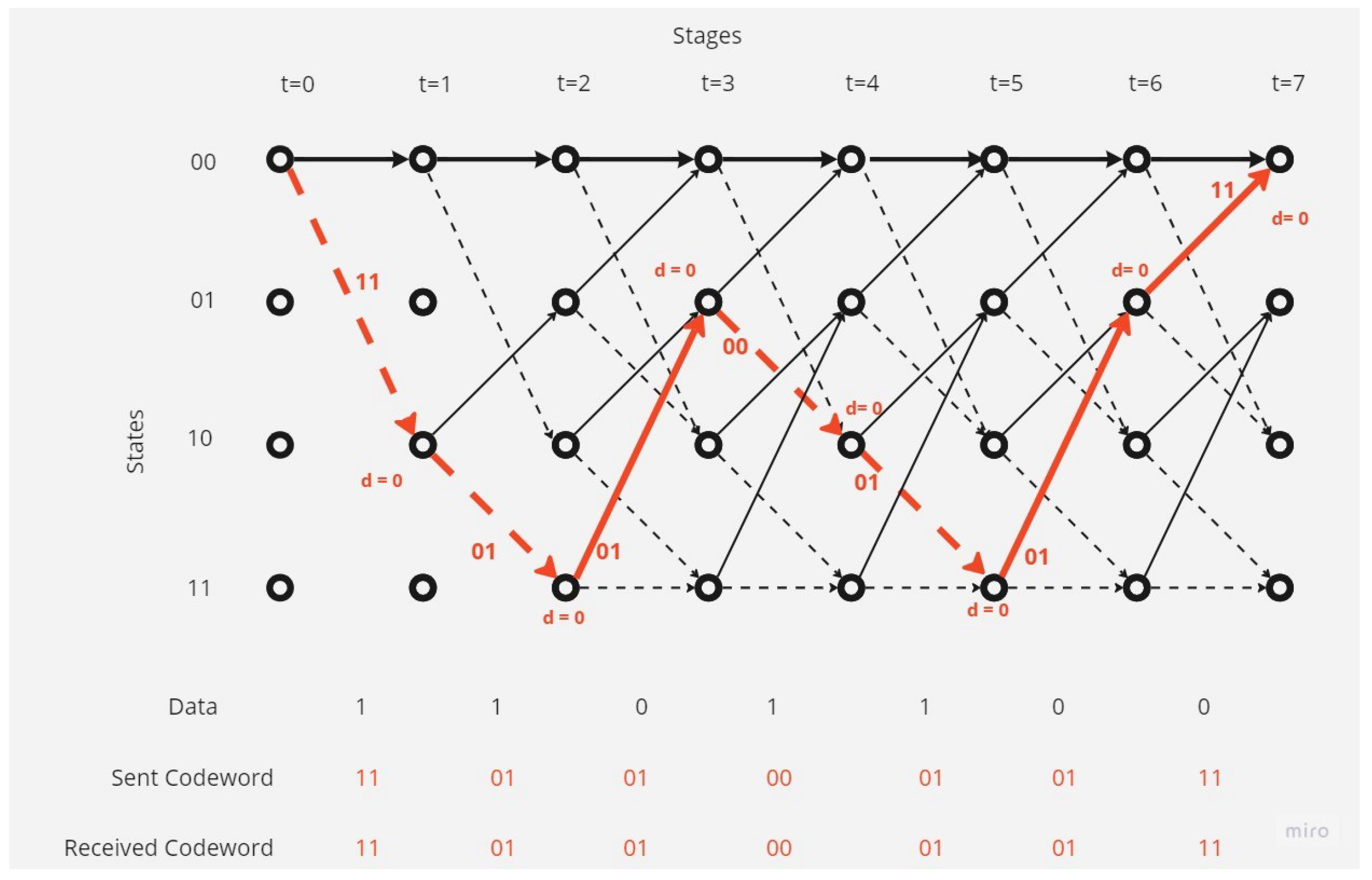

There is no error in the received codeword.In this case the decoding operation at the receiver point will as shown in the Figure 5:The receiver and depending on Viterbi algorithm found the correct code depending on the survival path (path with minimum hamming distance value, here it equal to 0).

- 2.

-

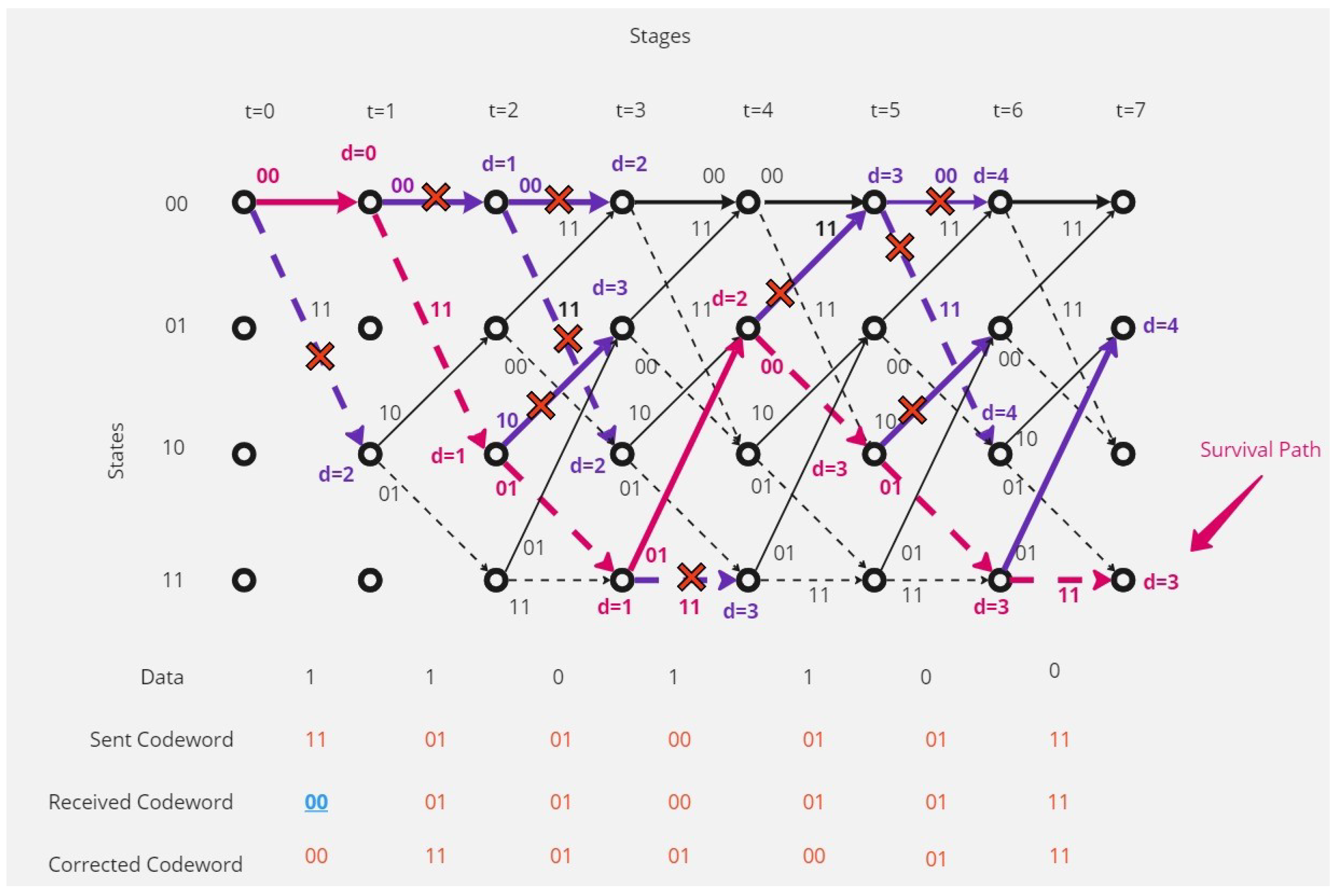

There is one error in the received codeword.Figure 6 shows that the receiver received a codeword with one-bit error.The receiver and depending on Viterbi algorithm found the error bit and corrected code depending on the survival path (path with minimum hamming distance value, here it is 1).

- 3.

-

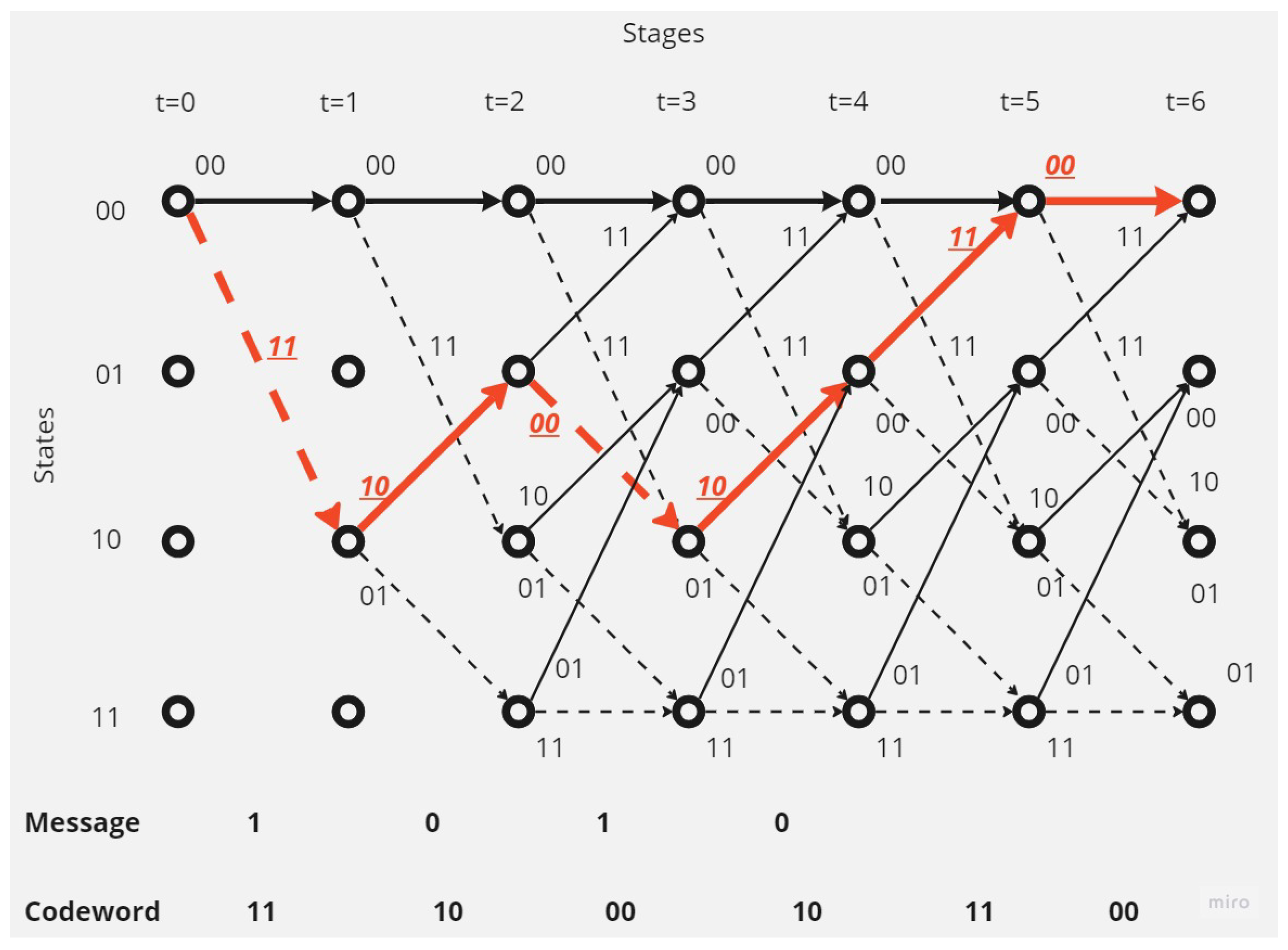

There are separated errors in the received codeword.Figure 7 show that the receiver received a codeword with a separated two-bit error.The receiver and depending on Viterbi algorithm found the error bit and corrected code depending on the survival path (path with minimum hamming distance value, here it is 2).

- 4.

-

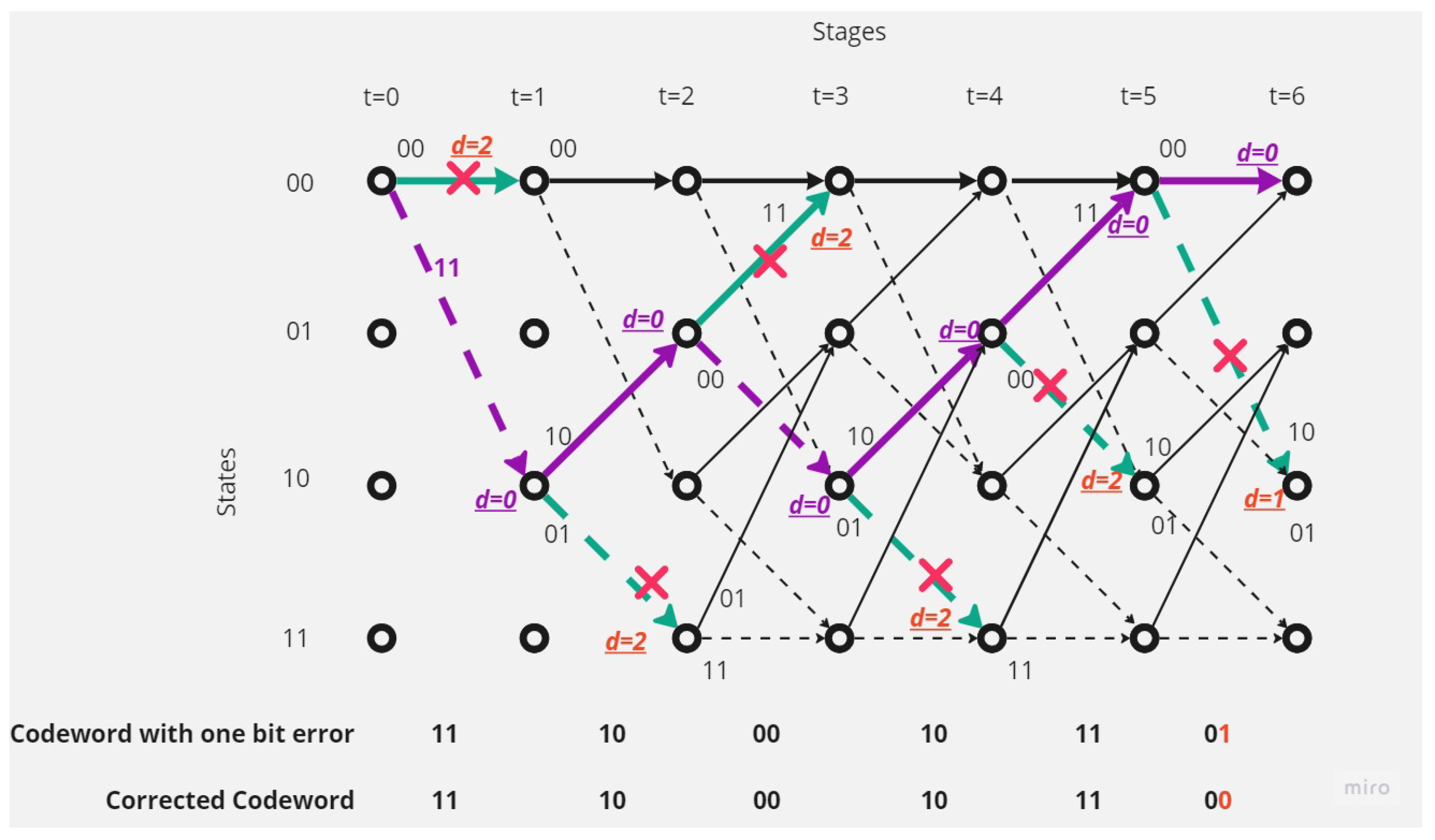

There are continuous (contiguous) errors in the received codeword.Figure 8 show that the receiver received a codeword with continuous errors.The receiver in this situation couldn’t detect the errors and the receiver could correct only 64.29% of the received codeword, and there are 35.71% of the received code word couldn’t correct it, and this shows the disadvantages of this type of coding and decoding method.
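The four cases can be explored with a small hard-decision Viterbi decoder. The sketch below assumes a rate-1/2 code with three-bit registers and the generator taps (1,1,1) and (1,0,1); the message, the error positions, and the helper names (`step`, `encode`, `viterbi_decode`, `flip`) are hypothetical and do not reproduce the codewords or percentages in the figures.

```python
G = ((1, 1, 1), (1, 0, 1))  # generator taps; the newest bit is assumed leftmost


def step(state, u):
    """Return (next_state, output_pair); state holds the two previous input bits."""
    reg = (u,) + state
    out = tuple(sum(g * r for g, r in zip(taps, reg)) % 2 for taps in G)
    return (u, state[0]), out


def encode(msg):
    """Encode msg and append two zero bits so the encoder ends in state (0, 0)."""
    state, out = (0, 0), []
    for u in list(msg) + [0, 0]:
        state, pair = step(state, u)
        out.extend(pair)
    return out


def viterbi_decode(received):
    """Hard-decision Viterbi; returns (message bits, Hamming distance of survivor)."""
    inf = float("inf")
    states = [(a, b) for a in (0, 1) for b in (0, 1)]
    pm = {s: (0 if s == (0, 0) else inf) for s in states}  # start in state (0, 0)
    paths = {s: [] for s in states}
    for i in range(0, len(received), 2):
        r = received[i:i + 2]
        new_pm = {s: inf for s in states}
        new_paths = {s: [] for s in states}
        for s in states:
            if pm[s] == inf:
                continue  # state not reachable yet
            for u in (0, 1):
                nxt, out = step(s, u)
                metric = pm[s] + sum(x != y for x, y in zip(out, r))
                if metric < new_pm[nxt]:
                    new_pm[nxt], new_paths[nxt] = metric, paths[s] + [u]
        pm, paths = new_pm, new_paths
    end = (0, 0)  # the flush bits force the final state
    return paths[end][:-2], pm[end]


def flip(bits, positions):
    """Return a copy of bits with the given positions inverted."""
    return [b ^ 1 if i in positions else b for i, b in enumerate(bits)]


msg = [1, 0, 1, 1, 0]                              # hypothetical message
codeword = encode(msg)
print(viterbi_decode(codeword))                    # ([1, 0, 1, 1, 0], 0)
print(viterbi_decode(flip(codeword, {3})))         # ([1, 0, 1, 1, 0], 1)
print(viterbi_decode(flip(codeword, {1, 10})))     # ([1, 0, 1, 1, 0], 2)
print(viterbi_decode(flip(codeword, {4, 5, 6, 7})))  # burst: correction not guaranteed
```

With these taps, any two distinct codewords differ in at least five positions, so up to two bit errors are always corrected and the survivor's distance equals the number of errors. A burst of four contiguous errors exceeds that guarantee, which is why the last call may return a wrong message.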

4. Comparative analysis of convolutional codes with LDPC codes, BCH codes and Turbo codes

- High data rate: Satellite communication systems typically need to transmit high volumes of data over long distances. Convolutional codes are efficient error-correcting codes that can operate at high data rates. They can be implemented in hardware or software and enable reliable transmission of large amounts of data.

- Channel characteristics: Satellite communication channels often suffer from fading and interference, which can cause errors and degradation of the transmitted signal. Convolutional codes and the Viterbi algorithm are designed to deal with such channel impairments. Their strength lies in the decoding process, which compares the received signal with the signal expected for each candidate state transition. The Viterbi algorithm can therefore estimate the most likely transmitted sequence from a received signal in spite of channel distortions.

- Low computational complexity: Satellite communication systems often have limited computational resources due to power and size constraints. Convolutional codes and the Viterbi algorithm are computationally efficient and require relatively few resources, making them suitable for use in satellite communication systems.

- Real-time processing: Satellite signals must be processed in real time to provide real-time communication services. The decoding algorithm therefore needs to be fast and efficient, which is another reason why the Viterbi algorithm is often used. It has been proven to decode signals in real time, making it a good fit for satellite communication systems.

- Compatibility with modulation schemes: Convolutional codes can be used with a variety of modulation schemes, including phase-shift keying (PSK) and quadrature amplitude modulation (QAM). This makes them a versatile choice for satellite communication systems, where different modulation schemes may be used depending on the specific requirements of the system.

4.1. Convolutional codes vs. LDPC codes

4.2. Convolutional codes vs. BCH codes

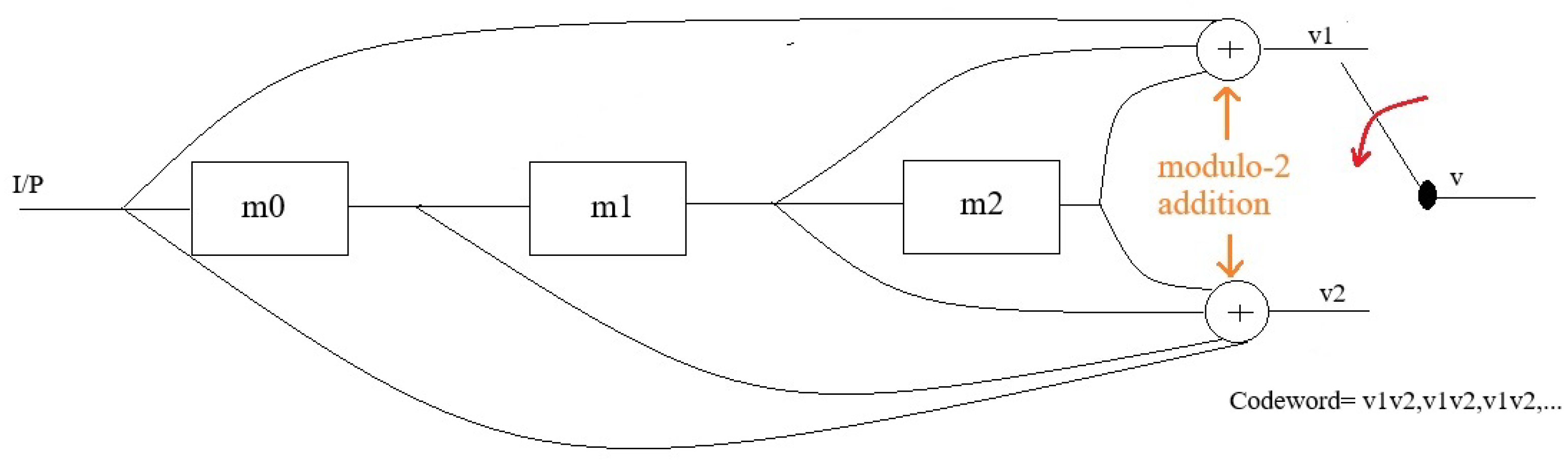

- Set all memory registers to zero.

- Feed the input bits one by one and shift the register values to the right.

- Calculate the output bits using the generator polynomials g1 = (1,1,1) and g2 = (1,0,1).

- Append two zero bits at the end of the message to flush the encoder.
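The four encoding steps above can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the function name `conv_encode` and the example message are hypothetical, and the leftmost tap of each generator is assumed to apply to the newest input bit.

```python
def conv_encode(message, generators=((1, 1, 1), (1, 0, 1)), flush_bits=2):
    """Rate-1/2 convolutional encoder built from a three-cell shift register."""
    registers = [0, 0, 0]                       # step 1: all registers start at zero
    encoded = []
    # Step 4: the appended zeros flush the encoder back to the all-zero state.
    for bit in list(message) + [0] * flush_bits:
        # Step 2: shift right; the new input bit enters on the left.
        registers = [bit] + registers[:-1]
        # Step 3: each generator XORs (sums modulo 2) the cells it taps.
        for taps in generators:
            encoded.append(sum(t & r for t, r in zip(taps, registers)) % 2)
    return encoded


# Hypothetical four-bit message; the output has 2 * (4 + 2) = 12 bits.
print(conv_encode([1, 0, 1, 1]))
# [1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1]
```

Each input bit produces two output bits, one per generator, so a message of n bits yields 2(n + 2) encoded bits once the flush bits are included.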

4.3. Convolutional codes vs. Turbo codes

- Block codes require larger block sizes to achieve the same level of error protection as convolutional codes. The larger block size means more bits need to be processed, which can slow down the system.

- Block codes require more complex encoding and decoding algorithms than convolutional codes, which can also slow down the system. Convolutional codes use a shift register and a few XOR gates to generate the parity bits, which is a simpler process than the matrix multiplication required for block codes.

- Error detection and correction are more efficient in convolutional codes than in block codes. Convolutional codes can detect and correct errors in real time, while block codes require the entire block to be received before errors can be corrected.

- More memory to store the parity check matrix used for decoding;

- Significant processing power, which can be problematic for low-power and low-cost GPS devices that are commonly used in commercial applications;

- Slow encoding and decoding operation.

5. Conclusions

Acknowledgments

Abbreviations

| Abbreviation | Definition |
|---|---|
| LDPC codes | Low-Density Parity Check (LDPC) codes |
| BCH codes | The Bose, Chaudhuri, and Hocquenghem (BCH) codes |
| EUMETSAT | European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT) |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).