2.1. Natural gas forecasting research

Forecasting methodologies for natural gas consumption are frequently developed for individual countries. Important country-specific studies include [10] (decomposition method), [11] (neural networks, Belgium), [12] (Spain, stochastic diffusion models), [13] (Poland, logistic model), [14] (Turkey, machine learning, neural networks), [15] (Turkey, grey models), [16] (Argentina, aggregation of short- and long-run models), and [17] (China, grey model). However, these models are usually country-specific, which makes them difficult to apply to other countries.

Short-term natural gas consumption is the main area of research in forecasting. Short-term consumption forecasts traditionally use time series models such as the ETS family [18] or ARIMA/SARIMA [19]. The use of BATS/TBATS models is a relatively new approach [20,21]. Traditional time series models are often replaced by artificial neural networks, including deep neural networks and Long Short-Term Memory (LSTM) networks [22,23,24]. Long-term forecasting is the subject of only about 20% of studies [25,26,27,28]. Usually, long-term forecasts are constructed using models that depend on macroeconomic variables (GDP, population, unemployment rate, etc.), without distinguishing between regions or between types of gas consumption (private versus industrial), a distinction that is the core of our analysis in this paper. Exceptions are the forecasts for Iran [29] (logistic regression and genetic algorithms) and Argentina [16] (logistic models, computer simulation and optimization models), [30] (regression, elasticity coefficients). Medium-term forecasting of natural gas consumption, in turn, incorporates mainly economic and temperature variables [31].

Traditionally, econometric and statistical models have been used most frequently in forecasting. The most popular groups are econometric models (e.g. [32,33]) and statistical models [10,34]. Recent research has focused on artificial intelligence methods [11,35,36,37]. One of the latest studies [26] compares the accuracy of more than 400 energy demand forecasting models. Its authors found that statistical methods work better in short- and medium-term models, while in the long term, models based on computational intelligence are more appropriate. One reason is that computational intelligence methods cope better with poorly cleaned data. Typical data sets used in gas demand forecasts are gas consumption profiles, macro- and microeconomic data (e.g., on households) and climatic data [38].

Forecasting natural gas consumption in households (also called domestic or residential consumption) has been the subject of few scientific studies. For example, Szoplik [39] used neural networks to construct medium-term forecasts for households located in a single urban area. Bartels et al. [38] used gas consumption profiles of households and their economic characteristics, macro- and microeconomic data including regional differences in natural gas consumption, and climatic data. Cieślik et al. [40] studied the impact of infrastructural development (electrical network, water supply, sewage system) on natural gas consumption in Polish counties. Sakas et al. [41] proposed a methodology for modeling energy consumption, including natural gas, in the residential sector in the medium term, taking into account economic, weather and demographic data.

A common practice is for distribution companies to forecast gas demand for business purposes. Examples of such forecasts are the studies on gas demand for eastern and south-eastern Australia [42]. Similar forecasts are made for Polska Spółka Gazownictwa, the main Polish distribution company. Commercial forecasts consider detailed data on existing customers and their past gas consumption. The remaining challenge is to account for future changes in the number of recipients and in their gas consumption characteristics.

2.2. Hierarchical forecasting of short time series

A time series is typically represented as a sequence of observations, given by $y_1, y_2, \dots, y_T$. When forecasting a time series, the goal is to estimate the future values $y_{T+1}, \dots, y_{T+h}$, where $h$ represents the forecasting horizon. The forecast is typically denoted as $\hat{y}_{T+h}$. There are two primary groups of forecasting methods. Univariate methods predict future observations of a time series based solely on its past data. In contrast, multivariate methods expand on univariate techniques by including additional time series as explanatory variables.

In the literature, univariate methods are generally classified into three main groups. The first group comprises simple forecasting methods that are often used as benchmarks. The most common example is the naive method, which predicts future values of the time series based on the most recent observation:

$$\hat{y}_{T+h} = y_T.$$

Other methods in this group are the mean, seasonal naive, and drift methods.
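The four benchmark methods can be sketched in a few lines. The snippet below is only an illustration under our own assumptions: the function names, the toy series `y` and the season length `m` are hypothetical and not taken from the study.

```python
import numpy as np

def naive_forecast(y, h):
    """Repeat the most recent observation for all h future steps."""
    y = np.asarray(y, dtype=float)
    return np.full(h, y[-1])

def mean_forecast(y, h):
    """Use the historical mean for all h future steps."""
    y = np.asarray(y, dtype=float)
    return np.full(h, y.mean())

def seasonal_naive_forecast(y, h, m):
    """Repeat the last observed season of length m."""
    y = np.asarray(y, dtype=float)
    last_season = y[-m:]
    return np.array([last_season[i % m] for i in range(h)])

def drift_forecast(y, h):
    """Extend the straight line through the first and last observations."""
    y = np.asarray(y, dtype=float)
    slope = (y[-1] - y[0]) / (len(y) - 1)
    return y[-1] + slope * np.arange(1, h + 1)

# Hypothetical toy series with a season of length 4
y = [10.0, 12.0, 11.0, 13.0, 12.0, 14.0, 13.0, 15.0]
print(naive_forecast(y, 3))
print(mean_forecast(y, 3))
print(seasonal_naive_forecast(y, 6, m=4))
print(drift_forecast(y, 2))
```

Because none of these methods estimates more than one or two quantities, they remain usable even for very short series.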

The statistical group encompasses classical techniques such as the well-known ARIMA and ETS families of methods. The ARIMA model, which stands for autoregressive integrated moving average, represents a univariate time series using both autoregressive (AR) and moving average (MA) components. The AR(p) component predicts future observations as a linear combination of the past $p$ observations using the equation:

$$y_t = c + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \dots + \phi_p y_{t-p} + \varepsilon_t,$$

where $c$ is a constant, $\phi_1, \dots, \phi_p$ represent the model's parameters and $\varepsilon_t$ is white noise.

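The MA(q) component, on the other hand, models the time series using past errors. It can be represented as:

$$y_t = \mu + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \dots + \theta_q \varepsilon_{t-q},$$

where $\mu$ denotes the mean of the observations and $\theta_1, \dots, \theta_q$ are the model's parameters.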
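To make the autoregressive component concrete, the following sketch estimates an AR(p) model by ordinary least squares and forecasts recursively. It is a simplified illustration, not the estimation procedure used in the paper: full ARIMA software typically relies on maximum likelihood, and the helper names `fit_ar` and `forecast_ar` are hypothetical.

```python
import numpy as np

def fit_ar(y, p):
    """Estimate the constant c and the coefficients phi_1..phi_p by least squares."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    # Each row holds [1, y_{t-1}, ..., y_{t-p}] for t = p, ..., n-1
    X = np.column_stack([np.ones(n - p)] + [y[p - k:n - k] for k in range(1, p + 1)])
    target = y[p:]
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return coef[0], coef[1:]

def forecast_ar(y, c, phi, h):
    """Forecast h steps ahead, feeding each forecast back in as a lagged value."""
    history = list(np.asarray(y, dtype=float))
    p = len(phi)
    forecasts = []
    for _ in range(h):
        lags = history[-1:-p - 1:-1]          # y_{t-1}, ..., y_{t-p}
        y_next = c + float(np.dot(phi, lags))
        forecasts.append(y_next)
        history.append(y_next)
    return np.array(forecasts)

# Noise-free toy series generated by y_t = 2 + 0.5 * y_{t-1}
y = [0.0]
for _ in range(11):
    y.append(2.0 + 0.5 * y[-1])

c, phi = fit_ar(y, p=1)
forecast = forecast_ar(y, c, phi, h=3)
print(c, phi, forecast)
```

On this noise-free series the least-squares fit recovers the generating constant and coefficient up to rounding error; with real data the estimates would of course be noisy.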

Selecting parameters manually for ARIMA models can be challenging, particularly when forecasting numerous time series simultaneously. The auto-ARIMA method addresses this by automatically testing multiple parameter combinations and selecting the model with the lowest AIC (Akaike Information Criterion).
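The idea of AIC-based selection can be illustrated with a deliberately reduced search. The sketch below considers only pure AR(p) models fitted by least squares and uses the Gaussian approximation AIC = n log(RSS/n) + 2k; it is not the auto-ARIMA algorithm itself, which also searches over differencing and moving-average orders. The function names and the simulated series are our own assumptions.

```python
import numpy as np

def ar_aic(y, p, p_max):
    """AIC of a least-squares AR(p) fit, evaluated on a common sample for all p."""
    y = np.asarray(y, dtype=float)
    target = y[p_max:]                        # same targets for every candidate order
    cols = [np.ones(len(target))]
    cols += [y[p_max - k:len(y) - k] for k in range(1, p + 1)]
    X = np.column_stack(cols)
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    rss = float(np.sum((target - X @ coef) ** 2))
    n = len(target)
    k = p + 2                                 # constant, p coefficients, error variance
    return n * np.log(rss / n) + 2 * k

def select_order(y, p_max):
    """Return the order with the lowest AIC and the AIC of every candidate."""
    scores = {p: ar_aic(y, p, p_max) for p in range(p_max + 1)}
    return min(scores, key=scores.get), scores

# Simulated AR(1) series (hypothetical data)
rng = np.random.default_rng(0)
y = np.zeros(200)
for t in range(1, 200):
    y[t] = 1.0 + 0.8 * y[t - 1] + rng.normal()

best_p, scores = select_order(y, p_max=4)
print(best_p, scores)
```

Evaluating every candidate on the same targets keeps the AIC values comparable, which matters when the series is short and each lost observation is costly.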

The ETS family comprises exponential smoothing techniques that use weighted averages of past values, with the weights decreasing exponentially for older data points. Each component of ETS (Error, Trend and Seasonality) is modelled by one recursive equation. The auto-ETS procedure, analogous to auto-ARIMA, automates the process of identifying the best-fitting ETS model for a given time series [43].
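The exponentially decreasing weights are easiest to see in simple exponential smoothing, the most basic member of the family (no trend, no seasonality). The sketch below is illustrative only: the smoothing parameter `alpha` is fixed by hand rather than estimated, and initialising the level with the first observation is our own simplifying assumption.

```python
import numpy as np

def ses_forecast(y, alpha):
    """One-step-ahead forecast from simple exponential smoothing."""
    y = np.asarray(y, dtype=float)
    level = y[0]                      # assumption: start the level at the first value
    for obs in y[1:]:
        # Recursive level equation: blend the new observation with the old level
        level = alpha * obs + (1 - alpha) * level
    return level

def ses_weights(alpha, n):
    """Weights given to the n most recent observations, newest first."""
    return alpha * (1 - alpha) ** np.arange(n)

y = [10.0, 12.0, 11.0, 13.0]
print(ses_forecast(y, alpha=0.5))
print(ses_weights(0.5, 3))
```

Each step back in time multiplies the weight by (1 - alpha), so recent observations dominate the forecast.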

An important aspect of statistical forecasting is stationarity: a time series is stationary when its statistical properties, such as mean and variance, remain constant over time. Many real-world processes are not stationary. While models like ETS do not require stationarity, others, like ARIMA, use differencing transformations to achieve it.

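The third group consists of Machine Learning (ML) models. In this approach, time series forecasting corresponds to the task of autoregressive modeling, AR(p). This necessitates transforming the time series into a dataset format. Let $\mathcal{D} = \{(\mathbf{x}_i, y_i)\}_{i=p+1}^{T}$ constitute a set of observations referred to as the training set. The transformation between the time series and the training set is modelled by the equation:

$$\mathbf{x}_i = (y_{i-1}, y_{i-2}, \dots, y_{i-p}), \qquad i = p+1, \dots, T,$$

with $y_i$ as the corresponding target value. The vector $\mathbf{x}_i$ is called the feature vector.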
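The transformation of a series into a training set can be sketched as a sliding window. In the snippet below, the function name `embed` and the ordering of the lags (most recent first) are our own assumptions; any fixed ordering works as long as it is used consistently for training and prediction.

```python
import numpy as np

def embed(y, p):
    """Turn a series into feature vectors of p lagged values and their targets."""
    y = np.asarray(y, dtype=float)
    # Feature vector for target y[i] is (y[i-1], y[i-2], ..., y[i-p])
    X = np.array([y[i - p:i][::-1] for i in range(p, len(y))])
    target = y[p:]
    return X, target

X, target = embed([1, 2, 3, 4, 5, 6], p=3)
print(X)
print(target)
```

A series of length T yields only T - p training examples, which is one reason why short series leave little room for flexible ML models.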

In time series forecasting, ML models learn relationships between the input features and an output variable. In general, this formulation turns forecasting into a multiple regression problem.

Regardless of the chosen approach, time series forecasting is strongly influenced by the number of available observations. Gas consumption data for households in Poland constitute a short time series due to the limited historical data available. Forecasting with this kind of time series can be difficult. As noted by Hyndman [9], two rules should be met when using a short time series for forecasting: first, the number of parameters in the model should be smaller than the number of observations; second, forecast intervals should be limited. The literature on short time series forecasting is limited. The comprehensive article "Forecasting: Theory and Practice" [44] does not mention short time series. The literature suggests [45,46] that uncomplicated models such as ARIMA, ETS, or regression perform exceptionally well with short time series.

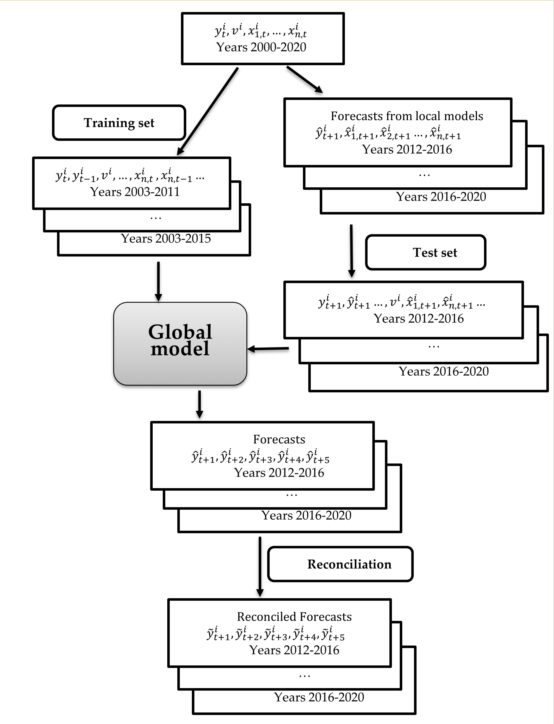

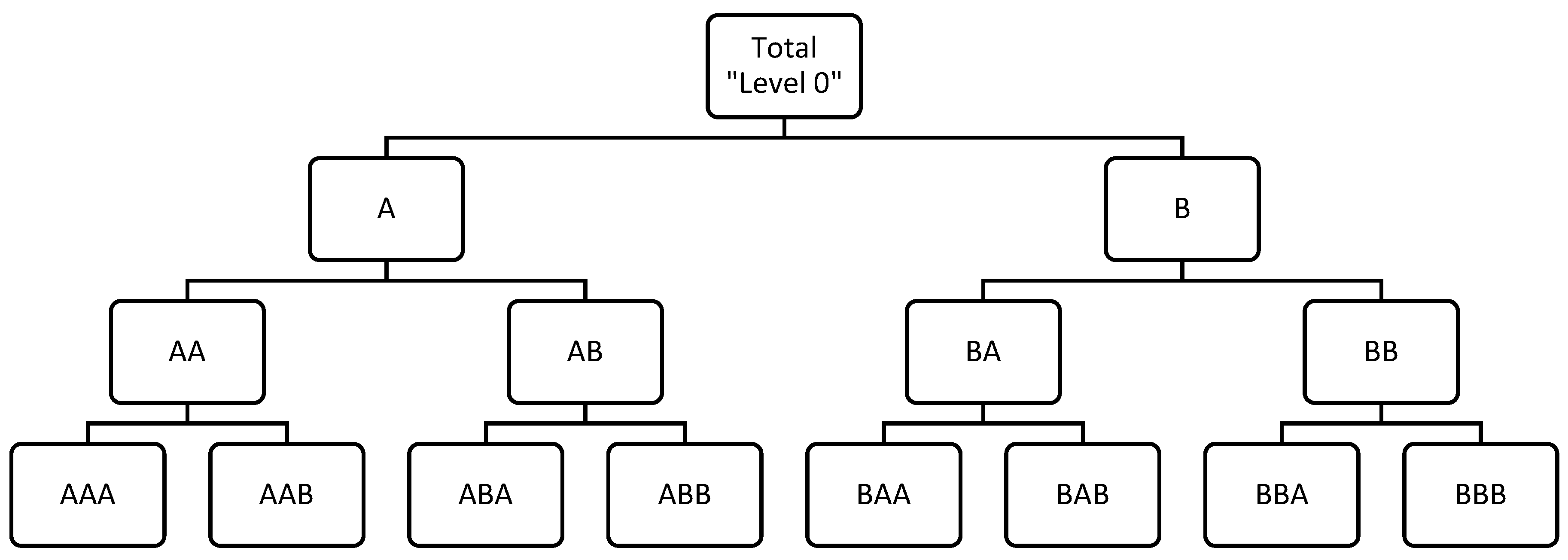

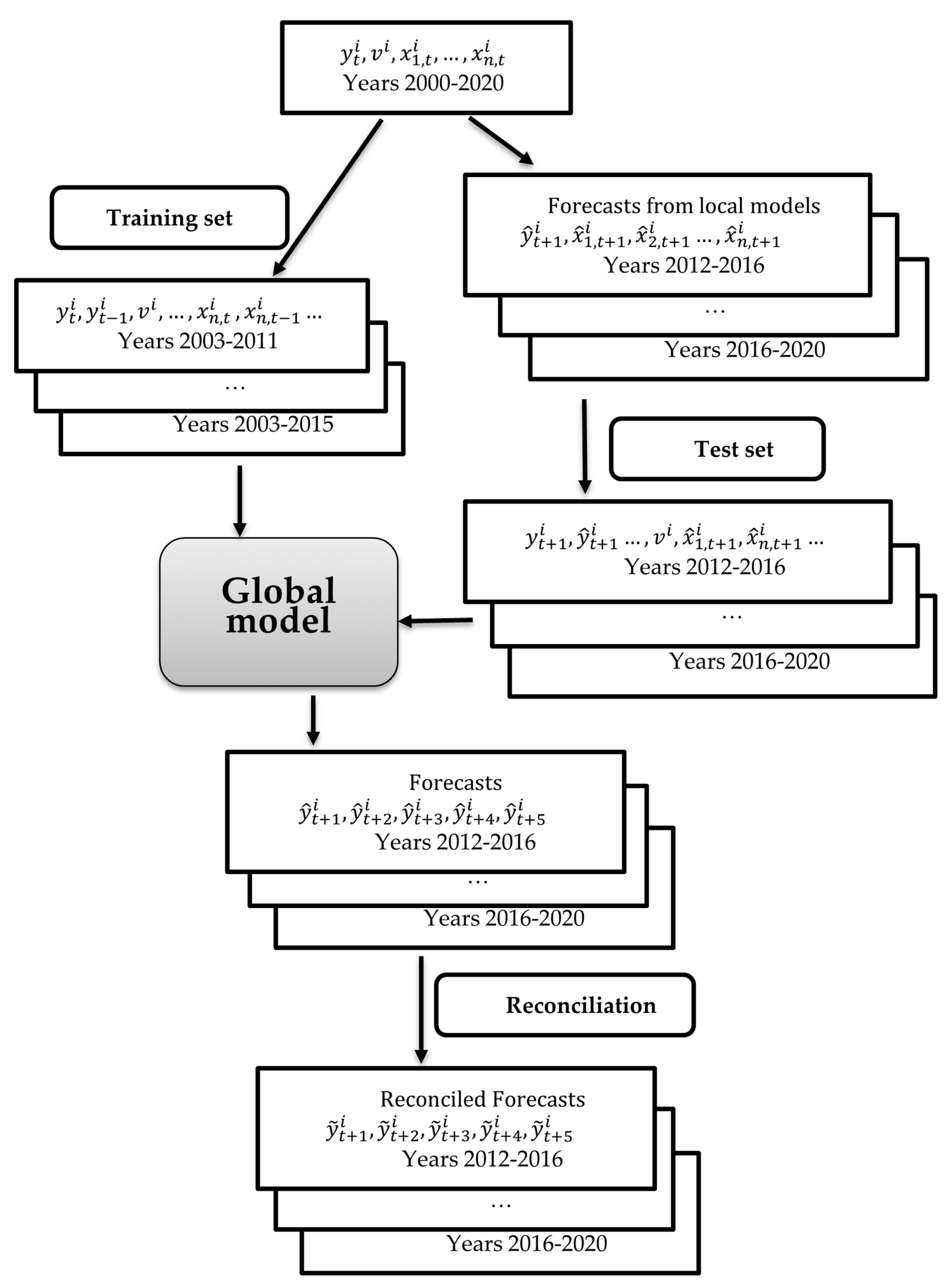

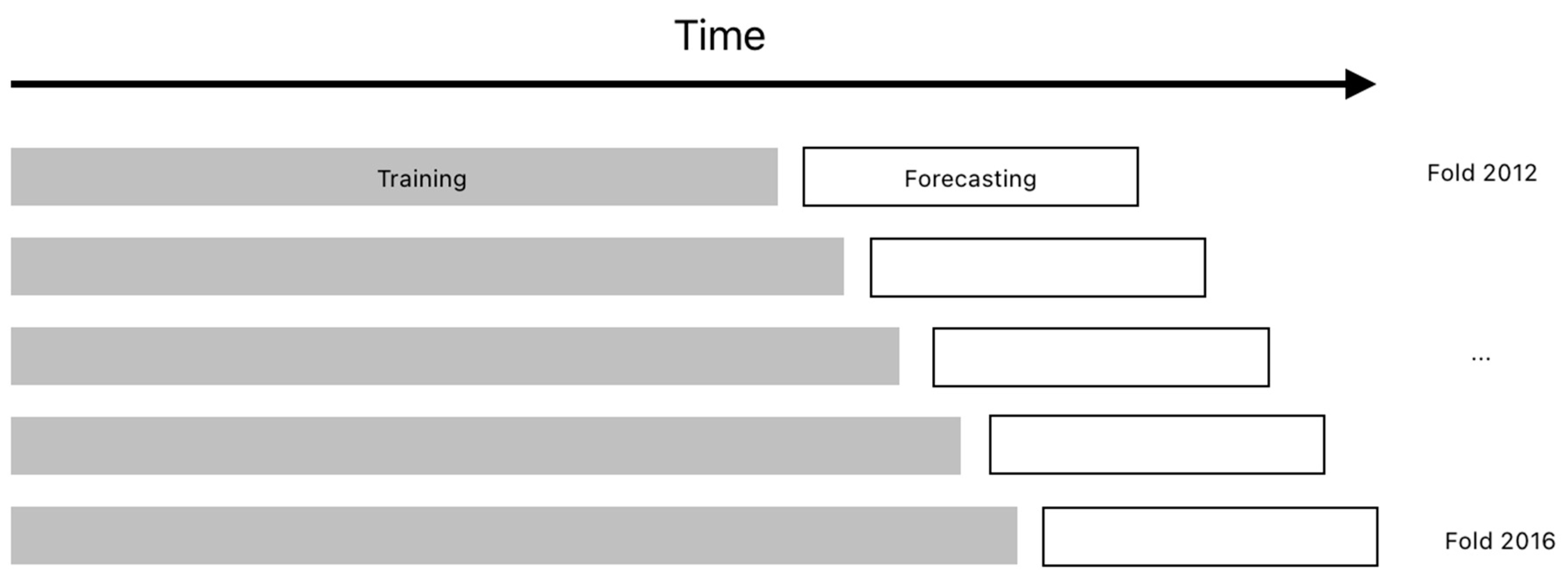

Time series often follow a structured system called hierarchical time series (HTS), in which each individual time series is arranged into a hierarchy based on dimensions such as geographical territory or product category (Figure 2). Many studies, such as [47,48,49,50], discuss forecasting HTS. In HTS, level 0 consists of the completely aggregated main time series. Levels 1 to $k-2$ break down this main series by features. Level $k-1$ contains the disaggregated time series. The hierarchy vector of time series can be described as follows:

$$\mathbf{y}_t = \left[\, y_t,\ \dots,\ \mathbf{b}_t^{\top} \,\right]^{\top},$$

where $y_t$ is an observation of the highest level, the dots stand for the series of the intermediate levels, and $\mathbf{b}_t$ is the vector of observations at the bottom level. The summing matrix $\mathbf{S}$ defines the structure of the hierarchy:

$$\mathbf{y}_t = \mathbf{S}\,\mathbf{b}_t.$$
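As a small illustration of how a summing matrix encodes a hierarchy, consider a hypothetical three-level structure: a total, two regions, and two bottom-level series per region. The region names and the values below are invented for the example.

```python
import numpy as np

# Columns correspond to the bottom-level series A1, A2, B1, B2
S = np.array([
    [1, 1, 1, 1],  # level 0: total
    [1, 1, 0, 0],  # level 1: region A = A1 + A2
    [0, 0, 1, 1],  # level 1: region B = B1 + B2
    [1, 0, 0, 0],  # level 2: A1
    [0, 1, 0, 0],  # level 2: A2
    [0, 0, 1, 0],  # level 2: B1
    [0, 0, 0, 1],  # level 2: B2
], dtype=float)

b_t = np.array([5.0, 3.0, 4.0, 8.0])  # bottom-level observations at time t
y_t = S @ b_t                         # observations of every series in the hierarchy
print(y_t)
```

Each row of the matrix states which bottom-level series are added up to obtain the corresponding node, so the lower block of the matrix is always an identity matrix.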

When forecasting with HTS, it is important to obtain consistent results at every level. First, each series is forecast on its own, creating base forecasts. Then, these forecasts are reconciled to be coherent [51]. Traditionally, there are four strategies for reconciling forecasts: top-down, bottom-up, middle-out and min-T. The top-down strategy begins by forecasting at the highest level of the hierarchy and then breaks down the forecasts for lower-level series using weighting systems. This disaggregation is done using the proportion vector $\mathbf{p} = (p_1, \dots, p_m)^{\top}$, which represents the contribution of each of the $m$ bottom-level time series to the total. With $y_{j,t}$ denoting the observation of bottom-level series $j$ at time $t$, typical ways to calculate $\mathbf{p}$ are:

averages of historical proportions

$$p_j = \frac{1}{T} \sum_{t=1}^{T} \frac{y_{j,t}}{y_t},$$

proportions of the historical averages

$$p_j = \frac{\sum_{t=1}^{T} y_{j,t} / T}{\sum_{t=1}^{T} y_t / T},$$

or proportions of forecasts

$$p_j = \prod_{\ell=0}^{k-2} \frac{\hat{y}_{j,h}^{(\ell)}}{\hat{S}_{j,h}^{(\ell+1)}},$$

where $\hat{y}_{j,h}^{(\ell)}$ is the base forecast of the series that corresponds to the node which is $\ell$ levels above node $j$, and $\hat{S}_{j,h}^{(\ell)}$ is the sum of the base forecasts below the series that is $\ell$ levels above node $j$.
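The three ways of computing the proportions can be compared on a toy two-level hierarchy (one total, two bottom-level series). All numbers below are hypothetical. For a two-level hierarchy, the forecast-proportion formula reduces to each bottom-level base forecast divided by the sum of the bottom-level base forecasts, which is the only case sketched here.

```python
import numpy as np

# Rows are time points, columns are the two bottom-level series
bottom = np.array([[1.0, 3.0],
                   [6.0, 2.0],
                   [2.0, 2.0]])
total = bottom.sum(axis=1)                       # top-level series

# Averages of historical proportions: mean over time of y_{j,t} / y_t
p_avg_prop = (bottom / total[:, None]).mean(axis=0)

# Proportions of the historical averages: mean of y_{j,t} over mean of y_t
p_prop_avg = bottom.mean(axis=0) / total.mean()

# Proportions of forecasts (two-level case only)
base_bottom_forecasts = np.array([3.0, 1.0])     # hypothetical base forecasts
p_forecast = base_bottom_forecasts / base_bottom_forecasts.sum()

print(p_avg_prop, p_prop_avg, p_forecast)
```

The first two variants differ whenever the total changes over time, because the second one gives more weight to periods with high overall consumption.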

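Let $\tilde{\mathbf{y}}_h$ be the vector of reconciled forecasts. The top-down approach can be expressed as

$$\tilde{\mathbf{y}}_h = \mathbf{S}\,\mathbf{p}\,\hat{y}_h,$$

where $\hat{y}_h$ is the base forecast of the top-level series. Similarly, the bottom-up approach produces forecasts at the bottom level $k-1$ and then aggregates them to the upper levels:

$$\tilde{\mathbf{y}}_h = \mathbf{S}\,\hat{\mathbf{b}}_h.$$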
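Both strategies amount to a single matrix product with the summing matrix. The sketch below uses a hypothetical hierarchy with one total and three bottom-level series; the proportions and base forecasts are invented for illustration.

```python
import numpy as np

S = np.array([[1, 1, 1],     # total
              [1, 0, 0],     # bottom series 1
              [0, 1, 0],     # bottom series 2
              [0, 0, 1]],    # bottom series 3
             dtype=float)

p = np.array([0.5, 0.3, 0.2])          # proportion vector (sums to 1)
y_hat_total = 100.0                    # base forecast of the top-level series
b_hat = np.array([45.0, 35.0, 25.0])   # base forecasts of the bottom-level series

# Top-down: split the top-level forecast, then aggregate back up
y_top_down = S @ (p * y_hat_total)

# Bottom-up: aggregate the bottom-level forecasts
y_bottom_up = S @ b_hat

print(y_top_down)
print(y_bottom_up)
```

In both cases the first element equals the sum of the remaining ones, so the reconciled forecasts are coherent by construction; the two strategies differ only in which base forecasts they trust.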

Another approach, known as the middle-out strategy, combines elements of both the top-down and bottom-up strategies. It estimates models at an intermediate level of the hierarchy, where predictions are most reliable. Forecasts for the levels above it are obtained using a bottom-up approach, while forecasts for the levels below it are calculated using a top-down approach. One of the most promising reconciliation methods is min-T [52]. In this approach, the total joint variance of all the coherent forecasts is minimized. The approach described here is usually called local.
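Trace-minimising reconciliation is usually written in closed form as $\tilde{\mathbf{y}}_h = \mathbf{S}(\mathbf{S}^{\top}\mathbf{W}^{-1}\mathbf{S})^{-1}\mathbf{S}^{\top}\mathbf{W}^{-1}\hat{\mathbf{y}}_h$, where $\mathbf{W}$ is the covariance matrix of the base forecast errors. The sketch below assumes this form and, as a simplification of ours, defaults to $\mathbf{W} = \mathbf{I}$ (ordinary least-squares reconciliation) because estimating $\mathbf{W}$ requires forecast residuals that are not available here. The function name `reconcile` and the numbers are hypothetical.

```python
import numpy as np

def reconcile(S, y_hat, W=None):
    """Project incoherent base forecasts onto the coherent subspace spanned by S."""
    if W is None:
        W = np.eye(S.shape[0])            # assumption: identity error covariance (OLS)
    W_inv = np.linalg.inv(W)
    # G maps all base forecasts to reconciled bottom-level forecasts
    G = np.linalg.solve(S.T @ W_inv @ S, S.T @ W_inv)
    return S @ G @ y_hat

S = np.array([[1, 1],      # total
              [1, 0],      # bottom series 1
              [0, 1]],     # bottom series 2
             dtype=float)

y_hat = np.array([100.0, 40.0, 50.0])     # incoherent: 40 + 50 != 100
y_tilde = reconcile(S, y_hat)
print(y_tilde)
```

Unlike top-down or bottom-up, this adjustment uses the base forecasts from every level: here the gap of 10 units is shared between the total and the two bottom-level series.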

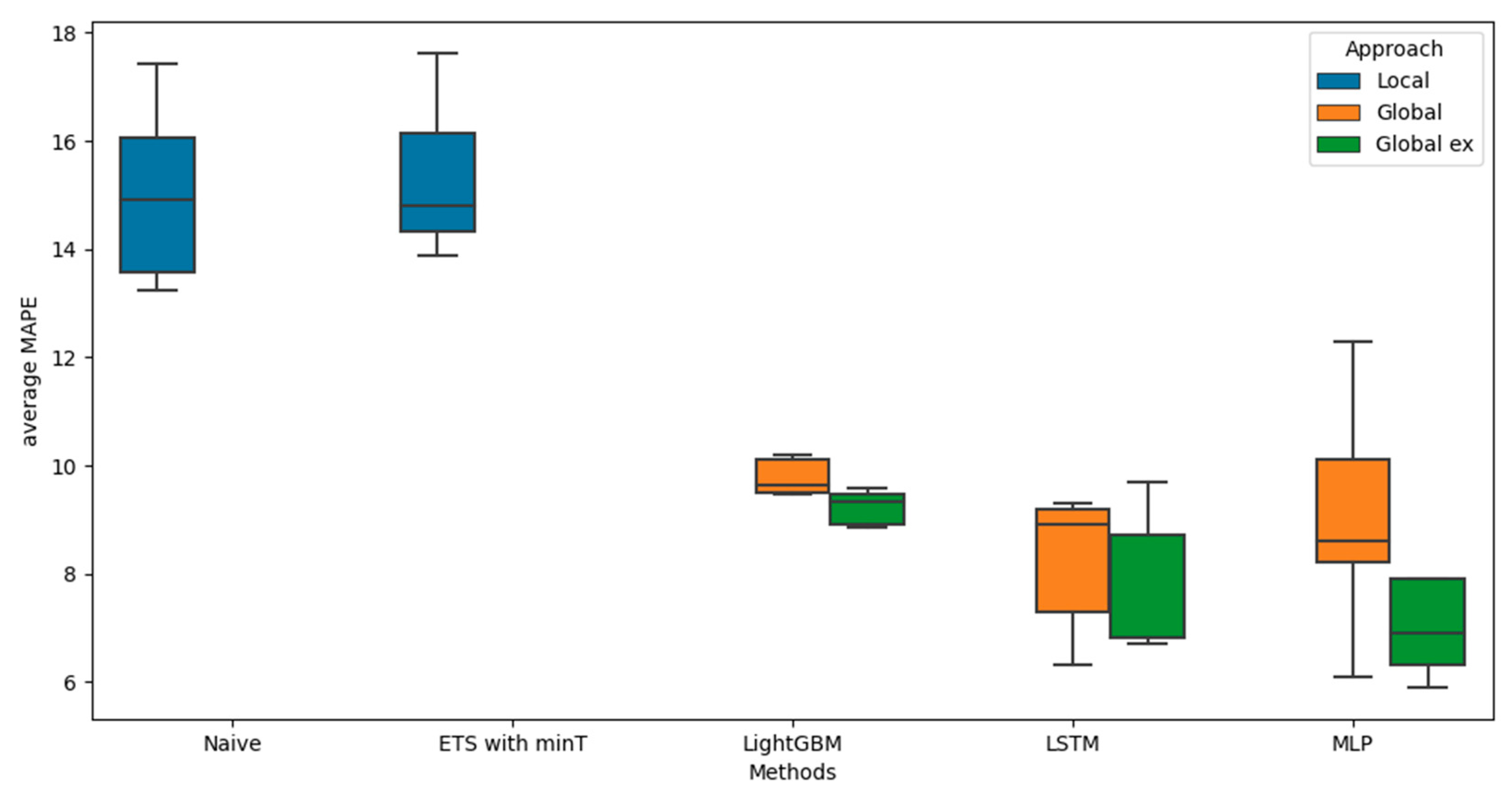

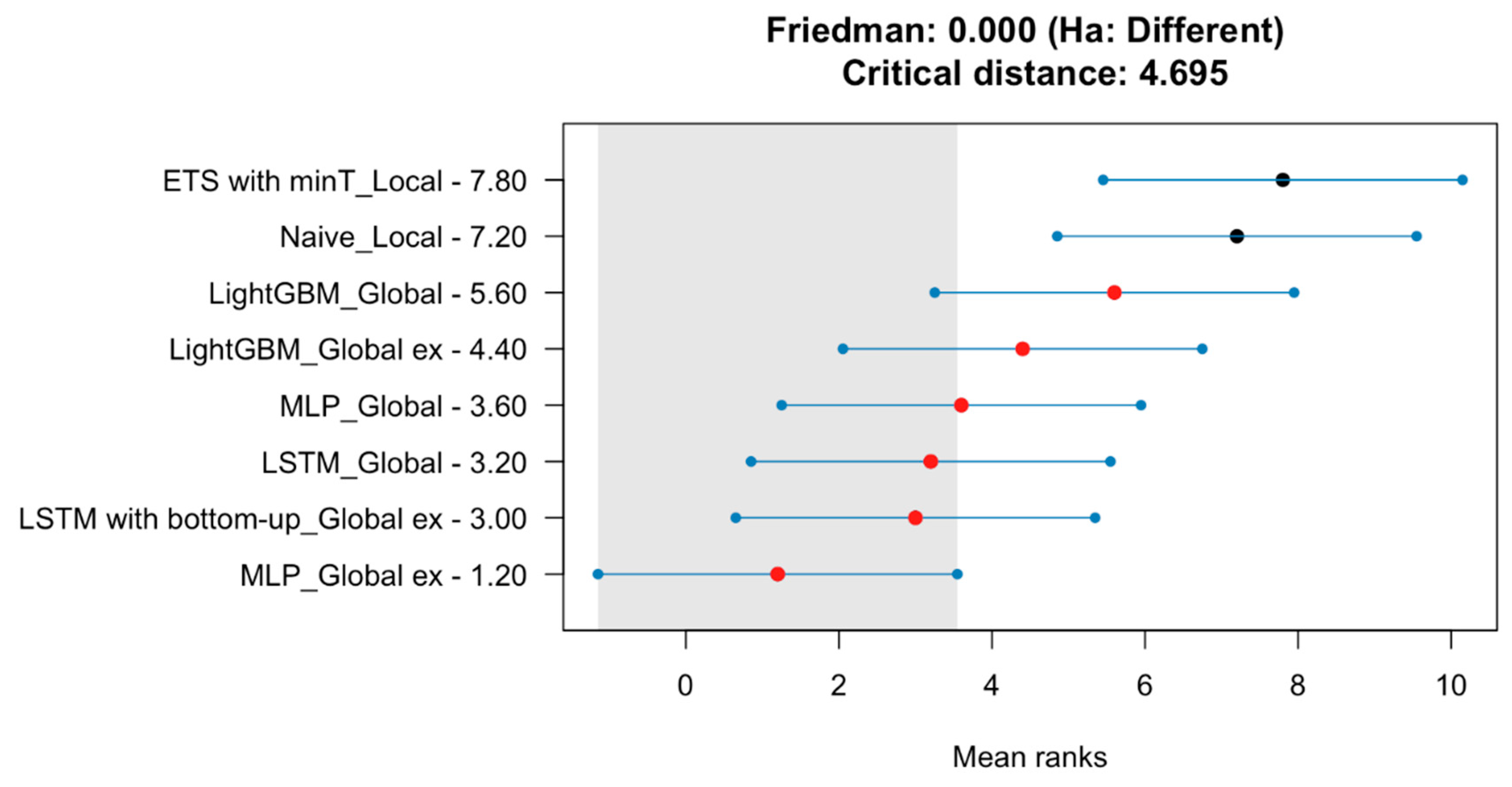

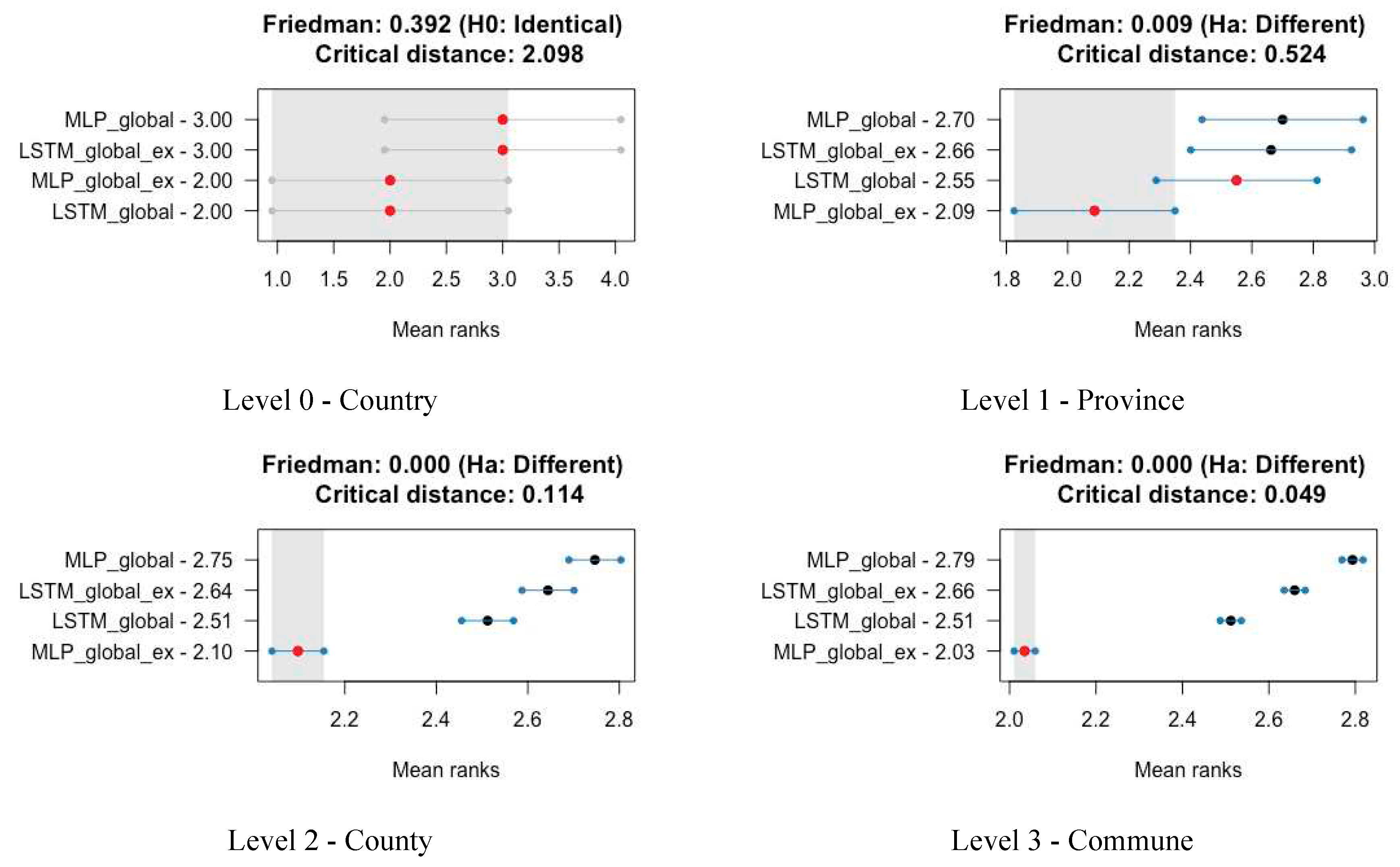

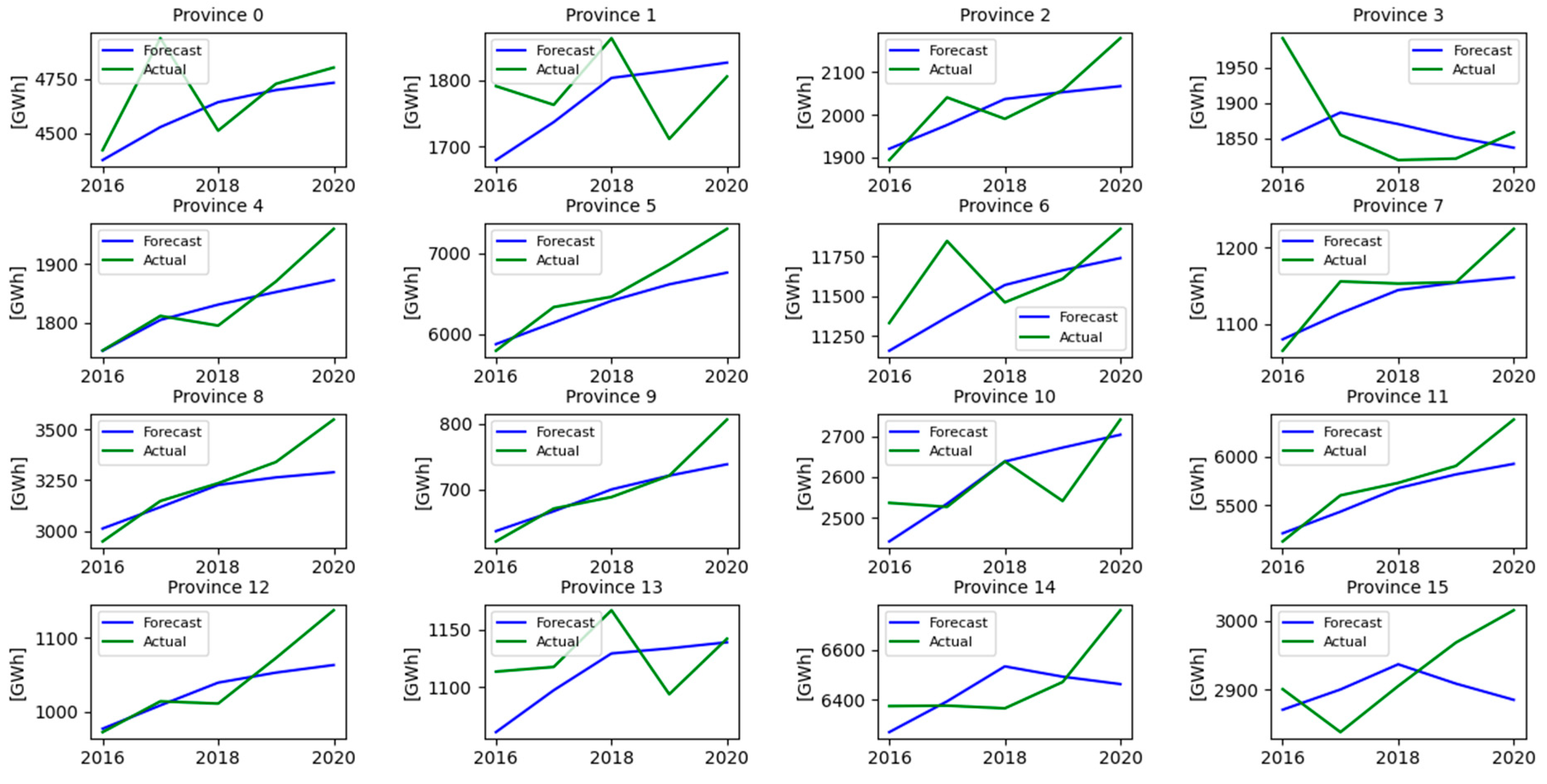

The local approach has two drawbacks [5]. First, because of the limited size of a single time series, the individual forecasting model fitted to each series can become too specialized and suffer from overfitting. Second, temporal dependencies may not be adequately modelled by the algorithms. To avoid these problems, analysts have to supply their knowledge to the algorithms manually, which is time-consuming and makes the models less accurate and harder to scale up, as each time series needs human supervision. There is another way to approach this problem, known as the global [53] or cross-learning [54] approach. In the global approach, all the time series are treated as a single comprehensive group. This approach assumes that all the time series originate from objects that behave in a similar manner. Some researchers have found that the global approach can yield surprisingly effective results, even for sets of time series that do not seem to be related. The study in [55] demonstrates that even if this assumption does not hold, the methods still provide better performance than the local approach.

In the global approach, a single prediction model is created using all the available data, which helps mitigate overfitting due to the larger dataset for learning. However, this approach has its own limitations: it employs the same model for all time series, even when they exhibit differences, potentially lacking the flexibility of the local approach.
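The difference between the two approaches can be sketched with a linear autoregression fitted either per series (local) or on the pooled training examples of all series (global). The three short series, the lag order `p` and the helper names are hypothetical; a linear model stands in for whatever learner is actually used.

```python
import numpy as np

def embed(y, p):
    """Lagged feature vectors (most recent lag first) and their targets."""
    y = np.asarray(y, dtype=float)
    X = np.array([y[i - p:i][::-1] for i in range(p, len(y))])
    return X, y[p:]

def fit_linear(X, target):
    """Least-squares fit of target = c + X @ phi; returns [c, phi_1, ..., phi_p]."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, target, rcond=None)
    return coef

series = {
    "region_a": [10.0, 12.0, 11.0, 13.0, 12.0, 14.0],
    "region_b": [20.0, 23.0, 22.0, 25.0, 24.0, 27.0],
    "region_c": [5.0, 6.0, 5.5, 6.5, 6.0, 7.0],
}
p = 2

# Local approach: one model per series, each fitted on only len(y) - p examples
local_models = {name: fit_linear(*embed(y, p)) for name, y in series.items()}

# Global approach: one model fitted on the examples of all series together
pairs = [embed(y, p) for y in series.values()]
X_all = np.vstack([X for X, _ in pairs])
target_all = np.concatenate([t for _, t in pairs])
global_model = fit_linear(X_all, target_all)

print(X_all.shape, global_model)
```

Each local model here estimates three parameters from four examples, whereas the global model estimates the same three parameters from twelve, which illustrates why pooling reduces the risk of overfitting at the cost of forcing one set of coefficients on all series. In practice the series would usually be scaled before pooling; that step is omitted here.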