Submitted:

21 September 2023

Posted:

22 September 2023

Abstract

Keywords:

1. Introduction

- We extract a concise and consistent set of macro obfuscation features from the perspective of adversarial attack.

- We analyze the drawbacks of using either obfuscation features or suspicious keywords alone to detect malicious macros and, for the first time, combine the two feature types in machine learning models. Our approach has a very low false positive rate and is capable of detecting unseen malicious macros.

2. Background

2.1. Macro in Office documents

2.2. Obfuscation of VBA Macro

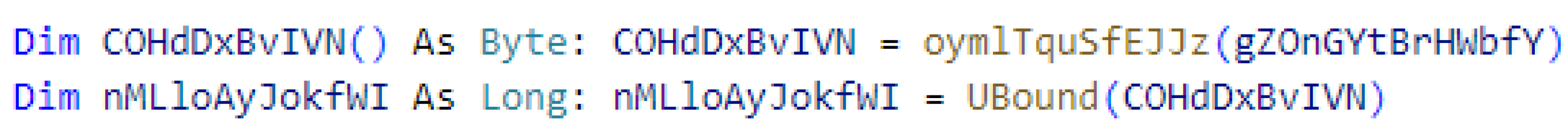

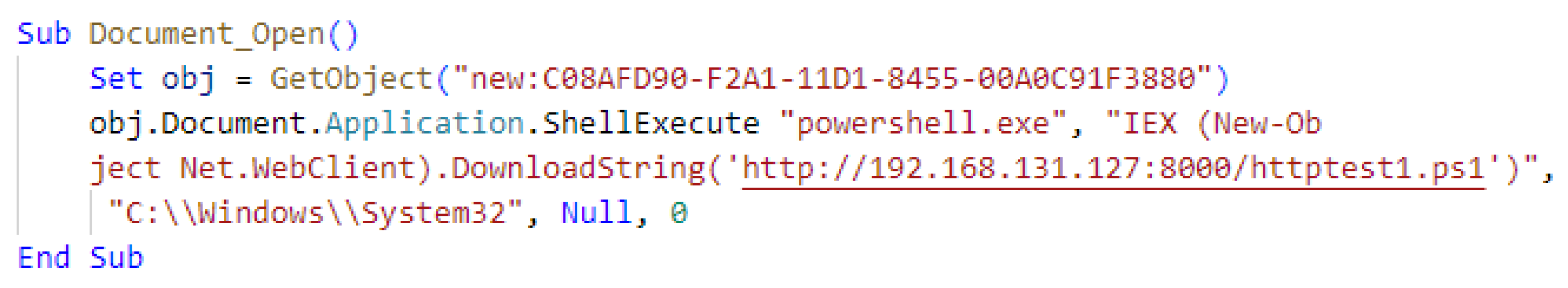

- Random Obfuscation: Replace the variable names or procedure names in a macro with randomly generated characters. Figure 3 provides an example of random obfuscation, in which the names of both variables and function parameters have been obfuscated.

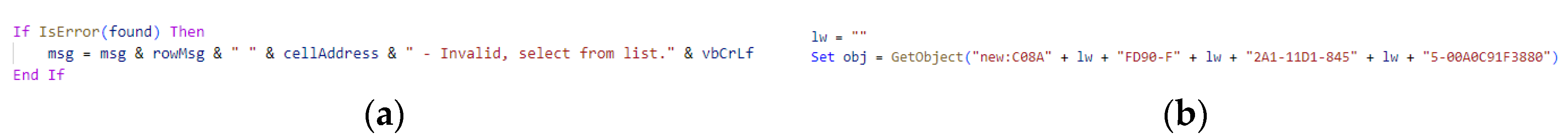

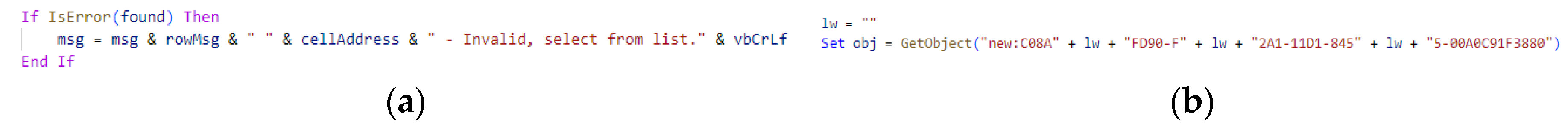

- Split Obfuscation: Long strings can be split into several short strings connected with “+” or “&”. This operation is also common in normal macros, where a long string is assembled from many substrings. Figure 4(a) demonstrates split obfuscation in a normal macro, while Figure 4(b) shows a snippet of a malicious macro that uses split obfuscation for the parameter of the function GetObject.

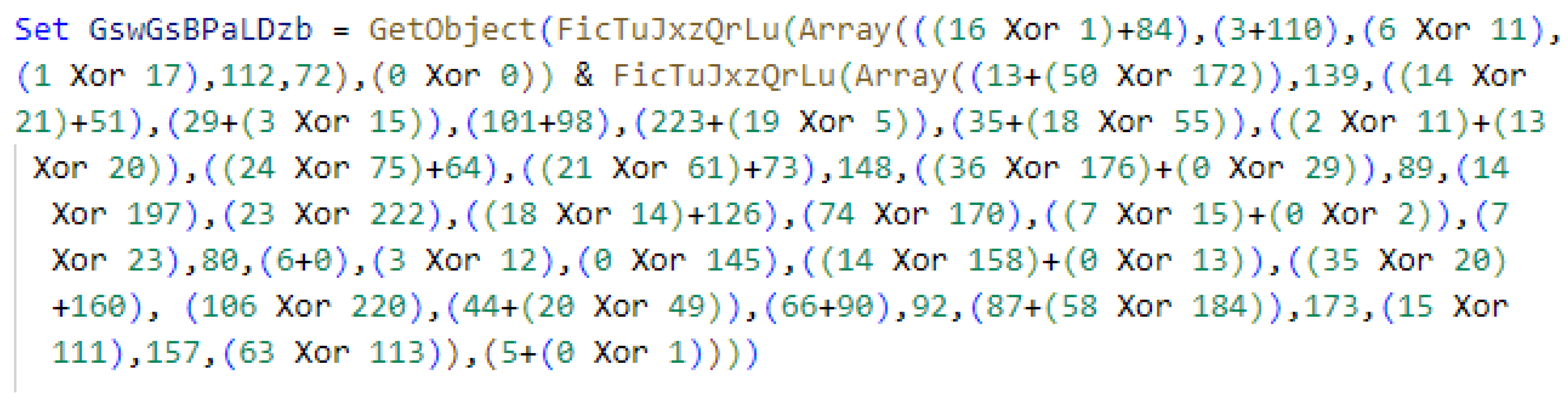
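As an illustration of how an analyst might undo split obfuscation before inspecting a string, the following minimal sketch folds adjacent string literals back together. It is not part of the paper's pipeline: `join_split_literals` is a hypothetical helper, and it assumes plain double-quoted literals without embedded quotes, joined by `+` or `&`.

```python
import re

# Two double-quoted literals joined by "+" or "&" (VBA's concatenation operators).
_ADJACENT = re.compile(r'"([^"]*)"\s*[+&]\s*"([^"]*)"')

def join_split_literals(line: str) -> str:
    """Repeatedly merge adjacent string literals until the line stops changing."""
    previous = None
    while previous != line:
        previous = line
        line = _ADJACENT.sub(lambda m: '"' + m.group(1) + m.group(2) + '"', line)
    return line

print(join_split_literals('x = "He" & "ll" + "o"'))  # x = "Hello"
```

Literals that are concatenated with variables or function results are left untouched, so this only recovers the simplest cases.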

- Encoding Obfuscation: Encoding obfuscation is similar to encryption and requires a decoding operation to restore the original string before use. For instance, a PowerShell command can be encoded as a byte array or a Base64 string, and PowerShell commands are frequent targets of encoding obfuscation. Figure 5 shows the parameter of the function GetObject obfuscated as a byte array and subsequently decoded with a self-defined decoding function, FicTuJxzQrLu.

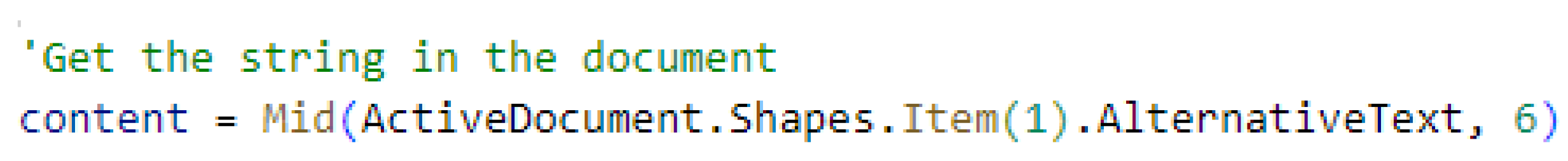
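To make the decoding step concrete, here is a small sketch of how a byte-array-encoded string could be recovered during analysis. It assumes the simplest possible scheme, in which the values inside `Array(...)` are plain decimal character codes; real samples such as the one in Figure 5 use their own decoding functions, which this does not reproduce. `decode_byte_array` is a hypothetical name.

```python
import re

def decode_byte_array(vba_expr: str) -> str:
    """Decode an expression like 'Array(72, 101, 108)' into the string it encodes."""
    match = re.search(r'Array\s*\(([^)]*)\)', vba_expr, flags=re.IGNORECASE)
    if match is None:
        raise ValueError("no Array(...) literal found")
    # Each comma-separated token is treated as a decimal character code.
    codes = [int(token) for token in match.group(1).split(",") if token.strip()]
    return "".join(chr(code) for code in codes)

print(decode_byte_array("Array(72, 101, 108, 108, 111)"))  # Hello
```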

- Embedding Obfuscation: Embedding obfuscation conceals the target string outside the macro code, in places such as document attributes, forms or controls, or even the content of Word or Excel documents. The macro can then load this content directly into a string variable. This obfuscation can be challenging to recognize, because processing document content is also a common operation in non-obfuscated macros. Figure 6 provides an example of embedding obfuscation, in which a PowerShell command is loaded from a hidden shape within the document. The shape is made invisible through a few simple settings.

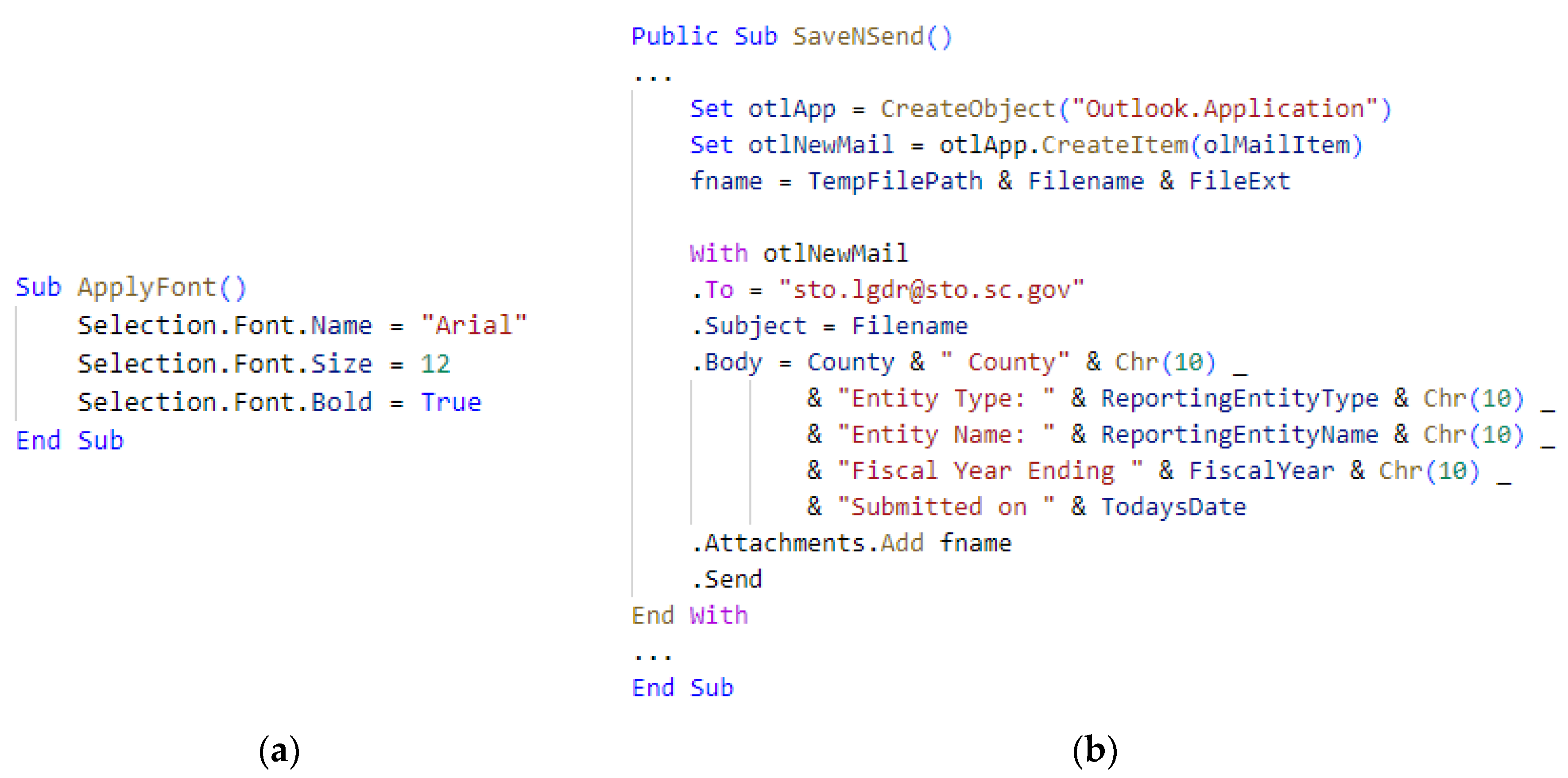

- Call Obfuscation: Sensitive object methods such as Run, ShellExecute, and Create can be invoked through VBA's CallByName function, which executes a method whose name is supplied as a string. Combined with other forms of string obfuscation, this hides the called method itself. The macro depicted in Figure 7(b) is the obfuscated version of the code shown in Figure 7(a), with the object's method call obfuscated.

- Logical Obfuscation: Logical obfuscation adds dummy code, such as procedure calls and branch decisions, to complicate the macro's logic. In some cases, attackers hide a few malicious statements among large amounts of normal macro code, so analyzing the macro is like searching for a needle in a haystack. Without understanding the program's semantics, it is impossible to determine whether the code is logically obfuscated.

3. Related Work

4. Machine learning Method with combined features

4.1. Data collection and Pre-processing

- Malicious samples disarmed

- Excel 4.0 macro samples

- Samples unable to parse with oletools

- Samples without macros
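Two of the categories above (samples that oletools cannot parse and samples without macros) lend themselves to an automated filter. The sketch below is one possible way to implement such a filter with oletools' `VBA_Parser`; the function names `extract_vba` and `keep_usable` are hypothetical, and the handling of disarmed samples and Excel 4.0 macros is not covered.

```python
from typing import Callable, Iterable, List, Optional, Tuple

def extract_vba(path: str) -> Optional[str]:
    """Return the document's VBA source, or None if the file cannot be
    parsed or contains no VBA macros. Relies on the third-party oletools package."""
    from oletools.olevba import VBA_Parser  # imported lazily: optional dependency
    try:
        parser = VBA_Parser(path)
    except Exception:
        return None  # oletools could not open or parse the file
    try:
        if not parser.detect_vba_macros():
            return None  # no VBA macros present
        # extract_macros() yields (filename, stream_path, vba_filename, vba_code)
        return "\n".join(code for _, _, _, code in parser.extract_macros())
    except Exception:
        return None
    finally:
        parser.close()

def keep_usable(paths: Iterable[str],
                extractor: Callable[[str], Optional[str]] = extract_vba
                ) -> List[Tuple[str, str]]:
    """Keep only (path, source) pairs for which macro source was recovered."""
    kept = []
    for path in paths:
        source = extractor(path)
        if source:
            kept.append((path, source))
    return kept
```

Passing the extractor as a parameter keeps the filter easy to exercise with a stub when oletools or real samples are not at hand.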

4.2. Feature selection

4.2.1. Obfuscation features

4.2.2. Suspicious keywords features

5. Evaluation

6. Discussion and Conclusion

Data Availability Statement

References

- https://www.microsoft.com/en-us/security/blog/2018/09/12/office-vba-amsi-parting-the-veil-on-malicious-macros/.

- Matt Miller. Trends and Challenges in the Vulnerability Mitigation Landscape. https://www.usenix.org/conference/woot19/presentation/miller.

- https://learn.microsoft.com/en-us/deployoffice/security/internet-macros-blocked.

- https://www.csoonline.com/article/573293/attacks-using-office-macros-decline-in-wake-of-microsoft-action.html.

- https://www.fortinet.com/blog/threat-research/are-internet-macros-dead-or-alive.

- https://thehackernews.com/2022/12/apt-hackers-turn-to-malicious-excel-add.html.

- https://thehackernews.com/2022/07/microsoft-resumes-blocking-office-vba.html.

- Kim, Sangwoo, et al. "Obfuscated VBA macro detection using machine learning." 2018 48th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN). IEEE, 2018.

- Koutsokostas, Vasilios, et al. "Invoice# 31415 attached: Automated analysis of malicious Microsoft Office documents." Computers & Security 114 (2022): 102582.

- Advanced VBA macros: bypassing olevba static analyses with 0 hits. https://www.certego.net/blog/advanced-vba-macros-bypassing-olevba-static-analyses/.

- https://github.com/decalage2/oletools/wiki/olevba.

- https://learn.microsoft.com/en-us/office/vba/api/overview/.

- Vasilios Koutsokostas, Nikolaos Lykousas, Gabriele Orazi, Theodoros Apostolopoulos, Amrita Ghosal, Fran Casino, Mauro Conti, & Constantinos Patsakis. (2021). Malicious MS Office documents dataset.

- N. Nissim, A. Cohen and Y. Elovici, "ALDOCX: Detection of Unknown Malicious Microsoft Office Documents Using Designated Active Learning Methods Based on New Structural Feature Extraction Methodology," in IEEE Transactions on Information Forensics and Security, vol. 12, no. 3, pp. 631-646.

- S. Huneault-Leblanc and C. Talhi, "P-Code Based Classification to Detect Malicious VBA Macro," 2020 International Symposium on Networks, Computers and Communications (ISNCC), Montreal, QC, Canada, 2020, pp. 1-6.

- N. Ruaro, F. Pagani, S. Ortolani, C. Kruegel and G. Vigna, "SYMBEXCEL: Automated Analysis and Understanding of Malicious Excel 4.0 Macros," 2022 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 2022, pp. 1066-1081.

- ViperMonkey. https://github.com/decalage2/ViperMonkey.

- S. Aebersold, K. Kryszczuk, S. Paganoni, et al., "Detecting Obfuscated JavaScripts Using Machine Learning," ICIMP 2016, the Eleventh International Conference on Internet Monitoring and Protection, Valencia, Spain, 22-26 May 2016. Curran Associates, 2016, vol. 1, pp. 11-17.

| label | benign | malicious |
|---|---|---|
| number | 2939 | 13734 |
| Details of document type | doc 644, docx 26, xls 1607, xlsx 662 | doc 11025, docx 1340, xls 1012, xlsx 240, ppt 5, pptx 5, others 107 |

| label | malicious |
|---|---|
| number | 2885 |
| Details of document type | doc 1537, docx 58, xls 1193, xlsx 16, ppt 13, others 68 |
| Details of obfuscation | Obfuscated 1358, non-obfuscated 1527 |

| Symbols | Description |
|---|---|
| represents a line in macro’s procedures, is the vector of all lines in macro. | |
| represents a procedure in macro, P is the vector of all procedures in macro. | |
| count_concatenation | count the number of concatenation symbols, including “+” and “&” |
| count_strings | |
| length | calculate the length |
| max | calculate the maximum |
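The helper operations named in the table can be sketched in a few lines. This is only an illustration under stated assumptions: the regular expressions, the treatment of `""` as an escaped quote, and the choice of `+` and `&` as the concatenation symbols are assumptions, and the aggregates at the end are examples rather than any of the paper's features F1 to F14.

```python
import re

# A VBA string literal; a doubled quote ("") inside it is an escaped quote.
_STRING = re.compile(r'"(?:[^"]|"")*"')

def count_strings(line: str) -> int:
    """Number of string literals on one line of macro code."""
    return len(_STRING.findall(line))

def count_concatenation(line: str) -> int:
    """Number of '+' and '&' symbols outside string literals."""
    # Blank out the literals first so symbols inside strings are not counted.
    stripped = _STRING.sub('""', line)
    return len(re.findall(r'[+&]', stripped))

lines = ['s = "Hel" & "lo " + "world"', 'MsgBox "a+b"']
print(max(len(line) for line in lines))                  # longest line
print(max(count_concatenation(line) for line in lines))  # most concatenations on a line
```

Because `+` is also VBA's addition operator, a count like this over-approximates string concatenation in arithmetic-heavy code.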

| Feature name | Description |
|---|---|
| F1 | |
| F2 | |
| F3 | |
| F4 | |
| F5 | |
| F7 | |
| F8 | |
| F9 | m |
| F10 | m |
| F11 | |
| F12 | |
| F13 | |
| F14 | |
| F15 | the number of occurrences of each suspicious keyword |

| Keywords | Description |
|---|---|
| Auto_Open*, AutoOpen*, Document_Open*, Workbook_Open*, Document_Close* | Procedure names that are executed automatically upon opening or closing the document |
| CreateObject*, GetObject, Wscript.Shell*, Shell.Application* | Methods and parameters to obtain the key object capable of executing commands |
| Shell*, Run*, Exec, Create, ShellExecute* | Methods used to execute a command or launch a program |
| CreateProcessA, CreateThread, CreateUserThread, VirtualAlloc, VirtualAllocEx, RtlMoveMemory, WriteProcessMemory, VirtualProtect, SetContextThread, QueueApcThread, WriteVirtualMemory | External functions that, when imported from kernel32.dll, can be used to create a process or thread or to operate on memory |
| Print*, FileCopy*, Open*, Write*, Output*, SaveToFile*, CreateTextFile*, Kill*, Binary* | Methods related to file creation, opening, writing, copying, deletion, etc. |
| cmd.exe, powershell.exe, vbhide* | Command line tools and suspicious parameters |
| StartupPath, Environ*, Windows*, ShowWindow*, dde*, Lib*, ExecuteExcel4Macro*, System*, Virtual* | Other keywords related to the startup path, environment variables, program windows, DDE, DLL function references, execution of Excel 4 macros, and virtualization |
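A keyword-count feature of the kind described for F15 could be computed roughly as follows. This is a sketch under assumptions: the keyword list is a small subset of the table, matching is case-insensitive (VBA itself is case-insensitive), and a keyword only counts when it is not embedded in a longer identifier. The paper's exact matching rule is not given here, and `keyword_counts` is a hypothetical name.

```python
import re
from typing import Dict, Sequence

# Illustrative subset of the suspicious keywords listed in the table.
KEYWORDS = ["AutoOpen", "Document_Open", "CreateObject", "GetObject",
            "Shell", "ShellExecute", "cmd.exe", "powershell.exe"]

def keyword_counts(macro: str, keywords: Sequence[str] = KEYWORDS) -> Dict[str, int]:
    """Count how often each keyword appears as a standalone token in the macro."""
    counts = {}
    for keyword in keywords:
        # Reject matches that are part of a longer identifier (e.g. Shell in ShellExecute).
        pattern = r'(?<![A-Za-z0-9_])' + re.escape(keyword) + r'(?![A-Za-z0-9_])'
        counts[keyword] = len(re.findall(pattern, macro, flags=re.IGNORECASE))
    return counts

sample = '''Sub AutoOpen()
    Set o = CreateObject("WScript.Shell")
    o.Run "cmd.exe /c echo hi"
End Sub'''
print(keyword_counts(sample))
```

Each count becomes one dimension of the feature vector, so the vector length equals the number of keywords tracked.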

| Model | Parameter |
|---|---|
| RF | n_estimators=100 |
| MLP | hidden_layer_sizes=(150,), max_iter=500 |
| SVM | kernel='rbf' |
| KNN | n_neighbors=3 |
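The parameter names in the table match those of scikit-learn's estimators, so the four models could plausibly be instantiated as below. That the experiments used scikit-learn is an assumption, as are the names `MODEL_PARAMS` and `build_models`; all parameters not listed in the table are left at the library defaults.

```python
# Hyperparameters copied from the table above.
MODEL_PARAMS = {
    "RF": {"n_estimators": 100},
    "MLP": {"hidden_layer_sizes": (150,), "max_iter": 500},
    "SVM": {"kernel": "rbf"},
    "KNN": {"n_neighbors": 3},
}

def build_models():
    """Instantiate one scikit-learn classifier per table row."""
    # Imported lazily so the parameter table is usable without scikit-learn installed.
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.neural_network import MLPClassifier
    from sklearn.svm import SVC

    classes = {
        "RF": RandomForestClassifier,
        "MLP": MLPClassifier,
        "SVM": SVC,
        "KNN": KNeighborsClassifier,
    }
    return {name: classes[name](**params) for name, params in MODEL_PARAMS.items()}
```

Each returned estimator exposes the usual `fit` and `predict` methods, so the same training loop can be reused across all four models and all three feature selections.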

| Model | Feature Selection | FAR | Precision | Recall | Accuracy | F1-Score |
|---|---|---|---|---|---|---|
| RF | F1-F14 | 0.083 | 0.982 | 0.994 | 0.981 | 0.988 |
| RF | F15 | 0.012 | 0.997 | 0.995 | 0.993 | 0.996 |
| RF | F1-F15 | 0.015 | 0.997 | 0.997 | 0.994 | 0.997 |
| MLP | F1-F14 | 0.138 | 0.971 | 0.979 | 0.958 | 0.975 |
| MLP | F15 | 0.013 | 0.997 | 0.995 | 0.994 | 0.996 |
| MLP | F1-F15 | 0.035 | 0.993 | 0.994 | 0.989 | 0.993 |
| SVM | F1-F14 | 0.203 | 0.957 | 0.961 | 0.932 | 0.959 |
| SVM | F15 | 0.022 | 0.995 | 0.987 | 0.986 | 0.991 |
| SVM | F1-F15 | 0.028 | 0.994 | 0.992 | 0.988 | 0.993 |
| KNN | F1-F14 | 0.092 | 0.980 | 0.984 | 0.971 | 0.982 |
| KNN | F15 | 0.020 | 0.996 | 0.992 | 0.990 | 0.994 |
| KNN | F1-F15 | 0.028 | 0.994 | 0.992 | 0.988 | 0.993 |
| RF | Ref [9] | — | 0.993 | 0.976 | 0.975 | 0.985 |
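For readers who want to reproduce the columns of this table from a confusion matrix, the standard definitions can be sketched as follows. Treating FAR as the false alarm rate FP / (FP + TN), with malicious as the positive class, is an assumption based on common usage; the counts in the example are made up for illustration and are not taken from the paper.

```python
from typing import Dict

def metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Standard binary classification metrics, with 'malicious' as the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "FAR": fp / (fp + tn),  # share of benign samples flagged as malicious
        "Precision": precision,
        "Recall": recall,
        "Accuracy": (tp + tn) / (tp + fp + tn + fn),
        "F1": 2 * precision * recall / (precision + recall),
    }

# Hypothetical counts, for illustration only.
print(metrics(tp=90, fp=10, tn=40, fn=10))
```

As a consistency check on the reported values, a precision of 0.982 and a recall of 0.994 give an F1-score of about 0.988, which agrees with the RF row for F1-F14.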

| Model | Feature Selection | Precision |

|---|---|---|

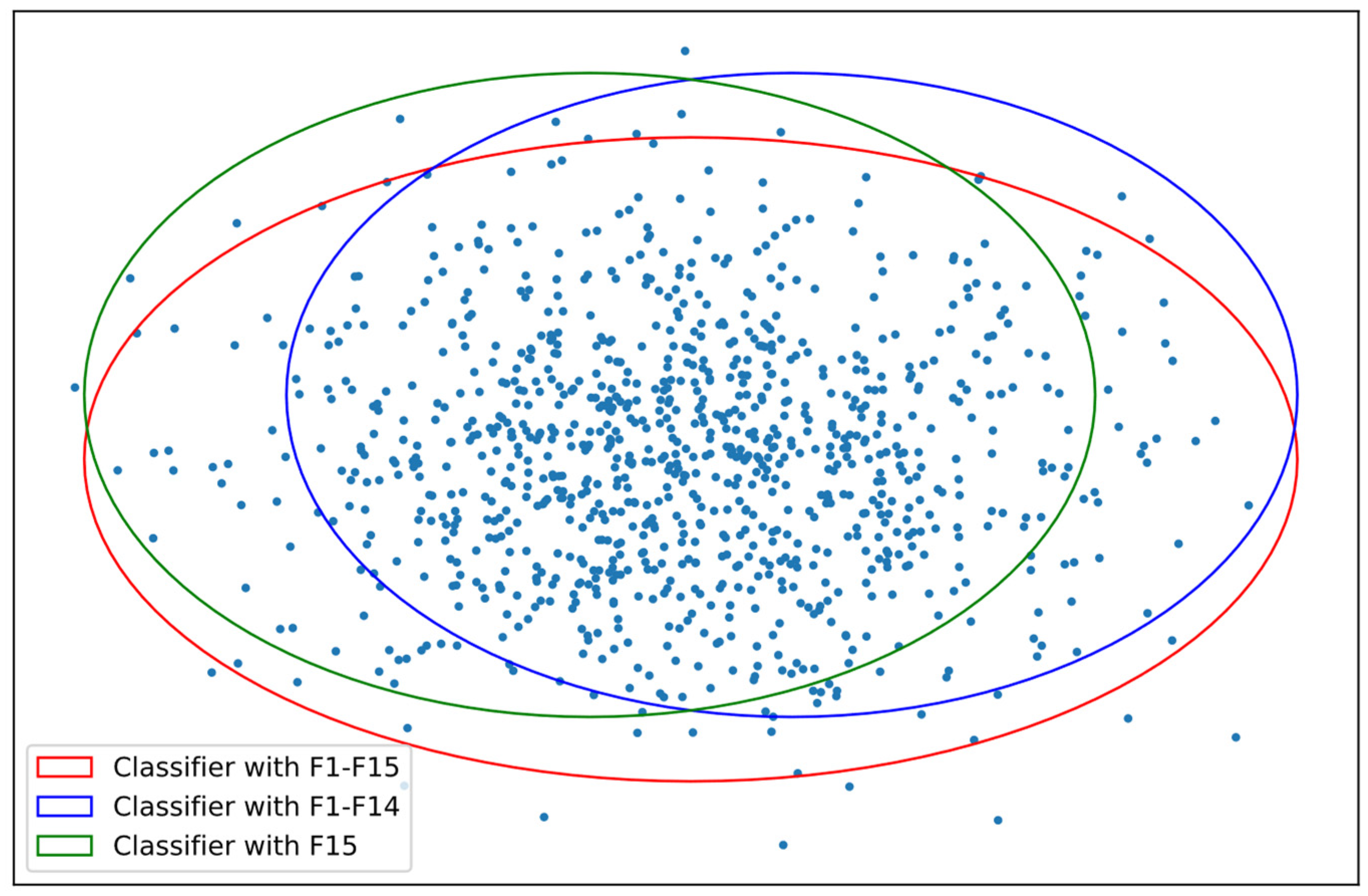

| RF | F1-F14 | 0.923 |

| F15 | 0.856 | |

| F1-F15 | 0.953 | |

| MLP | F1-F14 | 0.739 |

| F15 | 0.793 | |

| F1-F15 | 0.907 | |

| SVM | F1-F14 | 0.799 |

| F15 | 0.788 | |

| F1-F15 | 0.817 | |

| KNN | F1-F14 | 0.792 |

| F15 | 0.757 | |

| F1-F15 | 0.735 |

| Index | Feature Name | Feature Type |
|---|---|---|
| 1 | F15:CreateObject | Suspicious Keywords |
| 2 | F15:Document_Open | Suspicious Keywords |
| 3 | F15:Shell | Suspicious Keywords |
| 4 | F15:GetObject | Suspicious Keywords |
| 5 | F15:Lib | Suspicious Keywords |
| 6 | F15:AutoOpen | Suspicious Keywords |
| 7 | F15:Auto_Open | Suspicious Keywords |
| 8 | F15:StartupPath | Suspicious Keywords |
| 9 | | Obfuscation |
| 10 | | Obfuscation |
| 11 | F14:Chr | Obfuscation |
| 12 | F14:Asc | Obfuscation |
| 13 | F14:UCase | Obfuscation |
| 14 | | Obfuscation |
| 15 | F5: | Obfuscation |
| 16 | F14:Left | Obfuscation |
| 17 | F15:Open | Suspicious Keywords |
| 18 | F14:Abs | Obfuscation |
| 19 | F14:Split | Obfuscation |
| 20 | F15:System | Suspicious Keywords |

| Index | Feature Name | Proportion of benign samples | Proportion of malicious samples |
|---|---|---|---|
| 1 | F15:CreateObject | 2.76% | 97.24% |
| 2 | F15:Document_Open | 0.41% | 99.59% |
| 3 | F15:Shell | 0.29% | 99.71% |
| 4 | F15:GetObject | 0.07% | 99.93% |
| 5 | F15:Lib | 1.72% | 98.28% |
| 6 | F15:AutoOpen | 0.12% | 99.88% |
| 7 | F15:Auto_Open | 9.62% | 90.38% |
| 8 | F15:StartupPath | 0.00% | 100.00% |
| 9 | F14:Chr | 2.31% | 97.69% |
| 10 | F14:Asc | 1.88% | 98.12% |
| 11 | F14:UCase | 34.20% | 65.80% |
| 12 | F14:Left | 10.94% | 89.06% |
| 13 | F15:Open | 5.75% | 94.25% |
| 14 | F14:Abs | 27.32% | 72.68% |
| 15 | F14:Split | 3.45% | 96.55% |
| 16 | F15:System | 12.27% | 87.73% |

| Ensemble of classifiers | Precision |
|---|---|
| RF classifier with F1-F14 and RF classifier with F15 | 0.979 |
| RF classifier with F1-F14 and RF classifier with F1-F15 | 0.974 |
| RF classifier with F15 and RF classifier with F1-F15 | 0.969 |
| All three classifiers | 0.980 |
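How the individual classifiers' outputs are combined is not stated in this table. Purely as an illustration, the sketch below shows two common combination rules: flagging a sample if any classifier flags it, and majority voting. Both rules and the name `ensemble_predict` are assumptions rather than the paper's method.

```python
from typing import List, Sequence

def ensemble_predict(votes_per_model: Sequence[Sequence[int]],
                     rule: str = "any") -> List[int]:
    """Combine per-model predictions (1 = malicious, 0 = benign) sample by sample."""
    combined = []
    for votes in zip(*votes_per_model):
        if rule == "any":
            # Flag the sample if at least one classifier flags it.
            combined.append(int(any(votes)))
        elif rule == "majority":
            # Flag the sample if more than half of the classifiers flag it.
            combined.append(int(sum(votes) * 2 > len(votes)))
        else:
            raise ValueError(f"unknown rule: {rule}")
    return combined

a, b, c = [1, 0, 0, 1], [0, 0, 1, 1], [0, 0, 1, 1]
print(ensemble_predict([a, b, c], rule="any"))       # [1, 0, 1, 1]
print(ensemble_predict([a, b, c], rule="majority"))  # [0, 0, 1, 1]
```

The "any" rule favors detection at the cost of more false alarms, while majority voting is more conservative; which trade-off is appropriate depends on the deployment.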

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).