Submitted:

25 September 2023

Posted:

27 September 2023

Abstract

Keywords:

1. Introduction

2. Related work

2.1. Air Writing with Numbers and Symbols

2.2. Exploring Air-Written Letters

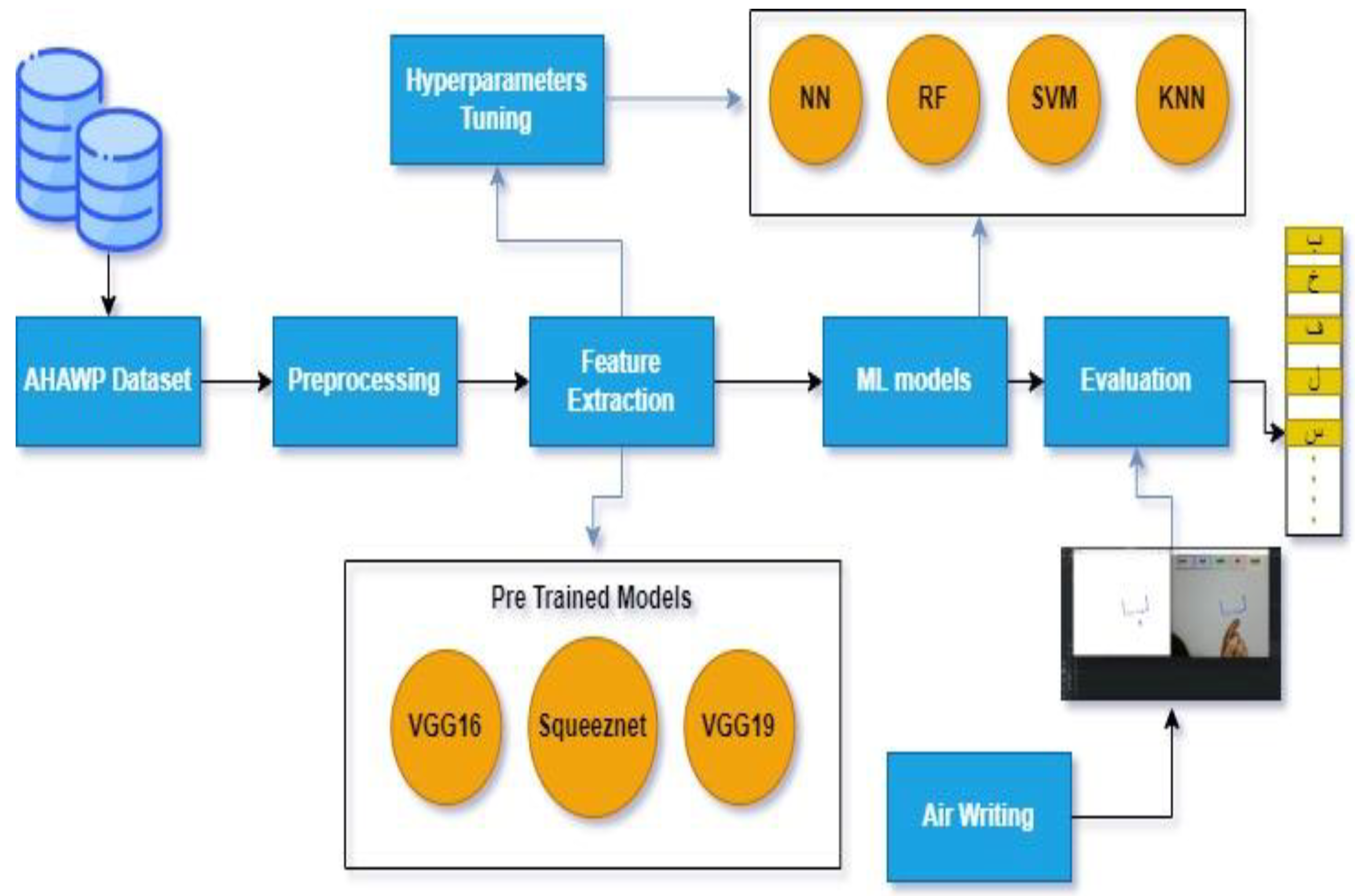

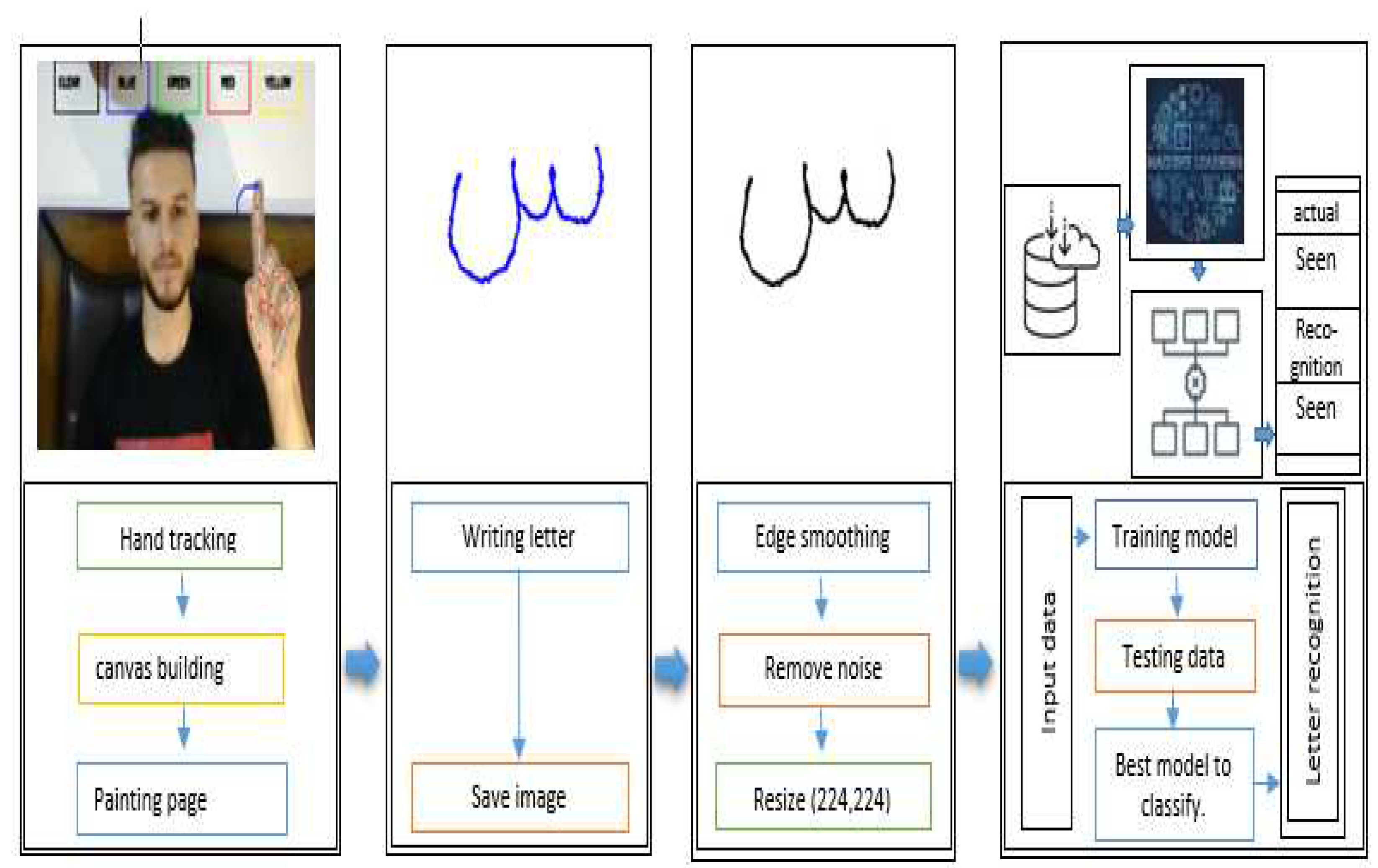

3. Arabic Air-Writing to Image Conversion and Recognition: Methodology

3.1. AHAWP Dataset

3.2. Data Pre-Processing

3.2.1. Image Resizing

3.2.2. Feature Extraction

3.2.3. Dimensionality Reduction

3.2.4. Data Normalization

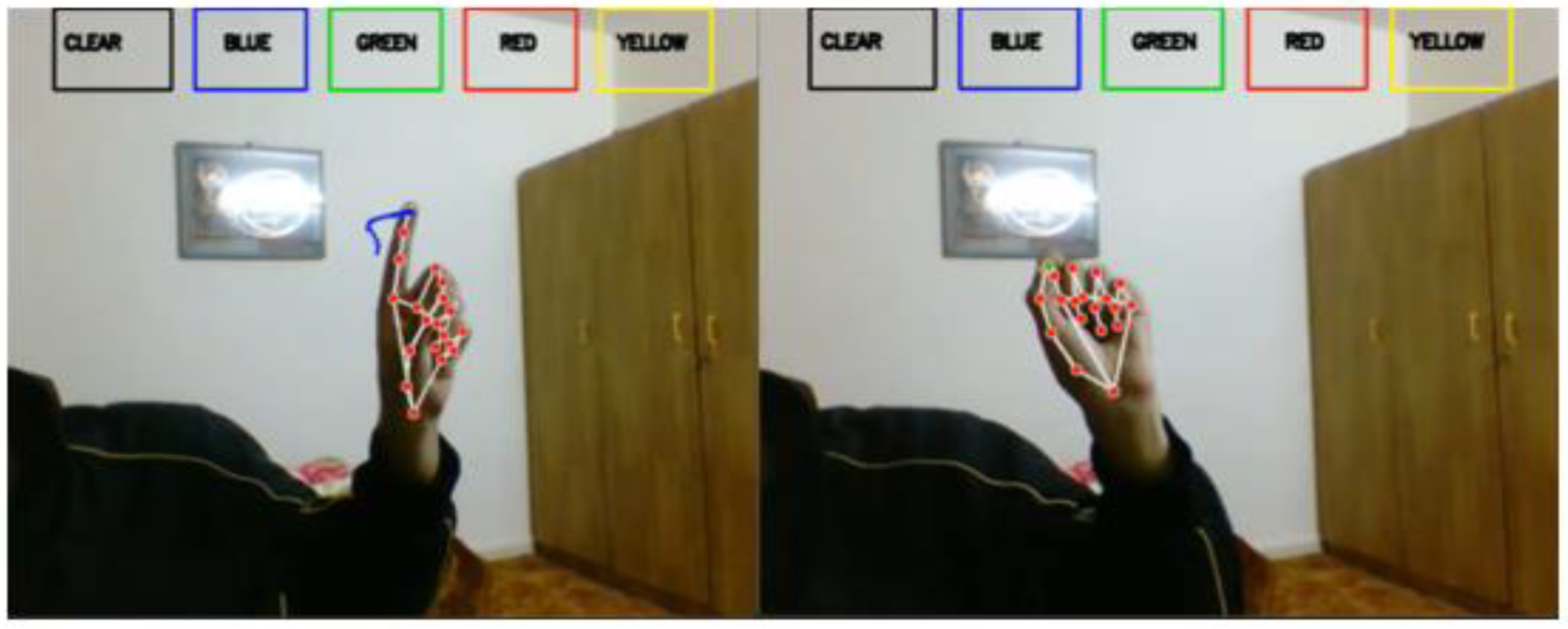

3.3. Building the Air Writing Components

3.3.1. Air writing tools

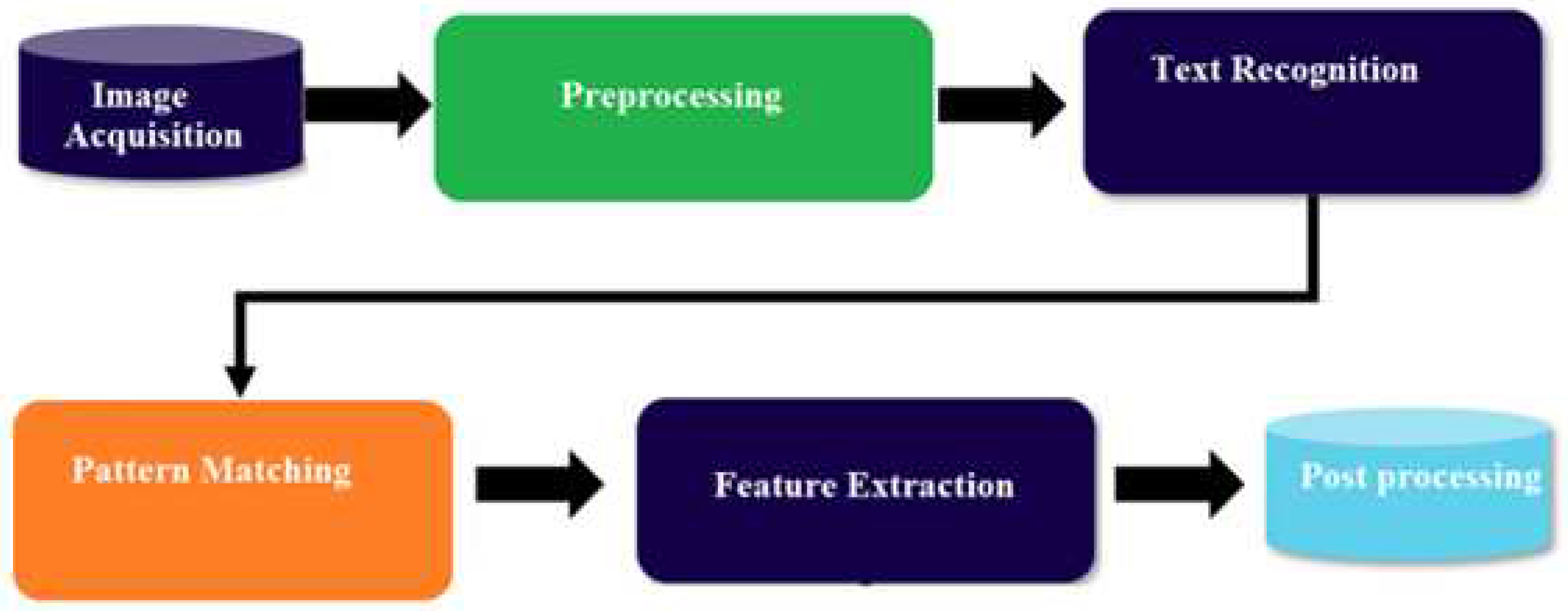

3.4. Optical character recognition (OCR)

3.5. Arabic Air Writing Letter Recognition System Using Deep Convolutional Neural Networks

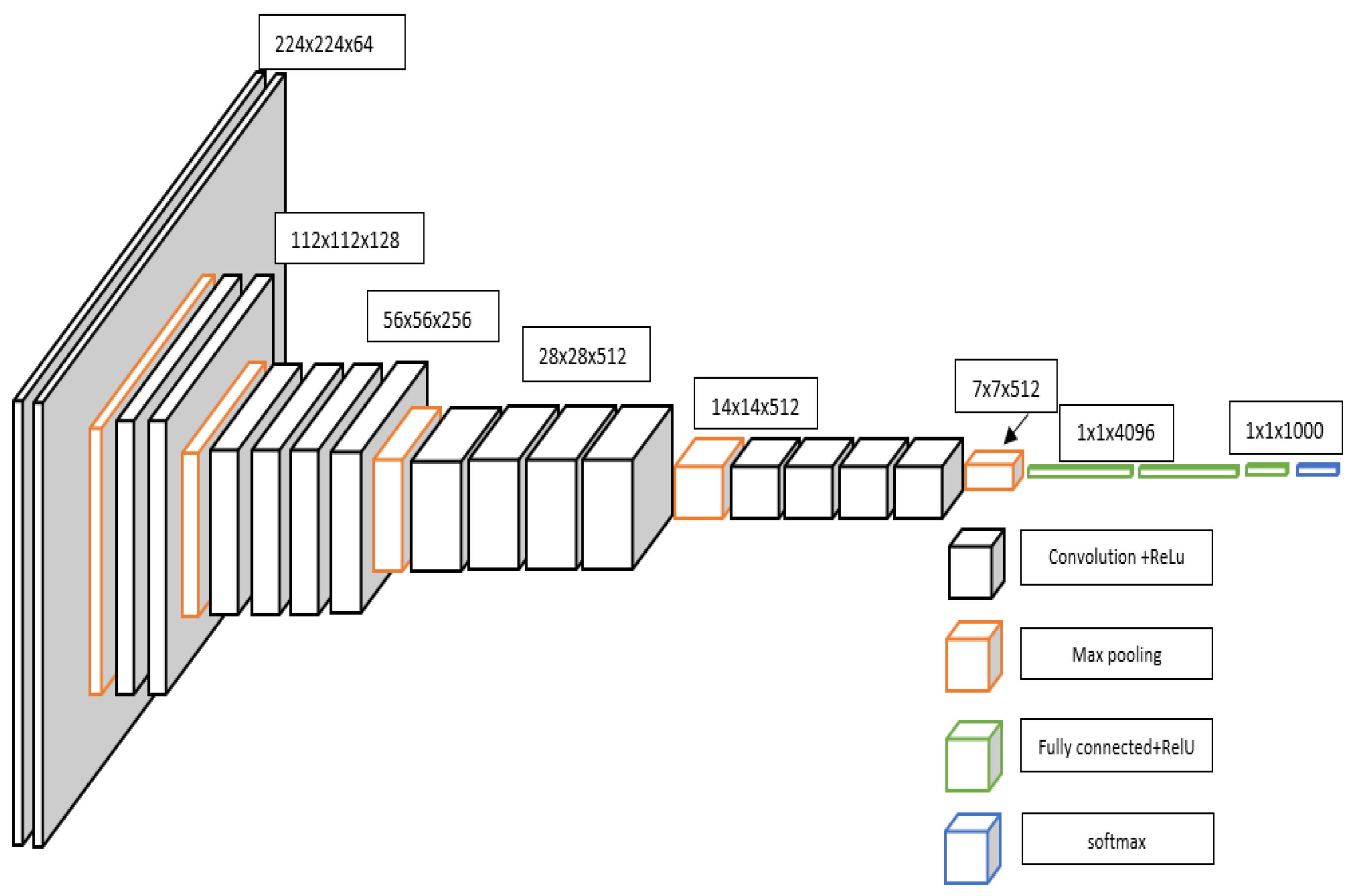

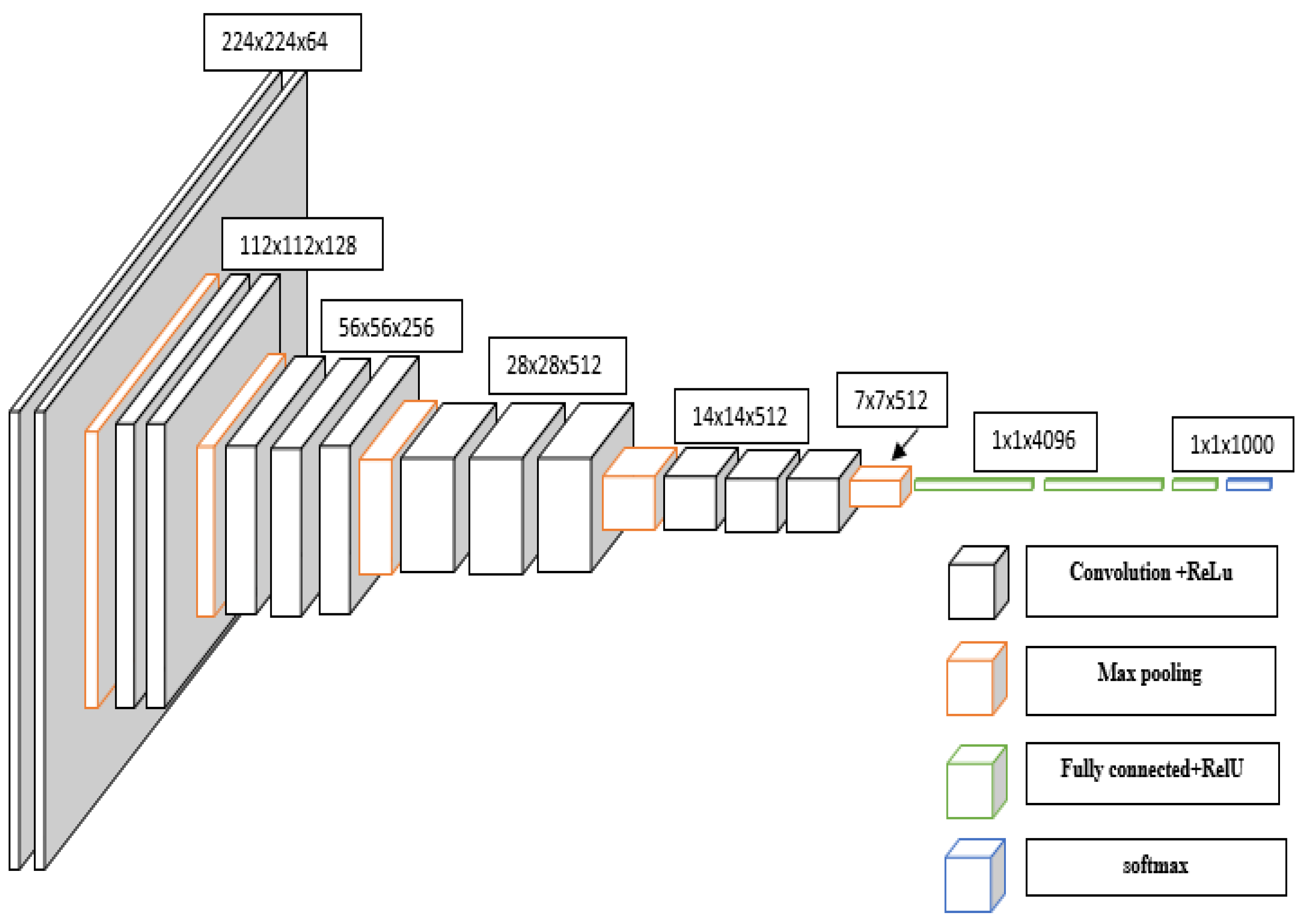

3.5.1. The VGGNet CNN architecture

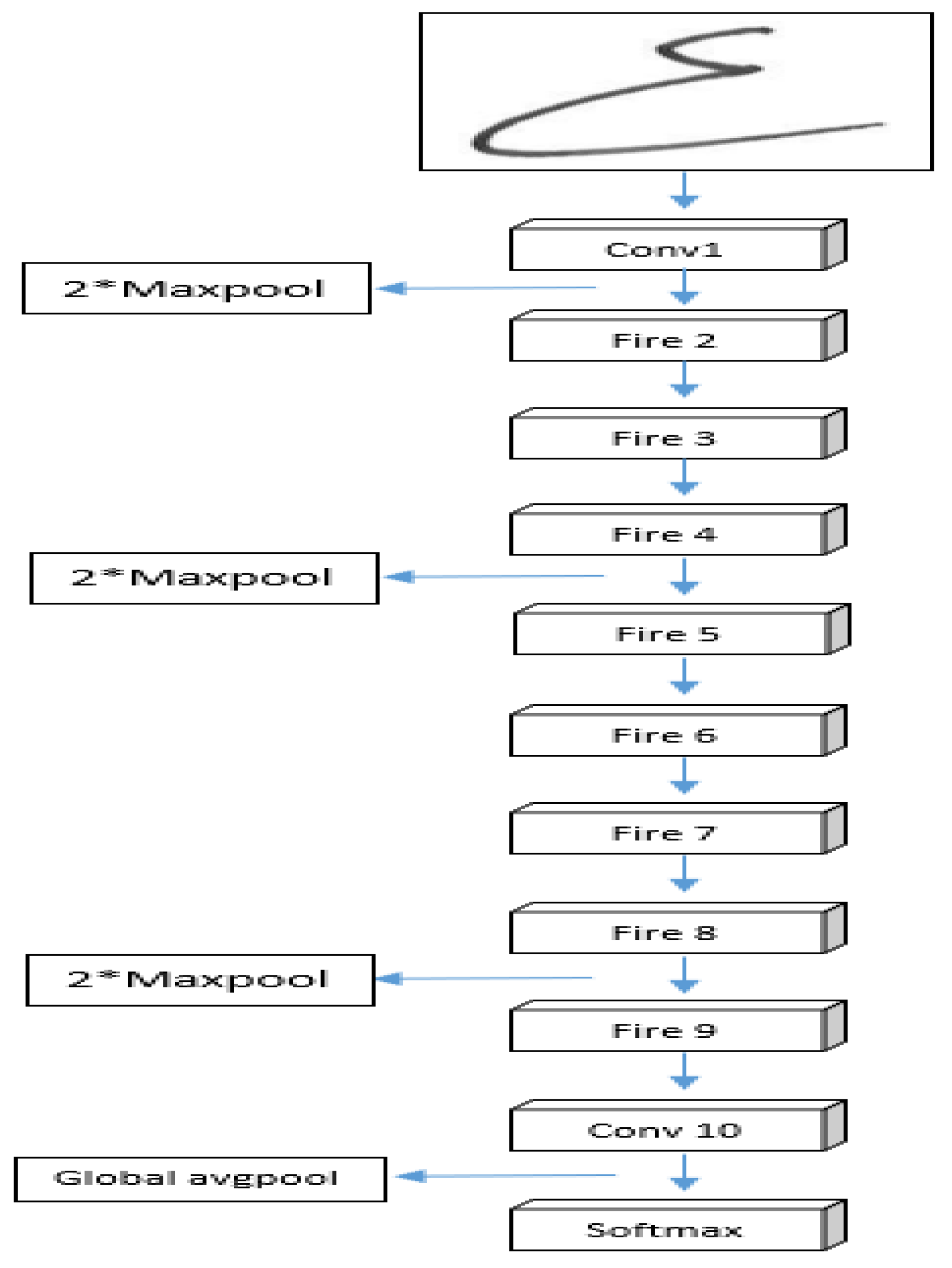

3.5.2. SqueezeNet architecture

3.6. Hyperparameter Tuning

3.6.1. Grid Search

| Algorithm 1 | Pseudocode of Grid Search |
|---|---|
| 1 | Function GridSearch(): |
| 2 | Hyperparameter Grid = Define Hyperparameter Grid |
| 3 | Best Hyperparameters = None |
| 4 | Best Performance = −∞ |
| 5 | for Hyperparameters in Hyperparameter Grid: |
| 6 | Model = Set Hyperparameters in Model |
| 7 | Performance = Evaluate Model |
| 8 | if Performance > Best Performance: |
| 9 | Best Performance = Performance |
| 10 | Best Hyperparameters = Hyperparameters |
| 11 | end for |
| 12 | return Best Hyperparameters |
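
The exhaustive loop in Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `grid_search`, the toy scoring function `toy_evaluate`, and the `C`/`gamma` grid are all assumptions chosen for the example. In practice `evaluate` would train a classifier on the extracted CNN features and return its validation accuracy.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Evaluate every combination in param_grid and keep the best one.

    param_grid: dict mapping a hyperparameter name to a list of candidates.
    evaluate:   callable that takes a dict of hyperparameters and returns
                a score where higher is better (e.g. validation accuracy).
    """
    best_params = None
    best_score = float("-inf")
    names = list(param_grid)
    # Cartesian product of all candidate values = the full grid.
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_score = score
            best_params = params
    return best_params, best_score


# Hypothetical stand-in for "train the model and measure accuracy":
# the score is highest at C=10, gamma=0.01.
def toy_evaluate(params):
    return 1.0 - abs(params["C"] - 10) / 100 - abs(params["gamma"] - 0.01)


grid = {"C": [0.1, 1, 10, 100], "gamma": [0.001, 0.01, 0.1]}
best_params, best_score = grid_search(grid, toy_evaluate)
print(best_params, best_score)
```

The cost grows with the product of the candidate-list lengths (here 4 × 3 = 12 evaluations), which is the usual motivation for considering random search as an alternative.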

3.6.2. Random Search

3.7. Supervised Machine Learning Models

3.7.1. Support Vector Machines (SVM)

3.7.2. Neural Networks (NNs)

3.7.3. Random Forest (RF)

3.7.4. K-Nearest Neighbors (KNN)

3.8. Evaluation of Models

4. Results and Discussion

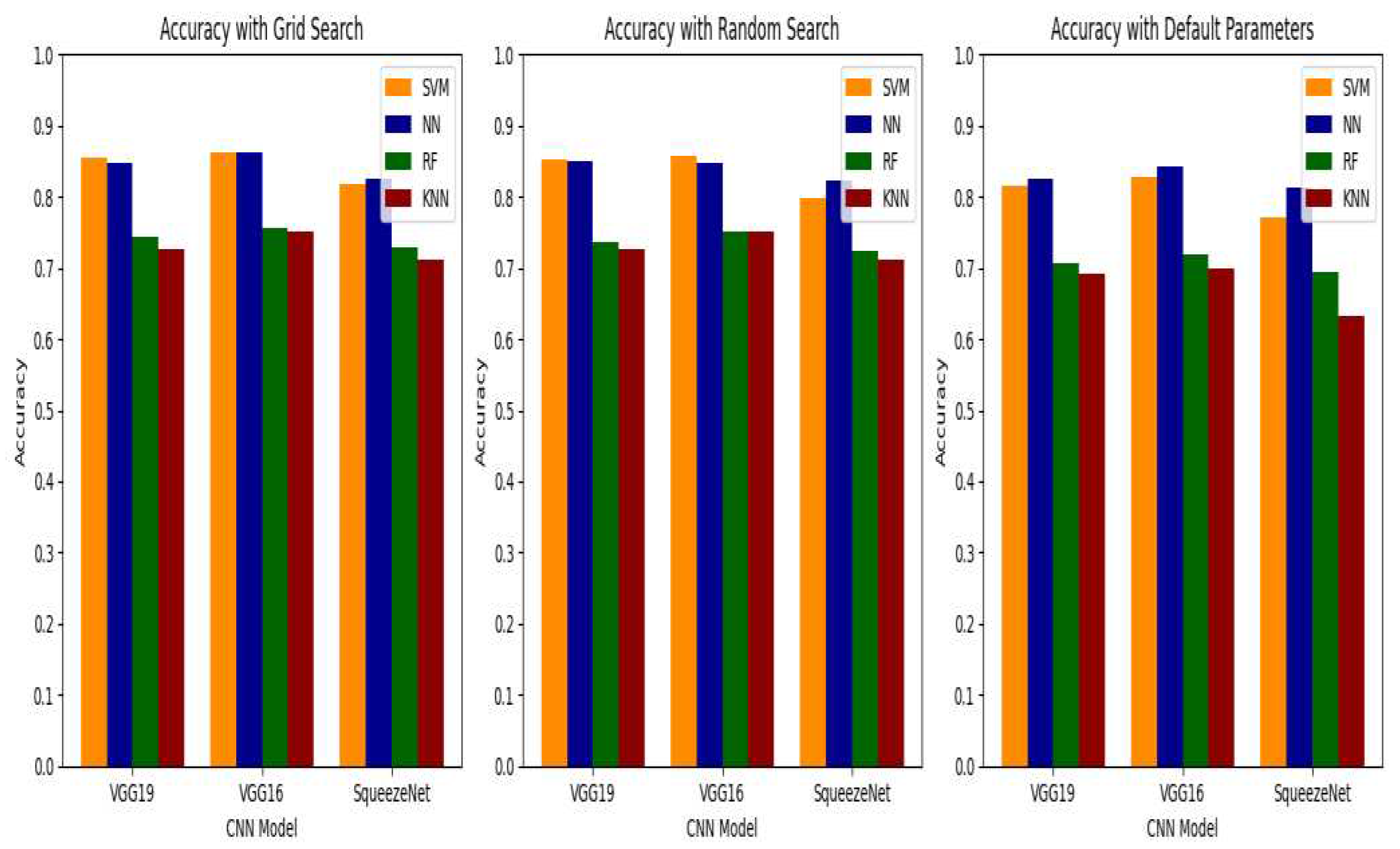

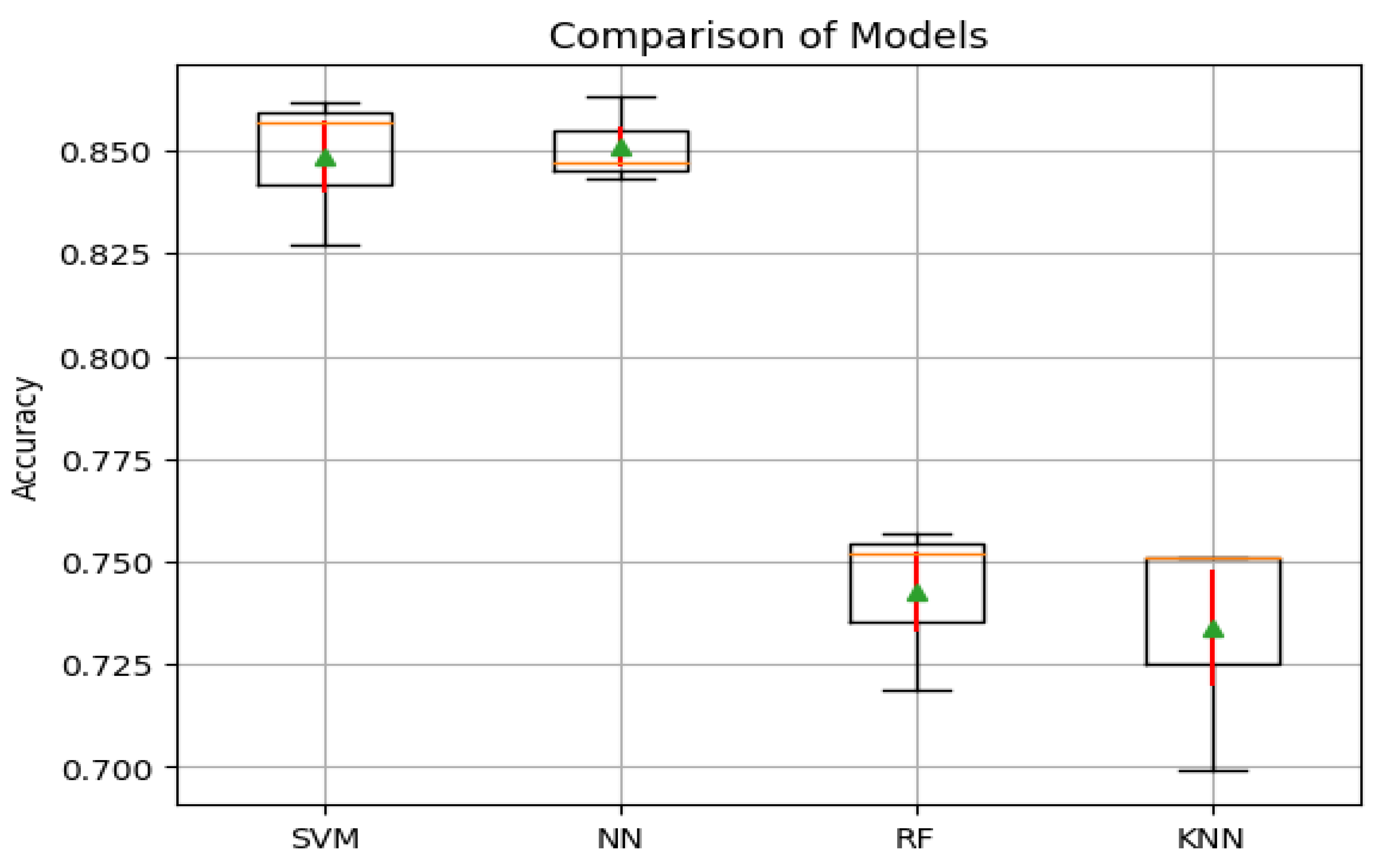

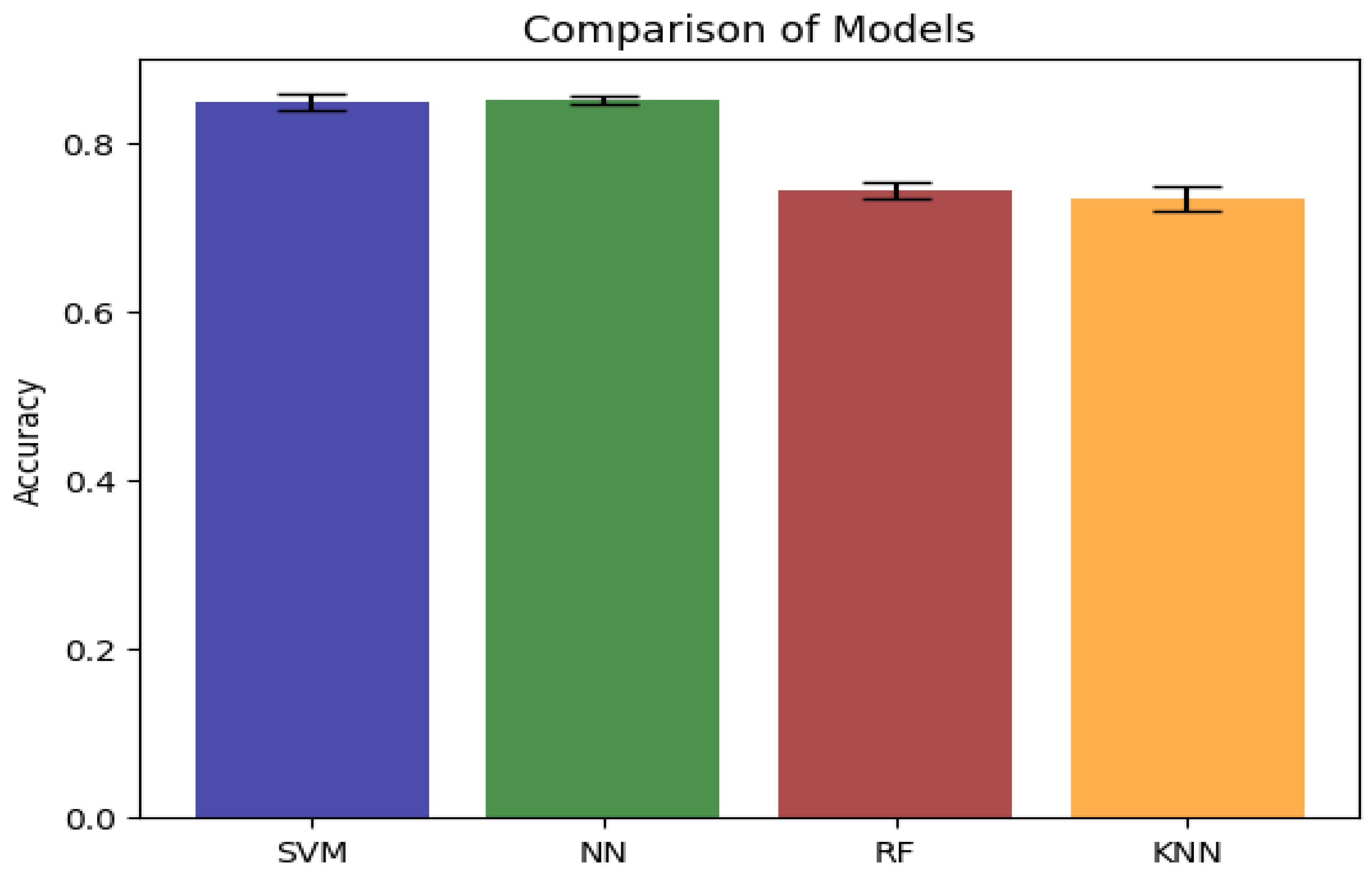

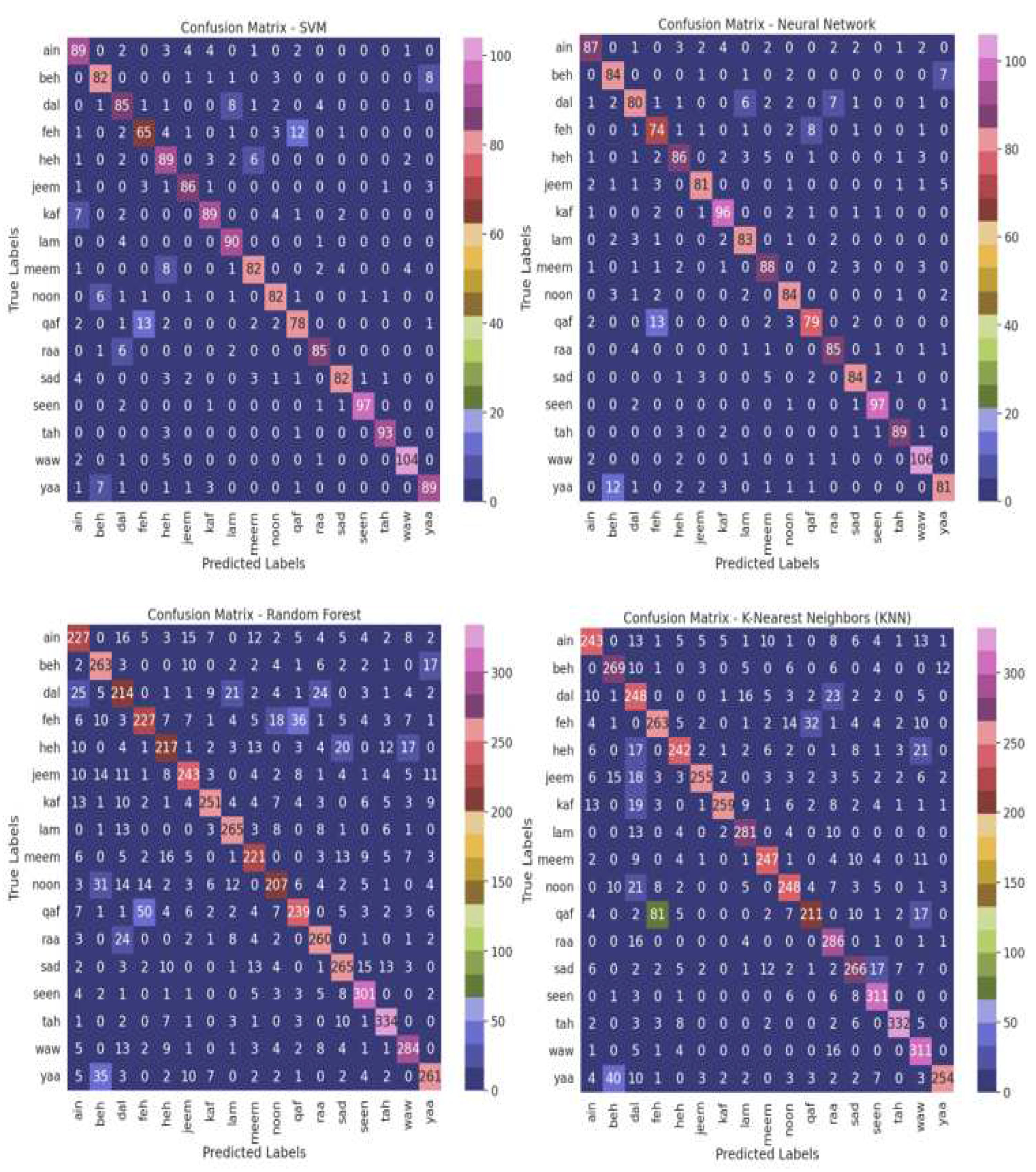

4.1. Performance of Classification Algorithms

4.2. Comparison of Mean Accuracy Scores Between Models

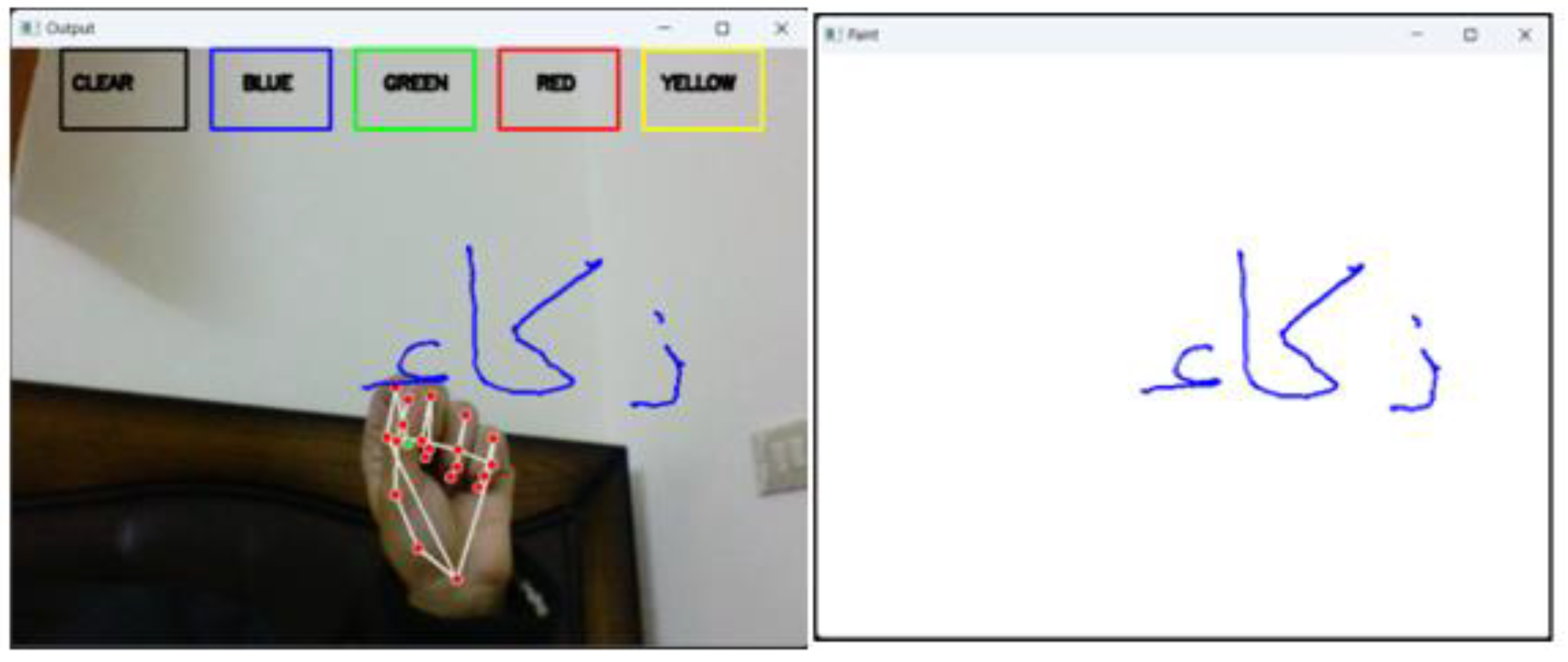

4.3. Sample of Letter Writing from the Experimental Setup

4.4. Validation of our model

4.5. Comparison of the Proposed Model with Previous Work

5. Conclusions and future work

Funding

References

- F. Al Abir, M. Al Siam, A. Sayeed, M. A. M. Hasan, and J. Shin, “Deep Learning Based Air-Writing Recognition with the Choice of Proper Interpolation Technique,” Sensors (Basel), vol. 21, no. 24, pp. 1–15, 2021. https://doi.org/10.3390/s21248407.

- S. Ahmed, W. Kim, J. Park, and S. H. Cho, “Radar-Based Air-Writing Gesture Recognition Using a Novel Multistream CNN Approach,” IEEE Internet Things J., vol. 9, no. 23, pp. 23869–23880, 2022. https://doi.org/10.1109/JIOT.2022.3189395.

- S. Imtiaz and N. Kim, “Neural Network and Depth Sensor,” 2020.

- A. K. Choudhary, N. Dua, B. Phogat, and S. U. Saoji, “Air Canvas Application Using Opencv and Numpy in Python,” Int. Res. J. Eng. Technol., vol. 08, no. 08, pp. 1761–1765, 2021.

- U. H. Kim, Y. Hwang, S. K. Lee, and J. H. Kim, “Writing in the Air: Unconstrained Text Recognition From Finger Movement Using Spatio-Temporal Convolution,” IEEE Trans. Artif. Intell., 2022. https://doi.org/10.1109/TAI.2022.3212981.

- L. Bouchriha, A. Zrigui, S. Mansouri, S. Berchech, and S. Omrani, “Arabic Handwritten Character Recognition Based on Convolution Neural Networks,” Commun. Comput. Inf. Sci., vol. 1653 CCIS, no. 8, pp. 286–293, 2022. https://doi.org/10.1007/978-3-031-16210-7_23.

- N. Altwaijry and I. Al-Turaiki, “Arabic handwriting recognition system using convolutional neural network,” Neural Comput. Appl., vol. 33, no. 7, pp. 2249–2261, 2021. https://doi.org/10.1007/s00521-020-05070-8.

- C. H. Hsieh, Y. S. Lo, J. Y. Chen, and S. K. Tang, “Air-Writing Recognition Based on Deep Convolutional Neural Networks,” IEEE Access, vol. 9, pp. 142827–142836, 2021. https://doi.org/10.1109/ACCESS.2021.3121093.

- I. Khandokar, M. Hasan, F. Ernawan, S. Islam, and M. Kabir, “Handwritten character recognition using convolutional neural network,” J. Phys. Conf. Ser., vol. 1918, p. 042152, 2021. https://doi.org/10.1088/1742-6596/1918/4/042152.

- M. Mohd, F. Qamar, I. Al-Sheikh, and R. Salah, “Quranic optical text recognition using deep learning models,” IEEE Access, vol. 9, pp. 38318–38330, 2021. https://doi.org/10.1109/ACCESS.2021.3064019.

- S. Malik et al., “An efficient skewed line segmentation technique for cursive script OCR,” Sci. Program., vol. 2020, 2020. https://doi.org/10.1155/2020/8866041.

- S. Mukherjee, S. A. Ahmed, D. P. Dogra, S. Kar, and P. P. Roy, “Fingertip detection and tracking for recognition of air-writing in videos,” Expert Syst. Appl., vol. 136, pp. 217–229, 2019. https://doi.org/10.1016/j.eswa.2019.06.034.

- M. A. Fadeel, “Off-line optical character recognition system for arabic handwritten text,” J. Pure Appl. Sci., vol. 18, no. 4, pp. 41–46, 2019.

- G. Sokar, E. E. Hemayed, and M. Rehan, “A Generic OCR Using Deep Siamese Convolution Neural Networks,” 2018 IEEE 9th Annu. Inf. Technol. Electron. Mob. Commun. Conf. IEMCON 2018, pp. 1238–1244, 2019. https://doi.org/10.1109/IEMCON.2018.8614784.

- S. Misra, J. Singha, and R. H. Laskar, “Vision-based hand gesture recognition of alphabets, numbers, arithmetic operators and ASCII characters in order to develop a virtual text-entry interface system,” Neural Comput. Appl., vol. 29, no. 8, pp. 117–135, 2018. https://doi.org/10.1007/s00521-017-2838-6.

- M. A. Khan, “Arabic handwritten alphabets, words and paragraphs per user (AHAWP) dataset,” Data Br., vol. 41, p. 107947, 2022. https://doi.org/10.1016/j.dib.2022.107947.

- H. Abdi and L. J. Williams, “Principal component analysis,” Wiley Interdiscip. Rev. Comput. Stat., pp. 1–47, 2010.

- “I2OCR”, [Online]. Available: https://www.i2ocr.com/.

- K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” 3rd Int. Conf. Learn. Represent. ICLR 2015 - Conf. Track Proc., pp. 1–14, 2015.

- A. S. Jaradat et al., “Automated Monkeypox Skin Lesion Detection Using Deep Learning and Transfer Learning Techniques,” Int. J. Environ. Res. Public Health, vol. 20, no. 5, 2023. https://doi.org/10.3390/ijerph20054422.

- F. N. Iandola, S. Han, M. W. Moskewicz, K. Ashraf, W. J. Dally, and K. Keutzer, “SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size,” pp. 1–13, 2016, [Online]. Available: http://arxiv.org/abs/1602.07360.

- B. H. Shekar and G. Dagnew, “Grid search-based hyperparameter tuning and classification of microarray cancer data,” 2019 2nd Int. Conf. Adv. Comput. Commun. Paradig. ICACCP 2019, no. February, pp. 1–8, 2019. https://doi.org/10.1109/ICACCP.2019.8882943.

- J. Bergstra and Y. Bengio, “Random search for hyper-parameter optimization,” J. Mach. Learn. Res., vol. 13, pp. 281–305, 2012.

- R. E. Al Mamlook, A. Nasayreh, H. Gharaibeh, and S. Shrestha, “Classification Of Cancer Genome Atlas Glioblastoma Multiform (TCGA-GBM) Using Machine Learning Method,” IEEE Int. Conf. Electro Inf. Technol., vol. 2023-May, no. July, pp. 265–270, 2023. https://doi.org/10.1109/eIT57321.2023.10187283.

- S. V. N. Vishwanathan and M. N. Murty, “SSVM: A simple SVM algorithm,” Proc. Int. Jt. Conf. Neural Networks, vol. 3, no. 1, pp. 2393–2398, 2002. https://doi.org/10.1109/ijcnn.2002.1007516.

- N. Kriegeskorte and T. Golan, “Neural network models and deep learning,” Curr. Biol., vol. 29, no. 7, pp. R231–R236, 2019. https://doi.org/10.1016/j.cub.2019.02.034.

- G. Biau and E. Scornet, “A random forest guided tour,” Test, vol. 25, no. 2, pp. 197–227, 2016. https://doi.org/10.1007/s11749-016-0481-7.

- S. Zhang, X. Li, M. Zong, X. Zhu, and D. Cheng, “Learning k for kNN Classification,” ACM Trans. Intell. Syst. Technol., vol. 8, no. 3, 2017. https://doi.org/10.1145/2990508.

- M. Chen, G. AlRegib, and B. H. Juang, “Air-Writing Recognition - Part I: Modeling and Recognition of Characters, Words, and Connecting Motions,” IEEE Trans. Human-Machine Syst., vol. 46, no. 3, pp. 403–413, 2016. https://doi.org/10.1109/THMS.2015.2492598.

- S. Ahmed, W. Kim, J. Park, and S. H. Cho, “Radar Based Air-Writing Gesture Recognition Using a Novel Multi-Stream CNN Approach,” IEEE Internet Things J., vol. PP, no. 8, p. 1, 2022. https://doi.org/10.1109/JIOT.2022.3189395.

- J. K. Sharma, “Highly Accurate Trimesh and PointNet based algorithm for Gesture and Hindi air writing recognition,” no. Lmc, pp. 0–17, 2023.

| CNN model | ML model | Accuracy (Grid Search) | Accuracy (Random Search) | Accuracy (Default Parameters) |
|---|---|---|---|---|
| VGG19 | SVM | 0.855 | 0.853 | 0.816 |
| VGG19 | NN | 0.847 | 0.851 | 0.825 |
| VGG19 | RF | 0.744 | 0.735 | 0.706 |
| VGG19 | KNN | 0.727 | 0.727 | 0.692 |
| VGG16 | SVM | 0.855 | 0.853 | 0.816 |
| VGG16 | NN | 0.888 | 0.847 | 0.843 |
| VGG16 | RF | 0.757 | 0.752 | 0.719 |
| VGG16 | KNN | 0.751 | 0.751 | 0.699 |
| SqueezeNet | SVM | 0.819 | 0.799 | 0.770 |
| SqueezeNet | NN | 0.825 | 0.823 | 0.813 |
| SqueezeNet | RF | 0.729 | 0.724 | 0.695 |
| SqueezeNet | KNN | 0.712 | 0.712 | 0.632 |
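
The accuracies in the table above can be averaged per classifier to compare the three tuning strategies. The following is a minimal sketch that uses only the values reported in the table; the dictionary layout and the helper name `mean_accuracy` are assumptions made for illustration, and the averaging over CNN feature extractors is not necessarily the aggregation used in the paper's statistical tests.

```python
# Accuracies copied from the table:
# {CNN model: {classifier: (grid search, random search, default parameters)}}
results = {
    "VGG19": {"SVM": (0.855, 0.853, 0.816), "NN": (0.847, 0.851, 0.825),
              "RF": (0.744, 0.735, 0.706), "KNN": (0.727, 0.727, 0.692)},
    "VGG16": {"SVM": (0.855, 0.853, 0.816), "NN": (0.888, 0.847, 0.843),
              "RF": (0.757, 0.752, 0.719), "KNN": (0.751, 0.751, 0.699)},
    "SqueezeNet": {"SVM": (0.819, 0.799, 0.770), "NN": (0.825, 0.823, 0.813),
                   "RF": (0.729, 0.724, 0.695), "KNN": (0.712, 0.712, 0.632)},
}
strategies = ("grid", "random", "default")


def mean_accuracy(table):
    """Average each classifier's accuracy over the CNN feature extractors."""
    totals = {}
    for per_cnn in table.values():
        for clf, scores in per_cnn.items():
            running = totals.setdefault(clf, [0.0] * len(strategies))
            for i, score in enumerate(scores):
                running[i] += score
    n = len(table)
    return {clf: {name: total / n for name, total in zip(strategies, sums)}
            for clf, sums in totals.items()}


means = mean_accuracy(results)
for clf, row in means.items():
    print(clf, {name: round(value, 3) for name, value in row.items()})
```

Under this simple average, every classifier scores higher with grid search than with default parameters, and NN has the highest grid-search mean, with SVM close behind.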

| T-test | p-value |
|---|---|
| SVM vs NN | 0.86123788 |
| SVM vs RF | 0.00280216 |
| SVM vs KNN | 0.00495305 |

| Group 1 | Group 2 | Mean diff | p-adj | Lower | Upper | Reject |
|---|---|---|---|---|---|---|
| KNN | NN | 0.1173 | 0.0006 | 0.0619 | 0.1728 | True |
| KNN | RF | 0.009 | 0.009519 | -0.0464 | 0.0644 | True |
| KNN | SVM | 0.115 | 0.0007 | 0.0596 | 0.1704 | True |
| NN | RF | -0.1083 | 0.0011 | -0.1638 | -0.0529 | True |
| NN | SVM | -0.0023 | 0.999 | -0.0578 | 0.0531 | False |
| RF | SVM | 0.106 | 0.0013 | 0.0506 | 0.1614 | True |

| Actual | Prediction | Correct (T/F) |
|---|---|---|
| Beh (ب) | Beh (ب) | T |
| Dal (د) | Ain (ع) | F |
| Ain (ع) | Ain (ع) | T |
| Feh (ف) | Qaf (ق) | F |
| Heh (ه) | Heh (ه) | T |
| Jeem (ج) | Jeem (ج) | T |
| Kaf (ك) | Kaf (ك) | T |
| Lam (ل) | Dal (د) | F |
| Meem (م) | Meem (م) | T |
| Noon (ن) | Noon (ن) | T |
| Qaf (ق) | Feh (ف) | F |
| Raa (ر) | Raa (ر) | T |
| Sad (ص) | Sad (ص) | T |
| Seen (س) | Seen (س) | T |
| Tah (ت) | Tah (ت) | T |
| Waw (و) | Heh (ه) | F |
| Yaa (ي) | Beh (ب) | F |

| Paper | Language | Method | Result |
|---|---|---|---|
| [1] | Air-writing English | 2D-CNN | Accuracy: 91.24% |
| [3] | Air-writing English | LSTM | Accuracy: 99.32% |
| [4] | English | Faster RCNN | Accuracy: 94% |
| [5] | Air-writing Korean and English | 3D ResNet | Character error rate (CER): Korean 33.16%, English 29.24% |
| [12] | Air-writing English | Faster RCNN | Mean accuracy: 96.11% |
| [29] | Air-writing English | - | Error rate: 0.8% |
| [30] | Air-writing English | MS-CNN | Accuracy: 95% |
| [31] | Air-writing Hindi | PointNet | Recognition rate: >97% |
| Our model | Air-writing Arabic | Hybrid model VGG16+NN | Accuracy: 88% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).