1. Introduction of Breast Cancer

Breast cancer is a type of cancer that forms in the cells of the breast tissue [1]. It is one of the most common cancers affecting women worldwide, although it can also occur, much less frequently, in men. Breast cancer typically begins in the milk ducts (ductal carcinoma) [2] or in the milk-producing lobules (lobular carcinoma) of the breast [3]. The disease can manifest as a lump or mass in the breast, and if left untreated it can spread to other parts of the body.

The exact cause of breast cancer is not fully understood, but several risk factors have been identified. These include genetic mutations, such as mutations in the BRCA1 and BRCA2 genes, which increase the likelihood of developing breast cancer [4,5,6]. Other risk factors include a family history of the disease, age, sex, hormonal factors (such as early onset of menstruation or late menopause), and exposure to certain environmental factors. Although these factors can contribute to the development of breast cancer, many cases occur without an identifiable cause [7].

Early detection is critical in the management of breast cancer [8]. Regular screening methods such as mammography, clinical breast exams, and breast self-exams can help identify abnormalities in the breast tissue. If breast cancer is suspected, further diagnostic tests, including biopsies, may be performed to confirm the diagnosis [9]. Treatment options for breast cancer vary depending on the stage and type of cancer but often include surgery, chemotherapy, radiation therapy, hormone therapy, targeted therapy, or a combination of these approaches. The choice of treatment is personalized based on the patient's specific case [10].

Breast cancer awareness and research have made significant progress in recent years, leading to improved diagnostic techniques and more effective treatments [11]. Early detection and advances in medical technology have contributed to higher survival rates for individuals diagnosed with breast cancer [12]. Supportive care and psychosocial services are also essential components of breast cancer treatment, as they address the emotional and psychological well-being of patients and survivors [13]. Public awareness campaigns, ongoing research, and regular screening continue to play a vital role in reducing the impact of breast cancer on individuals and communities worldwide [14].

The remainder of this review is organized as follows. Section 2 introduces the functionality and application areas of deep learning. Section 3 describes deep learning techniques for breast cancer detection. Section 4 describes breast cancer detection using transfer learning. Section 5 discusses the role of generative adversarial networks (GANs) in breast cancer detection. Section 6 describes lifelong learning and its application to breast cancer detection. Finally, Section 7 presents conclusions and future directions. This review aims to help readers understand the key role that deep learning methods play in breast cancer detection, as well as the directions for future research. Deep learning provides a powerful tool for improving the early detection and accurate classification of breast cancer, which is expected to improve patient survival and treatment outcomes.

2. Deep Learning

Deep learning is a subfield of machine learning that focuses on the development and training of artificial neural networks, particularly deep neural networks [15]. It has gained widespread attention in recent years because of its remarkable ability to learn complex patterns and data representations. Its key ingredients include neural network architectures, deep learning algorithms and techniques [16], large-scale datasets, and hardware acceleration and distributed computing [17]. Many network architectures exist; the following two are particularly important for breast cancer classification.

2.1. Convolutional Neural Network (CNN)

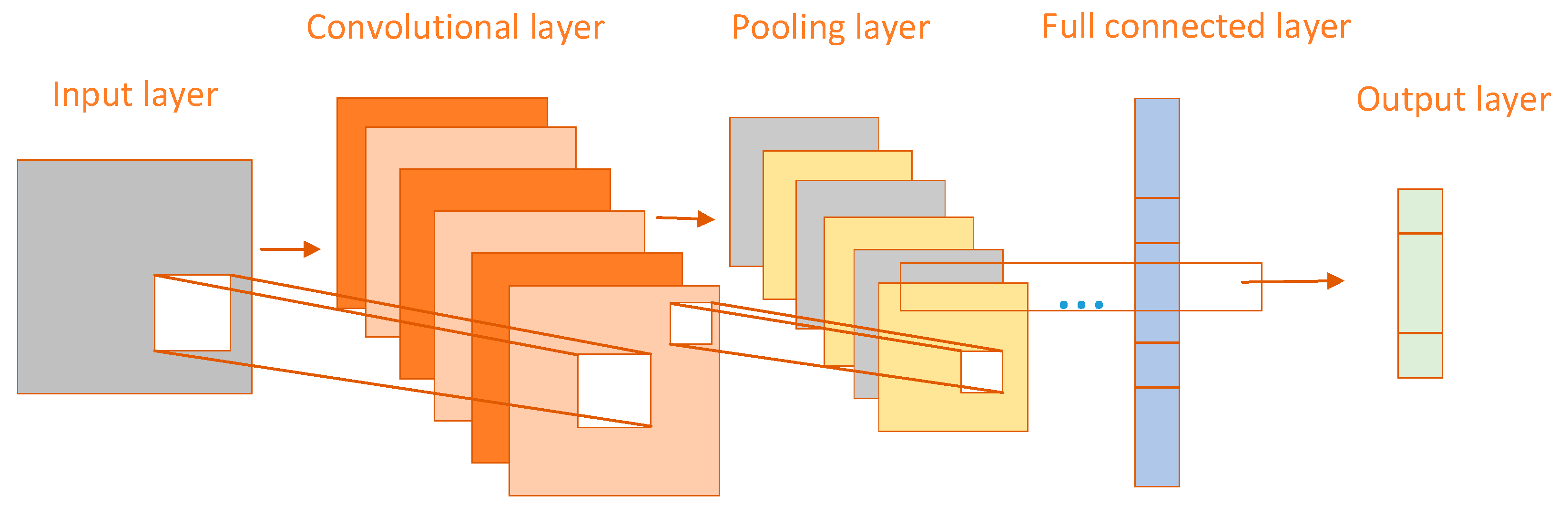

CNNs perform well at processing image data and extracting features, and they are therefore widely used in breast cancer classification tasks [18]. A CNN is built from three types of layers: convolutional layers, pooling layers, and fully connected layers [19]. The network structure of a CNN is shown in Figure 1. The convolutional layer is the most important component. It applies convolutional kernels to an image to extract features, producing feature maps that capture edges, textures, and other patterns. Multiple convolutional layers are generally stacked, and the deeper the stack, the more abstract the extracted features become [20]. The pooling layer reduces the spatial size of the feature maps, which lowers the amount of computation and the complexity of the model. The most commonly used pooling operations are max pooling and average pooling. In a fully connected layer, each neuron is connected to all neurons in the previous layer. These layers map the abstract features to the output categories, and a softmax activation function converts the outputs into a probability distribution over the categories.
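The layer sequence just described (convolution, nonlinearity, pooling, fully connected layer, softmax) can be traced end to end on a toy input. The sketch below is a minimal, dependency-free forward pass under stated assumptions. The 6×6 "image", the edge kernel, and the dense-layer weights are hypothetical values chosen for illustration. The three output classes are only a placeholder labelling and do not come from a trained model.

```python
import math

def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation (no padding), as computed by CNN conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/cols that do not fill a window are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

def dense(features, weights, biases):
    """Fully connected layer: every output neuron sees every input feature."""
    return [sum(w * f for w, f in zip(row, features)) + b
            for row, b in zip(weights, biases)]

def softmax(logits):
    m = max(logits)                      # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy 6x6 input with a vertical dark-to-bright edge (illustrative, not a mammogram).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
edge_kernel = [[-1, 0, 1]] * 3           # responds to dark-to-bright vertical edges

fmap = relu(conv2d(image, edge_kernel))  # 4x4 feature map
pooled = max_pool(fmap, 2)               # 2x2 after pooling
features = [v for row in pooled for v in row]  # flatten to 4 features

# Hypothetical weights for three output classes (e.g. normal / benign / malignant).
W = [[0.1] * 4, [0.2] * 4, [0.0] * 4]
b = [0.0, 0.0, 0.0]
probs = softmax(dense(features, W, b))
print(pooled, [round(p, 3) for p in probs])
```

Real CNNs learn many kernels per layer and operate on multi-channel tensors. This sketch keeps a single kernel so that each stage's output shape can be followed by hand.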

2.2. Recurrent Neural Network (RNN)

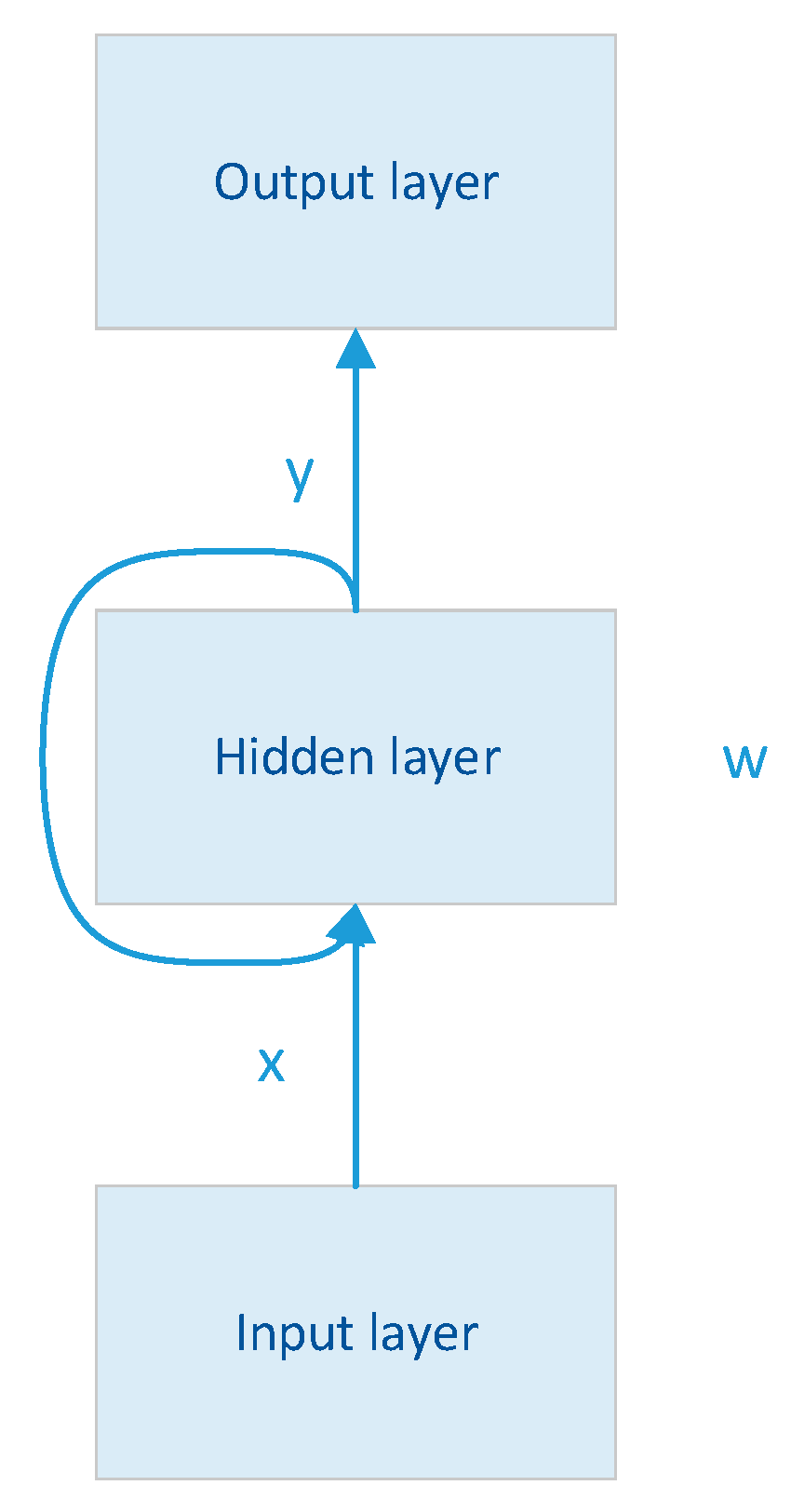

An RNN is a deep learning model that consists mainly of a three-layer structure (input, hidden, and output layers), as shown in Figure 2. Its core feature is a distinctive recurrent (cyclic) structure that maintains and updates an internal state [21]. This structure allows information to be passed from one time step to the next, so RNNs are well suited to data with temporal or sequential relationships. When breast data have a time-series or sequential structure, RNNs can therefore play an important role in breast cancer classification. When deep neural networks are trained, the gradient is the signal used to update the model parameters. In the backpropagation algorithm, the gradient propagates from the output layer back to the input layer, and in RNNs it must also be passed back across time steps [22]. When the network is very deep or the sequence is very long, the gradient may become very small (vanishing gradients) or very large (exploding gradients), leading to ineffective or unstable weight updates. To address this problem, RNN variants such as Long Short-Term Memory (LSTM) and the Gated Recurrent Unit (GRU) were developed.
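The vanishing/exploding behaviour follows directly from the chain rule. Backpropagating through T time steps multiplies T per-step factors together. The minimal sketch below makes that product explicit for a scalar RNN, assuming the simple recurrence h_t = act(w·h_{t−1} + u·x_t). The function name `bptt_gradient` and all numeric settings are illustrative choices, not taken from the cited works.

```python
import math

def bptt_gradient(w, u, xs, h0=0.0, activation="tanh"):
    """Return dh_T/dh_0 for the scalar RNN h_t = act(w * h_{t-1} + u * x_t).

    By the chain rule, dh_T/dh_0 is the product over t of act'(a_t) * w,
    one factor per time step. If the factors are mostly below 1 the
    product vanishes; if they are above 1 it explodes.
    """
    h = h0
    grad = 1.0
    for x in xs:
        a = w * h + u * x
        if activation == "tanh":
            h = math.tanh(a)
            local = 1.0 - h * h      # tanh'(a) = 1 - tanh(a)^2
        elif activation == "linear":
            h = a
            local = 1.0
        else:
            raise ValueError("activation must be 'tanh' or 'linear'")
        grad *= local * w            # chain rule through one time step
    return grad

T = 50
zeros = [0.0] * T
print("w=0.5:", bptt_gradient(0.5, 1.0, zeros, activation="linear"))  # equals 0.5**50
print("w=1.5:", bptt_gradient(1.5, 1.0, zeros, activation="linear"))  # about 1.5**50
print("tanh, w=1.5:", bptt_gradient(1.5, 1.0, [1.0] * T))  # saturation makes it vanish
```

The third call shows that a saturating activation can make the gradient vanish even when |w| > 1. Gated architectures such as LSTM and GRU were introduced to address this effect.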

LSTM is an improved RNN architecture designed specifically to mitigate the vanishing gradient problem. It introduces three gates (the input gate, forget gate, and output gate) that control the flow of information into and out of the internal cell state. The forget gate lets the network discard unimportant information, the input gate lets it accept new information, and the output gate determines which information is output. Because the cell state is updated additively under the control of these gates, the LSTM can capture long-term dependencies while greatly reducing vanishing gradients [23]. Exploding gradients can still occur and are commonly handled with gradient clipping.
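The gate equations can be written out for a single scalar unit to show how the forget gate preserves the cell state. The sketch below follows the widely used LSTM formulation with a forget gate. The parameter layout, one (w, u, b) triple per gate for input x and previous hidden state h, is an assumption made for readability. All parameter values are hypothetical and were chosen by hand, not learned.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps 'f' (forget), 'i' (input), 'o' (output) and 'g' (candidate)
    to (w, u, b) triples; the values used below are hypothetical.
    """
    def pre(name):
        w, u, b = p[name]
        return w * x + u * h_prev + b

    f = sigmoid(pre("f"))        # forget gate: fraction of c_prev to keep
    i = sigmoid(pre("i"))        # input gate: fraction of new content to write
    o = sigmoid(pre("o"))        # output gate: fraction of the cell to expose
    g = math.tanh(pre("g"))      # candidate content
    c = f * c_prev + i * g       # additive cell-state update
    h = o * math.tanh(c)         # new hidden state
    return h, c

# Forget gate held open (bias +10) and input gate held closed (bias -10):
keep = {"f": (0.0, 0.0, 10.0), "i": (0.0, 0.0, -10.0),
        "o": (0.0, 0.0, 0.0), "g": (1.0, 0.0, 0.0)}
h, c = 0.0, 1.0
for _ in range(20):
    h, c = lstm_step(1.0, h, c, keep)
print("cell state after 20 steps:", round(c, 4))  # stays close to 1.0
```

Because dc_t/dc_{t−1} equals the forget-gate value f, an open forget gate lets both the stored information and its gradient pass through many steps almost unchanged.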

GRU is another improved RNN architecture, similar to the LSTM but simpler. It has a reset gate and an update gate that control how the internal state is updated. With fewer gates and parameters, the GRU has lower computational overhead, yet it still captures long-term dependencies effectively and mitigates the gradient problem. These improved variants (LSTM and GRU) have become the preferred choice for sequential data, especially in tasks that require modeling long-term dependencies [24]. They have achieved significant success in areas such as natural language processing, machine translation, and speech recognition, because they allow models to better capture contextual information in sequential data while mitigating the gradient problem.
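For comparison with the LSTM, here is a single scalar GRU step. The sketch uses the interpolation convention h = (1 − z)·n + z·h_prev, the form given in PyTorch's `nn.GRU` documentation, in which z close to 1 keeps the old state. Some papers swap the roles of z and 1 − z. The (w, u, b) parameter layout and the values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One step of a scalar GRU cell with update gate z and reset gate r.

    p maps 'z', 'r' and 'n' (candidate) to hypothetical (w, u, b) triples.
    """
    def pre(name, h):
        w, u, b = p[name]
        return w * x + u * h + b

    z = sigmoid(pre("z", h_prev))        # update gate: how much old state to keep
    r = sigmoid(pre("r", h_prev))        # reset gate: how much history feeds the candidate
    n = math.tanh(pre("n", r * h_prev))  # candidate state from reset-scaled history
    return (1.0 - z) * n + z * h_prev    # interpolate between candidate and old state

# Update gate held near 1 (bias +10): the hidden state is carried forward.
params = {"z": (0.0, 0.0, 10.0), "r": (0.0, 0.0, 0.0), "n": (1.0, 0.0, 0.0)}
h = 0.8
for _ in range(10):
    h = gru_step(1.0, h, params)
print(round(h, 3))
```

The GRU needs three parameter triples here, compared with four for the LSTM sketch. This difference is a small-scale view of the lower overhead described above.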

In Figure 2, x, w, and y denote the matrices that linearly transform the vectors of the input, hidden, and output layers, respectively.

At the core of deep learning are artificial neural networks, which are inspired by the structure and function of the human brain. These networks consist of interconnected nodes, or "neurons," organized into layers. Deep learning specifically refers to neural networks with multiple hidden layers between the input and output layers [25]. These deep architectures allow the network to automatically learn hierarchical features and representations from data, making them exceptionally powerful for tasks like image and speech recognition, natural language processing, and more.

Deep learning excels at feature learning and representation. Unlike traditional machine learning, which often requires manual feature engineering, deep learning models automatically extract relevant features from raw data [26,27]. This is particularly valuable when working with unstructured data, such as images, audio, or text. Deep neural networks can capture intricate patterns and abstract features at different levels of granularity, allowing them to handle a wide range of complex tasks.

Deep learning models require large amounts of labeled data for effective training. Some common breast cancer datasets are listed in Table 1. With the advent of big data and advances in computational resources, deep learning has become more practical and feasible. Extensive datasets help deep neural networks generalize to new, unseen data, improving performance in real-world applications. However, acquiring and preparing high-quality data remains a significant challenge in many deep learning projects.

Deep learning has found applications across various domains, including computer vision (e.g., image and video analysis), natural language processing (e.g., language translation and sentiment analysis), speech recognition, autonomous vehicles, healthcare (e.g., medical image analysis and drug discovery), and more. Its versatility and ability to handle large-scale, complex problems make it a valuable tool for solving a wide range of real-world challenges [29].

In summary, deep learning is a subfield of machine learning that focuses on neural networks with multiple hidden layers. It excels at automatically learning features and representations from data, relies on large datasets for training, and has found applications in numerous domains [30]. Its capacity to tackle complex problems and extract meaningful patterns from diverse data types has made deep learning a transformative technology with far-reaching implications for industries and research fields.

3. Deep Learning for Breast Cancer Detection

Deep learning has demonstrated significant potential in improving the early detection and diagnosis of breast cancer [

31,

32,

33]. Deep learning algorithms, particularly CNN [

34,

35], excel in image analysis. In the context of breast cancer, mammography and digital breast tomosynthesis (DBT) are commonly used imaging techniques. Deep learning models can be trained on large datasets of breast images, allowing them to automatically detect and classify suspicious features such as masses, microcalcifications, and architectural distortions. These models can assist radiologists by providing a preliminary analysis, reducing the risk of human error, and potentially increasing the accuracy of cancer detection [

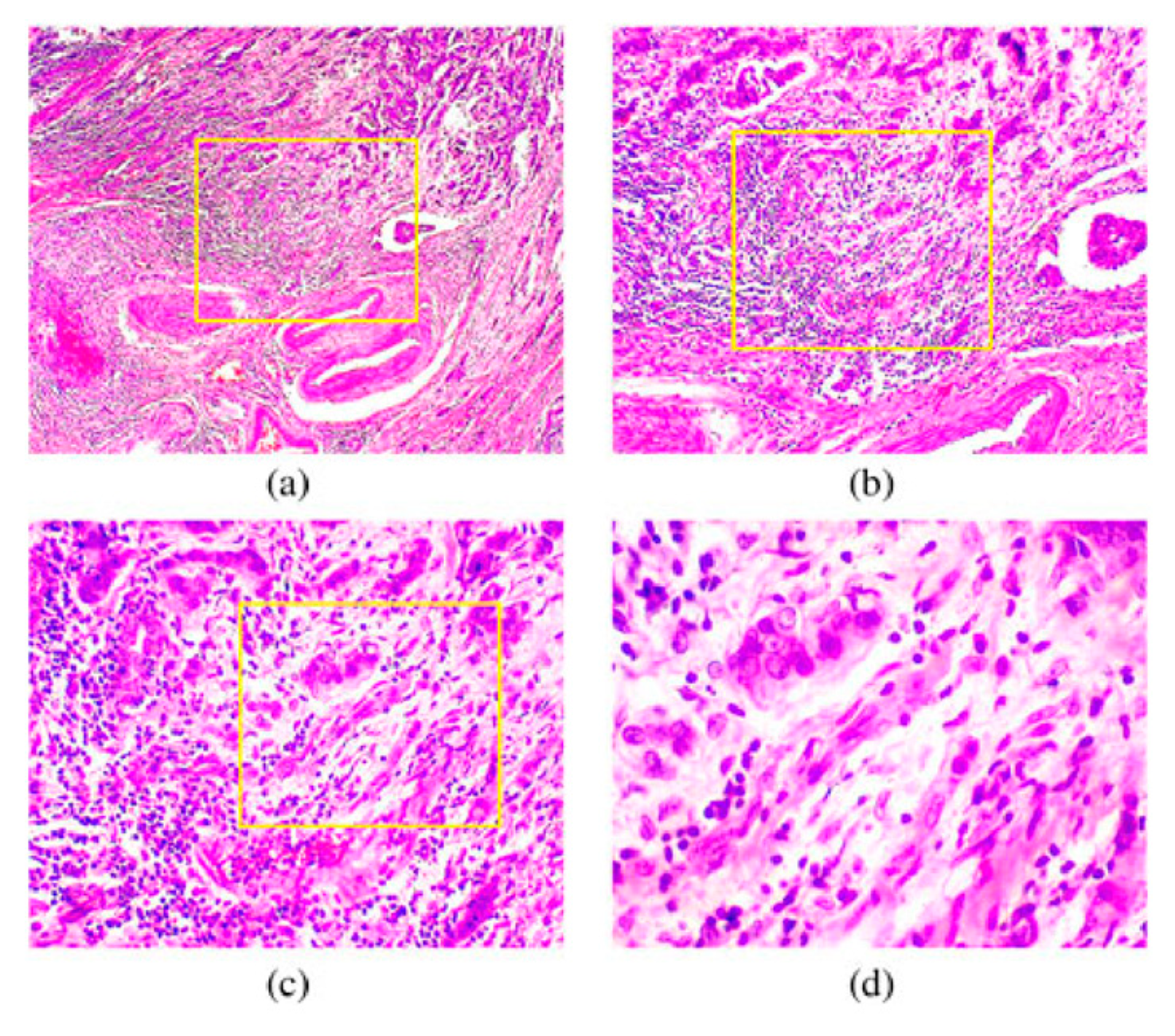

36]. A section of a malignant tumor of breast cells is shown in

Figure 3.

Early detection is crucial for improving breast cancer outcomes. Deep learning can help identify subtle abnormalities that might be missed during routine screenings [37]. By analyzing mammograms and other breast imaging data, deep learning models can flag areas of concern, prompting further evaluation and potentially leading to earlier diagnosis and treatment initiation [38]. This can be particularly beneficial in cases where cancer is in its early, more treatable stages.

Deep learning can also contribute to risk assessment and personalized screening programs. By analyzing a patient's medical history, genetic information, and breast imaging data, deep learning models can help identify individuals at higher risk of developing breast cancer [7,39,40]. This information can guide healthcare providers in tailoring screening recommendations, ensuring that high-risk patients receive more frequent or advanced screenings, while low-risk patients can benefit from less intensive screening protocols.

One challenge in breast cancer screening is the occurrence of false-positive results, which can lead to unnecessary anxiety, follow-up tests, and healthcare costs. Deep learning models can be trained to reduce false positives by refining their ability to differentiate between benign and malignant lesions [41]. By doing so, they can enhance the overall efficiency and cost-effectiveness of breast cancer screening programs.
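False positives, and the accuracy figures quoted for the studies later in this section, are easier to compare with the standard confusion-matrix quantities in hand. The sketch below computes accuracy, sensitivity, specificity, precision, and false-positive rate. It treats malignant as the positive class, which is an assumption. It then shows how raising the decision threshold on a model's scores trades false positives against missed cancers. The labels and scores are invented for illustration.

```python
def screening_metrics(y_true, y_pred):
    """Binary screening metrics with 1 = malignant (positive), 0 = benign/normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,  # recall on cancers
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,    # positive predictive value
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }

# Hypothetical ground truth and model malignancy scores for 10 screening cases.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.4, 0.7, 0.55, 0.3, 0.2, 0.1, 0.35, 0.15, 0.05]

for threshold in (0.5, 0.8):
    y_pred = [1 if s >= threshold else 0 for s in scores]
    print(threshold, screening_metrics(y_true, y_pred))
```

Screening populations are dominated by negatives, so accuracy alone can look high even when sensitivity is poor. This is why studies usually report several of these metrics together.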

Deep learning can be integrated with other diagnostic tools and modalities. For example, it can assist pathologists in analyzing breast tissue samples (histopathology) by automating the identification of cancerous cells and assessing tumor characteristics [42]. Additionally, deep learning models can aid in the interpretation of breast MRI scans, offering a complementary approach to mammography and improving diagnostic accuracy in cases where MRI is more suitable.

Several recent studies apply deep learning to breast cancer detection. Mahoro et al. [43] summarized different breast cancer screening methods to lay a foundation for accurate breast cancer classification. They also reviewed applications of deep learning to breast imaging and discussed the current challenges of bringing AI into clinical breast cancer practice. Umer et al. [44] proposed a deep learning method for classifying histopathological images to achieve multi-class breast cancer classification. The researchers developed a deep learning model with a feature fusion and selection mechanism, called 6B-Net, which has a short training time and classifies the data efficiently. To relieve doctors of manually classifying breast cancer regions, Ting et al. [45] proposed an improved CNN-based breast cancer detection algorithm that classifies images into three classes (malignant, benign, and healthy). It achieves an accuracy of 90.50% and performs well on other evaluation metrics, automating breast cancer classification and reducing the burden on doctors, although its accuracy leaves room for improvement. Obayya et al. [46] developed a deep-learning-based arithmetic optimization algorithm for histopathological breast cancer classification (AOADL-HBCC), with an accuracy of 96.77%. The authors used median filtering and contrast enhancement for preprocessing, which helped improve the accuracy of the algorithm. In addition, the paper uses a deep belief network (DBN) classifier with a hyperparameter optimizer. Beyond deep learning alone, combining deep learning with other methods has also shown promising results for breast cancer detection. For example, Jabeen et al. [47] proposed a breast cancer classification method that combines deep learning with the best selected features. It reaches an optimal accuracy of 99.1%, outperforming most classification algorithms. The proposed framework has five steps: dataset augmentation is performed first, feature extraction is performed in the two middle steps, model optimization follows, and in the last step the deep learning and best selected features are fused to complete the classification of breast cancer.
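Median filtering and contrast enhancement, the preprocessing steps mentioned for AOADL-HBCC, can be illustrated on a tiny grayscale patch. The sketch below is a generic version and does not reproduce Obayya et al.'s pipeline. The 3×3 window and the edge handling (using only the in-image part of the window) are assumptions. Min-max contrast stretching is likewise an assumed stand-in, since the text does not say which contrast method the authors used. The pixel values are invented.

```python
from statistics import median

def median_filter(img, k=3):
    """k x k median filter; at borders, only the part of the window inside the image is used."""
    r = k // 2
    h, w = len(img), len(img[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [img[a][b]
                      for a in range(max(0, i - r), min(h, i + r + 1))
                      for b in range(max(0, j - r), min(w, j + r + 1))]
            row.append(median(window))   # robust to isolated outliers (salt noise)
        out.append(row)
    return out

def contrast_stretch(img, out_min=0.0, out_max=255.0):
    """Linear min-max stretch of intensities to [out_min, out_max]."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:                          # flat image: nothing to stretch
        return [[out_min for _ in row] for row in img]
    scale = (out_max - out_min) / (hi - lo)
    return [[out_min + (v - lo) * scale for v in row] for row in img]

# Hypothetical low-contrast patch (values 100 and 120) with one "salt" pixel at (3, 3).
img = [[100] * 5,
       [100] * 5,
       [120] * 5,
       [120, 120, 120, 255, 120],
       [120] * 5]
filtered = median_filter(img)           # the 255 outlier is removed
enhanced = contrast_stretch(filtered)   # 100..120 mapped onto 0..255
print(filtered[3], [round(v) for v in enhanced[0]], [round(v) for v in enhanced[4]])
```

The order of the two steps matters here. Stretching before filtering would let the single noisy pixel set the upper end of the intensity range.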

4. Transfer Learning for Breast Cancer Detection

Transfer learning can play a pivotal role in improving the accuracy and efficiency of breast cancer detection through various approaches and applications [48,49,50]. It can leverage deep neural networks, such as CNNs, that have been pre-trained on vast image datasets [51]. These networks have learned to extract generic features from images, which can be highly valuable for breast cancer detection. By fine-tuning these networks on specific breast imaging datasets [52], such as mammograms or ultrasound images, transfer learning enables the extraction of relevant features associated with breast cancer, such as the shape, texture, and spatial patterns of abnormalities [53]. This reduces the need for manual feature engineering and accelerates the development of accurate detection models.
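The simplest form of the pre-train-then-adapt idea is to freeze the pre-trained feature extractor and train only a new classification head. The dependency-free sketch below assumes a hypothetical two-unit ReLU layer (`FROZEN_W`) as a stand-in for a pretrained CNN backbone, and a logistic-regression head trained with SGD on binary cross-entropy. The tiny labelled set is invented. In PyTorch, the same freezing is usually done by setting `requires_grad = False` on the backbone's parameters. Full fine-tuning would later unfreeze some of those layers.

```python
import math

# Hypothetical stand-in for a pretrained backbone: fixed weights, never updated.
FROZEN_W = ((1.0, -1.0), (0.5, 0.5))

def frozen_features(x):
    """ReLU features from the frozen layer (plays the role of CNN features)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]

def predict_proba(head, x):
    """Logistic-regression head applied on top of the frozen features."""
    w, b = head
    z = sum(wi * fi for wi, fi in zip(w, frozen_features(x))) + b
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, epochs=300, lr=0.1):
    """Train only the head with SGD on binary cross-entropy; the backbone is untouched."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)
            err = predict_proba((w, b), x) - y   # dLoss/dlogit for cross-entropy
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

# Tiny hypothetical labelled set (1 = "lesion", 0 = "no lesion"); in practice x
# would be an image and the frozen extractor a pretrained CNN.
data = [((3.0, 1.0), 1), ((1.0, 3.0), 0), ((4.0, 0.0), 1),
        ((0.0, 4.0), 0), ((2.0, 0.0), 1), ((0.0, 2.0), 0)]
head = train_head(data)
print([round(predict_proba(head, x), 2) for x, _ in data])
```

Only the head's three numbers are learned here. This is why transfer learning can work with small medical datasets: most of the representation is inherited rather than estimated from scarce labels.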

Breast cancer datasets, especially those with detailed annotations, can be limited in size and diversity. Transfer learning addresses this challenge by allowing the transfer of knowledge from larger and more diverse datasets. Models pre-trained on millions of images can be adapted for breast cancer detection, even when the specific dataset is relatively small [54,55]. This increases the efficiency of model training and enables the development of robust classifiers, particularly in cases where collecting large-scale, specialized medical datasets is challenging.

Transfer learning models benefit from the regularization effect of pre-training on large, diverse datasets. They tend to generalize better and are less prone to overfitting, even when dealing with limited medical data. By fine-tuning pre-trained models on breast cancer data, they can adapt their learned representations to the nuances of medical imaging, including variations in image quality, patient demographics, and disease presentation. This enhances the model's ability to make accurate predictions on new, unseen breast cancer cases [56].

Breast cancer detection often relies on multiple imaging modalities, such as mammography, MRI, and ultrasound. Transfer learning can be applied to each modality separately, and then the knowledge from these modalities can be fused to create a more comprehensive diagnostic model [57]. This fusion of information improves the overall accuracy and robustness of breast cancer detection systems, as it takes advantage of the strengths of each imaging technique.
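One simple way to combine per-modality models is late (decision-level) fusion: each modality's model outputs a malignancy probability, and the probabilities are combined with a weighted average. The sketch below is only one of several possible fusion strategies. Feature-level fusion is another option. The modality weights are hypothetical and would normally be learned or calibrated on validation data. Renormalising over the available modalities is an assumed design choice for patients who lack an exam.

```python
def late_fusion(modality_probs, weights):
    """Weighted average of per-modality malignancy probabilities.

    Both arguments are dicts keyed by modality name. Modalities without a
    weight are ignored, and weights are renormalised over those present.
    """
    available = [m for m in modality_probs if m in weights]
    total = sum(weights[m] for m in available)
    if total == 0:
        raise ValueError("no weighted modality available for this patient")
    return sum(weights[m] * modality_probs[m] for m in available) / total

# Hypothetical weights and per-modality model outputs for one patient.
weights = {"mammography": 0.5, "ultrasound": 0.3, "mri": 0.2}
print(late_fusion({"mammography": 0.8, "ultrasound": 0.6, "mri": 0.9}, weights))
print(late_fusion({"mammography": 0.8, "ultrasound": 0.6}, weights))  # no MRI available
```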

Transfer learning models can be continuously updated and adapted to evolving knowledge about breast cancer and the availability of new data [58]. As medical understanding of the disease advances and more patient data becomes accessible, transfer learning allows for the seamless integration of these insights into the diagnostic process. This adaptability ensures that breast cancer detection models remain up-to-date and aligned with the latest research and clinical practices [59].

5. GAN for Breast Cancer Detection

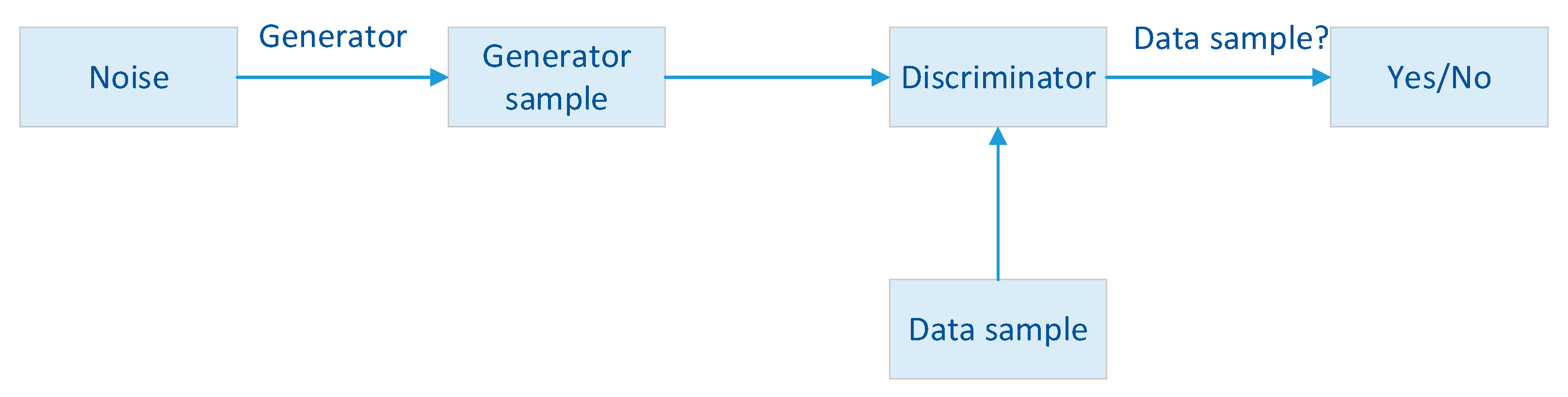

Generative Adversarial Networks (GANs) [24] are primarily known for their ability to generate realistic data, but they can also improve breast cancer detection through several innovative approaches [60,61]. GANs can be used to augment medical imaging datasets, including those used for breast cancer detection. A GAN trained on a relatively small dataset of real mammograms or other breast images can generate synthetic images that closely resemble real cases. These synthetic images can then be added to the original dataset, expanding and diversifying the training data [62]. Such an augmented dataset can improve the robustness and generalization of machine learning models, leading to more accurate breast cancer detection algorithms.
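For reference, the original GAN formulation (Goodfellow et al., 2014) trains a generator $G$ and a discriminator $D$ against each other with the minimax objective below. Here $\mathbf{x}$ is a real image (for example, a mammogram patch) drawn from the training data, $\mathbf{z}$ is a random noise vector, and $G(\mathbf{z})$ is a synthetic image. In the augmentation setting described above, samples of $G(\mathbf{z})$ drawn after training are the images that would be added to the dataset. In practice, the generator is often trained to maximize $\log D(G(\mathbf{z}))$ instead, which gives stronger gradients early in training.

$$
\min_{G}\max_{D}\; V(D,G) = \mathbb{E}_{\mathbf{x}\sim p_{\text{data}}}\big[\log D(\mathbf{x})\big] + \mathbb{E}_{\mathbf{z}\sim p_{\mathbf{z}}}\big[\log\big(1 - D(G(\mathbf{z}))\big)\big]
$$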

GANs can also be employed to fill in missing or incomplete parts of medical images. In the context of breast cancer detection, this can be particularly useful when portions of an image are obscured or unavailable due to technical limitations. GANs can generate realistic replacements for the missing regions, allowing radiologists and algorithms to analyze more complete images, potentially leading to better diagnostic accuracy [63].

GANs can assist in tumor segmentation, which is a critical step in breast cancer diagnosis and treatment planning. GAN-based segmentation models can be trained to delineate the boundaries of breast tumors in medical images with high precision [64]. This segmentation can help radiologists quantify the size and extent of tumors, providing valuable information for treatment decisions. The overall schematic of a GAN is shown in Figure 4.

Medical images can be noisy due to various factors, such as imaging equipment limitations and patient motion. GANs can be used to denoise medical images by learning to distinguish true anatomical structures from noise or artifacts. Cleaner images can improve the accuracy of breast cancer detection algorithms by reducing false positives and enhancing the visibility of subtle abnormalities [65,66,67].

GANs can create variations of breast images by altering factors such as tissue density, lesion characteristics, and image quality. This is particularly useful for testing the robustness of detection algorithms and ensuring that they perform well across diverse clinical scenarios [68,69]. By simulating different clinical conditions, GANs can help identify and correct potential weaknesses in breast cancer detection models.

6. Lifelong Learning for Breast Cancer Detection

Lifelong learning, a concept inspired by the way humans continually acquire and adapt knowledge throughout their lives, can significantly contribute to the improvement of breast cancer detection and diagnosis in several ways [70]. Lifelong learning systems can continuously update their knowledge and adapt to new information and techniques in the field of breast cancer detection [71]. As medical research advances and new diagnostic technologies emerge, these systems can integrate the latest findings into their algorithms. For example, when new biomarkers, imaging modalities, or diagnostic criteria are introduced, lifelong learning models can be retrained to incorporate this evolving knowledge, ensuring that breast cancer detection remains at the forefront of medical innovation.

Lifelong learning allows for the refinement and improvement of breast cancer detection models over time [72,73]. These models can start with a solid foundation, perhaps based on a large initial dataset, and then be fine-tuned and updated as new patient data becomes available. This iterative learning process leads to more accurate and reliable diagnostic models, which are essential for consistently improving detection rates and reducing false positives [74].
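A practical risk when a model is repeatedly fine-tuned on new patient data is catastrophic forgetting, in which the model loses performance on earlier data. One common mitigation is experience replay. A bounded memory of past cases is kept, and some of them are mixed into every update on new data. The sketch below fills that memory by reservoir sampling, which keeps each case seen so far with equal probability. It is an illustration of the general technique, not the method of the works cited above. The case labels, capacity, and batch sizes are hypothetical, and the training step itself is left as a comment.

```python
import random

class ReplayBuffer:
    """Fixed-size memory of past training cases, filled by reservoir sampling.

    After n cases have been offered, each one is in memory with probability
    capacity / n, so older data stay represented as new data arrive.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.n_seen = 0
        self.rng = random.Random(seed)

    def add(self, item):
        self.n_seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            j = self.rng.randrange(self.n_seen)  # uniform index in [0, n_seen)
            if j < self.capacity:
                self.items[j] = item             # replace a random stored case

    def mixed_batch(self, new_items, n_replay):
        """New cases plus up to n_replay stored older cases for one update step."""
        k = min(n_replay, len(self.items))
        return list(new_items) + self.rng.sample(self.items, k)

buffer = ReplayBuffer(capacity=20)
for i in range(100):                   # cases from an earlier collection period
    buffer.add(("period1", i))

new_cases = [("period2", i) for i in range(8)]
batch = buffer.mixed_batch(new_cases, n_replay=8)
# train_step(model, batch)  # hypothetical update on new + replayed cases
for case in new_cases:
    buffer.add(case)
print(len(batch), sum(1 for c in batch if c[0] == "period1"))
```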

Breast cancer diagnosis often relies on multiple sources of data, such as mammograms, biopsies, genetic information, and patient medical histories [75,76,77]. Lifelong learning systems can seamlessly integrate and synthesize information from various modalities, creating a more holistic and comprehensive view of a patient's condition. By considering a wide range of data sources, these systems can offer more precise and personalized diagnostic recommendations.

Lifelong learning can facilitate patient-centered care by incorporating patient feedback and outcomes data into the learning process [78]. This allows the system to consider individual patient experiences, preferences, and responses to treatment, which can lead to more tailored and effective breast cancer detection and treatment strategies. Patient-centered approaches can improve patient satisfaction and overall healthcare outcomes.

Lifelong learning models can help reduce diagnostic delays by continuously optimizing the triage and prioritization of breast cancer cases [77,79]. By learning from historical data and adapting to changing trends, these systems can assist healthcare providers in identifying high-risk cases more quickly, ensuring that patients receive timely evaluations and treatments, which is crucial for better outcomes and survival rates.
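Model-driven triage ultimately produces a reading worklist ordered by predicted risk. The sketch below assumes that a model assigns each incoming case a risk score. It uses a priority queue so that the highest-risk case is always reviewed next, with ties resolved in arrival order. The case identifiers and scores are hypothetical. A lifelong-learning system would update the model that produces the scores, not this queue logic.

```python
import heapq
import itertools

class TriageQueue:
    """Worklist that always returns the case with the highest model risk score.

    heapq is a min-heap, so scores are stored negated; a running counter
    breaks ties so that equal scores are served in arrival order.
    """

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()

    def push(self, case_id, risk_score):
        heapq.heappush(self._heap, (-risk_score, next(self._counter), case_id))

    def pop(self):
        neg_score, _, case_id = heapq.heappop(self._heap)
        return case_id, -neg_score

    def __len__(self):
        return len(self._heap)

q = TriageQueue()
for case_id, score in [("A", 0.12), ("B", 0.91), ("C", 0.47), ("D", 0.91)]:
    q.push(case_id, score)          # hypothetical model risk scores
order = [q.pop()[0] for _ in range(len(q))]
print(order)
```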

7. Conclusion and Future Directions

In conclusion, deep learning has made significant strides in the field of breast cancer detection, offering the potential to revolutionize the way we diagnose and treat this disease. With its ability to automatically extract complex features from medical images, deep learning models have shown promise in improving the accuracy and efficiency of breast cancer detection. Additionally, transfer learning techniques and the integration of multimodal data have further enhanced the capabilities of deep learning algorithms in this domain.

The future of deep learning for breast cancer detection holds several exciting directions. Firstly, the development of more interpretable models will be crucial to gaining the trust of medical professionals and ensuring the transparency of decision-making processes. Explainable AI techniques will enable clinicians to understand and validate the decisions made by deep learning systems. Secondly, deep learning models could be applied to address the challenges of early detection and the identification of high-risk populations, allowing for more targeted and effective screening programs. Furthermore, the use of deep learning in predicting treatment responses and patient outcomes is an emerging area of research, which has the potential to personalize treatment plans and improve patient care.

Lastly, collaboration between researchers, clinicians, and AI experts will be essential for the successful integration of deep learning into clinical practice. Ensuring that AI models are rigorously evaluated, validated, and ethically deployed is critical for their adoption in healthcare settings. As the field continues to evolve, deep learning has the potential to make a profound impact on breast cancer detection, ultimately leading to earlier diagnoses, better treatment outcomes, and improved patient care.

Funding

This research did not receive any grants.

Acknowledgments

We thank all the anonymous reviewers for their hard reviewing work.

References

- Won, H.S.; Ahn, J.; Kim, Y.; Kim, J.S.; Song, J.-Y.; Kim, H.-K.; Lee, J.; Park, H.K.; Kim, Y.-S. Clinical significance of HER2-low expression in early breast cancer: a nationwide study from the Korean Breast Cancer Society. Breast Cancer Res. 2022, 24, 22.

- Zhang, Y.-D.; Wang, S.-H.; Liu, G.; Yang, J. Computer-aided diagnosis of abnormal breasts in mammogram images by weighted-type fractional Fourier transform. Adv. Mech. Eng. 2016, 8.

- Guha, A.; Fradley, M.G.; Dent, S.F.; Weintraub, N.L.; Lustberg, M.B.; Alonso, A.; Addison, D. Incidence, risk factors, and mortality of atrial fibrillation in breast cancer: a SEER-Medicare analysis. Eur. Hear. J. 2021, 43, 300–312.

- Sui, S.; Xu, S.; Pang, D. Emerging role of ferroptosis in breast cancer: New dawn for overcoming tumor progression. Pharmacol. Ther. 2021, 232, 107992.

- Trapani, D.; Ginsburg, O.; Fadelu, T.; Lin, N.U.; Hassett, M.; Ilbawi, A.M.; Anderson, B.O.; Curigliano, G. Global challenges and policy solutions in breast cancer control. Cancer Treat. Rev. 2022, 104, 102339.

- Shen, K.; Yu, H.; Xie, B.; Meng, Q.; Dong, C.; Shen, K.; Zhou, H.-B. Anticancer or carcinogenic? The role of estrogen receptor β in breast cancer progression. Pharmacol. Ther. 2023, 242, 108350.

- Nassif, A.B.; Abu Talib, M.; Nasir, Q.; Afadar, Y.; Elgendy, O. Breast cancer detection using artificial intelligence techniques: A systematic literature review. Artif. Intell. Med. 2022, 127, 102276.

- Zhang, Y.; Wu, X.; Lu, S.; Wang, H.; Phillips, P.; Wang, S. Smart detection on abnormal breasts in digital mammography based on contrast-limited adaptive histogram equalization and chaotic adaptive real-coded biogeography-based optimization. SIMULATION 2016, 92, 873–885.

- Fan, R.; Tao, X.; Zhai, X.; Zhu, Y.; Li, Y.; Chen, Y.; Dong, D.; Yang, S.; Lv, L. Application of aptamer-drug delivery system in the therapy of breast cancer. Biomed. Pharmacother. 2023, 161, 114444.

- Arnold, M.; Morgan, E.; Rumgay, H.; Mafra, A.; Singh, D.; Laversanne, M.; Vignat, J.; Gralow, J.R.; Cardoso, F.; Siesling, S.; et al. Current and future burden of breast cancer: Global statistics for 2020 and 2040. Breast 2022, 66, 15–23.

- Zhang, Y.-D.; Pan, C.; Chen, X.; Wang, F. Abnormal breast identification by nine-layer convolutional neural network with parametric rectified linear unit and rank-based stochastic pooling. J. Comput. Sci. 2018, 27, 57–68.

- Epaillard, N.; Bassil, J.; Pistilli, B. Current indications and future perspectives for antibody-drug conjugates in brain metastases of breast cancer. Cancer Treat. Rev. 2023, 119, 102597.

- Smolarz, B.; Nowak, A.Z.; Romanowicz, H. Breast Cancer—Epidemiology, Classification, Pathogenesis and Treatment (Review of Literature). Cancers 2022, 14, 2569.

- Wang, S.; Rao, R.V.; Chen, P.; Zhang, Y.; Liu, A.; Wei, L. Abnormal Breast Detection in Mammogram Images by Feed-forward Neural Network Trained by Jaya Algorithm. Fundam. Informaticae 2017, 151, 191–211.

- Wang, S. Advances in data preprocessing for biomedical data fusion: an overview of the methods, challenges, and prospects. Inf. Fusion 2021, 76, 376–421.

- Jo, H.-K.; Kim, S.-H.; Kim, C.-L. Proposal of a new method for learning of diesel generator sounds and detecting abnormal sounds using an unsupervised deep learning algorithm. Nucl. Eng. Technol. 2023, 55, 506–515.

- Mohammed, A.; Kora, R. A comprehensive review on ensemble deep learning: Opportunities and challenges. J. King Saud Univ. - Comput. Inf. Sci. 2023, 35, 757–774.

- Yu, C.; Bi, X.; Fan, Y. Deep learning for fluid velocity field estimation: A review. Ocean Eng. 2023, 271.

- Wang, S.-H.; Nayak, D.R.; Guttery, D.S.; Zhang, X.; Zhang, Y.-D. COVID-19 classification by CCSHNet with deep fusion using transfer learning and discriminant correlation analysis. Inf. Fusion 2020, 68, 131–148.

- Luca, M.; Barlacchi, G.; Lepri, B.; Pappalardo, L. A Survey on Deep Learning for Human Mobility. ACM Comput. Surv. 2021, 55, 1–44.

- Khanduzi, R.; Sangaiah, A.K. An efficient recurrent neural network for defensive Stackelberg game. J. Comput. Sci. 2023, 67.

- Zhu, Z.; Lei, Y.; Qi, G.; Chai, Y.; Mazur, N.; An, Y.; Huang, X. A review of the application of deep learning in intelligent fault diagnosis of rotating machinery. Measurement 2023, 206.

- Fang, Y.; Han, H.-B.; Bo, W.-B.; Liu, W.; Wang, B.-H.; Wang, Y.-Y.; Dai, C.-Q. Deep neural network for modeling soliton dynamics in the mode-locked laser. Opt. Lett. 2023, 48, 779–782.

- Zhang, J.; Yan, Q.; Zhu, X.; Yu, K. Smart industrial IoT empowered crowd sensing for safety monitoring in coal mine. Digit. Commun. Networks 2023, 9, 296–305.

- Latif, S.; Rana, R.; Khalifa, S.; Jurdak, R.; Qadir, J.; Schuller, B.W. Survey of Deep Representation Learning for Speech Emotion Recognition. IEEE Trans. Affect. Comput. 2021, 14, 1634–1654.

- Jin, L.; Li, Z.; Tang, J. Deep Semantic Multimodal Hashing Network for Scalable Image-Text and Video-Text Retrievals. IEEE Trans. Neural Networks Learn. Syst. 2023, 34, 1838–1851.

- Mehedi, S.T.; Anwar, A.; Rahman, Z.; Ahmed, K.; Islam, R. Dependable Intrusion Detection System for IoT: A Deep Transfer Learning Based Approach. IEEE Trans. Ind. Informatics 2022, 19, 1006–1017.

- Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A Dataset for Breast Cancer Histopathological Image Classification. IEEE Trans. Biomed. Eng. 2015, 63, 1455–1462.

- Li, Q.; Wen, Z.; Wu, Z.; Hu, S.; Wang, N.; Li, Y.; et al. A Survey on Federated Learning Systems: Vision, Hype and Reality for Data Privacy and Protection. IEEE Trans. Knowl. Data Eng. 2023, 35, 3347–3366.

- Djenouri, Y.; Belhadi, A.; Srivastava, G.; Ghosh, U.; Chatterjee, P.; Lin, J.C.-W. Fast and Accurate Deep Learning Framework for Secure Fault Diagnosis in the Industrial Internet of Things. IEEE Internet Things J. 2021, 10, 2802–2810.

- Ragab, M.; Albukhari, A.; Alyami, J.; Mansour, R.F. Ensemble Deep-Learning-Enabled Clinical Decision Support System for Breast Cancer Diagnosis and Classification on Ultrasound Images. Biology 2022, 11, 439.

- Jabeen, K.; Khan, M.A.; Balili, J.; Alhaisoni, M.; Almujally, N.A.; Alrashidi, H.; Tariq, U.; Cha, J.-H. BC2NetRF: Breast Cancer Classification from Mammogram Images Using Enhanced Deep Learning Features and Equilibrium-Jaya Controlled Regula Falsi-Based Features Selection. Diagnostics 2023, 13, 1238.

- Sudharshan, P.; Petitjean, C.; Spanhol, F.; Oliveira, L.E.; Heutte, L.; Honeine, P. Multiple instance learning for histopathological breast cancer image classification. Expert Syst. Appl. 2019, 117, 103–111.

- Wang, Z.; Li, M.; Wang, H.; Jiang, H.; Yao, Y.; Zhang, H.; Xin, J. Breast Cancer Detection Using Extreme Learning Machine Based on Feature Fusion With CNN Deep Features. IEEE Access 2019, 7, 105146–105158.

- Houssein, E.H.; Emam, M.M.; Ali, A.A.; Suganthan, P.N. Deep and machine learning techniques for medical imaging-based breast cancer: A comprehensive review. Expert Syst. Appl. 2020, 167, 114161.

- Saber, A.; Sakr, M.; Abo-Seida, O.M.; Keshk, A.; Chen, H. A Novel Deep-Learning Model for Automatic Detection and Classification of Breast Cancer Using the Transfer-Learning Technique. IEEE Access 2021, 9, 71194–71209.

- Lu, S.-Y.; Wang, S.-H.; Zhang, Y.-D. SAFNet: A deep spatial attention network with classifier fusion for breast cancer detection. Comput. Biol. Med. 2022, 148, 105812.

- Celik, Y.; Talo, M.; Yildirim, O.; Karabatak, M.; Acharya, U.R. Automated invasive ductal carcinoma detection based using deep transfer learning with whole-slide images. Pattern Recognit. Lett. 2020, 133, 232–239.

- Ahmad, S.; Ullah, T.; Ahmad, I.; Al-Sharabi, A.; Ullah, K.; Khan, R.A.; Rasheed, S.; Ullah, I.; Uddin, N.; Ali, S. A Novel Hybrid Deep Learning Model for Metastatic Cancer Detection. Comput. Intell. Neurosci. 2022, 2022, 8141530.

- Xue, P.; Wang, J.; Qin, D.; Yan, H.; Qu, Y.; Seery, S.; Jiang, Y.; Qiao, Y. Deep learning in image-based breast and cervical cancer detection: a systematic review and meta-analysis. npj Digit. Med. 2022, 5, 19.

- Alzubaidi, L.; Al-Amidie, M.; Al-Asadi, A.; Humaidi, A.J.; Al-Shamma, O.; Fadhel, M.A.; Zhang, J.; Santamaría, J.; Duan, Y. Novel Transfer Learning Approach for Medical Imaging with Limited Labeled Data. Cancers 2021, 13, 1590.

- Huang, S.; Yang, J.; Fong, S.; Zhao, Q. Artificial intelligence in cancer diagnosis and prognosis: Opportunities and challenges. Cancer Lett. 2019, 471, 61–71.

- Mahoro, E.; Akhloufi, M.A. Applying Deep Learning for Breast Cancer Detection in Radiology. Curr. Oncol. 2022, 29, 8767–8793.

- Umer, M.J.; Sharif, M.; Kadry, S.; Alharbi, A. Multi-Class Classification of Breast Cancer Using 6B-Net with Deep Feature Fusion and Selection Method. J. Pers. Med. 2022, 12, 683.

- Ting, F.F.; Tan, Y.J.; Sim, K.S. Convolutional neural network improvement for breast cancer classification. Expert Syst. Appl. 2018, 120, 103–115.

- Obayya, M.; Maashi, M.S.; Nemri, N.; Mohsen, H.; Motwakel, A.; Osman, A.E.; Alneil, A.A.; Alsaid, M.I. Hyperparameter Optimizer with Deep Learning-Based Decision-Support Systems for Histopathological Breast Cancer Diagnosis. Cancers 2023, 15, 885.

- Jabeen, K.; Khan, M.A.; Alhaisoni, M.; Tariq, U.; Zhang, Y.-D.; Hamza, A.; Mickus, A.; Damaševičius, R. Breast Cancer Classification from Ultrasound Images Using Probability-Based Optimal Deep Learning Feature Fusion. Sensors 2022, 22, 807.

- Tian, R.; Yu, M.; Liao, L.; Zhang, C.; Zhao, J.; Sang, L.; Qian, W.; Wang, Z.; Huang, L.; Ma, H. An effective convolutional neural network for classification of benign and malignant breast and thyroid tumors from ultrasound images. Phys. Eng. Sci. Med. 2023, 46, 995–1013.

- Wang, P.; Song, Q.; Li, Y.; Lv, S.; Wang, J.; Li, L.; Zhang, H. Cross-task extreme learning machine for breast cancer image classification with deep convolutional features. Biomed. Signal Process. Control. 2019, 57, 101789.

- Whitney, H.M.; Li, H.; Ji, Y.; Liu, P.; Giger, M.L. Comparison of Breast MRI Tumor Classification Using Human-Engineered Radiomics, Transfer Learning From Deep Convolutional Neural Networks, and Fusion Methods. Proc. IEEE 2020, 108, 163–177.

- Wang, S.-H.; Zhang, Y.-D. DenseNet-201-Based Deep Neural Network with Composite Learning Factor and Precomputation for Multiple Sclerosis Classification. ACM Trans. Multimedia Comput. Commun. Appl. 2020, 16, 1–19.

- Molina-Cabanillas, M.A.; Jiménez-Navarro, M.J.; Arjona, R.; Martínez-Álvarez, F.; Asencio-Cortés, G. DIAFAN-TL: An instance weighting-based transfer learning algorithm with application to phenology forecasting. Knowl.-Based Syst. 2022, 254, 109644.

- Khan, S.; Islam, N.; Jan, Z.; Din, I.U.; Rodrigues, J.J.P.C. A novel deep learning based framework for the detection and classification of breast cancer using transfer learning. Pattern Recognit. Lett. 2019, 125, 1–6.

- Wang, L. Holographic Microwave Image Classification Using a Convolutional Neural Network. Micromachines 2022, 13, 2049.

- Chowdhury, D.; Das, A.; Dey, A.; Sarkar, S.; Dwivedi, A.D.; Mukkamala, R.R.; Murmu, L. ABCanDroid: A Cloud Integrated Android App for Noninvasive Early Breast Cancer Detection Using Transfer Learning. Sensors 2022, 22, 832.

- Wang, P.; Li, P.; Li, Y.; Wang, J.; Xu, J. Histopathological image classification based on cross-domain deep transferred feature fusion. Biomed. Signal Process. Control. 2021, 68.

- Chaudhury, S.; Sau, K.; Khan, M.A.; Shabaz, M. Deep transfer learning for IDC breast cancer detection using fast AI technique and Sqeezenet architecture. Math. Biosci. Eng. 2023, 20, 10404–10427.

- Liu, L.; Wang, Y.; Zhang, P.; Qiao, H.; Sun, T.; Zhang, H.; Xu, X.; Shang, H. Collaborative Transfer Network for Multi-Classification of Breast Cancer Histopathological Images. IEEE J. Biomed. Health Inform. 2023, 1–12.

- Saini, M.; Susan, S. VGGIN-Net: Deep Transfer Network for Imbalanced Breast Cancer Dataset. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 20, 752–762.

- Xi, G.; Wang, Q.; Zhan, H.; Kang, D.; Liu, Y.; Luo, T.; Xu, M.; Kong, Q.; Zheng, L.; Chen, G.; et al. Automated classification of breast cancer histologic grade using multiphoton microscopy and generative adversarial networks. J. Phys. D: Appl. Phys. 2022, 56, 015401.

- Shivhare, E.; Saxena, V. Optimized generative adversarial network based breast cancer diagnosis with wavelet and texture features. Multimedia Syst. 2022, 28, 1639–1655.

- Pang, T.; Wong, J.H.D.; Ng, W.L.; Chan, C.S. Semi-supervised GAN-based Radiomics Model for Data Augmentation in Breast Ultrasound Mass Classification. Comput. Methods Programs Biomed. 2021, 203, 106018.

- Haq, I.U.; Ali, H.; Wang, H.Y.; Cui, L.; Feng, J. BTS-GAN: Computer-aided segmentation system for breast tumor using MRI and conditional adversarial networks. Eng. Sci. Technol. Int. J. 2022, 36, 101154.

- Ghose, S.; Cho, S.; Ginty, F.; McDonough, E.; Davis, C.; Zhang, Z.; Mitra, J.; Harris, A.L.; Thike, A.A.; Tan, P.H.; et al. Predicting Breast Cancer Events in Ductal Carcinoma In Situ (DCIS) Using Generative Adversarial Network Augmented Deep Learning Model. Cancers 2023, 15, 1922.

- Strelcenia, E.; Prakoonwit, S. Improving Cancer Detection Classification Performance Using GANs in Breast Cancer Data. IEEE Access 2023, 11, 71594–71615.

- Das, A.; Devarampati, V.K.; Nair, M.S. NAS-SGAN: A Semi-Supervised Generative Adversarial Network Model for Atypia Scoring of Breast Cancer Histopathological Images. IEEE J. Biomed. Heal. Informatics 2021, 26, 2276–2287.

- Fujioka, T.; Satoh, Y.; Imokawa, T.; Mori, M.; Yamaga, E.; Takahashi, K.; Kubota, K.; Onishi, H.; Tateishi, U. Proposal to Improve the Image Quality of Short-Acquisition Time-Dedicated Breast Positron Emission Tomography Using the Pix2pix Generative Adversarial Network. Diagnostics 2022, 12, 3114.

- Zhang, X.; Liu, C.; Li, T.; Zhou, Y. The whole slide breast histopathology image detection based on a fused model and heatmaps. Biomed. Signal Process. Control. 2023, 82.

- Shahidi, F. Breast Cancer Histopathology Image Super-Resolution Using Wide-Attention GAN With Improved Wasserstein Gradient Penalty and Perceptual Loss. IEEE Access 2021, 9, 32795–32809.

- Flynn, H.; Reeb, D.; Kandemir, M.; Peters, J. PAC-Bayesian lifelong learning for multi-armed bandits. Data Min. Knowl. Discov. 2022, 36, 841–876.

- Sung, P.; Chia, A.; Chan, A.; Malhotra, R. Reciprocal Relationship Between Lifelong Learning and Volunteering Among Older Adults. Journals Gerontol. Ser. B 2023, 78, 902–912.

- Thwe, W.P.; Kálmán, A. The regression models for lifelong learning competencies for teacher trainers. Heliyon 2023, 9, e13749.

- Sun, G.; Cong, Y.; Wang, Q.; Zhong, B.; Fu, Y. Representative Task Self-Selection for Flexible Clustered Lifelong Learning. IEEE Trans. Neural Networks Learn. Syst. 2020, 33, 1467–1481.

- Mlambo, M.; Silén, C.; McGrath, C. Lifelong learning and nurses’ continuing professional development, a metasynthesis of the literature. BMC Nurs. 2021, 20, 62.

- Yap, J.S.; Tan, J. Lifelong learning competencies among chemical engineering students at Monash University Malaysia during the COVID-19 pandemic. Educ. Chem. Eng. 2021, 38, 60–69.

- Acar, M.D.; Kilinc, C.G.; Demir, O. The Relationship Between Lifelong Learning Perceptions of Pediatric Nurses and Self-Confidence and Anxiety in Clinical Decision-Making Processes. Compr. Child Adolesc. Nurs. 2023, 46, 102–113.

- Zhao, C.; Song, A.; Zhu, Y.; Jiang, S.; Liao, F.; Du, Y. Data-Driven Indoor Positioning Correction for Infrastructure-Enabled Autonomous Driving Systems: A Lifelong Framework. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3908–3921.

- Awan, O.A. Preserving the Spirit of Lifelong Learning. Acad. Radiol. 2022, 29, 168–169.

- Sun, G.; Cong, Y.; Dong, J.; Liu, Y.; Ding, Z.; Yu, H. What and How: Generalized Lifelong Spectral Clustering via Dual Memory. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3895–3908.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).