Submitted: 27 September 2023

Posted: 29 September 2023

Abstract

Keywords:

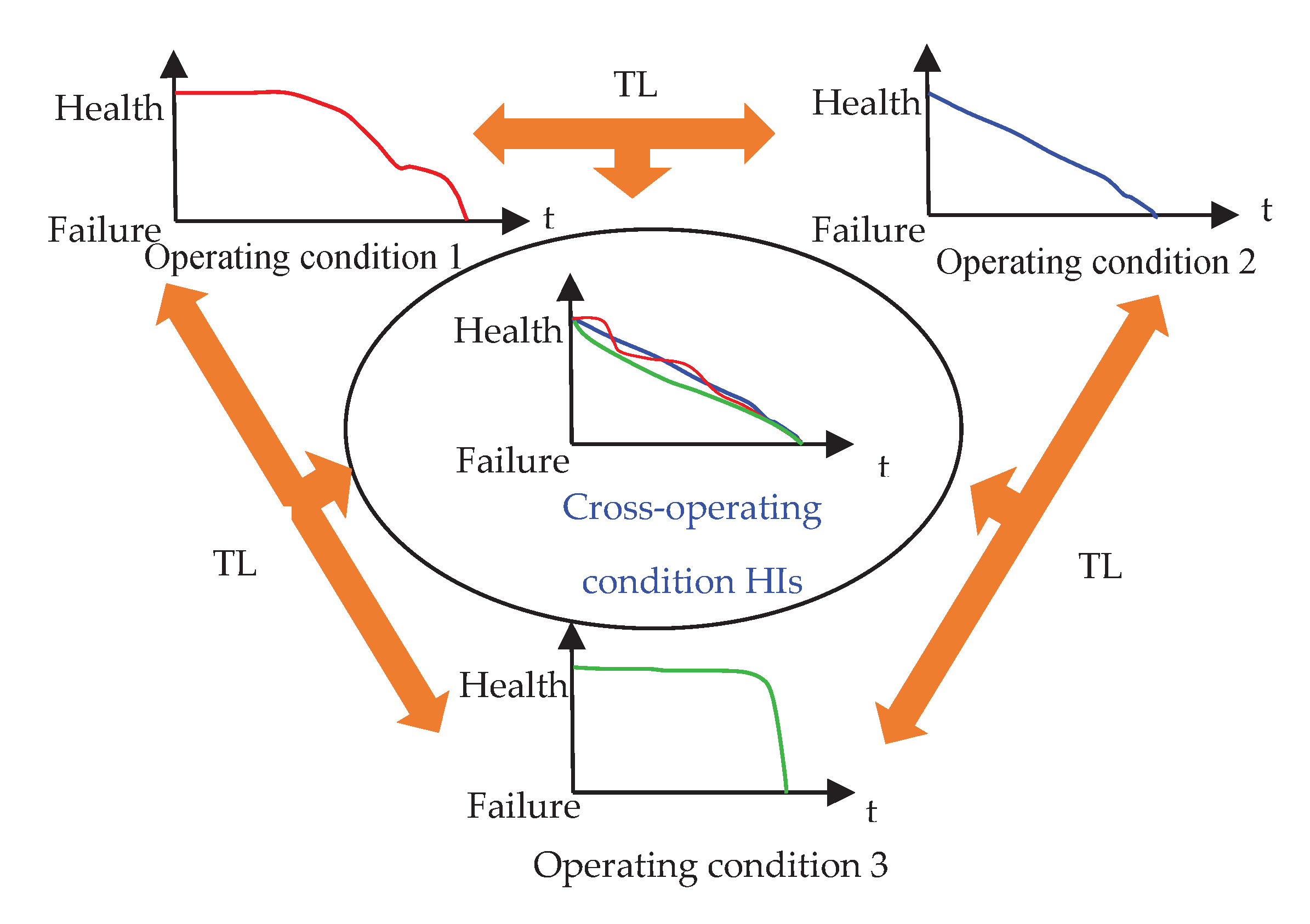

1. Introduction

2. The Related Work

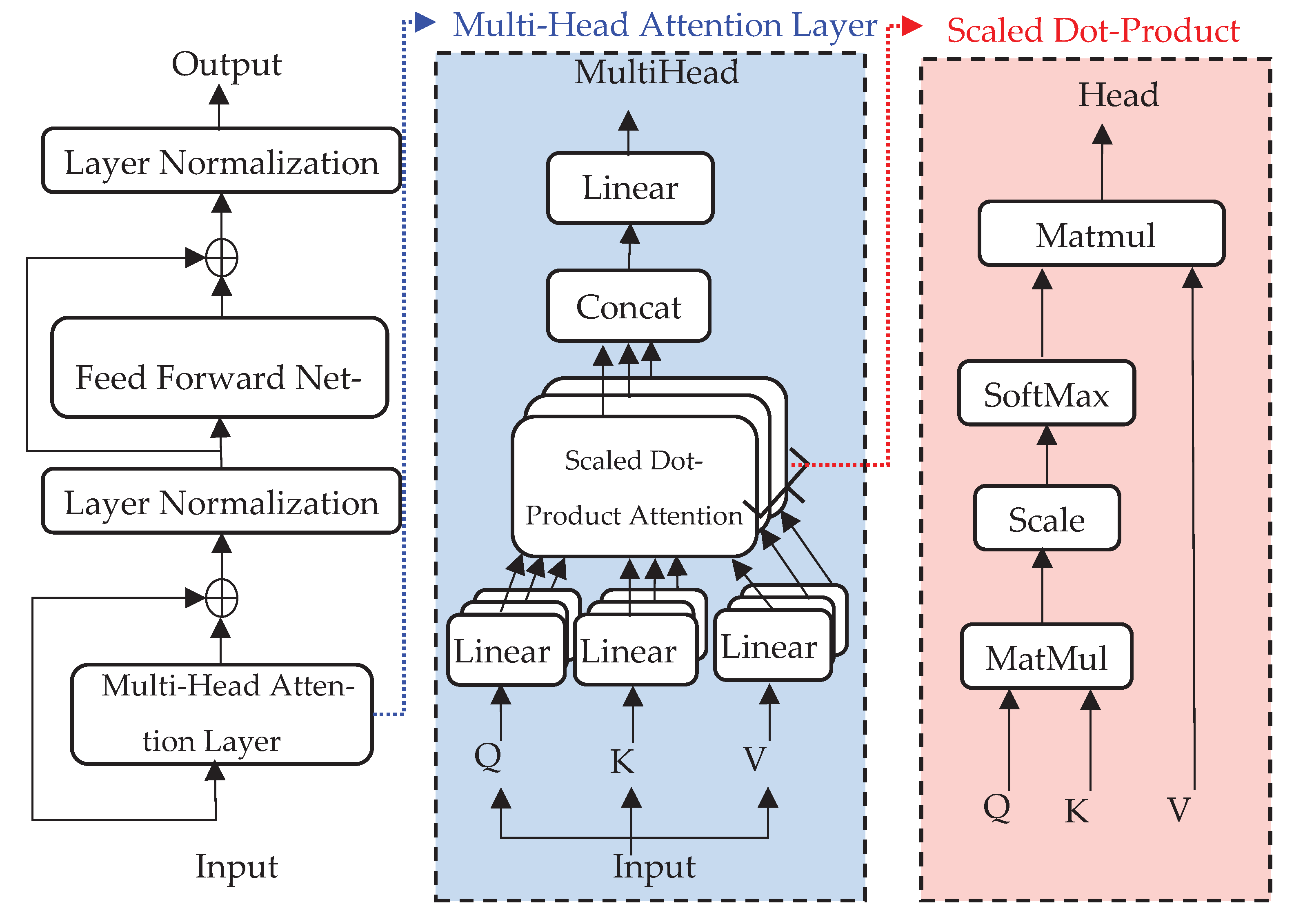

2.1. Transformer Network Structure

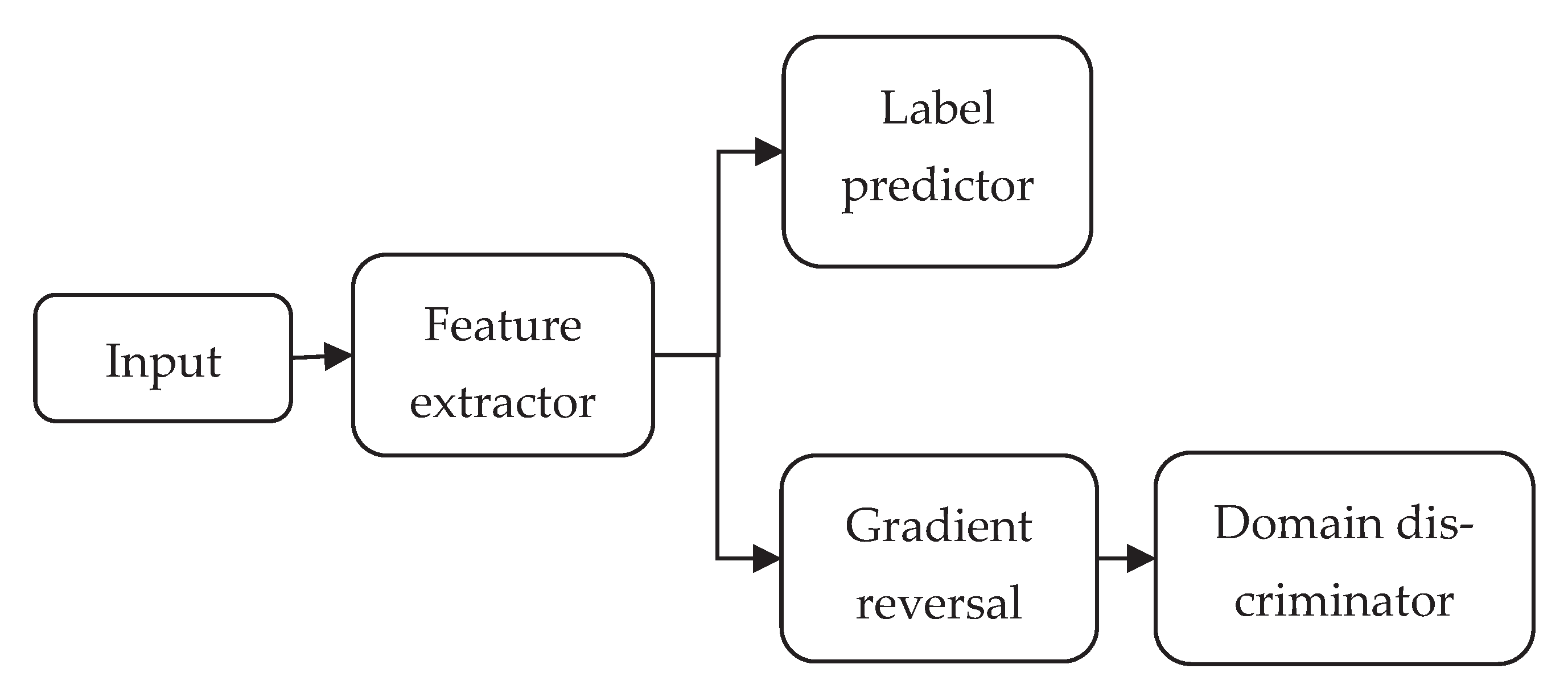

2.2. Domain Adversarial Network

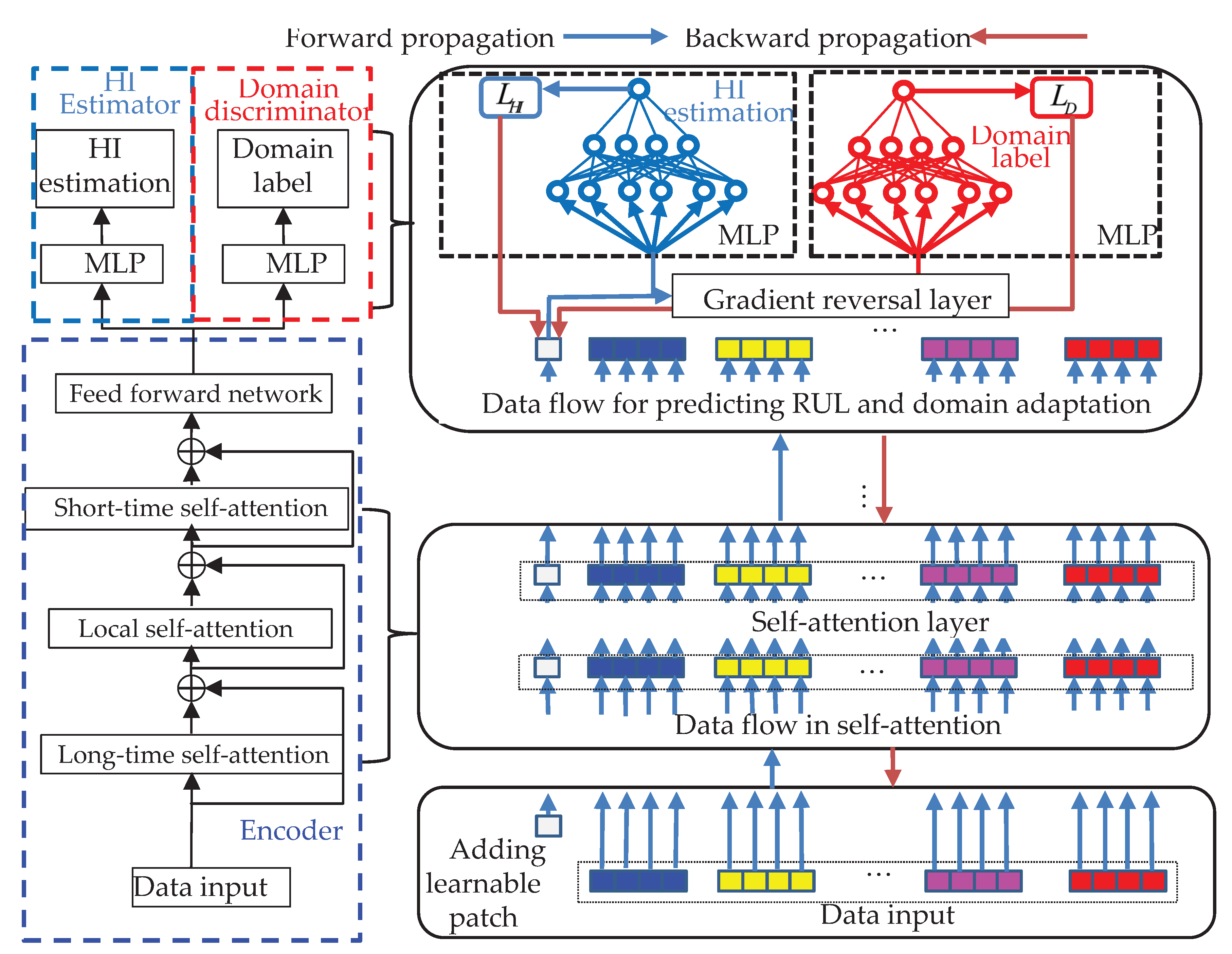

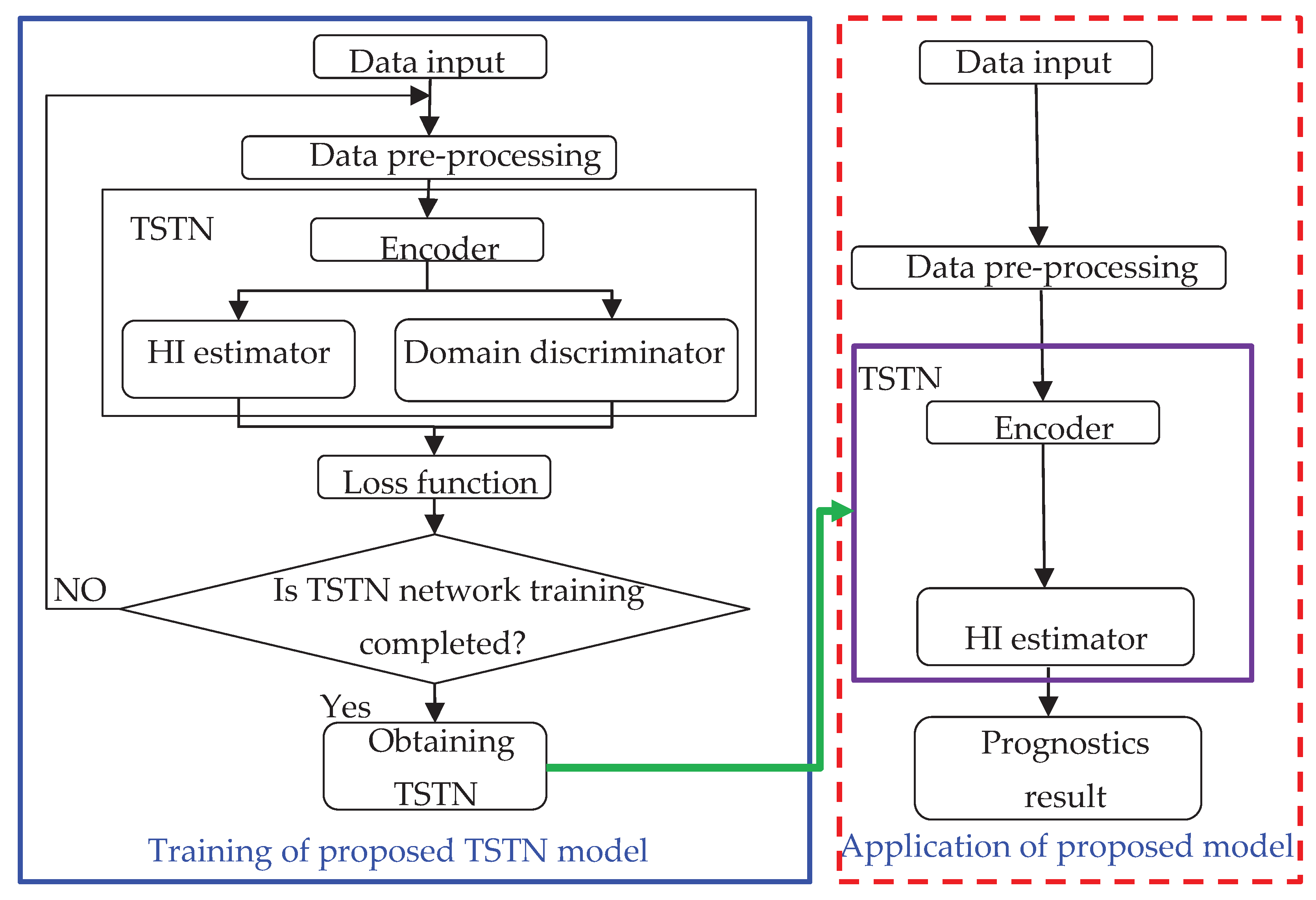

3. The Proposed TSTN

3.1. TSTN structure

- Query–key–value computation. The encoder input consists of patches. The patch collected in the frame is denoted as , and the query, key, and value vectors are computed by , , and , respectively. Following the extended derivation in [25], the time corresponding to the frame is denoted as , and the rotary position embedding in the proposed method is as follows: The predefined parameter is , and is calculated in a manner similar to that in (3). With this position embedding, self-attention can recognize both the time at which the signal was collected and the spectrum location of the patch. The first learnable patch uses the same method to generate , , and . Because the time embedding supplies auxiliary time information, the time is perturbed by a random value governed by to prevent over-fitting.

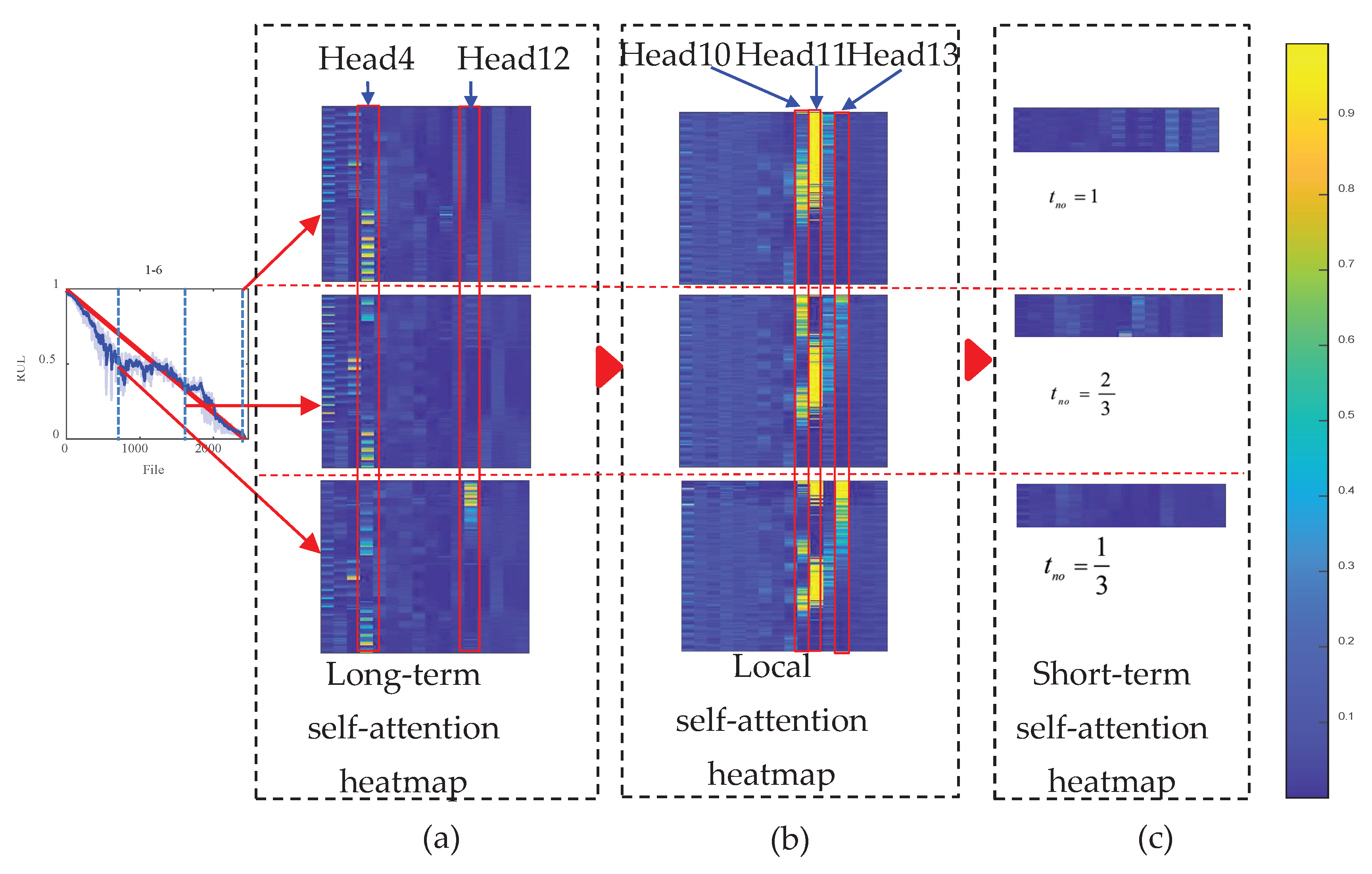

- Long-term, local, and short-term self-attention. The input data are high-dimensional, so computing self-attention over every patch is computationally expensive and can confuse the network. To let the network capture the degradation trend from the high-dimensional spectrum, we propose three sub-self-attention parts: long-term, local, and short-term self-attention.

- FFN and layer normalization. The final layer of the encoder is the FFN and layer normalization; that is, .

- HI estimator. An MLP with one hidden layer is connected to the learnable patch of the encoder output, and the MLP output is the estimated HI .

- Domain discriminator. The domain discriminator consists of an MLP with one hidden layer connected to the learnable patch of the encoder output. The number of domain discriminators equals the number of degradation-process datasets. The output of each domain discriminator is a 2D vector whose two elements indicate whether or not the current input was sampled from the corresponding degradation process. The network learns a domain-invariant HI representation if the domain discriminators cannot tell which dataset the current input comes from.
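
The rotary position embedding used in the query–key–value step can be illustrated with a short NumPy sketch. It follows the standard RoFormer formulation of [25], with the collection time of a frame playing the role of the position. The exact formula and predefined parameter of the proposed method are not reproduced in this text, so the function name `rotary_embed`, the `base` value of 10000 (the RoFormer default), and the toy dimensions are assumptions for illustration only.

```python
import numpy as np

def rotary_embed(x, position, base=10000.0):
    """Rotate consecutive feature pairs of x by angles proportional to position."""
    d = x.shape[-1]
    if d % 2:
        raise ValueError("feature dimension must be even")
    # One rotation frequency per feature pair (RoFormer-style schedule, assumed here)
    theta = base ** (-np.arange(0, d, 2) / d)
    angle = position * theta
    cos, sin = np.cos(angle), np.sin(angle)
    x_even, x_odd = x[..., 0::2], x[..., 1::2]
    out = np.empty_like(x, dtype=float)
    out[..., 0::2] = x_even * cos - x_odd * sin
    out[..., 1::2] = x_even * sin + x_odd * cos
    return out

# Toy query and key vectors (hypothetical sizes and values)
rng = np.random.default_rng(0)
q, k = rng.standard_normal(8), rng.standard_normal(8)

# Attention scores for two frame pairs with the same time difference of 5
score_a = rotary_embed(q, 5.0) @ rotary_embed(k, 10.0)
score_b = rotary_embed(q, 0.0) @ rotary_embed(k, 5.0)
print(np.isclose(score_a, score_b))
```

The two scores agree because a rotary embedding makes the query-key inner product depend only on the difference between the two positions, which is how self-attention can pick up the relative collection time of two frames.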

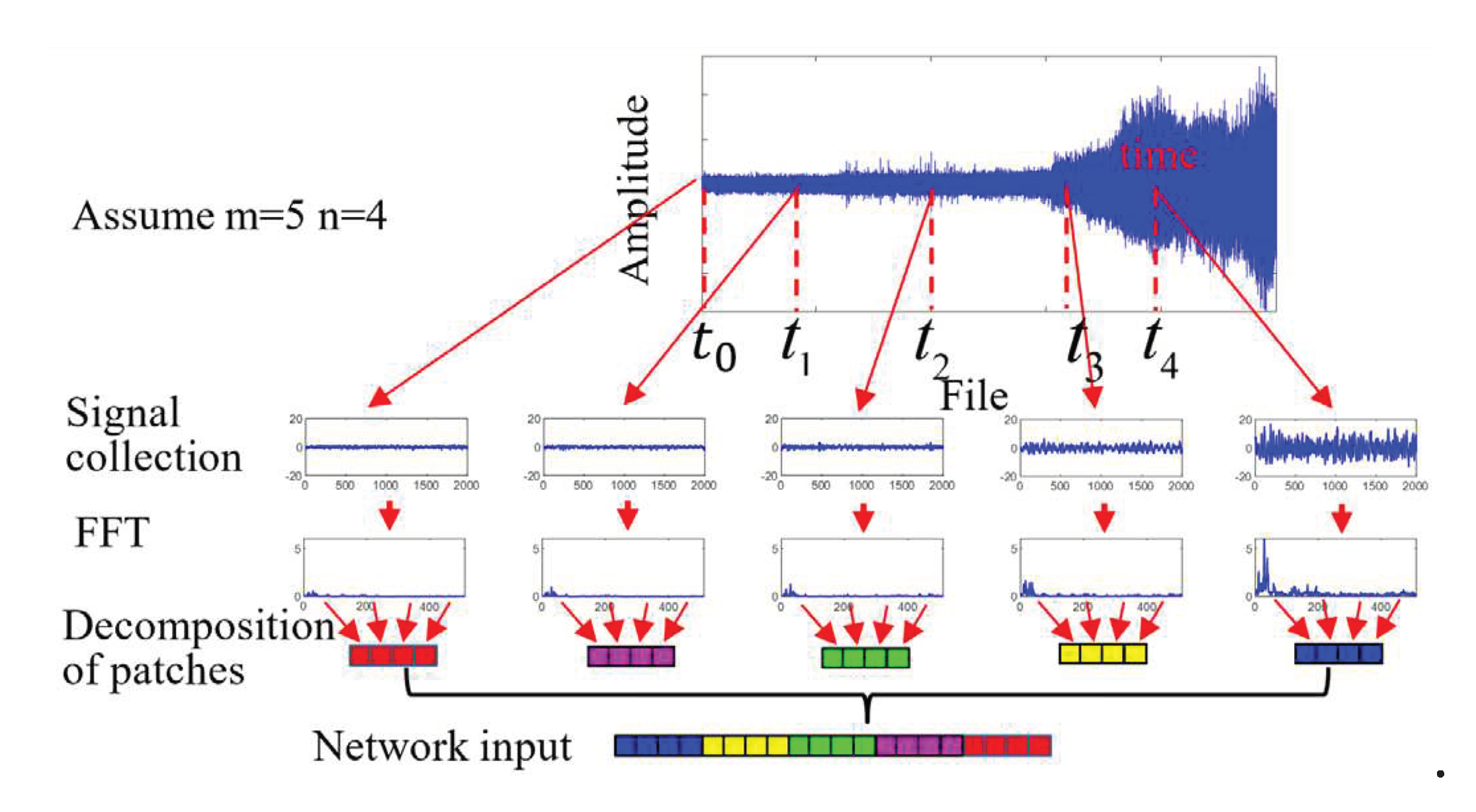

3.2. Data pre-processing

- Signal collection. The input of the proposed TSTN is a clip consisting of frames with 512 spectrum features extracted from the measured vibration signal. The frames are divided according to time to obtain abundant temporal information. The time-divided relationship follows , , which denotes the time required to collect data.

- Decomposition of patches. Each spectrum feature is decomposed into non-overlapping patches with a size of ; that is, . These patches are then flattened into a vector as the network input.

- (1) Index collection: Assuming that the total length of the time series is 20 s, set the parameter m = 5. The indexes for collecting data are then 0, 5, 10, 15, and 20.

- (2) Calculation of times: The collection times are calculated from the indexes.

- (3) Sampling data: The data are sampled at the calculated times.

- (4) Fourier transform: Perform a Fourier transform on the data sampled at each time.

- (5) Select data points: From the Fourier-transformed data, select the first 512 points for each sampling time.

- (6) Divide into blocks: Divide the selected 512 data points into 4 blocks, each with a length of 128.

- (7) Reverse concatenation: Concatenate these 4 blocks in reverse order.
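
Steps (1) to (7) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the sampling rate `fs`, the frame length `frame_len`, the use of the index directly as a time in seconds, the use of the magnitude spectrum, and the reading of "reverse order" as reversing the order of the blocks (not the points inside each block) are all assumptions, and the function name is hypothetical.

```python
import numpy as np

def preprocess_clip(signal, fs, total_s=20, m=5, frame_len=2048,
                    n_bins=512, n_blocks=4):
    """Steps (1)-(7) for one clip; returns (indexes, frames of shape [n_frames, n_bins])."""
    if frame_len // 2 + 1 < n_bins:
        raise ValueError("frame_len is too short to yield n_bins spectrum points")
    # (1) Index collection: 0, m, 2m, ..., total_s
    indexes = np.arange(0, total_s + 1, m)
    frames = []
    for index in indexes:
        # (2) Assumed mapping: the index is taken directly as a time in seconds
        start = int(round(index * fs))
        # (3) Sample one frame of raw data starting at that time
        frame = signal[start:start + frame_len]
        if len(frame) < frame_len:
            raise ValueError("signal is too short for the requested index")
        # (4) Fourier transform (taking the magnitude is an assumption)
        spectrum = np.abs(np.fft.rfft(frame))
        # (5) Keep the first n_bins points
        selected = spectrum[:n_bins]
        # (6) Split into n_blocks equal blocks (512 / 4 = 128 points each)
        blocks = np.split(selected, n_blocks)
        # (7) Concatenate the blocks in reverse order
        frames.append(np.concatenate(blocks[::-1]))
    return indexes, np.stack(frames)

# Synthetic stand-in for a vibration signal (hypothetical sampling rate)
rng = np.random.default_rng(0)
signal = rng.standard_normal(22048)
indexes, clip = preprocess_clip(signal, fs=1000)
print(indexes, clip.shape)
```

With m = 5 and a 20 s series, the sketch produces five frames of 512 spectrum features each, matching the index list in step (1).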

3.3. TSTN training

4. Experiment Analysis

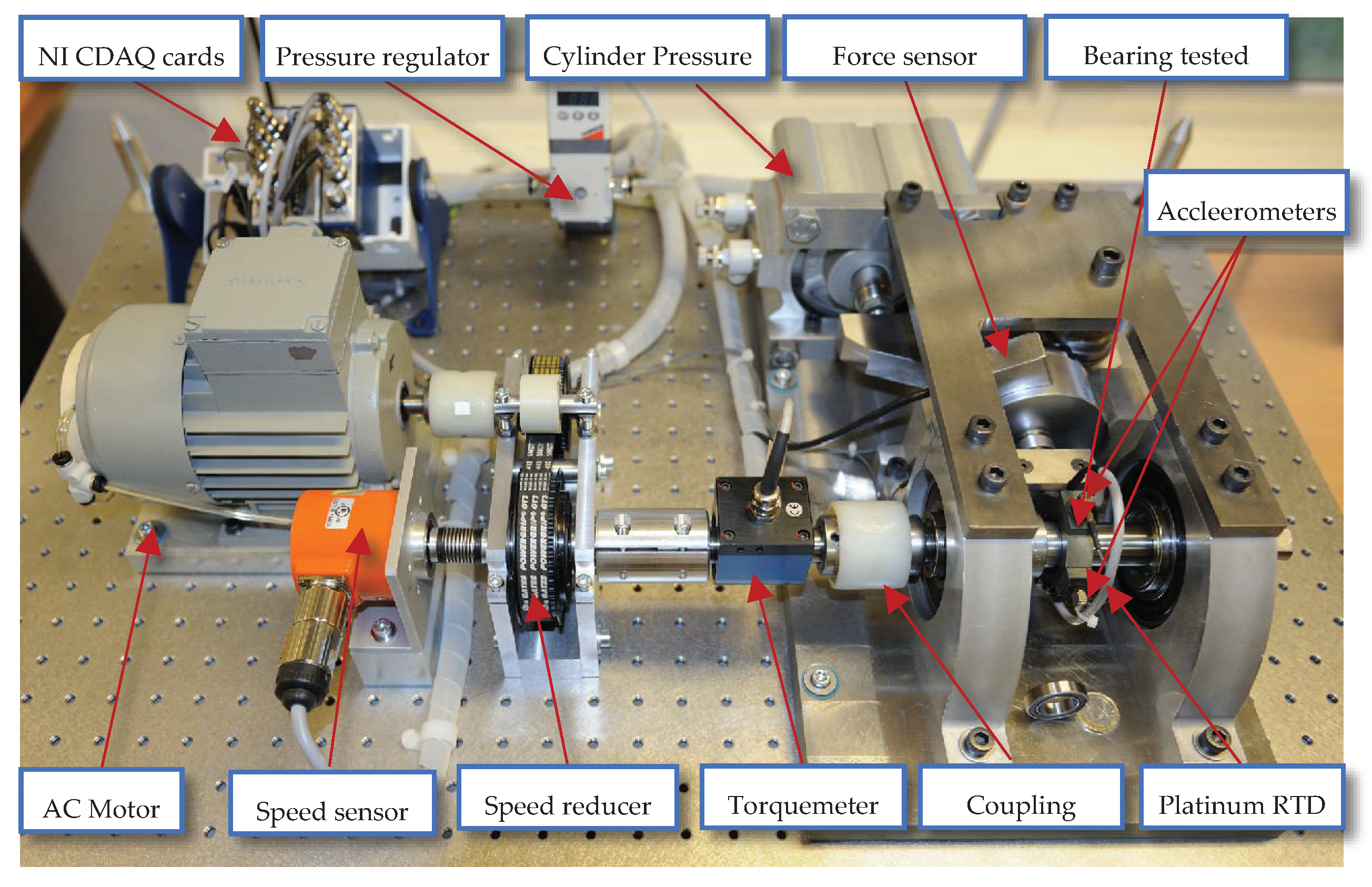

4.1. Training and testing regimes

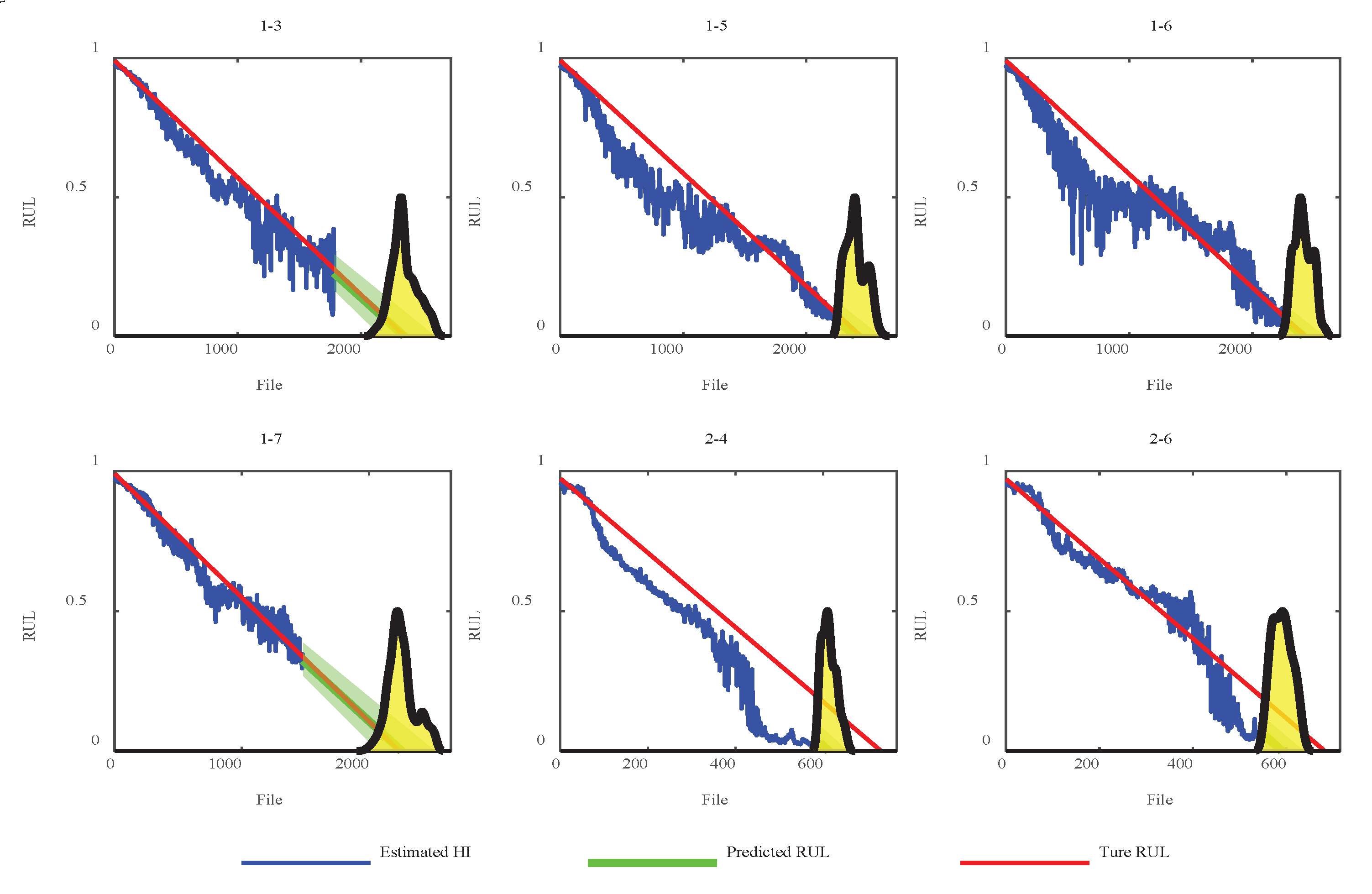

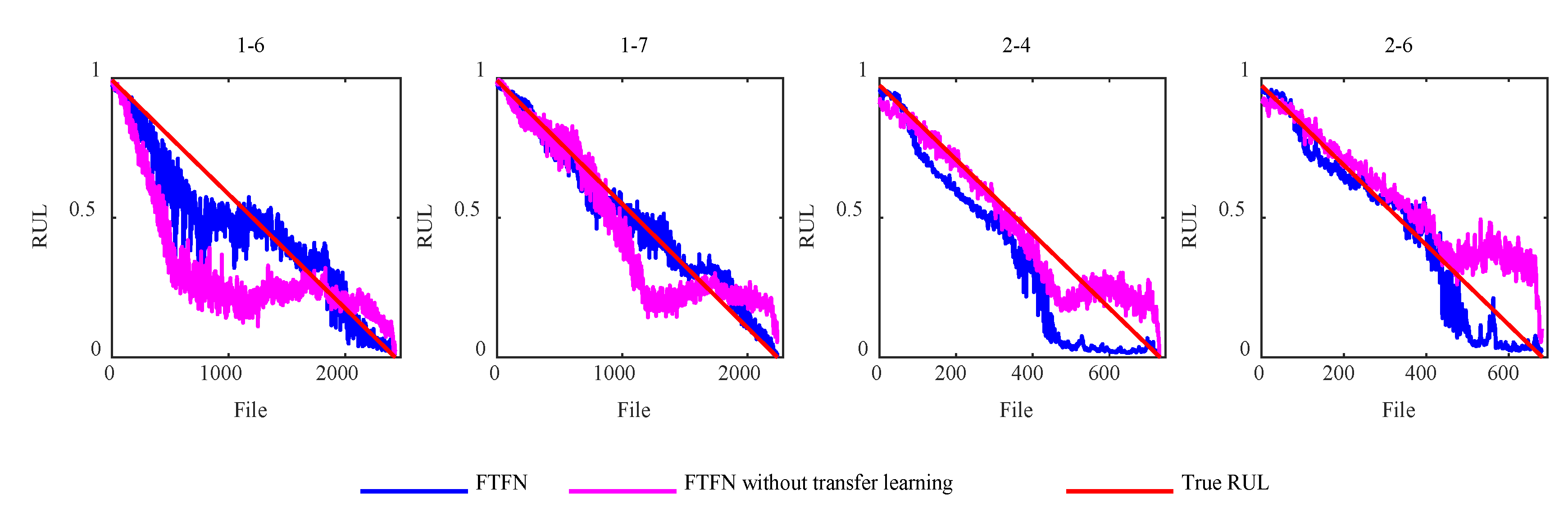

4.2. Prognostics result

| Operating condition | 1 | 2 | 3 |
| --- | --- | --- | --- |
| Speed (rpm) | 1800 | 1650 | 1500 |
| Loading (N) | 4000 | 4200 | 5000 |
| Training dataset | 1-1, 1-2 | 2-1, 2-2 | 3-1, 3-2 |
| Testing dataset | 1-3, 1-4, 1-5, 1-6, 1-7 | 2-3, 2-4, 2-5, 2-6, 2-7 | 3-3 |

5. Comparisons and Analysis

5.1. Discussions of the proposed methodology

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Zheng, K.; Li, T.; Su, Z.; Zhang, B. Sparse Elitist Group Lasso Denoising in Frequency Domain for Bearing Fault Diagnosis. IEEE Transactions on Industrial Informatics 2021, 17, 4681–4691.

- Hall, D. L.; Llinas, J. An introduction to multisensor data fusion. Proceedings of the IEEE 1997, 85, 6–23.

- Ma, C.; Zhai, X.; Wang, Z.; Tian, M.; Yu, Q.; Liu, L.; Liu, H.; Wang, H.; Yang, X. State of health prediction for lithium-ion batteries using multiple-view feature fusion and support vector regression ensemble. International Journal of Machine Learning and Cybernetics 2019, 10, 2269–2282.

- Wei, Y.; Wu, D.; Terpenny, J. Decision-Level Data Fusion in Quality Control and Predictive Maintenance. IEEE Transactions on Automation Science and Engineering 2021, 18, 184–194.

- Chen, X.; Wang, Y.; Sun, H.; Ruan, H.; Qin, Y.; Tang, B. A generalized degradation tendency tracking strategy for gearbox remaining useful life prediction. Measurement 2023, 206, 112313.

- Wang, D.; Chen, Y.; Shen, C.; Zhong, J.; Peng, Z.; Li, C. Fully interpretable neural network for locating resonance frequency bands for machine condition monitoring. Mechanical Systems and Signal Processing 2022, 168.

- Zhu, J.; Chen, N.; Shen, C. A new data-driven transferable remaining useful life prediction approach for bearing under different working conditions. Mechanical Systems and Signal Processing 2020, 139, 106602.

- Li, X.; Zhang, W.; Ding, Q. Deep learning-based remaining useful life estimation of bearings using multi-scale feature extraction. Reliability Engineering & System Safety 2019, 182, 208–218.

- An, Q.; Tao, Z.; Xu, X.; El Mansori, M.; Chen, M. A data-driven model for milling tool remaining useful life prediction with convolutional and stacked LSTM network. Measurement 2020, 154, 107461.

- Yudong, C.; Minping, J.; Peng, D.; Yifei, D. Transfer learning for remaining useful life prediction of multi-conditions bearings based on bidirectional-GRU network. Measurement 2021, 178.

- Xiang, S.; Qin, Y.; Zhu, C.; Wang, Y.; Chen, H. LSTM networks based on attention ordered neurons for gear remaining life prediction. ISA Transactions 2020, 106, 343–354.

- Jia, F.; Lei, Y.; Lu, N.; Xing, S. Deep normalized convolutional neural network for imbalanced fault classification of machinery and its understanding via visualization. Mechanical Systems and Signal Processing 2018, 110, 349–367.

- Peng, T.; Shen, C.; Sun, S.; Wang, D. Fault Feature Extractor Based on Bootstrap Your Own Latent and Data Augmentation Algorithm for Unlabeled Vibration Signals. IEEE Transactions on Industrial Electronics 2022, 69, 9547–9555.

- Xu, X.; Wu, Q.; Li, X.; Huang, B. Dilated convolution neural network for remaining useful life prediction. Journal of Computing and Information Science in Engineering 2020, 20, 021004.

- Li, X.; Ding, Q.; Sun, J.-Q. Remaining useful life estimation in prognostics using deep convolution neural networks. Reliability Engineering & System Safety 2018, 172, 1–11.

- Ren, L.; Sun, Y.; Wang, H.; Zhang, L. Prediction of Bearing Remaining Useful Life With Deep Convolution Neural Network. IEEE Access 2018, 6, 13041–13049.

- Sun, M.; Wang, H.; Liu, P.; Huang, S.; Wang, P.; Meng, J. Stack Autoencoder Transfer Learning Algorithm for Bearing Fault Diagnosis Based on Class Separation and Domain Fusion. IEEE Transactions on Industrial Electronics 2022, 69, 3047–3058.

- cheng Wen, B.; qing Xiao, M.; qi Wang, X.; Zhao, X.; feng Li, J.; Chen, X. Data-driven remaining useful life prediction based on domain adaptation. PeerJ Computer Science 2021, 7, e690.

- da Costa, P. R. d. O.; Akçay, A.; Zhang, Y.; Kaymak, U. Remaining useful lifetime prediction via deep domain adaptation. Reliability Engineering & System Safety 2020, 195, 106682.

- Mao, W.; He, J.; Zuo, M. J. Predicting Remaining Useful Life of Rolling Bearings Based on Deep Feature Representation and Transfer Learning. IEEE Transactions on Instrumentation and Measurement 2020, 69, 1594–1608.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A. N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems, 2017; pp. 5998–6008.

- Zhang, Z.; Song, W.; Li, Q. Dual-Aspect Self-Attention Based on Transformer for Remaining Useful Life Prediction. IEEE Transactions on Instrumentation and Measurement 2022, 71, 1–11.

- Su, X.; Liu, H.; Tao, L.; Lu, C.; Suo, M. An end-to-end framework for remaining useful life prediction of rolling bearing based on feature pre-extraction mechanism and deep adaptive transformer model. Computers & Industrial Engineering 2021, 161, 107531.

- Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. In International Conference on Machine Learning; PMLR, 2015; pp. 1180–1189.

- Su, J.; Lu, Y.; Pan, S.; Wen, B.; Liu, Y. Roformer: Enhanced transformer with rotary position embedding. arXiv 2021, arXiv:2104.09864.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016; pp. 770–778.

- Ba, J. L.; Kiros, J. R.; Hinton, G. E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Narang, S.; Chung, H. W.; Tay, Y.; Fedus, W.; Fevry, T.; Matena, M.; Malkan, K.; Fiedel, N.; Shazeer, N.; Lan, Z. Do transformer modifications transfer across implementations and applications? arXiv 2021, arXiv:2102.11972.

- Zhu, J.; Chen, N.; Shen, C. A New Multiple Source Domain Adaptation Fault Diagnosis Method Between Different Rotating Machines. IEEE Transactions on Industrial Informatics 2020, 1–1.

- Nectoux, P.; Gouriveau, R.; Medjaher, K.; Ramasso, E.; Chebel-Morello, B.; Zerhouni, N.; Varnier, C. PRONOSTIA: An experimental platform for bearings accelerated degradation tests. In IEEE International Conference on Prognostics and Health Management, PHM'12, 2012; IEEE Catalog Number: CPF12PHM-CDR; pp. 1–8.

- Guo, L.; Li, N.; Jia, F.; Lei, Y.; Lin, J. A recurrent neural network based health indicator for remaining useful life prediction of bearings. Neurocomputing 2017, 240, 98–109.

- Hinchi, A. Z.; Tkiouat, M. Rolling element bearing remaining useful life estimation based on a convolutional long-short-Term memory network. Procedia Computer Science 2018, 127, 123–132.

- Rathore, M. S.; Harsha, S. Prognostics Analysis of Rolling Bearing Based on Bi-Directional LSTM and Attention Mechanism. Journal of Failure Analysis and Prevention 2022, 22, 704–723.

- Sutrisno, E.; Oh, H.; Vasan, A. S. S.; Pecht, M. Estimation of remaining useful life of ball bearings using data driven methodologies. In 2012 IEEE Conference on Prognostics and Health Management, 18–21 June 2012; pp. 1–7.

| Encoder | Multi-Head | Dropout rate | ||

| 16 | 64 | 512 | 0.2 | |

| HI estimator (MLP) | Layer | Dense | Activation function | Number |
| --- | --- | --- | --- | --- |
| | Fully connected | 32 | GeGLU | 1 |
| | Fully connected | 1 | GeGLU | 1 |

| Domain discriminator (MLP) | Layer | Dense | Activation function | Number |
| --- | --- | --- | --- | --- |
| | Fully connected | 32 | GeGLU | Equal to dataset number |
| | Fully connected | 2 | Softmax | |
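
A forward pass consistent with the domain discriminator rows above might look like the following NumPy sketch. Only the 32-unit GeGLU hidden layer, the 2-unit softmax output, and the use of one discriminator per dataset come from the text. The input width `d_in`, the number of datasets, the random initialization, the omission of bias terms, the tanh approximation of GELU, and all function names are assumptions for illustration.

```python
import numpy as np

def gelu(x):
    """tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def geglu(x, w_gate, w_value):
    """GeGLU unit: GELU(x @ w_gate) multiplied elementwise by (x @ w_value)."""
    return gelu(x @ w_gate) * (x @ w_value)

def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def init_head(rng, d_in, d_hidden=32, d_out=2):
    """Random weights for one discriminator (biases omitted for brevity)."""
    return {
        "w_gate": rng.standard_normal((d_in, d_hidden)) / np.sqrt(d_in),
        "w_value": rng.standard_normal((d_in, d_hidden)) / np.sqrt(d_in),
        "w_out": rng.standard_normal((d_hidden, d_out)) / np.sqrt(d_hidden),
    }

def domain_discriminator(token, head):
    """Map the learnable-patch output to a 2D probability vector."""
    hidden = geglu(token, head["w_gate"], head["w_value"])
    return softmax(hidden @ head["w_out"])

rng = np.random.default_rng(0)
d_in, n_datasets = 64, 3            # hypothetical sizes
heads = [init_head(rng, d_in) for _ in range(n_datasets)]
token = rng.standard_normal(d_in)   # stand-in for the encoder's learnable-patch output
probs = np.stack([domain_discriminator(token, head) for head in heads])
print(probs.shape)
```

Each row of `probs` is the 2D output of one discriminator, so the stacked result has one row per degradation-process dataset.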

| Dataset | Ours | [31] | [34] | [33] | [32] |
| --- | --- | --- | --- | --- | --- |
| 1-3 | 0.5 | 43 | 37 | -5 | 55 |
| 1-4 | 23 | 67 | 80 | -9 | 39 |
| 1-5 | 25 | -22 | 9 | 22 | -99 |
| 1-6 | 9 | 21 | -5 | 18 | -121 |
| 1-7 | -2 | 17 | -2 | 43 | 71 |
| 2-3 | 82 | 37 | 64 | 45 | 76 |
| 2-4 | 85 | -19 | 10 | 33 | 20 |
| 2-5 | 2 | 54 | -440 | 50 | 8 |
| 2-6 | 70 | -13 | 49 | 26 | 18 |
| 2-7 | -1122 | -55 | -317 | -41 | 2 |
| 3-3 | -1633 | 3 | 90 | 20 | 3 |
| Score | 0.4017 | 0.2631 | 0.3066 | 0.3198 | 0.3828 |
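
The Score row appears consistent with the scoring function of the IEEE PHM 2012 prognostic challenge, which used the PRONOSTIA data [30]. The sketch below assumes that convention and assumes that the table entries are percent errors; neither is stated explicitly in the text here, and the function names are illustrative.

```python
import math

def accuracy(er_percent):
    """Per-bearing accuracy for a percent error (PHM 2012 convention, assumed here)."""
    if er_percent <= 0:
        # Non-positive errors are penalized more steeply (halving every 5 points)
        return math.exp(-math.log(0.5) * er_percent / 5.0)
    # Positive errors halve the accuracy every 20 points
    return math.exp(math.log(0.5) * er_percent / 20.0)

def score(errors_percent):
    """Mean accuracy over all test bearings."""
    return sum(accuracy(e) for e in errors_percent) / len(errors_percent)

# Rounded percent errors from the first results column of the table above
ours = [0.5, 23, 25, 9, -2, 82, 85, 2, 70, -1122, -1633]
print(round(score(ours), 4))
```

Because the table lists rounded errors, the value computed for the first column only approximates the reported 0.4017. The asymmetry of the two branches also explains why a few large negative errors, such as those for datasets 2-7 and 3-3, contribute almost nothing to the score.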

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).