Submitted: 02 October 2023

Posted: 04 October 2023


Abstract

Keywords:

1. Introduction

1.1. Issues with uncooled thermal cameras

1.2. Gradient descent algorithm

1.3. Objective of this work

2. Materials and Methods

2.1. Data

- An area around a ca. 500 m stretch of the Kocinka stream near Grodzisko village (50.8715° N, 18.9661° E)

- An area around a ca. 350 m stretch of the Kocinka stream near Rybna village (50.9371° N, 19.1134° E)

- An area around a ca. 160 m stretch of the Sudół stream near Kraków (50.0999° N, 19.9027° E)

2.2. Algorithm

2.2.1. Vignette correction

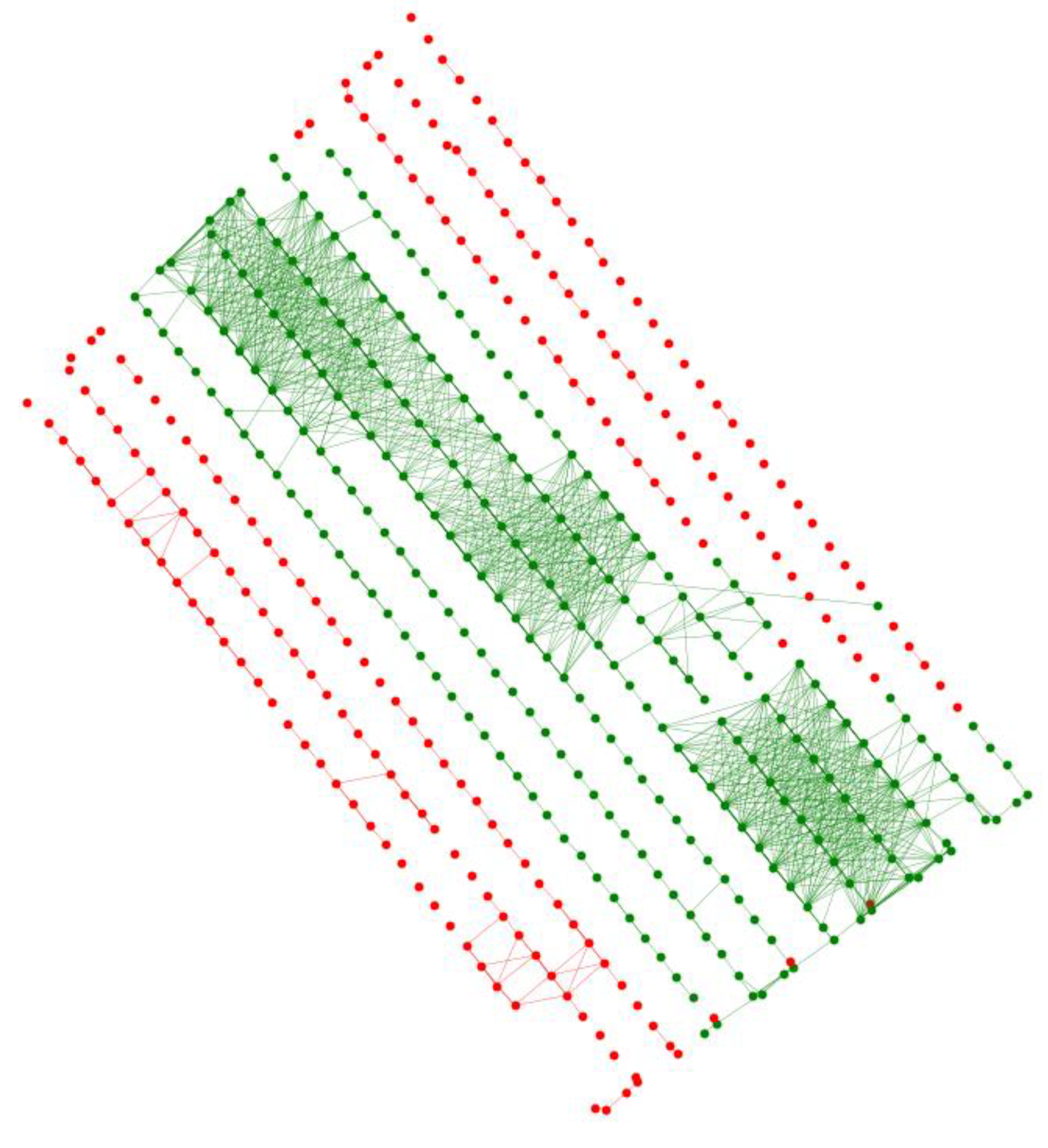

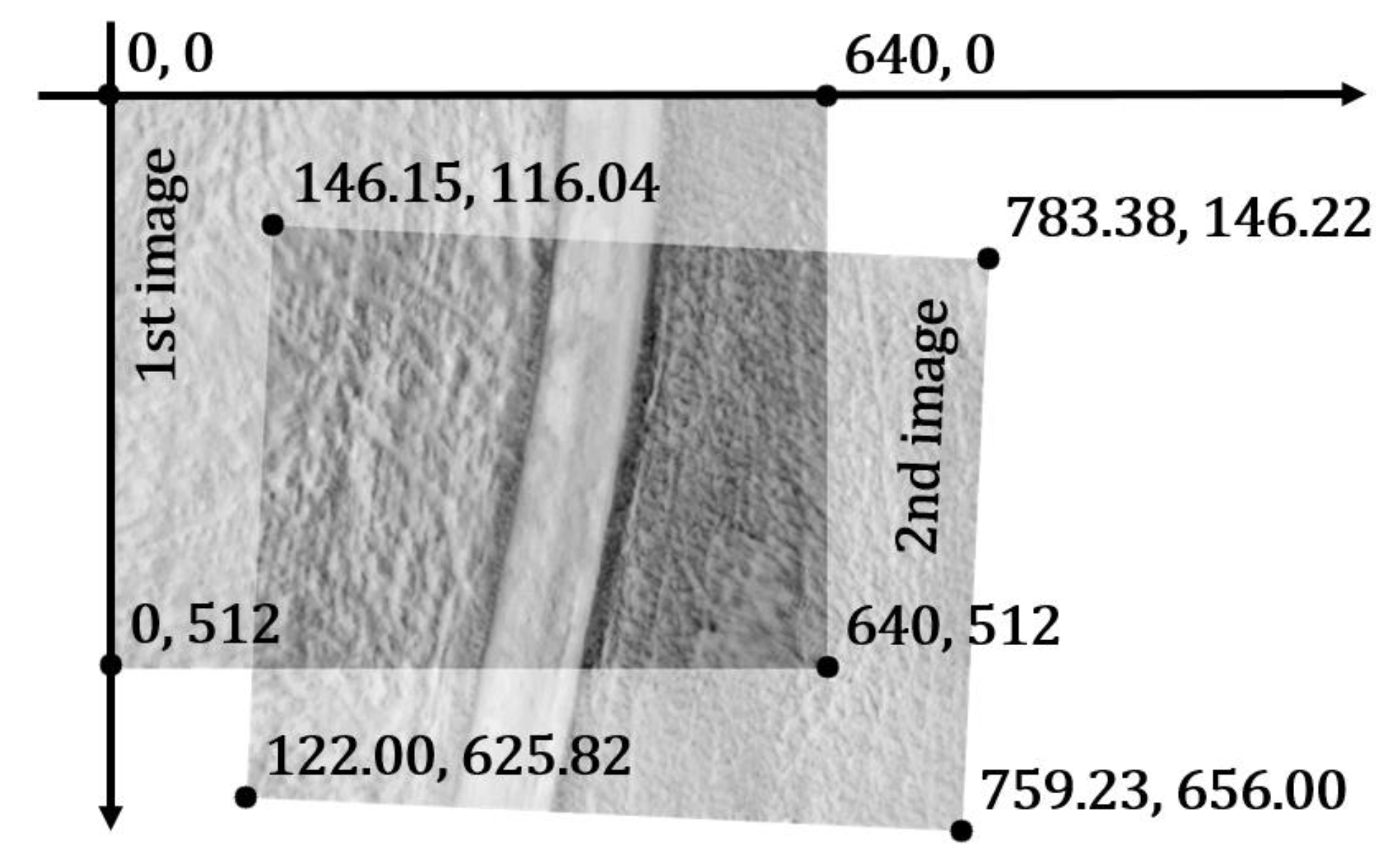
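As a minimal sketch of what a vignette correction can look like, the code below assumes (this is an illustrative assumption, not necessarily the authors' method) that vignetting acts as an additive, radially symmetric temperature offset, and that averaging many frames of varied scenes leaves mainly that offset pattern. The offset is modelled as v(r) = c0 + c1·r² + c2·r⁴ in the normalised distance r from the image centre, fitted by linear least squares, and its radial part is subtracted from each frame. The function names `radial_coords`, `fit_radial_offset` and `devignette` are hypothetical.

```python
import numpy as np


def radial_coords(shape):
    """Normalised distance of each pixel from the image centre (0 at centre, 1 at the corners)."""
    h, w = shape
    y, x = np.mgrid[0:h, 0:w]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    r = np.hypot(y - cy, x - cx)
    return r / r.max()


def fit_radial_offset(mean_frame):
    """Fit v(r) = c0 + c1*r^2 + c2*r^4 to an averaged frame by linear least squares.

    Hypothetical model: the averaged frame is treated as a flat scene plus an
    additive, radially symmetric vignetting offset.
    """
    r = radial_coords(mean_frame.shape).ravel()
    design = np.column_stack([np.ones_like(r), r**2, r**4])
    coeffs, *_ = np.linalg.lstsq(design, mean_frame.ravel(), rcond=None)
    return coeffs


def devignette(frame, coeffs):
    """Subtract the radial part of the fitted offset; c0 is kept as the scene level."""
    r = radial_coords(frame.shape)
    return frame - (coeffs[1] * r**2 + coeffs[2] * r**4)


# Synthetic usage: a uniform 5 °C scene with an artificial fall-off towards the corners
rng = np.random.default_rng(0)
shape = (120, 160)
r_demo = radial_coords(shape)
frames = [5.0 - 1.5 * r_demo**2 - 0.5 * r_demo**4 + rng.normal(0, 0.1, shape) for _ in range(50)]
coeffs = fit_radial_offset(np.mean(frames, axis=0))
print(coeffs, devignette(frames[0], coeffs).std())
```

A parametric radial model keeps the number of fitted parameters small; a real thermal camera may need a non-radial or multiplicative term, which would change the design matrix.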

2.2.2. Georeferencing

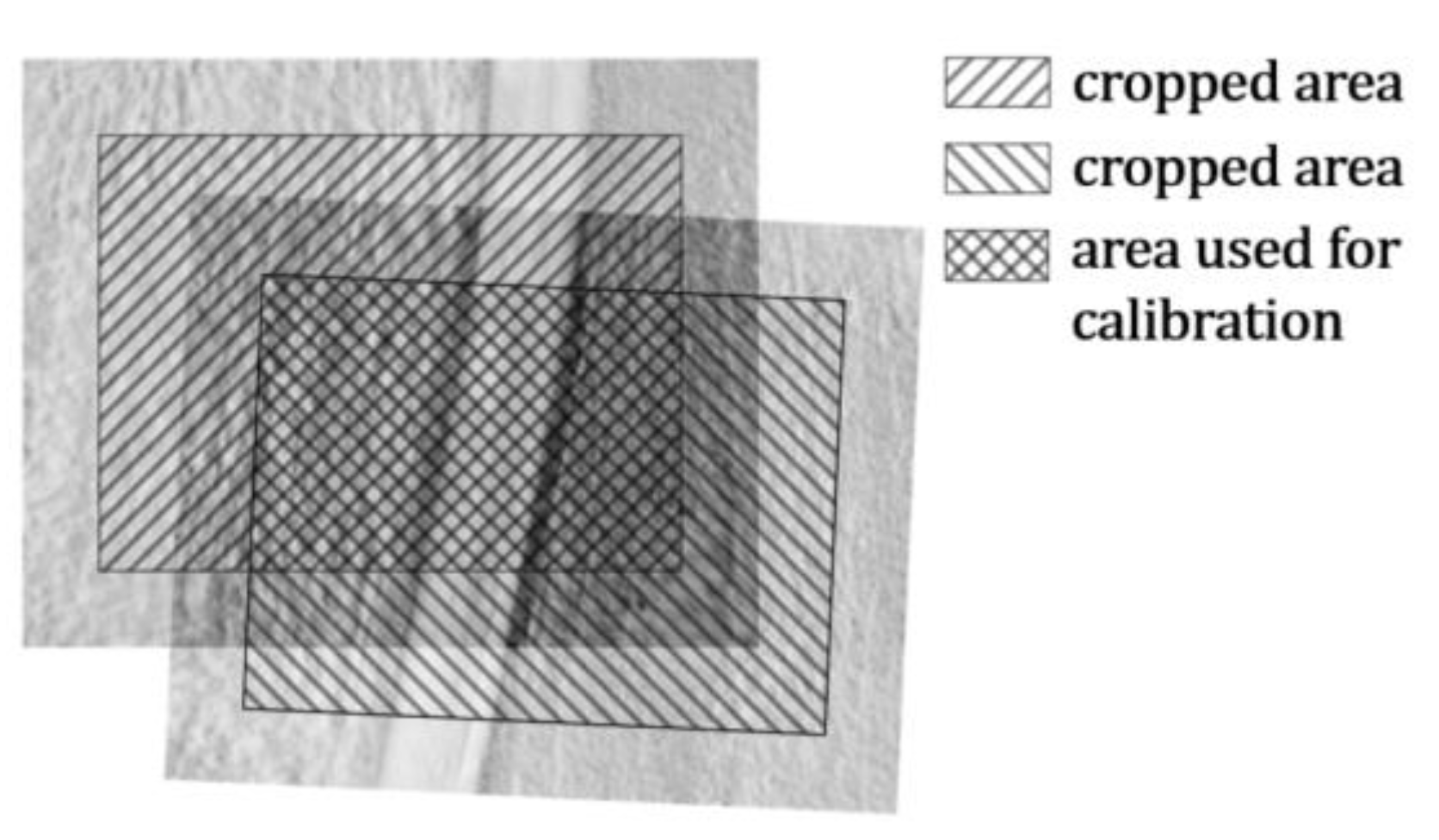

2.2.3. Thermal calibration
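A calibration step of this kind can be illustrated with gradient descent (Section 1.2). The sketch below assumes a linear relation between camera temperatures and reference temperatures and fits its gain and offset by batch gradient descent on the mean squared error. The linear model, the learning rate, the iteration count and the name `fit_gain_offset` are assumptions for illustration, not the authors' documented procedure.

```python
import numpy as np


def fit_gain_offset(t_camera, t_reference, lr=0.1, n_iter=1000):
    """Fit t_reference ≈ gain * t_camera + offset by batch gradient descent on the MSE.

    Camera temperatures are standardised first so that one learning rate suits
    both parameters; the result is mapped back to the original units at the end.
    Assumes t_camera is not constant (its standard deviation must be non-zero).
    """
    x = np.asarray(t_camera, dtype=float)
    y = np.asarray(t_reference, dtype=float)
    mu, sigma = x.mean(), x.std()
    z = (x - mu) / sigma
    a, b = 0.0, 0.0  # slope and intercept in standardised space
    n = len(z)
    for _ in range(n_iter):
        residual = a * z + b - y
        grad_a = 2.0 / n * np.dot(residual, z)  # d(MSE)/da
        grad_b = 2.0 / n * residual.sum()       # d(MSE)/db
        a -= lr * grad_a
        b -= lr * grad_b
    # y = a*(x - mu)/sigma + b  =>  gain = a/sigma, offset = b - a*mu/sigma
    return a / sigma, b - a * mu / sigma


# Synthetic usage: camera readings related to the reference by gain 0.8 and offset 1.5
t_cam = np.linspace(0.0, 10.0, 50)
print(fit_gain_offset(t_cam, 0.8 * t_cam + 1.5))
```

For a purely linear model a closed-form least-squares fit would give the same answer; gradient descent becomes useful when the calibration model is extended with terms that have no closed-form solution.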

2.3. Final processing and result analysis

3. Results

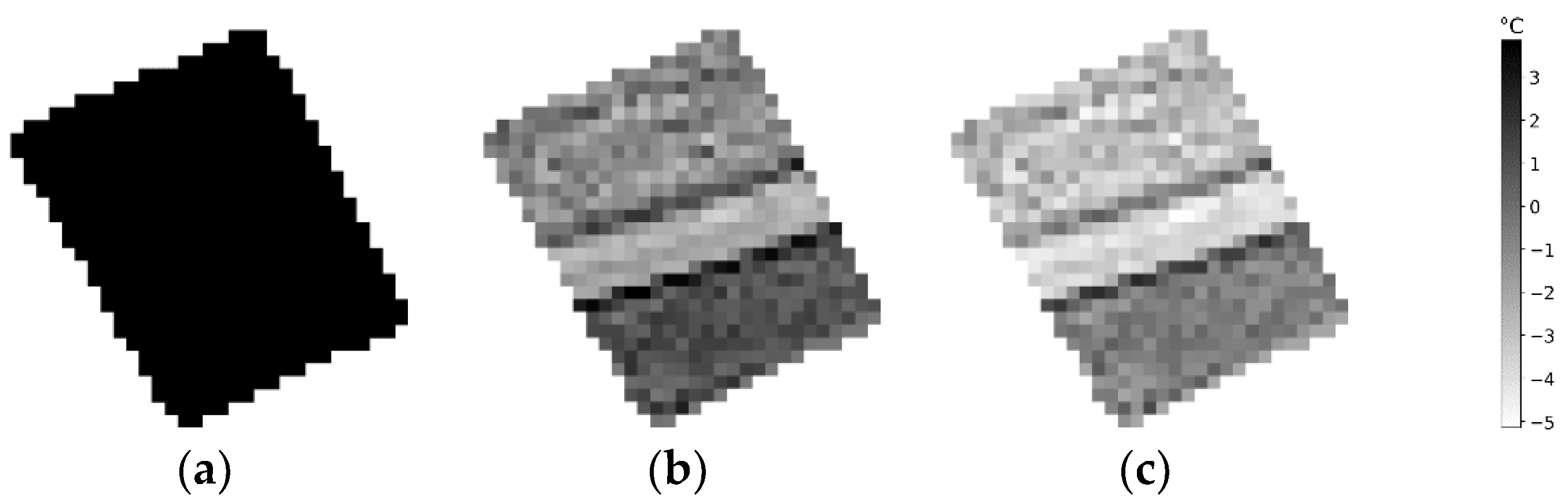

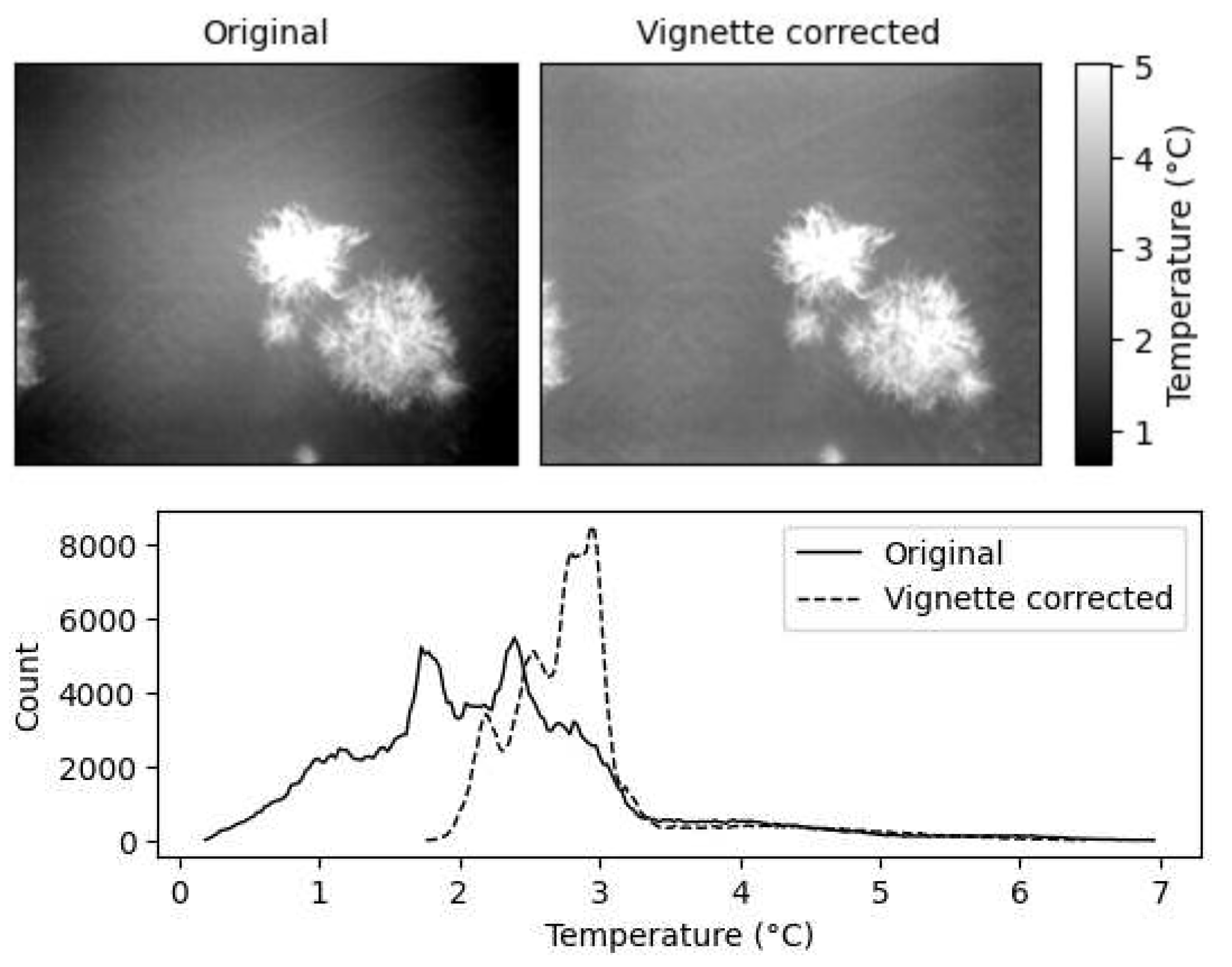

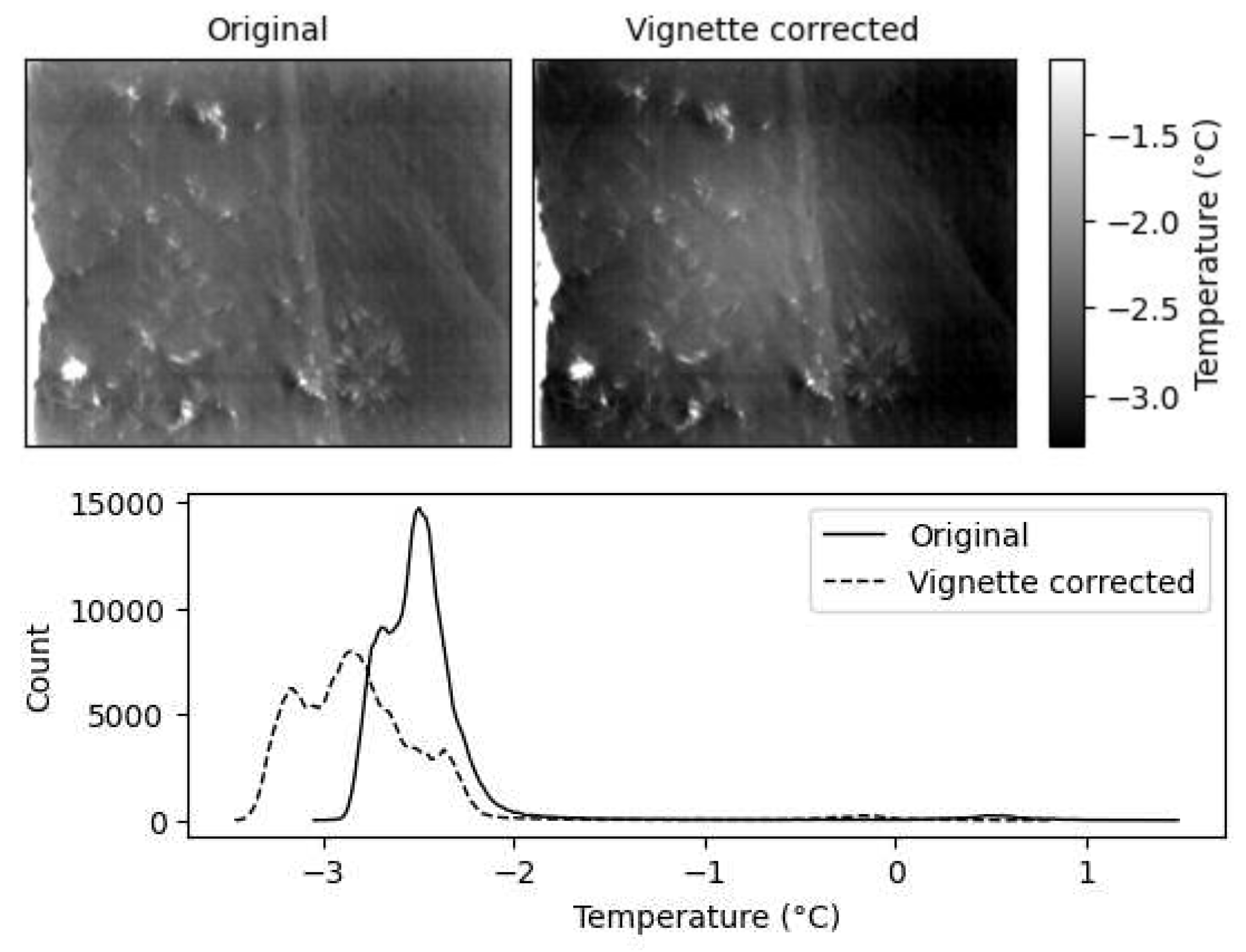

3.1. Vignette correction

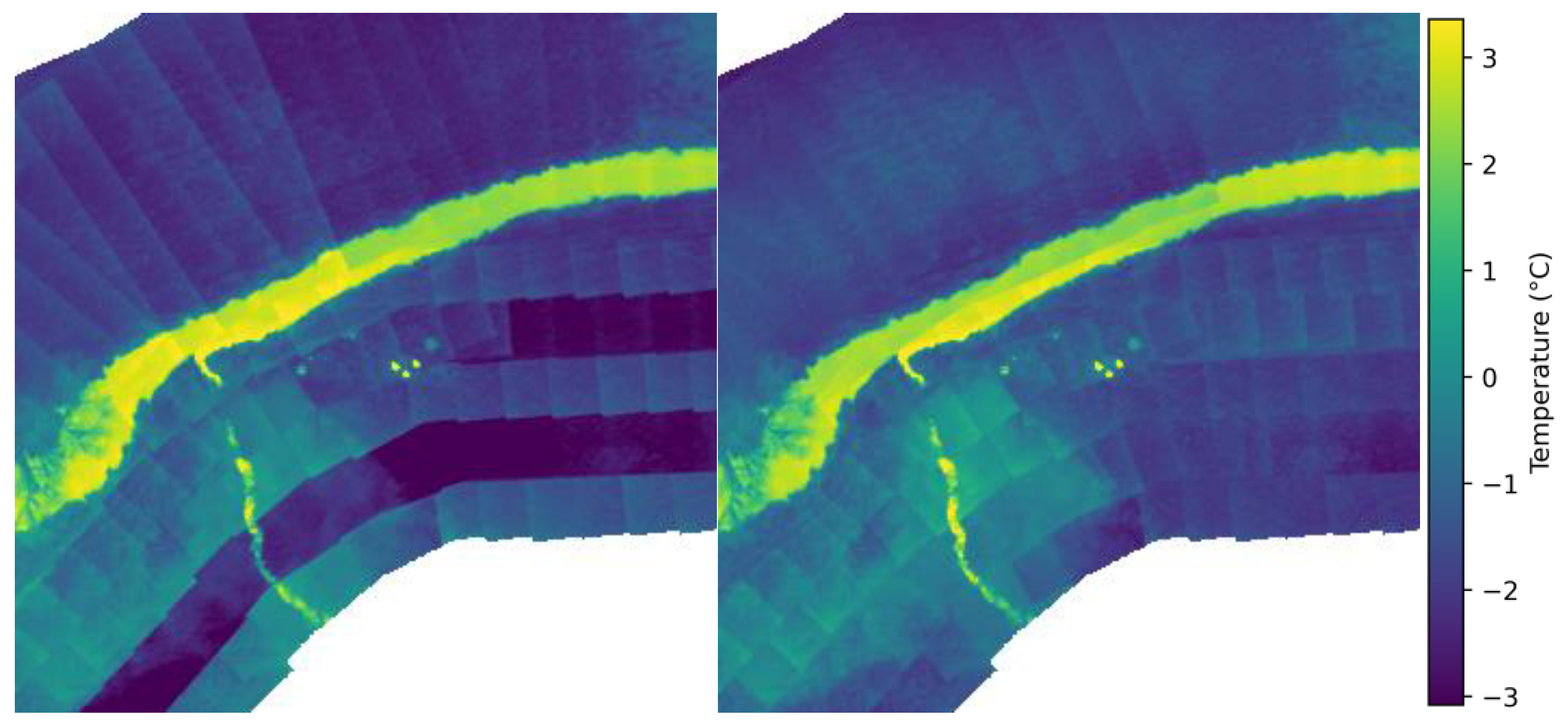

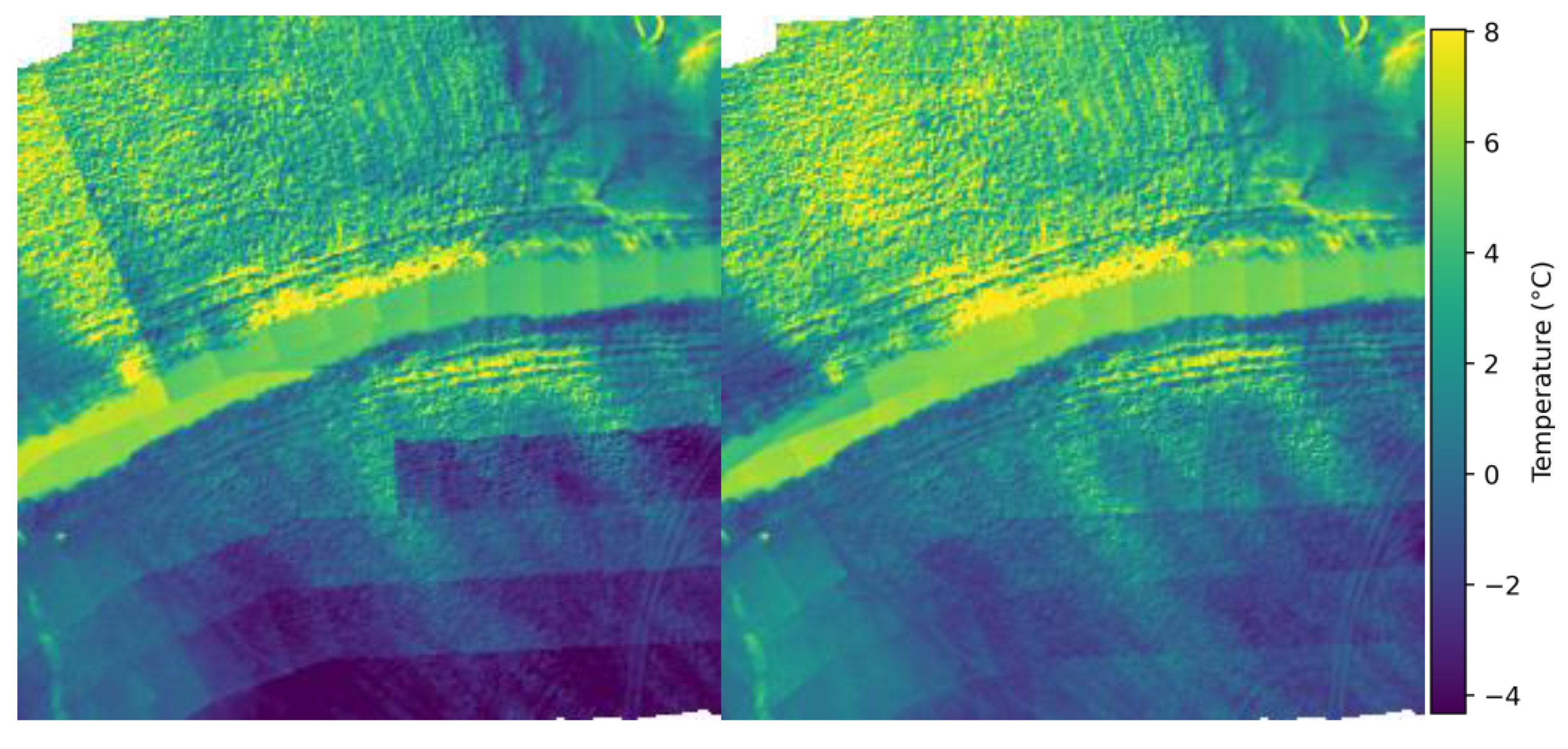

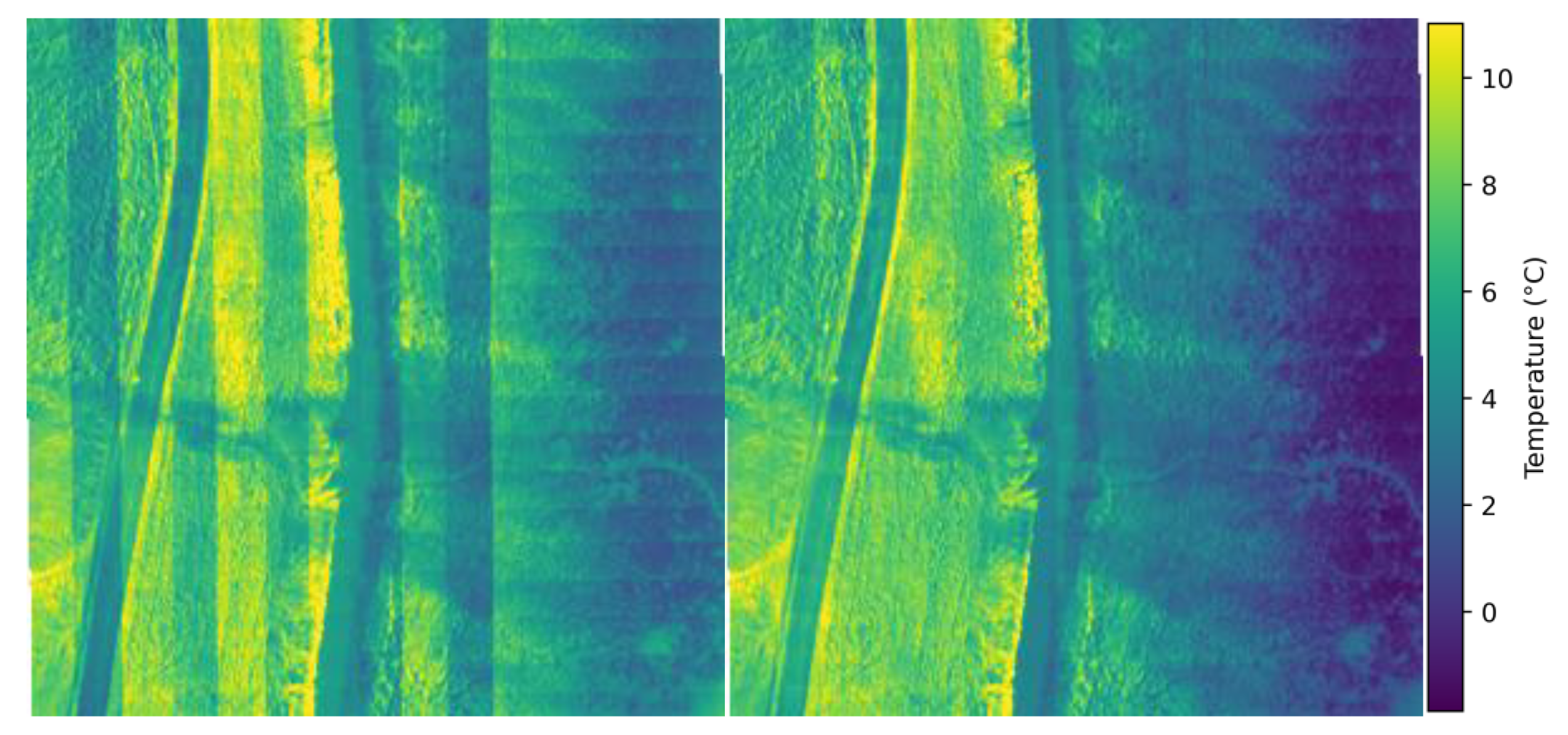

3.2. Visual assessment

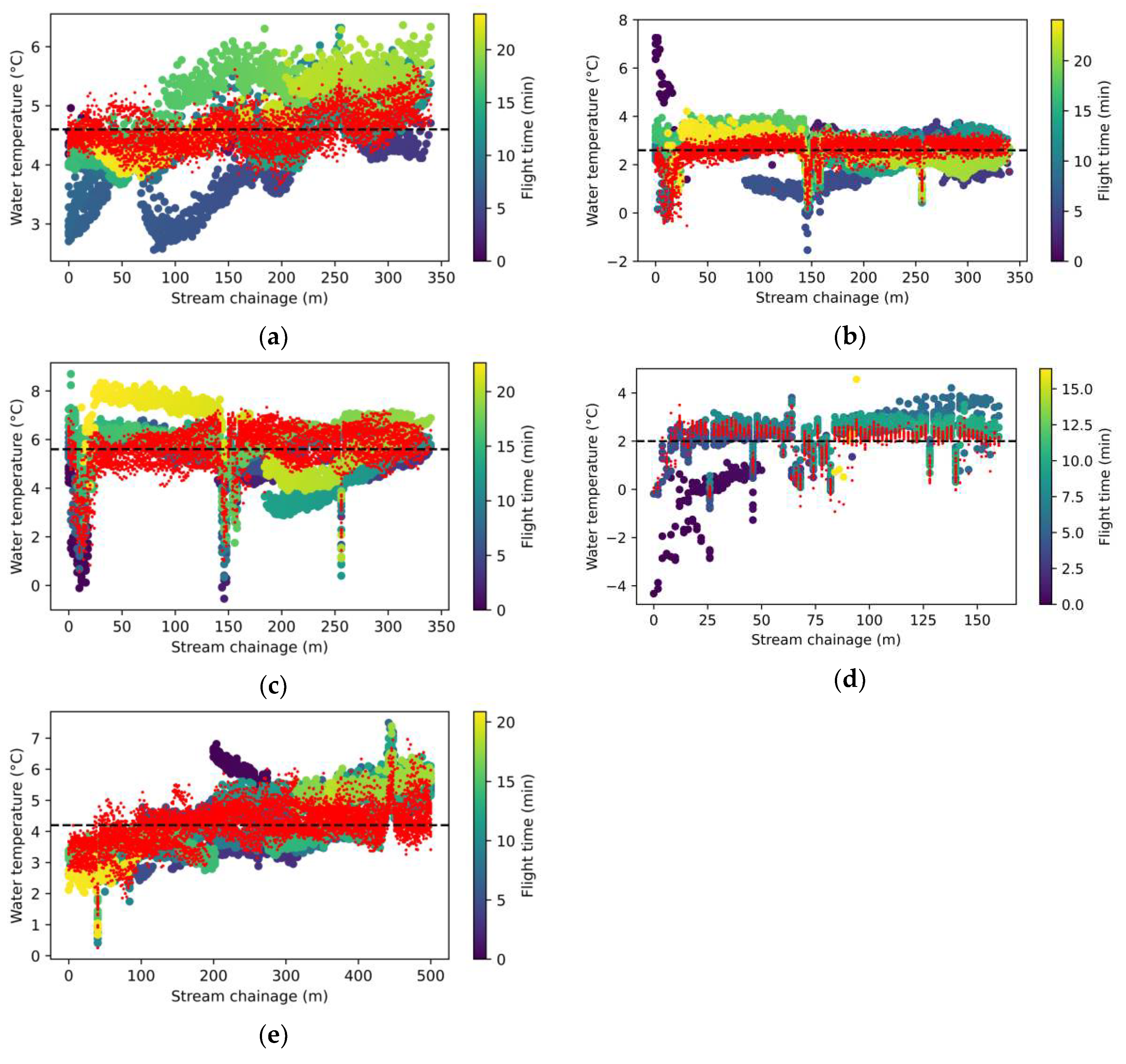

3.3. Waterbody temperature

4. Discussion

4.1. Devignetting

4.2. Visual assessment of mosaics

4.3. Measurement precision and accuracy

4.4. Applications

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Source Code

Appendix A

Conversion notebook

Georeferencing notebook



| Alias | Location | Date and time | Conditions | Water temperature |
|---|---|---|---|---|
| 20211215_kocinka_rybna | Kocinka, Rybna | 15.12.2021 12:20 | Fog, snow cover | 4.6 °C |
| 20220118_kocinka_rybna | Kocinka, Rybna | 18.01.2022 14:55 | Snow cover, total cloud cover | 2.6 °C |
| 20220325_kocinka_rybna | Kocinka, Rybna | 25.03.2022 07:30 | No cloud cover | 5.6 °C |
| 20221220_sudol_krakow | Sudół, Kraków | 20.12.2022 11:20 | Moderate cloud cover | 2.0 °C |
| 20230111_kocinka_grodzisko | Kocinka, Grodzisko | 11.01.2023 | No cloud cover | 4.2 °C |

| Case study | RMSE, uncalibrated (°C) | RMSE, calibrated (°C) | MAE, uncalibrated (°C) | MAE, calibrated (°C) |
|---|---|---|---|---|
| 20211215_kocinka_rybna | 0.679375 | 0.310173 | 0.539997 | 0.249083 |
| 20220118_kocinka_rybna | 0.958521 | 0.528135 | 0.579392 | 0.337545 |
| 20220325_kocinka_rybna | 1.229638 | 0.93813 | 0.894125 | 0.664885 |
| 20221220_sudol_krakow | 1.099734 | 0.703925 | 0.831873 | 0.519501 |
| 20230111_kocinka_grodzisko | 0.96338 | 0.61609 | 0.815541 | 0.458868 |
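The error metrics in the table follow the usual definitions, RMSE = sqrt(mean((T_est - T_ref)^2)) and MAE = mean(|T_est - T_ref|), where T_est is the temperature read from the mosaic and T_ref the reference temperature. The sketch below implements both and, using the RMSE values copied from the table, prints the relative RMSE reduction achieved by calibration in each case study. How mosaic pixels are paired with reference values is left abstract; the function names and the `RMSE_TABLE` dictionary are illustrative.

```python
import numpy as np


def rmse(estimated, reference):
    """Root mean squared error between estimated and reference temperatures."""
    d = np.asarray(estimated, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(d**2)))


def mae(estimated, reference):
    """Mean absolute error between estimated and reference temperatures."""
    d = np.asarray(estimated, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.mean(np.abs(d)))


# RMSE values (uncalibrated, calibrated) copied from the table above
RMSE_TABLE = {
    "20211215_kocinka_rybna": (0.679375, 0.310173),
    "20220118_kocinka_rybna": (0.958521, 0.528135),
    "20220325_kocinka_rybna": (1.229638, 0.93813),
    "20221220_sudol_krakow": (1.099734, 0.703925),
    "20230111_kocinka_grodzisko": (0.96338, 0.61609),
}

for case, (raw, cal) in RMSE_TABLE.items():
    print(f"{case}: RMSE reduced by {100 * (raw - cal) / raw:.1f} %")
```

In every case study both the RMSE and the MAE of the calibrated mosaic are lower than those of the uncalibrated one; RMSE weights large deviations more heavily, so it is always at least as large as the MAE.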

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).