Submitted: 22 February 2024

Posted: 22 February 2024


Abstract

Keywords:

1. Introduction

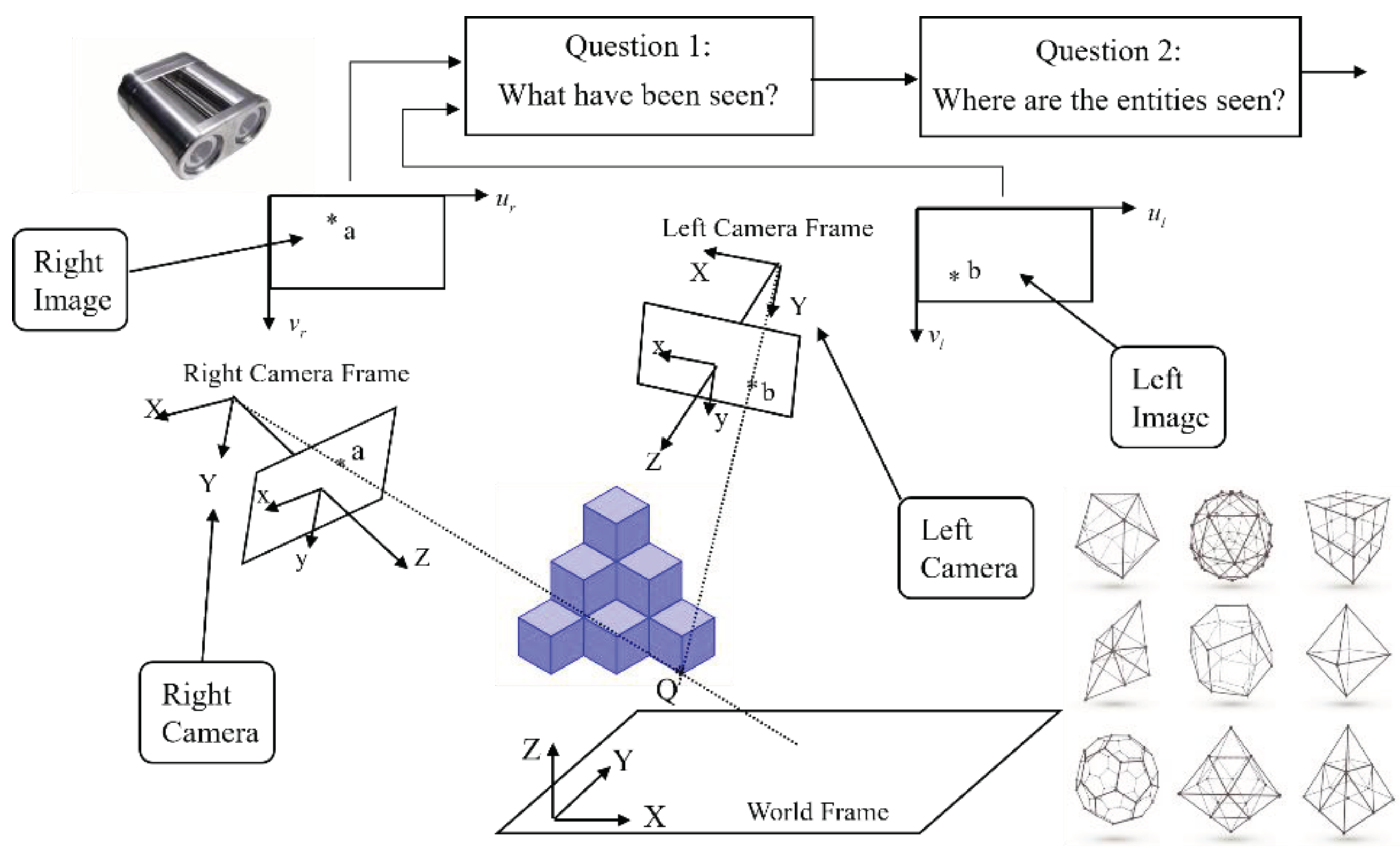

2. Problem Statement

3. Related Works

3.1. Concept of Kinematic Chain

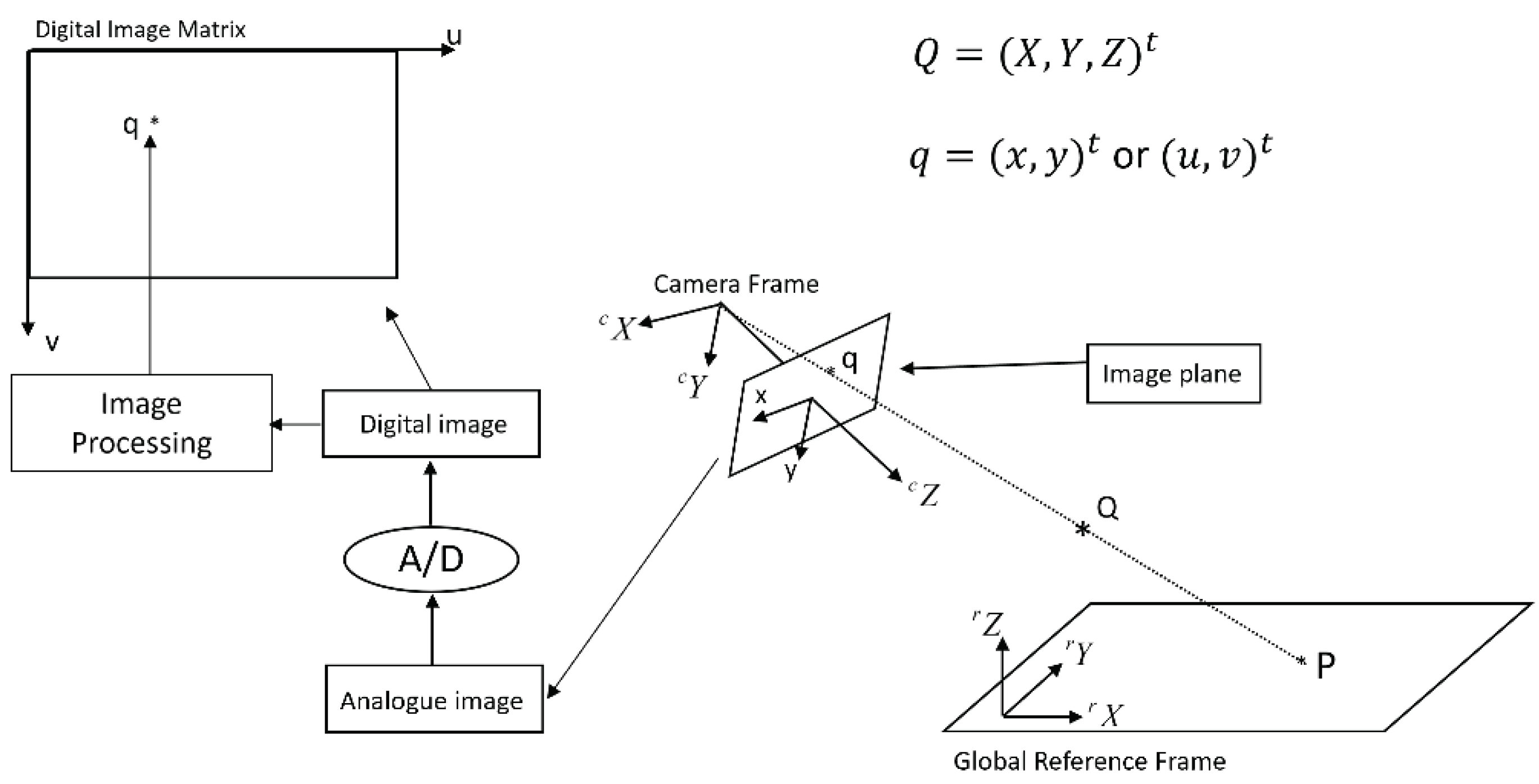

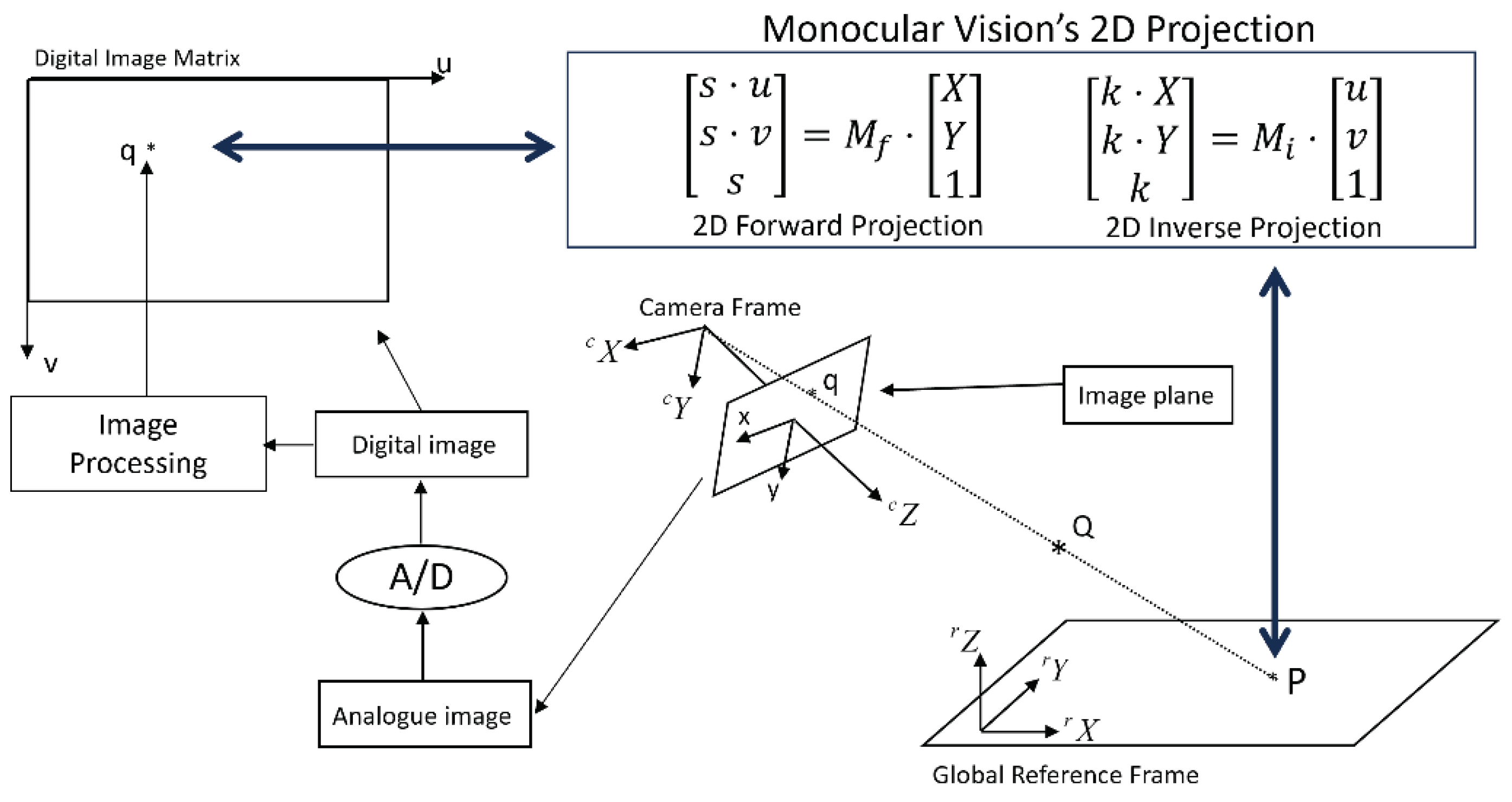
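A kinematic chain is commonly modeled as a product of homogeneous transforms, one per link, so that the pose of the last link in the base frame is T = T_1 T_2 ... T_n. The sketch below illustrates this standard idea under a simplifying assumption: a planar serial arm in which every joint rotates about its local z axis and every link extends along its local x axis. The helper names (`rot_z`, `trans_x`, `forward_kinematics`) are hypothetical and are not taken from the paper.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rot_z(theta: float) -> Matrix:
    """Homogeneous transform: rotation by theta radians about the local z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def trans_x(d: float) -> Matrix:
    """Homogeneous transform: translation by d along the local x axis."""
    return [[1.0, 0.0, 0.0, d],
            [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def forward_kinematics(joint_angles: list[float], link_lengths: list[float]) -> Matrix:
    """Pose of the chain's last frame expressed in the base frame.

    Each link contributes Rz(q_i) * Tx(l_i); links are chained by
    right-multiplication, starting from the identity transform.
    """
    t = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for q, length in zip(joint_angles, link_lengths):
        t = matmul(t, matmul(rot_z(q), trans_x(length)))
    return t
```

For example, `forward_kinematics([math.pi / 2, -math.pi / 2], [1.0, 1.0])` places the end of the second link at approximately (1, 1, 0), read from the translation column of the returned matrix: the first joint turns the chain toward +y and the second turns it back toward +x.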

3.2. Forward Projection Matrix of Camera
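Under the standard pinhole model (assumed here; the paper's own notation may differ), the forward projection matrix of a camera is the 3×4 matrix P = K[R | t], where K holds the intrinsic parameters and (R, t) map world coordinates into the camera frame. A world point (X, Y, Z) maps to the homogeneous image point P(X, Y, Z, 1)^T, and dividing by its third component gives pixel coordinates. The sketch assumes zero skew; `intrinsic_matrix`, `projection_matrix` and `project` are hypothetical helper names.

```python
Matrix = list[list[float]]


def intrinsic_matrix(fx: float, fy: float, u0: float, v0: float) -> Matrix:
    """3x3 intrinsic matrix K, assuming zero skew."""
    return [[fx, 0.0, u0],
            [0.0, fy, v0],
            [0.0, 0.0, 1.0]]


def projection_matrix(k: Matrix, r: Matrix, t: list[float]) -> Matrix:
    """Forward projection matrix P = K [R | t] (3x4)."""
    rt = [r[i] + [t[i]] for i in range(3)]  # 3x4 extrinsic block [R | t]
    return [[sum(k[i][m] * rt[m][j] for m in range(3)) for j in range(4)]
            for i in range(3)]


def project(p: Matrix, point: tuple[float, float, float]) -> tuple[float, float]:
    """Project a 3D world point to pixel coordinates (u, v)."""
    xh = [*point, 1.0]  # homogeneous world point (X, Y, Z, 1)
    u, v, w = (sum(p[i][j] * xh[j] for j in range(4)) for i in range(3))
    return u / w, v / w  # perspective division
```

With f_x = f_y = 800, principal point (320, 240), R = I and t = 0, the point (0.1, -0.05, 2.0) projects to pixel (360, 220).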

3.7. Textbook Solution of Computing 3D Coordinates from Binocular Vision

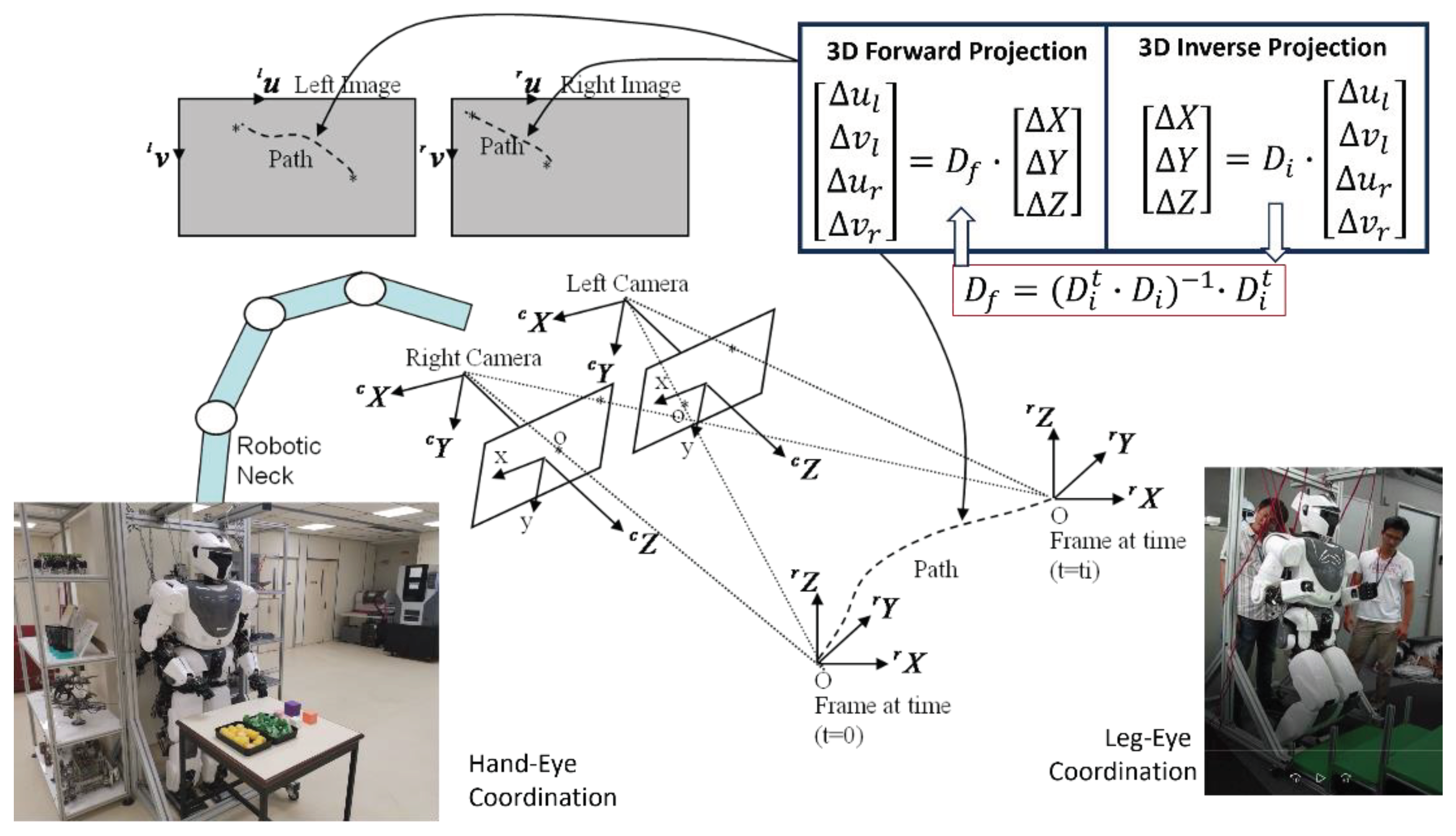

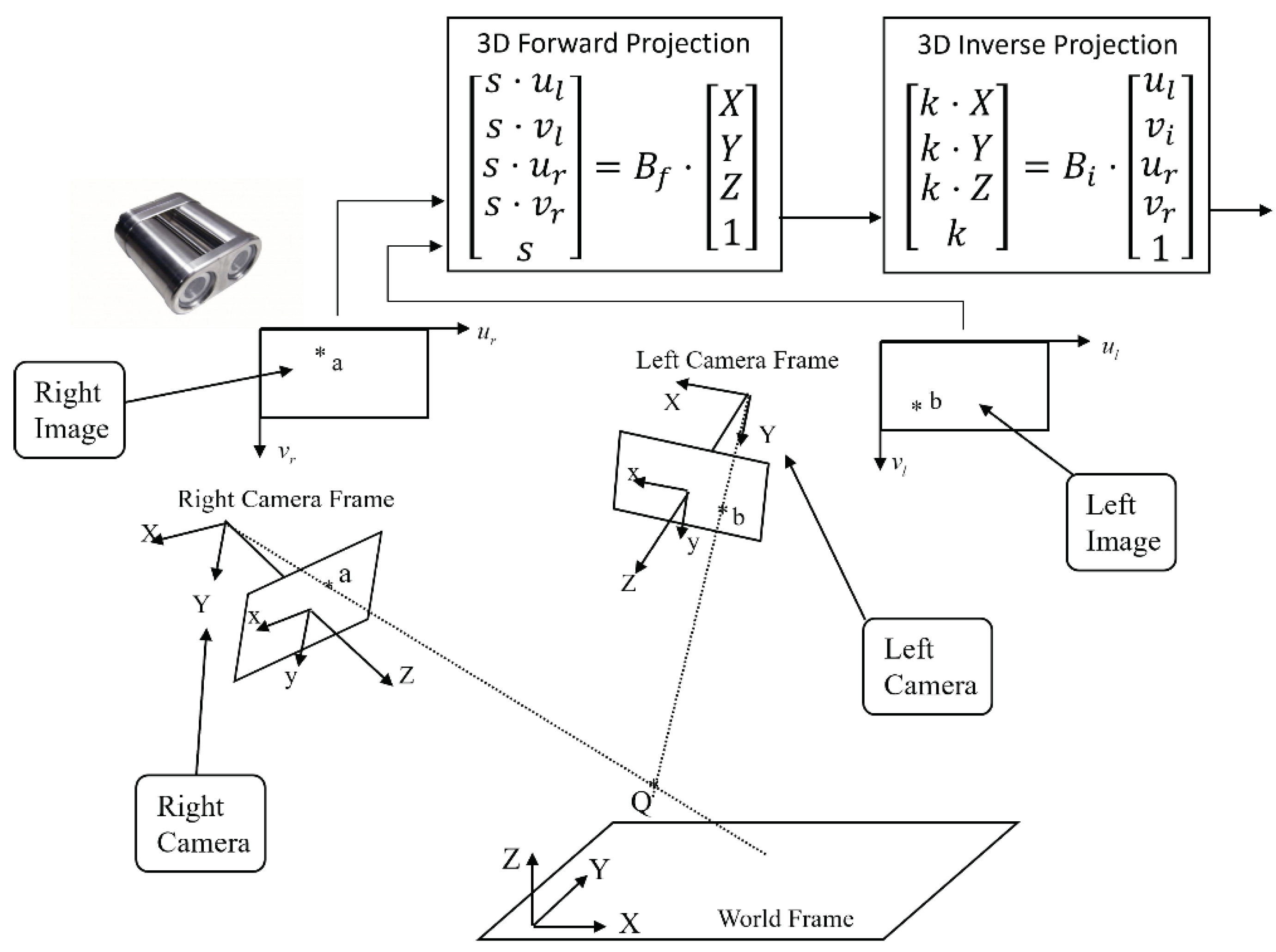
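One common textbook formulation of 3D reconstruction from binocular vision is linear triangulation; it is assumed here, and the textbook solution the paper refers to may differ in detail. Each camera's 3×4 projection matrix P and its matched image point (u, v) give two linear equations in the unknown (X, Y, Z): (u p_3 - p_1)·X̃ = 0 and (v p_3 - p_2)·X̃ = 0, where p_i is the i-th row of P and X̃ = (X, Y, Z, 1). Two cameras therefore give four equations for three unknowns, which the sketch solves in the least-squares sense through the normal equations. The names `triangulate` and `_solve3` are hypothetical.

```python
Matrix = list[list[float]]


def _solve3(a: Matrix, b: list[float]) -> list[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [A | b]
    n = 3
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def triangulate(p1: Matrix, uv1: tuple[float, float],
                p2: Matrix, uv2: tuple[float, float]) -> list[float]:
    """Least-squares 3D point (X, Y, Z) from two projection matrices and a match."""
    rows: list[list[float]] = []
    rhs: list[float] = []
    for p, (u, v) in ((p1, uv1), (p2, uv2)):
        for coord, k in ((u, 0), (v, 1)):
            # (coord * p_3 - p_k) . (X, Y, Z, 1) = 0, with the constant moved right
            rows.append([coord * p[2][j] - p[k][j] for j in range(3)])
            rhs.append(p[k][3] - coord * p[2][3])
    # Normal equations (A^T A) x = A^T b for the 4x3 system A x = b
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * bi for r, bi in zip(rows, rhs)) for i in range(3)]
    return _solve3(ata, atb)
```

For example, with P_1 = K[I | 0] and P_2 = K[I | (-0.1, 0, 0)] (focal length 800, principal point (320, 240)), the matched pixels (360, 220) and (320, 220) triangulate to approximately (0.1, -0.05, 2.0). Because this pair has parallel optical axes, the depth also agrees with the familiar disparity formula Z = fB/d = 800 × 0.1 / 40 = 2. In practice an SVD-based solver is often preferred for numerical robustness; the normal equations are used here only to keep the sketch dependency-free.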

4. Equations of 3D Projection in Human-like Binocular Vision

4.1. Equation of 3D Inverse Projection of Position in Binocular Vision

4.2. Equation of 3D Forward Projection of Position in Binocular Vision

4.3. Equation of 3D Inverse Projection of Displacement of Binocular Vision

4.4. Equation of 3D Forward Projection of Displacement of Binocular Vision

5. Experimental Results

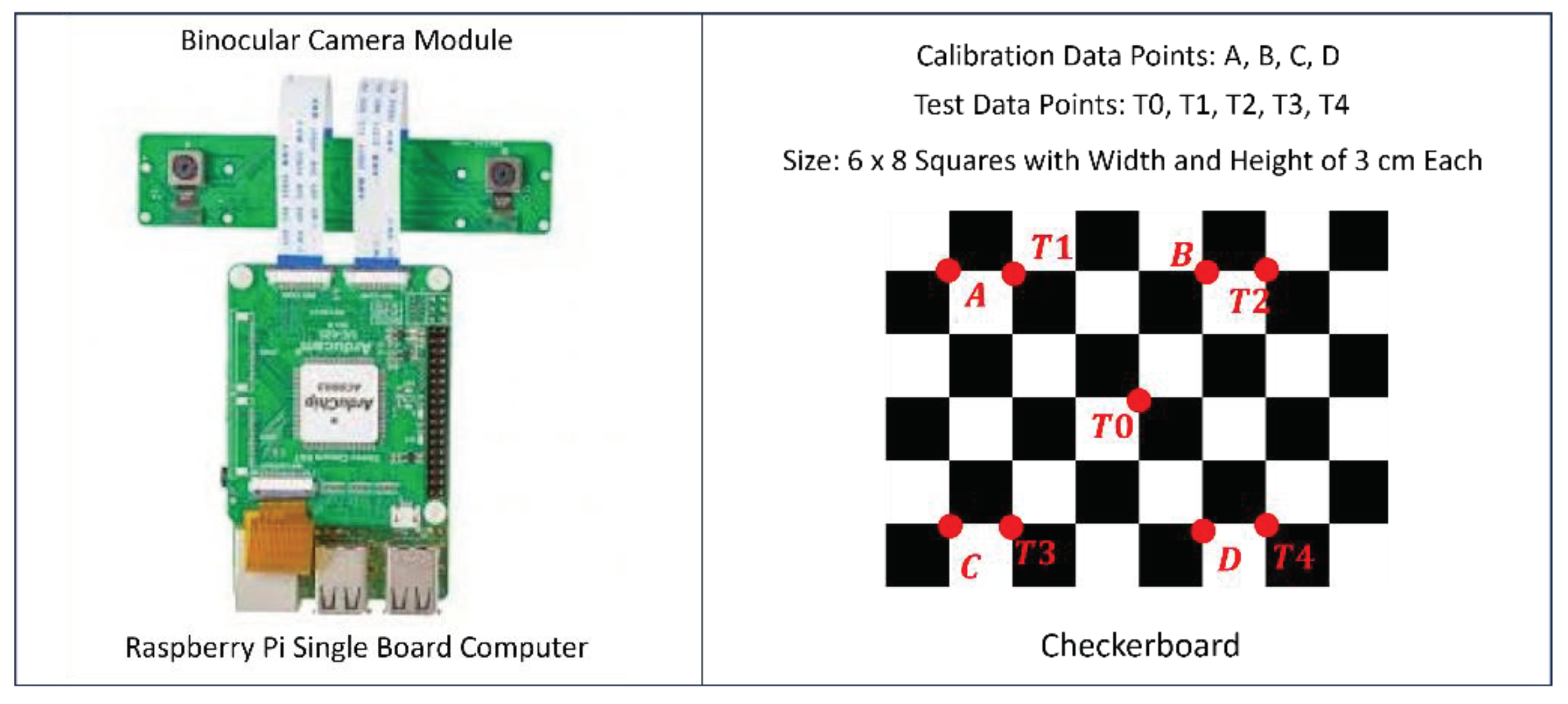

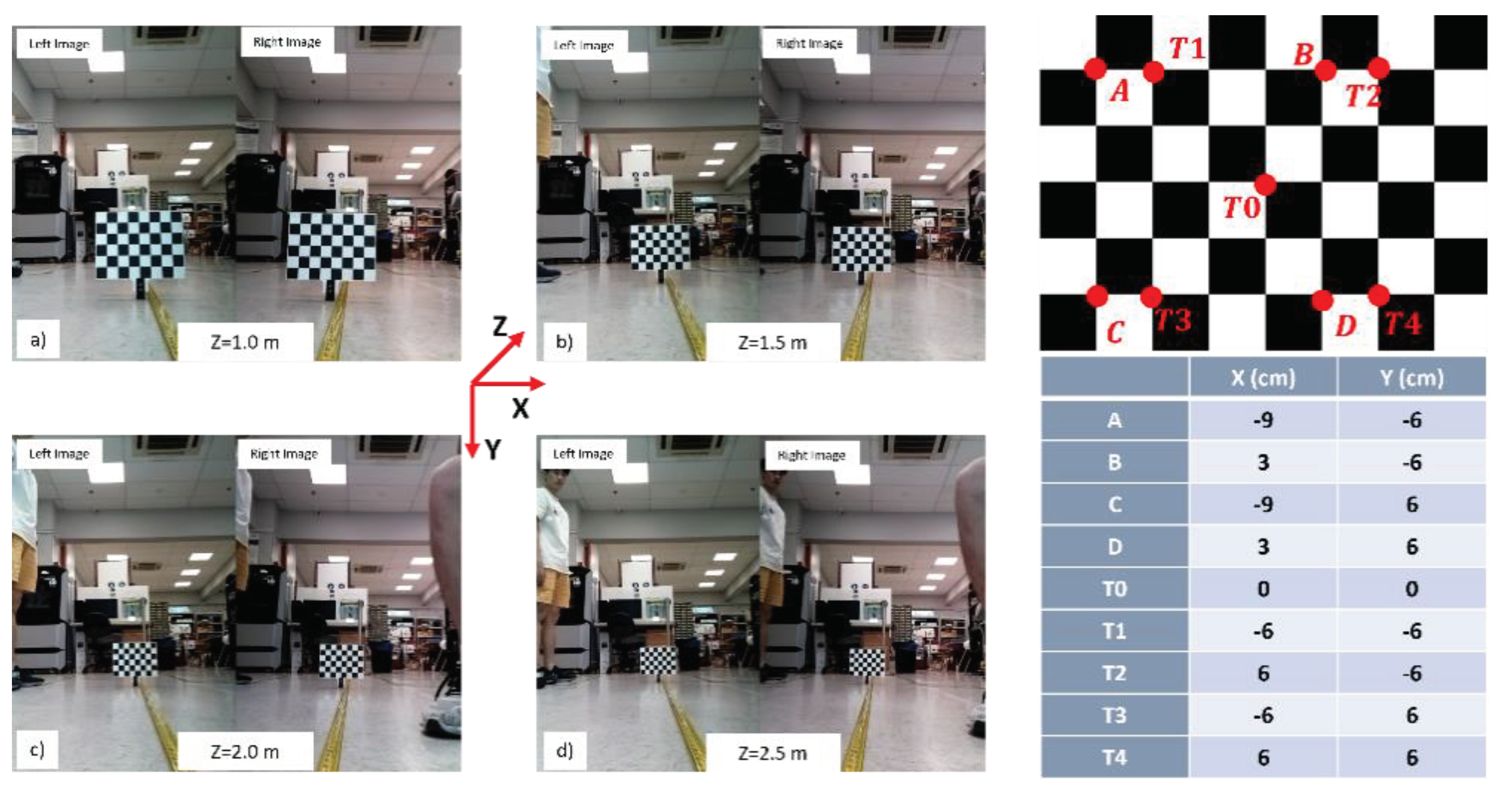

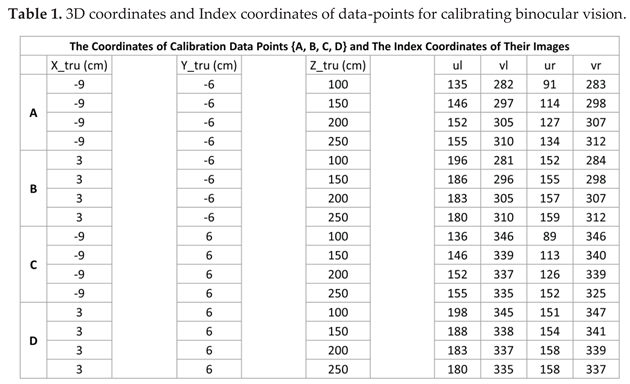

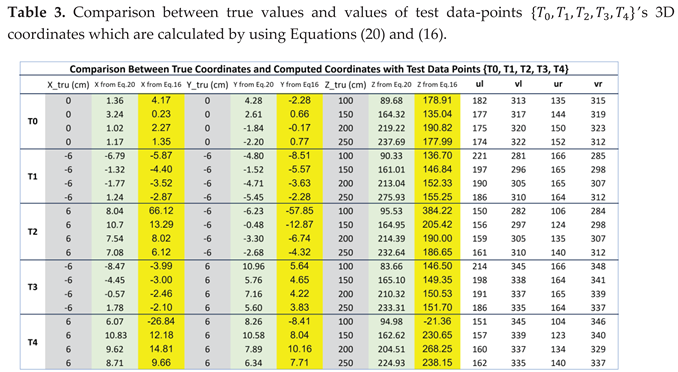

5.1. Real Experiment Validating Equation of 3D Inverse Projection of Position

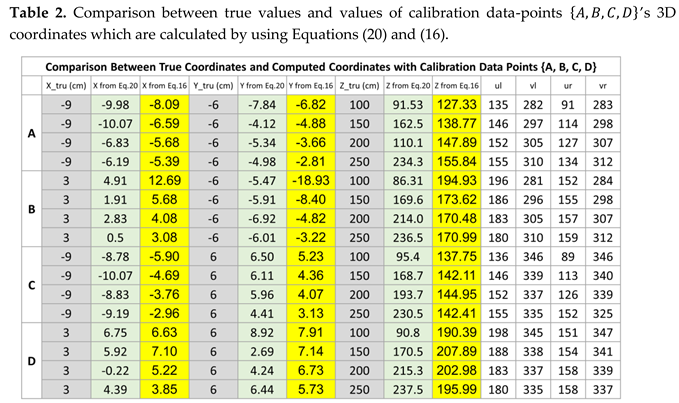

5.2. Comparative Study with Textbook Solution of Computing 3D Coordinates

6. Conclusion

Acknowledgments

References

- Xie, M., Hu, Z. C. and Chen, H. New Foundation of Artificial Intelligence. World Scientific, 2021.

- Horn, B. K. P. Robot Vision. The MIT Press, 1986.

- Tolhurst, D. J. Sustained and transient channels in human vision. Vision Research 1975, 15(10), 1151–1155.

- Fahle, M. and Poggio, T. Visual hyperacuity: spatiotemporal interpolation in human vision. Proceedings of the Royal Society of London, 1981.

- Enns, J. T. and Lleras, A. What’s next? New evidence for prediction in human vision. Trends in Cognitive Sciences 2008, 12, 327–333.

- Laha, B., Stafford, B. K. and Huberman, A. D. Regenerating optic pathways from the eye to the brain. Science 2017, 356, 1031–1034.

- Gregory, R. Eye and Brain: The Psychology of Seeing, Fifth Edition. Princeton University Press, 2015.

- Pugh, A. (editor). Robot Vision. Springer-Verlag, 2013.

- Samani, H. (editor). Cognitive Robotics. CRC Press, 2015.

- Erlhagen, W. and Bicho, E. The dynamic neural field approach to cognitive robotics. Journal of Neural Engineering 2006, 3.

- Cangelosi, A. and Asada, M. Cognitive Robotics. The MIT Press, 2022.

- Faugeras, O. Three-dimensional Computer Vision: A Geometric Viewpoint. The MIT Press, 1993.

- Paragios, N., Chen, Y. M. and Faugeras, O. (editors). Handbook of Mathematical Models in Computer Vision. Springer, 2006.

- Faugeras, O., Luong, Q. T. and Maybank, S. J. Camera self-calibration: Theory and experiments. European Conference on Computer Vision, LNCS Volume 588. Springer, 1992.

- Stockman, G. and Shapiro, L. G. Computer Vision. Prentice Hall, 2001.

- Shirai, Y. Three-Dimensional Computer Vision. Springer, 2012.

- Khan, S., Rahmani, H., Shah, S. A. A. and Bennamoun, M. A Guide to Convolutional Neural Networks for Computer Vision. Springer, 2018.

- Szeliski, R. Computer Vision: Algorithms and Applications. Springer, 2022.

- Brooks, R. New Approaches to Robotics. Science 1991, 253.

- Xie, M. Fundamentals of Robotics: Linking Perception to Action. World Scientific, 2003.

- Siciliano, B. and Khatib, O. Springer Handbook of Robotics. Springer, 2016.

- Murphy, R. Introduction to AI Robotics, Second Edition. The MIT Press, 2019.

- Clarke, T. A. and Fryer, J. G. The development of camera calibration methods and models. The Photogrammetric Record 1998.

- Zhang, Z. Y. A flexible new technique for camera calibration. IEEE Transactions on Pattern Analysis and Machine Intelligence 2000, 22, 1330–1334.

- Wang, Q., Fu, L. and Liu, Z. Z. Review on camera calibration. 2010 Chinese Control and Decision Conference, Xuzhou, 2010, pp. 3354–3358.

- Cui, Y., Zhou, F. Q., Wang, Y. X., Liu, L. and Gao, H. Precise calibration of binocular vision system used for vision measurement. Optics Express 2014, 22, 9134–9149.

- Zhang, Y. J. Camera Calibration. In: 3-D Computer Vision. Springer, Singapore, 2023.

- Fetić, A., Jurić, D. and Osmanković, D. The procedure of a camera calibration using Camera Calibration Toolbox for MATLAB. 2012 Proceedings of the 35th International Convention MIPRO, Opatija, Croatia, 2012, pp. 1752–1757.

- Xu, G., Chen, J. and Li, X. 3-D Reconstruction of Binocular Vision Using Distance Objective Generated From Two Pairs of Skew Projection Lines. IEEE Access 2017, 5, 27272–27280.

- Xu, B. Q. and Liu, C. A 3D reconstruction method for buildings based on monocular vision. Computer-Aided Civil and Infrastructure Engineering 2021, 37, 354–369.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).