Submitted:

06 October 2023

Posted:

09 October 2023

Abstract

Keywords:

1. Introduction

2. Literature Review

2.1. Review of Energy Demand Forecasting in the World

2.2. Energy Demand Forecasting in Türkiye

3. Materials and Methods

3.1. ML Algorithms

- Light Gradient Boosting Machine (LightGBM) [72]

- XGBoost [73]

- Extra Tree Regression [74]

- Passive Aggressive Regressor (PAR) [75]

- Elastic Net [76]

- Least Angle Regression (LARS) [77]

- Lasso Least Angle Regression [78]

- Orthogonal Matching Pursuit (OMP) [79]

- Random Forest Regressor [80]

- Gradient Boosting Regressor [81]

- AdaBoost Regressor [82]

- Linear Regression [83]

- Lasso Regression [84]

- K Neighbors Regressor [85]

- Bayesian Ridge Regression [86]

- Decision Tree Regressor [87]

- Ridge Regression [88]

- Huber Regressor [89]

- Dummy Regressor

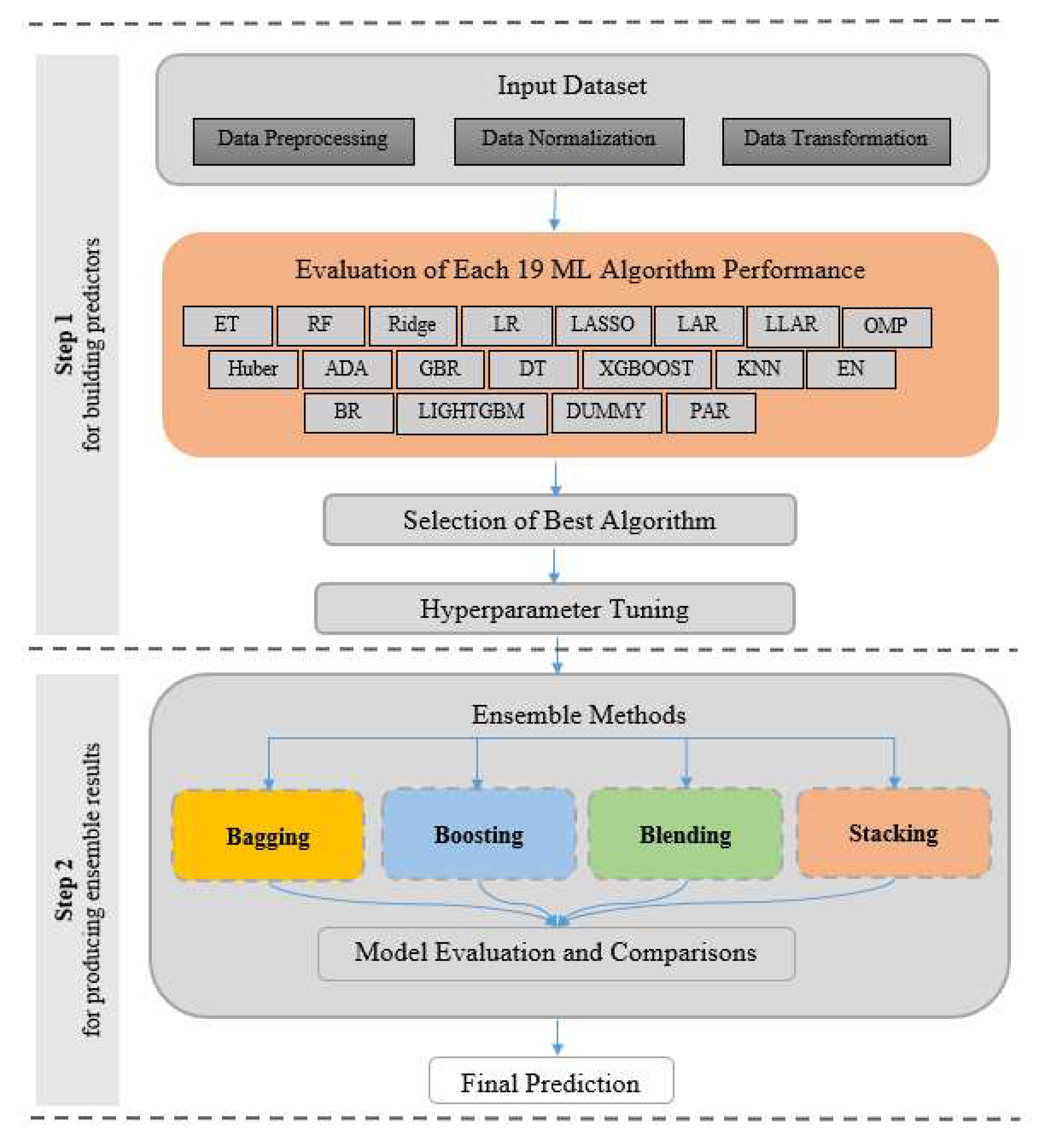

3.2. Structure of the Proposed Methods

3.2.1. K-Fold Cross-Validation
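
The splitting scheme named in this subsection heading can be sketched in plain Python. The helper name `k_fold_indices`, the choice of 5 folds, the shuffle seed, and the use of all 43 annual observations (1979 to 2021) are assumptions made for illustration; the fold count and split actually used in the study are not stated here.

```python
import random


def k_fold_indices(n_samples, n_splits=5, seed=0):
    """Yield (train_idx, test_idx) pairs; every sample is a test point exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [
        n_samples // n_splits + (1 if i < n_samples % n_splits else 0)
        for i in range(n_splits)
    ]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# Hypothetical usage: 43 annual observations split into 5 folds.
folds = list(k_fold_indices(43, n_splits=5))
fold_test_sizes = [len(test_idx) for _, test_idx in folds]
```

Each model would then be fitted on `train_idx` and scored on `test_idx`, and the fold scores averaged.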

3.2.2. Model Hyperparameters Tuning

3.2.3. Performance Metrics
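
For reference, the five metrics reported in the results tables (MAE, MSE, RMSE, R2, MAPE) are usually defined as follows. The symbols are assumptions introduced here: $y_i$ is the observed energy demand, $\hat{y}_i$ the prediction, $\bar{y}$ the mean of the observed values, and $n$ the number of samples. MAPE is written as a fraction rather than a percentage, which matches the magnitude of the tabulated values.

$$
\begin{aligned}
\mathrm{MAE} &= \frac{1}{n}\sum_{i=1}^{n}\left|y_i-\hat{y}_i\right|, &
\mathrm{MSE} &= \frac{1}{n}\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2, &
\mathrm{RMSE} &= \sqrt{\mathrm{MSE}},\\
R^2 &= 1-\frac{\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2}, &
\mathrm{MAPE} &= \frac{1}{n}\sum_{i=1}^{n}\left|\frac{y_i-\hat{y}_i}{y_i}\right|.
\end{aligned}
$$

Under this definition $R^2$ becomes negative whenever a model's squared error exceeds that of predicting the mean of the evaluation data, which is how a constant baseline can score slightly below zero on held-out folds.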

3.3. Data Collection

4. Results and Discussion

4.1. Implementation Setup

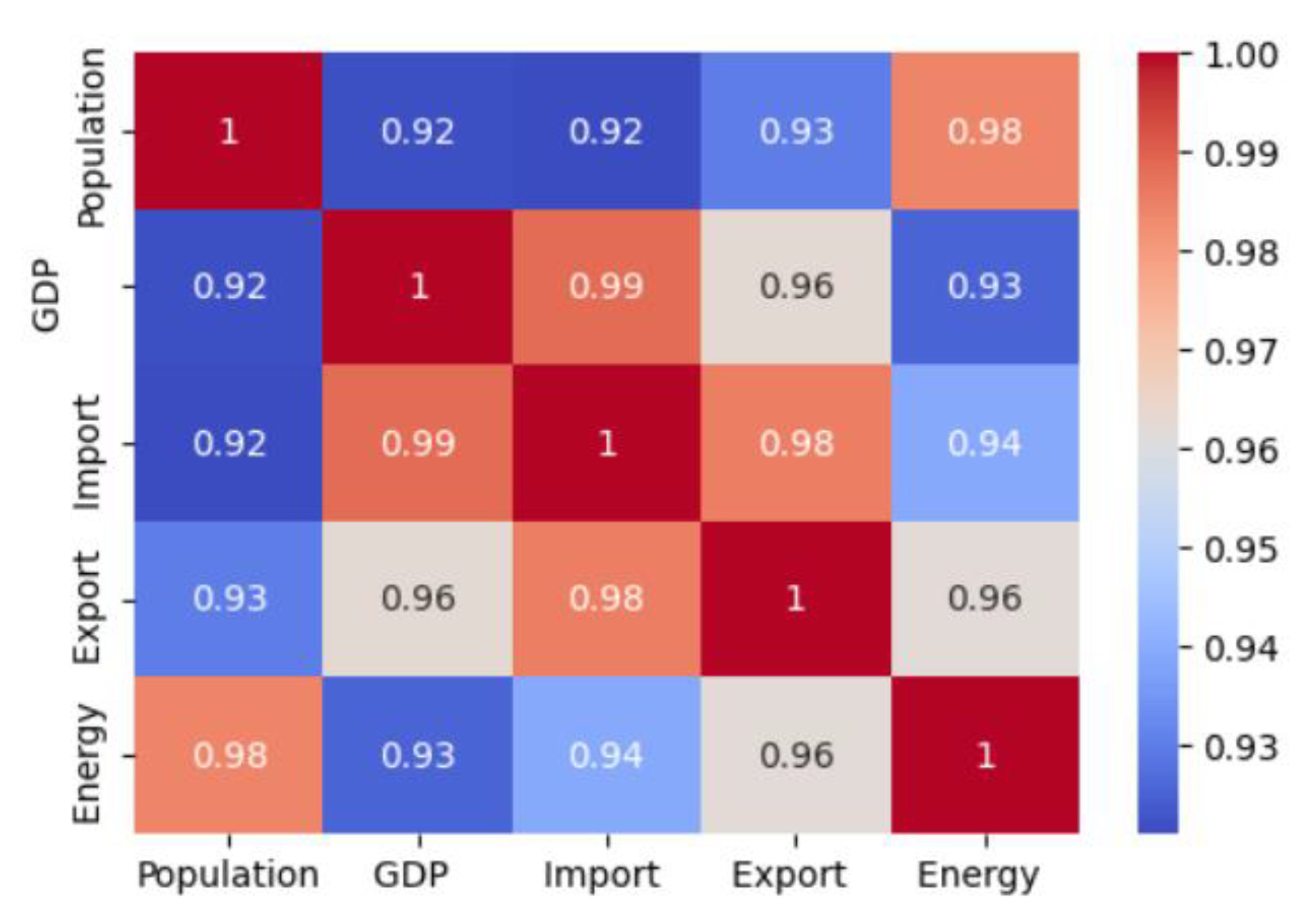

4.2. Feature Selection

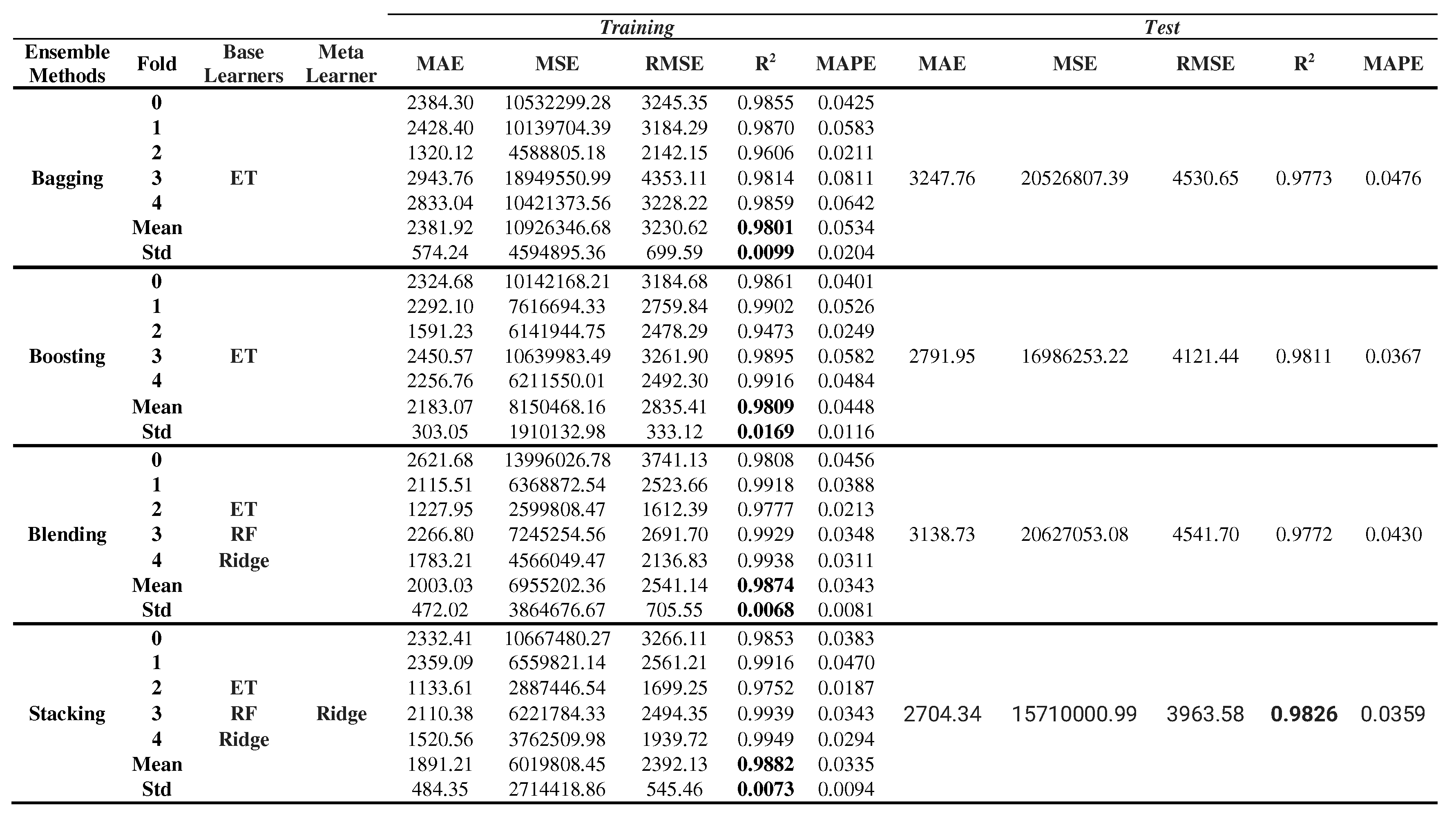

4.3. Performance Evaluation

| ML Algorithm | MAE | MSE | RMSE | R2 | MAPE |

|---|---|---|---|---|---|

| Extra Trees Regressor | 2296.86 | 8756864.57 | 2932.96 | 0.9788 | 0.0464 |

| Random Forest Regressor | 3186.05 | 14777499.11 | 3817.37 | 0.9684 | 0.0658 |

| Ridge Regression | 3676.12 | 21641675.00 | 4466.14 | 0.9655 | 0.0736 |

| Linear Regression | 3780.00 | 23739669.80 | 4668.86 | 0.9635 | 0.0825 |

| Lasso Regression | 3779.85 | 23736214.80 | 4668.54 | 0.9635 | 0.0825 |

| Least Angle Regression | 3780.00 | 23739655.90 | 4668.86 | 0.9635 | 0.0825 |

| Lasso Least Angle Regression | 3779.85 | 23736205.30 | 4668.54 | 0.9635 | 0.0825 |

| Orthogonal Matching Pursuit | 3780.00 | 23739655.90 | 4668.86 | 0.9635 | 0.0825 |

| Huber Regressor | 3828.58 | 22823167.86 | 4595.40 | 0.9634 | 0.0785 |

| AdaBoost Regressor | 3575.50 | 15583915.43 | 3934.88 | 0.9611 | 0.0691 |

| Gradient Boosting Regressor | 3772.78 | 16872570.61 | 4096.12 | 0.9556 | 0.0707 |

| Decision Tree Regressor | 3768.74 | 16856622.84 | 4094.38 | 0.9554 | 0.0707 |

| Extreme Gradient Boosting | 3768.71 | 16856282.40 | 4094.34 | 0.9554 | 0.0706 |

| K Neighbors Regressor | 3987.62 | 29417635.00 | 5274.57 | 0.9493 | 0.0848 |

| Elastic Net | 7402.15 | 81150325.20 | 8721.88 | 0.8666 | 0.1248 |

| Bayesian Ridge | 22303.79 | 705872003.20 | 25684.29 | -0.0970 | 0.4306 |

| Light Gradient Boosting Machine | 22303.79 | 705872041.79 | 25684.29 | -0.0970 | 0.4306 |

| Dummy Regressor | 22303.79 | 705872041.60 | 25684.29 | -0.0970 | 0.4306 |

| Passive Aggressive Regressor | 40863.75 | 2361836689.9 | 48178.33 | -3.9041 | 0.5635 |

| ML Algorithm | MAE | MSE | RMSE | R2 | MAPE |

|---|---|---|---|---|---|

| Extra Trees Regressor | 2989.27 | 17145375.48 | 4140.6975 | 0.9811 | 0.0406 |

5. Conclusions

- The GDP, population, import, export, and energy data between 1979 and 2021 were used, and a strong correlation was observed among them.

- Five statistical metrics (MAE, MSE, RMSE, R2, and MAPE) were used to evaluate the forecasting performance of the algorithms.

- A total of 19 machine learning algorithms were built and analyzed to select models for diverse ensemble combinations.

- Considering all metrics collectively, the stacking ensemble model with a Ridge regressor as the meta-learner outperformed the single ML algorithms as well as the bagging, boosting, and blending models.

- The predicted values show that the stacking ensemble model produced very satisfactory results when compared with the actual energy demand values.

- These ensemble models can readily be adapted and recommended for future energy demand forecasts in other countries. Notably, the stacking ensemble model demonstrates statistically superior results compared to other models, making it a more suitable choice for accurate forecasting.
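
The stacking idea summarized above, with base learners whose out-of-fold predictions feed a Ridge meta-learner, can be sketched with scikit-learn's `StackingRegressor`. This is a minimal illustration and not the authors' pipeline: the choice of Extra Trees and Random Forest as base learners, all hyperparameters, the 3-fold setting, and the nine-year subset of the annual data are assumptions.

```python
import numpy as np
from sklearn.ensemble import (
    ExtraTreesRegressor,
    RandomForestRegressor,
    StackingRegressor,
)
from sklearn.linear_model import Ridge

# Columns: Population (10^6), GDP, Import, Export (10^9 $).
# Rows are a subset of the annual data tabulated in this paper.
X = np.array([
    [43.19, 82.00, 5.07, 2.26],       # 1979
    [49.18, 67.23, 11.34, 7.96],      # 1985
    [54.32, 150.68, 22.30, 12.96],    # 1990
    [59.31, 169.49, 35.71, 21.64],    # 1995
    [64.11, 274.30, 54.50, 27.77],    # 2000
    [68.70, 506.31, 116.77, 73.48],   # 2005
    [73.20, 776.99, 185.54, 113.88],  # 2010
    [79.65, 864.32, 213.62, 150.98],  # 2015
    [84.14, 720.30, 219.52, 169.64],  # 2020
])
# Target: Energy (Mtoe) for the same years.
y = np.array([26.37, 32.73, 42.24, 50.53, 61.60, 70.33, 79.84, 99.47, 113.70])

stack = StackingRegressor(
    estimators=[  # assumed base learners, chosen for illustration
        ("extra_trees", ExtraTreesRegressor(n_estimators=50, random_state=0)),
        ("random_forest", RandomForestRegressor(n_estimators=50, random_state=0)),
    ],
    final_estimator=Ridge(alpha=1.0),  # meta-learner fitted on out-of-fold predictions
    cv=3,
)
stack.fit(X, y)

# Predict energy demand for the 2021 inputs from the data table.
x_2021 = np.array([[84.78, 819.04, 271.42, 225.29]])
pred_2021 = float(stack.predict(x_2021)[0])
```

With so few rows the prediction itself is not meaningful; the sketch only shows how the base learners and the meta-learner are wired together.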

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- World Bank Data, World Development Indicators. Available online: https://www.worldbank.org/en/country/turkey/overview (accessed on 1 June 2023).

- European Commission (2023) Eurostat, EU Energy and Climate Reports. Available online: https://commission.europa.eu/ (accessed on 1 May 2023).

- Republic of Türkiye, Ministry of Foreign Affairs, Türkiye’s International Energy Strategy. Available online: https://www.mfa.gov.tr/turkeys-energy-strategy.en.mfa (accessed on 1 June 2023).

- United Nations. Available online: https://sdgs.un.org/goals (accessed on 1 April 2023).

- Kong, K.G.H.; How, B.S.; Teng, S.Y.; Leong, W.D.; Foo, D.C.; Tan, R.R.; Sunarso, J. Towards data-driven process integration for renewable energy planning. Current Opinion in Chemical Engineering 2021, 31, 100665. [Google Scholar] [CrossRef]

- Singh, S.; Bansal, P.; Hosen, M.; Bansal, S.K. Forecasting annual natural gas consumption in USA: Application of machine learning techniques-ANN and SVM. Resources Policy 2023, 80, 103159. [Google Scholar] [CrossRef]

- Sözen, A. Future projection of the energy dependency of Turkey using artificial neural network. Energy policy 2009, 37, 4827–4833. [Google Scholar] [CrossRef]

- Panklib, K.; Prakasvudhisarn, C.; Khummongkol, D. Electricity consumption forecasting in Thailand using an artificial neural network and multiple linear regression. Energy Sources, Part B: Economics, Planning, and Policy 2005, 10, 427–434. [Google Scholar] [CrossRef]

- Murat, Y.S.; Ceylan, H. Use of artificial neural networks for transport energy demand modeling. Energy policy 2006, 34, 3165–3172. [Google Scholar] [CrossRef]

- Sahraei, M.A.; Çodur, M.K. Prediction of transportation energy demand by novel hybrid meta-heuristic ANN. Energy 2022, 249, 123735. [Google Scholar] [CrossRef]

- Çodur, M.Y.; Ünal, A. An estimation of transport energy demand in Turkey via artificial neural networks. Promet-Traffic&Transportation 2019, 31, 151–161. [Google Scholar]

- Ferrero Bermejo, J.; Gómez Fernández, J.F.; Olivencia Polo, F.; Crespo Márquez, A. A review of the use of artificial neural network models for energy and reliability prediction. A study of the solar PV, hydraulic and wind energy sources. Applied Sciences 2019, 9, 1844. [Google Scholar] [CrossRef]

- Kaya, T.; Kahraman, C. Multicriteria decision making in energy planning using a modified fuzzy TOPSIS methodology. Expert Systems with Applications 2011, 38, 6577–6585. [Google Scholar] [CrossRef]

- Azadeh, A.; Asadzadeh, S.M.; Ghanbari, A. An adaptive network-based fuzzy inference system for short-term natural gas demand estimation: Uncertain and complex environments. Energy Policy 2010, 38, 1529–1536. [Google Scholar] [CrossRef]

- Guevara, E.; Babonneau, F.; Homem-de-Mello, T.; Moret, S. A machine learning and distributionally robust optimization framework for strategic energy planning under uncertainty. Applied Energy 2020, 271, 115005. [Google Scholar] [CrossRef]

- Erdogdu, E. Electricity demand analysis using cointegration and ARIMA modelling: A case study of Turkey. Energy Policy 2007, 35, 1129–1146. [Google Scholar] [CrossRef]

- Ünler, A. Improvement of energy demand forecasts using swarm intelligence: The case of Turkey with projections to 2025. Energy Policy 2008, 36, 1937–1944. [Google Scholar] [CrossRef]

- Hamzaçebi, C. Forecasting of Turkey’s net electricity energy consumption on sectoral bases. Energy Policy 2007, 35, 2009–2016. [Google Scholar] [CrossRef]

- Kavaklioglu, K. Modeling and prediction of Turkey’s electricity consumption using Support Vector Regression. Applied Energy 2011, 88, 368–375. [Google Scholar] [CrossRef]

- Hotunoglu, H.; Karakaya, E. Forecasting Turkey’s Energy Demand Using Artificial Neural Networks: Three Scenario Applications. Ege Academic Review 2011, 11, 87–94. [Google Scholar]

- Utgikar, V.P.; Scott, J.P. Energy forecasting: Predictions, reality and analysis of causes of error. Energy Policy 2006, 34, 3087–3092. [Google Scholar] [CrossRef]

- El-Telbany, M.; El-Karmi, F. Short-term forecasting of Jordanian electricity demand using particle swarm optimization. Electr Power Syst Res 2008, 78, 425–433. [Google Scholar] [CrossRef]

- Zhang, Z.; Wu, C.; Qu, S.; Chen, X. An explainable artificial intelligence approach for financial distress prediction. Information Processing & Management 2022, 59, 102988. [Google Scholar]

- Hewage, P.; Trovati, M.; Pereira, E.; Behera, A. Deep learning-based effective fine-grained weather forecasting model. Pattern Analysis and Applications 2021, 24, 343–366. [Google Scholar] [CrossRef]

- Suganthi, L.; Samuel, A.A. Energy models for demand forecasting—A review. Renewable and sustainable energy reviews 2012, 16, 1223–1240. [Google Scholar] [CrossRef]

- Bao, Y.; Hilary, G.; Ke, B. Artificial intelligence and fraud detection. Innovative Technology at the Interface of Finance and Operations 2022, 1, 223–247. [Google Scholar]

- Mohammed, A.; Kora, R. A comprehensive review on ensemble deep learning: Opportunities and challenges. Journal of King Saud University-Computer and Information Sciences 2023. [Google Scholar] [CrossRef]

- Dietterich, T.G. Ensemble methods in machine learning. In International Workshop on Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2000; pp. 1–15. [Google Scholar]

- Hategan, S.M.; Stefu, N.; Paulescu, M. An Ensemble Approach for Intra-Hour Forecasting of Solar Resource. Energies 2023, 16, 6608. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. 2009, Springer.

- Breiman, L. Bagging Predictors. Machine Learning 1996, 24, 123–140. [Google Scholar] [CrossRef]

- Friedman, J.H. Greedy Function Approximation: A Gradient Boosting Machine. The Annals of Statistics 2001, 29, 1189–1232. [Google Scholar] [CrossRef]

- Wolpert, D.H. Stacked Generalization. Neural Networks 1992, 5, 241–259. [Google Scholar] [CrossRef]

- Dietterich, T.G. Ensemble Methods in Machine Learning. International Workshop on Multiple Classifier Systems 2000, 1–15. [Google Scholar]

- World Energy Outlook IEA (2022). International Energy Agency, Paris. Available online: https://www.iea.org/data-and-statistics/data-product/world-energybalances#energy-balances (accessed on 1 April 2022).

- Zhang, M.; Mu, H.; Li, G.; Ning, Y. Forecasting the transport energy demand based on PLSR method in China. Energy 2009, 34, 1396–1400. [Google Scholar] [CrossRef]

- Kumar, U.; Jain, V.K. Time series models (Grey-Markov, Grey Model with rolling mechanism and singular spectrum analysis) to forecast energy consumption in India. Energy 2010, 35, 1709–1716. [Google Scholar] [CrossRef]

- Chaturvedi, S.; Rajasekar, E.; Natarajan, S.; McCullen, N.A. Comparative assessment of SARIMA, LSTM RNN and Fb Prophet models to forecast total and peak monthly energy demand for India. Energy Policy 2022, 168. [Google Scholar] [CrossRef]

- Sahraei, M.A.; Duman, H.; Çodur, M.Y.; Eyduran, E. Prediction of transportation energy demand: multivariate adaptive regression splines. Energy 2021, 224, 120090. [Google Scholar] [CrossRef]

- Javanmard, M.E.; Ghaderi, S.F. Energy demand forecasting in seven sectors by an optimization model based on machine learning algorithms. Sustainable Cities and Society 2023, 95. [Google Scholar] [CrossRef]

- Ye, J.; Dang, Y.; Ding, S.; Yang, Y. A novel energy consumption forecasting model combining an optimized DGM (1, 1) model with interval grey numbers. Journal of Cleaner Production 2019, 229, 256–267. [Google Scholar] [CrossRef]

- Zhang, P.; Wang, H. Fuzzy Wavelet Neural Networks for City Electric Energy Consumption Forecasting. Energy Procedia 2012, 17, 1332–1338. [Google Scholar] [CrossRef]

- Mason, K.; Duggan, J.; Howley, E. Forecasting energy demand, wind generation and carbon dioxide emissions in Ireland using evolutionary neural networks. Energy 2018, 155, 705–720. [Google Scholar] [CrossRef]

- Muralitharan, K.; Sakthivel, R.; Vishnuvarthan, R. Neural network based optimization approach for energy demand prediction in smart grid. Neurocomputing 2018, 273, 199–208. [Google Scholar] [CrossRef]

- Yu, S.; Zhu, K.; Zhang, X. Energy demand projection of China using a path-coefficient analysis and PSO–GA approach. Energy Conversion and Management 2012, 53, 142–153. [Google Scholar] [CrossRef]

- Verwiebe, P.A.; Seim, S.; Burges, S.; Schulz, L.; Müller-Kirchenbauer, J. Modeling Energy Demand—A Systematic Literature Review. Energies 2021, 14, 7859. [Google Scholar] [CrossRef]

- Ghalehkhondabi, I.; Ardjmand, E.; Weckman, G.R.; Young, W.A. An overview of energy demand forecasting methods published in 2005–2015. Energy Systems 2017, 8, 411–447. [Google Scholar] [CrossRef]

- Aslan, M. Archimedes optimization algorithm based approaches for solving energy demand estimation problem: a case study of Turkey. Neural Computing and Applications 2023, 35, 19627–19649. [Google Scholar] [CrossRef]

- Korkmaz, E. Energy demand estimation in Turkey according to modes of transportation: Bezier search differential evolution and black widow optimization algorithms-based model development and application. Neural Computing and Applications 2023, 35, 7125–7146. [Google Scholar] [CrossRef]

- Aslan, M.; Beşkirli, M. Realization of Turkey’s energy demand forecast with the improved arithmetic optimization algorithm. Energy Reports 2022, 8, 18–32. [Google Scholar] [CrossRef]

- Ağbulut, Ü. Forecasting of transportation-related energy demand and CO2 emissions in Turkey with different machine learning algorithms. Sustainable Production and Consumption 2022, 29, 141–157. [Google Scholar] [CrossRef]

- Özdemir, D.; Dörterler, S.; Aydın, D. A new modified artificial bee colony algorithm for energy demand forecasting problem. Neural Computing and Applications 2022, 34, 17455–17471. [Google Scholar] [CrossRef]

- Özkış, A. A new model based on vortex search algorithm for estimating energy demand of Turkey. Pamukkale University Journal of Engineering Sciences 2020, 26, 959–965. [Google Scholar] [CrossRef]

- Tefek, M.F.; Uğuz, H.; Güçyetmez, M. A new hybrid gravitational search–teaching–learning-based optimization method for energy demand estimation of Turkey. Neural Computing and Applications 2019, 31, 2939–2954. [Google Scholar] [CrossRef]

- Beskirli, A.; Beskirli, M.; Hakli, H.; Uguz, H. Comparing energy demand estimation using artificial algae algorithm: The case of Turkey. Journal of Clean Energy Technologies 2018, 6, 349–352. [Google Scholar] [CrossRef]

- Cayir Ervural, B.; Ervural, B. Improvement of grey prediction models and their usage for energy demand forecasting. Journal of Intelligent & Fuzzy Systems 2018, 34, 2679–2688. [Google Scholar]

- Koç, İ.; Nureddin, R.; Kahramanlı, H. Implementation of GSA (Gravitation Search Algorithm) and IWO (Invasive Weed Optimization) for The Prediction of The Energy Demand in Turkey Using Linear Form. Selcuk Univ. J. Eng. Sci. Tech. 2018, 6, 529–543. [Google Scholar]

- Özturk, S.; Özturk, F. Forecasting energy consumption of Turkey by Arima model. Journal of Asian Scientific Research 2018, 8, 52. [Google Scholar] [CrossRef]

- Beskirli, M.; Hakli, H.; Kodaz, H. The energy demand estimation for Turkey using differential evolution algorithm. Sādhanā 2017, 42, 1705–1715. [Google Scholar] [CrossRef]

- Daş, G.S. Forecasting the energy demand of Turkey with a NN based on an improved Particle Swarm Optimization. Neural Computing and Applications 2017, 28, 539–549. [Google Scholar] [CrossRef]

- Kankal, M.; Uzlu, E. Neural network approach with teaching–learning-based optimization for modeling and forecasting long-term electric energy demand in Turkey. Neural Computing and Applications 2017, 28, 737–747. [Google Scholar] [CrossRef]

- Uguz, H.; Hakli, H.; Baykan, Ö.K. A new algorithm based on artificial bee colony algorithm for energy demand forecasting in Turkey. In 2015 4th International Conference on Advanced Computer Science Applications and Technologies (ACSAT) 2015, 56–61. [Google Scholar]

- Tutun, S.; Chou, C.A.; Canıyılmaz, E. A new forecasting for volatile behavior in net electricity consumption: a case study in Turkey. Energy 2015, 93, 2406–2422. [Google Scholar] [CrossRef]

- Kıran, M.S.; Özceylan, E.; Gündüz, M.; Paksoy, T. Swarm intelligence approaches to estimate electricity energy demand in Turkey. Knowl Based Syst 2012, 36, 93–103. [Google Scholar] [CrossRef]

- Kankal, M.; Akpınar, A.; Kömürcü, M.İ.; Özşahin, T.Ş. Modeling and forecasting of Turkey’s energy consumption using socio-economic and demographic variables. Applied Energy 2011, 88, 1927–1939. [Google Scholar] [CrossRef]

- Ediger, V.S.; Akar, S. ARIMA forecasting of primary energy demand by fuel in Turkey. Energy Policy 2007, 35, 1701–1708. [Google Scholar] [CrossRef]

- Toksari, M.D. Ant colony optimization approach to estimate energy demand of Turkey. Energy Policy 2007, 35, 3984–3990. [Google Scholar] [CrossRef]

- Sözen, A.; Arcaklioğlu, E.; Özkaymak, M. Turkey’s net energy consumption. Applied Energy 2005, 81, 209–221. [Google Scholar] [CrossRef]

- Canyurt, O.E.; Ceylan, H.; Ozturk, H.K.; Hepbasli, A. Energy demand estimation based on two-different genetic algorithm approaches. Energy Sources 2004, 26, 1313–1320. [Google Scholar] [CrossRef]

- Ceylan, H.; Ozturk, H.K. Estimating energy demand of Turkey based on economic indicators using genetic algorithm approach. Energy Convers Manage 2004, 45, 2525–2537. [Google Scholar] [CrossRef]

- Ceylan, H.; Ozturk, H.K.; Hepbasli, A.; Utlu, Z. Estimating energy and exergy production and consumption values using three different genetic algorithm approaches, part 2: application and scenarios. Energy Sources 2005, 27, 629–639. [Google Scholar] [CrossRef]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Advances in Neural Information Processing Systems; 2017, 3146–3154.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 785–794. 2016. [Google Scholar]

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Machine learning 2006, 63, 3–42. [Google Scholar] [CrossRef]

- Crammer, K.; Dekel, O.; Keshet, J.; Shalev-Shwartz, S.; Singer, Y. Online Passive-Aggressive Algorithms. Journal of Machine Learning Research 2006, 7, 551–585. [Google Scholar]

- Zou, H.; Hastie, T. Regularization and Variable Selection via the Elastic Net. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2005, 67, 301–320. [Google Scholar] [CrossRef]

- Efron, B.; Hastie, T.; Johnstone, I.; Tibshirani, R. Least Angle Regression. Annals of Statistics 2004, 32, 407–499. [Google Scholar] [CrossRef]

- Efron, B.; Hastie, T.; Johnstone, I.; Tibshirani, R. Least angle regression. Annals of Statistics 2004, 32, 407–499. [Google Scholar] [CrossRef]

- Elad, M.; Bruckstein, A. A generalized uncertainty principle and sparse representation in pairs of bases. IEEE Transactions on Information Theory 2002, 48, 2558–2567. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Machine learning 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Friedman, J.H. Greedy Function Approximation: A Gradient Boosting Machine. Annals of Statistics 2001, 29, 1189–1232. [Google Scholar] [CrossRef]

- Freund, Y.; Schapire, R.E. Experiments with a new boosting algorithm. Machine learning: Proceedings of the thirteenth international conference (icml) 1996, 96, 148–156. [Google Scholar]

- Neter, J.; Kutner, M.H.; Nachtsheim, C.J.; Wasserman, W. Applied Linear Statistical Models (4th ed.). Irwin, 1996. [Google Scholar]

- Tibshirani, R. Regression Shrinkage and Selection via the Lasso. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 1996, 58, 267–288. [Google Scholar] [CrossRef]

- Belhumeur, P.N.; Hespanha, J.P.; Kriegman, D.J. Eigenfaces vs. Fisherfaces: Recognition Using Class Specific Linear Projection. IEEE Transactions on Pattern Analysis and Machine Intelligence 1997, 19. [Google Scholar] [CrossRef]

- MacKay, D.J. Bayesian Interpolation. Neural Computation 1992, 4, 415–447. [Google Scholar] [CrossRef]

- Breiman, L.; Friedman, J.; Stone, C.J.; Olshen, R.A. Classification and Regression Trees. Chapman & Hall/CRC, 1984.

- Hoerl, A.E.; Kennard, R.W. Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 1970, 12, 55–67. [Google Scholar] [CrossRef]

- Huber, P.J. Robust Estimation of a Location Parameter. Annals of Mathematical Statistics 1964, 35, 492–518. [Google Scholar] [CrossRef]

- Yang, L.; Shami, A. On hyperparameter optimization of machine learning algorithms: theory and practice. Neurocomputing 2020, 415, 295–316. [Google Scholar] [CrossRef]

- Turkish Statistical Institute (Turkstat), Statistical tables, Ankara, Türkiye. Available online: https://www.tuik.gov.tr/ (accessed on 1 June 2023).

- Turkish Ministry of Energy and Natural Resources (MENR). Ankara, Türkiye. Available online: https://enerji.gov.tr/eigm-raporlari (accessed on 1 June 2023).

| Author(s) | Year | Method Used | Dataset | Input Parameters | Forecasting for |
|---|---|---|---|---|---|
| Aslan [48] | 2023 | Archimedes Optimization Algorithm | 1979-2005, 1979-2011 | GDP, Population, Import, Export | Energy |
| Korkmaz [49] | 2022 | Bezier Search Differential Evolution, Black Widow Optimization (BWO) | 2000-2017 | Passenger-km, Freight-km, Carbon dioxide emissions, GDP, Infrastructure Investment | Transportation Energy |
| Aslan and Beşkirli [50] | 2022 | Improved Arithmetic Optimization Algorithm | 1979-2011 | GDP, Population, Import, Export | Energy |
| Ağbulut [51] | 2022 | Deep Learning (DL), Support Vector Machine (SVM), Artificial Neural Network (ANN) | 1970-2016 | GDP, Population, Vehicle-km, Year | Transportation Energy |
| Özdemir et al. [52] | 2022 | Modified Artificial Bee Colony Algorithm | 1979-2005 | GDP, Population, Import, Export | Energy |
| Özkış [53] | 2020 | Vortex Search Algorithm (VS) | 1979-2005, 1979-2011 | GDP, Population, Import, Export | Energy |
| Tefek et al. [54] | 2019 | Hybrid gravitational search, teaching, learning-based optimization method | 1980-2014 | Population, GDP, Installed power, Gross Generation, Net Consumption | Energy |
| Beskirli et al. [55] | 2018 | Artificial Algae Algorithm (AAA) | 1979-2005 | GDP, Population, Import, Export | Energy |
| Cayir Ervural and Ervural [56] | 2018 | Grey Prediction Model Based on GA, Grey Prediction Model Based on PSO | 1996-2016 | Previous Annual Electricity Consumption Data | Energy |
| Koç et al. [57] | 2018 | Gravity Search Algorithm (GSA), Invasive Weed Optimization Algorithm (IWO) | 1979-2011 | GDP, Population, Import, Export | Energy |
| Öztürk and Öztürk [58] | 2018 | ARIMA | 1970-2015 | Previous Energy Consumption Data | Energy |
| Beskirli et al. [59] | 2017 | Differential Evolution Algorithm (DE) | 1979-2011 | GDP, Population, Import, Export | Energy |
| Daş [60] | 2017 | Neural Network Based on Particle Swarm Optimization | 1979-2005 | GDP, Population, Import, Export | Energy |
| Kankal and Uzlu [61] | 2017 | ANN | 1980-2012 | GDP, Population, Import, Export | Electricity Energy |
| Uguz et al. [62] | 2015 | Artificial Bee Colony with Variable Search Strategies (ABCVSS) | 1979-2005 | GDP, Population, Import, Export | Energy |
| Tutun et al. [63] | 2015 | Regression and ANN | 1975-2010 | Imports, Exports, Gross generation, Transmitted energy | Electricity Energy consumption |
| Kıran et al. [64] | 2012 | Hybrid Meta-Heuristic (Particle Swarm Optimization, Ant Colony Optimization) | 1979-2005 | GDP, Population, Import, Export | Energy |
| Kankal et al. [65] | 2011 | Regression Analysis/ANN | 1980-2007 | GDP, Population, Import, Export, Employment | Energy |
| Ediger and Akar [66] | 2007 | Autoregressive Integrated Moving Average (ARIMA) and seasonal ARIMA (SARIMA) | 1950-2005 | Energy | |
| Ünler [17] | 2008 | Particle Swarm Optimization | 1979-2005 | GDP, Population, Import, Export | Energy |
| Toksarı [67] | 2007 | Ant Colony Optimization | 1970-2005 | Population, GDP, Import, Export | Energy |
| Sözen et al. [68] | 2005 | ANN | 1975-2003 | Population, Gross Generation, Installed Capacity, Import, Export | Energy |
| Canyurt et al. [69] | 2004 | Genetic Algorithm | 1970-2001 | GDP, Population, Import, Export | Energy |
| Ceylan and Öztürk [70] | 2004 | Genetic Algorithm | 1970-2001 | GDP, Population, Import, Export | Energy |
| Ceylan et al. [71] | 2004 | Genetic Algorithm | 1990-2001 | GDP, Population, Import, Export | Energy and exergy production and consumption |

| Variable | The influencing factors for using this variable |

|---|---|

| GDP | There exists a strong correlation between GDP and energy consumption, as the level of economic activity directly impacts the demand for energy. When the GDP of a country increases, it generally indicates a growth in industrial and commercial activities, leading to higher energy consumption. Considering the substantial impact of GDP on energy demand, GDP is often chosen as an independent variable in studies analyzing energy consumption patterns. |

| Population | Population growth directly affects the demand for energy in a country or region. As the population increases, there is a greater need for energy to meet the demands of the growing population, including residential, commercial, industrial, and transportation sectors. Understanding and considering population values as an independent variable is crucial for analyzing and planning energy resources. |

| Import | The relationship between imports and energy consumption is significant, as the availability of, and reliance on, imported energy resources can directly affect a country's energy demand. The import values of energy resources are chosen as independent variables in this study because of their influence on overall energy consumption patterns. |

| Export | The relationship between exports and energy consumption is an important aspect of understanding a country's energy demand. The export values of energy resources are chosen as independent variables in this study because of their potential impact on a country's overall energy consumption patterns. |

| Year | Population (10^6) | GDP (10^9 $) | Import (10^9 $) | Export (10^9 $) | Energy (Mtoe) |

|---|---|---|---|---|---|

| 1979 | 43.19 | 82.00 | 5.07 | 2.26 | 26.37 |

| 1980 | 44.09 | 68.82 | 7.91 | 2.91 | 27.51 |

| 1981 | 44.98 | 71.04 | 8.93 | 4.70 | 27.60 |

| 1982 | 45.95 | 64.55 | 8.84 | 5.75 | 29.59 |

| 1983 | 47.03 | 61.68 | 9.24 | 5.73 | 30.25 |

| 1984 | 48.11 | 59.99 | 10.76 | 7.13 | 31.75 |

| 1985 | 49.18 | 67.23 | 11.34 | 7.96 | 32.73 |

| 1986 | 50.22 | 75.73 | 11.10 | 7.46 | 34.59 |

| 1987 | 51.25 | 87.17 | 14.16 | 10.20 | 38.70 |

| 1988 | 52.28 | 90.85 | 14.34 | 11.66 | 39.73 |

| 1989 | 53.31 | 107.14 | 15.80 | 11.62 | 40.40 |

| 1990 | 54.32 | 150.68 | 22.30 | 12.96 | 42.24 |

| 1991 | 55.32 | 150.03 | 21.05 | 13.59 | 43.09 |

| 1992 | 56.30 | 158.46 | 22.87 | 14.71 | 44.70 |

| 1993 | 57.30 | 180.17 | 29.43 | 15.35 | 48.26 |

| 1994 | 58.31 | 130.69 | 23.27 | 18.11 | 45.77 |

| 1995 | 59.31 | 169.49 | 35.71 | 21.64 | 50.53 |

| 1996 | 60.29 | 181.48 | 43.63 | 23.22 | 54.85 |

| 1997 | 61.28 | 189.83 | 48.56 | 26.26 | 57.99 |

| 1998 | 62.24 | 275.97 | 45.92 | 26.97 | 57.12 |

| 1999 | 63.19 | 256.39 | 40.67 | 26.59 | 55.22 |

| 2000 | 64.11 | 274.30 | 54.50 | 27.77 | 61.60 |

| 2001 | 65.07 | 201.75 | 41.40 | 31.33 | 55.60 |

| 2002 | 65.99 | 240.25 | 51.55 | 36.06 | 59.49 |

| 2003 | 66.87 | 314.59 | 69.34 | 47.25 | 64.59 |

| 2004 | 67.79 | 408.88 | 97.54 | 63.17 | 68.24 |

| 2005 | 68.70 | 506.31 | 116.77 | 73.48 | 70.33 |

| 2006 | 69.60 | 557.06 | 139.58 | 85.53 | 74.82 |

| 2007 | 70.47 | 681.34 | 170.06 | 107.27 | 79.79 |

| 2008 | 71.32 | 770.46 | 201.96 | 132.03 | 77.76 |

| 2009 | 72.23 | 649.27 | 140.93 | 102.14 | 78.36 |

| 2010 | 73.20 | 776.99 | 185.54 | 113.88 | 79.84 |

| 2011 | 74.17 | 838.76 | 240.84 | 134.91 | 84.91 |

| 2012 | 75.28 | 880.56 | 236.55 | 152.46 | 88.84 |

| 2013 | 76.58 | 957.78 | 260.82 | 161.48 | 88.07 |

| 2014 | 78.11 | 938.95 | 251.14 | 166.50 | 89.25 |

| 2015 | 79.65 | 864.32 | 213.62 | 150.98 | 99.47 |

| 2016 | 81.02 | 869.69 | 202.19 | 149.25 | 104.57 |

| 2017 | 82.09 | 859.00 | 238.72 | 164.50 | 111.65 |

| 2018 | 82.81 | 778.47 | 231.15 | 177.17 | 109.44 |

| 2019 | 83.48 | 759.94 | 210.35 | 180.83 | 110.65 |

| 2020 | 84.14 | 720.30 | 219.52 | 169.64 | 113.70 |

| 2021 | 84.78 | 819.04 | 271.42 | 225.29 | 123.86 |
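
The strong correlation between the inputs and energy demand can be checked directly from the table. The sketch below computes Pearson's correlation coefficient in plain Python on a nine-year subset of the rows above; the helper name `pearson` and the choice of years are assumptions for illustration, and coefficients for the full 1979 to 2021 series may differ.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Rows for 1979, 1985, 1990, 1995, 2000, 2005, 2010, 2015, 2021 from the table.
data = {
    "Population": [43.19, 49.18, 54.32, 59.31, 64.11, 68.70, 73.20, 79.65, 84.78],
    "GDP": [82.00, 67.23, 150.68, 169.49, 274.30, 506.31, 776.99, 864.32, 819.04],
    "Import": [5.07, 11.34, 22.30, 35.71, 54.50, 116.77, 185.54, 213.62, 271.42],
    "Export": [2.26, 7.96, 12.96, 21.64, 27.77, 73.48, 113.88, 150.98, 225.29],
}
energy = [26.37, 32.73, 42.24, 50.53, 61.60, 70.33, 79.84, 99.47, 123.86]

# Correlation of each candidate input with energy demand.
correlations = {name: pearson(values, energy) for name, values in data.items()}
for name, r in correlations.items():
    print(f"{name:<10} r = {r:.3f}")
```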

| Model | Input |
|---|---|
| M1 | GDP, Population |
| M2 | GDP, Import |
| M3 | GDP, Export |
| M4 | Population, Import |
| M5 | Population, Export |
| M6 | Import, Export |
| M7 | GDP, Population, Import |
| M8 | GDP, Population, Export |
| M9 | Population, Import, Export |
| M10 | GDP, Import, Export |
| M11 | *GDP, Population, Import, Export |
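
The eleven input sets M1 to M11 are exactly the subsets of the four variables that contain at least two of them (6 pairs, 4 triples, and the full set). A minimal sketch that enumerates them with `itertools.combinations` follows; the variable names `features`, `subsets`, and `models` are assumptions for illustration.

```python
from itertools import combinations

features = ["GDP", "Population", "Import", "Export"]

# All subsets of size 2, 3, and 4, in order of increasing size.
subsets = [
    combo
    for size in range(2, len(features) + 1)
    for combo in combinations(features, size)
]

# Label the subsets M1, M2, ... in enumeration order.
models = {f"M{i}": combo for i, combo in enumerate(subsets, start=1)}

for label, combo in models.items():
    print(label, ", ".join(combo))
```

The enumeration order matches the table except that the two triples listed as M9 and M10 come out in the opposite order.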

| Model | ML Algorithm | MAE | MSE | RMSE | R2 | MAPE |
|---|---|---|---|---|---|---|
| M1 | Extra Trees Regressor | 2743.27 | 14435527.00 | 3622.18 | 0.9751 | 0.0546 |
| | Huber Regressor | 3419.46 | 20734897.00 | 4395.82 | 0.9642 | 0.0749 |
| | Extreme Gradient Boosting | 3725.91 | 22807495.50 | 4551.60 | 0.9625 | 0.0655 |
| M2 | K Neighbors Regressor | 6881.86 | 84149616.80 | 8596.44 | 0.8710 | 0.1104 |
| | Random Forest Regressor | 6809.08 | 114145888.80 | 9963.98 | 0.8252 | 0.1167 |
| | Extra Trees Regressor | 6452.43 | 123658755.00 | 9929.56 | 0.8117 | 0.1178 |
| M3 | Extra Trees Regressor | 3977.99 | 44054367.30 | 5730.78 | 0.9299 | 0.0695 |
| | Random Forest Regressor | 4631.80 | 55354193.10 | 6583.28 | 0.9162 | 0.0797 |
| | Gradient Boosting Regressor | 5351.58 | 64199913.90 | 7220.71 | 0.9031 | 0.0901 |
| M4 | Extra Trees Regressor | 3042.48 | 17937995.55 | 3844.90 | 0.9733 | 0.0591 |
| | Random Forest Regressor | 3666.07 | 22957290.18 | 4448.29 | 0.9716 | 0.0685 |
| | Gradient Boosting Regressor | 4156.41 | 26308872.75 | 4930.11 | 0.9652 | 0.0742 |
| M5 | Huber Regressor | 3864.21 | 36775418.87 | 5527.85 | 0.9541 | 0.0601 |
| | Lasso Regression | 4003.55 | 36200997.00 | 5456.62 | 0.9530 | 0.0678 |
| | Least Angle Regression | 4003.72 | 36196280.60 | 5456.35 | 0.9530 | 0.0678 |
| M6 | K Neighbors Regressor | 5707.22 | 80792845.60 | 7992.88 | 0.8962 | 0.1035 |
| | Random Forest Regressor | 5455.21 | 77021388.80 | 8193.73 | 0.8644 | 0.0930 |
| | Extra Trees Regressor | 5511.29 | 82472925.80 | 8300.80 | 0.8540 | 0.0950 |
| M7 | Extra Trees Regressor | 2308.94 | 12339053.90 | 3277.79 | 0.9754 | 0.0488 |
| | Random Forest Regressor | 2972.75 | 17408515.60 | 3854.32 | 0.9608 | 0.0576 |
| | AdaBoost Regressor | 3400.27 | 18546026.70 | 4014.45 | 0.9538 | 0.0640 |
| M8 | Extra Trees Regressor | 3189.81 | 17110325.38 | 3874.79 | 0.9716 | 0.0537 |
| | AdaBoost Regressor | 4293.89 | 29715232.81 | 5282.08 | 0.9475 | 0.0728 |
| | Random Forest Regressor | 4287.09 | 35730037.10 | 5185.02 | 0.9460 | 0.0700 |
| M9 | Extra Trees Regressor | 3018.13 | 30503239.40 | 4394.13 | 0.9477 | 0.0407 |
| | Random Forest Regressor | 3583.32 | 45575422.90 | 5273.91 | 0.9304 | 0.0473 |
| | AdaBoost Regressor | 4069.59 | 37580683.81 | 5358.08 | 0.9285 | 0.0609 |
| M10 | K Neighbors Regressor | 5670.51 | 74121506.00 | 7652.91 | 0.9017 | 0.1003 |
| | Random Forest Regressor | 5372.22 | 75187291.90 | 7930.92 | 0.8896 | 0.0942 |
| | Ridge Regression | 7009.35 | 69191604.80 | 8277.45 | 0.8621 | 0.1643 |
| M11 | Extra Trees Regressor | 2296.86 | 8756864.57 | 2932.96 | 0.9788 | 0.0464 |
| | Random Forest Regressor | 3186.05 | 14777499.11 | 3817.37 | 0.9684 | 0.0658 |
| | Ridge Regression | 3676.12 | 21641675.00 | 4466.13 | 0.9655 | 0.0736 |

| Sample No. | Population | GDP | Import | Export | Energy (actual) | Prediction |

|---|---|---|---|---|---|---|

| 1 | 65988664 | 2.40E+11 | 51553796 | 36059088 | 59486 | 62736.99 |

| 2 | 70468872 | 6.81E+11 | 170062720 | 107271752 | 79785 | 76948.98 |

| 3 | 44089068 | 6.88E+10 | 7909364 | 2910122 | 27507.96 | 26962.77 |

| 4 | 76576120 | 9.58E+11 | 260822800 | 161480912 | 88074.43 | 91513.96 |

| 5 | 78112072 | 9.39E+11 | 251142432 | 166504864 | 89248.78 | 96054.71 |

| 6 | 47026424 | 6.17E+10 | 9235002 | 5727834 | 30249.94 | 28972.36 |

| 7 | 52275888 | 9.09E+10 | 14335398 | 11662024 | 39733.94 | 38689.99 |

| 8 | 84775408 | 8.19E+11 | 271424480 | 225291392 | 123859.48 | 113726.34 |

| 9 | 48106764 | 6.00E+10 | 10757032 | 7133604 | 31747.45 | 30416.86 |

| 10 | 73195344 | 7.77E+11 | 185544336 | 113883216 | 79840.07 | 81925.22 |

| 11 | 53305232 | 1.07E+11 | 15792143 | 11624692 | 40395.43 | 40529.37 |
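
As a worked check, the five performance metrics can be recomputed from the eleven actual and predicted energy values in the table above. The sketch uses plain Python and the standard definitions of the metrics; the variable names are assumptions. The results appear to agree with the single-row results table in Section 4.3 (MAE 2989.27, RMSE about 4140.70, R2 0.9811, MAPE 0.0406), up to rounding of the tabulated values.

```python
from math import sqrt

# "Energy" (actual) and "Prediction" columns, samples 1 to 11.
actual = [59486, 79785, 27507.96, 88074.43, 89248.78, 30249.94,
          39733.94, 123859.48, 31747.45, 79840.07, 40395.43]
predicted = [62736.99, 76948.98, 26962.77, 91513.96, 96054.71, 28972.36,
             38689.99, 113726.34, 30416.86, 81925.22, 40529.37]

n = len(actual)
errors = [p - a for a, p in zip(actual, predicted)]

mae = sum(abs(e) for e in errors) / n                      # mean absolute error
mse = sum(e * e for e in errors) / n                       # mean squared error
rmse = sqrt(mse)                                           # root mean squared error
mape = sum(abs(e) / a for e, a in zip(errors, actual)) / n # as a fraction, not %

mean_actual = sum(actual) / n
ss_res = sum(e * e for e in errors)                        # residual sum of squares
ss_tot = sum((a - mean_actual) ** 2 for a in actual)       # total sum of squares
r2 = 1 - ss_res / ss_tot                                   # coefficient of determination

print(f"MAE={mae:.2f} MSE={mse:.2f} RMSE={rmse:.2f} R2={r2:.4f} MAPE={mape:.4f}")
```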

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).