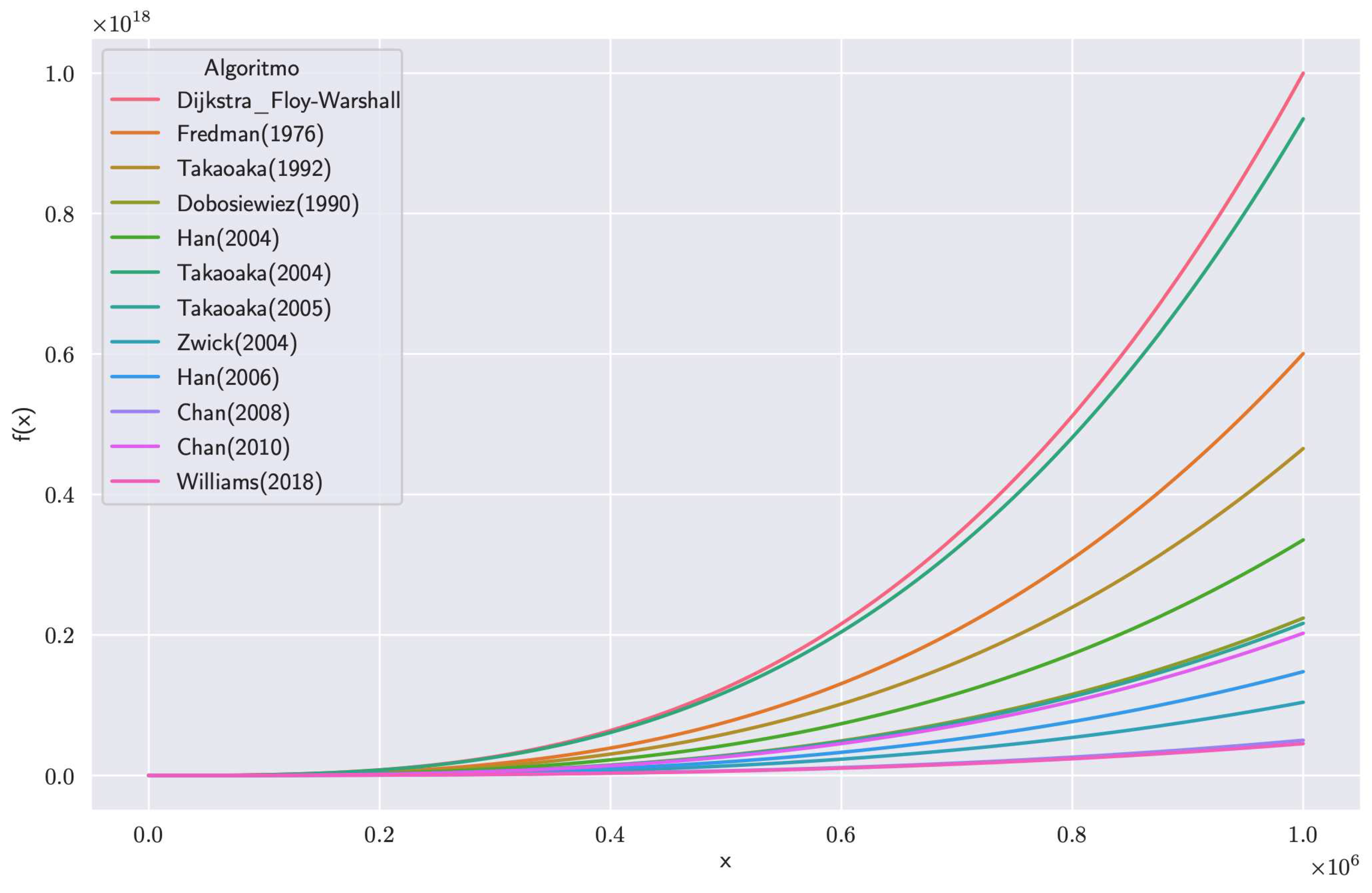

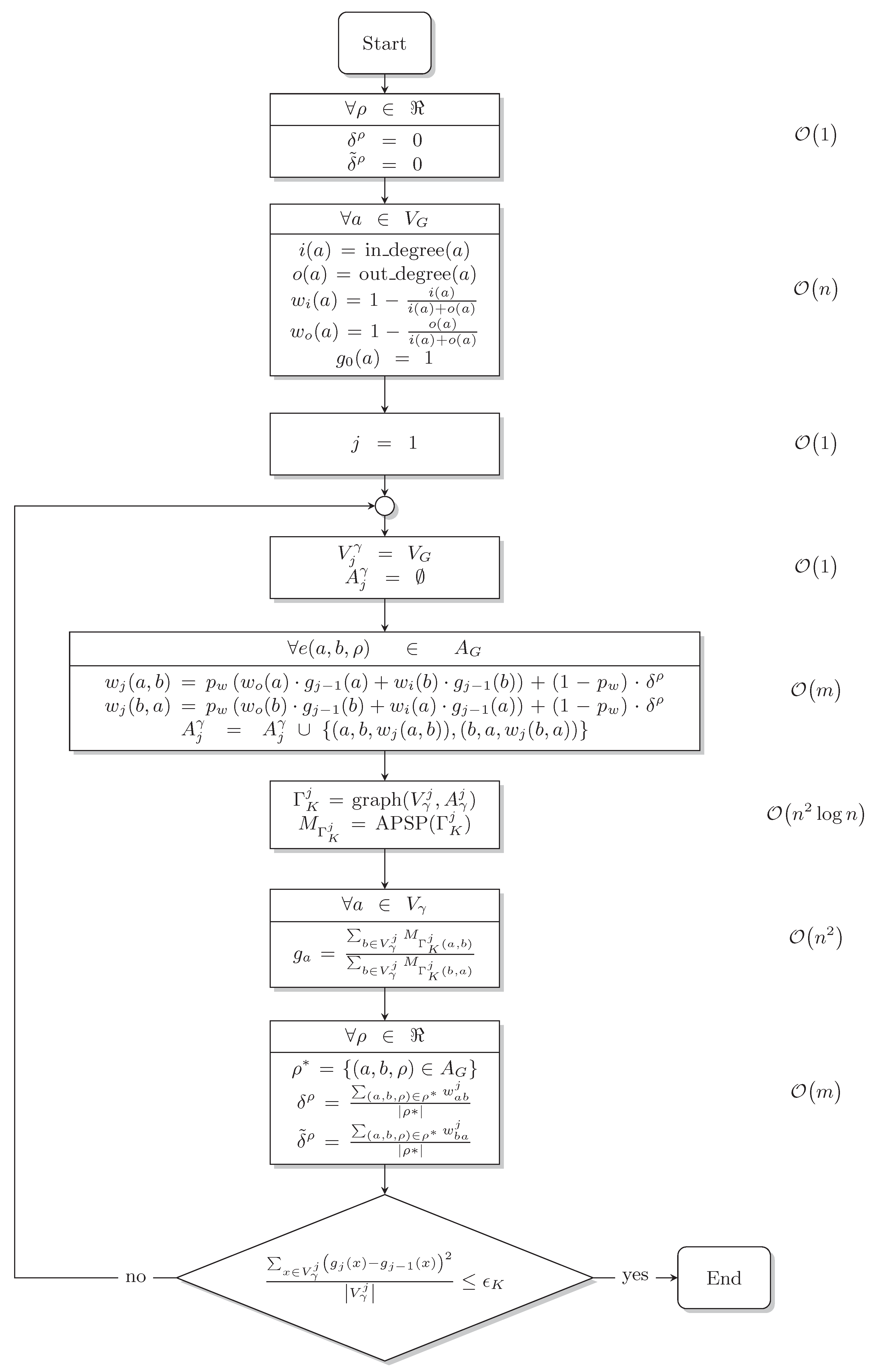

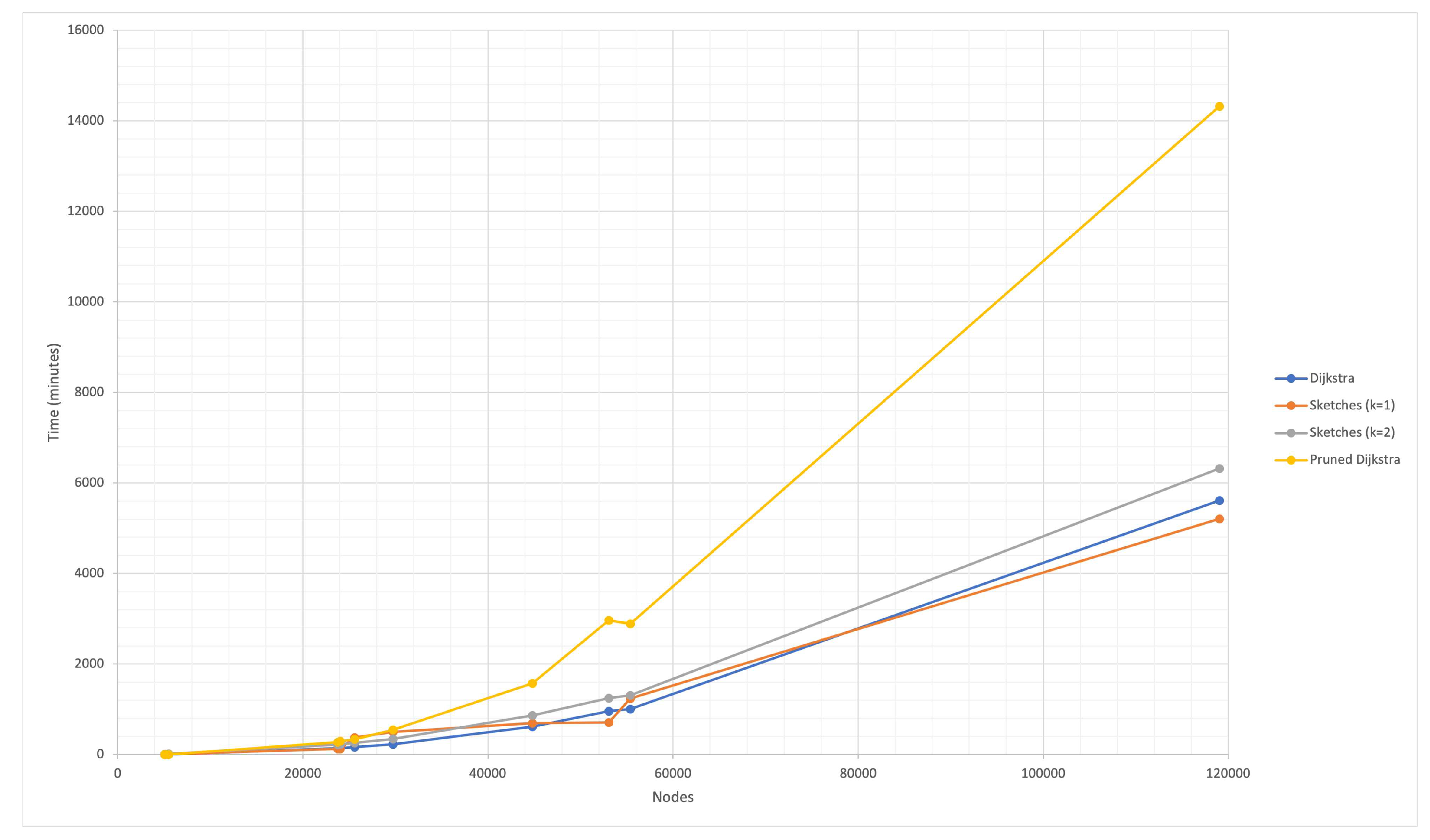

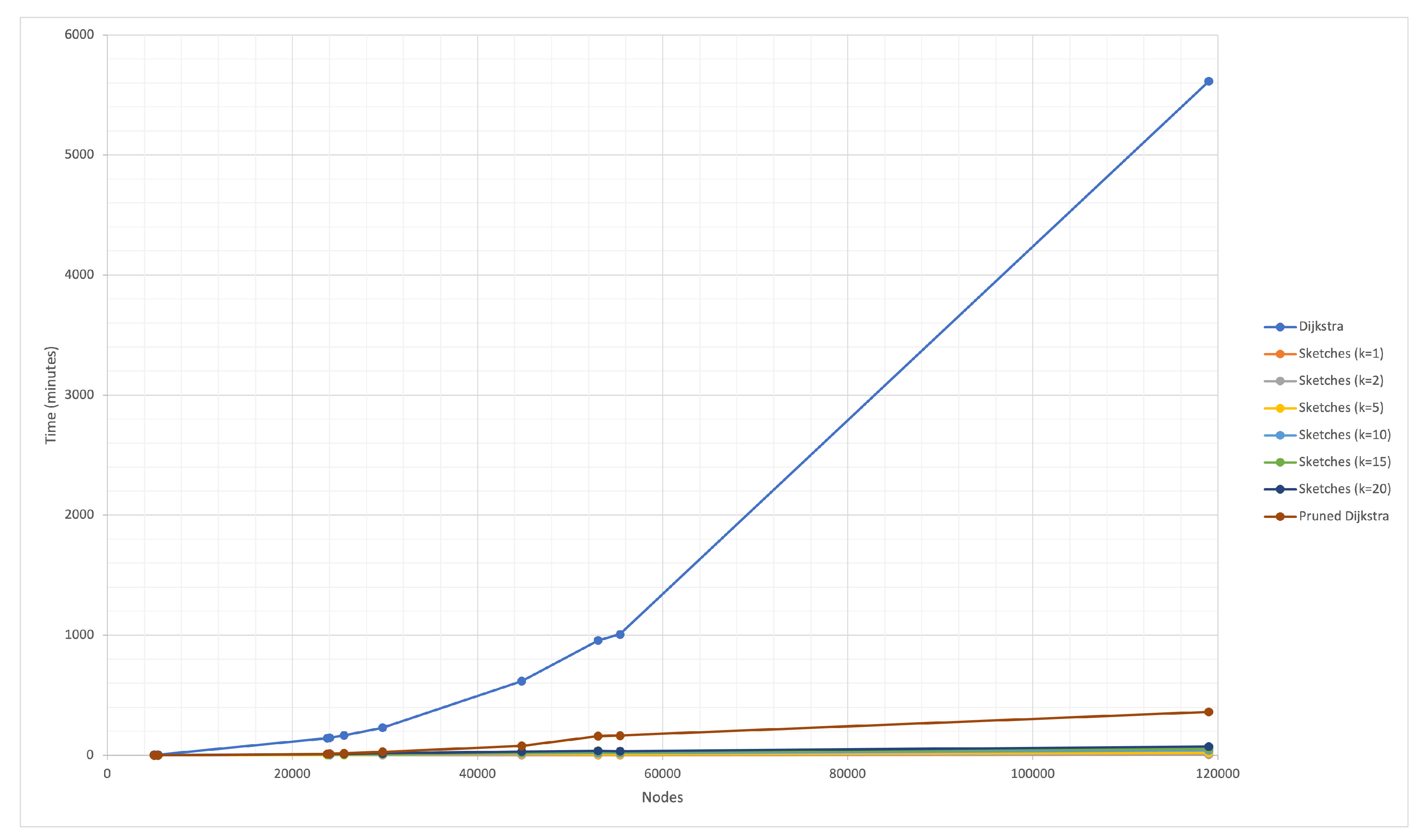

The solution presented combines several approaches to solve the all-pairs shortest path (APSP) problem; the DIS-C algorithm also comprises other processes, but their computational cost is not significant.

3.2. The pruned Dijkstra

The pruned Dijkstra algorithm is an optimized version of Dijkstra’s algorithm in which unnecessary paths are removed in the search, keeping a list of pending nodes and choosing to expand the one with the minimum distance in the list. If we find a path longer than the currently known shortest path to any node during expansion, then that path is discarded and not considered again. In this way, the pruned Dijkstra algorithm reduces the number of paths to be considered. It is a generalization of the PLL (Pruned Landmark Labeling) method for undirected weighted graphs [

70]. The PLL method is based on the idea of 2-hop coverage [

72], which is an exact method. It is defined as follows: let

be an undirected and weighted graph; for each node

, the cover

is a set of pairs

, where

u is a node and

is the distance from

u to

v in the graph

G. All covers are denoted as indexed

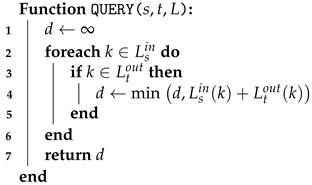

. To calculate the distance between two vertices,

s and

t, we use the search function (

Q), as shown in Eqn.

1.

L is a two-hop cover of

G if

for any

.

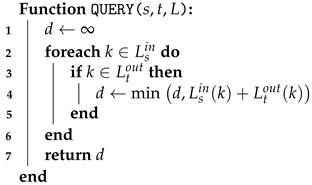

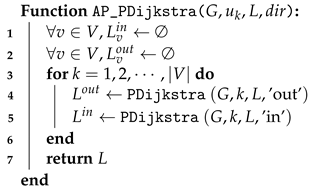
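As an illustration of how a query over a 2-hop cover works, the following minimal sketch assumes the search function takes the minimum, over all nodes common to both covers, of the sum of the two stored distances (the standard 2-hop cover formulation). The dictionary layout and the names `query` and `toy_index` are assumptions made for this example, not the data structures of the original implementation.

```python
import math

def query(s, t, index):
    """Return the distance between s and t given a 2-hop cover.

    `index` maps each node to a dict {hub: distance}; this layout is an
    assumption of the example.
    """
    label_s, label_t = index[s], index[t]
    # Iterate over the smaller cover and probe the larger one.
    if len(label_s) > len(label_t):
        label_s, label_t = label_t, label_s
    best = math.inf
    for hub, d_s in label_s.items():
        d_t = label_t.get(hub)
        if d_t is not None:
            best = min(best, d_s + d_t)
    return best

# Toy undirected path a - b - c with unit weights; z is isolated.
toy_index = {
    "a": {"a": 0, "b": 1},
    "b": {"b": 0},
    "c": {"b": 1, "c": 0},
    "z": {"z": 0},
}
print(query("a", "c", toy_index))
```

When two nodes share no hub, the function returns infinity, which corresponds to an unreachable pair.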

In the case of weighted directed graphs, it is necessary to calculate two indexes: the first contains the coverage of all the nodes considering the incoming edges, and the second considers the outgoing edges. The way to consult the distances has a minor modification, but the idea remains the same. This change can be observed in Eqn. 2.

A naive way to build these indexes is through two executions of Dijkstra’s algorithm. Although this method is obvious and inefficient, we explain its details as a basis for discussing the next method. Let , start with an empty index , where . Suppose Dijkstra is used on each vertex in the order . After the k-th Dijkstra on a vertex , the distances of are added to the coverages of the vertices reached, whereby for each with , where is the distance from node u to node v within the graph G. It is important to mention that the coverages of the vertices that were not reached are not changed, which implies for each with . By performing this procedure starting with , the same result can be obtained but considering the output edges.

Thus, and are the final indices contained in . Obviously, for any pair of vertices, therefore and are the 2-hop covers of G. This is because, if s and t are reachable, then and .

As mentioned at the beginning of the description, this way of creating indexes is inefficient. For this reason, pruning is added to the naive method; this technique reduces the search space considerably. Like the naive method, a pruned search is conducted with Dijkstra’s algorithm starting from the vertices in the following order: . Start with a pair of indices , and create indices , from and , respectively, using the information obtained by the k-th execution of pruned Dijkstra for vertex .

The pruning process is as follows: suppose we have the indices and and we execute the pruned Dijkstra from to create a new index . Assume that we are visiting a vertex u with distance . If , then we prune u; that is, we do not add to and we do not add any neighbors of u to the priority queue. If the condition is not met, then is added to the cover of u, and Dijkstra continues as normal. As with the naive method, we set and for all vertices that were not reached in the k-th execution of the pruned Dijkstra. In the end, and are the final indices contained in .
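The pruning rule described above can be sketched as follows for a directed graph with positive edge weights. This is a minimal illustration, not the implementation of Algorithms 1 and 2: the names `label_in`, `label_out`, `pruned_dijkstra`, and `build_index`, as well as the adjacency-list layout, are assumptions of the example. Here `label_in[u]` stores distances from a hub to u and `label_out[u]` stores distances from u to a hub.

```python
import heapq
import math

def query(s, t, label_out, label_in):
    """Distance s -> t through the best common hub (inf if none)."""
    best = math.inf
    for hub, d_sh in label_out[s].items():
        d_ht = label_in[t].get(hub)
        if d_ht is not None:
            best = min(best, d_sh + d_ht)
    return best

def pruned_dijkstra(adj, root, forward, label_out, label_in):
    """One pruned search from `root`; `adj` is {u: [(v, w), ...]}."""
    dist = {root: 0}
    heap = [(0, root)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, math.inf):
            continue  # stale queue entry
        # Prune when earlier hubs already certify a distance <= d.
        known = (query(root, u, label_out, label_in) if forward
                 else query(u, root, label_out, label_in))
        if known <= d:
            continue  # u is not labeled and its neighbors are not expanded
        (label_in if forward else label_out)[u][root] = d
        for v, w in adj[u]:
            if d + w < dist.get(v, math.inf):
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))

def build_index(graph, order):
    """Build both indices; every node must appear as a key of `graph`."""
    reverse = {u: [] for u in graph}
    for u, edges in graph.items():
        for v, w in edges:
            reverse[v].append((u, w))
    label_out = {u: {} for u in graph}
    label_in = {u: {} for u in graph}
    for root in order:
        pruned_dijkstra(graph, root, True, label_out, label_in)     # outgoing edges
        pruned_dijkstra(reverse, root, False, label_out, label_in)  # incoming edges
    return label_out, label_in

graph = {1: [(2, 1), (3, 4)], 2: [(3, 2), (4, 7)],
         3: [(4, 1), (1, 3)], 4: [(2, 2)], 5: [(1, 1)]}
label_out, label_in = build_index(graph, [1, 2, 3, 4, 5])
print(query(1, 4, label_out, label_in))
```

Because a vertex is pruned only when the existing labels already give a distance no larger than the tentative one, the labels stay small while queries remain exact.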

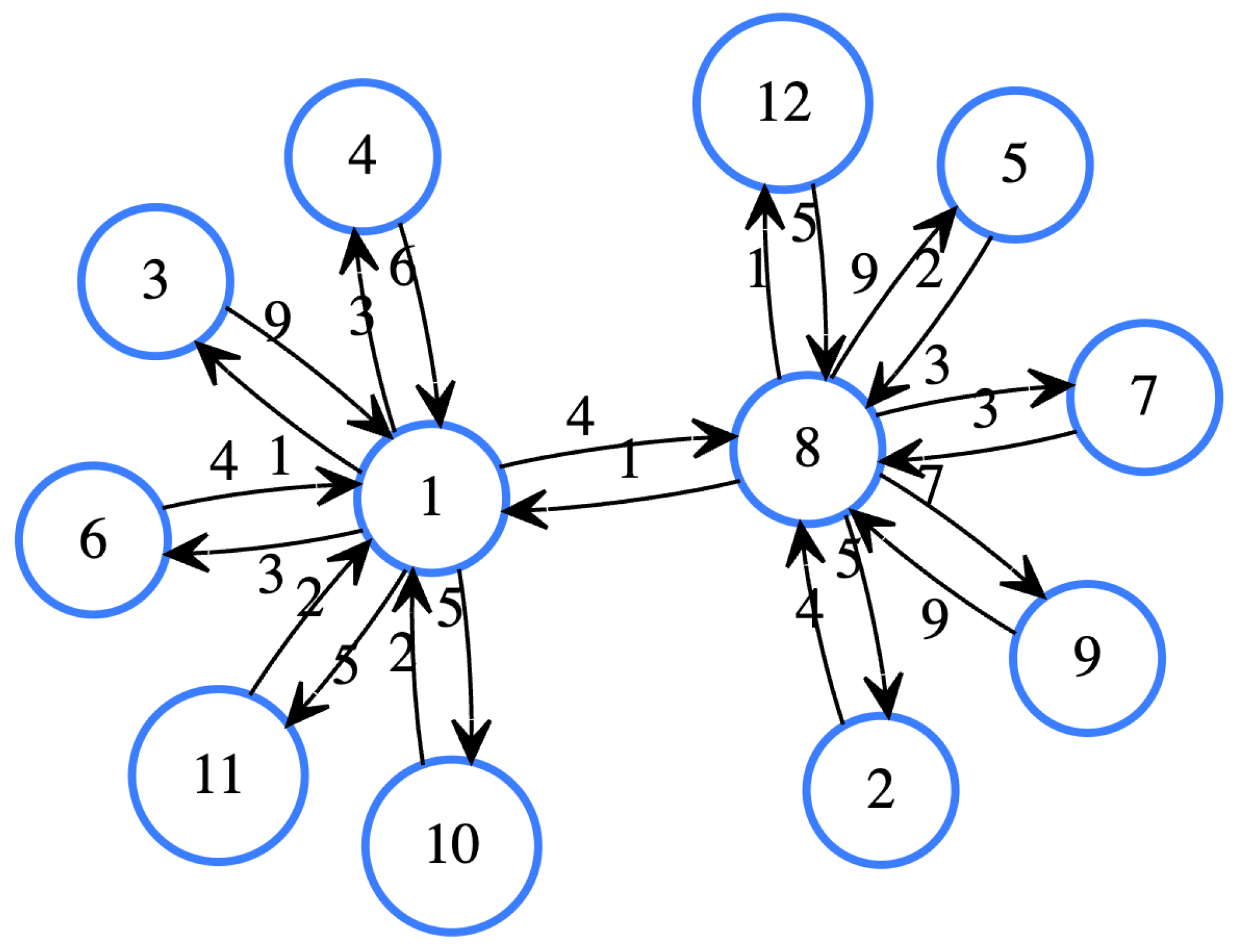

Figure 3 shows an example of the algorithm acting on the graph represented in the figure, taking the order of execution as . The first pruned Dijkstra from vertex one visits all other vertices, forward and reverse (see Figure 4a and Figure 4b). During the next iteration from vertex 8 (Figure 5e), when node one is visited, given that 1, vertex one is pruned and none of its neighbors are visited anymore; the same occurs in the opposite direction (see Figure 5f). With these two executions, it is possible to cover all the shortest paths, and once the process is executed on the remaining vertices, they will only refer to themselves. The pruned Dijkstra algorithm is described in Algorithm 2, and the whole algorithm for constructing indices is presented in Algorithm 1.

|

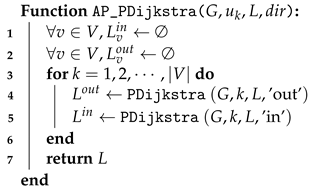

Algorithm 1:APSP_PDijkstra |

|

So far, the pruned Dijkstra was executed over the vertices in an arbitrarily chosen order; however, the order is relevant for good algorithm performance. Ideally, the search starts from central vertices, through which many shortest paths pass. There are many strategies to order the vertices; some of the most widely used ones order them by a centrality measure. In this work, we order the vertices by degree centrality. The graphs generated by semantic networks behave like scale-free networks, where high-degree vertices provide high connectivity to the network. In this way, the shortest path between two vertices will likely pass through those nodes.
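A degree-based vertex order can be obtained as in the short sketch below. It assumes a directed adjacency list `{u: [(v, w), ...]}` and counts both incoming and outgoing edges; the function name `degree_order` and the tie-breaking by node identifier are assumptions of the example.

```python
def degree_order(graph):
    """Order nodes by total degree (in + out), highest first."""
    degree = {u: len(edges) for u, edges in graph.items()}
    for edges in graph.values():
        for v, _w in edges:
            degree[v] = degree.get(v, 0) + 1
    # Break ties by node id so the order is deterministic.
    return sorted(degree, key=lambda u: (-degree[u], u))

star = {1: [(2, 1), (3, 1), (4, 1)], 2: [(1, 1)], 3: [], 4: []}
print(degree_order(star))
```

The resulting list can be used directly as the execution order of the pruned searches.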

Algorithm 2: The Pruned Dijkstra

Algorithm 3: Check distance from source to target nodes according to a 2-hop cover

3.2.1. The pruned Dijkstra analysis

To prove the correctness of the method, it suffices to show that the algorithm computes a two-hop coverage, that is, for any . Let be the index built without the pruning process, so it is a two-hop coverage; is the index built by applying the pruning process. Since there exists a node such that , and , the goal is to show that also exists in and .

Theorem 1. For , and ,

Proof. Let , assume there is a path between them, j the smallest number such that , and . We must show that and also lie in and respectively; that is to say that . To prove that , first, for any , suppose , where is a path between a and b. If we assume that , by inequality:

Since and , it contradicts the fact that j is the smallest number. Therefore, for no .

Now, we prove that . Suppose we execute the j-th pruned Dijkstra from node to construct index . Let . Since there is no for , it means that there is no vertex in the i-th pruned Dijkstra iteration that covers the shortest path from to t; therefore . Consequently, vertex t is visited without pruning and is added.

Finally, suppose we execute the j-th pruned Dijkstra from , but this time to build . If s and t are reachable in G, it means that there is a path between and s taking into account the input edges, or, equivalently, there is . Since we already proved that for , it means that is a neighbor of s or , and since is the initial vertex of the path , then is the least distance neighbor sharing s. In any of the cases , therefore the node s is visited without pruning (recall that the input edges are being considered). Consequently is added. □
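The inequality invoked in the first step of the proof can be sketched as follows. The notation is assumed for this illustration, since the symbols are not recoverable here: $d_G(\cdot,\cdot)$ is the distance in G, $v_j$ is the node with the smallest index lying on a shortest path from s to t, and $v_i$ with $i < j$ is supposed to lie on a shortest path from $v_j$ to t.

```latex
\begin{align*}
d_G(s,t) &= d_G(s,v_j) + d_G(v_j,t) \\
         &= d_G(s,v_j) + d_G(v_j,v_i) + d_G(v_i,t) \\
         &\ge d_G(s,v_i) + d_G(v_i,t) \\
         &\ge d_G(s,t).
\end{align*}
```

Both inequalities are instances of the triangle inequality, so equality holds throughout and $v_i$ would itself lie on a shortest path from s to t, which contradicts the minimality of j.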

As mentioned, the order in which the nodes are processed influences the algorithm’s performance. Is there an order of execution such that the construction time is the minimum possible? The answer is yes; however, finding such an order is challenging. There are two approaches. The first is to find the smallest set of nodes that covers all the shortest paths, which is a much harder problem since it is an instance of the minimum vertex cover problem, which is NP-hard. The second approach is a parameterized perspective; specifically, the explored parameter is the tree width. It also relies on the process of centroid decomposition of the tree [73]. This approach yields the following lemma, whose formal proof is given in [70].

Lemma 1. Let w be the tree width of G. There is a vertex order with which the pruned Dijkstra method executes in time for preprocessing, stores an index with space and answers each query in time.

Since this research study does not look for a minimum two-hop cover, using the upper bound of for the algorithm can be considered an inconsistency, because there is no guarantee that the chosen order (degree centrality) is the same as the order necessary to achieve such computational complexity. However, what we concluded for our implementation is that it cannot be faster than the one theoretically obtained using the order with which . That is, our implementation has a lower bound . Also, in terms of Dijkstra’s algorithm, we can hypothesize that the cost of constructing the coverage using the pruned process, regardless of the order of execution, is much smaller than that of constructing the naive coverage in highly connected graphs, that is to say, that .

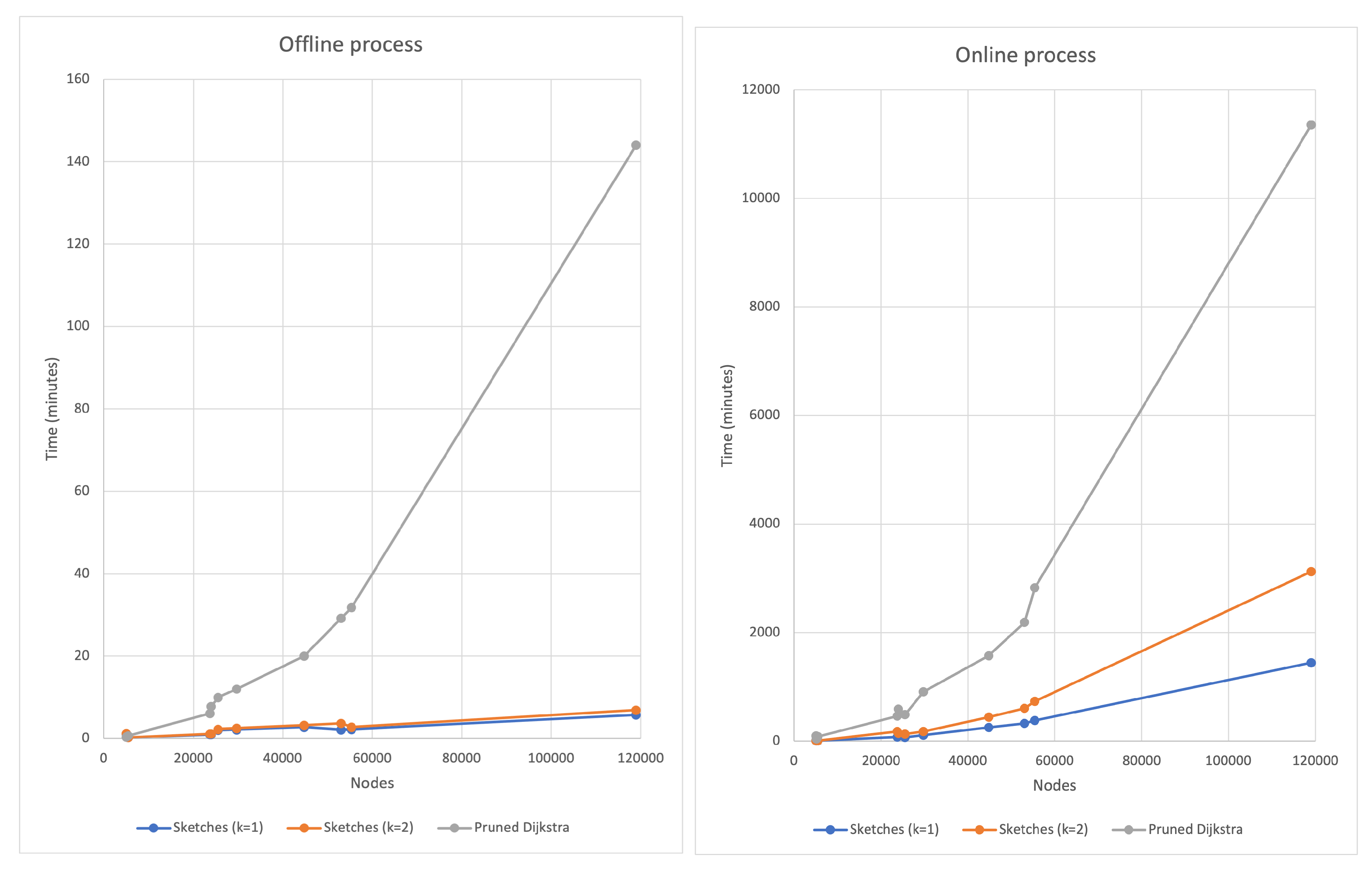

3.3. The sketches

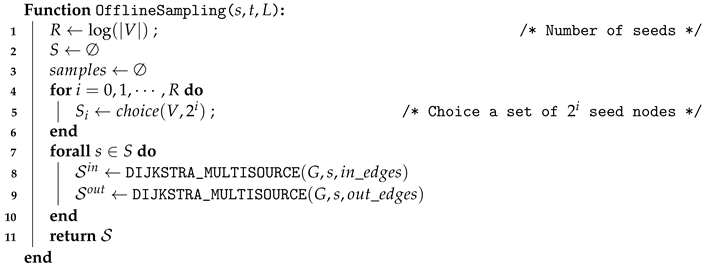

Sketch-based algorithms are methods comprising two general processes: offline and online. In the offline process, the sketches are computed: a set of crucial nodes (initially chosen at random) is selected, and the shortest paths between them and all the other nodes are calculated. In the online process, the approximate shortest distance between a pair of nodes is computed through the closest sketch node that they have in common.

The offline process consists of sampling sets of seed nodes, each containing a subset of size . From each set, the closest seed of each node is stored together with its distance; that is, for each node u, a sketch of the form SKETCH is created. Therefore, the final size of the sketch for each node is . This construction can be made with Dijkstra’s algorithm. The node closest to u is called the seed of u. This procedure is executed k times with different sample sizes. At the end of the k iterations, we obtain seeds for each node.

As with the previous algorithm, we must compute two sketches for each node, SKETCH and SKETCH , representing the distance from the node to the nearest seed and vice versa. We do this by running the search algorithm twice: once considering the input edges and once considering the output edges.

We can randomly choose S, using some criteria to bias our choice. For example, we can discard those nodes whose degree is less than two. We can also take advantage of the graph’s structure and its specific properties.

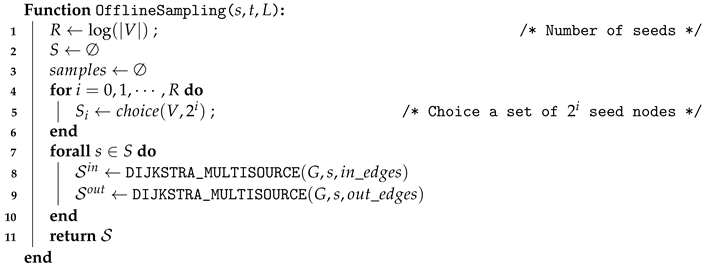

Algorithm 4 shows the proposed pseudocode to generate the sketches of each node.

Algorithm 4: Sketches_DIS-C: offline sampling

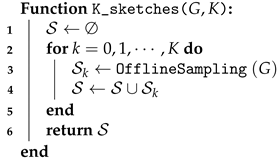

For each sample, we obtain the closest seed node and its distance for each node. This process is done with the classical Dijkstra multi-source algorithm. In this way, the seed nodes for each

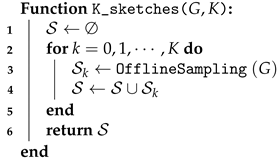

are computed (see Algorithm 5).
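The offline process can be illustrated with the sketch below, which is not a transcription of Algorithms 4 and 5. It assumes positive edge weights, seed sets of size 2^i in the i-th round (consistent with the sizes 1, 2, and 4 used in the example of Figure 5), and a number of rounds equal to the integer part of log2 of the number of nodes. The names `multi_source_dijkstra`, `build_sketches`, `sketch_in`, and `sketch_out` are assumptions of the example.

```python
import heapq
import math
import random

def multi_source_dijkstra(adj, seeds):
    """Return {node: (closest_seed, distance)} for every reached node."""
    dist = {s: 0 for s in seeds}
    heap = [(0, s, s) for s in seeds]  # (distance, node, originating seed)
    heapq.heapify(heap)
    closest = {}
    while heap:
        d, u, seed = heapq.heappop(heap)
        if u in closest:
            continue  # already settled with a distance <= d
        closest[u] = (seed, d)
        for v, w in adj[u]:
            if d + w < dist.get(v, math.inf):
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v, seed))
    return closest

def build_sketches(graph, rng, rounds=None):
    """Sample seed sets and store, per node, its closest seed in each round."""
    nodes = sorted(graph)
    if rounds is None:
        rounds = max(1, int(math.log2(len(nodes))))  # assumed choice of rounds
    reverse = {u: [] for u in graph}
    for u, edges in graph.items():
        for v, w in edges:
            reverse[v].append((u, w))
    sketch_out = {u: {} for u in graph}  # seed -> distance from u to seed
    sketch_in = {u: {} for u in graph}   # seed -> distance from seed to u
    for i in range(rounds):
        seeds = rng.sample(nodes, min(2 ** i, len(nodes)))
        for u, (seed, d) in multi_source_dijkstra(graph, seeds).items():
            sketch_in[u][seed] = d
        for u, (seed, d) in multi_source_dijkstra(reverse, seeds).items():
            sketch_out[u][seed] = d
    return sketch_out, sketch_in

path = {1: [(2, 1)], 2: [(3, 1)], 3: [(4, 1)], 4: []}
sketch_out, sketch_in = build_sketches(path, random.Random(0))
print(sketch_out, sketch_in)
```

Each round runs the multi-source search once over the outgoing edges and once over the reversed graph, so every node keeps at most one seed per round and direction.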

Algorithm 5: Sketches_DIS-C: k sketches offline

In each iteration, we generated a sketch from an offline sample; therefore, at the end of the algorithm, there will be seeds for each node.

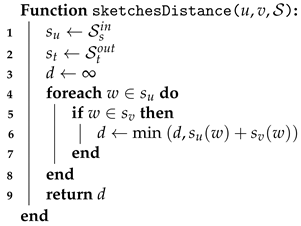

The second process of the method is the online one. The approximate minimum distance between nodes u and v is calculated by looking at the distances from u and v to any node w that appears in both the sketch of u and the sketch of v. The path length from u to v is then computed by adding the distance from u to w and the distance from w to v. If there are two or more common nodes w, the minimum distance is taken. Algorithm 6 shows the proposal to perform this calculation.

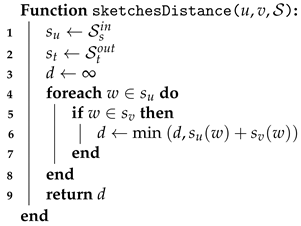

Algorithm 6: Sketches_DIS-C: k sketches online

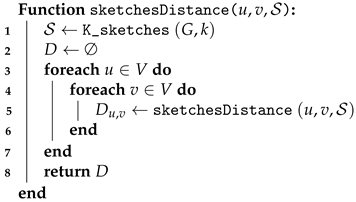

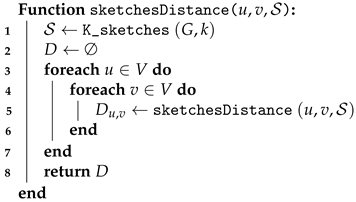

Finally, to solve the APSP problem, another algorithm is needed that executes the online query for each pair of nodes (Algorithm 7).

Algorithm 7: Sketches_DIS-C: k sketches online APSP
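The online query and its all-pairs driver can be sketched as follows. The example assumes that each sketch is stored as a dictionary from seed to distance, with `sketch_out[u]` holding distances from u to its seeds and `sketch_in[v]` holding distances from the seeds to v. These names, and the functions `sketch_distance` and `sketch_apsp`, are hypothetical and do not reproduce Algorithms 6 and 7.

```python
import math

def sketch_distance(u, v, sketch_out, sketch_in):
    """Approximate distance u -> v through the best seed common to both sketches."""
    best = math.inf
    for w, d_uw in sketch_out[u].items():
        d_wv = sketch_in[v].get(w)
        if d_wv is not None:
            best = min(best, d_uw + d_wv)
    return best

def sketch_apsp(nodes, sketch_out, sketch_in):
    """Run the online query for every ordered pair; a node is at distance 0 from itself."""
    return {(u, v): 0 if u == v else sketch_distance(u, v, sketch_out, sketch_in)
            for u in nodes for v in nodes}

# Toy sketches with a single seed "s" (values chosen only for illustration).
toy_out = {"a": {"s": 2}, "b": {"s": 1}, "c": {}}
toy_in = {"a": {"s": 1}, "b": {"s": 3}, "c": {"s": 4}}
apsp = sketch_apsp(["a", "b", "c"], toy_out, toy_in)
print(apsp[("a", "b")])
```

Since every stored value is the length of a real path, the sum through a common seed is the length of an actual route from u to v, so the estimate never underestimates the true distance; pairs with no common seed are reported as infinity.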

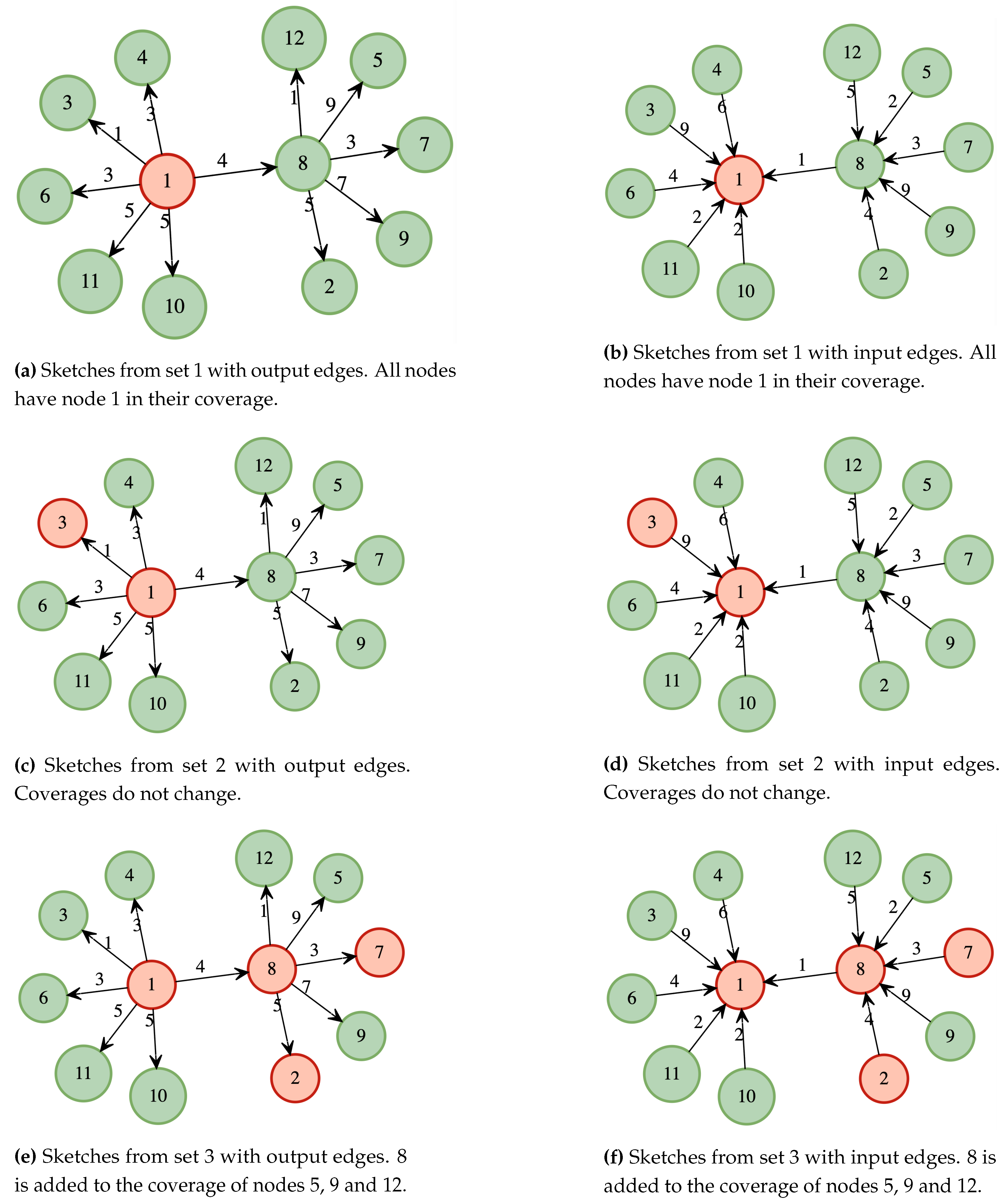

Figure 5 shows an example of the described procedure. The graph of Figure 3 is taken as a reference. Since there are 12 nodes, the number of seed sets is 3 ( ), and the sizes of the sets are 1, 2, and 4, respectively. The nodes in each set are 1 for the first, for the second, and for the last.

In the first iteration (Figure 5a), all the nodes have node 1 in their coverage; this also happens for the input edges (see Figure 5b). In the second iteration (Figure 5c and Figure 5d), the coverages do not change since node 3 does not cover any path shorter than those already covered by node 1. Finally, in the last iteration (Figure 5e and Figure 5f), node 8 is in the seed set and covers the shortest paths of its neighbors, except those that are also in the set; therefore, nodes 5, 12, and 9 will have 8 in their coverage. On the other hand, nodes 2 and 7 are contained in their own respective coverages.

3.3.1. Sketches analysis

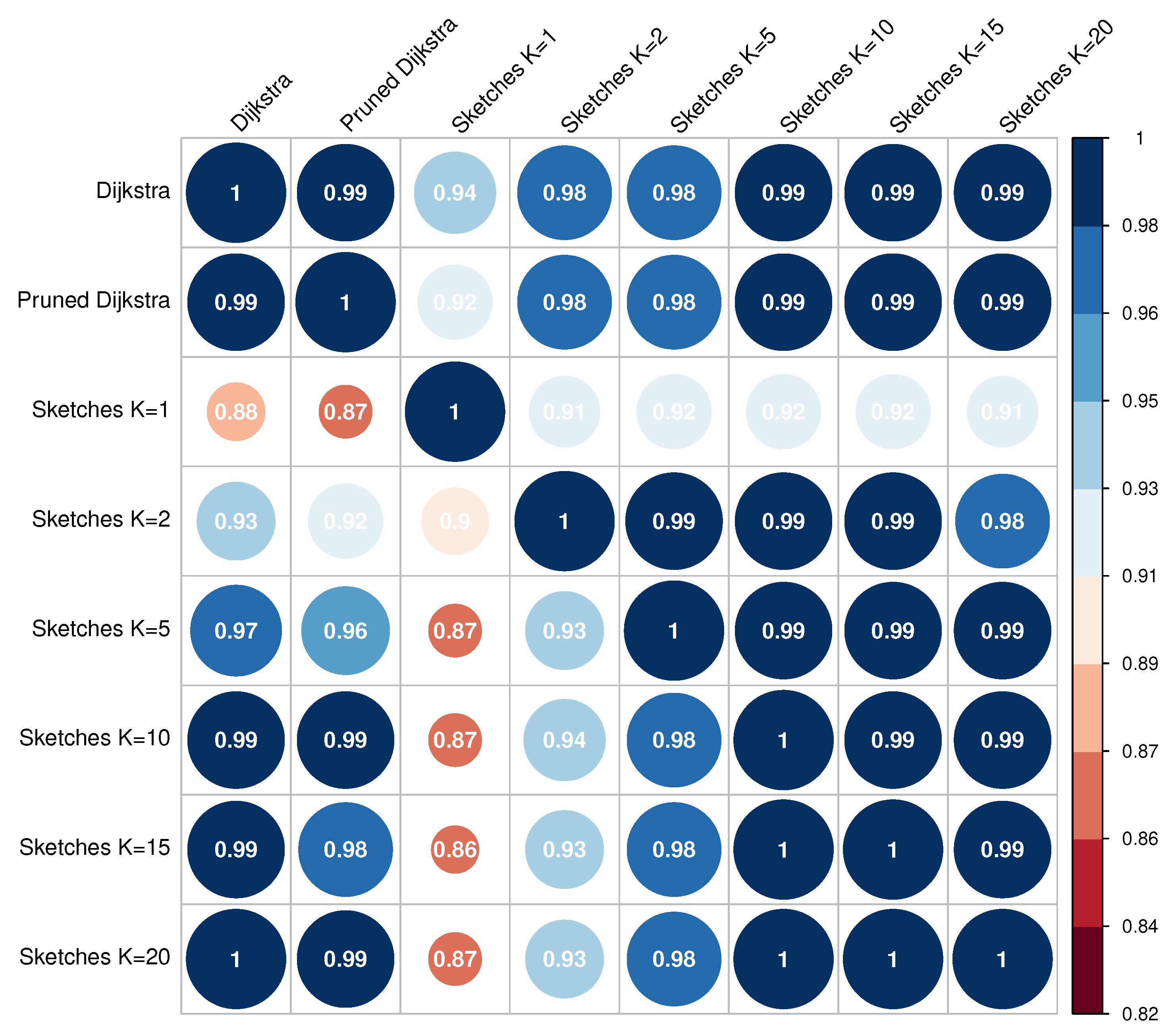

In the sketch-based method, there is no proof of exactness for general graphs; that is, the method does not guarantee a node labeling that yields the exact distance between all pairs of vertices. Its computational complexity, however, is easy to determine.

Theorem 2. For a set of nodes such that the size of said set is for , the computational complexity of the algorithm is .

Proof. We know that the sketch-based method selects a number w based on the number of nodes in the graph, that is, . Then, given w, subsets of nodes are created for such that the size of each one is . It can be seen that . For each of the subsets, the multi-source Dijkstra algorithm is executed to find, for every node of G, the closest node of the set. The multi-source Dijkstra algorithm has a complexity of ; for each subset of nodes, a single execution of Dijkstra’s algorithm is required, regardless of the subset size. Therefore, since there are w subsets of nodes, w multi-source Dijkstra processes must be executed; that is to say, in total, we reach a complexity of . □

In the implementation carried out in this work, we determined w by a logarithmic function , where . In this way, we ensured that . Therefore, for the implementation made, the method has a complexity of .